1. Introduction

In the realm of autonomous driving research, sensors play a pivotal role. Cameras, for instance, perceive incoming light through their lenses and image sensors. Radar, short for “radio detection and ranging”, employs electromagnetic waves to estimate position and relative velocity.

Lidar, or “light detection and ranging”, stands out as a key sensor in autonomous driving research. It gauges position by measuring the time it takes for a laser beam to be emitted, reflect off an object, and return. Lidar, especially the time-of-flight (TOF) variant, is widely used in autonomous vehicles to acquire point cloud data, aiding in object detection, localization, and map construction. Integrating lidar into roadside infrastructure is emerging, and some studies have been conducted using roadside lidar [

1,

2,

3,

4,

5,

6,

7,

8,

9,

10].

A high-channel lidar, typically having 64 channels or more, offers advantages such as narrower beam spacing, facilitating feature extraction and the detection of distant objects. However, these sensors are expensive, making it difficult to install multiple units in one space. Moreover, they are constrained by their limited measurement range and susceptibility to occlusion.

Research on the placement of multiple lidars has primarily focused on vehicles. S. Roos et al. [

11] placed multiple lidars and assessed their performance using the CARLA simulator. T. Kim et al. [

12] introduced a multi-lidar placement approach that takes into account blind spots created by other vehicles. They placed a 2D occupancy grid board at a specified distance and calculated occupancy using the point cloud reflected on the board. Furthermore, various studies have proposed techniques for placing multiple sensors within a single vehicle [

13,

14,

15,

16,

17].

Existing research on lidar placement primarily focuses on vehicles and does not consider the placement problem for infrastructure sensors. Urban environments often feature numerous obstructions, such as streetlights and traffic signals, at intersections. These obstructions can lead to a decline in the quality of raw data due to occlusion.

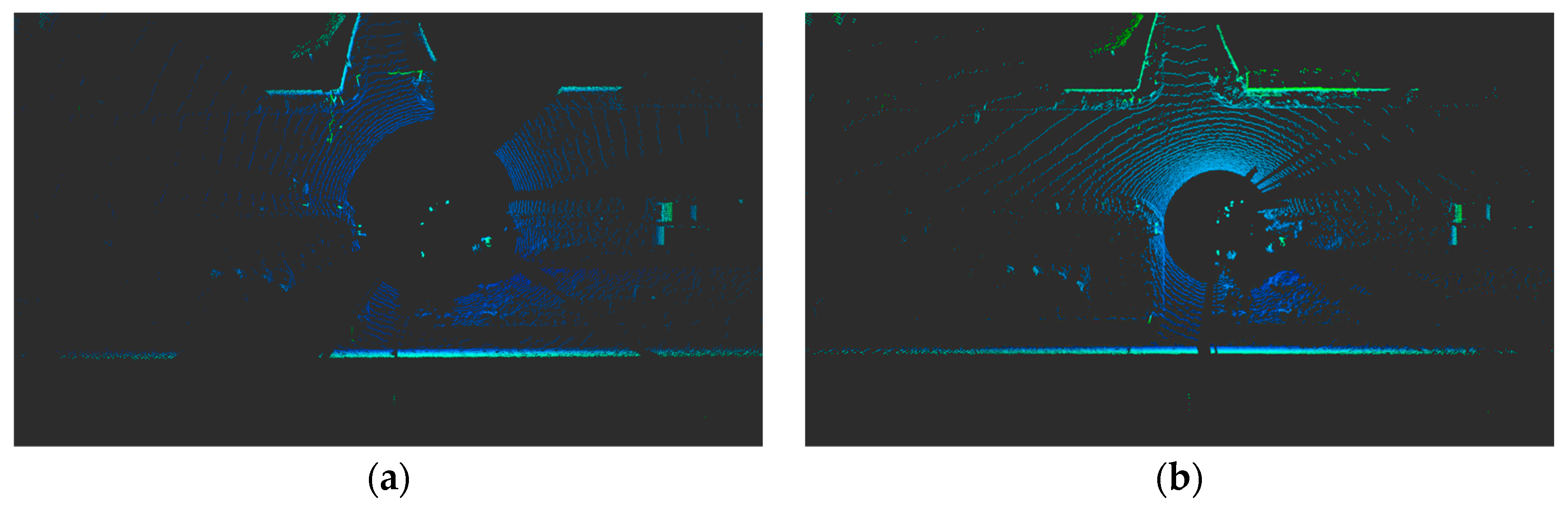

Figure 1 shows a point cloud acquired from a typical intersection. The quality of these raw data varies depending on lidar placement, leading to a drop in object detection performance. Therefore, the optimization of sensor placement in urban settings emerges as a crucial challenge. Infrastructure lidar placement offers a higher degree of freedom, requiring consideration of both the xyz positions and roll, pitch, and yaw angles [

18]. X. Cai et al. [

18] conducted a study where they placed multiple lidars at an intersection, analyzing their impact on recognition performance based on their placements. S. Jin et al. [

19] put forth an evaluation method for lidar placement, but it lacks a systematic approach, including accounting for blind spots in modeling the real environment.

A. Qu et al. [

20] proposed implementing the environment in a simulation and placing sensors, designating candidate locations, and optimizing them using the point cloud projected on the road surface while excluding buildings, sidewalks, etc. L. Kloeker et al. [

21] proposed optimizing lidar placement in infrastructure by utilizing a 3D digital map. They divided the road into triangles based on the OpenDRIVE [

22] format map and optimized lidar placement by considering the number of points projected onto these triangles.

Several studies have used multiple lidars. However, they often fail to reflect the unique features of urban roads, characterized by numerous buildings, poles, and more, or have primarily relied on simulations. In this study, we propose a method for multiple sensors placement, aiming to identify the optimal position and orientation that maximizes data detection range. Our proposed method takes into account blind spots arising from the road environment. To quantify parameters for sensor placement, we introduce a novel approach for evaluating occupancy through voxelization of a map. Additionally, we employ a genetic algorithm to address the sensor placement problem. The sensor array is represented by chromosomes, and new chromosomes are generated through crossover and mutation operators. The experiments of this study were conducted in a simulation replicating the real environment, and the results affirm the effectiveness of our proposed method. The key contributions of this paper are as follows:

Modeling the lidar placement environment based on real-world data rather than simulation.

Converting the point cloud map and lidar beams into a computable discrete signal format (voxel) for quantitative evaluation.

Optimizing the positions and directions of the lidars using genetic algorithm chromosomes and introducing a 2-opt local optimization method.

Proposing a placement method for multiple lidars (two or more) on infrastructure, replicating and validating the simulation placement results in a real environment.

This paper is structured as follows.

Section 2 introduces a multiple lidar system in an urban environment, and

Section 3 provides a mathematical description of the problem.

Section 4 describes the optimization algorithm, while

Section 5 presents the experimental results.

2. Multiple Lidar System in an Urban Environment

The mechanically rotating 3D lidar radiates channel laser beams in the vertical direction, forming a rotating laser beam array in the horizontal direction. The angle between these beams in the vertical or horizontal direction is referred to as the resolution, denoted by and , respectively. The lowest and highest angles in the vertical direction are represented as , and , respectively.

The lidar sensor calculates distances by measuring the time taken for emitted laser beams to reflect off objects and return. This information is represented as points.

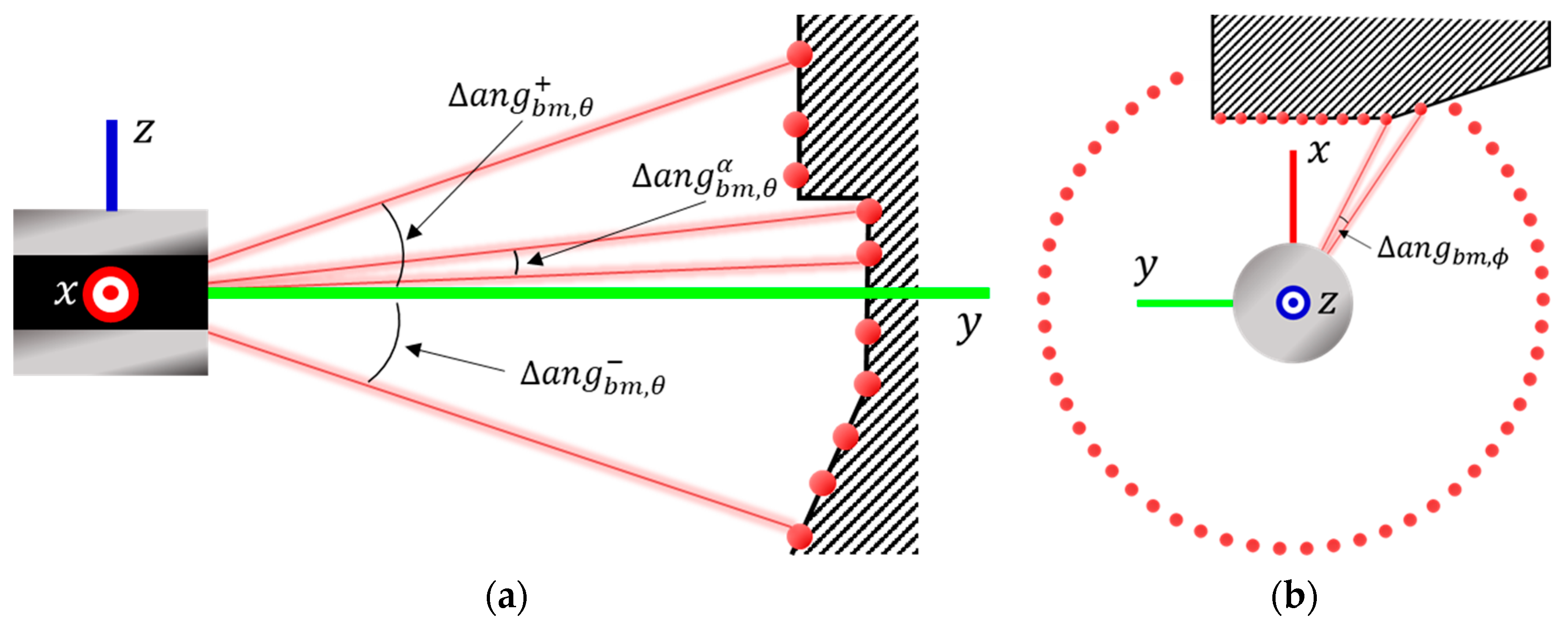

Figure 2 shows the resolution and reflection points of the lidar.

When a lidar is placed along an urban road, it may encounter obstacles like buildings that can block the laser beams, causing what is commonly known as a “dead zone”. To minimize dead zones, multiple lidars are strategically positioned at intersections. Each lidar covers a specific area, resulting in overlapping coverage regions.

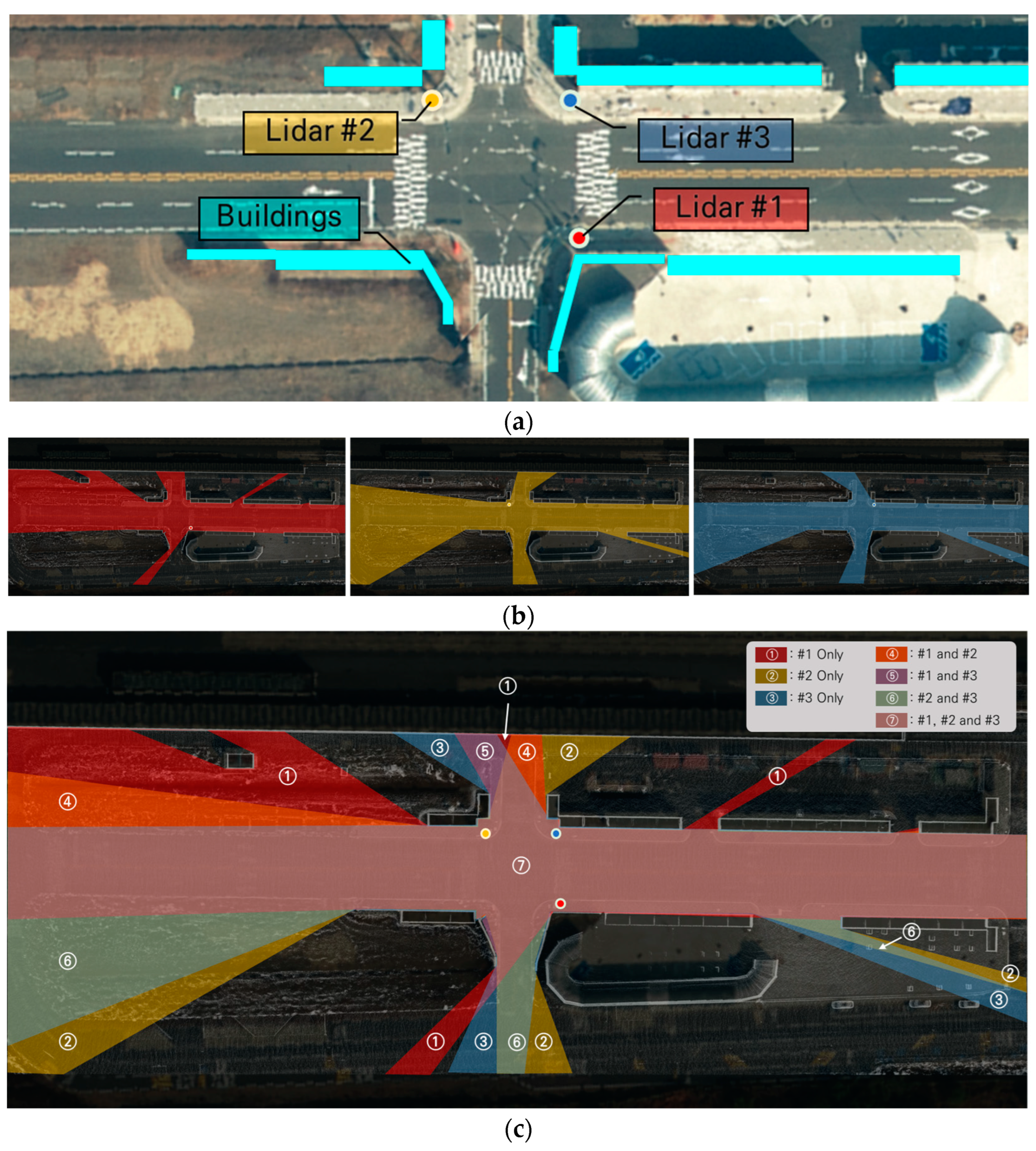

Figure 3 provides an example of multiple lidar systems, their coverage areas, and the overlapping coverage regions.

Figure 3a displays the positions of three lidars placed at an intersection, with the cyan polygons representing buildings that replicate the urban environment and create blind spots in sensing.

Figure 3b shows the coverage of each lidar in relation to the blind spots caused by the buildings.

Figure 3c demonstrates the overlapping coverage provided by the three lidars, effectively reducing blind spots at intersections through their strategic deployment. Almost all areas around intersections are covered by lidar.

In a multiple lidar system containing

lidars, the position of the

-th lidar is as follows.

where

is the set of potential positions for the placement of lidars. The position (

) of the first (reference) lidar is fixed by the user, while the positions of the remaining lidars are determined using the proposed method.

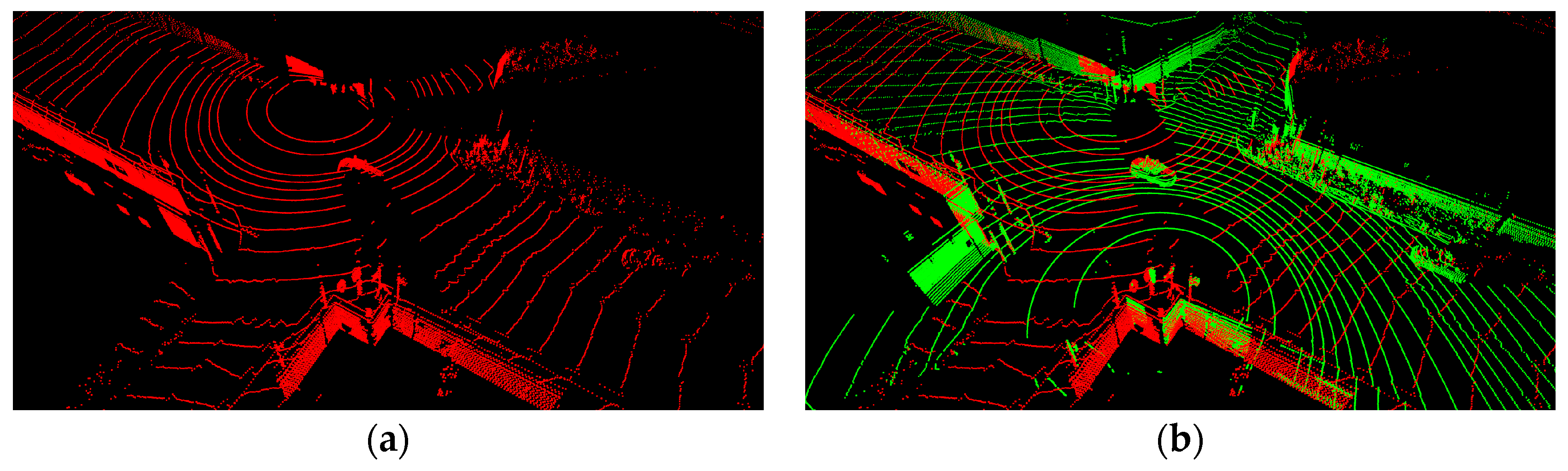

The number and density of lidar points increase in areas where the coverage overlaps. Efficient placement of multiple lidars reduces dead zones at urban intersections, as shown in

Figure 4, and enables the acquisition of high-resolution data, similar to that achievable with high-channel lidars.

3. Problem Formulation

The main problem of multiple lidar placement in urban environments is minimizing blind spots while maximizing point density. One of the main problems is that the locations for lidar placement are restricted to specific areas, such as the roadside, to avoid interfering with the road where vehicles are in operation. Therefore, an optimization method is required to identify the optimal placement while modeling the real environment to reduce blind spots.

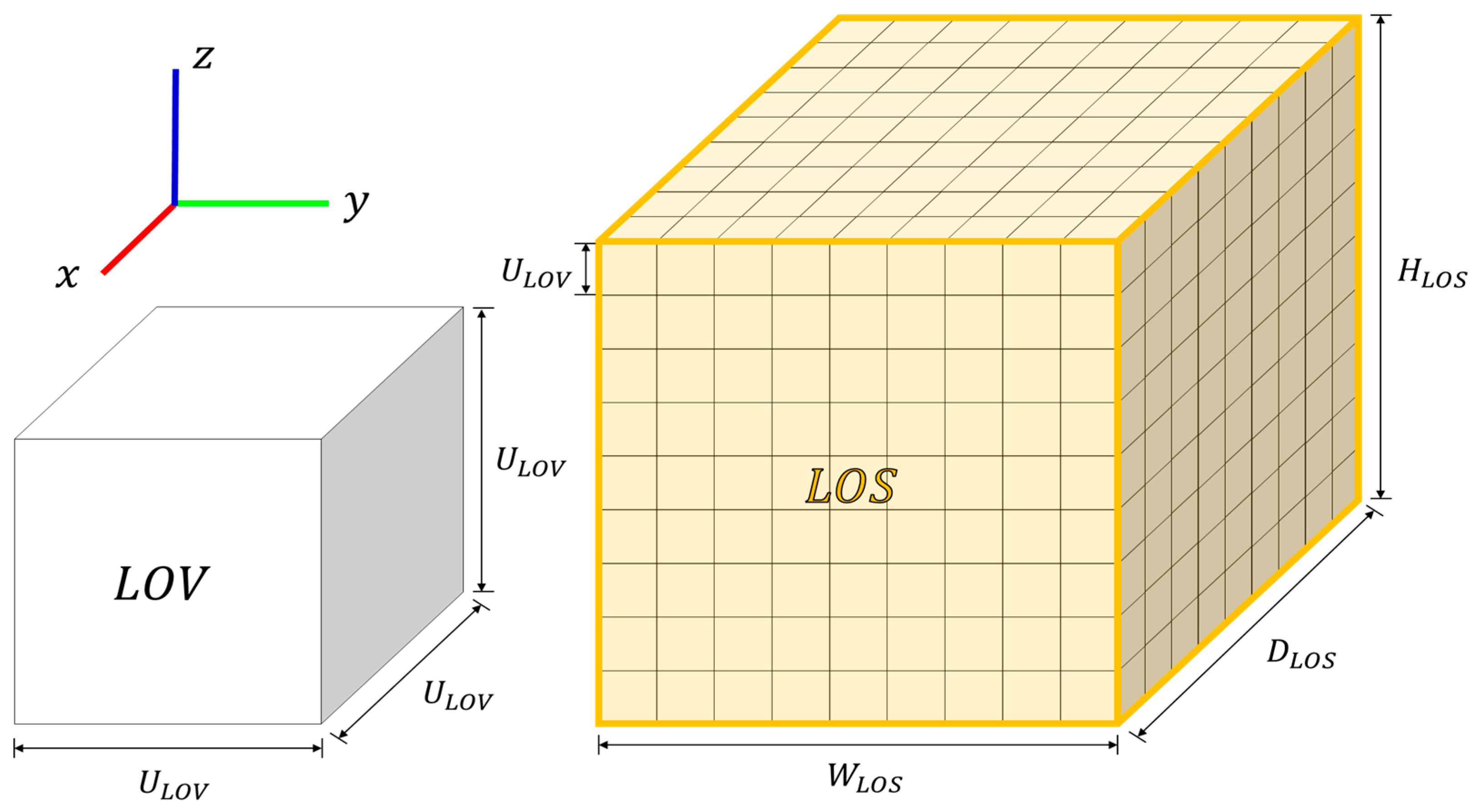

In this study, we introduce the concept of Lidar Occupancy Space (LOS) for optimization. LOS comprises voxels, which are a down-sampling method that reduce the number of point clouds and converts them into a normalized discrete signal format. This enables the modeling of the real environment for computational purposes and reduces the computational load for layout optimization. An LOS is generated to match the size of the user’s region of interest . Since the point cloud map, derived from real lidar data, accurately reflects the actual environment, the LOS is weighted using this map, and the occupancy rate is determined as the lidar beams traverse the LOS.

Additionally, we propose a Lidar Occupancy Voxel (LOV) grid to assess the distribution of lidar beams. LOV consists of unit-length cubes (

). The LOS is divided into

voxel grids, which are determined according to the size of the LOS and the unit length (

) of the LOV.

Figure 5 shows LOV and LOS, where LOS is an aggregation of LOVs.

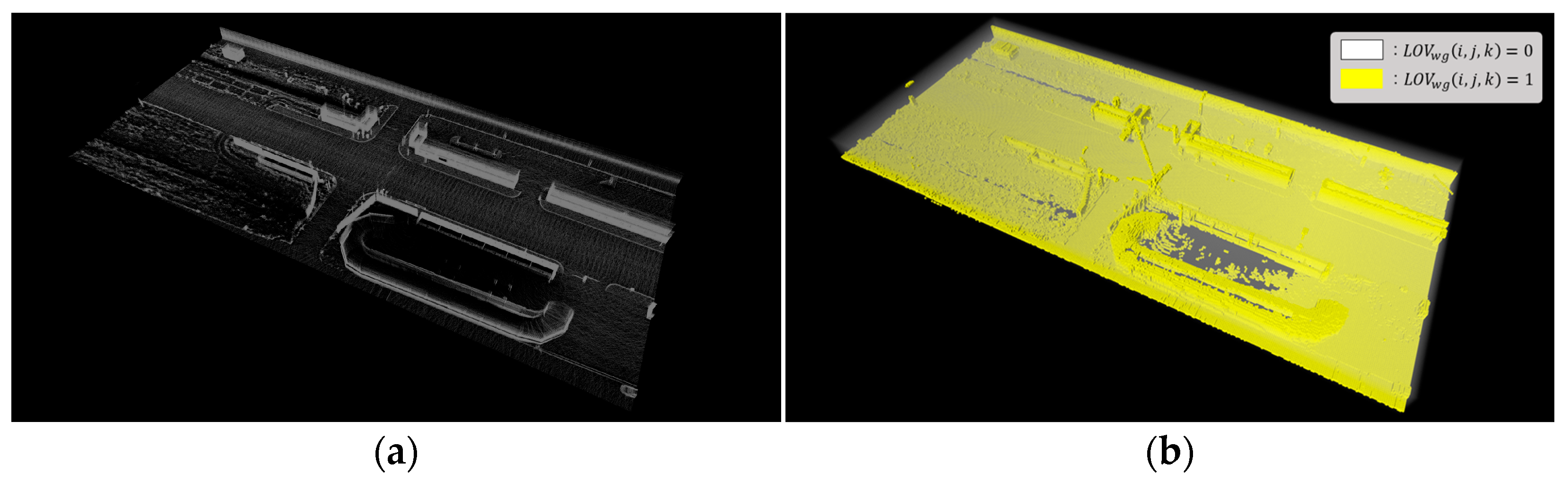

To ensure the accurate weighting of LOS using the point cloud map, the point cloud map must undergo preprocessing (filtering) to match the size of (

). When the LOS and point cloud map overlap, the distribution of points within each LOV will vary, as shown in

Figure 6.

Figure 6a presents the point cloud map, while

Figure 6b shows the LOS generated from this map. The weight of the LOV comprising the LOS is determined by the number of points contained within the corresponding voxel, with yellow indicating the presence of weight. For the voxel grid

, the weight (

is defined as follows.

where

is the number of points of the point cloud map included in

.

denotes whether the grid is occupied by the point cloud map, and has a weight if it is occupied.

In the proposed method, occupancy is evaluated based on whether the lidar beam passes through the voxel grid that constitutes the LOS, and is determined as follows:

Step 1. Set lidar counter to 1.

Step 2. Set the horizontal angle counter to 0.

Step 3. Set the vertical angle counter to 1, and set the vertical angle variable to .

Step 4. Calculate the intersection

of the six outermost surfaces of the LOS and the lidar beam

as follows.

where

is the distance between the lidar and the intersection.

Step 5. Using the Bresenham algorithm [

23], store index

of the voxel grid

included in the line segment between

and

in the array

.

where

is the number of voxel grids included in the line segment. The Bresenham algorithm is a computer graphics algorithm designed for drawing straight lines using integer calculations exclusively, avoiding the complexity and slowness associated with real number calculations. Since actual computer screens consist of pixels, and pixels are inherently integers, a straight line drawn through a straight-line equation may span multiple pixels. In this study, we generated LOS by introducing voxels and efficiently approximated straight lines within the LOV that constitutes the LOS.

Step 6. Set the index search counter to 0.

Step 7. If , change to to 1 and move to Step 9.

Step 8. Increment the index search counter and move to Step 7.

Step 9. Increase the vertical angle counter

and calculate

as in the following equation. If

, move to Step 4.

Step 10. Increase the horizontal angle counter . If , move to step 3.

Step 11. Increase lidar counter , If , move to Step 2.

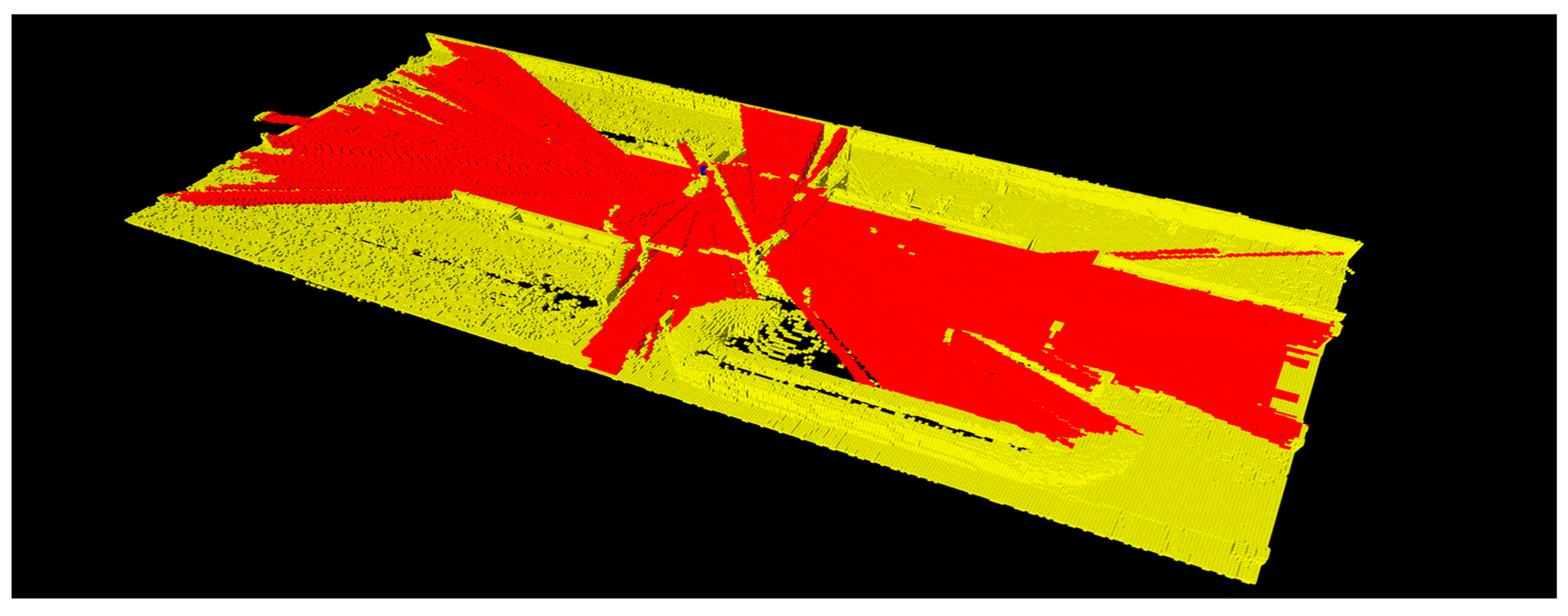

The total lidar occupancy

of lidar beams relative to the LOV, as shown in

Figure 7, is the sum of all voxel grids, and is calculated as follows.

On the other hand, lidar occupancy

is the ratio of

to the total number of voxel grids.

The lidar placement problem aims to determine the lidar locations

to maximize the lidar occupancy LO. The voxel grid value depends on the lidar’s placement, and the mathematical formula is defined as follows.

4. Optimization

This section describes the placement optimization algorithm. The placement algorithm is designed to find a near-optimal solution. The genetic algorithm is a search method that identifies the optimal solution by simulating the way organisms evolve and adapt to their environment. This algorithm operates by selecting the chromosome with the best fitness from a set of chromosomes and iteratively refining the search in the direction of the optimal solution. The sensor placement algorithm is as follows:

Step 1. Place the first (reference) lidar.

Step 2. Create lidar occupancy space (LOS) using the point cloud map.

Step 3. Assign weights to the voxel grid.

Step 4. Set the lidar count to 2.

Step 5. Find the that maximizes the lidar occupancy (LO) using a genetic algorithm.

Step 6. Increment the lidar counter. If go to Step 5.

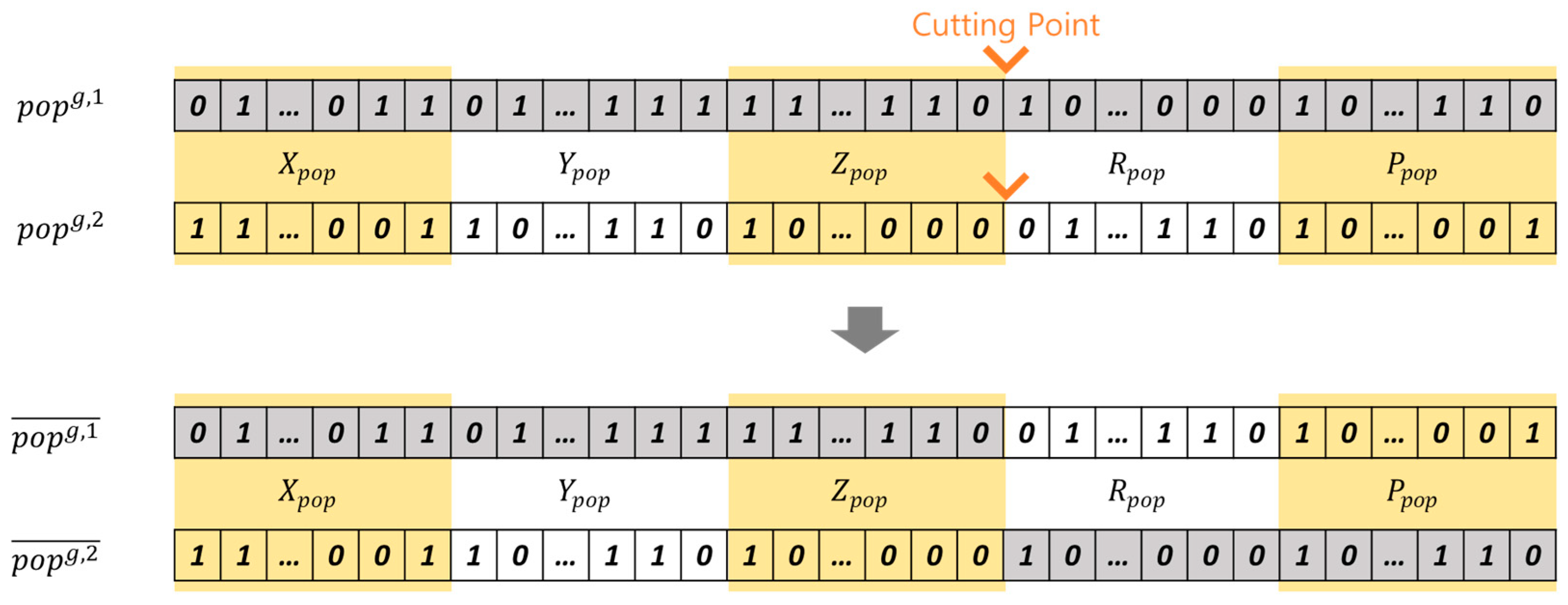

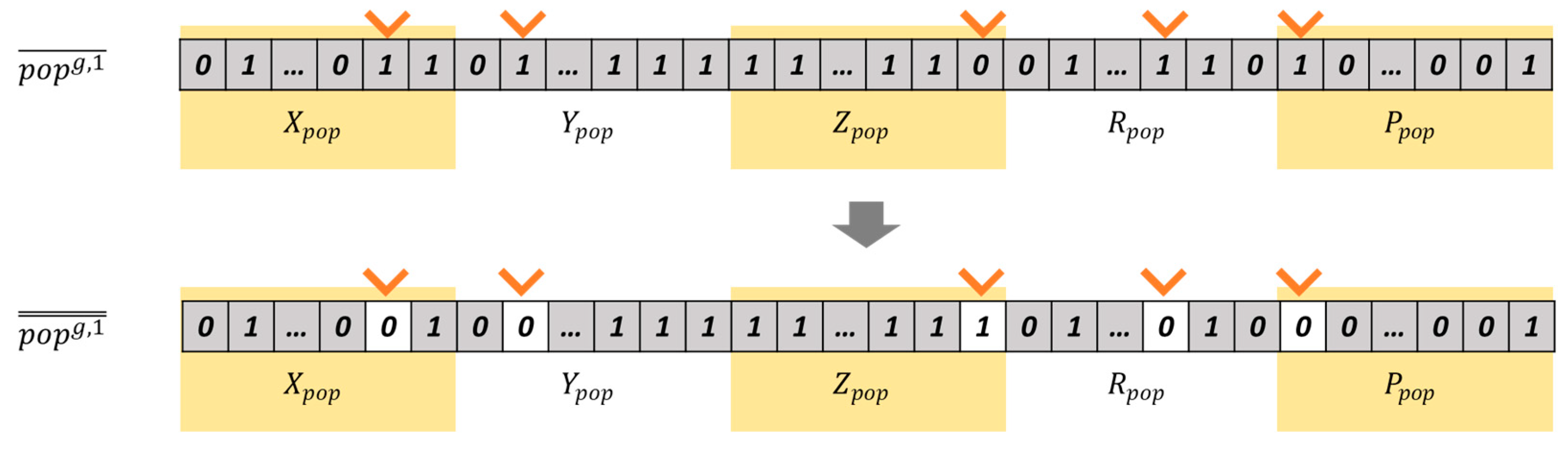

In Step 5, a genetic algorithm (GA) is applied to determine the placement of the sensors. The chromosome

is a binary string divided by five sections as:

where

, and

are matched with the lidar placement

, respectively.

The initial population, denoted as

, is generated through random number generation. The next population is formed by the selection operator, with the remaining stochastic sampling [

24] reproduces to chromosomes with higher fitness, where fitness is defined as the total lidar occupancy

.

The crossover and mutation operators create a range of new chromosomes, enhancing the optimization of lidar placements. Chromosomes are randomly selected based on the crossover probability (

), and the crossover point is randomly determined at the boundary of sections, as showed in

Figure 8. New chromosomes

and

are generated through the crossover operation of

and

. Similarly, chromosomes are randomly selected according to the mutation probability (

). For chromosome

, one bit from each section (

is selected. A new chromosome

is generated by inverting the selected bits, as depicted in

Figure 9.

Our genetic algorithm is summarized as follows:

Step 5-1. Generate initial population randomly.

Step 5-2. Set the generation counter to 1.

Step 5-3. Calculate the fitness, and reproduce chromosomes by the remainder stochastic sampling.

Step 5-4. Pairs of chromosomes are randomly selected, and crossover operation is performed between chromosomes.

Step 5-5. Chromosomes are randomly selected, and mutation operation is performed.

Step 5-6. Find the best chromosome and vary it by 2-opt improvement [

25]. The 2-opt method is a local search algorithm that examines all feasible combinations and swaps them to find a solution.

Step 5-7. Make increments to the generation counter . If the exit condition is not satisfied, go to Step 5-3.

Step 5-8. The final best chromosome is decoded into placement of lidar position .

5. Experiments

5.1. Experimental Setup

The proposed sensor placement algorithm was validated using 3D lidars. Two or three forty-channel lidars (HESAI Pandar40P, Shanghai, China) were simulated and used for placement optimization. For performance comparison, a single 128-channel lidar (Velodyne VLS-128, San Jose, CA, USA) was utilized. The algorithm was executed on a workstation running Linux Ubuntu 20.04 and ROS Noetic, with the program developed in the C++ language. The specifications of the lidars used in the experiment are detailed in

Table 1.

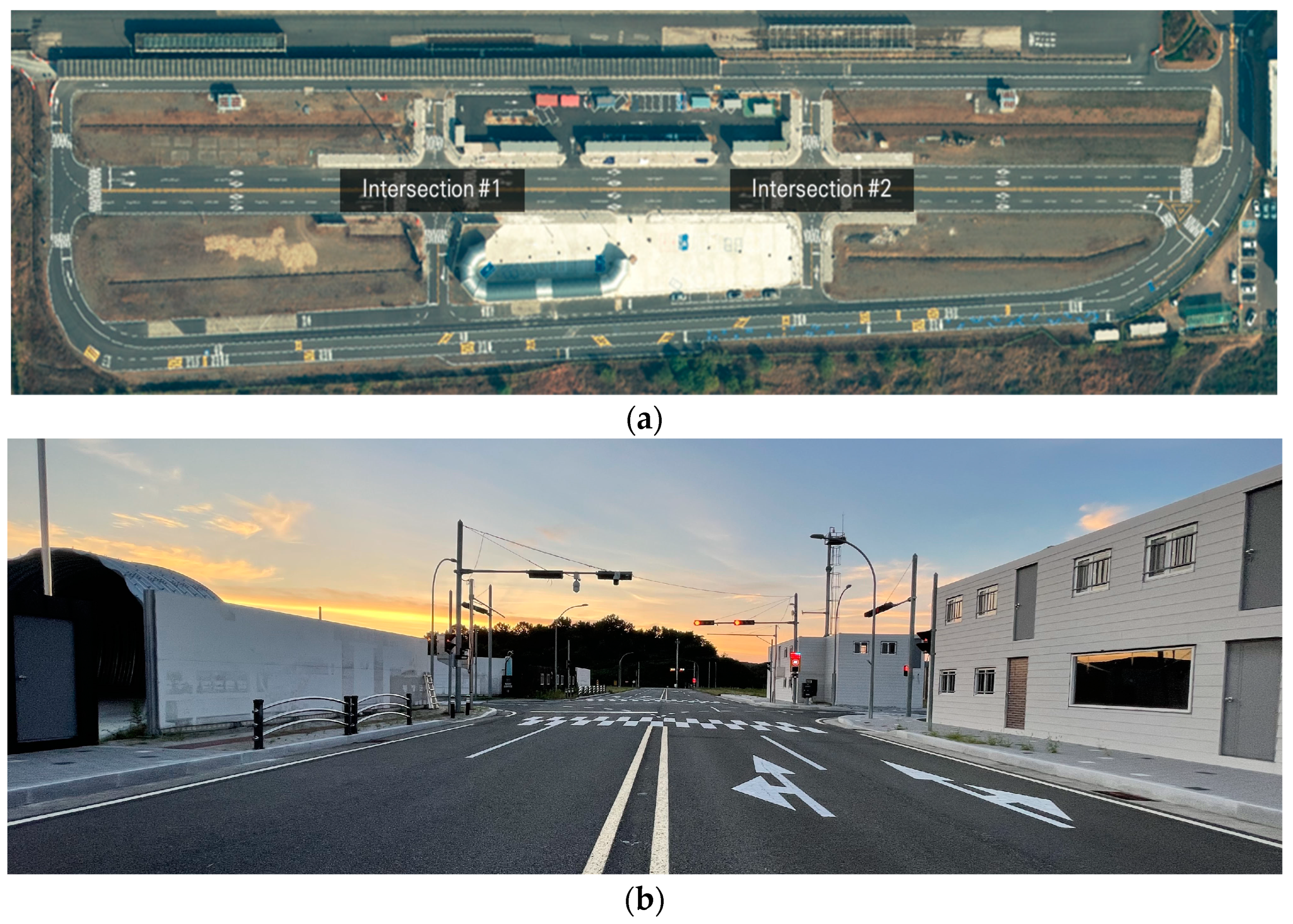

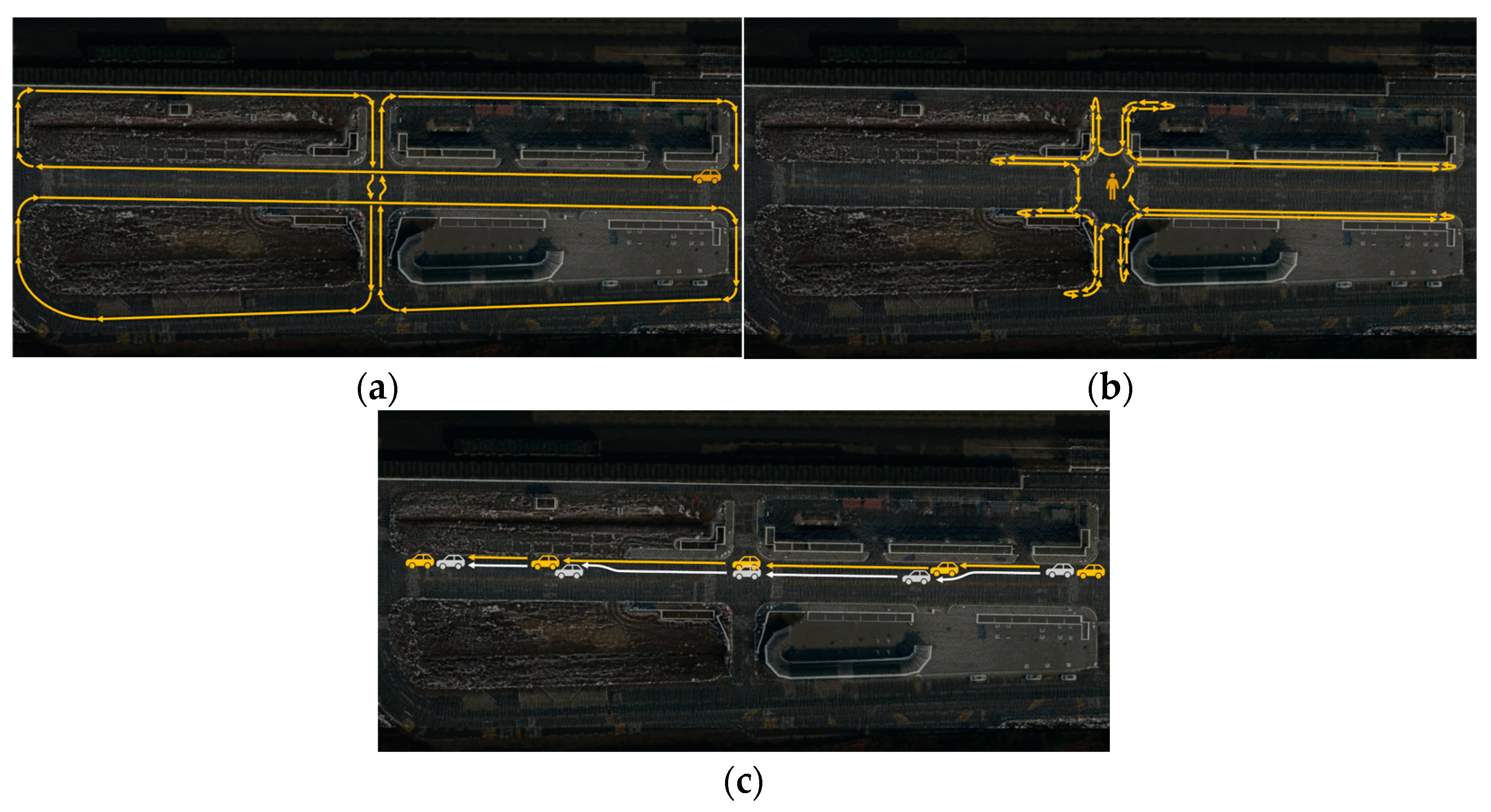

The test area selected for the experiment was the proving ground of the Korea Automotive Parts Promotion Institute (KIAPI). KIAPI’s proving ground encompasses various test facilities, including an autonomous vehicle test road, a multipurpose test track, and a high-speed circuit. This experiment specifically focused on the autonomous vehicle test road, which features two four-way intersections. These intersections replicate an urban environment and exhibit blind spots created by buildings, as shown in

Figure 10.

Table 2 provides a comprehensive overview of the experiment. As part of the simulation, the placement optimization results of two or three forty-channel lidars and one one-hundred-and-twenty-eight-channel lidar were compared at Intersection #1 (S1~S3) and Intersection #2 (S4~S6). The optimization of lidar placement involved adjusting the crossover and mutation probabilities.

The results of the optimal placement in the simulator at Intersection #1 were subsequently replicated in a real environment (R1~R6). In the real environment, the vehicle was driven, and its detection was based on data acquired from the placed lidars, with a comparison of detection ranges (R1~R2). Additionally, pedestrians walking in the real environment were detected using data from the lidars, and their detection ranges were compared (R3~R4). Finally, when two vehicles were driven, one vehicle was partially obscured, and the detection range was compared by detecting the vehicles from the data acquired by the placed lidars. To place lidars on a simulator using the proposed method and verify their performance, an experiment was conducted at two intersections with different environments. Furthermore, by optimally placing multiple 40-channel lidars, which is relatively cost effective, it was intended to reduce the blind spot of the intersection and maintain the detection performance. We sought to validate the effectiveness of the proposed method by applying the simulation results to a real environment. We aimed to verify the utility of the proposed placement method by evaluating the detection performance of objects primarily found in infrastructure, such as vehicles and pedestrians, and demonstrating its reproducibility.

5.2. Experimental Results

5.2.1. Placement Optimization Simulation

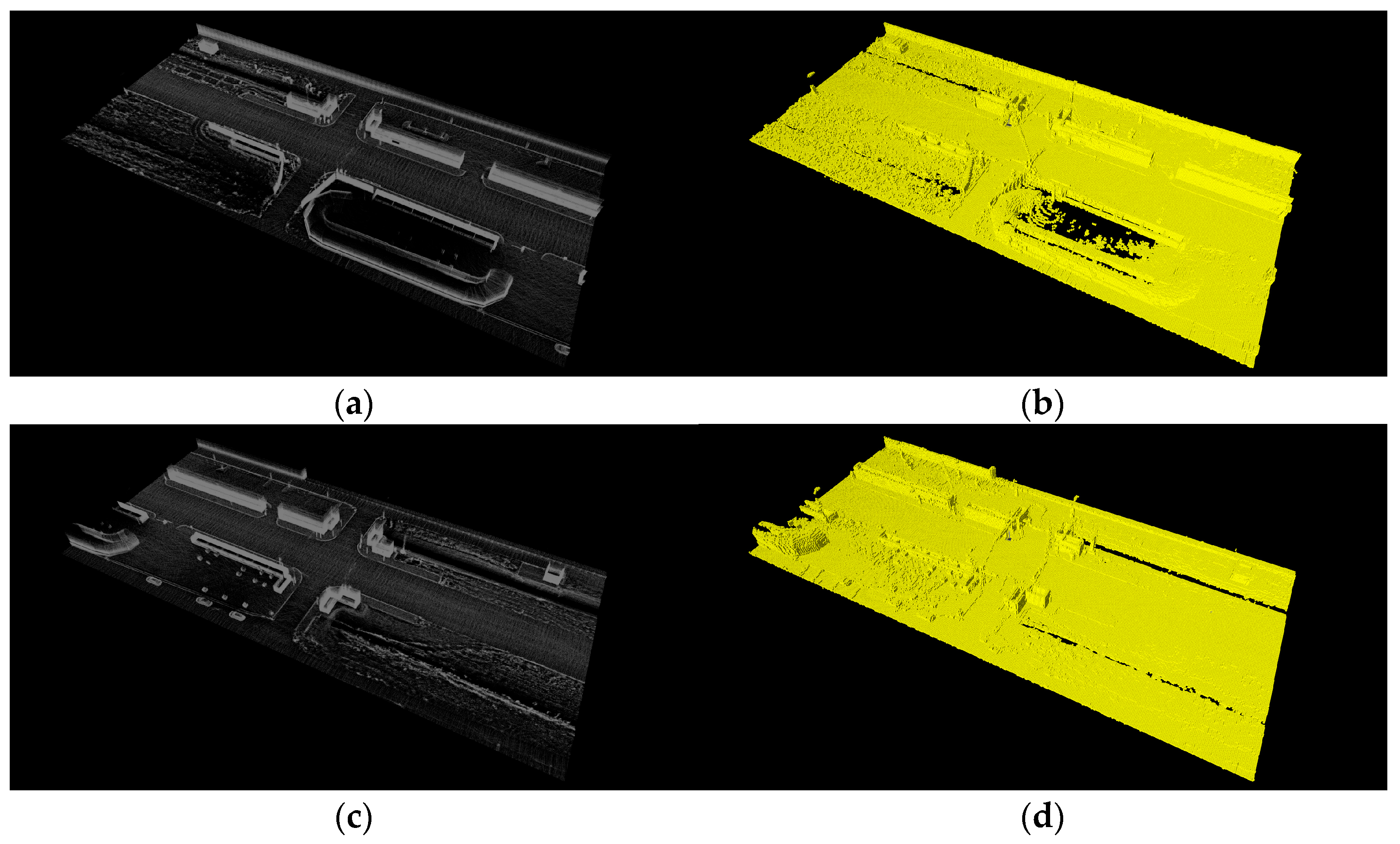

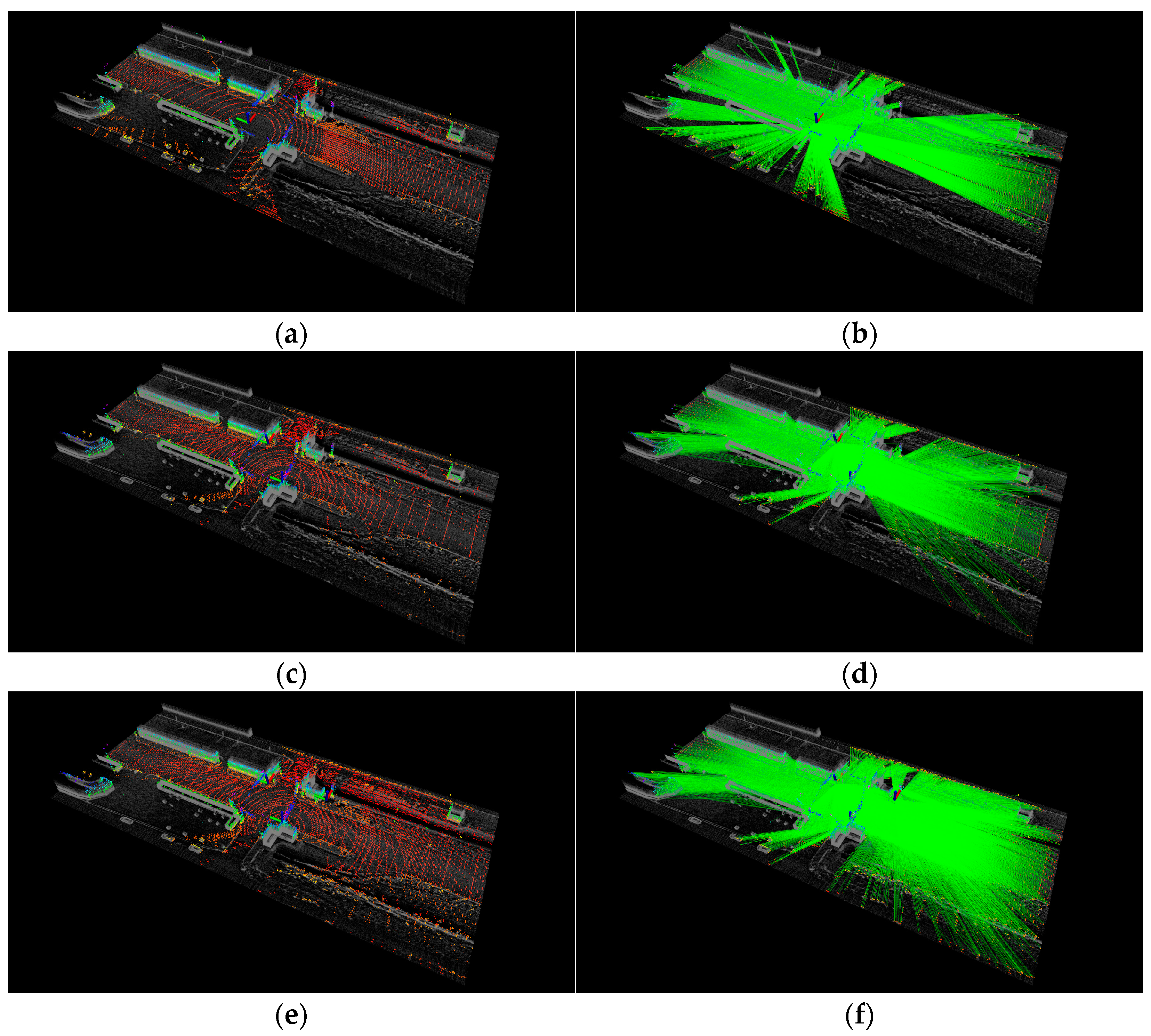

The parameters for the genetic algorithm were set as follows: a population of 100 and 300 iterations. The crossover probability ranged from 0.4 to 0.2, and the mutation probability ranged from 0.2 to 0.05, respectively. The size of LOS was (160 m, 72 m, 10 m), with a unit distance of LOV set at 0.4 m, as depicted in

Figure 11. In the experiment involving two and three forty-channel lidars at Intersections #1 and #2, the placement of the first (reference) lidar was at (−7.9, −9.1, 2.9, −0.4, 0.5).

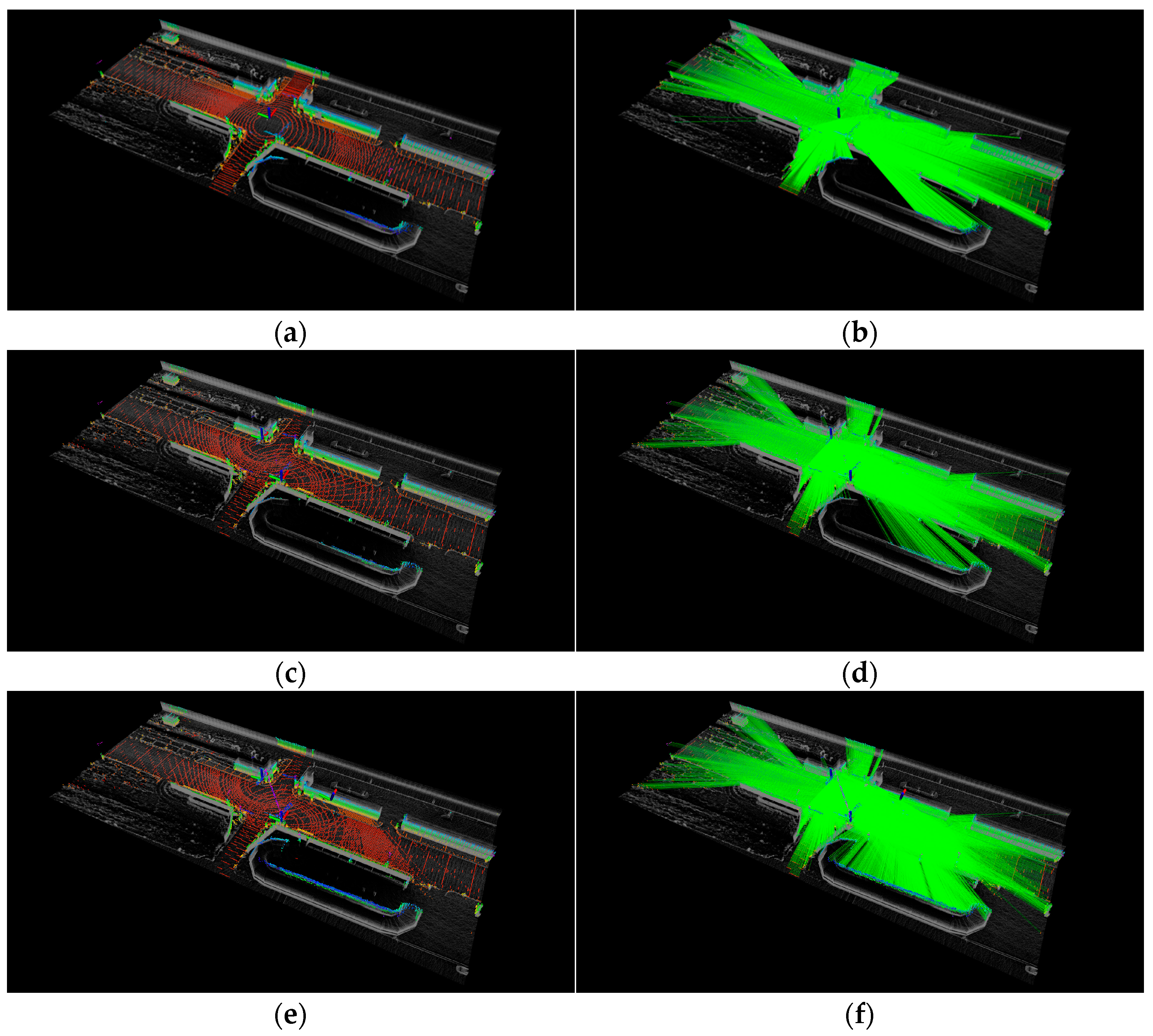

The first experiment (S1~S3) was conducted at Intersection #1.

Table 3 presents the LO for two forty-channel lidars, three forty-channel lidars, and one one-hundred-and-twenty-eight-channel lidar.

Figure 12 displays the placement optimization results for each configuration. In the case of two forty-channel lidars (S1), the placement of the second lidar was determined using the proposed method. Additionally, for three forty-channel lidars (S2), the location of the third lidar was found after the optimal placement in S1 was determined.

Figure 12b,c validate the enhancement in measurements achieved by the proposed method.

Table 3 shows that the proposed method exhibits performance comparable to that of a 128-channel lidar sensor. The mean and maximum values of LO demonstrate improvements with the proposed method.

The second experiment (S4~S6) was conducted at Intersection #2.

Table 4 shows the LO at Intersection #2, and

Figure 13 shows the results of placement optimization. As evident from

Figure 13b,c, the proposed method improves measurements. Furthermore, it is evident that the proposed method, involving 40-channel multi-lidar placement, demonstrates performance similar to that of the 128-channel lidar, as shown in

Figure 13b,c. The average and maximum LO values are either similar to or improved with the proposed method.

5.2.2. Placement and Evaluation in Real Environment

Finally, we placed lidars in the real environment and acquired point cloud data. Among the simulation results for Intersection #1, the optimal placements for two forty-channel lidars (S2) and one one-hundred-and-twenty-eight-channel lidar (S1) were recreated in the real environment.

Figure 14 shows the placement of lidars in the actual environment. Three lidars (two forty-channel lidars and one one-hundred-and-twenty-eight-channel lidar) were simultaneously placed, and point clouds were obtained while vehicles and pedestrians were in motion. The point clouds acquired from the two forty-channel lidars and the 128-channel lidar were input into a deep learning-based detection algorithm [

28] to compare the positions of detected vehicles and pedestrians. The detection algorithm receives point cloud input from lidar and detects objects such as vehicles and pedestrians based on artificial intelligence, and outputs the type, location, and size of the object in 3D space.

Figure 15 shows a detailed scenario, which is as follows.

R1, R2: Point clouds were acquired while vehicles were driven on the road near an intersection. Using a deep learning algorithm, vehicles were detected, and their detection ranges were compared (

Figure 15a).

R3, R4: Point clouds were acquired as people walked on the sidewalk near the intersection. Pedestrians were detected based on a deep learning algorithm, and the recognized ranges were compared (

Figure 15b).

R5, R6: Point clouds were acquired as two vehicles were driven on the road near the intersection. While driving, one vehicle was positioned to obscure an area by other vehicles. Using a deep learning algorithm, two vehicles were detected, and the recognition of obscured areas was compared (

Figure 15c).

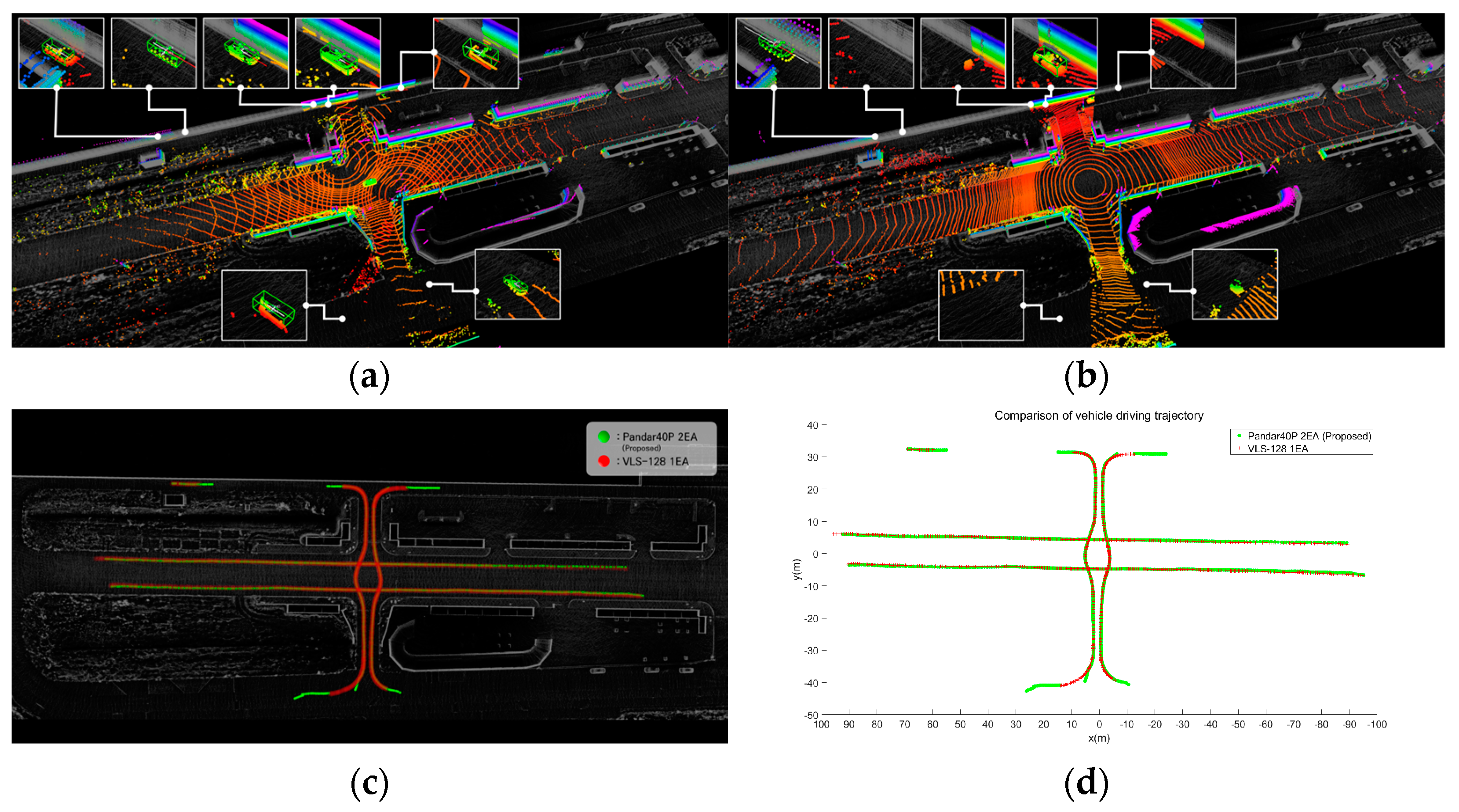

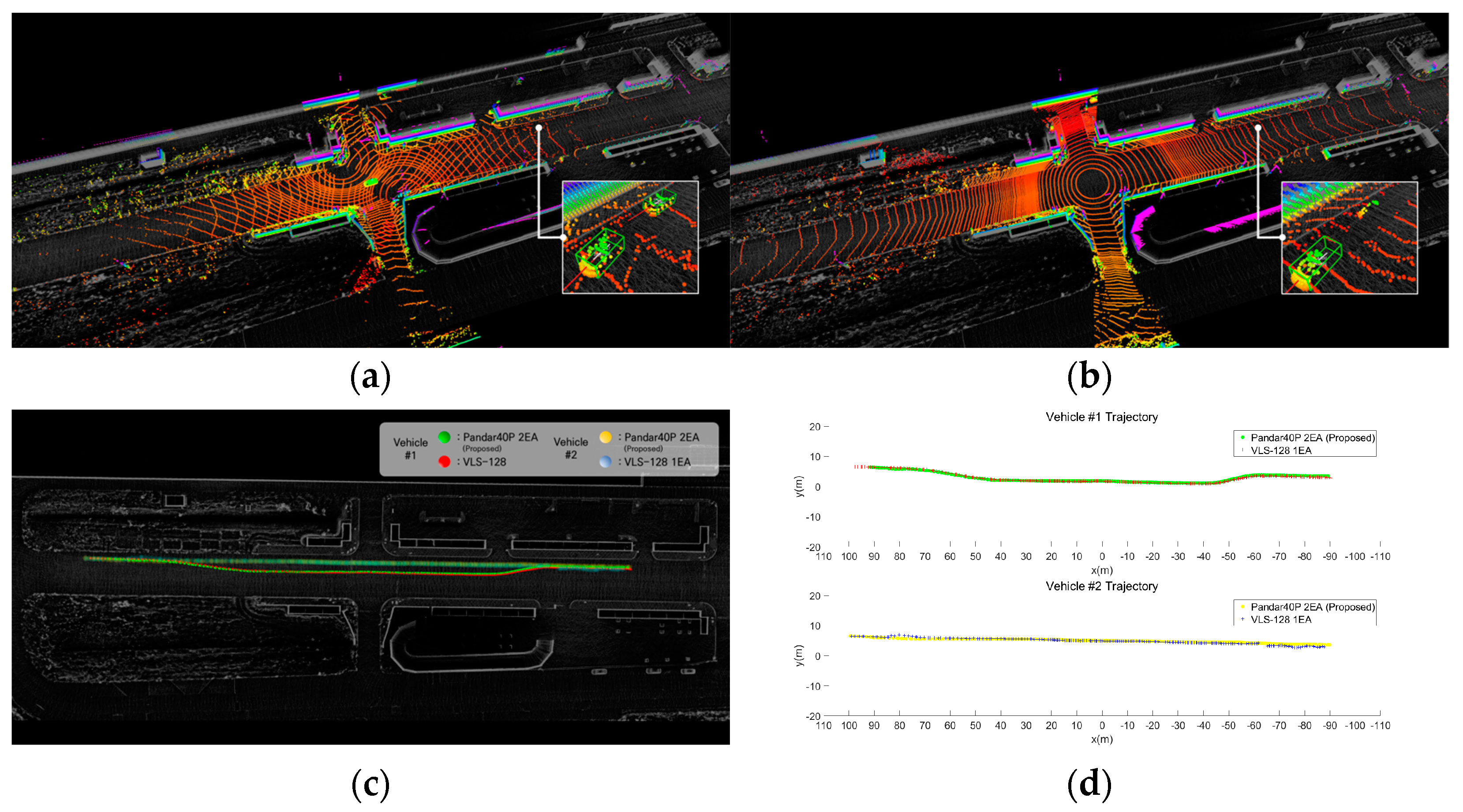

Figure 16 shows the vehicle detection results from the acquired point clouds.

Figure 16a shows the results of vehicle detection from the point cloud acquired using the proposed method with two forty-channel lidars.

Figure 16b shows the results of vehicle detection from the point cloud acquired with a single 128-channel lidar.

Figure 16c,d provide a comparison of the trajectories where vehicles were detected.

Table 5 shows the maximum detection position (x, y) and distance. The experiment revealed that the maximum detection position and distance exhibited similar results. However, in areas with sensing blind spots due to buildings, the utilization of low-channel multiple lidars through placement optimization yielded superior detection results. The results of pedestrian detection from the acquired data are shown in

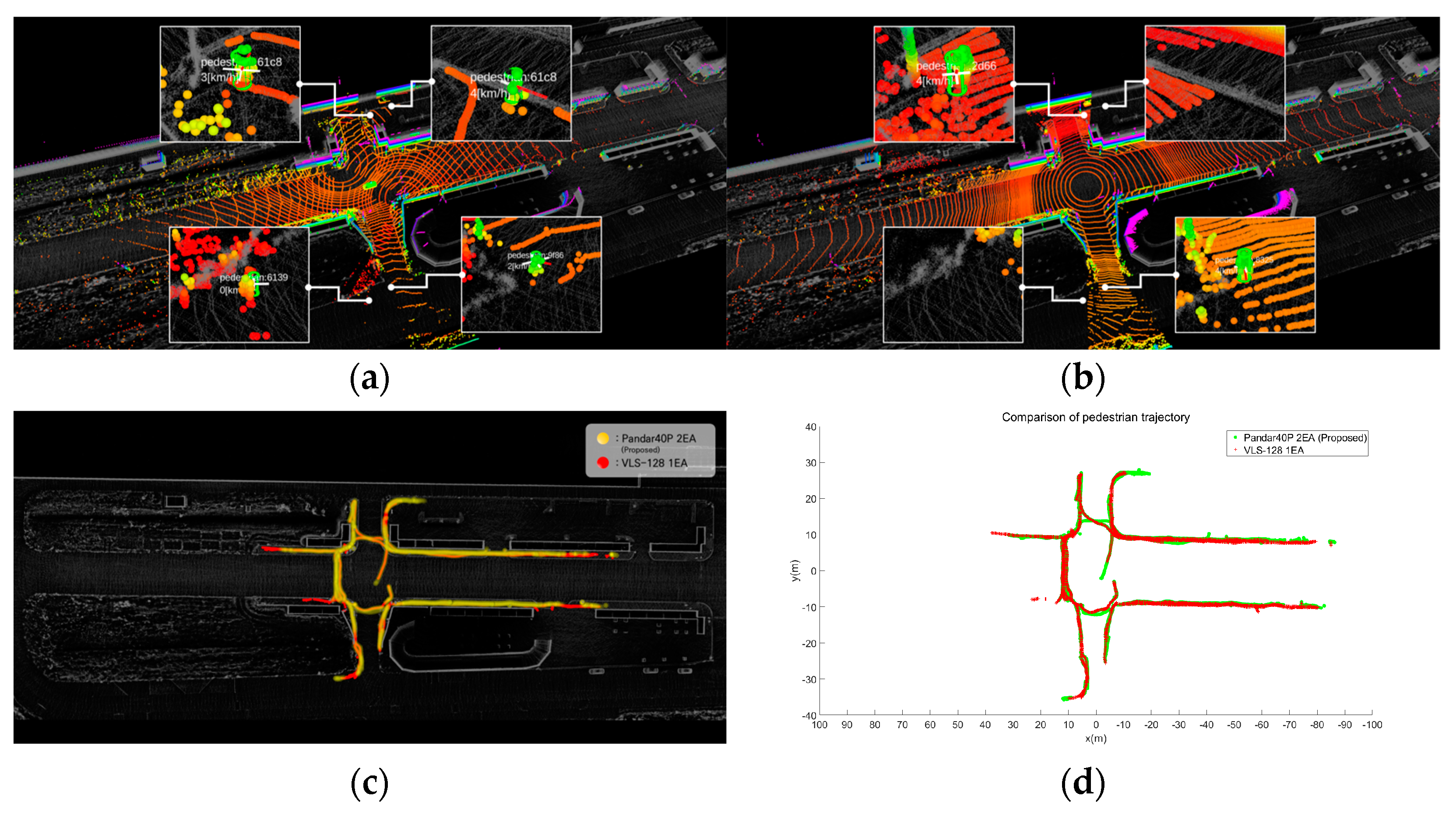

Figure 17.

Figure 17a shows the results of pedestrian detection from the point cloud acquired using the proposed method with two forty-channel lidars.

Figure 17b shows the results of pedestrian detection from the point cloud acquired with a single 128-channel lidar.

Figure 17c,d show a comparison of the trajectories where pedestrians were detected.

Table 6 presents the maximum detection position (x, y) and distance. The experiment demonstrated that the maximum detection position and distance yielded similar results. Finally,

Figure 18 shows the results of detecting an obscured driving vehicle.

Figure 18a shows the results of occluded driving vehicle detection from the point cloud acquired using the proposed method with two forty-channel lidars.

Figure 18b shows the results of occluded driving vehicle detection from the point cloud acquired with a single 128-channel lidar.

Figure 18c,d show a comparison of the trajectories where occluded driving vehicles were detected.

Table 7 shows the maximum detection position and distance. Vehicle #1 changes lanes in front of vehicle #2, resulting in temporary obscuration of vehicle #2 when changing lanes. This observation highlights that only occluded vehicles can be detected using the proposed method. It was confirmed that the multi-sensor placement method using the proposed approach is effective in handling occlusions between objects.

The experimental results in the real environment were similar to the simulation results of the proposed method, demonstrating the feasibility of the proposed method. Lidar occupancy was compared by implementing the proposed method in the simulation. Multiple lidars were placed to reduce blind spots at urban intersections, resulting in a higher occupancy rate than high-cost lidar. Multiple lidar placements were replicated in a real environment, and vehicles and pedestrians were detected using point clouds acquired from the lidars. The reproducibility and effectiveness of the proposed method were validated by comparing the detection ranges of the acquired data. In a real environment, multiple lidar placements exhibited a detection range similar to that achieved with a 128-channel lidar. It was evident that placing multiple lidars using the proposed method enhanced measurements, as demonstrated through comparative experiments in a real environment using lidar. For the placement of lidars in an actual urban environment, multiple lidars can be efficiently placed by generating a point cloud map of the environment and applying the proposed method, as demonstrated by experimental results. Our method has showcased that environmental information can be acquired from infrastructures. The acquired environmental information can then be processed to extend the vehicle’s detection range and transmit it to connected vehicles using V2X (Vehicle-to-Infrastructure) communication. This approach not only helps in reducing sensor blind spots in autonomous vehicles, enhancing the safety of other vehicles and pedestrians, but it also holds promise for further research in this domain.

6. Conclusions

In this paper, the lidar placement problem was defined as the problem of determining the lidar placement in an urban environment to minimize blind spots and optimize the number of beams reaching the point cloud. To mathematically formalize this problem, a point cloud map was processed and defined as the lidar occupancy space (LOS). Experimental results demonstrated that performance can be enhanced through our lidar placement method. Future work will involve expanding the proposed method to systems utilizing multiple lidar, radar, and cameras, and integrating it with edge–cloud infrastructure and V2X communication technology.

Author Contributions

Methodology, T.-H.K. and T.-H.P.; Software, T.-H.K. and G.-H.J.; Validation, T.-H.K., G.-H.J. and H.-S.Y.; Resources, H.-S.Y.; Data curation, G.-H.J. and H.-S.Y.; Writing—original draft, T.-H.K.; Writing—review & editing, K.-S.Y. and T.-H.P.; Visualization, T.-H.K.; Supervision, T.-H.P.; Project administration, K.-S.Y. and T.-H.P.; Funding acquisition, K.-S.Y. All authors have read and agreed to the published version of the manuscript.

Funding

Institute of Information & communications Technology Planning & Evaluation(IITP): 2021-0-01314; MSIT (Ministry of Science and ICT), Korea: IITP-2023-2020-0-01462.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data will be made available on request.

Acknowledgments

This work was supported by the Institute of Information & communications Technology Planning & Evaluation (IITP), funded by the Korean government (MSIT) under grant No. 2021-0-01314, for the development of driving environment data stitching technology to provide data on shaded areas for autonomous vehicles. Additionally, this research was supported by the Ministry of Science and ICT (MSIT), Korea, under the Grand Information Technology Research Center support program (IITP-2023-2020-0-01462) supervised by the IITP.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ren, H.; Zhang, Y. TrajMatch: Towards Automatic Spatio-temporal Calibration for Roadside LiDARs through Trajectory Matching. arXiv 2023, arXiv:2302.02157. [Google Scholar] [CrossRef]

- Lan, X.; Wang, C.; Lv, B.; Li, J.; Zhang, M.; Zhang, Z. 3D Point Cloud Stitching for Object Detection with Wide FoV Using Roadside LiDAR. Electronics 2023, 12, 703. [Google Scholar] [CrossRef]

- Zhang, Z.; Zhang, J.; Tao, Y.; Xiao, Y.; Yu, S.; Asiri, S.; Li, J.; Li, T. Traffic Sign Based Point Cloud Data Registration with Roadside LiDARs in Complex Traffic Environments. Electronics 2022, 11, 1559. [Google Scholar] [CrossRef]

- Guan, F.; Xu, H.; Tian, Y. Evaluation of Roadside LiDAR-Based and Vision-Based Multi-Model All-Traffic Trajectory Data. Sensors 2023, 12, 5377. [Google Scholar]

- Wen, X.; Hu, J.; Chen, H.; Huang, S.; Hu, H.; Zhang, H. Research on an Adaptive Method for the Angle Calibration of Roadside LiDAR Point Clouds. Sensors 2023, 17, 7542. [Google Scholar] [CrossRef] [PubMed]

- Lin, C.; Sun, G.; Wu, D.; Xie, C. Vehicle Detection and Tracking with Roadside LiDAR Using Improved ResNet18 and the Hungarian Algorithm. Sensors 2023, 19, 8143. [Google Scholar]

- Liu, H.; Lin, C.; Gong, B.; Liu, H. Lane-Level and Full-Cycle Multivehicle Tracking Using Low-Channel Roadside LiDAR. IEEE Trans. Instrum. Meas. 2023, 72. [Google Scholar] [CrossRef]

- Zhou, S.; Xu, H.; Zhang, G.; Ma, T.; Yang, Y. Leveraging deep convolutional neural networks pre-trained on autonomous driving data for vehicle detection from roadside LiDAR data. IEEE Trans. Intell. Transp. Syst. 2022, 23, 22367–22377. [Google Scholar] [CrossRef]

- Zhang, J.; Xiao, W.; Mills, J. Optimizing Moving Object Trajectories from Roadside Lidar Data by Joint Detection and Tracking. Remote Sens. 2022, 14, 2124. [Google Scholar] [CrossRef]

- Wang, L.; Lan, J. Adaptive Polar-Grid Gaussian-Mixture Model for Foreground Segmentation Using Roadside LiDAR. Remote Sens. 2022, 14, 2522. [Google Scholar] [CrossRef]

- Roos, S. A Framework for Simulative Evaluation and Optimization of Point Cloud-Based Automotive Sensor Sets. In Proceedings of the 2021 IEEE Intelligent Transportation Systems Conference, Indianapolis, IN, USA, 19–22 September 2021. [Google Scholar]

- Kim, T. Placement optimization of multiple lidar sensors for autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 2019, 21, 2139–2145. [Google Scholar] [CrossRef]

- Mou, S. An Optimal LiDAR Configuration Approach for Self-Driving Cars. arXiv 2018, arXiv:1805.07843. [Google Scholar]

- Berens, F. Genetic Algorithm for the Optimal LiDAR Sensor Configuration on a Vehicle. IEEE Sens. J. 2021, 22, 2735–2743. [Google Scholar] [CrossRef]

- Hu, H. Investigating the impact of multi-lidar placement on object detection for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022. [Google Scholar]

- Gu, J. Range Sensor Overview and Blind-Zone Reduction of Autonomous Vehicle Shuttles. IOP Conf. Ser. Mater. Sci. Eng. 2021, 1140, 012006. [Google Scholar]

- Liu, Z. Where should we place lidars on the autonomous vehicle-an optimal design approach, In Proceedings of the 2019 International Conference on Robotics and Automation, Montreal, QC, Canada, 20–24 May 2019.

- Cai, X. Analyzing Infrastructure LiDAR Placement with Realistic LiDAR Simulation Library. arXiv 2022, arXiv:2211.15975. [Google Scholar]

- Jin, S. A Novel Information Theory-Based Metric for Evaluating Roadside LiDAR Placement. IEEE Sens. J. 2022, 22, 21009–21023. [Google Scholar] [CrossRef]

- Qu, A. SEIP: Simulation-based Desing and Evaluation of Infrastructure-based Collective Perception. arXiv 2023, arXiv:2305.17892. [Google Scholar]

- Kloeker, L. Generic Approach to Optimized Placement of Smart Roadside Infrastructure Sensors Using 3D Digital Maps. In Proceedings of the 2022 IEEE International Conference on Intelligent Transportation Systems, Macau, China, 8–12 October 2022. [Google Scholar]

- OpenDrive. Available online: https://www.opendrive.com (accessed on 23 October 2023).

- Bresenham, J.E. Algorithm for computer control of a digital plotter. IBM Syst. J. 1965, 4, 25–30. [Google Scholar] [CrossRef]

- Brindle, A. Genetic Algorithm for Function Optimization. Ph.D. Dissertation, Department of Computer Science, University of Alberta, Edmonton, AB, Canada, 1980. [Google Scholar]

- Croes, G.A. A method for solving traveling-salesman problems. Oper. Res. 1958, 6, 791–812. [Google Scholar] [CrossRef]

- HESAI. Available online: https://www.hesaitech.com/product/pandar40p (accessed on 23 October 2023).

- Velodyne. Available online: https://velodynelidar.com/products/alpha-prime (accessed on 23 October 2023).

- Autoware. Available online: https://autoware.org (accessed on 23 October 2023).

Figure 1.

Differences in quality of raw data depending on lidar placement. (a) Placement #1. (b) Placement #2.

Figure 1.

Differences in quality of raw data depending on lidar placement. (a) Placement #1. (b) Placement #2.

Figure 2.

(a) Vertical angular resolution of lidar. (b) Horizontal angular resolution of lidar.

Figure 2.

(a) Vertical angular resolution of lidar. (b) Horizontal angular resolution of lidar.

Figure 3.

Multiple lidar system in an urban environment. (a) Placement of three lidars. (b) Coverage of each lidar. (c) Total coverage.

Figure 3.

Multiple lidar system in an urban environment. (a) Placement of three lidars. (b) Coverage of each lidar. (c) Total coverage.

Figure 4.

(a) Placement of single lidar. (b) Placement of dual lidars. (red: point cloud of lidar #1, green: point cloud of lidar #2).

Figure 4.

(a) Placement of single lidar. (b) Placement of dual lidars. (red: point cloud of lidar #1, green: point cloud of lidar #2).

Figure 5.

Lidar Occupancy Space (LOS) and Lidar Occupancy Voxel grid (LOV).

Figure 5.

Lidar Occupancy Space (LOS) and Lidar Occupancy Voxel grid (LOV).

Figure 6.

(a) Point cloud map. (b) Weight for lidar occupancy space.

Figure 6.

(a) Point cloud map. (b) Weight for lidar occupancy space.

Figure 7.

Total lidar occupancy (red voxels).

Figure 7.

Total lidar occupancy (red voxels).

Figure 8.

Generation of new chromosomes via the crossover operation.

Figure 8.

Generation of new chromosomes via the crossover operation.

Figure 9.

Generation of new chromosomes via the mutation operation.

Figure 9.

Generation of new chromosomes via the mutation operation.

Figure 10.

Experimental environment. (a) Satellite map. (b) 4-way intersection (#1).

Figure 10.

Experimental environment. (a) Satellite map. (b) 4-way intersection (#1).

Figure 11.

Point cloud map and lidar occupancy space. (a,b) Intersection #1. (c,d) Intersection #2.

Figure 11.

Point cloud map and lidar occupancy space. (a,b) Intersection #1. (c,d) Intersection #2.

Figure 12.

Lidar occupancy space and lidar beams at Intersection #1. (a,b) One one-hundred-and-twenty-eight-channel lidar (S3). (c,d) Two forty-channel lidars (S1). (e,f) Three forty-channel lidars (S2).

Figure 12.

Lidar occupancy space and lidar beams at Intersection #1. (a,b) One one-hundred-and-twenty-eight-channel lidar (S3). (c,d) Two forty-channel lidars (S1). (e,f) Three forty-channel lidars (S2).

Figure 13.

Lidar occupancy space and lidar beams at Intersection #2. (a,b) One one-hundred-and-twenty-eight-channel lidar (S6). (c,d) Two forty-channel lidars (S4). (e,f) Three forty-channel lidars (S5).

Figure 13.

Lidar occupancy space and lidar beams at Intersection #2. (a,b) One one-hundred-and-twenty-eight-channel lidar (S6). (c,d) Two forty-channel lidars (S4). (e,f) Three forty-channel lidars (S5).

Figure 14.

Lidars placed on real environment. (a) First 40-channel lidar. (b) Second 40-channel lidar. (c) The 128-channel lidar.

Figure 14.

Lidars placed on real environment. (a) First 40-channel lidar. (b) Second 40-channel lidar. (c) The 128-channel lidar.

Figure 15.

The detailed scenarios. (a) Comparison of driving vehicle detection range. (b) Comparison of walking pedestrian detection range. (c) Comparison of detection range for blocked vehicles.

Figure 15.

The detailed scenarios. (a) Comparison of driving vehicle detection range. (b) Comparison of walking pedestrian detection range. (c) Comparison of detection range for blocked vehicles.

Figure 16.

Comparison of vehicle detection range. (a) Two forty-channel lidars. (b) One one-hundred-and-twenty-eight-channel lidar. (c) Visualization of vehicle trajectory. (d) Graph of vehicle trajectory.

Figure 16.

Comparison of vehicle detection range. (a) Two forty-channel lidars. (b) One one-hundred-and-twenty-eight-channel lidar. (c) Visualization of vehicle trajectory. (d) Graph of vehicle trajectory.

Figure 17.

Comparison of pedestrian detection range. (a) Two forty-channel lidars. (b) One one-hundred-and-twenty-eight-channel lidar. (c) Visualization of pedestrian trajectory. (d) Graph of pedestrian trajectory.

Figure 17.

Comparison of pedestrian detection range. (a) Two forty-channel lidars. (b) One one-hundred-and-twenty-eight-channel lidar. (c) Visualization of pedestrian trajectory. (d) Graph of pedestrian trajectory.

Figure 18.

Comparison of occluded vehicle detection range. (a) Two forty-channel lidars. (b) One one-hundred-and-twenty-eight-channel lidar. (c) Visualization of vehicle trajectory. (d) Graph of vehicle trajectory.

Figure 18.

Comparison of occluded vehicle detection range. (a) Two forty-channel lidars. (b) One one-hundred-and-twenty-eight-channel lidar. (c) Visualization of vehicle trajectory. (d) Graph of vehicle trajectory.

Table 1.

Lidar specifications [

26,

27].

Table 1.

Lidar specifications [

26,

27].

| Item | Unit | Specifications |

|---|

| Pandar40P | VLS-128 |

|---|

| Scan planes | Channel | 40 | 128 |

| Range | m | Up to 200 | Up to 245 |

| Range accuracy | cm | 2 | 3 |

| FOV (vertical) | Degree | 40 (−25 to +15) | 40 (−25 to +15) |

| Resolution (vertical) | Degree | 0.33 (non-linear) | 0.11 (non-linear) |

| FOV (horizontal) | Degree | 360 | 360 |

| Resolution (horizontal) | Degree | 0.2 (10 Hz), 0.4 (20 Hz) | 0.2 (10 Hz), 0.4 (20 Hz) |

| Frame rate | Hz | 10, 20 | 5 to 20 |

Table 2.

Experimental setup.

Table 2.

Experimental setup.

| Config | Environment | Intersection | Sensor | Description |

|---|

| Model | Number |

|---|

| S1 | Simulator | #1 | Pandar40P | 2 | Lidar placement optimization simulation at Intersection #1 |

| S2 | 3 |

| S3 | VLS128 | 1 |

| S4 | #2 | Pandar40P | 2 | Lidar placement optimization simulation at Intersection #2 |

| S5 | 3 |

| S6 | VLS128 | 1 |

| R1 | Real | #1 | Pandar40P | 2 | Reproducing optimal placement and comparing vehicle detection ranges |

| R2 | VLS128 | 1 |

| R3 | Pandar40P | 2 | Reproducing optimal placement and comparing pedestrian detection ranges |

| R4 | VLS128 | 1 |

| R5 | Pandar40P | 2 | Reproducing optimal placement and comparing detection ranges for occluded vehicles |

| R6 | VLS128 | 1 |

Table 3.

Lidar occupancy according to each parameter (in Intersection #1).

Table 3.

Lidar occupancy according to each parameter (in Intersection #1).

| Probability | One One-Hundred-and-Twenty-Eight-Channel

Lidar (S3) | Two Forty-Channel

Lidars (S1) | Three Forty-Channel

Lidars (S2) |

|---|

| Crossover | Mutation | LO | Best

Placement | LO | Best

Placement

(Second Lidar) | LO | Best

Placement (Third Lidar) |

|---|

| 0.4 | 0.2 | 154,522 |

=

(−1.5,

0.3,

2.8,

−1.6,

−1.0) | 138,903 |

=

(6.8,

6.0,

4.9,

−0.9,

6.0) | 174,774 |

=

(6.5,

−21.1,

3.8,

12.4,

−12.4) |

| 0.1 | 146,039 | 157,905 | 166,702 |

| 0.05 | 151,800 | 147,529 | 169,019 |

| 0.02 | 148,972 | 152,257 | 168,000 |

| 0.3 | 0.2 | 146,201 | 137,886 | 192,507 |

| 0.1 | 157,397 | 141,684 | 175,029 |

| 0.05 | 151,451 | 140,585 | 165,479 |

| 0.02 | 150,172 | 141,407 | 169,823 |

| 0.2 | 0.2 | 146,840 | 145,036 | 172,465 |

| 0.1 | 155,187 | 147,487 | 168,328 |

| 0.05 | 144,968 | 148,546 | 166,546 |

| 0.02 | 150,810 | 152,469 | 180,816 |

| 0.1 | 0.2 | 150,838 | 143,189 | 170,464 |

| 0.1 | 157,053 | 158,553 | 169,239 |

| 0.05 | 140,229 | 152,964 | 172,629 |

| 0.02 | 162,423 | 141,022 | 169,309 |

| Mean LO | 150,931 | 146,714 | 171,946 |

| Max LO | 162,423 | 158,553 | 192,507 |

Table 4.

Lidar occupancy according to each parameter (in intersection #2).

Table 4.

Lidar occupancy according to each parameter (in intersection #2).

| Probability | One One-Hundred-and-Twenty-Eight-Channel

Lidar (S3) | Two Forty-Channel

Lidars (S1) | Three Forty-Channel

Lidars (S2) |

|---|

| Crossover | Mutation | LO | Best

Placement | LO | Best

Placement

(Second Lidar) | LO | Best

Placement (Third Lidar) |

|---|

| 0.4 | 0.2 | 144,519 |

=

(−6.9,

−5.5,

4.7,

1.7,

−0.9) | 144,376 |

=

(6.4,

4.7,

4.1,

−2.2,

−1.7) | 197,441 |

=

(7.7,

−19.6,

4.9,

3.5,

−6.7) |

| 0.1 | 143,080 | 140,789 | 194,700 |

| 0.05 | 140,606 | 149,373 | 189,926 |

| 0.02 | 146,431 | 145,034 | 194,462 |

| 0.3 | 0.2 | 146,760 | 149,409 | 192,075 |

| 0.1 | 143,894 | 152,930 | 197,398 |

| 0.05 | 151,688 | 152,350 | 203,682 |

| 0.02 | 145,399 | 166,184 | 191,239 |

| 0.2 | 0.2 | 142,365 | 149,438 | 191,872 |

| 0.1 | 148,304 | 147,046 | 192,287 |

| 0.05 | 139,525 | 148,515 | 194,342 |

| 0.02 | 141,120 | 164,734 | 187,869 |

| 0.1 | 0.2 | 143,224 | 146,827 | 187,606 |

| 0.1 | 142,970 | 151,856 | 174,500 |

| 0.05 | 153,015 | 155,803 | 174,708 |

| 0.02 | 155,200 | 159,735 | 169,895 |

| Mean LO | 145,506 | 151,525 | 189,625 |

| Max LO | 155,200 | 166,184 | 203,682 |

Table 5.

Comparison of maximum detection position and distance.

Table 5.

Comparison of maximum detection position and distance.

| | One One-Hundred-and-Twenty-Eight-Channel Lidar (R2) | Two Forty-Channel Lidars (R1) |

|---|

| X | Y | Maximum Distance | X | Y | Maximum Distance |

|---|

| Easting | −95.3 | −42.7 | 95.5 | −94.8 | −41.0 | 95.9 |

| Northing | 92.2 | 32.4 | 95.7 | 32.4 |

| Sum | 187.5 | 75.1 | 190.5 | 73.4 |

Table 6.

Comparison of maximum detection position and distance.

Table 6.

Comparison of maximum detection position and distance.

| | One One-Hundred-and-Twenty-Eight-Channel Lidar (R4) | Two Forty-Channel Lidars (R3) |

|---|

| X | Y | Maximum Distance | X | Y | Maximum Distance |

|---|

| Easting | −86.4 | −35.8 | 86.7 | −85.1 | −35.4 | 85.5 |

| Northing | 31.6 | 28.0 | 37.7 | 27.1 |

| Sum | 118.0 | 63.8 | 122.8 | 62.5 |

Table 7.

Comparison of maximum detection position and distance.

Table 7.

Comparison of maximum detection position and distance.

| | One One-Hundred-and-Twenty-Eight-Channel Lidar (R6) | Two Forty-Channel Lidars (R5) |

|---|

| X | Y | Maximum Distance | X | Y | Maximum Distance |

|---|

| Vehicle #1 | Easting | −89.2 | 1.1 | 91.8 | −90.3 | 0.9 | 97.8 |

| Northing | 91.6 | 6.4 | 97.6 | 6.5 |

| Sum | 180.8 | 5.3 | 187.9 | 5.6 |

| Vehicle #2 | Easting | −89.9 | 3.6 | 99.7 | −87.8 | 2.7 | 99.2 |

| Northing | 99.5 | 6.6 | 99.1 | 6.8 |

| Sum | 189.4 | 3.0 | 186.9 | 4.1 |

| Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).