Abstract

In this paper, we propose a novel low-complexity hand gesture recognition framework via a multiple Frequency Modulation Continuous Wave (FMCW) radar-based sensing system. In this considered system, two radars are deployed distributively to acquire motion vectors from different observation perspectives. We first independently extract reflection points of the interested target from different radars by applying the proposed neighboring reflection points detection method after processing the traditional 2-dimensional Fast Fourier Transform (2D-FFT). The obtained sufficient corresponding information of detected reflection points, e.g., distances, velocities, and angle information, can be exploited to synthesize motion velocity vectors to achieve a high signal-to-noise ratio (SNR) performance, which does not require knowledge of the relative position of the two radars. Furthermore, we utilize a long short-term memory (LSTM) network as well as the synthesized motion velocity vectors to classify different gestures, which can achieve a significantly high accuracy of gesture recognition with a 1600-sample data set, e.g., . The experimental results also illustrate the robustness of the proposed gesture recognition systems, e.g., changing the environment background and adding new gesture performers.

1. Introduction

Hand gestures are one of the most effective communication means for human beings and have been used in various applications for human–computer interaction (HCI) [1], including smart homes [2], virtual reality (VR) [3], game control [4], and text input [5]. In these applications, accurate gesture recognition plays an important role in enabling sophisticated functionalities and ensuring user experience. As such, many existing works have attempted to develop gesture recognition solutions, for which there are mainly three categories, i.e., wearable device-based solutions [6], visual device-based solutions [7], and wireless sensing-based solutions [8].

Wearable device-based solutions rely on the sensors equipped on wearable devices to collect motion data in real time [9,10,11]. However, the requirement of wearing the devices can lead to annoying inconveniences and hence may limit their applications. Visual device-based solutions rely on camera sensors for gesture recognition [12,13,14] and can be applied in a diverse range of scenarios [15,16,17] due to their exceptional experiential qualities. The main drawback of a visual device-based solution is its potential possibility of the invasion of privacy.

Wireless sensing-based solutions deal with the above problems by measuring and analyzing radio signals that interact with human gestures [8]. One popular sensing-based solution relies on WiFi systems [5,18,19,20], which can classify human gestures via Doppler features extracted from the channel state information (CSI) obtained at WiFi transceivers [21]. One potential limiting factor of WiFi-based systems is that obtaining CSIs from commercial WiFi chips may be difficult since most commercial WiFi CSI tools are proprietary of chip manufactures [22] and are not open.

As an alternative sensing-based gesture recognition solution, mmWave radar-based systems [23,24,25,26] have recently received significant attention due to the recent advances in mmWave radar chip manufacturing [27] and the continuing reduction in the cost and physical size of hardware [28]. Unlike WiFi-based solutions, mmWave radar-based solutions do not need to stick to the CSI measurement intervals specified by WiFi standard [22], hence allowing for more flexible measurements for hand gesture recognition. Moreover, as mmWave radar operates in a dedicated frequency band that is different from most existing communication systems, the impact of mutual interference is less severe.

This works focuses on mmWave radar-based systems for hand gesture recognition. Specifically, we consider mmWave Frequency Modulation Continuous Wave (FMCW) radar for hand gesture recognition, motivated by its capability of simultaneously measuring the range and velocity of the target of interest. Unlike most FMCW-based systems that consider the use of a single FMCW-radar to extract the features of a hand gesture, in this work, we consider a dual FMCW system that utilizes two FMCW radars to measure the signals of human hand gestures. We note that as the chip size and cost are continuing to drop for mmWave FMCW radars, it is possible to deploy multiple mmWave FMCW radar sensors in the same space, e.g., in a smart home, to support diverse applications such as human activity detection, health monitoring, and hand gesture recognition.

To exploit the benefit of two mmWave FMCW radars, we first develop an algorithm to extract the motion velocity vectors of human hand gestures based on the fusion of range and velocity information obtained from the multiple radars. As will be shown later in this paper, the motion velocity vector acts as an distinct feature that can be used to classify different human gestures. Based on the motion velocity vector extracted, a gesture classification model based on a long short-term memory (LSTM) network is proposed to capture the sequential dependence of the velocities. Our main contributions are highlighted below:

- We develop a systematic framework of high-accuracy hand gesture recognition based on two mmWave FMCW radars. The proposed framework first detects the moving target and identifies the reflection points of the target from each frame of range-Doppler (r-D) images generated from the two radars. The angular information of the target is then obtained by applying beamforming. Then, the motion velocity vector is synthesized by combining the angles and velocities of the target estimated by the two radars.

- We propose a novel feature extraction algorithm to synthesize the motion velocity vector that is crucial for hand gesture recognition. The proposed algorithm can effectively estimate the true velocity of the moving target and eliminates the requirement of the knowledge on the relative position of the two radars. This allows for flexible deployment of the two radars

- We propose a deep-learning gesture classification model based on the LSTM network, which effectively captures the sequential correlations between the motion velocities at different time instances. The proposed model takes only the motion velocity vector as the input feature and is shown to achieve satisfactory hand gesture recognition accuracy and robustness. In an experiment contributed by 10 volunteers with 1600 samples, the proposed model achieves 98.0% accuracy.

The rest of the article is organized as follows. Section 2 discusses related work with a focus on existing FMCW-based hand gesture recognition solutions. Section 3 presents some basic principles of FMCW radar processing Section 3. Our proposed hand gesture solution is detailed in Section 4. In Section 5, we present experiment results and provide a comparison with traditional approaches. Conclusions are drawn in Section 6. For ease of exposition, vectors are denoted by bold lowercase characters, whereas matrices are represented by bold uppercase characters.

2. Related Work

Classification algorithms for hand gesture recognition are mainly divided into two categories: machine learning-based solutions and deep learning-based solutions. At the early stage of FMCW-based hand gesture recognition, machine learning is the mainstream method for classification. The method in [23], proposed by the Google team, extracts nine low-dimensional motion features and applies a Random Forest classifier to identify gestures for HCI applications. In addition, Malysa et al. [24] proposed gathering motion feature vectors using a 77 GHz FMCW radar and training them based on Hidden Markov Models (HMM).

With the development of neural networks, there are numerous hand gesture recognition systems incorporating neural networks into their designed hand gesture recognition systems, and the Convolutional Neural Network (CNN) is one of the most widely adopted algorithms. As a representative example, ref. [29] proposed generating micro-Doppler (m-D) images from the radar signals and then using a pre-trained CNN model for hand gesture classification. This approach is a straightforward application of the procedure commonly adopted in imaging processing, but for hand gesture classification, its classification accuracy is limited since the m-D images cannot distinguish between different gestures with similar image patterns, e.g., the gesture of swiping from left to right and the gesture of swiping from right to left. To resolve this issue, ref. [30] proposed a multi-stream CNN model that takes three types of figures as the input, i.e., the range-time figure, the Doppler-time figure, and the angle-time figure, and achieves better classification accuracy. The authors of [31] further improved the accuracy of gesture recognition by exploiting the range-Doppler-angular multi-dimensional gesture features space and exploring optimal multi-dimensional feature combination strategies for a multi-channel CNN. In addition, based on their previous work, the authors in [32] proposed the method of spatial position alignment and adopted a multi-channel CNN for multi-position hand gesture recognition.

The LSTM network is extensively employed as another prominent method in hand recognition systems. For example, the authors of [33] extracted motion profiles from r-D images and used the LSTM encoder to complete the final recognition by extracting the global temporal features. Meanwhile, the authors of [34] obtained the range-Doppler-angle trajectories from a 77 GHz FMCW Multiple Input Multiple Output (MIMO) radar, and built a LSTM network with the reused forward propagation approach to learn the gesture features.

3. Preliminaries

3.1. Radar Signal Model

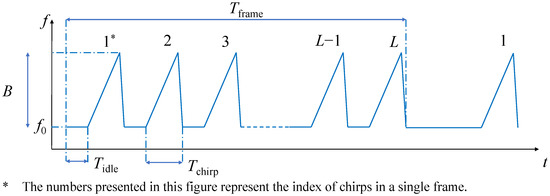

In this subsection, we briefly introduce the principle of the FMCW radar. The transmitted signal of the FMCW radar consists of a series of continuous frequency-modulated waves. The signal within a frequency modulation period is called a chirp. The transmitted FMCW signal frame consists of multiple chirps; see Figure 1 for an illustration, and all notations are listed in Table 1.

Figure 1.

The time frame structure of the transmitted FMCW signal.

Table 1.

A list of notations.

For a single chirp, the transmitted signal can be expressed as [31]:

where t is the fast time, , denotes the complex amplitude coefficient of the transmitted signal, and K is the frequency modulation slope.

The signal echoed from a moving target and received by the radar can be represented as [31]:

where is the complex amplitude coefficient of the received signal, denotes the Doppler shift, is the wavelength, is the carrier frequency of the transmitted signal, v is the velocity, is the flight time of the signal, c is the speed of light, and d denotes the range of the target.

The received signal is mixed with the transmitted signal upon receiving it to produce the intermediate frequency (IF) signal, which can be represented as [31]:

Since is typically much smaller than other terms in Equation (3), the IF signal can be approximated as:

where , is the frequency of the IF signal and

is the time-varying phase.

For different chirps in the same frame, f and will change from one chirp to another. This can be used to estimate of the velocity and the distance of the target of interest. Supposing the IF signal is sampled at a fixed time interval , then the discrete-time samples of a frame can be stored in a two-dimensional matrix , for which each column corresponds to a different chirp. Here, N is the number of samples in each chirp and L is the number of chirps in a frame. Following Equation (4), the entries of can be represented as:

where n is the fast time index, standing for the index of sampling points in the modulation time, , and l is the slow time index, corresponding to the chirp index in a frame, . and are related to the range of target in the l-th chirp.

3.2. Range and Velocity Estimation

To extract the range information of the target, Fast Fourier Transform (FFT) is applied to each column of . For the l-th chirp (column), the frequency domain signal can be represented as:

After the FFT operation, the range of the target can be estimated according to the peak index of the frequency response, denoted as , and the relationship between the , , and can be expressed as follows:

where . Then, the range between the radar and the target can be calculated as:

To estimate the velocity of the target, FFT is applied to each row of matrix . For the n-th row, the IF signal in Equation (6) can be rewritten as:

where is the phase of , is the phase difference of two adjacent elements of , and . For the n-th row, the difference between adjacent values of f is much smaller than ; it can be approximated as . Then, the signal after the FFT operation can be represented as:

Denoting as the frequency index of the peak in the spectrum, the velocity of the target can be calculated as:

3.3. Direction of Target Movement

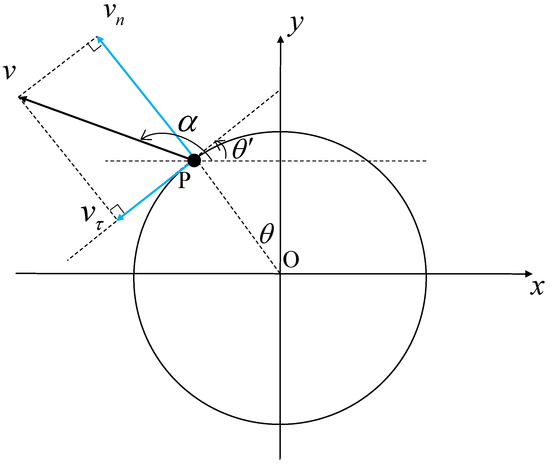

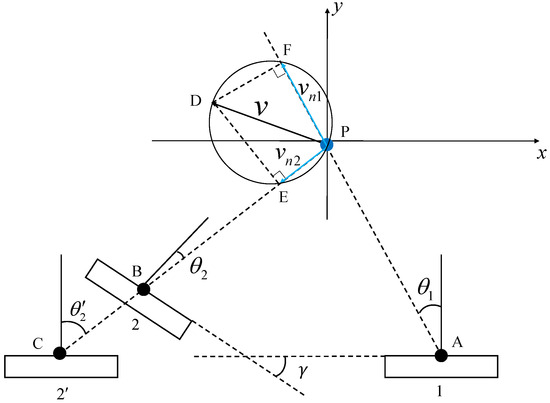

For hand gesture recognition, it is intuitive that the direction of the hand movement is an important feature. However, such a feature is not directly available from single FMCW radar signals. To see this, let us consider a simple example, as illustrated in Figure 2, where a reflecting target P moves in the field of view (FoV) of the radar with velocity v towards direction . We assume that the FMCW radar has multiple antennas. Thus, the instantaneous direction of the target with respect to the radar, i.e., , can be estimated via array signal processing techniques [35].

Figure 2.

The decomposition relationship between the absolute velocity and the radial velocity for gestures.

The velocity of the motion can be decomposed into the radial velocity, , and the tangent velocity, . The radial velocity, , can be expressed as [18]:

As demonstrated, the azimuth angle, , and the direction of motion, , are the primary factors that influence .

The velocity estimated by the principles mentioned in Section 3.2 correspond to the radial velocity since it is estimated based on the Doppler principle. The tangent velocity , which may play an important role in gesture recognition, cannot be estimated. Motivated by this issue, we propose to utilize two mmWave FMCW radars with a MIMO antenna array.

4. The Dual FMCW Radar System for Gesture Recognition

In this section, we introduce the dual FMCW radar system for gesture recognition and detail the signal processing procedure. The dual-radar system consists of two mmWave FMCW radars that are placed at the same height, with their FoVs partially overlapped. The two radars are both connected to the same controller (e.g., a computer) for FMCW control and synchronization. The IF signals sampled from these two radars are both collected at the controller for further signal processing.

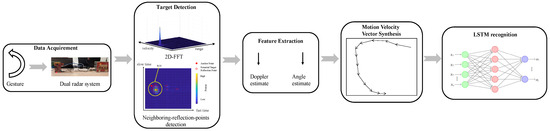

Figure 3 illustrates the overall procedure of the hand gesture recognition system. The IF signals are first processed to remove the static part of the clutter by eliminating mean value of phase, followed by 2-dimensional Fast Fourier Transform (2D-FFT) to convert the time-domain IF signal into the r-D space. A neighboring-reflection-points detection method is then developed to identify the region of interest (ROI) in the r-D space. Upon the identification of the ROI, the information of radial velocity and angle of the target is then extracted from both the radars. The extracted information is then used to synthesize the motion velocity feature, which is fed into an LSTM network for gesture classification.

Figure 3.

The flow chart of the proposed gesture recognition system.

4.1. r-D Region of Interest Detection

As the clutter of environmental background can significantly influence the results of target detection, the proposed algorithm first removes the cluttering signals and finds the ROI from the obtained movement data set to mitigate the impact of environmental background on gesture recognition.

With respect to the static part of the clutter, it is easy to eliminate its impact by averaging the different chirps of IF signals. Specifically, the reference signal, , can be obtained by summing and averaging the column vectors of the IF data matrix (see Section 3.1 for its definition):

Then, after removing signals which are contributed by the static part of the clutter, , from each column of the matrix , the remaining signals of the IF matrix, , containing the dynamic part of the clutter, e.g., reflection points of the moving target, can be expressed as:

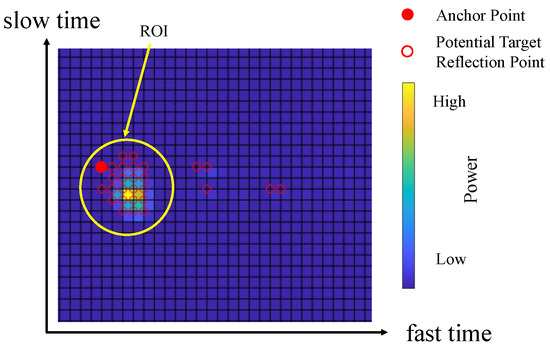

Compared to the elimination of the static part of the clutter, the signal-processing procedure for the dynamic clutter is more complicated. To reduce the impact of dynamic clutter on target detection, we develop a new detection method, as detailed in Algorithm 1. Figure 4 presents a schematic diagram for the signal-processing procedure of removing dynamic clutter. First of all, all the reflection points detected via the constant false alarm rate (CFAR) method are considered as potential target reflection points (red circles). Among potential target reflection points, the one with the highest velocity and being closest to the radar is chosen as the anchor point (red solid circle). Algorithm 1 is applied to search all potential reflection points (see the yellow circle) around the anchor point to form an ROI, and other potential target reflection points are removed in the following processes to reduce the impact of dynamic clutter.

| Algorithm 1 The neighboring-reflection-points detection for reducing dynamic clutter. |

| Input: : the IF matrix after removing the static clutters |

| Output: the index of reflection point |

|

Figure 4.

The demonstration for the proposed neighboring-reflection-points detection method.

4.2. Feature Extraction

Upon the identification of the reflection points of the motion, the relative velocities and distances of the target, , , , and , can be acquired for the two radars using the traditional FMCW processing methods described in Section 3.2. Additionally, since both radars have multiple antennas, the angle of each reflection point, i.e., and with respect to the two radars, can also be obtained using beamforming techniques, specifically [35]:

where denotes the IF data received at the antenna array and is presented as

and denotes the n-th row and l-th column IF data for M-th receiving antennas. In addition, the beam-steering vector with a certain determined angle is used to determine the angle of arrival of the target, and represents the beamforming steering matrix, which is given by:

where the beamformer used for the uniform linear array, , can be represented as [35]:

In Equation (19), is the designed angular resolution of receiving beamforming and . Then, the angle of interest can be obtained by finding the peak value in ; i.e., supposing is the angle index of peak value, then the angle of target can be estimated as .

4.3. Motion Velocity Vector Synthesis

With the features extracted from these two radars, i.e., and , the full velocity of the target can be estimated. Specifically, we consider a Cartesian coordinate system with the horizontal antenna direction of radar 1 as the x-axis. Then, the radial velocity vectors of the target detected at two radars 1 and 2 can be expressed as:

and

respectively.

It can be noted that the normal directions of the antenna arrays of radar 1 and 2 do not need to be parallel to each other. As illustrated in Figure 5, radar 2 deployed at point B is equivalent to radar deployed at point C for estimating the same full velocity. Therefore, radar 2 can be equivalently considered as radar , which is deployed in parallel to radar 1, and the relationship between these azimuths are given as follows:

Figure 5.

Motion velocity vector synthesis.

Based on principles presented in Section 3.2, it can be seen that and are two projection components of v in two different directions. To obtain the true velocity vector of the target, we utilize the radial velocities, and , as well as the azimuth angles and , detected at different radars to resolve the true velocities of the target projected onto the x-axis and the y-axis, which are given by:

where the true velocity projects onto the x-axis, x, and can be expressed as:

Finally, by substituting Equation (24) into Equation (23), we can obtain the value y, which represents the projection of the true velocity onto the y-axis.

The method described above is applied to each frame of the IF data to obtain the velocity of the target in the corresponding frame. After applying this process to consecutive frames, a sequence of the velocities, i.e., the motion velocity vector, is extracted and can be presented as:

4.4. Gesture Classification via LSTM

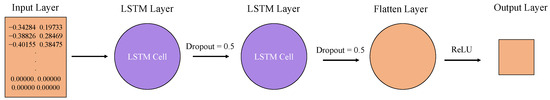

To classify the gestures based on the motion velocity vector, we adopt the LSTM network, which is widely used in sequential data analysis. As the motion velocity vector contains a sequence of velocities that capture the sequential movement of hand gestures, the LSTM is a natural fit to this task. The LSTM considered in this work contains two LSTM layers and one flatten layer, as illustrated in Figure 6.

Figure 6.

The architecture of the LSTM network adopted in the proposed gesture recognition system.

Instead of making any modifications to the traditional structure of the LSTM network, we directly employ a general LSTM network which only has two LSTM layers. The input features are passed through the flattening layer to reduce their dimensionality and subsequently subjected to the ReLU activation function.

5. Experiments and Results

In this section, we evaluate the performance of the dual-radar system driven by the information processing procedure detail in Section 4, using real data measured by radars.

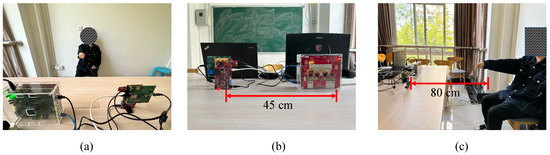

5.1. Radar Parameters and Placement

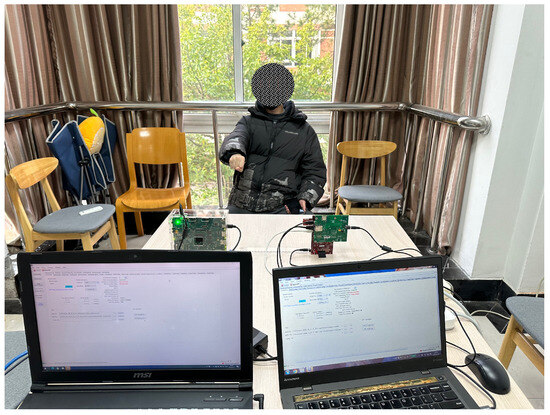

In our experiments, we utilize two TI commercial mmWave FMCW radars for gesture recognition, e.g., one TI MMWCAS-DSP/RF-EVM radar and one TI IWR-6843-AOP-EVM radar.

The TI IWR-6843-AOP-EVM radar consists of three transmit antennas and four receive antennas and operates between 60 GHz and 64 GHz. The TI MMWCAS-DSP/RF-EVM consists of 12 transmit antennas and 16 receive antennas and operates between 76 GHz and 81 GHz. The deployment of the two mmWave FMCW radars and the environment for collecting the gesture data set are shown in Figure 7. The distance between these two radars is approximately 45 cm, as shown in Figure 7b, and the distance between radars and the hand of the gesture performers is approximately 80 cm, as shown in Figure 7c. With the aim of mitigating the influence of non-target objects on the recognition results, we ensure that the experiment encompassed predominantly wall structures within the background setting. The configuration parameters of radars used in our experiments are presented in Table 2 and Table 3.

Figure 7.

The environment of the experiment and the placement of two radars: (a) experimental background; (b) specific placement of radars; (c) user-to-radar spacing.

Table 2.

Radar configuration parameters for IWR-6843-AOP-EVM.

Table 3.

Radar configuration parameters for MMWCAS-DSP/RF-EVM.

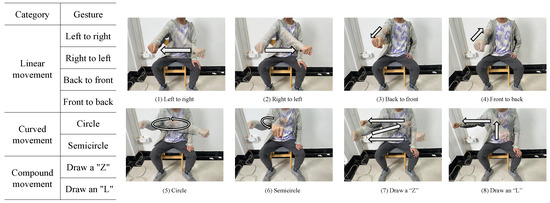

5.2. Data Set

To evaluate the capability of the proposed framework in gesture recognition, we consider eight different gestures, as shown in Figure 8. The gestures can be classified into three categories, namely the linear gestures, the curved gestures and the compound ones. The linear gestures consist of four different basic gestures, e.g., left to right motion, right to left motion, front to back motion, and back to front motion, and the curved gestures contain those drawing a circle and drawing a semicircle. The compound gestures can be decomposed into a combination of several basic linear and curved gestures. For example, the compound gesture of drawing a “Z” in the plane consists of three basic gestures: left to right “→”, front to back “↙” and left to right “→”.

Figure 8.

Diagram of the eight hand gestures.

Based on the gestures defined, a data set with 1600 samples is collected in real experiments. To enhance the diversity of the gesture data set and to better test the robustness of the proposed framework, 10 volunteers (six males and four females) with different heights, weights, and gesture habits were invited to perform the gestures. For each volunteer, each gesture was performed 20 times.

The 1600 samples in the data set are divided randomly into two groups, with the training set containing 1280 samples and the test set containing 320 samples. The training set is used to training the LSTM network, while the test set is used to assess the accuracy of gesture recognition.

5.3. Performance Evaluations

In this subsection, we evaluate the performance of our proposed hand gesture recognition framework.

For comparisons, we consider two methods as baselines. Specifically, the first baseline adopts the conventional classification method based on the m-D image extracted from the radars and a pre-trained CNN. The m-D image is the input feature and the CNN network acts as a classifier. The second baseline converts the motion velocity vector to an image and then uses a pre-trained CNN network as a classifier.

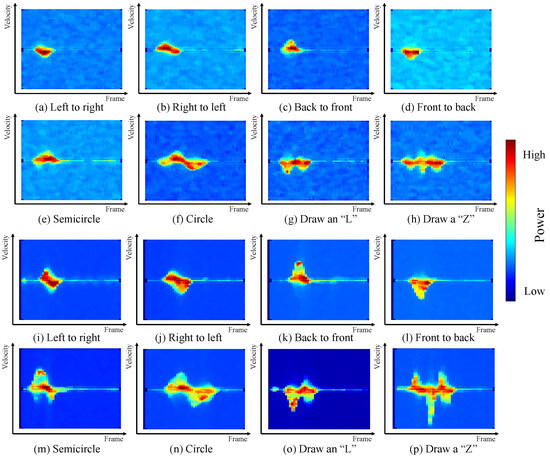

For the first baseline, we consider three types of input feature for a more comprehensive evaluation, including the m-D images obtained from IWR-6843-AOP-EVM (shown in Figure 9a–h), the m-D image obtained from MMWCAS-DSP/RF-EVM (shown in Figure 9i–p) and the m-D images from both radars. For the first two types of input features, a classical CNN architecture with two convolutional layers is adopted. Each layer has 16 convolutional filters and the dimensions of each filter are 3 by 3. For the training of the considered CNN network, the number of epochs is set to 100 and the learning rate is considered as a constant value of .

Figure 9.

The m-D images of different hand gestures: (a–h) obtained from IWR-6843-AOP-EVM; (i–p) obtained from MMWCAS-RF-EVM.

While both the m-D images are adopted as the input features, we train a two-channel CNN network to classify the gestures. For each channel of the CNN network, there are two convolutional layers, each with 32 filters and a kernel size of . The outputs of these two channels of the CNN network are concatenated and flattened, which are then fed into a fully connected layer to produce the gesture label. When training the network, the number of epochs and the learning rate are set to 50 and , respectively.

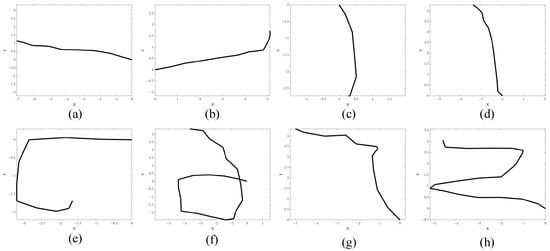

For the second baseline, the image input to the CNN is an image converted from the motion velocity vector extracted from the two radars. To convert the images, we regard each column as a vector in Cartesian coordinates and plot them in chronological order. In Figure 10, we present several examples of the converted images. As can be seen from the figure, some gestures cannot be distinguished from the converted images, e.g., the right to left gesture and left to right gesture cannot be accurately distinguished from the generated images since they are almost same. The parameters of the CNN are identical to those used in baseline 1 with a single image as the input, but the CNN was trained using the motion images.

Figure 10.

Grayscale images of eight different trajectories: (a) left to right, (b) right to left, (c) back to front, (d) front to back, (e) semicircle, (f) circle, (g) draw an “L”, (h) draw a “Z”. “X” and “Y” in figures represent the value of the motion velocity vector in the x and y directions, respectively.

For the proposed solution, the size of the input feature is fixed at into with . The LSTM model has two LSTM layers, and each LSTM layer has 200 hidden units. ReLU is chosen as the activation function. In training process, the batch size is set to 3, while the number of epochs and learning rate are set to 100 and , respectively.

Table 4, Table 5, Table 6 and Table 7 present the confusion matrix to illustrate the recognition accuracy of the proposed framework and the baselines. As can be seen from comparing Table 4 to Table 5, the gesture recognition accuracy for the MMWCAS-DSP/RF-EVM radar is slightly higher compared to that of the IWR-6843-AOP-EVM radar, as expected, since the former has better signal quality. This is evident in Figure 9a–p, which show that MMWCAS-DSP/RF-EVM has lower background noise. However, for both radars, the conventional method using r-D images and CNN cannot offer satisfactory gesture recognition accuracy (from Table 4 and Table 5, the accuracies are and , respectively). Using the m-D images from both radars increases the accuracy to . However, this performance is still inadequate for practical applications.

Table 4.

Confusion matrix for gesture recognition with CNN and m-D images from IWR-6843-AOP-EVM.

Table 5.

Confusion matrix for gesture recognition with CNN and m-D images from MMWCAS-DSP/RF-EVM.

Table 6.

Confusion matrix for gesture recognition with CNN and joint utilization of m-D images from two radars.

Table 7.

Confusion matrix for the recognition with motion images.

As a further enhancement, the second baseline, which utilizes the motion images and the CNN, achieves a significantly higher accuracy than those of baseline 1. As can be seen from Table 7, the gesture recognition accuracy is about . These results show the effectiveness of the motion features extracted from the dual-radar system.

The results of our proposed framework are illustrated in Table 8. As can be seen from the table, our proposed framework achieves an accuracy of about , which is significantly higher than the accuracy of all the baselines.

Table 8.

Confusion matrix for gesture recognition with synthesized motion vectors.

To further evaluate the robustness of our proposed gesture recognition framework against diverse gesture patterns, we perform the following experiment. In the experiment, the training set is built by using data sets extracted from eight randomly chosen volunteers, while the test set comes from the other two volunteers. In such circumstances, the LSTM network is trained only with a part of the volunteer data sets. The experimental results presented in Table 9 show a similar overall accuracy to the ones presented in Table 8, e.g., .

Table 9.

Confusion matrix for gesture recognition with synthesized motion vectors (two users as testing set).

To test the proposed framework against environmental diverseness, we introduce changes in the background environment for gesture recognition, which are demonstrated via a comparison between Figure 7a and Figure 11. In order to keep all other testing conditions unchanged compared to the previous experiment, we ensure that the distance between the two radars remains at 45 cm and the distance between radars and the hand remains at 80 cm.

Figure 11.

Test environment and radar placement.

To simulate a practical environment, several chairs are placed close to the performers of the gestures, as shown in Figure 11. Due to the implementation of the time division multiplexing mode in radar transmission during the experiment, the resulting beam coverage encompassed all regions within the radars’ FoVs. Consequently, non-target objects such as chairs exerted some influence on the recognition results.

By employing the same trained hyper-parameters of the LSTM network, as the previously conducted experiment, the results of which are presented in Table 8, 160 samples for eight gestures are inputted for recognition, and the result for the background-changed comparison is , as illustrated in Table 10, which mirrors the gesture recognition performance given in Table 8.

Table 10.

Confusion matrix for the recognition with vector pairs (change in background environment).

These comparison results validate the robustness of our proposed gesture recognition algorithm and demonstrate its resilience towards the changes in the background environment.

6. Discussion and Conclusions

We primarily discussed two potential sources of error in the experiments. Firstly, we control the number of LSTM layers to satisfy the requirement of low complexity, which will influence the accuracy of recognition. The accuracy can be further improved by increasing the number of LSTM layers or adopting other neural networks with higher complexity. Another factor contributing to errors is the resemblance between the gestures due to arbitrary drawing. For example, when volunteers execute the second stage of the gesture of drawing an “L”, in which the hand moves from left to right, arbitrary drawing leads to a short distance of motion for this specific stage. Consequently, this marginal tangential displacement fails to exhibit pronounced Doppler frequency, thereby leading to a final result that resembles the gesture of moving from front to back.

Furthermore, there are three potential research directions that can be explored in the future development of this system. Regarding the application scenarios of the system, we hope to apply this system in scenarios with multiple people and hands while further improving the recognition accuracy, thus placing higher demands on the extraction of hand reflection points. Significantly, when dealing with the recognition of two hand gestures, a pressing challenge arises in effectively tracking the reflective points of the hands and synthesizing motion velocity vectors when confronted with overlapping trajectories.

In terms of recognizing the types of gestures by the system, our system is currently designed for macro-gestures, and it is hoped that the ability to recognize micro-gestures can be developed by maintaining the utilization of our motion velocity vector algorithm in the recognition process. Given the inherently small magnitude of displacements exhibited by micro-gestures, the extraction of precise velocity and azimuth information is a notable challenge.

For the system hardware, when more than two radars are present in the same space, the synthesis algorithm for radar data necessitates addressing several challenges, including, but not limited to, minimizing placement restrictions of the radars, synchronizing and transmitting radar data, and selecting the most appropriate radar data to be utilized.

In this work, we have proposed a novel gesture recognition framework based on two mmWave FMCW radars. A novel motion velocity vector synthesis algorithm has been developed to extract the gesture characteristics that are crucial for gesture recognition. The proposed motion velocity synthesis algorithm allows for flexible deployment of the mmWave radars and does not require knowledge of the relative position of the two radars. Based on the motion velocity vector, a gesture recognition model based on an LSTM network has been designed. The numerical results from real measurements demonstrate that the proposed framework can achieve satisfactory hand gesture recognition accuracy, even when there is a change in the environment and the training data and the test data are collected from different volunteers.

Author Contributions

Conceptualization, Y.M., L.Z. and C.L.; methodology, Y.M., L.Z. and C.L.; software, Y.M., L.Z. and C.L.; validation, Y.M., L.Z. and C.L.; formal analysis, Y.M., L.Z. and C.L.; investigation, Y.M., L.Z. and C.L.; resources, Y.M., L.Z. and C.L.; data curation, Y.M., L.Z., C.L. and M.L.; writing—original draft preparation, Y.M.; writing—review and editing, L.Z. and C.L.; visualization, Y.M., L.Z. and C.L.; supervision, L.Z. and C.L.; project administration, Y.M., L.Z., C.L. and M.L.; funding acquisition, L.Z. and C.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part by the National Natural Science Foundation of China under Grant No. 42176190, the Zhejiang Provincial Natural Science Foundation of China under Grant No. LZ22F010001, and the Fundamental Research Funds for the Provincial Universities of Zhejiang under Grant no. GK229909299001-013.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ahmed, S.; Kallu, K.D.; Ahmed, S.; Cho, S.H. Hand gestures recognition using radar sensors for human-computer-interaction: A review. Remote Sens. 2021, 13, 527. [Google Scholar] [CrossRef]

- Nigam, S.; Shamoon, M.; Dhasmana, S.; Choudhury, T. A complete study of methodology of hand gesture recognition system for smart homes. In Proceedings of the 2019 International Conference on Contemporary Computing and Informatics (IC3I), Singapore, 12–14 December 2019; pp. 289–294. [Google Scholar]

- Chen, T.; Xu, L.; Xu, X.; Zhu, K. Gestonhmd: Enabling gesture-based interaction on low-cost VR head-mounted display. IEEE Trans. Vis. Comput. Graph. 2021, 27, 2597–2607. [Google Scholar] [CrossRef]

- Yin, T.K.; Manders, C.M.; Farbiz, F.; Hwan, L.M.; Guan, C.G.; Ho, C.Y. Intergrating a gesture interface to a commercial online dance game. In Proceedings of the 2009 IEEE International Conference on Multimedia and Expo, New York, NY, USA, 28 June–3 July 2009; pp. 1688–1691. [Google Scholar]

- Niu, K.; Zhang, F.; Jiang, Y.; Xiong, J.; Lv, Q.; Zeng, Y.; Zhang, D. WiMorse: A contactless morse code text input system using ambient WiFi signals. IEEE Internet Things J. 2019, 6, 9993–10008. [Google Scholar] [CrossRef]

- Kudrinko, K.; Flavin, E.; Zhu, X.; Li, Q. Wearable sensor-based sign language recognition: A comprehensive review. IEEE Rev. Biomed. Eng. 2020, 14, 82–97. [Google Scholar] [CrossRef]

- Wu, Y.; Huang, T.S. Vision-based gesture recognition: A review. In International Gesture Workshop; Springer: Berlin/Heidelberg, Germany, 1999; pp. 103–115. [Google Scholar]

- Liu, J.; Liu, H.; Chen, Y.; Wang, Y.; Wang, C. Wireless sensing for human activity: A survey. IEEE Commun. Surv. Tutor. 2019, 22, 1629–1645. [Google Scholar] [CrossRef]

- Lu, Z.; Chen, X.; Li, Q.; Zhang, X.; Zhou, P. A hand gesture recognition framework and wearable gesture-based interaction prototype for mobile devices. IEEE Trans. Hum. Mach. Syst. 2014, 44, 293–299. [Google Scholar] [CrossRef]

- Ling, Y.; Chen, X.; Ruan, Y.; Zhang, X.; Chen, X. Comparative study of gesture recognition based on accelerometer and photoplethysmography sensor for gesture interactions in wearable devices. IEEE Sens. J. 2021, 21, 17107–17117. [Google Scholar] [CrossRef]

- Tubaiz, N.; Shanableh, T.; Assaleh, K. Glove-based continuous Arabic sign language recognition in user-dependent mode. IEEE Trans. Hum.-Mach. Syst. 2015, 45, 526–533. [Google Scholar] [CrossRef]

- Laptev, I.; Marszalek, M.; Schmid, C.; Rozenfeld, B. Learning realistic human actions from movies. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8. [Google Scholar]

- Chen, C.; Liu, K.; Kehtarnavaz, N. Real-time human action recognition based on depth motion maps. J. Real Time Image Process. 2016, 12, 155–163. [Google Scholar] [CrossRef]

- Li, Y.; Miao, Q.; Tian, K.; Fan, Y.; Xu, X.; Li, R.; Song, J. Large-scale gesture recognition with a fusion of RGB-D data based on saliency theory and C3D model. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 2956–2964. [Google Scholar] [CrossRef]

- Ohn-Bar, E.; Trivedi, M.M. Hand gesture recognition in real time for automotive interfaces: A multimodal vision-based approach and evaluations. IEEE trans. Intell. Transp. Syst. 2014, 15, 2368–2377. [Google Scholar] [CrossRef]

- Wang, Z.r.; Wang, P.; Xing, L.; Mei, L.P.; Zhao, J.; Zhang, T. Leap Motion-based virtual reality training for improving motor functional recovery of upper limbs and neural reorganization in subacute stroke patients. Neural Regen. Res. 2017, 12, 1823. [Google Scholar]

- Al Ayubi, S.; Sudiharto, D.W.; Jadied, E.M.; Aryanto, E. The prototype of hand gesture recognition for elderly people to control connected home devices. In Journal of Physics: Conference Series, Proceedings of the International Conference on Electronics Representation and Algorithm (ICERA 2019), Yogyakarta, Indonesia, 29–30 January 2019; IOP Publishing: Yogyakarta, Indonesia, 2019; Volume 1201, p. 012042. [Google Scholar]

- Niu, K.; Zhang, F.; Wang, X.; Lv, Q.; Luo, H.; Zhang, D. Understanding WiFi signal frequency features for position-independent gesture sensing. IEEE Trans. Mob. Comput. 2021, 21, 4156–4171. [Google Scholar] [CrossRef]

- He, W.; Wu, K.; Zou, Y.; Ming, Z. WiG: WiFi-based gesture recognition system. In Proceedings of the 2015 24th International Conference on Computer Communication and Networks (ICCCN), Las Vegas, NV, USA, 3–6 August 2015; pp. 1–7. [Google Scholar]

- Hao, Z.; Duan, Y.; Dang, X.; Liu, Y.; Zhang, D. Wi-SL: Contactless fine-grained gesture recognition uses channel state information. Sensors 2020, 20, 4025. [Google Scholar] [CrossRef]

- Pu, Q.; Gupta, S.; Gollakota, S.; Patel, S. Whole-home gesture recognition using wireless signals. In Proceedings of the 19th Annual International Conference on Mobile Computing & Networking, Miami, FL, USA, 30 September–4 October 2013; pp. 27–38. [Google Scholar]

- Zhang, D.; Wu, D.; Niu, K.; Wang, X.; Zhang, F.; Yao, J.; Jiang, D.; Qin, F. Practical issues and challenges in CSI-based integrated sensing and communication. In Proceedings of the 2022 IEEE International Conference on Communications Workshops (ICC Workshops), Seoul, Republic of Korea, 16–20 May 2022; pp. 836–841. [Google Scholar]

- Lien, J.; Gillian, N.; Karagozler, M.E.; Amihood, P.; Schwesig, C.; Olson, E.; Raja, H.; Poupyrev, I. Soli: Ubiquitous gesture sensing with millimeter wave radar. ACM Trans. Graph. (TOG) 2016, 35, 1–19. [Google Scholar] [CrossRef]

- Malysa, G.; Wang, D.; Netsch, L.; Ali, M. Hidden Markov model-based gesture recognition with FMCW radar. In Proceedings of the 2016 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Washington, DC, USA, 7–9 December 2016; pp. 1017–1021. [Google Scholar]

- Jhaung, Y.C.; Lin, Y.M.; Zha, C.; Leu, J.S.; Köppen, M. Implementing a hand gesture recognition system based on Range-Doppler Map. Sensors 2022, 22, 4260. [Google Scholar] [CrossRef]

- Li, Y.; Gu, C.; Mao, J. 4-D gesture sensing using reconfigurable virtual array based on a 60-GHz FMCW MIMO radar sensor. IEEE Trans. Microw. Theory. Tech. 2022, 70, 3652–3665. [Google Scholar] [CrossRef]

- Li, C.; Peng, Z.; Huang, T.Y.; Fan, T.; Wang, F.K.; Horng, T.S.; Munoz-Ferreras, J.M.; Gomez-Garcia, R.; Ran, L.; Lin, J. A review on recent progress of portable short-range noncontact microwave radar systems. IEEE Trans. Microw. Theory Tech. 2017, 65, 1692–1706. [Google Scholar] [CrossRef]

- Hasch, J.; Topak, E.; Schnabel, R.; Zwick, T.; Weigel, R.; Waldschmidt, C. Millimeter-wave technology for automotive radar sensors in the 77 GHz frequency band. IEEE Trans. Microw. Theory Tech. 2012, 60, 845–860. [Google Scholar] [CrossRef]

- Kim, Y.; Toomajian, B. Hand gesture recognition using micro-Doppler signatures with convolutional neural network. IEEE Access 2016, 4, 7125–7130. [Google Scholar] [CrossRef]

- Ahmed, S.; Kim, W.; Park, J.; Cho, S.H. Radar-based air-writing gesture recognition using a novel multistream CNN Approach. IEEE Internet Things J. 2022, 9, 23869–23880. [Google Scholar] [CrossRef]

- Xia, Z.; Luomei, Y.; Zhou, C.; Xu, F. Multidimensional feature representation and learning for robust hand-gesture recognition on commercial millimeter-wave radar. IEEE Trans. Geosci. Remote Sens. 2020, 59, 4749–4764. [Google Scholar] [CrossRef]

- Xia, Z.; Xu, F. Time-space dimension reduction of millimeter-wave radar point-clouds for smart-home hand-gesture recognition. IEEE Sens. J. 2022, 22, 4425–4437. [Google Scholar] [CrossRef]

- Choi, J.W.; Ryu, S.J.; Kim, J.H. Short-range radar based real-time hand gesture recognition using LSTM encoder. IEEE Access 2019, 7, 33610–33618. [Google Scholar] [CrossRef]

- Yang, Z.; Zheng, X. Hand gesture recognition based on trajectories features and computation-efficient reused LSTM network. IEEE Sens. J. 2021, 21, 16945–16960. [Google Scholar] [CrossRef]

- Van Veen, B.D.; Buckley, K.M. Beamforming: A versatile approach to spatial filtering. IEEE ASSP Mag. 1988, 5, 4–24. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).