Development of a Universal Validation Protocol and an Open-Source Database for Multi-Contextual Facial Expression Recognition

Abstract

1. Introduction

1.1. Related Work

- Face detection: it identifies the boundaries of one or more faces.

- Face landmark detection: it extracts the position and shape of eyebrows, eyes, nose, mouth, lips, and chin.

- Face recognition: it identifies individuals in an image.

- Facial expression detection: it classifies the expression of a face according to Ekman’s discrete theory of emotions, using the six basic emotions plus an additional neutral class.

1.2. Objectives

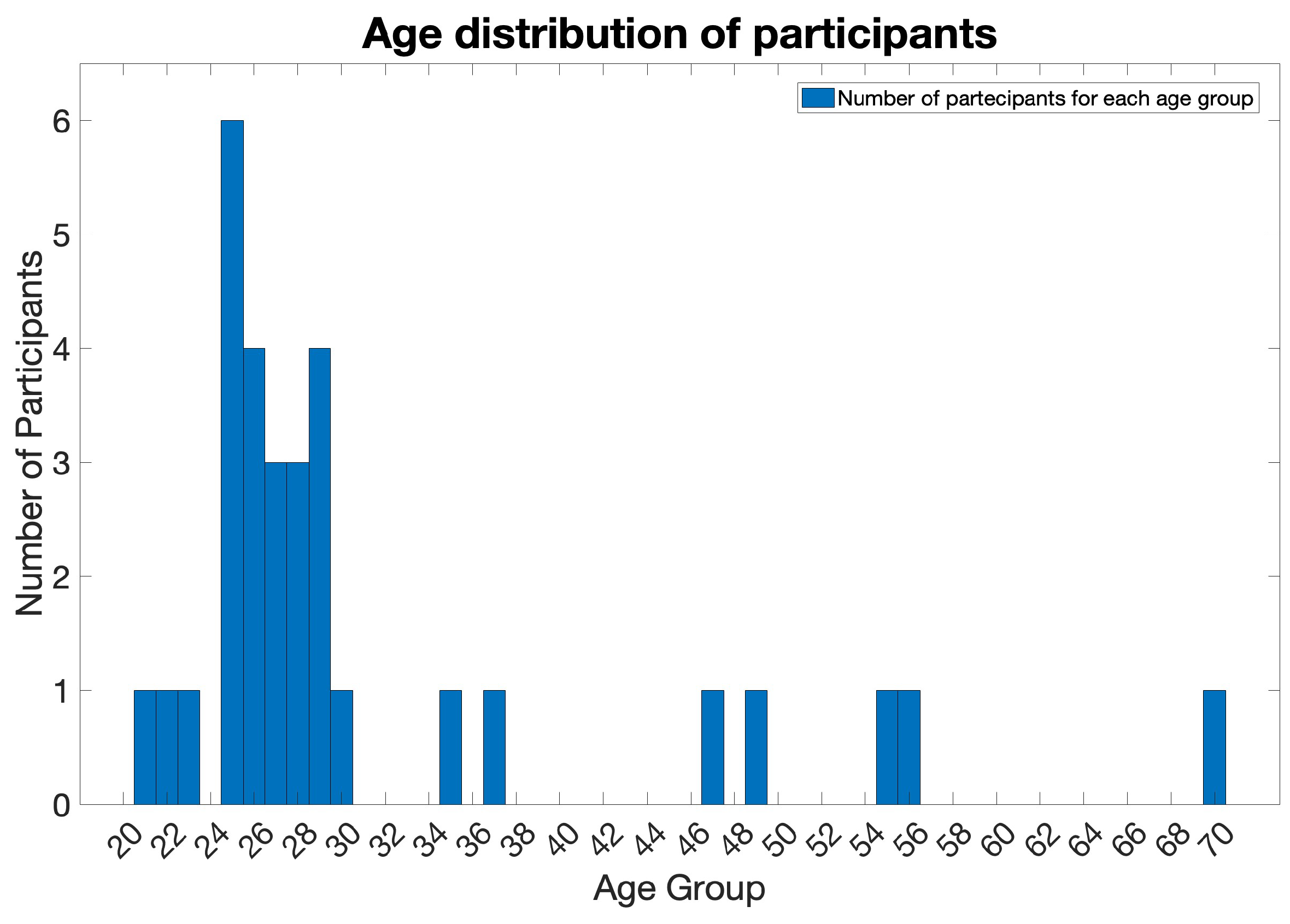

2. Materials and Methods

2.1. Experimental Protocol

- Intel Core i7-1065G7 processor;

- 8 GB RAM;

- 512 GB SSD;

- 15.6-inch display;

- Windows 11;

- 0.3 MP front camera.

2.2. Selection of Highly Emotive Images

- Negative label to the cluster of images with valence values predominantly in the range [1, 4];

- Neutral label to the cluster of images with valence values predominantly in the range [4, 6];

- Positive label to the cluster of images with valence values predominantly in the range [6, 9].

- Before the images for each emotion were searched within the corresponding clusters, a pre-screening step was applied. To avoid unduly shocking the subjects, images to which the database developers had assigned a “disgust” intensity greater than 4 were excluded.

- The neutral emotional state is the only one for which the ten images could not be isolated from the labels and intensity values alone, because the database information table does not provide intensity values for it. An alternative procedure was therefore implemented:

  - The discrete labels and arousal values provided were used.

  - Images belonging to the neutral cluster to which the database developers had assigned all six basic emotion labels were considered.

  - These images were then sorted by arousal value in increasing order.

  - Finally, the ten images with the lowest arousal values were isolated.
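The selection steps listed in this section can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the `AffectiveImage` record, its field names, and the function names are hypothetical. The valence thresholds are a simplification of clusters described as lying "predominantly" in each range, and assigning the shared boundary values 4 and 6 to the neutral cluster is an arbitrary choice.

```python
from dataclasses import dataclass, field

# Emotion names follow the discrete classes used in this paper (lowercased).
BASIC_EMOTIONS = frozenset({"anger", "disgust", "fear", "happy", "sad", "surprise"})


@dataclass
class AffectiveImage:
    """Hypothetical record for one image of the affective picture database."""
    name: str
    valence: float  # 1-9 scale
    arousal: float  # 1-9 scale
    intensities: dict = field(default_factory=dict)  # emotion -> intensity
    labels: frozenset = frozenset()  # discrete labels given by the database developers


def cluster_label(valence: float) -> str:
    """Approximate the valence cluster; boundaries 4 and 6 go to neutral (assumption)."""
    if valence < 4:
        return "negative"
    if valence <= 6:
        return "neutral"
    return "positive"


def prescreen(images, max_disgust=4.0):
    """Drop images whose disgust intensity exceeds the threshold.

    Images without a disgust intensity are kept (assumption).
    """
    return [img for img in images if img.intensities.get("disgust", 0.0) <= max_disgust]


def select_neutral(images, n=10):
    """Pick the n lowest-arousal neutral-cluster images carrying all six basic labels."""
    candidates = [
        img for img in images
        if cluster_label(img.valence) == "neutral" and img.labels >= BASIC_EMOTIONS
    ]
    return sorted(candidates, key=lambda img: img.arousal)[:n]
```

Running `select_neutral(prescreen(images))` on a list of such records returns at most ten images in increasing order of arousal.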

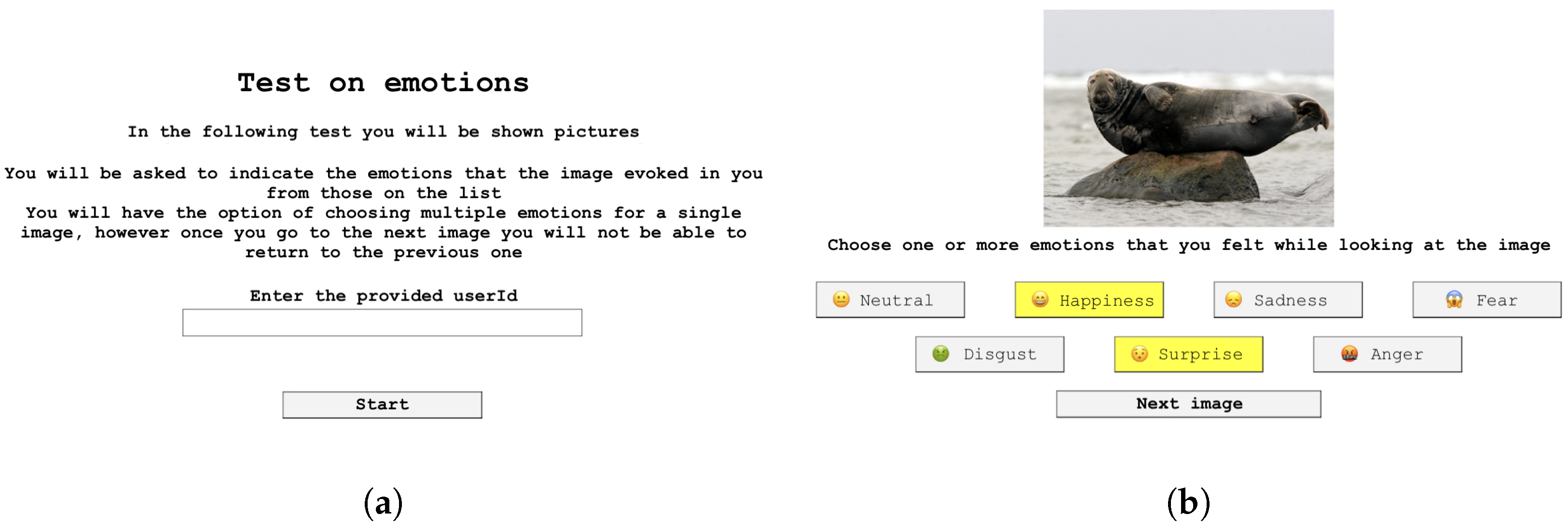

2.3. Web Application

2.4. FeelPix Dataset

- Left meningeal;

- Right meningeal;

- Nasal center;

- Subnasal center.

2.5. Testing Algorithm

- The selection of a specific emotion was associated with class 1;

- The absence of the specific emotion’s selection was associated with class 0.
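The one-vs-rest coding described above, together with the per-emotion metrics reported in the results tables (accuracy, precision, F score, G mean), can be sketched as follows. The function names are hypothetical, and the formulas are the standard definitions, which this excerpt does not spell out. In particular, the G mean is assumed to be the geometric mean of sensitivity and specificity, and any ratio with a zero denominator is reported as 0.

```python
import math


def binarize(selected, emotion):
    """Code each selection as 1 if it matches the given emotion, else 0."""
    return [1 if s == emotion else 0 for s in selected]


def binary_metrics(y_true, y_pred):
    """Compute accuracy, precision, F score and G mean for one emotion (class 1 vs class 0)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def ratio(num, den):
        # Report 0.0 instead of failing when a class is absent (assumption).
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    sensitivity = ratio(tp, tp + fn)
    specificity = ratio(tn, tn + fp)
    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        "precision": precision,
        "f_score": ratio(2 * precision * sensitivity, precision + sensitivity),
        "g_mean": math.sqrt(sensitivity * specificity),
    }
```

For a given emotion, both the reference selections and the algorithm outputs would be passed through `binarize` before being compared with `binary_metrics`.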

3. Results

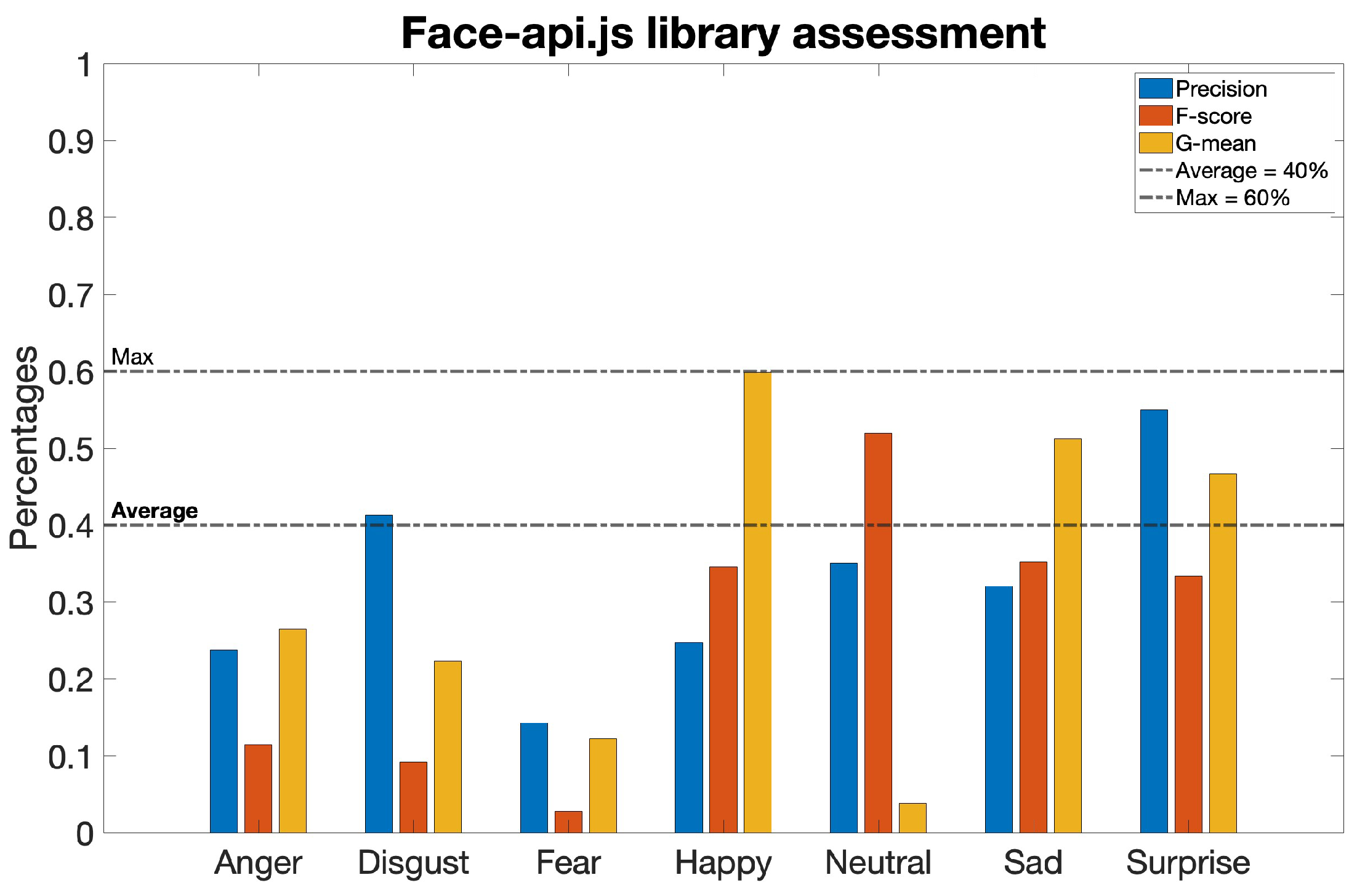

3.1. Investigated Algorithm Results

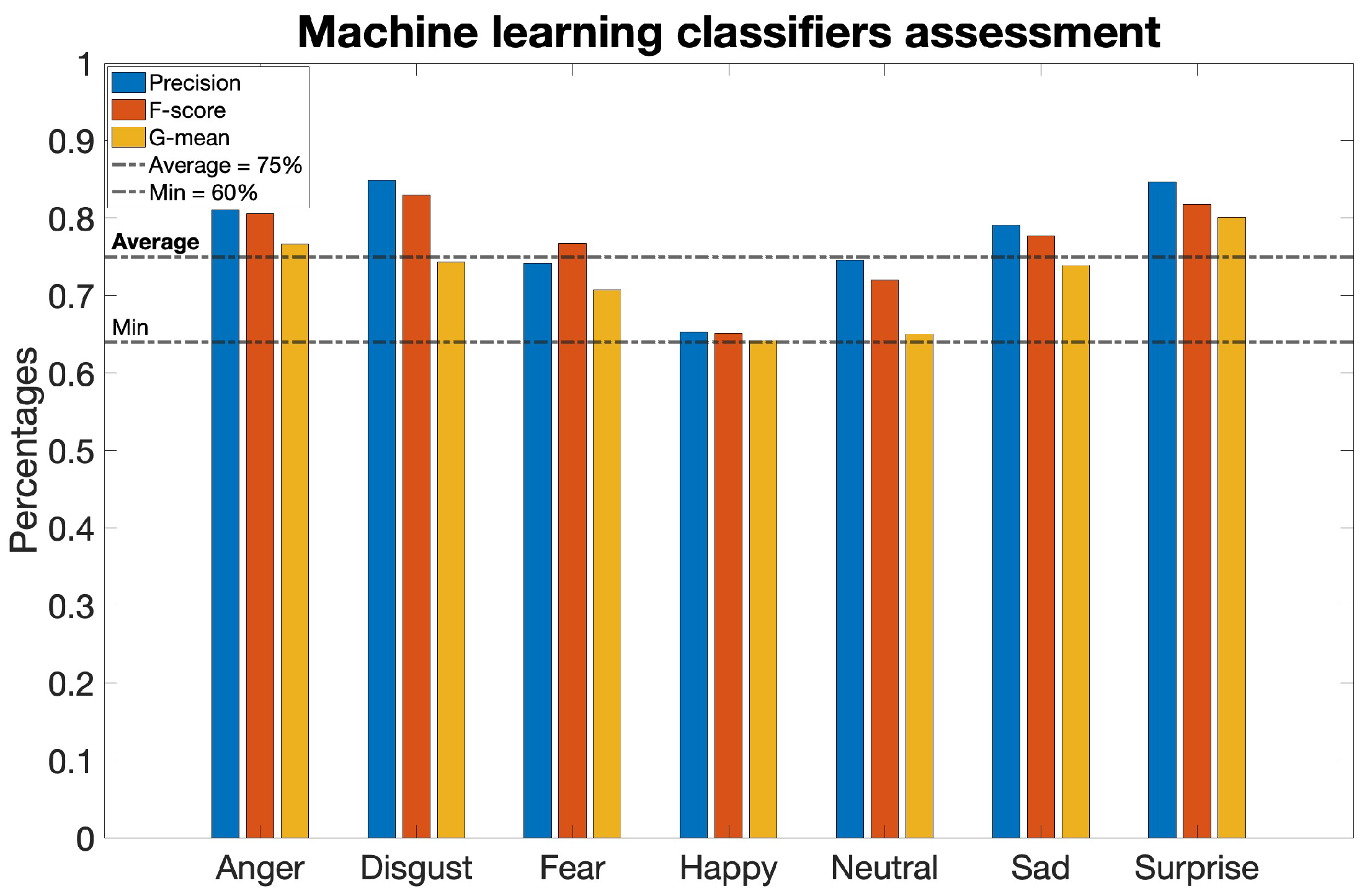

3.2. Outcomes of the Dataset Testing

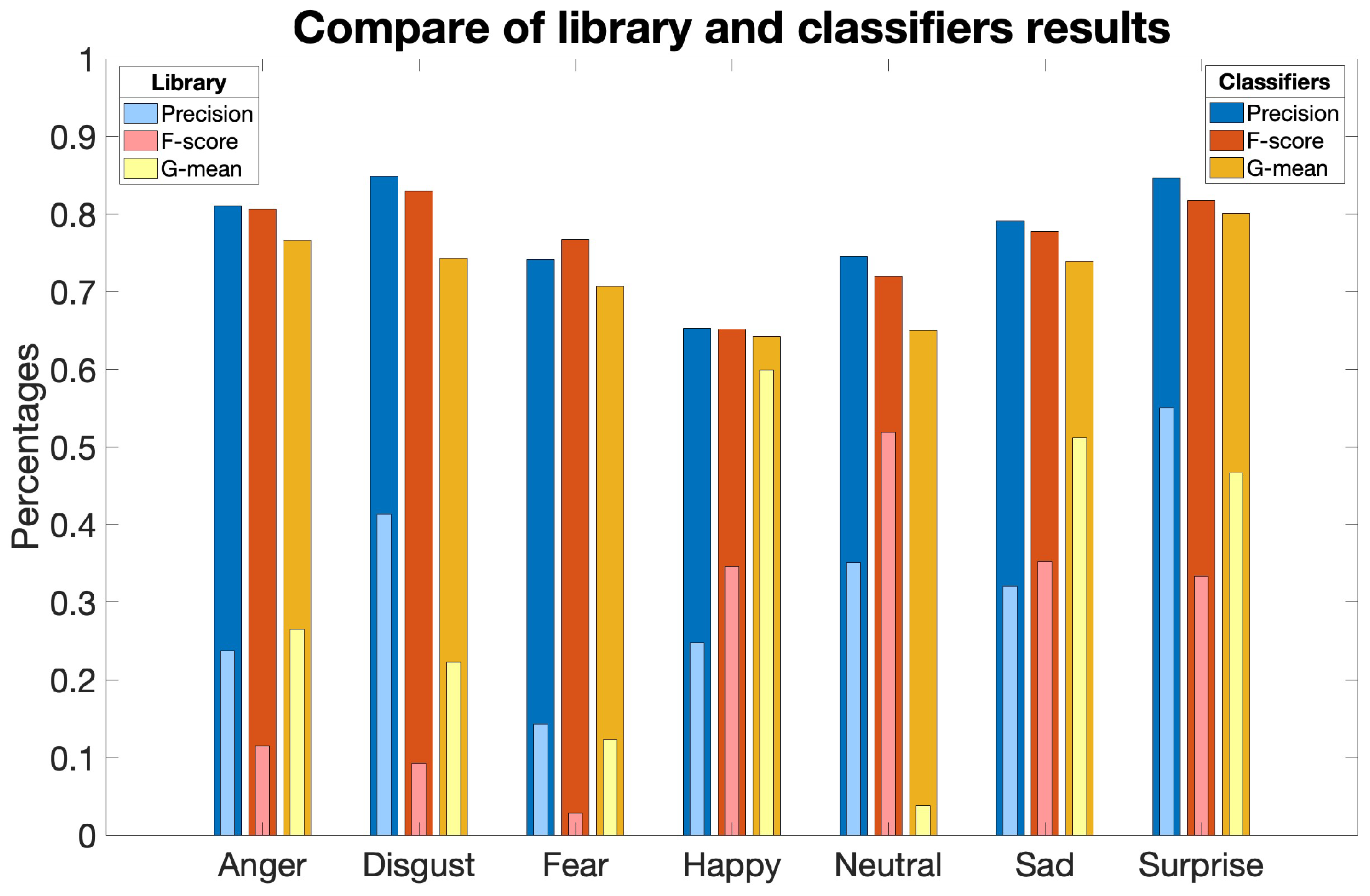

3.3. FER Algorithms’ Comparison

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| API | Application Programming Interface |
| SDK | Software Development Kit |
| CNN | Convolutional Neural Networks |


| Discrete Emotions | Valence [1, 9] | Arousal [1, 9] | Cluster |
|---|---|---|---|
| Anger | 3.2 | 7.7 | Negative |
| Disgust | 2.6 | 6.4 | Negative |
| Fear | 2.4 | 7.4 | Negative |
| Happy | 8.0 | 6.9 | Positive |
| Neutral | 5.0 | 3.0 | Neutral |
| Sad | 2.4 | 6.1 | Negative |
| Surprise | 6.6 | 7.7 | All clusters |

| Metric | Anger | Disgust | Fear | Happy | Neutral | Sad | Surprise |
|---|---|---|---|---|---|---|---|
| Precision | 24% | 41% | 14% | 25% | 35% | 32% | 55% |
| F score | 11% | 9% | 3% | 35% | 52% | 35% | 33% |
| G mean | 26% | 22% | 12% | 60% | 4% | 51% | 47% |

| Metric | Anger | Disgust | Fear | Happy | Neutral | Sad | Surprise |
|---|---|---|---|---|---|---|---|
| Accuracy | 78% | 78% | 72% | 65% | 67% | 74% | 80% |
| Precision | 81% | 85% | 74% | 65% | 75% | 79% | 85% |
| F score | 81% | 83% | 77% | 65% | 72% | 78% | 82% |
| G mean | 77% | 74% | 71% | 64% | 65% | 74% | 80% |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

La Monica, L.; Cenerini, C.; Vollero, L.; Pennazza, G.; Santonico, M.; Keller, F. Development of a Universal Validation Protocol and an Open-Source Database for Multi-Contextual Facial Expression Recognition. Sensors 2023, 23, 8376. https://doi.org/10.3390/s23208376
