Autonomous Driving Control Based on the Technique of Semantic Segmentation

Abstract

1. Introduction

2. Preliminary

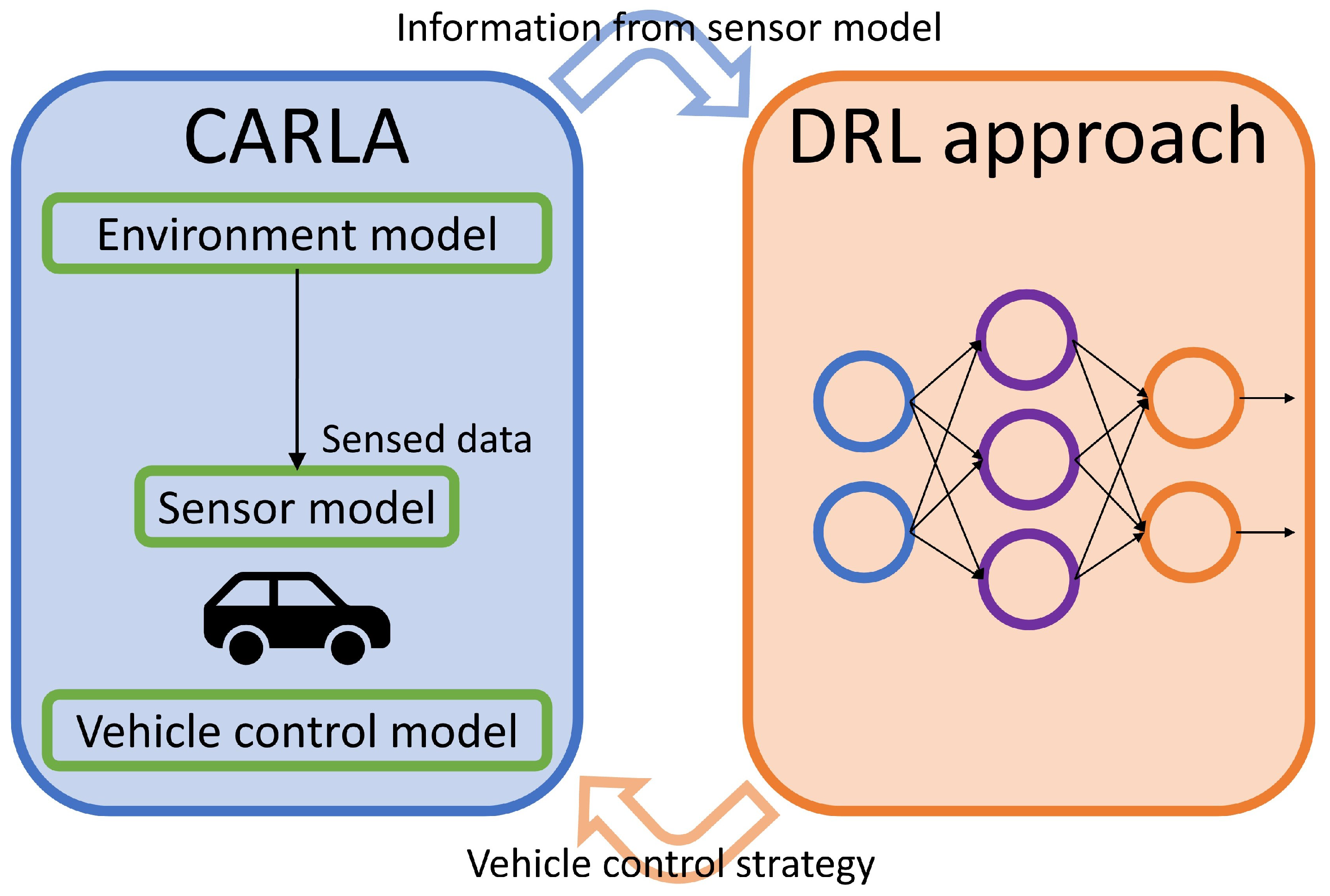

2.1. CARLA Simulator

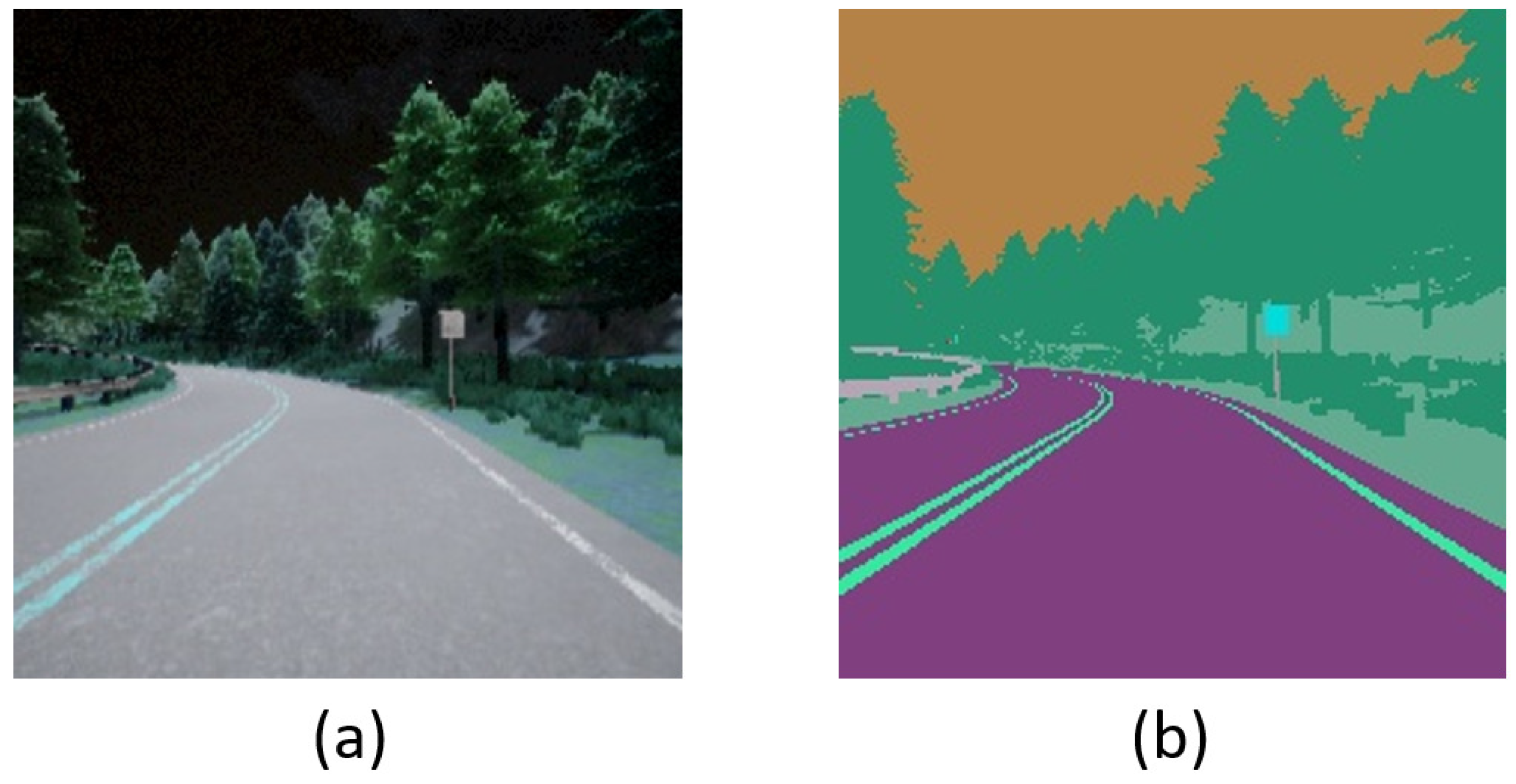

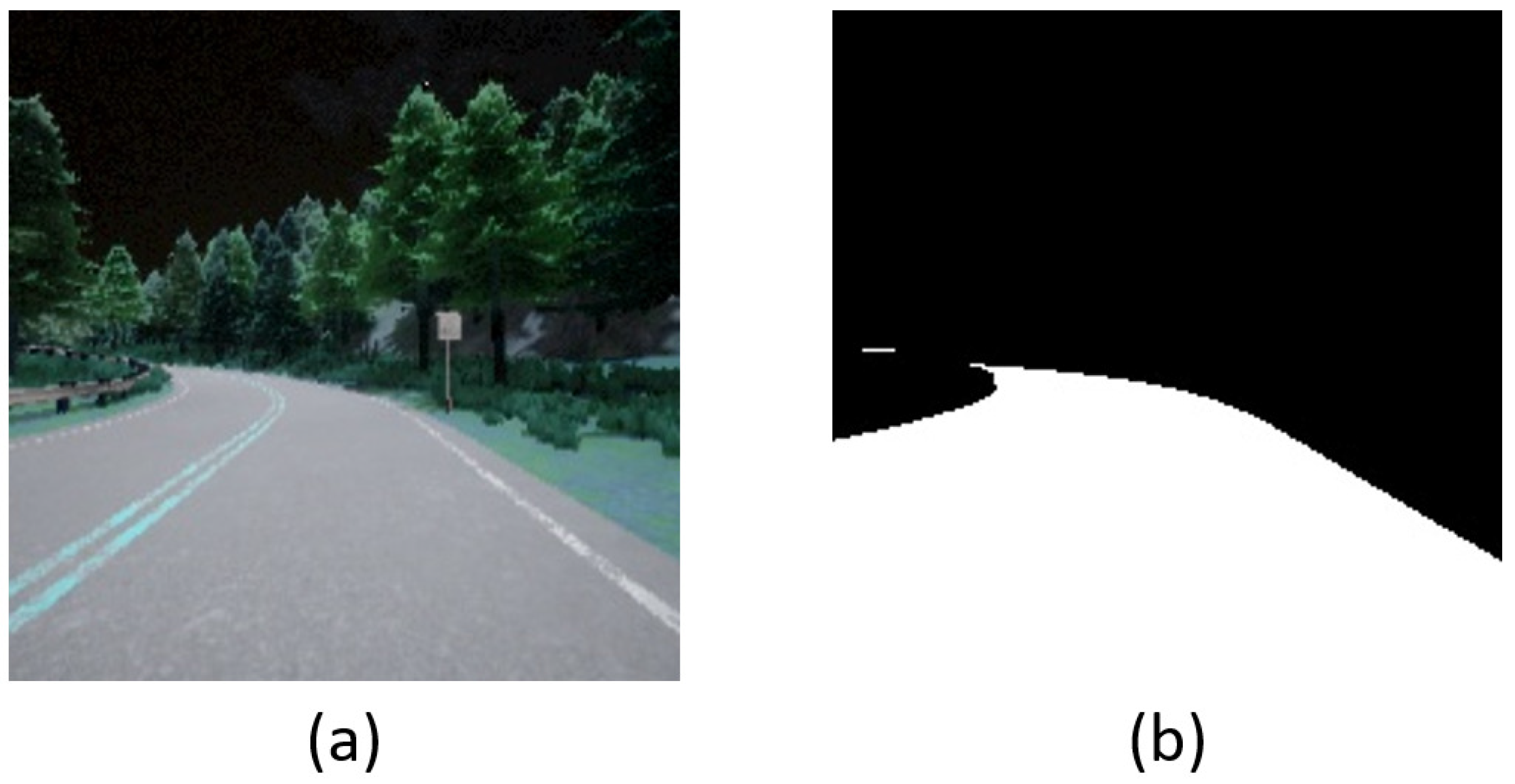

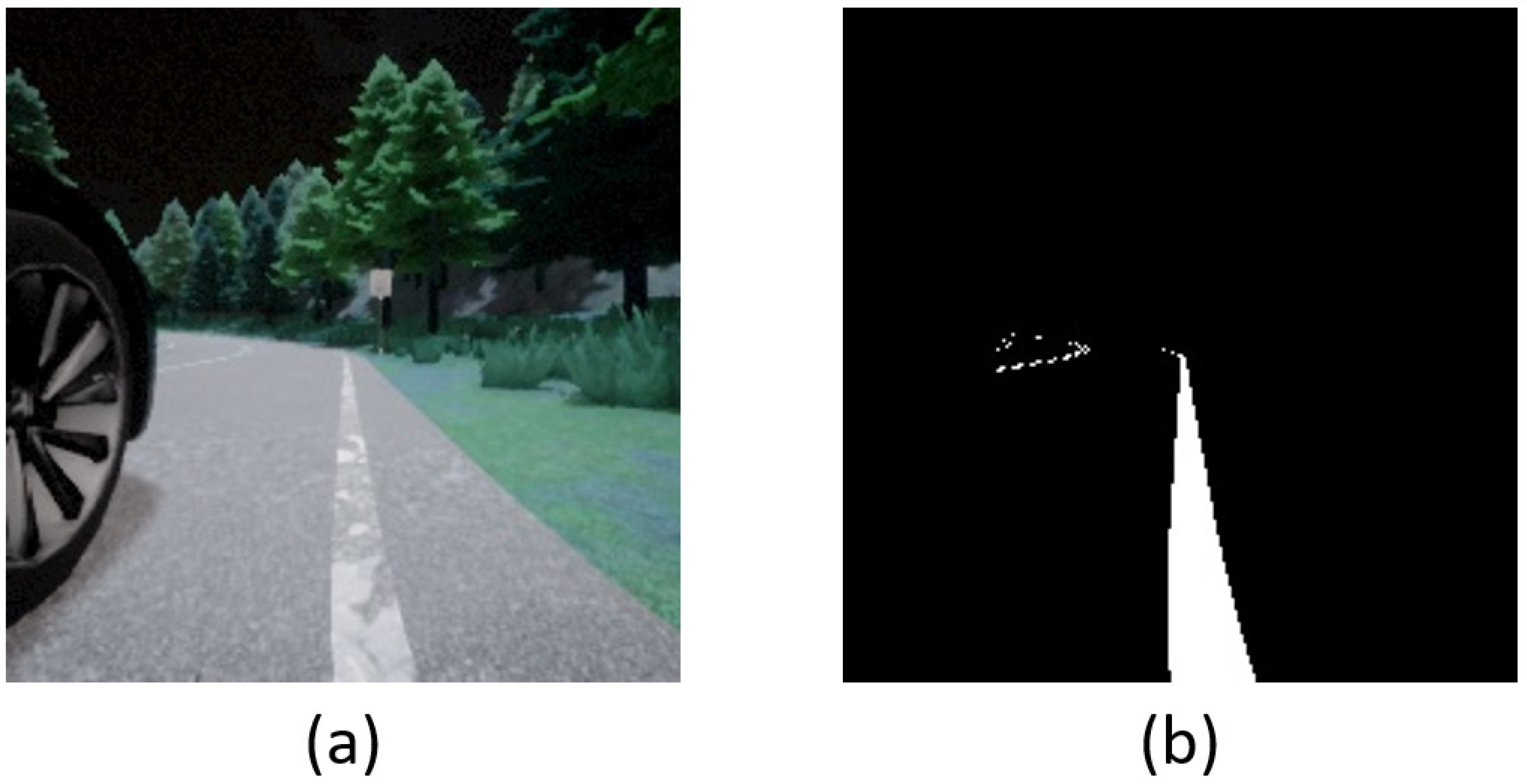

2.2. RGB Camera and Semantic Segmentation Camera

2.3. Constituents of Reinforcement Learning

- The policy: A mapping from perceived environmental states to the actions to be taken in those states. It may be as simple as a lookup table or may involve extensive computation.

- The reward: After each step, the agent receives a single number called the reward. The ultimate objective is to maximize the total reward accumulated during the procedure. Note that, in some applications, a single step may comprise more than one simultaneous action.

- The value function: The reward indicates only how good a decision is immediately, whereas the value function indicates how good a sequence of decisions is in the long term. That is, the value of a state is the total reward that an agent can expect to accumulate starting from that state.

- The environmental model: The environmental model mimics the behavior of the environment. With it, we can infer the possible future before the experiment is actually performed, i.e., it is used for planning. Note that a model-based method needs an environmental model to infer the possible future, whereas a model-free method learns purely by trial and error.
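The difference between the instantaneous reward and the long-term value can be illustrated with a small sketch. It computes, for each step of a made-up reward sequence, the total reward collected from that step onward. The function name `returns_to_go`, the sample rewards, and the discount factor `gamma` are assumptions introduced for illustration; with `gamma = 1.0` the result is the plain sum described above.

```python
from typing import List


def returns_to_go(rewards: List[float], gamma: float = 1.0) -> List[float]:
    """Return G_t = r_t + gamma * r_(t+1) + gamma^2 * r_(t+2) + ... for every step t."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each step reuses the return of its successor.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


if __name__ == "__main__":
    rewards = [1.0, 0.0, -2.0, 5.0]          # hypothetical per-step rewards
    print(returns_to_go(rewards))            # [4.0, 3.0, 3.0, 5.0]
    print(returns_to_go(rewards, gamma=0.5)) # [1.125, 0.25, 0.5, 5.0]
```

In this toy sequence the third step has a negative reward (-2.0) but a positive return (3.0): the decision looks bad in the short term yet still leads to a worthwhile outcome, which is the kind of information the value function is meant to capture.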

2.4. Model-Based and Model-Free Methods

2.5. Experience Replay

2.6. Target Network

2.7. Policy Gradient

2.8. OU Noise

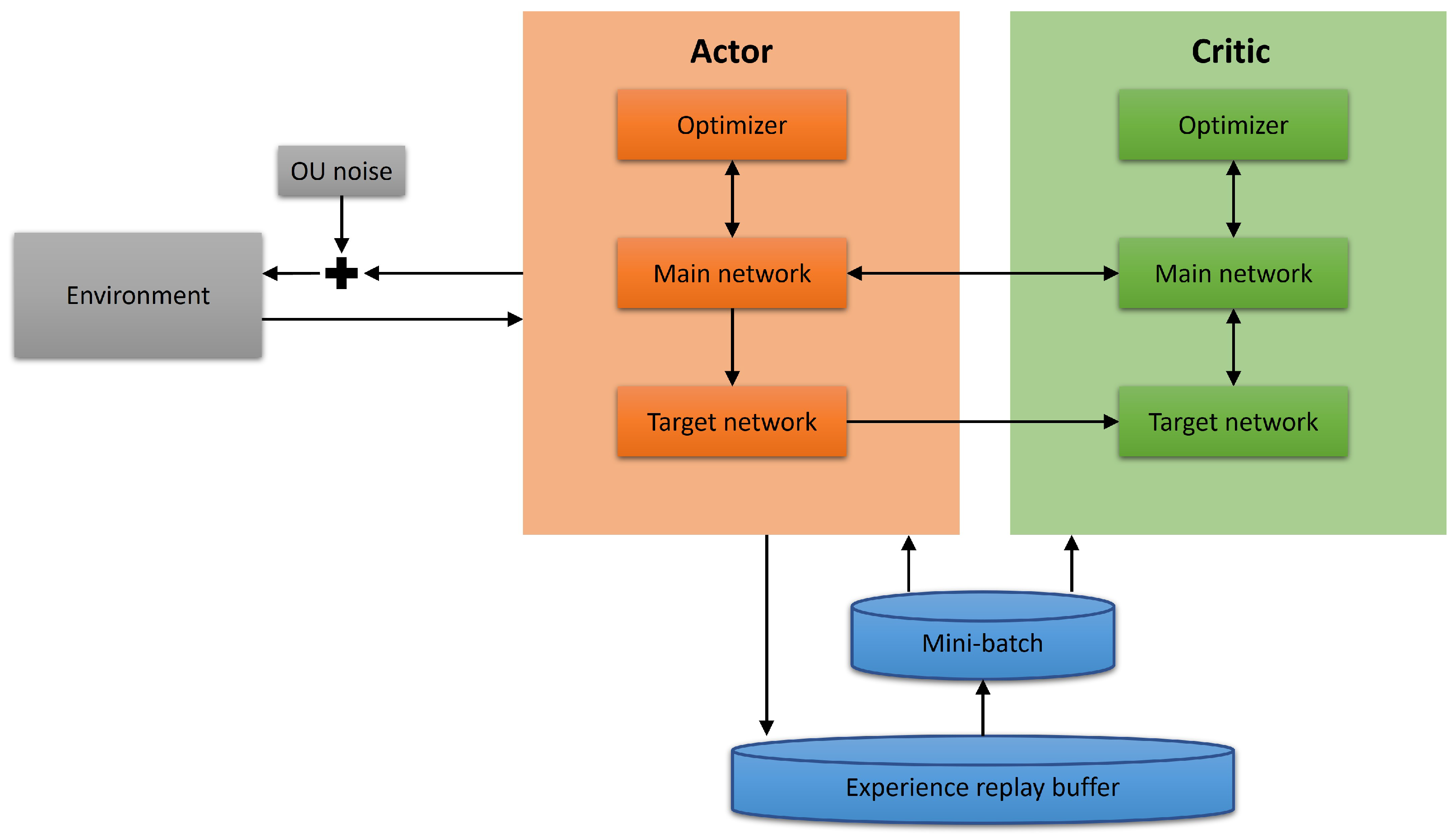

2.9. DRL Architecture

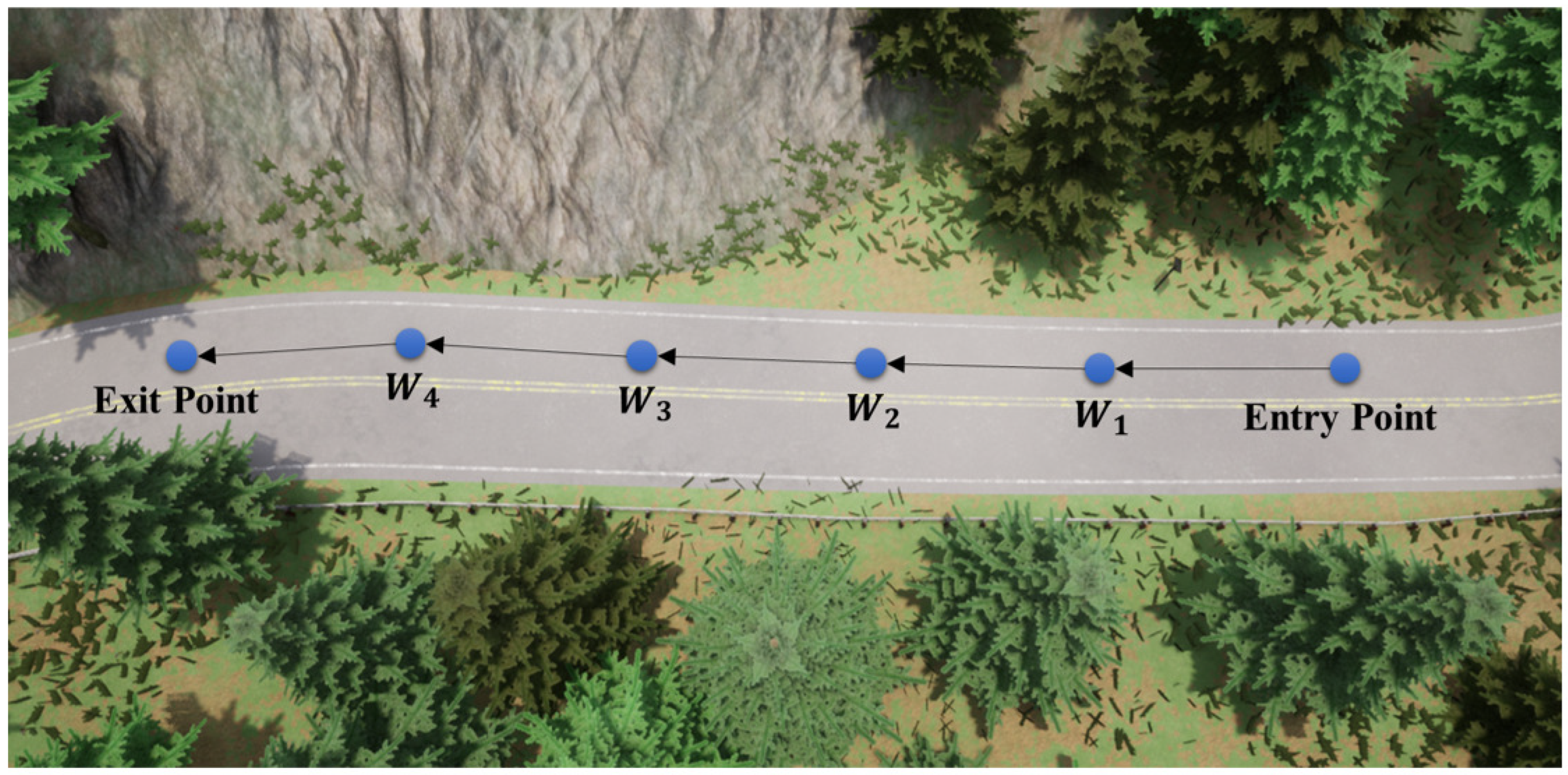

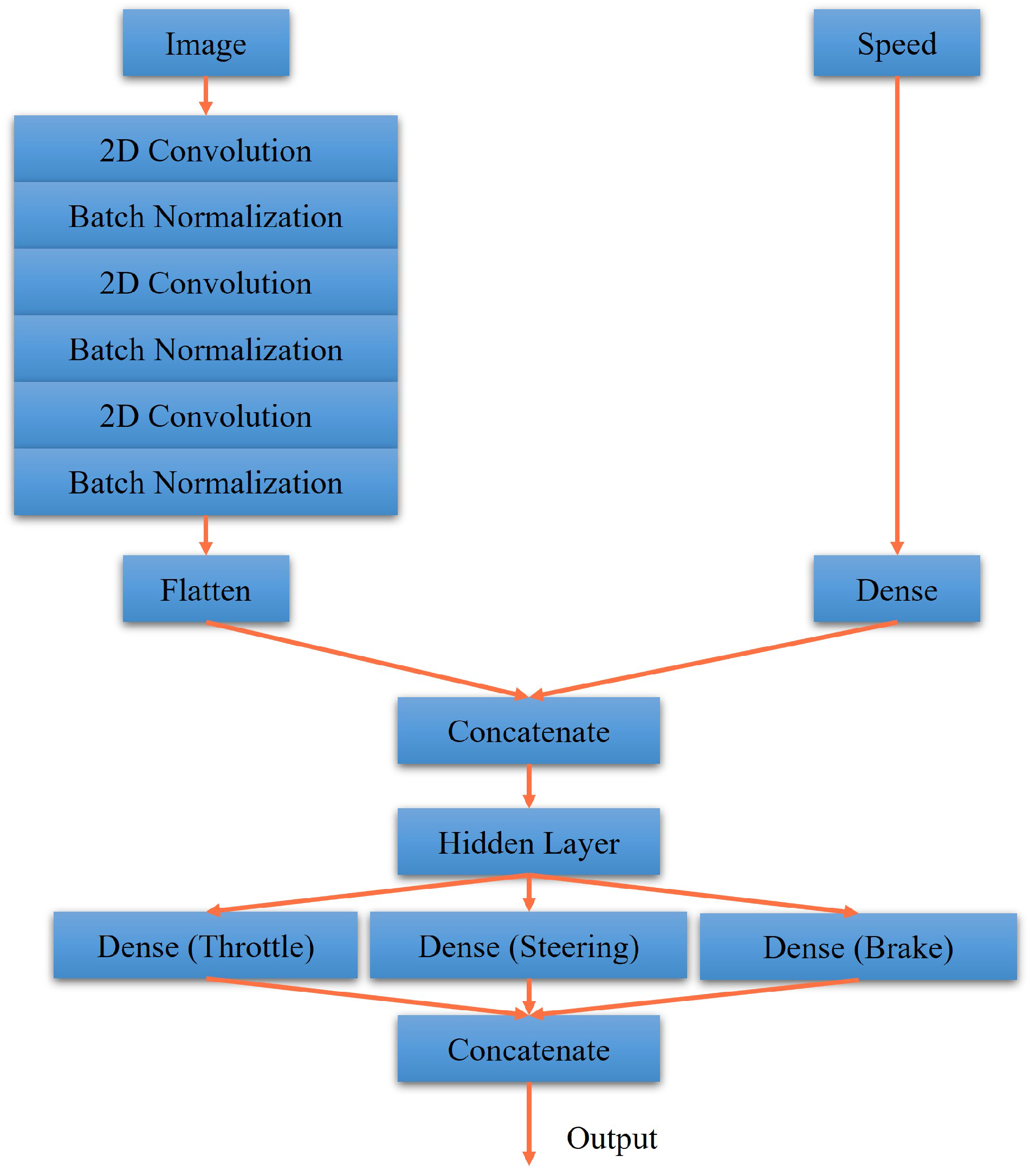

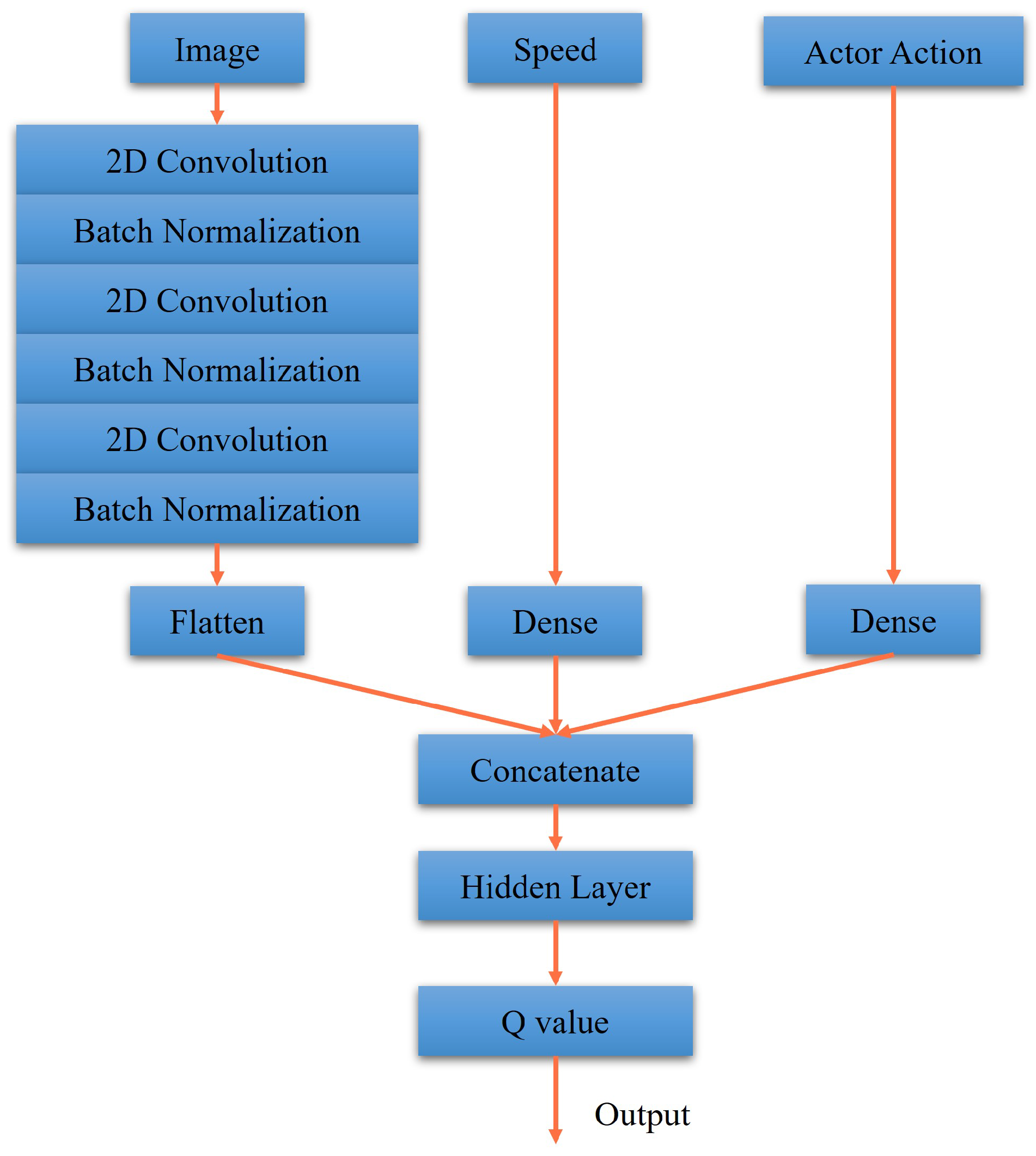

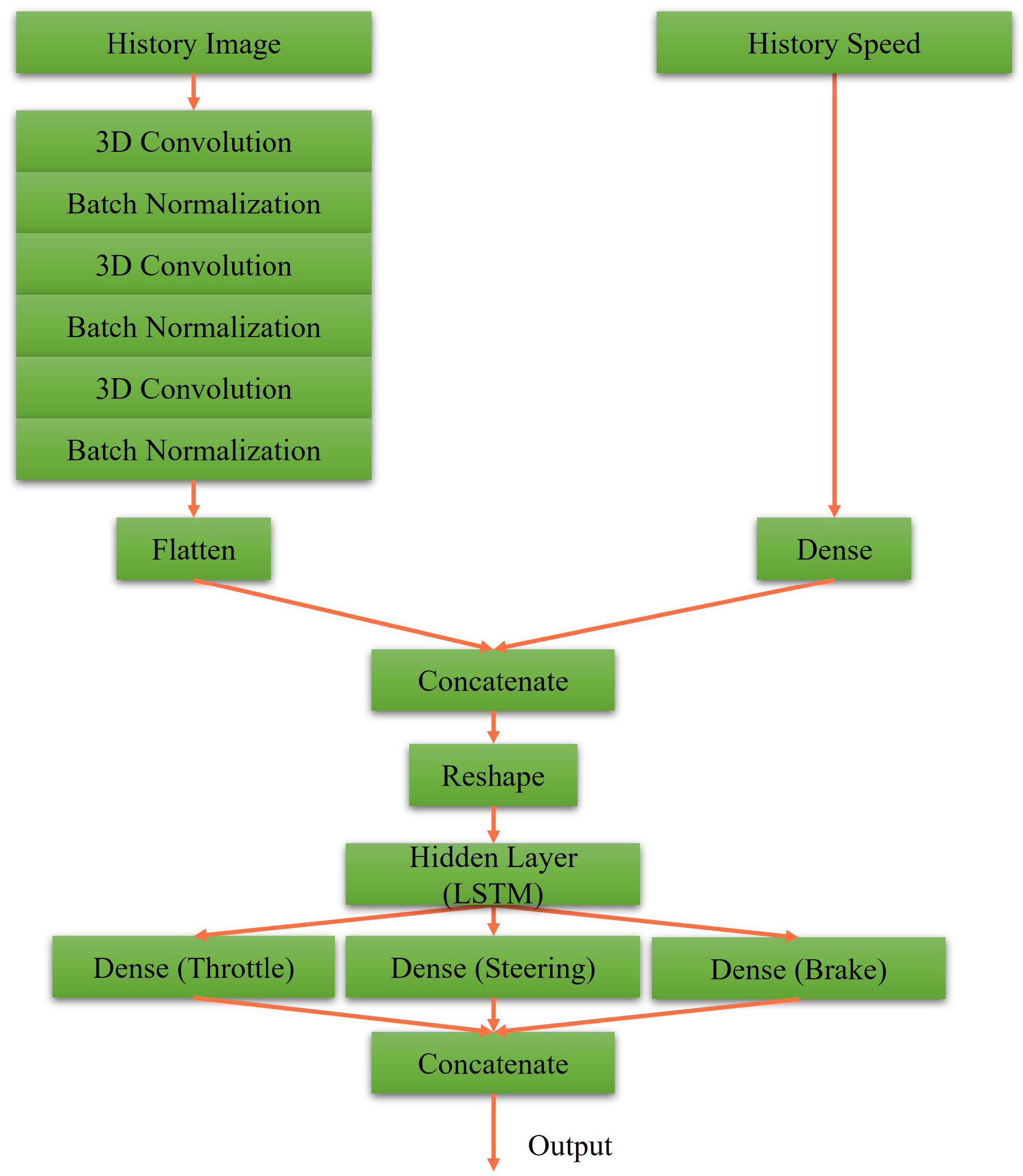

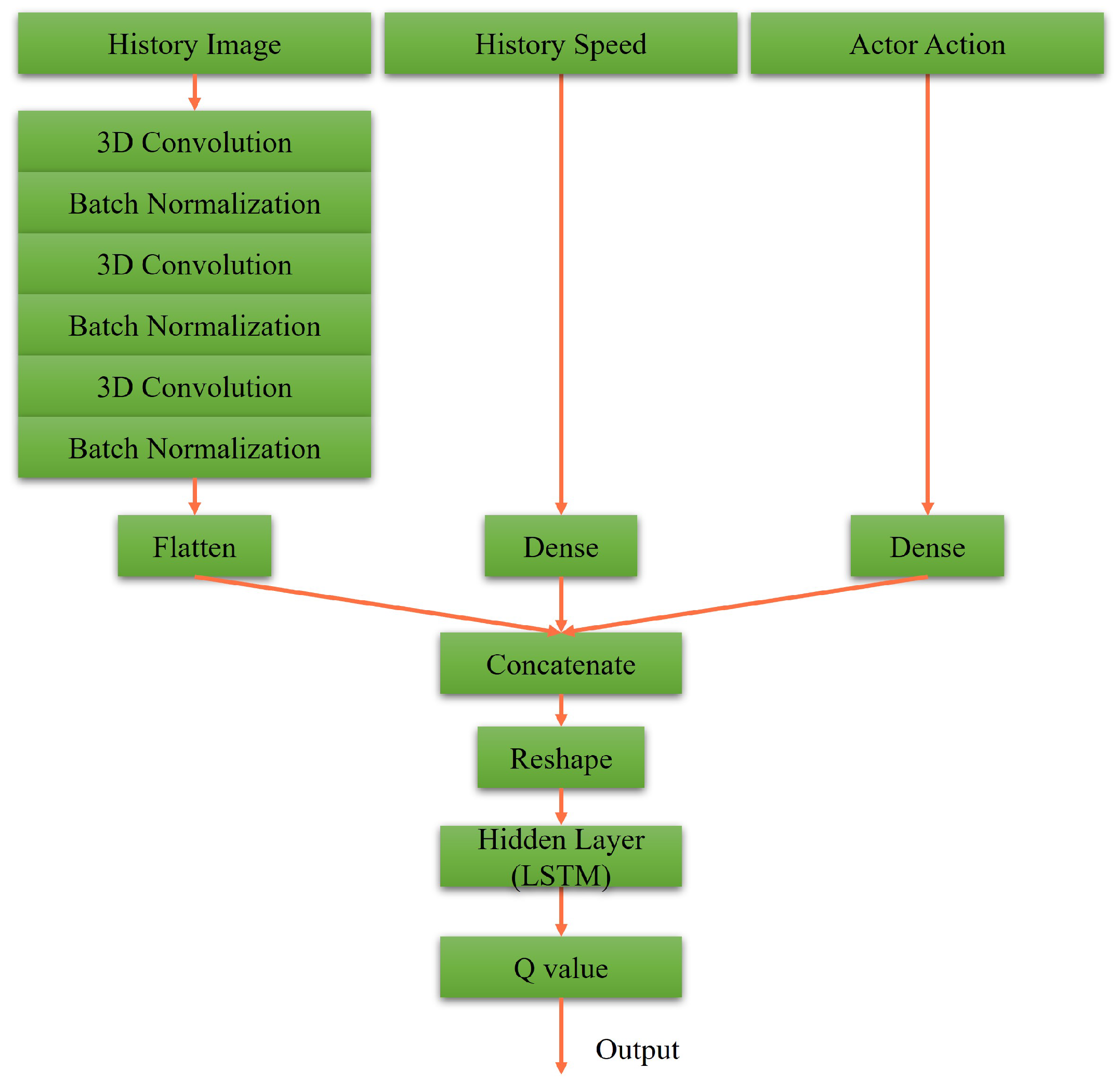

3. The DRL Control Strategies

3.1. Designs of Reward Mechanism

3.2. Designs of Actor-Critic Network

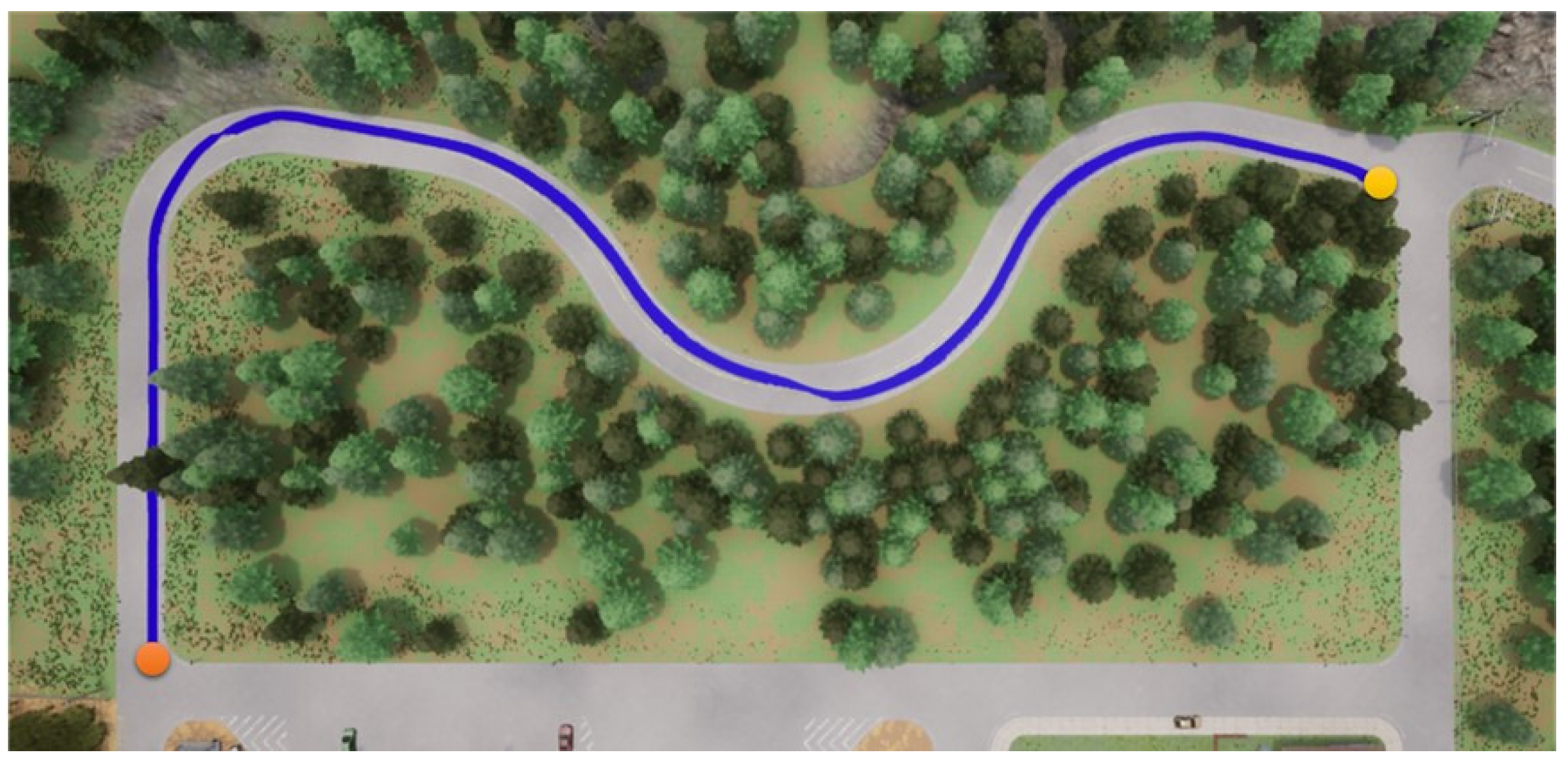

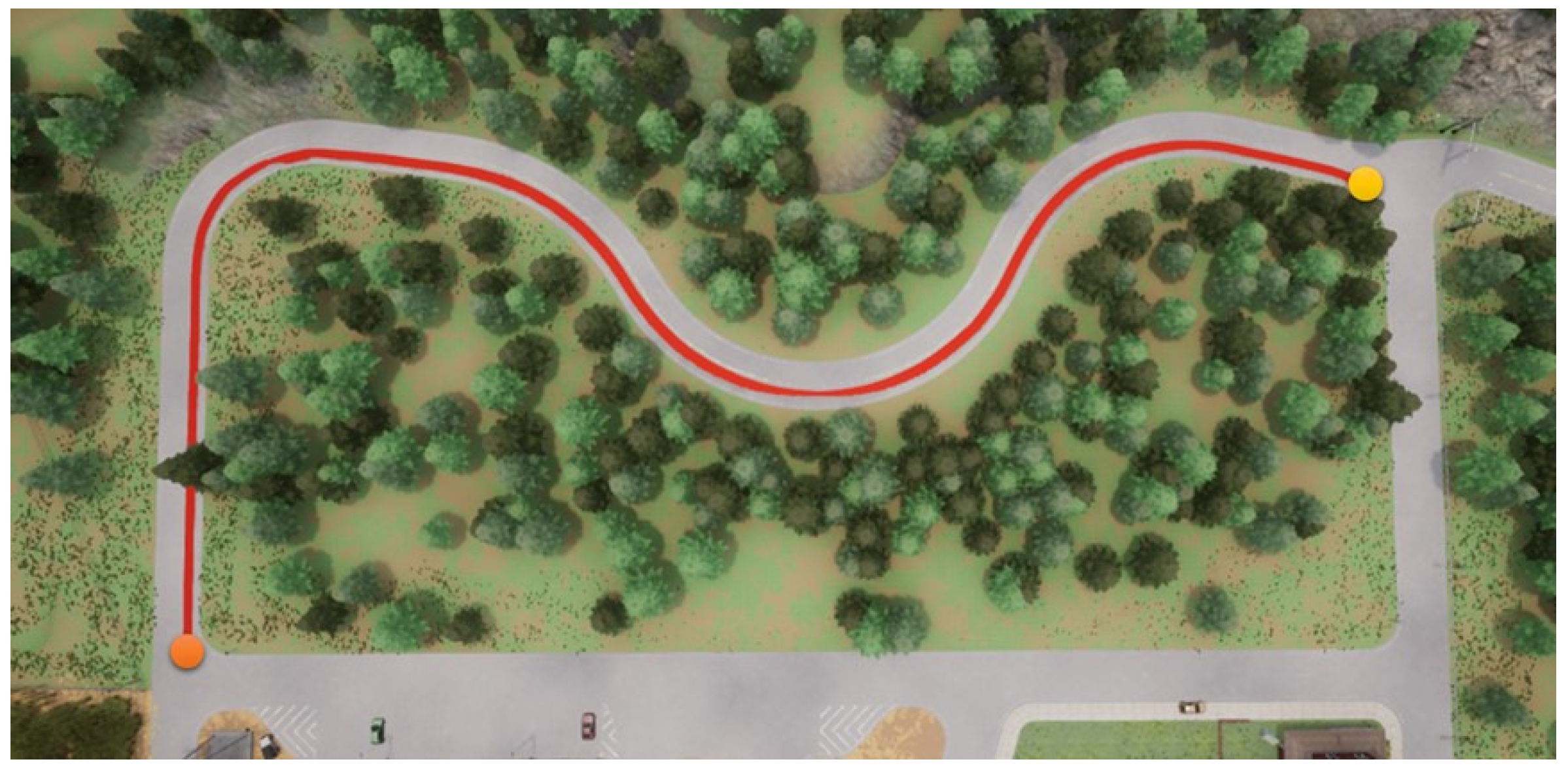

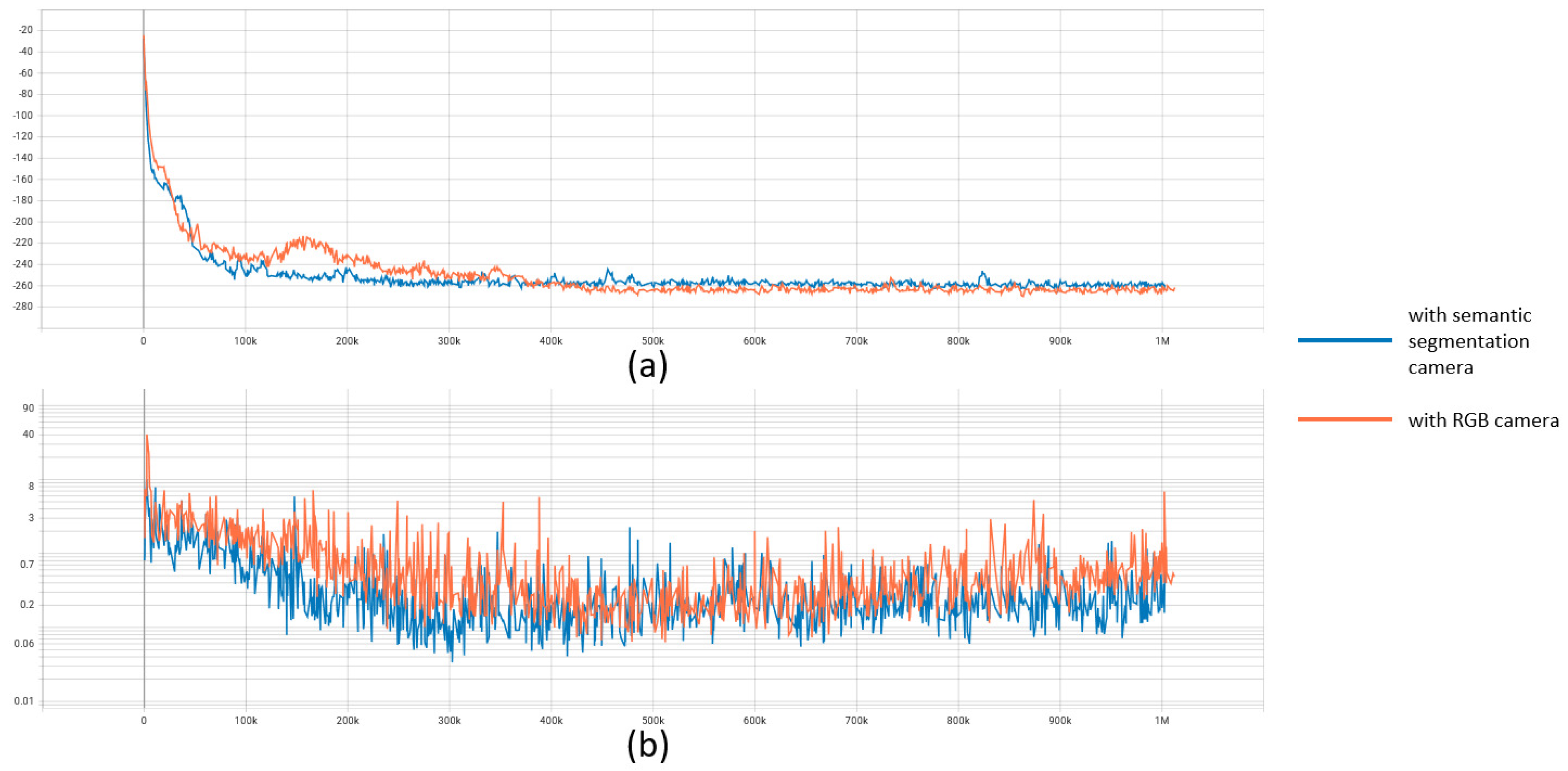

4. Experimental Results

- Two DRL algorithms are adopted for the experiments, i.e., DDPG and RDPG.

- Two kinds of cameras are used to capture the driving vision for the experiments, i.e., RGB and semantic segmentation cameras.

- Hyperparameters:

  - Replay buffer of DDPG: 15,000.

  - Threshold of replay buffer of DDPG: 500.

  - Batch size of DDPG: 150.

  - Replay buffer of RDPG: 6,000.

  - Threshold of replay buffer of RDPG: 500.

  - Batch size of RDPG: 150.

  - Learning rate: 0.0001 (actor) and 0.001 (critic).

  - Learning rate decay: 0.9.

  - ε from the start: 1.

  - ε decay: 0.99.

  - Minimum ε: 0.01.

  - τ: 0.005.

  - μ of OU noise: 0.2 (throttle) and 0 (other).

  - θ of OU noise: 0.35.

  - σ of OU noise: 0.1 (throttle) and 0.2 (other).

- The specification of the computer:

  - CPU: Intel Core i7-11700KF.

  - GPU: Nvidia GeForce RTX 3090 24 GB.

  - RAM: 48 GB DDR4 3600 MHz.

  - Storage: 512 GB SSD.

  - OS: Windows 10.
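To make the exploration-related hyperparameters above concrete, the following minimal sketch shows one way they could be wired together. It reads the three OU-noise values as the long-run mean (μ), the mean-reversion rate (θ), and the volatility (σ), the 1 / 0.99 / 0.01 triple as an exploration scale ε that decays once per episode, and 0.005 as the soft-update rate τ of the target networks. These readings, the step size `dt`, the per-episode decay, the use of ε to scale the noise, and every name in the code are assumptions for illustration; none of it is taken from the authors' implementation.

```python
import math
import random
from dataclasses import dataclass

# Values copied from the hyperparameter list; the symbol names are assumed.
EPS_START, EPS_DECAY, EPS_MIN = 1.0, 0.99, 0.01
TAU = 0.005
OU_THETA = 0.35
OU_MU = {"throttle": 0.2, "other": 0.0}
OU_SIGMA = {"throttle": 0.1, "other": 0.2}


@dataclass
class OUNoise:
    """Euler-Maruyama discretization of an Ornstein-Uhlenbeck process."""
    mu: float
    theta: float
    sigma: float
    dt: float = 1.0  # assumed step size
    x: float = 0.0   # current noise value

    def sample(self, rng: random.Random) -> float:
        # Pull the state back toward mu, then add Gaussian diffusion.
        drift = self.theta * (self.mu - self.x) * self.dt
        diffusion = self.sigma * math.sqrt(self.dt) * rng.gauss(0.0, 1.0)
        self.x += drift + diffusion
        return self.x


def epsilon_at(episode: int) -> float:
    """Exponentially decayed exploration scale, clipped at the minimum."""
    return max(EPS_MIN, EPS_START * EPS_DECAY ** episode)


def soft_update(target: list, online: list, tau: float = TAU) -> list:
    """Move each target weight a fraction tau toward the online weight."""
    return [tau * w + (1.0 - tau) * t for t, w in zip(target, online)]


if __name__ == "__main__":
    rng = random.Random(0)
    noise = {k: OUNoise(OU_MU[k], OU_THETA, OU_SIGMA[k]) for k in OU_MU}
    for episode in (0, 100, 500):
        eps = epsilon_at(episode)
        # Assumed usage: epsilon scales the noise added to the actor output.
        perturbation = {k: eps * n.sample(rng) for k, n in noise.items()}
        print(episode, round(eps, 4), perturbation)
    print(soft_update([0.0, 1.0], [1.0, 1.0]))
```

Under these assumptions, a nonzero mean for the throttle noise would bias early exploration toward accelerating, while the zero mean for the other action dimensions would leave them perturbed symmetrically.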

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tsai, J.; Chang, C.-C.; Li, T. Autonomous Driving Control Based on the Technique of Semantic Segmentation. Sensors 2023, 23, 895. https://doi.org/10.3390/s23020895
