Sow Farrowing Early Warning and Supervision for Embedded Board Implementations

Abstract

1. Introduction

2. Materials and Methods

2.1. Animals, Housing, and Data Collection

2.1.1. Animals and Housing

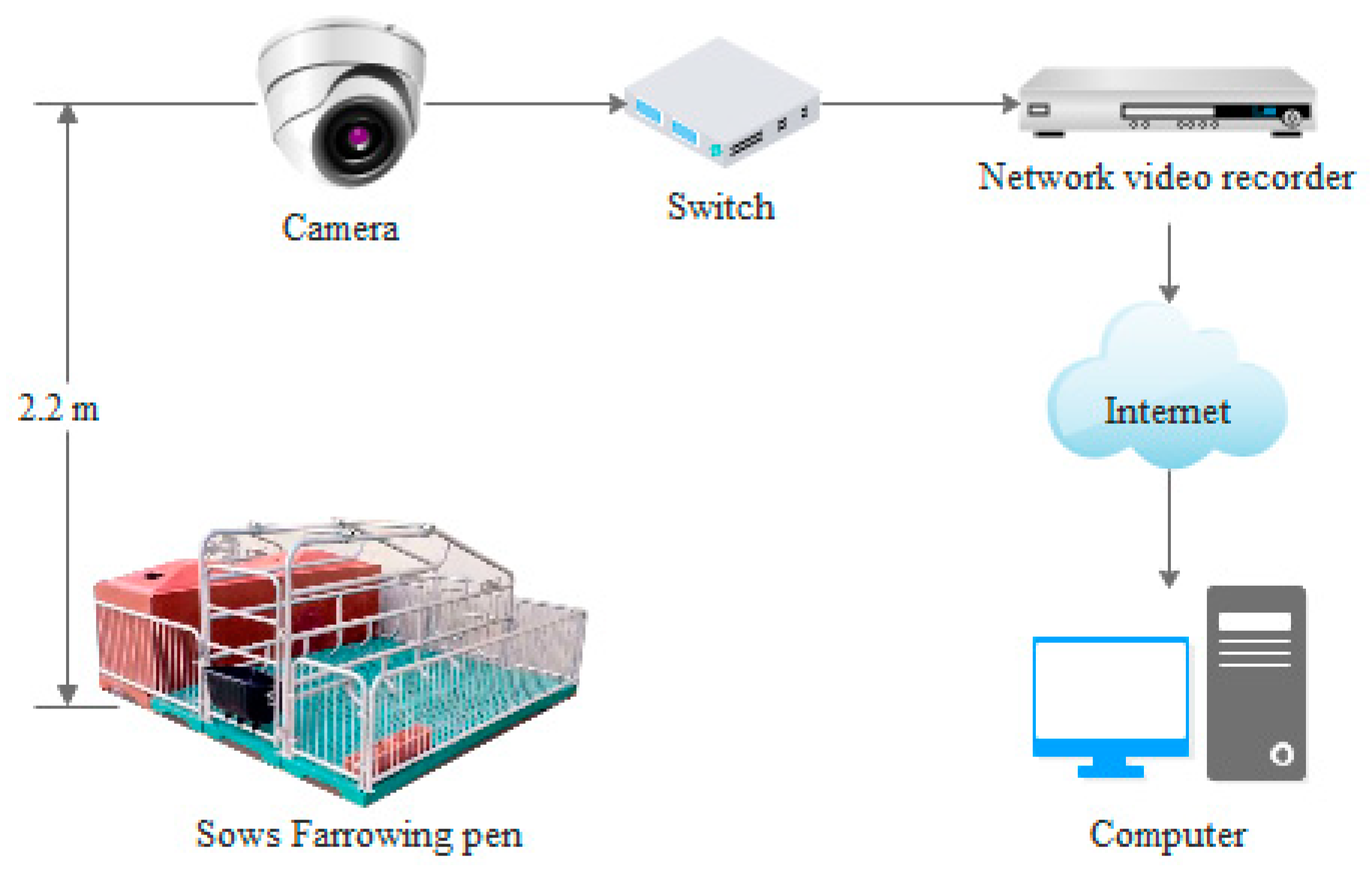

2.1.2. Data-Acquisition Equipment

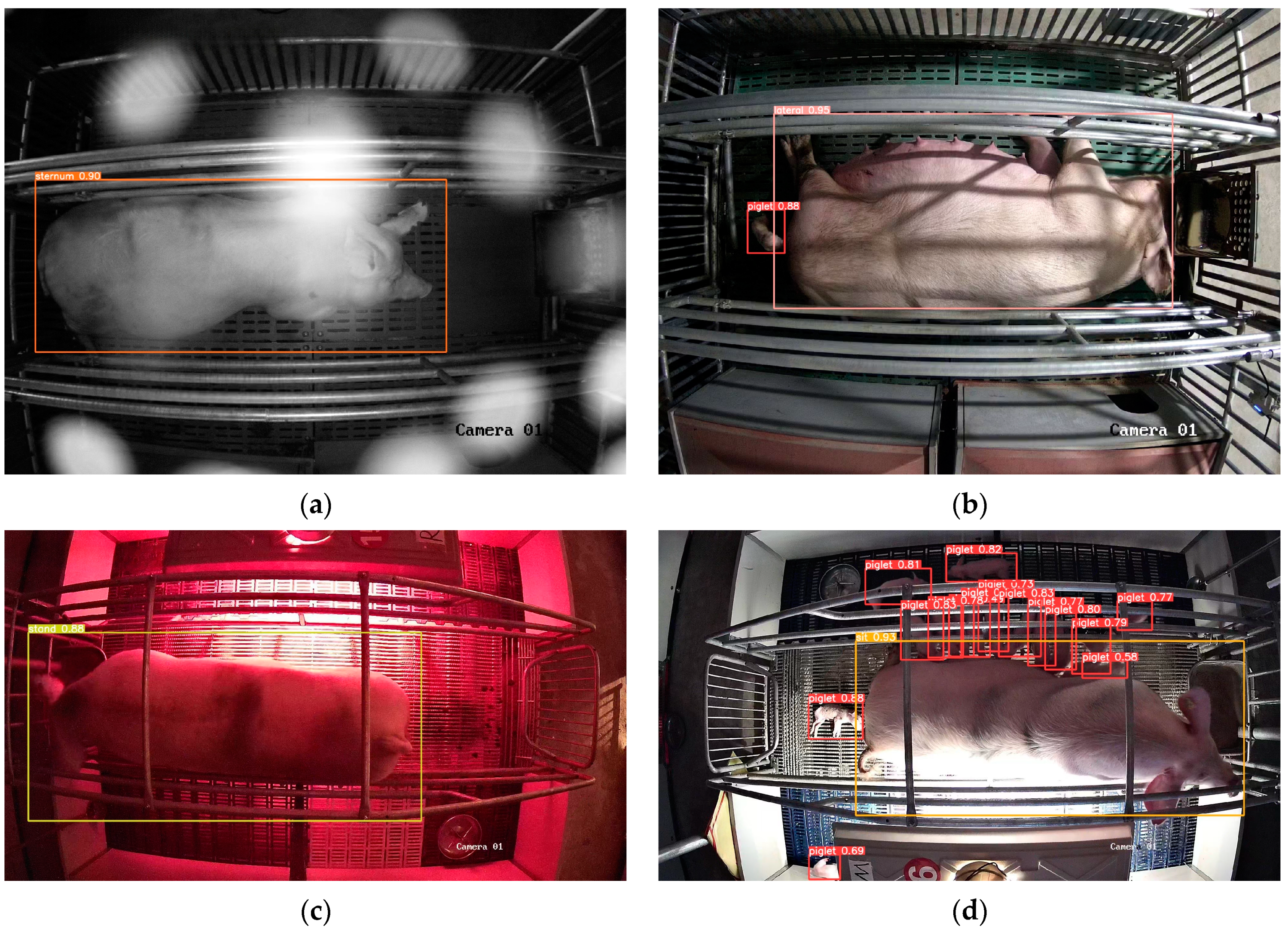

2.1.3. Data Preprocessing

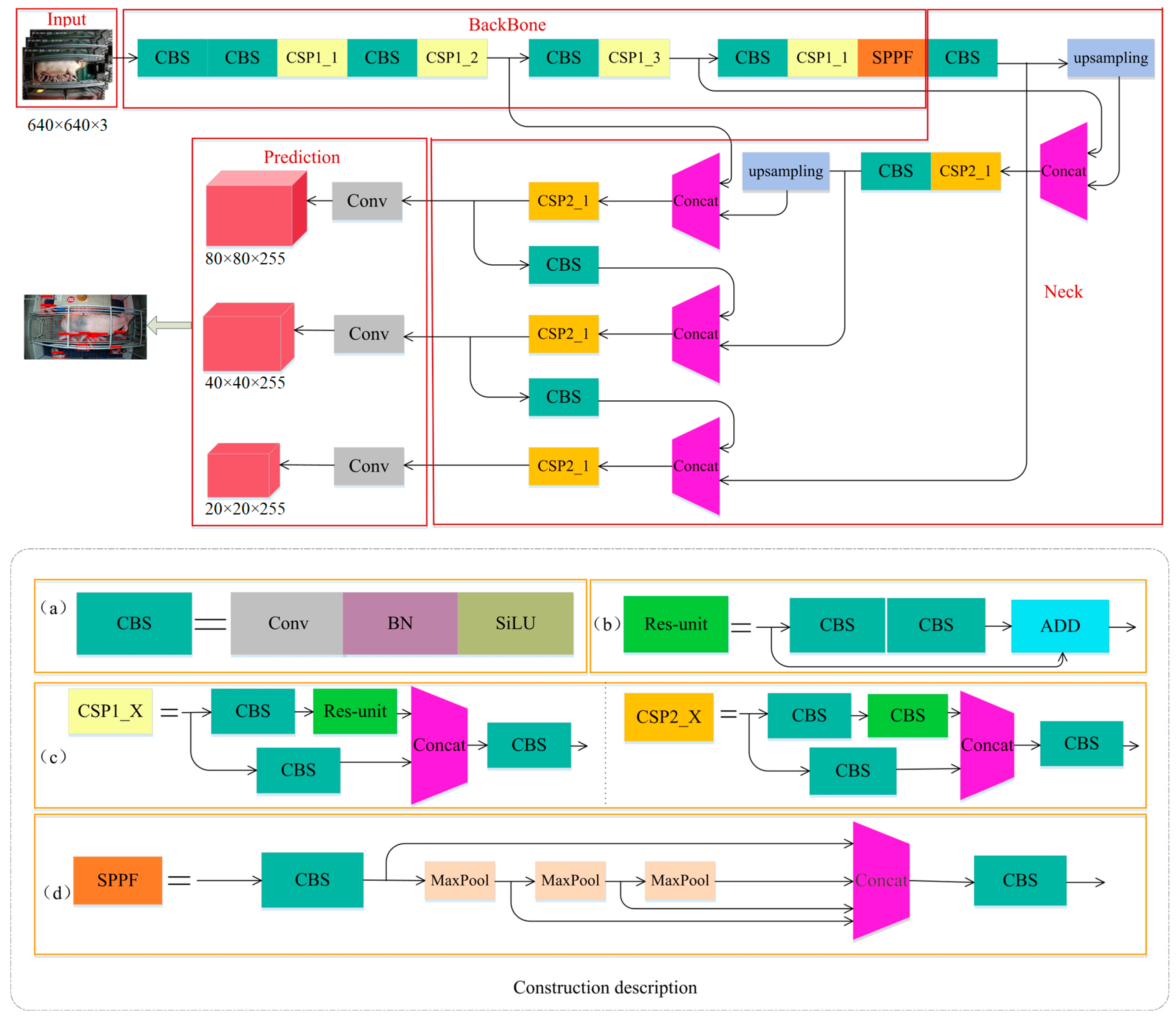

2.2. Object-Detection Algorithm (Computer-Vision Analysis)

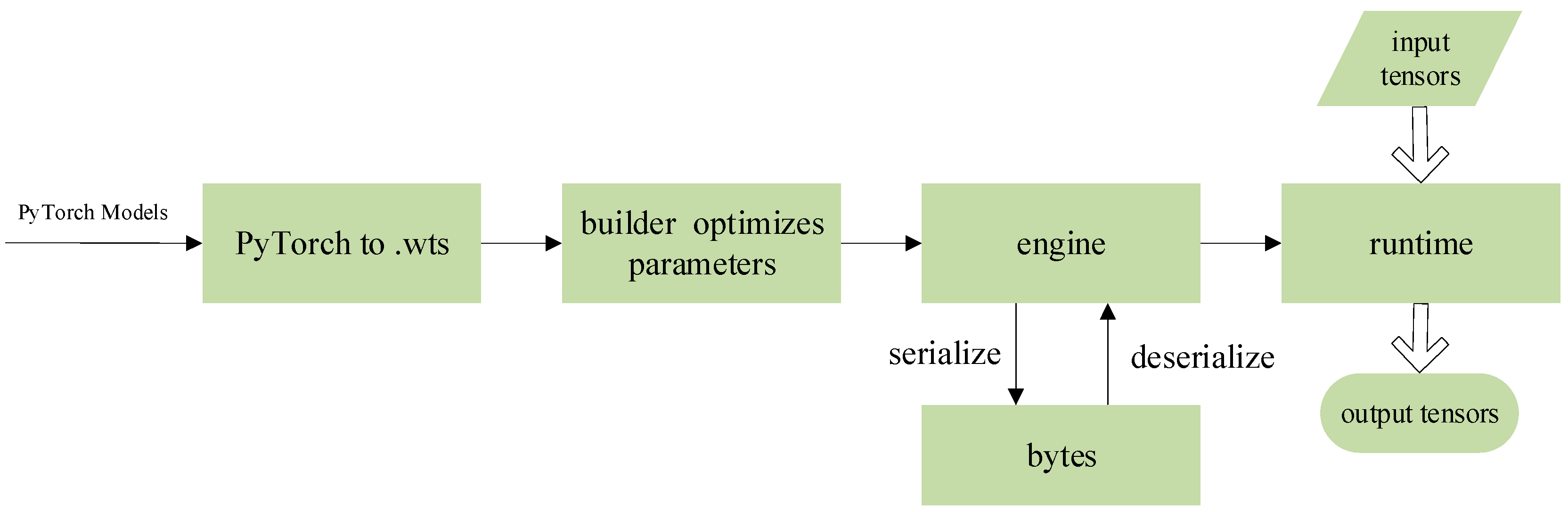

2.3. Model Optimization and Deployment

2.4. Model Evaluation Index

3. Results and Discussion

3.1. Experimental Resources and Model Parameters

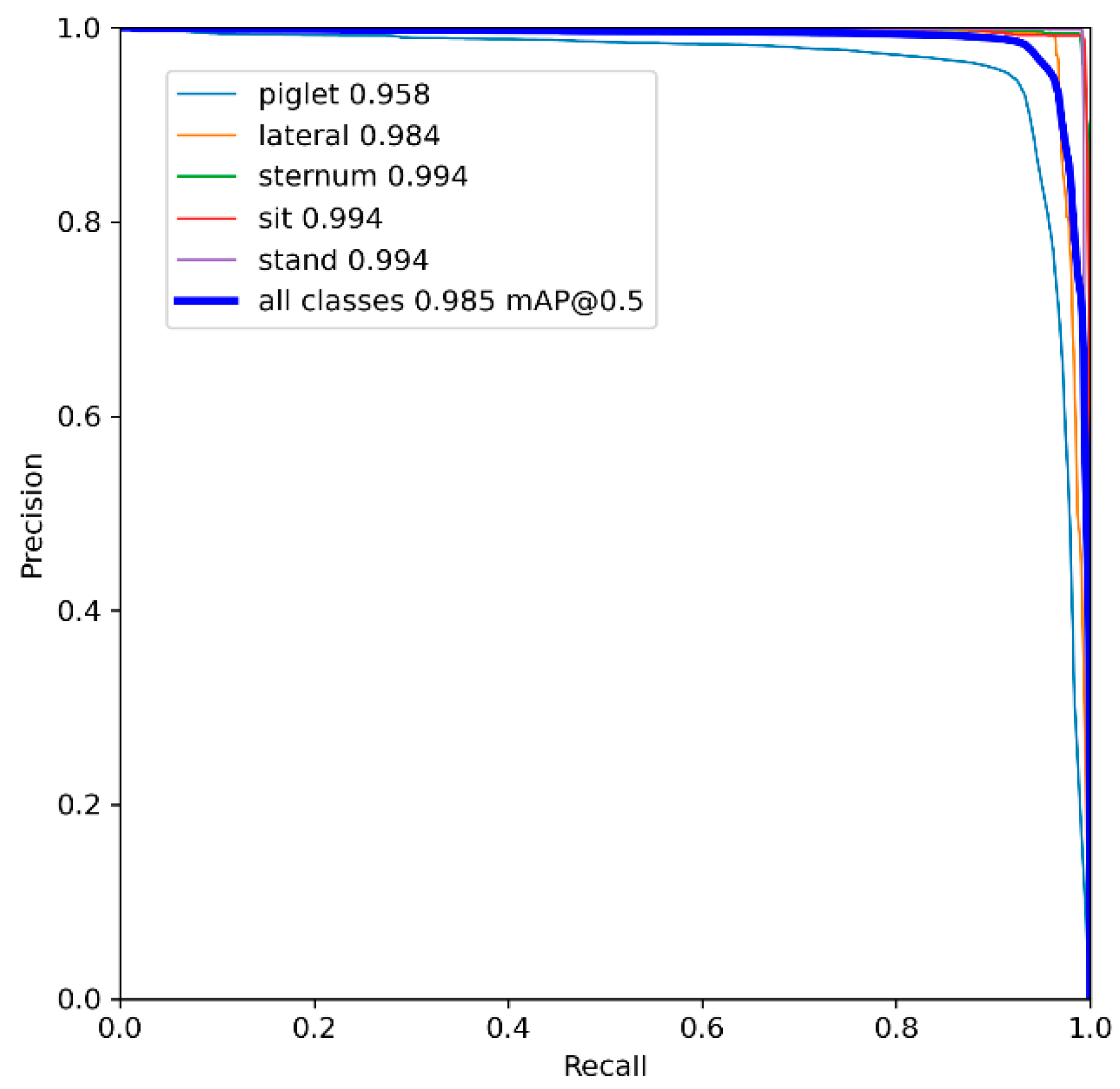

3.2. Evaluation of Detection Performance

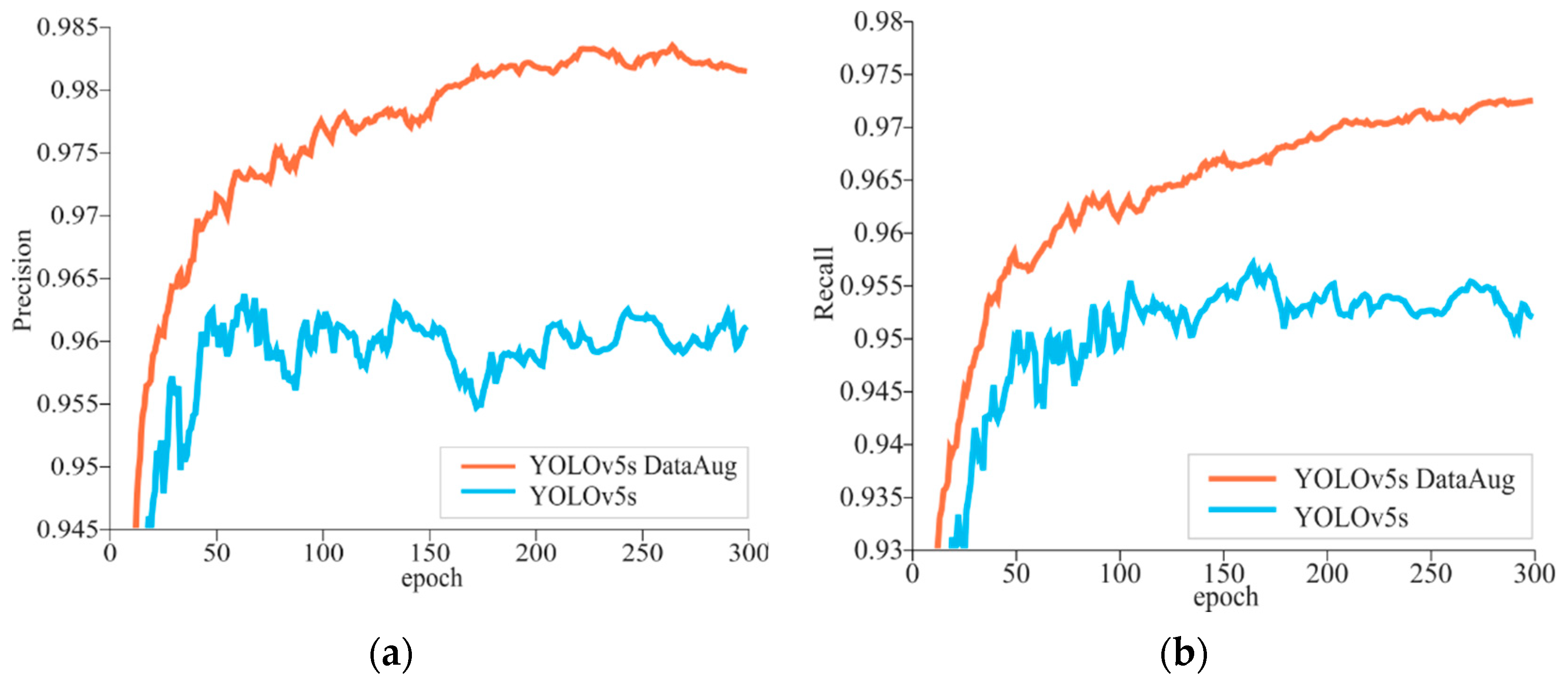

3.2.1. Model Training Results and Analysis

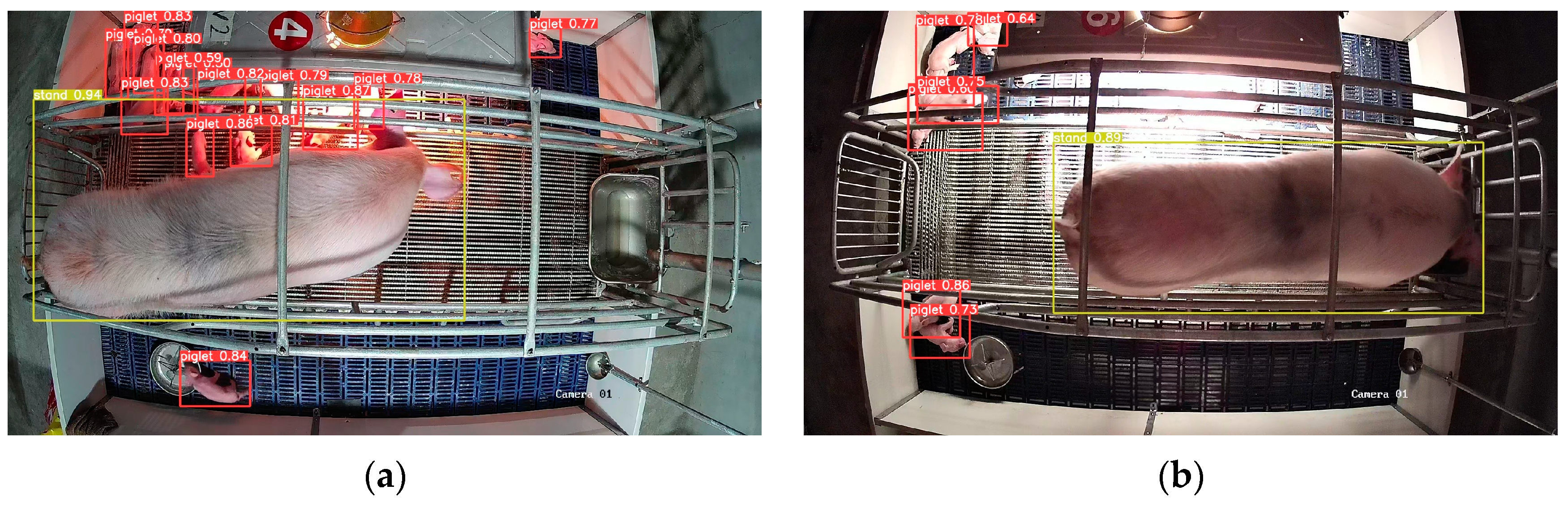

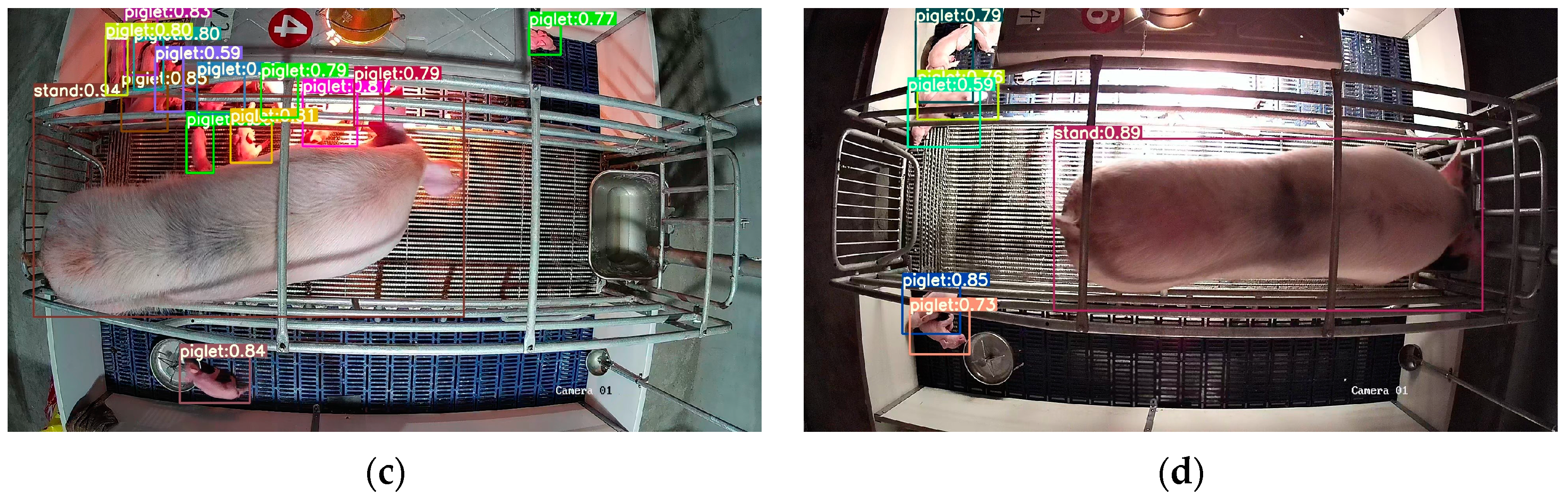

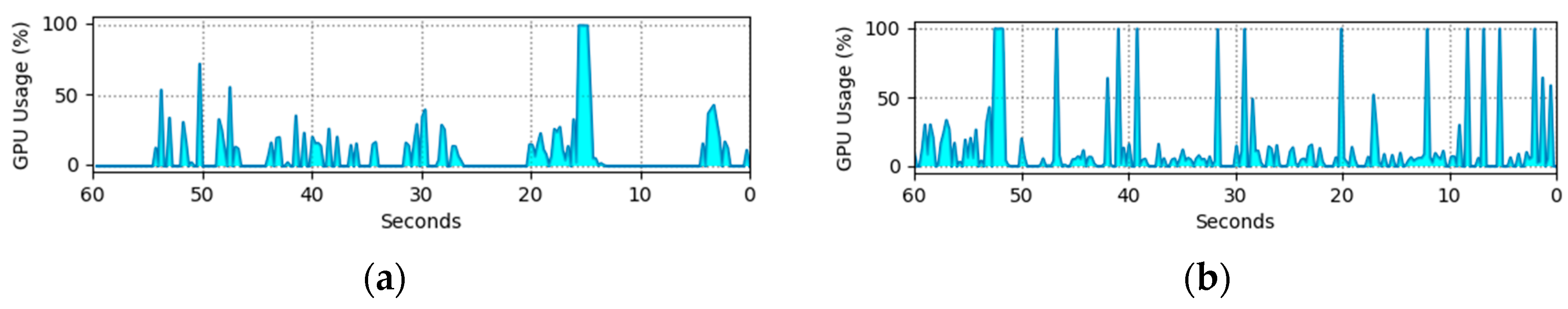

3.2.2. Model Deployment Test Results and Analysis

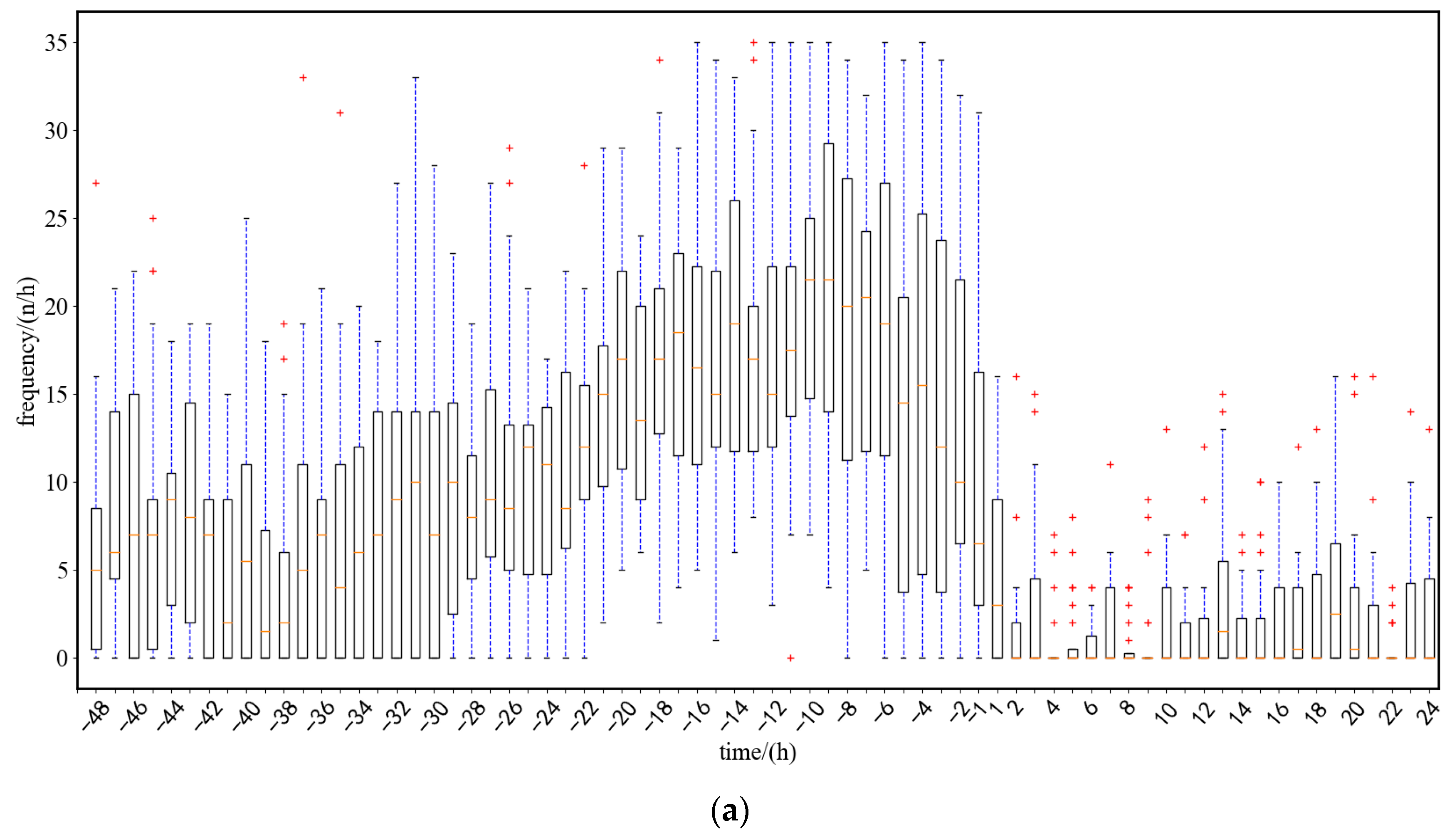

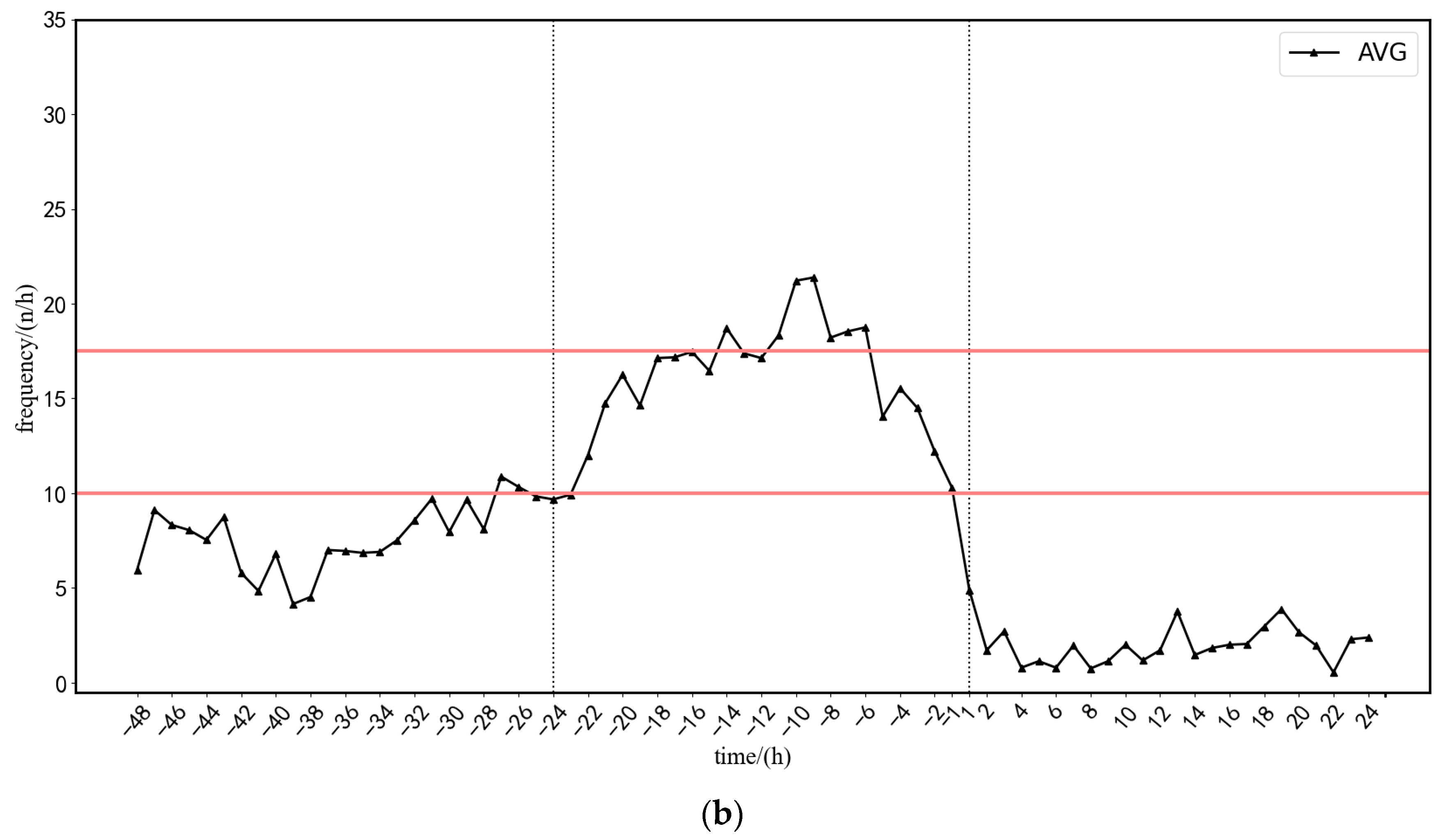

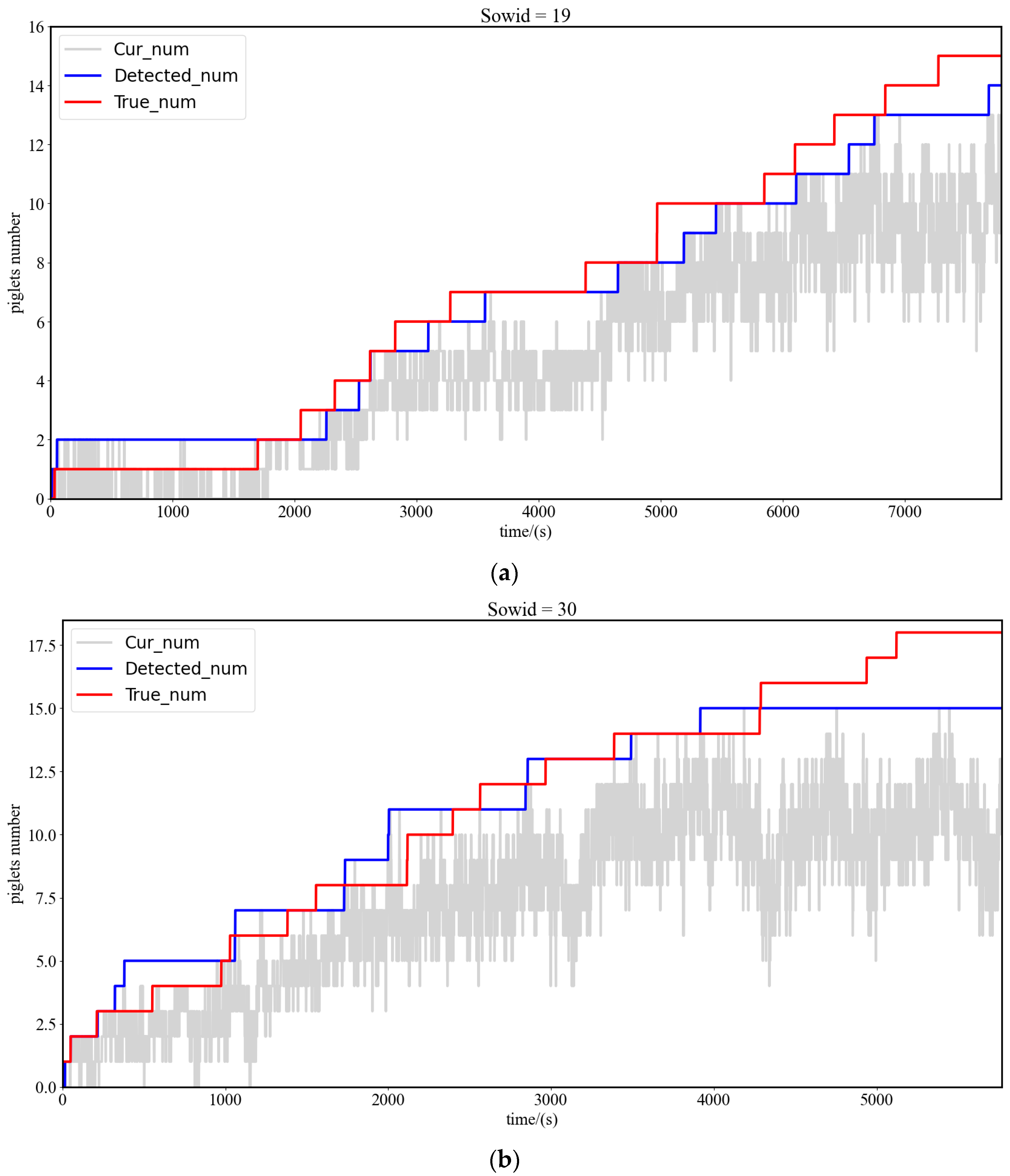

3.3. Implementation of Sow Farrowing Early Warning and Supervision

4. Conclusions

- (1) Video data of 35 sows before and after farrowing were collected and preprocessed to construct a dataset containing four sow postures and newborn piglets. The model for detecting sow postures and newborn piglets was trained and tested on a Quadro P4000 GPU. A comparison of the evaluation indices of the different algorithms showed that YOLOv5 detected sows and piglets of different sizes effectively.

- (2) A TensorRT inference engine was generated for the sow-posture and newborn-piglet detection model. The accelerated model was deployed on the embedded development board, where it ran with higher throughput and lower latency. After migration to Jetson Nano, the model reached a precision of 93.5% and a recall of 92.2%, and the per-image detection time fell from 583.4 ms to 67.2 ms. This is a speed-up of more than 8 times.

- (3) Based on changes in the frequency of sow posture transitions output by the model, early warnings were sent 5 h before the onset of farrowing. The average error between the warning time and the actual farrowing time was 1.02 h. Farrowing alarms were triggered by the “three consecutive detections” rule for the first newborn piglet, and the number of piglets was determined from the number of newborn piglets detected.
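Conclusion (3) describes three rules applied on top of the detector output:

- a warning driven by the frequency of posture transitions;
- a farrowing alarm after “three consecutive detections” of the first piglet;
- a piglet count.

The sketch below shows one way such rules could be coded. It assumes the detector yields one posture label and one piglet count per video frame. Several choices are hypothetical placeholders and are not the authors' documented method: the posture names, the hourly binning, the fixed `threshold`, and the max-per-frame counting rule.

```python
from collections import Counter
from typing import Optional, Sequence


def transitions_per_hour(postures: Sequence[str], times_h: Sequence[float]) -> Counter:
    """Count posture transitions (label changes between consecutive frames), binned by whole hour."""
    counts: Counter = Counter()
    for prev, cur, t in zip(postures, postures[1:], times_h[1:]):
        if cur != prev:
            counts[int(t)] += 1  # attribute the change to the hour of the later frame
    return counts


def early_warning_hour(counts: Counter, threshold: int) -> Optional[int]:
    """First hour whose transition count reaches a hypothetical fixed threshold, else None."""
    for hour in sorted(counts):
        if counts[hour] >= threshold:
            return hour
    return None


def farrowing_alarm_frame(piglets_per_frame: Sequence[int], k: int = 3) -> Optional[int]:
    """Index of the frame that completes k consecutive frames with at least one piglet detected."""
    run = 0
    for i, n in enumerate(piglets_per_frame):
        run = run + 1 if n > 0 else 0  # a frame without a piglet resets the streak
        if run == k:
            return i
    return None


def piglet_count(piglets_per_frame: Sequence[int]) -> int:
    """Hypothetical counting rule: the most piglets detected in any single frame."""
    return max(piglets_per_frame, default=0)


# Usage with made-up per-frame outputs ("lying"/"standing" are placeholder labels).
postures = ["lying", "lying", "standing", "lying", "standing", "standing"]
times_h = [0.1, 0.5, 0.9, 1.2, 1.5, 1.8]
counts = transitions_per_hour(postures, times_h)  # hour 0: 1 transition, hour 1: 2 transitions
print(early_warning_hour(counts, threshold=2))  # 1
print(farrowing_alarm_frame([0, 1, 0, 1, 1, 1, 2]))  # 5
print(piglet_count([0, 1, 0, 1, 1, 1, 2]))  # 2
```

Requiring several consecutive positive frames before raising the alarm trades a short delay for robustness against single-frame false detections. This is the kind of error reported for piglets under difficult lighting.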

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | Precision | Recall | Speed (image) | Speed (video) | Speed (camera) |
|---|---|---|---|---|---|
| YOLOX-nano | 0.929 | 0.779 | 20.4 ms | 4.8 ms | 7.3 ms |
| NanoDet-m | 0.967 | 0.833 | 8.0 ms | 4.4 ms | 4.2 ms |
| YOLOv5s | 0.961 | 0.952 | - | - | - |
| YOLOv5s_DataAug | 0.982 | 0.973 | 9.9 ms | 9.8 ms | 8.1 ms |
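The comparison table reports precision and recall separately. They are defined as follows:

- precision P = TP/(TP + FP);
- recall R = TP/(TP + FN).

One common way to combine them into a single figure is the F1 score, their harmonic mean 2PR/(P + R). F1 is not among the indices listed in the table. The short calculation below derives it from the tabulated values purely as an illustration.

```python
# (precision, recall) per model, copied from the comparison table above.
RESULTS = {
    "YOLOX-nano": (0.929, 0.779),
    "NanoDet-m": (0.967, 0.833),
    "YOLOv5s": (0.961, 0.952),
    "YOLOv5s_DataAug": (0.982, 0.973),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


for model, (p, r) in RESULTS.items():
    print(f"{model}: F1 = {f1_score(p, r):.3f}")
# YOLOX-nano: F1 = 0.847
# NanoDet-m: F1 = 0.895
# YOLOv5s: F1 = 0.956
# YOLOv5s_DataAug: F1 = 0.977
```

On this measure, the lightweight YOLOX-nano and NanoDet-m models trail mainly because of their lower recall. Their precision is comparable to that of the YOLOv5s variants.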

| Scenarios | Missed Detection Rate for Sow Posture | False Detection Rate for Sow Posture | Number of Missed Detections of Piglets | Number of False Detections of Piglets |
|---|---|---|---|---|
| Complex light | 6.33% | 15.19% | 0 | 11 |
| First piglet born | - | - | 2 | 5 |
| Different heat-lamp colors | 0% | 1.2% | 7 | 1 |
| Heat lamp switched on at night | 3.36% | 7.56% | 15 | 10 |

| Model Format | Platform | Speed (image) | Speed (video) | Precision | Recall |
|---|---|---|---|---|---|
| .pth | Quadro P4000 | 9.9 ms | 9.8 ms | 0.982 | 0.973 |
| .pth | Jetson Nano | 583.4 ms | 591.4 ms | 0.982 | 0.973 |
| .engine | Jetson Nano | 67.2 ms | 80.3 ms | 0.935 | 0.922 |
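The acceleration on Jetson Nano can be checked directly from the two Jetson Nano rows of the deployment table. The speed-up is the ratio of per-frame times, and throughput in frames per second is 1000 divided by the per-frame time in milliseconds. The snippet only re-derives these ratios from the tabulated numbers; the dictionary and function names are illustrative.

```python
# Per-frame inference times (ms) on Jetson Nano, copied from the table above.
PTH_MS = {"image": 583.4, "video": 591.4}   # .pth model
ENGINE_MS = {"image": 67.2, "video": 80.3}  # TensorRT .engine model


def speedup(before_ms: float, after_ms: float) -> float:
    """Ratio of per-frame times: how many times faster the accelerated model runs."""
    return before_ms / after_ms


def fps(ms_per_frame: float) -> float:
    """Frames per second for a given per-frame time in milliseconds."""
    return 1000.0 / ms_per_frame


for mode in ("image", "video"):
    print(
        f"{mode}: {speedup(PTH_MS[mode], ENGINE_MS[mode]):.2f}x faster, "
        f"{fps(PTH_MS[mode]):.2f} -> {fps(ENGINE_MS[mode]):.2f} FPS"
    )
# image: 8.68x faster, 1.71 -> 14.88 FPS
# video: 7.36x faster, 1.69 -> 12.45 FPS
```

A speed-up above 8 therefore holds for the image benchmark, while the video benchmark gives roughly 7.4. The precision and recall of the .engine model are lower than those of the .pth model (0.935 vs. 0.982 and 0.922 vs. 0.973).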

| Sow ID | CA | DA | Sow ID | CA | DA | Sow ID | CA | DA |
|---|---|---|---|---|---|---|---|---|
| 03 | 44.9% | 95.0% | 15 | 61.7% | 84.4% | 25 | 58.8% | 99.3% |
| 04 | 50.7% | 91.7% | 16 | 42.3% | 90.3% | 26 | 56.3% | 83.6% |
| 05 | 58.1% | 94.1% | 17 | 52.5% | 89.9% | 27 | 58.6% | 87.1% |
| 06 | 75.2% | 95.9% | 18 | 57.9% | 92.1% | 28 | 66.1% | 99.4% |
| 07 | 52.2% | 96.4% | 19 | 69.0% | 95.2% | 29 | 82.3% | 88.0% |
| 08 | 66.6% | 96.8% | 20 | 93.6% | 98.3% | 30 | 66.2% | 98.4% |
| 09 | 65.7% | 94.3% | 21 | 73.9% | 99.6% | | | |
| 10 | 66.2% | 81.2% | 22 | 71.0% | 93.1% | | | |
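The per-sow table can be summarized with a few lines of code. CA and DA are not expanded in this text, so the sketch treats them only as percentages. It computes their means across the 22 listed sows and identifies the sows with the lowest values; the variable names are illustrative.

```python
from statistics import mean

# (sow ID, CA %, DA %) copied from the per-sow table above.
ROWS = [
    ("03", 44.9, 95.0), ("04", 50.7, 91.7), ("05", 58.1, 94.1), ("06", 75.2, 95.9),
    ("07", 52.2, 96.4), ("08", 66.6, 96.8), ("09", 65.7, 94.3), ("10", 66.2, 81.2),
    ("15", 61.7, 84.4), ("16", 42.3, 90.3), ("17", 52.5, 89.9), ("18", 57.9, 92.1),
    ("19", 69.0, 95.2), ("20", 93.6, 98.3), ("21", 73.9, 99.6), ("22", 71.0, 93.1),
    ("25", 58.8, 99.3), ("26", 56.3, 83.6), ("27", 58.6, 87.1), ("28", 66.1, 99.4),
    ("29", 82.3, 88.0), ("30", 66.2, 98.4),
]

mean_ca = mean(ca for _, ca, _ in ROWS)
mean_da = mean(da for _, _, da in ROWS)
lowest_ca = min(ROWS, key=lambda row: row[1])  # sow with the smallest CA
lowest_da = min(ROWS, key=lambda row: row[2])  # sow with the smallest DA

print(f"mean CA = {mean_ca:.1f}%, mean DA = {mean_da:.1f}%")  # mean CA = 63.2%, mean DA = 92.9%
print(f"lowest CA: sow {lowest_ca[0]} ({lowest_ca[1]}%)")  # lowest CA: sow 16 (42.3%)
print(f"lowest DA: sow {lowest_da[0]} ({lowest_da[2]}%)")  # lowest DA: sow 10 (81.2%)
```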

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, J.; Zhou, J.; Liu, L.; Shu, C.; Shen, M.; Yao, W. Sow Farrowing Early Warning and Supervision for Embedded Board Implementations. Sensors 2023, 23, 727. https://doi.org/10.3390/s23020727
