Abstract

Photoacoustic (PA) imaging is a non-invasive biomedical imaging technique that combines the benefits of optics and acoustics to provide high-resolution structural and functional information. This review highlights the emergence of three-dimensional handheld PA imaging systems as a promising approach for various biomedical applications. These systems are classified into four techniques: direct imaging with 2D ultrasound (US) arrays, mechanical-scanning-based imaging with 1D US arrays, mirror-scanning-based imaging, and freehand-scanning-based imaging. A comprehensive overview of recent research in each imaging technique is provided, and potential solutions for system limitations are discussed. This review will serve as a valuable resource for researchers and practitioners interested in advancements and opportunities in three-dimensional handheld PA imaging technology.

1. Introduction

Photoacoustic (PA) imaging is a non-invasive biomedical imaging modality that has been gaining increasing attention due to its unique ability to provide high-resolution structural and functional information on various endogenous light absorbers without needing exogenous contrast agents [1,2,3,4,5]. This imaging technique is based on the PA effect [6], which occurs when a short-pulse laser irradiates a light-absorbing tissue, causing local thermal expansion and contraction and subsequently generating ultrasound (US) waves. Collected US signals are reconstructed into PA images that combine beneficial features of both optics and acoustics, including high spatial resolution, deep tissue penetration, and high optical contrast. Further, PA images can visualize multi-scale objects from cells to organs using the same endogenous light absorbers [7,8]. Moreover, compared to traditional medical imaging modalities such as magnetic resonance imaging (MRI), computed tomography (CT), and positron emission tomography (PET), PA imaging systems offer several advantages, including simpler system configuration, relatively lower cost, and the use of non-ionizing radiation [9,10]. These benefits have resulted in the emergence of PA imaging as a promising technique for various biomedical applications, including imaging of cancers, peripheral diseases, skin diseases, and hemorrhagic and ischemic diseases [11,12,13,14,15,16,17,18,19,20,21,22].

Two-dimensional (2D) cross-sectional PA imaging systems are widely used due to their relatively simple implementation by adding a laser to a typical US imaging system. Moreover, PA images can be acquired at the same location as conventional US images, thus facilitating clinical quantification analysis [23,24,25,26,27]. However, despite the advantages of 2D imaging, poor reproducibility performance can arise depending on the operator’s proficiency and system sensitivity, which can adversely affect accurate clinical analysis [28]. To address these issues, the development of three-dimensional (3D) PA imaging systems utilizing various scanning methods such as direct, mechanical, and mirror-based scanning has gained momentum. Direct scanning employs 2D hemispherical or matrix-shaped array US transducers (USTs) for real-time acquisition of volumetric PA images [29,30]. Mechanical scanning combines 1D array USTs of various shapes with a motorized stage to obtain large-area PA images, albeit at a relatively slower pace. Mirror-scanning-based 3D PA imaging utilizes single-element USTs with microelectromechanical systems (MEMS) or galvanometer scanners (GS), enabling high-resolution images with fast imaging speed, although it has a limited range of acquisition [31,32].

While the aforementioned fixed 3D PA imaging systems have demonstrated promising results, the inherent fixed nature of these systems can potentially limit the acquisition of PA images due to patient-specific factors or the specific anatomical location of the pathology under consideration [9,33,34,35]. Consequently, to expedite clinical translation, it becomes imperative to develop versatile 3D handheld PA imaging systems. Here, we aim to provide a detailed review of 3D handheld PA imaging systems. Our strategy involves classifying these systems into four scanning techniques—direct [30,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57], mechanical [10,58,59,60,61,62], mirror [31,32,63,64,65,66,67], and freehand [50,67,68,69,70] scanning—and providing a comprehensive summary of the latest research corresponding to each technique. Freehand scanning involves image reconstruction through image processing techniques with or without additional aids such as position sensors and optical patterns. It is not limited by the UST type. Further, we discuss potential solutions to overcome 3D handheld PA imaging system limitations such as motion artifacts, anisotropic spatial resolution, and limited view artifacts. We believe that our review will provide valuable insights to researchers and practitioners in the field of 3D handheld PA imaging to uncover the latest developments and opportunities to advance this technology.

2. Classification of 3D Handheld PA Imaging Systems into Four Scanning Techniques

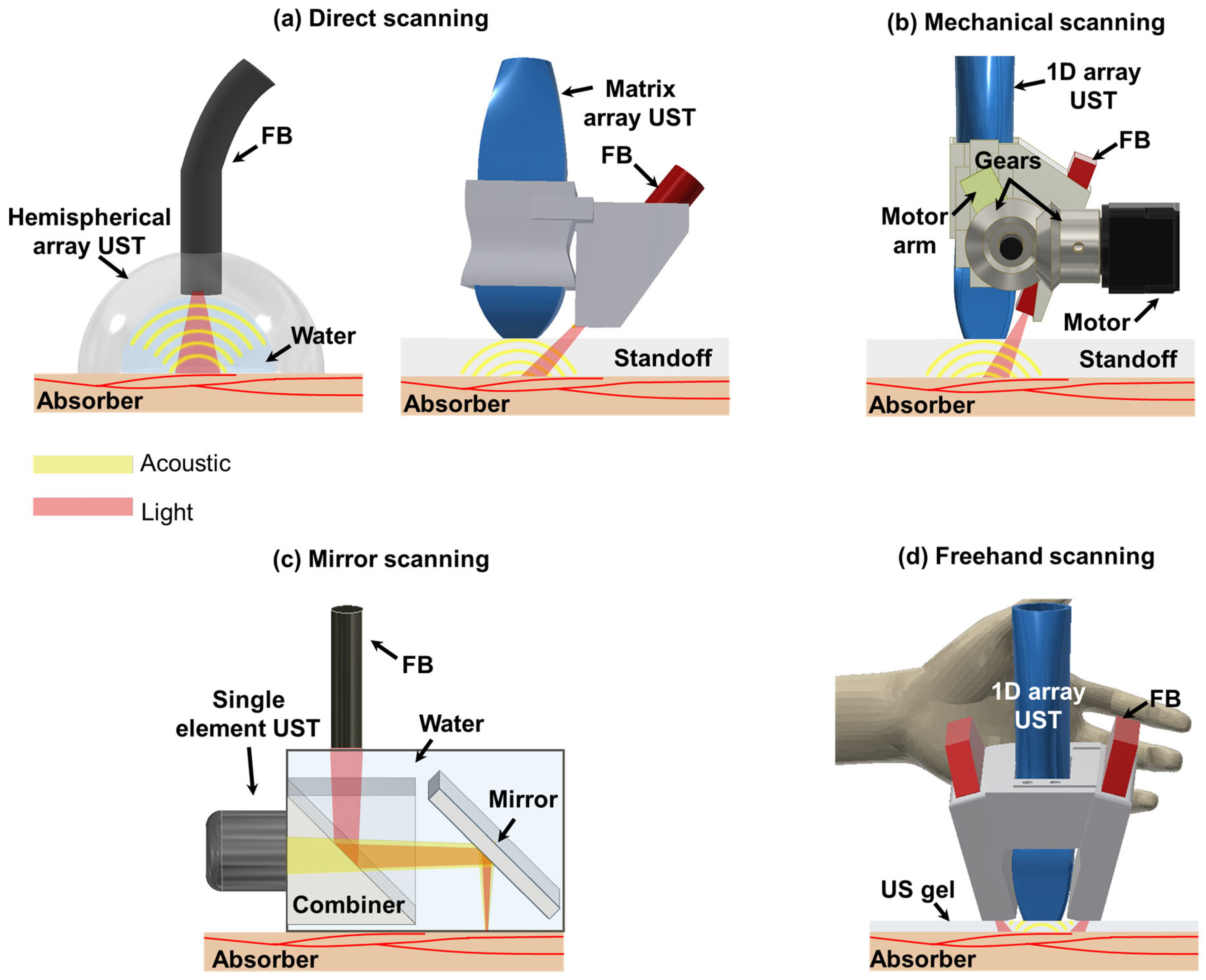

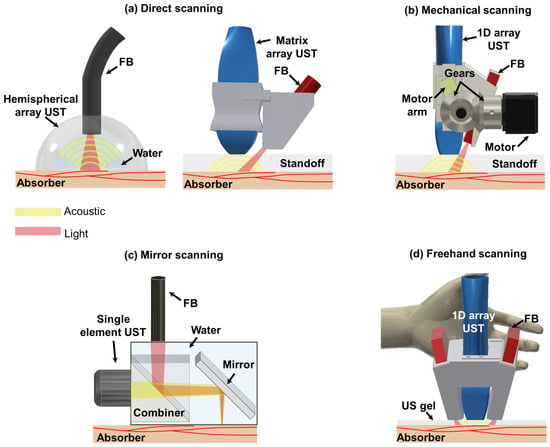

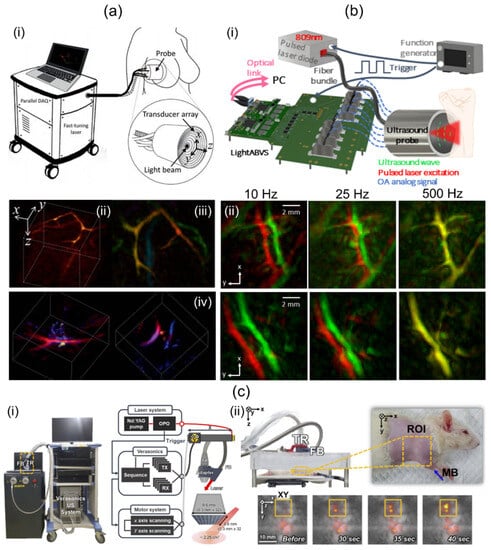

Three-dimensional handheld PA imaging systems can be categorized into four distinct groups based on the scanning mechanism employed: direct scanning, mechanical scanning, mirror-based scanning, and freehand scanning (Figure 1). Each of these systems possesses unique characteristics and limitations. Therefore, it is essential for researchers to comprehensively understand general features associated with each system to facilitate further advancements in this field.

Figure 1.

Schematics of 3D handheld PA imaging systems. (a) Direct scanning with 2D array UST-based 3D handheld PA imaging system. (b) Mechanical scanning with 1D array UST-based 3D handheld PA imaging system. (c) Mirror scanning with single-element UST-based 3D handheld PA imaging system. (d) Freehand scanning with 1D array UST-based 3D handheld PA imaging system. PA, photoacoustic; UST, ultrasound transducer; FB, fibers; LS, linear stage.

Direct scanning involves utilization of 2D array USTs with various shapes such as hemispherical and matrix configurations [30,40,57]. These 2D array USTs enable the acquisition of 3D image data, facilitating direct generation of real-time volumetric PA images. Imaging systems based on 2D array USTs offer isotropic spatial resolutions in both lateral and elevational directions thanks to the arrangement of their transducer elements. Furthermore, a 2D hemispherical array UST provides optimal angular coverage for receiving spherical spreading PA signals [71]. This mitigates the effects of a limited view, thereby enhancing the spatial resolutions of obtained images. It is crucial to maintain coaxiality between US and light beams to achieve good signal-to-noise ratios (SNRs). To accomplish this, some imaging systems based on a 2D hemispherical array UST or certain matrix array USTs incorporate a hole in the center of the UST array, which allows light to be directed towards the US imaging plane [39,46,54].

Mechanical-scanning-based 3D handheld PA imaging systems mainly use 1D array USTs [58,59]. With the help of mechanical movements, 1D array USTs can collect scan data in the scanning direction. Three-dimensional image reconstruction is typically performed by stacking collected data in the scanning direction. Since 1D array USTs provide array elements in the lateral direction, beamforming can be implemented in the lateral direction. However, they do not provide array elements in the elevational direction, so beamforming cannot be performed in that direction, which results in anisotropic spatial resolutions. Although the synthetic aperture focusing technique (SAFT) can be applied as an alternative using scan data in the elevational direction [72], its effectiveness is low due to the tight elevational beam focus fixed by the US lens. Fiber bundles (FBs) are routinely used for light transmission. They are obliquely attached to the side of USTs using an adapter. To make the obliquely irradiated light area intersect the US imaging plane, these systems typically use a stand-off such as water or a gel pad between the bottom of USTs and image targets [9,10,58].
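The stacking step described above can be sketched as follows. This is a minimal illustration rather than the implementation of any cited system: the function names `stack_bscans` and `max_amplitude_projection` and the `[elevation][depth][lateral]` index order are assumptions made for this example.

```python
def stack_bscans(bscans):
    """Stack 2D B-scans along the scanning (elevational) direction.

    Each B-scan is a list of rows indexed as [depth][lateral].
    Returns a volume indexed as [elevation][depth][lateral].
    """
    if not bscans:
        raise ValueError("at least one B-scan is required")
    n_depth, n_lateral = len(bscans[0]), len(bscans[0][0])
    volume = []
    for bscan in bscans:
        if len(bscan) != n_depth or any(len(row) != n_lateral for row in bscan):
            raise ValueError("all B-scans must share the same shape")
        volume.append([list(row) for row in bscan])  # copy so the input is untouched
    return volume


def max_amplitude_projection(volume):
    """Project the volume along depth, keeping the largest absolute amplitude.

    Returns a 2D map indexed as [elevation][lateral].
    """
    return [
        [max(abs(bscan[d][x]) for d in range(len(bscan)))
         for x in range(len(bscan[0]))]
        for bscan in volume
    ]
```

Because no beamforming is applied across the stacking axis, the elevational resolution of such a volume is set by the acoustic lens and the scan step, which is the anisotropy noted above.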

Mirror-scanning-based 3D handheld PA imaging systems use single-element USTs with different mirrors (e.g., MEMS or GS) to ensure high imaging speed [31,32]. Spatial resolutions of these systems are generally superior to those of array-based imaging systems because they use high-frequency single-element USTs. Some of them use tight optical focusing to provide better lateral resolutions [73]. However, their penetration depths are shallower than those of other systems. In particular, penetration depths of systems that rely on optical focusing are limited to 1 mm, the mean optical path length of the biological tissue [74]. Thus, these systems are disadvantageous for clinical imaging [63]. To ensure a coaxial configuration between light and acoustic beams, they typically use beam combiners [64,67].

Freehand scanning can be implemented with all UST types. Additional devices such as a global positioning system (GPS) and optical tracker are attached to UST bodies for recording the scanning location [69,70]. With scan data and the information of the scanning location, 3D image reconstruction is implemented. In addition, by analyzing similarity between successively acquired freehand scan images, position calibration can be performed without additional hardware units [50]. Furthermore, they provide flexibility to combine with different scanning-based 3D PA imaging systems [67]. Therefore, spatial resolutions, penetration depths, and coaxial configurations might vary depending on the UST type and scanning system.

These four scanning mechanisms provide useful criteria for distinguishing 3D handheld PA imaging systems. Their respective characteristics are summarized in Table 1.

Table 1.

General features of 3D clinical handheld imaging systems.

2.1. Three-Dimensional Handheld PA Imaging Systems Using Direct Scanning

Direct 3D scanning is typically achieved using hemispherical or matrix-shaped 2D array USTs. In PA imaging, the PA wave propagates in a three-dimensional direction, which makes hemispherical 2D array USTs particularly effective for wide angular coverage, minimizing limited view artifacts and ensuring high SNRs [71]. Meanwhile, matrix-shaped 2D array USTs can be custom fabricated into smaller sizes, making them highly suitable for handheld applications [54,57]. Furthermore, their unique features make them suitable for use as wearable devices [55].

Deán-Ben et al. [39] have introduced a 3D multispectral PA imaging system based on a 2D hemispherical array UST and visualized human breast with the system. They used an optical parametric oscillator (OPO) laser, a hemispherical UST, and a custom-designed data acquisition (DAQ) system (Figure 2a(i)). The OPO laser emitted short pulses (<10 ns) and was rapidly tuned over a wavelength range of 690 to 900 nm. The laser fluence and average power were less than 15 mJ/cm2 and 200 mW/cm2, respectively. The hemispherical UST was composed of 256 piezocomposite elements arranged on a 40 mm radius hemispherical surface that covered a solid angle of 90°. Each element was about 3 × 3 mm2. The UST provided a center frequency of 4 MHz with a 100% bandwidth. At the center of the UST, an 8 mm cylindrical cavity was formed. Light was delivered through the cavity using an FB. Data acquisition was conducted at 40 Msamples/s with the DAQ system. Single-wavelength volume imaging was performed at a rate of 10 Hz. For online image reconstruction, a back-projection algorithm accelerated with a graphics processing unit (GPU) was implemented. For more accurate image reconstruction, 3D model-based image reconstruction was conducted offline. The spatial resolution, measured by imaging a spherical object, was about 0.2 mm. Spectral unmixing was performed using the least-squares method. Unmixed PA oxy-hemoglobin (HbO2), deoxy-hemoglobin (Hb), and melanin images of human breast are shown in Figure 2a(ii).
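As an illustration of least-squares spectral unmixing in general, the sketch below solves the normal equations for one voxel. It is not the procedure of [39]: the function names are hypothetical, fluence variation with wavelength is ignored, and any absorption values passed in are placeholders that would need to be replaced with tabulated spectra.

```python
def least_squares_unmix(spectra, measurements):
    """Estimate relative chromophore concentrations for one voxel.

    spectra[w][k]   : absorption of chromophore k at wavelength w
    measurements[w] : PA amplitude at wavelength w
    Solves (A^T A) c = A^T p by Gaussian elimination with partial pivoting.
    """
    n_w, n_k = len(spectra), len(spectra[0])
    ata = [[sum(spectra[w][i] * spectra[w][j] for w in range(n_w))
            for j in range(n_k)] for i in range(n_k)]
    atp = [sum(spectra[w][i] * measurements[w] for w in range(n_w))
           for i in range(n_k)]
    aug = [row + [rhs] for row, rhs in zip(ata, atp)]

    # Forward elimination.
    for col in range(n_k):
        pivot = max(range(col, n_k), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("spectra are not linearly independent")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n_k):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n_k + 1):
                aug[r][c] -= factor * aug[col][c]

    # Back substitution.
    conc = [0.0] * n_k
    for i in reversed(range(n_k)):
        residual = aug[i][n_k] - sum(aug[i][j] * conc[j] for j in range(i + 1, n_k))
        conc[i] = residual / aug[i][i]
    return conc


def oxygen_saturation(c_hbo2, c_hb):
    """sO2 as the HbO2 fraction of total hemoglobin."""
    total = c_hbo2 + c_hb
    if total <= 0:
        raise ValueError("total hemoglobin must be positive")
    return c_hbo2 / total
```

At least as many wavelengths as chromophores are needed for the system to be solvable. In practice a non-negativity constraint is often added, which this sketch omits.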

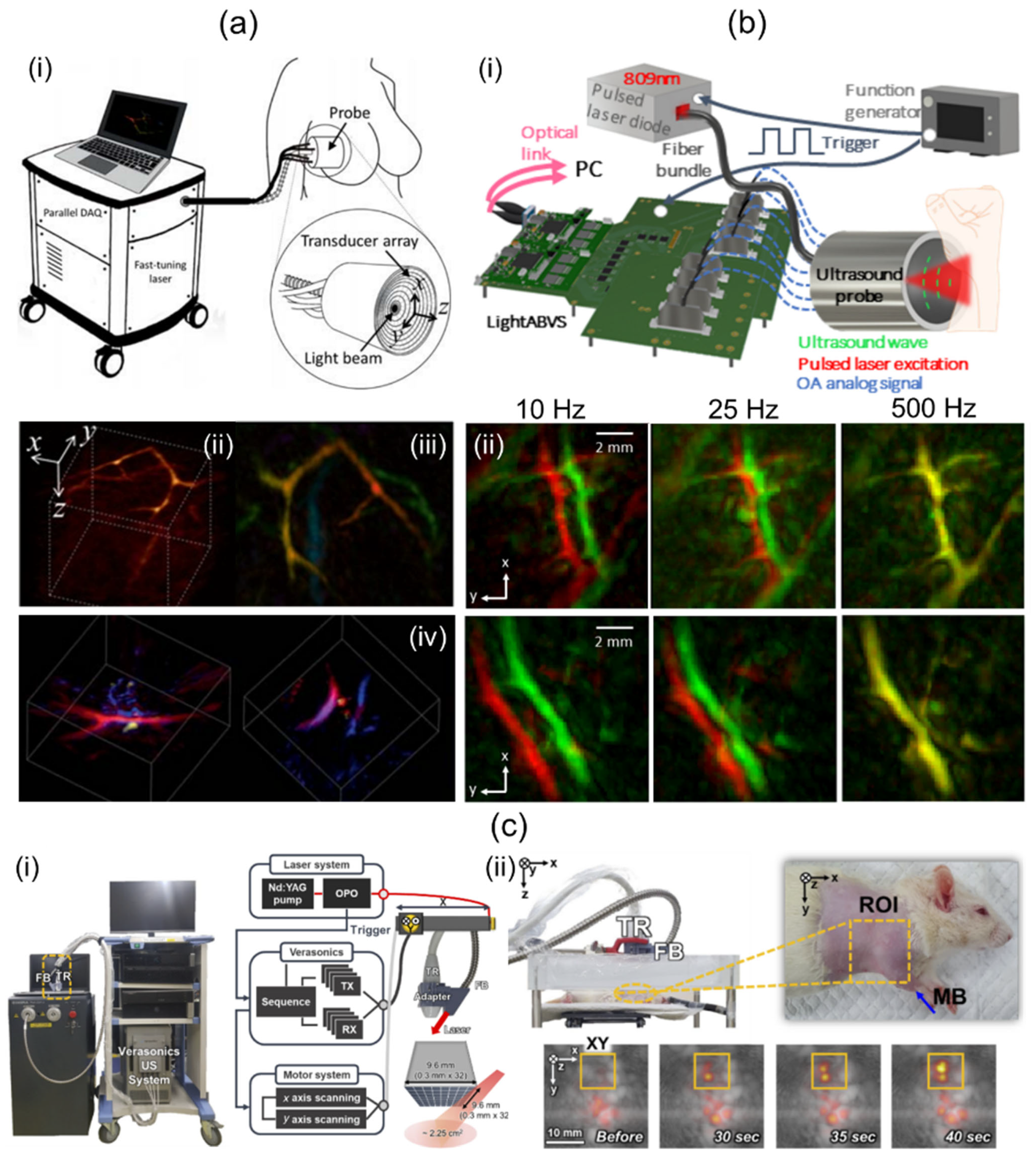

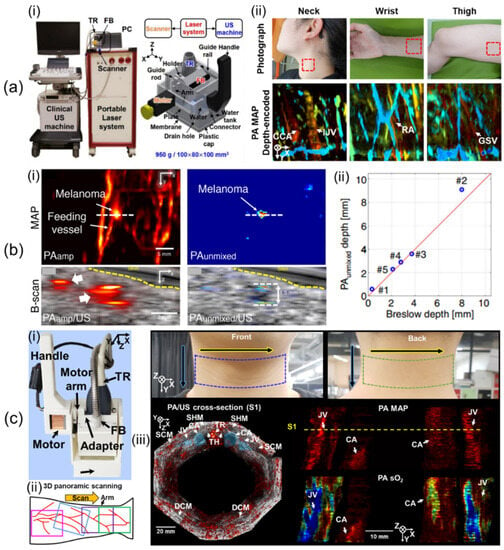

Figure 2.

Direct-scanning-based 3D handheld PA imaging systems and their applications. (a) (i) Schematic diagram of a 2D hemispherical array transducer-based direct 3D imaging system and its clinical application. PA (ii) amplitude (iii) depth-encoded and (iv) sO2 images of human breasts. (b) (i) A cost-effective direct 3D handheld PA imaging system based on a 2D hemispherical array probe. (ii) In vivo human wrist PA MAP images acquired at various acquisition rates. Top and bottom rows indicate different regions around the wrist. (c) (i) Conventional 2D matrix array UST-based 3D PA/US imaging system. (ii) Photograph of the rat and in vivo PA/US MAP images after MB injection. ROI indicates the SLN area. PA, photoacoustic; US, ultrasound; MAP, maximum amplitude projection; SLN, sentinel lymph node; MB, methylene blue; sO2, oxygen saturation. Reprinted with permission from references [35,39,75].

Özsoy et al. [75] have demonstrated a cost-effective and compact 3D PA imaging system based on a 2D hemispherical array UST. Their study was performed using a low-cost diode laser, the hemispherical array UST, and an optical-link-based US acquisition platform [76] (Figure 2b(i)). The diode laser delivered a single-wavelength (809 nm) pulse with a length of about 40 ns. The laser's pulse repetition frequency (PRF) was up to 500 Hz. The output energy was measured to be about 0.65 mJ. The laser system was operated by a power supply and synchronized by a function generator. The size of the laser was 9 × 5.6 × 3.4 cm3, which was considerably smaller than OPO-based laser systems. The hemispherical array UST consisted of 256 piezoelectric composite elements with a 4 MHz center frequency and 100% bandwidth. It provided a solid angle of 0.59 π°. A total of 480 single-mode fibers were inserted through the central hole of the transducer and used to deliver the beam. The custom DAQ system had a total cost of approximately EUR 9.4. It offered 192 channels. Its measurements were 18 × 22.6 × 10 cm3. The DAQ system offered a 40 MHz sampling frequency with a 12-bit resolution. A total of 1000 samples were collected for each channel, covering a depth range of 37.5 mm. Data were transmitted to a PC via 100G Ethernet with two 100 G Fireflies. Image reconstruction was performed with back projection [77]. The FOV of the reconstructed volume was 10 × 10 × 10 mm3. In vivo human wrist imaging was performed by freely scanning along a random path using the developed system. Two consecutively acquired PA images were overlaid in red and green as shown in Figure 2b(ii). The top and bottom rows represent different imaged regions around the wrist. As the image acquisition rate increases, two successive images become harder to distinguish, so the accuracy of spectral unmixing is expected to improve.
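The 37.5 mm figure follows from the record length and sampling rate if a speed of sound of about 1500 m/s is assumed (a typical soft-tissue value that is not stated in the text). The helper name below is hypothetical.

```python
def acquisition_depth_mm(n_samples, sampling_rate_hz, speed_of_sound_m_s=1500.0):
    """Depth range covered by one PA record.

    PA waves travel one way (from absorber to detector), so no factor of 2
    is applied, unlike pulse-echo US imaging.
    """
    record_time_s = n_samples / sampling_rate_hz
    return record_time_s * speed_of_sound_m_s * 1e3  # metres to millimetres


depth = acquisition_depth_mm(1000, 40e6)  # 25 microseconds of data per channel
```

With 1000 samples at 40 MHz the record lasts 25 µs, which corresponds to 37.5 mm at the assumed speed of sound.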

Liu et al. [57] have presented a compact 3D handheld PA imaging system based on a custom-designed 2D matrix array UST. Their research was conducted using a custom fiber-connected laser, the custom fabricated 2D matrix array probe, and a DAQ. The laser delivering 10.7 mJ of pulse energy at the fiber output tip was 27 × 8 × 6 cm3 in size with a weight of 2 kg. The probe contained 72 piezoelectric elements with a size, center frequency, and bandwidth of 1 × 1 mm2, 2.25 MHz, and 65%, respectively. US elements were arranged to make a central hole (diameter: 2 mm). The light was delivered through the hole after passing through a collimator (NA: 0.23 in the air) and a micro condenser lens (diameter: 6 mm, f: 6 mm). The light was then homogenized with an optical diffusing gel pad to reshape a Gaussian-distributed beam in the imaging FOV. The dimensions and weight of the entire probe were <10 cm3 and 44 g, respectively. Then, 72-channel data for each volume were digitized by the DAQ, providing 128-channel 12-bit 1024 samples at 40 Msamples/s. Data acquisition timing was controlled by a programmed micro-control unit (MCU). Acquired volume data were reconstructed by the fast phase shift migration (PSM) method [56], which enabled real-time imaging. Spatial resolution quantification was implemented by imaging human hairs. Lateral and axial resolutions were measured to be 0.8 and 0.73 mm, respectively. The system took 0.1 sec of volumetric imaging time to scan an FOV of 10 × 10 × 10 mm3. The handheld feasibility of the system was demonstrated by imaging the cephalic vein of a human arm.

Although 3D handheld PA/US imaging using a conventional 2D matrix array UST has not been reported, 3D handheld operation is readily possible using stand-off or optical systems. Therefore, studies using 2D matrix array USTs will potentially lead to the development of 3D handheld PA/US imaging systems. Representatively, Kim et al. [35] have demonstrated a conventional 2D matrix array UST-based 3D PA/US imaging system. They used a tunable laser, the 2D matrix array UST, an FB, a US imaging platform, and two motorized stages (Figure 2c(i)). The laser wavelength range and PRF were 660–1320 nm and 10 Hz, respectively. The 2D matrix array UST provides 1024 US elements with a center frequency of 3.3 MHz. For light transmission, the FB was attached obliquely to the side of the 2D matrix array UST, and light was delivered to the US imaging plane. The US imaging platform offers 256 receive channels. Thus, four laser shots were required for one PA image. To obtain wide-field 3D images, they combined the 2D matrix array UST with two motorized stages and implemented 2D raster scanning. For offline image processing, 3D filtered back projection and envelope detection were conducted. To evaluate system performance, a 54 × 18 mm2 phantom containing 90 μm thick black absorbers was imaged with a total scan time of 30 min at a raster scan step size of 0.9 mm. Quantified axial and lateral resolutions were 0.76 and 2.8 mm, respectively. Further, they implemented in vivo rat sentinel lymph node (SLN) imaging after methylene blue injection (Figure 2c(ii)). Wang et al. [78] have showcased a 3D PA/US imaging system based on a conventional 2D matrix array UST. In their study, a tunable dye laser, the 2D matrix array UST, and a custom-built DAQ were used. The laser’s PRF and pulse duration were 10 Hz and 6.5 ns, respectively. The laser was coupled with a bifurcated FB. The matrix array UST has 2500 US elements with a nominal bandwidth of 2–7 MHz. Thirty-six laser shots were required for the acquisition of one PA image. Image data transferred by the DAQ were then reconstructed with a 3D back projection algorithm [79] on a four-core central processing unit (CPU). This took 3 h. The reconstructed PA image’s volume was 2 × 2 × 2 cm3. To benchmark their system, a gelatin phantom containing a human hair was imaged. Measured profiles in axial, lateral, and elevational directions were 0.84, 0.69, and 0.90 mm, respectively. In addition, in vivo mouse SLN imaging with methylene blue was performed for further verification (Table 2).
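The channel-multiplexing arithmetic for the system of Kim et al. [35] can be written out as a short sketch. The function names are hypothetical, and the resulting volume rate is a derived upper bound under the assumption that every laser pulse is used, not a figure reported in the source.

```python
def laser_shots_per_volume(n_elements, n_receive_channels):
    """Number of laser shots needed to read every element once (ceiling division)."""
    return -(-n_elements // n_receive_channels)


def max_volume_rate_hz(prf_hz, shots_per_volume):
    """Upper bound on the volume rate when each volume needs several shots."""
    return prf_hz / shots_per_volume


shots = laser_shots_per_volume(1024, 256)   # 1024 elements, 256 receive channels
rate = max_volume_rate_hz(10.0, shots)      # 10 Hz PRF
```

For 1024 elements and 256 receive channels this gives four shots per volume, so a 10 Hz laser would allow at most 2.5 volumes per second at a single scan position under these assumptions.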

Table 2.

Specifications of direct-scanning-based 3D handheld PA/US imaging systems.

While 2D array USTs with a hemispherical shape offer advantages for PA imaging, their high cost, complex electronics, and high computational demands are cited as disadvantages. Additionally, their large element pitch sizes render them unsuitable for US imaging, limiting their use in clinical research. Conversely, matrix-shaped 2D array USTs are highly portable due to their light weight and small size. However, their suboptimal angular coverage for PA signal reception results in poor spatial resolution and limited view artifacts.

2.2. Three-Dimensional Handheld PA Imaging Systems Using Mechanical Scanning

Several 3D handheld PA imaging systems utilizing mechanical scanning methods and various types of USTs have been developed. Typically, these systems employ one- to three-axis motorized stages for 3D scanning. They are relatively inexpensive and require only simple electronic devices. In this section, we introduce two types of such systems: those based on a 1D linear array UST and one based on a single-element UST [10,58,59,62].

Lee et al. [58] have developed a 1D linear array UST-based 3D handheld PA/US imaging system that uses a lightweight and compact motor driven through a non-linear scanning mechanism. In their study, they used a portable OPO laser with fast pulse tuning, a conventional clinical US machine, the 1D linear array UST, and a custom-built scanner (Figure 3a(i)). The laser delivered light at pulse energies of 7.5 and 6.1 mJ/cm2 at 797 and 850 nm, respectively, with a 10 Hz PRF. With 64 receive channels, the US machine was an FDA-approved US system that offered a programmable environment. The UST had an 8.5 MHz central frequency and a 62% bandwidth at −6 dB. The scanner included the guide rail, handle, guide rod, plate, connector, drain hole, plastic cap, FB, holder, UST, motor, arm, water, water tank, and membrane. The FB was integrated into the holder with the UST. It was inserted at 15° for efficient light transmission. The motor arm moved the holder to drive the UST in the scanning direction after receiving a laser trigger signal. The movement was managed with a non-linear scanning method (i.e., the scotch yoke mechanism) that converts between rotational and linear motion, allowing the use of a small, lightweight motor (170 g) instead of bulky, heavy motor stages. They used a 3 cm deep water tank to avoid PA reflection artifacts within 3 cm of the axial region. Polyvinyl chloride (PVC) membranes with a thickness of 0.2 mm were attached to the water tank to seal the water. Dimensions and weight of the scanner were 100 × 80 × 100 mm3 and 950 g, respectively. The maximum FOV was 40 × 38 mm2, and the maximum scan time for single-wavelength imaging was 20.0 s. Acquired volume data were reconstructed by Fourier-domain beamforming [80]. They measured spatial resolutions by quantifying the full width at half maximum (FWHM) of black threads having a thickness of 90 μm. The calculated axial and lateral resolutions were 191 μm and 799 μm, respectively. To confirm the usefulness of the handheld system for human body imaging, they implemented human in vivo imaging of the wrist, neck, and thigh (Figure 3a(ii)).
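A resolution estimate of this kind is commonly obtained by measuring the FWHM of a line profile across a thin target. The sketch below shows one way to do this with linear interpolation at the half-maximum crossings. The function name `fwhm` and the assumption of a single-peaked profile with a zero baseline are choices made for this example, not details from [58].

```python
def fwhm(positions, profile):
    """Full width at half maximum of a single-peaked, baseline-free profile.

    positions : sample coordinates (e.g., in micrometres), increasing
    profile   : amplitude at each position
    The half-maximum crossings on each side of the peak are located by
    linear interpolation between neighbouring samples.
    """
    peak = max(profile)
    half = peak / 2.0
    i_peak = profile.index(peak)

    left = right = None
    # Walk left from the peak until the profile drops below half maximum.
    for i in range(i_peak, 0, -1):
        if profile[i - 1] < half <= profile[i]:
            frac = (half - profile[i - 1]) / (profile[i] - profile[i - 1])
            left = positions[i - 1] + frac * (positions[i] - positions[i - 1])
            break
    # Walk right from the peak in the same way.
    for i in range(i_peak, len(profile) - 1):
        if profile[i + 1] < half <= profile[i]:
            frac = (profile[i] - half) / (profile[i] - profile[i + 1])
            right = positions[i] + frac * (positions[i + 1] - positions[i])
            break

    if left is None or right is None:
        raise ValueError("profile does not fall below half maximum on both sides")
    return right - left
```

For a triangular profile `[0, 1, 2, 1, 0]` sampled at unit spacing, the function returns a width of 2 sample intervals. Note that the measured width also includes the finite size of the target, here a 90 μm thread.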

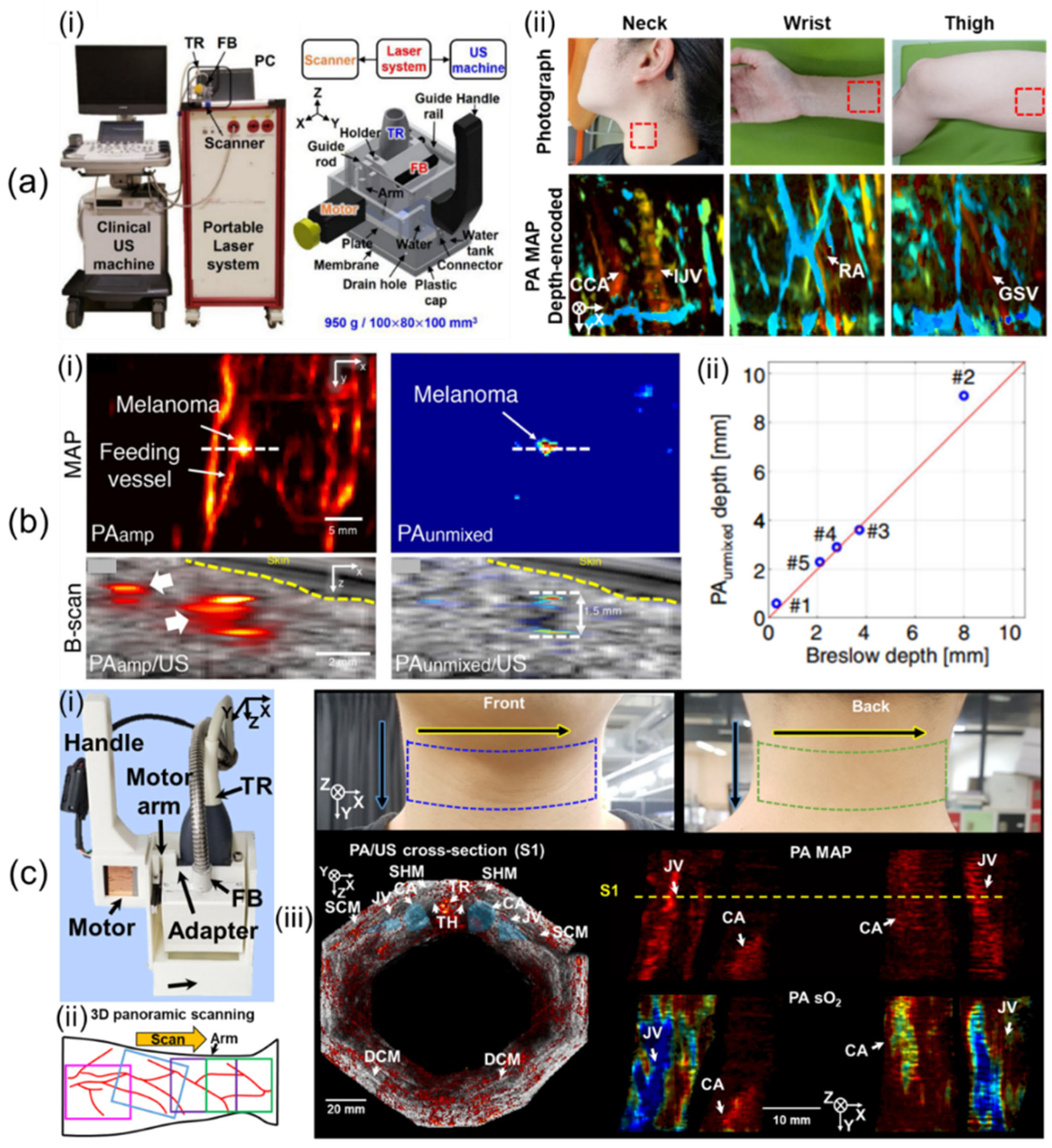

Figure 3.

Three-dimensional clinical handheld PA/US imaging systems based on 1D linear array UST with mechanical scanning and their applications. (a) (i) Photograph of a mechanical-scanning-based 3D handheld PA/US imaging system using a 1D linear array UST. (ii) In vivo imaging of various parts of the human body. (b) (i) US, PA amplitude, and unmixed PA melanin images of metastasized melanoma. (ii) Graph of unmixed PA melanin depth vs. histopathological depth. (c) (i) Updated 3D clinical handheld PA/US imager. (ii) Schematic of 3D panoramic scanning. (iii) Three-dimensional panoramic imaging of the human neck. PA, photoacoustic; UST, ultrasound transducer. Reprinted with permission from references [10,58,59].

Using the developed 3D handheld imaging system, Park et al. [59] have conducted a clinical study of various types of cutaneous melanomas. They used multiple wavelengths of 700, 756, 797, 866, and 900 nm. The FOV was scaled to 31 × 38 mm2, resulting in a scan time of 57 s. They recruited six patients who had in situ, nodular, acral lentiginous, and metastatic types of melanoma. US, PA, and unmixed PA melanin images of metastasized melanoma were acquired as shown in Figure 3b(i). They compared unmixed PA melanin depth and histopathological depth and confirmed that the two depths agreed well, with a mean absolute error of 0.36 mm (Figure 3b(ii)). Further, they measured a maximum PA penetration depth of 9.1 mm in nodular melanoma.

Lee et al. [10] have showcased an updated 3D clinical handheld PA/US imaging scanner and system. The 3D handheld imager weighs 600 g and measures 70 × 62 × 110 mm3, increasing handheld usability (Figure 3c(i)). By using a transparent solid US gel pad with an attenuation coefficient similar to that of water, the updated system improved SNRs by an average of 11 dB over the previous system. The previous system could not immediately check 3D image results, making it difficult to filter out motion-contaminated images. In the updated system, PA/US maximum amplitude projection (MAP) preview images of the 3D data were provided online on the US machine to help select data. Offline 3D panoramic scanning was performed to provide super wide-field images of the human body (Figure 3c(ii)). An updated 3D PHOVIS, providing six-degree mosaic stitching, was used for image position correction. Twelve panoramic scans were performed along the perimeter of the human neck for system evaluation (Figure 3c(iii)). The total scan time was 601.2 s, and the corrected image FOV was 129 × 120 mm2. Hemoglobin oxygen saturation (sO2) levels of the carotid artery and jugular vein were quantified to be 97 ± 8.2% and 84 ± 12.8%, respectively.

Bost et al. [62] have presented a 3D handheld PA/US imaging system based on a single-element UST and a two-axis linear motorized stage. In their study, a solid laser, a custom probe, and a digitizer were used. The solid laser produced 532 and 1024 nm pulses with a PRF of 1 kHz and a pulse length of 1.5 ns. The maximum pulse energies at 532 and 1024 nm were 37 and 77 μJ, respectively. The probe integrated a 35 MHz single-element UST with 100% bandwidth, a custom-designed fiber bundle, a two-axis linear stage, and a waterproof polyvinylchloride plastisol membrane for acoustic coupling. The number and geometry of fibers were designed using Monte Carlo simulation. Data were acquired at a sampling rate of 200 MSamples/s. For volumetric US and PA imaging, scan times were 1.5 min and 4 min, respectively, for an area of 9.6 × 9.6 mm2. US and PA lateral resolutions were 86 μm and 93 μm, respectively. They visualized superimposed US/PA B-scan images of a human scapula, in which the PA vascular network was identified. Furthermore, they acquired a volume-rendered image of a mouse with a subcutaneous tumor (Table 3).

Table 3.

Specifications of mechanical-scanning-based 3D handheld PA/US imaging systems.

Three-dimensional handheld imaging systems using mechanical scanning methods can be implemented with relatively simple devices while still providing acceptable image quality. However, these systems are susceptible to motor noises and vulnerable to motion contamination. Furthermore, the inclusion of motorized parts can make the entire scanner bulky and heavy, which can make handheld operation challenging.

2.3. Three-Dimensional Handheld PA Imaging Systems Using Mirror Scanning

Mechanical scanning is limited in speed due to physical movement of the scanner [81,82]. Additionally, the use of relatively bulky and heavy motor systems adversely affects handheld operation. To overcome these problems, optical scanning (e.g., MEMS- and galvanometer-based mirror scanners) was used to develop single-element UST-based 3D PA imaging systems.

Lin et al. [64] have demonstrated a volumetric PA imaging system implemented using a two-axis MEMS and a single-element UST. For that study, they utilized a fiber laser, a custom handheld probe, and a DAQ. The laser pulse length at 532 nm was 5 ns, and the PRF was 88 kHz. The probe consisted of a single-mode fiber (SMF) for delivering the light, optical lenses for focusing laser beams into an opto-acoustic combiner, prisms for ensuring opto-acoustic coaxial alignment, the MEMS for reflecting focused laser beams and generated PA signals, a 50 MHz center frequency UST for detecting reflected PA signals, an optical correction lens for calibrating prism-induced aberration, and a motorized stage for correcting the location of the MEMS mirror (Figure 4a(i)). Laser pulses synchronized the MEMS scanner and the DAQ system. PA signal data were collected by the DAQ with a sampling frequency of 250 Msamples/s. PA volume imaging was implemented at a repetition rate of 2 Hz. The overall dimension and imaging FOV of the probe were 80 × 115 × 150 mm3 and 2.5 × 2.0 × 0.5 mm3, respectively. Resolution measurements of the system were performed in lateral and axial directions, resulting in 5 µm and 26 µm, respectively. To test the developed 3D handheld imager, a mouse ear (Figure 4a(ii)) and a human cuticle (Figure 4a(iii)) were imaged.

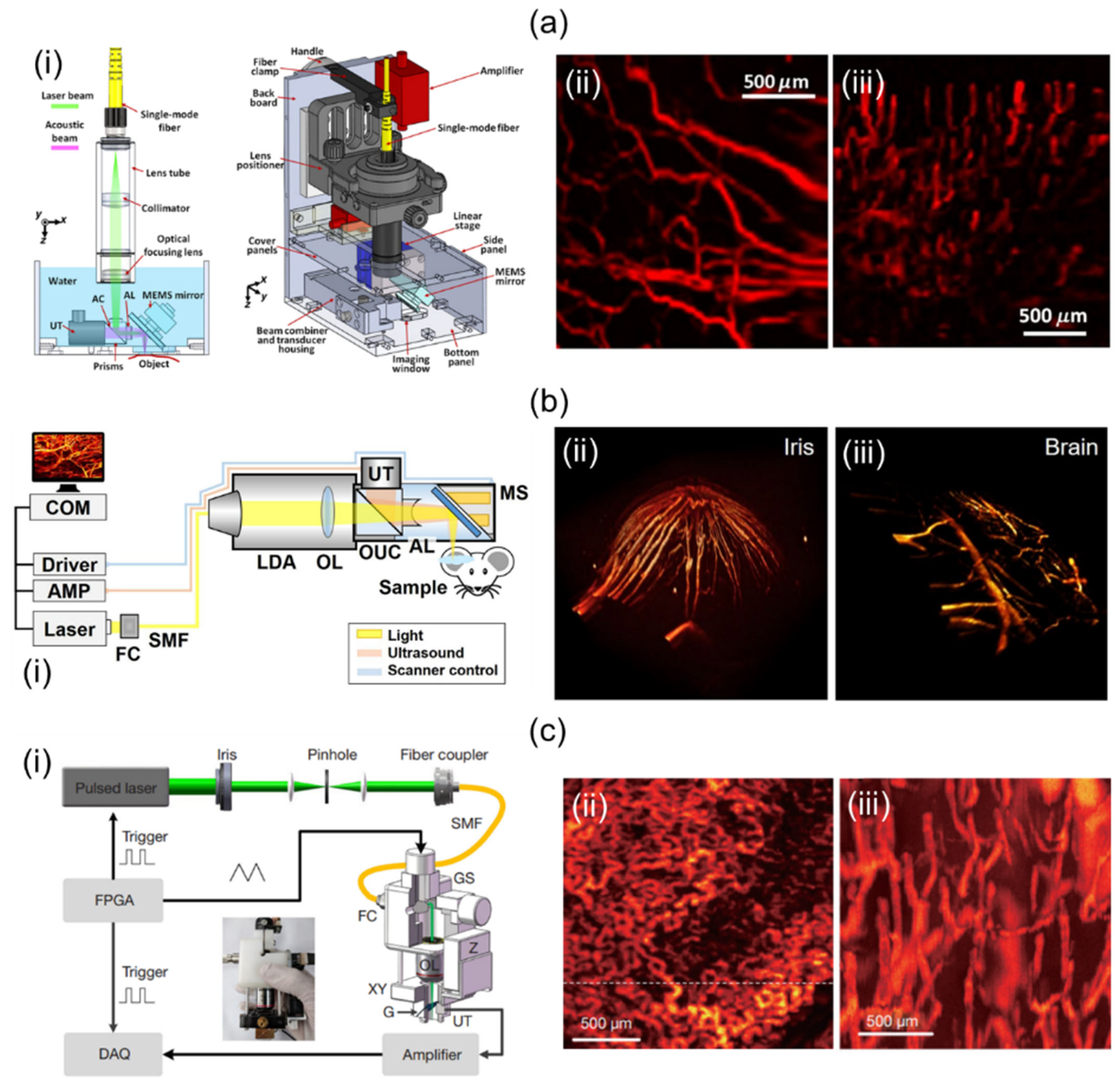

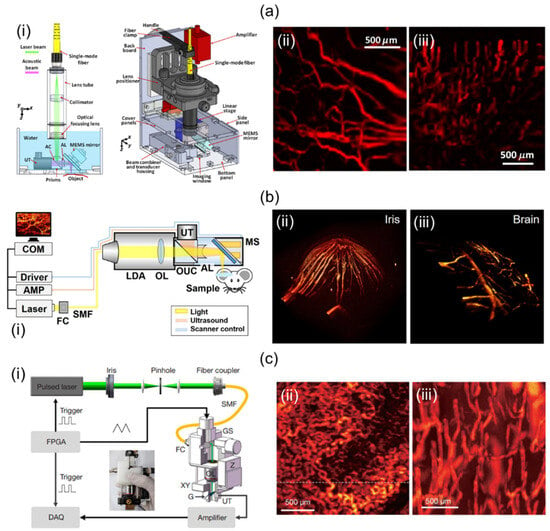

Figure 4.

Mirror-scanning-based 3D handheld PA imaging systems and their applications. (a) (i) Schematic of a single-element UST and 2-axis MEMS-based 3D PA imaging system. In vivo PA vessel visualizations of (ii) a mouse ear and (iii) a human cuticle. (b) (i) Schematic diagram of a 2-axis MEMS and single-element UST-based miniaturized 3D handheld imaging system. In vivo PA volume rendered images of a mouse (ii) iris and (iii) brain. (c) (i) Schematic diagram of a compact single-element UST-based 3D portable imaging system scanning with a galvanometer. In vivo PA MAP visualizations of (ii) the wattle of a Leghorn rooster and (iii) the lower lip of a human. MEMS, microelectromechanical systems; PA, photoacoustic; MAP, maximum amplitude projection. Reprinted with permission from references [31,32,64].

Park et al. [32] have developed a two-axis MEMS and single-element UST-based miniaturized system without using a mechanical stage. A diode laser, an SMF, a custom-designed 3D probe, and DAQ were used in their study. The 532 nm diode laser provided a 50 kHz PRF. It was coupled with optical fiber. The probe integrated light delivery assembly (LDA), objective lens (OL), opto-ultrasound combiner (OUC), the MEMS, an acoustic lens (AL), and a UST (Figure 4b(i)). After the light was delivered from the LDA, it was focused by the OL and delivered to the OUC containing prisms and silicon fluid. The delivered light was reflected by the MEMS scanner. It then illuminated objects that could absorb light. Generated PA signals were reflected from the MEMS and at prisms in OUC. Reflected PA signals were collected at the UST with a central frequency of 50 MHz. The AL attached to the right side of the OUC was used to increase SNRs. The DAQ was used to create trigger signals for operating the laser and the digitizer. At the same time, the DAQ generated sinusoidal and sawtooth waves to control the two-axis MEMS scanner. PA volumetric imaging was implemented at a PRF of 0.05 Hz. The probe was 17 mm in diameter with a weight of 162 g. It provided a maximum FOV of 2.8 × 2 mm2. Lateral and axial resolutions were 12 µm and 30 µm, respectively. To confirm the feasibility of the system, they conducted in vivo mouse imaging in various parts such as the iris and brain, as shown in Figure 4b(ii,iii).
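The sinusoidal and sawtooth drive signals mentioned above can be sketched as follows. The text does not say which waveform drives which axis or give the drive frequencies, so the assignment (sinusoid on the fast axis, sawtooth on the slow axis), the normalized amplitude range, and all parameter names here are assumptions for illustration only.

```python
import math


def mems_drive_waveforms(n_samples, sample_rate_hz, fast_freq_hz, slow_freq_hz):
    """Generate normalized drive signals in [-1, 1] for a two-axis scanner.

    fast axis: sinusoid at fast_freq_hz (assumed assignment)
    slow axis: rising sawtooth at slow_freq_hz, one ramp per frame
    """
    fast, slow = [], []
    for n in range(n_samples):
        t = n / sample_rate_hz
        fast.append(math.sin(2.0 * math.pi * fast_freq_hz * t))
        phase = (slow_freq_hz * t) % 1.0      # fraction of the current ramp
        slow.append(2.0 * phase - 1.0)        # map [0, 1) onto [-1, 1)
    return fast, slow


# Hypothetical values: 1 kHz output rate, 10 Hz fast axis, 1 Hz slow axis.
fast_axis, slow_axis = mems_drive_waveforms(1000, 1000.0, 10.0, 1.0)
```

In a real system these normalized signals would be scaled to the driver's voltage range and synchronized with the laser trigger, so that each laser pulse maps to a known mirror angle.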

Zhang et al. [31] presented a 2D GS and single-element UST-based 3D handheld system (Figure 4c(i)). Their study was performed using a pulsed laser, a custom-made probe, and a DAQ card. The laser offered a maximum PRF of 10 kHz at 532 nm. It was connected to an SMF (diameter: 4 μm) via a fiber coupler (FC). The light was delivered to a collimator mounted on the side of the probe. The collimated light was scanned with the GS and focused onto a transparent glass with an OL. Generated PA signals were reflected off the 45° tilted glass (G) and delivered to the UST with a center frequency of 15 MHz. The optical focus was adjusted using the probe’s XY- and Z-direction stages. The DAQ card provided a sampling rate of 200 MSamples/s. The 3D imaging took 16 sec. The imaging FOV was 2 × 2 mm2. Lateral and axial resolutions were quantified with the phantom’s sharp blade and carbon rod. The results were 8.9 µm and 113 μm, respectively. Using this system, they conducted in vivo rooster wattle and human lip imaging experiments, as shown in Figure 4c(ii,iii).

Qin et al. [65] have presented a dual-modality 3D handheld imaging system capable of PA and optical coherence tomography (OCT) imaging using lasers, a 2D MEMS, a miniaturized flat UST, and OCT units. For PA imaging, a pulsed laser transmitted light with a high repetition rate of 10 kHz and a pulse duration of 8 ns. For OCT imaging, a diode laser delivered light with a center wavelength of 839.8 nm and a FWHM of 51.8 nm. The PA and OCT beams shared the same optical path and were both reflected by the MEMS mirror to scan targets. The dual-modality 3D handheld scanner measured 65 × 30 × 18 mm³, providing an effective FOV of 2 × 2 mm². Lateral and axial PA resolutions were measured as 3.7 µm and 120 µm, respectively, while lateral and axial OCT resolutions were 5.6 µm and 7.3 µm, respectively (Table 4).

Table 4.

Specifications of mirror-scanning-based 3D handheld PA/US imaging systems.

Optical-scanning-based 3D PA probes provide high-quality PA images with fast acquisition and high handheld usability by eliminating motors and associated systems. However, these probes have limitations such as small FOVs and shallow penetration depths, which are unfavorable for clinical PA imaging.

2.4. Three-Dimensional Handheld PA Imaging Systems Using Freehand Scanning

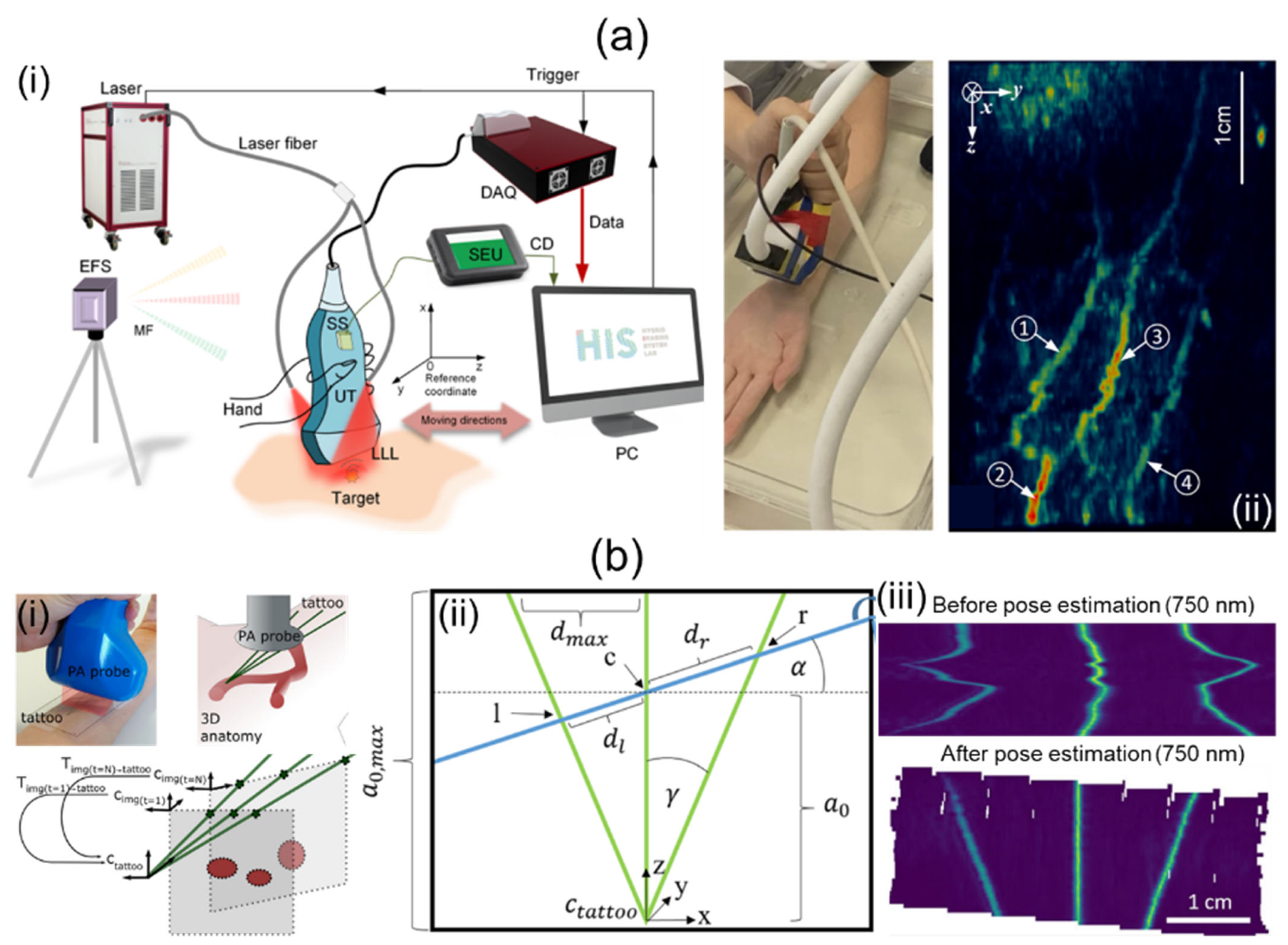

Three-dimensional handheld PA imaging systems utilizing freehand scanning methods have been developed using various types of USTs. This section aims to showcase a range of 3D freehand scanning systems that utilize different aids, including a GPS, an optical pattern, and an optical tracker. Additionally, we will explore freehand scanning imaging systems combined with other scanning-based imaging systems such as mirror-scanning-based and direct-scanning-based imaging systems.

Jiang et al. [69] have proposed a 3D freehand PA tomography (PAT) imaging system using a GPS sensor. They used an OPO laser, a high-power laser, a linear UST, a DAQ card, and a 3D GPS (Figure 5a(i)). The PRF and wavelength range of the OPO laser were 10 Hz and 690–950 nm, respectively. The high-power laser delivered a 1064 nm wavelength at a 10 Hz PRF. The energy fluence was less than 20 mJ/cm². The linear UST provided a center frequency of 7.5 MHz with 73% bandwidth. The DAQ system sampled data on 128 channels at a rate of 40 MSamples/s. The GPS consisted of a system electronics unit (SEU), a standard sensor (SS), and an electromagnetic field source (EFS). The SS was attached to the US probe as shown in Figure 5a(i). It collected six-degrees-of-freedom (6-DOF) data at a sampling rate of 120 Hz for each B-mode image. The 6-DOF data contained three translational (x, y, z) and three rotational (α, β, γ) components. Obtained 6-DOF data were transmitted to a PC via the SEU. Imaging positions were accurately tracked within a magnetic field generated by the EFS. GPS calculation errors for translational and rotational motions were 0.2% and 0.139%, respectively. They operated the sensor with a translational resolution of 0.0254 mm and an angular resolution of 0.002° for 3D PAT. Free-scan data were acquired at scan speeds of 60–240 mm/min with scan intervals of 0.1–0.4 mm. After PA data collection, a back-projection beamformer was applied for 2D image reconstruction. Three-dimensional PAT was implemented as pixel nearest-neighbor-based fast-dot projection using 6-DOF coordinate data [83,84]. Measured lateral and elevational resolutions were 237.4 and 333.4 µm, respectively. The application of free-scan 3D PAT on a human wrist is shown in Figure 5a(ii).
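To make the pose-based compounding step concrete, the sketch below places B-mode pixels into a voxel grid using 6-DOF data and a pixel nearest-neighbor rule. It is an illustration under stated assumptions, not the authors' implementation: the function names, the Z-Y-X Euler angle convention, the sparse dictionary used as the volume, and the choice of averaging overlapping pixels are all hypothetical. The helper `scan_interval_mm` simply reproduces the relation between the quoted scan speeds, the 10 Hz PRF, and the quoted scan intervals.

```python
import math

def rotation_matrix(alpha, beta, gamma):
    """R = Rz(gamma) * Ry(beta) * Rx(alpha), angles in radians (assumed convention)."""
    ca, sa = math.cos(alpha), math.sin(alpha)
    cb, sb = math.cos(beta), math.sin(beta)
    cg, sg = math.cos(gamma), math.sin(gamma)
    return [
        [cg * cb, cg * sb * sa - sg * ca, cg * sb * ca + sg * sa],
        [sg * cb, sg * sb * sa + cg * ca, sg * sb * ca - cg * sa],
        [-sb, cb * sa, cb * ca],
    ]

def place_frame(volume, frame, pose, pixel_mm, voxel_mm):
    """Pixel nearest-neighbor placement of one B-mode frame into a sparse volume.

    volume: dict mapping (ix, iy, iz) -> [sum, count]
    frame:  2D list indexed [row (depth)][col (lateral)]
    pose:   (x, y, z, alpha, beta, gamma), translation in mm, angles in radians
    """
    x0, y0, z0, alpha, beta, gamma = pose
    R = rotation_matrix(alpha, beta, gamma)
    for row, line in enumerate(frame):
        for col, value in enumerate(line):
            # Pixel position in the probe frame: lateral along x, depth along z.
            p = (col * pixel_mm, 0.0, row * pixel_mm)
            world = [
                R[i][0] * p[0] + R[i][1] * p[1] + R[i][2] * p[2] + t
                for i, t in enumerate((x0, y0, z0))
            ]
            # Nearest voxel index for this pixel.
            key = tuple(int(round(w / voxel_mm)) for w in world)
            cell = volume.setdefault(key, [0.0, 0])
            cell[0] += value
            cell[1] += 1

def scan_interval_mm(speed_mm_per_min, prf_hz):
    """Distance travelled by the probe between two consecutive laser pulses."""
    return speed_mm_per_min / 60.0 / prf_hz

# Two identical frames, the second one shifted by 1 mm in elevation (y).
volume = {}
frame = [[1.0, 2.0], [3.0, 4.0]]
place_frame(volume, frame, (0.0, 0.0, 0.0, 0.0, 0.0, 0.0), pixel_mm=1.0, voxel_mm=1.0)
place_frame(volume, frame, (0.0, 1.0, 0.0, 0.0, 0.0, 0.0), pixel_mm=1.0, voxel_mm=1.0)
compounded = {k: s / n for k, (s, n) in volume.items()}
```

At a 10 Hz PRF, `scan_interval_mm(60, 10)` and `scan_interval_mm(240, 10)` give 0.1 mm and 0.4 mm, which is consistent with the scan speeds and scan intervals quoted above.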

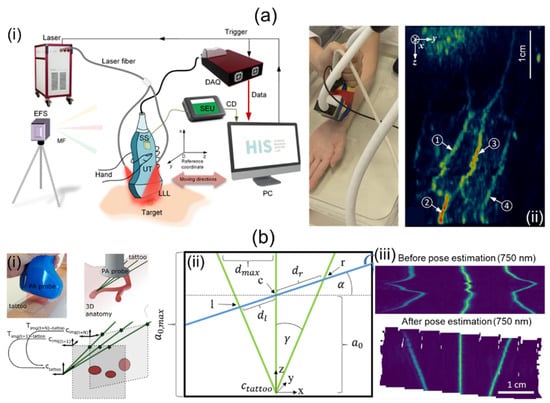

Figure 5.

Freehand-scanning-based 3D handheld PA imaging systems and their applications. (a) (i) Schematic description of a free-scanning-based 3D handheld PA imaging system using a GPS sensor. (ii) In vivo 3D free-scan imaging of a human arm. (b) (i) Conceptual explanation of a 3D freehand scan imaging system using an optical tattoo. (ii) Schematic geometry for the tattoo. l, c, and r represent intersections of a free-scan image and the optical tattoo. (iii) Original and repositioned PA images acquired by freehand scanning. PA, photoacoustic; UT, ultrasound transducer; GPS, global positioning system. Reprinted with permission from references [69,70].

Holzwarth et al. [70] have presented a 3D handheld imaging system using freehand scanning with a 1D concave array UST. They used a tunable Nd:YAG laser, a multispectral opto-acoustic tomography (MSOT) Acuity Echo research system, and a custom optical pattern. The laser provided a spectral range of 660–1300 nm, with a pulse energy of 30 mJ, pulse durations of 4–10 ns, and an emission rate of 25 Hz. The concave UST was 80 mm in diameter. It provided a central frequency of 4 MHz with 256 individual elements. The optical pattern was engraved on a transparent foil and filled with cyan ink. The pattern was attached to regions of interest (ROIs). Free-scan data were acquired within these ROIs. As shown in Figure 5b(i), the trident optical pattern (the three green lines) yielded three intersection points in each free-scan image, which corresponded to the probe position for that image. They obtained a transformation matrix using probe positions and geometry (Figure 5b(ii)). Based on 2D images and the transformation matrix, 3D volume compounding was implemented [85]. Three-dimensional free-scan images before and after position calibration are shown in Figure 5b(iii).

Fournelle et al. [68] have demonstrated a 3D freehand scan imaging system using an optical tracker and a commercial 1D linear array UST. This study was performed using a solid-state Nd:YAG laser, a wavelength-tunable OPO laser, the linear array UST, a custom US platform, an optical tracking system, and an FB. Frame rates for the Nd:YAG and OPO lasers were 20 and 10 frames/s, respectively. Light emitted from these laser systems was transmitted through the FB. Due to the opening angle (22°) of the FB, the transmitted light formed a rectangular shape measuring 2 × 20 mm². The laser fluence was 10 mJ/cm² at 1064 nm. The center frequency and pitch size of the UST were 7.5 MHz and 300 µm, respectively. The US platform digitized 128-channel data at a sampling rate of 80 MSamples/s. The optical tracker provided the position and orientation of each B-mode image with a root-mean-square error of 0.3 mm³. An adaptive delay-and-sum beamformer was applied for reconstructing B-mode US and PA images. The reconstruction was accelerated with multi-core processors and parallel graphics processors. Reconstructed images were placed in 3D space using position and orientation information obtained from the optical tracker and corrected with a calibration matrix. Resolution characterization of the system was achieved by imaging the tip of a steel needle. Measured FWHMs in lateral and elevational directions were 600 µm and 1100 µm, respectively. To confirm the applicability of the 3D freehand approach to humans, PA/US imaging was performed on a human hand. The total number of image frames acquired for the volume was 150, resulting in a scan time of 15 s.
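As a rough illustration of the B-mode reconstruction step, the sketch below implements a plain (non-adaptive) delay-and-sum beamformer for PA data from a linear array. It is not the adaptive beamformer used in the study: the function name, the speed of sound of 1540 m/s, the nearest-sample delay rounding, and the synthetic point source are assumptions. The 128 channels, 300 µm pitch, and 80 MSamples/s sampling rate are taken from the text.

```python
import math

def das_pixel(rf, element_x_mm, px_mm, pz_mm, fs_hz, c_mm_per_s=1.54e6):
    """Delay-and-sum value at pixel (px, pz) from per-channel RF data.

    rf[ch][n] is sample n of channel ch, with n = 0 at the laser pulse.
    PA waves travel one way (source to element), so the delay is distance / c.
    """
    total = 0.0
    for ch, ex in enumerate(element_x_mm):
        dist = math.hypot(px_mm - ex, pz_mm)
        n = int(round(dist / c_mm_per_s * fs_hz))  # nearest-sample delay
        if 0 <= n < len(rf[ch]):
            total += rf[ch][n]
    return total

FS_HZ = 80e6      # sampling rate from the text
PITCH_MM = 0.3    # element pitch from the text
N_CH = 128        # channel count from the text
C_MM_PER_S = 1.54e6  # assumed speed of sound (1540 m/s)

# Element x-positions, centered on the array axis.
elements = [(i - (N_CH - 1) / 2) * PITCH_MM for i in range(N_CH)]

# Synthetic data: an ideal point absorber produces one unit sample per channel.
src_x, src_z = 2.0, 10.0
rf = [[0.0] * 2048 for _ in range(N_CH)]
for ch, ex in enumerate(elements):
    n = int(round(math.hypot(src_x - ex, src_z) / C_MM_PER_S * FS_HZ))
    rf[ch][n] = 1.0

on_target = das_pixel(rf, elements, src_x, src_z, FS_HZ)
off_target = das_pixel(rf, elements, src_x, src_z + 1.0, FS_HZ)
```

At the true source position, all channel contributions add coherently, whereas a pixel 1 mm deeper collects none of them. Evaluating `das_pixel` over a grid of pixels yields one B-mode frame, which the tracker pose then places in 3D space.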

Chen et al. [67] have showcased a 3D freehand scanning probe based on a resonant-galvo scanner and a single-element UST. The resonant mirror in the probe generated a periodic magnetic field to rotate the reflector at a rate of 1228 Hz. The galvo scanner was attached to the resonant mirror for slow-axis scanning. Taking advantage of the system’s high scanning speed (C-scan rate, 5–10 Hz), they applied simultaneous localization and mapping (SLAM). The SLAM algorithm extracted feature points from consecutive scan images using the speeded-up robust features (SURF) and scale-invariant feature transform (SIFT) methods [86,87,88,89]. These points were then used to calibrate the positions of consecutive images. They demonstrated an extended FOV image of the mouse brain with the developed system and processing method. The expanded FOV was 8.3–13 times larger than the original FOV of ~1.7 × 5 mm².

Knauer et al. [50] have presented a free-scan method using a hemispherical 3D handheld scanner to extend a limited FOV using acquired volumetric images. Volume images acquired with the hemispherical probe were spatially compounded. Fourier-based orientation and position correction were then applied. For translation (tx, ty, tz) and rotation (θx, θy, θz) corrections, phase correlation [90] and PROPELLER [91] were used, respectively. Volume areas that overlapped with each other by less than approximately 85% were used to apply the position correction algorithm. To validate their algorithm, they imaged a human palm. The extended volume FOV was 50 × 70 × 15 mm³, which was larger than the single volume image FOV (Table 5).
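The translation-correction idea can be illustrated with a one-dimensional phase correlation. This is a simplified sketch rather than the authors' pipeline: it works on 1D signals instead of 3D volumes, uses a naive discrete Fourier transform so that it needs only the standard library, recovers integer circular shifts only, and omits the PROPELLER-based rotation correction. The function names and the test signal are hypothetical.

```python
import cmath

def dft(x):
    """Naive discrete Fourier transform (O(n^2)); adequate for short signals."""
    n = len(x)
    return [
        sum(x[k] * cmath.exp(-2j * cmath.pi * f * k / n) for k in range(n))
        for f in range(n)
    ]

def idft(X):
    """Naive inverse discrete Fourier transform."""
    n = len(X)
    return [
        sum(X[f] * cmath.exp(2j * cmath.pi * f * k / n) for f in range(n)) / n
        for k in range(n)
    ]

def phase_correlation_shift(ref, moved, eps=1e-12):
    """Estimate the circular shift s such that moved[k] ~ ref[(k - s) % n]."""
    A, B = dft(ref), dft(moved)
    cross = []
    for a, b in zip(A, B):
        c = b * a.conjugate()
        # Keep only the phase of the cross-power spectrum.
        cross.append(c / max(abs(c), eps))
    corr = [v.real for v in idft(cross)]
    n = len(corr)
    peak = max(range(n), key=corr.__getitem__)
    # Map peak indices in the upper half to negative shifts.
    return peak if peak <= n // 2 else peak - n

ref = [0.0, 1.0, 4.0, 2.0, 0.5, 0.0, 3.0, 0.0, 0.0, 1.5, 0.0, 0.0, 2.5, 0.0, 0.7, 0.0]
moved = [ref[(k - 3) % len(ref)] for k in range(len(ref))]
shift = phase_correlation_shift(ref, moved)
```

Because only the phase of the cross-power spectrum is retained, the inverse transform concentrates into a single peak at the displacement, which makes the estimate fairly insensitive to intensity differences between overlapping volumes.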

Table 5.

Specifications of freehand-scanning-based 3D handheld PA/US imaging systems.

Freehand-scanning-based 3D PA imaging systems are implemented with many types of UST. They also offer flexibility to combine with mirror- or direct-scanning-based 3D PA imaging systems. Combined scanning methods can overcome limitations of small FOV systems. However, they can be severely affected by various motion artifacts, making spectroscopic PA analysis difficult.

3. Discussion and Conclusions

In Section 2 of this paper, we classified 3D handheld PA imaging into four scanning techniques (i.e., direct, mechanical, mirror-based, and freehand scanning), and in Sections 2.1–2.4 we introduced recent research corresponding to each technique. As mentioned earlier, 3D handheld PA imaging holds great potential for widespread preclinical and clinical applications. Despite its active development and utilization, three major limitations need to be overcome: motion artifacts, anisotropic spatial resolution, and limited-view artifacts. Below, we discuss these three limitations in greater detail.

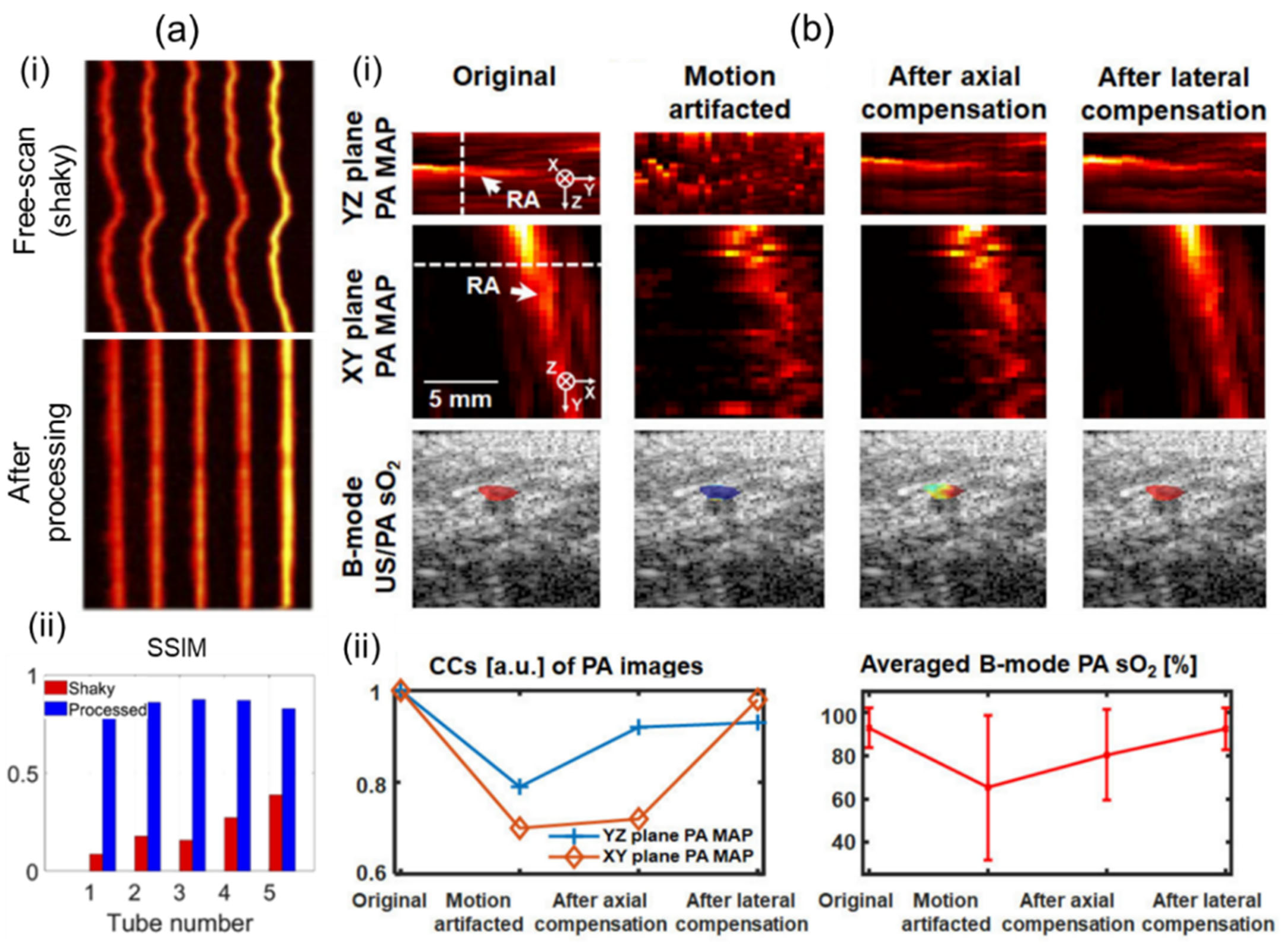

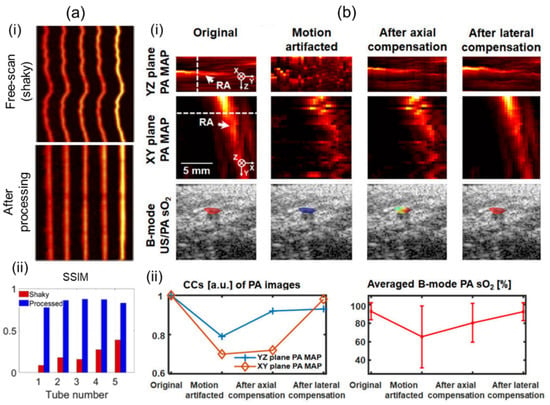

Motion artifacts can occur in all types of scanning-based 3D imaging systems. Artifacts can degrade structural information. They can also deteriorate functional information such as sO2 due to pixel-by-pixel spectral unmixing calculations [60]. For clinical translation, 3D imaging systems should mitigate motion contaminations to ensure accurate imaging and quantifications. Mozaffarzadeh et al. [92] have showcased motion-corrected freehand scanned PA images using the modality independent neighborhood descriptor (MIND), which is based on self-similarity [93]. By applying the MIND algorithm, they corrected a motion-contaminated phantom image as shown in Figure 6a(i). After correction, the structural similarity index (SSIM) was greatly enhanced (Figure 6a(ii)). Yoon et al. [60] have proposed a 3D motion-correction method using a motor-scanning-based 3D imaging system. They applied US motion compensations by maximizing structural similarities of subsequently acquired US skin profiles. PA motion correction was implemented using the corrected US information. The effectiveness of motion compensation was verified through in vivo human wrist imaging (Figure 6b(i)). Structural similarities were measured by quantifying cross-correlations of each method. Significant improvements were confirmed after motion corrections. Further, inaccuracy of the spectral unmixing was greatly reduced after calibrations (Figure 6b(ii)).
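The similarity-maximization principle behind such frame-to-frame motion compensation can be sketched with a one-dimensional example. This is a simplified stand-in and not the algorithm of either cited study: it estimates a single integer axial shift between two depth profiles by maximizing their normalized cross-correlation, and the function names, the search range, and the synthetic Gaussian profiles are assumptions made for illustration.

```python
import math

def ncc(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is flat)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return num / den if den > 0 else 0.0

def best_axial_shift(ref, cur, max_shift):
    """Integer shift s (in samples) maximizing NCC between ref[k] and cur[k + s]."""
    best_s, best_score = 0, -2.0
    for s in range(-max_shift, max_shift + 1):
        # Restrict the comparison to the samples where both profiles overlap.
        lo = max(0, -s)
        hi = min(len(ref), len(cur) - s)
        if hi - lo < 2:
            continue
        score = ncc(ref[lo:hi], cur[lo + s:hi + s])
        if score > best_score:
            best_s, best_score = s, score
    return best_s, best_score

# Synthetic depth profiles: the skin echo moves 4 samples deeper between frames.
ref = [math.exp(-((k - 20) / 3.0) ** 2) for k in range(64)]
cur = [math.exp(-((k - 24) / 3.0) ** 2) for k in range(64)]
shift, score = best_axial_shift(ref, cur, max_shift=10)
```

Shifting the current frame back by the estimated number of samples realigns it with the reference. When the shift is estimated on US data, the same displacement can be applied to the co-registered PA frame before spectral unmixing, which is the general idea behind using corrected US information to correct PA images.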

Figure 6.

Motion compensations applied to 3D handheld imaging systems. (a) (i) Before and after motion-corrected PA MAP images. Motion contamination occurred in the freehand scan. (ii) Comparison of SSIM before and after motion correction. (b) (i) PA MAP and B-mode US/PA sO2 images before and after motion compensation. (ii) Motion compensation validation charts. Degrees of recovery of structural and functional information were measured. PA, photoacoustic; MAP, maximum amplitude projection; US, ultrasound; SSIM, the structural similarity index; sO2, hemoglobin oxygen saturation. Reprinted with permission from references [60,92].

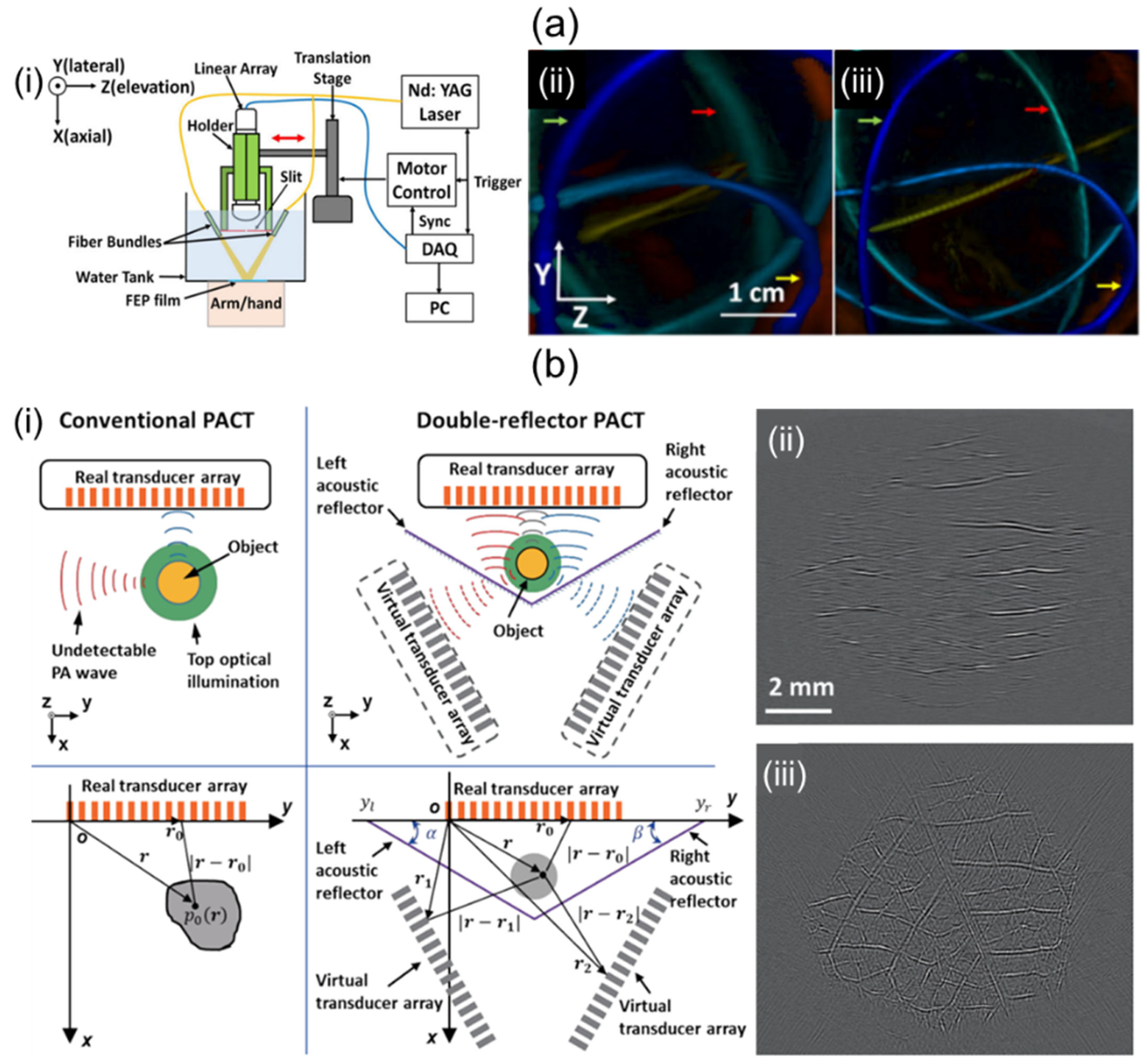

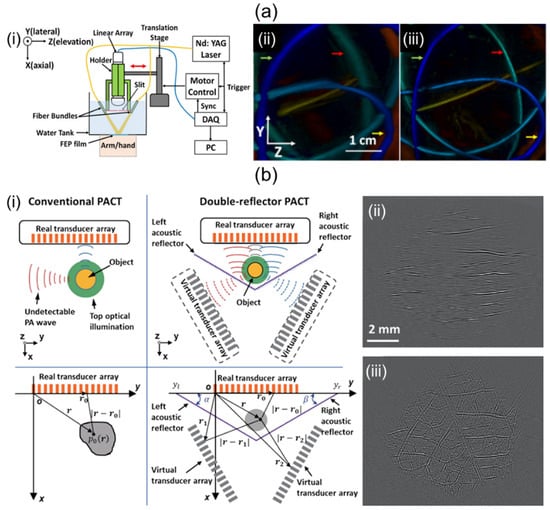

It is known that 1D linear array UST-based 3D imaging systems suffer from low elevational resolution due to their narrow elevation beamwidth and from limited-view artifacts caused by a narrow aperture size. To overcome poor elevational resolution, Wang et al. [94] have proposed a system that places a stainless-steel slit at the focal point of a 1D linear UST, as shown in Figure 7a(i). The slit diffracted PA waves to create wide signal receptions in the elevational direction [95]. With sufficiently overlapping signal areas, they could effectively apply the beamformer in the elevational direction. Results of depth-encoded tube phantom images before and after slit application are shown in Figure 7a(ii,iii). The elevational resolution of the system with the slit was 640 µm, which was superior to the 1500 µm elevational resolution of the system without the slit.

Figure 7.

Potential ways to improve performance of 1D linear array UST-based 3D portable imaging systems. (a) (i) Schematic explanation of a slit-enabled PACT system. (ii) Conventional and (iii) slit-applied tube phantom depth-encoded PA images. (b) (i) Conceptual schematic of conventional and double-reflector PACT. PA leaf skeleton images of (ii) conventional and (iii) double-reflector application. The double-reflector PACT restored the vascular network of the leaf skeleton. PACT, photoacoustic computed tomography; UST, ultrasound transducer. Reprinted with permission from references [94,96].

To mitigate limited-view artifacts, the solid-angle coverage of a UST should exceed π. Li et al. [96] have demonstrated a 1D linear array UST-based imaging system that applies two planar acoustic reflectors to virtually increase the detection view angle. The two reflectors were located at an angle of 120° relative to each other (Figure 7b(i)). The system using an increased detection view angle of 240° successfully recovered the vascular network image of the leaf skeleton (Figure 7b(iii)) compared to the original one (Figure 7b(ii)).

Recent advancements in silicon-photonics technology have led to the development of on-chip optical US sensors [97,98]. These sensors offer several advantages over traditional piezoelectric US sensors. Unlike piezoelectric sensors, which often sacrifice sensitivity when miniaturized, optical US sensors exhibit high sensitivity and broadband detection capabilities while maintaining a compact size. Furthermore, there have been recent breakthroughs in achieving parallel interrogation of these on-chip optical US sensors [99]. These innovations open up exciting possibilities for the creation of ultracompact 3D handheld imaging systems. In addition, multispectral imaging, real-time capabilities, hybrid modalities [100], and AI integration [101] hold great promise for improving future 3D handheld PA imaging systems. These systems can unlock new opportunities for patient care, early disease detection and image-guided interventions [102], ultimately enhancing our understanding and management of various medical conditions.

Author Contributions

Conceptualization, C.L. and B.P.; investigation, C.L.; writing—original draft preparation, C.L.; writing—review and editing, C.L., B.P. and C.K.; supervision, B.P. and C.K.; project administration, C.K.; funding acquisition, C.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (MOE) (2020R1A6A1A03047902). It was also partly supported by the national R&D Program through the NRF funded by the Ministry of Science and ICT (MSIT) (2021M3C1C3097624, RS-2023-00210682). It was also supported by an NRF grant funded by MSIT (NRF-2023R1A2C3004880). It was in part supported by grants (1711137875, RS-2020-KD000008) from the Korea Medical Device Development Fund funded by the Ministry of Trade, Industry and Energy (MOTIE). It was also in part supported by the BK21 FOUR project.

Conflicts of Interest

Chulhong Kim has financial interest in OPTICHO. However, OPTICHO did not support this work.

References

- Chen, Q.; Qin, W.; Qi, W.; Xi, L. Progress of clinical translation of handheld and semi-handheld photoacoustic imaging. Photoacoustics 2021, 22, 100264. [Google Scholar] [CrossRef]

- Choi, W.; Park, B.; Choi, S.; Oh, D.; Kim, J.; Kim, C. Recent Advances in Contrast-Enhanced Photoacoustic Imaging: Overcoming the Physical and Practical Challenges. Chem. Rev. 2023, 123, 7379–7419. [Google Scholar] [CrossRef]

- Cao, R.; Zhao, J.; Li, L.; Du, L.; Zhang, Y.; Luo, Y.; Jiang, L.; Davis, S.; Zhou, Q.; de la Zerda, A.; et al. Optical-resolution photoacoustic microscopy with a needle-shaped beam. Nat. Photonics 2022, 17, 89–95. [Google Scholar] [CrossRef]

- Liu, C.; Wang, L. Functional photoacoustic microscopy of hemodynamics: A review. Biomed. Eng. Lett. 2022, 12, 97–124. [Google Scholar] [CrossRef]

- Zhang, Z.; Mu, G.; Wang, E.; Cui, D.; Yang, F.; Wang, Z.; Yang, S.; Shi, Y. Photoacoustic imaging of tumor vascular involvement and surgical margin pathology for feedback-guided intraoperative tumor resection. Appl. Phys. Lett. 2022, 121, 193702. [Google Scholar] [CrossRef]

- Bell, A.G. Upon the production and reproduction of sound by light. J. Soc. Telegr. Eng. 1880, 9, 404–426. [Google Scholar] [CrossRef]

- Yao, J.; Kaberniuk, A.A.; Li, L.; Shcherbakova, D.M.; Zhang, R.; Wang, L.; Li, G.; Verkhusha, V.V.; Wang, L.V. Multiscale photoacoustic tomography using reversibly switchable bacterial phytochrome as a near-infrared photochromic probe. Nat. Methods 2015, 13, 67–73. [Google Scholar] [CrossRef]

- Wang, L.V.; Yao, J. A practical guide to photoacoustic tomography in the life sciences. Nat. Methods 2016, 13, 627–638. [Google Scholar] [CrossRef]

- Choi, W.; Park, E.-Y.; Jeon, S.; Yang, Y.; Park, B.; Ahn, J.; Cho, S.; Lee, C.; Seo, D.-K.; Cho, J.-H.; et al. Three-dimensional multistructural quantitative photoacoustic and US imaging of human feet in vivo. Radiology 2022, 303, 467–473. [Google Scholar] [CrossRef]

- Lee, C.; Cho, S.; Lee, D.; Lee, J.; Park, J.-I.; Kim, H.-J.; Park, S.H.; Choi, W.; Kim, U.; Kim, C. Panoramic Volumetric Clinical Handheld Photoacoustic and Ultrasound Imaging. Photoacoustics 2023, 31, 100512. [Google Scholar] [CrossRef]

- Neuschler, E.I.; Butler, R.; Young, C.A.; Barke, L.D.; Bertrand, M.L.; Böhm-Vélez, M.; Destounis, S.; Donlan, P.; Grobmyer, S.R.; Katzen, J.; et al. A pivotal study of optoacoustic imaging to diagnose benign and malignant breast masses: A new evaluation tool for radiologists. Radiology 2018, 287, 398–412. [Google Scholar] [CrossRef]

- Yang, X.; Chen, Y.-H.; Xia, F.; Sawan, M. Photoacoustic imaging for monitoring of stroke diseases: A review. Photoacoustics 2021, 23, 100287. [Google Scholar] [CrossRef]

- Mantri, Y.; Dorobek, T.R.; Tsujimoto, J.; Penny, W.F.; Garimella, P.S.; Jokerst, J.V. Monitoring peripheral hemodynamic response to changes in blood pressure via photoacoustic imaging. Photoacoustics 2022, 26, 100345. [Google Scholar] [CrossRef]

- Menozzi, L.; Del Águila, Á.; Vu, T.; Ma, C.; Yang, W.; Yao, J. Three-dimensional non-invasive brain imaging of ischemic stroke by integrated photoacoustic, ultrasound and angiographic tomography (PAUSAT). Photoacoustics 2023, 29, 100444. [Google Scholar] [CrossRef]

- Li, H.; Zhu, Y.; Luo, N.; Tian, C. In vivo monitoring of hemodynamic changes in ischemic stroke using photoacoustic tomography. J. Biophotonics 2023, e202300235. [Google Scholar] [CrossRef]

- Li, J.; Chen, Y.; Ye, W.; Zhang, M.; Zhu, J.; Zhi, W.; Cheng, Q. Molecular breast cancer subtype identification using photoacoustic spectral analysis and machine learning at the biomacromolecular level. Photoacoustics 2023, 30, 100483. [Google Scholar] [CrossRef]

- Lin, L.; Wang, L.V. The emerging role of photoacoustic imaging in clinical oncology. Nat. Rev. Clin. Oncol. 2022, 19, 365–384. [Google Scholar] [CrossRef]

- Xing, B.; He, Z.; Zhou, F.; Zhao, Y.; Shan, T. Automatic force-controlled 3D photoacoustic system for human peripheral vascular imaging. Biomed. Opt. Express 2023, 14, 987–1002. [Google Scholar] [CrossRef]

- Zhang, M.; Wen, L.; Zhou, C.; Pan, J.; Wu, S.; Wang, P.; Zhang, H.; Chen, P.; Chen, Q.; Wang, X.; et al. Identification of different types of tumors based on photoacoustic spectral analysis: Preclinical feasibility studies on skin tumors. J. Biomed. Opt. 2023, 28, 065004. [Google Scholar] [CrossRef]

- Kim, J.; Park, B.; Ha, J.; Steinberg, I.; Hooper, S.M.; Jeong, C.; Park, E.-Y.; Choi, W.; Liang, T.; Bae, J.S.; et al. Multiparametric Photoacoustic Analysis of Human Thyroid Cancers In Vivo. Cancer Res. 2021, 81, 4849–4860. [Google Scholar] [CrossRef]

- Park, B.; Kim, C.; Kim, J. Recent Advances in Ultrasound and Photoacoustic Analysis for Thyroid Cancer Diagnosis. Adv. Phys. Res. 2023, 2, 2200070. [Google Scholar] [CrossRef]

- Kim, J.; Kim, Y.; Park, B.; Seo, H.-M.; Bang, C.; Park, G.; Park, Y.; Rhie, J.; Lee, J.; Kim, C. Multispectral ex vivo photoacoustic imaging of cutaneous melanoma for better selection of the excision margin. Br. J. Dermatol. 2018, 179, 780–782. [Google Scholar] [CrossRef] [PubMed]

- Yang, M.; Zhao, L.; He, X.; Su, N.; Zhao, C.; Tang, H.; Hong, T.; Li, W.; Yang, F.; Lin, L.; et al. Photoacoustic/ultrasound dual imaging of human thyroid cancers: An initial clinical study. Biomed. Opt. Express 2017, 8, 3449–3457. [Google Scholar] [CrossRef] [PubMed]

- Garcia-Uribe, A.; Erpelding, T.N.; Krumholz, A.; Ke, H.; Maslov, K.; Appleton, C.; Margenthaler, J.A.; Wang, L.V. Dual-modality photoacoustic and ultrasound imaging system for noninvasive sentinel lymph node detection in patients with breast cancer. Sci. Rep. 2015, 5, 15748. [Google Scholar] [CrossRef] [PubMed]

- Berg, P.J.v.D.; Daoudi, K.; Moens, H.J.B.; Steenbergen, W. Feasibility of photoacoustic/ultrasound imaging of synovitis in finger joints using a point-of-care system. Photoacoustics 2017, 8, 8–14. [Google Scholar] [CrossRef]

- Dima, A.; Ntziachristos, V. Non-invasive carotid imaging using optoacoustic tomography. Opt. Express 2012, 20, 25044–25057. [Google Scholar] [CrossRef]

- Dima, A.; Ntziachristos, V. In-vivo handheld optoacoustic tomography of the human thyroid. Photoacoustics 2016, 4, 65–69. [Google Scholar] [CrossRef]

- Fenster, A.; Downey, D.B. 3-D ultrasound imaging: A review. IEEE Eng. Med. Biol. Mag. 1996, 15, 41–51. [Google Scholar] [CrossRef]

- Yang, J.; Choi, S.; Kim, C. Practical review on photoacoustic computed tomography using curved ultrasound array transducer. Biomed. Eng. Lett. 2021, 12, 19–35. [Google Scholar] [CrossRef]

- Deán-Ben, X.L.; Razansky, D. Portable spherical array probe for volumetric real-time optoacoustic imaging at centimeter-scale depths. Opt. Express 2013, 21, 28062–28071. [Google Scholar] [CrossRef]

- Zhang, W.; Ma, H.; Cheng, Z.; Wang, Z.; Zhang, L.; Yang, S. Miniaturized photoacoustic probe for in vivo imaging of subcutaneous microvessels within human skin. Quant. Imaging Med. Surg. 2019, 9, 807. [Google Scholar] [CrossRef]

- Park, K.; Kim, J.Y.; Lee, C.; Jeon, S.; Lim, G.; Kim, C. Handheld Photoacoustic Microscopy Probe. Sci. Rep. 2017, 7, 13359. [Google Scholar] [CrossRef]

- Moothanchery, M.; Dev, K.; Balasundaram, G.; Bi, R.; Olivo, M. Acoustic resolution photoacoustic microscopy based on microelectromechanical systems scanner. J. Biophotonics 2019, 13, e201960127. [Google Scholar] [CrossRef] [PubMed]

- Toi, M.; Asao, Y.; Matsumoto, Y.; Sekiguchi, H.; Yoshikawa, A.; Takada, M.; Kataoka, M.; Endo, T.; Kawaguchi-Sakita, N.; Kawashima, M.; et al. Visualization of tumor-related blood vessels in human breast by photoacoustic imaging system with a hemispherical detector array. Sci. Rep. 2017, 7, 41970. [Google Scholar] [CrossRef]

- Kim, W.; Choi, W.; Ahn, J.; Lee, C.; Kim, C. Wide-field three-dimensional photoacoustic/ultrasound scanner using a two-dimensional matrix transducer array. Opt. Lett. 2023, 48, 343–346. [Google Scholar] [CrossRef]

- Neuschmelting, V.; Burton, N.C.; Lockau, H.; Urich, A.; Harmsen, S.; Ntziachristos, V.; Kircher, M.F. Performance of a Multispectral Optoacoustic Tomography (MSOT) System equipped with 2D vs. 3D Handheld Probes for Potential Clinical Translation. Photoacoustics 2015, 4, 1–10. [Google Scholar] [CrossRef] [PubMed]

- Dean-Ben, X.L.; Ozbek, A.; Razansky, D. Volumetric real-time tracking of peripheral human vasculature with GPU-accelerated three-dimensional optoacoustic tomography. IEEE Trans. Med. Imaging 2013, 32, 2050–2055. [Google Scholar] [CrossRef] [PubMed]

- Deán-Ben, X.L.; Bay, E.; Razansky, D. Functional optoacoustic imaging of moving objects using microsecond-delay acquisition of multispectral three-dimensional tomographic data. Sci. Rep. 2014, 4, srep05878. [Google Scholar] [CrossRef] [PubMed]

- Deán-Ben, X.L.; Fehm, T.F.; Gostic, M.; Razansky, D. Volumetric hand-held optoacoustic angiography as a tool for real-time screening of dense breast. J. Biophotonics 2015, 9, 253–259. [Google Scholar] [CrossRef] [PubMed]

- Ford, S.J.; Bigliardi, P.L.; Sardella, T.C.; Urich, A.; Burton, N.C.; Kacprowicz, M.; Bigliardi, M.; Olivo, M.; Razansky, D. Structural and functional analysis of intact hair follicles and pilosebaceous units by volumetric multispectral optoacoustic tomography. J. Investig. Dermatol. 2016, 136, 753–761. [Google Scholar] [CrossRef] [PubMed]

- Attia, A.B.E.; Chuah, S.Y.; Razansky, D.; Ho, C.J.H.; Malempati, P.; Dinish, U.; Bi, R.; Fu, C.Y.; Ford, S.J.; Lee, J.S.-S.; et al. Noninvasive real-time characterization of non-melanoma skin cancers with handheld optoacoustic probes. Photoacoustics 2017, 7, 20–26. [Google Scholar] [CrossRef]

- Deán-Ben, X.L.; Razansky, D. Functional optoacoustic human angiography with handheld video rate three dimensional scanner. Photoacoustics 2013, 1, 68–73. [Google Scholar] [CrossRef] [PubMed]

- Ivankovic, I.; Merčep, E.; Schmedt, C.-G.; Deán-Ben, X.L.; Razansky, D. Real-time volumetric assessment of the human carotid artery: Handheld multispectral optoacoustic tomography. Radiology 2019, 291, 45–50. [Google Scholar] [CrossRef] [PubMed]

- Deán-Ben, X.; Fehm, T.F.; Razansky, D. Universal Hand-held Three-dimensional Optoacoustic Imaging Probe for Deep Tissue Human Angiography and Functional Preclinical Studies in Real Time. J. Vis. Exp. 2014, 93, e51864. [Google Scholar] [CrossRef]

- Fehm, T.F.; Deán-Ben, X.L.; Razansky, D. Four dimensional hybrid ultrasound and optoacoustic imaging via passive element optical excitation in a hand-held probe. Appl. Phys. Lett. 2014, 105, 173505. [Google Scholar] [CrossRef]

- Ozsoy, C.; Cossettini, A.; Ozbek, A.; Vostrikov, S.; Hager, P.; Dean-Ben, X.L.; Benini, L.; Razansky, D. LightSpeed: A Compact, High-Speed Optical-Link-Based 3D Optoacoustic Imager. IEEE Trans. Med. Imaging 2021, 40, 2023–2029. [Google Scholar] [CrossRef] [PubMed]

- Deán-Ben, X.L.; Özbek, A.; Razansky, D. Accounting for speed of sound variations in volumetric hand-held optoacoustic imaging. Front. Optoelectron. 2017, 10, 280–286. [Google Scholar] [CrossRef]

- Ron, A.; Deán-Ben, X.L.; Reber, J.; Ntziachristos, V.; Razansky, D. Characterization of Brown Adipose Tissue in a Diabetic Mouse Model with Spiral Volumetric Optoacoustic Tomography. Mol. Imaging Biol. 2018, 21, 620–625. [Google Scholar] [CrossRef]

- Ozsoy, C.; Cossettini, A.; Hager, P.; Vostrikov, S.; Dean-Ben, X.L.; Benini, L.; Razansky, D. Towards a compact, high-speed optical link-based 3D optoacoustic imager. In Proceedings of the 2020 IEEE SENSORS, Rotterdam, The Netherlands, 25–28 October 2020; pp. 1–4. [Google Scholar] [CrossRef]

- Knauer, N.; Dean-Ben, X.L.; Razansky, D. Spatial Compounding of Volumetric Data Enables Freehand Optoacoustic Angiography of Large-Scale Vascular Networks. IEEE Trans. Med. Imaging 2019, 39, 1160–1169. [Google Scholar] [CrossRef] [PubMed]

- Chen, Z.; Deán-Ben, X.L.; Gottschalk, S.; Razansky, D. Hybrid system for in vivo epifluorescence and 4D optoacoustic imaging. Opt. Lett. 2017, 42, 4577–4580. [Google Scholar] [CrossRef]

- Chuah, S.Y.; Attia, A.B.E.; Long, V.; Ho, C.J.H.; Malempati, P.; Fu, C.Y.; Ford, S.J.; Lee, J.S.S.; Tan, W.P.; Razansky, D.; et al. Structural and functional 3D mapping of skin tumours with non-invasive multispectral optoacoustic tomography. Skin Res. Technol. 2016, 23, 221–226. [Google Scholar] [CrossRef] [PubMed]

- Fehm, T.F.; Deán-Ben, X.L.; Schaur, P.; Sroka, R.; Razansky, D. Volumetric optoacoustic imaging feedback during endovenous laser therapy—An ex vivo investigation. J. Biophotonics 2015, 9, 934–941. [Google Scholar] [CrossRef] [PubMed]

- Liu, S.; Tang, K.; Jin, H.; Zhang, R.; Kim, T.T.H.; Zheng, Y. Continuous wave laser excitation based portable optoacoustic imaging system for melanoma detection. In Proceedings of the 2019 IEEE Biomedical Circuits and Systems Conference (BioCAS), Nara, Japan, 17–19 October 2019; pp. 1–4. [Google Scholar] [CrossRef]

- Liu, S.; Tang, K.; Feng, X.; Jin, H.; Gao, F.; Zheng, Y. Toward Wearable Healthcare: A Miniaturized 3D Imager with Coherent Frequency-Domain Photoacoustics. IEEE Trans. Biomed. Circuits Syst. 2019, 13, 1417–1424. [Google Scholar] [CrossRef] [PubMed]

- Liu, S.; Feng, X.; Jin, H.; Zhang, R.; Luo, Y.; Zheng, Z.; Gao, F.; Zheng, Y. Handheld Photoacoustic Imager for Theranostics in 3D. IEEE Trans. Med. Imaging 2019, 38, 2037–2046. [Google Scholar] [CrossRef] [PubMed]

- Liu, S.; Song, W.; Liao, X.; Kim, T.T.-H.; Zheng, Y. Development of a Handheld Volumetric Photoacoustic Imaging System with a Central-Holed 2D Matrix Aperture. IEEE Trans. Biomed. Eng. 2020, 67, 2482–2489. [Google Scholar] [CrossRef] [PubMed]

- Lee, C.; Choi, W.; Kim, J.; Kim, C. Three-dimensional clinical handheld photoacoustic/ultrasound scanner. Photoacoustics 2020, 18, 100173. [Google Scholar] [CrossRef] [PubMed]

- Park, B.; Bang, C.H.; Lee, C.; Han, J.H.; Choi, W.; Kim, J.; Park, G.S.; Rhie, J.W.; Lee, J.H.; Kim, C. 3D wide-field multispectral photoacoustic imaging of human melanomas in vivo: A pilot study. J. Eur. Acad. Dermatol. Venereol. 2020, 35, 669–676. [Google Scholar] [CrossRef]

- Yoon, C.; Lee, C.; Shin, K.; Kim, C. Motion Compensation for 3D Multispectral Handheld Photoacoustic Imaging. Biosensors 2022, 12, 1092. [Google Scholar] [CrossRef] [PubMed]

- Aguirre, J.; Schwarz, M.; Garzorz, N.; Omar, M.; Buehler, A.; Eyerich, K.; Ntziachristos, V. Precision assessment of label-free psoriasis biomarkers with ultra-broadband optoacoustic mesoscopy. Nat. Biomed. Eng. 2017, 1, 0068. [Google Scholar] [CrossRef]

- Bost, W.; Lemor, R.; Fournelle, M. Optoacoustic Imaging of Subcutaneous Microvasculature with a Class one Laser. IEEE Trans. Med. Imaging 2014, 33, 1900–1904. [Google Scholar] [CrossRef]

- Hajireza, P.; Shi, W.; Zemp, R.J. Real-time handheld optical-resolution photoacoustic microscopy. Opt. Express 2011, 19, 20097–20102. [Google Scholar] [CrossRef]

- Lin, L.; Zhang, P.; Xu, S.; Shi, J.; Li, L.; Yao, J.; Wang, L.; Zou, J.; Wang, L.V. Handheld optical-resolution photoacoustic microscopy. J. Biomed. Opt. 2016, 22, 041002. [Google Scholar] [CrossRef]

- Qin, W.; Chen, Q.; Xi, L. A handheld microscope integrating photoacoustic microscopy and optical coherence tomography. Biomed. Opt. Express 2018, 9, 2205–2213. [Google Scholar] [CrossRef] [PubMed]

- Chen, Q.; Guo, H.; Jin, T.; Qi, W.; Xie, H.; Xi, L. Ultracompact high-resolution photoacoustic microscopy. Opt. Lett. 2018, 43, 1615–1618. [Google Scholar] [CrossRef] [PubMed]

- Chen, J.; Zhang, Y.; Zhu, J.; Tang, X.; Wang, L. Freehand scanning photoacoustic microscopy with simultaneous localization and mapping. Photoacoustics 2022, 28, 100411. [Google Scholar] [CrossRef] [PubMed]

- Fournelle, M.; Hewener, H.; Gunther, C.; Fonfara, H.; Welsch, H.-J.; Lemor, R. Free-hand 3d optoacoustic imaging of vasculature. In Proceedings of the 2009 IEEE International Ultrasonics Symposium, Rome, Italy, 20–23 September 2009; pp. 116–119. [Google Scholar] [CrossRef]

- Jiang, D.; Chen, H.; Zheng, R.; Gao, F. Hand-held free-scan 3D photoacoustic tomography with global positioning system. J. Appl. Phys. 2022, 132, 074904.

- Holzwarth, N.; Schellenberg, M.; Gröhl, J.; Dreher, K.; Nölke, J.-H.; Seitel, A.; Tizabi, M.D.; Müller-Stich, B.P.; Maier-Hein, L. Tattoo tomography: Freehand 3D photoacoustic image reconstruction with an optical pattern. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 1101–1110.

- Deán-Ben, X.L.; Razansky, D. On the link between the speckle free nature of optoacoustics and visibility of structures in limited-view tomography. Photoacoustics 2016, 4, 133–140.

- Jeon, S.; Park, J.; Managuli, R.; Kim, C. A novel 2-D synthetic aperture focusing technique for acoustic-resolution photoacoustic microscopy. IEEE Trans. Med. Imaging 2018, 38, 250–260.

- Yao, J.; Wang, L.V. Sensitivity of photoacoustic microscopy. Photoacoustics 2014, 2, 87–101.

- Cho, S.-W.; Park, S.M.; Park, B.; Kim, D.Y.; Lee, T.G.; Kim, B.-M.; Kim, C.; Kim, J.; Lee, S.-W.; Kim, C.-S. High-speed photoacoustic microscopy: A review dedicated on light sources. Photoacoustics 2021, 24, 100291.

- Ozsoy, C.; Cossettini, A.; Ozbek, A.; Vostrikov, S.; Hager, P.; Dean-Ben, X.L.; Benini, L.; Razansky, D. Compact Optical Link Acquisition for High-Speed Optoacoustic Imaging (SPIE BiOS); SPIE: Bellingham, WA, USA, 2022.

- Hager, P.A.; Jush, F.K.; Biele, M.; Düppenbecker, P.M.; Schmidt, O.; Benini, L. LightABVS: A digital ultrasound transducer for multi-modality automated breast volume scanning. In Proceedings of the IEEE International Ultrasonics Symposium (IUS), Glasgow, Scotland, UK, 6–9 October 2019.

- Ozbek, A.; Deán-Ben, X.L.; Razansky, D. Realtime parallel back-projection algorithm for three-dimensional optoacoustic imaging devices. In Opto-Acoustic Methods and Applications; Ntziachristos, V., Lin, C., Eds.; Optica Publishing Group: Washington, DC, USA, 2013; Volume 8800, p. 88000I. Available online: https://opg.optica.org/abstract.cfm?URI=ECBO-2013-88000I (accessed on 12 May 2013).

- Wang, Y.; Erpelding, T.N.; Jankovic, L.; Guo, Z.; Robert, J.-L.; David, G.; Wang, L.V. In vivo three-dimensional photoacoustic imaging based on a clinical matrix array ultrasound probe. J. Biomed. Opt. 2012, 17, 0612081–0612085.

- Xu, M.; Wang, L.V. Universal back-projection algorithm for photoacoustic computed tomography. Phys. Rev. E 2005, 71, 016706.

- Rosenthal, A.; Ntziachristos, V.; Razansky, D. Acoustic Inversion in Optoacoustic Tomography: A Review. Curr. Med. Imaging Former. Curr. Med. Imaging Rev. 2013, 9, 318–336.

- Wang, L.; Maslov, K.; Yao, J.; Rao, B.; Wang, L.V. Fast voice-coil scanning optical-resolution photoacoustic microscopy. Opt. Lett. 2011, 36, 139–141.

- Wang, L.; Maslov, K.; Wang, L.V. Single-cell label-free photoacoustic flowoxigraphy in vivo. Proc. Natl. Acad. Sci. USA 2013, 110, 5759–5764.

- Rohling, R.; Gee, A.; Berman, L. A comparison of freehand three-dimensional ultrasound reconstruction techniques. Med. Image Anal. 1999, 3, 339–359.

- Chen, H.-B.; Zheng, R.; Qian, L.-Y.; Liu, F.-Y.; Song, S.; Zeng, H.-Y. Improvement of 3-D Ultrasound Spine Imaging Technique Using Fast Reconstruction Algorithm. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2021, 68, 3104–3113.

- Lasso, A.; Heffter, T.; Rankin, A.; Pinter, C.; Ungi, T.; Fichtinger, G. PLUS: Open-Source Toolkit for Ultrasound-Guided Intervention Systems. IEEE Trans. Biomed. Eng. 2014, 61, 2527–2537.

- Xu, S.; Li, S.; Zou, J. A micromachined water-immersible scanning mirror using BoPET hinges. Sensors Actuators A Phys. 2019, 298, 111564.

- Xu, S.; Huang, C.-H.; Zou, J. Microfabricated water-immersible scanning mirror with a small form factor for handheld ultrasound and photoacoustic microscopy. J. Micro/Nanolithography MEMS MOEMS 2015, 14, 035004.

- Li, Y.; Wang, Y.; Huang, W.; Zhang, Z. Automatic image stitching using SIFT. In Proceedings of the 2008 International Conference on Audio, Language and Image Processing, Shanghai, China, 7–9 July 2008; pp. 568–571.

- Domokos, C.; Kato, Z. Parametric estimation of affine deformations of planar shapes. Pattern Recognit. 2010, 43, 569–578.

- Szeliski, R. Image Alignment and Stitching: A Tutorial; Foundations and Trends® in Computer Graphics and Vision: Hanover, MA, USA, 2007; Volume 2, pp. 1–104.

- Tamhane, A.A.; Arfanakis, K. Motion correction in PROPELLER and turboprop-MRI. Magn. Reson. Med. 2009, 62, 174–182.

- Mozaffarzadeh, M.; Moore, C.; Golmoghani, E.B.; Mantri, Y.; Hariri, A.; Jorns, A.; Fu, L.; Verweij, M.D.; Orooji, M.; de Jong, N.; et al. Motion-compensated noninvasive periodontal health monitoring using handheld and motor-based photoacoustic-ultrasound imaging systems. Biomed. Opt. Express 2021, 12, 1543–1558.

- Heinrich, M.P.; Jenkinson, M.; Bhushan, M.; Matin, T.; Gleeson, F.V.; Brady, S.M.; Schnabel, J.A. MIND: Modality independent neighbourhood descriptor for multi-modal deformable registration. Med. Image Anal. 2012, 16, 1423–1435.

- Wang, Y.; Wang, D.; Hubbell, R.; Xia, J. Second generation slit-based photoacoustic tomography system for vascular imaging in human. J. Biophotonics 2017, 10, 799–804.

- Wang, Y.; Wang, D.; Zhang, Y.; Geng, J.; Lovell, J.F.; Xia, J. Slit-enabled linear-array photoacoustic tomography with near isotropic spatial resolution in three dimensions. Opt. Lett. 2015, 41, 127–130.

- Li, G.; Xia, J.; Wang, K.; Maslov, K.; Anastasio, M.A.; Wang, L.V. Tripling the detection view of high-frequency linear-array-based photoacoustic computed tomography by using two planar acoustic reflectors. Quant. Imaging Med. Surg. 2015, 5, 57–62.

- Westerveld, W.J.; Hasan, M.U.; Shnaiderman, R.; Ntziachristos, V.; Rottenberg, X.; Severi, S.; Rochus, V. Sensitive, small, broadband and scalable optomechanical ultrasound sensor in silicon photonics. Nat. Photon. 2021, 15, 341–345.

- Hazan, Y.; Levi, A.; Nagli, M.; Rosenthal, A. Silicon-photonics acoustic detector for optoacoustic micro-tomography. Nat. Commun. 2022, 13, 1488.

- Pan, J.; Li, Q.; Feng, Y.; Zhong, R.; Fu, Z.; Yang, S.; Sun, W.; Zhang, B.; Sui, Q.; Chen, J.; et al. Parallel interrogation of the chalcogenide-based micro-ring sensor array for photoacoustic tomography. Nat. Commun. 2023, 14, 3250.

- Park, J.; Park, B.; Kim, T.Y.; Jung, S.; Choi, W.J.; Ahn, J.; Yoon, D.H.; Kim, J.; Jeon, S.; Lee, D.; et al. Quadruple ultrasound, photoacoustic, optical coherence, and fluorescence fusion imaging with a transparent ultrasound transducer. Proc. Natl. Acad. Sci. USA 2021, 118, e1920879118.

- Yang, J.; Choi, S.; Kim, J.; Park, B.; Kim, C. Recent advances in deep-learning-enhanced photoacoustic imaging. Adv. Photonics Nexus 2023, 2, 054001.

- Park, B.; Park, S.; Kim, J.; Kim, C. Listening to drug delivery and responses via photoacoustic imaging. Adv. Drug Deliv. Rev. 2022, 184, 114235.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).