6D Object Pose Estimation Based on Cross-Modality Feature Fusion

Abstract

1. Introduction

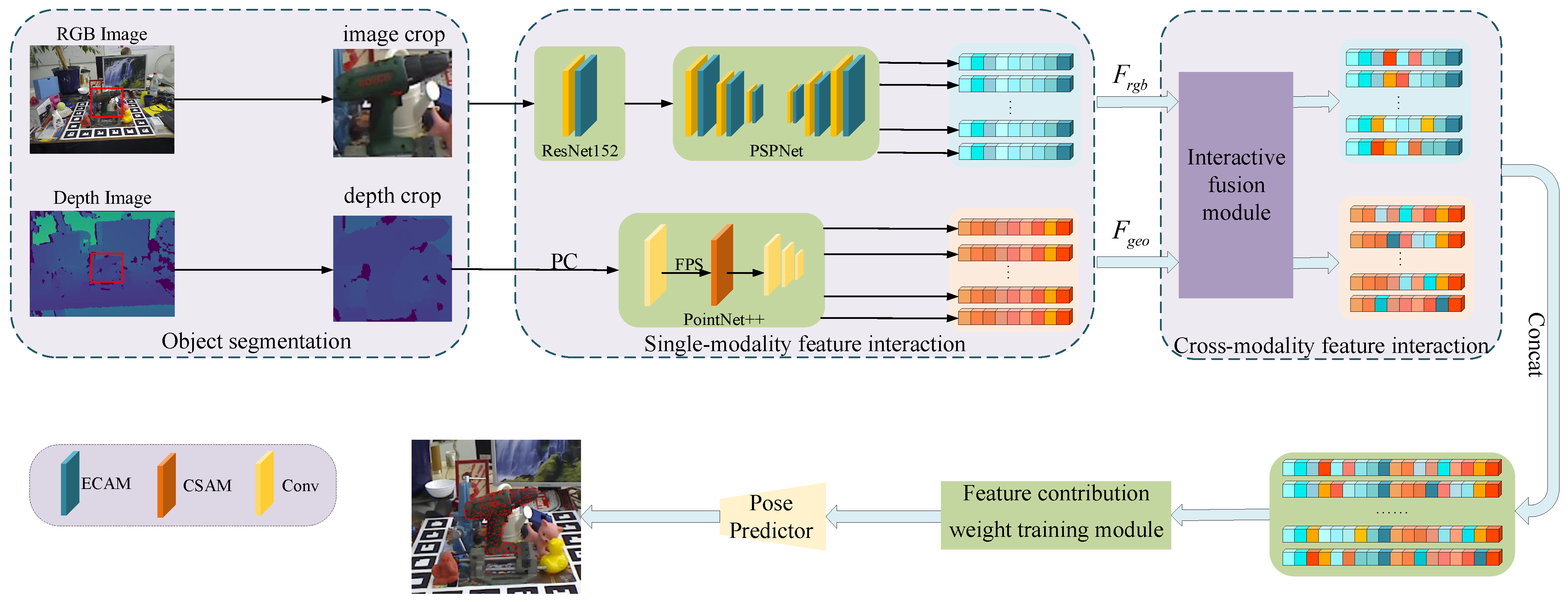

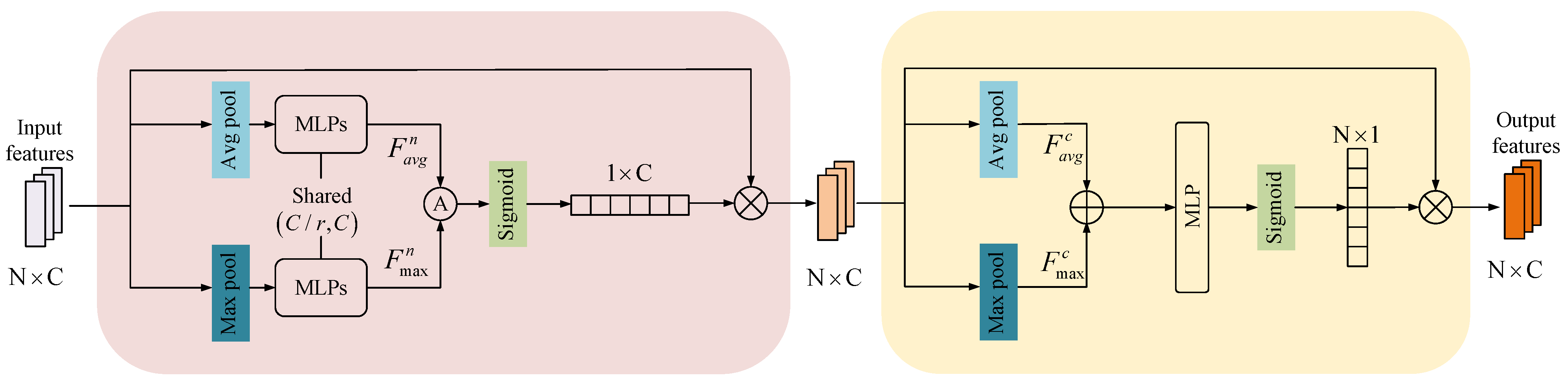

- A new cross-modality feature fusion method is proposed. First, ECAM and CSAM are added to the feature extraction networks to capture contextual information within each single modality; second, CFFM is introduced to capture the interaction information between the RGB and depth modalities, so that cross-modality features can be extracted and fused efficiently at the pixel level, improving the accuracy of 6D object pose estimation;

- CWTM is proposed to learn how much the data of each modality contributes to the 6D pose estimation of each target object. The contextual information within each pixel neighborhood is fully exploited, so that an accurate pose is obtained without any additional refinement step.
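The two contributions above name three building blocks: channel attention inside a modality, cross-modality fusion at the pixel level, and learned modality contribution weights. The NumPy sketch below illustrates these ideas under stated assumptions; it is not the authors' implementation. It assumes ECAM follows the ECA-Net design [40] (global average pooling, a 1D convolution across channels with an adaptively chosen odd kernel size, then a sigmoid gate). It stands in for CFFM/CWTM with a generic per-pixel softmax weighting of two modality embeddings. The projection `proj` and all function names are hypothetical.

```python
import math

import numpy as np


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive kernel size from ECA-Net: |(log2(C) + b) / gamma|, rounded up to an odd value."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1


def eca_attention(features: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """ECA-style channel attention on per-pixel features of shape (C, N)."""
    k = kernel.shape[0]
    pooled = features.mean(axis=1)                          # global average pooling -> (C,)
    padded = np.pad(pooled, k // 2)                         # zero padding keeps length C
    mixed = np.correlate(padded, kernel, mode="valid")      # local cross-channel interaction
    gate = 1.0 / (1.0 + np.exp(-mixed))                     # sigmoid weights -> (C,)
    return features * gate[:, None]                         # rescale every channel


def weighted_fusion(rgb: np.ndarray, geo: np.ndarray, proj: np.ndarray):
    """Hypothetical per-pixel modality weighting (a stand-in, not the paper's CFFM/CWTM).

    rgb, geo: (C, N) features of the same N pixels; proj: (2, 2C) learned projection.
    Returns the fused (C, N) features and the (2, N) modality weights.
    """
    logits = proj @ np.concatenate([rgb, geo], axis=0)      # (2, N) scores per pixel
    logits = logits - logits.max(axis=0, keepdims=True)     # numerical stability
    alpha = np.exp(logits)
    alpha = alpha / alpha.sum(axis=0, keepdims=True)        # softmax over the two modalities
    fused = alpha[0] * rgb + alpha[1] * geo                 # (N,) broadcasts over channels
    return fused, alpha


rng = np.random.default_rng(0)
C, N = 64, 500
rgb = rng.standard_normal((C, N))
geo = rng.standard_normal((C, N))
rgb_att = eca_attention(rgb, rng.standard_normal(eca_kernel_size(C)))
fused, alpha = weighted_fusion(rgb_att, geo, 0.1 * rng.standard_normal((2, 2 * C)))
print(fused.shape, alpha.shape)  # (64, 500) (2, 500)
```

Because the weights are a softmax per pixel, each pixel can lean on RGB or on geometry independently. This is one simple way to express a per-object or per-pixel "contribution degree". The paper's actual CWTM may be parameterized differently.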

2. Related Work

2.1. Pose Estimation Based on RGB Data

2.2. Pose Estimation Based on Point Clouds

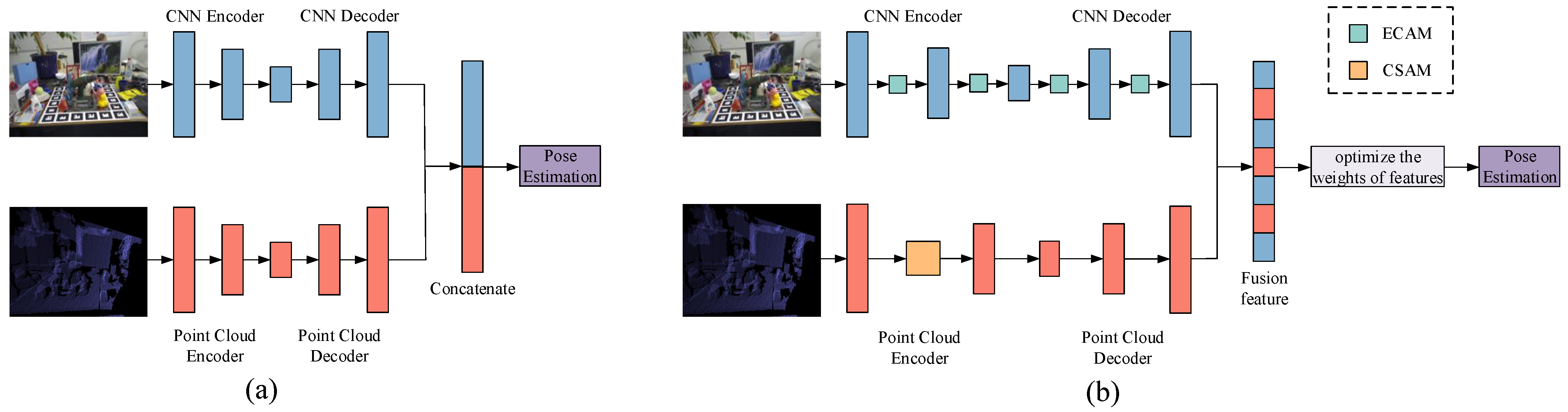

2.3. Pose Estimation Based on RGBD Data

2.4. Summary of 6D Object Pose Estimation Methods

3. Method

3.1. Overview of Our 6D Pose Estimation Method

3.2. Semantic Segmentation Module

3.3. Feature Extraction Module

3.3.1. RGB Image Feature Extraction Network

3.3.2. Point Cloud Feature Extraction Network

3.4. Cross-Modality Feature Fusion Module

3.5. Feature Contribution Weight Training Module

3.6. Object 6D Pose Estimation

4. Experiments

4.1. Datasets

4.2. Evaluation Metrics

4.3. Experimental Details

4.4. Evaluation on the LineMOD Dataset

4.5. Evaluation on the YCB-Video Dataset

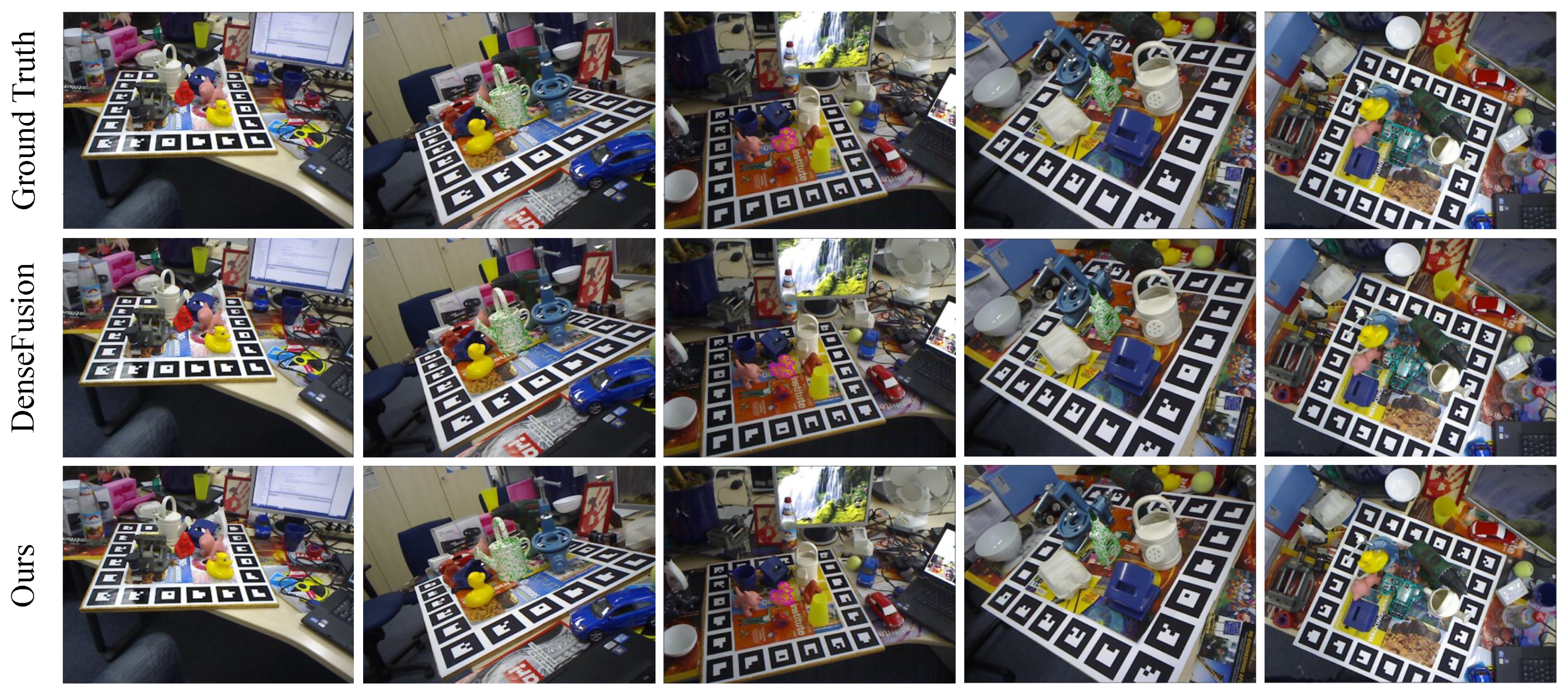

4.6. Noise Experiment

4.7. Ablation Experiments

4.8. Robotic Grasping Experiment

4.9. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Full form |
|---|---|
| CBAM | convolutional block attention module |
| CFFM | cross-modality feature fusion module |
| CNNs | convolutional neural networks |
| CSAM | sampling center self-attention module |
| CWTM | contribution weight training module |
| ECAM | efficient channel attention module |
| FPS | farthest point sampling |
| ICP | iterative closest point |
| KNN | k-nearest neighbor |
| MLP | multilayer perceptron |
| RANSAC | random sample consensus |
| SENet | squeeze-and-excitation network |
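FPS and KNN appear in the abbreviation list. Given the name of CSAM ("sampling center" self-attention), they are presumably used to pick sampling centers in the point cloud and to gather each center's neighborhood. How the paper combines them is an assumption here. The following is a minimal NumPy sketch of the two standard operations as popularized by PointNet++ [30], with brute-force distances; the function names are hypothetical.

```python
import numpy as np


def farthest_point_sampling(points: np.ndarray, m: int, start: int = 0) -> np.ndarray:
    """Greedy FPS: pick m indices, each time taking the point farthest from all chosen ones."""
    chosen = np.empty(m, dtype=int)
    chosen[0] = start
    # distance from every point to its nearest already-chosen center
    min_dist = np.linalg.norm(points - points[start], axis=1)
    for i in range(1, m):
        chosen[i] = int(np.argmax(min_dist))
        new_dist = np.linalg.norm(points - points[chosen[i]], axis=1)
        min_dist = np.minimum(min_dist, new_dist)
    return chosen


def knn_indices(points: np.ndarray, centers: np.ndarray, k: int) -> np.ndarray:
    """Brute-force KNN: for each center, the indices of its k nearest points, closest first."""
    d2 = ((centers[:, None, :] - points[None, :, :]) ** 2).sum(axis=-1)  # (M, N) squared distances
    return np.argsort(d2, axis=1)[:, :k]


pts = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.1, 0.0, 0.0], [0.0, 2.0, 0.0]])
centers = farthest_point_sampling(pts, 3)
print(centers.tolist())                            # [0, 3, 1]
print(knn_indices(pts, pts[centers], 2).tolist())  # [[0, 2], [3, 0], [1, 2]]
```

FPS spreads the centers over the object surface, so nearby duplicates such as the point at x = 0.1 are skipped. KNN then gives each center a local neighborhood on which an attention module can operate.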

References

1. Brachmann, E.; Krull, A.; Michel, F.; Gumhold, S.; Shotton, J.; Rother, C. Learning 6d object pose estimation using 3d object coordinates. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part II 13. Springer: Cham, Switzerland, 2014; pp. 536–551.
2. Marchand, E.; Uchiyama, H.; Spindler, F. Pose estimation for augmented reality: A hands-on survey. IEEE Trans. Vis. Comput. Graph. 2015, 22, 2633–2651.
3. Cavallari, T.; Golodetz, S.; Lord, N.A.; Valentin, J.; Prisacariu, V.A.; Di Stefano, L.; Torr, P.H. Real-time RGB-D camera pose estimation in novel scenes using a relocalisation cascade. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 2465–2477.
4. Stoiber, M.; Elsayed, M.; Reichert, A.E.; Steidle, F.; Lee, D.; Triebel, R. Fusing Visual Appearance and Geometry for Multi-modality 6DoF Object Tracking. arXiv 2023, arXiv:2302.11458.
5. Yu, J.; Weng, K.; Liang, G.; Xie, G. A vision-based robotic grasping system using deep learning for 3D object recognition and pose estimation. In Proceedings of the 2013 IEEE International Conference on Robotics and Biomimetics (ROBIO), Shenzhen, China, 12–14 December 2013; pp. 1175–1180.
6. Papazov, C.; Haddadin, S.; Parusel, S.; Krieger, K.; Burschka, D. Rigid 3D geometry matching for grasping of known objects in cluttered scenes. Int. J. Robot. Res. 2012, 31, 538–553.
7. Azad, P.; Asfour, T.; Dillmann, R. Stereo-based 6d object localization for grasping with humanoid robot systems. In Proceedings of the 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 2 November 2007; pp. 919–924.
8. Khorasani, M.; Gibson, I.; Ghasemi, A.H.; Hadavi, E.; Rolfe, B. Laser subtractive and laser powder bed fusion of metals: Review of process and production features. Rapid Prototyp. J. 2023, 29, 935–958.
9. Kumar, A. Methods and materials for smart manufacturing: Additive manufacturing, internet of things, flexible sensors and soft robotics. Manuf. Lett. 2018, 15, 122–125.
10. Hinterstoisser, S.; Holzer, S.; Cagniart, C.; Ilic, S.; Konolige, K.; Navab, N.; Lepetit, V. Multimodal templates for real-time detection of texture-less objects in heavily cluttered scenes. In Proceedings of the 2011 International Conference on Computer Vision, Colorado Springs, CO, USA, 20–25 June 2011; pp. 858–865.
11. Do, T.T.; Cai, M.; Pham, T.; Reid, I. Deep-6dpose: Recovering 6d object pose from a single rgb image. arXiv 2018, arXiv:1802.10367.
12. Salti, S.; Tombari, F.; Di Stefano, L. SHOT: Unique signatures of histograms for surface and texture description. Comput. Vis. Image Underst. 2014, 125, 251–264.
13. Chen, W.; Jia, X.; Chang, H.J.; Duan, J.; Leonardis, A. G2l-net: Global to local network for real-time 6d pose estimation with embedding vector features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 4233–4242.
14. Hinterstoisser, S.; Lepetit, V.; Ilic, S.; Holzer, S.; Bradski, G.; Konolige, K.; Navab, N. Model based training, detection and pose estimation of texture-less 3d objects in heavily cluttered scenes. In Proceedings of the ACCV 2012: 11th Asian Conference on Computer Vision, Daejeon, Republic of Korea, 5–9 November 2012; Revised Selected Papers, Part I 11. Springer: Berlin/Heidelberg, Germany, 2013; pp. 548–562.
15. Wohlhart, P.; Lepetit, V. Learning descriptors for object recognition and 3d pose estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3109–3118.
16. Sharifi, A. Development of a method for flood detection based on Sentinel-1 images and classifier algorithms. Water Environ. J. 2021, 35, 924–929.
17. Sharifi, A.; Amini, J. Forest biomass estimation using synthetic aperture radar polarimetric features. J. Appl. Remote Sens. 2015, 9, 097695.
18. Li, C.; Bai, J.; Hager, G.D. A unified framework for multi-view multi-class object pose estimation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 254–269.
19. Wada, K.; Sucar, E.; James, S.; Lenton, D.; Davison, A.J. Morefusion: Multi-object reasoning for 6d pose estimation from volumetric fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 14540–14549.
20. Gao, F.; Sun, Q.; Li, S.; Li, W.; Li, Y.; Yu, J.; Shuang, F. Efficient 6D object pose estimation based on attentive multi-scale contextual information. IET Comput. Vis. 2022, 16, 596–606.
21. Zuo, L.; Xie, L.; Pan, H.; Wang, Z. A Lightweight Two-End Feature Fusion Network for Object 6D Pose Estimation. Machines 2022, 10, 254.
22. Wang, C.; Xu, D.; Zhu, Y.; Martín-Martín, R.; Lu, C.; Fei-Fei, L.; Savarese, S. DenseFusion: 6d object pose estimation by iterative dense fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3343–3352.
23. Xiang, Y.; Schmidt, T.; Narayanan, V.; Fox, D. Posecnn: A convolutional neural network for 6d object pose estimation in cluttered scenes. arXiv 2017, arXiv:1711.00199.
24. Hinterstoisser, S.; Cagniart, C.; Ilic, S.; Sturm, P.; Navab, N.; Fua, P.; Lepetit, V. Gradient response maps for real-time detection of textureless objects. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 876–888.
25. Kehl, W.; Manhardt, F.; Tombari, F.; Ilic, S.; Navab, N. Ssd-6d: Making rgb-based 3d detection and 6d pose estimation great again. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1521–1529.
26. Peng, S.; Liu, Y.; Huang, Q.; Zhou, X.; Bao, H. Pvnet: Pixel-wise voting network for 6dof pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4561–4570.
27. Periyasamy, A.S.; Amini, A.; Tsaturyan, V.; Behnke, S. YOLOPose V2: Understanding and improving transformer-based 6D pose estimation. Robot. Auton. Syst. 2023, 168, 104490.
28. Geng, X.; Shi, F.; Cheng, X.; Jia, C.; Wang, M.; Chen, S.; Dai, H. SANet: A novel segmented attention mechanism and multi-level information fusion network for 6D object pose estimation. Comput. Commun. 2023, 207, 19–26.
29. Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.
30. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the 2017 Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.
31. Song, S.; Xiao, J. Sliding shapes for 3d object detection in depth images. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part VI 13. Springer: Cham, Switzerland, 2014; pp. 634–651.
32. Song, S.; Xiao, J. Deep sliding shapes for amodal 3d object detection in rgb-d images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 808–816.
33. Zou, L.; Huang, Z.; Gu, N.; Wang, G. Learning geometric consistency and discrepancy for category-level 6D object pose estimation from point clouds. Pattern Recognit. 2023, 145, 109896.
34. Kehl, W.; Milletari, F.; Tombari, F.; Ilic, S.; Navab, N. Deep learning of local rgb-d patches for 3d object detection and 6d pose estimation. In Proceedings of the 14th European Conference on Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part III 14. Springer: Cham, Switzerland, 2016; pp. 205–220.
35. Xu, D.; Anguelov, D.; Jain, A. PointFusion: Deep sensor fusion for 3d bounding box estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 244–253.
36. He, Y.; Sun, W.; Huang, H.; Liu, J.; Fan, H.; Sun, J. Pvn3d: A deep point-wise 3d keypoints voting network for 6dof pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11632–11641.
37. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.
38. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.
39. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.
40. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.
41. Ioannou, Y.; Robertson, D.; Cipolla, R.; Criminisi, A. Deep roots: Improving cnn efficiency with hierarchical filter groups. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1231–1240.
42. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.
43. Hu, Q.; Yang, B.; Xie, L.; Rosa, S.; Guo, Y.; Wang, Z.; Trigoni, N.; Markham, A. Randla-net: Efficient semantic segmentation of large-scale point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11108–11117.

| Methods | Traditional Methods | Deep Learning-Based Methods |
|---|---|---|
| RGB data-based methods | LineMOD [24] | SSD-6D [25], PoseCNN [23], PVNet [26], YOLOPose V2 [27], SANet [28] |
| RGBD data-based methods | [1,14,15] | DenseFusion [22], PointFusion [35], PVN3D [36], our method |

| Object | PoseCNN + ICP | PVNet | SSD-6D + ICP | PointFusion | DenseFusion | Ours (Inter) | Ours (Inter + Cross) | Ours (Inter + Cross + Weight) |
|---|---|---|---|---|---|---|---|---|
| ape | 77.0 | 43.6 | 65.0 | 70.4 | 92.3 | 88.2 | 89.1 | 95.8 |
| ben. | 97.5 | 99.9 | 80.0 | 80.7 | 93.2 | 91.5 | 93.5 | 94.1 |
| cam. | 93.5 | 86.9 | 78.0 | 60.8 | 94.4 | 93.4 | 96.3 | 97.6 |
| can | 96.5 | 95.5 | 86.0 | 61.1 | 93.1 | 93.8 | 97.0 | 97.7 |
| cat | 82.1 | 79.3 | 70.0 | 79.1 | 96.5 | 94.4 | 96.4 | 97.6 |
| driller | 95.0 | 96.4 | 73.0 | 47.3 | 87.0 | 91.6 | 93.8 | 94.8 |
| duck | 77.7 | 52.6 | 66.0 | 63.0 | 92.3 | 89.4 | 89.4 | 95.0 |
| egg | 97.1 | 99.2 | 100.0 | 99.9 | 99.8 | 97.3 | 99.8 | 100.0 |
| glue | 99.4 | 95.7 | 100.0 | 99.3 | 100.0 | 99.1 | 99.7 | 100.0 |
| hole | 52.8 | 82.0 | 49.0 | 71.8 | 92.1 | 89.3 | 93.2 | 95.2 |
| iron | 98.3 | 98.9 | 78.0 | 83.2 | 97.0 | 95.7 | 97.2 | 98.0 |
| lamp | 97.5 | 99.3 | 73.0 | 62.3 | 95.3 | 94.2 | 97.6 | 97.2 |
| phone | 87.7 | 92.4 | 79.0 | 78.8 | 92.8 | 93.7 | 96.5 | 97.2 |
| MEAN | 88.6 | 86.3 | 76.7 | 73.7 | 94.3 | 93.2 | 95.3 | 96.9 |
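The LineMOD table does not name its metric in this excerpt. On LineMOD, the usual criterion (used, for example, by DenseFusion [22]) is ADD, or ADD-S for symmetric objects such as eggbox and glue, and a pose counts as correct when the error is below 10% of the object diameter. Assuming that criterion, here is a minimal NumPy sketch of both error measures; the helper names are hypothetical.

```python
import numpy as np


def transform(points: np.ndarray, R: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Apply a rigid pose x -> R x + t to an (N, 3) array of model points."""
    return points @ R.T + t


def add_error(model, R_gt, t_gt, R_pred, t_pred) -> float:
    """ADD: mean distance between corresponding model points under the two poses."""
    diff = transform(model, R_gt, t_gt) - transform(model, R_pred, t_pred)
    return float(np.linalg.norm(diff, axis=1).mean())


def adds_error(model, R_gt, t_gt, R_pred, t_pred) -> float:
    """ADD-S: mean distance from each ground-truth point to the closest predicted point."""
    gt = transform(model, R_gt, t_gt)
    pred = transform(model, R_pred, t_pred)
    d = np.linalg.norm(gt[:, None, :] - pred[None, :, :], axis=-1)  # (N, N)
    return float(d.min(axis=1).mean())


def diameter(model: np.ndarray) -> float:
    """Largest distance between any two model points."""
    return float(np.linalg.norm(model[:, None, :] - model[None, :, :], axis=-1).max())


def is_correct(error: float, diam: float, ratio: float = 0.1) -> bool:
    """Accept the pose if the error is below ratio * diameter (10% by default)."""
    return error < ratio * diam


# Corners of a cube centered at the origin: a 90-degree turn about z maps it onto itself.
cube = np.array([[x, y, z] for x in (-1, 1) for y in (-1, 1) for z in (-1, 1)], dtype=float)
I, t0 = np.eye(3), np.zeros(3)
Rz90 = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
print(add_error(cube, I, t0, Rz90, t0))   # 2.0: penalized by ADD
print(adds_error(cube, I, t0, Rz90, t0))  # 0.0: accepted by ADD-S
```

The cube example shows why symmetric objects need ADD-S. The rotated prediction is visually indistinguishable from the ground truth, yet ADD reports a large error.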

| Object | PoseCNN + ICP AUC | PoseCNN + ICP <2 cm | DenseFusion AUC | DenseFusion <2 cm | YOLOPose V2 AUC | YOLOPose V2 <2 cm | Ours (Inter) AUC | Ours (Inter) <2 cm | Ours (Inter + Cross) AUC | Ours (Inter + Cross) <2 cm | Ours (Inter + Cross + Weight) AUC | Ours (Inter + Cross + Weight) <2 cm |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| master_chef_can | 95.8 | 100.0 | 95.2 | 100.0 | 91.3 | - | 94.3 | 100.0 | 95.6 | 100.0 | 96.1 | 100.0 |
| cracker_box | 92.7 | 91.6 | 92.5 | 99.3 | 86.8 | - | 92.2 | 95.3 | 93.8 | 98.5 | 95.2 | 99.6 |
| sugar_box | 98.2 | 100.0 | 95.1 | 100.0 | 92.6 | - | 98.7 | 100.0 | 99.4 | 100.0 | 100.0 | 100.0 |
| tomato_soup_can | 94.5 | 96.9 | 93.7 | 96.9 | 90.5 | - | 93.9 | 95.7 | 95.1 | 97.1 | 95.7 | 97.5 |
| mustard_bottle | 98.6 | 100.0 | 95.9 | 100.0 | 93.6 | - | 97.1 | 100.0 | 97.5 | 100.0 | 98.2 | 100.0 |
| tuna_fish_can | 97.1 | 100.0 | 94.9 | 100.0 | 94.3 | - | 94.8 | 99.0 | 96.6 | 100.0 | 98.4 | 100.0 |
| pudding_box | 97.9 | 100.0 | 94.7 | 100.0 | 92.3 | - | 93.3 | 93.9 | 97.6 | 100.0 | 98.3 | 100.0 |
| gelatin_box | 98.8 | 100.0 | 95.8 | 100.0 | 90.1 | - | 99.1 | 100.0 | 99.3 | 100.0 | 99.4 | 100.0 |
| potted_meat_can | 92.7 | 93.6 | 90.1 | 93.1 | 85.8 | - | 91.6 | 90.8 | 92.9 | 92.3 | 93.7 | 94.6 |
| banana | 97.1 | 99.7 | 91.5 | 93.9 | 95.0 | - | 91.1 | 93.4 | 94.2 | 99.4 | 98.1 | 99.7 |
| pitcher_base | 97.8 | 100.0 | 94.6 | 100.0 | 93.6 | - | 95.2 | 100.0 | 96.8 | 100.0 | 97.1 | 100.0 |
| bleach_cleanser | 96.9 | 99.4 | 94.3 | 99.8 | 85.3 | - | 86.5 | 97.8 | 94.2 | 98.4 | 98.6 | 99.7 |
| bowl | 81.0 | 54.9 | 86.6 | 69.5 | 92.3 | - | 83.8 | 53.2 | 86.7 | 70.3 | 87.2 | 73.9 |
| mug | 95.0 | 99.8 | 95.5 | 100.0 | 84.9 | - | 90.5 | 98.2 | 93.8 | 99.1 | 95.1 | 99.5 |
| power_drill | 98.2 | 99.6 | 92.4 | 97.1 | 92.6 | - | 94.8 | 97.4 | 96.6 | 97.8 | 96.9 | 98.3 |
| wood_block | 87.6 | 80.2 | 85.5 | 93.4 | 84.3 | - | 84.3 | 81.3 | 86.1 | 83.2 | 88.7 | 83.9 |
| scissors | 91.7 | 95.6 | 96.4 | 100.0 | 93.3 | - | 92.8 | 94.7 | 95.6 | 96.3 | 97.4 | 99.4 |
| large_marker | 97.2 | 99.7 | 94.7 | 99.2 | 84.9 | - | 88.6 | 96.7 | 95.4 | 98.7 | 96.2 | 99.0 |
| large_clamp | 75.2 | 74.9 | 71.6 | 78.5 | 92.0 | - | 73.2 | 68.3 | 78.2 | 74.8 | 80.3 | 76.9 |
| extra_large_clamp | 64.4 | 48.8 | 69.0 | 69.5 | 88.9 | - | 62.3 | 62.5 | 67.4 | 65.7 | 74.7 | 67.4 |
| foam_brick | 97.2 | 100.0 | 92.4 | 100.0 | 90.7 | - | 93.3 | 99.6 | 95.4 | 100.0 | 96.8 | 100.0 |
| MEAN | 93.0 | 93.2 | 91.2 | 95.3 | 90.1 | - | 90.5 | 93.5 | 93.4 | 95.8 | 94.7 | 96.5 |
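The YCB-Video table reports two numbers per method: "AUC" and "<2 cm". In PoseCNN [23] and DenseFusion [22], these are the area under the ADD-S accuracy-threshold curve (threshold varied from 0 to 10 cm) and the percentage of poses whose ADD-S error is below 2 cm. Assuming the same definitions here, both can be computed from per-frame errors. The sketch below uses the exact continuous area, in which each error e contributes max(0, 1 − e/T). Official toolkits discretize the thresholds, so their values may differ slightly. The function names are hypothetical.

```python
import numpy as np


def auc_percent(errors_m, max_threshold_m=0.10) -> float:
    """Area under the accuracy-vs-threshold curve on [0, T], normalized to a percentage.

    Accuracy(th) is the fraction of errors below th, a step function, so its integral
    over [0, T] is the mean of max(0, T - e); dividing by T normalizes it to [0, 1].
    """
    e = np.asarray(errors_m, dtype=float)
    return float(np.clip(1.0 - e / max_threshold_m, 0.0, None).mean() * 100.0)


def below_threshold_percent(errors_m, threshold_m=0.02) -> float:
    """Percentage of poses whose error is below the threshold (2 cm by default)."""
    e = np.asarray(errors_m, dtype=float)
    return float((e < threshold_m).mean() * 100.0)


errors = [0.0, 0.01, 0.05, 0.2]               # per-frame ADD-S errors in meters
print(round(auc_percent(errors), 6))          # 60.0
print(below_threshold_percent(errors))        # 50.0
```

Errors beyond 10 cm contribute zero to the AUC rather than a negative amount. This is why a few gross failures, as on the clamps, lower the mean without dominating it.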

| Noise Range (mm) | 0.0 | 5.0 | 10.0 | 15.0 | 20.0 |
|---|---|---|---|---|---|
| Accuracy | 98.3% | 96.3% | 95.6% | 95.1% | 93.8% |
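The noise experiment reports how accuracy degrades as the noise range grows from 0 to 20 mm. This excerpt does not state how the noise is injected. One plausible setup, assumed here and not taken from the paper, works in two steps. First, back-project the depth map with pinhole intrinsics. Second, add independent uniform noise in [−r, r] mm to every coordinate. The intrinsics and function names below are hypothetical.

```python
import numpy as np


def backproject(depth_mm: np.ndarray, fx: float, fy: float, cx: float, cy: float) -> np.ndarray:
    """Pinhole back-projection of a depth map (mm) to an (N, 3) point cloud in mm."""
    v, u = np.nonzero(depth_mm > 0)          # rows (v) and columns (u) of valid pixels
    z = depth_mm[v, u].astype(float)
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=1)


def add_uniform_noise(points_mm: np.ndarray, noise_range_mm: float,
                      rng: np.random.Generator) -> np.ndarray:
    """Offset every coordinate by an independent U(-r, r) sample, r = noise_range_mm."""
    if noise_range_mm == 0.0:
        return points_mm.copy()              # 0 mm column of the table: clean input
    return points_mm + rng.uniform(-noise_range_mm, noise_range_mm, size=points_mm.shape)


rng = np.random.default_rng(0)
depth = np.array([[1000, 0], [0, 2000]])     # toy 2x2 depth map in mm; 0 = missing depth
cloud = backproject(depth, fx=100.0, fy=100.0, cx=0.0, cy=0.0)
print(cloud.tolist())  # [[0.0, 0.0, 1000.0], [20.0, 20.0, 2000.0]]
noisy = add_uniform_noise(cloud, 10.0, rng)  # one of the ranges listed in the table
```

Gaussian noise with a standard deviation tied to r would be an equally reasonable reading of "noise range". The results of such an experiment depend on which model is used.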

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jiang, M.; Zhang, L.; Wang, X.; Li, S.; Jiao, Y. 6D Object Pose Estimation Based on Cross-Modality Feature Fusion. Sensors 2023, 23, 8088. https://doi.org/10.3390/s23198088

Jiang M, Zhang L, Wang X, Li S, Jiao Y. 6D Object Pose Estimation Based on Cross-Modality Feature Fusion. Sensors. 2023; 23(19):8088. https://doi.org/10.3390/s23198088

Chicago/Turabian Style: Jiang, Meng, Liming Zhang, Xiaohua Wang, Shuang Li, and Yijie Jiao. 2023. "6D Object Pose Estimation Based on Cross-Modality Feature Fusion" Sensors 23, no. 19: 8088. https://doi.org/10.3390/s23198088

APA Style: Jiang, M., Zhang, L., Wang, X., Li, S., & Jiao, Y. (2023). 6D Object Pose Estimation Based on Cross-Modality Feature Fusion. Sensors, 23(19), 8088. https://doi.org/10.3390/s23198088