Abstract

Outliers can be generated in the power system due to aging system equipment, faulty sensors, incorrect line connections, etc. The existence of these outliers will pose a threat to the safe operation of the power system, reduce the quality of the data, affect the completeness and accuracy of the data, and thus affect the monitoring analysis and control of the power system. Therefore, timely identification and treatment of outliers are essential to ensure stable and reliable operation of the power system. In this paper, we consider the problem of detecting and localizing outliers in power systems. The paper proposes a Minorization–Maximization (MM) algorithm for outlier detection and localization and an estimation of unknown parameters of the Gaussian mixture model (GMM). To verify the performance of the method, we conduct simulation experiments by simulating different test scenarios in the IEEE 14-bus system. Numerical examples show that in the presence of outliers, the MM algorithm can detect outliers better than the traditional algorithm and can accurately locate outliers with a probability of more than . Therefore, the algorithm provides an effective method for the handling of outliers in the power system, which helps to improve the monitoring analyzing and controlling ability of the power system and to ensure the stable and reliable operation of the power system.

1. Introduction

With the rapid development of the power industry around the world, the scale of the power system has gradually expanded, and its operation mode and network structure have become more complex. The combination of power grids and modern information forms smart grids, which pose greater challenges to the safety and reliability of power system operations. In order to improve the operational efficiency and reliability of the power grid, the dispatching system needs to collect complete and reliable real-time data for processing to facilitate online analysis and decision control of advanced application software.

Power system state estimation is one of the core functions of the Energy Management System (EMS) in the power system dispatching center [1,2,3]. Its function refers to the control center to collect various measurement data through sensors and to estimate the current operating state of the power system according to the measurement data. The safe and economical operation of modern power grids depends on the EMS. The many functions of the Energy Management System can be divided into two parts: online application for real-time change analysis of the power grid and offline application for typical power flow section analysis. Power system state estimation is the core of power system online monitoring, analysis, and control functions and plays an important role in the intelligent analysis of power grid dispatching. State estimation is the basis of most advanced software for online applications, and the accuracy of the state estimation results is closely related to the accuracy of the subsequent analysis calculation results. Due to the continuous improvement in the automation of the power system, the quality requirements for real-time measurement data in state estimation are becoming higher and higher. In addition to the measurement noise, there may be outliers in the obtained measurement data. Outliers [4,5,6] can be caused by a variety of factors. The following are some common causes of outliers:

- (1)

- Sensors can malfunction or fail, resulting in inaccurate or incomplete data collection, resulting in outliers.

- (2)

- Human factors, such as incorrect data entry, operational errors, etc., may cause data anomalies.

- (3)

- Natural disasters and emergencies, such as storms, earthquakes, fires, explosions, etc., may cause damage to electric power facilities and thus generate outliers.

- (4)

- Factors such as power equipment failure, damage, data transmission, and processing errors can lead to outliers.

The existence of outliers will cause the state estimation results to deviate significantly from the actual operation of the system, and the performance of the estimator will be seriously degraded, so the work of outlier detection becomes particularly important.

To solve the problem of outliers in measurement data, the traditional detection methods include weighted least squares (WLS) [7,8,9], residual search method [10,11], and non-quadratic criterion method [12,13]. These methods are further elaborated on below. The basic idea of the WLS is to adjust the weights of measurements by assigning larger weights to relatively reliable and accurate measurements and smaller weights to measurements that are relatively unreliable and affected by outliers. By adjusting the weights, the WLS algorithm can reduce the effect of outliers on the fitted results and improve the accuracy of the parameter estimates. However, it is important to note that the WLS algorithm has some limitations when dealing with outliers. When the number of outliers is high or the difference between the outliers and normal values is large, the WLS algorithm may not be able to eliminate the effect of the outliers. The residual search method uses weighted residuals and standard residuals to sort the measurement data. After eliminating the data with large residuals, the state estimation is re-estimated to achieve the purpose of optimal estimation. However, the disadvantage of this method is that the calculation amount is large, and it is easy to have residual pollution and residual submergence, resulting in false detection or missed detection. The strategy adopted by the non-quadratic criterion method is to successively reduce the weight of suspicious data, rather than eliminating suspicious data one by one. Re-estimation is avoided to reduce the amount of calculation. However, this may lead to difficult consequences of convergence, and there is no guarantee that the final estimate will be optimal. In summary, traditional outlier detection algorithms have certain limitations in addressing outlier problems. Therefore, new methods have been proposed to solve the problem of the presence of outliers.

In this paper, the Minorization–Maximization (MM) algorithm [14,15,16,17,18,19,20] is used to detect outliers. Compared with traditional methods for detecting outliers, the MM algorithm can detect outliers more accurately, especially for complex data distributions, and can obtain better performance. The MM algorithm is an optimization algorithm whose basic idea is to optimize the original function to be optimized indirectly by optimizing the alternative function by finding an easier function to be optimized. In each iteration, the algorithm optimizes the original function by transforming the original problem into a more tractable problem through a series of derivations and transformations. The algorithm first appeared in the field of online search in the 1970s [21], when Ortega and Rheinboldt first mentioned the MM principle [22]. Later, de Leeuw proposed the first MM algorithm in a multidimensional scale analysis, and Hunter and Lange named it the MM algorithm [23], which has since been widely used in statistics. MM algorithms are an important tool in optimization problems because of their conceptual simplicity, ease of implementation, and numerical stability. Moreover, MM algorithms are provably convergent optimization algorithms that are guaranteed to find either a globally optimal solution or a locally optimal solution under certain conditions. Overall, the MM algorithm is a very important optimization algorithm with a wide range of application scenarios and excellent properties that can effectively solve many practical problems.

This paper is divided into six main sections. First, in Section 2, the normal measurement model and the measurement model containing outliers are described. Then, Section 3 introduces the basic principles of the MM algorithm, including the iterative process of the MM algorithm, the objective function, and its optimization methods. In Section 4, this paper further introduces the parameter estimation based on the MM algorithm, including the application of the MM algorithm in anomaly detection and the specific implementation method of parameter estimation. In Section 5, the convergence and complexity of the algorithm are analyzed. In Section 6, the simulation results analysis of this paper are given, and the superiority and feasibility of the anomaly detection method based on the MM algorithm are proved through a detailed analysis of the experimental results. Finally, in Section 7, the paper is summarized.

2. System Model

2.1. Measurement Model

The measurement vector is a nonlinear function [24,25] of the state vector and can be expressed as

where is the measurement error vector, and is assumed to be the Gaussian measurement noise with zero mean and covariance , i.e., . , is a nonlinear function relating the ith measurement to the state vector . An alternative expression for the nonlinear relationship between the state vector and the measurement vector can be given by the following:

In this expression, and denote the active and reactive power injection at bus i, respectively; and denote the real and reactive power flow from bus i to bus j, respectively; represents the voltage at bus i; denotes the phase angle at bus i; denotes the phase difference between buses i and j; represents the line conductance between buses i and j; represents the conductance of the branch branch of bus i; and is the set of buses associated with bus i.

In power system SE, the state vector, , is usually composed of all nodes voltage amplitudes and their corresponding angles. The measurement vector, , consists of active and reactive power injection and flow, voltage, and current magnitudes obtained from SCADA.

The estimation of the state vector can be iteratively solved using weighted least squares (WLS); then,

where is the solution vector at the kth iteration; is the measurement error covariance matrix; and is the measurement Jacobian matrix, that is

The weighted least squares iterative algorithm is a common method that is used to estimate state vectors.

2.2. Measurement Model with Outliers

If the observations completely conform to the measurement model in (1), the estimation can be obtained using WLS. However, when a few outliers exist in the measurement, the estimation performance may be seriously degraded. Outliers are deviations or anomalies in data that can be caused by a variety of factors, including sensor failures, communication or human errors, power equipment failures, damages, and errors in data transmission or processing. In the presence of outliers, the observations do not follow the model in (1) exactly, but they can obey the following model:

where the vector denotes the outliers contained in the observations.

Traditional outlier detection methods usually use normalized residuals obtained based on weighted least squares to determine the presence of outliers in the data. However, this method has limitations in facing residual contamination and residual flooding problems. To overcome these problems, some improved outlier detection methods can be considered.

2.3. Gaussian Mixture Model for Measurements

Suppose that there are S outliers for M measurements of the measurement vector z. In the measurement acquisition process, we perform outlier detection by acquiring measurement vectors to determine whether there are outliers in the measurements. In the presence of outliers, the noise characteristics of the data are altered. Therefore, the ith measurement of the lth measurement vector is denoted as follows:

where and obey the Gaussian distribution, respectively, i.e., and . denotes the measurement error vector in the absence of outliers. denotes the measurement error vector in the presence of outliers. Hence, the Gaussian mixture model (GMM) [26,27,28] is introduced to represent the probability density of , and is given by

where denotes the kth component in the mixture model and is the mixture coefficient and satisfies

It is assumed that M represents the number of measurements and S represents the number of outliers, hence , . The parameters that need to be estimated in GMM are . Then, the objective function of can be written as

When unknown parameters are estimated, we cannot use the maximum likelihood method to derive the parameters that maximize the likelihood function as the single Gaussian model (SGM) does. To solve this problem, the Minorization–Maximization (MM) algorithm can be used. Through the MM algorithm, we can iteratively calculate the parameters in GMM.

3. The Minorization–Maximization Algorithm

For all observation data, it is not known in advance which sub-distribution they belong to. Each submodel has unknown parameters, and direct derivation cannot be calculated. It needs to be solved with an iterative approach. Therefore, the Minorization–Maximization algorithm can be used to solve the problem that unknown parameters are difficult to solve.

The MM algorithm is an iterative approach. The basic idea is to find a function that is easier to optimize as a surrogate function for the objective function and to indirectly optimize the objective function by optimizing the surrogate function. In each iteration, a new alternative function is constructed based on the parameter estimates obtained from the previous iteration. The new substitution function is then optimized to obtain the parameter estimate in this iteration and to use it in the calculation of the next iteration. Through continuous iteration, the estimated value of the parameter constantly approaches the optimal solution of the objective function.

According to the basic idea of MM algorithm, the surrogate function should satisfy

where denotes the sth iteration of and the surrogate function is always below the objective function . When , the surrogate function is tangent to the function . Then, the surrogate function is maximized to obtain

as the th iteration of the . Finally, the maximum likelihood algorithm is used to estimate the unknown parameters.

From the basic principles of the MM algorithm, the difficulty of using the MM algorithm to estimate unknown parameters is in constructing a suitable function as a surrogate function of the objective function. The construction of the surrogate function is described in the next section.

4. Parameter Estimation of GMM

Constructing a suitable surrogate function is the key to estimating unknown parameters using the MM algorithm. Tian et al. [17] propose a new Assembly and Decomposition method (AD) to construct the surrogate function. Among them, technology is the basis of the MM algorithm, which guides the construction of the surrogate function. The D technique decomposes the objective function and then optimizes it.

4.1. Construction of Surrogate Function

According to the AD method, Jensen’s inequality is used to construct a surrogate function in the minorization step. Jensen’s inequality is given by the Equation (10), that is,

where we let be a concave function, , and . Therefore, the surrogate function is expressed as

where represents the sth iteration of and the weight function is denoted as

where

Define . By comparing the values of , the following weight function is given.

The weight function satisfies

and

is a constant term independent of parameter .

4.2. Parameter Estimation of , , and

In the th iteration, the maximum likelihood estimation (MLE) of parameter can be constructed by maximizing the surrogate function, that is

The expansion of Equation (24) can be written as

The partial derivative of the surrogate function with respect to unknown parameters can be obtained

and an iterative formula for the parameters can be obtained by solving the above equation, as follows:

Then, The detailed workflow for estimating GMM parameters based on MM algorithm is shown in Algorithm 1.

| Algorithm 1 GMM parameters are estimated based on the MM algorithm |

Input: The measurements ;

|

5. Algorithm Analysis

5.1. Convergence Analysis

Since the MM algorithm is essentially iterative, the following inference is derived from the question of whether this algorithm can guarantee convergence [29].

The convergence of the algorithm refers to the monotonic convergence of the MM algorithm to some stationary point of the log-likelihood function . To prove this inference, we first need to prove the detailed workflow of the MM algorithm shown below.

holds for any in its parameter space. The proof is as follows: For a given a priori position estimate , it is easy to show that updates , , , , and for the Gaussian distribution are global optimal solutions to the corresponding maxi- mization problems. Therefore, we can easily conclude that

where the right-hand side is exactly .

The new estimate is obtained by minimizing

using the BFGS quasi-Newton method with an initial guess set of . The BFGS quasi-Newton method [30,31,32] guarantees downhill progression toward the local minimum in the Newton step of each iteration, so that the new estimate does not make decrease in the th iteration, that is

where the left-hand side is identical to . Thus, (36) can be proved. This means that the value of increases monotonically with iteration. Since it is bounded from above, convergence to some stationary point of the is guaranteed.

5.2. Complexity Analysis

Complexity evaluation is often assessed using floating-point operations (FLOPs) as a metric. Floating point arithmetic is a common metric for measuring the amount of computation required to execute an algorithm. It can be used to compare the computational complexity and efficiency of different algorithms. The computational complexity of the MM algorithm under the Gaussian mixture model is shown as follows. We will first define the floating point operations required for some basic operations.

- (1)

- : FLOPs for addition.

- (2)

- : FLOPs for subtraction.

- (3)

- : FLOPs for multiplication.

- (4)

- : FLOPs for division.

- (5)

- : FLOPs for exponents.

- (6)

- : FLOPs for raising to a real power.

- (7)

- : FLOPs for square roots.

Since the MM algorithm is an iterative algorithm, we analyze the th iteration. Given a priori parameter estimation, the first step of the estimation is to evaluate the of and . This requires a calculation:

for .

for .

for . Thus, Equation (37) requires FLOPs, Equation (38) requires FLOPs, and Equation (39) requires FLOPs. Then,

for . It is easy to prove that Equation (40) requires FLOPs, Equation (41) requires FLOPs, and Equation (42) requires FLOPs. Define as the total number of FLOPS consumed in one MM iteration to estimate . is equal to the total number of FLOPs consumed in Equation (37) through Equation (42), that is

6. Simulation

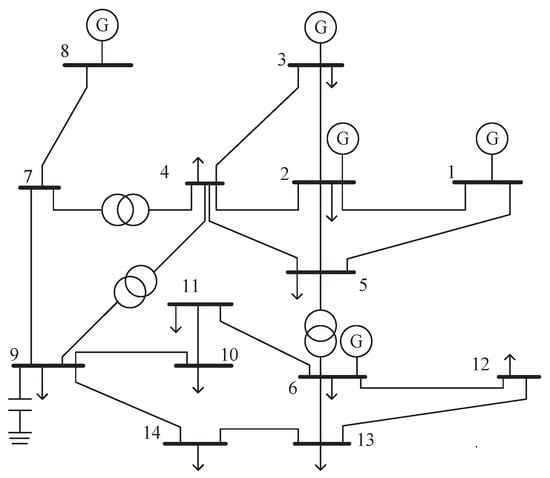

To validate the feasibility of using the MM algorithm to detect outliers, this paper conducts a simulation analysis on the IEEE 14-bus system shown in Figure 1. Based on the relevant data in the Matpower power system simulation package, a conventional power flow calculation is performed, and the obtained system operation data are used as the measurement data of the power system. The simulation parameters utilized throughout the entire simulation process are summarized in Table 1, and the obtained experimental results are shown as follows.

Figure 1.

IEEE 14-bus system.

Table 1.

Simulation parameters.

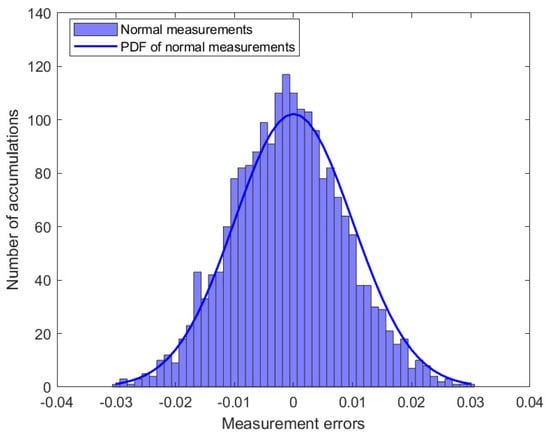

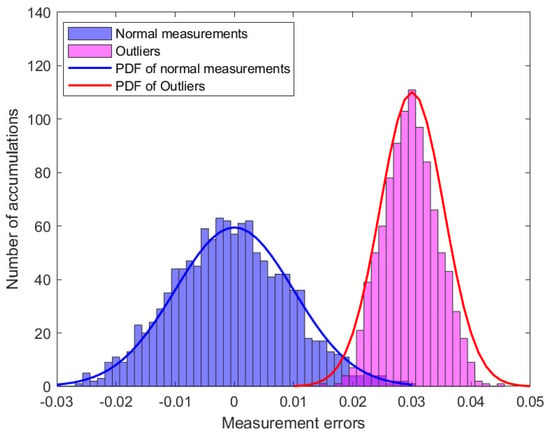

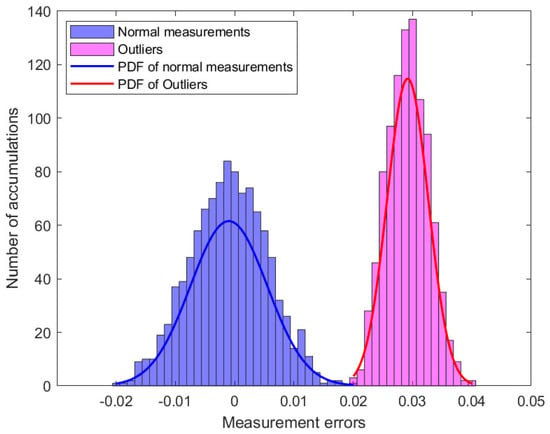

In this study, simulation experiments containing 50 measurement vectors were analyzed. Figure 2 shows the distribution of the 2050 measurement errors. When there are outliers in the power system, part of the measurement error will change, assuming , where denotes the component of the outlier vector . Figure 3 shows the distribution of the measurement errors in the presence of outliers. Subsequently, the MM algorithm was used to classify the measurement errors and is presented in Figure 4.

Figure 2.

The distribution of measurements errors.

Figure 3.

The distribution of measurement errors in the presence of outliers.

Figure 4.

The distribution of measurements errors with MM algorithm.

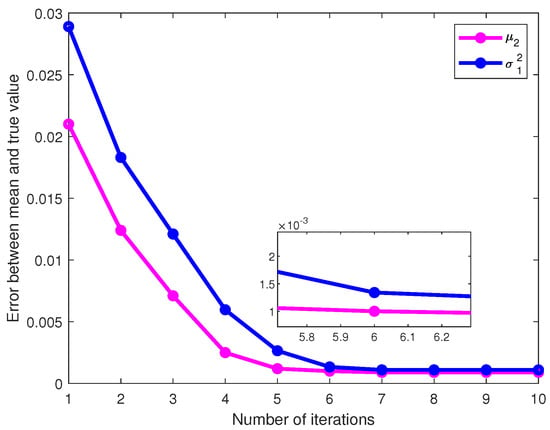

To verify the convergence of the algorithm, it is assumed that M = 41, S = 8, and L = 50. The Monte Carlo method was used to perform 1000 independent experiments to detect the values of parameters . The errors between the mean of the estimated values and the actual values were calculated for each parameter. As shown in Figure 5, as the number of iterations increases, the estimated mean values of the parameters and gradually approach the true values, the error decreases, and the values finally converged to 0.00102 and 0.00134 at the sixth iteration, respectively. And, a variation in these errors was observed as the number of buses with outliers increased. The error between the mean and true values of the parameter estimates obtained from 1000 independent experiments was calculated. The equations are given by

and

Figure 5.

The error of the parameter varies with the number of iterations.

Since , and , then the error for can be expressed as

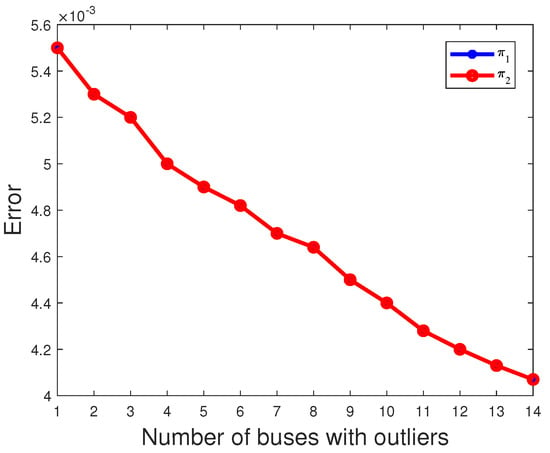

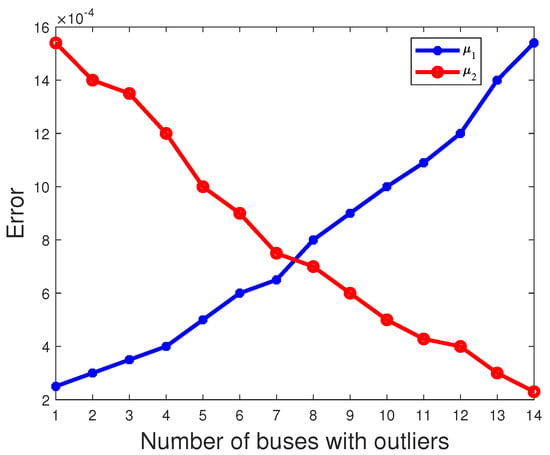

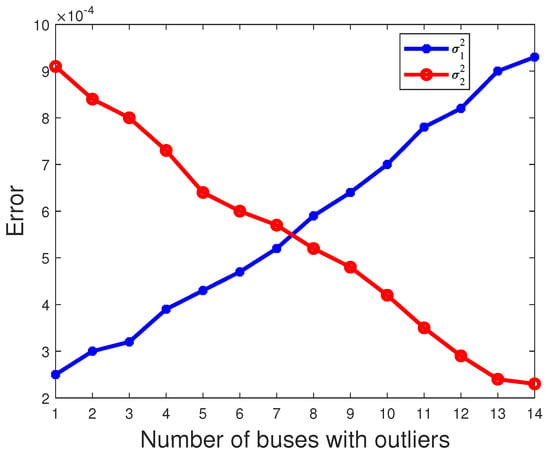

where N = 1000 and denotes the estimate of obtained in the nth experiment. The estimated mean is then obtained by summing over 1000 independent experiments and dividing by N. And, due to , the red line coincides with the blue line in Figure 6. As shown in Figure 6, Figure 7 and Figure 8, it is evident that as the number of buses with outliers gradually increases, we clearly observe a gradual decrease in the error between the estimated mean and the actual values of the parameters , and . This is due to the increasing number of outliers as a proportion of the overall measurements. In this scenario, the estimated mean values of the parameters , , and gradually increase, getting closer to the true values, which leads to a gradual decrease in the error. In other words, as the number of outlier buses increases, we observe a significant improvement in the accuracy of the parameter estimates.

Figure 6.

The error of parameter varies with the number of buses with outliers.

Figure 7.

The error of parameter varies with the number of buses with outliers.

Figure 8.

The error of parameter varies with the number of buses with outliers.

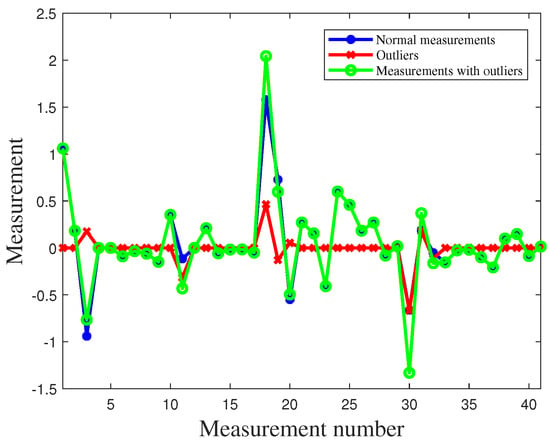

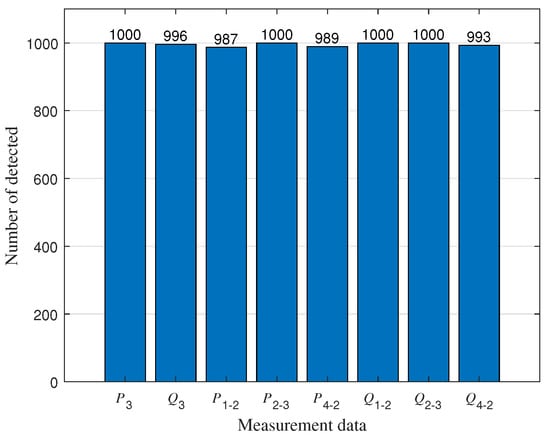

As shown in Figure 9, we assume that outliers exist in the power measurements of , , , , , , , and among the 41 measurements. To detect outliers, we conducted 1000 independent experiments and plotted Figure 10, showing the results of outlier detection based on the experimental results. By observing Figure 10, we can notice that the measurements in ,,, and are closer to the normal measurements. As a result, a small amount of data has been mistakenly identified as normal data. After applying the MM algorithm for outlier detection, we achieved an extremely high detection rate of over . This implies that the vast majority of outlier values can be accurately detected.

Figure 9.

Data distribution before and after outliers in IEEE 14-bus system.

Figure 10.

Outlier detection results.

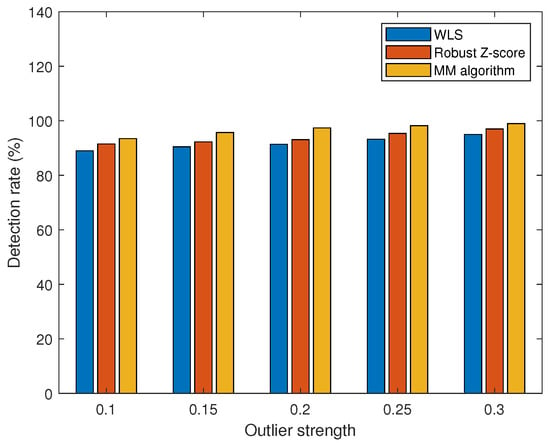

To validate the detection performance of the MM algorithm, WLS, and robust Z-score [33] for different outlier strengths, we use the detection rate as a performance metric. Outlier strength is a metric used to quantify the degree of deviation of the outliers from the normal measurements. The outlier strength is defined as

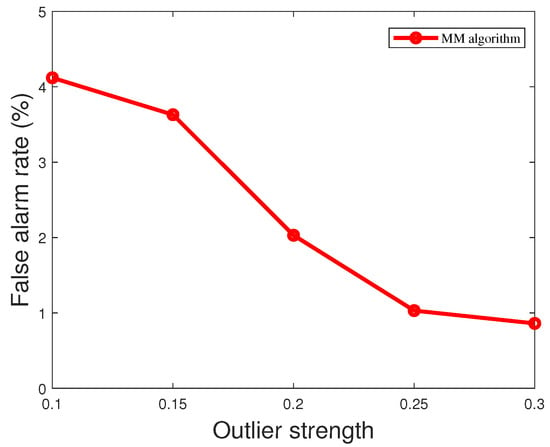

where is the outlier vector and is the measurement vector. When the outlier strength is greater, this means that the deviation of the outlier relative to the normal measurement is greater and may have a more significant effect on the system. As shown in Figure 11, when the outlier strength is low, the outliers are not easily detected. However, the MM algorithm proposed in this paper outperforms WLS and robust Z-score in terms of detection performance. This is due to the iterative optimization process of the MM algorithm, which can gradually approach the optimal solution and shows good convergence, stability, and robustness in practice. Since the MM algorithm uses probabilistic models, it is better able to deal with outliers in the data and to reduce the impact of outliers on the results. The MM algorithm can limit the impact of outliers and provide more accurate parameter estimation, resulting in better performance in outlier detection. In contrast, WLS may suffer from outliers, leading to biased parameter estimates. The robust Z-score is more robust to outliers relative to the traditional Z-score, but MM algorithms are usually better at robustness to outliers in the modeling process. As the strength of the outliers varies, the false alarm rate also varies. As shown in Figure 12, the greater the strength of the outliers, the easier the outliers are detected and the smaller the false alarm rate. When the strength of the outliers is greater than 0.3, the false alarm rate decreases to less than .

Figure 11.

Comparison of detection performance at different outlier strengths.

Figure 12.

False alarm rate of MM algorithm for different outlier strengths.

7. Conclusions

Considering the impact of the presence of outliers on security in power systems, the introduction of MM algorithms for outlier detection and localization is an effective approach. The algorithm clusters and models the measurement errors using GMM and solves the model parameters iteratively using the MM algorithm. The feasibility and convergence of the MM algorithm in power systems are investigated through a simulation analysis on an IEEE 14-bus system, and the performance is compared with the WLS and robust Z-score at the same outlier intensity. The experimental results show that the MM algorithm can efficiently identify outliers in the power system and its detection rate can reach . The results of this research emphasize the superiority of the MM algorithm in power system outlier detection. Compared with WLS and robust Z-score, the MM algorithm can model and detect outliers more accurately by introducing a probabilistic model and an iterative optimization algorithm to improve the security and reliability of the power system.

Author Contributions

Conceptualization, L.Q. and P.H.; methodology, Y.L. and W.G.; software, L.Q. and P.H.; validation, W.G.; formal analysis, F.H.; resources, W.G.; data curation, F.H.; writing—original draft preparation, L.Q.; writing—review and editing, W.G. and X.G.; visualization, L.Q. and P.H.; supervision, W.G.; project administration, Y.L.; funding acquisition, W.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (NSFC) (U21A20146), Open Research Fund of Anhui Province Key Laboratory of Detection Technology and Energy Saving Devices (JCKJ2022A10), Collaborative Innovation Project of Anhui Universities (GXXT-2020-070), and Open Research Fund of Anhui Province Key Laboratory of Electric Drive and Control (DQKJ202103).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We thank the anonymous reviewers for their valuable comments.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhao, J.; Gómez-Expósito, A.; Netto, M.; Mili, L.; Abur, A.; Terzija, V.; Kamwa, I.; Pal, B.; Singh, A.K.; Qi, J.; et al. Power system dynamic state estimation: Motivations, definitions, methodologies, and future work. IEEE Trans. Power Syst. 2019, 34, 3188–3198. [Google Scholar] [CrossRef]

- Kang, J.W.; Xie, L.; Choi, D.H. Impact of data quality in home energy management system on distribution system state estimation. IEEE Access 2018, 6, 11024–11037. [Google Scholar] [CrossRef]

- Wang, Y.; Tian, J.; Sun, Z.; Wang, L.; Xu, R.; Li, M.; Chen, Z. A comprehensive review of battery modeling and state estimation approaches for advanced battery management systems. Renew. Sustain. Energy 2020, 131, 110015. [Google Scholar] [CrossRef]

- Zhao, J.; Mili, L. A theoretical framework of robust H-infinity unscented Kalman filter and its application to power system dynamic state estimation. IEEE Trans. Signal Process. 2019, 67, 2734–2746. [Google Scholar] [CrossRef]

- Liu, S.; Zhao, Y.; Lin, Z.; Liu, Y.; Ding, Y.; Yang, L.; Yi, S. Data-driven event detection of power systems based on unequal-interval reduction of PMU data and local outlier factor. IEEE Trans. Smart Grid 2019, 11, 1630–1643. [Google Scholar] [CrossRef]

- Wei, S.; Xu, J.; Wu, Z.; Hu, Q.; Yu, X. A False Data Injection Attack Detection Strategy for Unbalanced Distribution Networks State Estimation. IEEE Trans. Smart Grid 2023, 14, 3992–4006. [Google Scholar] [CrossRef]

- Abdolkarimzadeh, M.; Aghdam, F.H. A novel and efficient power system state estimation algorithm based on Weighted Least Square (WLS) approach service. J. Power Technol. 2019, 99, 15–24. Available online: https://papers.itc.pw.edu.pl/index.php/JPT/article/view/1258 (accessed on 15 June 2023).

- Wang, L.; Zhou, Q.; Jin, S. Physics-guided deep learning for power system state estimation. J. Mod. Power Syst. Clean Energy 2020, 8, 607–615. [Google Scholar] [CrossRef]

- Kotha, S.K.; Rajpathak, B. Power system state estimation using non-iterative weighted least square method based on wide area measurements with maximum redundancy. Electr. Pow. Syst. Res. 2022, 206, 107794. [Google Scholar] [CrossRef]

- Tan, S.; Wu, W.; Shao, Z.; Li, Q.; Li, B.; Huang, J. CALPA-NET: Channel-pruning-assisted deep residual network for steganalysis of digital images. IEEE Trans. Inf. Forensics Secur. 2020, 16, 131–146. [Google Scholar] [CrossRef]

- Kirchgässner, W.; Wallscheid, O.; Böcker, J. Estimating electric motor temperatures with deep residual machine learning. IEEE Trans. Power Electron. 2020, 36, 7480–7488. [Google Scholar] [CrossRef]

- Bhatti, B.A.; Broadwater, R. Distributed Nash equilibrium seeking for a dynamic micro-grid energy trading game with non-quadratic payoffs. Energy 2020, 202, 117709. [Google Scholar] [CrossRef]

- Asadi, S.; Khayatian, A.; Dehghani, M.; Vafam, N.; Khooban, M.H. Robust sliding mode observer design for simultaneous fault reconstruction in perturbed Takagi-Sugeno fuzzy systems using non-quadratic stability analysis. J. Vib. Control 2020, 26, 1092–1105. [Google Scholar] [CrossRef]

- Sun, Y.; Babu, P.; Palomar, D.P. Majorization-minimization algorithms in signal processing, communications, and machine learning. IEEE Trans. Signal Process. 2016, 65, 794–816. [Google Scholar] [CrossRef]

- Arora, A.; Tsinos, C.G.; Rao, B.S.M.R.; Chatzinotas, S.; Ottersten, B. Hybrid transceivers design for large-scale antenna arrays using majorization-minimization algorithms. IEEE Trans. Signal Process. 2019, 68, 701–714. [Google Scholar] [CrossRef]

- Panwar, K.; Katwe, M.; Babu, P.; Ghare, P.; Singh, K. A majorization-minimization algorithm for hybrid TOA-RSS based localization in NLOS environment. IEEE Commun. Lett. 2022, 26, 1017–1021. [Google Scholar] [CrossRef]

- Tian, G.L.; Huang, X.F.; Xu, J. An assembly and decomposition approach for constructing separable minorizing functions in a class of MM algorithms. Stat. Sin. 2019, 29, 961–982. [Google Scholar] [CrossRef]

- Tian, G.L.; Ju, D.; Chuen Yuen, K.; Zhang, C. New expectation–maximization-type algorithms via stochastic representation for the analysis of truncated normal data with applications in biomedicine. Stat. Methods Med. Res. 2018, 27, 2459–2477. [Google Scholar] [CrossRef]

- Tavakoli, S.; Yooseph, S. Learning a mixture of microbial networks using minorization–maximization. Bioinformatics 2019, 35, i23–i30. [Google Scholar] [CrossRef]

- Shen, K.; Yu, W.; Zhao, L.; Palomar, D.P. Optimization of MIMO device-to-device networks via matrix fractional programming: A minorization–maximization approach. IEEE/ACM Trans. Netw. 2019, 27, 2164–2177. [Google Scholar] [CrossRef]

- Ortega, J.M.; Rheinboldt, W.C. Iterative Solution of Nonlinear Equations in Several Variables; Academic Press: New York, NY, USA, 1970. [Google Scholar]

- De Leeuw, J. Applications of Convex Analysis to Multidimensional Scaling. 2005. Available online: https://escholarship.org/uc/item/7wg0k7xq (accessed on 6 July 2023).

- Lange, K.; Hunter, D.R.; Yang, I. Optimization transfer using surrogate objective functions. J. Comput. Graph. Stat. 2000, 9, 1–20. Available online: https://www.tandfonline.com/doi/abs/10.1080/10618600.2000.10474858 (accessed on 3 July 2023).

- Zhao, J.; Mili, L. A framework for robust hybrid state estimation with unknown measurement noise statistics. IEEE Trans. Industr. Inform. 2017, 14, 1866–1875. [Google Scholar] [CrossRef]

- Zhang, T.; Wang, Y.; Ning, J.; Zhai, M. Detection and identification of bad data based on neural network and k-means clustering. In Proceedings of the IEEE Innovative Smart Grid Technologies-Asia (ISGT Asia), Chengdu, China, 21–24 May 2019; pp. 3634–3639. [Google Scholar] [CrossRef]

- Huang, T.; Peng, H.; Zhang, K. Model selection for Gaussian mixture models. Stat. Sin. 2017, 27, 147–169. Available online: https://www.jstor.org/stable/44114365 (accessed on 5 May 2023). [CrossRef]

- Hosseini, R.; Sra, S. An alternative to EM for Gaussian mixture models: Batch and stochastic Riemannian optimization. Math. Program. 2020, 181, 187–223. Available online: https://archive.ics.uci.edu/ml/datasets (accessed on 2 June 2023). [CrossRef]

- Pfeifer, T.; Protzel, P. Expectation-maximization for adaptive mixture models in graph optimization. International conference on robotics and automation (ICRA). In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 3151–3157. [Google Scholar] [CrossRef]

- Yin, F.; Fritsche, C.; Gustafsson, F.; Zoubir, A.M. EM-and JMAP-ML based joint estimation algorithms for robust wireless geolocation in mixed LOS/NLOS environments. IEEE Trans. Signal Process. 2013, 62, 168–182. [Google Scholar] [CrossRef]

- Eisen, M.; Mokhtari, A.; Ribeiro, A. Decentralized quasi-Newton methods. IEEE Trans. Signal Process. 2017, 65, 2613–2628. [Google Scholar] [CrossRef]

- Li, M. A three term Polak-Ribière-Polyak conjugate gradient method close to the memoryless BFGS quasi-Newton method. J. Ind. Manag. Optim. 2018, 16, 245–260. [Google Scholar] [CrossRef]

- Li, M. A modified Hestense–Stiefel conjugate gradient method close to the memoryless BFGS quasi-Newton method. Optim. Methods Softw. 2018, 33, 336–353. [Google Scholar] [CrossRef]

- Degirmenci, A.; Karal, O. Robust incremental outlier detection approach based on a new metric in data streams. IEEE Access 2021, 9, 160347–160360. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).