Generative Adversarial Network (GAN)-Based Autonomous Penetration Testing for Web Applications

Abstract

1. Introduction

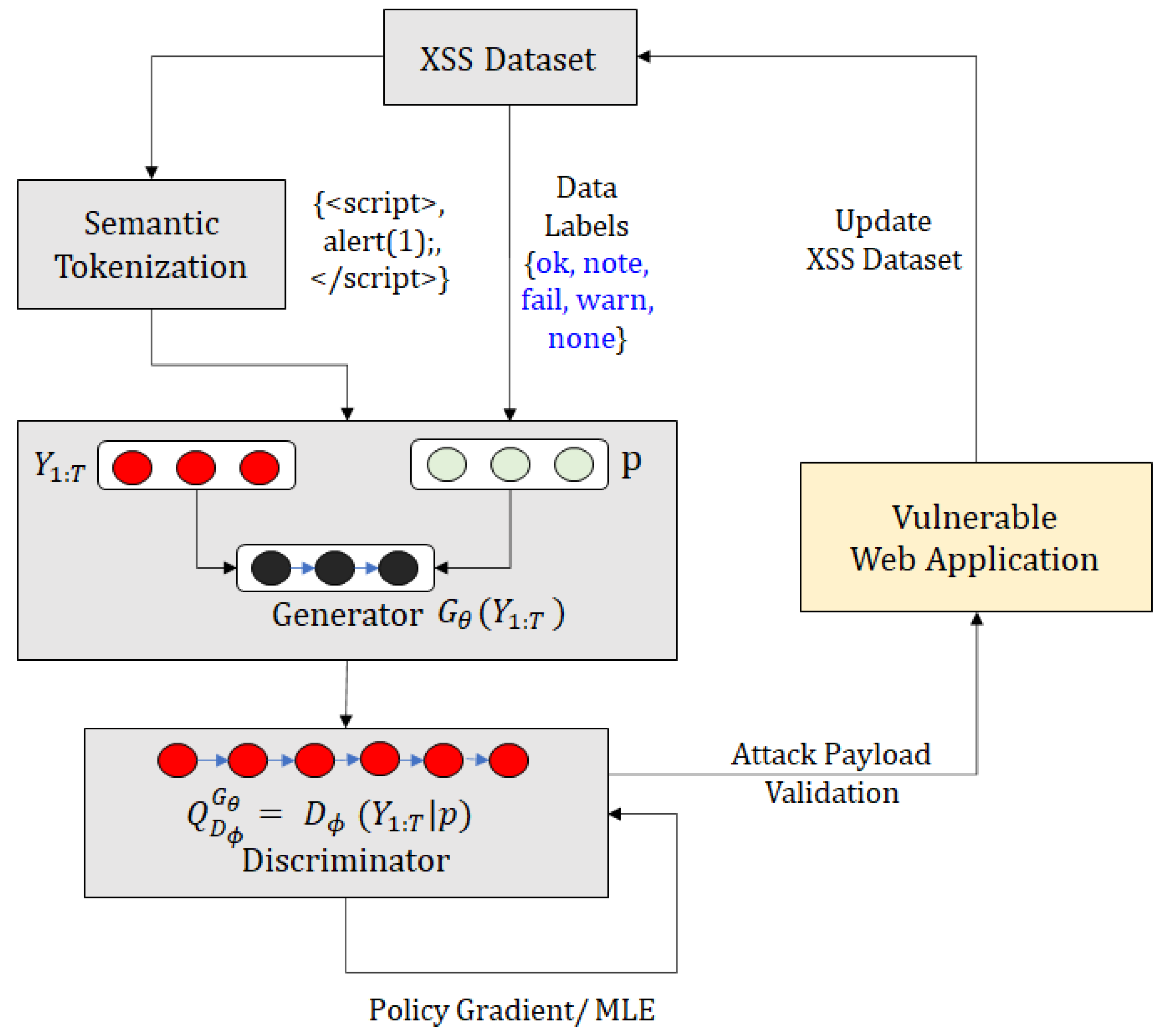

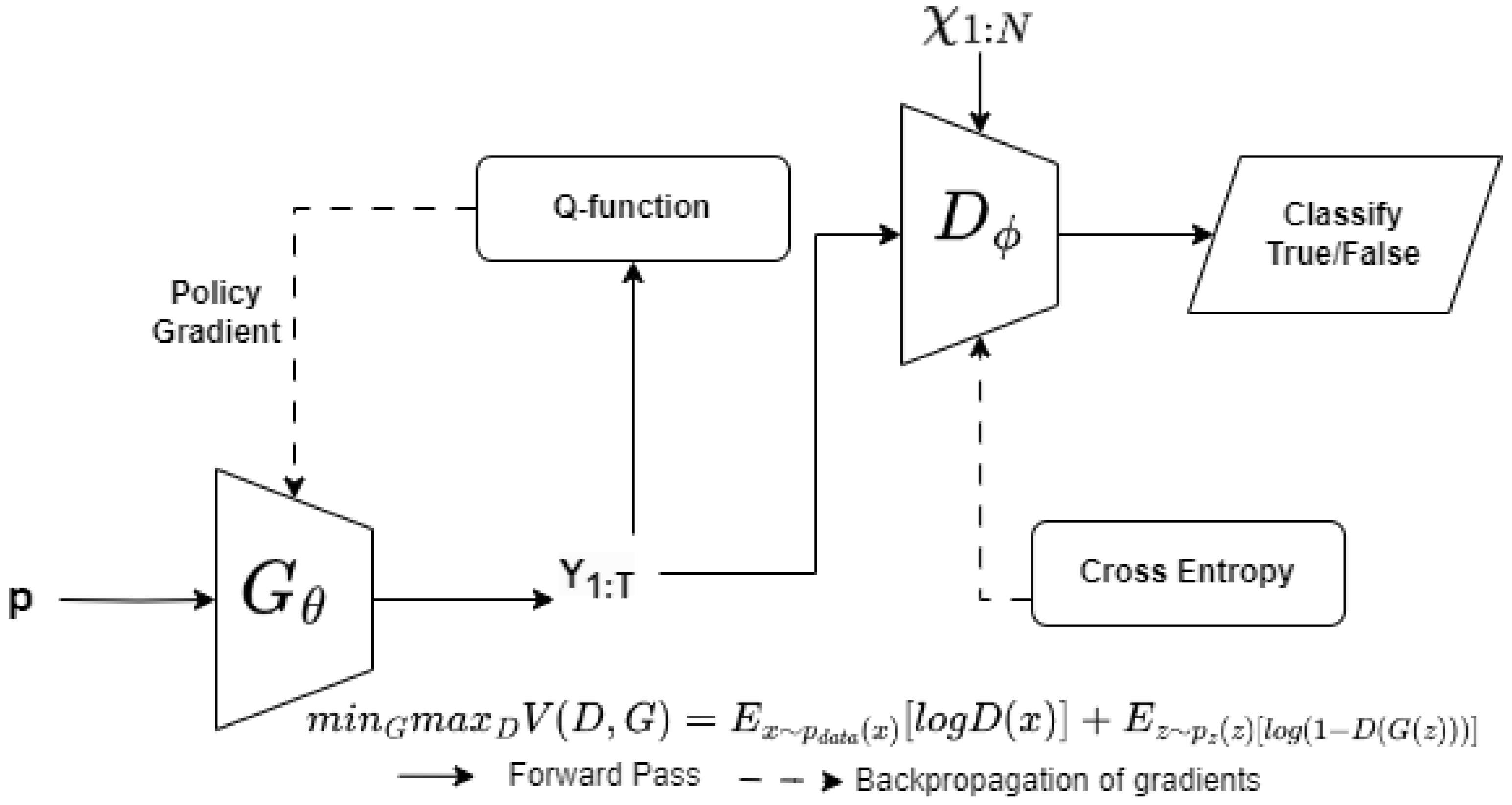

- Conditional sequence generation that leverages the semantic structure of web attack payloads. This technique improves the training efficiency of the generator and produces valid attack signatures that can fool the discriminator.

- Evaluation of generated attack samples on production-grade WAFs. We used ModSecurity and AWS WAF to assess the quality of the generated web attack samples. We observed that 8.0% of the attack samples targeting AWS WAF allow listing, and up to 44% of the samples targeting AWS WAF block listing, were able to bypass the rules in place for blocking web attacks.

- Generation of a GAN-based synthetic attack dataset, produced by training a GAN model on real and fake attack samples. This synthetic data can help train web-application-layer defensive devices, such as WAFs, against sophisticated attacks such as advanced persistent threats (APTs).
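The first contribution relies on treating a payload as a sequence of meaningful units (tag names, keywords, operators) rather than isolated characters. One common way to learn such units is Byte Pair Encoding (BPE), which repeatedly merges the most frequent adjacent pair of symbols. The Python sketch below assumes a BPE-style tokenizer purely for illustration; the helper names (`merge_pair`, `learn_bpe_merges`, `tokenize`) and the sample payloads are hypothetical, and this is not presented as the authors' tokenizer.

```python
from collections import Counter


def merge_pair(tokens, pair):
    """Replace every adjacent occurrence of `pair` in `tokens` with one merged token."""
    merged, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged


def learn_bpe_merges(payloads, num_merges):
    """Learn up to `num_merges` BPE merges from a list of payload strings."""
    corpus = [list(p) for p in payloads]  # start from single characters
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for tokens in corpus:
            pair_counts.update(zip(tokens, tokens[1:]))
        if not pair_counts:
            break
        best_pair, count = pair_counts.most_common(1)[0]
        if count < 2:
            break  # no adjacent pair repeats, so merging would not compress
        merges.append(best_pair)
        corpus = [merge_pair(tokens, best_pair) for tokens in corpus]
    return merges


def tokenize(payload, merges):
    """Apply the learned merges, in learning order, to a new payload."""
    tokens = list(payload)
    for pair in merges:
        tokens = merge_pair(tokens, pair)
    return tokens


# Illustrative training payloads (hypothetical sample, not the paper's dataset).
SAMPLE_PAYLOADS = [
    '<script>alert("xss")</script>',
    '<script>eval("aler"+(!![]+[])[+[]])("xss")</script>',
    '<img src=x onerror=alert(1)>',
]

merges = learn_bpe_merges(SAMPLE_PAYLOADS, num_merges=30)
print(tokenize('<script>alert(1)</script>', merges))
```

Applying the merges in the order they were learned reproduces the training-time segmentation, so frequently recurring fragments, such as pieces of `<script>`, tend to collapse into larger tokens that a generator could then emit as units.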

2. Related Work

3. Background

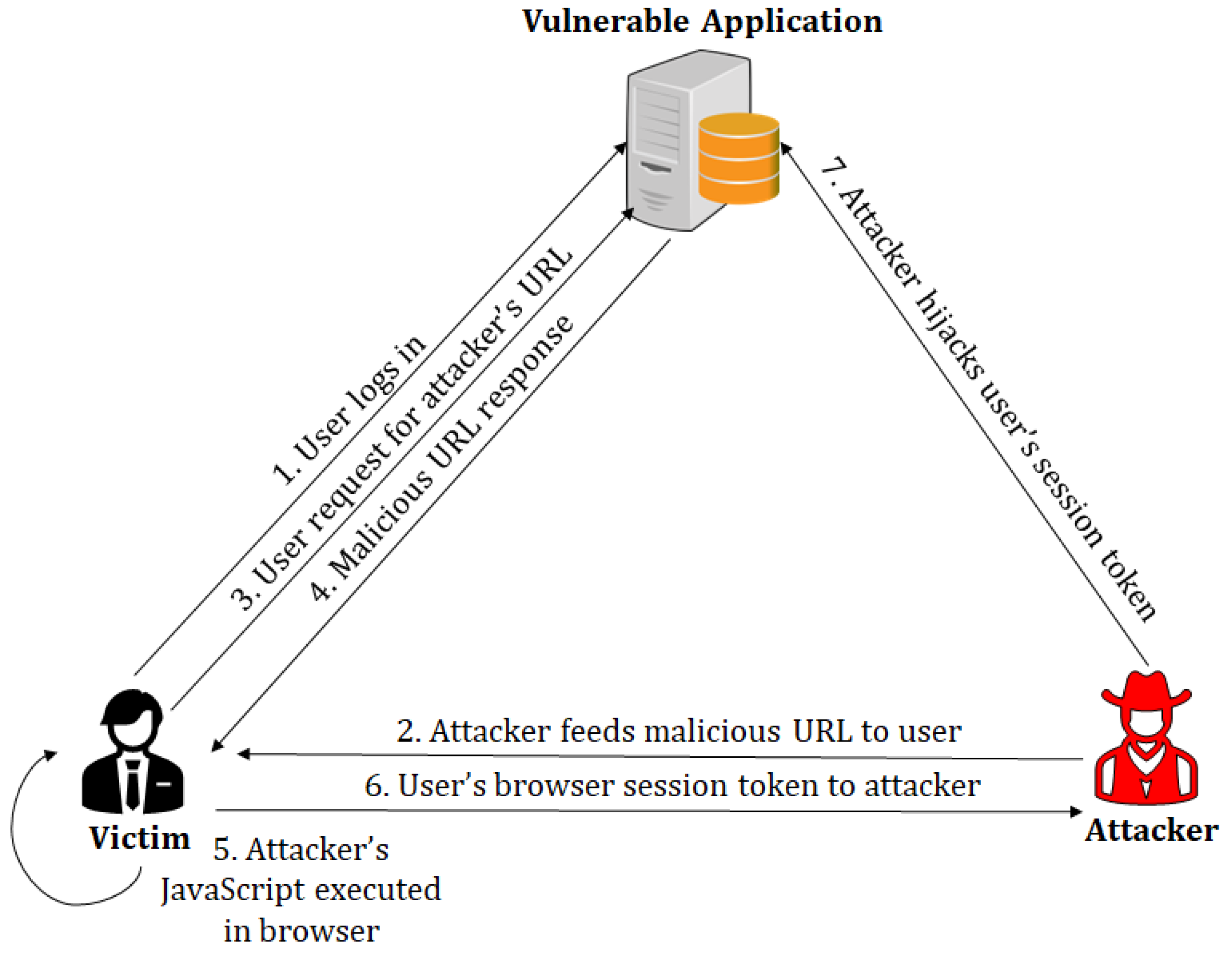

3.1. Web Application Attacks

Defense Mechanisms against Web Attacks

3.2. Generative Adversarial Networks (GANs)

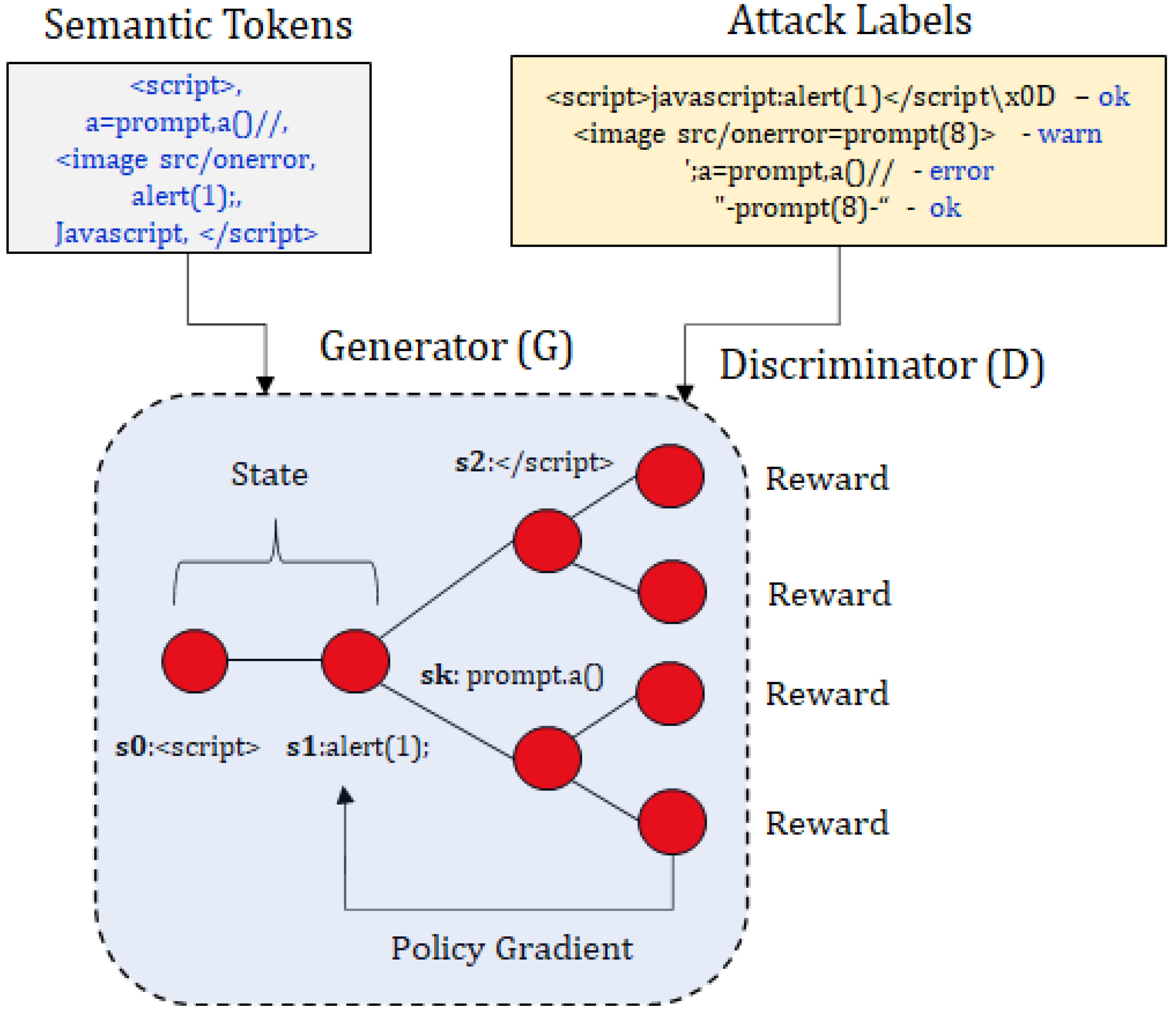

3.3. GANs for Generating Web Attacks

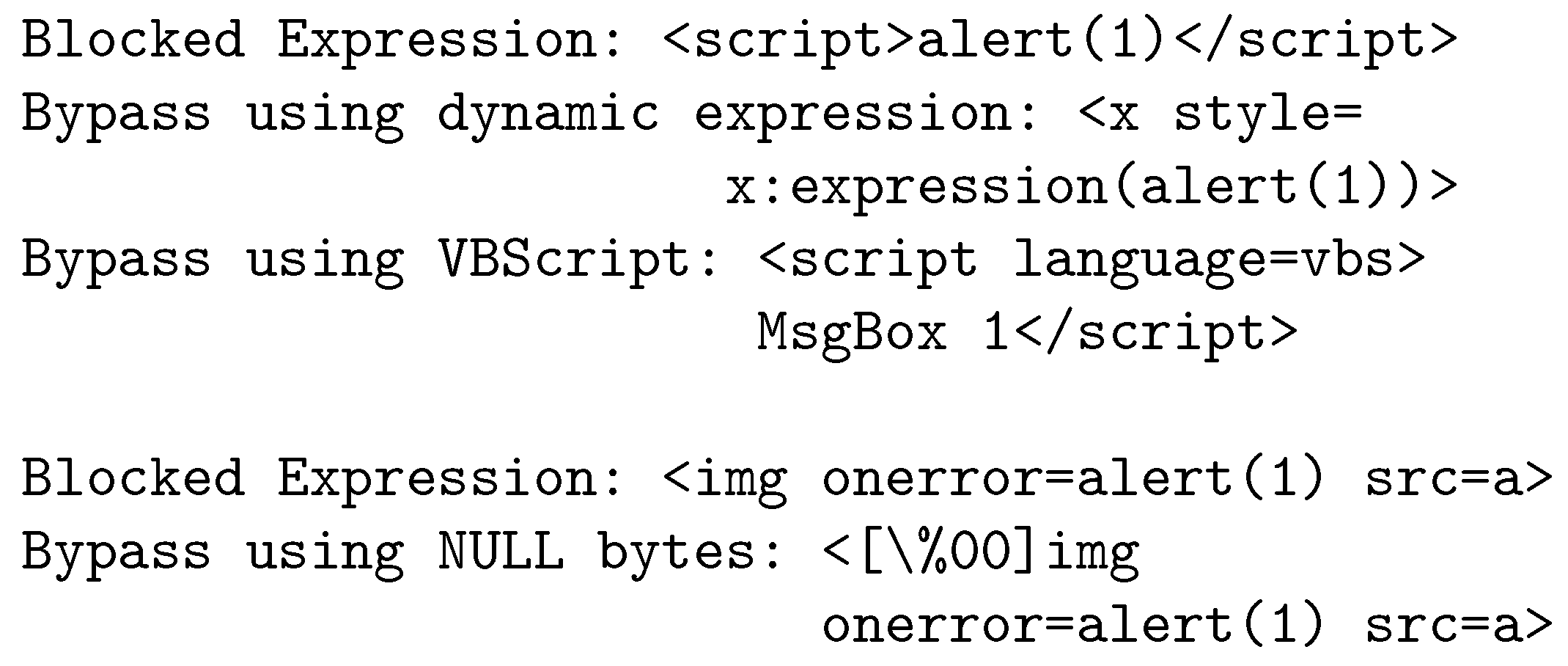

3.3.1. Motivating Example

3.3.2. GAN for Bypassing a Web Application Firewall

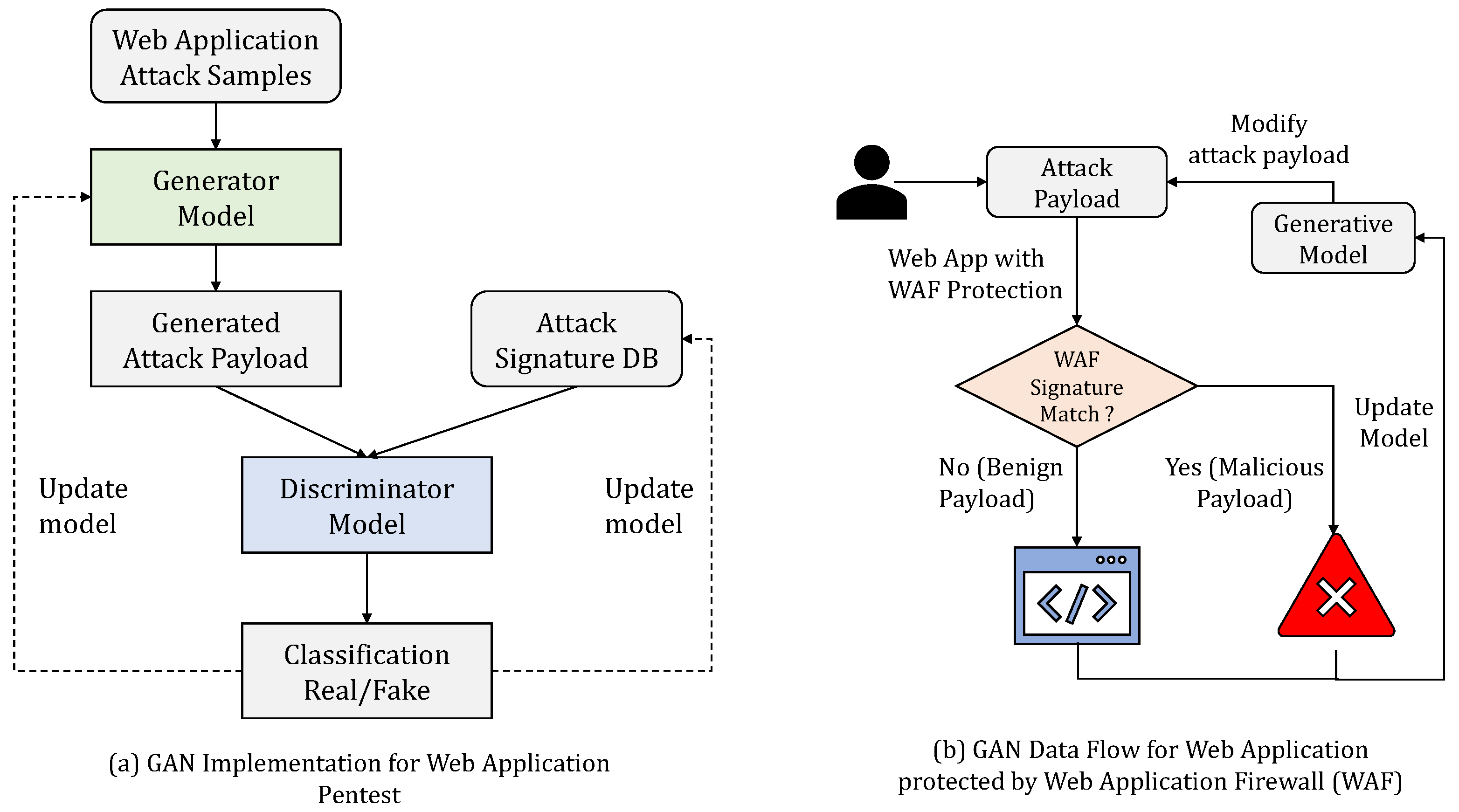

4. Conditional Attack Sequence Generation

4.1. Attack Payload Tokenization

4.2. Conditional Sequencing

Conditional Sequence-Based Attack Generation

Algorithm 1. Conditional Sequence Generation

5. Experimental Evaluation

5.1. Evaluation of Loss for Conditional GANs

5.2. Web Application Firewall Bypass

5.2.1. ModSecurity WAF Testing

- `<script>eval("aler"+(!![]+[])[+[]])("xss")</script>`
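The payload above never contains the literal string `alert`. In JavaScript, `!![]` is `true`, `true+[]` coerces to the string `"true"`, and `+[]` is `0`, so `(!![]+[])[+[]]` evaluates to `"t"`; `eval("aler"+"t")` therefore yields the `alert` function, which is then called with `"xss"`. The Python sketch below mirrors those coercion steps and shows why a deliberately naive signature for `alert(` misses the obfuscated form. The regex rules and helper names are hypothetical illustrations, not ModSecurity's actual rule set.

```python
import re

# The obfuscated XSS payload from the list above.
PAYLOAD = '<script>eval("aler"+(!![]+[])[+[]])("xss")</script>'

# Hypothetical, deliberately naive block-list signatures (not real WAF rules).
NAIVE_SIGNATURES = [
    re.compile(r"alert\s*\(", re.IGNORECASE),
    re.compile(r"javascript:", re.IGNORECASE),
]


def matches_naive_rules(payload: str) -> bool:
    """Return True if any naive signature matches the raw payload text."""
    return any(sig.search(payload) for sig in NAIVE_SIGNATURES)


def js_truthy_char() -> str:
    """Mirror, in Python, the JavaScript coercions in (!![]+[])[+[]]."""
    double_negated = True                     # !![]      -> true (arrays are truthy)
    as_string = str(double_negated).lower()   # true + [] -> "true" ([] becomes "")
    index = 0                                 # +[]       -> 0 ("" becomes 0)
    return as_string[index]                   # "true"[0] -> "t"


def reconstructed_function_name() -> str:
    """The string eval() receives once the concatenation is evaluated."""
    return "aler" + js_truthy_char()


print(matches_naive_rules(PAYLOAD))                           # False
print(matches_naive_rules('<script>alert("xss")</script>'))  # True
print(reconstructed_function_name())                         # alert
```

A production WAF normalizes and inspects far more than a single regex, so this only illustrates the general idea that string-level obfuscation can sidestep literal signatures.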

5.2.2. AWS WAF Testing

5.3. Comparative Analysis with Existing Research

6. Discussion

7. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Run # | Vanilla GAN | CGAN |
|---|---|---|
| 1 | 10.37% | 7.66% |
| 2 | 7.69% | 8.04% |
| 3 | 17.64% | 9.08% |
| 4 | 0.08% | 12% |
| 5 | 16.19% | 8.28% |
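The five runs per model can be summarized with a mean and a sample standard deviation. The minimal Python sketch below transcribes the Vanilla GAN vs. CGAN table (the `RUNS` dictionary and `summarize` helper are illustrative names). It shows that the CGAN runs cluster between 7.66% and 12%, while the Vanilla GAN runs span 0.08% to 17.64%, so CGAN's run-to-run spread is roughly four times smaller even though the two means differ by less than 1.5 percentage points.

```python
from statistics import mean, stdev

# Per-run percentages transcribed from the Vanilla GAN vs. CGAN table.
# This only summarizes the runs; it does not reinterpret what the metric measures.
RUNS = {
    "Vanilla GAN": [10.37, 7.69, 17.64, 0.08, 16.19],
    "CGAN": [7.66, 8.04, 9.08, 12.00, 8.28],
}


def summarize(values):
    """Return the mean, sample standard deviation, minimum, and maximum of the runs."""
    return {
        "mean": mean(values),
        "sd": stdev(values),
        "min": min(values),
        "max": max(values),
    }


for model, values in RUNS.items():
    s = summarize(values)
    print(f"{model}: mean={s['mean']:.2f}%  sd={s['sd']:.2f}%  "
          f"range={s['min']:.2f}%..{s['max']:.2f}%")
```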

| Matching Rule | % Attack Match | AWS WAF Action |
|---|---|---|
| WAF Bypass | 8.0% | ALLOW |
| AWS-managed XSS | 44.9% | BLOCK |
| Fortinet XSS Rule | 44.0% | BLOCK |
| Misclassified | 3.1% | BLOCK |
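Assuming each generated sample falls into exactly one row of the AWS WAF table (the four shares do sum to 100%), grouping the rows by the action AWS WAF took gives the overall ALLOW/BLOCK split. The short Python sketch below does that arithmetic; `AWS_WAF_RESULTS`, `share_by_action`, and `total_share` are illustrative names.

```python
# Rows transcribed from the AWS WAF table: (matching rule, % of samples, action).
AWS_WAF_RESULTS = [
    ("WAF Bypass", 8.0, "ALLOW"),
    ("AWS-managed XSS", 44.9, "BLOCK"),
    ("Fortinet XSS Rule", 44.0, "BLOCK"),
    ("Misclassified", 3.1, "BLOCK"),
]


def share_by_action(rows):
    """Sum the per-rule percentages for each AWS WAF action (ALLOW or BLOCK)."""
    totals = {}
    for _rule, pct, action in rows:
        # Round to one decimal place to absorb floating-point drift.
        totals[action] = round(totals.get(action, 0.0) + pct, 1)
    return totals


def total_share(rows):
    """Total percentage covered by all rows, rounded to one decimal place."""
    return round(sum(pct for _rule, pct, _action in rows), 1)


print(share_by_action(AWS_WAF_RESULTS))  # {'ALLOW': 8.0, 'BLOCK': 92.0}
print(total_share(AWS_WAF_RESULTS))      # 100.0
```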

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chowdhary, A.; Jha, K.; Zhao, M. Generative Adversarial Network (GAN)-Based Autonomous Penetration Testing for Web Applications. Sensors 2023, 23, 8014. https://doi.org/10.3390/s23188014

Chowdhary A, Jha K, Zhao M. Generative Adversarial Network (GAN)-Based Autonomous Penetration Testing for Web Applications. Sensors. 2023; 23(18):8014. https://doi.org/10.3390/s23188014

Chicago/Turabian Style: Chowdhary, Ankur, Kritshekhar Jha, and Ming Zhao. 2023. "Generative Adversarial Network (GAN)-Based Autonomous Penetration Testing for Web Applications" Sensors 23, no. 18: 8014. https://doi.org/10.3390/s23188014

APA Style: Chowdhary, A., Jha, K., & Zhao, M. (2023). Generative Adversarial Network (GAN)-Based Autonomous Penetration Testing for Web Applications. Sensors, 23(18), 8014. https://doi.org/10.3390/s23188014