Perimeter Control Method of Road Traffic Regions Based on MFD-DDPG

Abstract

1. Introduction

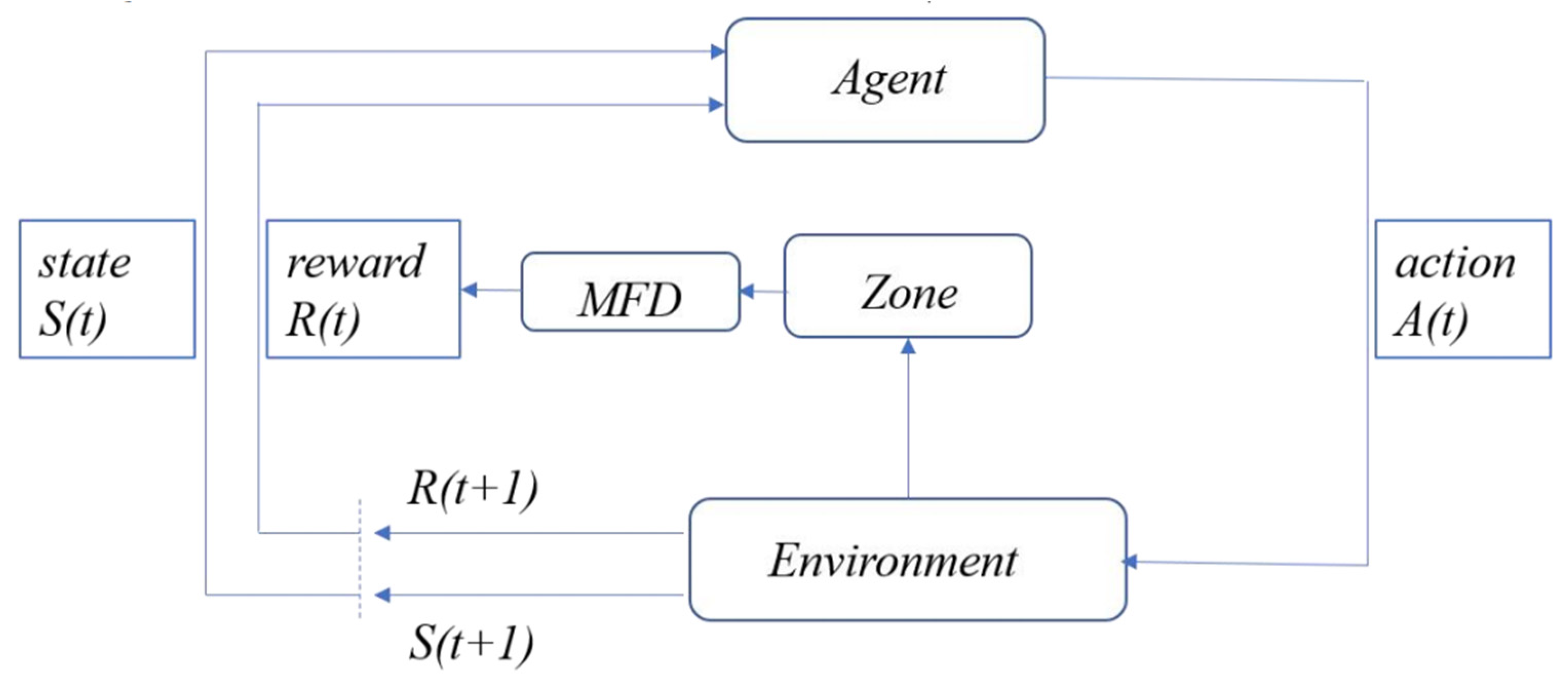

2. Model Construction

2.1. MFD-QL Perimeter Control Model

| Algorithm 1: MFD-QL algorithm |
|---|
| Input: learning rate $\alpha$, discount factor $\gamma$, number of iterations $E$, number of steps per iteration $T$; initialize $Q(s,a)$ to arbitrary values |
| for $e = 1, \dots, E$ do |
| Initialize state $s$ |
| for step $= 0$ to $T$ do |
| Select action $a$ in state $s$ using the ε-greedy strategy |
| Execute action $a$ to obtain the next state $s_{t+1}$ |
| Calculate the reward value $r$ through MFD theory and environmental feedback |
| Update Q: $Q(s,a) \leftarrow Q(s,a) + \alpha\left[r + \gamma \max_{a_{t+1}} Q(s_{t+1}, a_{t+1}) - Q(s,a)\right]$ |
| $s \leftarrow s_{t+1}$ |
| end |
| end |
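The inner loop of Algorithm 1 can be sketched in plain Python as below. This is a minimal tabular sketch, not the authors' implementation: the state labels, the four action indices and the reward value are hypothetical placeholders, since the MFD-based reward and state encoding are not reproduced in this section.

```python
import random
from collections import defaultdict


def epsilon_greedy(q, state, actions, epsilon, rng):
    """Return a random action with probability epsilon, otherwise the greedy one."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=lambda a: q[(state, a)])


def q_update(q, s, a, r, s_next, actions, alpha, gamma):
    """Apply Q(s,a) <- Q(s,a) + alpha * [r + gamma * max_a' Q(s_next, a') - Q(s,a)]."""
    best_next = max(q[(s_next, a_next)] for a_next in actions)
    q[(s, a)] += alpha * (r + gamma * best_next - q[(s, a)])
    return q[(s, a)]


if __name__ == "__main__":
    q = defaultdict(float)      # every unseen (state, action) pair starts at 0
    actions = [0, 1, 2, 3]      # hypothetical indices of four timing options
    q[("congested", 2)] = 1.0   # hypothetical prior estimate
    # The reward 0.5 stands in for the MFD-based reward, which is not shown here.
    print(round(q_update(q, "free", 1, 0.5, "congested", actions, 0.1, 0.9), 4))  # 0.14
    print(epsilon_greedy(q, "congested", actions, 0.0, random.Random(0)))  # 2
```

With $\epsilon = 0$ the selection is purely greedy; during training a small positive $\epsilon$ keeps the agent exploring timing options it has not yet tried.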

2.2. MFD-DDPG Perimeter Control Model

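| Algorithm 2: MFD-DDPG algorithm |
|---|
| Input: number of iterations $E$, number of steps per iteration $T$, experience replay set $D$, sample size $m$, discount factor $\gamma$, soft-update coefficient $\tau$; initialize the current network parameters $\theta^{Q}$ and $\theta^{\mu}$; initialize the target network parameters $\theta^{Q'}$ and $\theta^{\mu'}$; clear the experience replay set $D$ |
| for $e = 1, \dots, E$ do |
| Obtain the initial state $s_t$ and a random noise sequence $\mathcal{N}$ for action exploration |
| for $t = 1, \dots, T$ do |
| Select action $a_t = \mu(s_t \mid \theta^{\mu}) + \mathcal{N}_t$ according to the current policy and the exploration noise, execute it, and obtain the next state $s_{t+1}$ |
| Calculate the reward value $r_t$ through MFD theory and environmental feedback |
| Store the tuple $(s_t, a_t, r_t, s_{t+1})$ in the experience replay set $D$ |
| Randomly sample $m$ transitions $(s_i, a_i, r_i, s_{i+1})$ from $D$ |
| $y_i = r_i + \gamma Q'\left(s_{i+1}, \mu'(s_{i+1} \mid \theta^{\mu'}) \mid \theta^{Q'}\right)$ |
| Update the current Critic network by minimizing $L = \frac{1}{m}\sum_i \left(y_i - Q(s_i, a_i \mid \theta^{Q})\right)^2$ |
| Update the current Actor network with the sampled policy gradient $\nabla_{\theta^{\mu}} J \approx \frac{1}{m}\sum_i \nabla_a Q(s, a \mid \theta^{Q})\big\vert_{s=s_i,\, a=\mu(s_i)} \nabla_{\theta^{\mu}} \mu(s \mid \theta^{\mu})\big\vert_{s=s_i}$ |
| Update the target networks: |
| $\theta^{Q'} \leftarrow \tau\theta^{Q} + (1-\tau)\theta^{Q'}$ |
| $\theta^{\mu'} \leftarrow \tau\theta^{\mu} + (1-\tau)\theta^{\mu'}$ |
| end |
| end |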
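Three framework-independent pieces of Algorithm 2 (the experience replay set, the target value $y_i$ and the soft target update) can be sketched without a deep-learning library. This is an illustrative sketch under stated assumptions: parameters are flat lists of floats, and the target Actor and Critic are replaced by hypothetical linear functions; a real MFD-DDPG agent would use neural networks and gradient-based Critic and Actor updates, which are omitted here.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity experience replay set D of (s_t, a_t, r_t, s_{t+1}) tuples."""

    def __init__(self, capacity):
        self.data = deque(maxlen=capacity)  # the oldest tuple is discarded when full

    def store(self, s, a, r, s_next):
        self.data.append((s, a, r, s_next))

    def sample(self, m, rng=random):
        """Draw m distinct transitions uniformly at random."""
        return rng.sample(list(self.data), m)

    def __len__(self):
        return len(self.data)


def td_target(r, s_next, gamma, target_actor, target_critic):
    """y_i = r_i + gamma * Q'(s_{i+1}, mu'(s_{i+1}))."""
    return r + gamma * target_critic(s_next, target_actor(s_next))


def soft_update(target, current, tau):
    """theta' <- tau * theta + (1 - tau) * theta', applied element-wise."""
    return [tau * c + (1.0 - tau) * t for c, t in zip(current, target)]


def mu_target(s):
    """Hypothetical linear target Actor standing in for mu'."""
    return 0.5 * s


def q_target(s, a):
    """Hypothetical linear target Critic standing in for Q'."""
    return s + a


if __name__ == "__main__":
    buffer = ReplayBuffer(capacity=1000)
    for t in range(5):
        buffer.store(float(t), 0.0, 1.0, float(t + 1))  # dummy transitions
    batch = buffer.sample(2, random.Random(0))
    print([td_target(r, s1, 0.99, mu_target, q_target) for _, _, r, s1 in batch])
    print(soft_update([0.0, 1.0], [1.0, 1.0], tau=0.01))
```

A small $\tau$ makes the target parameters trail the current ones slowly, which keeps $y_i$ from shifting on every step and stabilizes Critic training.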

3. Numerical Simulation and Result Analysis

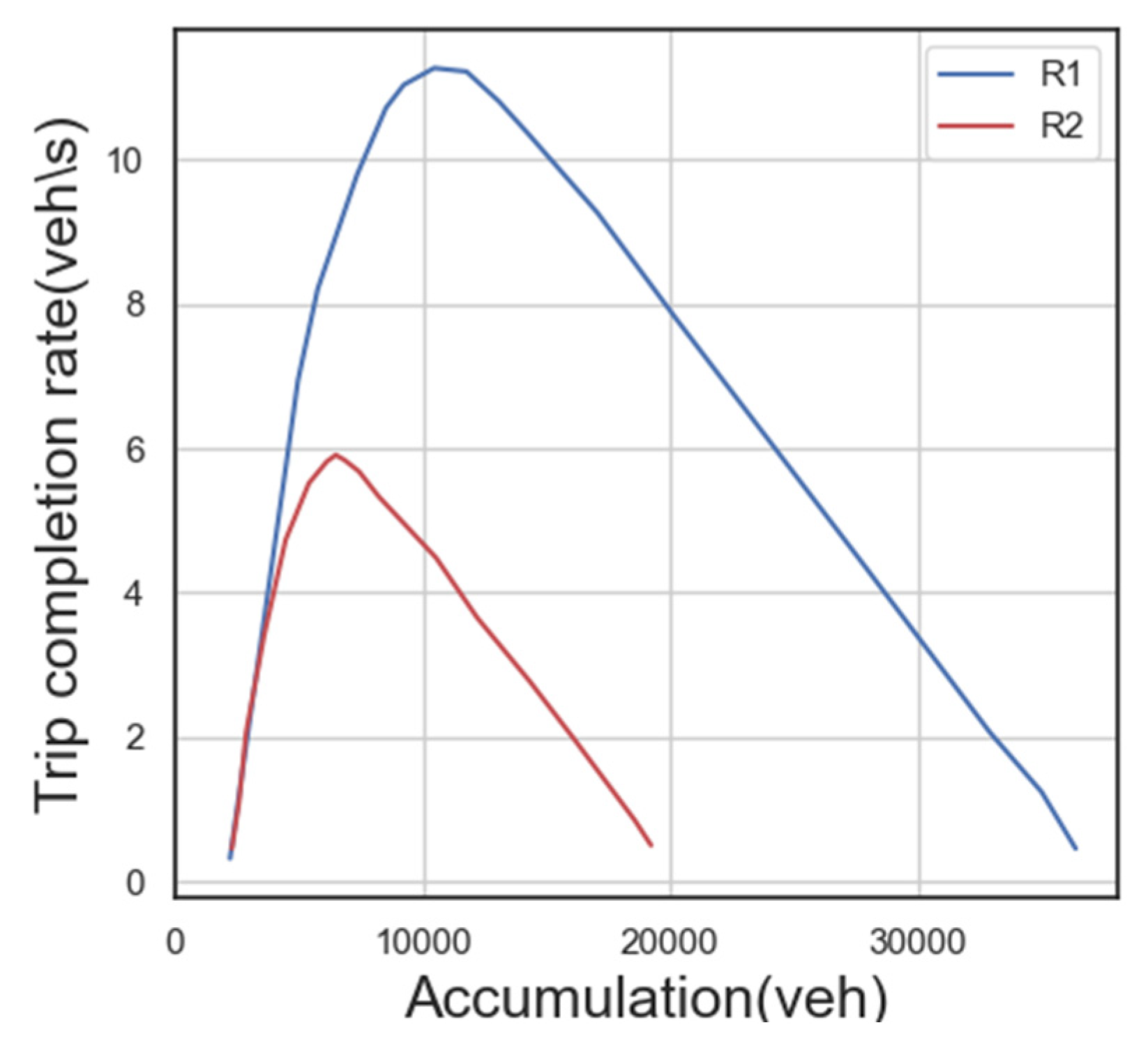

3.1. Experimental Setup

3.2. Parameter Setting

3.2.1. QL Parameter Setting

3.2.2. DDPG Parameter Setting

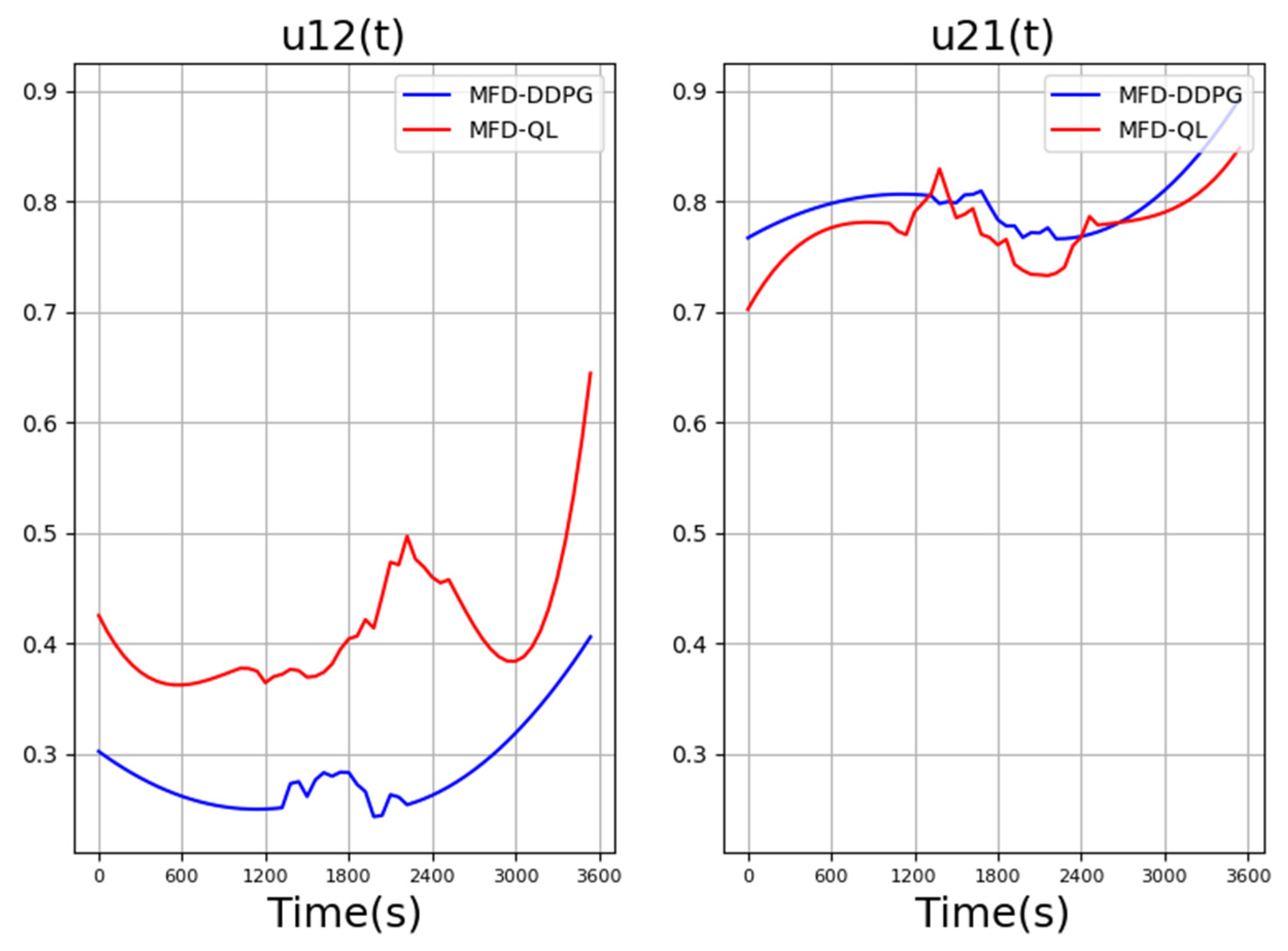

3.3. Result Analysis

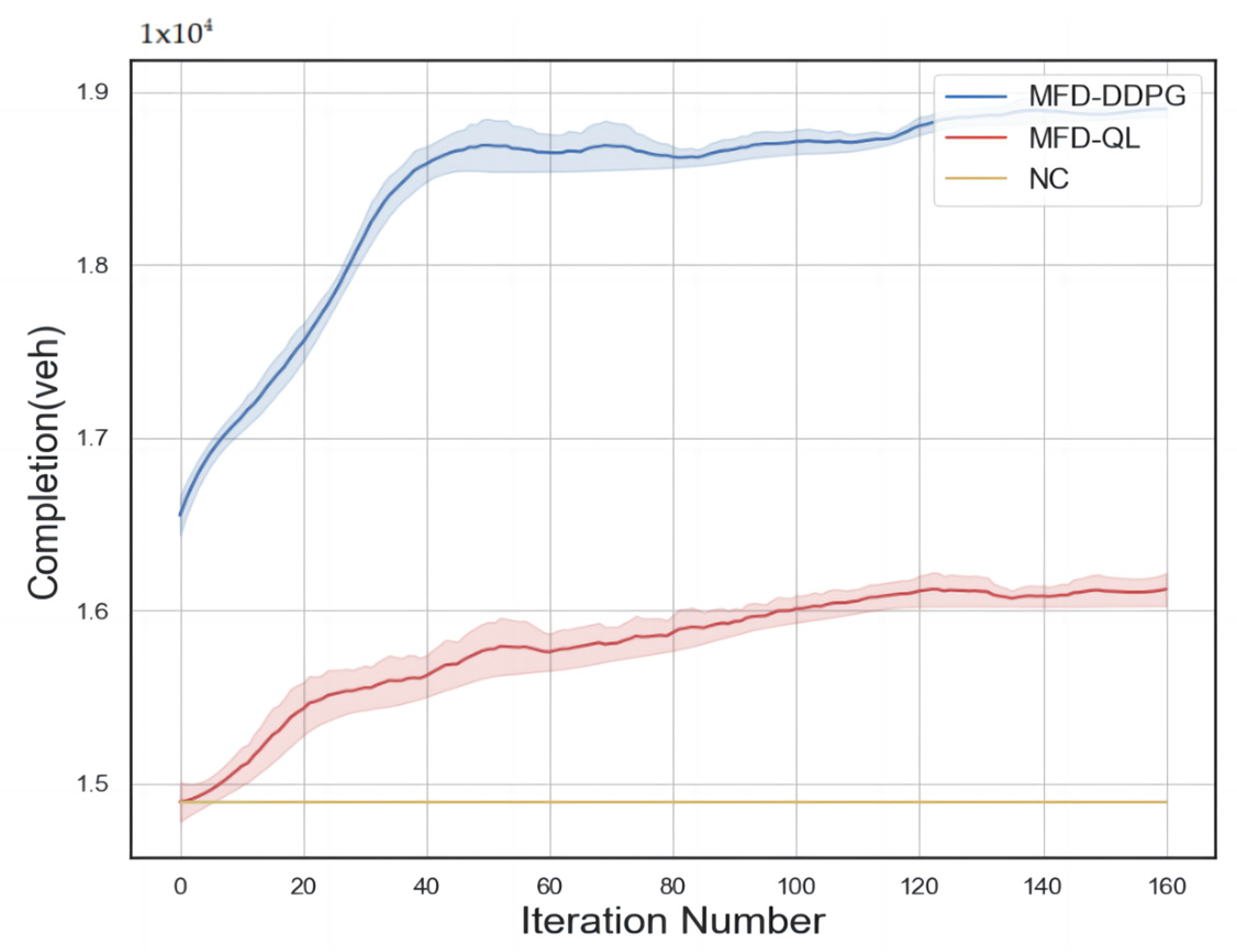

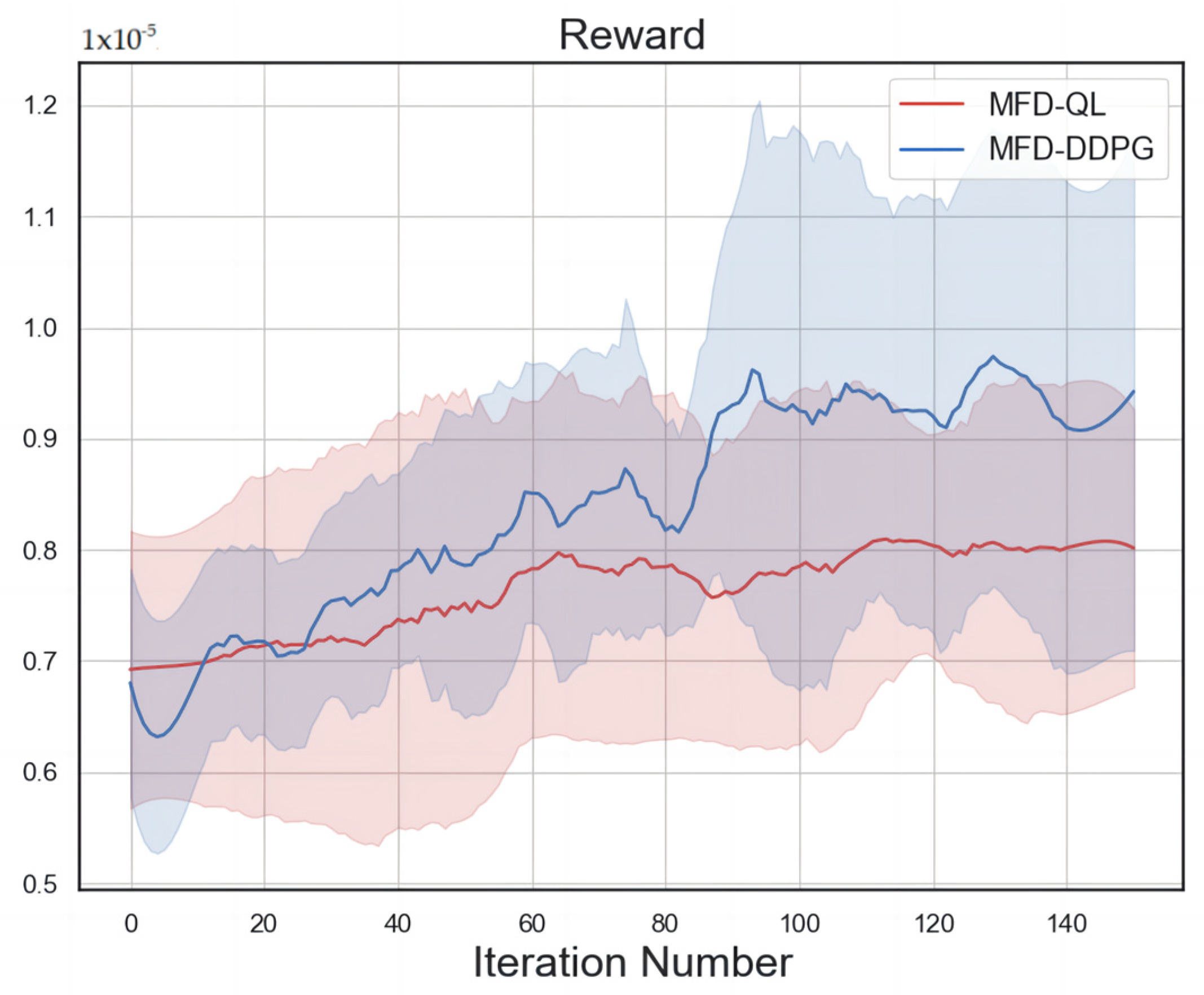

3.3.1. Convergence Analysis

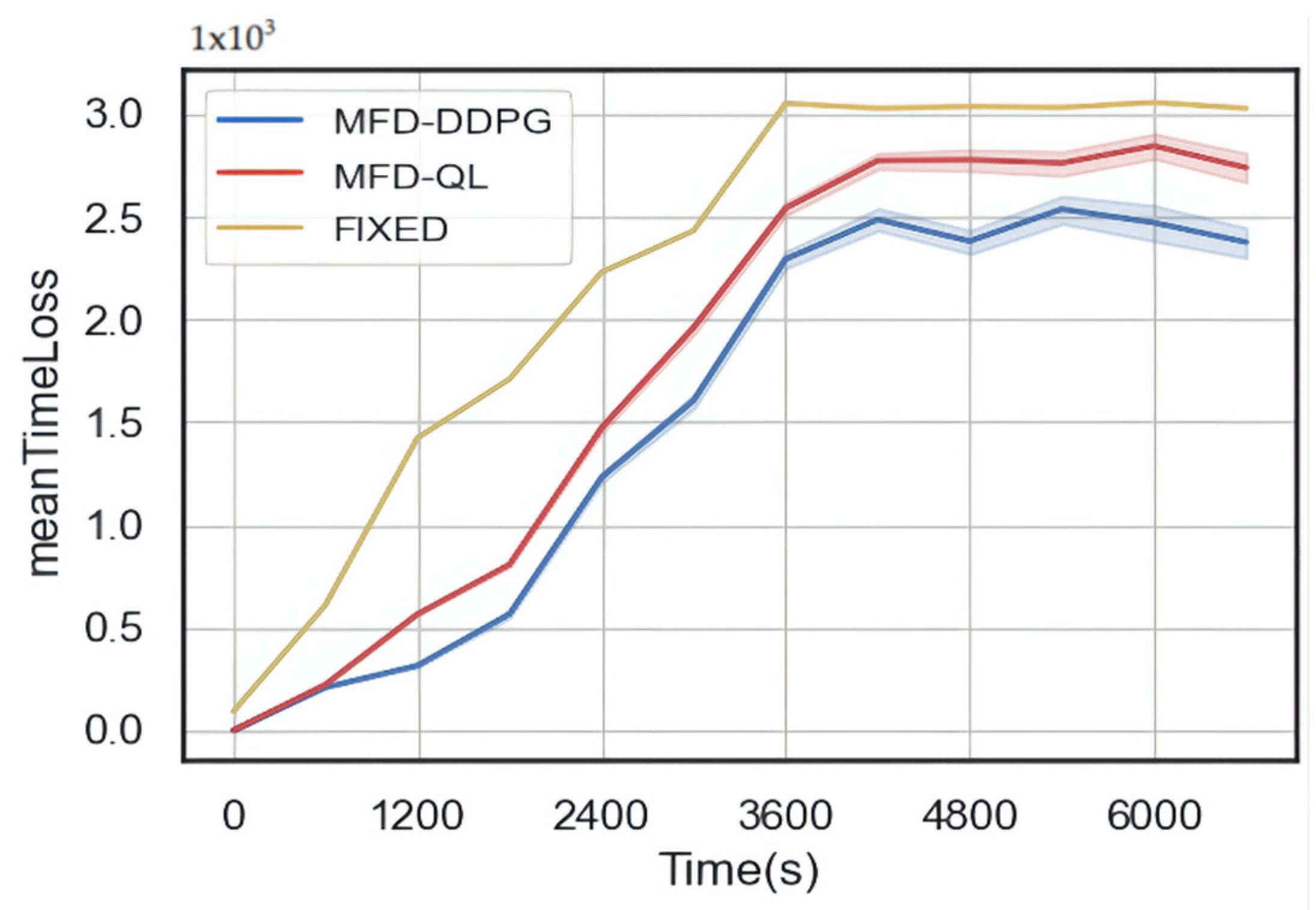

3.3.2. Effectiveness Analysis

4. Simulation Experiment and Result Analysis

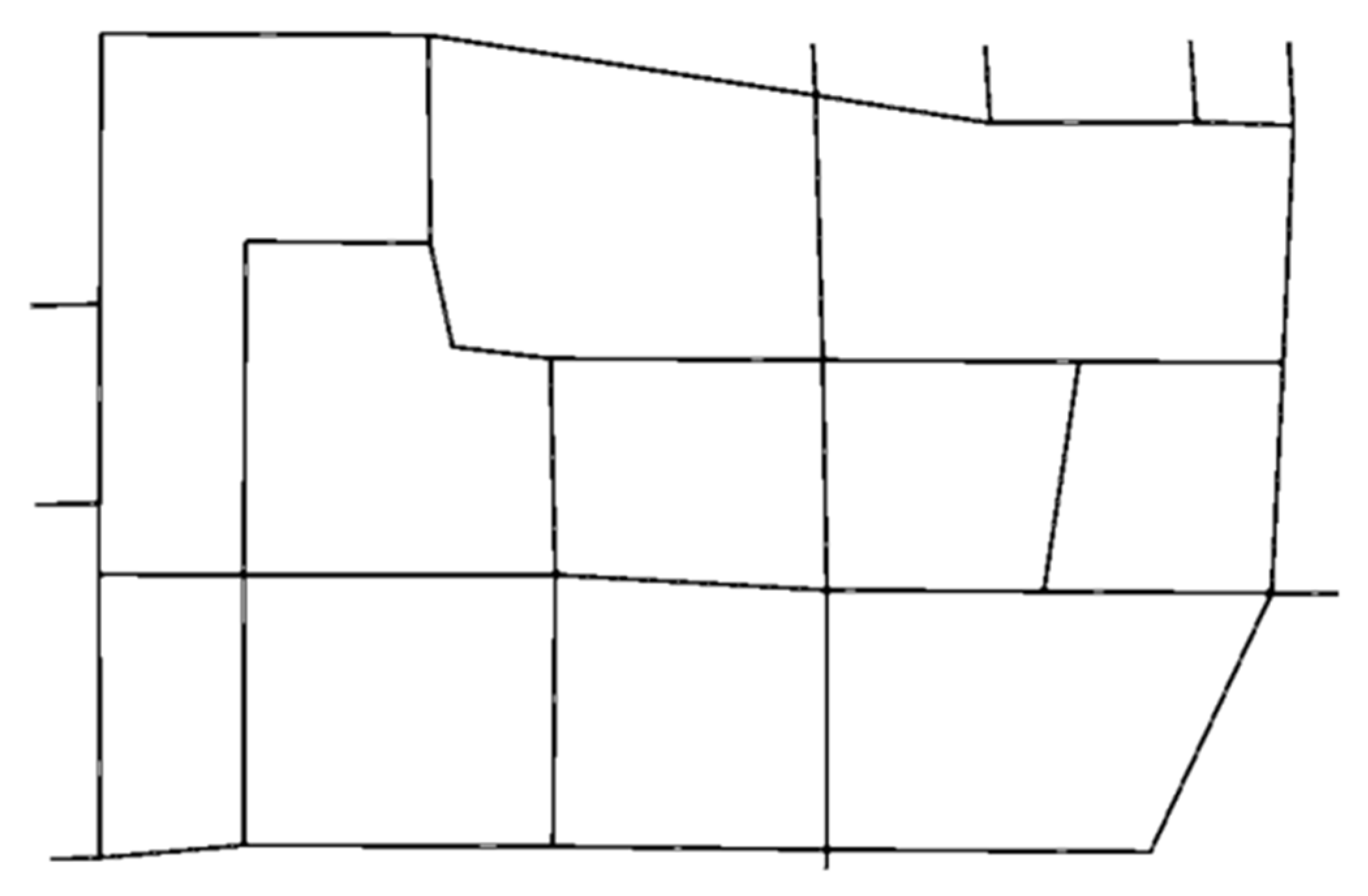

4.1. Experimental Setup

4.2. Parameter Setting

4.2.1. Environmental State Design

4.2.2. Action Design for the Intelligent Agent

4.2.3. Reward Function Design

4.3. Result Analysis

4.3.1. Convergence Analysis

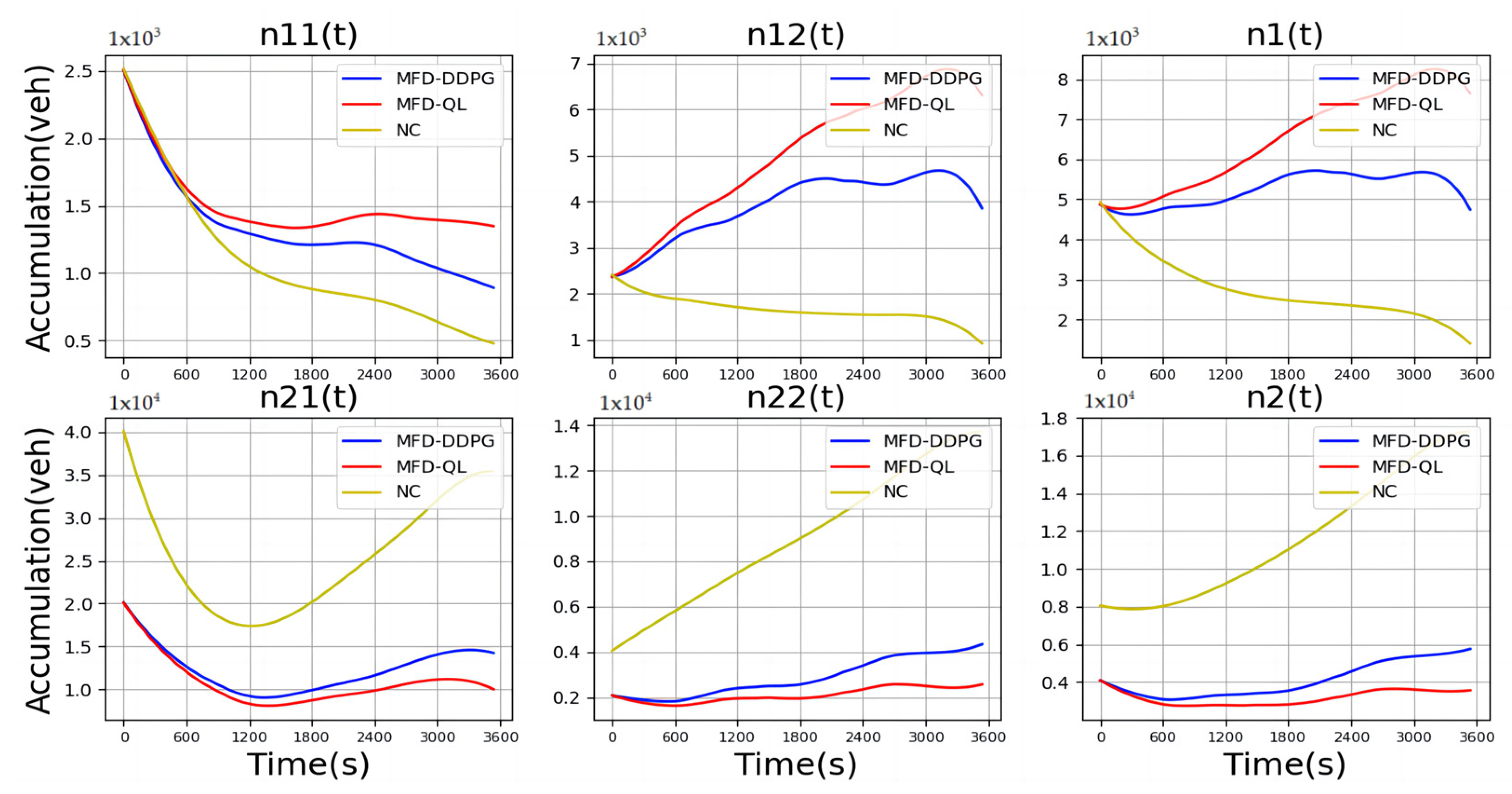

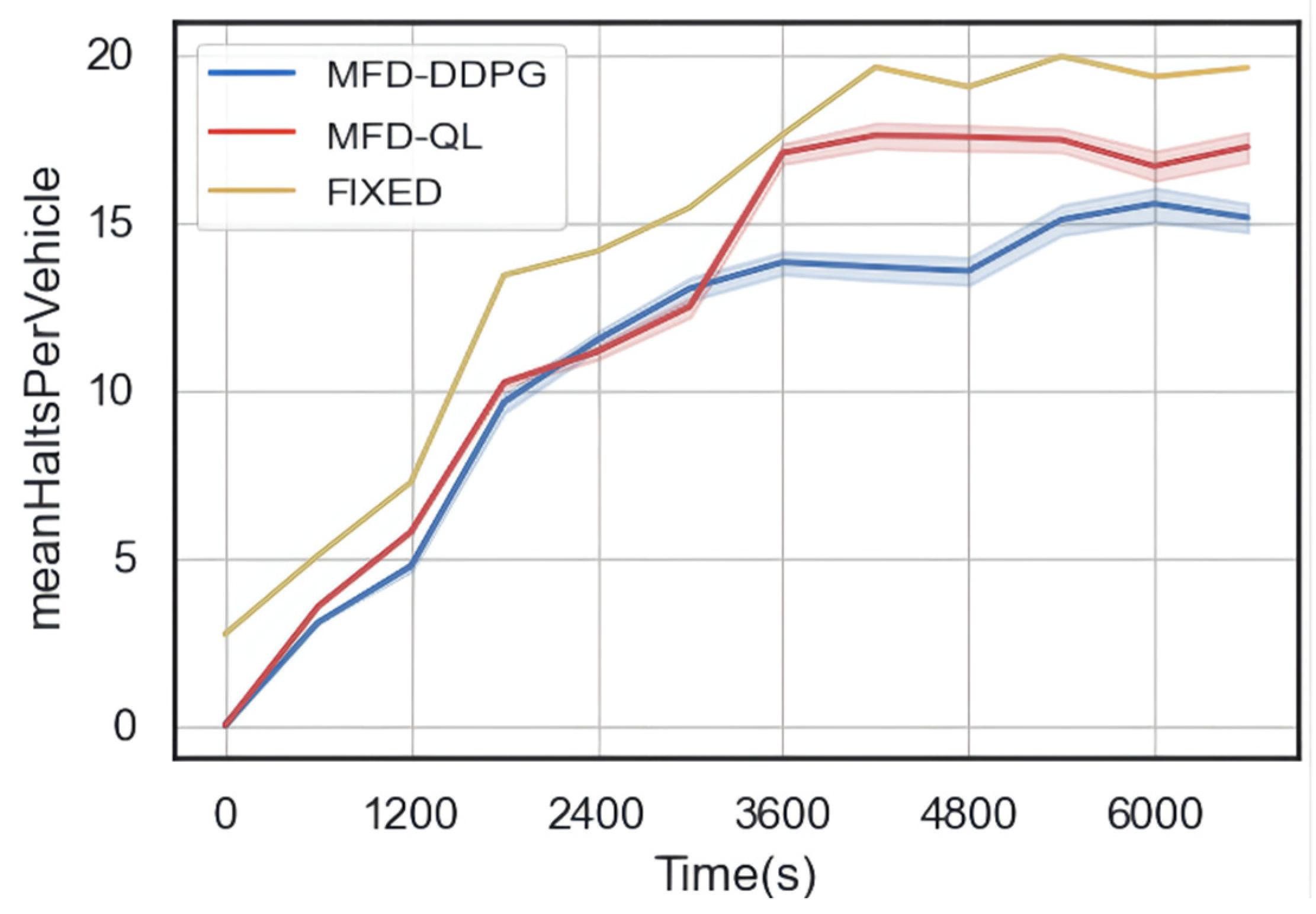

4.3.2. Effectiveness Analysis

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Traffic Parameter | Description |
|---|---|
| | Traffic area |
| | Link collection in the traffic area |
| | A link in the traffic area |
| | Number of vehicles in the traffic area at a given time |
| | Traffic volume of the link at a given time |
| | Length of a link |
| | Sum of the lengths of all links in the traffic area |
| | Average traffic density of the traffic area at a given time |
| | Weighted traffic flow of traffic area i at a given time, computed by Formula (2) |
| | Traffic benefits of the traffic area |
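The vehicle-count, link-length and density parameters above combine in the usual MFD way: regional density is the number of vehicles divided by the total link length. Formula (2) is not reproduced in this section, so the weighted flow below assumes the length-weighted average flow commonly used in MFD studies. The `Link` type, its field names and the units (km, veh/h) are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Link:
    length_km: float   # length of the link (assumed unit: km)
    flow_veh_h: float  # traffic volume on the link at the given time (assumed unit: veh/h)
    vehicles: int      # vehicles currently on the link


def region_density(links):
    """Average density of the region: total vehicles / total link length (veh/km)."""
    total_length = sum(link.length_km for link in links)
    return sum(link.vehicles for link in links) / total_length


def weighted_flow(links):
    """Length-weighted average flow (veh/h); an assumed stand-in for Formula (2)."""
    total_length = sum(link.length_km for link in links)
    return sum(link.flow_veh_h * link.length_km for link in links) / total_length


if __name__ == "__main__":
    region = [Link(0.5, 600.0, 20), Link(1.5, 1000.0, 40)]
    print(region_density(region))  # 30.0
    print(weighted_flow(region))   # 900.0
```

Weighting by length keeps a short, heavily loaded link from dominating the regional flow, so each (density, flow) pair can be placed on a single MFD curve for the region.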

| Control Intersection Location | Intersection ID | Traffic Phase | Signal Period (s) |
|---|---|---|---|
| Gucheng North Road—Gucheng Street | | Four-phase fixed timing | 116 |
| Gucheng North Road—Bajiao West Street | | Four-phase fixed timing | 102 |
| Bajiao North Road—Bajiao East Street | | Four-phase fixed timing | 105 |
| Bajiao South Road—Bajiao East Street | | Four-phase fixed timing | 115 |
| Shijingshan Road—Bajiao East Street | | Four-phase fixed timing | 112 |
| Shijingshan Road—Bajiao West Street | | Four-phase fixed timing | 111 |
| Shijingshan Road—Gucheng Street | | Four-phase fixed timing | 117 |
| Gucheng Street—Gucheng West Road | | Four-phase fixed timing | 110 |

| Control Intersection Location | Phase 1 (s) | Phase 2 (s) | Phase 3 (s) | Phase 4 (s) | Yellow Light (s) |
|---|---|---|---|---|---|
| Gucheng North Road—Gucheng Street | 36 | 15 | 38 | 15 | 3 |
| Gucheng North Road—Bajiao West Street | 31 | 16 | 29 | 14 | 3 |
| Bajiao North Road—Bajiao East Street | 33 | 15 | 32 | 17 | 2 |
| Bajiao South Road—Bajiao East Street | 36 | 14 | 38 | 15 | 3 |
| Shijingshan Road—Bajiao East Street | 34 | 14 | 36 | 15 | 3 |
| Shijingshan Road—Bajiao West Street | 33 | 14 | 36 | 16 | 3 |
| Shijingshan Road—Gucheng Street | 35 | 17 | 38 | 15 | 3 |
| Gucheng Street—Gucheng West Road | 32 | 15 | 35 | 16 | 3 |
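The signal periods in the intersection table can be cross-checked against the phase timings above. This sketch assumes that each of the four phases is followed by one yellow interval, so the cycle length is $C = \sum_{k=1}^{4} g_k + 4Y$; that reading is an assumption, not stated in the tables. Intersections are keyed by their two road names.

```python
# Phase times (s) and yellow time (s) per intersection, from the phase timing table.
PHASES = {
    ("Gucheng North Road", "Gucheng Street"): ([36, 15, 38, 15], 3),
    ("Gucheng North Road", "Bajiao West Street"): ([31, 16, 29, 14], 3),
    ("Bajiao North Road", "Bajiao East Street"): ([33, 15, 32, 17], 2),
    ("Bajiao South Road", "Bajiao East Street"): ([36, 14, 38, 15], 3),
    ("Shijingshan Road", "Bajiao East Street"): ([34, 14, 36, 15], 3),
    ("Shijingshan Road", "Bajiao West Street"): ([33, 14, 36, 16], 3),
    ("Shijingshan Road", "Gucheng Street"): ([35, 17, 38, 15], 3),
    ("Gucheng Street", "Gucheng West Road"): ([32, 15, 35, 16], 3),
}

# Signal periods (s) listed in the intersection table.
LISTED_PERIOD = {
    ("Gucheng North Road", "Gucheng Street"): 116,
    ("Gucheng North Road", "Bajiao West Street"): 102,
    ("Bajiao North Road", "Bajiao East Street"): 105,
    ("Bajiao South Road", "Bajiao East Street"): 115,
    ("Shijingshan Road", "Bajiao East Street"): 112,
    ("Shijingshan Road", "Bajiao West Street"): 111,
    ("Shijingshan Road", "Gucheng Street"): 117,
    ("Gucheng Street", "Gucheng West Road"): 110,
}


def cycle_length(phase_times, yellow):
    """Assumed cycle: all phase times plus one yellow interval after each phase."""
    return sum(phase_times) + len(phase_times) * yellow


def mismatches():
    """Return (intersection, computed, listed) wherever the two periods disagree."""
    out = []
    for key, (phase_times, yellow) in PHASES.items():
        computed = cycle_length(phase_times, yellow)
        if computed != LISTED_PERIOD[key]:
            out.append((key, computed, LISTED_PERIOD[key]))
    return out


if __name__ == "__main__":
    for (road_a, road_b), computed, listed in mismatches():
        print(f"{road_a} / {road_b}: computed {computed} s, listed {listed} s")
```

Under this assumption seven of the eight rows reproduce the listed period exactly; the Shijingshan Road and Bajiao East Street intersection sums to 111 s against a listed 112 s.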

| Control Intersection Location | Vehicles Arriving (Morning Peak) | Vehicles Arriving (Afternoon Peak) | Total Vehicles |
|---|---|---|---|
| Gucheng North Road—Gucheng Street | 1147 | 1241 | 2388 |
| Gucheng North Road—Bajiao West Street | 1283 | 1078 | 2370 |
| Bajiao North Road—Bajiao East Street | 928 | 816 | 1744 |
| Bajiao South Road—Bajiao East Street | 1306 | 1233 | 2539 |
| Shijingshan Road—Bajiao East Street | 1028 | 986 | 2014 |
| Shijingshan Road—Bajiao West Street | 1467 | 1298 | 2765 |
| Shijingshan Road—Gucheng Street | 1239 | 1083 | 2322 |
| Gucheng Street—Gucheng West Road | 825 | 921 | 1746 |
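The demand table can be checked the same way: each total should equal the morning-peak plus afternoon-peak arrivals. The sketch below copies the table values; keying rows by road-name pairs is an illustrative choice.

```python
# (morning peak, afternoon peak, listed total) arrivals per intersection, from the table.
ARRIVALS = {
    ("Gucheng North Road", "Gucheng Street"): (1147, 1241, 2388),
    ("Gucheng North Road", "Bajiao West Street"): (1283, 1078, 2370),
    ("Bajiao North Road", "Bajiao East Street"): (928, 816, 1744),
    ("Bajiao South Road", "Bajiao East Street"): (1306, 1233, 2539),
    ("Shijingshan Road", "Bajiao East Street"): (1028, 986, 2014),
    ("Shijingshan Road", "Bajiao West Street"): (1467, 1298, 2765),
    ("Shijingshan Road", "Gucheng Street"): (1239, 1083, 2322),
    ("Gucheng Street", "Gucheng West Road"): (825, 921, 1746),
}


def inconsistent_totals(rows):
    """Return (intersection, morning + afternoon, listed total) where they differ."""
    return [
        (key, morning + afternoon, total)
        for key, (morning, afternoon, total) in rows.items()
        if morning + afternoon != total
    ]


if __name__ == "__main__":
    for (road_a, road_b), computed, listed in inconsistent_totals(ARRIVALS):
        print(f"{road_a} / {road_b}: {computed} computed vs {listed} listed")
```

Seven rows add up exactly; for the Gucheng North Road and Bajiao West Street intersection, 1283 + 1078 = 2361, while the listed total is 2370.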

| Intersection Serial Number | Action 1 | Action 2 | Action 3 | Action 4 |
|---|---|---|---|---|
| | switch to north–south phase, phase duration 30 s | switch to north–south phase, phase duration 15 s | switch to east–west phase, phase duration 20 s | switch to east–west phase, phase duration 10 s |
| | switch to north–south phase, phase duration 30 s | switch to north–south phase, phase duration 15 s | switch to east–west phase, phase duration 20 s | switch to east–west phase, phase duration 10 s |
| | switch to north–south phase, phase duration 25 s | switch to north–south phase, phase duration 15 s | switch to east–west phase, phase duration 20 s | switch to east–west phase, phase duration 15 s |
| | switch to north–south phase, phase duration 30 s | switch to north–south phase, phase duration 20 s | switch to east–west phase, phase duration 20 s | switch to east–west phase, phase duration 15 s |
| | switch to north–south phase, phase duration 25 s | switch to north–south phase, phase duration 15 s | switch to east–west phase, phase duration 20 s | switch to east–west phase, phase duration 15 s |
| | switch to north–south phase, phase duration 30 s | switch to north–south phase, phase duration 25 s | switch to east–west phase, phase duration 25 s | switch to east–west phase, phase duration 20 s |
| | switch to north–south phase, phase duration 20 s | switch to north–south phase, phase duration 15 s | switch to east–west phase, phase duration 20 s | switch to east–west phase, phase duration 15 s |
| | switch to north–south phase, phase duration 35 s | switch to north–south phase, phase duration 15 s | switch to east–west phase, phase duration 20 s | switch to east–west phase, phase duration 25 s |
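The action table gives each intersection four discrete options. How the DDPG Actor's continuous output is mapped onto these options is not specified in this section, so the equal-width binning of an output in $[-1, 1]$ below is a hypothetical choice, as are the function names and the 0-based row index (row 0 is the first table row).

```python
# Phase-switch direction for each of the four action options (same in every row).
DIRECTIONS = ("north-south", "north-south", "east-west", "east-west")

# Phase durations (s) per action option, one tuple per row of the action table.
DURATIONS = [
    (30, 15, 20, 10),
    (30, 15, 20, 10),
    (25, 15, 20, 15),
    (30, 20, 20, 15),
    (25, 15, 20, 15),
    (30, 25, 25, 20),
    (20, 15, 20, 15),
    (35, 15, 20, 25),
]


def continuous_to_index(x, n_options=4):
    """Hypothetical binning of an actor output in [-1, 1] into one of n options."""
    x = max(-1.0, min(1.0, x))  # clip to the assumed output range
    return min(int((x + 1.0) / 2.0 * n_options), n_options - 1)


def decode_action(row, option):
    """Return (direction, duration in s) for the given table row and option index."""
    return DIRECTIONS[option], DURATIONS[row][option]


if __name__ == "__main__":
    k = continuous_to_index(0.6)
    print(decode_action(7, k))  # ('east-west', 25)
```

Binning keeps the Actor's output continuous, as DDPG requires, while the environment only ever receives one of the timing options listed in the table.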


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zheng, G.; Liu, Y.; Fu, Y.; Zhao, Y.; Zhang, Z. Perimeter Control Method of Road Traffic Regions Based on MFD-DDPG. Sensors 2023, 23, 7975. https://doi.org/10.3390/s23187975
