Abstract

Three video analysis-based applications for the study of captive animal behavior are presented. The aim of the first one is to provide certain parameters to assess drug efficiency by analyzing the movement of a rat. The scene is a three-chamber plastic box. First, the rat can move only in the middle room. The rat’s head pose is the first parameter needed. Secondly, the rodent could walk in all three compartments. The entry number in each area and visit duration are the other indicators used in the final evaluation. The second application is related to a neuroscience experiment. Besides the electroencephalographic (EEG) signals yielded by a radio frequency link from a headset mounted on a monkey, the head placement is a useful source of information for reliable analysis, as well as its orientation. Finally, a fusion method to construct the displacement of a panda bear in a cage and the corresponding motion analysis to recognize its stress states are shown. The arena is a zoological garden that imitates the native environment of a panda bear. This surrounding is monitored by means of four video cameras. We have applied the following stages: (a) panda detection for every video camera; (b) panda path construction from all routes; and (c) panda way filtering and analysis.

1. Introduction

The automatic analysis of animal behavior via video following has become a significant matter in neurosciences. To evaluate the effects of a gamut of factors on humans, such as drug and medicine influence, strain differences, or genetic mutations, medical research uses laboratory animals, mainly rodents. For instance, to evaluate the efficiency of a new drug to cure a specific disease, chemicals are administrated to rodents to induce specific symptoms. General or episodic facts, such as rearing, grooming, chewing, or movement in certain surroundings, have to be analyzed before and after drug administration. Laboratory beings are also utilized in neurology and psychiatry research to increase the basic knowledge of the nervous system and the anatomy and physiology of the brain. This cognition leads to better comprehension and therefore to better care of neurological and psychic illnesses. To substantiate the behavior of the human brain, monkeys are much more suited than rodents. For this type of experiment, the analysis of the monkeys’ behavior under specific circumstances is the most efficient way to realize the connections between individual or certain neuronal structures and complex cognitive functions. Another specific field of following animal behavior is intended to identify the motion stereotypes showing stress episodes of animals in zoos. A link between the stress state and some outside agents as visitors or other cumbersome facts that could provoke it has also to be established.

To objectively measure the relevant behaviors, many automated video monitoring and motion investigation techniques have been studied. There are proposals where the specificity of the scene imposes very particular tracking and trajectory analysis algorithms [1,2,3,4,5]. A recent review of the currently available open-source tools for animal behavioral video analysis is presented in [6]. Another important type of the proposed organism motion monitoring applications uses Kalman filter-based methods [7,8,9,10]. Other articles present animal activity tracking by means of AI (deep learning) or the optical flow method [11,12,13,14,15,16,17,18]. Two methods to localize rodents and analyze their motion are presented in [1,2]. The first one is a two-step approach to identify rodents in specific surroundings with few restraints. First, a sliding window method based on three traits follows the rodent and calculates its raw place. Next, the edge map and a system of pulses are employed to set the borders of the watching window close to the contour of the rodent. The other approach proposes an algorithm coming from the CONDENSATION method, which uses a smaller average number of provided tracking particles. This technique decreases the computational load of the initial algorithm while keeping the same detection accuracy. Analysis of the synthesized trajectory identified three rodent behaviors (exploring, rearing, and freezing). An improved version of motion analysis was later presented in [3]. Motion History Images and a Multiple Classifier System were used to recognize the three comportments. In every decision stage, the classifier with the best decision was taken into account. The presented method is invariant to the rodent and background color. It provides an accuracy of 87%.

To assess the animal activity related to a specific pathology, some techniques of trajectory estimation are presented in [4]: (a) two differential techniques by means of background subtraction; (b) entropy thresholding proceeding by using a global and local threshold; and (c) color matching with a computed template integrating a selected spectrum of colors. The authors deduced that all used procedures offered similar results for small rodent activities, but these techniques were aborted when great motions were analyzed.

A method to assess the fast and periodic behavior of a mouse by extracting the scratching patterns has been shown in [5]. Motion is identified by a series of operations in the consecutive binarized frames differences: noise reduction by erosion; nonzero pixels counting; pulse detection; long-term pulse filtering; pulse processing for scratching quantification; and scratching time quantification. The outcome is interpreted as an objective behavioral evaluation of atopic dermatitis, which can help to obtain new drugs for this disease.

In this paragraph, some tracking organism applications using Kalman filter-based techniques are remembered. An available tracking method and program software for animal activity named ToxTrac is described in [7]. It was developed for high-speed following of insects, fish, and rodents. First, it identifies gray mobile animals on a bright homogenous background. Next, it uses a second-order Kalman filter to assess target paths.

‘Bio-Sense’, described in [8], is a computing program that ensures object detection and then employs an adaptive Kalman filter to model the motion dynamics of different animals.

The paper [9] presents a simple following algorithm that accommodates the spatially closest objects of interest between successive video frames. It approaches only objects marked as animals in a previous stage.

An accommodation of the Kalman filter is used to estimate the position of animals that are hidden by other objects. For these methods, the main supposition is the motion linearity. The Kalman filter method is hard to develop when the animal route is covered. There were deployed methods to defeat this shortcoming. For example, in [10], a mixture of mean shift and particle–Kalman filter methods is shown. Mean shift performs as the main follower when the object is not covered. When occlusions appear, the particle–Kalman filter becomes the leader of the procedure. But for some experimental research, as in our application, the movement linearity is not fulfilled due to the sudden changes in speed and movement direction.

A review concerning deep learning tools for the evaluation of animal activity in neuroscience was issued in [11]. The study reveals that even though pose estimation based on deep learning can now much more easily be measured, there still remain some challenges to be solved. Performances of pose estimation approaches have to be improved for situations with contexts of multi-animal/human or/and occlusions. Also, it is mentioned that right now, it is hard to train networks to generalize to out-of-domain scenarios.

A video analysis of the animal posture evaluation framework is presented in [12]. It sums a deep learning elastic exemplary to approach the fluctuation in the animal corpus profile and an optical flow method to integrate temporal information from different film sequences. The proposed application was evaluated using datasets of four different lab animal species (mouse, fruit fly, zebrafish, and monkey). The authors claim that their system provides the best prediction accuracy among current deep learning-based approaches.

To identify multiple animals in areas with many occlusions and crossings, the following technique is described in [13]. The main idea is to link trajectory parts by means of their histogram and Hu moments.

The authors of [14] demonstrate the application of deep learning for the detection, tracking, activity, and behavior determination of red foxes during an experimental study. The detector was trained to detect red foxes and three body postures (‘lying’, ‘sitting’, ‘standing’). The trained algorithm has a mean average precision of 99.91%.

One deep learning method uses certain deep neural networks for panda bear face detection, segmentation, alignment, and identification [15]. The authors assert that the method is credited with 96.27% accuracy in panda identification.

The authors of [16] proposed an unsupervised probabilistic deep learning framework that identifies behavioral structures from deep variational embeddings of animal motion. In [17], the authors claim to demonstrate, for the first time, the effective application of a deep learning-based head pose estimation model trained and tested in a non-restraint setup with a monkey taking part in a cognitive task in their home area. Another deep learning framework for non-invasive behavior monitoring of individual animals is proposed in [18]. Video data are processed to continuously determine the position of a polar bear within the area. The analysis of the bear’s trajectories describing his travel patterns is presented through a graphical interface. The authors show the framework can localize and identify individual polar bears with a rate of 86.4%.

The remainder of this paper is structured as follows: The following chapter presents the experiment environments and the animal identification methods applied in three applications: (a) a laboratory rat tracking and trajectory analysis technique to provide certain movement parameters used to assess a specific drug efficiency; (b) a monkey tracking and head orientation computation method that provides information related to a neuroscience experiment; (c) a zoo panda bear motion analysis to identify stress states due to guests and the noise produced by airplanes when using a neighboring airport. A resume of laboratory rat tracking and a trajectory analysis system is presented in Section 3. The application is proposed in [19,20]. Section 4 describes, in the same manner, the laboratory monkey tracking and trajectory analysis system whose detailed presentation was the subject of [21]. The detailed proposal of an application for zoo panda stress state identification is presented in Section 5. The last application is briefly described in [22]. At the end of each of the last three sections, the results and conclusions are presented.

2. Experimental Environments and Localization Methods

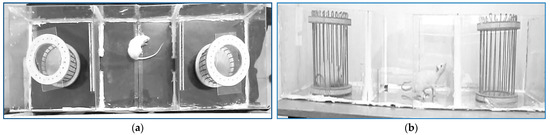

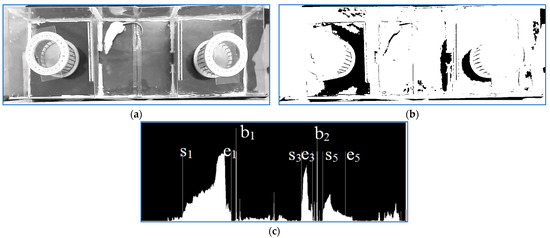

A plastic box divided into three rooms by means of two walls represented the environment of the first presented application. A cage was placed in the left room and another in the right room. Each video showing the experiments began with some frames displaying a rat placed in the middle domain. The scene is seen from above, Figure 1a. The gates to the marginal rooms were shut for at least 8 min. For this part of the experiment, the rat’s head orientation had to be provided at every moment. The experiment went on by opening the two doors, so the free rodent could walk into the entire environment. Also, each of the two cages could be populated or not by another rat. For the final part of the experiment, the scene was viewed with a camera placed on one side of the plastic box, Figure 1b. The number of visits in each compartment and the duration of each visit had to be provided.

Figure 1.

The two experimental environment configurations for rat tracking and trajectory analysis application: first stage (a) and second stage (b).

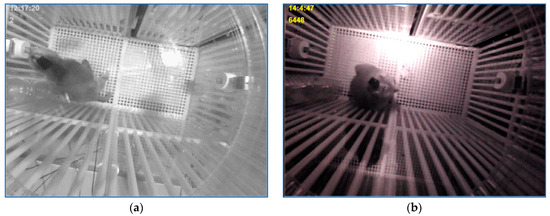

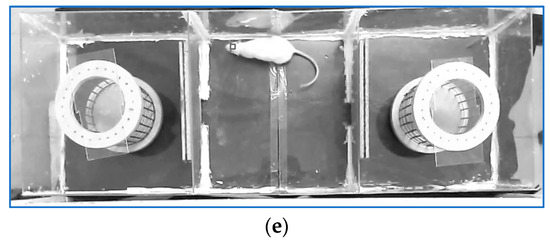

The experimental environment for the second application is presented in Figure 2. A 96-channel multielectrode array implanted in the dorsolateral prefrontal cortex (dlPFC; area 46 [23]) of the monkey acquired and transmitted the EEG signals via radio waves to be recorded and further analyzed by neuroscientists. The experiments conducted in the neuroscience lab of McGovern Medical School at the University of Texas are described in detail in [24]. Along with EEG signals, the sensor array position and monkey’s head orientation were also needed for an accurate final analysis. This task, one of the three applications presented in this paper, was performed using the registrations of the four experiments described earlier. The cage was unevenly illuminated. In addition, the monkey could switch the light at every moment. Besides the sensor array position and the monkey’s head orientation, an indicator for light-on/light-off was transmitted to be considered by specialists for final analysis. Figure 2a illustrates a light-on scene and Figure 2b depicts a light-off moment.

Figure 2.

The laboratory environment for the neuroscience experiment: (a) lighted cage and (b) dark scene.

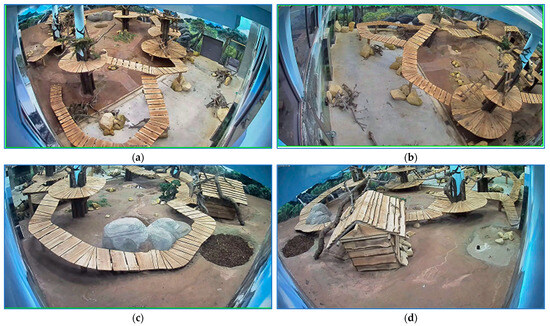

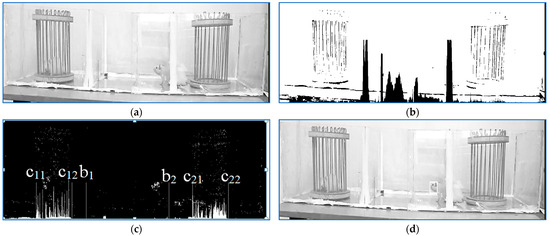

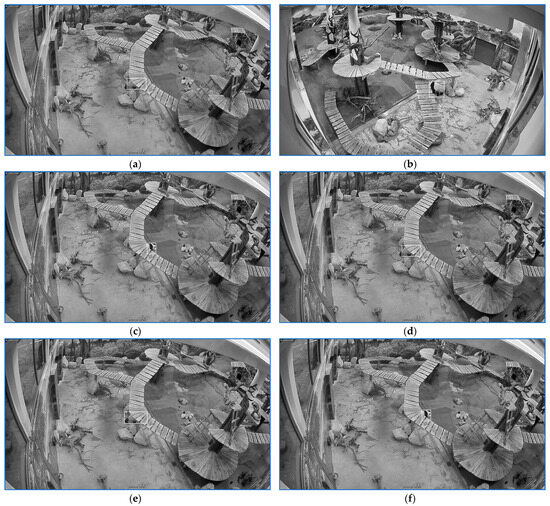

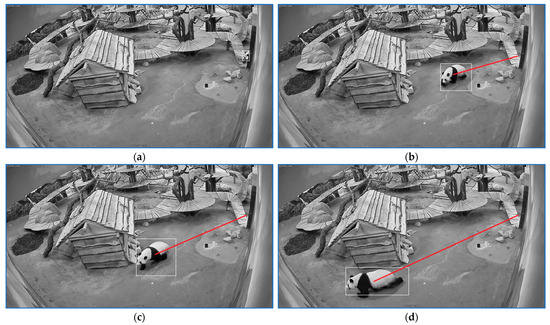

As for the last application, the scene was visualized by four video cameras, Figure 3. Each camera had a fixed position. There were two views of the entire arena viewed from two different angles (Figure 3a,b). The other two video cameras captured the background of the first two general scene views (Figure 3c,d). The habitat included six refuges. Only the refuge gates were visualized with the cameras. The bear might have quit the landscape entering one refuge. When the panda was in the domain viewed by the visitors, it could be visualized in at least one of the four views. Every time the zoo caretaker entered the cage to fetch food or to clean up the room, the bear was guided in a specific retreat. For the sequences with pandas in the field, five categories of stress episodes had to be identified. Each stress type was characterized by specific movement patterns.

Figure 3.

The arena is seen by 4 video cameras. Two cameras offer a view of the entire landscape viewed from two various angles (a,b). The other 2 cameras observe the ground of the general view (c,d).

The target localization using only the current frame could be accomplished by classic image object detection techniques only for the first part of the rat tracking and trajectory analysis application, Figure 1a. Due to the high contrast target/background, the rodent could be identified with a succession of single image operations: binarization -> erosion -> the main blob identification.

Head position, as explained in the next section, was determined using information from the frames in the vicinity of the current one. As for the rest of the presented applications, due to the low contrast target/background, a tracking technique had to be used. In this application, we faced great motion nonlinearity because of the big shifts in velocity and motion direction. In this respect, Kalman filter methods could not be employed. Also, due to brightness changes, tracking techniques issued from the optical flow technique could also not be used. Thus, the main supposition in the optical flow method was that the pixel intensities were translated from a certain frame to the next one: , where is the image intensity of the pixel at moment , and is the level of the pixel translated by the vector in the next frame at moment . From frame to frame, the target could perform significant movements from a darker area to a whiter zone. Largely used in the last years, movement tracking based on deep learning approaches was not suited in our case due to the fact that the computation was strongly scene-dependent for each of the three applications. For the first and the last systems, the parameters to be computed were related to the scene architecture. As for the monkey’s head orientation, the analysis was directed by the scene illumination specificities.

We propose a localization method as follows:

For each consecutive three frames composed by the current frame, indicated by , and the two anterior frames, denoted and , respectively, perform:

- ;

- , where D and E represent Dilation and Erosion morphological operations, respectively;

- In , delete all movement pixels external to the region of interest (this step is application-dependent);

- Through a ‘counting-box’ method, identify the most relevant movement cluster in .

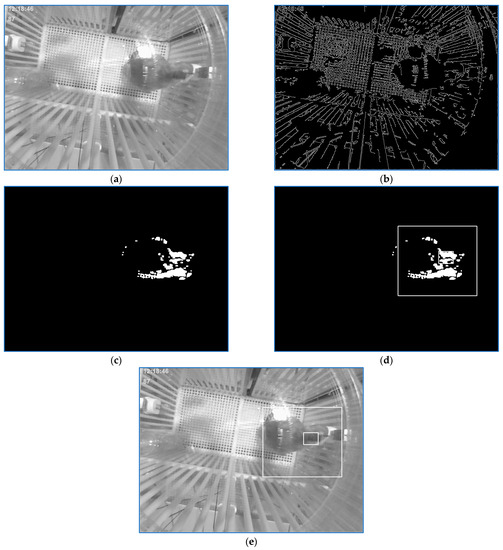

The procedure outcome is shown in Figure 4.

Figure 4.

Motion traits generation. From the successive frame series, (a–c) generate target image shown in (d): .

The movement cluster coarse localization provided by this procedure was refined using other information provided by the application-dependent procedures. For instance, in the case of the neuroscience experiment, to exactly identify the target location, the Canny image of the current frame was used. The right location was in the movement cluster mass center vicinity corresponding to the area in the Canny image where a hole made by the sensor box border was identified.

3. Rat Behavior Analysis

3.1. Head Localization

For the first part of the experiment, we proposed a three-stage method [19]: (1) for every frame, the scene characteristics were computed; (2) if the characteristics analysis indicates there were no scene outsiders, the rat body was identified; also, the rat head was recognized considering the head coordinates from the preceding frames. For doubtful cases, it was calculated in a second position; (3) the entire coordinates set was processed in order to solve each uncertain case considering subsequently certain head positions.

The first step of the scene analysis is illustrated in Figure 5. A binarization threshold was computed so that for a normal frame, the analysis of the binary image projection on the vertical axis would detect five clusters delimited by , parameters, Figure 5c. If the five cluster borders satisfied some predefined proximity conditions, the left and right middle room walls, , were in the middle of the second and fourth cluster, respectively. If the two parameters could be computed, the process continued by analyzing only the middle part of the cage in the current frame. Otherwise, the next frame would be analyzed.

Figure 5.

(a). Original frame; (b). binary frame; (c). binary frame column projection and the middle cage left and right walls.

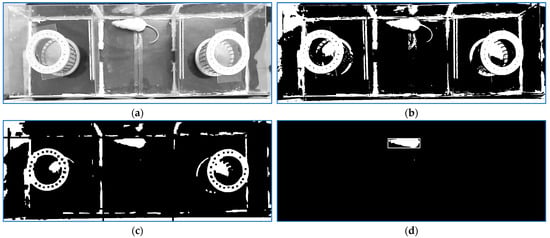

The analysis of a normal frame is depicted in Figure 6. A new binarization threshold was applied so that the main blob in the middle room could be easily located after an erosion of the binarized image.

Figure 6.

(a). Current frame; (b). binary frame; (c). erosion result; (d). rat and rat head localization results; (e). rat head detection result illustrated in the current frame.

In the initial stage of the following session, because of the fact that the rat was positioned in the middle zone by the human operator, only vertical or horizontal positions were available. After the first two frames, analysis the two previous frame localizations strongly indicated the head position for the current frame. However, for the first two frames, the previous two head locations were not available, so a voting method involving two techniques was used for vertical or horizontal situations. Let us consider the horizontal case, which is depicted in Figure 6a. First, the vertical projections of a fraction of the left and right areas, respectively, of the horizontal framing rectangle were analyzed. The area with significantly fewer body pixels would contain the head. Secondly, in the binary image, the tiny areas outside the right part and outside the left part were scanned, Figure 6b, to detect the tail. The rat’s tail container was considered the area with a large amount of white pixels located in the middle. The decision was taken following these rules: (a) if both methods show the same head zone, the area mass center represents a head indicator, as illustrated in Figure 6e; (b) one of the two methods does not offer reliable information (the two vertical projections are almost similar or the tail could not be identified): The result comes from the other one if it provides a strong decision; (c) both algorithms provide strong but contradictory responses: The second one is validated; (d) if none of the first three conditions is satisfied, the decision is delayed for the next frames. If the previous two frames’ directions were available, the decision was taken considering the old direction. When the rat reached a box wall or turned around, the body framing quadrilateral was almost a square. In such cases, the head was localized based on the movement direction and head locations of the previous frames. If more movement directions were possible, the two most reliable results were considered. At the end of the tracking process, based on the entire list analysis, the uncertain cases (with two solutions) were solved by choosing the closest position to the location of the next frame with a reliable localization (one solution).

3.2. Compartment Visits Number and Visit Duration

To analyze low contrast target/background registrations of the final part of the experiment, we proposed a two-stage tracking method [20]: (1) a tracking step based on the frame differences approach presented in Section 2 to synthesize the trajectories of the moving rats and (2) trajectory offline analysis to compute the entry number of the free rat in each area (cages, middle room) and the visit durations.

The tracking process is depicted in Figure 7. The original frame with a free rat wandering through the middle room is shown in Figure 7a. As in the first part of the experiment, a binarization threshold was computed. This time, the computation was conducted so that for a normal frame, the analysis of the binary image projection on the vertical axis would detect the limits of the two cages on the left and the right of the whole box. If the result fulfilled the condition then compute by analyzing the column projections in the interval . The image used for the experimental environment parameter computation is depicted in Figure 7b. Finally, if the deducted parameters satisfied the condition , proceed to locate with a counting box approach the main movement cluster in the working image generated as explained in Section 2. For the frame in Figure 7a, the result is shown in Figure 7d. The working image generated by consecutive frame differences is shown in Figure 7c. At its bottom, the image also displays the cages and the plastic box extremities: .

Figure 7.

(a) Original frame; (b) generated image to compute the cage parameters; (c) working frame. At its bottom, the image also displays the cages and the plastic box extremities: ; (d) tracking result.

For both stages of the rat behavior analysis project, the experimental box parameters had to be computed for each analyzed frame due to the fact the experiment conductor could interfere at any time during the process and might slightly change the scene angle view.

3.3. Results and Conclusions

All of the two sorts of experiment registrations have been provided by our collaborators from the Center of Biomedical Research of the Romanian Academy, Iasi Branch. For the first part of the experiment, tests were performed on ten movies of various resolutions. The first two movies show only the application studied in our experiments. In the middle camera, the rat did not have the ability to go to the other two rooms. The other films last 30 min and start with an episode of about 8 min. The head identification is accurate for assessing the motion of the rat, and the body framing quadrilateral is a vertical or horizontal rectangle. We detected a few errors in the case of turn-around motions or motion direction changing cases. To decrease the errors in these cases, an entire position file processing was accomplished. One of the two possible places calculated for doubtful cases was selected by means of the proximity to the first certain position of the following frames. For each video, the number of frames with small positioning errors in the framing squares versus the total number of observed frames is detailed in [19]. If the overall analysis is not considered, the error rate varies between 2.4% and 3.5% except in the first recording in which the error rate is about 1.2%. This is because the freezing sequences of the monitored rats last longer than for the rest of this study. After the complete analysis, the error rate decreased by one-third for the recordings.

As for the final part of the experiment, tests were conducted on three videos of different durations (10, 20, 40 min) and resolutions (788 × 246, 808 × 196, and 1092 × 270). The entry numbers in each area were determined errorless. The entry durations were computed error-free for short visits and with errors of a maximum of 1–2 s for visits longer than 1 min.

4. Animal Tracking Method Used in Neuroscience Experiments

4.1. Target Localization

As mentioned in Section 2, to locate the monkey in the current frame, a tracking technique was employed based on the identification of the main cluster in the image resulting from the processing sequences of the three consecutive frames (current frame and the two previous ones), Figure 8c. At the beginning of the tracking process, a model was yielded by storing the gray levels of the rectangle shape around the cluster center of gravity as soon as the following conditions were met: (a) the main detected cluster was relevant; (b) the Canny features, Figure 8b, of the current frame depicted in Figure 8a in the neighborhood of the movement cluster center of gravity were in a range specific for the Canny traits near the border of the empty patch yielded by the sensor box; (c) the average gray level of the cluster center of gravity neighborhood of the current frame indicated a dark area.

Figure 8.

(a). Current frame; (b). canny features image of the current frame; (c). movement features image; (d). localization result provided by the movement features and Canny edges images analysis; (e). localization result visualized in current frame.

The localization result is depicted in the working image, Figure 8d, and in the current frame, Figure 8d. Apparently, the model synthesis is very restrained because of the strong (a–c) conditions and the area borders where the model could be produced. Yet, the model initialization could take place only if the scene was illuminated. Now, the monkey moved a lot but also takes many breaks in the specific areas where the model could be initialized.

After a model was produced, the watching conditions were simplified. A new position was deduced after the movement cluster localization was validated only on the basis of an acceptable model pattern matching score and Canny edges being in the right range. Yet, the model could be applied to consolidate the detection of the current position only if this one was in the close vicinity of the previous one. Every time a head position was calculated, the current model was updated. The pattern-matching approach had no significance when a very relevant motion cluster was found far away from the anterior position. Now, the tracking procedure started, and a new exemplary was produced if the conditions (a–c) were satisfied.

Some precautions have to be considered. The entire process restarted in the case of the current frame being uniform. Another precaution is related to the unlighted frame sequences. The monkey shadow could yield false motion features. This problem can be approached by taking appropriate search zones. Moreover, it is important to choose the Canny traits number and their spatiality (in the sense that the close vicinity of the candidate center of gravity must be Canny features-free).

Four movement areas of the cage scene were considered. Each area has an experimentally determined reliability index. For example, the east domain of the cage has a smaller index when the monkey climbs the box wall, and its shape is not noticeable. The north domain of the cage has a low index, too. For every localization, a confidence index was calculated by means of the movement cluster area reliability index, possibly the model matching score, and the iteration number in case the recognition was resumed using more relaxed locations conditions. As another aspect of reliability index computing, the position calculated is assigned a lower confidence index if it is not computed in the first iteration, and the motion traits number is great. Ultimately, just localizations with an index bigger than 75% are useful for further assessment.

4.2. Head Orientation

Two stages are necessary to compute the head orientation. First, for each frame, the head orientation was calculated considering that the sensor set had a dark color, a right-angled profile, and was mounted in a determined position on the monkey’s head. Secondly, the orientation amounts were filtered, and a complete set of trends was computed.

Moreover, the area located in each frame by the following method was increased by enlarging its size in every direction by half of the primary measures. The new region of interest (ROI) resulting after inflation was converted into the HSV color space (hue, saturation, value). Then, a Gaussian filter was applied to the V component.

The sensor array was identified in the new image with the following operations: (a) the image was binarized by means of a threshold amount in the domain [30; 60] according to the scene brightness; (b) all contours in the binary image were located; (c) for each contour with a significant area (more than 500 pixels), a filling index was calculated as a percentage between the area bordered by the profile and the surface of the smallest bounding rectangle; (d) the specific orientation was offered by the direction of the large part of the bounding rectangle of the area with the biggest filling index.

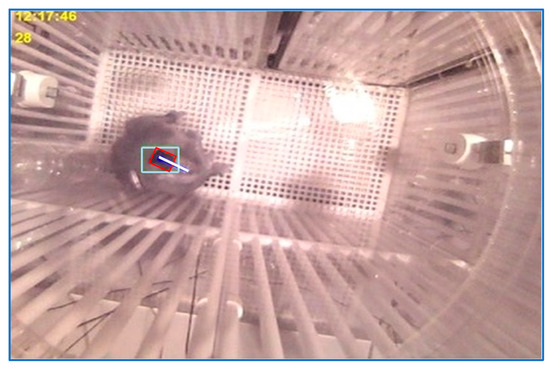

Finally, a 1-D average filter was used for the series of frame angles to clear the high-frequency noise and to estimate the angle measures related to the frames for which the orientation could not be calculated. The angle of the frame i calculated in the first step was changed by the mean of the angles in a temporal window of size t centered in i, from which the places where the angles were not calculated were eliminated, or the suitable confidence index was smaller than a certain threshold. A result of the target orientation computation is shown in Figure 9.

Figure 9.

Head orientation calculus result. The red rectangle frames the sensors array antenna and the white line indicates the head orientation.

4.3. Results and Conclusions

Four videos provided by McGovern Medical School at the University of Texas were used in the experiments. The movies’ resolutions are besides 917 × 688, and all of them have significant light off sequences. A large variation of illumination intensity for the lighted case is presented in the third film. Some of the registration sets contain frame sequences with no information. The angle of view is slightly modified from one video to another.

The analysis outcomes are better detailed in [21]. The tracking error was defined as the percent of frames with a low localization confidence index where the ROIs were not taken into account in the orientation estimations. For three of the four recordings, the error is about 1%. Due to the issues shown in the previous paragraph, the computed error for the fourth sequence is 3.4%. The average angle correction during the filtering stage varies between and These amounts are great but admissible if we know that for many frames, the primary direction and orientation cannot not be calculated because of the weak image’s quality.

Due to the fact that this application was deployed for specific experiments, it is hard to confront the results with those of other animal following developments. The experiment specificities consist of:

- -

- Unequal brightness for lighted scenes and also when light was banned; during the torn-off light episodes, the monkey shadow could yield forged motion traits;

- -

- The manner in which the experimental work was managed: Frequently the monkey's movement was tracked from the cage exterior, and the experimenter’s motion produced not useful movement traits; occasionally, the monkey was fed from the outside of the box, and this fact also does not generate monkey traits;

- -

- The abrupt shifts of the box brightness due to the fact the monkey could accidentally turn the light on/off;

- -

- The monkey motion is highly nonlinear.

To conclude, the monkey localization and its head orientation tasks have an accuracy that makes this practice appropriate for this type of neuroscience experiment.

5. Stress Sequences Identification of a Zoo Panda Bear by Movement Image Analysis

The analysis input consists of sets of zoo films. A set can contain synchronized recordings from one to four video cameras. The four sights from Figure 3 are indicated with the camera number used in the experiment: c5-Figure 3d, c6-Figure 3b, c7-Figure 3a, and c8-Figure 3c.

First, the application would initialize the following parameters needed via a tracking process and trajectory analysis:

- -

- Motion domain selection: To cancel no information motion traits (e.g., visitors’ motions; flaring on walls or ceiling), for every view, a helpful information polygon was set up. Afterward, during the tracking stage, by means of a ‘ray casting’ method, just motion traits inside the polygon were analyzed;

- -

- Panda bear and zoological garden caregiver entry/exit gates coordinates in the information polygon for every sight;

- -

- The complete visibility zones were bordered for each sight. In these zones, it was possible that after a valid motion cluster recognition, there were no discovered motion traits. This could be motivated by a relaxation or freezing moment of a panda. A no-motion characteristic was set up to eliminate the tracking re-restoration;

- -

- For each visible area of a view, a list of the coordinates of the correspondent areas of the other cameras was synthesized.

Then, an already defined project could be launched, or a new project was defined: (a) the views to be fused from the available video set were chosen; (b) for each project view and for the final result, a list with an entry number equal to the minimum frame number of all the project video lengths was generated. Each list entry was a structure including a camera number, movement coordinates, and a movement attribute. For all lists, each list position was initialized to a null camera number, null movement coordinates, and a freezing_or_missing attribute.

For every video camera comprised in the actual experiment, a tracking procedure was initialized. For at least two camera working sequences, these tasks were run in parallel. The next tasks were applied: (a) target localization for every camera session; (b) trajectory estimation from all trajectory views; and (c) obtain trajectory filtering and analysis.

5.1. Localization at Task Level

To estimate the movement strength given by the number of features (features_nmb) of the localized movement cluster, three movement thresholds were used; they are denoted: features_nmb_thr, low_features_nmb_thr, and high_features_nmb_thr. A tracking session is initialized or reinitialized only if:

- (a)

- features_nmb > high_features_nmb_thr. In this case, if the height movement rectangle is specific to the zoo technician and is seized in the zoo technician gate area, an attribute indicating technician presence is set: zoo_technician = 1. This parameter was used in the overall trajectory synthesis where only panda frames were considered;

- (b)

- features_nmb > features_nmb_thr, and the movement cluster is identified in the gate areas;

- (c)

- features_nmb > features_nmb_thr, and the movement cluster is identified in the previous valid position area. This case is possible only if the precedent tracking session provided valid results.

A watching session could end after a caregiver or panda bear exits or after a long series of static frames.

During the tracking, according to features_nmb and the previous frame characteristic, in every sequence of the working session, the existing frame was assigned as being a movement or freezing or no_information frame.

If features_nmb < low_features_nmb_thr and:

- -

- In the preceding frame, the bear was detected in an observable zone, and the movement labeling the actual frame was marked as freezing, and the watching kept going;

- -

- The previous frame was labeled as freezing, and the current frame was also labeled as freezing;

- -

- Else, the frame was marked as no_information, and the watching was canceled.

If features_nmb > features_nmb_thr, the picture was marked as a movement frame and was examined in order to be confirmed as one or not.

If low_features_nmb_thr < features_nmb < features_nmb_thr and:

- -

- The panda bear was viewed in the door area after an exit, the watching zone for the next video frame would be restrictive to the same door area. This specific seeking continued as long as the condition took place;

- -

- The bear was viewed in a visible area other than the door zone after a movement frame series, and the current motion rectangle was almost in the same position as the set of preceding rectangles, then the current frame was marked as freezing;

- -

- Else, the no_information attribute is set.

When the panda was detected in a visible zone and the precedent frame was a movement frame, the motion-seeking area was confined around the current position. Otherwise, the searching area for the counting box method increased to the entire motion zone determined by the ‘ray casting’ method. A very specific watching case could be generated for films provided by camera number 8 when the panda bear had a bath merely behind the two foreground stones. The panda might have been invisible, but because of his bathing activity, the pond water started flooding the zone. In this way, a lot of motion traits were generated in the analyzed image. In this particular case, the watching took place in a limited zone beside the panda's last observable position.

Finally, if the primary was marked as a movement frame, the current frame was not selected as a candidate for trajectory synthesis if: (a) the movement rectangle area greatly surpassed the previous movement area. This could be generated by a bursting, huge movement (caused by flaring or abrupt brightness changes); (b) the motion rectangle was located far away from the previous positions in a visible area; (c) the motion rectangle position did not agree with the motion direction shown in the preceding motion.

Another validation framework was generated by a sequence with the panda slowing down, sitting in a visible area, and starting to play with wood branches. In this case, the movement features were situated apart from the real panda position. For such sequences characterized by a fast movement followed by a stop in an observable area and subsequently far away motion rectangles, the tracking outcome is correlated with a frame assessment that identifies the panda corpus. If the image analysis indicates a panda location very near to the previous position, the current movement rectangle is ignored, and the previous validated movement rectangle is considered.

The final step of the validation of the current frame analysis result consists of the correlation with the previous frame selected by the fusion process. In the case of cameras in the general view, c6 and c7, the watching result could be disturbed by flying birds. Now, the result was validated only if the movement features were located in an area corresponding to the zone detected in the previously detected frame. The previous selected camera could be different from the current one. To check the condition, the list of corresponding areas of the previously selected camera was used.

The tracking task pseudo-code is presented in Appendix A.

5.2. Trajectory Synthesis

The frame with the most observable bear was chosen and archived in the final frame collection at the end of the current frame analysis for all the project cameras. The list would be processed in order to maximize the sequences of the same camera and finally would be analyzed to detect the stress episodes.

The frames with localizations in the exterior of the observable zone were not considered. The same held for those where the zoo technician is present.

As described in pseudo-code in Appendix B, the frame selection was applied by means of a gamut of rules that establish a hierarchy of observable zones for the four video cameras.

5.3. Trajectory Analysis

The procedure of the trajectory synthesis provides the list of fused panda positions. Each entry of the final list also includes the frame number and the motion attribute (movement, freezing, or no_information). Also, the trajectories picked up from each project camera are available.

The synthesized trajectory was filtered in order to simplify the stress episode detection by generating more compact sequences of the same camera. The final trajectory synthesis performed at the end of each frame analysis chose the best available view. Sometimes, when the panda was moving in a full-view area, the target was sensed by at least two cameras. From the two options with almost the same information offered, the synthesis technique favors the one already selected previously. There are situations when the selection procedure switches to another available position because the previously selected camera did not provide a position due to some tracking error. Later on, the former selected camera could provide a better result than the currently selected view, and again, a switch occurs.

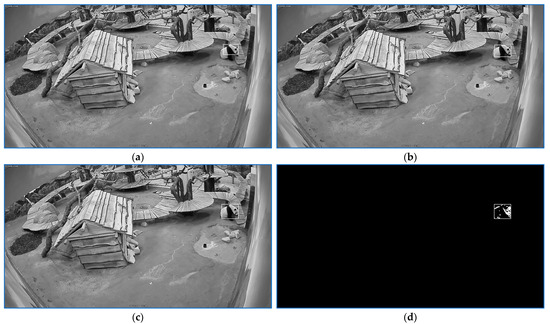

For high-resolution registrations, the six filtering methods presented in [22] were improved. Due to the new implementation, the recognition rate increased for three specific stress categories. The six trajectory optimizations are linked to motion shapes: freezing–moving switches, frequent walks on the same scene path, etc. Each of the six filtering approaches employs the same technique but is applied to different contexts. So, we shall detail only the first filtering step, which is meant to detect the sequences bordered by large selection domains of the same camera. Inside the list, short position sequences of other video cameras are introduced and possibly alternate with a few video frames of the current camera. To obtain a more compact sequence of the main camera, each short succession of the complementary camera would be changed with the corresponding series of the current camera. The process is shown in Figure 10 where Figure 10a,d is the right end of the first large series of current cameras; Figure 10c,f is the left extremity of the second large series of current cameras. Figure 10b shows a selection substitution from the current camera to the complementary camera. The new camera series starting from the position shown in Figure 10b until the last position of the interleaved sequence would be changed with the correspondent sequence of a current camera whose start is shown in Figure 10e. The procedure is summarized also by the pseudo-code in lines 1–9.

Figure 10.

First filtering stage of the ending path. (a,d) depict the right end of the first sequence of current camera; (c,f) show the left extremity of the second sequence of the same camera. (b) shows the beginning of an in-terleaved sequence of the complementary camera. (e) indicates the beginning of the current camera sequence replacing the interleaved sequence of the complementary camera.

Finally, in order to identify the stress sequences, the following processing stages were implemented in the last variant of the computed path:

- (1)

- For each frame gap of the unfilled frame positions bounded by the same useful information from the camera, perform the following: Each parameter structure of gap intermediary frame , would be filled with movement mass center coordinates: ; , and the attribute would be labeled as moving if at least one pair position is marked as moving. Else, the new positions are marked as freezing;

- (2)

- For every element of the updated list filter, the motion characteristic by counting the number of every characteristic type in a window is centered in the current position. Ultimately, the position characteristic is the characteristic type with the utmost number of votes;

- (3)

- For every pair with an identical available camera number, assess the local velocity: . The velocity is then weighted by camera number and by camera resolution;

- (4)

- For every available place in the last list, filter the velocity by averaging the velocities of good places in a window centered in the actual position.

To assess the stress sequences, three thresholds were used: . According to the velocity amount and the zoo surface of the examined frames, and for a particular velocity domain, there are defined five stress classes: stress running, stress walking 1, stress walking 2, stress walking 3, and stress stationary:

- -

- Positions on the list with are labeled as ;

- -

- Every place on the list, besides the stress feature, with and that is a partition of a moving series consequence of a recording period in a complete visible zone is marked as ;

- -

- Every place on the list, besides stress feature, with and that is a partition of a series consequence of a still period in one of the garden housings is marked as ;

- -

- Every place on the list, besides stress feature, with that is a partition of a series consequence of a still period in one of the garden housings, and the path series has at least one height, is marked as . Here, height is defined as the point located at a significant distance to the line approximating the path section;

- -

- Tag with the characteristic : the frame series with a duration greater than 5 s in which the panda bear bathes in the basin and is viewed by camera 8. Every place in such a frame series must have the motion center of gravity situated close to the basin.

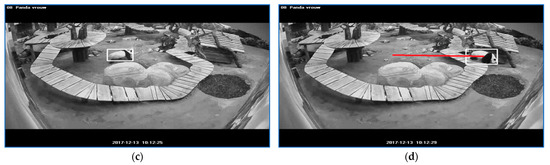

The start of a ‘stress running’ sequence is depicted in Figure 11a. The sequence end is illustrated in Figure 11b. The sequence is followed by a segment labeled as ‘stress walking 1′ whose start is depicted in Figure 11c and the end in Figure 11d. The first episode lasted for 1.5 s, and the second had a duration of 2.3 s.

Figure 11.

The start of a ‘stress running’ sequence is depicted in (a). The sequence end is illustrated in (b). The sequence is followed by a segment labeled as ‘stress walking 1’ whose start is depicted in (c) and the end in (d). The white rectangle frames the panda and the red line indicates the linear path that panda traveled for a specific stress sequence.

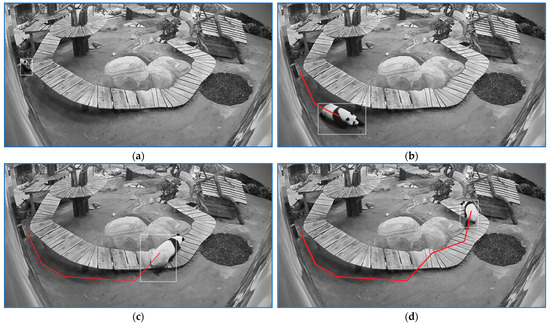

The start, the end, and two intermediary positions of a sequence labeled with ‘stress walking 2’ are illustrated in Figure 12.

Figure 12.

Four positions of a sequence labeled as ‘stress walking 2‘. (a) indicates the sequence start. (d) shows the sequence end and (b,c) depict intermediate positions on the path. The rectangle frames the panda and the red line indicates the linear path that panda traveled for a specific stress sequence.

The start, the end, and two intermediary positions of a sequence labeled as ‘stress walking 3’ are illustrated in Figure 13.

Figure 13.

Four positions of a sequence labeled as ‘stress walking 3‘. (a) indicates the sequence start. (d) shows the sequence end and (b,c) depict intermediate positions on the path. The rectangle frames the panda and the red line shows the panda trajectory from the sequence start to the depicted position.

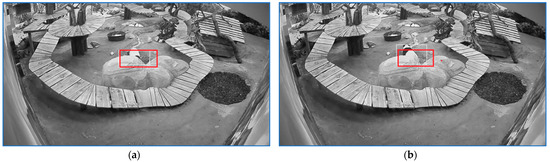

The start and the end of a 30 s sequence of ‘stress stationary’ are illustrated in Figure 14.

Figure 14.

First frame (a) and last frame (b) of a sequence labeled as ‘stress stationary’. When panda is seized in the area behind of the two stones and the movement features cluster tends to elongate to the north the search area is restrained inside the zone depicted by the red rectangle.

Appendix C presents the pseudo-code of the list filtering and analysis procedure.

5.4. Results and Conclusions

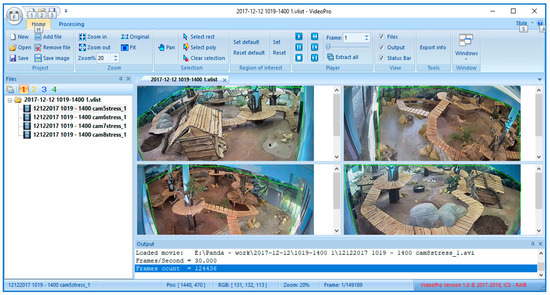

This shown implementation was the theme of a feasibility research contract with the company SC Centric IT Solutions Romania and is related to the tracking and recognition of recorded animals’ actions or behaviors by video analysis.

The main window of the user interface is displayed below (Figure 15).

Figure 15.

Main window of the application for animal activity recognition.

The methods were tested on a large set of video registrations of two to four synchronized recordings given by the beneficiary comprising 480 h of high-resolution and 150 h of low-resolution movies with zoo activity.

Every tracking sequence was analyzed with great accuracy due to the correlation of the current position with the preceding frame information related to the last path obtained by the path fusion procedure. Actually, the computed trajectory at the final part of the tracking stage is errorless. Before the last step of filtering and analysis of the synthesized trajectory, due to the many camera switches, just classification of the running stress states could be readily accomplished because the running parts yielded long path series in the last list. The walking and stationary stress state recognition could be as easy as in the last positions list; they are constituted by series provided by the same video camera. The six optimization stages shown in the previous section led to a better recognition rate.

The classification accuracy for the five stress classes varies between 90% and 96% in the case of the low-resolution recordings as it is detailed in [22]. As mentioned earlier, for the high-resolution recordings , the improvement of the filtering methods was conducted with a better recognition rate (Table 1) for three stress types from the whole stress set, namely stress walking 2, stress walking 2, and stress walking 3. Now, for the high-resolution videos, the classification rate for all stress classes varies between 96% and 98%.

Table 1.

Improvement of stress episode rate recognition for high-resolution registrations.

It is difficult to compare these recognition rates with other application results due to the specificities of the panda habitat that strongly affect the panda behavior. Three of the five stress patterns, namely stress walking 2, stress walking 3, and stress stationary, are directly related to the specific locations of the whole observed area. However, the results are good enough considering the final purpose of this application.

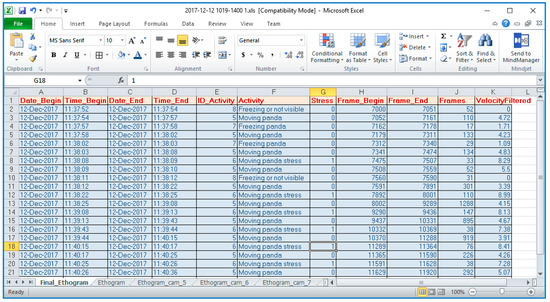

Finally, the stress episode detection result is provided in an Excel format, as it is shown in the image below (Figure 16).

Figure 16.

Stress episode detection. Processing results in Excel format.

6. Conclusions

The paper describes three applications for tracking and studying the behavior of animals in captivity. The three experiments are different both in terms of the configuration and size of the scene in which the animals are located, as well as in the type of actions followed and analyzed. The applications were developed within research projects and contracts, and the results obtained allow the continuation of research for a more in-depth analysis.

Author Contributions

The authors equally contributed. Conceptualization, F.R.; methodology, F.R. and S.-I.B.; software, F.R. and S.-I.B.; validation, F.R., S.-I.B., H.-N.C., R.L. and C.D.N.; formal analysis, F.R., S.-I.B. and H.-N.C.; investigation, F.R. and H.-N.C.; data curation, S.-I.B., R.L. and C.D.N.; writing—original draft preparation, F.R.; writing—review and editing, F.R., S.-I.B., H.-N.C., R.L. and C.D.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Romanian Academy as part of the research project Signals Analysis and Recognition, Information Fusion, Research Theme Methods of Artificial Intelligence in Biomedical Images Analysis, except for the research presented in Section 5—Stress Sequences Identification of a Zoo Panda Bear by Movement Image Analysis, which was developed by the Romanian Academy with the financing contract no. 8821/19.12.2017 with SC CENTRIC IT SOLUTIONS ROMÂNIA SRL: ‘Pilot Research Program regarding the analysis and processing of images to recognize behaviors or activities of some animals’.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Restrictions apply to the availability of this data. Data were obtained from application beneficiaries for this research only.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

| Algorithm A1. Task Trajectory synthesis procedure denoted tracking_task |

| Input: iFrame = Current frame of the specific view; camera_nmb = current camera indicative; start_Frame = initial frame number of the processed sequence; |

| Output: Tracking rectangles coordinates / frame file |

| 01. movement attributes initializations; |

| 02. compute the gray level average of the current frame; |

| 03. if view gray_level_average[i] is too low or too high |

| 04. return (-1, -1); |

| 05. if current view is low resolution then low_resolution = 1; |

| 06. localize movement cluster (procedure Section 2 completed with a ‚ray casting’ selection and eventually with a searching area around previous position if the panda was in a full visible zone); |

| 07. if not_first_time_localization == 0 |

| 08. if the number of movement features is less than high_features_nmb_thr then |

| 09. if zoo_technician = 1 and the movement cluster is localized in the area of the gate where panda was fostered during technician session then |

| 10. not_first_time_localization = 1; valid_movement = 1; zoo_technician = 0; |

| else |

| 11. if the movement is in the gates area or around previous valid position then |

| 12. not_first_time_localization = 1; valid_movement = 1; |

| else |

| 13. valid_movement = 0; end if (lines 11, 09) |

| else |

| 14. if zoo_technician = 0 |

| 15. if the current frame is the first movement frame of the sequence and the movement is strong and around zoo technician gate then |

| 16. zoo_technician = 1; not_first_time_localization = 1; valid_movement = 1; |

| else |

| 17. if the movement is in the gates area or around previous valid position then |

| 18. not_first_time_localization = 1; valid_movement = 1; |

| else |

| 19. valid_movement = 0; end if (lines 17, 15) |

| else |

| 20. if the movement is around the area of the gate where panda was fostered during technician session and also the last movement was detected in the area of the technician gate then |

| 21. zoo_technician = 0; not_first_time_localization = 1; valid_movement = 1; |

| else |

| 22. not_first_time_localization = 1; valid_movement = 1; end ifs (lines 20, 14, 08) |

| else |

| 23. if the number of movement features is less than low_features_nmb_thr then |

| 24. maintain_gate_search = 0; |

| 25. goto line 63; // no or weak movement analysis; end if (line 23) |

| 27. if the number of movement features is less than features_nmb_thr and (iFrame-last_information_frame > frame_gap_thr or maintain_gate_search == 1) then |

| 28. if features are not located in the gate areas of the scene then |

| 29. maintain_gate_search = 0; goto line 63; // no or weak movement analysis; |

| 30. else |

| 31. maintain_gate_search = 1; valid_movement = 1; end if (line 28) |

| 32. else |

| 33. maintain_gate_search = 0; valid_movement = 1; end ifs (line 27, 07) |

| 34. if valid_movement == 1 then |

| 35. if one of the following conditions is true |

| 1. suddenly bursting configuration with more than half of the movement features far away from the current movement; |

| 2. current movement cluster is far away from the position in full view areas where panda was previously sleeping; |

| 3. current movement cluster is not in the area of zoo technician gate and the last exit was of the technician (zoo_technician == 1) |

| 36. then valid_movement = 0; |

| 37. if iFrame-last_information_frame > frame_gap_thr then |

| 38. not_first_time_localization = 0; end ifs (lines 37, 35, 34) |

| 39. if valid_movement == 0 then |

| 40. if zoo_technician == 1 |

| 41. return (-1, -1); end if (line 40) |

| 42. |

| 43. scroll down the all processed information/frame; |

| 44. ; end if (line 39) |

| 45. if panda_sleeping == 0 and the last five movement rectangles are included one in the preceding one and movement features are decreasing then |

| 46. if the number of the movement features is less than a threshold then |

| 47. panda_sleeping = 1; end if (line 46, 45) |

| 48. if panda_sleeping == 1 |

| 49. if the current movement rectangle is not included in the previous one but not far away from this one then |

| 50. panda_sleeping = 0; |

| 51. scroll down the all processed information/frame; last_information_frame = iFrame; |

| 52. else |

| 53. set the current movement rectangle to the previous one and perform a search for movement features. |

| 54. if the number of movement features is greater than low_features_nmb_thr then |

| 55. panda_sleeping = 0; |

| 56. scroll down the all processed information/frame; last_information_frame= iFrame; |

| 57. else |

| 58. valid_movement = 0; end if (lines 54, 49, 48) |

| 59. if zoo_technician == 1 |

| 60. return (-1, -1); |

| 61. else |

| 62. ; end if (line 59) |

| 63. if iFrame-last_information_frame > frame_gap_thr and panda_sleeping == 0 and zoo_technician == 0 then |

| 64. not_first_time_localization = 0;//initialize the tracking procedure |

| 65. if zoo_technician == 1 and the previous computed target coordinates were in the technician gate area then |

| 66. zoo_technician =0; end if (line 65) |

| 67. if panda_sleeping == 1 then |

| 68. if the last chosen position by the fusion process is of a different view than the current one and there is no match with the current view position then |

| 69. panda_sleeping = 0; |

| 70. else |

| 71. if there is weak movement check for small displacements |

| 72. if small displacements then |

| 73. update new position; panda_sleeping = 0; last_information_frame = iFrame; |

| 74. |

| 75. end ifs (lines 72, 71, 68) |

| 76. else |

| //check for sleep condition |

| 77. if iFrame-last_information_frame == 1 and panda_sleeping == 0 then |

| 78. if the computed pose in current view is in a suitable area for freezing then |

| 79. if the last chosen position by the fusion process is of a different view than the current one and there is a match with the current view position then |

| 80. if in the previous five frame there were not significant movements, related to low_resolution parameter, or panda in the last frame was detected in a full view area (for instance on a high platform) then |

| 81. panda_sleeping = 1; end ifs (lines 80, 79, 78, 77, 67) |

| 82. if panda_sleeping == 1 |

| 83. if zoo_technician == 1 |

| 84. ; |

| 85. else |

| 86. ; end if |

| 87. scroll down the all processed information/frame; |

| 88. |

| 89. else |

| 90. ; |

| 91. scroll down the all processed information/frame; |

| 92. ; end if (line 82, 63) |

Appendix B

| Algorithm A2. Trajectory synthesis procedure |

| Input: Video sequences (1-4) |

|

Output

: List

of tracking rectangles coordinates of the most visible position from all

views/ frame file; frame number of the analyzed position and the movement attribute (moving, freezing). |

| 01. Choose the camera (view) set to be included in the

project; Generate the list results: one list for each project camera; one list for fused trajectory; For each project video frame is allocated an entry list include ing: camera number; localization coordinates and movement attributes. |

| 02. Establish the tracking mode: parallel (two to

four camera tasks) or sequential (for debug purpose); |

| 03. for each project camera do |

| 04. set the polygon of useful view of the entire camera scene; |

|

05. set the areas of doors scene through panda or

zoo technician could enter in or quit the scene; |

|

06. set the areas where could not be considered

asleep in case of lack of movement features for the current camera; |

|

07. set the areas where the movement features should

be erased because are generated by the time and frame counters or by the water waves for the views including water ponds (mainly the fourth view); |

| 08. end for |

|

09. for each frame, denoted iFrame, of the

current video sequence (start_frame to end_frame) do |

|

10. if iFrame–start_Frame < 3 compute the gray level average (denoted gray_level_average_current) for each view; |

|

11. if at least one gray_level_average_current

is too low or too high (the image is entire black or white) |

| 12. start_Frame = iFrame; |

| 13. continue; end if |

| 14. fill the list gray_level_average[3] of each view: |

| task(i).gray_level_average[2] = task(i). gray_level_average[1] |

| task(i).gray_level_average[1] = task(i) .gray_level_average[0] |

| task(i).gray_level_average[0] = task(i). gray_level_average_current; |

| 15. continue; end if |

|

16. if parallel _mode launch tracking

task (iFrame, camera_nmb, start_frame) of the all cameras in the project; |

| 15. else |

|

16. for each camera in project execute tracking

task (iFrame, camera_nmb, start_frame); end for, end if |

|

17. if at least one of the tasks returns a

value related to zoo technician presence in the scene do not consider the current frame in the fusion process; end if |

|

18. if a task returns non valid coordinates,

others than those signalizing the technician presence do not consider it in the fusion process; end if |

| 19. if only one task returns valid position retain its view as most suitable view; end if |

|

20. if at least two tasks return valid

positions retain most suitable view considering area localization weight and the previous view in the fused views chain; |

|

21. if the previous selection is near the

border of a full view area, neighbor of another full view area, and the movement speed is fast (lot of movement features) do not switch views |

| else |

|

22. if the previous selection is near

the fading view border of a full view area and at least one of the others available views is in a full view area consider this one instead the current view; end if (lines 22, 21, 20) |

| 23. end for; |

Appendix C

| Algorithm A3. List filtering and analysis |

|

Input

: List

of tracking rectangles coordinates of the most visible position from all

views/ frame file; frame number of the analyzed position and the movement attribute (moving, freezing, no_information). |

| Output : Excel sheet pointing to stress episodes of the analyzed sequence. |

| 01. for each project camera do |

|

02. detect in the final list the pair intervals with

the same current camera with more entries than each contained sub-interval. The sub-intervals can be only of the selected camera or of the complementary camera (complementary cameras: 6 and 7, respectively 5 and 8); |

| 03. if there is at least one pair of such intervals do |

| 04. for each interval delimited by the pair intervals |

| 05. for each sub-interval of complementary camera do |

|

06. if there are positions of

selected camera delimited at left by the left side of the current sub-interval and at right by the left side of the next sub-interval of selected camera then |

|

07. replace current subinterval

of complementary camera with all the specified positions of selected camera; |

| 08. else |

|

09. eliminate current sub-interval

from the list by setting its positions with null number and freezing_or_missing attribute ; end if, for, for, if, for |

| 10. for each project camera do |

|

11. detect the pair intervals with the same current

camera with more entries than each contained sub-interval. The sub-intervals can be only of the selected camera or of the opposite camera (pairs of opposite cameras: (6,5), (6, 8) respectively (7,5) and (7,8)); In addition, the second interval of the pair is followed by a significant interval of the same opposite camera from the current interval; |

| 12. if there is at least one pair of such intervals do |

| 13. for each interval delimited by the pair intervals |

| 14. for each sub-interval of the opposite camera do |

|

15. if there are positions of

selected camera delimited at left by the left side of the current sub-interval and at right by the left side of the next sub-interval of selected camera then |

|

16. replace current subinterval

of opposite camera with all the specified positions of selected camera; |

| 17. else |

|

18. eliminate current sub-interval

from the list by setting its positions with null number and freezing_or_missing attribute ; end if, for, for, if, for |

| 19. if both general view cameras (6 and 7) are in the project do |

|

20. for each k entry of camera

positions list (there are two lists) compute a visibility parameter as follows: |

| 21. if panda is in foreground in a full visibility area: visibilityci(k) = 1 end if |

| 22. if panda is in foreground in a not full visibility area: visibilityci(k) = 0 end if |

| 23. if panda is in background |

| 24. if camera_nmb = 7 then visibilityci(k) = −1 |

| 25. else |

| 26. if new position is included in excepted area visibilityci(k) = 1; |

| 27. else visibilityci(k) = −1 end ifs, for |

| 28.

if there is at least one switch to the other general camera with visibilityci(k) = 1 followed by a sequence containing a re-switch to the former selection but this time with visibilityci(k) = −1 and again a switch to the best view camera |

| 29. for each such a sequence do |

|

30. replace the subinterval of weak view

camera with all the not-selected positions of the best view camera; end for, if |

| 31. if there is at least one switch from camera 6 to camera 7 with visibilityc6(k) = 1 followed by a sequence containing a re-switch to the former selection of camera 6 with visibilityci(k) = 1 and again a switch to the best view camera then |

|

32. count from the end of the included sequence

of camera 6 consecutive positions with visibilityci(k) = −1 |

| 33. for each such a sequence do |

| 34. if counter not null |

|

35. replace the last counter positions of

the interval of weak view camera with all the not-selected positions of the best view camera; end if, for, if, if (line 19) |

| 36. detect in current final list the sequences of transitions c8->c7>c8->c7. |

|

37. for each transition where camera 7 area is

fully visible and located behind the three levels platform do |

|

38. replace the second sequence c8 with the

not-selected positions of camera 7 in the same frame interval with the sequence c8 to be eliminated; end for |

|

39. detect in current final list the sequences of

transitions c7->c6 for periods when only one or both of these cameras provide information; |

|

40. for each transition where camera 7 area is

fully visible and located behind the three levels platform do |

|

41. if the first entry of c6 segment has ‘pause’ attribute replace the sequence c6 with the

not-selected positions of camera 7 in the same frame interval with the sequence c6 to be eliminated; end if, for |

| 42. for each project camera do |

|

43. detect the sequences starting and ending with the

same camera containing only intervals of the complementary camera alternating with those of the starting selected camera of the sequence (complementary cameras: c6 and c7, respectively c5 and c8); |

|

44. for each sequence compute the duration of

selection for each camera in current sequence and select as winner the camera that detained more time the selection |

| 45. for each sequence interval of camera to be replaced do |

|

46. if there are positions of selected

camera delimited at left by the left side of the current sub-interval and at right by the left side of the next sub-interval of selected camera then |

|

47. replace current subinterval of

complementary camera with all the specified positions of selected camera; |

| 48. else |

|

49. refill the interval to be replaced

with the last selected position of the winner camera. Set the still attribute for all positions; end if, for, for, for |

| 50. for each project foreground camera do |

| 51. detect a sequence of consistent length with mostly central list interval in full visible area of |

| current camera; |

|

52. continue searching as long as next sequence

in the final list is short and of low visibility positions of a general view camera; |

| 53. if there is not such a sequence goto 51 (continue searching from the current position) |

| 54. else |

|

55. if next sequence is of consistent

length with mostly central list interval in full visible area of complementary camera |

|

56. replace the sequences found in step 52

with the most appropriate sequence from the lists of foreground cameras; |

| 57. goto 51 (continue searching from the current position); |

| 58. else |

| 59. goto 51 (continue searching from the current position); end if, if, for |

|

60. for each sequence in the fused list with the

same camera but with a gap of unfilled position complete the gap according with paragraph a) of the procedure description; end for |

|

61. for every position of the updated list filter

the motion characteristic by computing the number of each characteristic type in a window centered in the actual position. At the end, the position characteristic will be the characteristic class with the utmost number of votes; end for |

|

63. for every pair with the same good camera

number calculate the local velocity suitable with the paragraph c) of the procedure description; end for |

|

64. for every available position in the list

filter the velocity by averaging the velocities of good places in a window centered in the actual position; end for |

| 65. Label with attribute stress running each position in the list with vi > thr_v1 |

| 66. Label with attribute stress walking 1 each position in the list, without stress attribute, with thr_v2 < thr_v1 and that is part of a sequence following a repos period in a full visible area; |

|

66. Label with attribute stress walking 2 each position in the list, without stress attribute, with thr_v2 < thr_v1 and is part of a sequence following a still period in one of the garden shelters; |

| 67. Mark with attribute stress walking 3 each place in the list, besides stress characteristic, with thr_v3 < vi thr_v2 that is part of a sequence following a still period in one of the garden housings, and the path sequence has at least one height; |

| 68. Label with attribute stress stationary the episodes with a duration more than 5 seconds, in which panda bathes in the basin, and is viewed by camera 8. Each place in such a series must have the motion center of gravity situated nearby the basin; |

|

69. Synthesize

an Excel sheet indicating the sequences (start frame, end frame) with stress attribute. |

References

- Farah, R. Computer Vision Tools for Rodent Monitoring. Ph.D. Thesis, University of Montreal, Montreal, Canada, July 2013. [Google Scholar]

- Farah, R.; Langlois, J.M.P.; Bilodeau, G.-A. Catching a rat by its edglets. IEEE Trans. Image Process. 2013, 22, 668–678. [Google Scholar] [CrossRef] [PubMed]

- Farah, R.; Langlois, J.M.P.; Bilodeau, G.-A. Computing a Rodent’s Diary. Signal Image Video Process. 2016, 10, 567–574. [Google Scholar] [CrossRef]

- Koniar, D.; Hargaš, L.; Loncová, Z.; Duchoň, F.; Beňo, P. Machine vision applications in animal trajectory tracking. In Computer Methods and Programs in Biomedicine 127; Elsevier: Amsterdam, The Netherlands, 2016; pp. 258–272. [Google Scholar]

- Ishii, I.; Kurozumi, S.; Orito, K.; Matsuda, H. Automatic Scratching Pattern Detection for Laboratory Mice Using High-Speed Video Images. IEEE Trans. Autom. Sci. Eng. 2008, 5, 176–182. [Google Scholar] [CrossRef]

- Luxem, K.; Sun, J.J.; Bradley, S.P.; Krishnan, K.; Yttri, E.; Zimmermann, J.; Pereira, T.D.; Laubach, M. Open-Source Tools for Behavioral Video Analysis: Setup, Methods, and Development. 2022. Available online: https://arxiv.org/ftp/arxiv/papers/2204/2204.02842.pdf (accessed on 15 April 2023).

- Rodriguez, A.; Zhang, H.; Klaminder, J.; Brodin, T.; Andersson, P.L.; Andersson, M. ToxTrac: A fast and robust software for tracking organisms. Methods Ecol. Evol. 2018, 9, 460–464. [Google Scholar] [CrossRef]

- Patman, J.; Michael, S.C.J.; Lutnesky, M.M.F.; Palaniappan, K. BioSense: Real-Time Object Tracking for Animal Movement and Behavior Research. In Proceedings of the 2018 IEEE Applied Imagery Pattern Recognition Workshop (AIPR), Washington, DC, USA, 9–11 October 2018; pp. 1–8. [Google Scholar]

- Itskovits, E.; Levine, A.; Cohen, E.; Zaslaver, A. A multi-animal tracker for studying complex behaviors. BMC Biol. 2017, 15, 29. [Google Scholar] [CrossRef] [PubMed]

- Iswanto, I.A.; Li, B. Visual Object Tracking Based on Mean-shift and Particle-Kalman Filter. ScienceDirect. Procedia Comput. Sci. 2017, 116, 587–595. [Google Scholar] [CrossRef]

- Mathis, M.W.; Mathis, A. Deep learning tools for the measurement of animal behavior in neuroscience. ScienceDirect. Curr. Opin. Neurobiol. 2020, 60, 1–11. [Google Scholar] [CrossRef] [PubMed]

- Liu, X.; Yu, S.-Y.; Flierman, N.; Loyola, S.; Kamermans, M.; Hoogland, T.M.; De Zeeuw, C.I. OptiFlex: Multi-Frame Animal Pose Estimation Combining Deep Learning With Optical Flow. Front. Cell. Neurosci. 2021, 15, 621252. [Google Scholar] [CrossRef] [PubMed]

- Rodriguez, A.; Zhang, H.; Klaminder, J.; Brodin, T.; Andersson, M. ToxId: An efficient algorithm to solve occlusions when tracking multiple animals. Sci. Rep. 2017, 7, 14774. [Google Scholar] [CrossRef] [PubMed]

- Schütz, A.K.; Krause, E.T.; Fischer, M.; Müller, T.; Freuling, C.M.; Conraths, F.J.; Homeier-Bachmann, T.; Lentz, H.H.K. Computer Vision for Detection of Body Posture and Behavior of Red Foxes. Animals 2022, 12, 233. [Google Scholar] [CrossRef] [PubMed]

- Chen, P.; Swarup, P.; Matkowski, W.M.; Kong, A.W.K.; Han, S.; Zhang, Z.; Rong, H. A study on giant panda recognition based on images of a large proportion of captive pandas. Ecol. Evol. 2020, 10, 3561–3573. [Google Scholar] [CrossRef] [PubMed]

- Luxem, K.; Mocellin, P.; Fuhrmann, F.; Kürsch, J.; Miller, S.R.; Palop, J.J.; Remy, S.; Bauer, P. Identifying behavioral structure from deep variational embeddings of animal motion. Commun. Biol. 2022, 5, 1267. [Google Scholar] [CrossRef] [PubMed]

- Bethell, E.J.; Khan, W.; Hussain, A. A deep transfer learning model for head pose estimation in rhesus macaques during cognitive tasks: Towards a nonrestraint noninvasive 3Rs approach. Appl. Anim. Behav. Sci. 2022, 255, 105708. [Google Scholar] [CrossRef]

- Zuerl, M.; Stoll, P.; Brehm, I.; Raab, R.; Zanca, D.; Kabri, S.; Happold, J.; Nille, H.; Prechtel, K.; Wuensch, S.; et al. Automated Video-Based Analysis Framework for Behavior Monitoring of Individual Animals in Zoos Using Deep Learning—A Study on Polar Bears. Animals 2022, 12, 692. [Google Scholar] [CrossRef] [PubMed]

- Rotaru, F.; Bejinariu, S.I.; Luca, M.; Luca, R.; Niţă, C.D. Video processing for rat behavior analysis. In Proceedings of the 13-th International Symposium on Signals, Circuits and Systems, ISSCS 2017, Iaşi, România, 13–14 July 2017; pp. 1–4. [Google Scholar]

- Rotaru, F.; Bejinariu, S.I.; Luca, M.; Luca, R.; Niţă, C.D. Video tracking for animal behavior analysis. In Memoirs of The Scientific Sections of Romanian Academy; Series IV, Tome XXXIX; Publishing House of the Romanian Academy: Bucharest, Romania, 2016; pp. 37–46. [Google Scholar]

- Rotaru, F.; Bejinariu, S.I.; Costin, H.; Luca, R.; Niţă, C.D. Animal tracking method used in neurosciene experiments. In Proceedings of the 8th IEEE International Conference on E-Health and Bioengineering-EHB 2020, Iaşi, România, 29–30 October 2020. [Google Scholar]

- Rotaru, F.; Bejinariu, S.I.; Costin, H.; Luca, R.; Niţă, C.D. Captive Animal Stress Study by Video Analysis. In Proceedings of the 10th IEEE International Conference on E-Health and Bioengineering-EHB 2022, Iaşi, România, 17–18 November 2022. [Google Scholar]

- Paxinos, G.; Huang, X.F.; Toga, A.W. The Rhesus Monkey Brain in Stereotaxic Coordinates; Academic Press: Cambridge, MA, USA, 2000. [Google Scholar]

- Milton, R.; Shahidi, N.; Dragoi, V. Dynamic states of population activity in prefrontal cortical networks of freely-moving macaque. Nat. Commun. 2020, 11, 1948. [Google Scholar] [CrossRef] [PubMed]