Abstract

High-efficiency video coding (HEVC/H.265) is one of the most widely used video coding standards. HEVC introduces a quad-tree coding unit (CU) partition structure to improve video compression efficiency. The determination of the optimal CU partition is achieved through the brute-force search rate-distortion optimization method, which may result in high encoding complexity and hardware implementation challenges. To address this problem, this paper proposes a method that combines convolutional neural networks (CNN) with joint texture recognition to reduce encoding complexity. First, a classification decision method based on the global and local texture features of the CU is proposed, efficiently dividing the CU into smooth and complex texture regions. Second, for the CUs in smooth texture regions, the partition is determined by terminating early. For the CUs in complex texture regions, a proposed CNN is used for predictive partitioning, thus avoiding the traditional recursive approach. Finally, combined with texture classification, the proposed CNN achieves a good balance between the coding complexity and the coding performance. The experimental results demonstrate that the proposed algorithm reduces computational complexity by 61.23%, while only increasing BD-BR by 1.86% and decreasing BD-PSNR by just 0.09 dB.

1. Introduction

As the demand for multimedia content continues to grow, high-definition video services are becoming a sought-after goal [1,2]. To efficiently transmit a huge amount of high-resolution video data, the ITU-T VCEG and ISO/IEC MPEG organizations continuously update and develop video transmission standards. The technique based on CU partition, commonly used in various video coding standards, enhances coding efficiency but at the expense of increased complexity. Take the widely used HEVC/H.265 [3] as an example: although HEVC increases the coding efficiency by nearly 50% over advanced video coding (AVC/H.264) [4], its complex prediction techniques result in a two- to four-fold increase in coding complexity [5]. Among these, the quad-tree technology adopted for coding unit (CU) partition consumes a significant amount of coding time. It divides the coding tree unit (CTU) [6] into multiple CUs based on the quad-tree structure. This process uses a recursive search algorithm that traverses all four CU sizes in the CTU and calculates the rate-distortion (RD) costs [7] to determine the best CU partition. Therefore, optimizing the CU partition is important for balancing complexity and coding performance. The most recent generation of video coding standards, VVC/H.266 [8], is 18 times more complex than HEVC [9], and HEVC remains more popular in industrial applications than VVC [10]. Therefore, without loss of generality, this paper verifies the proposed algorithm in HEVC and explores ways to reduce the complexity of HEVC.

A wide variety of algorithms have been developed to decrease complexity in video coding, primarily by skipping or controlling the traversal rate-distortion optimization (RDO) search at each CU depth. These algorithms can be classified as heuristic or machine learning. Heuristic algorithms [11,12,13] typically extract the prior information based on artificially designed features to assess the complexity of CUs, allowing for the skipping of redundant calculations and optimizing CU partition. Texture information, spatial correlation and statistical RD are commonly used to predict CU depth characteristics. Wang et al. [14] proposed a fast reference image selection strategy that utilizes motion vectors of adjacent coding blocks after performing CU depth pruning based on block texture features and motion information. Liu et al. [15] introduced an adaptive CU selection method that employs depth hierarchy and texture complexity to filter out unnecessary CUs. Moreover, raw redundant pattern candidates can be reduced based on the texture orientation of each prediction unit (PU). Zhang et al. [16] proposed a statistical model-based CU optimization scheme and an intra-prediction model decision. They utilized the texture and depth recursion of spatially adjacent CUs to predict the size range of the current CU. The correlation between the prediction patterns is then utilized to terminate the complete RDO process of each CU depth in advance.

In recent years, machine learning-based algorithms applied to fast CU partitions have gained more and more attention. Among these algorithms, the support vector machine (SVM) based algorithm is a classical approach. Hu et al. [17] proposed a method that utilizes dual SVMs to determine the CU size based on the texture characteristics of the CU. Pakdaman et al. [18] proposed a weight-based adaptive SVM model for deciding the CU partition, thereby reducing the RD cost calculation of traversing all CU sizes. Amna et al. [19] cascaded three SVMs to obtain sufficient features for accurate CU partition. Westland et al. [20] introduced a new algorithm for determining the simplest and most efficient decision tree model, enabling the quick implementation of CU partition. Compared to heuristic algorithms, traditional machine learning methods can learn more parameters, making the learned models more accurate. However, although determining the partition structure of the CTU by optimizing the decision boundary reduces the computational effort, global image features are difficult to capture, which reduces the accuracy of the CU partition.

Recent studies have demonstrated that CNN-based algorithms [21,22,23,24,25,26,27,28,29,30,31] outperform traditional learning-based algorithms in prediction accuracy for the CU partition, because they automatically extract and classify features, and that they accelerate the CU partition while maintaining high coding performance. Liu et al. [21] first applied a CNN to HEVC intra-frame CU partition and deployed it on hardware, thereby reducing encoder complexity. Xu et al. [22] proposed a hierarchical CU partition mapping (HCPM) to address the problem of repeated calls to the classifier. Huang et al. [23] combined two state-of-the-art acceleration algorithms, CNN and naive Bayes, based on RD loss to accelerate the classification of CU and PU, respectively. Galpin et al. [24] utilized a CNN-based approach to accelerate the encoding of intra-frame image slices. Some algorithms design different CNNs to predict the different CU depths. For example, Zhang et al. [25] developed a thresholding model associated with CU depth and quantization parameter (QP) and proposed three different CNNs corresponding to different CU sizes. Zaki et al. [26] proposed a method based on three ResNets to recognize each of the three sizes of CUs. However, deploying different CNNs at different CU depths makes the network more complex and indirectly affects the training speed. To better relate the output of the CNN to the CU partition structure, some algorithms design the output of the network with a structure corresponding to the CU depth to achieve better prediction. For example, Feng et al. [27] utilized an 8 × 8 matrix to represent the CU depth in a CTU and then predicted each depth value based on a CNN. Tahir et al. [28] used a system based on the integration of online and offline random forest classifiers to segment CUs to accommodate the dynamic nature of video content. Li et al. [29] used a new two-stage strategy for the fast CU partition decision.
The CTU partition was predicted in the first stage using a bagged tree model. In the second stage, the partition of a 32 × 32 CU was modeled as a 17-output classification problem. However, although this phased algorithm can effectively improve the coding efficiency, it is difficult to extend to the other CU sizes since it is only suitable for 32 × 32 CUs. Yao et al. [30] constructed a CNN-based dual network structure and applied it to predict the CU partition. Li et al. [31] proposed an RL-based CU partition algorithm that modeled the CU decision-making process as a Markov decision process (MDP). Based on the MDP, two different CNNs are used for the depth-independent CU decision learning, thus reducing the computational complexity. This combined structure of two networks, although seemingly convincing, did not yet make full use of the pixel features of the adjacent CUs.

The above algorithms have studied texture complexity in different ways and obtained good results. However, there is still potential and need for further exploration of the texture features depending on the application scenario. In fact, the high computational complexity incurred during encoding is usually due to the CUs in complex texture regions, while encoding is fast and uncomplicated for the CUs in smooth texture regions. Therefore, unnecessary division can be reduced by skipping the partition of CUs in smooth texture regions, thus reducing the coding complexity. This paper proposes an algorithm for fast CU partition based on joint texture classification decisions and machine learning. First, a heuristic-based method is used to determine the texture complexity of the CU, which avoids dividing the CUs in smooth texture regions into smaller sizes. Then, the CUs in complex texture regions are partitioned using a CNN-based method to avoid the traversal search. Finally, the whole algorithm performs three stages of judgment for each CU to ensure that the best CU partition results can be obtained.

In brief, the contributions of this paper can be summarized as follows.

- A classification decision method based on the global and local texture features of the CU is proposed, which efficiently divides the CUs into smooth and complex texture regions. Moreover, the CUs in smooth texture regions are no longer divided, which avoids redundant CU partition.

- A novel CNN based on a modified depthwise separable convolution is designed for predicting the CU partition in complex texture regions, thus replacing the RDO process in traditional CU classification and effectively reducing the complexity of the CU partition while maintaining the RD performance.

- Combining the texture classification decision with the proposed CNN achieves both early termination for CUs in smooth texture regions and direct prediction for CUs in complex texture regions using the CNN, thus achieving a good balance between the coding complexity and the coding performance.

2. Proposed Fast CU Partitioning Algorithm

In this section, the proposed algorithm for fast CU partition is described in detail. First, the experimental results are observed and the motivation for this paper is presented. Second, the proposed classification decision method for the image texture features is elaborated. Third, the proposed CNN is detailed. Finally, a CU partition method based on the texture classification and the CNN is summarized.

2.1. Observation and Motivation

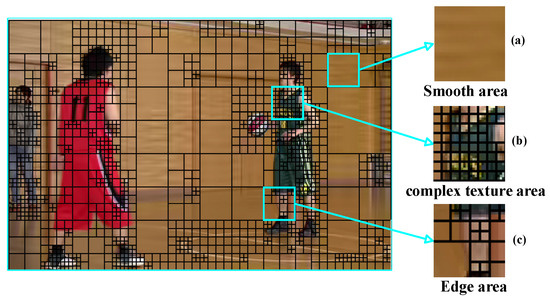

Figure 1 shows the CU partition in the reconstructed video frames (Basketball Pass sequence, QP = 32). The smooth texture region in Figure 1a tends to have large CUs, whereas the complex texture region in Figure 1b tends to have smaller CUs. The edge region in Figure 1c exhibits both characteristics. This shows that large CUs usually appear in smooth texture regions, while small CUs usually appear in complex texture regions. Therefore, the texture information has a strong correlation with the CU partition.

Figure 1.

CU partition of Basketball Pass reconstructed frames. (a) For smooth area. (b) For complex texture area. (c) For edge area.

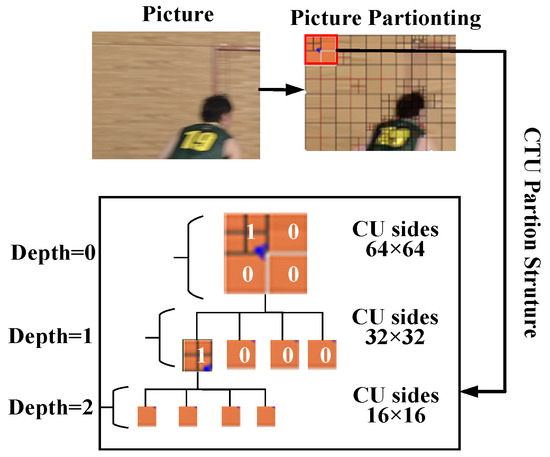

In HEVC, all the CUs of the CTU are traversed based on the quad-tree technique, and the RD costs of all CUs are compared to determine the final CU partition. The quad-tree technique is shown in Figure 2, where 0 and 1 mean not splitting and splitting, respectively. The encoding process usually splits a complex texture region into smaller CUs through multiple recursive splits, thus determining the best CU partition structure. In contrast, for smooth texture regions, only one intra-frame mode is needed to accurately predict the pixels of the current CTU, and no split into smaller CUs is required. This indicates that the CU partition of smooth regions can be terminated in advance by judging the image texture, which can reduce the coding time significantly.
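The exhaustive search described above can be sketched as a short recursion. This is a minimal illustration rather than the HM implementation: `best_partition`, `toy_cost`, and all cost values are hypothetical, and a real encoder would evaluate prediction modes and the coded rate inside the cost function.

```python
from typing import Callable, List, Tuple

Block = Tuple[int, int, int]  # (x, y, size)


def best_partition(x: int, y: int, size: int,
                   rd_cost: Callable[[int, int, int], float],
                   min_size: int = 8) -> Tuple[float, List[Block]]:
    """Return (cost, leaf blocks) of the cheapest quad-tree partition."""
    cost_no_split = rd_cost(x, y, size)
    if size <= min_size:
        return cost_no_split, [(x, y, size)]
    half = size // 2
    cost_split = 0.0
    leaves: List[Block] = []
    # Recurse into the four quadrants and accumulate their best costs.
    for dy in (0, half):
        for dx in (0, half):
            c, sub = best_partition(x + dx, y + dy, half, rd_cost, min_size)
            cost_split += c
            leaves.extend(sub)
    # Split only when the four sub-blocks are strictly cheaper.
    if cost_split < cost_no_split:
        return cost_split, leaves
    return cost_no_split, [(x, y, size)]


def toy_cost(x: int, y: int, size: int) -> float:
    """Hypothetical cost: blocks anchored in the top-left 16x16 corner are
    'complex' and are penalized unless they are already 8x8."""
    is_complex = x < 16 and y < 16
    return (size * size / 64.0) * (4.0 if is_complex and size > 8 else 1.0)


calls: List[Block] = []


def counted_cost(x: int, y: int, size: int) -> float:
    calls.append((x, y, size))
    return toy_cost(x, y, size)


cost, leaves = best_partition(0, 0, 64, counted_cost)
print(cost, len(leaves), len(calls))  # prints: 64.0 10 85
```

Even though only the corner ends up finely split, the cost function is evaluated for all 85 candidate blocks (1 + 4 + 16 + 64), which is the redundancy that early termination aims to remove.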

Figure 2.

The quad-tree partition structure.

As mentioned above [11,12,13,14,15,16], the heuristic-based approaches can reduce the complexity of the coding to some extent by skipping the unnecessary CU partition. The machine learning-based method further reduces the encoding complexity by overcoming the shortcomings of the heuristic manual feature extraction. However, the machine learning-based approach does not sufficiently take into account the characteristics of different regions and different CU depths, which limits the degree of complexity reduction. More specifically, the CNN-based CU partition method predicts all CUs identically, regardless of the features of the texture. This is highly unnecessary for smooth regions and may limit the effectiveness of the algorithm. Based on the above observations and analysis, there is an incentive to propose corresponding processing methods for the smooth or complex texture regions, and fully utilize the advantages of heuristic algorithms and machine learning algorithms to reduce the computational complexity while maintaining the coding performance.

2.2. Texture Judgment Decision

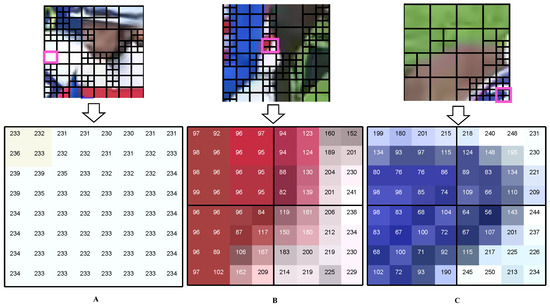

To measure the CU texture complexity, three different 8 × 8 blocks of pixels from the sequence Race Horses are selected for observation. Since the luminance component represents the brightness or intensity information of the image, which is more helpful in perceiving the edge and texture features of the image, the pixel values here are the luminance pixel values, as shown in Figure 3. It can be seen that in block A the pixel values are distributed relatively uniformly and do not differ significantly, whereas in areas similar to blocks B and C the pixel values fluctuate greatly and are not evenly distributed. Therefore, the texture of a CU is determined based on decisions on both global and local metrics.

Figure 3.

Pixel values in different CU blocks. (A) shows the pixel values of a flat texture region, (B) those of a texture edge region, and (C) those of a complex texture region.

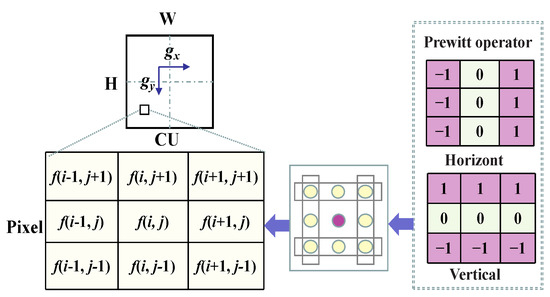

The texture information can be obtained by comparing the gradients in different directions. The most common ones are the horizontal and vertical edge information. The Prewitt operator [32] can sensitively capture both the vertical and horizontal edge information of a pixel. Therefore, these two directional gradient operators are used to calculate the gradient amplitude of the CU and measure the local texture of the CU, as shown in Figure 4. The amplitude of the directional gradient represents the difference between adjacent pixels along this direction. The closer the difference is to zero, the smoother the region is in this direction. If the gradient difference between adjacent blocks is large enough, they have different texture features and need to be divided into smaller CUs.

Figure 4.

Schematic diagram of CU using the two-directional gradient operator.

The gradient of CU pixels is calculated as follows:

where $H$ and $W$ are the height and width of the CU, respectively, whose values are selected from {64, 32, 16}; $G_x$ and $G_y$ are the horizontal and vertical gradients, respectively; $P(i, j)$ is the pixel value at the coordinate $(i, j)$; and $G$ is the gradient amplitude.

For each pixel value in the CU, the gradient amplitude $G$ at its position is computed from $G_x$ and $G_y$. To better characterize the complexity of the current texture, the root mean square is used to denote the average level of edge strength across the image. First, the average of the gradient amplitudes of all pixel positions in the CU is calculated, and then the root mean square of the gradient amplitude (GMSR) is calculated as follows:
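A minimal sketch of this local metric is given below, under several stated assumptions that are not confirmed by the text: the amplitude is taken as |Gx| + |Gy| (a Euclidean norm is an equally plausible choice), border pixels without a full 3 × 3 neighborhood are skipped, and GMSR is taken as the plain root mean square of the amplitudes. The function names are illustrative only.

```python
import math
from typing import List

Block = List[List[int]]  # luminance values, indexed [row][col]


def prewitt_amplitude(p: Block) -> List[float]:
    """Gradient amplitude at every interior pixel using the Prewitt kernels."""
    h, w = len(p), len(p[0])
    amplitudes: List[float] = []
    for i in range(1, h - 1):
        for j in range(1, w - 1):
            # Horizontal gradient: right column minus left column over 3 rows.
            gx = sum(p[i + k][j + 1] - p[i + k][j - 1] for k in (-1, 0, 1))
            # Vertical gradient: bottom row minus top row over 3 columns.
            gy = sum(p[i + 1][j + k] - p[i - 1][j + k] for k in (-1, 0, 1))
            amplitudes.append(float(abs(gx) + abs(gy)))  # assumed L1 amplitude
    return amplitudes


def gmsr(p: Block) -> float:
    """Root mean square of the gradient amplitudes (assumed definition)."""
    g = prewitt_amplitude(p)
    return math.sqrt(sum(v * v for v in g) / len(g))


flat_block = [[100] * 8 for _ in range(8)]             # uniform block
edge_block = [[50] * 4 + [200] * 4 for _ in range(8)]  # vertical edge

print(gmsr(flat_block))  # 0.0 for a perfectly uniform block
print(gmsr(edge_block))  # large value caused by the vertical edge
```

A uniform block yields zero, while a single sharp edge dominates the metric, which is the behavior the local judgment relies on.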

Since the pixels in regions with smooth textures are usually consistent, the root mean square error (RMSE), which reflects the degree of dispersion of the pixel values around the mean, is chosen as the global texture complexity metric of the CU. A larger RMSE indicates that the CU has a dispersed distribution of pixel values, and a smaller RMSE indicates that the CU has a concentrated distribution of pixel values. The RMSE is calculated as follows:
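As a small sketch of this global metric, the RMSE can be read as the root mean square deviation of the pixel values from their mean over the CU. The function name and the two sample blocks are illustrative assumptions.

```python
import math
from typing import List


def rmse(p: List[List[int]]) -> float:
    """Root mean square deviation of the pixel values from the block mean."""
    values = [v for row in p for v in row]
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))


uniform = [[100] * 8 for _ in range(8)]              # all pixels identical
two_level = [[50] * 4 + [200] * 4 for _ in range(8)]  # half 50, half 200

print(rmse(uniform))    # 0.0
print(rmse(two_level))  # 75.0 (every pixel is 75 away from the mean of 125)
```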

Each CU is evaluated for the global and local complexity. RMSE and GMSR are used for global judgment and local judgment, respectively.

where the two thresholds, one for the global metric and one for the local metric, are predefined, and the result indicates the texture classification of the CU. If the RMSE is not greater than the global threshold and the GMSR is not greater than the local threshold, the CU is identified as a smooth texture region. Otherwise, it is judged to be a complex region.

For CUs larger than 8 × 8, a split flag is used to denote whether the current block is split. If the flag equals 0, no split is needed, i.e., the split is stopped; if it equals 1, the split is needed. The flag is judged from the texture classification of the current CU.

A CU that is classified as smooth texture terminates its partition early, which avoids redundant partitions at greater depths. Algorithm 1 shows the execution flow of texture classification.

| Algorithm 1: Texture judgment decision |

| Input: Size of current CU, THA, THB |

|
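The texture judgment can be sketched as follows. This is an illustrative reading of the decision rule in this section, not a reproduction of Algorithm 1: the function names are hypothetical, the default thresholds are the values selected later in Section 3.3 (0.9 for the global metric and 6 for the local metric), and `None` is used here to mean that the texture stage leaves the decision to a later stage.

```python
from typing import Optional

TH_GLOBAL = 0.9  # RMSE threshold (value chosen in Section 3.3)
TH_LOCAL = 6.0   # GMSR threshold (value chosen in Section 3.3)


def is_smooth(rmse: float, gmsr: float,
              th_global: float = TH_GLOBAL,
              th_local: float = TH_LOCAL) -> bool:
    """Smooth only if both the global and the local metric are within bounds."""
    if rmse > th_global:       # global judgment fails: complex texture
        return False
    return gmsr <= th_local    # local judgment decides the remaining cases


def texture_split_flag(cu_size: int, rmse: float, gmsr: float) -> Optional[int]:
    """Return 0 to stop splitting, or None when texture alone cannot decide."""
    if cu_size <= 8:           # 8x8 CUs are not checked by the texture stage
        return None
    return 0 if is_smooth(rmse, gmsr) else None


print(texture_split_flag(64, rmse=0.5, gmsr=3.0))   # 0: early termination
print(texture_split_flag(64, rmse=0.5, gmsr=9.0))   # None: complex texture
print(texture_split_flag(32, rmse=12.0, gmsr=1.0))  # None: complex texture
```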

2.3. Proposed Network

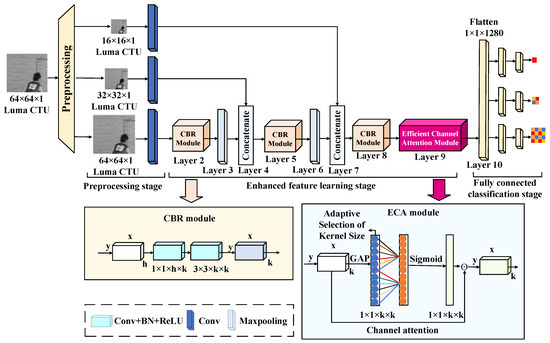

To enable fast prediction of partitioning of CUs with complex textures, Figure 5 shows a novel CNN that enables end-to-end training and learning. The structural depth has 13 layers and can be divided into three stages: the preprocessing stage, the enhanced feature learning stage and the fully connected classification stage. The preprocessing stage enhances the convergence of the features in general. The enhanced feature learning stage leverages the features for low and high-depth feature fusion and end-to-end training for CU partition decisions. The fully connected classification stage fully learns the low-depth and high-depth features to strengthen the global representation of CU partition decision markers. The three stages in the proposed network structure are described in detail below.

Figure 5.

The proposed structure of CNN.

Preprocessing stage (Layer 1): Since the luminance component characterizes visual information better than the color components, the proposed CNN mainly extracts the luminance information of video sequences for analysis, without loss of generality. Global normalization is applied so that the original luminance data lie in the same magnitude range before entering the network, which makes the training converge faster. To facilitate feature learning for CUs of different sizes, the original CTU is down-sampled to three different sizes. Specifically, the 64 × 64 input CU is normalized and down-sampled according to the quad-tree partition sizes in HEVC and then passed through 4 × 4 non-overlapping convolution layers to strengthen the generalization ability of the trained CNN. The resulting CU data are then ready for convolution in the later feature extraction stage.

Enhanced feature learning stage (Layer 2–9): To better predict the CU partition, adequate extraction and effective learning of data are required. Inspired by the depthwise separable convolution proposed in [33], a similar convolutional structure is used to predict the CU partition. However, unlike [33], a novel module named CBR is designed in the newly proposed network. The module consists of two convolutional layers (1 × 1 and 3 × 3). The 1 × 1 convolution is applied before the 3 × 3 convolution to reduce the computational effort. In addition, the module combines batch normalization (BN) and the nonlinear ReLU activation with each convolution to improve the stability and depth of the network, which makes it more suitable for extracting features from the CU partitions.

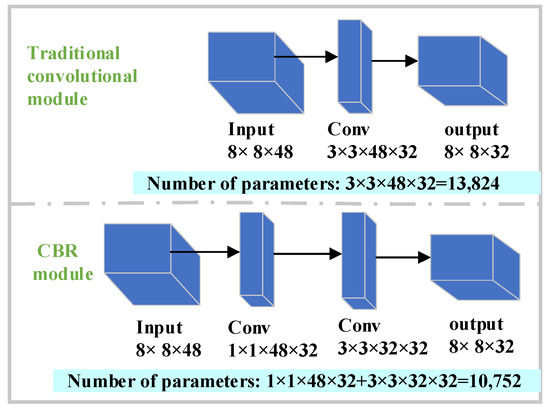

More specifically, the main structure of the CBR module in this paper is designed as 1 × 1 + 3 × 3 (Conv, BN, and ReLU). First, a 1 × 1 convolution is used to reduce the number of channels and to realize channel information interaction, thus avoiding the unlimited increase in the number of channels caused by concatenating the convolution channels in the enhanced feature learning stage. Second, the 3 × 3 convolution requires fewer parameters than larger convolution kernels and enhances the generalization ability and training efficiency of the CNN. It is used after the 1 × 1 convolution to extract the useful features. Furthermore, to explore the computational complexity of the CBR module, Figure 6 compares the number of parameters in the fourth layer of the proposed CNN between the CBR module and the conventional convolutional module. It can clearly be seen that the CBR module has fewer parameters and lower computational costs than the traditional convolutional module. This design maintains high performance in extracting the CU features while improving the efficiency and reducing the complexity of the overall model.
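The parameter saving of a 1 × 1 bottleneck followed by a 3 × 3 convolution can be checked with simple arithmetic. The channel counts below (64 input, 16 bottleneck, 64 output) are hypothetical and are not the values of the fourth layer reported in Figure 6; batch normalization parameters are ignored.

```python
def conv_params(kernel: int, c_in: int, c_out: int, bias: bool = True) -> int:
    """Number of weights in a standard 2-D convolution layer."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)


# Hypothetical channel configuration, chosen only for illustration.
C_IN, C_MID, C_OUT = 64, 16, 64

# Conventional module: a single 3x3 convolution from C_IN to C_OUT.
direct = conv_params(3, C_IN, C_OUT)

# CBR-style module: 1x1 channel reduction followed by a 3x3 convolution.
cbr = conv_params(1, C_IN, C_MID) + conv_params(3, C_MID, C_OUT)

print(direct, cbr)  # prints: 36928 10320
```

With these assumed sizes the bottleneck variant needs roughly 28% of the parameters of the direct 3 × 3 layer; the actual ratio depends on the channel counts used in the network.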

Figure 6.

Comparison of the number of parameters between CBR model and traditional convolution.

After passing the CBR module, the features are fully learned. However, the size of the feature map is not changed, making more redundant information in the deeper features, which indirectly reduces the prediction performance of the network. Therefore, it is necessary to simplify the plane size through the maximum pooling layer to reduce redundant information in the network. The maximum pooling layer kernel size of this network is 2 × 2 and the features do not overlap so that important information can be extracted while avoiding more information loss.

Finally, to fully learn the details of the CU features of four different sizes, the CU data of the three different down-sampling outputs from the preprocessing stage are added after each maximum pooling layer, thus improving the training efficiency of the network. Note that, due to the different feature map sizes, a separate non-overlapping convolution operation is performed on the down-sampled data to enable concatenation at the end. In addition, a lightweight efficient channel attention (ECA) model [34] is introduced to compensate for the convolution operation ignoring critical information between network channels. The ECA model avoids dimensionality reduction and allows for efficient learning of channel information, and thus improves accuracy. Without compromising training time, this module is introduced after the output of the convolution operation to ensure performance stability.
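The channel attention idea can be sketched in plain Python as follows: each channel is averaged globally, a small 1-D convolution is slid across the channel axis without reducing its dimension, and a sigmoid turns the result into per-channel weights. This is a simplified illustration of the ECA mechanism in [34], not the layer used in the proposed network; the kernel values are placeholders for weights that would be learned, and an odd kernel length is assumed.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # indexed [channel][row][col]


def eca(x: FeatureMap, kernel: List[float]) -> FeatureMap:
    """Rescale each channel by a weight computed from neighboring channels."""
    k = len(kernel)
    pad = k // 2
    # Global average pooling: one scalar per channel.
    pooled = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]
    padded = [0.0] * pad + pooled + [0.0] * pad
    weights = []
    for c in range(len(x)):
        # 1-D convolution across channels, shared kernel, zero padding.
        z = sum(kernel[t] * padded[c + t] for t in range(k))
        weights.append(1.0 / (1.0 + math.exp(-z)))  # sigmoid gate
    return [[[v * w for v in row] for row in ch] for ch, w in zip(x, weights)]


# Three 2x2 channels filled with 0, 1 and 2; identity kernel as a placeholder.
features = [[[float(c)] * 2 for _ in range(2)] for c in range(3)]
out = eca(features, kernel=[0.0, 1.0, 0.0])
print(out[1][0][0], out[2][0][0])
```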

Fully connected classification stage (Layer 10–13): The fully connected classification stage is composed mainly of three output layers and six fully connected layers. The output of the network is designed according to the CU depth. The predicted outputs of this stage give the partition probabilities of the CUs at the different depths, with the following expressions:

where $d$ corresponds to the CU depth, and the remaining terms are the classifier corresponding to each depth and the size of the CU. The three predicted outputs correspond to the different CU sizes, respectively. First, the data processed by the ECA are converted into a one-dimensional vector as the input of the fully connected layers. Then, the fully connected layers are divided into three branches corresponding to the prediction outputs described above. Finally, each branch passes through two fully connected layers. To correspond to the CU partition decisions at the different depths, an HCPM structure [22] is used for the output labels. The network outputs here have 1, 4 and 16 elements, respectively. Note that a sigmoid activation is applied at the output layer to maintain the binary properties of all output labels. To prevent overfitting in the proposed CNN, the loss function used to train the network model is the sum of the cross-entropies, which is calculated as follows:

where $y$ denotes the ground-truth labels, $\hat{y}$ is the predicted output of the CNN and $N$ is the total number of samples. The cross-entropy can effectively measure the discrepancy between the model’s predictions and the actual labels, making it a suitable choice for training the network. Table 1 presents the detailed structure and corresponding parameters of the proposed network.
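One way to read "the sum of the cross-entropies" is a binary cross-entropy evaluated on each of the three output branches (1, 4 and 16 values), summed over the branches and averaged over the samples. The sketch below follows that reading; whether the original loss sums or averages within a branch is an assumption, and the function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple

Levels = Tuple[Sequence[float], Sequence[float], Sequence[float]]


def bce(y_true: Sequence[float], y_pred: Sequence[float],
        eps: float = 1e-7) -> float:
    """Binary cross-entropy summed over the entries of one branch."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clamp to keep log() finite
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total


def hierarchical_loss(samples: List[Tuple[Levels, Levels]]) -> float:
    """Sum the per-branch cross-entropies, then average over the samples."""
    loss = 0.0
    for labels, preds in samples:
        loss += sum(bce(t, p) for t, p in zip(labels, preds))
    return loss / len(samples)


# One sample whose 1 + 4 + 16 predictions are all maximally uncertain (0.5).
labels = ([1.0], [1.0, 0.0, 0.0, 0.0], [1.0] + [0.0] * 15)
preds = ([0.5], [0.5] * 4, [0.5] * 16)
print(hierarchical_loss([(labels, preds)]))  # equals 21 * ln(2)
```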

Table 1.

Detailed parameters of the proposed network.

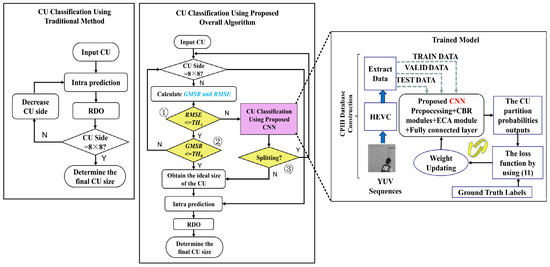
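The hierarchical output labels with 1, 4 and 16 entries can be illustrated by deriving them from a CTU depth map. This sketch assumes a 4 × 4 grid holding one CU depth (0 to 3) per 16 × 16 block of a 64 × 64 CTU, lists the third level in raster order, and writes 0 where HCPM [22] would leave a label undefined because the parent CU is not split; the function name is hypothetical.

```python
from typing import List, Tuple


def hcpm_labels(depth: List[List[int]]) -> Tuple[List[int], List[int], List[int]]:
    """Convert a 4x4 CTU depth grid into split labels for three levels."""
    # Level 1: is the 64x64 CU split at all?
    y1 = [1 if any(d >= 1 for row in depth for d in row) else 0]
    # Level 2: is each 32x32 quadrant split into 16x16 CUs?
    y2 = []
    for qr in (0, 2):
        for qc in (0, 2):
            quad = [depth[qr + r][qc + c] for r in (0, 1) for c in (0, 1)]
            y2.append(1 if any(d >= 2 for d in quad) else 0)
    # Level 3: is each 16x16 CU split into 8x8 CUs?
    y3 = [1 if d >= 3 else 0 for row in depth for d in row]
    return y1, y2, y3


# Only the top-left 32x32 quadrant is split, and only its first 16x16 CU
# is split further down to 8x8.
depth_map = [
    [3, 2, 1, 1],
    [2, 2, 1, 1],
    [1, 1, 1, 1],
    [1, 1, 1, 1],
]
y1, y2, y3 = hcpm_labels(depth_map)
print(y1, y2, sum(y3))  # prints: [1] [1, 0, 0, 0] 1
```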

2.4. Fast CU Partitioning Algorithm

An algorithm is proposed based on the CNN combined with CU-based texture discrimination to make full use of texture information. The fast algorithm not only uses the advantages of the heuristic algorithm to skip the unnecessary CU partition in advance but also uses the advantages of the CNN to replace the complex RDO traversal search of the traditional algorithm. The general flow of the fast algorithm and the traditional method is shown in Figure 7. First, the collected data are input into the designed network for training. Then, the network is trained according to the target loss function. Finally, the trained model is embedded into HEVC to replace the original traversal search process. The proposed algorithm goes through three layers of metrics, corresponding to the global judgment (RMSE), the local judgment (GMSR) and the prediction judgment of the CNN, respectively. Specifically, before starting to partition a CU, its size is checked. Except for 8 × 8 CUs, the texture complexity of each CU is first calculated with the metrics RMSE and GMSR. The global texture complexity of the CU is measured first using the RMSE. If the RMSE does not exceed the global threshold, the complexity of the local texture is then measured. If the GMSR does not exceed the local threshold either, the CU is considered smooth and is not partitioned into smaller sizes. Otherwise, the CNN is used to determine whether the CU requires further partition. In this way, the fast CU partition algorithm evaluates the texture complexity of the current CU and selects the best CU partition through three layers of metrics.
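The three-layer decision can be sketched as a recursive procedure. This is a simplified illustration under stated assumptions: the three callbacks are hypothetical stand-ins for the RMSE metric, the GMSR metric and the trained CNN, the CNN is queried per CU here although the actual network outputs hierarchical labels for a whole CTU, and an 8 × 8 CU is treated as a leaf.

```python
from typing import Callable, List, Tuple

Block = Tuple[int, int, int]  # (x, y, size)
Metric = Callable[[int, int, int], float]


def fast_partition(x: int, y: int, size: int,
                   rmse_fn: Metric, gmsr_fn: Metric,
                   cnn_split_fn: Callable[[int, int, int], bool],
                   th_global: float = 0.9,
                   th_local: float = 6.0) -> List[Block]:
    """Return the leaf CUs chosen by the texture checks and the CNN."""
    if size <= 8:
        return [(x, y, size)]
    # Layers 1 and 2: the local metric is evaluated only if the global passes.
    smooth = (rmse_fn(x, y, size) <= th_global
              and gmsr_fn(x, y, size) <= th_local)
    # Layer 3: the CNN is consulted only for complex-texture CUs.
    if smooth or not cnn_split_fn(x, y, size):
        return [(x, y, size)]
    half = size // 2
    leaves: List[Block] = []
    for dy in (0, half):
        for dx in (0, half):
            leaves += fast_partition(x + dx, y + dy, half, rmse_fn, gmsr_fn,
                                     cnn_split_fn, th_global, th_local)
    return leaves


cnn_calls: List[Block] = []


def toy_rmse(x: int, y: int, size: int) -> float:
    return 50.0 if (x < 16 and y < 16) else 0.0  # complex top-left corner


def toy_gmsr(x: int, y: int, size: int) -> float:
    return 0.0


def toy_cnn(x: int, y: int, size: int) -> bool:
    cnn_calls.append((x, y, size))
    return True  # placeholder prediction: always split


leaves = fast_partition(0, 0, 64, toy_rmse, toy_gmsr, toy_cnn)
print(len(leaves), len(cnn_calls))  # prints: 10 3
```

In this toy CTU the CNN is invoked for only three CUs and no RD cost is evaluated, whereas a full traversal would visit every candidate block at every depth.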

Figure 7.

Comparison of the flowcharts of the fast and the traditional algorithms. The three layers of metrics correspond to the global judgment (RMSE), the local judgment (GMSR) and the prediction judgment of the CNN, respectively.

3. Experimental Results and Performance Analysis

3.1. Performance Evaluation Index

To evaluate the performance of the proposed algorithm, three evaluation metrics are considered: the saved coding time, which evaluates the coding complexity, and the BD-PSNR and BD-BR [35], which evaluate the variation in the objective quality of the image. The coding complexity metric is derived from the following equation:

where the two encoding times are those consumed by the HEVC test platform HM and by the proposed algorithm, respectively. The QP is taken from {22, 27, 32, 37} when encoding. The BD-PSNR reflects the residuals between the original and reconstructed pixels, which is calculated as:

where the two terms denote the peak signal-to-noise ratio of the HM and of the proposed algorithm, respectively. The BD-BR indicates the average difference in bit rates between the original algorithm and the proposed algorithm, as follows:

where the two terms denote the output bitrate of the HM and of the proposed algorithm, respectively.
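The three comparisons can be sketched as simple relative differences between HM and the proposed encoder. The sign convention (negative means a saving) is an assumption chosen to match the negative time figures quoted in Section 3.5, and all input numbers are made up. Note that the Bjøntegaard metrics of [35] are normally obtained by fitting RD curves over several QPs, so the per-point differences below are only a simplified stand-in.

```python
def delta_time(t_hm: float, t_prop: float) -> float:
    """Relative change in encoding time, in percent (negative = faster)."""
    return 100.0 * (t_prop - t_hm) / t_hm


def delta_psnr(psnr_hm: float, psnr_prop: float) -> float:
    """PSNR difference in dB (negative = quality loss)."""
    return psnr_prop - psnr_hm


def delta_bitrate(br_hm: float, br_prop: float) -> float:
    """Relative change in bitrate, in percent (positive = more bits)."""
    return 100.0 * (br_prop - br_hm) / br_hm


# Hypothetical measurements for one sequence at one QP.
print(delta_time(t_hm=100.0, t_prop=40.0))         # -60.0
print(delta_psnr(psnr_hm=36.5, psnr_prop=36.25))   # -0.25
print(delta_bitrate(br_hm=2000.0, br_prop=2040.0)) # 2.0
```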

3.2. Experimental Parameter Configuration

The algorithm in this paper uses the HEVC reference software HM16.5 to evaluate CU partition decisions. The experiments are performed on 18 video sequences from the JCT-VC standard test set [36], and all settings follow the defaults in the CTC. Since only the complexity reduction of the HEVC intra-coding process is considered, the test sequences are encoded with the encoder_intra_main.cfg [37] configuration file. More detailed information on the parameter settings of the test sequences is shown in Table 2. The hardware environment is a computer with an NVIDIA GeForce RTX 3050 Laptop GPU and an AMD Ryzen 7 5800H with Radeon Graphics @ 3.20 GHz, running Windows 11. The GPU is used only to speed up training and is disabled in testing. The proposed CNN is implemented in TensorFlow [38] version 1.15.4. A robust dataset is necessary for the proposed method to learn fully. The image dataset CPIH [39] contains CU partition information for 2000 images with different resolutions, which provides the diverse and sufficient data needed to learn to predict CU partitions. Therefore, the proposed CNN is trained and validated using this dataset. The batch size for all training is set to 64 to predict CU partitions more accurately, and the network is trained for one million iterations from a random initialization.

Table 2.

Experimental parameter settings of the test sequence.

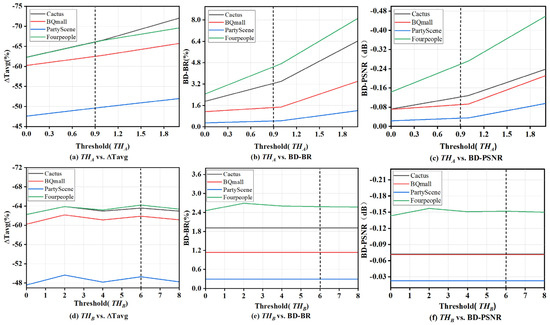

3.3. Optimal Threshold Decision

As described in Section 2.4, the thresholds directly affect the judgment of texture complexity and the subsequent RD performance. To find suitable thresholds that balance the computational complexity and the RD performance well, threshold experiments are conducted by a pre-assignment method. Specifically, to ensure the generality and accuracy of the results, the BQ Mall, Cactus, Party Scene, and Four People sequences, which have different features and different resolutions, are selected for the test. The ΔT, BD-BR, and BD-PSNR curves varying with the thresholds are obtained, as shown in Figure 8. From Figure 8a–c, it can be seen that ΔT increases steadily with the global threshold, while the BD-BR and the BD-PSNR remain relatively stable when the global threshold is less than 1 and change steeply when it is greater than 1. This is because, for a global threshold, any small change leads to fluctuations in encoding performance and complexity, since the features of the entire image are relatively stable. In addition, when the threshold exceeds a certain value, the performance changes significantly. Considering that a global threshold greater than 1 may cause a significant change in the bit rate and PSNR, Figure 8a–c indicates that 0.9 is a reasonable choice for the global threshold. Figure 8d–f shows the ΔT vs. BD-BR and ΔT vs. BD-PSNR curves varying with the local threshold, with the global threshold fixed at 0.9. It is clear that these curves fluctuate very little with the local threshold. This is because the global metric already takes into account a large number of texture features, so the texture differentiation varies little with the local threshold. However, the local metric can avoid some redundant RD calculations due to its simplicity, thereby reducing time. It can be seen that the encoding time is shortest when the local threshold equals 2 or 6, while the changes in BD-BR and BD-PSNR are smoother when it equals 6.
Therefore, a local threshold of 6 is empirically chosen.

Figure 8.

The saved encoding time, BD-BR and BD-PSNR curves varying with the threshold values. The global threshold is equal to 0.9 and the local threshold is equal to 6.

3.4. Ablation Experiment

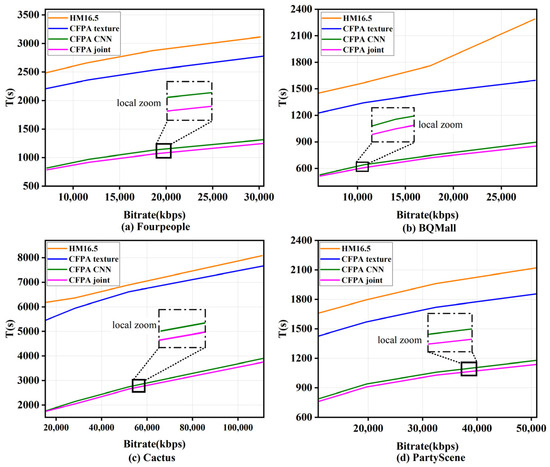

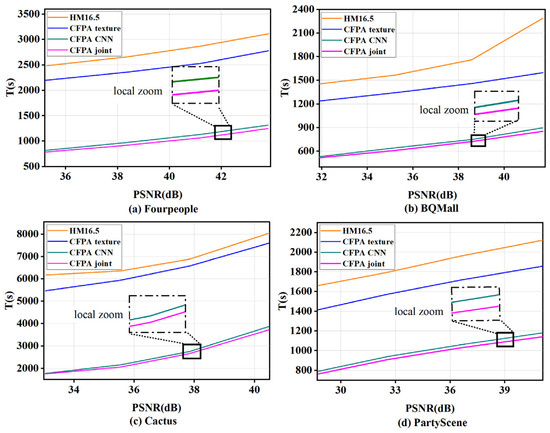

For convenience, the proposed texture classification decision, the CNN and the total algorithm are referred to as the texture-only, CNN-only, and combined algorithms, respectively. The original encoder method is denoted as HM16.5. To compare the performance of these algorithms, test sequences with different resolutions (BQ Mall, Cactus, Party Scene, and Four People) are encoded with QP values {22, 27, 32, 37}. Figure 9 and Figure 10 show the variation curves of bit rate, PSNR and coding time. They confirm that the texture-only, CNN-only, and combined algorithms all significantly reduce the coding time compared to the original algorithm. Figure 9 and Figure 10 also include localized enlarged views. The proposed combined algorithm has the least coding time. This advantage arises because the algorithm avoids the CU partition of smooth regions and further uses the proposed CNN to predict the CU partition of complex texture regions, which effectively removes many redundant calculations and reduces the coding complexity.

Figure 9.

Curves of bitrate vs. encoding time.

Figure 10.

Curves of PSNR vs. encoding time.

3.5. Reduced Complexity and RD Performance Evaluation

Table 3 compares the ΔT (%), BD-PSNR, and BD-BR of the proposed algorithm with those of HM16.5 for QP values {22, 27, 32, 37} on the standard test sequences. The proposed algorithm reduces the coding time by 55.76%, 59.92%, 62.75%, and 66.53% relative to the original encoder at the four QPs, respectively, with only a 1.86% increase in BD-BR and a 0.09 dB reduction in BD-PSNR. Notably, it reduces the computational complexity for the high-resolution classes (2560 × 1600 and 1920 × 1080) by almost 68% and 74%, respectively. In particular, for sequences with more complex textures and more intense motion, such as Basketball Drive and Basketball Drill, the algorithm reduces the computational complexity by nearly 79% and 76%, respectively. Additionally, for sequences with smoother textures, such as Kimono, it reduces the computational complexity by almost 83%. The low complexity is partly attributed to treating smooth and complex texture regions differently, and mainly to the proposed CNN, which directly predicts the CU partition of the complex texture regions that would otherwise require high coding complexity. These results show that the proposed algorithm decreases the coding complexity while maintaining sound RD performance.

Table 3.

Experimental results of the proposed algorithm on the JCT-VC standard test sequences.

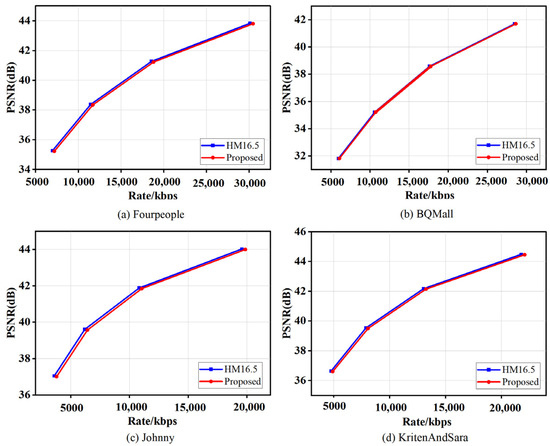
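As a quick arithmetic check on the per-QP figures quoted above, the sketch below averages the four reported time savings. It assumes the common definition of ΔT as the relative change in encoding time with respect to the reference encoder; the function name `delta_t` and the example timings are hypothetical and only illustrate the sign convention.

```python
def delta_t(t_ref: float, t_test: float) -> float:
    """Relative change in encoding time (%); negative when t_test is faster.

    Assumed definition: 100 * (T_test - T_ref) / T_ref.
    """
    return 100.0 * (t_test - t_ref) / t_ref


# Time savings quoted in the text for QP = 22, 27, 32, 37 (in %).
savings_by_qp = {22: 55.76, 27: 59.92, 32: 62.75, 37: 66.53}

# Plain mean over the four QPs.
mean_saving = sum(savings_by_qp.values()) / len(savings_by_qp)

# Hypothetical timings in seconds, used only to show the sign convention.
example = delta_t(t_ref=100.0, t_test=40.0)

print(f"mean saving over QPs: {mean_saving:.2f}%")
print(f"example delta T: {example:.1f}%")
```

The mean of the four rounded per-QP values is 61.24%, which agrees with the reported 61.23% average to within rounding.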

The four sequences with the largest video quality loss (Basketball Drill, Four People, Johnny, and KristenAndSara) are selected for comparison with HM16.5 to assess the RD performance of the proposed algorithm. Figure 11 shows the RD performance of the proposed algorithm and HM16.5 for the four sequences. The RD curves of the proposed algorithm and the original encoder almost coincide. This indicates that the proposed algorithm is robust and stable: even for the sequences with the largest quality loss, the loss is negligible compared to the original encoding.

Figure 11.

The RD performance of the proposed algorithm and HM16.5 for the four sequences (Basketball Drill, Four People, Johnny, and KristenAndSara).
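How close two RD curves are can be summarized by BD-BR. The following is a minimal sketch of the widely used Bjøntegaard calculation (cubic fit of log-bitrate against PSNR, averaged over the shared PSNR range), not the authors' evaluation script. The function name `bd_rate` and all RD points are hypothetical and are not measurements from this paper.

```python
import numpy as np


def bd_rate(rate_ref, psnr_ref, rate_test, psnr_test):
    """Bjontegaard delta rate (%): average bitrate change at equal PSNR."""
    log_ref = np.log10(np.asarray(rate_ref, dtype=float))
    log_test = np.log10(np.asarray(rate_test, dtype=float))
    psnr_ref = np.asarray(psnr_ref, dtype=float)
    psnr_test = np.asarray(psnr_test, dtype=float)

    # Cubic fit of log10(bitrate) as a function of PSNR for each encoder.
    fit_ref = np.polyfit(psnr_ref, log_ref, 3)
    fit_test = np.polyfit(psnr_test, log_test, 3)

    # Integrate both fits over the PSNR interval the two curves share.
    lo = max(psnr_ref.min(), psnr_test.min())
    hi = min(psnr_ref.max(), psnr_test.max())
    int_ref = np.polyint(fit_ref)
    int_test = np.polyint(fit_test)
    area_ref = np.polyval(int_ref, hi) - np.polyval(int_ref, lo)
    area_test = np.polyval(int_test, hi) - np.polyval(int_test, lo)

    # Mean log-rate difference, converted back to a percentage.
    avg_log_diff = (area_test - area_ref) / (hi - lo)
    return (10.0 ** avg_log_diff - 1.0) * 100.0


# Hypothetical RD points for four QPs (bitrate in kbps, PSNR in dB).
psnr = [32.1, 35.0, 37.8, 40.6]
rate_hm = [1200.0, 2100.0, 3800.0, 7000.0]
# Synthetic "fast" encoder that needs 10% more bitrate at every PSNR.
rate_fast = [1.1 * r for r in rate_hm]

print(f"BD-BR: {bd_rate(rate_hm, psnr, rate_fast, psnr):.2f}%")
```

By construction, the synthetic curve costs about 10% more bitrate at equal quality, so the printed BD-BR should be close to 10%. Two coinciding curves give a BD-BR of zero.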

To further assess the proposed algorithm, it is compared with three state-of-the-art fast CU partition algorithms implemented in HM16.5, as shown in Table 4. In terms of RD performance, the proposed algorithm achieves a BD-BR of only 1.86%, which is lower than the 6.19% of [21], the 2.25% of [22], and the 1.90% of [23]. Its BD-PSNR of −0.09 dB also outperforms the −0.32 dB of [21] and the −0.11 dB of [22]. Regarding encoding time, the proposed algorithm achieves an average complexity reduction of 61.23%, slightly higher than the 61.09% of [21] and slightly lower than the 61.84% of [22] and the 61.7% of [23], but with a smaller BD-BR loss than all three. The main reason is that the proposed CNN can fully utilize the texture features of smaller CUs to make more accurate partition decisions.

Table 4.

Comparison with fast CU partition decision algorithms on the test sequences.

It is important to note that greater reductions in encoding time usually come with greater RD performance loss, so it is crucial to balance complexity reduction against RD performance. To further examine this tradeoff, a common figure of merit (FoM) [40] is used. FoM relates the two quantities and is calculated as follows:

$$\mathrm{FoM} = \frac{\text{BD-BR}}{\Delta T} \times 100$$

where BD-BR is the bit rate increase and ΔT is the encoding time reduction, both in percent.

Because a desirable algorithm combines a large reduction in encoding time with a small BD-BR increase, a lower FoM is better [41]. Table 5 lists the FoMs of the proposed algorithm and the three state-of-the-art algorithms. The proposed algorithm has a FoM of only 3.0377, which is lower than those of the other algorithms. Therefore, it strikes a good balance between coding time reduction and RD loss.

Table 5.

Comparison with fast CU partition decision algorithms in terms of FoM.

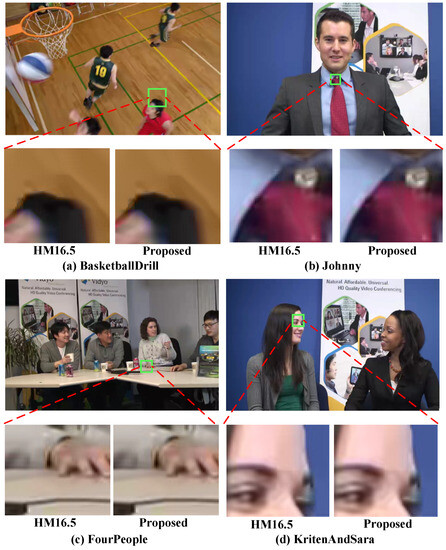
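The FoM comparison can be reproduced from the BD-BR and time-saving figures quoted in the text. The sketch below assumes FoM is the ratio of BD-BR to the encoding time reduction, multiplied by 100, an assumption that reproduces the reported value of 3.0377 for the proposed algorithm. The function name `figure_of_merit` is hypothetical, and values computed this way for [21], [22], and [23] may differ slightly from the table entries because the inputs are rounded.

```python
def figure_of_merit(bd_br_percent: float, time_saving_percent: float) -> float:
    """FoM = BD-BR / time saving * 100 (assumed form); lower is better."""
    return bd_br_percent / time_saving_percent * 100.0


# (BD-BR %, average time saving %) as quoted in the text.
reported = {
    "proposed": (1.86, 61.23),
    "[21]": (6.19, 61.09),
    "[22]": (2.25, 61.84),
    "[23]": (1.90, 61.70),
}

foms = {name: figure_of_merit(bd, dt) for name, (bd, dt) in reported.items()}
best = min(foms, key=foms.get)

for name, value in foms.items():
    print(f"{name}: FoM = {value:.4f}")
print(f"lowest FoM: {best}")
```

With these inputs, the proposed algorithm gives 3.0377, against roughly 10.13 for [21], 3.64 for [22], and 3.08 for [23], so it has the lowest FoM of the four.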

Finally, to assess the subjective quality of the proposed algorithm, intra-frames of the four sequences with the largest video quality loss (Basketball Drill, Four People, Johnny, and KristenAndSara) are selected for comparison with HM16.5 under QP = 37. Figure 12 shows local zoom-in regions of the four sequences encoded with the proposed algorithm and with HM16.5, respectively. In these regions, the subjective quality produced by the two methods is almost identical. This indicates that the video quality loss of the proposed algorithm is negligible.

Figure 12.

The local zoom-in regions of the proposed algorithm and HM16.5 for the four sequences (Basketball Drill, Four People, Johnny, and KristenAndSara, QP = 37).

4. Conclusions and Future Work

In this paper, a method that combines heuristics and deep learning to predict the highly complex CU partition in HEVC is proposed. First, a discriminative method based on image texture is proposed that can skip the CU partition process of smooth texture regions in advance. Second, a CNN-based network is designed to predict the partition of CUs in complex texture regions, thus improving CU partition efficiency. Finally, the image texture recognition decisions are combined with the CNN and embedded in the official HEVC test software HM16.5 to determine the CU partition. The experimental results show that the proposed algorithm reduces intra-frame coding complexity by 61.23% on average compared to HM16.5, while BD-BR increases by only 1.86%.

In future work, we will consider optimizing the heterogeneous architecture of GPUs to accelerate the processing of CNNs, thus further reducing the encoding time consumption. In addition, we will also focus on adapting our method to better fit the block partitioning structure of VVC and consider applying it to other coding modes. These efforts will further improve the applicability and performance of our approach.

Author Contributions

Conceptualization, T.W., G.W. and H.L.; methodology, T.W., G.W., H.L., T.B. and Q.Z.; software, T.W., G.W. and H.L.; validation, T.W., G.W., H.L. and R.W.; formal analysis, T.W., G.W. and H.L.; investigation, T.W., G.W. and H.L.; resources, data curation, T.W.; writing—original draft preparation, T.W. and G.W.; writing—review and editing, T.W., G.W., T.B., H.L., Q.Z. and R.W.; visualization, T.W. and G.W.; supervision, G.W.; project administration, G.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Guangxi Province (Grants No. 2020GXNSFAA297184, No. 2020GXNSFBA297097), the National Natural Science Foundation of China (Grant No. 62161031), and the Science and Technology Planning Project of Guangxi Province (Grant No. AD21238038).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that supports the findings of this study is available from Wei at this email address, wei_geng@nnnu.edu.cn, upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Falkowski-Gilski, P.; Uhl, T. Current trends in consumption of multimedia content using online streaming platforms: A user-centric survey. Comput. Sci. Rev. 2020, 37, 100268.

2. Falkowski-Gilski, P. On the consumption of multimedia content using mobile devices: A year to year user case study. Arch. Acoust. 2020, 45, 321–328.

3. Sullivan, G.J.; Ohm, J.R.; Han, W.J.; Wiegand, T. Overview of the high efficiency video coding (HEVC) standard. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1649–1668.

4. Wiegand, T.; Sullivan, G.J.; Bjontegaard, G.; Luthra, A. Overview of the H.264/AVC video coding standard. IEEE Trans. Circuits Syst. Video Technol. 2003, 13, 560–576.

5. Bossen, F.; Bross, B.; Suhring, K.; Flynn, D. HEVC Complexity and Implementation Analysis. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1685–1696.

6. Guo, H.; Zhu, C.; Xu, M.; Li, S. Inter-Block Dependency-Based CTU Level Rate Control for HEVC. IEEE Trans. Broadcast. 2020, 66, 113–126.

7. Jamali, M.; Coulombe, S. Fast HEVC Intra Mode Decision Based on RDO Cost Prediction. IEEE Trans. Broadcast. 2019, 65, 109–122.

8. Bross, B.; Wang, Y.K.; Ye, Y.; Liu, S.; Chen, J.; Sullivan, G.J.; Ohm, J.R. Overview of the versatile video coding (VVC) standard and its applications. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 3736–3764.

9. Wu, S.; Shi, J.; Chen, Z. HG-FCN: Hierarchical Grid Fully Convolutional Network for Fast VVC Intra Coding. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 5638–5649.

10. The Bitmovin. Video Developer Report. Available online: https://go.bitmovin.com/video-developer-report (accessed on 27 November 2018).

11. Qi, M.B.; Chen, X.L.; Yang, Y.F.; Jiang, J.G.; Jin, Y.L.; Zhang, J.J. Fast coding unit splitting algorithm for high efficiency video coding intra prediction. J. Electron. Inf. Technol. 2014, 36, 1699–1705.

12. Zhang, W.B.; Chen, D.; Yao, X.Y.; Feng, Y.B. Fast intra coding unit splitting algorithm based on spatial-temporal correlation in HEVC. J. Image Graph. 2018, 23, 155–162.

13. Chen, F.; Jin, D.; Peng, Z.; Jiang, G.; Yu, M.; Chen, H. Fast intra coding algorithm for HEVC based on depth range prediction and mode reduction. Multimed. Tools Appl. 2018, 77, 28375–28394.

14. Wang, X.J.; Xue, Y.L. Fast HEVC inter prediction algorithm based on spatio-temporal block information. In Proceedings of the 2017 IEEE International Symposium on Broadband Multimedia Systems and Broadcasting (BMSB), Cagliari, Italy, 7–9 June 2017.

15. Liu, X.G.; Liu, Y.B.; Wang, P.C.; Lai, C.F.; Chao, H.C. An Adaptive Mode Decision Algorithm Based on Video Texture Characteristics for HEVC Intra Prediction. IEEE Trans. Circuits Syst. Video Technol. 2017, 27, 1737–1748.

16. Zhang, Y.; Li, N.; Kwong, S.; Jiang, G.; Zeng, H. Statistical early termination and early skip models for fast mode decision in HEVC intra coding. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2019, 15, 1–23.

17. Fu, B.; Zhang, Q.Q.; Hu, J. Fast prediction mode selection and CU partition for HEVC intra coding. IET Image Process. 2020, 14, 1892–1900.

18. Pakdaman, F.; Yu, L.; Hashemi, M.R.; Ghanbari, M.; Gabbouj, M. SVM based approach for complexity control of HEVC intra coding. Signal Process. Image Commun. 2021, 93, 116177.

19. Amna, M.; Imen, W.; Soulef, B.; Sayadi, F.E. Machine Learning Based approaches to reduce HEVC intra coding unit partition decision complexity. Multimed. Tools Appl. 2022, 81, 2777–2802.

20. Westland, N.; Dias, A.S.; Mrak, M. Decision Trees for Complexity Reduction in Video Compression. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 15–17 November 2019.

21. Liu, Z.; Yu, X.; Gao, Y.; Chen, S.; Ji, X.; Wang, D. CU partition mode decision for HEVC hardwired intra encoder using convolution neural network. IEEE Trans. Image Process. 2016, 25, 5088–5103.

22. Xu, M.; Li, T.Y.; Wang, Z.; Deng, X.; Yang, R.; Guan, Z. Reducing complexity of HEVC: A deep learning approach. IEEE Trans. Image Process. 2018, 27, 5044–5059.

23. Huang, Y.; Song, L.; Xie, R.; Izquierdo, E.; Zhang, W. Modeling acceleration properties for flexible intra HEVC complexity control. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 4454–4469.

24. Galpin, F.; Racapé, F.; Jaiswal, S.; Bordes, P.; Léannec, F.L.; Francois, E. CNN-based driving of block partitioning for intra slices encoding. In Proceedings of the 2019 Data Compression Conference (DCC), Snowbird, UT, USA, 11–13 March 2019.

25. Zhang, Y.; Wang, G.; Tian, R.; Xu, M.; Kuo, C.J. Texture-Classification Accelerated CNN Scheme for Fast Intra CU Partition in HEVC. In Proceedings of the 2019 Data Compression Conference (DCC), Snowbird, UT, USA, 26–29 March 2019.

26. Zaki, F.; Mohamed, A.E.; Sayed, S.G. CtuNet: A Deep Learning-based Framework for Fast CTU Partitioning of H265/HEVC Intra-coding. Ain Shams Eng. J. 2021, 12, 1859–1866.

27. Feng, A.; Gao, C.; Li, L.; Liu, D.; Wu, F. CNN-Based Depth Map Prediction for Fast Block Partitioning in HEVC Intra Coding. In Proceedings of the 2021 IEEE International Conference on Multimedia and Expo (ICME), Shenzhen, China, 5–9 July 2021.

28. Tahir, M.; Taj, I.A.; Assuncao, P.A.; Muhammad, A. Fast video encoding based on random forests. J. Real-Time Image Process. 2020, 17, 1029–1049.

29. Li, Y.; Li, L.; Fang, Y.; Peng, H.; Ling, N. Bagged Tree and ResNet-Based Joint End-to-End Fast CTU Partition Decision Algorithm for Video Intra Coding. Electronics 2022, 11, 1264.

30. Yao, C.; Xu, C.; Liu, M. RDNet: Rate–Distortion-Based Coding Unit Partition Network for Intra-Prediction. Electronics 2022, 11, 916.

31. Li, N.; Zhang, Y.; Zhu, L.; Luo, W.; Kwong, S. Reinforcement learning based coding unit early termination algorithm for high efficiency video coding. J. Vis. Commun. Image Represent. 2019, 60, 276–286.

32. Gao, W.; Yang, L.; Zhang, X.; Zhou, B.; Ma, C. Based on soft-threshold wavelet denoising combining with Prewitt operator edge detection algorithm. In Proceedings of the 2010 2nd International Conference on Education Technology and Computer (ICETC), Shanghai, China, 22–24 June 2010.

33. Cho, S.I.; Kang, S.J. Gradient Prior-Aided CNN Denoiser With Separable Convolution-Based Optimization of Feature Dimension. IEEE Trans. Multimed. 2019, 21, 484–493.

34. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

35. Zhang, M.; Lai, D.; Liu, Z.; An, C. A novel adaptive fast partition algorithm based on CU complexity analysis in HEVC. Multimed. Tools Appl. 2019, 78, 1035–1051.

36. Bossen, F. Common test conditions and software reference configurations. In Proceedings of the Joint Collaborative Team on Video Coding (JCT-VC) of ITU-T SG16 WP3 and ISO/IEC JTC1/SC29/WG11, 5th Meeting, Geneva, Switzerland, 16–23 March 2011.

37. Xu, M.; Deng, X.; Li, S.; Wang, Z. Region-of-Interest Based Conversational HEVC Coding with Hierarchical Perception Model of Face. IEEE J. Sel. Top. Signal Process. 2014, 8, 475–489.

38. Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. Tensorflow: Large-scale machine learning on heterogeneous distributed systems. arXiv 2016, arXiv:1603.04467.

39. Li, T.; Xu, M.; Deng, X. A Deep Convolutional Neural Network Approach for Complexity Reduction on Intra-mode HEVC. In Proceedings of the 2017 IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017.

40. Correa, G.; Assuncao, P.A.; Agostini, L.V.; da Silva Cruz, L.A. Fast HEVC encoding decisions using data mining. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 660–673.

41. Najafabadi, N.; Ramezanpour, M. Mass center direction-based decision method for intraprediction in HEVC standard. J. Real-Time Image Process. 2020, 17, 1153–1168.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).