Novel Bayesian Inference-Based Approach for the Uncertainty Characterization of Zhang’s Camera Calibration Method

Abstract

1. Introduction

- The method used to estimate the parameter uncertainties assumes that the model is linear when, in fact, it is highly non-linear.

- The accuracy of the parameters obtained with this software has not been verified with a suitable alternative method.
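For reference, the usual first-order (linearized) covariance estimate used in nonlinear least squares is shown below. It treats the model as linear around the optimum $\hat{\boldsymbol\theta}$, which is the assumption questioned above. The notation (residual vector $\mathbf{r}$, Jacobian $\mathbf{J}$, $N$ residuals, $p$ parameters) is introduced here for illustration and is not taken from the paper; whether a given calibration tool computes its reported uncertainties exactly this way should be checked in its documentation.

$$
\hat{\sigma}^{2}=\frac{\lVert \mathbf{r}(\hat{\boldsymbol\theta})\rVert^{2}}{N-p},\qquad
\mathbf{J}=\left.\frac{\partial \mathbf{r}}{\partial \boldsymbol\theta}\right|_{\hat{\boldsymbol\theta}},\qquad
\boldsymbol\Sigma_{\hat{\boldsymbol\theta}}\approx\hat{\sigma}^{2}\left(\mathbf{J}^{\top}\mathbf{J}\right)^{-1}
$$

The standard deviations reported for each parameter are then the square roots of the diagonal of $\boldsymbol\Sigma_{\hat{\boldsymbol\theta}}$, and the resulting PDFs are Gaussian by construction.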

2. Materials and Methods

2.1. Camera Calibration

2.2. Plane-Based Camera Calibration

- Take n images of the planar calibration pattern at different orientations by moving either the plane, the camera or both.

- In each image, m feature points (corners) are detected. With these, the associated image homographies are computed.

- Using the homographies, the intrinsic parameters are estimated by least-squares minimization.

- The radial and tangential distortion coefficients are solved by the method of least squares.

- With the interior orientation, the undistorted points and the world points, the extrinsic or exterior orientation parameters are calculated.

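- Finally, all the estimated parameter values are refined by non-linear optimization (Levenberg–Marquardt algorithm), minimizing the reprojection error over all the m corners of the n views:

  $$\sum_{i=1}^{n}\sum_{j=1}^{m}\left\lVert \mathbf{m}_{ij}-\hat{\mathbf{m}}\left(\mathbf{A},\mathbf{k},\mathbf{R}_i,\mathbf{t}_i,\mathbf{M}_j\right)\right\rVert^{2}$$

  where $\hat{\mathbf{m}}(\cdot)$ is the estimated projection of the 2D image point $\mathbf{m}_{ij}$ corresponding to the detected target point $\mathbf{M}_j$, $\mathbf{A}$ is the intrinsic matrix, $\mathbf{k}$ the vector of distortion coefficients, and $\mathbf{R}_i$, $\mathbf{t}_i$ the exterior orientation of view $i$.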
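The closed-form part of the procedure (homographies first, then intrinsic parameters) can be sketched as follows. This is a minimal NumPy illustration of Zhang's linear initialization on noise-free synthetic data, assuming no lens distortion and omitting the distortion and Levenberg–Marquardt refinement steps. The helper names, the synthetic 9 x 7 grid, the poses, and the shared image-coordinate normalization (a common conditioning step, not something stated in this paper) are our own hypothetical choices.

```python
import numpy as np


def homography_dlt(world_xy, image_uv):
    """Direct linear transform: H (up to scale) such that [u, v, 1] ~ H @ [X, Y, 1]."""
    rows = []
    for (X, Y), (u, v) in zip(world_xy, image_uv):
        rows.append([-X, -Y, -1.0, 0.0, 0.0, 0.0, u * X, u * Y, u])
        rows.append([0.0, 0.0, 0.0, -X, -Y, -1.0, v * X, v * Y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    return vt[-1].reshape(3, 3)  # singular vector of the smallest singular value


def v_ij(H, i, j):
    """Zhang's constraint vector, so that h_i^T B h_j = v_ij . b."""
    hi, hj = H[:, i], H[:, j]
    return np.array([
        hi[0] * hj[0],
        hi[0] * hj[1] + hi[1] * hj[0],
        hi[1] * hj[1],
        hi[2] * hj[0] + hi[0] * hj[2],
        hi[2] * hj[1] + hi[1] * hj[2],
        hi[2] * hj[2],
    ])


def intrinsics_from_homographies(Hs):
    """Closed-form intrinsic matrix from >= 3 homographies (Zhang, 2000, Appendix B)."""
    V = []
    for H in Hs:
        V.append(v_ij(H, 0, 1))                  # h1^T B h2 = 0
        V.append(v_ij(H, 0, 0) - v_ij(H, 1, 1))  # h1^T B h1 = h2^T B h2
    _, _, vt = np.linalg.svd(np.asarray(V))
    B11, B12, B22, B13, B23, B33 = vt[-1]        # b = [B11, B12, B22, B13, B23, B33]
    v0 = (B12 * B13 - B11 * B23) / (B11 * B22 - B12 ** 2)
    lam = B33 - (B13 ** 2 + v0 * (B12 * B13 - B11 * B23)) / B11
    alpha = np.sqrt(lam / B11)
    beta = np.sqrt(lam * B11 / (B11 * B22 - B12 ** 2))
    gamma = -B12 * alpha ** 2 * beta / lam
    u0 = gamma * v0 / beta - B13 * alpha ** 2 / lam
    return np.array([[alpha, gamma, u0], [0.0, beta, v0], [0.0, 0.0, 1.0]])


def calibrate_intrinsics(world_xy, views_uv):
    """Normalize all image points with one shared similarity T, estimate one
    homography per view, recover K' = T K in closed form, then undo T."""
    pts = np.vstack(views_uv)
    c = pts.mean(axis=0)
    s = np.sqrt(2.0) / np.mean(np.linalg.norm(pts - c, axis=1))
    T = np.array([[s, 0.0, -s * c[0]], [0.0, s, -s * c[1]], [0.0, 0.0, 1.0]])
    Hs = [homography_dlt(world_xy, (uv - c) * s) for uv in views_uv]
    K = np.linalg.inv(T) @ intrinsics_from_homographies(Hs)
    return K / K[2, 2]


def project_plane(K, R, t, world_xy):
    """Distortion-free pinhole projection of planar target points (Z = 0)."""
    Xw = np.column_stack([world_xy, np.zeros(len(world_xy))])
    p = (K @ (Xw @ R.T + t).T).T
    return p[:, :2] / p[:, 2:]


def rot(rx, ry, rz):
    """Rotation matrix from angles in radians, R = Rz @ Ry @ Rx."""
    cx, sx, cy, sy = np.cos(rx), np.sin(rx), np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    Rx = np.array([[1.0, 0.0, 0.0], [0.0, cx, -sx], [0.0, sx, cx]])
    Ry = np.array([[cy, 0.0, sy], [0.0, 1.0, 0.0], [-sy, 0.0, cy]])
    Rz = np.array([[cz, -sz, 0.0], [sz, cz, 0.0], [0.0, 0.0, 1.0]])
    return Rz @ Ry @ Rx


if __name__ == "__main__":
    # Hypothetical camera, target grid (20 mm pitch) and three tilted views.
    K_true = np.array([[800.0, 0.0, 640.0], [0.0, 790.0, 512.0], [0.0, 0.0, 1.0]])
    gx, gy = np.meshgrid(np.arange(9) * 0.02, np.arange(7) * 0.02)
    world_xy = np.column_stack([gx.ravel(), gy.ravel()])
    poses = [((0.4, 0.0, 0.1), 0.60), ((0.0, 0.4, -0.1), 0.65), ((-0.3, -0.3, 0.2), 0.70)]
    views = [project_plane(K_true, rot(*ang), np.array([-0.08, -0.06, tz]), world_xy)
             for ang, tz in poses]
    print(np.round(calibrate_intrinsics(world_xy, views), 3))
```

With exact synthetic data the recovered matrix should match `K_true` up to round-off; with real detections the result is only an initial guess for the non-linear refinement described above.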

3. Bayesian Inference-Based Method for Uncertainty Characterization

3.1. Markov Chain Monte Carlo (MCMC)

3.2. Computational Tools

- The uncertain model parameters: in our case, the intrinsic parameters and lens distortion coefficients (interior orientation).

- The measurement vector, i.e., the experimental data.

- The forward model, i.e., the computational model that maps a given set of parameter values to the predicted image points.

- Calculate the intrinsic parameters and distortion coefficients with their uncertainties using Zhang’s method [6] (with Matlab or OpenCV), assuming normal distributions.

- With each set of random values sampled from the PDFs of the intrinsic parameters of the camera and lens distortion coefficients, obtain the undistorted image points.

- With the undistorted points and the sampled values, calculate the exterior camera orientation or extrinsic parameters with Equation (15).

- Use the obtained exterior camera orientation values as initial guesses and determine the optimal value of the exterior camera orientation using Equation (16).

- Calculate the theoretical image points with Equation (6).
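The three ingredients listed above (uncertain parameters, measured data, forward model) combine into a posterior $p(\boldsymbol\theta \mid \mathbf{y}) \propto p(\mathbf{y} \mid \boldsymbol\theta)\,p(\boldsymbol\theta)$, which MCMC samples. The sketch below is a plain random-walk Metropolis-Hastings sampler, assuming a Gaussian likelihood with known pixel noise and independent Gaussian priors. The sampler actually used in the paper and its settings are not stated in this section, and the one-dimensional `forward_model` (focal length fx and a single radial coefficient k1, with the principal point fixed at 640 px) is a hypothetical stand-in for the full projection of Equation (6).

```python
import numpy as np


def forward_model(theta, xn):
    """Hypothetical toy forward model: horizontal pixel coordinate of normalized
    image points xn for focal length fx and one radial coefficient k1 (cx = 640 px)."""
    fx, k1 = theta
    return 640.0 + fx * xn * (1.0 + k1 * xn ** 2)


def log_posterior(theta, xn, u_obs, sigma, prior_mean, prior_sd):
    """Unnormalized log posterior: Gaussian likelihood (known pixel noise sigma)
    plus independent Gaussian priors on the parameters."""
    resid = u_obs - forward_model(theta, xn)
    log_lik = -0.5 * np.sum((resid / sigma) ** 2)
    log_prior = -0.5 * np.sum(((theta - prior_mean) / prior_sd) ** 2)
    return log_lik + log_prior


def metropolis_hastings(log_post, theta0, step, n_samples, rng):
    """Random-walk Metropolis-Hastings with Gaussian proposals scaled per parameter."""
    theta = np.asarray(theta0, dtype=float)
    lp = log_post(theta)
    chain = np.empty((n_samples, theta.size))
    n_accepted = 0
    for i in range(n_samples):
        proposal = theta + step * rng.standard_normal(theta.size)
        lp_prop = log_post(proposal)
        # The proposal is symmetric, so the acceptance ratio is the posterior ratio.
        if np.log(rng.uniform()) < lp_prop - lp:
            theta, lp = proposal, lp_prop
            n_accepted += 1
        chain[i] = theta
    return chain, n_accepted / n_samples


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    xn = np.linspace(-0.8, 0.8, 50)
    sigma = 0.5                                   # assumed pixel noise (px)
    u_obs = forward_model([565.0, -0.27], xn) + sigma * rng.standard_normal(xn.size)
    prior_mean, prior_sd = np.array([560.0, -0.25]), np.array([10.0, 0.05])

    def log_post(theta):
        return log_posterior(theta, xn, u_obs, sigma, prior_mean, prior_sd)

    chain, acc = metropolis_hastings(log_post, prior_mean, np.array([0.3, 0.002]), 20000, rng)
    kept = chain[5000:]                           # discard burn-in
    print("posterior mean:", kept.mean(axis=0), "sd:", kept.std(axis=0), "acceptance:", acc)
```

The histogram of each column of `kept` approximates the marginal posterior PDF of that parameter, which is how non-Gaussian shapes (such as the Logistic or Weibull fits in the result tables) can emerge. In practice, adaptive or gradient-based samplers usually mix better than this fixed-step version on strongly correlated parameters such as fx and k1.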

4. Results

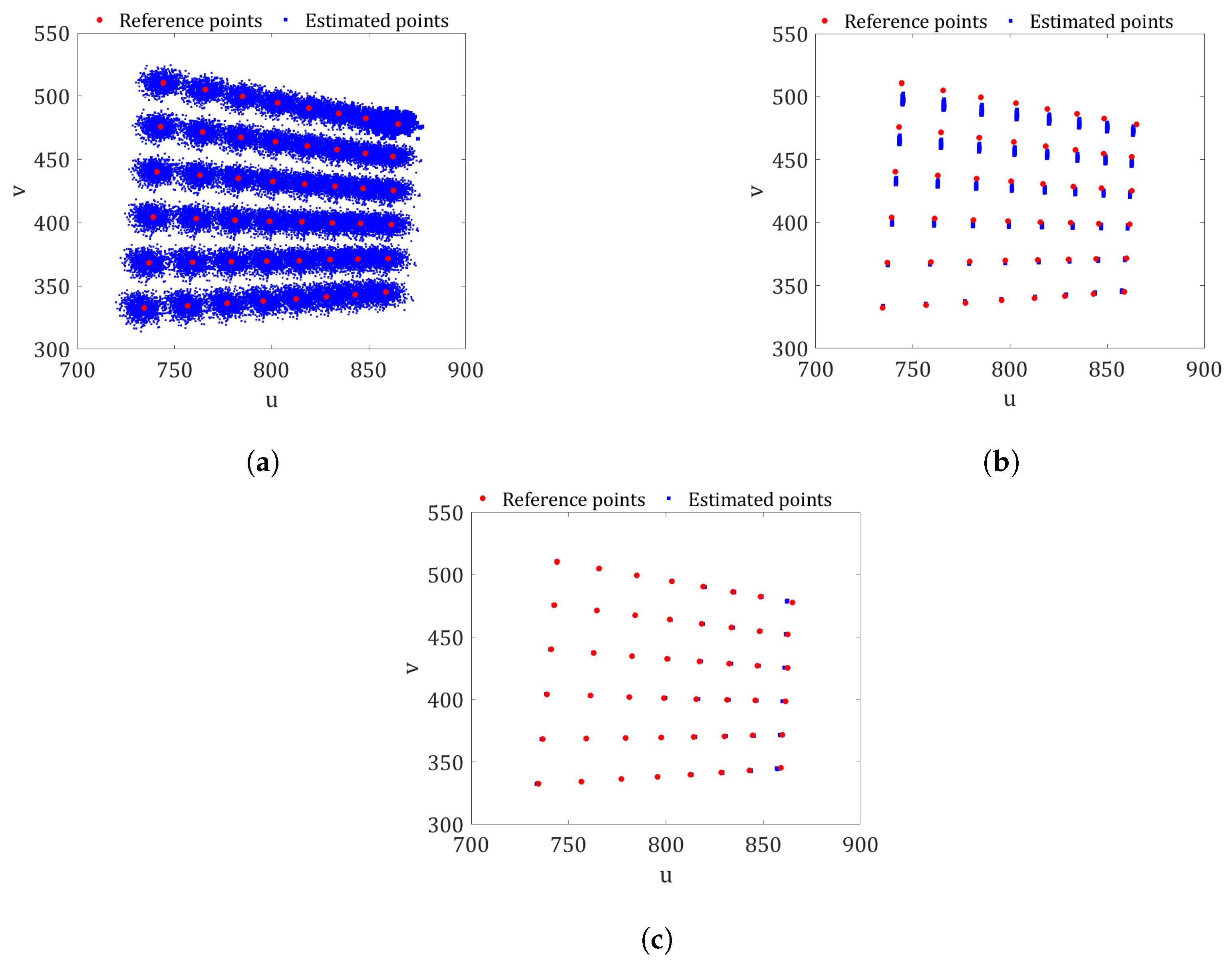

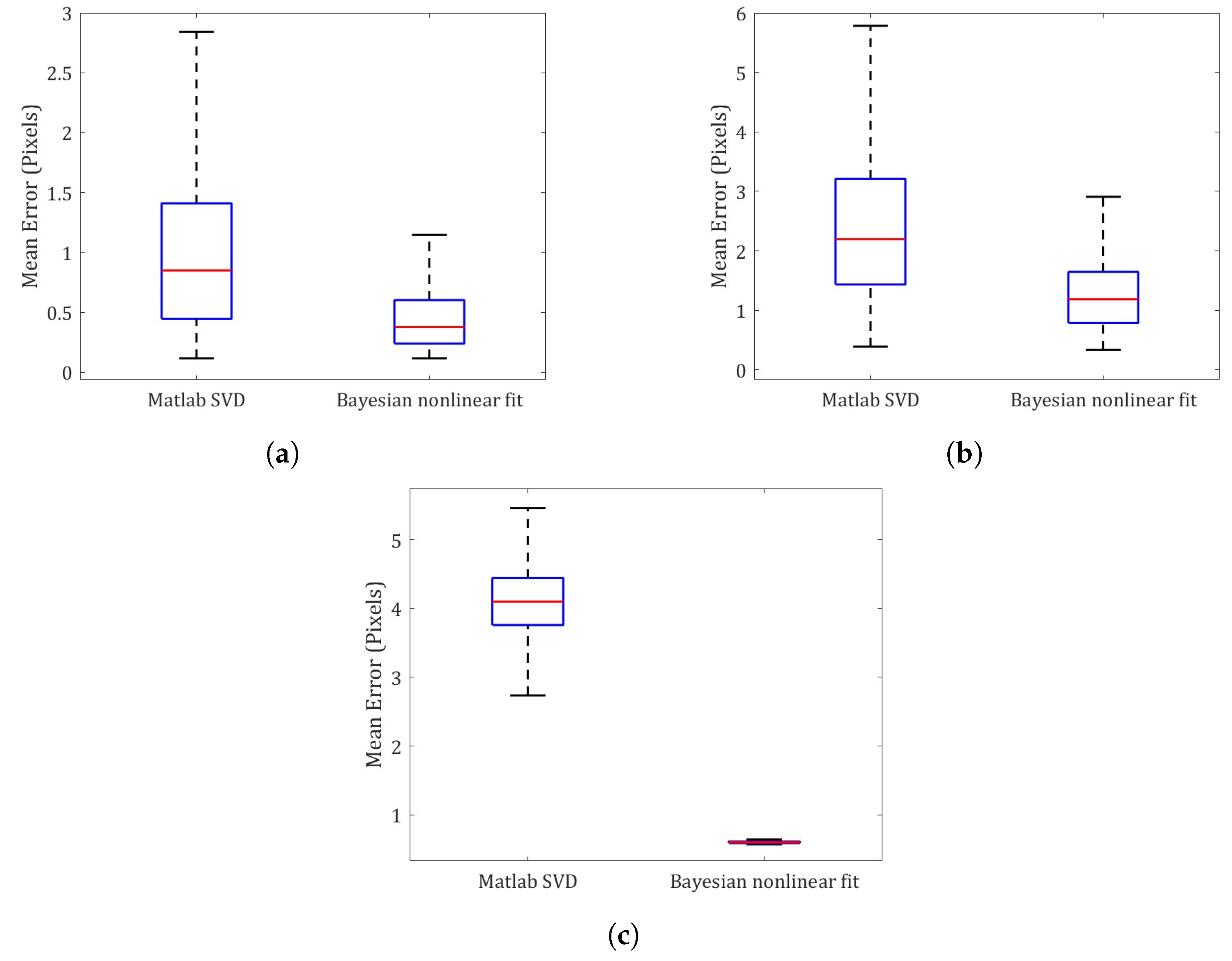

- Procedure A: Based on 1000 pseudo-random samples from the Gaussian PDFs of the interior and exterior camera orientations computed with the Matlab camera software. This implicitly assumes that the exterior camera orientation does not depend on the other camera parameters.

- Procedure B: From 1000 pseudo-random samples, considering only the Gaussian PDFs of the interior camera orientation calculated with the Matlab camera software. This is the common procedure used for an already calibrated camera [6], i.e., with the -values provided by the Matlab camera software, executing the following steps:

- Procedure C: From 1000 pseudo-random samples from the PDFs of the interior camera orientation obtained with the proposed Bayesian inference method, where, for each sample of the interior camera orientation, the optimal exterior orientation parameters are also obtained. In this case, the estimated points were calculated as follows:

- First, the initial exterior camera orientation matrices are calculated using Equation (15) with the random values from the PDFs.

- Then, the exterior orientation parameters are optimized with Equation (16), as in the proposed Bayesian camera calibration procedure.

- Finally, the image points are predicted with Equation (6).
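Procedure B amounts to plain Monte Carlo propagation: draw the interior orientation from its Gaussian PDFs, keep the exterior orientation fixed, and project. A minimal sketch follows, assuming the OpenCV/MATLAB radial-tangential (Brown-Conrady) distortion model, independent sampling of each parameter, and hypothetical points already expressed in the camera frame (equivalent to a fixed R and t). The means and standard deviations are copied from the Zhang's-method columns of the first parameter table; since the parameter symbols were lost, reading its rows as fx, fy, cx, cy, k1, k2, k3, p1, p2 is our assumption.

```python
import numpy as np

# Zhang's-method values from the first parameter table.
# ASSUMED row order: fx, fy, cx, cy, k1, k2, k3, p1, p2.
MEAN = np.array([565.0326, 565.5575, 647.1847, 504.2582, -0.2693, 0.1244, -0.0467, -0.0022, 0.0026])
SD = np.array([3.3316, 3.3106, 0.6420, 0.6714, 0.0037, 0.0068, 0.0063, 0.0003, 0.0002])


def project(points_cam, fx, fy, cx, cy, k1, k2, k3, p1, p2):
    """Pinhole projection with radial (k1..k3) and tangential (p1, p2) distortion;
    points_cam is (N, 3) in the camera frame, the result is (N, 2) pixels."""
    x = points_cam[:, 0] / points_cam[:, 2]
    y = points_cam[:, 1] / points_cam[:, 2]
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return np.column_stack([fx * xd + cx, fy * yd + cy])


def propagate(mean, sd, points_cam, n_samples=1000, seed=0):
    """Monte Carlo propagation: sample independent Gaussian intrinsics, keep the
    exterior orientation fixed, and return the per-point mean and std of (u, v)."""
    rng = np.random.default_rng(seed)
    samples = rng.normal(mean, sd, size=(n_samples, mean.size))
    preds = np.stack([project(points_cam, *s) for s in samples])  # (n_samples, N, 2)
    return preds.mean(axis=0), preds.std(axis=0, ddof=1)


if __name__ == "__main__":
    pts = np.array([[0.0, 0.0, 0.6], [0.1, -0.05, 0.6]])  # hypothetical points (m)
    mean_uv, sd_uv = propagate(MEAN, SD, pts)
    print(np.round(mean_uv, 3))
    print(np.round(sd_uv, 3))
```

For a point on the optical axis the spread reduces to that of (cx, cy), while off-axis points also inherit the focal-length and distortion uncertainty. Procedure C differs in that the exterior orientation is re-optimized for every sample instead of being held fixed.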

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Aber, J.S.; Marzolff, I.; Ries, J.B. Chapter 3-photogrammetry. In Small-Format Aerial Photography; Elsevier: Amsterdam, The Netherlands, 2010; pp. 23–39.

2. Champion, E. Virtual Heritage: A Guide; Ubiquity Press: London, UK, 2021.

3. Jähne, B.; Haußecker, H. Computer Vision and Applications; Elsevier: Amsterdam, The Netherlands, 2000.

4. Danuser, G. Computer vision in cell biology. Cell 2011, 147, 973–978.

5. Klette, R. Concise Computer Vision; Springer: Berlin/Heidelberg, Germany, 2014; Volume 233.

6. Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

7. Luhmann, T.; Fraser, C.; Maas, H.G. Sensor modelling and camera calibration for close-range photogrammetry. ISPRS J. Photogramm. Remote Sens. 2016, 115, 37–46.

8. Fraser, C.S. Digital camera self-calibration. ISPRS J. Photogramm. Remote Sens. 1997, 52, 149–159.

9. Geiger, A.; Moosmann, F.; Car, Ö.; Schuster, B. Automatic camera and range sensor calibration using a single shot. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, St Paul, MN, USA, 14–18 May 2012; pp. 3936–3943.

10. Nyimbili, P.H.; Demirel, H.; Seker, D.; Erden, T. Structure from motion (sfm)-approaches and applications. In Proceedings of the International Scientific Conference on Applied Sciences, Antalya, Turkey, 27–30 September 2016; pp. 27–30.

11. Zhou, X.; Sun, K.; Wang, J.; Zhao, J.; Feng, C.; Yang, Y.; Zhou, W. Computer vision enabled building digital twin using building information model. IEEE Trans. Ind. Inf. 2022, 19, 2684–2692.

12. Chen, J.; Wang, Q.; Cheng, H.H.; Peng, W.; Xu, W. A review of vision-based traffic semantic understanding in ITSs. IEEE Trans. Intell. Transp. Syst. 2022, 23, 19954–19979.

13. Orghidan, R.; Salvi, J.; Gordan, M.; Orza, B. Camera calibration using two or three vanishing points. In Proceedings of the 2012 Federated Conference on Computer science and information systems (FedCSIS), Wroclaw, Poland, 9–12 September 2012; pp. 123–130.

14. Mei, C.; Rives, P. Single view point omnidirectional camera calibration from planar grids. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Roma, Italy, 10–14 April 2007; pp. 3945–3950.

15. Tsai, R. A versatile camera calibration technique for high-accuracy 3D machine vision metrology using off-the-shelf TV cameras and lenses. IEEE J. Robot. Autom. 1987, 3, 323–344.

16. Heikkila, J.; Silvén, O. A four-step camera calibration procedure with implicit image correction. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Juan, Puerto Rico, 17–19 June 1997; pp. 1106–1112.

17. Sundareswara, R.; Schrater, P.R. Bayesian modelling of camera calibration and reconstruction. In Proceedings of the Fifth International Conference on 3-D Digital Imaging and Modeling (3DIM’05), Ottawa, ON, Canada, 13–16 June 2005; pp. 394–401.

18. Jorio, A.; Dresselhaus, M. Nanostructured Materials: Metrology. In Reference Module in Materials Science and Materials Engineering; Elsevier: Amsterdam, The Netherlands, 2016.

19. Rasmussen, K.; Kondrup, J.B.; Allard, A.; Demeyer, S.; Fischer, N.; Barton, E.; Partridge, D.; Wright, L.; Bär, M.; Fiebach, H.; et al. Novel Mathematical and Statistical Approaches to Uncertainty Evaluation: Best Practice Guide to Uncertainty Evaluation for Computationally Expensive Models; Euramet: Brunswick, Germany, 2015.

20. Wang, C.; Qiang, X.; Xu, M.; Wu, T. Recent advances in surrogate modeling methods for uncertainty quantification and propagation. Symmetry 2022, 14, 1219.

21. Fu, C.; Sinou, J.J.; Zhu, W.; Lu, K.; Yang, Y. A state-of-the-art review on uncertainty analysis of rotor systems. Mech. Syst. Signal Process. 2023, 183, 109619.

22. Karandikar, J.; Chaudhuri, A.; No, T.; Smith, S.; Schmitz, T. Bayesian optimization for inverse calibration of expensive computer models: A case study for Johnson-Cook model in machining. Manuf. Lett. 2022, 32, 32–38.

23. Zhang, C.; Sun, L. Bayesian Calibration of the Intelligent Driver Model. arXiv 2022, arXiv:2210.03571.

24. King, G.; Lovell, A.; Neufcourt, L.; Nunes, F. Direct comparison between Bayesian and frequentist uncertainty quantification for nuclear reactions. Phys. Rev. Lett. 2019, 122, 232502.

25. Bok, Y.; Ha, H.; Kweon, I.S. Automated checkerboard detection and indexing using circular boundaries. Pattern Recognit. Lett. 2016, 71, 66–72.

26. Feng, X.; Cao, M.; Wang, H.; Collier, M. The comparison of camera calibration methods based on structured-light measurement. In Proceedings of the 2008 Congress on Image and Signal Processing, Octeville, France, 1–3 July 2008; Volume 2, pp. 155–160.

27. Burger, W. Zhang’s Camera Calibration Algorithm: In-Depth Tutorial and Implementation; HGB16-05; University of Applied Sciences Upper Austria: Hagenberg, Austria, 2016; pp. 1–6.

28. Rojtberg, P.; Kuijper, A. Efficient pose selection for interactive camera calibration. In Proceedings of the 2018 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 16–20 October 2018; pp. 31–36.

29. Peng, S.; Sturm, P. Calibration wizard: A guidance system for camera calibration based on modelling geometric and corner uncertainty. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1497–1505.

30. Richardson, A.; Strom, J.; Olson, E. AprilCal: Assisted and repeatable camera calibration. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 1814–1821.

31. Semeniuta, O. Analysis of camera calibration with respect to measurement accuracy. Procedia CIRP 2016, 41, 765–770.

32. Hagemann, A.; Knorr, M.; Janssen, H.; Stiller, C. Inferring bias and uncertainty in camera calibration. Int. J. Comput. Vis. 2022, 130, 17–32.

33. MATLAB. Version 9.12.0.2039608 (R2022a) Update 5 (R2022a); The MathWorks Inc.: Natick, MA, USA, 2022.

34. Wang, Y.; Li, Y.; Zheng, J. A camera calibration technique based on OpenCV. In Proceedings of the 3rd International Conference on Information Sciences and Interaction Sciences, Chengdu, China, 23–25 June 2010; pp. 403–406.

35. Hu, W.; Xie, J.; Chau, H.W.; Si, B.C. Evaluation of parameter uncertainties in nonlinear regression using Microsoft Excel Spreadsheet. Environ. Syst. Res. 2015, 4, 1–12.

36. García-Alfonso, H.; Córdova-Esparza, D.M. Comparison of uncertainty analysis of the Montecarlo and Latin Hypercube algorithms in a camera calibration model. In Proceedings of the 2018 IEEE 2nd Colombian Conference on Robotics and Automation (CCRA), Barranquilla, Colombia, 1–3 November 2018; pp. 1–5.

37. Zhan, K.; Fritsch, D.; Wagner, J.F. Stability analysis of intrinsic camera calibration using probability distributions. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2021; Volume 1048, p. 012010.

38. Kainz, O.; Jakab, F.; Fecil’ak, P.; Vápeník, R.; Deák, A.; Cymbalák, D. Estimation of camera intrinsic matrix parameters and its utilization in the extraction of dimensional units. In Proceedings of the 2016 International Conference on Emerging eLearning Technologies and Applications (ICETA), Stary Smokovec, Slovakia, 24–25 November 2016; pp. 153–156.

39. Reznicek, J. Method for measuring lens distortion by using pinhole lens. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 40, 509–515.

40. Fryer, J.G.; Brown, D.C. Lens distortion for close-range photogrammetry. Photogramm. Eng. Remote Sens. 1986, 52, 51–58.

41. Duane, C.B. Close-range camera calibration. Photogramm. Eng. 1971, 37, 855–866.

42. Wang, J.; Shi, F.; Zhang, J.; Liu, Y. A new calibration model of camera lens distortion. Pattern Recognit. 2008, 41, 607–615.

43. Sudhanshu, K. CarND-Camera-Calibration. 2016. Available online: https://github.com/udacity/CarND-Camera-Calibration/tree/master (accessed on 1 December 2022).

44. Wong, R.K.; Storlie, C.B.; Lee, T.C. A frequentist approach to computer model calibration. J. R. Stat. Soc. Ser. B Stat. Methodol. 2017, 79, 635–648.

45. Zhang, J.; Fu, S. An efficient Bayesian uncertainty quantification approach with application to k-ω-γ transition modeling. Comput. Fluids 2018, 161, 211–224.

46. Radaideh, M.I.; Lin, L.; Jiang, H.; Cousineau, S. Bayesian inverse uncertainty quantification of the physical model parameters for the spallation neutron source first target station. Results Phys. 2022, 36, 105414.

47. Marelli, S.; Sudret, B. UQLab: A framework for uncertainty quantification in Matlab. In Proceedings of the 2nd International Conference on Vulnerability and Risk Analysis and Management (ICVRAM2014), Liverpool, UK, 13–16 July 2014; pp. 2554–2563.

48. Van Ravenzwaaij, D.; Cassey, P.; Brown, S.D. A simple introduction to Markov Chain Monte–Carlo sampling. Psychon. Bull. Rev. 2018, 25, 143–154.

49. Wu, X.; Kozlowski, T.; Meidani, H.; Shirvan, K. Inverse uncertainty quantification using the modular Bayesian approach based on Gaussian process, Part 1: Theory. Nucl. Eng. Des. 2018, 335, 339–355.

50. Kruschke, J. Doing Bayesian Data Analysis: A Tutorial with R, JAGS, and Stan; Academic Press: Cambridge, MA, USA, 2014.

51. Roberts, G.O.; Rosenthal, J.S. Examples of adaptive MCMC. J. Comput. Graph. Stat. 2009, 18, 349–367.

52. Yamada, T.; Ohno, K.; Ohta, Y. Comparison between the Hamiltonian Monte Carlo method and the Metropolis–Hastings method for coseismic fault model estimation. Earth Planets Space 2022, 74, 86.

53. Jin, S.S.; Ju, H.; Jung, H.J. Adaptive Markov chain Monte Carlo algorithms for Bayesian inference: Recent advances and comparative study. Struct. Infrastruct. Eng. 2019, 15, 1548–1565.

54. Fornacon-Wood, I.; Mistry, H.; Johnson-Hart, C.; Faivre-Finn, C.; O’Connor, J.P.; Price, G.J. Understanding the differences between Bayesian and frequentist statistics. Int. J. Radiat. Oncol. Biol. Phys. 2022, 112, 1076–1082.

55. Ding, J.; Wang, Y.D.; Gulzar, S.; Kim, Y.R.; Underwood, B.S. Uncertainty quantification of simplified viscoelastic continuum damage fatigue model using the bayesian inference-based markov chain monte carlo method. Transp. Res. Rec. 2020, 2674, 247–260.

56. Kacker, R.; Jones, A. On use of Bayesian statistics to make the Guide to the Expression of Uncertainty in Measurement consistent. Metrologia 2003, 40, 235.

| Parameter | Zhang’s Method: Mean | Zhang’s Method: Standard Deviation | Zhang’s Method: PDF | Proposed Bayesian Method: Mean | Proposed Bayesian Method: Standard Deviation | Proposed Bayesian Method: PDF |
|---|---|---|---|---|---|---|
|  | 565.0326 | 3.3316 | Gaussian | 564.9714 | 3.1788 | Logistic |
|  | 565.5575 | 3.3106 | Gaussian | 565.3179 | 3.5436 | Weibull |
|  | 647.1847 | 0.6420 | Gaussian | 647.1668 | 0.8688 | Gaussian |
|  | 504.2582 | 0.6714 | Gaussian | 504.3673 | 1.0146 | Gaussian |
|  | −0.2693 | 0.0037 | Gaussian | −0.2700 | 0.0040 | Gumbel |
|  | 0.1244 | 0.0068 | Gaussian | 0.1252 | 0.0071 | GumbelMin |
|  | −0.0467 | 0.0063 | Gaussian | −0.0458 | 0.0062 | Gaussian |
|  | −0.0022 | 0.0003 | Gaussian | −0.0022 | 0.0005 | Gaussian |
|  | 0.0026 | 0.0002 | Gaussian | 0.0027 | 0.0003 | Beta |

| Parameter | Zhang’s Method: Mean | Zhang’s Method: Standard Deviation | Zhang’s Method: PDF | Proposed Bayesian Method: Mean | Proposed Bayesian Method: Standard Deviation | Proposed Bayesian Method: PDF |
|---|---|---|---|---|---|---|
|  | 566.8778 | 3.6615 | Gaussian | 567.4637 | 3.5116 | Gaussian |
|  | 567.4131 | 3.7248 | Gaussian | 567.9525 | 3.6931 | Gaussian |
|  | 651.4962 | 0.6736 | Gaussian | 651.7141 | 0.9339 | Uniform |
|  | 502.6545 | 0.7893 | Gaussian | 502.7747 | 1.0737 | Uniform |
|  | −0.2465 | 0.0036 | Gaussian | −0.2466 | 0.0029 | Gaussian |
|  | 0.0756 | 0.0023 | Gaussian | 0.0756 | 0.0021 | Gaussian |
|  | −0.0116 | 0.0006 | Gaussian | −0.0117 | 0.0006 | Gaussian |
|  | −0.0010 | 0.0002 | Gaussian | −0.0010 | 0.0003 | Gaussian |
|  | −0.0002 | 0.0002 | Gaussian | −0.0002 | 0.0003 | Gaussian |

| Parameter | Zhang’s Method: Mean | Zhang’s Method: Standard Deviation | Zhang’s Method: PDF | Proposed Bayesian Method: Mean | Proposed Bayesian Method: Standard Deviation | Proposed Bayesian Method: PDF |
|---|---|---|---|---|---|---|
|  | 553.2964 | 4.5922 | Gaussian | 552.7039 | 3.3532 | Gaussian |
|  | 548.7821 | 4.3422 | Gaussian | 547.2856 | 3.6085 | Uniform |
|  | 641.1869 | 2.1851 | Gaussian | 641.2931 | 2.9777 | Gumbel |
|  | 502.4452 | 2.2514 | Gaussian | 502.2640 | 2.8074 | Weibull |
|  | −0.2400 | 0.0051 | Gaussian | −0.2396 | 0.0037 | Logistic |
|  | 0.0766 | 0.0047 | Gaussian | 0.0764 | 0.0038 | Logistic |
|  | −0.0140 | 0.0015 | Gaussian | −0.0141 | 0.0013 | Logistic |
|  | −0.0012 | 0.0006 | Gaussian | −0.0011 | 0.0006 | Gaussian |
|  | 0.0035 | 0.0005 | Gaussian | 0.0036 | 0.0005 | Beta |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gutiérrez-Moizant, R.; Boada, M.J.L.; Ramírez-Berasategui, M.; Al-Kaff, A. Novel Bayesian Inference-Based Approach for the Uncertainty Characterization of Zhang’s Camera Calibration Method. Sensors 2023, 23, 7903. https://doi.org/10.3390/s23187903
