Development and Testing of a Daily Activity Recognition System for Post-Stroke Rehabilitation

Abstract

1. Introduction

Previous and Related Work

2. Materials and Methods

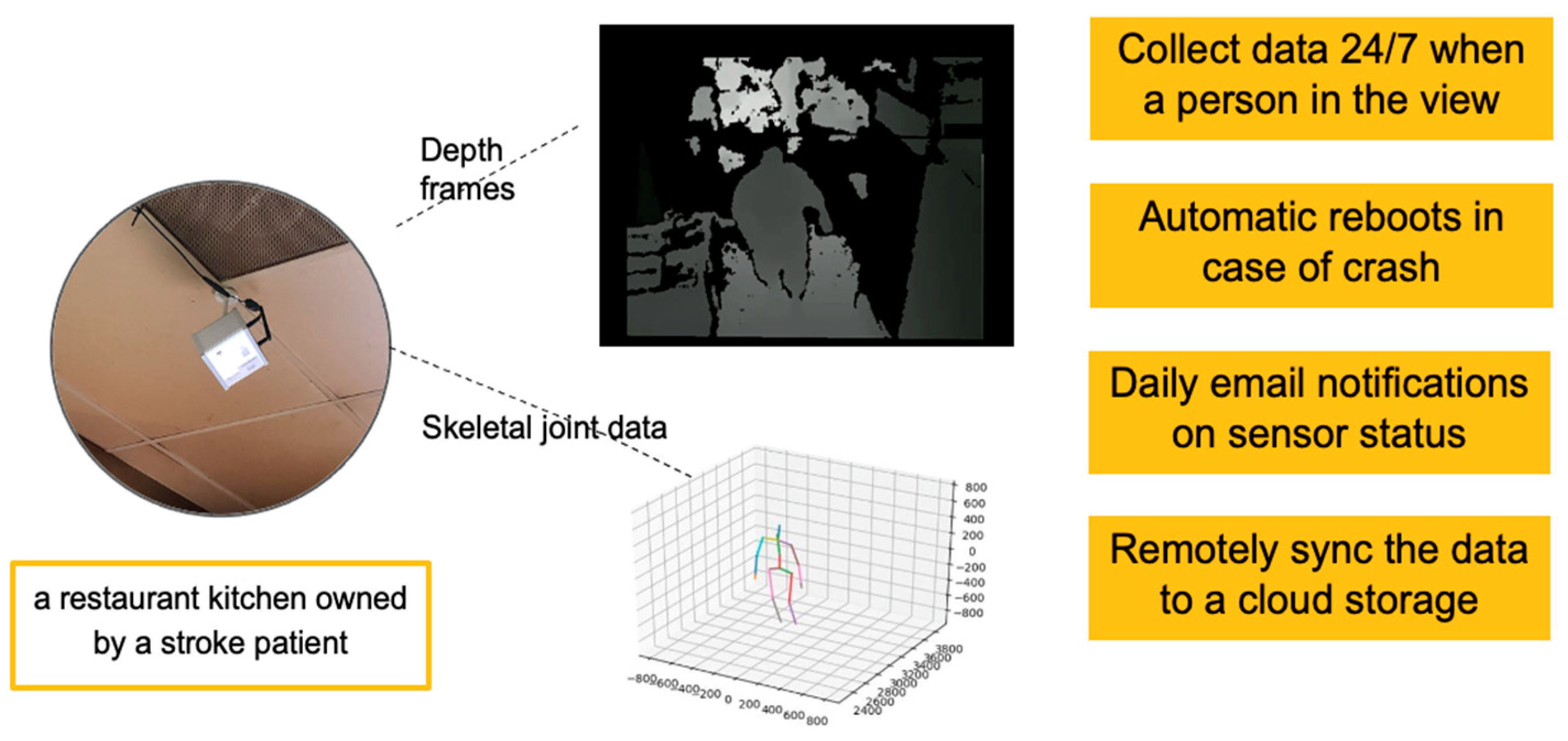

2.1. Action Data Logging System

2.2. Action Recognition Algorithm

2.2.1. Study Participants

2.2.2. Data Collection and Processing

Data Annotations

- Walking;

- Reaching overhead;

- Reaching forward;

- Reaching below the waist;

- Hand manipulation;

- None of the above.

2.2.3. Action Recognition Algorithm

HON4D Descriptor

Algorithm 1. Pseudocode used to generate a list of oriented 4D normals for a sequence of images.

    procedure CALCULATENORMALS(images)
        normalList ← empty list
        for k = 0; k < images.Count - 1; k++ do
            img1 ← images[k]
            img2 ← images[k + 1]
            // skip the one-pixel border so every neighbor lookup stays in range
            for x = 1; x < img1.Width - 1; x++ do
                for y = 1; y < img1.Height - 1; y++ do
                    currentPixel ← img1.GetPixel(x, y)
                    nextPixel ← img2.GetPixel(x, y)
                    rightPixel ← img1.GetPixel(x + 1, y)
                    leftPixel ← img1.GetPixel(x - 1, y)
                    upPixel ← img1.GetPixel(x, y - 1)
                    downPixel ← img1.GetPixel(x, y + 1)
                    // gradients get their own names so the loop counters x and y are not overwritten
                    dx ← rightPixel - leftPixel
                    dy ← downPixel - upPixel
                    dz ← currentPixel - nextPixel
                    normalList.Add(dx, dy, dz, -1)
                end for
            end for
        end for
        return normalList
    end procedure
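A minimal Python sketch of Algorithm 1 may make the data flow easier to follow. It is an illustration, not the authors' implementation, and rests on several assumptions: each frame is a list of rows of depth values (`frame[y][x]`), border pixels are skipped so that every neighbor lookup stays in range, and each normal is stored as the four-component tuple (dx, dy, dz, -1) used in the HON4D formulation. The name `calculate_normals` is hypothetical.

```python
from typing import List, Sequence, Tuple

Frame = Sequence[Sequence[float]]           # frame[y][x] is a depth value
Normal = Tuple[float, float, float, float]  # (dx, dy, dz, -1)


def calculate_normals(frames: Sequence[Frame]) -> List[Normal]:
    """Return one 4D normal per interior pixel for each pair of consecutive frames."""
    normals: List[Normal] = []
    for k in range(len(frames) - 1):
        cur, nxt = frames[k], frames[k + 1]
        height, width = len(cur), len(cur[0])
        # Assumption: skip the one-pixel border to avoid out-of-range lookups.
        for x in range(1, width - 1):
            for y in range(1, height - 1):
                dx = cur[y][x + 1] - cur[y][x - 1]  # right minus left
                dy = cur[y + 1][x] - cur[y - 1][x]  # down minus up
                dz = cur[y][x] - nxt[y][x]          # current minus next, as in Algorithm 1
                normals.append((dx, dy, dz, -1.0))
    return normals
```

For two 3 × 3 frames only the center pixel is interior, so the function returns a single normal.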

Algorithm 2. Pseudocode used to generate a histogram of oriented 4D normals, where proj is the list of projectors, normList is the list of normals calculated by Algorithm 1, and hon4d is the histogram.

    procedure CREATEHON4D(proj, normList, hon4d)
        for k = 0; k < proj.Count; k++ do
            for n = 0; n < normList.Count; n++ do
                hon4d[k] += max(0, dotP(proj[k], normList[n]))
            end for
        end for
        return hon4d
    end procedure
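The accumulation in Algorithm 2 can be sketched in a few lines of Python. This is a hedged illustration under stated assumptions: projectors and normals are plain 4-tuples, the histogram is created inside the function instead of being passed in, and no normalization is applied afterwards. The names `dot` and `create_hon4d` are hypothetical.

```python
from typing import List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Dot product of two equal-length vectors."""
    return sum(p * q for p, q in zip(a, b))


def create_hon4d(projectors: Sequence[Vector], normals: Sequence[Vector]) -> List[float]:
    """Accumulate, per projector, the positive part of its dot product with every normal."""
    hist = [0.0] * len(projectors)
    for k, proj in enumerate(projectors):
        for normal in normals:
            # Negative projections contribute nothing to the bin.
            hist[k] += max(0.0, dot(proj, normal))
    return hist
```

Each bin grows only when a normal points into the same half-space as its projector, which is why the `max(0, ...)` clamp appears in the pseudocode.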

2.2.4. Ensemble Network Architecture

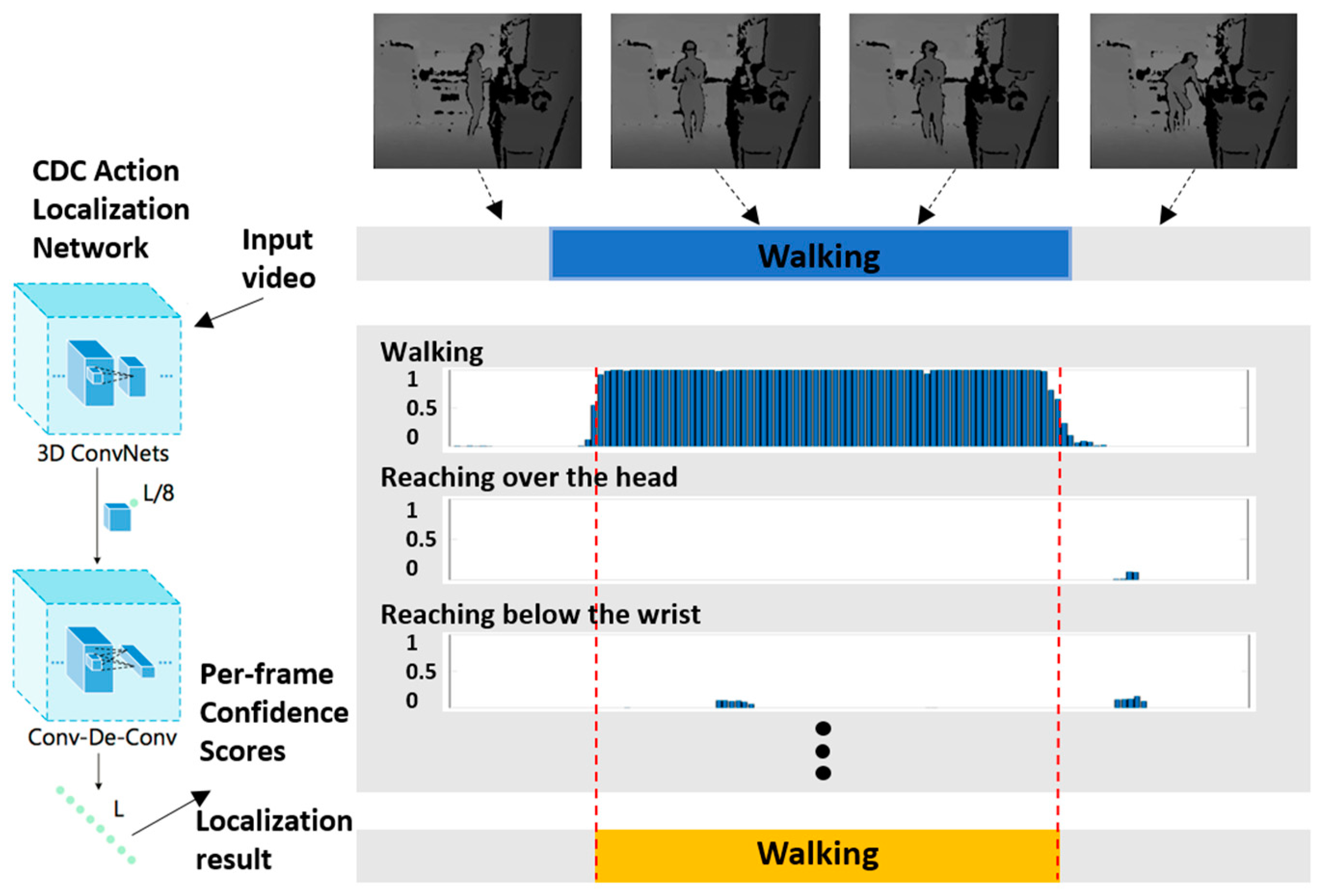

Convolutional–De-Convolutional (CDC) Network

Region Convolutional 3D Network

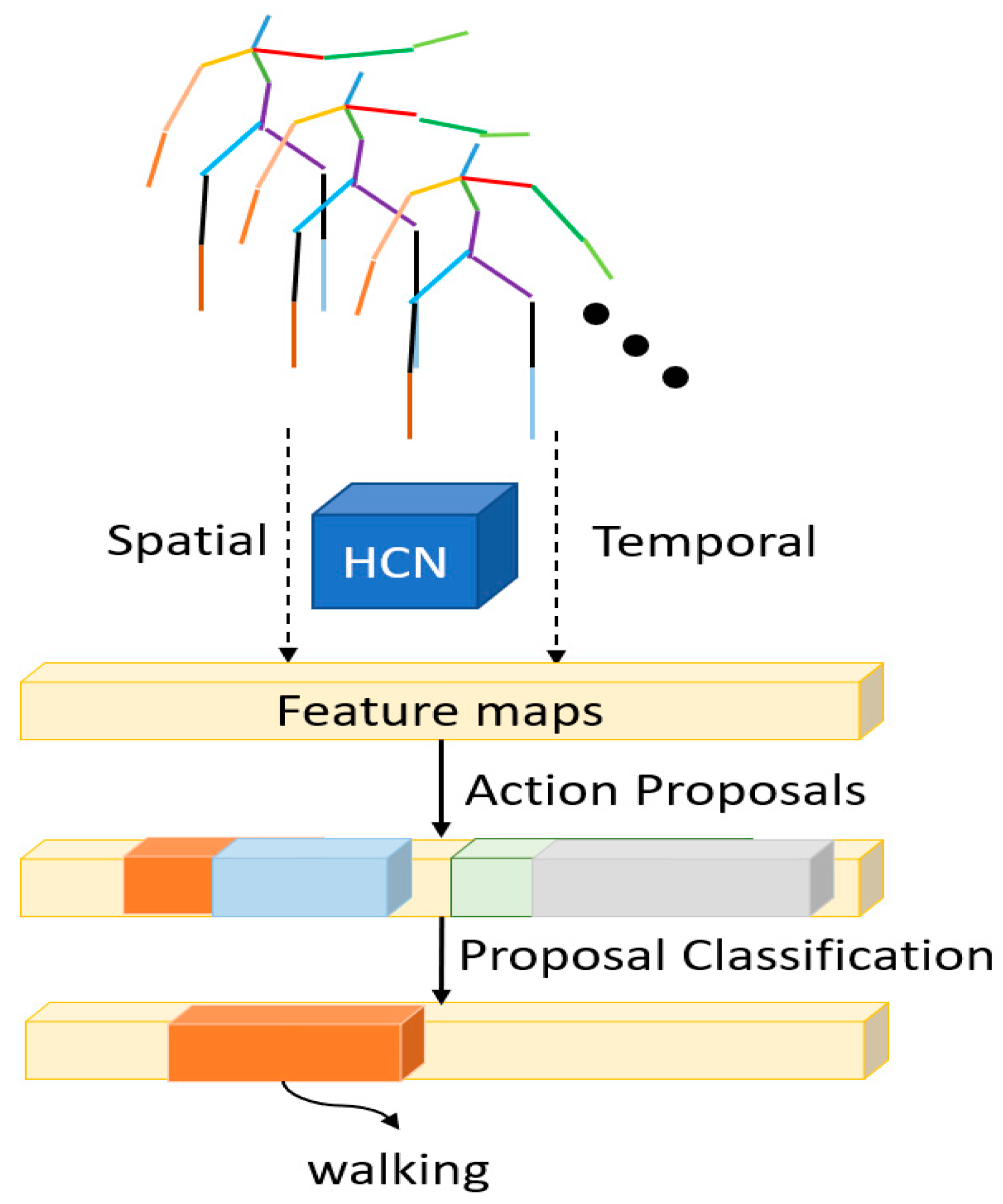

Region Hierarchical Co-Occurrence Network

2.2.5. Ensemble Network Action Recognition

3. Results

3.1. Participants

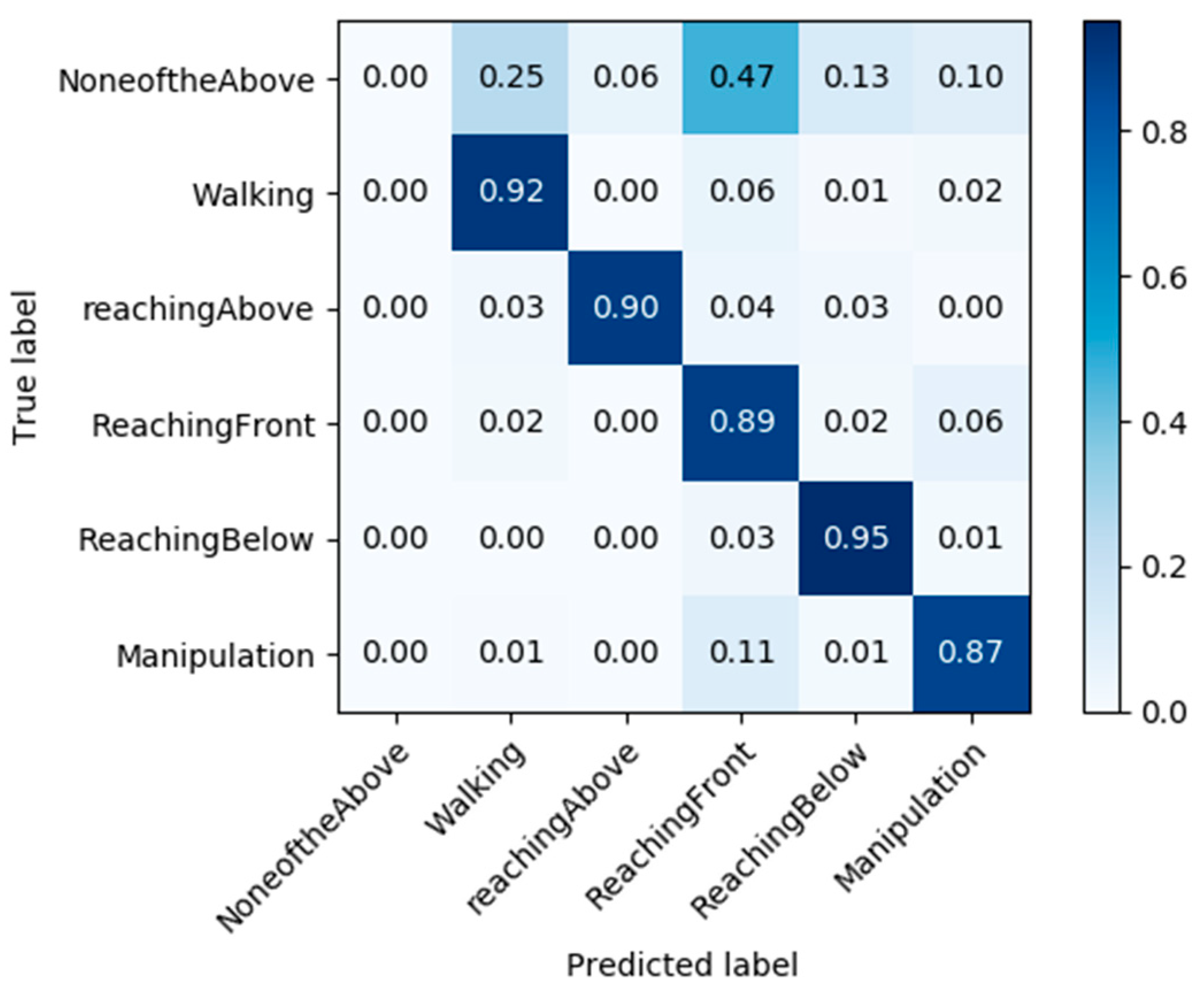

3.2. Algorithm Training and Testing

3.2.1. CDC

3.2.2. R-C3D

3.2.3. R-HCN

3.2.4. Ensemble Network

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Classification | Regression |
|---|---|
| $N_{cls}$: number of batches | $N_{reg}$: number of anchor or proposal segments |
| $a_i$: the predicted probability of the proposal or activity | $t_i = \{t_x, t_w\}$: predicted relative offset to anchor segments or proposals |
| $a_i^*$: the ground truth (1 if the anchor is positive, 0 if the anchor is negative) | $t_i^* = \{t_x^*, t_w^*\}$: the coordinate transformation of ground truth segments to anchor segments or proposals |
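The symbols in this table match the joint objective published for R-C3D (Xu et al., 2017), which sums a classification term and a regression term. Assuming the same objective is used here, it can be written as follows. The trade-off weight $\lambda$ (set to 1 in the R-C3D paper), the softmax loss $L_{cls}$, and the smooth L1 loss $L_{reg}$ come from that paper and are not defined in the table itself.

```latex
\mathrm{Loss} = \frac{1}{N_{cls}} \sum_{i} L_{cls}\left(a_i, a_i^{*}\right)
              + \lambda \, \frac{1}{N_{reg}} \sum_{i} a_i^{*} \, L_{reg}\left(t_i, t_i^{*}\right)
```

Because $a_i^*$ is 0 for negative anchors, the regression term only contributes for positive anchors or proposals.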

| Dataset | Precision | Trial 1 | Trial 2 | Trial 3 | Mean | Std. |
|---|---|---|---|---|---|---|
| P1–3 * | Per Frame | 0.785 | 0.791 | 0.679 | 0.752 | 0.063 |
| P1–3 * | Per Action | 0.831 | 0.801 | 0.761 | 0.798 | 0.035 |
| P1–4 | Per Frame | 0.790 | 0.772 | 0.789 | 0.784 | 0.010 |
| P1–4 | Per Action | 0.801 | 0.792 | 0.724 | 0.773 | 0.042 |
| P1–5 | Per Frame | 0.818 | 0.817 | 0.742 | 0.792 | 0.044 |
| P1–5 | Per Action | 0.839 | 0.801 | 0.791 | 0.810 | 0.025 |
| P1–5, 10 ^ | Per Frame | 0.824 | 0.904 | 0.881 | 0.869 | 0.041 |
| P1–5, 10 ^ | Per Action | 0.827 | 0.911 | 0.867 | 0.868 | 0.042 |
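The Mean and Std. columns appear consistent with the arithmetic mean and the sample standard deviation of the three trials. The short check below, which assumes that interpretation and uses a hypothetical helper named `summarize`, reproduces the first row of the table.

```python
from statistics import mean, stdev
from typing import Sequence, Tuple


def summarize(trials: Sequence[float]) -> Tuple[float, float]:
    """Return (mean, sample standard deviation), each rounded to three decimals."""
    return round(mean(trials), 3), round(stdev(trials), 3)


# Trial values copied from the P1–3 "Per Frame" row.
p1_3_per_frame = [0.785, 0.791, 0.679]
print(summarize(p1_3_per_frame))  # (0.752, 0.063)
```

A few other rows differ from this calculation in the last digit, which would be expected if the published trial values were themselves rounded.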

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Proffitt, R.; Ma, M.; Skubic, M. Development and Testing of a Daily Activity Recognition System for Post-Stroke Rehabilitation. Sensors 2023, 23, 7872. https://doi.org/10.3390/s23187872