Abstract

A multi-exposure imaging approach proposed in earlier studies is used to increase star sensors’ attitude update rate by N times. Unfortunately, serious noises are also introduced in the star image due to multiple exposures. Therefore, a star centroid extraction method based on Kalman Filter is proposed in this paper. Firstly, star point prediction windows are generated based on centroids’ kinematic model. Secondly, the classic centroid method is used to calculate the coarse centroids of the star points within the prediction windows. Lastly, the coarse centroids are, respectively, processed by each Kalman Filter to filter image noises, and thus fine centroids are obtained. Simulations are conducted to verify the Kalman-Filter-based estimation model. Under noises with zero mean and ±0.4, ±1.0, and ±2.5 pixel maximum deviations, the coordinate errors after filtering are reduced to about 37.5%, 26.3%, and 20.7% of the original ones, respectively. In addition, experiments are conducted to verify the star point prediction windows. Among 100 star images, the average proportion of the number of effective star point objects obtained by the star point prediction windows in the total object number of each star image is calculated as only 0.95%. Both the simulated and experimental results demonstrate the feasibility and effectiveness of the proposed method.

1. Introduction

The star sensor is currently known as the most accurate attitude measurement instrument, and it also has the characteristics of no drift and autonomy [1,2,3,4,5,6,7,8,9,10,11]. Therefore, the star sensor has been widely used in the field of aerospace navigation [12,13,14,15,16,17,18,19,20,21,22]. However, the attitude update rate (AUR) of the traditional star sensor is usually 4–10 Hz, which is too low to accomplish navigation tasks independently. In order to improve the AUR, an integrated navigation system of a star sensor and inertial measurement unit (IMU) has become a kind of classic solution [23,24,25,26,27,28,29]. The attitude data of the star sensor have no drift but the update rate is low, while the update rate of the IMU is high, but the attitude data drift with time. When the above two navigation devices are combined, the attitude data of the star sensor can be regularly used to correct the data drift of the IMU. The integrated navigation system can output the attitude data without drift and with a high update rate. Additionally, when a three-field-of-view star sensor is adopted [30], the AUR can reach to three times of that of a single-field-of-view star sensor. This is because the three fields can be used to expose and output the attitude data alternately. Nevertheless, both the above two schemes also have some obvious disadvantages, such as a large volume, heavy weight, high power consumption, and so on, which are not conducive to the overall development of an attitude measurement and control system towards light and miniaturization.

In contrast, research on the improvement method for the AUR of a single star sensor seems to have more extensive application value. Zhong et al. [31] and Mao et al. [32], respectively, studied the working characteristics of the star sensor and then divided its working process into three stages, i.e., star image exposure imaging and star image pixel data transmission and processing, as well as star tracking and attitude calculation. Subsequently, they proposed a parallel pipeline processing method, and thus the above three working stages could be pipelined, so as to improve the AUR of the star sensor. In this situation, the AUR is totally determined by the most time-consuming one of the above three working stages. For the conventional star sensor, a long exposure time must be conducted to obtain a sufficient star sensitivity due to the low sensitivity of the image detector. Therefore, the exposure time is the main bottleneck of the AUR of the conventional star sensor. In order to reduce the exposure time, some scholars have introduced high-sensitivity image detectors into the field of star sensors, such as electron-multiplying charge-coupled devices (EMCCD) and intensified charge-coupled devices (ICCD), etc. The shortening of the exposure time improves the AUR of star sensors to a certain extent. At this time, the transmission and processing times of the star image pixel data become a new bottleneck of the AUR. To further improve the AUR, Wang et al. [33] proposed a star centroid extraction method based on distributed parallel super blocks, and Ding et al. [34] also proposed a multichannel star centroid extraction method. The above two methods both utilized the idea of parallel processing to extract the star centroid, thus effectively reducing the pixel data processing time. Nevertheless, the transmission time of pixel data could still not be significantly reduced because of the restriction in the output capacity of the image detector itself. Meanwhile, Liebe’s research [35] pointed out that the attitude measurement accuracy of the star sensor is correspondingly improved with an improvement in the pixel resolution of the image detector, without changing other parameters. Therefore, large-array image detectors have been gradually applied to the field of star sensors. In this case, the transmission time consumption of star image pixel data is more serious, which greatly limits the AUR’s further improvement. In order to break the above limitation, some scholars have proposed a rolling shutter exposure imaging method [36,37,38,39]. In this method, each star point in a single star image is actually the imaging result at different moments. When the attitude tracking and single star point measurement are combined, a set of attitude data can be obtained by each star point, thus resulting in the AUR’s improvement. Unfortunately, the attitude measurement’s accuracy is seriously affected by the random error of the single star centroid, which makes it difficult to ensure the attitude measurement accuracy of star sensors.

In order to significantly improve the AUR, as well as overcome the shortcomings of the above methods, a multi-exposure imaging approach was proposed in the authors’ previous research [40,41]. N times of short exposure can be adaptively inserted into one normal exposure period according to the angular velocity of the carrier, so that the star spots at N moments can be recorded in a single star image. When the star tracking and attitude calculation are carried out according to N moments, respectively, the attitude information corresponding to N moments can be obtained, which is equivalent to increasing the AUR by N times. However, due to multiple exposures, serious noises and false objects are also introduced in the star image, which is not conducive to practical applications. For this reason, this paper proposes a star centroid extraction method based on Kalman Filter. It has a significant effect on removing false objects and improving the centroid accuracy, thus benefiting the practical application of the multi-exposure imaging star sensor (MEISS).

The remainder of this paper is organized as follows. Section 2 details the principle of the high-accuracy star centroid extraction method, including the star point prediction windows, centroiding, and Kalman Filter. Both simulations and experiments in Section 3 are conducted to demonstrate the feasibility and effectiveness of the proposed method. Section 4 describes the discussion of the simulated and experimental results. Finally, conclusions are drawn in Section 5.

2. Principle of High Accuracy Star Centroid Extraction Method

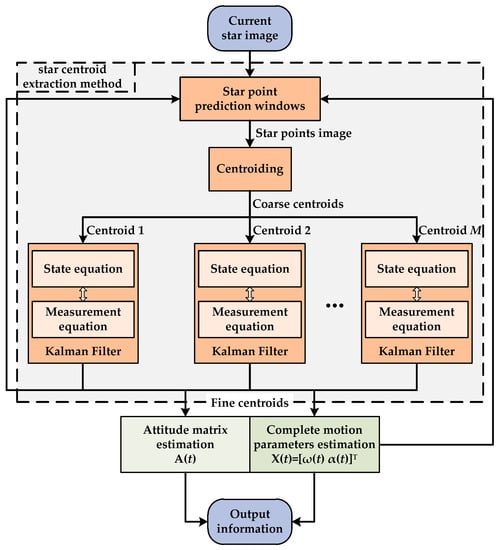

As described in the authors’ previous research [40], the multi-exposure imaging approach can be adopted to improve the star sensor’s AUR by N times. Nevertheless, due to the multiple exposures of an image detector, more severe imaging noises, as well as more fake star points, will be inevitably introduced into the whole star image. This is unfavorable for the accurate centroid extraction of true star points, thus affecting the MEISS’s accuracy. To ensure the MEISS’s effective application, this study proposes a high-accuracy star centroid extraction method based on Kalman Filter, which is illustrated in Figure 1.

Figure 1.

Flow chart of the proposed star centroid extraction method.

As shown in Figure 1, the proposed star centroid extraction method can be divided into three parts, i.e., star point prediction windows, centroiding, and Kalman Filter. Firstly, the star point prediction windows are generated by combining the fine centroids of the star spots in the former star image, as well as the complete motion parameters X(t) (including the angular velocity ω(t) and angular acceleration α(t)) [41]. The current star image will be processed by the star point prediction windows and then only the star points within the prediction windows can be transmitted to the next step. Secondly, the classic centroid method is used to calculate the centroids of the above star points. These centroids inevitably include many image noises and thus are just coarse centroids. Lastly, the coarse centroids are, respectively, processed by each Kalman Filter to filter the image noises and thus fine centroids are obtained. By using the fine centroids, both the attitude matrix and complete motion parameters can be estimated as the final output information. Meanwhile, the current fine centroids and complete motion parameters can be utilized to generate the next star point prediction windows. Therefore, the high-accuracy star centroid extraction can be constantly realized in such circulation.

2.1. Star Point Prediction Windows

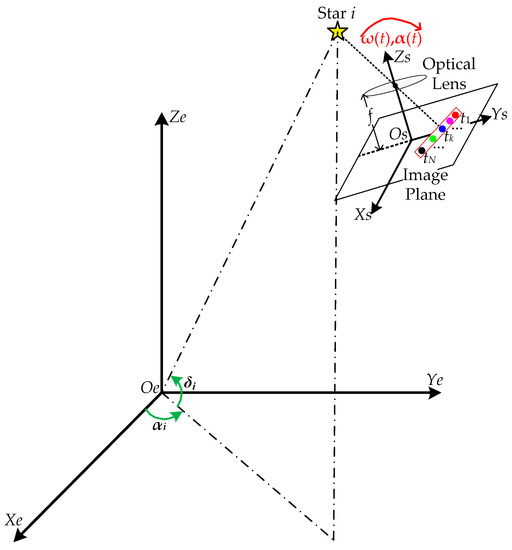

Figure 2 shows the MEISS’s basic working principle. Os-XsYsZs is the coordinate frame of the star sensor. Its coordinate origin Os is located at the center of the image plane of the image detector. The Xs-Os-Ys plane coincides with the image plane, and the Xs-axis and Ys-axis are, respectively, parallel to the horizontal and vertical directions of the image plane. The Zs-axis points to the direction of the bore sight. Oe-XeYeZe is the celestial coordinate frame. It is an inertial frame and its coordinate origin Oe is located at the center of the earth. The Xe-Oe-Ye plane coincides with the celestial equator plane. The Xe-axis points to the vernal equinox and the Ze-axis points to the celestial north pole. The Ye-axis is determined by the right-handed coordinate frame.

Figure 2.

Schematic diagram of the MEISS’s basic working principle.

There are a large number of stars who are moving quite slowly with time in the whole celestial sphere. After the correction of precession and nutation [42], the positions of the stars can be considered as time-independent constants. Taking Star i as an example, its right ascension (the rotation angle from the Xe-axis to the projection of Star i on the Xe-Oe-Ye plane) and declination (the rotation angle from the projection of Star i on the Xe-Oe-Ye plane to Star i) are, respectively, (αi, δi), and then its reference unit vector in the celestial coordinate frame Oe-XeYeZe can be expressed as:

When Star i is imaged by the MEISS, there will be a total of N imaging star spots of Star i in one star image due to the exposure times N. Taking the imaging time tk (k = 1,2,…,N) as an example, the observation unit vector of Star i in the MEISS’s coordinate frame Os-XsYsZs can be expressed as:

where (xi(tk), yi(tk)) are the coordinates of the star spot at time tk in the image plane and f is the MEISS’s focal length.

Let A(tk) represent the MEISS’s attitude matrix at time tk, and then the relation between Wi(tk) and Vi can be expressed as:

where M represents the total number of guide stars in the MEISS’s field of view (FOV).

Similarly, when the imaging time turns out to be tk+1, the relation between Wi(tk+1) and Vi can also be expressed as:

where A(tk+1) represents the MEISS’s attitude matrix at time tk+1.

When substituting Equation (3) into Equation (4), it can be derived as:

If defining R(tk,tk+1) as the attitude rotation matrix of the star sensor from time tk to time tk+1, it can be expressed as:

Since the time interval between time tk and time tk+1 is quite small, the rotation matrix can thus satisfy [43]:

where I is the 3 × 3 identity matrix, Δθ = [Δθx Δθy Δθz]T is the small rotation angle vector, [[Δθ]] is the antisymmetric matrix of Δθ, and is the higher-order infinitesimal matrix of Δθ.

Within the quite small time interval Δt = tk+1 − tk, the motion of the star sensor can be considered as the uniform angular acceleration motion. Therefore, let ω(tk) = [ωx(tk) ωy(tk) ωz(tk)]T and α(tk) = [αx(tk) αy(tk) αz(tk)]T represent the angle velocity and angle acceleration of the star sensor at time tk, respectively, and then the instantaneous angle velocity ω(t) = [ωx(t) ωy(t) ωz(t)]T can be expressed as:

By integrating Equation (8), the small rotation angle vector can be derived as:

When ignoring the higher-order infinitesimal matrix , the rotation matrix R(tk,tk+1) can be accordingly approximated as:

When substituting Equations (2) and (10) into Equation (5), it can be derived as:

According to Equation (11), the relationship of the third component of the observation unit vector can be expressed as:

Furthermore, Equation (12) can also be derived as:

When substituting Equation (13) into Equation (11), it can be transformed as:

Since the rotation angles Δθx and Δθy are quite small, the maximum calculation result of is still less than 0.001 and can thus be ignored. Therefore, Equation (14) can be accordingly approximated as:

Furthermore, considering that the focal length f is constant and (xi(t),yi(t)) are the coordinates of the star spot in the image plane, Equation (15) can be accordingly rewritten as:

For clarity, Equation (16) can also be simplified as a two-dimensional formula:

Therefore, when the current coordinates (xi(tk),yi(tk)), the focal length f, and the small rotation angle vector Δθ are known, the next coordinates (xi(tk+1),yi(tk+1)) can be predicted according to Equation (17).

It should be noticed that the star point of the MEISS is generally 7 × 7 pixels in size. In order to fully contain the effective pixel data of the star point, each star point prediction window can thus be set at a size of 14 × 14 pixels, which is two times bigger than the size of the star point.

2.2. Centroiding

After the star point prediction windows are generated according to Equation (17), they can be used to process the next star image. Only pixel data within each prediction window should be used to calculate the real star centroid, while those pixel data without the prediction window must belong to fake star points and should thus be discarded.

When obtaining the effective pixel data of the star spot, the classic centroid method [35] can be used to calculate the star centroid, which is expressed as:

where m, n, and f(m, n) are the x-coordinate, y-coordinate, and grayscale value of each effective pixel in the prediction window, and (xic(tk+1),yic(tk+1)) are the calculated star centroid. Since the above centroid results inevitably include many image noises, they are just coarse centroids.

2.3. Kalman Filter

In order to improve the accuracy of the above coarse centroids, Kalman Filter is utilized to filter the image noises and thus obtain the fine centroids. Kalman Filter consists of a state equation and a measurement equation.

Firstly, Equation (17) describes the relationship between the current centroid and the next centroid, and can thus be used as the state equation. Therefore, the state equation can be expressed as:

where Xk+1 = [xi(tk+1), yi(tk+1)]T and Xk = [xi(tk), yi(tk)]T are the state vectors at time tk and tk+1, respectively; Φk+1,k is the state transfer matrix, which is expressed as:

BkUk is the deterministic driving item, which is expressed as:

Wk represents the process noise, and its covariance matrix is Qk = εI2×2, where I2×2 is a 2 × 2 identity matrix and ε is a very small positive quantity.

Secondly, Equation (18) describes the measurement vector at time tk+1, and thus the measurement equation can be expressed as:

where Zk+1 = [xic(tk+1), yic(tk+1)]T and Xk+1 = [xi(tk+1), yi(tk+1)]T are the measurement vector and the state vector at time tk+1, respectively; Hk+1 = I2×2 is the measurement matrix; Vk+1 represents the measurement noise, and its covariance matrix is Rk+1 = μI2×2 (μ > 0), where μ is related to the noise level of the star image.

In summary, Equations (19) and (22) constitute the Kalman-Filter-based estimation model of the star centroid. The entire estimation process can be expressed as:

where k and k + 1 represent the current and next exposure times, respectively; and are the state vector estimations, which correspond to the two exposure times k and k + 1, respectively; and are the one-step prediction vector and one-step predictive error matrix, respectively; and and are the estimated error matrix and the filter gain matrix, respectively. When the initial values and are given, the star centroid vector can be estimated according to Equation (23).

3. Simulations and Experiments

3.1. Simulations

In this section, simulations are conducted to verify the feasibility and effectiveness of the above Kalman-Filter-based estimation model. The simulated parameters of the MEISS are listed in Table 1. The MEISS’s total period T and the multi-exposure times N are set as 100 ms and 10, respectively, and thus the sampling interval between each exposure time is 10 ms.

Table 1.

MEISS’s simulated parameters.

The entire simulations are run on a computer with a Windows 10 operating system and a CPU with a main frequency of 2.60 GHz. MATLAB (version: R2014a) is chosen as the simulation calculation software. The simulation process can be divided into several steps. Firstly, based on the star catalog of the Smithsonian Astrophysical Observatory (SAO), only stars with a magnitude of less than 6.0 are selected to build the MEISS’s navigation star catalog. Then, a set of attitude data is randomly generated as the MEISS’s initial attitude data, and a total of M = 30 navigation stars within the FOV are obtained under that attitude. Thirdly, the initial values of the angular velocity ω(t0) and angular acceleration α(t0) are assumed to be [10°/s 0 0]T and [1.0°/s2 0 0]T, respectively. Subsequently, among these 30 navigation stars, the 10th navigation star is randomly selected as the tested object. When the MEISS moves under the assumption of the above angular velocity ω(t0) and the angular acceleration α(t0), the centroid data of the 10th navigation star at continuous sampling times St, whose total sampling times are set as 300, can be obtained and used as the true values. Lastly, three kinds of different sizes of Gaussian white noise are added to the true centroid data and the Kalman-Filter-based estimation model is used to filter the noisy data.

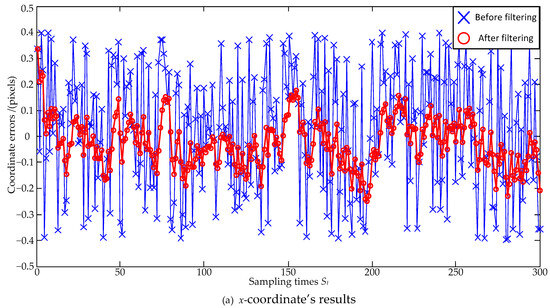

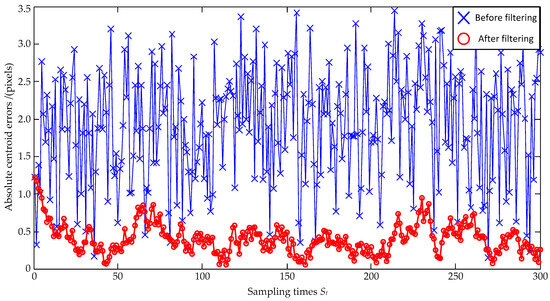

The noise size is firstly set as a zero mean and ±0.4 pixel maximum deviation, and the estimation errors are shown in Figure 3 and Figure 4. In Figure 3, the standard deviations of the x-coordinate’s estimation errors are 0.24 pixels before filtering and 0.09 pixels after filtering, respectively; the standard deviations of the y-coordinate’s estimation errors are 0.23 pixels before filtering and 0.08 pixels after filtering, respectively. Both the standard deviations of the x-coordinate’s and the y-coordinate’s estimation errors are decreased, and the x-coordinate’s and the y-coordinate’s reductions can be, respectively, calculated as:

Figure 3.

Coordinate estimation errors under ±0.4 pixel maximum deviation.

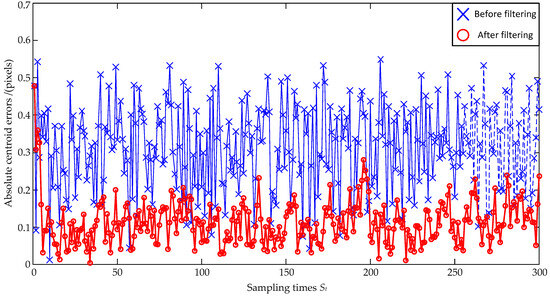

Figure 4.

Absolute centroid errors under ±0.4 pixel maximum deviation.

In order to make a reasonable evaluation, the larger value of 37.5% is selected as the evaluation result. That is to say, the coordinate errors are reduced to 37.5% of the original ones after the Kalman Filter estimation. In Figure 4, the absolute distance errors between the true and real values of the centroid are calculated, and it can be seen that the absolute distance errors are significantly reduced after filtering.

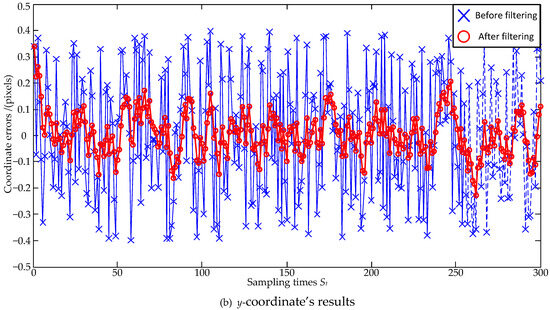

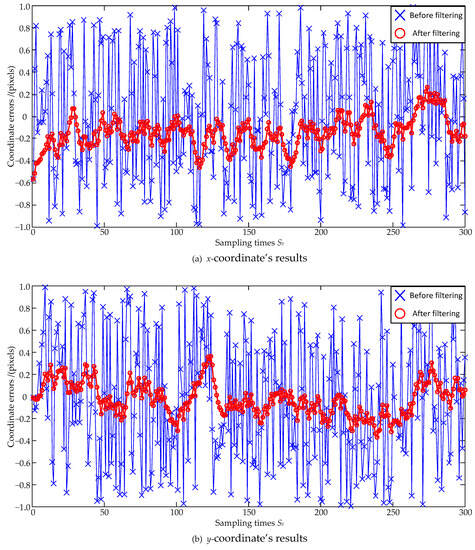

Subsequently, the noise size is enlarged as a zero mean and ±1.0 pixel maximum deviation, and the estimation errors are shown in Figure 5 and Figure 6. In Figure 5, the standard deviations of the x-coordinate’s estimation errors are 0.57 pixels before filtering and 0.14 pixels after filtering, respectively; the standard deviations of the y-coordinate’s estimation errors are 0.57 pixels before filtering and 0.15 pixels after filtering, respectively. Both the standard deviations of the x-coordinate’s and the y-coordinate’s estimation errors are decreased, and the x-coordinate’s and the y-coordinate’s reductions can be, respectively, calculated as:

Figure 5.

Coordinate estimation errors under ±1.0 pixel maximum deviation.

Figure 6.

Absolute centroid errors under ±1.0 pixel maximum deviation.

Similarly, the larger value of 26.3% is selected as the evaluation result. Therefore, the coordinate errors are reduced to 26.3% of the original ones after the Kalman Filter estimation. In Figure 6, the absolute distance errors are also significantly reduced after filtering.

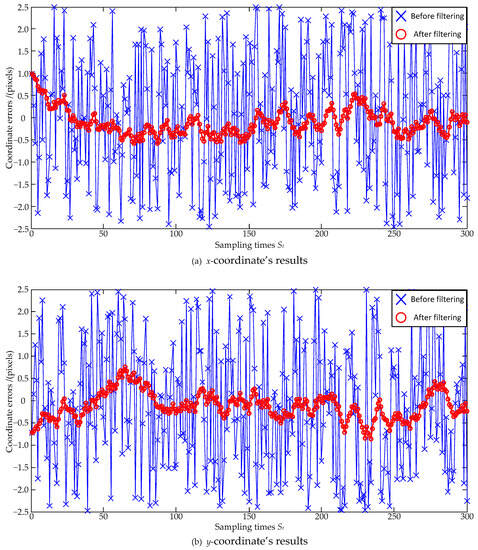

Lastly, to simulate the performance of the Kalman-Filter-based estimation model under very large errors, the noise size is further enlarged as a zero mean and ±2.5 pixel maximum deviation, and the estimation errors are shown in Figure 7 and Figure 8. In Figure 7, the standard deviations of the x-coordinate’s estimation errors are 1.46 pixels before filtering and 0.29 pixels after filtering, respectively; the standard deviations of the y-coordinate’s estimation errors are 1.45 pixels before filtering and 0.30 pixels after filtering, respectively. Both the standard deviations of the x-coordinate’s and the y-coordinate’s estimation errors are decreased, and the x-coordinate’s and the y-coordinate’s reductions can be, respectively, calculated as:

Figure 7.

Coordinate estimation errors under ±2.5 pixel maximum deviation.

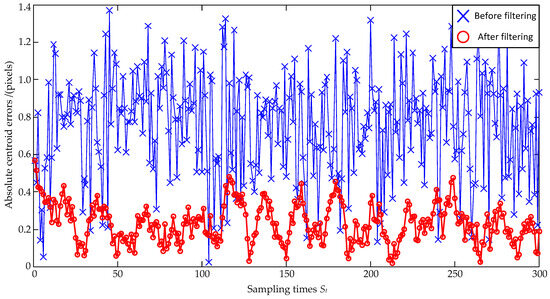

Figure 8.

Absolute centroid errors under ±2.5 pixel maximum deviation.

Similarly, the coordinate errors are reduced to 20.7% of the original ones after the Kalman Filter estimation. In Figure 8, the absolute distance errors are also significantly reduced after filtering.

According to the results of Figure 4, Figure 6, and Figure 8, the mean values and standard deviations of the absolute distance errors under different size noises are listed in Table 2. As shown in Table 2, the absolute distance errors of the Kalman Filter estimation increase with an increase in noises, but the increase in amplitude is smaller than that in the noises. When the noise size is enlarged as a zero mean and ±2.5 pixel maximum deviation, the mean values are 1.92 pixels before filtering and 0.40 pixels after filtering, respectively, and the standard deviations are 0.76 pixels before filtering and 0.20 pixels after filtering, respectively. It can be seen that the mean values and standard deviations of the absolute distance errors are significantly reduced after the Kalman Filter estimation.

Table 2.

Mean values and standard deviations of absolute distance errors.

3.2. Experiments

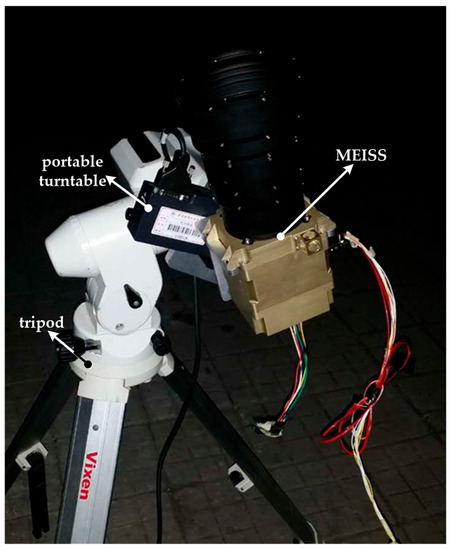

In this section, experiments are conducted to further verify the feasibility and effectiveness of the star point prediction windows. Figure 9 shows the equipment of night sky experiments. The main experimental equipment includes the MEISS, a portable turntable, a tripod, a computer, and a power supply. The main design parameters adopted by the real MEISS in Figure 9, such as the focal length and the FOV, etc., are the same as those listed in Table 1. A portable turntable is firmly installed on a tripod. The MEISS is installed on the turntable and rotates with it. The computer is the same as the one used in the simulations. The power supply is used to provide 220 V AC and 5 V DC.

Figure 9.

Night sky experiments.

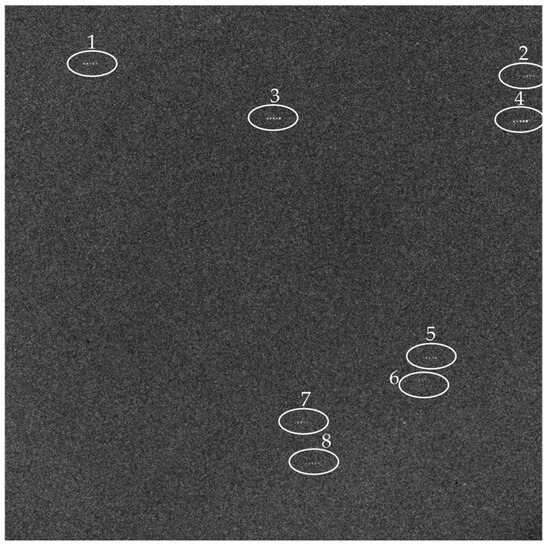

The angular velocity and angular acceleration of the turntable are set as 5°/s and 0.5°/s2, respectively, and the multi-exposure times N is set as 5. Under these conditions, the MEISS begins to work, and one of the star images obtained by MEISS is shown as Figure 10.

Figure 10.

One multi-exposure star image.

In Figure 10, there are, in total, eight guide stars within the star image, which are numbered as 1–8, and the rest is a starry background with noise. Since the multi-exposure times N is set as five, each guide star has five star spots. Furthermore, it can be seen that the noise level of the star image is relatively high. When using the global threshold segmentation (global threshold = 128) and classic centroid method, there are a total of 4985 objects. Among them, there are 3664 one-pixel objects accounting for 73.5% of the total, 749 two-pixel objects accounting for 15.0% of the total, and 572 over-three-pixel objects accounting for 11.5% of the total. Although most objects are false (one-pixel and two-pixel objects) and can be eliminated, the number of candidate star point objects is still large (572 over-three-pixel objects), which seriously hinders the accurate extraction of real star point objects. In contrast, when using the star point prediction windows for centroid extraction, only 8 × 5 = 40 star point objects are extracted, accounting for only 0.8% of the total. Similarly, under the same experimental conditions, another 99 star images are obtained to further verify the star point prediction windows. Among the 100 star images, the average proportion of the number of effective star point objects in the total object number of each star image is calculated as only 0.95%, which greatly demonstrates the effectiveness of the proposed method.

4. Discussion

In the simulations, different size noises were added to the true centroid data to verify the performance of the Kalman-Filter-based estimation model. Under the noises with z zero mean and ±0.4, ±1.0, and ±2.5 pixel maximum deviations, the coordinate errors after filtering were reduced to about 37.5%, 26.3%, and 20.7% of the original ones, respectively. The absolute distance errors of the Kalman Filter estimation increased with an increase in noises, but the increase amplitude was smaller than that of noises. Under the noise with a zero mean and ±2.5 pixel maximum deviation, the mean value and standard deviation of the absolute distance errors before filtering were 1.92 pixels and 0.76 pixels, respectively, and the errors were too large to perform the correct star image recognition. In contrast, the mean value and standard deviation after filtering were 0.40 pixels and 0.20 pixels, respectively, which still remain at the sub pixel level of accuracy and thus enabled the correct star image recognition.

Subsequently, night sky experiments were conducted to verify the performance of the star point prediction windows. Taking one MEISS’s star image as an example, its noise level was relatively high. When the classic centroid method was used, a total 4985 objects were obtained. Among them, the number of candidate star point objects was 572 (accounting for 11.5% of the total), which seriously hindered the accurate extraction of real star point objects. Furthermore, due to the high level of noise, the global threshold had to be set as 128, and this would filter out the weak pixel signals of the real star points, resulting in a decrease in the centroid accuracy. In contrast, when using the star point prediction windows for the centroid extraction, the local threshold could be adaptively set within a very small prediction window, and only 8 × 5 = 40 star point objects were extracted, accounting for only 0.8% of the total. Similarly, under the same experimental conditions, a total of 100 star images were obtained, and the average proportion of the number of effective star point objects in the total object number of each star image was calculated as only 0.95%.

Both the simulations and experiments verified the feasibility and effectiveness of the proposed method. It should be noticed that the proposed method also had certain limitations. In order to effectively filter out the noise carried by the centroid data, it was necessary to perform the filtering process on each star point object, thus resulting in significant computational and time costs. Therefore, further research work should focus on parallel computing based on Field Programmable Gate Array (FPGA) and improving the computational efficiency of the Kalman-Filter-based estimation model.

5. Conclusions

A multi-exposure imaging approach, as proposed in earlier studies, can be used to increase the AUR by N times. However, serious noises are also introduced into star images due to multiple exposures. In this paper, a high-accuracy star centroid extraction method based on Kalman Filter was proposed to remove false objects and improve the centroid accuracy, which is beneficial to the MEISS’s practical application. The principle of the proposed method was described in detail, including star point prediction windows, centroiding, and Kalman Filter. Subsequently, simulations were conducted to verify the performance of the Kalman-Filter-based estimation model. Furthermore, experiments were also conducted to verify the performance of the star point prediction windows. Both the simulated and experimental results demonstrated the feasibility and effectiveness of the proposed method.

Author Contributions

Conceptualization, W.Y.; methodology, W.Y.; software, Y.Z.; validation, H.Q.; formal analysis, W.Y.; investigation, H.Q.; resources, H.Q.; data curation, Y.Z.; writing—original draft preparation, W.Y.; writing—review and editing, W.Y., H.Q. and Y.Z.; visualization, Y.Z.; supervision, H.Q.; project administration, Y.Z.; funding acquisition, W.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (NSFC), grant number 62005135, and the China Postdoctoral Science Foundation, grant number EZ53020003.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We gratefully acknowledge the support from the above funds.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ma, Y.; Jiang, J. Guide Triangle Catalog Generation Based on Triangle Density and Utilization for Star Sensors. IEEE Sens. J. 2022, 22, 3472–3483. [Google Scholar] [CrossRef]

- Liu, M.; Wei, X.; Wen, D.; Wang, H. Star Identification Based on Multilayer Voting Algorithm for Star Sensors. Sensors 2021, 21, 3084. [Google Scholar] [CrossRef] [PubMed]

- Han, J.; Yang, X.; Xu, T.; Fu, Z.; Chang, L.; Yang, C.; Jin, G. An End-to-End Identification Algorithm for Smearing Star Image. Remote Sens. 2021, 13, 4541. [Google Scholar] [CrossRef]

- Bao, J.; Zhan, H.; Sun, T.; Fu, S.; Xing, F.; You, Z. A Window-Adaptive Centroiding Method Based on Energy Iteration for Spot Target Localization. IEEE Trans. Instrum. Meas. 2022, 71, 7004113. [Google Scholar] [CrossRef]

- Wan, X.; Wang, G.; Wei, X.; Li, J.; Zhang, G. ODCC: A Dynamic Star Spots Extraction Method for Star Sensors. IEEE Trans. Instrum. Meas. 2021, 70, 5009114. [Google Scholar] [CrossRef]

- Fan, Q.Y.; Zhang, M.; Xue, Y.K. Restoration of motion-blurred star images with elliptical star streaks. Meas. Sci. Technol. 2023, 34, 065403. [Google Scholar] [CrossRef]

- Cui, Y.H.; Feng, J.; Li, Y.D.; Wen, L.; Guo, Q. Proton Radiation Effects of CMOS Image Sensors on Different Star Map Recognition Algorithms for Star Sensors. Electronics 2023, 12, 1629. [Google Scholar] [CrossRef]

- Zhu, Z.J.; Ma, Y.B.; Dan, B.B.; Zhao, R.J.; Liu, E.H.; Zhu, Z.F. ISSM-ELM—A guide star selection for a small-FOV star sensor based on the improved SSM and extreme learning machine. Appl. Opt. 2022, 61, 6443–6452. [Google Scholar] [CrossRef]

- Zapevalin, P.R.; Novoselov, A.; Zharov, V.E. Artificial neural network for star tracker centroid computation. Adv. Space Res. 2023, 71, 3917–3925. [Google Scholar] [CrossRef]

- Niu, Y.Z.; Wei, X.G.; Li, J. Fast and Robust Star Identification Using Color Ratio Information. IEEE Sens. J. 2022, 22, 20401–20412. [Google Scholar] [CrossRef]

- Cao, H.J.; Zhan, H.Y.; Li, J.D.; Rao, Q.L.; Xing, F.; You, Z. An All-Day Attitude Sensor Integrating Stars and Sun Measurement Based on Extended Pixel Response of CMOS APS Imager. IEEE Trans. Instrum. Meas. 2023, 72, 7003111. [Google Scholar] [CrossRef]

- Lu, K.; Liu, E.; Zhao, R.; Tian, H.; Zhang, H. A Fast Star Identification Algorithm of Star Sensors in the LIS Mode. Remote Sens. 2022, 14, 1739. [Google Scholar] [CrossRef]

- Lei, H.J.; Li, B.; Wei, Q.; Yue, Y.Z.; Ding, X.K.; Liang, K.; Chen, L.F.; Yang, H.J.; Zhang, W.G.; Hu, X.D. Inertial information based star detection for airborne star sensor. Opt. Laser Technol. 2023, 162, 109325. [Google Scholar] [CrossRef]

- Ning, X.; Chen, P.; Huang, Y.; Wu, W.; Fang, J. Angular velocity estimation using characteristics of star trails obtained by star sensor for spacecraft. Sci. China Inform. Sci. 2021, 64, 112209. [Google Scholar] [CrossRef]

- Wang, B.W.; Wang, H.Y.; Mao, X.N.; Wu, S.C.; Liao, Z.; Zang, Y.Z. Optical System Design Method of Near-Earth Short-Wave Infrared Star Sensor. IEEE Sens. J. 2022, 22, 22169–22178. [Google Scholar] [CrossRef]

- Liu, X.; Xing, F.; Fan, S.; You, Z. A Compressed and High-Accuracy Star Tracker with On-Orbit Deployable Baffle for Remote Sensing CubeSats. Remote Sens. 2021, 13, 2503. [Google Scholar] [CrossRef]

- Izadmehr, M.; Ghomi, M.K. An accuracy of better than 200 m in positioning using a portable star tracker. New Astron. 2020, 74, 101272. [Google Scholar] [CrossRef]

- Lu, J.; Liang, S.; Yang, L. Analytic coarse alignment and calibration for inertial navigation system on swaying base assisted by star sensor. IET Sci. Meas. Technol. 2018, 12, 673–677. [Google Scholar] [CrossRef]

- Liu, M.Y. Research on Star Sensor-based Space Debris Detection and Positioning Technology. Publ. Astron. Soc. Pac. 2023, 135, 047001. [Google Scholar] [CrossRef]

- Ren, S.Y.; Qiu, S.; Cheng, K.Y. An Edge Computing Algorithm Based on Multi-Level Star Sensor Cloud. CMES-Comp. Model. Eng. 2023, 136, 1643–1659. [Google Scholar] [CrossRef]

- Wang, Y.L.; Wang, M.; Zhu, Y.; Long, X.X. Low frequency error analysis and calibration for multiple star sensors system of GaoFen7 satellite. Geo-Spat. Inf. Sci. 2022. [Google Scholar] [CrossRef]

- Wang, Y.; Wang, M.; Zhu, Y. On-Orbit Calibration of Installation Parameter of Multiple Star Sensors System for Optical Remote Sensing Satellite with Ground Control Points. Remote Sens. 2020, 12, 1055. [Google Scholar] [CrossRef]

- Gao, Z.Q.; Wang, H.Y.; Wang, W.H.; Xu, Y. SIMU/Triple star sensors integrated navigation method of HALE UAV based on atmospheric refraction correction. J. Navig. 2022, 75, 704–726. [Google Scholar] [CrossRef]

- Tan, W.; Dai, D.; Wu, W.; Wang, X.; Qin, S. A Comprehensive Calibration Method for a Star Tracker and Gyroscope Units Integrated System. Sensors 2018, 18, 3106. [Google Scholar] [CrossRef]

- Zhang, H.; Niu, Y.; Lu, J.; Yang, Y. System-level Calibration for the Star Sensor Installation Error in the Stellar-Inertial Navigation System on a Swaying Base. IEEE Access 2018, 6, 47288–47294. [Google Scholar] [CrossRef]

- Ning, X.; Zhang, J.; Gui, M.; Fang, J. A Fast Calibration Method of the Star Sensor Installation Error Based on Observability Analysis for the Tightly Coupled SINS/CNS-Integrated Navigation System. IEEE Sens. J. 2018, 18, 6794–6803. [Google Scholar] [CrossRef]

- Xu, Z.H.; Zhou, Z.F.; Chang, Z.J.; Hao, S.W.; Duan, H. All-Parameter System-Level Calibration for SINS/CNS Based on Global Observability Analysis. IEEE Sens. J. 2023, 23, 10856–10870. [Google Scholar] [CrossRef]

- Wang, X.M.; Zhang, H.; Gao, X.D.; Zhao, R.J. The Tobit-Unscented-Kalman-Filter-Based Attitude Estimation Algorithm Using the Star Sensor and Inertial Gyro Combination. Micromachines 2023, 14, 1243. [Google Scholar] [CrossRef]

- Hou, B.W.; He, Z.M.; Zhou, H.Y.; Wang, J.Q. Integrated Design and Accuracy Analysis of Star Sensor and Gyro on the Same Benchmark for Satellite Attitude Determination System. IEEE/CAA J. Autom. Sin. 2019, 6, 1074–1080. [Google Scholar] [CrossRef]

- Wu, L.; Xu, Q.; Wang, H.; Lyu, H.; Li, K. Guide Star Selection for the Three-FOV Daytime Star Sensor. Sensors 2019, 19, 1457. [Google Scholar] [CrossRef] [PubMed]

- Zhong, H.; Yang, M.; Lu, X. Increasing Update Rate for Star Sensor by Pipelining Parallel Processing Method. Opt. Precis. Eng. 2009, 17, 2230–2235. [Google Scholar]

- Mao, X.; Liang, W.; Zheng, X. A Parallel Computing Architecture Based Image Processing Algorithm for Star Sensor. J. Astron. 2011, 32, 613–619. [Google Scholar]

- Wang, Z.; Jiang, J.; Zhang, G. Distributed Parallel Super-Block-Based Star Detection and Centroid Calculation. IEEE Sens. J. 2018, 18, 8096–8107. [Google Scholar] [CrossRef]

- Ding, J.Q.; Dai, D.K.; Tan, W.F.; Wang, X.S.; Qin, S.Q. Implementation of a real-time star centroid extraction algorithm with high speed and superior denoising ability. Appl. Opt. 2022, 61, 3115–3122. [Google Scholar] [CrossRef] [PubMed]

- Liebe, C.C. Accuracy performance of star trackers—A tutorial. IEEE Trans. Aerosp. Electron. Syst. 2002, 38, 587–599. [Google Scholar] [CrossRef]

- Zhang, S.; Xing, F.; Sun, T.; You, Z. Variable Angular Rate Measurement for a Spacecraft Based on the Rolling Shutter Mode of a Star Tracker. Electronics 2023, 12, 1875. [Google Scholar] [CrossRef]

- Li, Y.; Wei, X.; Li, J.; Wang, G. Imaging modeling and error analysis of the star sensor under rolling shutter exposure mode. Opt. Express 2021, 29, 15478–15496. [Google Scholar] [CrossRef]

- He, L.; Ma, Y.; Zhao, R.; Hou, Y.; Zhu, Z. High Update Rate Attitude Measurement Method of Star Sensors Based on Star Point Correction of Rolling Shutter Exposure. Sensors 2021, 21, 5724. [Google Scholar] [CrossRef]

- Zhang, S.; Xing, F.; Sun, T.; You, Z.; Wei, M.S. Novel approach to improve the attitude update rate of a star tracker. Opt. Express 2018, 26, 5164–5181. [Google Scholar] [CrossRef]

- Yu, W.; Jiang, J.; Zhang, G. Multiexposure Imaging and Parameter Optimization for Intensified Star Trackers. Appl. Opt. 2016, 55, 10187–10197. [Google Scholar] [CrossRef]

- Yu, W.; Jiang, J.; Zhang, G. Star Tracking Method Based on Multiexposure Imaging for Intensified Star Trackers. Appl. Opt. 2017, 56, 5961–5971. [Google Scholar] [CrossRef] [PubMed]

- IERS Conventions. 2010. Available online: https://www.iers.org/IERS/EN/Publications/TechnicalNotes/tn36.html (accessed on 7 May 2023).

- Shuster, M.D. A survey of attitude representations. Navigation 1993, 8, 439–517. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).