A Pure Electric Driverless Crawler Construction Machinery Walking Method Based on the Fusion SLAM and Improved Pure Pursuit Algorithms

Abstract

1. Introduction

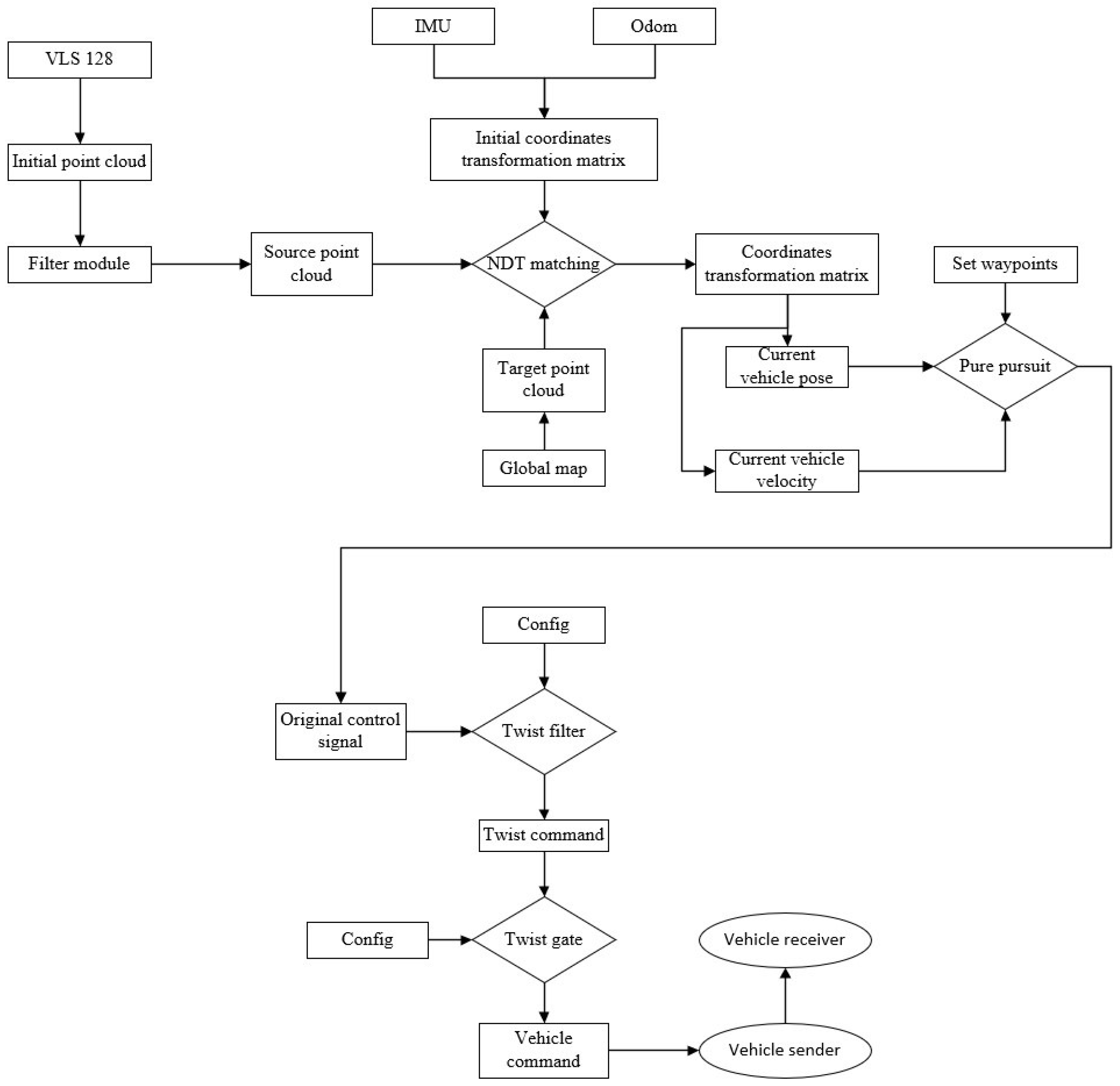

2. Fusion SLAM System Based on Improved NDT

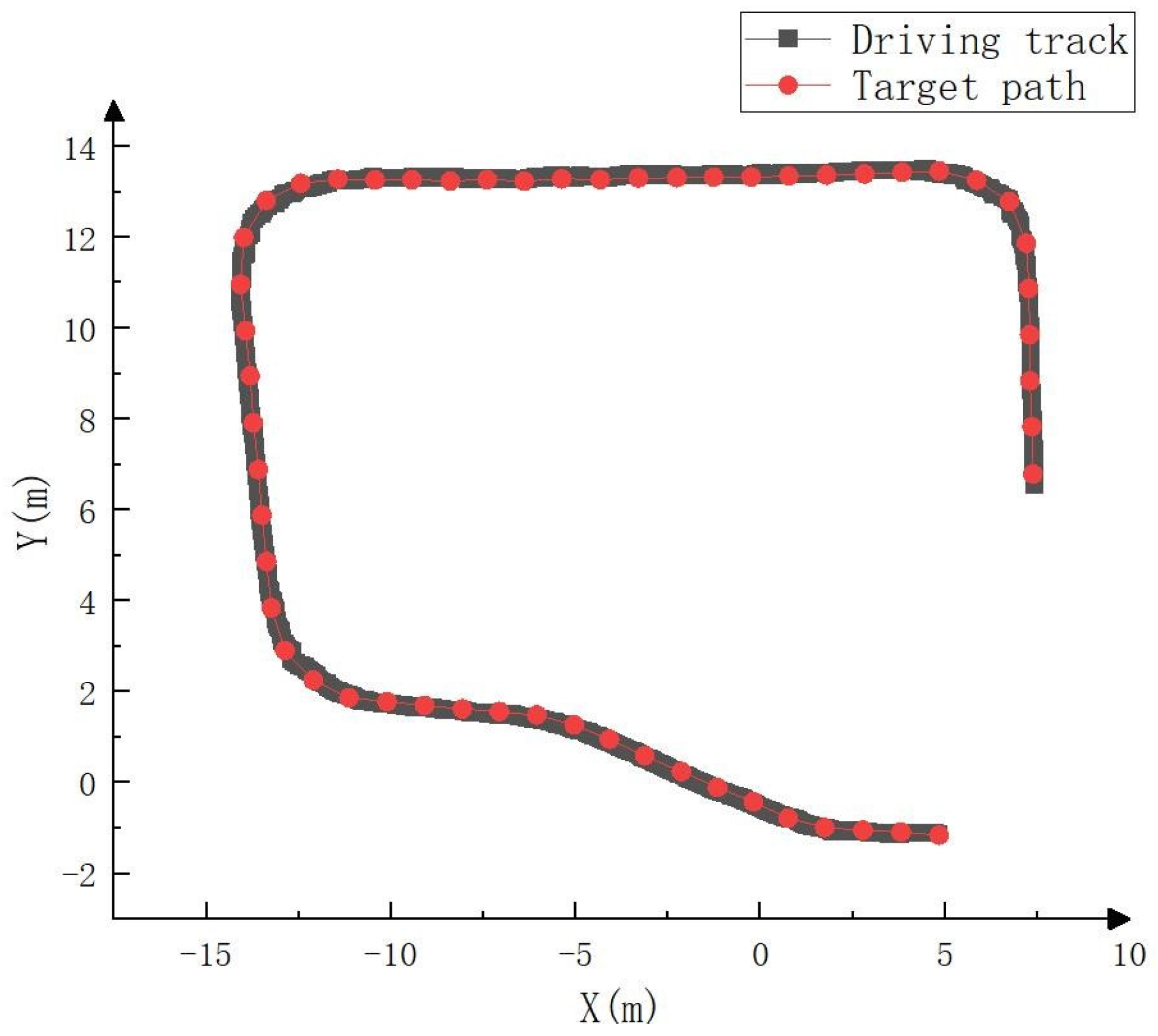

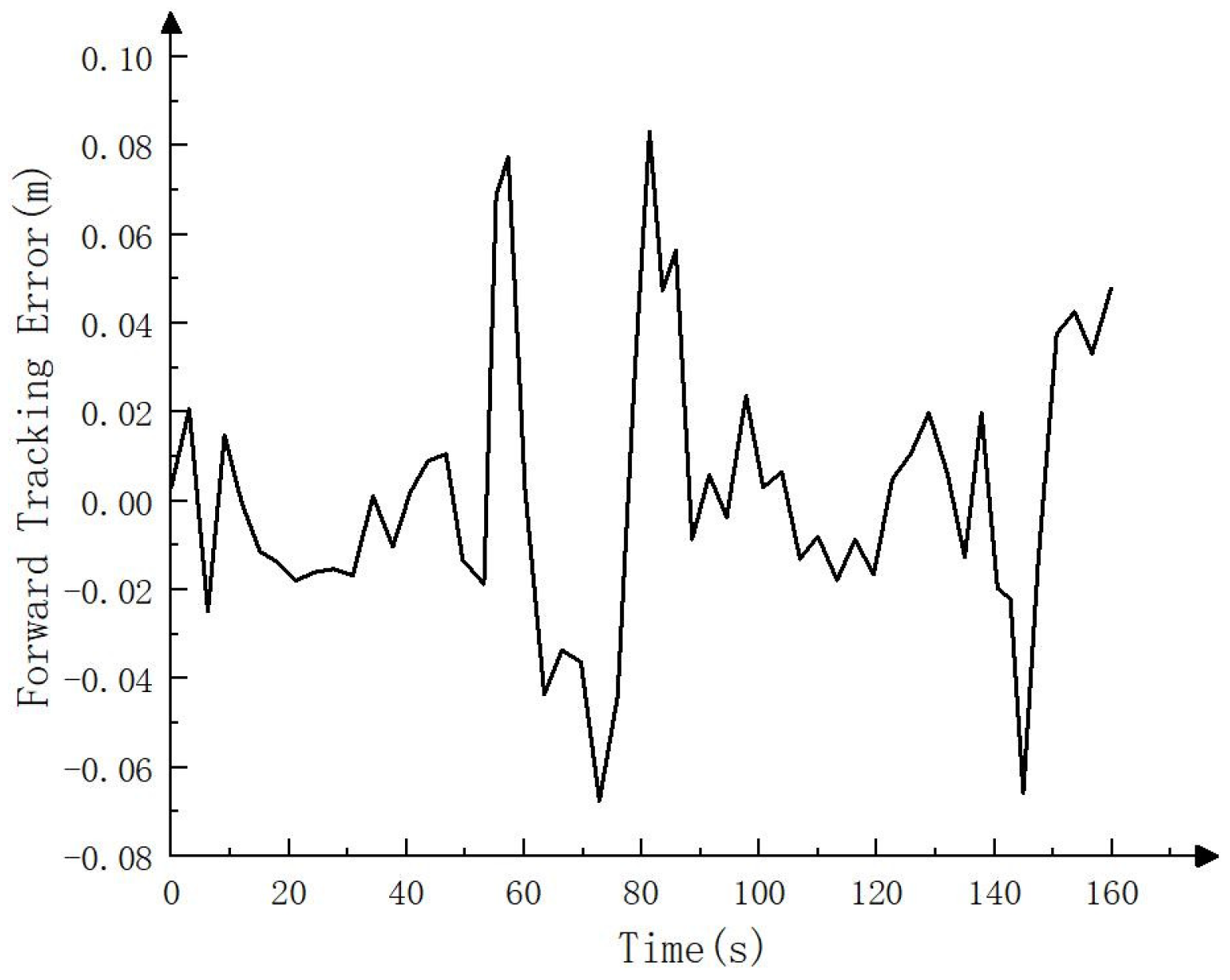

2.1. Point Cloud Registration Algorithm Based on NDT

2.1.1. Point Cloud Registration

2.1.2. NDT Algorithm

2.1.3. NDT Algorithm Flow

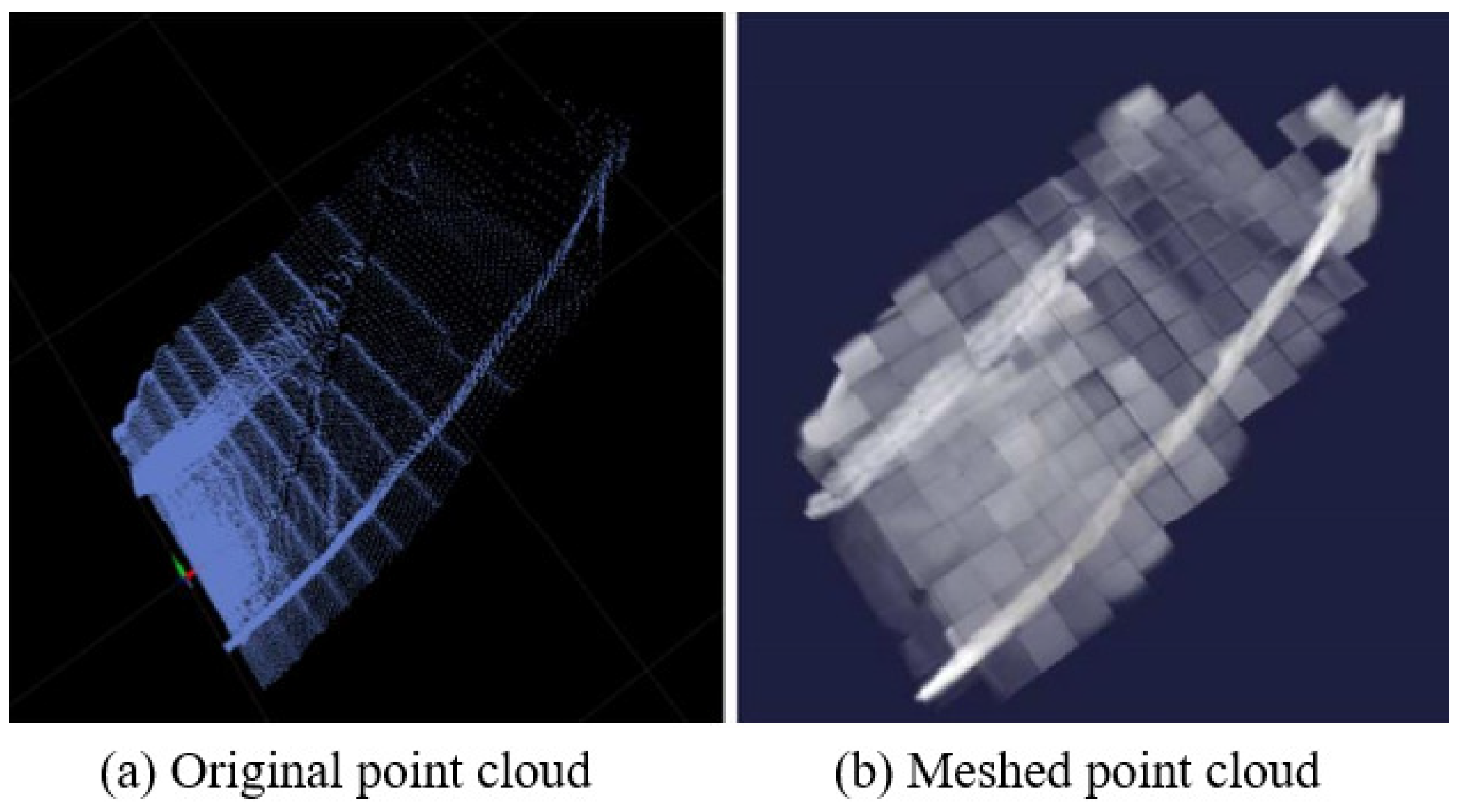

2.2. NDT Mapping Process and Effect Display

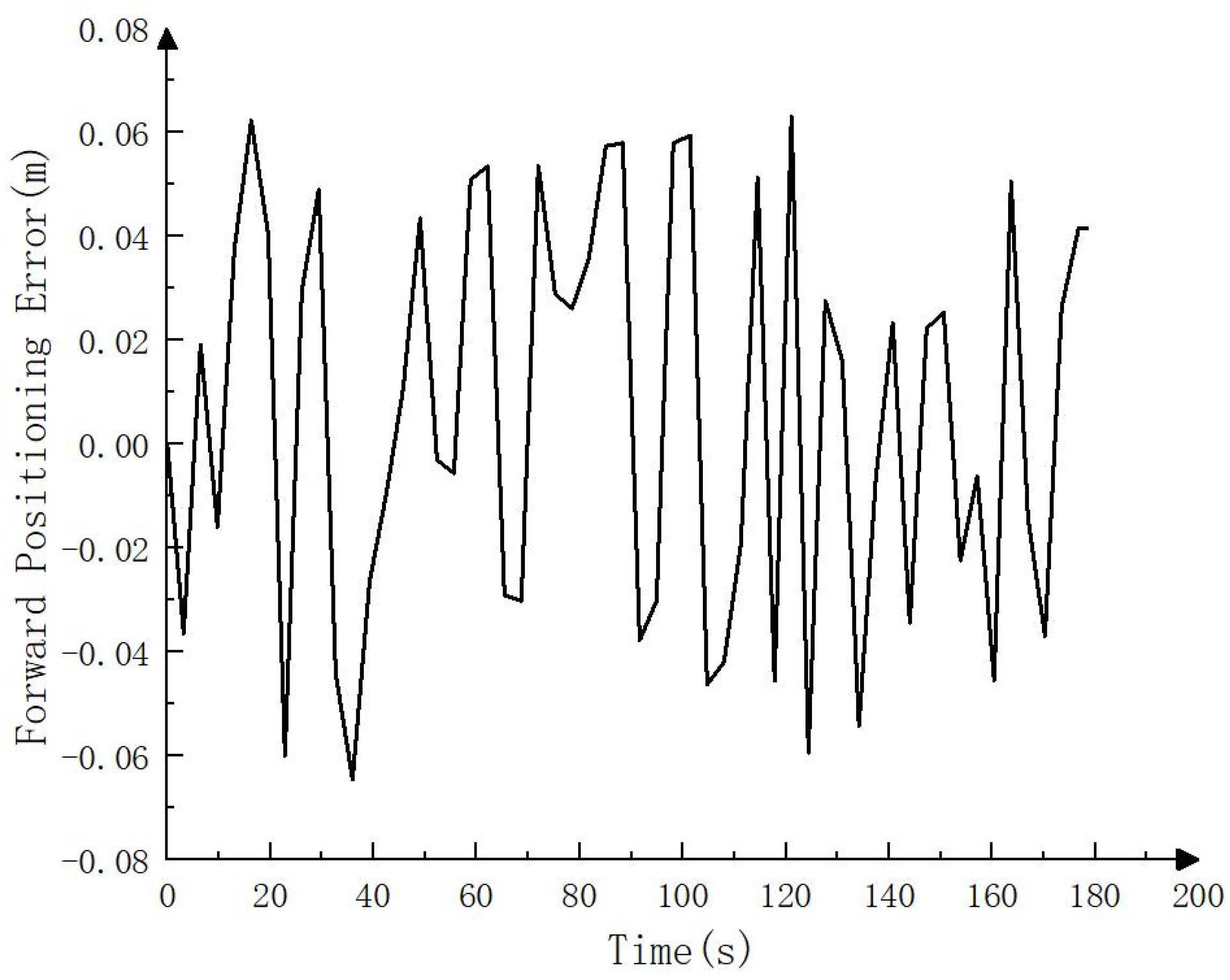

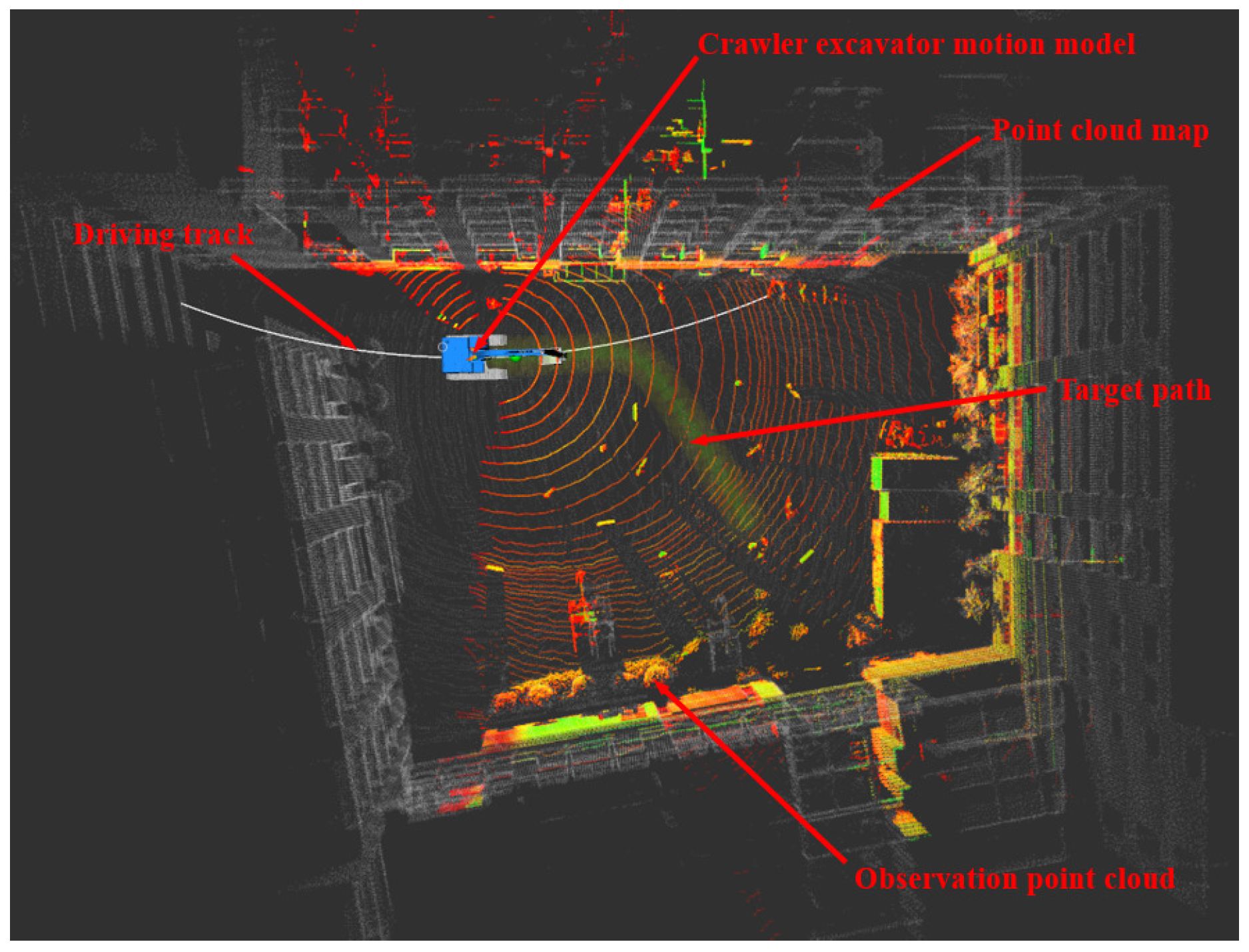

2.3. NDT Positioning Process and Effect Display

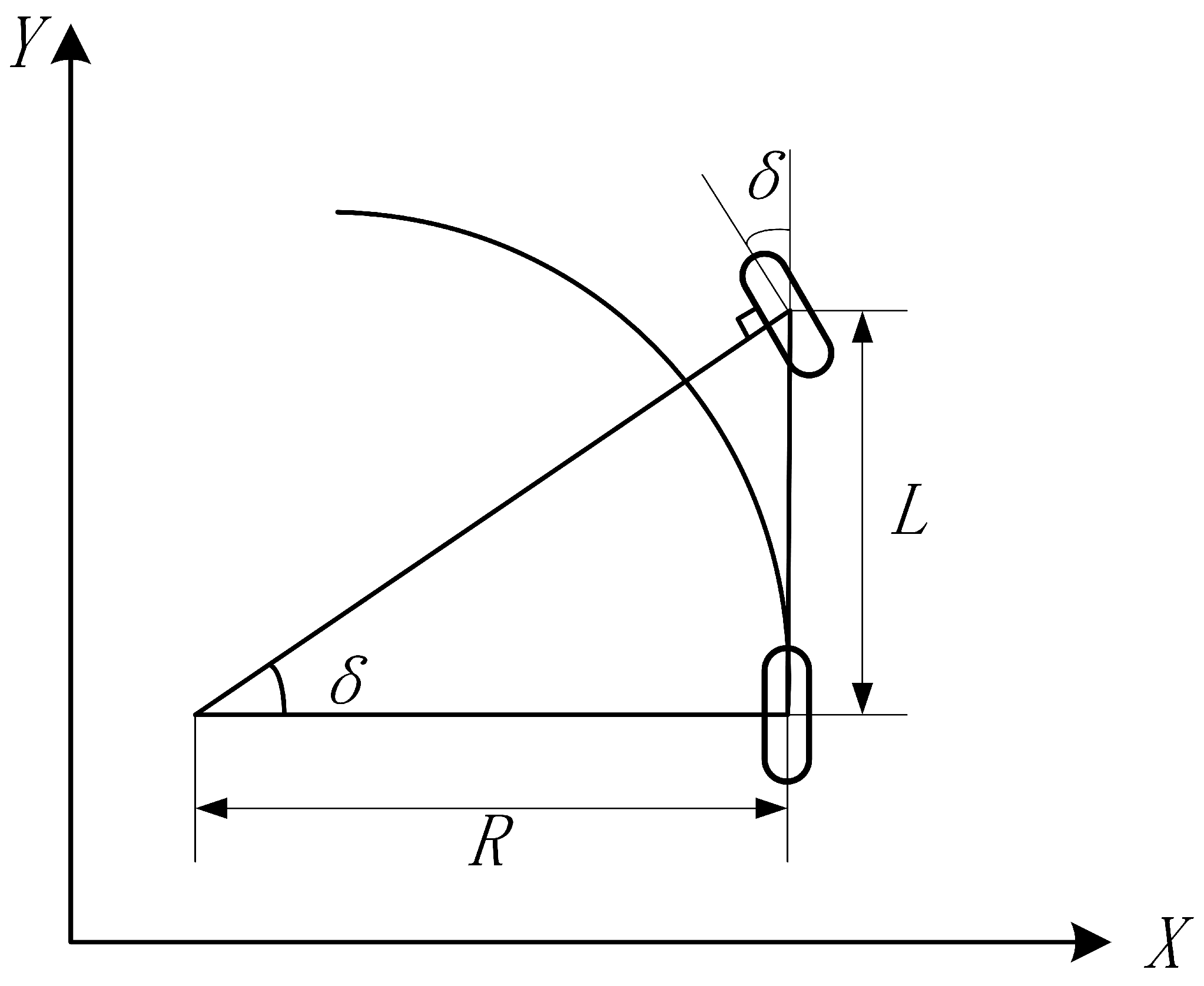

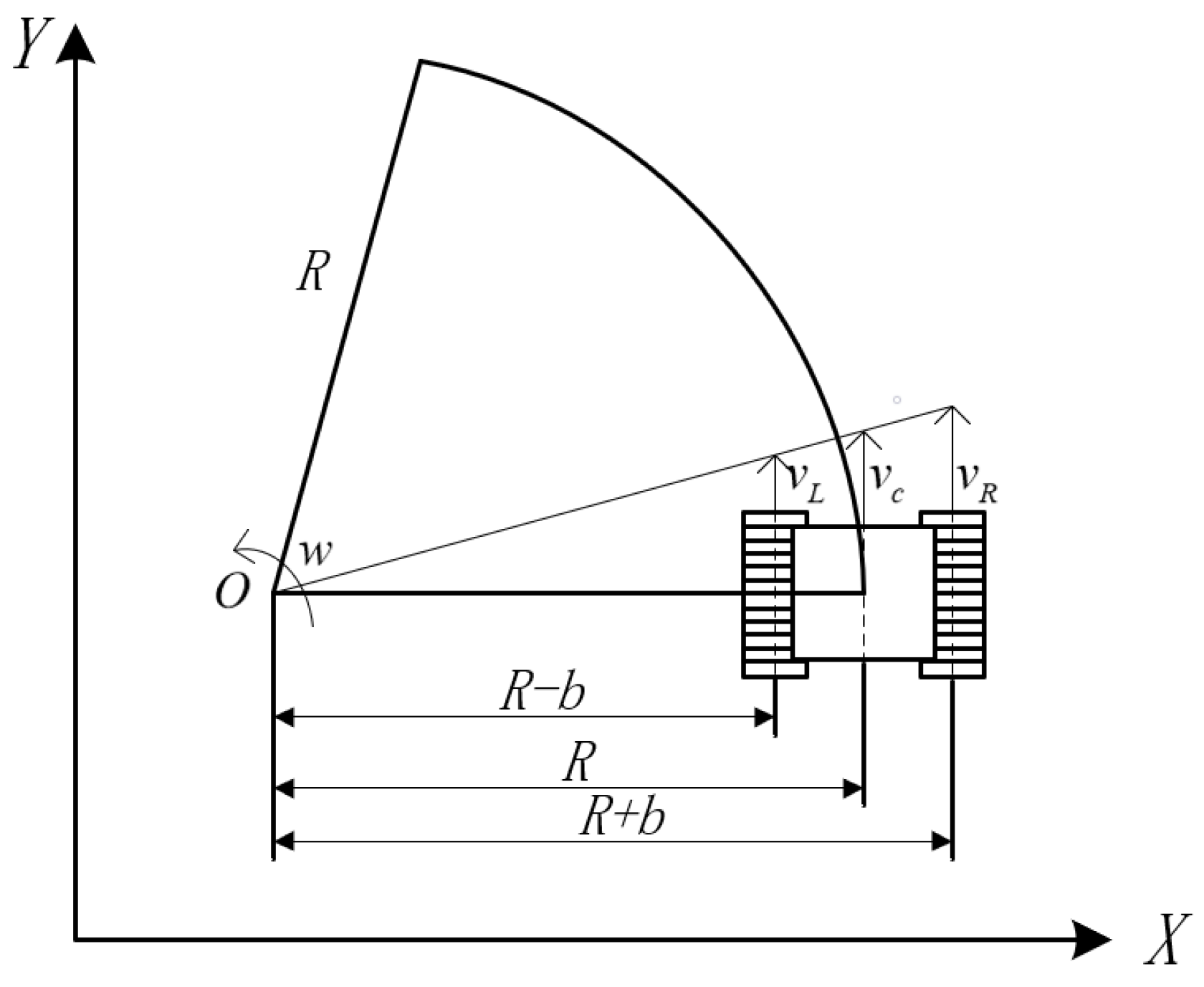

3. Motion Control System of Crawler Construction Machinery Based on the Improved Pure Pursuit Algorithm

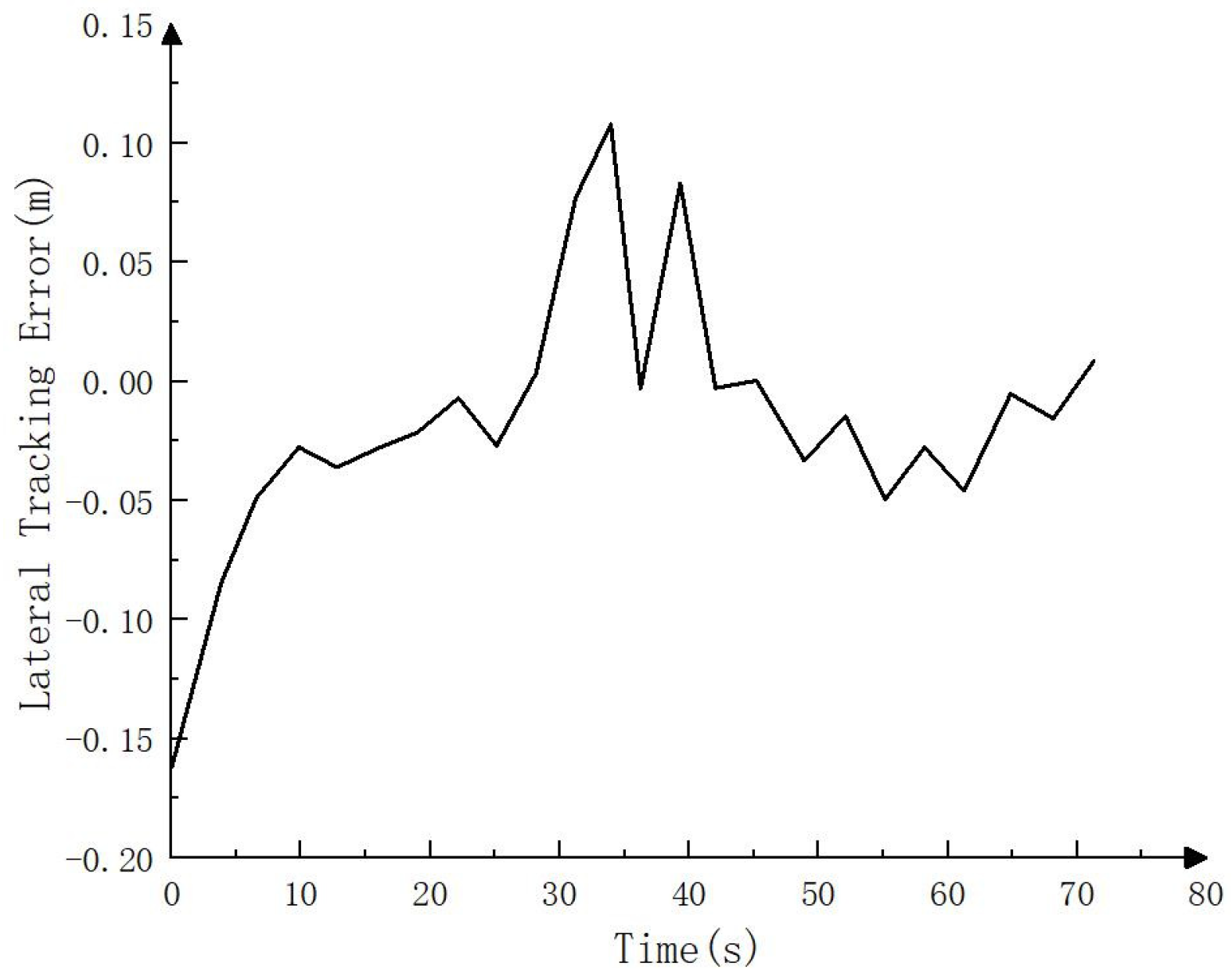

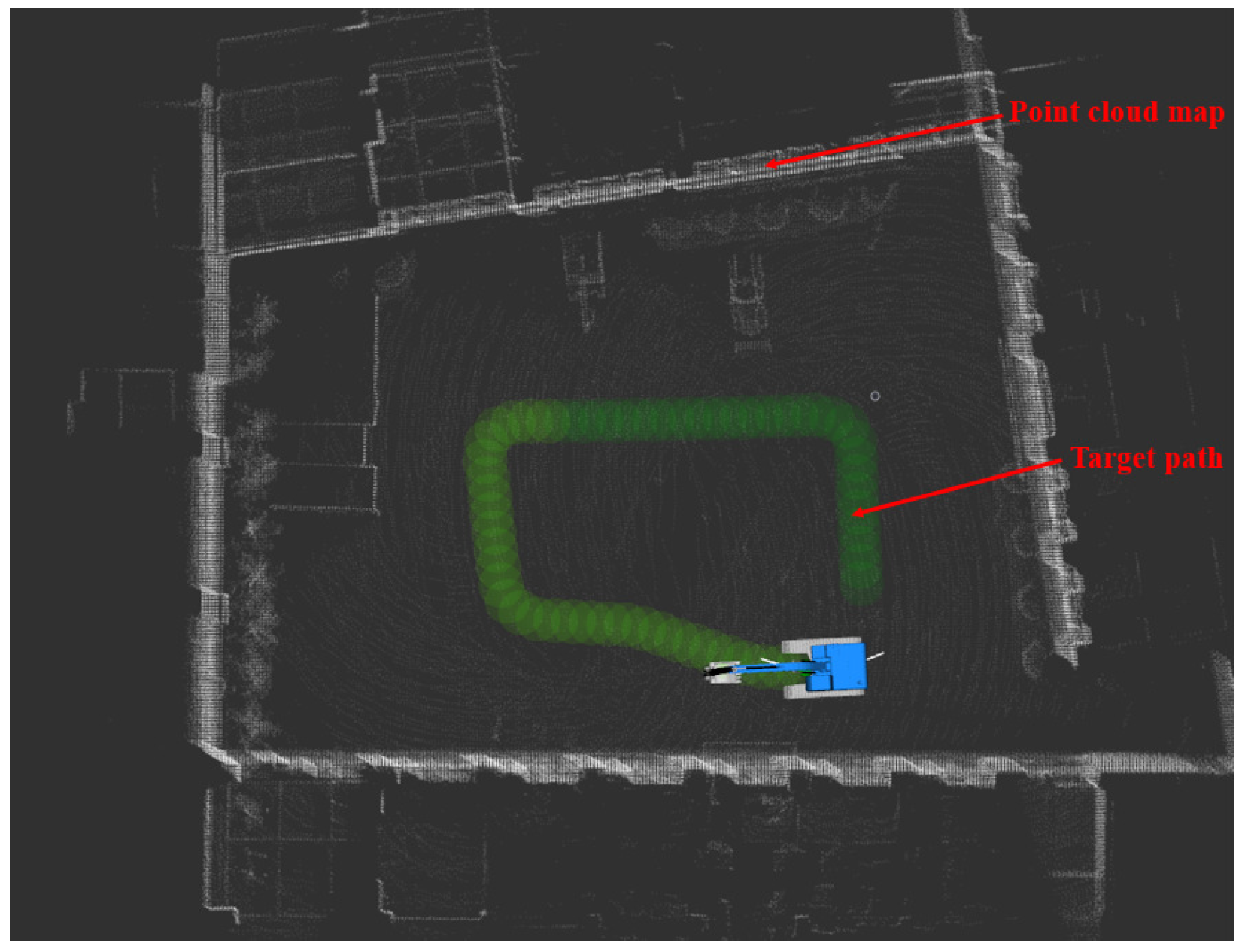

4. Simulation Platform Construction and Real Vehicle Test

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Device Name | Equipment Model | Equipment Parameters |
|---|---|---|
| IMU | N100 | Output frequency: 400 Hz |
| | | Serial port baud rate: 921,600 |
| | | Pitch/roll accuracy: 0.05° |
| | | Number of axes: 9 |
| RTK/INS | CHCNAV CGI-610 | Attitude accuracy: 0.1° |
| | | Positioning accuracy: 1 cm |
| | | Output frequency: 100 Hz |
| | | Initialization time: 1 min |
| Lidar | Velodyne16 | Measurement range: 300 m |
| | | Measurement accuracy: ±3 cm |
| | | Vertical measurement angle: 40° |
| | | Horizontal measurement angle: 360° |
| | | Measurement frequency: 5–20 Hz |
| On-board computer | TW-T609 | CPU: 8-core Arm architecture |
| | | GPU: 512-core Volta architecture + 64 Tensor cores |
| | | Computational power: 32 TOPS |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, J.; Ren, H.; Lin, T.; Yao, Y.; Fang, Z.; Liu, C. A Pure Electric Driverless Crawler Construction Machinery Walking Method Based on the Fusion SLAM and Improved Pure Pursuit Algorithms. Sensors 2023, 23, 7784. https://doi.org/10.3390/s23187784
