Investigation of Camera-Free Eye-Tracking Glasses Compared to a Video-Based System

Abstract

1. Introduction

2. Materials and Methods

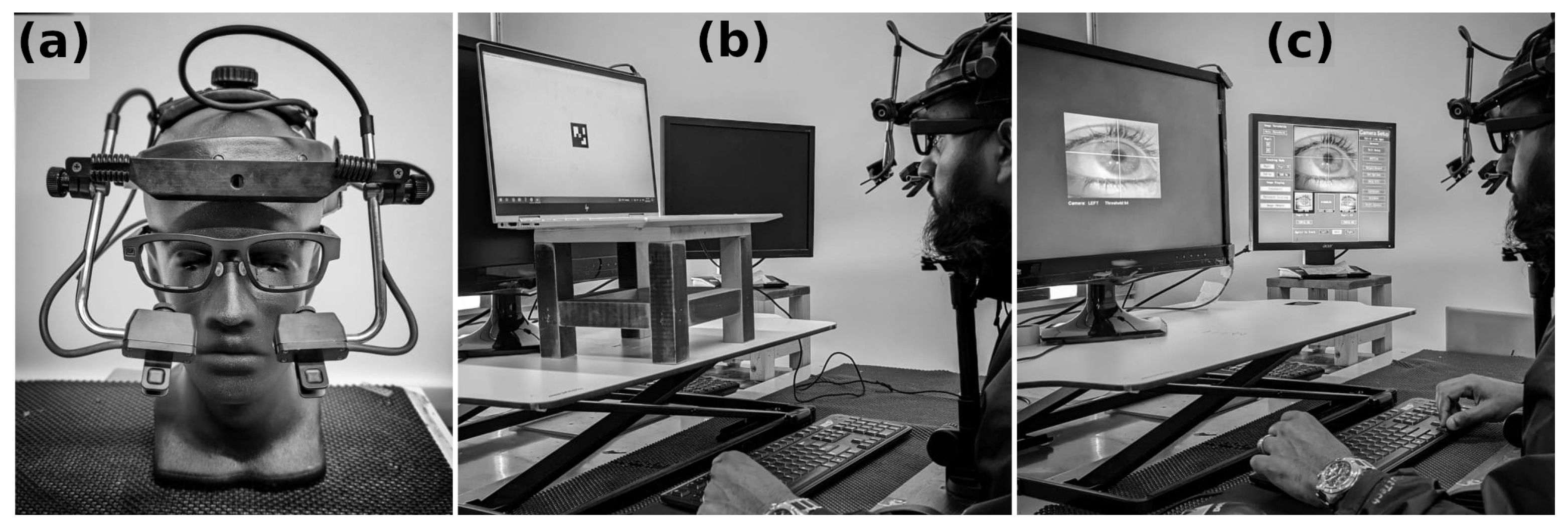

2.1. Eye-Tracking Devices

EyeLink-MindLink Signal Alignment

2.2. Participants

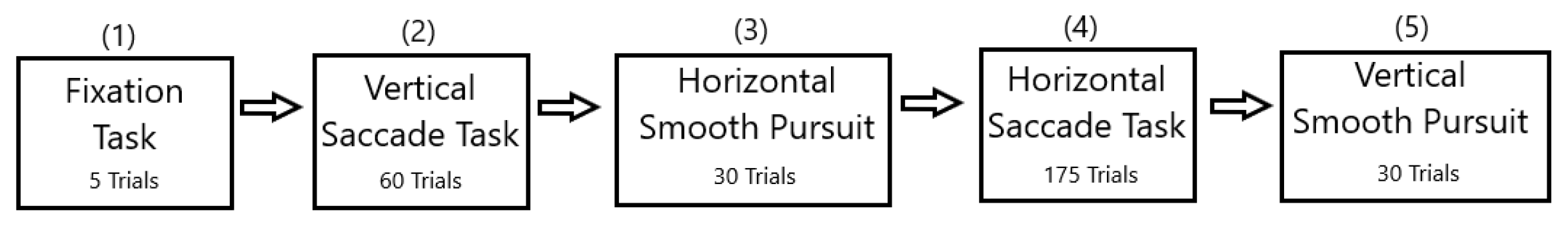

2.3. Stimulus and Tasks

2.3.1. Fixation Task

2.3.2. Saccade Tasks

2.3.3. Smooth Pursuit Tasks

2.4. Analysis

2.4.1. Conversion to Degrees of the Visual Angle

2.4.2. Fixation Stability

2.4.3. Saccades

2.4.4. Smooth Pursuit

3. Results

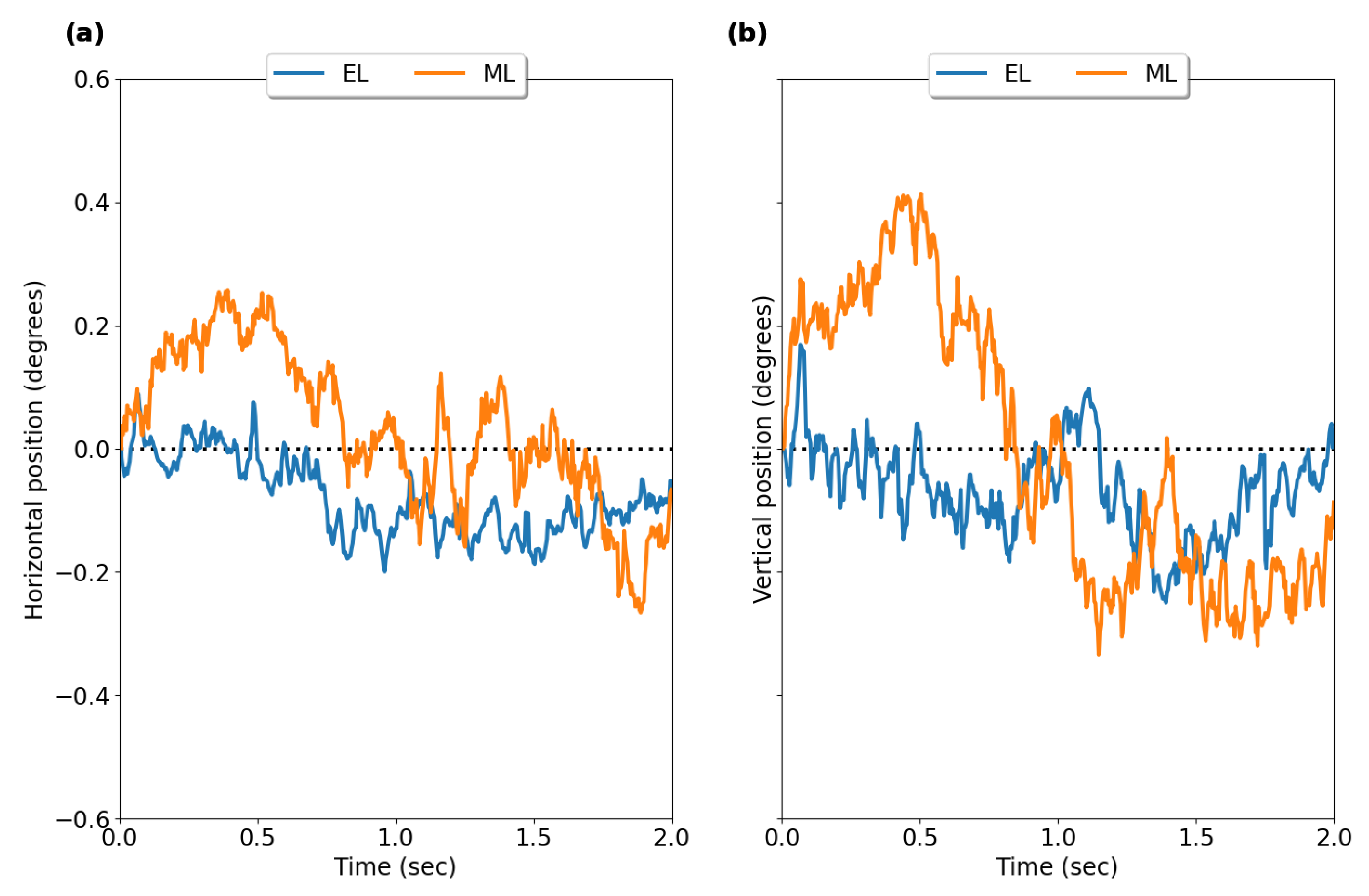

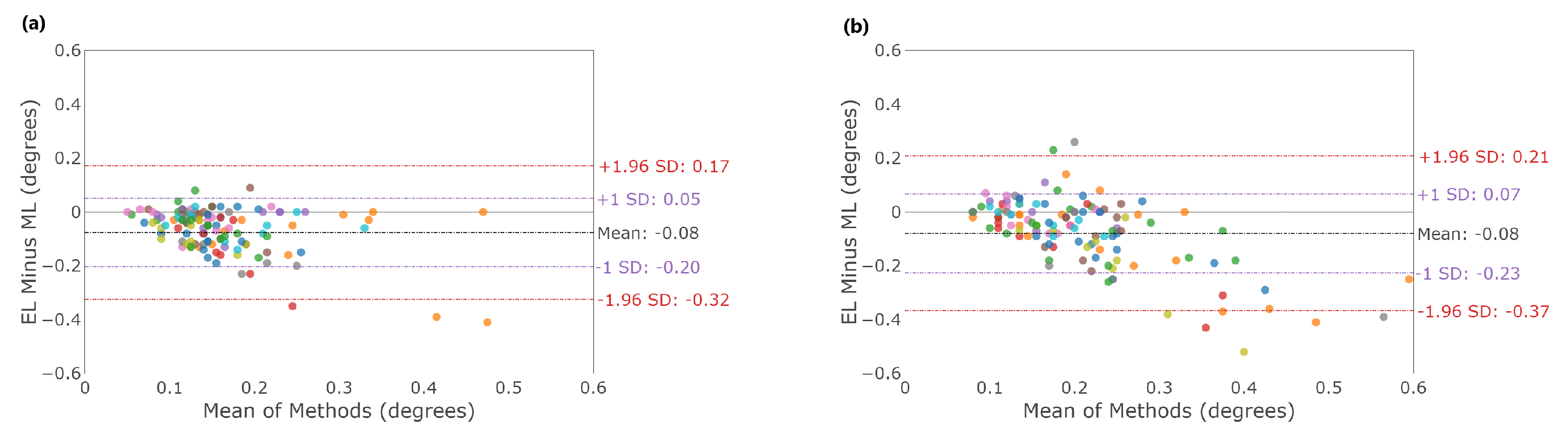

3.1. Fixation Stability

3.2. Saccades

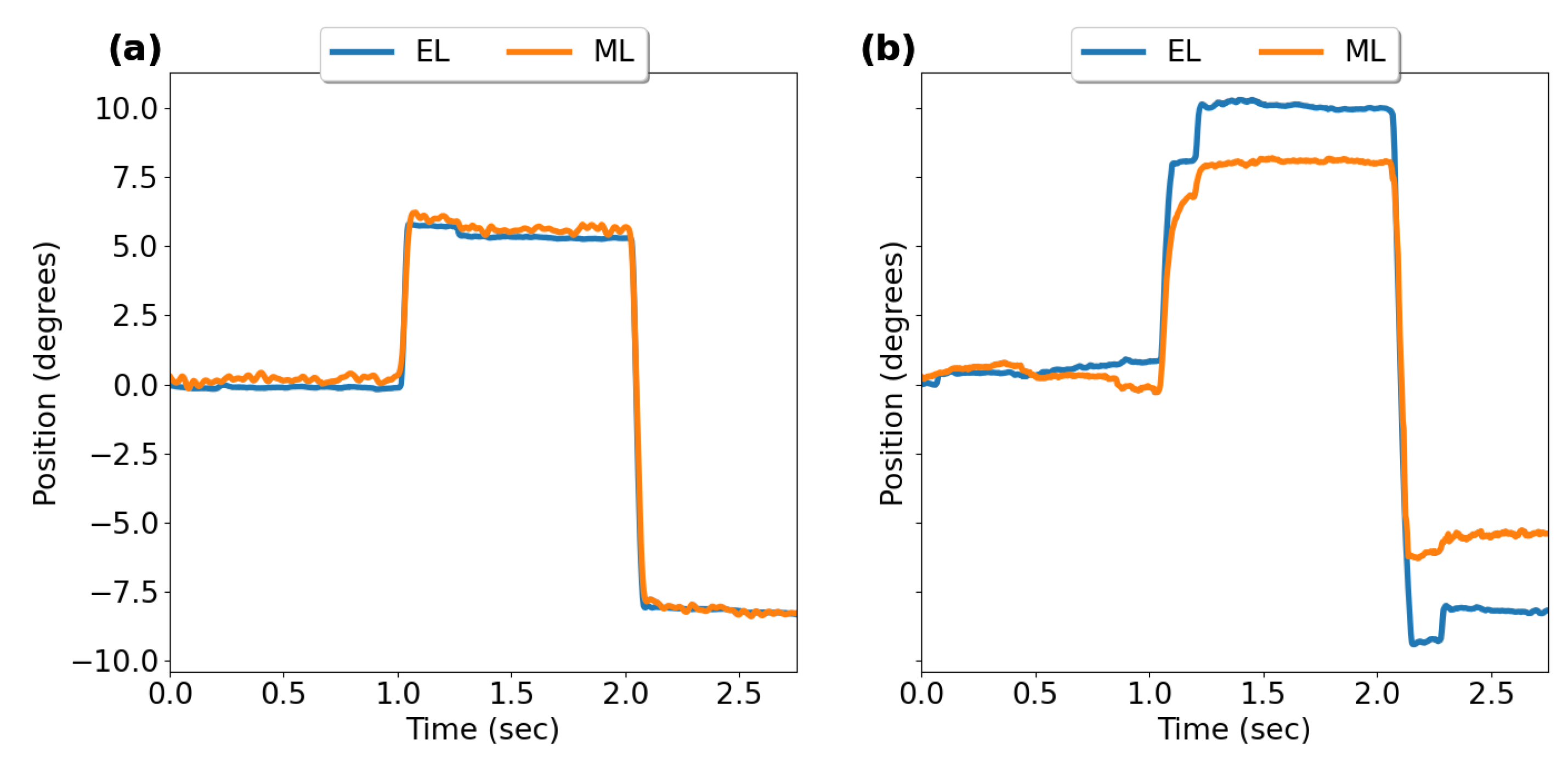

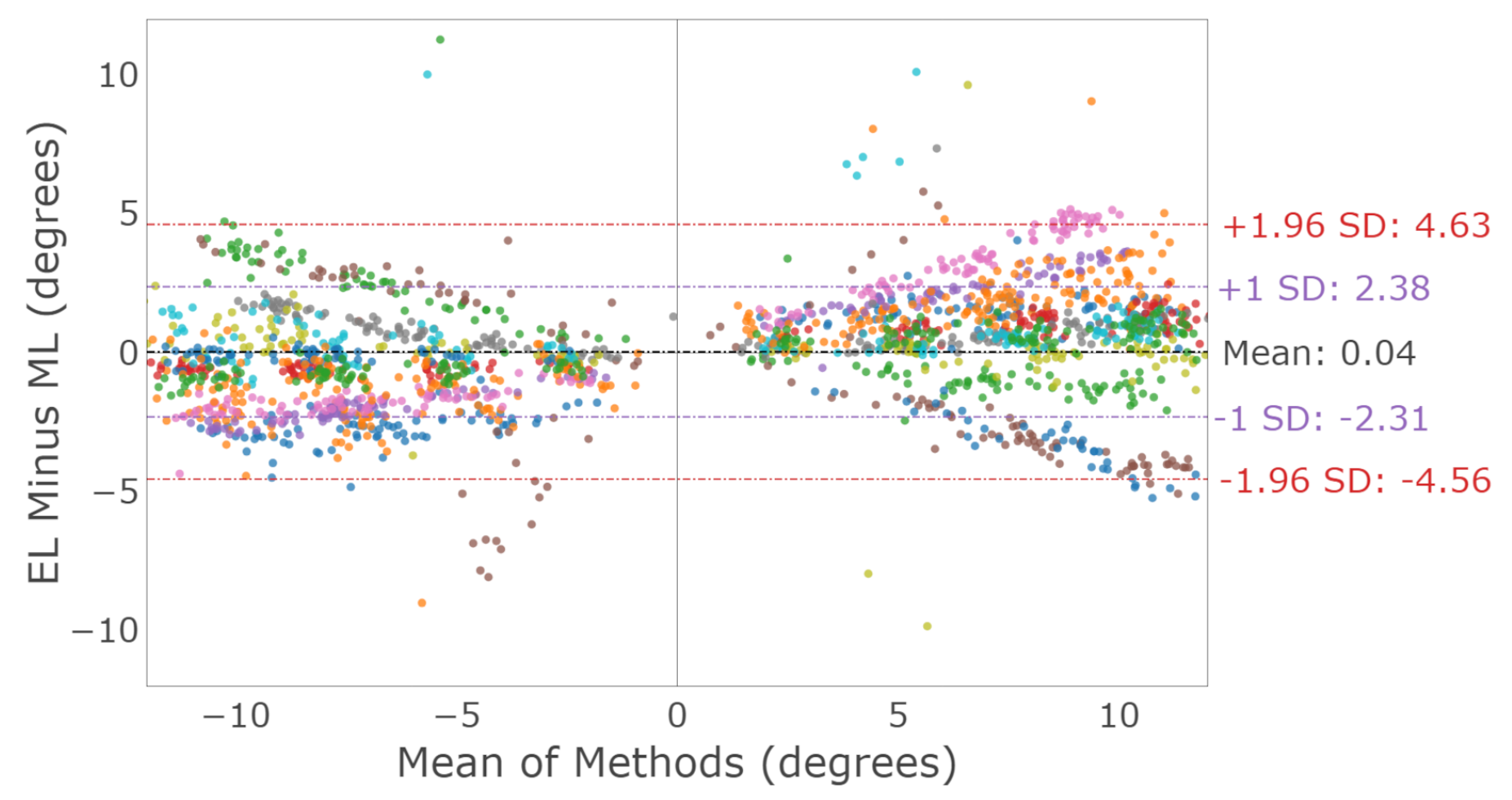

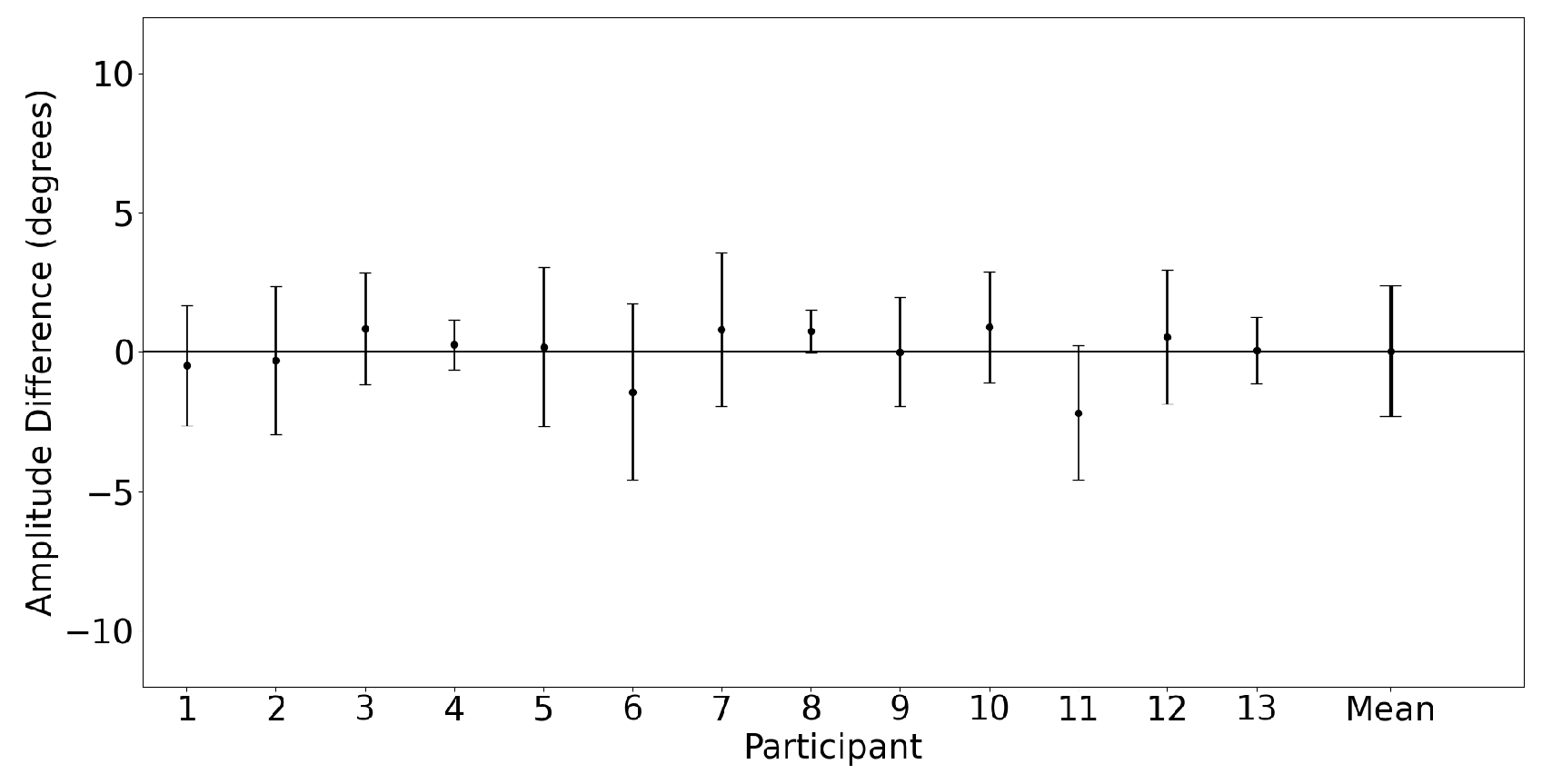

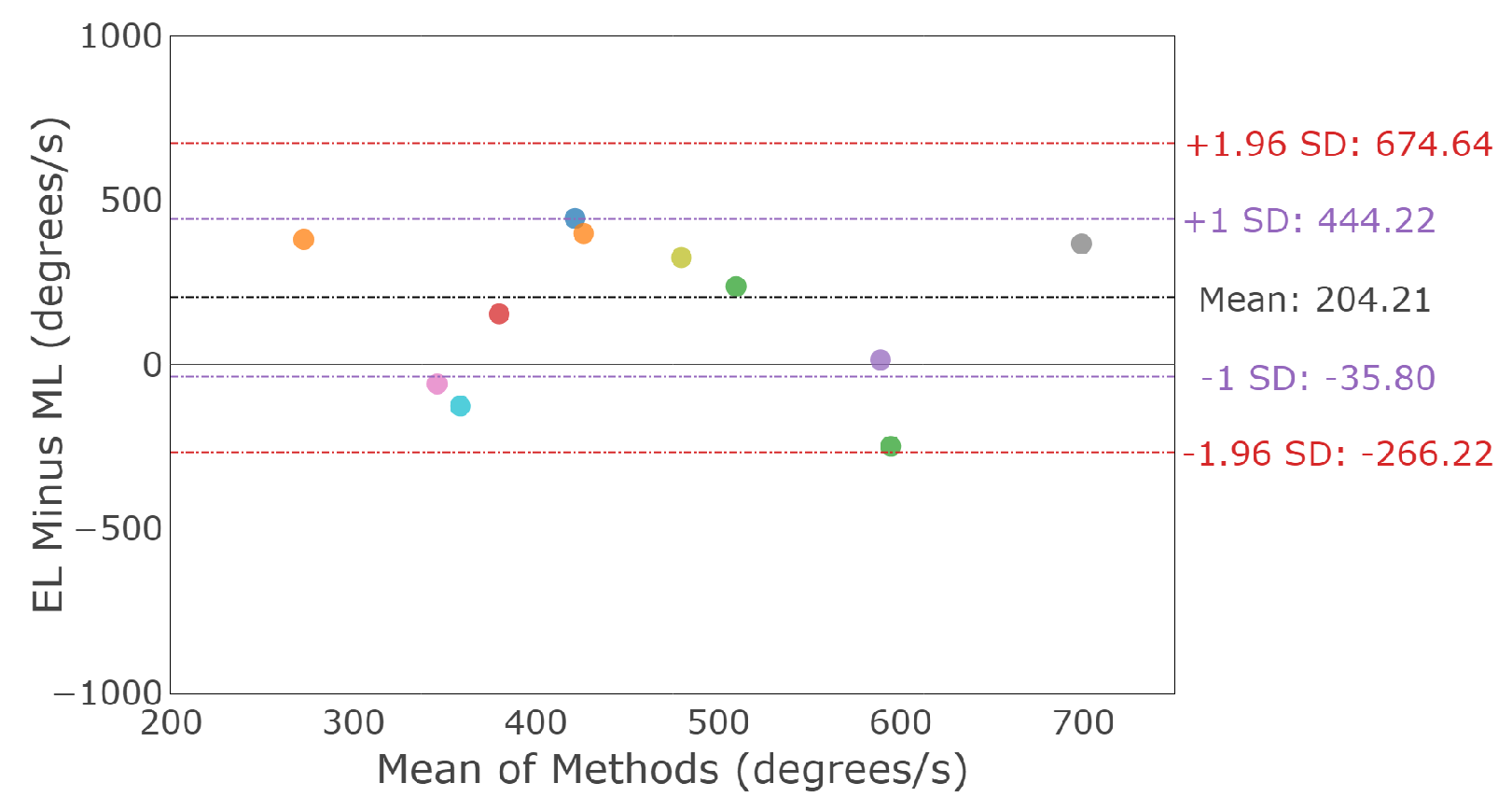

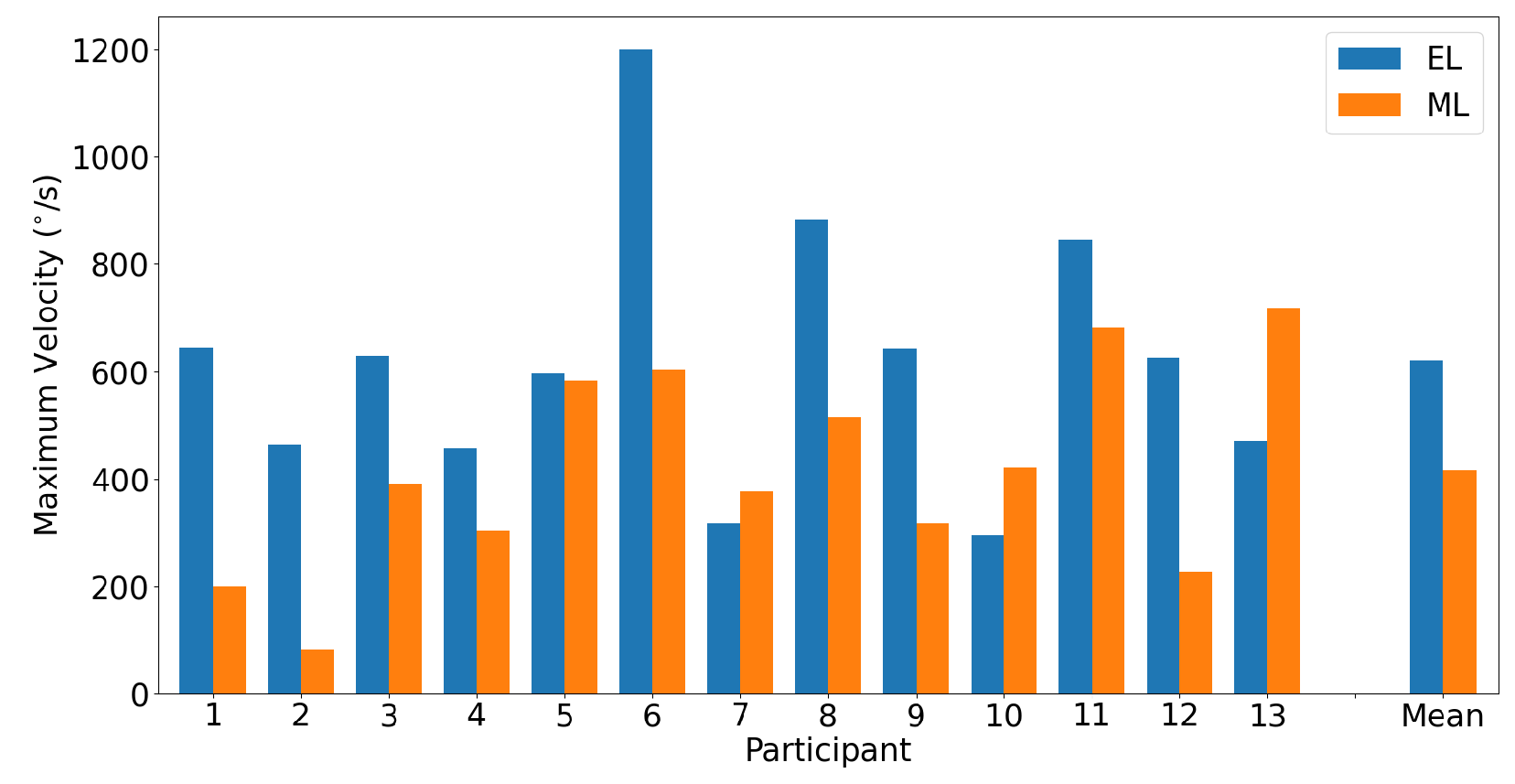

3.2.1. Horizontal Saccades

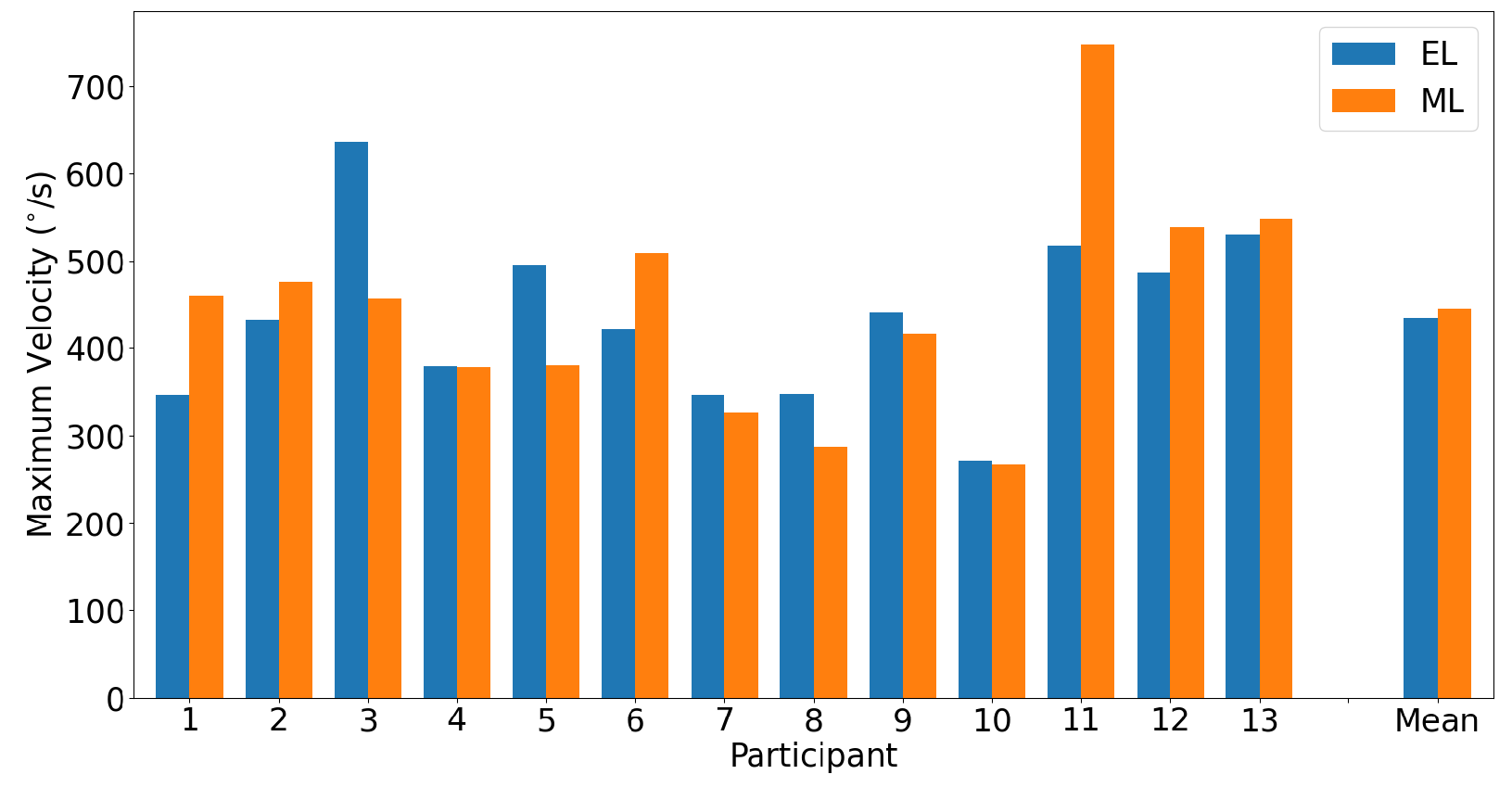

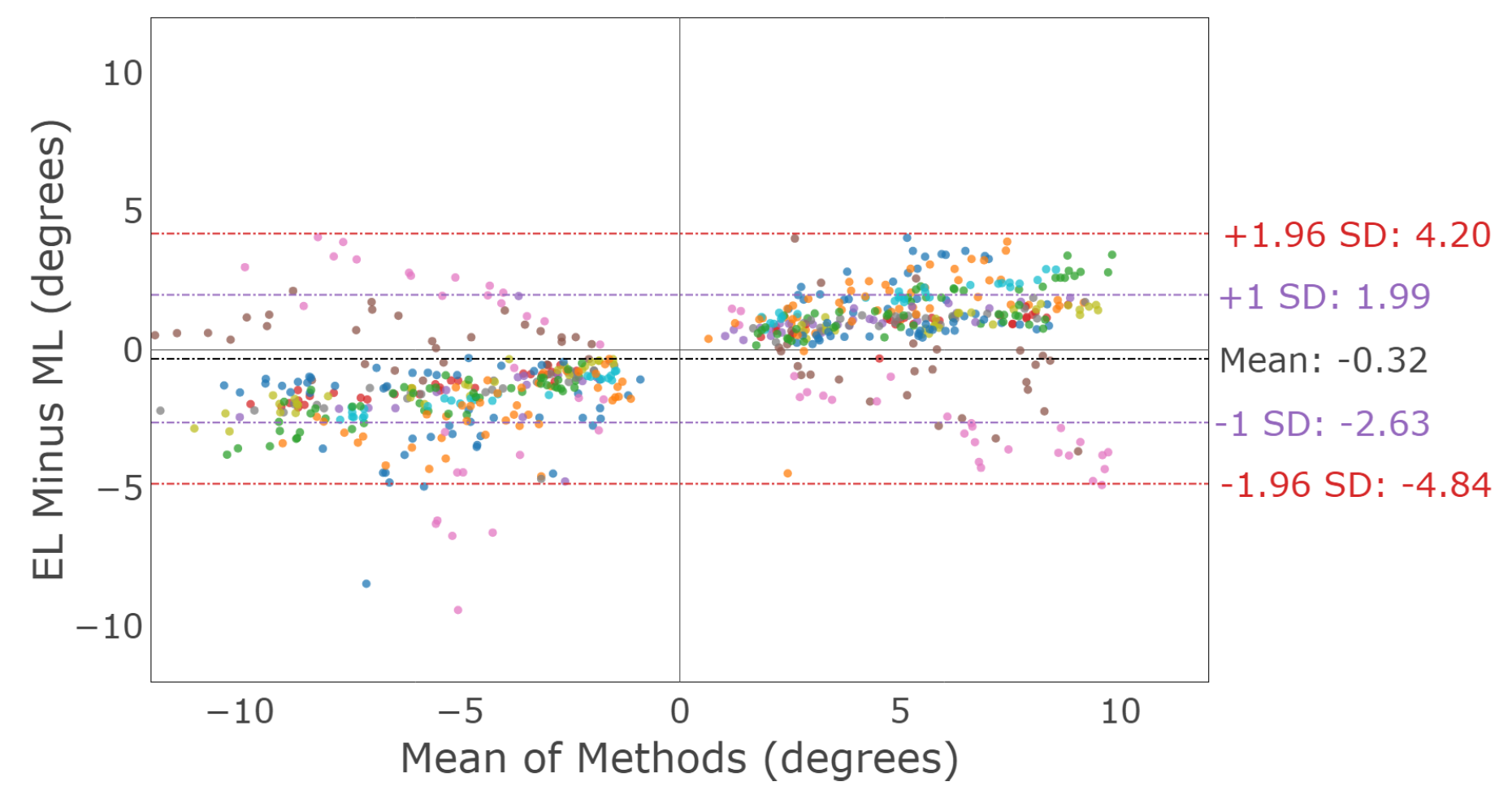

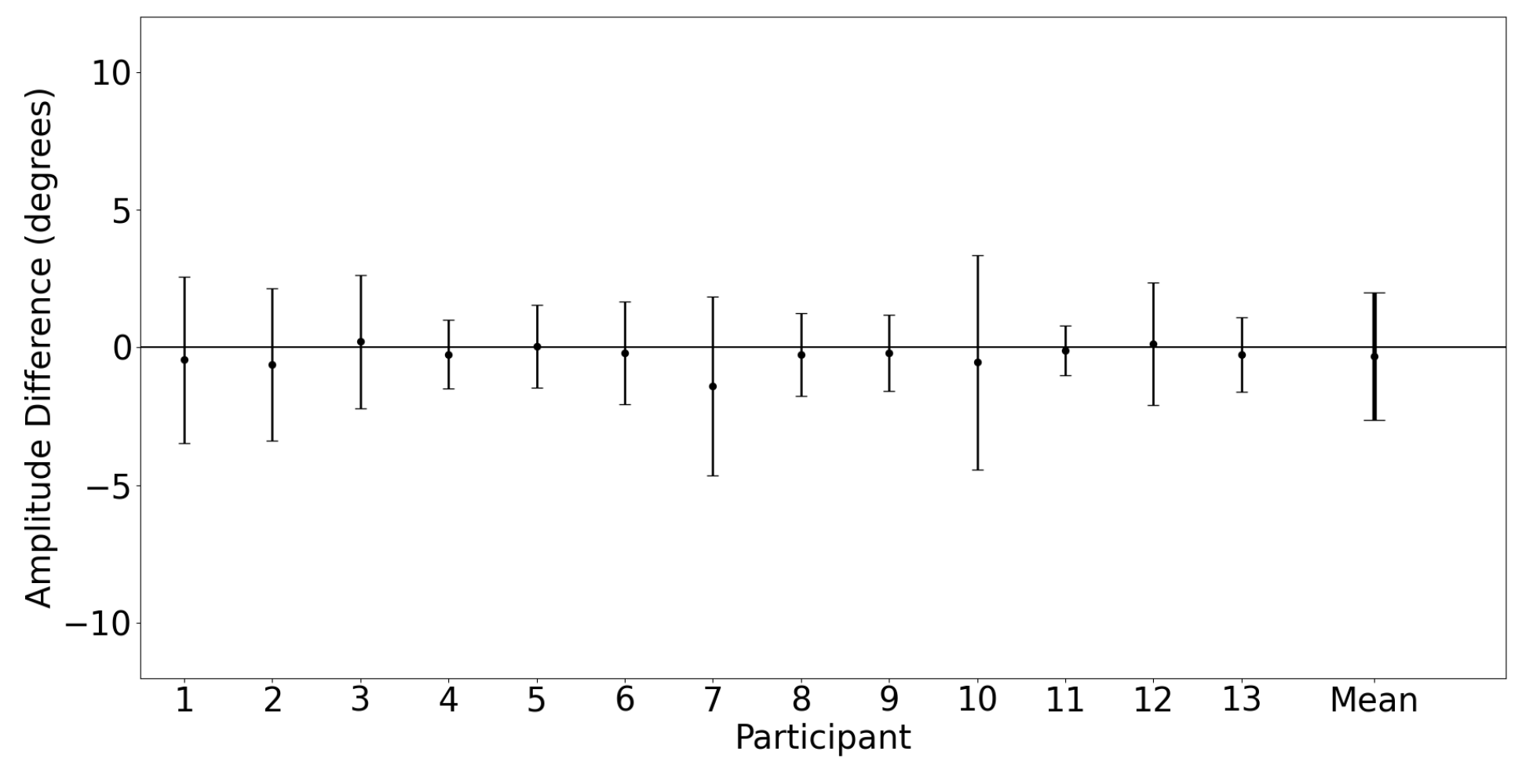

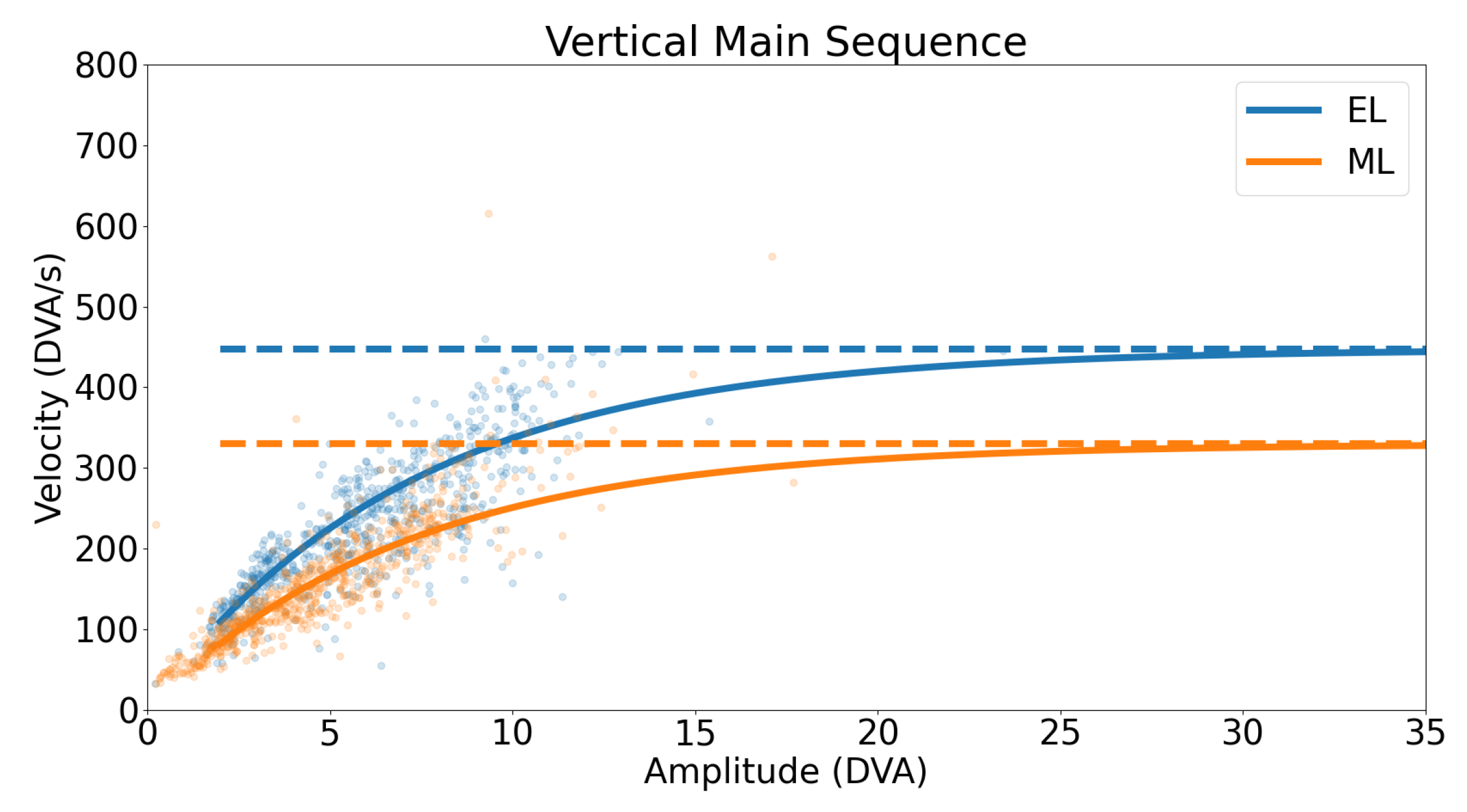

3.2.2. Vertical Saccades

3.2.3. Saccade Conjugacy

3.3. Smooth Pursuit

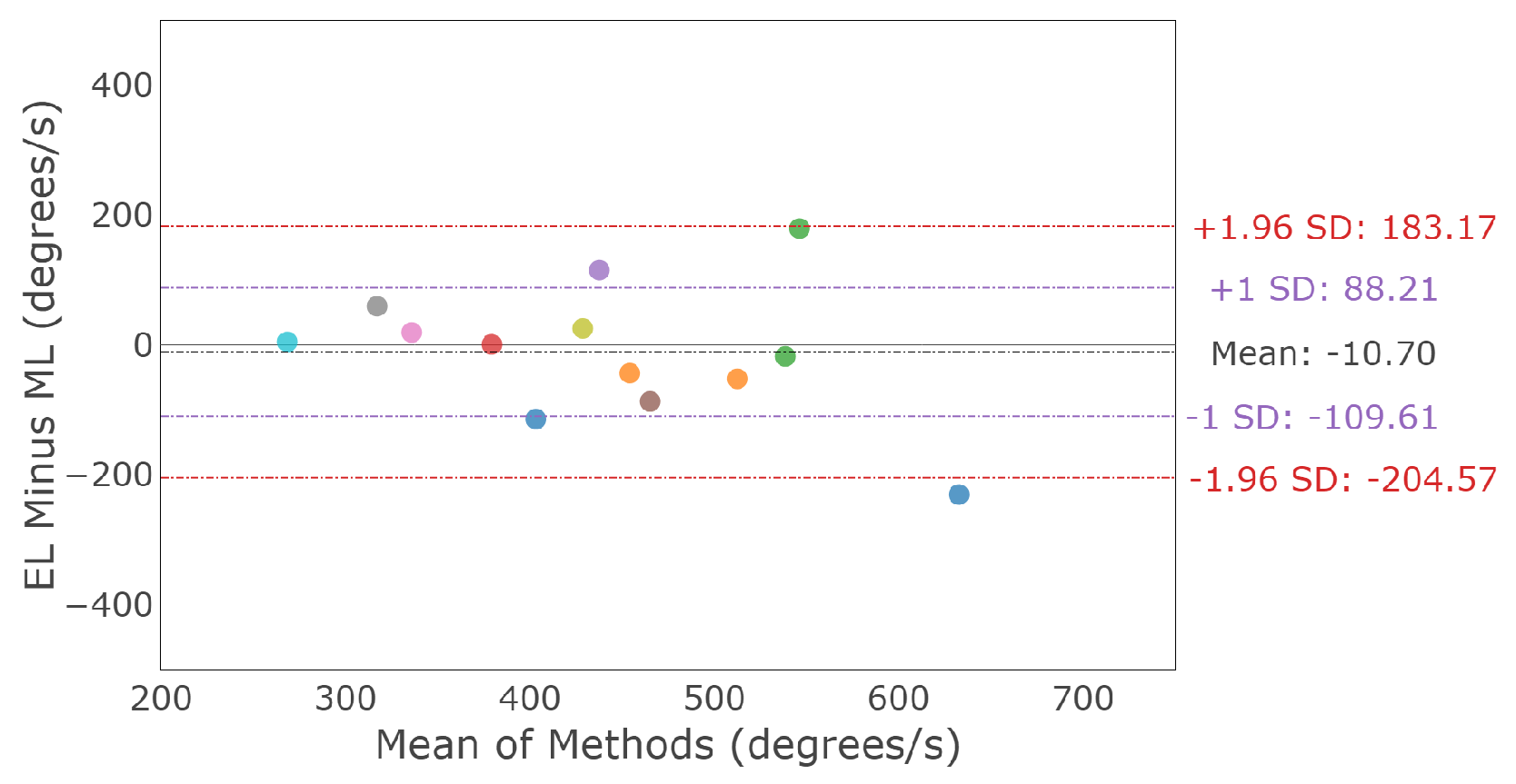

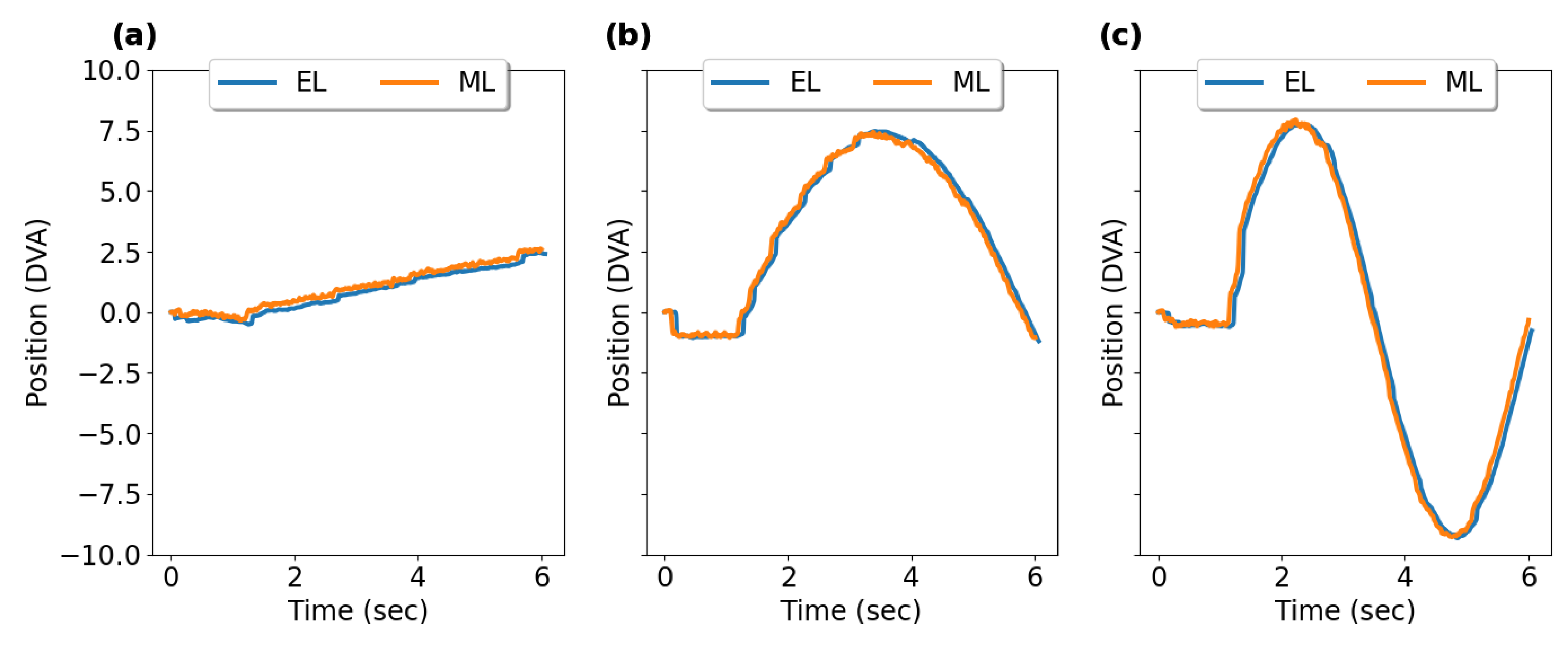

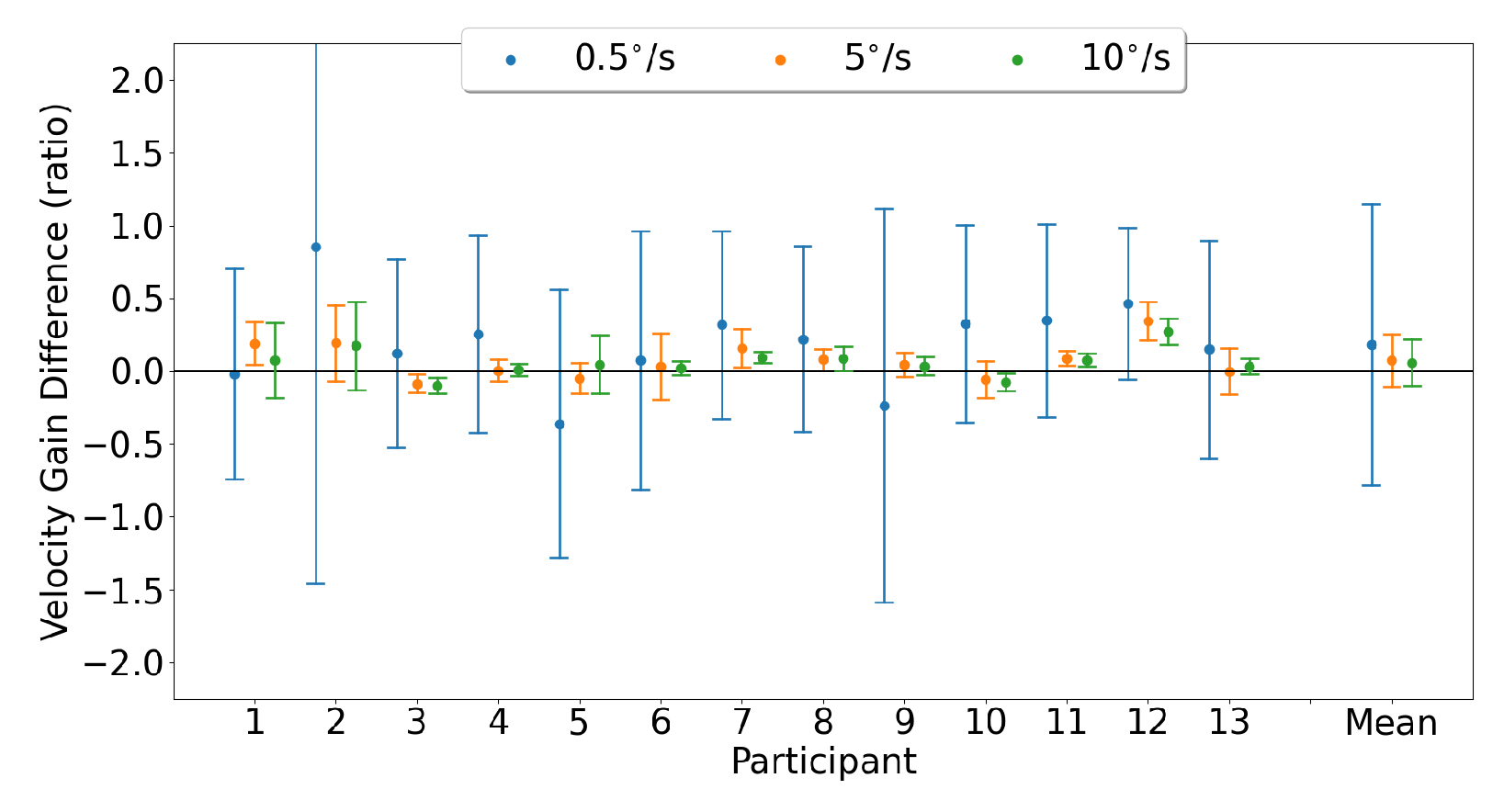

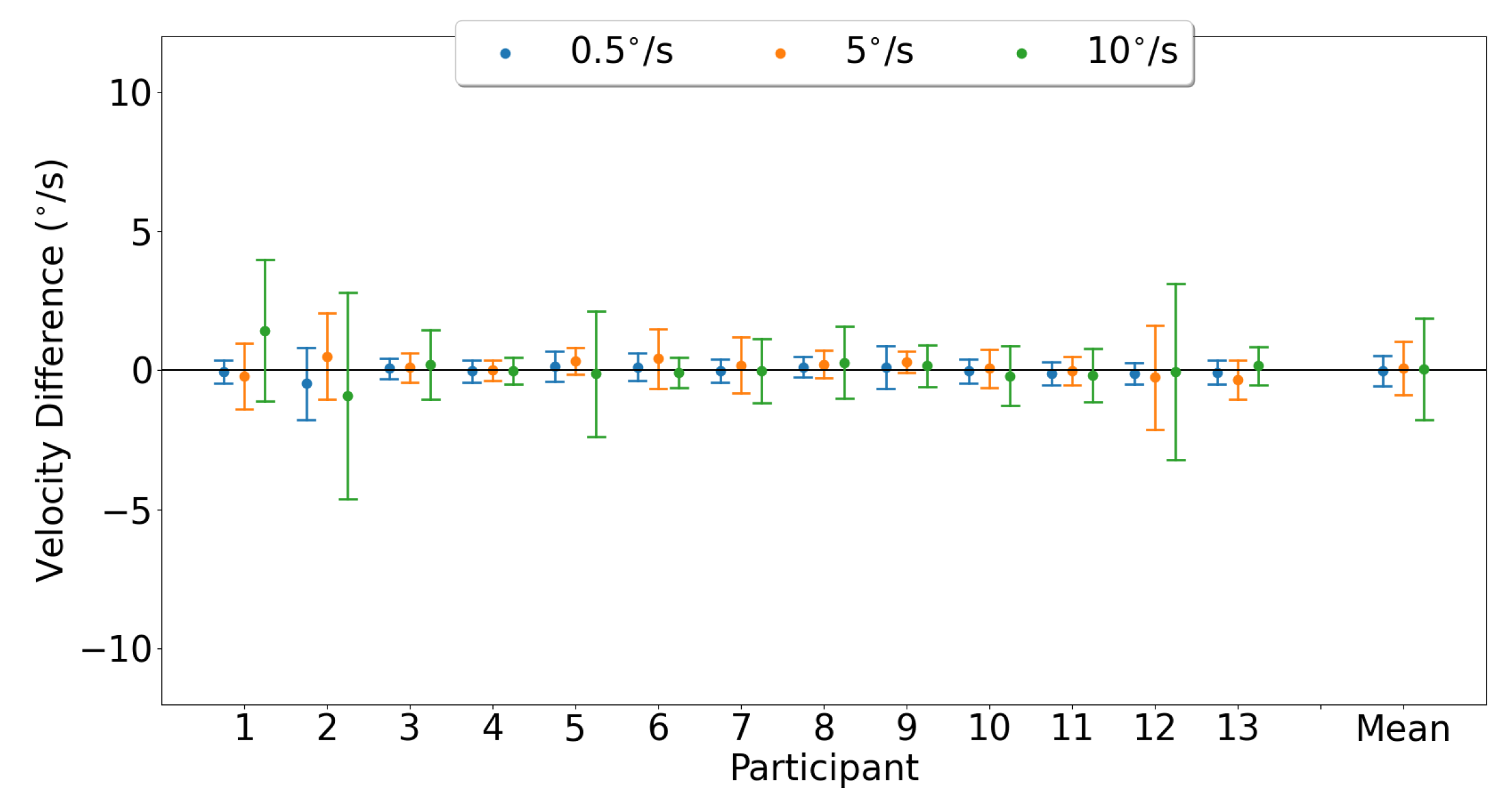

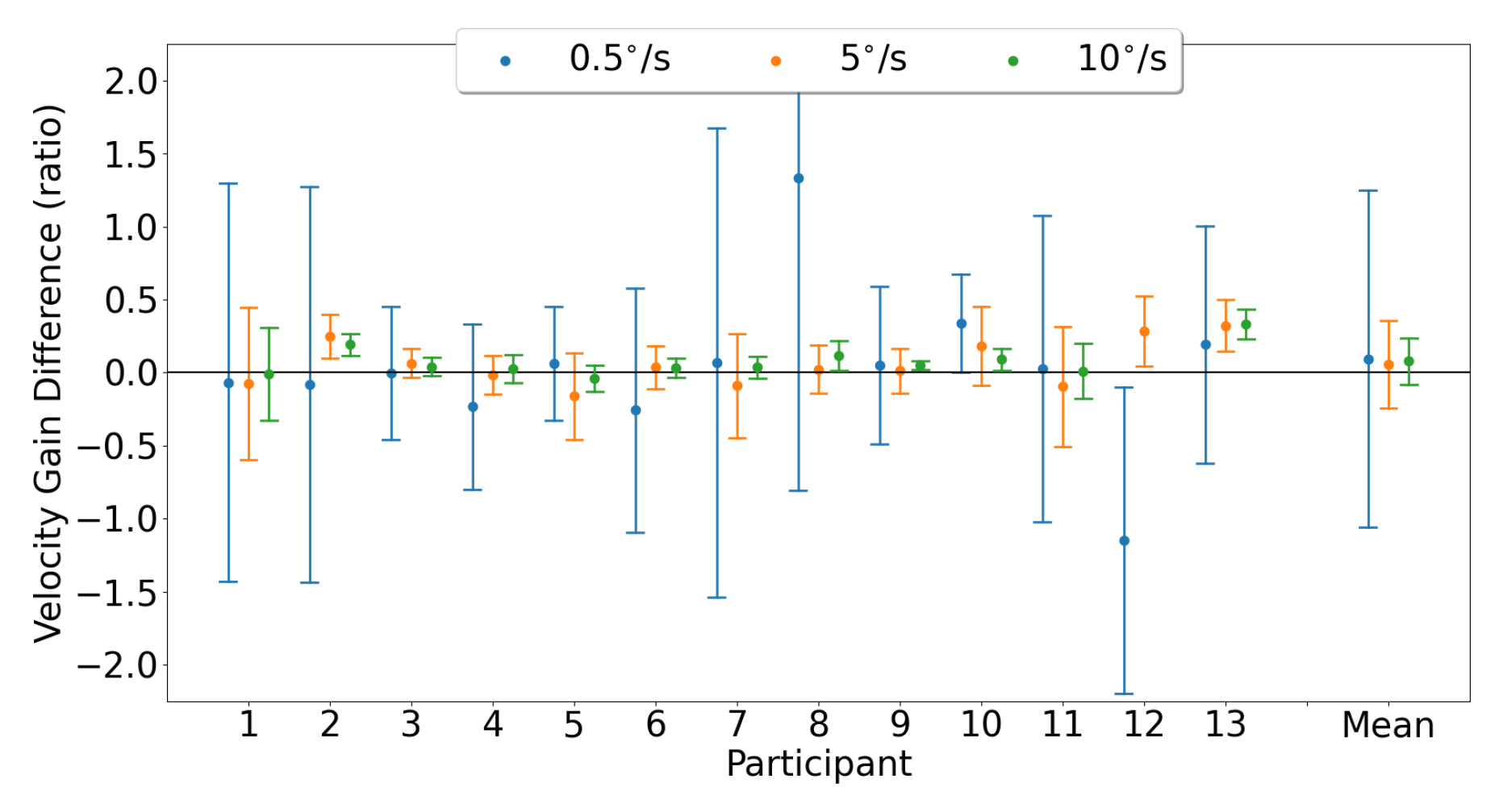

3.3.1. Horizontal Smooth Pursuit

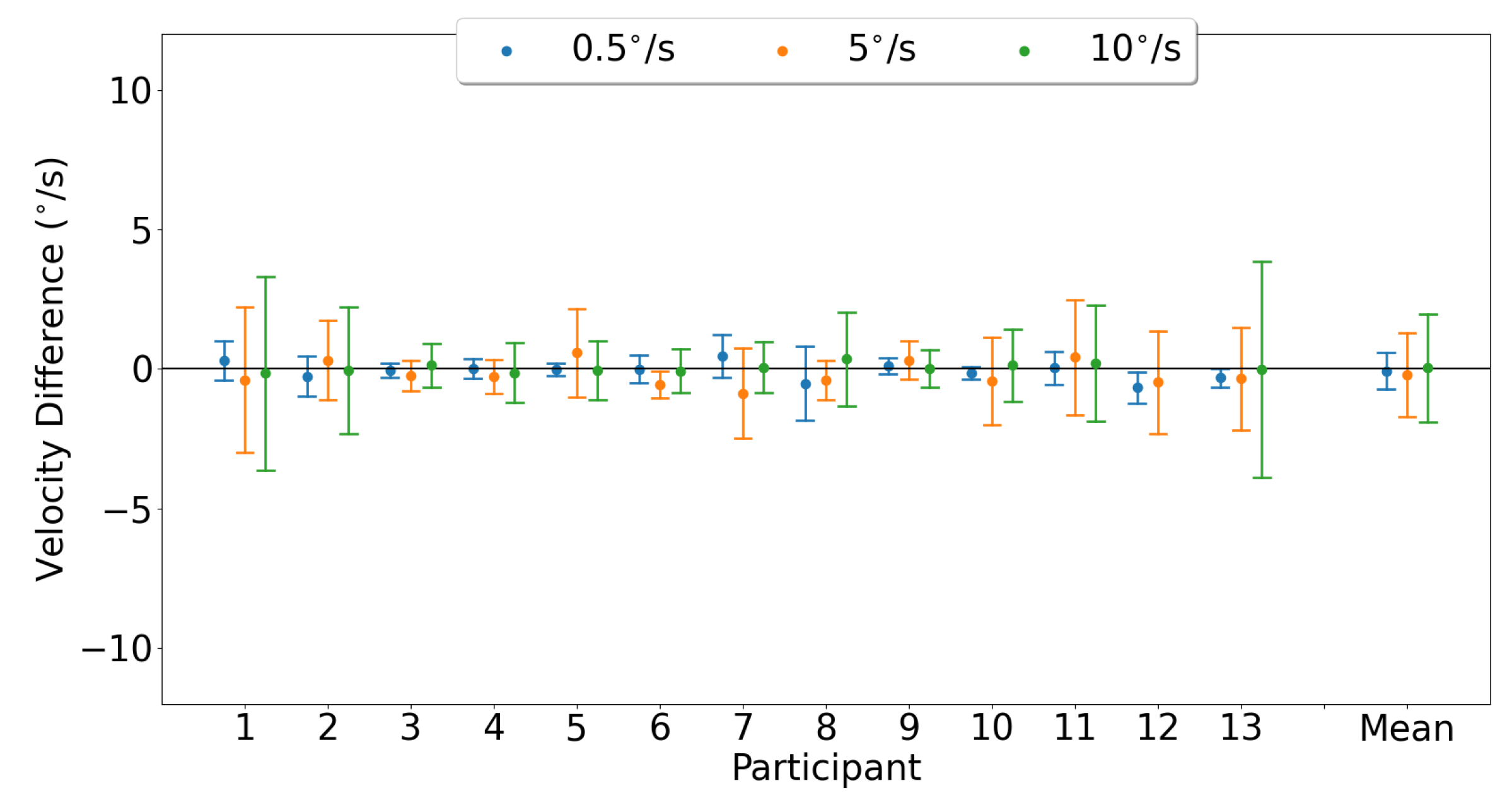

3.3.2. Vertical Smooth Pursuit

3.3.3. Smooth Pursuit Conjugacy

4. Discussion

4.1. Fixation

4.2. Saccades

4.3. Smooth Pursuit

4.4. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SD | standard deviation |
| EL | EyeLink |
| ML | MindLink |


| Task | Direction | # of Trials | Target Amplitude |
|---|---|---|---|
| Saccades | Horizontal | 175 | 3–25° (2.9, 5.8, 8.6, 11.4, 12.2, 14.4, 17.3, 20.1, 24.5) |
| Saccades | Vertical | 60 | 0.5–17° (0.5, 2.9, 5.7, 6.4, 8.6, 11.9, 17.2) |
| Smooth Pursuit | Horizontal | 30 | 0.01, 0.1, 0.2 Hz (0.5, 5, 10 °/s) |
| Smooth Pursuit | Vertical | 30 | 0.01, 0.1, 0.2 Hz (0.5, 5, 10 °/s) |

| Eye Tracker | Measure | n | Mean (°) | SD (°) |
|---|---|---|---|---|
| EyeLink | SD (horizontal) | 13 | 0.13 | 0.05 |
| EyeLink | SD (vertical) | 13 | 0.18 | 0.04 |
| EyeLink | logBCEA | 13 | −0.92 | 0.21 |
| MindLink | SD (horizontal) | 13 | 0.21 | 0.11 |
| MindLink | SD (vertical) | 13 | 0.25 | 0.10 |
| MindLink | logBCEA | 13 | −0.63 | 0.30 |
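
The fixation measures in this table are the SD of horizontal and vertical gaze position and logBCEA. As a rough illustration of how such values are commonly derived, the sketch below computes a bivariate contour ellipse area from gaze samples in degrees with the standard formula BCEA = 2kπ·σx·σy·√(1 − ρ²), where k = −ln(1 − P). The function names `bcea` and `log_bcea`, the 68.2% coverage default, and the sample values are assumptions made for this example; they are not taken from the study.

```python
import math


def bcea(x_deg, y_deg, coverage=0.682):
    """Bivariate contour ellipse area (deg^2) for paired gaze samples.

    coverage is the proportion of samples the ellipse should contain;
    0.682 is an assumed, commonly used default.
    """
    n = len(x_deg)
    if n != len(y_deg) or n < 2:
        raise ValueError("need two equal-length series with at least 2 samples")

    mean_x = sum(x_deg) / n
    mean_y = sum(y_deg) / n

    # Sample variances and covariance (n - 1 in the denominator).
    var_x = sum((x - mean_x) ** 2 for x in x_deg) / (n - 1)
    var_y = sum((y - mean_y) ** 2 for y in y_deg) / (n - 1)
    cov_xy = sum((x - mean_x) * (y - mean_y)
                 for x, y in zip(x_deg, y_deg)) / (n - 1)

    sd_x, sd_y = math.sqrt(var_x), math.sqrt(var_y)
    rho = cov_xy / (sd_x * sd_y)        # Pearson correlation of x and y
    k = -math.log(1.0 - coverage)       # scaling factor for the chosen coverage

    # max() guards against a tiny negative value from floating-point rounding.
    return 2.0 * k * math.pi * sd_x * sd_y * math.sqrt(max(0.0, 1.0 - rho ** 2))


def log_bcea(x_deg, y_deg, coverage=0.682):
    """Base-10 logarithm of the BCEA."""
    return math.log10(bcea(x_deg, y_deg, coverage))


# Illustrative gaze samples in degrees (made up for this example).
x = [0.05, -0.10, 0.12, -0.03, 0.08, -0.15]
y = [0.10, -0.05, -0.12, 0.20, -0.08, 0.02]
print(round(log_bcea(x, y), 2))
```

Taking the logarithm is a common way to reduce the skew of BCEA values before averaging across participants. The function assumes both series vary; a constant series would give a zero SD and a division error.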

| Saccade Direction | Eye Tracker | Mean of Difference | t-Statistic | p-Value | df |
|---|---|---|---|---|---|
| Horizontal | EyeLink | −0.34 | −4.12 | 0.002 | 12 |
| Horizontal | MindLink | −1.29 | −4.09 | 0.002 | 12 |
| Vertical | EyeLink | −0.28 | −4.04 | 0.002 | 12 |
| Vertical | MindLink | −1.40 | −37.3 | 0.003 | 12 |
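
Each row of this table pairs a mean difference with a t-statistic on 12 degrees of freedom, which is the form produced by a t-test on per-participant differences with n = 13. The sketch below shows one way such a statistic can be computed. The function name `paired_t`, the choice of a paired test against zero, and the sample values are assumptions for illustration only, not the study's analysis code or data.

```python
import math


def paired_t(measured, reference):
    """t-test on paired differences (measured - reference) against zero.

    Returns (mean difference, t-statistic, degrees of freedom).
    """
    if len(measured) != len(reference) or len(measured) < 2:
        raise ValueError("need two equal-length series with at least 2 pairs")

    diffs = [m - r for m, r in zip(measured, reference)]
    n = len(diffs)
    mean_diff = sum(diffs) / n

    # Sample SD of the differences, then the standard error of their mean.
    sd_diff = math.sqrt(sum((d - mean_diff) ** 2 for d in diffs) / (n - 1))
    std_err = sd_diff / math.sqrt(n)

    return mean_diff, mean_diff / std_err, n - 1


# Illustrative saccade amplitudes in degrees (made up for this example):
# what one tracker measured versus the amplitude of the target step.
measured = [9.6, 10.1, 9.2, 9.8, 9.5]
target = [10.0, 10.0, 10.0, 10.0, 10.0]
mean_diff, t_stat, df = paired_t(measured, target)
print(round(mean_diff, 2), round(t_stat, 2), df)
```

A negative mean difference in this setup would indicate that measured amplitudes fall short of the target amplitude. The p-value is then read from the t distribution with the returned degrees of freedom.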

| Eye Tracker | Direction | Measure | n | 0.5: Mean | 0.5: SD | 5: Mean | 5: SD | 10: Mean | 10: SD |
|---|---|---|---|---|---|---|---|---|---|
| EyeLink | Horizontal | Speed | 13 | 0.58 | 0.20 | 4.72 | 0.20 | 9.85 | 0.74 |
| EyeLink | Horizontal | Gain | 13 | 1.00 | 0.37 | 0.95 | 0.04 | 0.90 | 0.07 |
| EyeLink | Vertical | Speed | 13 | 0.42 | 0.10 | 3.26 | 0.29 | 7.32 | 1.10 |
| EyeLink | Vertical | Gain | 13 | 0.67 | 0.20 | 0.65 | 0.06 | 0.67 | 0.10 |
| MindLink | Horizontal | Speed | 13 | 0.52 | 0.15 | 4.36 | 0.65 | 9.22 | 1.10 |
| MindLink | Horizontal | Gain | 13 | 0.84 | 0.27 | 0.87 | 0.13 | 0.85 | 0.10 |
| MindLink | Vertical | Speed | 13 | 0.49 | 0.19 | 2.86 | 0.55 | 6.44 | 1.24 |
| MindLink | Vertical | Gain | 13 | 0.78 | 0.35 | 0.57 | 0.11 | 0.59 | 0.11 |

| Effect | DFn | DFd | F | p | |
|---|---|---|---|---|---|
| Eye Tracker | 1.05 | 12.64 | 1.21 | 0.30 | 0.01 |
| Pursuit Speed | 1.21 | 14.58 | 0.77 | 0.42 | 0.01 |
| Eye Tracker : Pursuit Speed | 1.18 | 14.13 | 0.63 | 0.47 | 0.03 |

| Effect | DFn | DFd | F | p | |
|---|---|---|---|---|---|
| Eye Tracker | 1.05 | 11.50 | 0.87 | 0.38 | 0.01 |
| Pursuit Speed | 1.07 | 11.74 | 0.02 | 0.92 | 0.0004 |
| Eye Tracker : Pursuit Speed | 1.17 | 12.88 | 0.85 | 0.39 | 0.03 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zafar, A.; Calderon, C.M.; Yeboah, A.M.; Dalton, K.; Irving, E.; Niechwiej-Szwedo, E. Investigation of Camera-Free Eye-Tracking Glasses Compared to a Video-Based System. Sensors 2023, 23, 7753. https://doi.org/10.3390/s23187753
