Three-Dimensional (3D) Visualization under Extremely Low Light Conditions Using Kalman Filter

Abstract

1. Introduction

2. Theory

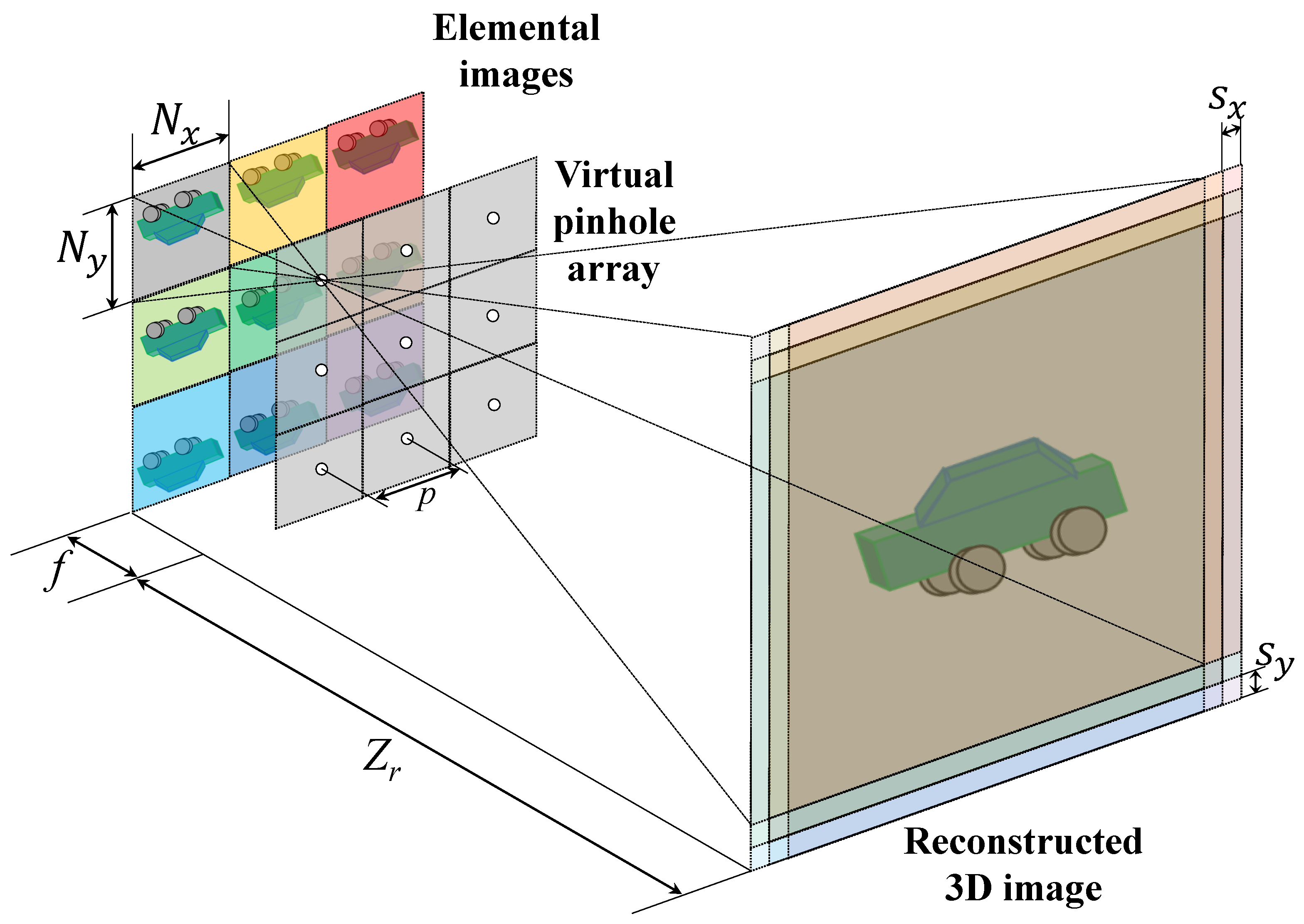

2.1. Volumetric Computational Reconstruction (VCR)

2.2. Photon-Counting Integral Imaging

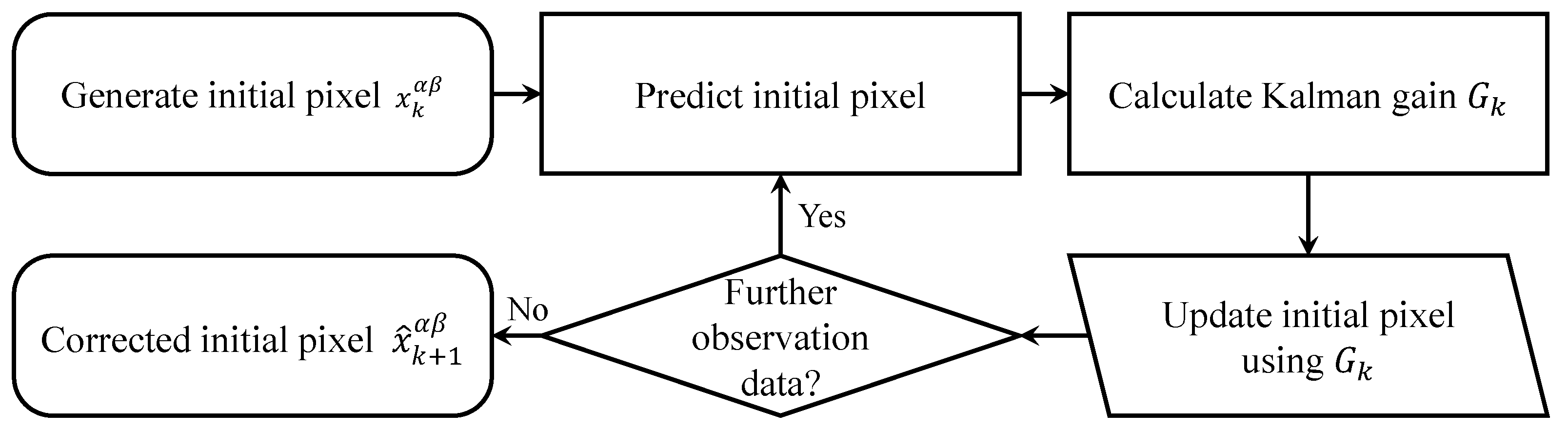

2.3. Kalman Filter
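
As a point of reference for this section, the following is a minimal sketch of a generic scalar Kalman filter in its standard textbook predict/update form. It is not taken from the paper and should not be read as the authors' implementation. It assumes, purely for illustration, that one pixel intensity is constant across repeated noisy observations. The function name `kalman_1d` and the parameters `process_var`, `meas_var`, `x0`, and `p0` are hypothetical.

```python
import random


def kalman_1d(measurements, process_var=1e-5, meas_var=0.1, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a (nearly) constant state.

    Illustrative assumptions, not values from the paper:
      process_var -- variance of the process noise (Q)
      meas_var    -- variance of the measurement noise (R)
      x0, p0      -- initial state estimate and its error variance
    Returns the list of posterior estimates, one per measurement.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the state model is x_k = x_{k-1}, so only the
        # error variance grows by the process noise.
        p = p + process_var
        # Update: blend prediction and measurement using the Kalman gain.
        k = p / (p + meas_var)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates


if __name__ == "__main__":
    rng = random.Random(0)
    true_intensity = 0.5  # hypothetical normalized pixel intensity
    noisy = [true_intensity + rng.gauss(0.0, 0.3) for _ in range(200)]
    filtered = kalman_1d(noisy, meas_var=0.09)
    print("last raw sample:     ", round(noisy[-1], 3))
    print("last filtered value: ", round(filtered[-1], 3))
```

With `process_var` set to zero the filter reduces to a running average weighted against the prior, which is why the estimate settles as more observations arrive. A larger `process_var` makes it track changes faster at the cost of passing more noise.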

3. Image Processing and Experimental Setup

3.1. Kalman Estimation before Reconstruction (KEBR)

3.2. Kalman Estimation after Reconstruction (KEAR)

3.3. Experimental Setup

4. Experimental Results and Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Condition | Value |
|---|---|---|
| Model | | GoPro HERO6 Black |
| Resolution | | 2000 × 1500 |
| Focal length (f) | | 3 mm |
| Sensor size | | 5.9 mm × 4.4 mm |
| Shutter speed | Normal | 1/125 s |
| | Extremely low-light | 1/2000 s |
| ISO | Normal | 1600 |
| | Extremely low-light | 200 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, H.-W.; Cho, M.; Lee, M.-C. Three-Dimensional (3D) Visualization under Extremely Low Light Conditions Using Kalman Filter. Sensors 2023, 23, 7571. https://doi.org/10.3390/s23177571
