Effects of Data Augmentation on the Nine-Axis IMU-Based Orientation Estimation Accuracy of a Recurrent Neural Network

Abstract

1. Introduction

2. Materials and Methods

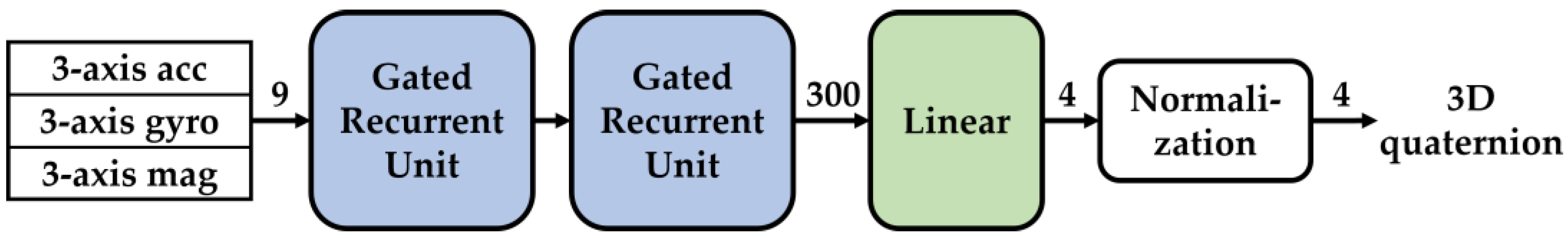

2.1. Three-Dimensional Orientation Estimation Neural Network

2.2. Data Augmentation Techniques

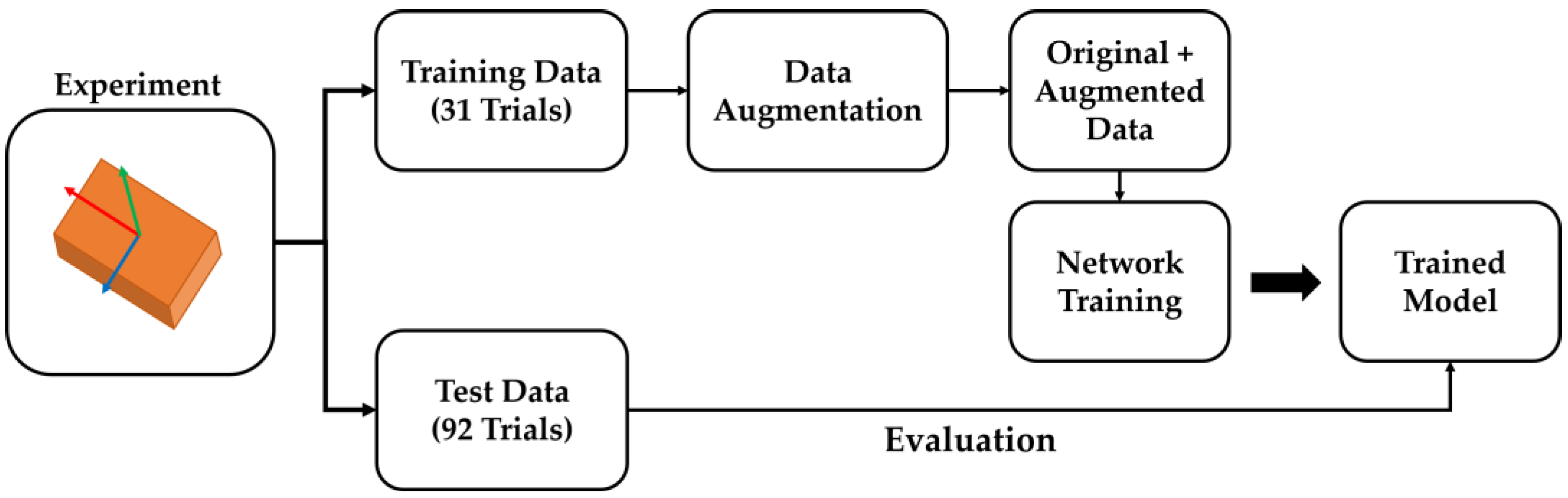

3. Experiment and Training Scenario

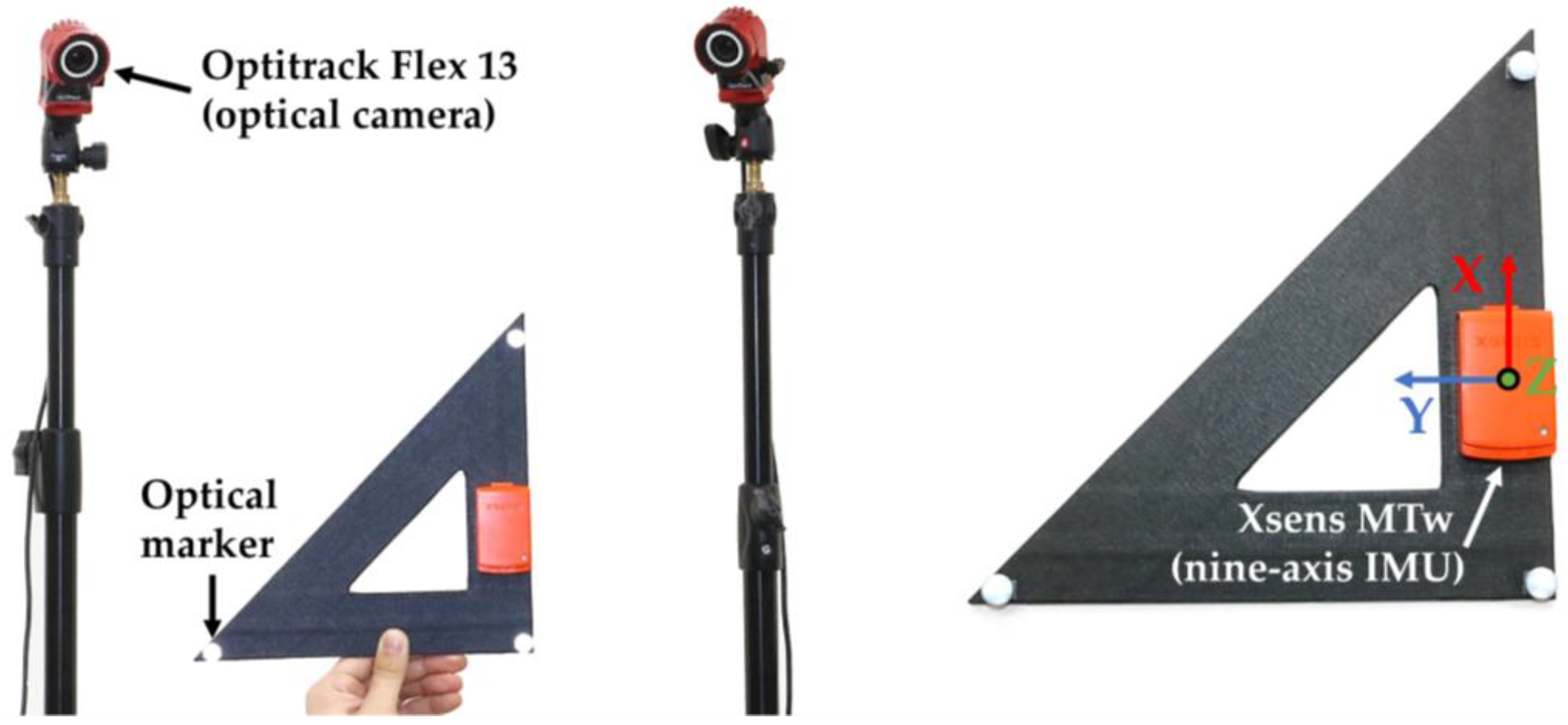

3.1. Experiment

- Rotation: only rotation is performed while maintaining the position of the sensor as much as possible.

- Translation: only translation is performed while maintaining the orientation of the sensor as much as possible.

- Combined: rotation and translation are performed randomly.

3.2. Training Scenario

- Original: An RNN model trained with the original training dataset, without any augmentation.

- Rotation: An RNN model trained with a training dataset in which only rotation augmentation was applied.

- Bias: An RNN model trained with a training dataset in which only bias augmentation was applied.

- Noise: An RNN model trained with a training dataset in which only noise augmentation was applied.

- Rotation and Bias: An RNN model trained with a training dataset in which both rotation and bias augmentation were simultaneously applied.

- Rotation and Noise: An RNN model trained with a training dataset in which both rotation and noise augmentation were simultaneously applied.

- Bias and Noise: An RNN model trained with a training dataset in which both bias and noise augmentation were simultaneously applied.

- All: An RNN model trained with a training dataset in which all three augmentations were simultaneously applied.
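The augmentations behind these scenarios can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. Each training sequence is assumed to be a list of frames holding gyroscope, accelerometer, and magnetometer readings plus a unit reference quaternion (w, x, y, z) that maps the sensor frame to the global frame. Rotation augmentation is assumed to apply one random, constant virtual mounting rotation per sequence. Bias augmentation is assumed to add one constant random offset to the gyroscope. Noise augmentation is assumed to add zero-mean Gaussian noise to every channel. The helper names and the magnitudes `BIAS_STD` and `NOISE_STD` are hypothetical.

```python
import itertools
import math
import random

Vec = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # (w, x, y, z), unit norm
Frame = tuple[Vec, Vec, Vec, Quat]        # gyro, accel, mag, reference orientation (sensor-to-global)

# Hypothetical magnitudes; the values used in the paper are not given in this section.
BIAS_STD = 0.02   # std of the constant gyroscope bias (rad/s)
NOISE_STD = 0.01  # std of the white noise added to every channel (same units as the channel)


def quat_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def quat_conj(q: Quat) -> Quat:
    """Conjugate, equal to the inverse for a unit quaternion."""
    return (q[0], -q[1], -q[2], -q[3])


def rotate(q: Quat, v: Vec) -> Vec:
    """Rotate vector v by unit quaternion q, i.e. q * v * q^-1."""
    _, x, y, z = quat_mul(quat_mul(q, (0.0, *v)), quat_conj(q))
    return (x, y, z)


def random_quat(rng: random.Random) -> Quat:
    """Uniformly distributed random unit quaternion (normalized 4-D Gaussian sample)."""
    q = [rng.gauss(0.0, 1.0) for _ in range(4)]
    n = math.sqrt(sum(c * c for c in q))
    return (q[0] / n, q[1] / n, q[2] / n, q[3] / n)


def augment(seq: list[Frame], kinds: set[str], rng: random.Random) -> list[Frame]:
    """Apply the requested augmentations ("rotation", "bias", "noise") to one sequence."""
    out = list(seq)
    if "rotation" in kinds:
        # One constant virtual mounting rotation per sequence: sensor vectors are
        # re-expressed in the new sensor frame, and the reference orientation is
        # updated so that every vector still maps to the same global vector.
        qm = random_quat(rng)
        qm_inv = quat_conj(qm)
        out = [(rotate(qm_inv, g), rotate(qm_inv, a), rotate(qm_inv, m), quat_mul(q, qm))
               for g, a, m, q in out]
    if "bias" in kinds:
        # One constant gyroscope offset per sequence; the reference is unchanged.
        b = [rng.gauss(0.0, BIAS_STD) for _ in range(3)]
        out = [((g[0] + b[0], g[1] + b[1], g[2] + b[2]), a, m, q) for g, a, m, q in out]
    if "noise" in kinds:
        # Independent zero-mean Gaussian noise on every sample and channel.
        def jitter(v: Vec) -> Vec:
            return (v[0] + rng.gauss(0.0, NOISE_STD),
                    v[1] + rng.gauss(0.0, NOISE_STD),
                    v[2] + rng.gauss(0.0, NOISE_STD))
        out = [(jitter(g), jitter(a), jitter(m), q) for g, a, m, q in out]
    return out


# The seven augmented training scenarios: every non-empty subset of the three augmentations.
AUGMENTATIONS = ("rotation", "bias", "noise")
SCENARIOS = [set(c) for r in (1, 2, 3) for c in itertools.combinations(AUGMENTATIONS, r)]
```

For example, `augment(seq, {"rotation", "bias"}, random.Random(0))` would produce one training sequence for the Rotation and Bias model. In this sketch rotation is applied before bias, so the simulated bias is defined in the rotated sensor frame; the order used in the paper is not stated here.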

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Dong, X.; Gao, Y.; Guo, J.; Zuo, S.; Xiang, J.; Li, D.; Tu, Z. An integrated UWB-IMU-vision framework for autonomous approaching and landing of UAVs. Aerospace 2022, 9, 797.

2. Chen, P.; Dang, Y.; Liang, R.; Zhu, W.; He, X. Real-time object tracking on a drone with multi-inertial sensing data. IEEE Trans. Intell. Transp. Syst. 2017, 19, 131–139.

3. Araguás, G.; Paz, C.; Gaydou, D.; Paina, G.P. Quaternion-based orientation estimation fusing a camera and inertial sensors for a hovering UAV. J. Intell. Robot. Syst. 2015, 77, 37–53.

4. Li, S.; Jiang, J.; Ruppel, P.; Liang, H.; Ma, X.; Hendrich, N.; Sun, F.; Zhang, J. A Mobile Robot Hand-arm Teleoperation System by Vision and IMU. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 10900–10906.

5. Han, Y.C.; Wong, K.I.; Murray, I. Gait phase detection for normal and abnormal gaits using IMU. IEEE Sens. J. 2019, 19, 3439–3448.

6. Lee, C.J.; Lee, J.K. Wearable IMMU-based relative position estimation between body segments via time-varying segment-to-joint vectors. Sensors 2022, 22, 2149.

7. Madgwick, S.O.H.; Harrison, A.J.L.; Vaidyanathan, R. Estimation of IMU and MARG Orientation Using a Gradient Descent Algorithm. In Proceedings of the 2011 IEEE International Conference on Rehabilitation Robotics, Zurich, Switzerland, 29 June–1 July 2011; pp. 1–7.

8. Lee, J.K. A parallel attitude-heading Kalman filter without state-augmentation of model-based disturbance components. IEEE Trans. Instrum. Meas. 2019, 68, 2668–2670.

9. Sabatini, A.M. Quaternion-based extended Kalman filter for determining orientation by inertial and magnetic sensing. IEEE Trans. Biomed. Eng. 2006, 53, 1346–1356.

10. Valenti, R.G.; Dryanovski, I.; Xiao, J. A linear Kalman filter for MARG orientation estimation using the algebraic quaternion algorithm. IEEE Trans. Instrum. Meas. 2015, 65, 467–481.

11. Chan, T.H.; Jia, K.; Gao, S.; Lu, J.; Zeng, Z.; Ma, Y. PCANet: A simple deep learning baseline for image classification? IEEE Trans. Image Process. 2015, 24, 5017–5032.

12. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

13. Zhou, C.; Sun, C.; Liu, Z.; Lau, F. A C-LSTM neural network for text classification. arXiv 2015, arXiv:1511.08630.

14. Singh, S.P.; Kumar, A.; Darbari, H.; Singh, L.; Rastogi, A.; Jain, S. Machine translation using deep learning: An overview. In Proceedings of the 2017 International Conference on Computer, Communications and Electronics (Comptelix), Jaipur, India, 1–2 July 2017; pp. 162–167.

15. Li, R.; Fu, C.; Yi, W.; Yi, X. Calib-Net: Calibrating the low-cost IMU via deep convolutional neural network. Front. Robot. AI 2022, 8, 772583.

16. Jiang, C.; Chen, S.; Chen, Y.; Zhang, B.; Feng, Z.; Zhou, H.; Bo, Y. A MEMS IMU de-noising method using long short term memory recurrent neural networks (LSTM-RNN). Sensors 2018, 18, 3470.

17. Chiang, K.W.; Chang, H.W.; Li, C.Y.; Huang, Y.W. An artificial neural network embedded position and orientation determination algorithm for low cost MEMS INS/GPS integrated sensors. Sensors 2009, 9, 2586–2610.

18. Sun, S.; Melamed, D.; Kitani, K. IDOL: Inertial deep orientation-estimation and localization. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; pp. 6128–6137.

19. Narkhede, P.; Walambe, R.; Poddar, S.; Kotecha, K. Incremental learning of LSTM framework for sensor fusion in attitude estimation. PeerJ Comput. Sci. 2021, 7, e662.

20. Esfahani, M.A.; Wang, H.; Wu, K.; Yuan, S. OriNet: Robust 3-D orientation estimation with a single particular IMU. IEEE Robot. Autom. Lett. 2019, 5, 399–406.

21. Weber, D.; Gühmann, C.; Seel, T. RIANN—A robust neural network outperforms attitude estimation filters. AI 2021, 2, 444–463.

22. Kim, W.Y.; Seo, H.I.; Seo, D.H. Nine-axis IMU-based extended inertial odometry neural network. Expert Syst. Appl. 2021, 178, 115075.

23. Choi, J.S.; Lee, J.K. Recurrent neural network for nine-axis IMU-based orientation estimation: 3D orientation estimation performance in disturbed conditions. J. Inst. Contr. Robot. Syst. 2022, 18, 123–493. (In Korean)

24. Perez, L.; Wang, J. The effectiveness of data augmentation in image classification using deep learning. arXiv 2017, arXiv:1712.04621.

25. Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 1–48.

26. Feng, S.Y.; Gangal, V.; Wei, J.; Chandar, S.; Vosoughi, S.; Mitamura, T.; Hovy, E. A survey of data augmentation approaches for NLP. arXiv 2021, arXiv:2105.03075.

27. Wei, J.; Zou, K. EDA: Easy data augmentation techniques for boosting performance on text classification tasks. arXiv 2019, arXiv:1901.11196.

28. Tran, L.; Choi, D. Data augmentation for inertial sensor-based gait deep neural network. IEEE Access 2020, 8, 12364–12378.

29. Li, C.; Tokgoz, K.K.; Fukawa, M.; Bartels, J.; Ohashi, T.; Takeda, K.I.; Ito, H. Data augmentation for inertial sensor data in CNNs for cattle behavior classification. IEEE Sens. Lett. 2021, 5, 1–4.

30. Jaafer, A.; Nilsson, G.; Como, G. Data augmentation of IMU signals and evaluation via a semi-supervised classification of driving behavior. In Proceedings of the 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020; pp. 1–6.

31. Cho, K.; Van Merriënboer, B.; Bahdanau, D.; Bengio, Y. On the properties of neural machine translation: Encoder-decoder approaches. arXiv 2014, arXiv:1409.1259.

32. Laidig, D.; Caruso, M.; Cereatti, A.; Seel, T. BROAD—A Benchmark for Robust Inertial Orientation Estimation. Data 2021, 6, 72.

33. Jaeger, H. A Tutorial on Training Recurrent Neural Networks, Covering BPPT, RTRL, EKF and the “Echo State Network” Approach; GMD Report; German National Research Center for Information Technology: St. Augustin, Germany, 2002.

34. Wright, L.; Demeure, N. Ranger21: A synergistic deep learning optimizer. arXiv 2021, arXiv:2106.13731.

35. Smith, L.N.; Topin, N. Super-convergence: Very fast training of neural networks using large learning rates. arXiv 2018, arXiv:1708.07120.

36. Smith, L.N. Cyclical learning rates for training neural networks. In Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 24–31 March 2017; pp. 464–472.

37. Howard, J.; Gugger, S. Fastai: A layered API for deep learning. Information 2020, 11, 108.

38. Lee, J.K.; Jung, W.C. Quaternion-based local frame alignment between an inertial measurement unit and a motion capture system. Sensors 2018, 18, 4003.

39. Szczęsna, A.; Skurowski, P.; Pruszowski, P.; Pęszor, D.; Paszkuta, M.; Wojciechowski, K. Reference Data Set for Accuracy Evaluation of Orientation Estimation Algorithms for Inertial Motion Capture Systems. In Computer Vision and Graphics; Chmielewski, L.J., Datta, A., Kozera, R., Wojciechowski, K., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2016; pp. 509–520.

| Training scenario | Attitude (°) | Heading (°) | Avg. Improvement |
|---|---|---|---|
| Original | 9.27 | 12.11 | - |
| Rotation | 6.59 | 8.26 | 30.4% |
| Bias | 7.32 | 9.49 | 21.3% |
| Noise | 7.98 | 11.03 | 11.4% |
| Rotation and Bias | 6.63 | 8.65 | 28.5% |
| Rotation and Noise | 6.60 | 9.05 | 27.0% |
| Bias and Noise | 7.35 | 9.47 | 21.2% |
| All | 5.99 | 7.86 | 35.2% |
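The Avg. Improvement column appears to be the mean of the relative error reductions in attitude and heading with respect to the Original model. The tabulated values are consistent with this reading (for Rotation, (9.27 - 6.59)/9.27 ≈ 28.9% and (12.11 - 8.26)/12.11 ≈ 31.8%, which average to about 30.4%), although the definition is inferred here rather than stated. A minimal sketch, with hypothetical function and variable names:

```python
def avg_improvement(base: tuple[float, float], model: tuple[float, float]) -> float:
    """Mean relative error reduction (%) over (attitude, heading) with respect to a baseline."""
    reductions = [(b - m) / b for b, m in zip(base, model)]
    return 100.0 * sum(reductions) / len(reductions)


ORIGINAL = (9.27, 12.11)  # (attitude, heading) errors of the Original model, first table
MODELS = {"Rotation": (6.59, 8.26), "Noise": (7.98, 11.03), "All": (5.99, 7.86)}

for name, errors in MODELS.items():
    print(f"{name}: {avg_improvement(ORIGINAL, errors):.1f}%")
```

This reproduces 30.4%, 11.4%, and 35.2% from the first table. Because the two axes are averaged, a worse error on one axis (such as the Noise model's attitude error on the dataset from [39]) can offset a gain on the other, which is why some averages there are close to zero.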

(a) Results for the test dataset with applied virtual gyroscope bias

| Training scenario | Attitude (°) | Heading (°) | Avg. Improvement |
|---|---|---|---|
| Original | 9.27 | 12.09 | - |
| Rotation | 6.60 | 8.25 | 30.3% |
| Bias | 7.33 | 9.47 | 21.3% |
| Noise | 7.98 | 11.02 | 11.4% |
| Rotation and Bias | 6.63 | 8.64 | 28.5% |
| Rotation and Noise | 6.60 | 9.04 | 27.0% |
| Bias and Noise | 7.37 | 9.45 | 21.2% |
| All | 6.00 | 7.85 | 35.2% |

(b) Results for the test dataset with applied virtual noise

| Training scenario | Attitude (°) | Heading (°) | Avg. Improvement |
|---|---|---|---|
| Original | 9.27 | 12.11 | - |
| Rotation | 6.59 | 8.26 | 30.4% |
| Bias | 7.32 | 9.49 | 21.3% |
| Noise | 7.98 | 11.03 | 11.4% |
| Rotation and Bias | 6.63 | 8.65 | 28.5% |
| Rotation and Noise | 6.60 | 9.05 | 27.0% |
| Bias and Noise | 7.36 | 9.47 | 21.2% |
| All | 5.99 | 7.85 | 35.2% |

(c) Results for the test dataset with applied virtual rotation

| Training scenario | Attitude (°) | Heading (°) | Avg. Improvement |
|---|---|---|---|
| Original | 30.43 | 38.57 | - |
| Rotation | 13.00 | 14.63 | 59.7% |
| Bias | 25.98 | 33.79 | 13.5% |
| Noise | 27.24 | 35.99 | 8.6% |
| Rotation and Bias | 12.36 | 15.77 | 59.3% |
| Rotation and Noise | 11.58 | 14.31 | 62.3% |
| Bias and Noise | 25.81 | 31.95 | 16.2% |
| All | 11.60 | 14.45 | 62.2% |

(d) Results for the dataset from [39]

| Training scenario | Attitude (°) | Heading (°) | Avg. Improvement |
|---|---|---|---|
| Original | 31.49 | 54.77 | - |
| Rotation | 26.40 | 31.24 | 29.6% |
| Bias | 30.90 | 45.16 | 9.71% |
| Noise | 33.72 | 50.82 | 0.05% |
| Rotation and Bias | 24.77 | 32.69 | 30.8% |
| Rotation and Noise | 23.74 | 34.71 | 30.6% |
| Bias and Noise | 34.36 | 49.01 | 0.69% |
| All | 24.63 | 31.28 | 32.3% |
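The tables report attitude and heading errors separately, but this section does not state how the orientation error is split into the two. One common choice, sketched below purely as an assumption, expresses the error rotation in the global frame (z axis vertical) and applies a swing-twist decomposition about z: the twist is the heading error, and the remaining swing, which equals the angle between the estimated and reference gravity directions, is the attitude error. Quaternions are assumed to be unit-norm (w, x, y, z) sensor-to-global rotations; the function names are hypothetical.

```python
import math

Quat = tuple[float, float, float, float]  # (w, x, y, z), unit norm, sensor-to-global


def quat_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def quat_conj(q: Quat) -> Quat:
    """Conjugate, equal to the inverse for a unit quaternion."""
    return (q[0], -q[1], -q[2], -q[3])


def attitude_heading_error(q_ref: Quat, q_est: Quat) -> tuple[float, float]:
    """Return (attitude, heading) error in degrees for one sample.

    The error rotation q_est * q_ref^-1 is expressed in the global frame (z up).
    Its twist about z is the heading error; the remaining swing (tilt of the
    vertical axis) is the attitude error. abs() makes the result independent of
    the quaternion sign (q and -q describe the same rotation).
    """
    w, x, y, z = quat_mul(q_est, quat_conj(q_ref))
    heading = 2.0 * math.atan2(abs(z), abs(w))
    attitude = 2.0 * math.atan2(math.hypot(x, y), math.hypot(w, z))
    return math.degrees(attitude), math.degrees(heading)
```

Per-sample errors would then be aggregated over each sequence (for example as a root-mean-square value); the aggregation behind the tabulated numbers is not specified in this section. The `atan2` form is used instead of `acos` because it stays accurate for very small errors.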

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Choi, J.S.; Lee, J.K. Effects of Data Augmentation on the Nine-Axis IMU-Based Orientation Estimation Accuracy of a Recurrent Neural Network. Sensors 2023, 23, 7458. https://doi.org/10.3390/s23177458
