Abstract

Autonomous robots rely heavily on simultaneous localization and mapping (SLAM) techniques and sensor data to build accurate maps of their surroundings. When multiple robots are employed to expedite exploration, the resulting maps often have different coordinate frames and scales, so map merging techniques are needed to obtain a comprehensive global view. Previous studies have typically relied on extracting image features from maps to establish correspondences between them. However, maps of the same location can be inconsistent because of sensing errors. In addition, robot-generated maps are commonly represented as occupancy grids, which offer few features for extraction and matching. Feature extraction and matching are therefore critical to map merging, particularly when the sensing data are uncertain. In this study, we introduce a novel method that suppresses image noise caused by sensing errors and applies additional corrections before feature extraction. This approach allows features to be collected from corresponding locations in different maps, which establishes connections between their coordinate systems and enables effective map merging. Evaluation results show that the proposed image pre-processing technique significantly reduces the impact of sensing errors on the image stitching process.

1. Introduction

Autonomous exploration is the process by which robots construct maps of unfamiliar environments by sensing their surroundings while navigating. Simultaneous localization and mapping (SLAM) is widely adopted in autonomous exploration because it enables robots to build a map of the environment while determining their own location within it. The concept of SLAM was first proposed in 1986 and has since become widely recognized as a valuable technique [1,2]. Many methods have been proposed since the 1990s, including EKF-SLAM [3], FastSLAM [4], and GraphSLAM [5], all of which have contributed significantly to the field.

Despite advances in SLAM technology, a single robot is often insufficient for comprehensive exploration of a large area. Researchers have therefore developed multi-robot systems (MRS) for decentralized exploration. In an MRS, distributed robots collaborate, which improves both work efficiency and system robustness compared with single-robot systems. However, the local maps generated by multiple robots may be expressed in different coordinate frames, which makes it challenging to integrate them into a global map. Combining such local maps is known as the map merging problem.

This study presents a map merging method that utilizes image features to address the issue of sensing errors, which has received little attention in the literature. Our approach involves using image processing techniques to correct local maps before merging them into a global map. This paper is organized as follows: Section 2 reviews previous methods proposed to address the map merging problem. Section 3 presents a detailed problem statement. In Section 4, we describe our proposed method. Section 5 presents the simulation results and provides a discussion. Finally, Section 6 concludes the study and offers suggestions for future work.

2. Literature Review

Robot mapping uses different types of maps, and the methods for merging them vary accordingly. The commonly used maps include occupancy grid maps, feature maps, and topological maps [6]. Occupancy grid maps are widely used and can be easily implemented in any environment [7]. Feature maps require less memory, but their complex feature extraction process consumes computational resources. Topological maps depict a network structure and are useful for specific tasks such as navigation and path planning. In this study, we use occupancy grid maps because they are widely used in the literature.

Map merging methods can be classified as direct or indirect, and most recent methods use indirect approaches. Lee’s survey reviewed several merging methods and classified them into these two types based on their logical structure [8]. Direct methods establish correlations between the sensing data of multiple robots to obtain their relative positions for merging. They can be based on direct observation, where robots use their sensors to observe cooperating robots and determine their relative positions [9], or on common environmental objects, such as ceiling features, from which relative positions are established [10]. However, direct methods require the robots to operate in the same area, which restricts their activity range and time allocation.

Indirect methods overcome the limitations of direct methods at the cost of more complex computation. They treat maps as images or 2D matrices, which frees them from temporal and spatial constraints and reduces the amount of data to process because some raw sensing data are discarded [8]. Indirect methods often extract and match features to establish correlations between the maps. Once these correlations are obtained, a relative transformation (scaling, rotation, and translation) between the grid cells of the maps can be calculated. Indirect methods can align maps even when their positions or orientations differ substantially, which makes them reliable for map merging.

Map images exhibit a diverse range of features, including spectral features of line segments [11] and local extreme features [12], and different merging methods are employed depending on which features are extracted. Carpin [11] proposed a fast, non-iterative algorithm that uses the Hough transform to determine the rotation and translation parameters needed for map merging. This approach performs well in structured environments such as square rooms. However, it requires the local maps to have the same scale and a sufficiently large overlapping area. Ferrão [12], on the other hand, used the scale-invariant feature transform (SIFT) algorithm [13] to extract robust features from occupancy grid maps. This widely used algorithm identifies extreme points in map images and matches them as features. Although SIFT is commonly employed in computer vision and image stitching [14], typical red-green-blue (RGB) images have far more diverse texture, brightness, and color than occupancy grid maps, whose pixels take only three values denoting free, unknown, and occupied areas; consequently, many more features can be extracted from RGB images. Furthermore, sensor errors and uncertainties in occupancy grid maps make feature extraction and matching harder and lower the success rate of map merging, as discussed in Jiang’s works [7,15].

In order to overcome the limitations in feature extraction and matching from occupancy grid maps, we present a novel image pre-processing operation aimed at reducing the impact of sensor errors and uncertainty. Our objective is to improve the effectiveness of feature extraction and ultimately address the challenge of map merging. Our proposed map merging approach involves two key steps. Firstly, we apply the image pre-processing technique to mitigate the impact of uncertainty and enhance the efficiency of feature extraction. This step aims to extract significant features from the occupancy grid maps. Secondly, we utilize an image stitching method to merge the pre-processed occupancy grid maps. By combining these steps, we aim to achieve a more effective and reliable map merging solution.

3. Basic Definitions on Map Merging

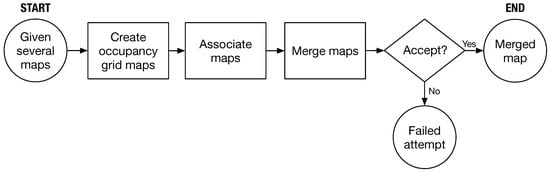

Consider multiple map images obtained by robots. Figure 1 illustrates the map merging process considered in this work. After the maps are obtained, occupancy grid maps are generated according to the occupancy state of each grid element. Two maps are associated by transforming them into the same coordinate frame. The association yields a merging index that determines whether the maps can be merged: if the index is acceptable, the maps are merged; otherwise, the merging attempt fails. The rest of this section describes the occupancy grid map, the transformation between two maps, and the index used to evaluate the merged result.

Figure 1.

Basic concept of map merging.

- Create occupancy grid maps: In the context of map merging, we define $M$ as a 2D occupancy grid map of size $r \times c$, as shown in Equation (1): $M = [m_{i,j}]_{r \times c}$, with $i = 1, \dots, r$ and $j = 1, \dots, c$. Each cell $m_{i,j}$ contains a binary value that indicates whether the cell is occupied ($m_{i,j} = 1$) or free ($m_{i,j} = 0$). Let $p(m_{i,j})$ be the probability that the cell is occupied. A grid cell is then classified into one of three conditions: occupied if $p(m_{i,j}) > \tau_o$, free if $p(m_{i,j}) < \tau_f$, and unknown otherwise, where $\tau_f$ and $\tau_o$ are two predefined thresholds. In addition to a binary value, if $M$ represents an image, each cell element in Equation (1) has a pixel value that can also be used to represent the cell conditions.

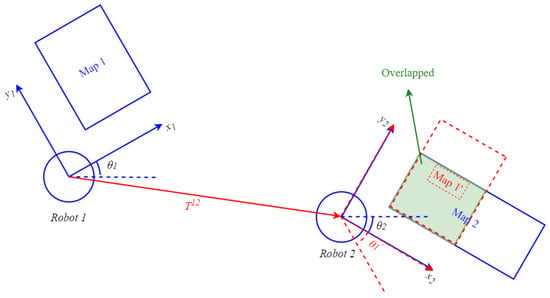

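- Associate maps for merging: Consider two maps $M_1$ and $M_2$ with different coordinate frames, as shown in Figure 2. The transformation matrix $T$ that maps $M_2$ into the frame of $M_1$ is defined in Equation (2) by a scale factor $s$, a rotation angle $\theta$, and a translation $(t_x, t_y)$:

$$T = \begin{bmatrix} s\cos\theta & -s\sin\theta & t_x \\ s\sin\theta & s\cos\theta & t_y \\ 0 & 0 & 1 \end{bmatrix} \quad (2)$$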

Figure 2. Diagram of overlapping regions of maps $M_1$ and $M_2$. Original coordinates are shown as blue solid lines, and transformed coordinates as red dashed lines.

- Determine acceptance of the merged result: In Equation (3), the acceptance index $\omega$ [11] evaluates the merging result when the transformed map $T(M_2)$ is overlaid onto map $M_1$:

$$\omega(M_1, T(M_2)) = \begin{cases} 0, & \text{if } \operatorname{agr}(M_1, T(M_2)) = 0 \\ \dfrac{\operatorname{agr}(M_1, T(M_2))}{\operatorname{agr}(M_1, T(M_2)) + \operatorname{dis}(M_1, T(M_2))}, & \text{otherwise} \end{cases} \quad (3)$$

The acceptance index counts two types of grids: agreement and disagreement. Agreement grids are correctly paired grid cells between $M_1$ and $T(M_2)$, where both maps have the same type (occupied-occupied or free-free) in the overlapping areas. Disagreement grids are incorrect pairings, where the two maps have different types (occupied-free). Because of their high uncertainty, unknown cells are not counted. When the transformation accurately aligns the grids of the transformed map with those of $M_1$ in the overlapping regions, the number of agreement grids increases and the number of disagreement grids decreases, so $\omega$ approaches 1. Conversely, if the transformation is incorrect, the overlapping areas contain many disagreement grids, and $\omega$ becomes small or even 0. The acceptance index therefore indicates whether the map transformation is correct and reflects the quality of the merging result. We judge the transformation with an index threshold $\omega_{th}$, as shown in Equation (4):

$$\omega(M_1, T(M_2)) > \omega_{th} \quad (4)$$
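The three-way cell classification and the acceptance index can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the thresholds `tau_free = 0.25` and `tau_occ = 0.65` are hypothetical placeholders, the two maps are assumed to be already aligned lists of cell labels of equal size, and `None` is an assumed marker for cells of the transformed map that fall outside the overlap.

```python
from typing import List, Optional

OCCUPIED, FREE, UNKNOWN = "occupied", "free", "unknown"


def classify_cell(p: float, tau_free: float = 0.25, tau_occ: float = 0.65) -> str:
    """Three-way classification of a cell from its occupancy probability p.

    tau_free and tau_occ are hypothetical example thresholds.
    """
    if p > tau_occ:
        return OCCUPIED
    if p < tau_free:
        return FREE
    return UNKNOWN


def acceptance_index(map1: List[List[str]],
                     map2_t: List[List[Optional[str]]]) -> float:
    """Acceptance index of Eq. (3): agr / (agr + dis), or 0 if agr == 0.

    map2_t is the second map already transformed into map1's frame (same
    size); None marks cells outside the overlap. Unknown cells are skipped.
    """
    agr = dis = 0
    for row1, row2 in zip(map1, map2_t):
        for a, b in zip(row1, row2):
            if b is None or a == UNKNOWN or b == UNKNOWN:
                continue  # no overlap, or too uncertain to count
            if a == b:
                agr += 1  # occupied-occupied or free-free
            else:
                dis += 1  # occupied-free
    return 0.0 if agr == 0 else agr / (agr + dis)


def accept_merge(omega: float, omega_th: float = 0.95) -> bool:
    """Eq. (4): accept the transformation if the index exceeds the threshold."""
    return omega > omega_th
```

For instance, two 2×2 maps with two agreeing cells, one disagreeing cell, and one non-overlapping cell give $\omega = 2/3$, which would be rejected at $\omega_{th} = 0.95$.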

With an acceptable merged result, multiple images from robots can be combined into a single map. In this study, we use a threshold of $\omega_{th} = 0.95$ and test it in various scenarios. In the next section, we present our method, which builds on the concept flow in Figure 1 to handle images that may contain real-world sensing errors.

4. Map Merging Method

In this section, we present our novel image pre-processing method, which mitigates the impact of sensing errors and uncertainties in maps before they are stitched. The pre-processing operation removes noise and distortion from the occupancy grid map through several image processing steps, so that the existing image stitching method is far less affected by noise and can merge the maps reliably. Section 4.1 describes the existing image stitching process, and Section 4.2 describes our proposed operation.

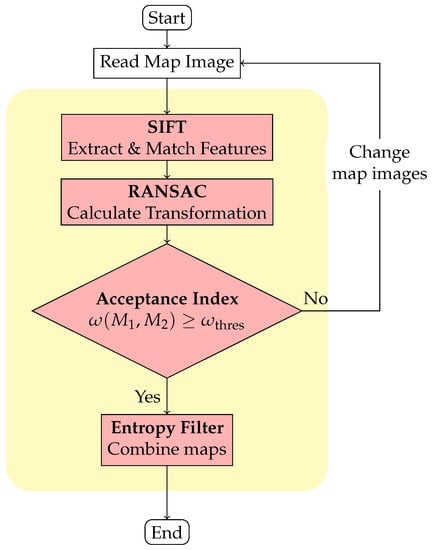

4.1. Existing Method in Image Stitching

We use the image stitching method proposed by Mills [16] for our stitching operation. However, when two occupancy grid maps are combined, their probabilistic information must be considered, which is not the case for regular images. We therefore apply an entropy filter [17] after calculating the relative transformation with Mills’ method. The complete operation is illustrated in Figure 3, in which the two maps $M_1$ and $M_2$ of Figure 2 are merged. First, the scale-invariant feature transform (SIFT) [18,19] is used to extract features from the occupancy grid maps. SIFT applies Gaussian filters to build the scale space of the image and then finds extreme points of the difference of Gaussians (DoG) in this scale space, which are used as features. The gradient vectors around each feature are calculated, and a descriptor is built from a histogram of these gradient vectors. As a result, SIFT features are invariant to scale and rotation and robust to brightness changes [18,19]. The SIFT algorithm obtains $n_1$ features $P = \{p_1, \dots, p_{n_1}\}$ from $M_1$ and $n_2$ features $Q = \{q_1, \dots, q_{n_2}\}$ from $M_2$. These features are matched as pairs $(p_i, q_j)$ by using the Euclidean distance between descriptors to find, for each feature $p_i$ in $P$, the closest feature $q_j$ in $Q$.

Figure 3.

Flowchart of the image stitching technique.
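The nearest-neighbour matching step can be illustrated with a plain-Python sketch. It assumes the SIFT descriptors have already been extracted (in practice, for example, with OpenCV's `cv2.SIFT_create()`), and the function name `match_nearest` is hypothetical; it shows only the Euclidean nearest-neighbour rule described above, without any ratio test or cross-check.

```python
import math
from typing import List, Sequence, Tuple

Descriptor = Sequence[float]


def match_nearest(P: List[Descriptor], Q: List[Descriptor]) -> List[Tuple[int, int, float]]:
    """For every descriptor p_i in P, return (i, j, d), where q_j is the
    descriptor in Q with the smallest Euclidean distance d to p_i."""
    if not Q:
        return []  # nothing to match against
    matches = []
    for i, p in enumerate(P):
        best_j, best_d = -1, math.inf
        for j, q in enumerate(Q):
            d = math.dist(p, q)  # Euclidean distance (Python >= 3.8)
            if d < best_d:
                best_j, best_d = j, d
        matches.append((i, best_j, best_d))
    return matches
```

This brute-force loop costs $O(n_1 n_2)$ distance evaluations, which is acceptable for the modest number of features an occupancy grid map yields.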

The matched pairs are then processed with the random sample consensus (RANSAC) algorithm [20] to eliminate outliers and estimate the relative transformation between the maps. RANSAC fits a model to the input data by repeatedly sampling random subsets of it: when a sample contains only inliers (data that conform to the true model), the correct model is obtained and the outliers can be excluded. Specifically, RANSAC in our method consists of the following three steps.

- Step 1

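- Define a ‘guess’ model $T_g$. Randomly sample two matched pairs of SIFT features, $(p_a, q_a)$ and $(p_b, q_b)$, from the input data, where $p = (x, y)$ lies in $M_1$ and $q = (u, v)$ lies in $M_2$. Calculate the four variables of the model, namely the scale $s$, the rotation angle $\theta$, and the translation $(t_x, t_y)$, so that both sampled $q$ points are mapped onto their $p$ counterparts:

$$s = \frac{\sqrt{(x_b - x_a)^2 + (y_b - y_a)^2}}{\sqrt{(u_b - u_a)^2 + (v_b - v_a)^2}}$$

$$\theta = \operatorname{atan2}(y_b - y_a,\ x_b - x_a) - \operatorname{atan2}(v_b - v_a,\ u_b - u_a)$$

$$t_x = x_a - s\,(u_a \cos\theta - v_a \sin\theta), \qquad t_y = y_a - s\,(u_a \sin\theta + v_a \cos\theta)$$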

- Step 2

- If a transformed pair satisfies $\lVert p_i - T_g(q_j) \rVert < \varepsilon$, the pair conforms to the guess model, where $\varepsilon$ is a threshold for selecting data. The number of pairs that conform to the guess model is recorded. If this number exceeds the highest number previously recorded, the current guess model is stored as the ‘best’ model $T_{best}$.

- Step 3

- Repeat Steps 1 and 2 until the maximum number of iterations is reached, and output the best model as $T_R$.
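The three RANSAC steps can be sketched for the four-parameter similarity model (scale $s$, rotation $\theta$, translation $t_x, t_y$). The closed-form Step 1 solution, the tolerance `eps`, the iteration count, and all function names are assumptions made for this illustration; the paper's exact equations and parameter values may differ.

```python
import math
import random
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Model = Tuple[float, float, float, float]  # (s, theta, tx, ty)


def apply_model(m: Model, q: Point) -> Point:
    """Map a point q of M2 into M1's frame with the similarity transform."""
    s, th, tx, ty = m
    c, sn = math.cos(th), math.sin(th)
    return (s * (c * q[0] - sn * q[1]) + tx, s * (sn * q[0] + c * q[1]) + ty)


def model_from_two_pairs(pa: Point, qa: Point, pb: Point, qb: Point) -> Optional[Model]:
    """Step 1: the four parameters that map qa -> pa and qb -> pb exactly."""
    dqx, dqy = qb[0] - qa[0], qb[1] - qa[1]
    dpx, dpy = pb[0] - pa[0], pb[1] - pa[1]
    len_q = math.hypot(dqx, dqy)
    if len_q == 0.0:
        return None  # degenerate sample: both q points coincide
    s = math.hypot(dpx, dpy) / len_q
    th = math.atan2(dpy, dpx) - math.atan2(dqy, dqx)
    th = math.atan2(math.sin(th), math.cos(th))  # wrap to (-pi, pi]
    c, sn = math.cos(th), math.sin(th)
    tx = pa[0] - s * (c * qa[0] - sn * qa[1])
    ty = pa[1] - s * (sn * qa[0] + c * qa[1])
    return (s, th, tx, ty)


def ransac_similarity(pairs: List[Tuple[Point, Point]], eps: float = 1.0,
                      iters: int = 500, seed: int = 0) -> Tuple[Optional[Model], int]:
    """pairs holds (p, q) matches with p in M1 and q in M2.

    Returns the best model found and its number of conforming pairs.
    """
    rng = random.Random(seed)
    best, best_count = None, -1
    for _ in range(iters):  # Step 3: fixed iteration budget
        (pa, qa), (pb, qb) = rng.sample(pairs, 2)  # Step 1: random minimal sample
        guess = model_from_two_pairs(pa, qa, pb, qb)
        if guess is None:
            continue
        # Step 2: count pairs whose transformed q lies within eps of p
        count = sum(1 for p, q in pairs if math.dist(apply_model(guess, q), p) < eps)
        if count > best_count:
            best, best_count = guess, count
    return best, best_count
```

Two correspondences are the minimal sample for a four-parameter similarity, which keeps the chance of drawing an all-inlier sample high even when many matches are wrong.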

Once the RANSAC model $T_R$ is obtained, the two maps can be overlapped by applying this transformation. The quality of the transformation is then evaluated with the acceptance index $\omega$ and the threshold $\omega_{th}$. If the index exceeds the threshold (0.95 in this study), an entropy filter [17] adjusts the map probabilities and combines the maps into a global map. A higher entropy value corresponds to a higher level of uncertainty, so the entropy filter selects the probabilities with the lowest uncertainty [17].

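Assume that the occupancy probabilities of a grid in the overlapping areas of maps $M_1$ and $M_2$ are $p_1$ and $p_2$, respectively. The combined probability $p_{12}$ can be calculated by updating the occupancy grid map in terms of log odds [21], as in Equation (12):

$$l(p) = \log\frac{p}{1 - p}, \qquad p_{12} = 1 - \frac{1}{1 + \exp\big(l(p_1) + l(p_2)\big)} \quad (12)$$

The corresponding entropy is calculated with Equation (13):

$$H(p) = -p \log p - (1 - p)\log(1 - p) \quad (13)$$

and the three entropy values for $p_1$, $p_2$, and $p_{12}$ are given in Equation (14):

$$H_1 = H(p_1), \qquad H_2 = H(p_2), \qquad H_{12} = H(p_{12}) \quad (14)$$

In overlapping areas, the occupancy probabilities of the grids from the different maps are considered together, and the probability with the lowest uncertainty (the smallest of $H_1$, $H_2$, and $H_{12}$) is selected as the output.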
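The entropy filter for a single overlapping cell can be sketched as follows. The sketch assumes a uniform prior (log odds of 0) in the log-odds fusion and natural logarithms in the entropy; both are assumptions, as are the function names and the clamping constant `_EPS` used to avoid division by zero.

```python
import math

_EPS = 1e-9  # hypothetical clamp keeping probabilities strictly inside (0, 1)


def log_odds(p: float) -> float:
    p = min(max(p, _EPS), 1.0 - _EPS)
    return math.log(p / (1.0 - p))


def fuse(p1: float, p2: float) -> float:
    """Add the log odds of both maps (uniform prior) and convert back."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds(p1) + log_odds(p2)))


def entropy(p: float) -> float:
    """Binary entropy in nats; 0 for a cell that is certainly free/occupied."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log(p) - (1.0 - p) * math.log(1.0 - p)


def entropy_filter(p1: float, p2: float) -> float:
    """Keep whichever of p1, p2, and their fusion has the lowest entropy."""
    return min((p1, p2, fuse(p1, p2)), key=entropy)
```

For example, cells with $p_1 = 0.9$ and $p_2 = 0.6$ fuse to $p_{12} = 0.54/0.58 \approx 0.931$, whose entropy is lower than that of either input, so the fused value is kept. Conflicting cells such as $0.9$ and $0.1$ fuse to $0.5$, the most uncertain value, so one of the original probabilities is kept instead.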

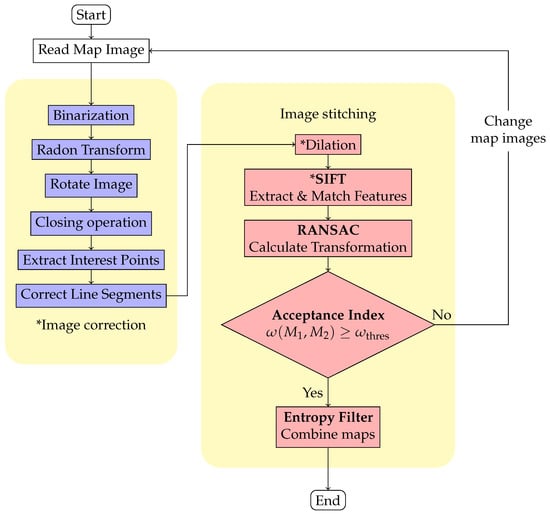

4.2. Proposed Image Pre-Processing Operation

Map merging can fail because of uncertainty in the maps and distortion caused by sensing errors. To tackle this issue, we apply image correction to the maps, which reduces uncertainty and distortion and improves the merging result obtained after feature extraction and matching. Our image correction process involves five steps, illustrated in the image correction block of Figure 4.

Figure 4.

Flowchart of proposed method. Blocks with an asterisk “*” indicate added or slightly modified steps.

4.2.1. Image Correction Process

To extract features with matching relationships from occupancy grid maps, the pixel values of unknown regions, which have high uncertainty, should not be used. To avoid them, we apply binarization, which separates the map into “occupied” and “non-occupied” regions. The “occupied” region contains the original occupied area, while the “non-occupied” region consists of the original free and unknown areas.

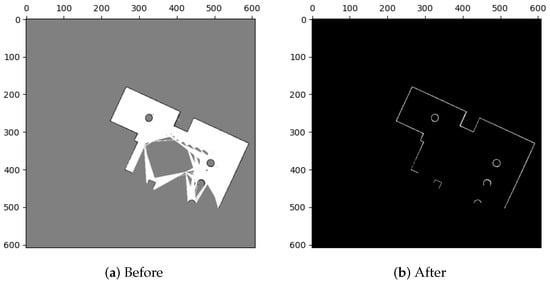

In binarization, each grid is classified with a threshold value $T_b$. If the pixel value $m_{i,j}$ of a grid is less than $T_b$, the grid is classified as occupied and assigned a new value of 255; if $m_{i,j}$ is greater than or equal to $T_b$, it is classified as non-occupied and assigned a new value of 0, as shown in Equation (15):

$$m'_{i,j} = \begin{cases} 255, & m_{i,j} < T_b \\ 0, & m_{i,j} \ge T_b \end{cases} \quad (15)$$

After binarization, the map contains only two types of grids, as illustrated in Figure 5.

Figure 5.

Binarization.
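A minimal sketch of Equation (15), plus the extra binarization applied after rotation, is given below. It assumes a map image in which occupied cells are dark, e.g. the common ROS map_server encoding (occupied 0, unknown 205, free 254). After the first binarization, occupied cells are bright (255), so the second pass after rotation must keep bright pixels; `rebinarize` and its midpoint threshold of 128 are hypothetical choices, not values from the paper.

```python
from typing import List

Image = List[List[int]]


def binarize(img: Image, t_b: int) -> Image:
    """Eq. (15): pixels darker than t_b become occupied (255), others 0."""
    return [[255 if v < t_b else 0 for v in row] for row in img]


def rebinarize(img: Image, threshold: int = 128) -> Image:
    """Hypothetical second pass after rotation: occupied is now bright, so
    interpolated values at or above the threshold snap back to 255."""
    return [[255 if v >= threshold else 0 for v in row] for row in img]
```

With a hypothetical `t_b = 100`, occupied pixels (0) become 255 while unknown (205) and free (254) pixels both become 0, which is the occupied/non-occupied split described above.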

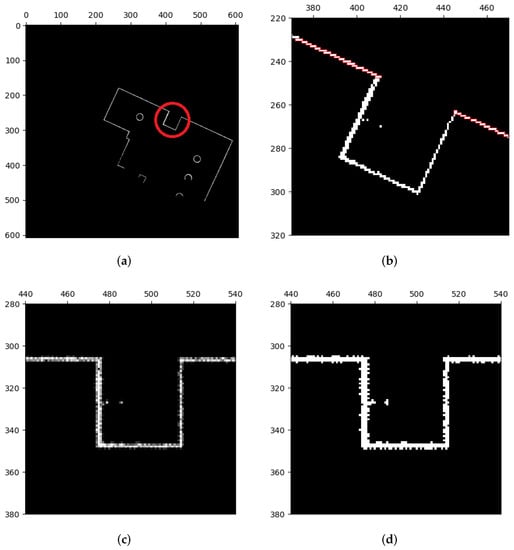

As Figure 6b shows, when barriers such as linear walls are neither parallel nor perpendicular to the coordinate axes, they can take the form of stairs. To improve feature extraction, we rotate the maps so that linear features are aligned with the coordinate axes. The rotation angle is determined by applying the Radon transform [22] to the map. By integrating the map image $f(x, y)$ along lines, the Radon transform converts it into a two-dimensional function $R(\rho, \theta)$ of the line offset $\rho$ and angle $\theta$, as shown in Equation (16):

$$R(\rho, \theta) = \iint f(x, y)\, \delta(\rho - x\cos\theta - y\sin\theta)\, dx\, dy \quad (16)$$

Figure 6.

Rotation of map images. (a) Binarized map. Stair shapes of linear features are marked with a red circle. (b) The zoomed part of (a). (c) The rotated image of (b). Stair shapes are roughly eliminated, but some pixel values are no longer 0 or 255 because of interpolation. (d) Binarization applied to (c) to classify the pixel values. The stair shapes remain eliminated, but some uneven burrs appear instead. These defects are removed by image closing.

After the Radon transform is applied to the occupancy grid map, the longest line in the original map image corresponds to the maximum of $R(\rho, \theta)$, as shown in Figure 7. The rotation angle of the map can be calculated as the angle between this longest line and the coordinate axis. However, as illustrated in Figure 6c, the interpolation performed during rotation can leave some grids with pixel values other than 0 or 255. Therefore, as shown in Figure 6d, we apply an additional binarization to ensure that the map image contains only occupied and non-occupied areas. We then use image morphology methods [23] to repair the uneven burrs and imperfections introduced by the previous operations.

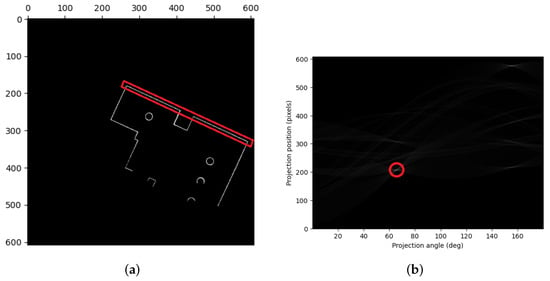

Figure 7.

Radon transform. (a) Map image; the longest line is marked with a red rectangle. (b) The two-dimensional function $R(\rho, \theta)$; the largest value is marked with a red circle.
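The angle search can be approximated on the set of occupied cells with a discrete, Hough-style vote: for a binary image, counting the occupied cells that fall in each $(\rho, \theta)$ bin is a sampled version of Equation (16). This is a simplified stand-in for a full Radon transform (libraries such as scikit-image provide `skimage.transform.radon` for images); the 1° angular step, the rounding of $\rho$ to integer bins, and the helper `alignment_rotation` are assumptions for illustration.

```python
import math
from collections import Counter
from typing import Iterable, Tuple


def dominant_line_angle(occupied: Iterable[Tuple[int, int]], step_deg: int = 1) -> int:
    """Direction (degrees, in [0, 180)) of the strongest line through the
    occupied cells, found at the maximum of a discretized R(rho, theta)."""
    pts = list(occupied)
    best_votes, best_theta = -1, 0
    for theta in range(0, 180, step_deg):
        c, s = math.cos(math.radians(theta)), math.sin(math.radians(theta))
        # every occupied cell adds 1 to the bin rho = round(x cos + y sin)
        votes = Counter(round(x * c + y * s) for x, y in pts)
        top = max(votes.values(), default=0)
        if top > best_votes:
            best_votes, best_theta = top, theta
    # theta is the normal of the line; the line itself is 90 degrees away
    return (best_theta - 90) % 180


def alignment_rotation(line_angle_deg: int) -> int:
    """Smallest rotation (degrees) that makes the line parallel to an axis."""
    r = line_angle_deg % 90
    return -r if r <= 45 else 90 - r
```

For a wall running diagonally through cells $(i, i)$, the vote peaks at $\theta = 135^\circ$, so the line direction is $45^\circ$ and the map would be rotated by $-45^\circ$.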

Given an image $M$ and a structuring element $S$, the dilation operation of image morphology moves $S$ across each grid $m_{i,j}$ of $M$. If $S$, centered at $m_{i,j}$, intersects any occupied grid, the value of $m_{i,j}$ is set to 255; otherwise, it is set to 0. Whereas dilation expands the foreground regions of $M$, erosion shrinks them: erosion sets $m_{i,j}$ to 255 only if $S$, centered at $m_{i,j}$, lies entirely within the occupied grids; otherwise, it is set to 0.

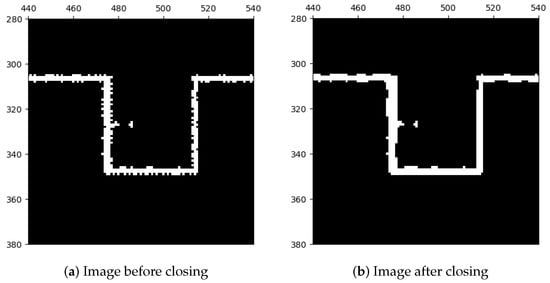

To improve the image, we perform a closing operation, shown in Figure 8, which applies dilation followed by erosion. Dilation expands the foreground regions (i.e., the occupied areas) and fills gaps and defects, and erosion then restores the regions to their original size. The closing operation is expressed by Equation (17), where the ⊕, ⊖, and • operators denote dilation, erosion, and closing, respectively:

$$M \bullet S = (M \oplus S) \ominus S \quad (17)$$

Figure 8.

Closing operation.
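Dilation, erosion, and closing on a binary map image (0 = non-occupied, 255 = occupied) can be sketched in plain Python. The square $(2r+1)\times(2r+1)$ structuring element and the border rule (out-of-bounds cells are ignored) are assumptions; in practice a library routine such as OpenCV's `cv2.morphologyEx` with `cv2.MORPH_CLOSE` would normally be used.

```python
from typing import List

Image = List[List[int]]


def _morph(img: Image, radius: int, erode: bool) -> Image:
    """Binary dilation/erosion with a square (2r+1)x(2r+1) structuring element.

    Out-of-bounds neighbours are ignored, so the border neither adds
    foreground (dilation) nor removes it (erosion).
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            window = [img[a][b]
                      for a in range(max(0, i - radius), min(h, i + radius + 1))
                      for b in range(max(0, j - radius), min(w, j + radius + 1))]
            if erode:
                hit = all(v == 255 for v in window)  # S fits inside foreground
            else:
                hit = any(v == 255 for v in window)  # S touches foreground
            out[i][j] = 255 if hit else 0
    return out


def dilate(img: Image, radius: int = 1) -> Image:
    return _morph(img, radius, erode=False)


def erode(img: Image, radius: int = 1) -> Image:
    return _morph(img, radius, erode=True)


def closing(img: Image, radius: int = 1) -> Image:
    """Eq. (17): dilation followed by erosion."""
    return erode(dilate(img, radius), radius)
```

For example, a one-pixel gap in a horizontal wall is filled by the dilation, and the erosion then thins the wall back to its original one-pixel width.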

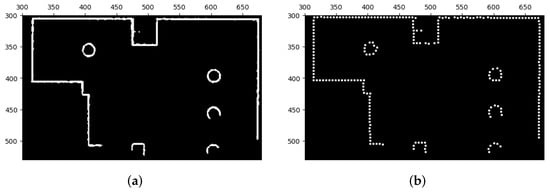

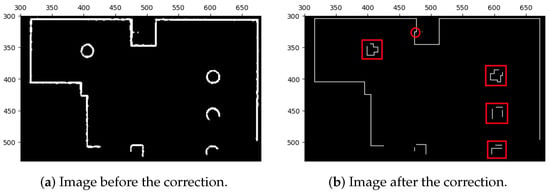

After the closing operation, the preliminary correction of the lines in the occupied areas is complete. However, to further straighten the lines, remove defects, and normalize their widths, we apply another correction before the feature extraction algorithm. This correction consists of two parts: the first samples interest points from the current lines, as depicted in Figure 9, and the second redraws new lines based on these interest points, as shown in Figure 10.

Figure 9.

Extraction of interest points. (a) Image before the extraction of interest points. (b) Image after the extraction of interest points.

Figure 10.

Correction of line segments of map images with extracted interest points. Certain geometric shapes that are sensitive to orientation might be influenced by the correction, as indicated by the red boxes and circles. Nevertheless, we can still extract comparable features and subsequently match them during the image stitching process. This is possible because the majority of extracted features are derived from the corrected line segments, while only a small portion originates from these orientation-sensitive shapes.

The interest points are extracted from the lines by Algorithm 1, which creates a new image $M_p$ containing all interest points. As in the previous morphology operations, the structuring element $S$ is moved across the original image $M$. If $S$ intersects occupied grids around $m_{i,j}$, a marker value of 255 is assigned to the corresponding grid of $M_p$, and the original occupied grids around $m_{i,j}$ are deleted by setting their values to 0. Once $S$ has visited every grid of $M$, all interest points have been identified and marked on $M_p$.

| Algorithm 1 Extraction of interest points of map images. |
|---|
| Input: $M$: map image; $S$: structuring element. Output: $M_p$: map image with interest points. |
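Because the body of Algorithm 1 is not reproduced here, the following is only a hypothetical reading of the description above. It assumes a square structuring element of radius `radius`, a raster scan order, and that an interest point is marked when the visited cell itself is still occupied (a simplification of "$S$ intersects occupied grids around $m_{i,j}$"); the occupied cells covered by $S$ are then cleared in a working copy, so each line is sampled at roughly regular spacing.

```python
from typing import List, Tuple

Image = List[List[int]]


def extract_interest_points(img: Image, radius: int = 1) -> Tuple[Image, List[Tuple[int, int]]]:
    """Hypothetical sketch of Algorithm 1; returns (M_p, list of (row, col))."""
    h, w = len(img), len(img[0])
    work = [row[:] for row in img]  # working copy; the input M is not modified
    mp = [[0] * w for _ in range(h)]
    points = []
    for i in range(h):
        for j in range(w):
            if work[i][j] != 255:
                continue  # not (or no longer) occupied
            mp[i][j] = 255  # mark an interest point in M_p
            points.append((i, j))
            # delete the occupied grids covered by S around (i, j)
            for a in range(max(0, i - radius), min(h, i + radius + 1)):
                for b in range(max(0, j - radius), min(w, j + radius + 1)):
                    work[a][b] = 0
    return mp, points
```

A larger `radius` yields sparser interest points along each line, which trades detail for robustness to small defects.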

Algorithm 2 is the final step of the proposed image correction process in Figure 4 and corrects the line segments in the occupied area. It takes as inputs the image $M_p$ with interest points, the image $M$ after closing, and the distance parameter $L$, which ensures that the new line segments resemble the original data within some tolerance while remaining parallel to the coordinate axes. The algorithm finds points on the map that form line segments and corrects them in the following five steps:

- Step 1

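- Find the reference point $p_r$, the interest point closest to the origin in Manhattan distance, as in Equation (18): $p_r = \arg\min_{(x, y) \in M_p} \left(|x| + |y|\right)$, where the minimum is taken over the interest points of $M_p$.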

- Step 2

- Find the target point $p_t$ closest to the reference point $p_r$, as presented in Equation (19).

- Step 3

- Connect $p_r$ and $p_t$ with a line and record all $n$ grids $g_1, \dots, g_n$ that the line passes through. The positions of these $n$ grids are then used to calculate the ratio $r$ of occupied grids in the original image $M$, as shown in Equation (20): $r = \frac{1}{n}\sum_{k=1}^{n} \mathbb{1}\left[M(g_k) = 255\right]$. If $r$ exceeds a predefined threshold $r_{th}$, the line connecting $p_r$ and $p_t$ is considered present in the original image and can be corrected. Otherwise, the algorithm picks a new target point and repeats Step 2.

- Step 4

- If the line between $p_r$ and $p_t$ can be corrected, calculate the vector $\mathbf{d} = p_t - p_r = (d_x, d_y)$ as in Equation (21). According to $\mathbf{d}$, we then take $p_r$ as the reference and translate $p_t$ following the rules below.

- (1)

- If , translate grid in x-direction. For example, moves to ;

- (2)

- If , translate grid in y-direction. For example, moves to ;

- (3)

- If , add an additional grid as a target point according to the previous direction and perform the translation again. For example, if the previous direction is:

- x-direction, then add a new grid as a new target point;

- y-direction, then add a new grid as a new target point;

- none (no previous direction), then abandon the translation and select another reference and target points.

In the translation above, grids are shifted or added. To ensure that the correction does not deviate excessively from the original image, we again connect $p_r$ and the shifted (or added) grid with a line, record all $n$ grids that the line passes through, and calculate the ratio of occupied grids at the same positions in the original image $M$, as in Equation (20). If this ratio is greater than $r_{th}$, the correction is accepted and the occupied grids on the connecting line are stored in the corrected image $M_c$. Otherwise, the correction is rejected and the original information from $M$ is stored in $M_c$.

- Step 5

- Based on the result of Step 4, the corrected point (the shifted or added grid) is used as the new reference point to find a new adjacent target point. Steps 3 to 5 are repeated until the correction is complete, after which the corrected image $M_c$ is output as the result.

| Algorithm 2 Corrected occupancy grid maps with interest points. |
|---|
| Input: $M$: map image; $M_p$: map image with interest points; $L$: distance parameter. Output: $M_c$: map image after correction. |
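Steps 1 to 3 of Algorithm 2 can be sketched as below; Steps 4 and 5 are omitted because their translation conditions are not fully specified in the text. The sketch assumes (x, y) grid coordinates with the image indexed as `img[y][x]`, uses Bresenham's line algorithm to enumerate the $n$ grids crossed by a line, ranks candidate targets by Manhattan distance (the distance used in Equation (19) is an assumption), and uses a hypothetical threshold `r_th = 0.8`.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[int, int]  # (x, y) grid coordinates
Image = List[List[int]]  # indexed as img[y][x]


def reference_point(points: Sequence[Point]) -> Point:
    """Step 1: interest point closest to the origin in Manhattan distance."""
    return min(points, key=lambda p: abs(p[0]) + abs(p[1]))


def bresenham(p: Point, q: Point) -> List[Point]:
    """Grid cells crossed by the segment p-q (Bresenham's line algorithm)."""
    x0, y0 = p
    x1, y1 = q
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    cells = []
    while True:
        cells.append((x0, y0))
        if (x0, y0) == (x1, y1):
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def occupied_ratio(img: Image, p: Point, q: Point) -> float:
    """Step 3: fraction of the n crossed cells that are occupied in M."""
    cells = bresenham(p, q)
    return sum(1 for x, y in cells if img[y][x] == 255) / len(cells)


def next_target(img: Image, ref: Point, candidates: Sequence[Point],
                r_th: float = 0.8) -> Optional[Point]:
    """Steps 2-3: try targets from nearest to farthest and return the first
    whose connecting line is supported by M (ratio > r_th)."""
    others = [c for c in candidates if c != ref]
    for t in sorted(others, key=lambda c: abs(c[0] - ref[0]) + abs(c[1] - ref[1])):
        if occupied_ratio(img, ref, t) > r_th:
            return t
    return None
```

The occupancy-ratio test is what keeps the redrawn segments close to the original map: a candidate line that mostly crosses free or unknown cells is rejected rather than drawn.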

4.2.2. Image Stitching with ICP

After the image correction, the map images contain only line segments of consistent width that are parallel to the coordinate axes, and features can be extracted from these maps for image stitching. However, because feature extraction detects extreme points in the scale space of the image, the map must contain a sufficient proportion of occupied grids for these extreme points to be detected effectively. In our experiments, an additional dilation operation may be required before feature extraction when the proportion of occupied areas in the map is insufficient. In addition, most extracted features lie roughly at the corners or ends of line segments, whose neighborhoods are very similar. The resulting feature descriptors are therefore also similar, which prevents reliable matching by Euclidean distance. Instead, we use brute-force matching, in which every feature of one map is paired with every feature of the other, as in Equation (22):

$$C = \{(p_i, q_j) \mid i = 1, \dots, n_1,\ j = 1, \dots, n_2\} \quad (22)$$

where $p_i$ and $q_j$ are features from maps $M_1$ and $M_2$, respectively.

Merging the corrected map images with the RANSAC model alone may leave slight deviations. We therefore first align the maps with the RANSAC model and then apply the Iterative Closest Point (ICP) algorithm to achieve a more precise alignment.

ICP is a point cloud matching and alignment method that computes a spatial transformation by least squares, minimizing the distance between two input point clouds to align them [24,25]. In our approach, the occupied grids $P_1$ and $P_2$ of the original maps $M_1$ and $M_2$ are used as point clouds, and ICP is applied to obtain a model for the second alignment. To provide good initial conditions, we first transform the point cloud $P_2$ with the RANSAC model $T_R$, as described in Equation (23):

$$P_2' = T_R\, P_2 \quad (23)$$

In each ICP iteration, every point $q'_i$ in $P_2'$ is matched with its closest point $p_i$ in $P_1$, establishing the correspondences $(p_i, q'_i)$. Using these correspondences, the ICP model for the second alignment is obtained by solving Equation (24):

$$(R, t) = \arg\min_{R,\, t} \sum_{i} \lVert p_i - (R\, q'_i + t) \rVert^2 \quad (24)$$

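Here, $R$ and $t$ are the rotation matrix and translation vector, respectively, which together form the ICP model $T_{ICP}$. With the RANSAC model $T_R$ and $T_{ICP}$, the final model $T_f$ is obtained by combining the two models:

$$T_{ICP} = \begin{bmatrix} R & t \\ \mathbf{0}^{\top} & 1 \end{bmatrix}, \qquad T_f = T_{ICP}\, T_R$$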
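A minimal 2D point-to-point ICP, together with the composition of the ICP and RANSAC models, can be sketched as follows. It assumes rigid (rotation plus translation) ICP, brute-force nearest-neighbour correspondences, the closed-form 2D least-squares solution of the alignment problem, and 3×3 homogeneous matrices for combining models; the function names, iteration limit, and tolerance are illustrative, not taken from the paper. Here `src` plays the role of $P_2'$ and `dst` that of $P_1$.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Rigid = Tuple[float, float, float]  # (theta, tx, ty)
Matrix = List[List[float]]


def transform(m: Rigid, p: Point) -> Point:
    th, tx, ty = m
    c, s = math.cos(th), math.sin(th)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


def best_rigid(src: List[Point], dst: List[Point]) -> Rigid:
    """Closed-form least-squares rotation + translation mapping src onto dst."""
    n = len(src)
    csx, csy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    cdx, cdy = sum(p[0] for p in dst) / n, sum(p[1] for p in dst) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - csx, ay - csy, bx - cdx, by - cdy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    th = math.atan2(cross, dot)
    c, s = math.cos(th), math.sin(th)
    return (th, cdx - (c * csx - s * csy), cdy - (s * csx + c * csy))


def icp(src: List[Point], dst: List[Point], iters: int = 30, tol: float = 1e-10) -> Rigid:
    """Point-to-point ICP with brute-force nearest neighbours."""
    model: Rigid = (0.0, 0.0, 0.0)
    prev_err = math.inf
    for _ in range(iters):
        moved = [transform(model, p) for p in src]
        matched = [min(dst, key=lambda q: math.dist(p, q)) for p in moved]
        err = sum(math.dist(p, q) ** 2 for p, q in zip(moved, matched))
        if prev_err - err < tol:
            break  # error stopped decreasing
        prev_err = err
        th_i, tx_i, ty_i = best_rigid(moved, matched)
        # compose the increment with the current model: x -> R_i (R x + t) + t_i
        c, s = math.cos(th_i), math.sin(th_i)
        th, tx, ty = model
        model = (th + th_i, c * tx - s * ty + tx_i, s * tx + c * ty + ty_i)
    return model


def rigid_matrix(m: Rigid) -> Matrix:
    th, tx, ty = m
    c, s = math.cos(th), math.sin(th)
    return [[c, -s, tx], [s, c, ty], [0.0, 0.0, 1.0]]


def matmul3(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]
```

If `T_R` is the RANSAC similarity written as a 3×3 matrix, `matmul3(rigid_matrix(icp(src, dst)), T_R)` would give the combined model $T_f = T_{ICP}\,T_R$; the RANSAC pre-alignment matters because ICP's nearest-neighbour step only converges reliably from a good initial guess.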

5. Results & Discussion

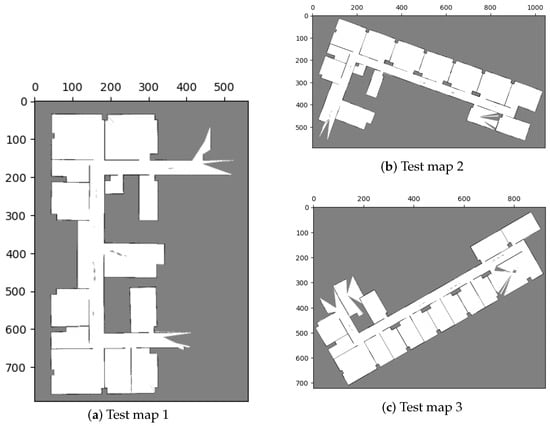

In this section, we compare the performance of the stitching method with and without our proposed image pre-processing in two cases, analyzed in Section 5.1 and Section 5.2, respectively, and present the corresponding merging results for each case. Both cases are carried out in the Robot Operating System (ROS) [26] and the Gazebo virtual world [27]. We also use several open-source tools, including OpenCV [28] for feature extraction and Gmapping-based SLAM for mapping the environment.

5.1. Scenario 1: Performance of Our Proposed Method

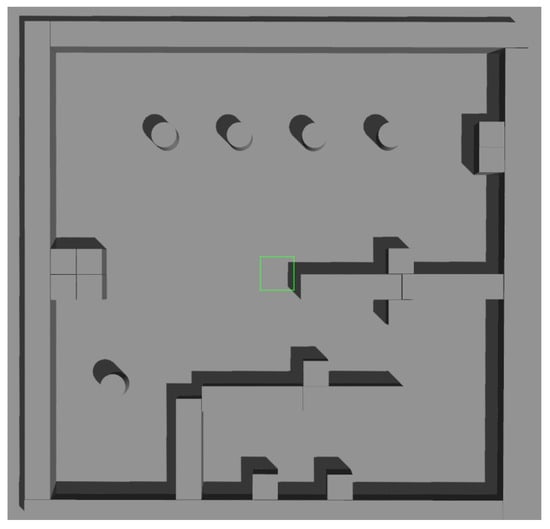

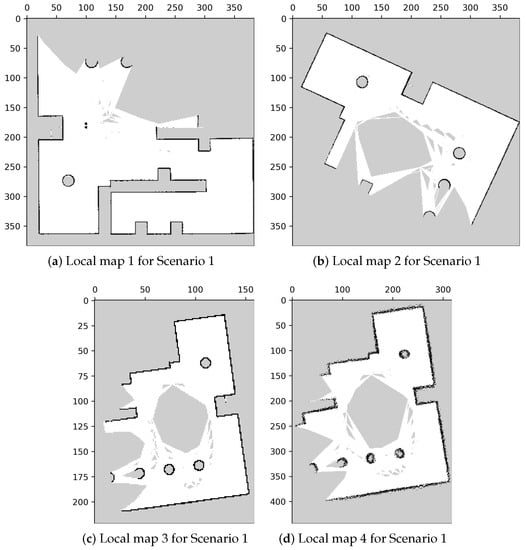

A simulation environment was created as shown in Figure 11. In this environment, we created four local maps with different parameters, as illustrated in Figure 12, and the specific parameters are listed in Table 1.

Figure 11.

Simulation environment of Scenario 1.

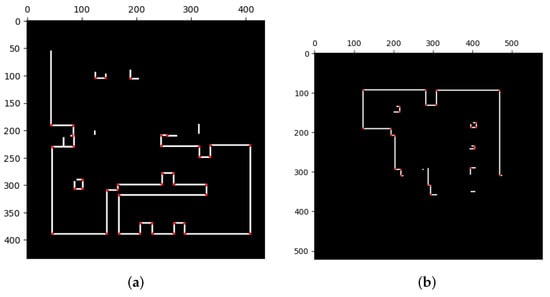

Figure 12.

Local maps of the environment in Scenario 1.

Table 1.

Parameters used in mapping.

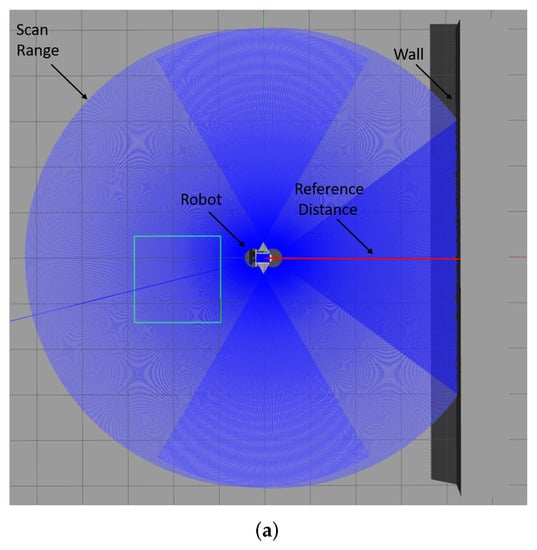

In Figure 12, the maps differ in their coordinates because they were built from different origin positions, which introduces rotation and translation differences. We also varied the map resolutions to create differences in scale. Moreover, we investigated the impact of sensing errors on map quality, as depicted in Figure 13. In Figure 13a, the robot is positioned at the center, and the blue area represents the LiDAR scanning range. The long object on the right is the wall being measured, and the red line indicates the reference distance of 4 m from the robot to the wall. We perturbed the LiDAR signal with sensor errors of different standard deviations and mapped the wall into occupancy grid maps. The effects of sensing errors can be observed in Figure 13b–e. Mapping with a lower standard deviation of the LiDAR measurement (Figure 13b) produced a more accurate representation of the wall (Figure 13c), whereas mapping with a higher standard deviation (Figure 13d) produced a distorted representation (Figure 13e). These findings highlight the importance of low measurement noise for constructing accurate maps.

Figure 13.

The impact of sensing errors on mapping accuracy. As the standard deviation of LiDAR measurements increases, the resulting occupancy grid maps become more distorted and less accurate. (a) Simulation environment for LiDAR sensing errors. (b) Standard deviation of LiDAR measurement = 0.005. (c) Resulting map for standard deviation = 0.005; the line of occupied grids is straight. (d) Standard deviation of LiDAR measurement = 0.05. (e) Resulting map for standard deviation = 0.05; the line of occupied grids is distorted.

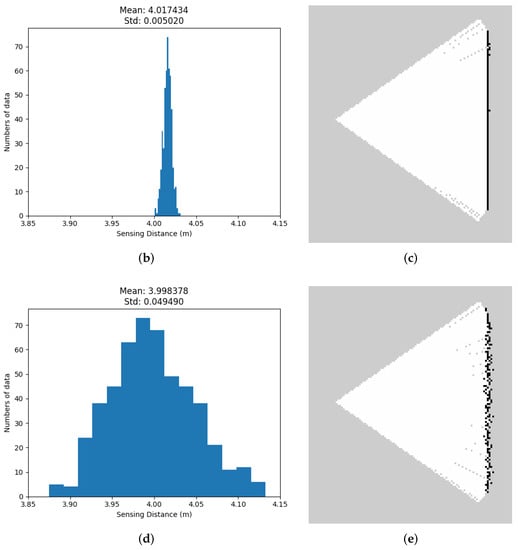

Table 2 outlines the relationships between the local maps to be merged, encompassing three distinct data sets. The first set involves merging maps with differing coordinates, while the second set introduces an additional scale difference. The third set focuses on the impact of sensing errors. In the subsequent sections, we will discuss the merging outcomes with and without the utilization of our proposed method.

Table 2.

Sets of the local maps to be merged.

5.1.1. Results without Image Pre-Process—Mills’ Method [16]

The merging results for the three data sets without the image pre-process (i.e., using Figure 3) are shown in Figure 14, and the corresponding acceptance indices are listed in Table 3.

Figure 14.

Merging results with the existing method in test case 1. In (b–d), the green areas represent correct pairings of grids, whereas the red areas represent incorrect pairings. Among these results, only the result of Set 1 corresponds to the environment (a).

Table 3.

Corresponding acceptance indices for merged sets in test case 1 by using the existing method.

Only data set 1 (Figure 14b) produces a result that matches the environment. To evaluate the merging performance, Table 3 lists the acceptance indices for the three data sets. The results suggest that the threshold for a successful merge should lie between 0.935 and 0.966, which is why we set the merging threshold $\omega_{th}$ to 0.95 throughout the study.

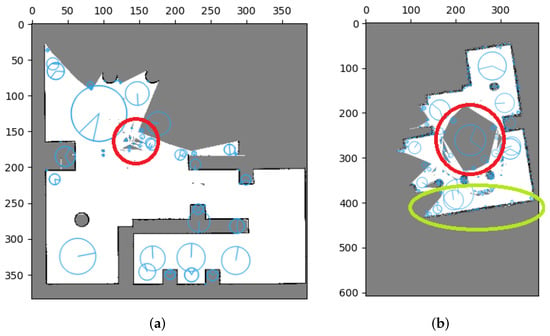

Mills’ method performed poorly when merging these occupancy grid maps, mainly because of feature matching errors. During feature extraction, extreme points are calculated from the values and distribution of pixels, so unknown areas with high uncertainty are included and generate unnecessary features that have no matching counterparts in the other map. Figure 15 highlights these errors with red circles. Sensing errors can also produce features without matching relationships: fewer features were extracted in the occupied area of Figure 15a, whereas more features were extracted in the occupied area of Figure 15b (marked with green circles). These feature extraction errors lead to ineffective map merging, as our results show.

Figure 15.

Error effect for SIFT features in occupancy grid maps. Uncertainty can lead to the presence of redundant features (indicated by red circles), while distortion in occupied areas can also result in the same issue (highlighted by green circles). (a) Error effect for SIFT features in Local map 1. (b) Error effect for SIFT features in Local map 4.

These inaccurate matches lead to errors in the computed relative transformation and, ultimately, to unsuccessful merges. Our proposed method addresses this issue: we tested the same data sets by applying our image pre-processing before Mills’ method, and the results are discussed in Section 5.1.2.
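Why a few wrong matches can spoil a merge becomes clearer from how a relative transformation is estimated. The sketch below is written under our own assumptions and is not Mills’ implementation: it fits a 2-D similarity transform (scale, rotation, translation) to matched feature positions by least squares, treating points as complex numbers, and adds an exhaustive two-point hypothesize-and-verify loop in the spirit of RANSAC [20]. The function names and the inlier tolerance are hypothetical. A single wrong correspondence shifts the least-squares estimate, while the consensus step recovers the true transform only as long as correct matches form the largest consistent set, a condition that can fail when unknown areas and sensing errors produce many unmatched features.

```python
import cmath
import math
from itertools import combinations
from typing import List, Tuple

Point = complex  # a 2-D point (x, y) stored as x + 1j*y


def fit_similarity(src: List[Point], dst: List[Point]) -> Tuple[complex, complex]:
    """Least-squares similarity transform dst ~ a*src + b.

    |a| is the scale, arg(a) the rotation angle and b the translation.
    """
    n = len(src)
    ms, md = sum(src) / n, sum(dst) / n
    num = sum((s - ms).conjugate() * (d - md) for s, d in zip(src, dst))
    den = sum(abs(s - ms) ** 2 for s in src)
    a = num / den
    return a, md - a * ms


def consensus_fit(src: List[Point], dst: List[Point], tol: float = 1.0):
    """Try every 2-point hypothesis, keep the one with most inliers, then refit.

    Exhaustive rather than random sampling, which is feasible for small match sets.
    """
    best: List[int] = []
    for i, j in combinations(range(len(src)), 2):
        if src[i] == src[j]:
            continue  # degenerate pair: transform is undetermined
        a, b = fit_similarity([src[i], src[j]], [dst[i], dst[j]])
        inliers = [k for k in range(len(src)) if abs(a * src[k] + b - dst[k]) <= tol]
        if len(inliers) > len(best):
            best = inliers
    model = fit_similarity([src[k] for k in best], [dst[k] for k in best])
    return model, best


if __name__ == "__main__":
    true_a, true_b = 1.5 * cmath.exp(1j * math.pi / 6), 10 + 5j
    src = [0, 10, 10 + 10j, 10j, 5 + 3j]
    dst = [true_a * p + true_b for p in src]
    dst[4] += 30  # one wrong feature match
    a_ls, _ = fit_similarity(src, dst)
    (a_rs, _), inliers = consensus_fit(src, dst)
    print(abs(a_ls - true_a), abs(a_rs - true_a), inliers)
```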

5.1.2. Results with Image Pre-Process

Figure 16 presents the feature-extraction results after applying our proposed image pre-processing. Features are now extracted only at the corners of occupied areas. Unlike in Figure 15, the influence of uncertainty and sensing errors on feature extraction is excluded, which yields more correct feature matches for the subsequent stitching step. Figure 17 displays the merging results for the three data sets obtained by applying our image pre-processing before Mills’ method (as depicted in Figure 4), and the corresponding acceptance indices are presented in Table 4. The merged maps closely resemble the simulated environment, and the acceptance indices exceed the predetermined threshold of 0.95, indicating successful merges. These findings confirm that our pre-processing eliminates the impact of sensing errors and uncertainty in occupancy grid maps, resulting in accurate feature matching and correct map merging. Finally, Table 5 compares the stitching method with and without our pre-processing and shows the superior performance achieved with it.
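A minimal sketch of a pre-processing step of this kind is given below. It is not the authors' exact operation; it assumes raw cell values in the style of the ROS `nav_msgs/OccupancyGrid` message (0 to 100 occupancy probability, -1 unknown), a hypothetical occupancy cut-off of 65, and two simple morphological operations [23]: removal of isolated occupied cells and a 3 × 3 closing that fills one-cell gaps in walls. After these steps, unknown areas no longer create intensity steps, so candidate features are confined to occupied structure.

```python
from typing import Iterator, List

# Raw values assumed ROS-style: 0..100 occupancy probability, -1 = unknown.
Grid = List[List[int]]
OCC_THRESHOLD = 65  # hypothetical cut-off for "occupied"


def binarize(raw: Grid) -> Grid:
    """Occupied -> 1; free and unknown -> 0, so unknown areas stop creating edges."""
    return [[1 if v >= OCC_THRESHOLD else 0 for v in row] for row in raw]


def _neighbours(img: Grid, r: int, c: int) -> Iterator[int]:
    """Yield the in-bounds 8-neighbours of cell (r, c)."""
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            rr, cc = r + dr, c + dc
            if (dr or dc) and 0 <= rr < len(img) and 0 <= cc < len(img[0]):
                yield img[rr][cc]


def remove_speckles(img: Grid) -> Grid:
    """Drop occupied cells with no occupied 8-neighbour (isolated sensing noise)."""
    return [[1 if v and any(_neighbours(img, r, c)) else 0 for c, v in enumerate(row)]
            for r, row in enumerate(img)]


def close3x3(img: Grid) -> Grid:
    """Morphological closing with a 3x3 square: dilation, then erosion.

    Out-of-bounds cells are ignored, so existing occupied cells are never removed.
    """
    dil = [[1 if v or any(_neighbours(img, r, c)) else 0 for c, v in enumerate(row)]
           for r, row in enumerate(img)]
    return [[1 if v and all(_neighbours(dil, r, c)) else 0 for c, v in enumerate(row)]
            for r, row in enumerate(dil)]


def preprocess(raw: Grid) -> Grid:
    return close3x3(remove_speckles(binarize(raw)))
```

A 3 × 3 closing also joins occupied structures separated by a single free cell, so the kernel size would need tuning on real maps.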

Figure 16.

Evaluation of SIFT feature extraction performance with image pre-processing in occupancy grid maps. The extracted features are strategically located at the corners of occupied areas, effectively mitigating the influence of uncertainty and sensing errors. (a) Extraction of SIFT features in corrected Local map 1. (b) Extraction of SIFT features in corrected Local map 3.

Figure 17.

Merging results with our proposed method in test case 1. The results for all three sets correspond to the environment (Figure 14a).

Table 4.

Corresponding acceptance indices for merged sets in test case 1 by using our proposed method.

Table 5.

Comparison between existing method and our method.

Additionally, to demonstrate that our proposed method can merge multiple maps simultaneously, we conducted a test in a second environment, described in the next section.

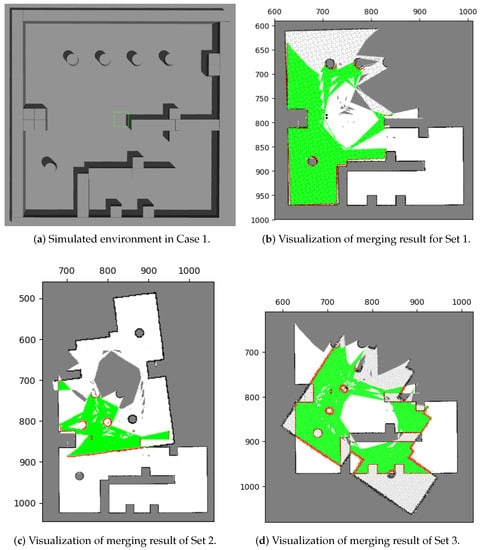

5.2. Scenario 2: Merging Multiple Maps

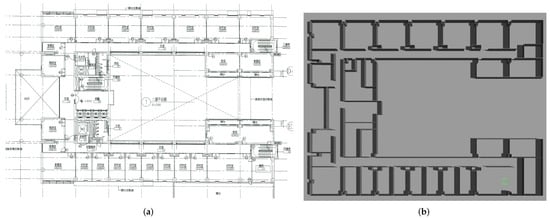

To assess the capability of our method to merge multiple maps simultaneously, we conducted a test based on our research office in Yonglin Biomedical Engineering Hall, Taipei, Taiwan. To expedite the experiment, we simulated this environment from floor plans that faithfully replicate the real-world setting (Figure 18). Within this simulated environment, we created three local maps (Figure 19); their parameters are listed in Table 6, and the sets to be merged are listed in Table 7.

Figure 18.

Environment of test case 2. (a) Floor plan of Yonglin Biomedical Engineering Hall. (b) Simulation environment of Yonglin Biomedical Engineering Hall.

Figure 19.

Local maps of Yonglin Biomedical Engineering Hall.

Table 6.

Parameters for local maps in test case 2.

Table 7.

Sets of the local maps to be merged.

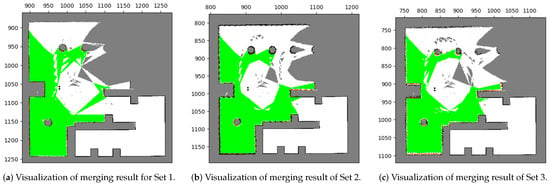

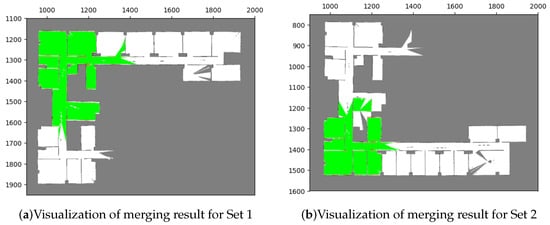

Results with the Proposed Method

The merging results for these two sets are illustrated in Figure 20. Most overlapping grids are correctly paired (green areas), and only a few are incorrectly paired (red areas); these red grids stem from minor errors introduced by image rotation. The merged maps are consistent with the upper and lower parts of the environment. The corresponding acceptance indices, listed in Table 8, both exceed the threshold of 0.95, indicating successful merges.

Figure 20.

Merging results with our proposed method in test case 2. The visualization indicates that the results conform to the simulation environment (Figure 18).

Table 8.

Corresponding acceptance indices for merged sets in test case 2 by using our proposed method.

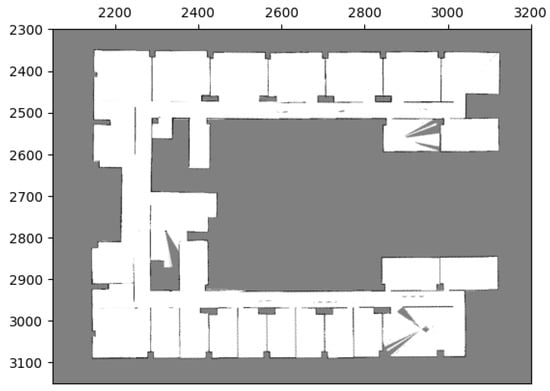

Finally, the merging operations generate transformations that allow for the combination of the three local maps. By transforming these maps into the same coordinate system and processing their occupancy probabilities through the entropy filter, a global map can be obtained. The resulting global map is presented in Figure 21.
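The final fusion step can be sketched as follows. We assume each merge yields a 2-D similarity transform (scale, rotation, translation) in grid units, and we read the entropy filter as keeping, for each global cell, the known occupancy probability with the lowest binary entropy, that is, the most certain observation. Both this reading of the entropy filter and all function names are our assumptions; the paper's filter may differ in detail. Local maps are resampled into the global frame by inverse nearest-neighbour mapping, which avoids the holes that forward mapping leaves under rotation or scaling.

```python
import math
from typing import List, Optional, Tuple

Grid = List[List[Optional[float]]]  # occupancy probability per cell, None = unknown


def binary_entropy(p: float) -> float:
    """Entropy (bits) of a cell that is occupied with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def warp_to_global(local: Grid, scale: float, theta: float, tx: float, ty: float,
                   shape: Tuple[int, int]) -> Grid:
    """Resample a local map into the global frame (inverse nearest-neighbour mapping).

    Assumed forward model, with x = column and y = row:
    global = scale * R(theta) * local + (tx, ty).
    """
    rows, cols = shape
    c, s = math.cos(theta), math.sin(theta)
    out: Grid = [[None] * cols for _ in range(rows)]
    for gy in range(rows):
        for gx in range(cols):
            dx, dy = gx - tx, gy - ty
            lx = (c * dx + s * dy) / scale   # apply R(-theta), then undo scale
            ly = (-s * dx + c * dy) / scale
            i, j = round(ly), round(lx)
            if 0 <= i < len(local) and 0 <= j < len(local[0]):
                out[gy][gx] = local[i][j]
    return out


def entropy_fuse(maps: List[Grid]) -> Grid:
    """Per cell, keep the known value with the lowest entropy (most certain)."""
    rows, cols = len(maps[0]), len(maps[0][0])
    fused: Grid = [[None] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            known = [m[r][c] for m in maps if m[r][c] is not None]
            if known:
                fused[r][c] = min(known, key=binary_entropy)
    return fused
```

For instance, where one warped map reports 0.9 and another 0.95 for the same cell, the fused map keeps 0.95, the lower-entropy value.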

Figure 21.

Global map of Yonglin Biomedical Engineering Hall obtained by merging the three local maps in Figure 19.

These two cases demonstrate the effectiveness of our proposed method in solving the problem of merging occupancy grid maps.

6. Conclusions

In conclusion, we have introduced a novel method for merging occupancy grid maps that uses image features to calculate the relative transformations between maps. Our approach combines the proposed image pre-processing operation with an existing image stitching method, so it integrates readily with multi-robot systems without requiring physical interaction between the robots, which enhances flexibility.

Furthermore, we found that occupancy grid maps are susceptible to sensing errors and offer little pixel-value variation, which can reduce the number of extracted features or cause inaccurate feature matching. To address this, we apply a pre-processing operation that rectifies the map images before feature extraction and matching. This operation eliminates the impact of uncertainty and sensing errors, so that features are extracted only at the corners of occupied areas. Consequently, the relative transformation can be calculated accurately and the maps merged successfully.

We tested our method in two distinct environments that resemble typical living spaces. The results show that our method can merge maps with differing coordinates and scales, and can merge multiple local maps into a global map simultaneously. Overall, our proposed method offers a robust and flexible approach to map merging for multi-robot systems and a valuable advance in this field.

Author Contributions

Conceptualization, Y.-L.C. and K.-Y.C.; Methodology, Y.-L.C. and K.-Y.C.; Software, Y.-L.C.; Validation, Y.-L.C.; Formal analysis, Y.-L.C.; Investigation, K.-Y.C.; Data curation, K.-Y.C.; Writing – original draft, Y.-L.C.; Writing – review & editing, K.-Y.C.; Project administration, K.-Y.C.; Funding acquisition, K.-Y.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Science and Technology Council, Taipei, Taiwan, R.O.C., grant number NSTC-110-2221-E-002-136-MY3.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing not applicable. No new data were created or analyzed in this study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Durrant-Whyte, H.; Bailey, T. Simultaneous localization and mapping: Part I. IEEE Robot. Autom. Mag. 2006, 13, 99–110.

- Bailey, T.; Durrant-Whyte, H. Simultaneous localization and mapping (SLAM): Part II. IEEE Robot. Autom. Mag. 2006, 13, 108–117.

- Dissanayake, M.G.; Newman, P.; Clark, S.; Durrant-Whyte, H.F.; Csorba, M. A solution to the simultaneous localization and map building (SLAM) problem. IEEE Trans. Robot. Autom. 2001, 17, 229–241.

- Montemerlo, M.; Thrun, S.; Koller, D.; Wegbreit, B. FastSLAM: A factored solution to the simultaneous localization and mapping problem. In Proceedings of the AAAI National Conference on Artificial Intelligence, Edmonton, AB, Canada, 28 July–1 August 2002; pp. 593–598.

- Grisetti, G.; Kümmerle, R.; Stachniss, C.; Burgard, W. A tutorial on graph-based SLAM. IEEE Intell. Transp. Syst. Mag. 2010, 2, 31–43.

- Yu, S.; Fu, C.; Gostar, A.K.; Hu, M. A Review on Map-Merging Methods for Typical Map Types in Multiple-Ground-Robot SLAM Solutions. Sensors 2020, 20, 6988.

- Jiang, Z.; Zhu, J.; Jin, C.; Xu, S.; Zhou, Y.; Pang, S. Simultaneously merging multi-robot grid maps at different resolutions. Multimed. Tools Appl. 2020, 79, 14553–14572.

- Lee, H.C.; Lee, S.H.; Lee, T.S.; Kim, D.J.; Lee, B.H. A survey of map merging techniques for cooperative-SLAM. In Proceedings of the 2012 9th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Daejeon, Republic of Korea, 26–29 November 2012; pp. 285–287.

- Konolige, K.; Fox, D.; Limketkai, B.; Ko, J.; Stewart, B. Map merging for distributed robot navigation. In Proceedings of the 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No. 03CH37453), Las Vegas, NV, USA, 27–31 October 2003; Volume 1, pp. 212–217.

- Lee, H.S.; Lee, K.M. Multi-robot SLAM using ceiling vision. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 10–15 October 2009; pp. 912–917.

- Carpin, S. Fast and accurate map merging for multi-robot systems. Auton. Robot. 2008, 25, 305–316.

- Ferrão, V.T.; Vinhal, C.D.N.; da Cruz, G. An occupancy grid map merging algorithm invariant to scale, rotation and translation. In Proceedings of the 2017 Brazilian Conference on Intelligent Systems (BRACIS), Uberlândia, Brazil, 2–5 October 2017; pp. 246–251.

- Lindeberg, T. Scale invariant feature transform. Scholarpedia 2012, 7, 10491.

- Arya, S. A review on image stitching and its different methods. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 2015, 5, 299–303.

- Jiang, Z.; Zhu, J.; Li, Y.; Wang, J.; Li, Z.; Lu, H. Simultaneous merging multiple grid maps using the robust motion averaging. J. Intell. Robot. Syst. 2019, 94, 655–668.

- Mills, A.; Dudek, G. Image stitching with dynamic elements. Image Vis. Comput. 2009, 27, 1593–1602.

- Fox, D.; Burgard, W.; Thrun, S.; Cremers, A.B. Position estimation for mobile robots in dynamic environments. In Proceedings of the Fifteenth National Conference on Artificial Intelligence and Tenth Innovative Applications of Artificial Intelligence Conference, AAAI 98, IAAI 98, Madison, WI, USA, 26–30 July 1998; pp. 983–988.

- Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Corfu, Greece, 20–27 September 1999; Volume 2, pp. 1150–1157.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Thrun, S. Learning occupancy grid maps with forward sensor models. Auton. Robot. 2003, 15, 111–127.

- Helgason, S. The Radon Transform; Springer: Berlin/Heidelberg, Germany, 1980; Volume 2.

- Haralick, R.M.; Sternberg, S.R.; Zhuang, X. Image analysis using mathematical morphology. IEEE Trans. Pattern Anal. Mach. Intell. 1987, PAMI-9, 532–550.

- Besl, P.J.; McKay, N.D. Method for registration of 3-D shapes. In Sensor Fusion IV: Control Paradigms and Data Structures; International Society for Optics and Photonics: Bellingham, WA, USA, 1992; Volume 1611, pp. 586–606.

- Huang, T.S.; Blostein, S.D.; Margerum, E. Least-squares estimation of motion parameters from 3-D point correspondences. In Proceedings of the IEEE Conference Computer Vision and Pattern Recognition, Miami Beach, FL, USA, 22–26 June 1986; pp. 112–115.

- Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A.Y. ROS: An open-source Robot Operating System. In Proceedings of the ICRA Workshop on Open Source Software, Kobe, Japan, 12–17 May 2009; Volume 3, p. 5.

- Koenig, N.; Howard, A. Design and use paradigms for Gazebo, an open-source multi-robot simulator. In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE Cat. No. 04CH37566), Sendai, Japan, 28 September–2 October 2004; Volume 3, pp. 2149–2154.

- Bradski, G. The OpenCV library. Dr. Dobb’s J. Softw. Tools Prof. Program. 2000, 25, 120–123.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).