Master–Slave Control System for Virtual–Physical Interactions Using Hands

Abstract

1. Introduction

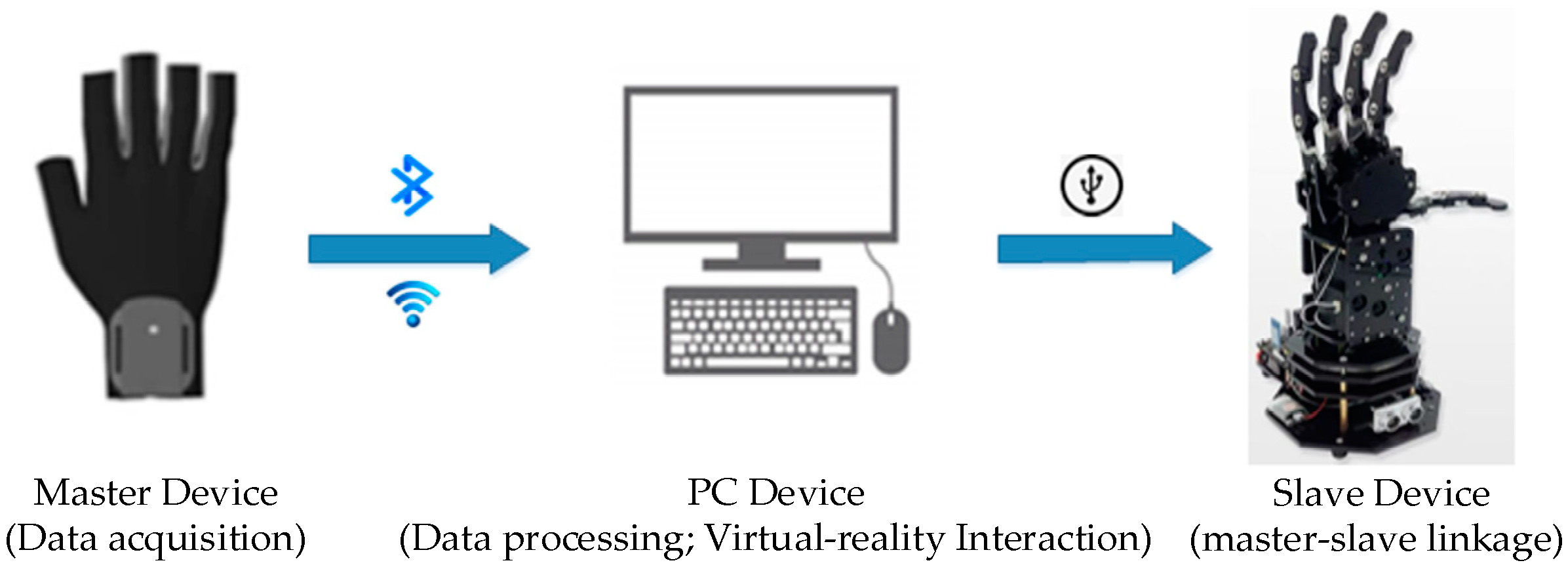

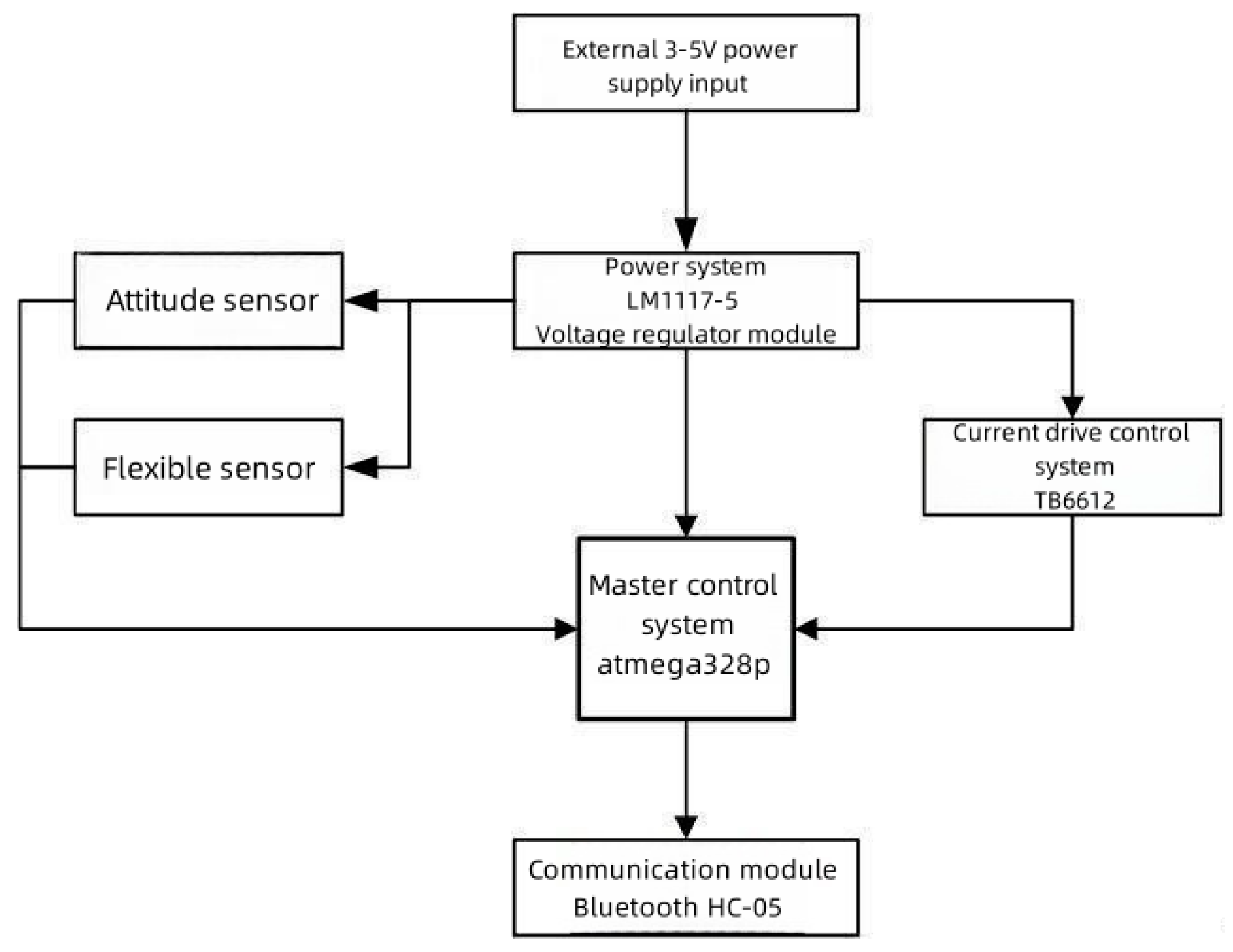

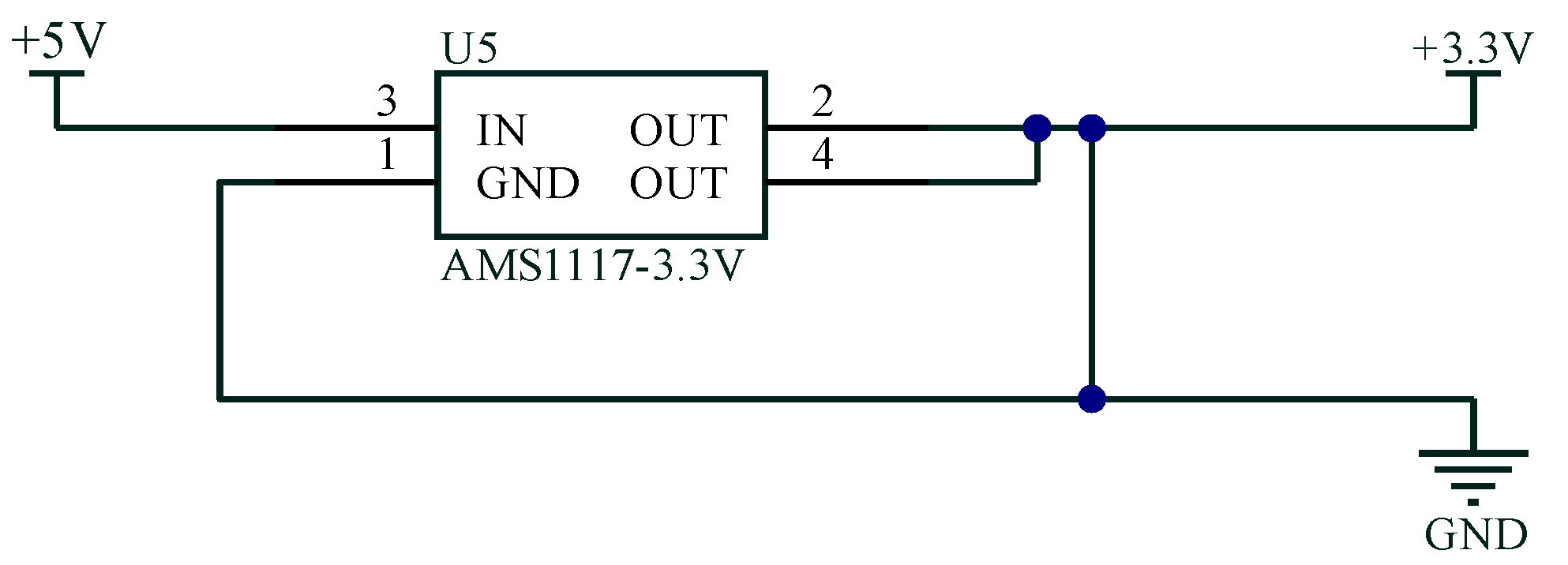

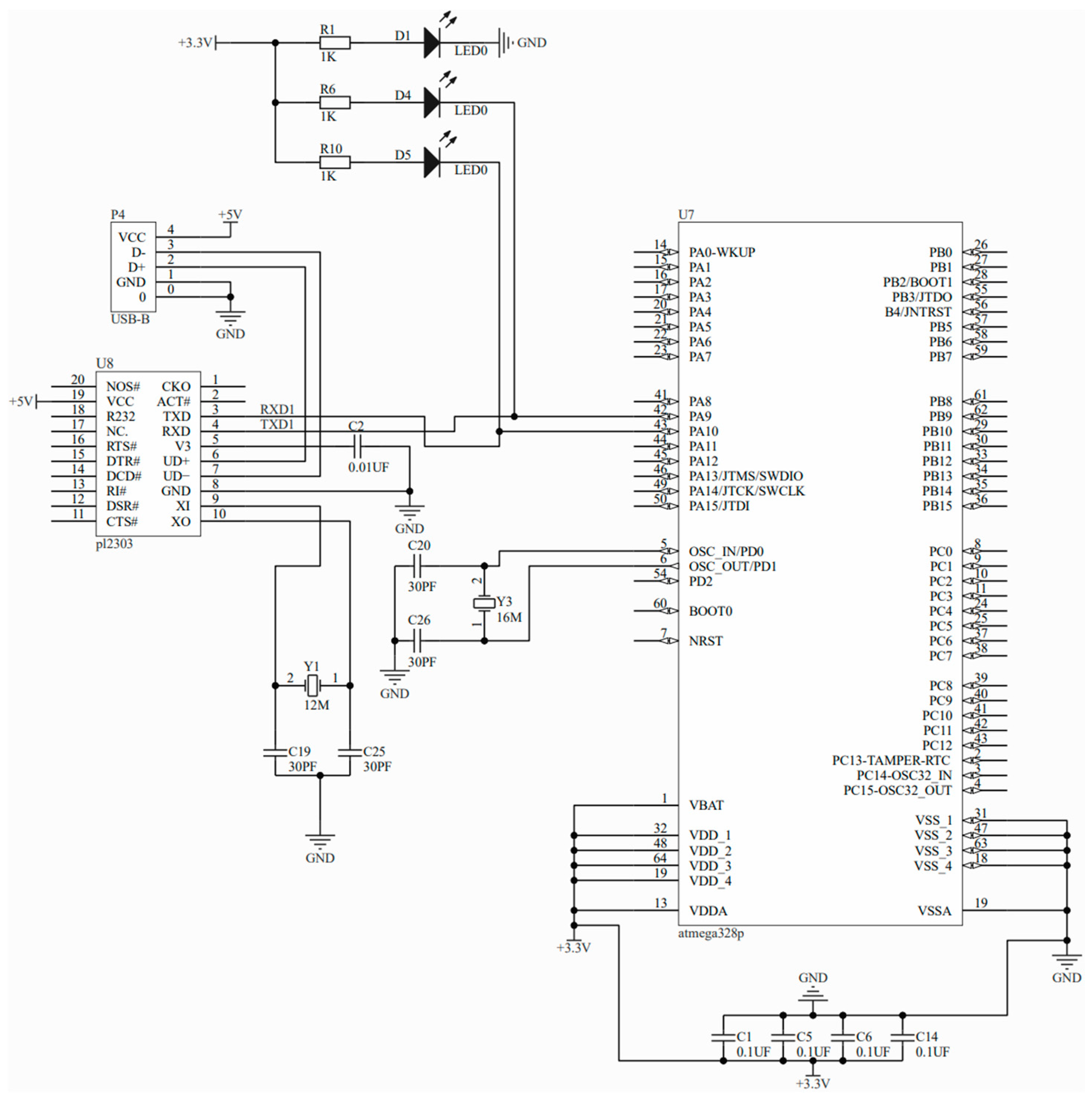

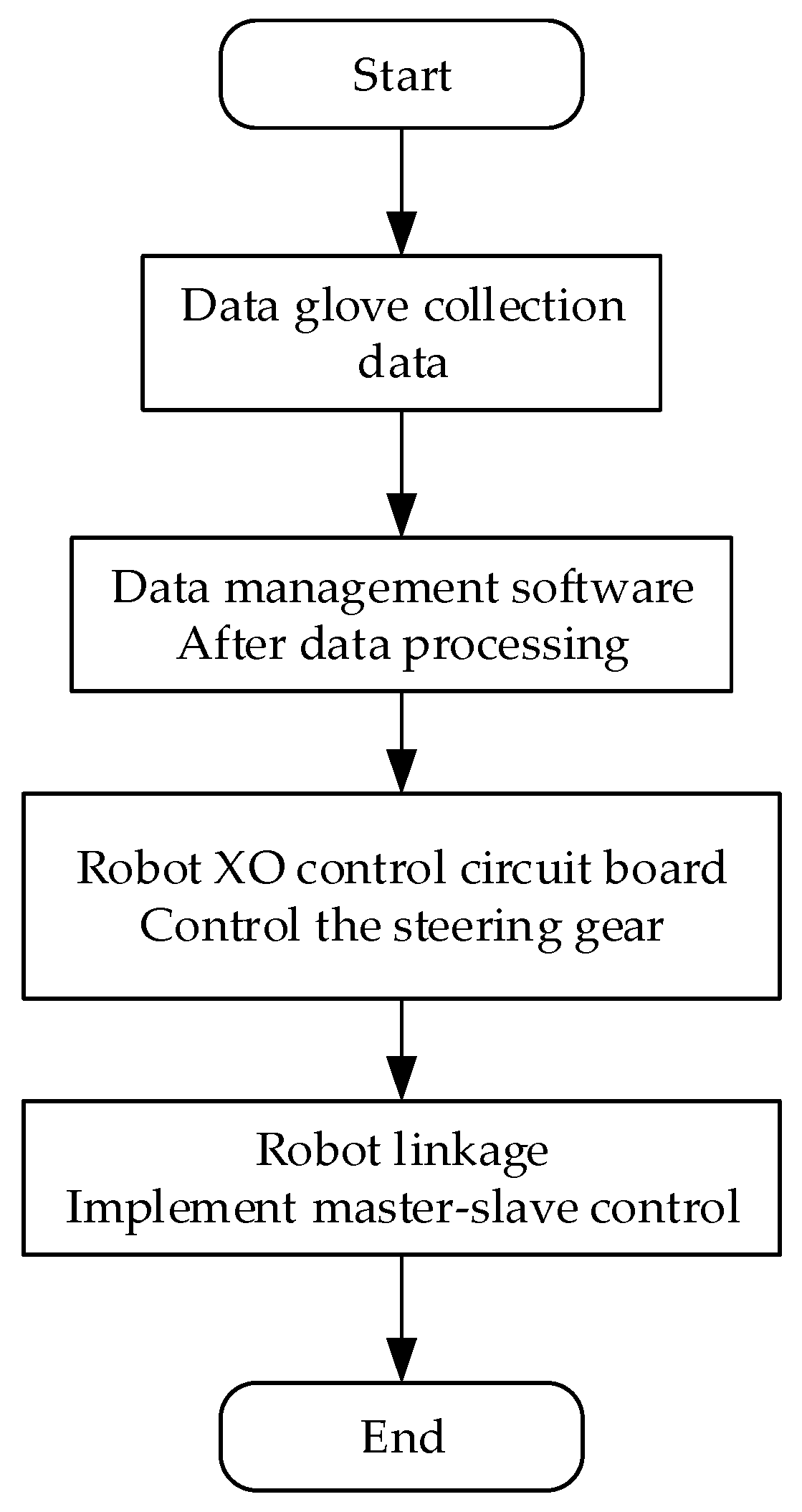

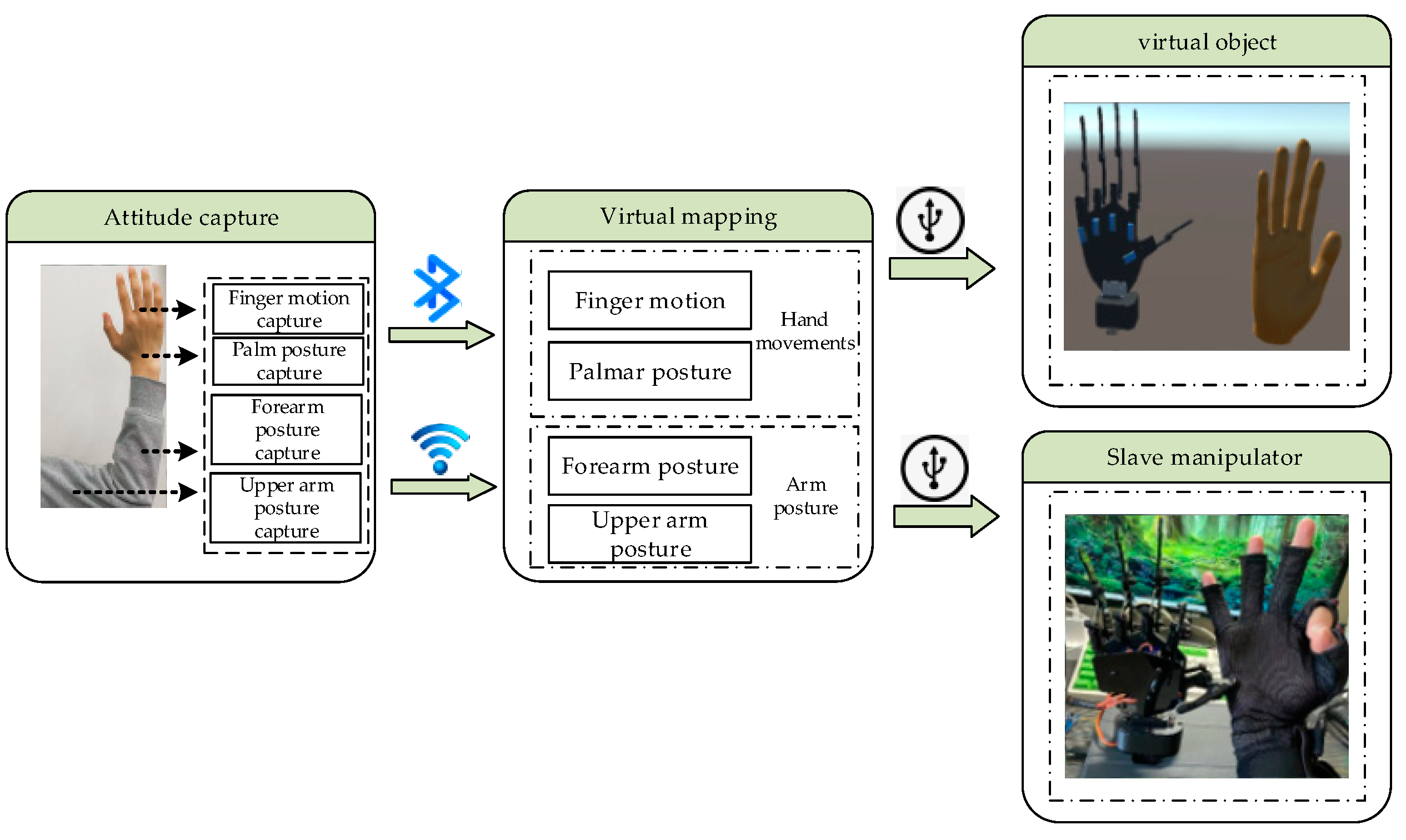

2. Structure of the System

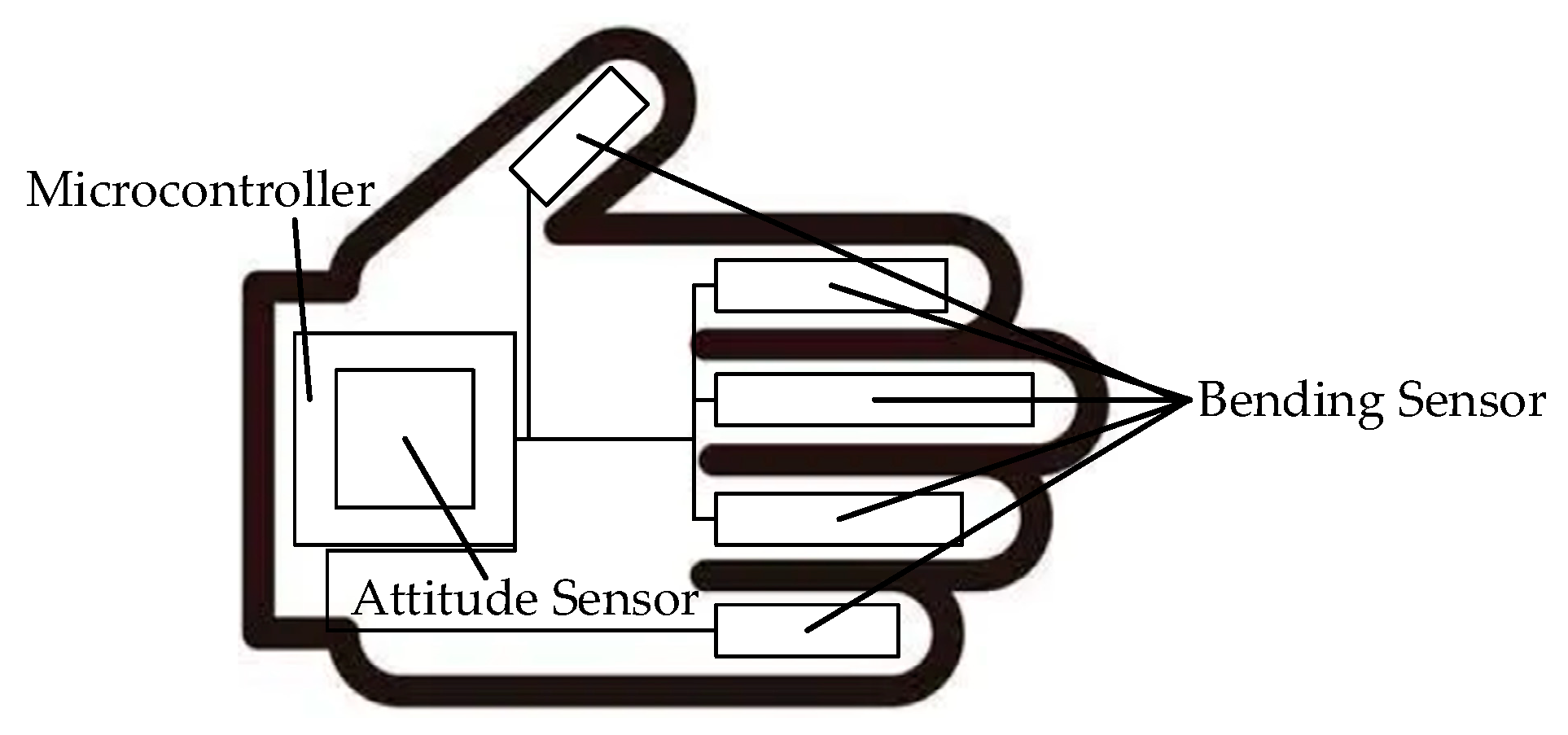

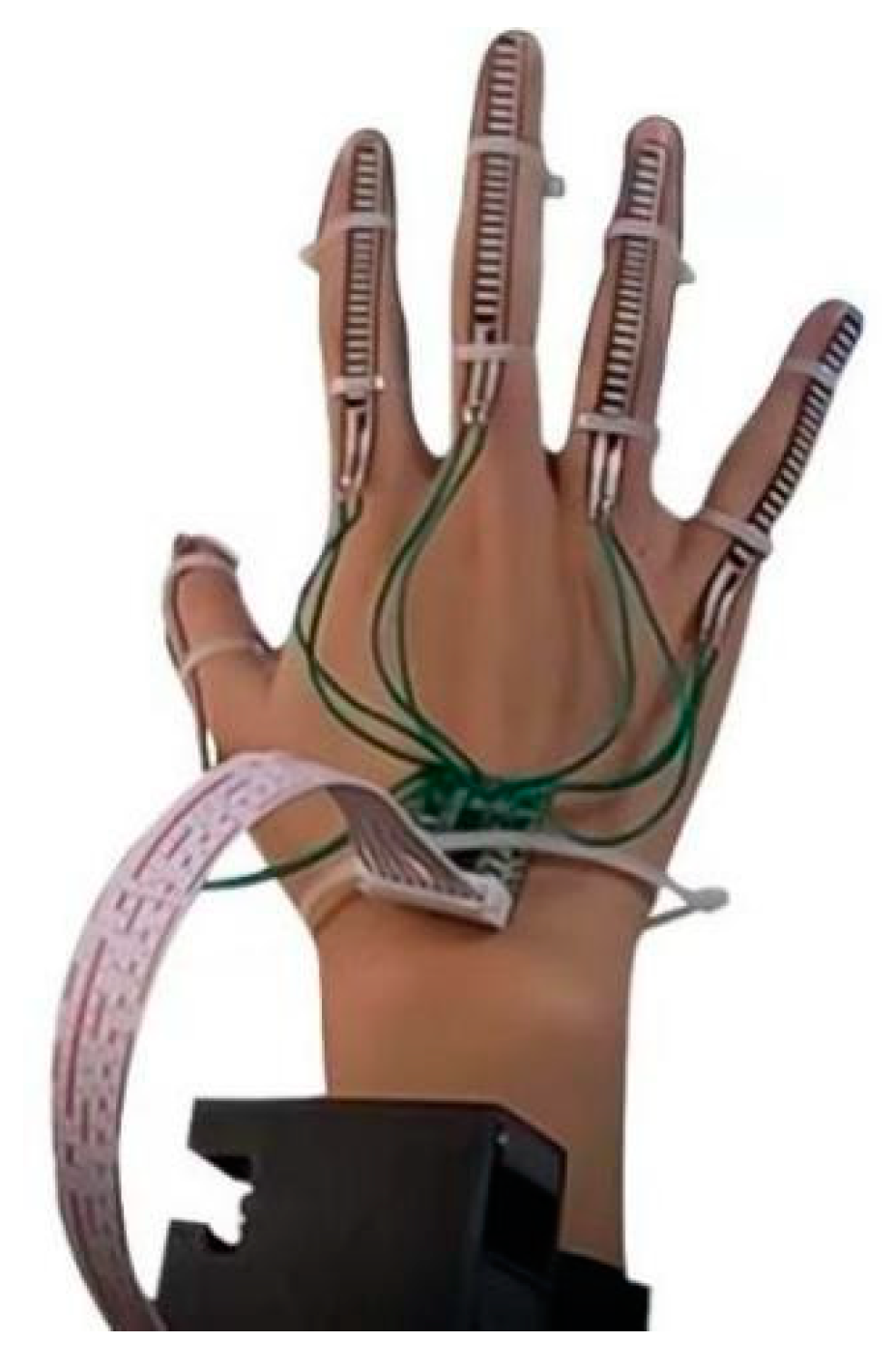

- Hand Data Acquisition Platform (HDT)

- 2.

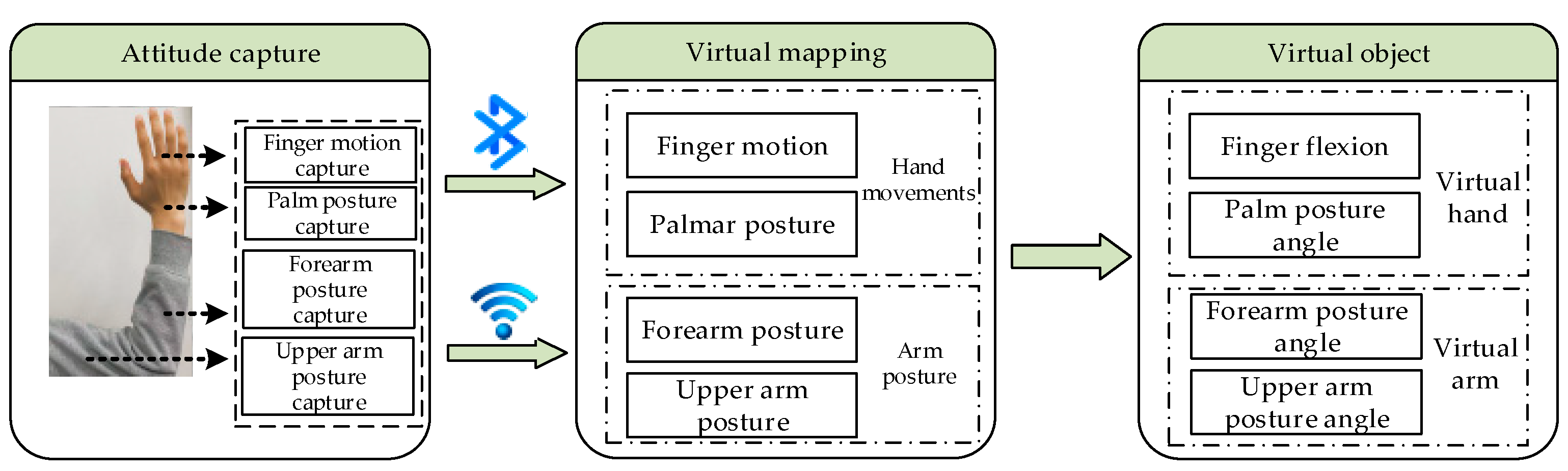

- Virtual and Real Interaction Platform Based on Unity3D

- 3.

- Master–Slave Control Platform

3. Data Analysis

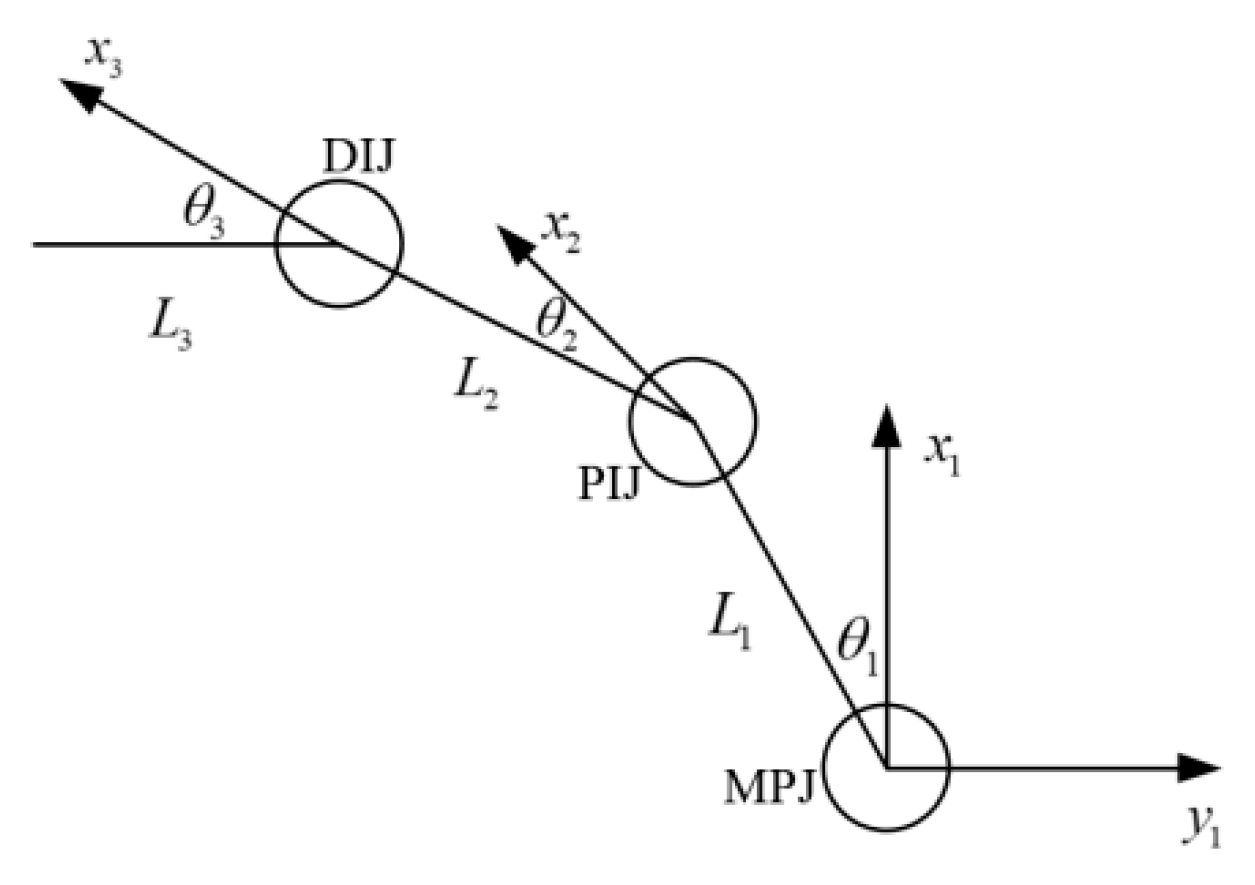

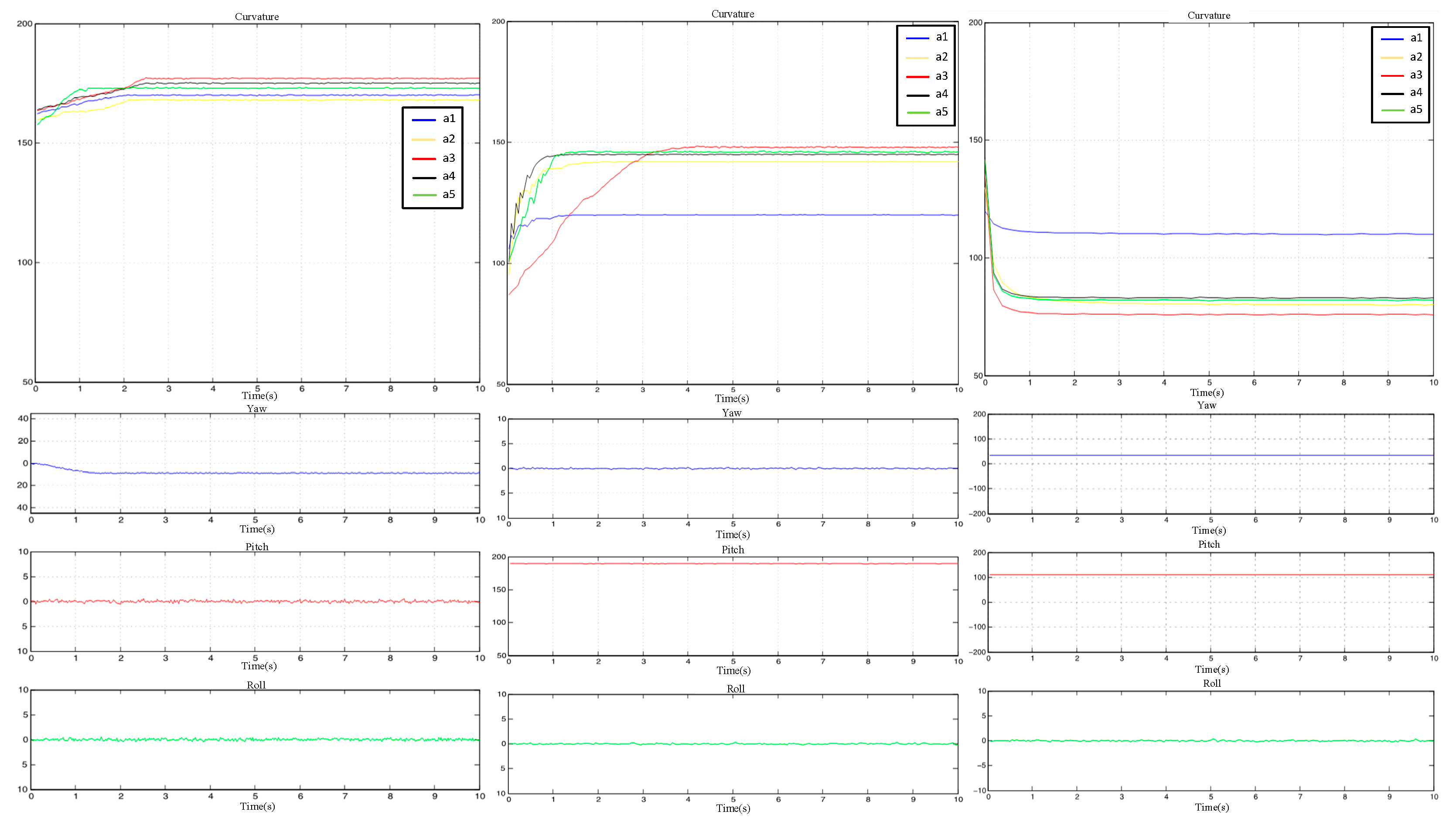

3.1. Analysis of the Bending Degrees of the Fingers

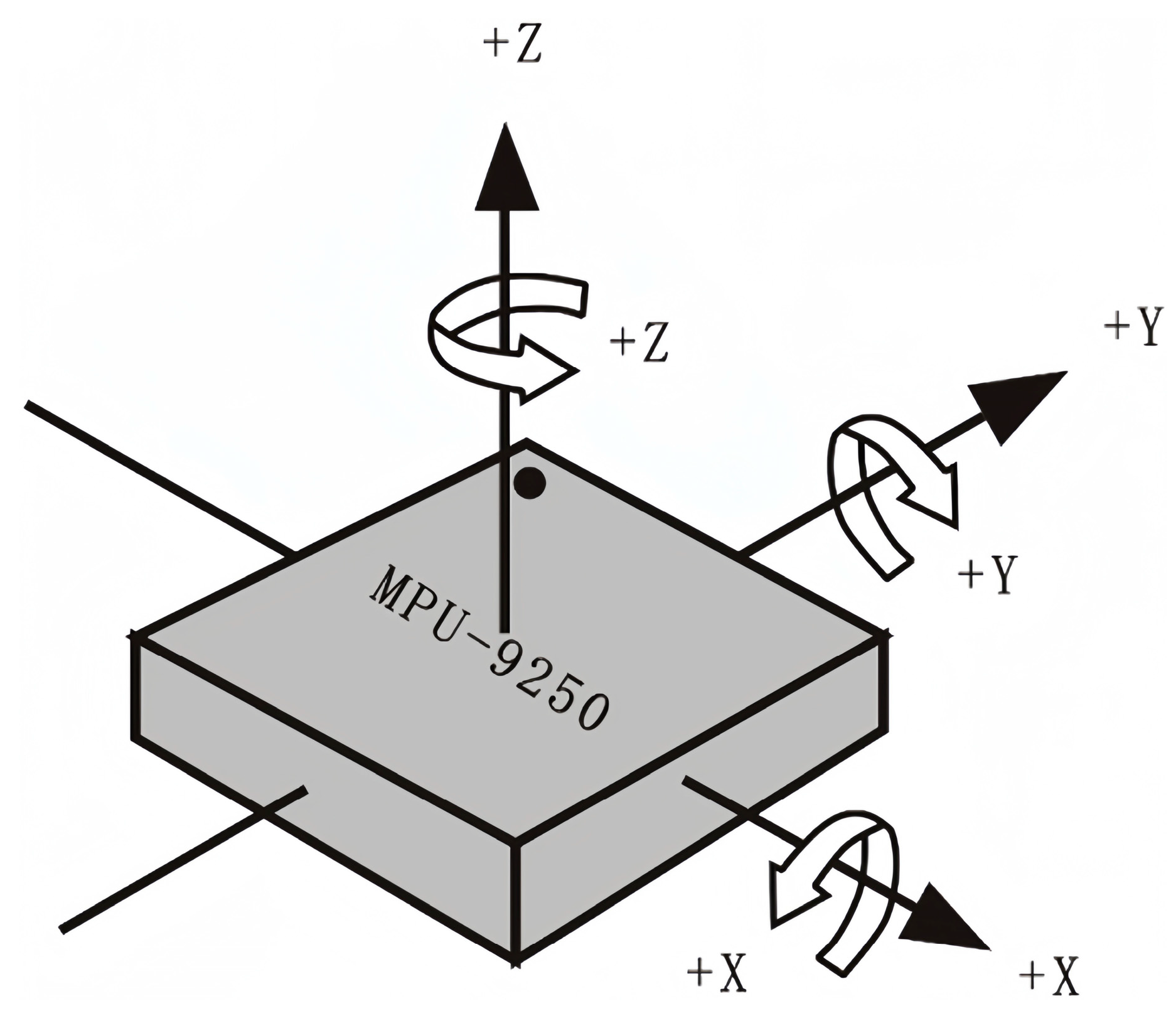

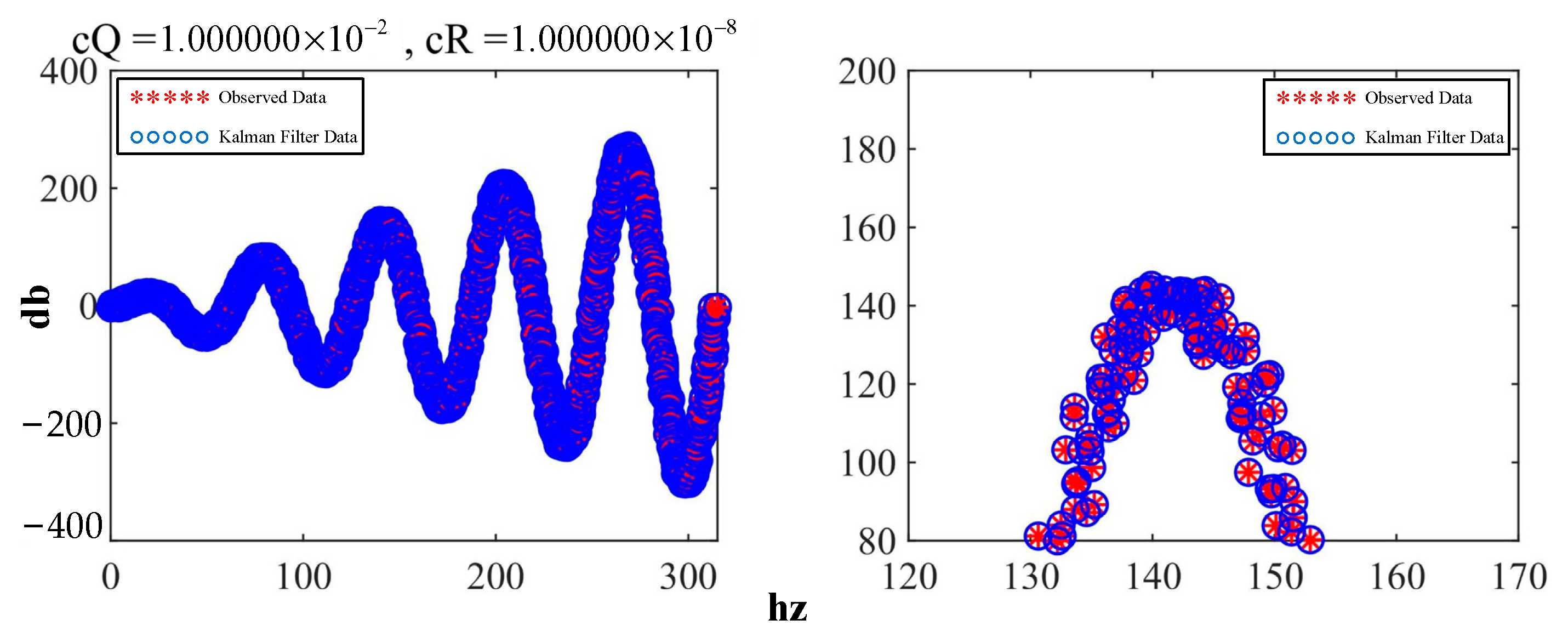

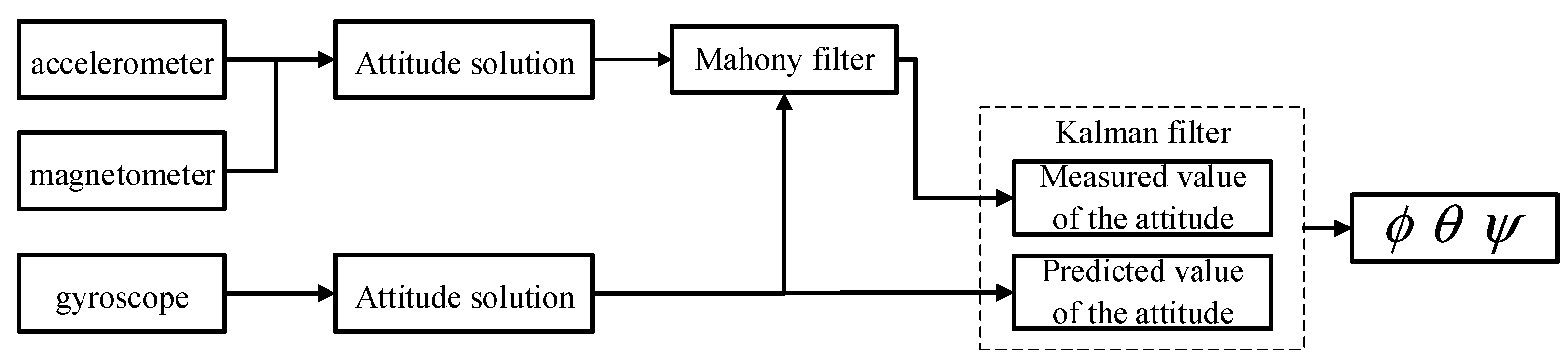

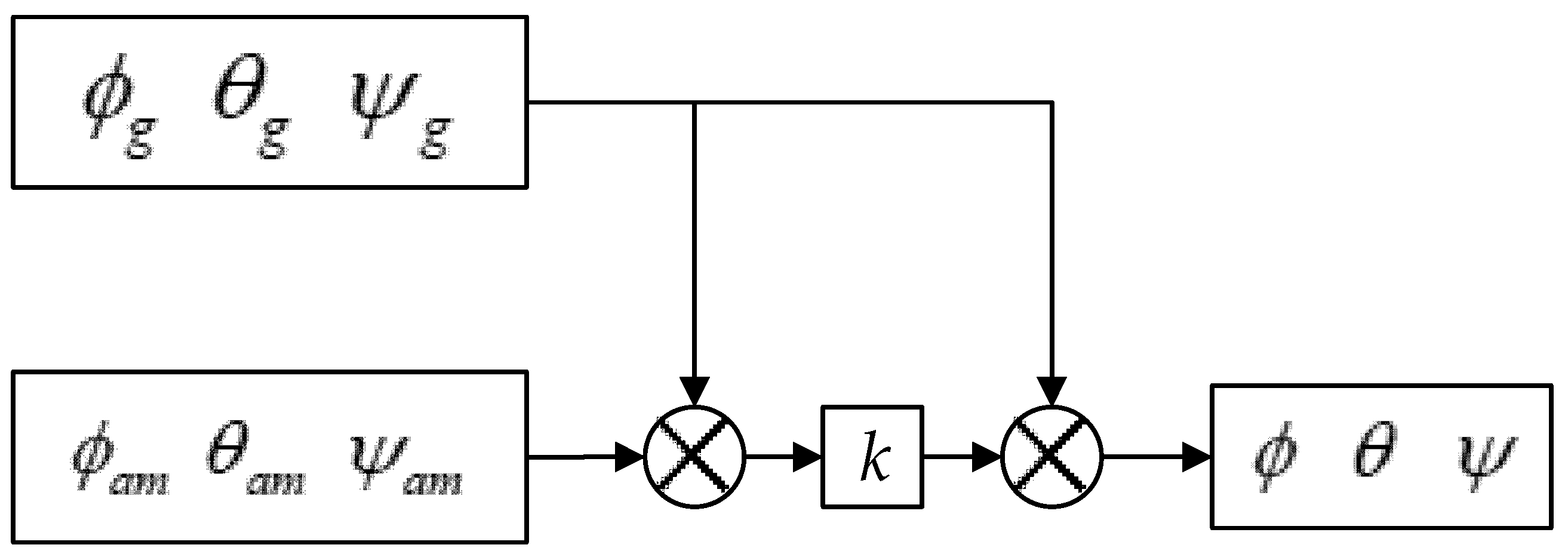

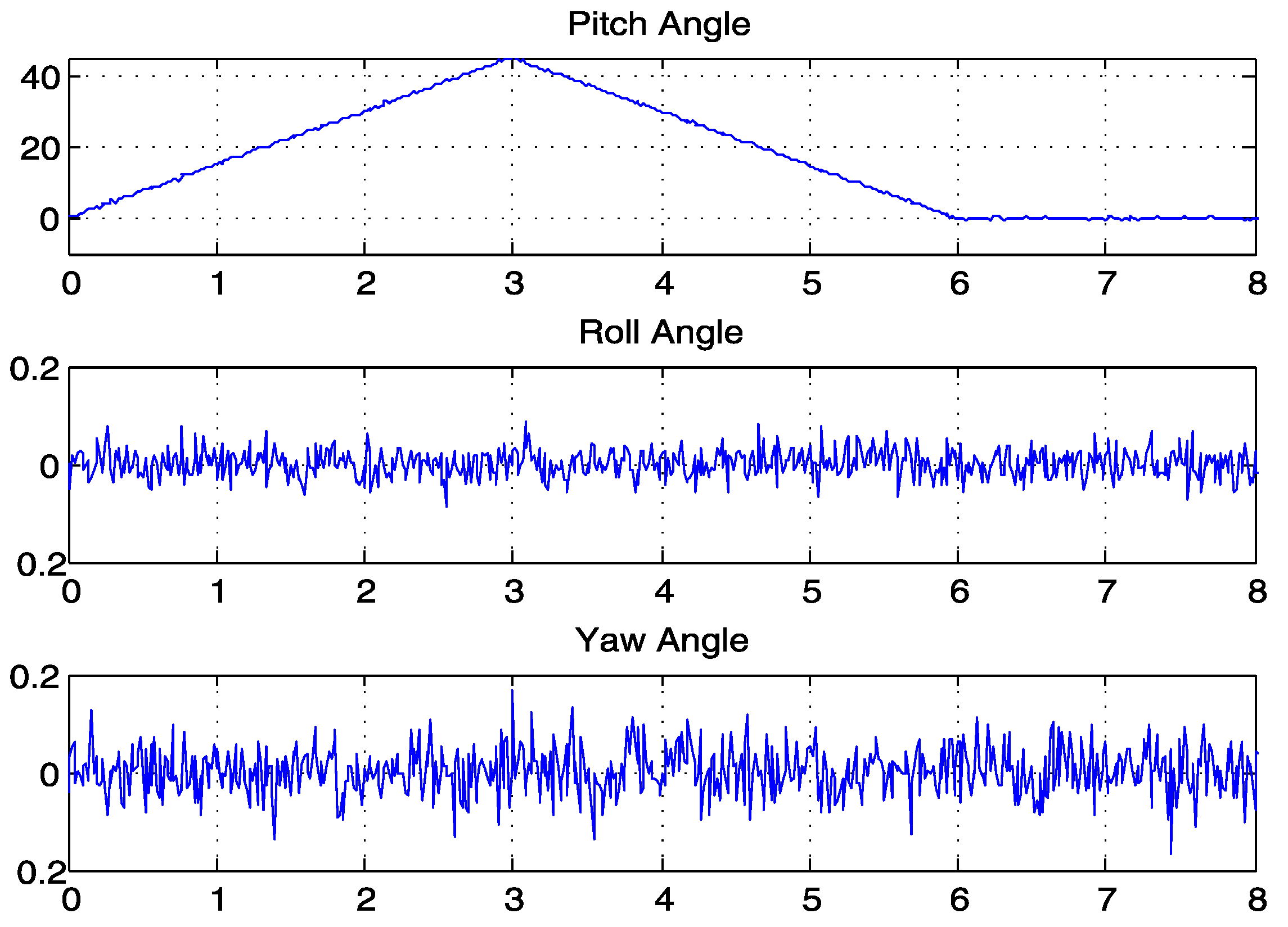

3.2. Analysis of the Eulerian Angles of the System

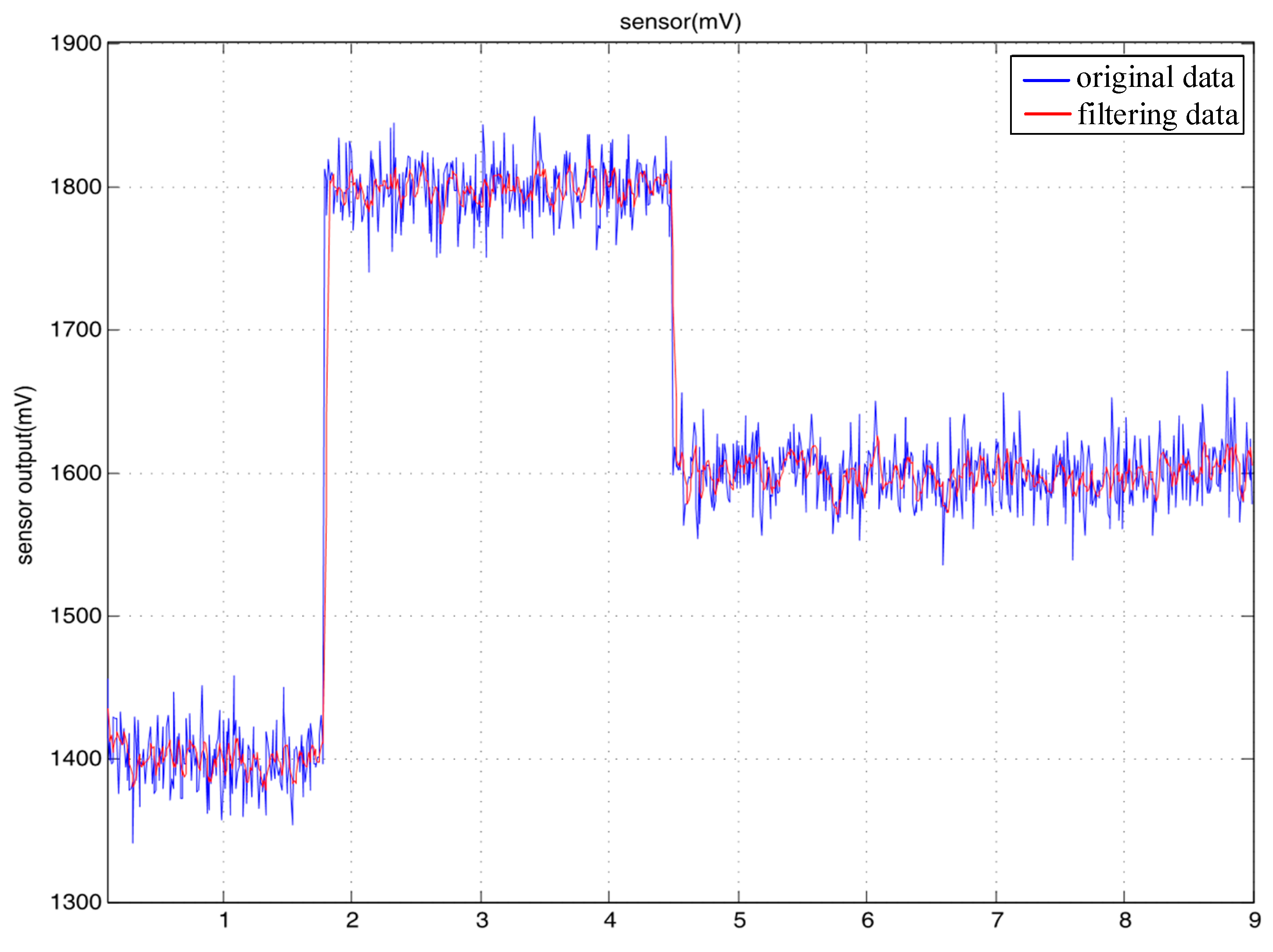

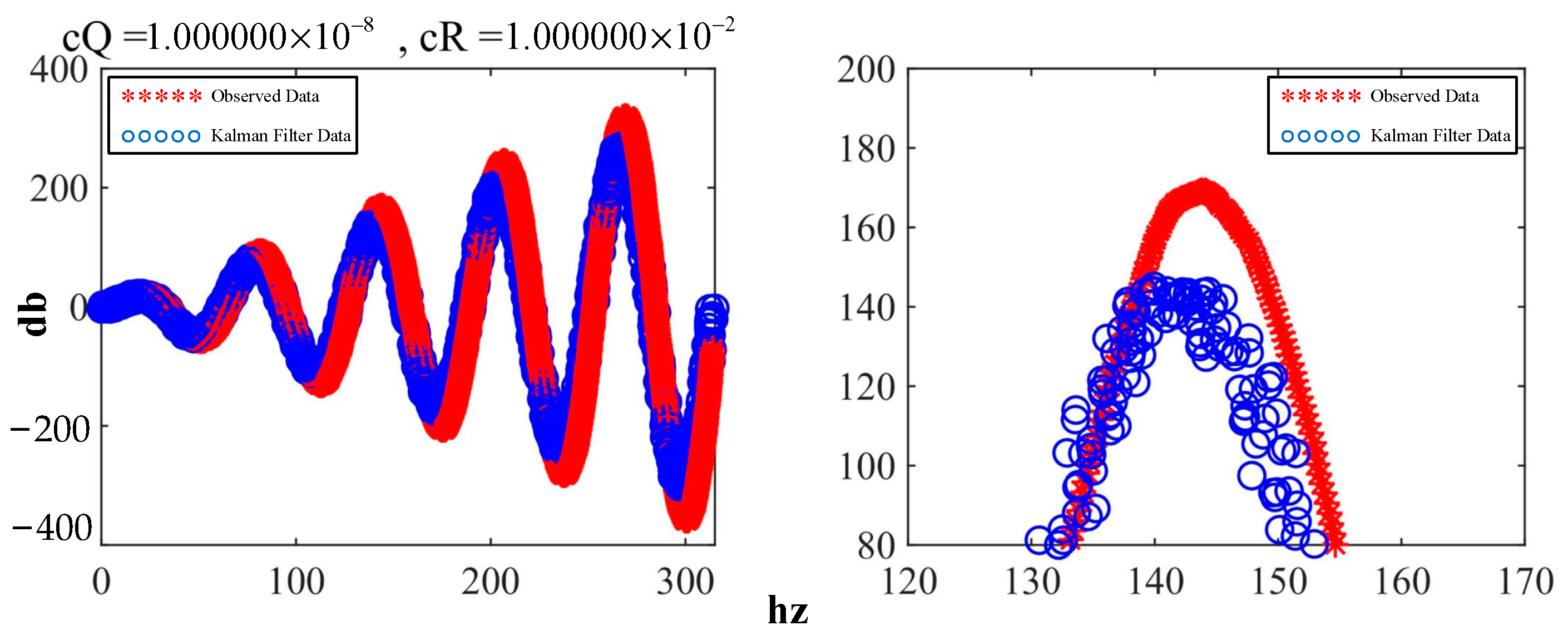

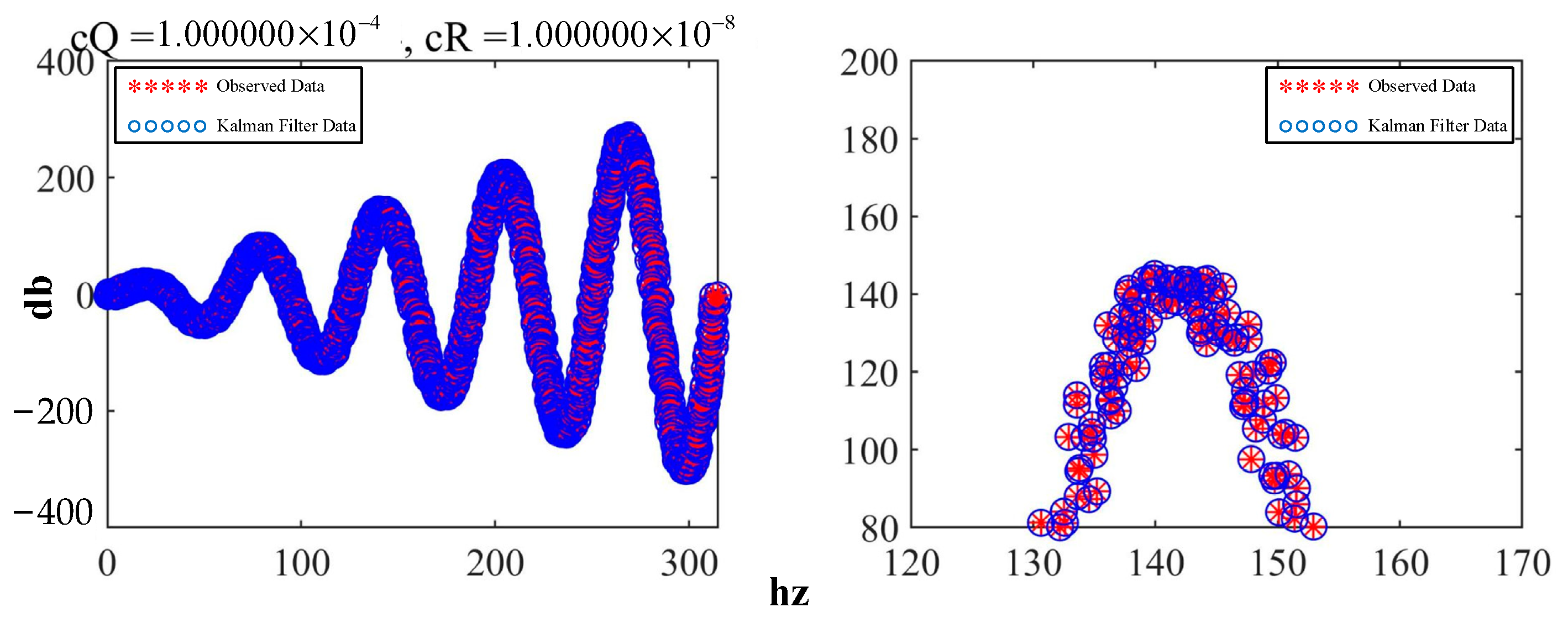

4. Data Calibration

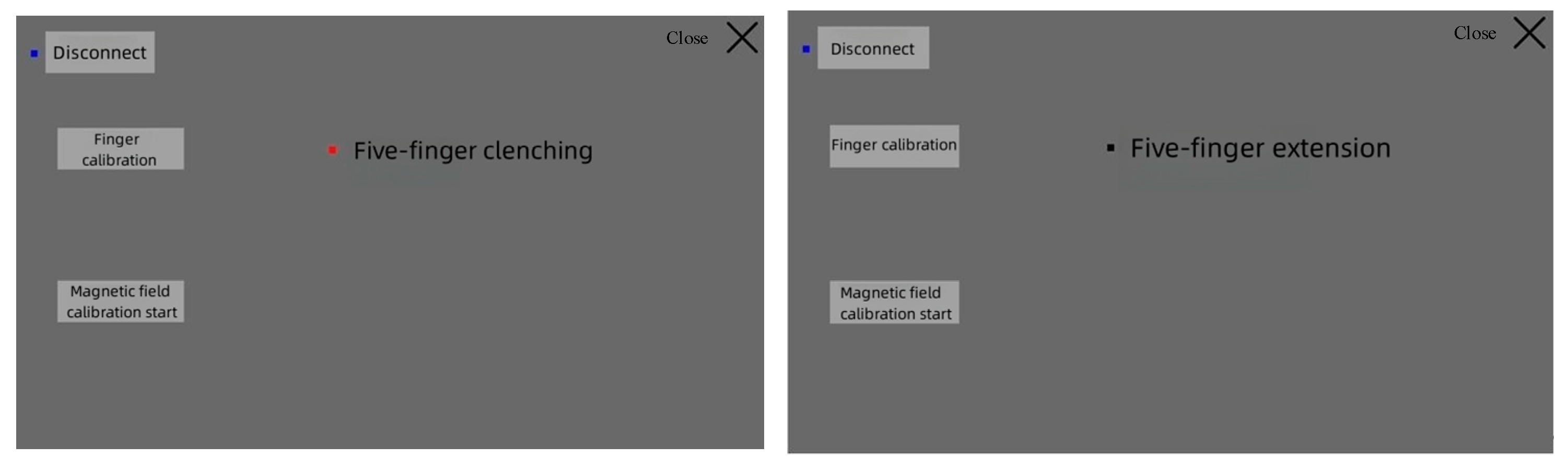

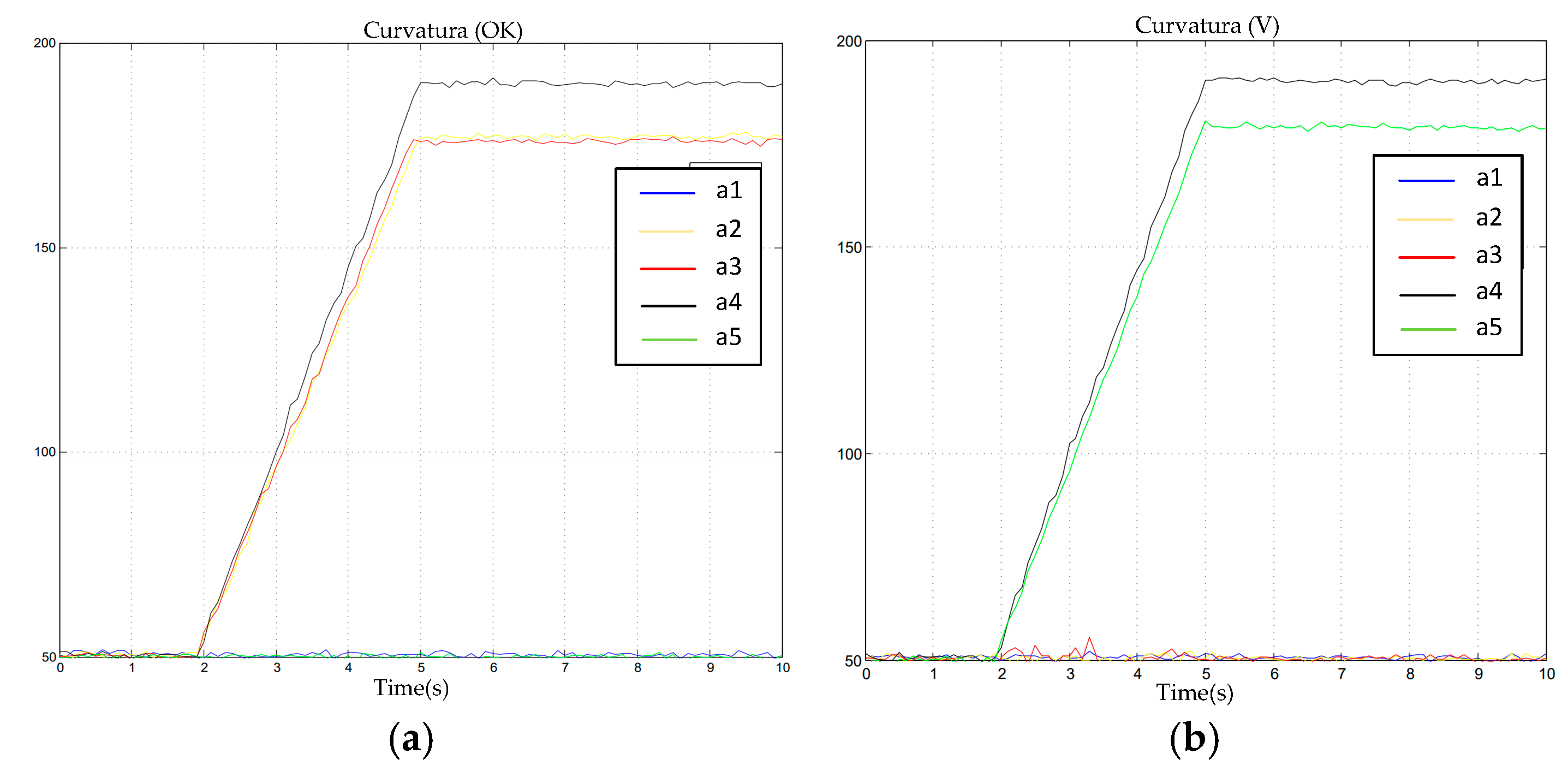

4.1. Calibration of the Bending Degrees of the Fingers

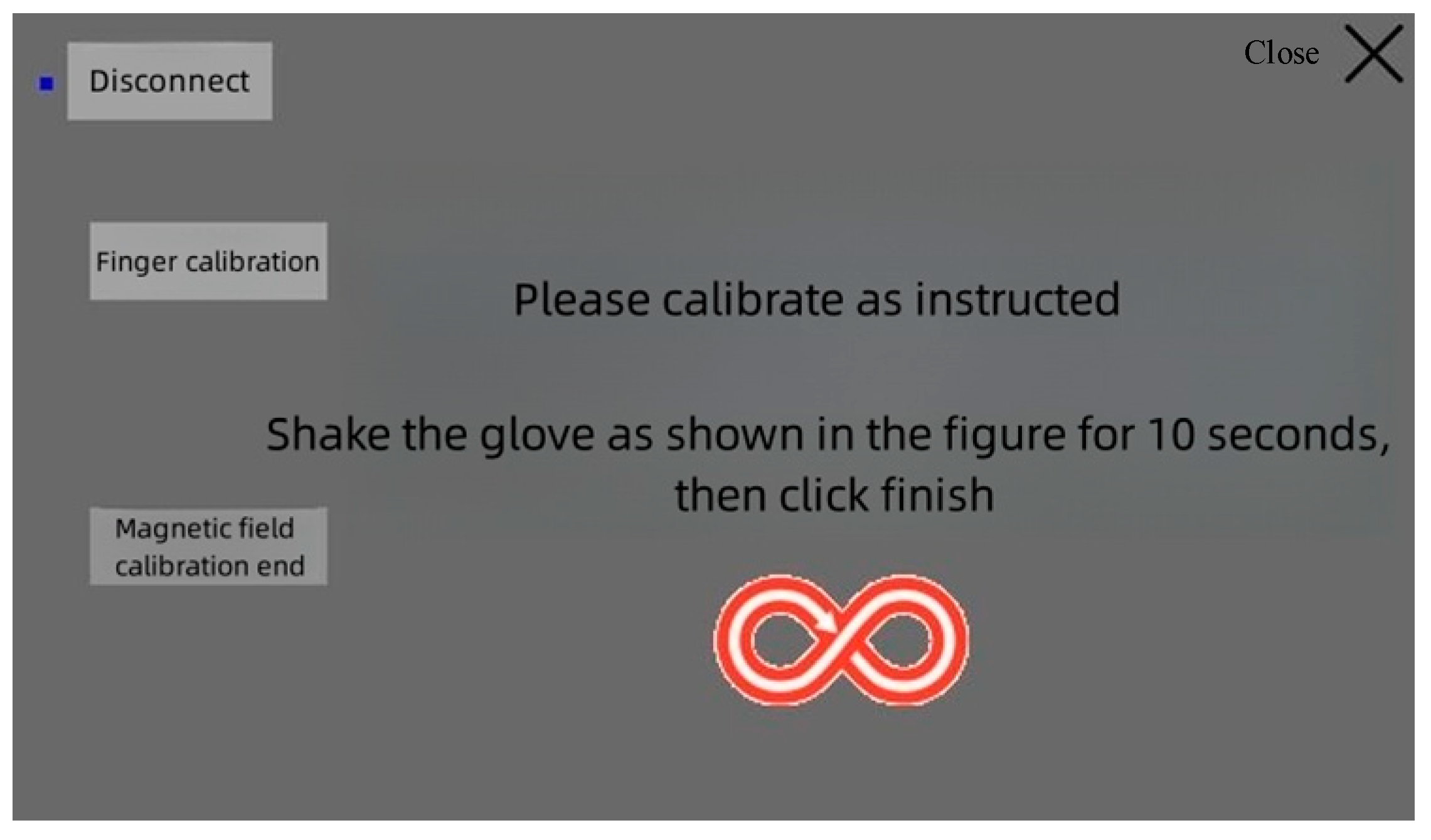

4.2. Calibration of the Eulerian Angles of the System

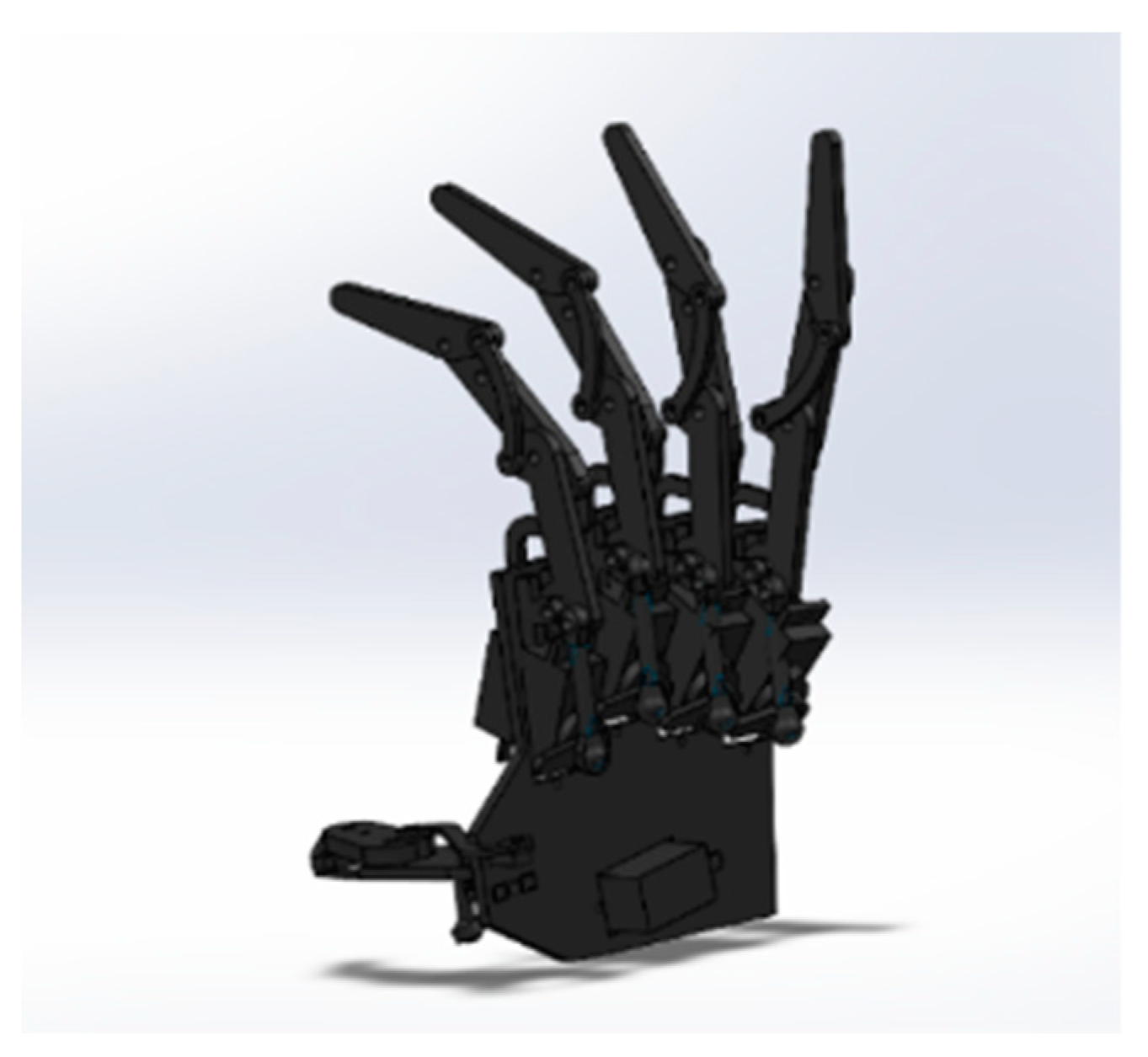

5. Design of the Mechanical Hand and Realization of Virtual–Physical Interaction

5.1. Design of the Mechanical Hand

5.2. Realization of Virtual–Physical Interaction

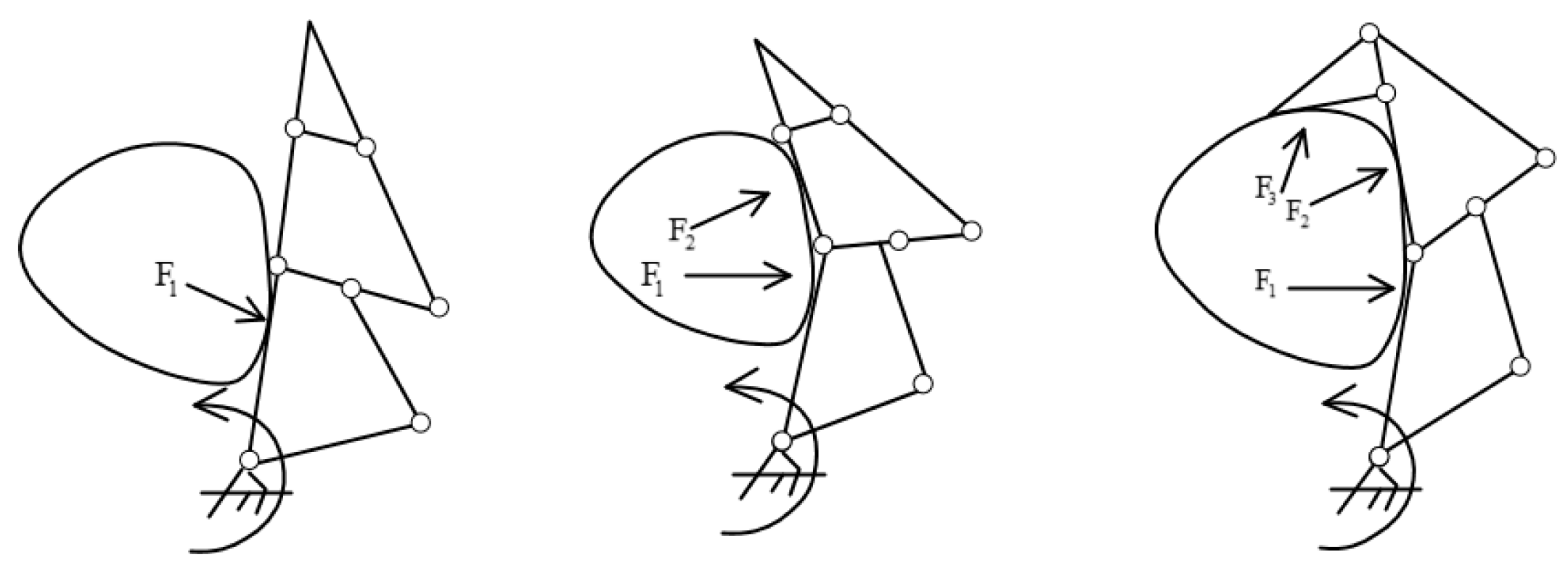

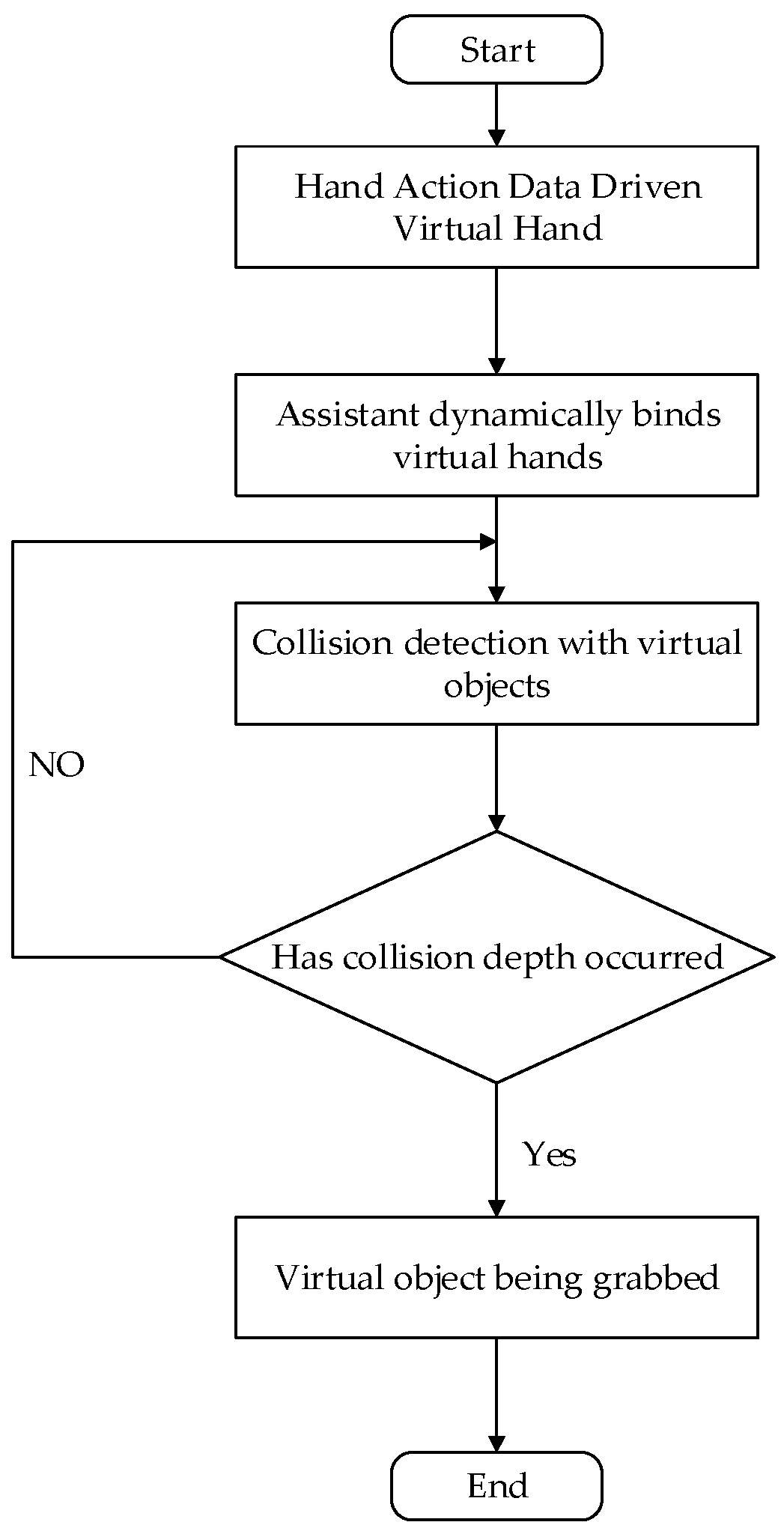

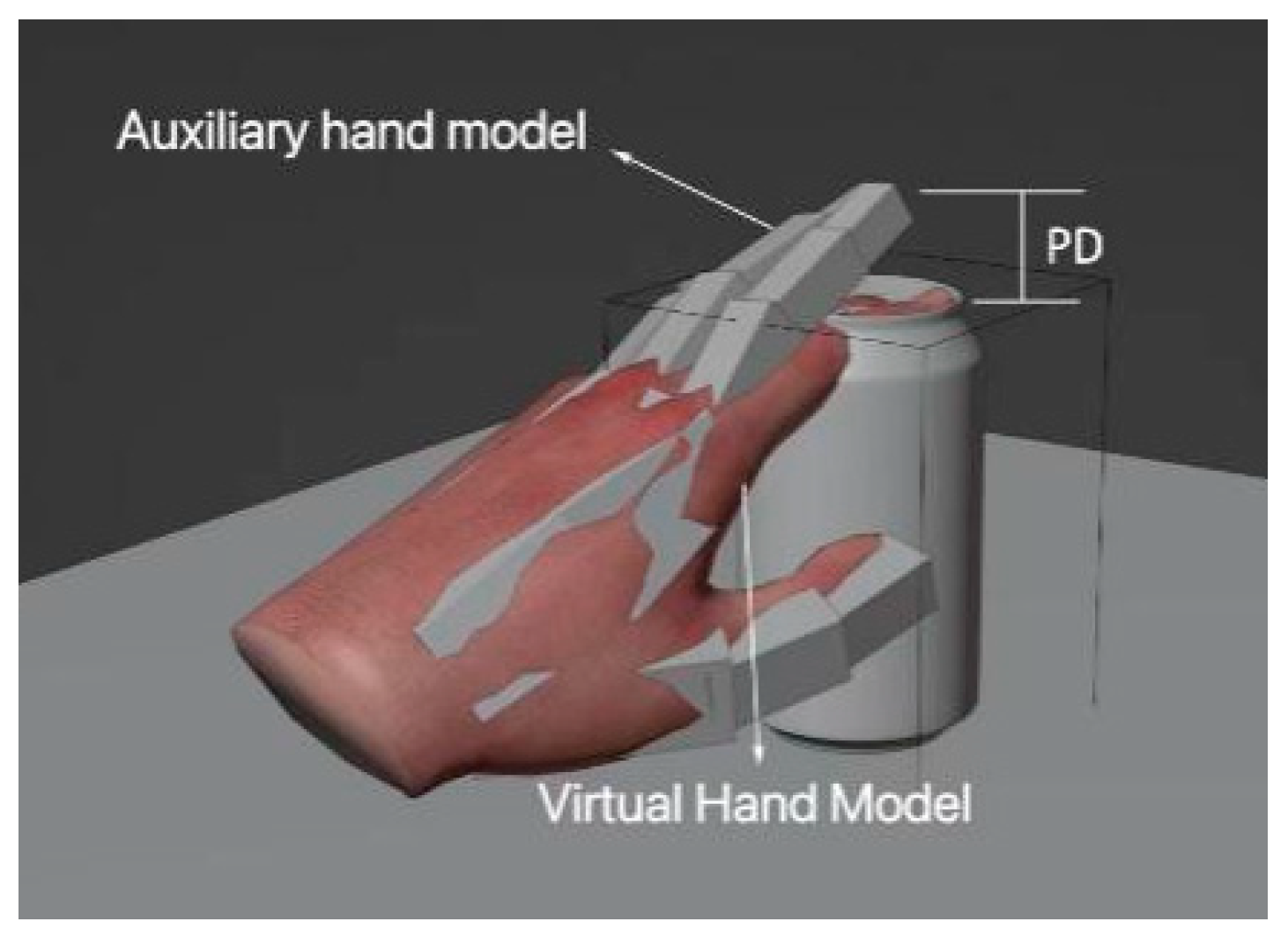

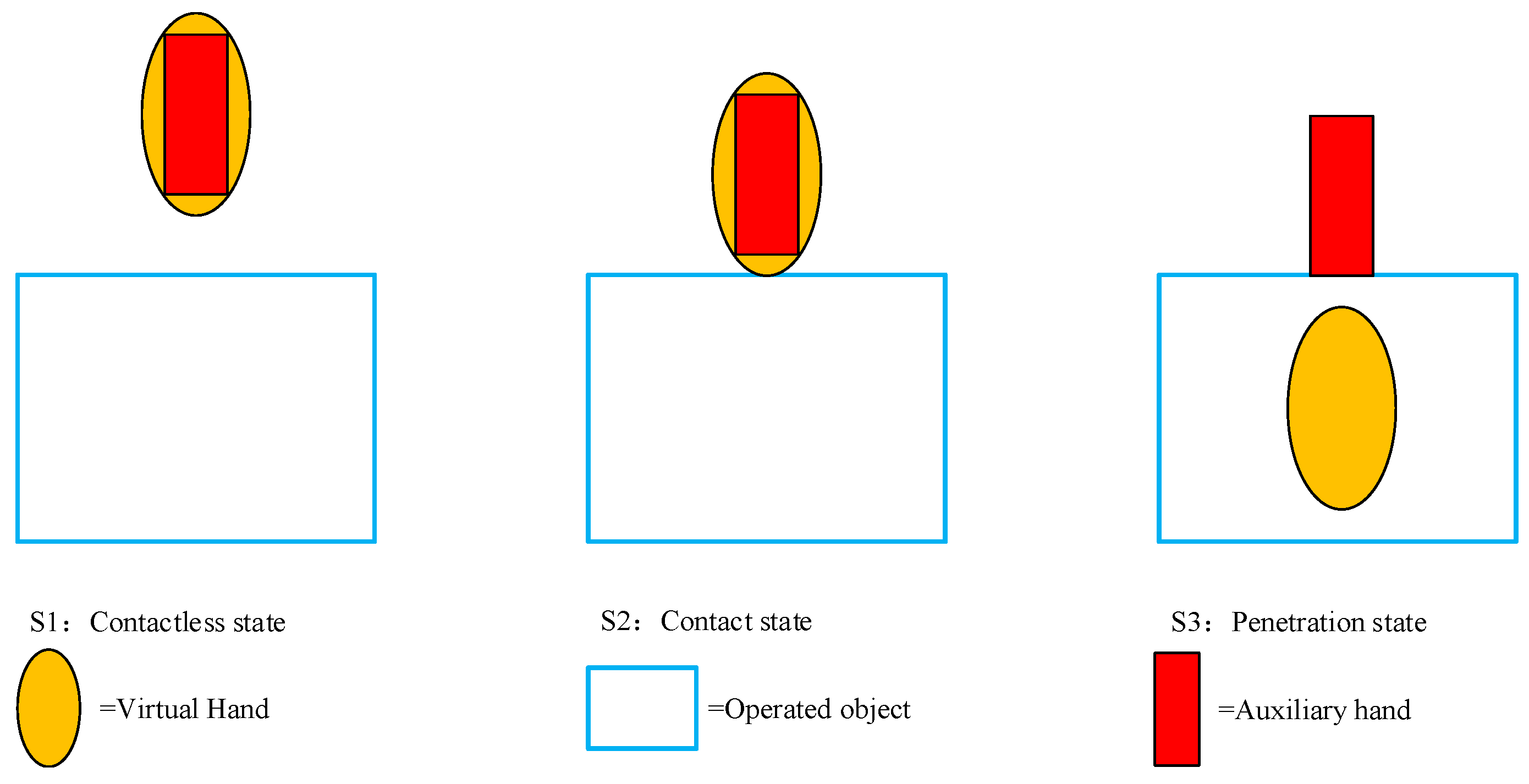

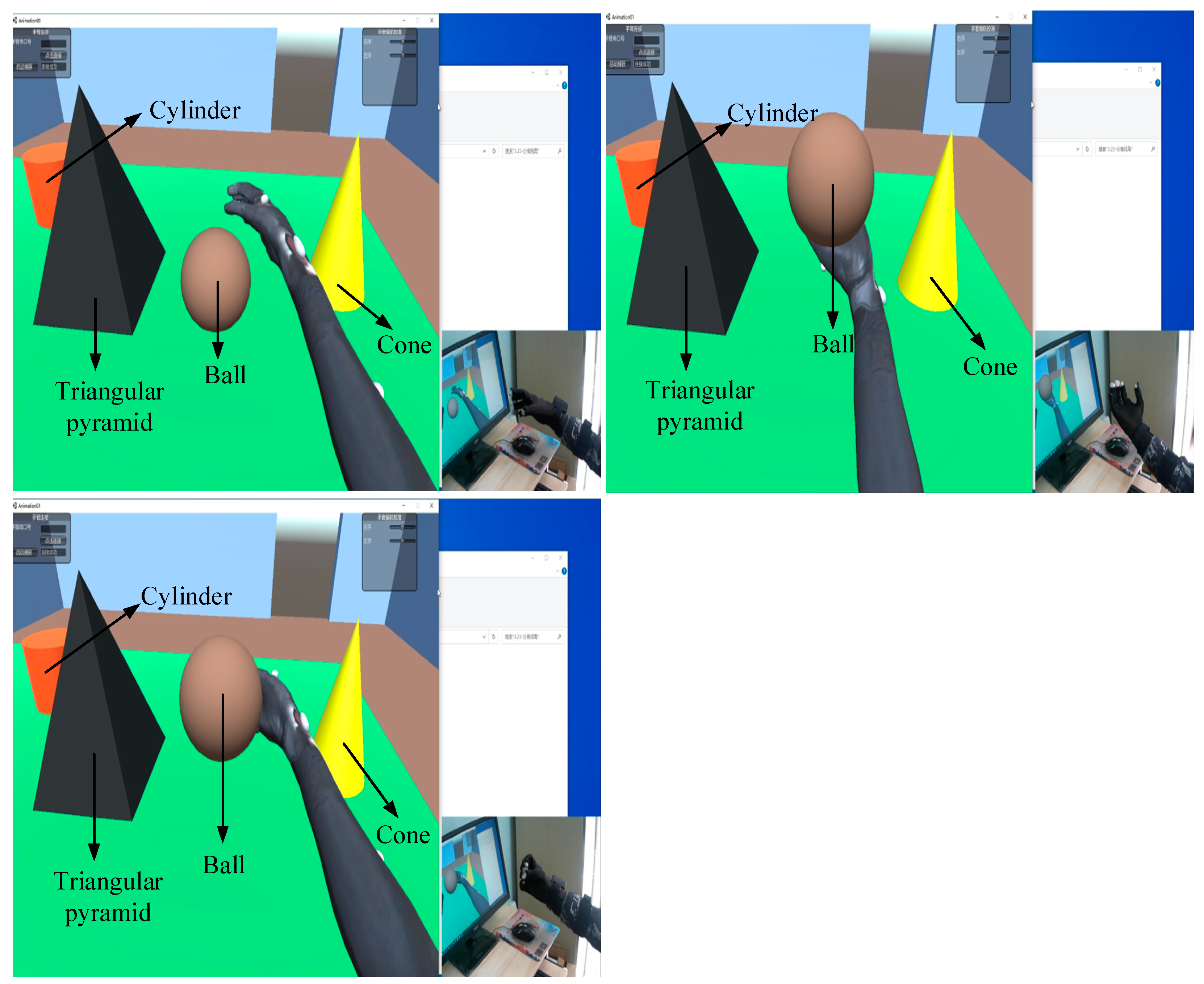

5.2.1. Realization of Virtual Grasping

- (1) Contactless State (S1): The hand is not in contact with the manipulated object.

- (2) Contact State (S2): The auxiliary hand begins to separate from the virtual hand and remains at the contact point.

- (3) Penetration State (S3): As the virtual hand penetrates the manipulated object, the auxiliary hand grasps it.

- (4) Release State (S4): The auxiliary hand moves away together with the virtual hand, thereby releasing the manipulated object.
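The four states can be read as a per-frame state machine for the auxiliary (proxy) hand. The sketch below is one plausible reading of S1 to S4, not the authors' Unity3D implementation: it assumes two per-frame inputs that this excerpt does not define, a contact flag and a signed penetration depth, plus a hypothetical depth threshold.

```python
from enum import Enum
from typing import Tuple

Vec3 = Tuple[float, float, float]


class ProxyState(Enum):
    CONTACTLESS = "S1"
    CONTACT = "S2"
    PENETRATION = "S3"
    RELEASE = "S4"


def next_state(state: ProxyState, in_contact: bool, penetration_depth: float,
               depth_threshold: float = 0.002) -> ProxyState:
    """Advance the proxy (auxiliary) hand by one frame.

    in_contact: hypothetical flag, True while the virtual hand touches the object.
    penetration_depth: hypothetical signed depth (m) of the virtual hand inside
        the object; values <= 0 mean the virtual hand is outside the surface.
    depth_threshold: assumed tolerance before contact counts as penetration.
    """
    if state is ProxyState.CONTACTLESS:
        return ProxyState.CONTACT if in_contact else state
    if state is ProxyState.CONTACT:
        if penetration_depth > depth_threshold:
            return ProxyState.PENETRATION
        # Virtual hand backed off before penetrating: drop back to S1.
        return state if in_contact else ProxyState.CONTACTLESS
    if state is ProxyState.PENETRATION:
        # Keep holding the object while the virtual hand is still inside it.
        return state if penetration_depth > 0.0 else ProxyState.RELEASE
    # RELEASE: once the proxy has followed the virtual hand off the object, go idle.
    return state if in_contact else ProxyState.CONTACTLESS


def proxy_position(state: ProxyState, virtual_pos: Vec3, contact_pos: Vec3) -> Vec3:
    """S2/S3: the proxy stays at the contact point; S1/S4: it tracks the virtual hand."""
    if state in (ProxyState.CONTACT, ProxyState.PENETRATION):
        return contact_pos
    return virtual_pos
```

For example, starting in S1 and feeding the frames (no contact), (contact), (depth 0.01 m), (depth 0.005 m), (outside, no contact), (outside, no contact) yields S1, S2, S3, S3, S4, S1. Pinning the proxy at the contact point in S2 and S3 is what keeps the rendered hand from visibly sinking into the object while the tracked hand does.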

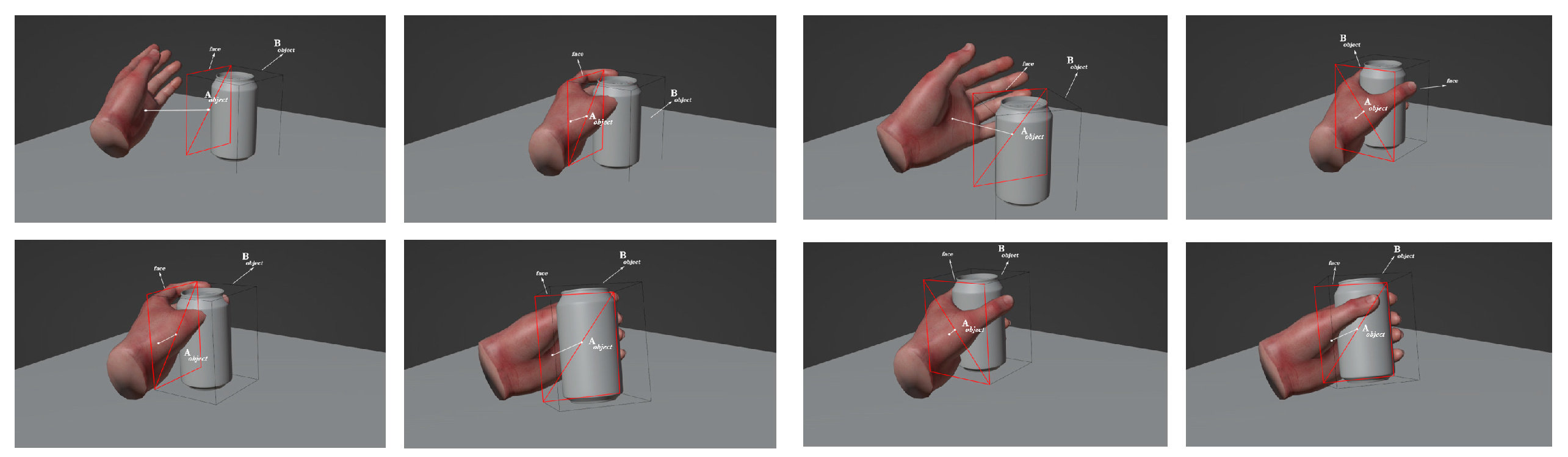

5.2.2. Realization of Stable Grasping

6. System Debugging and Experimentation

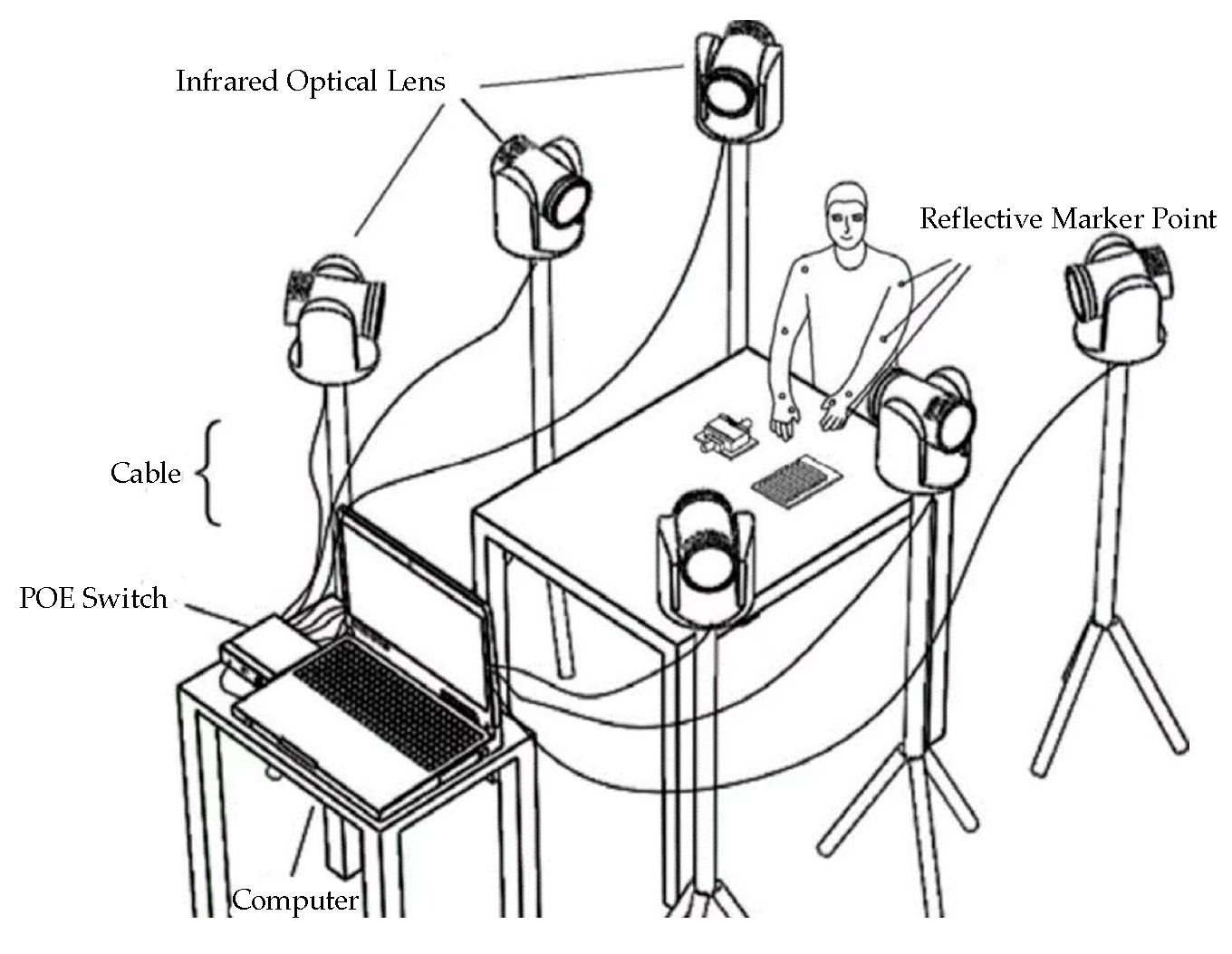

6.1. Establishment of the Data Platform

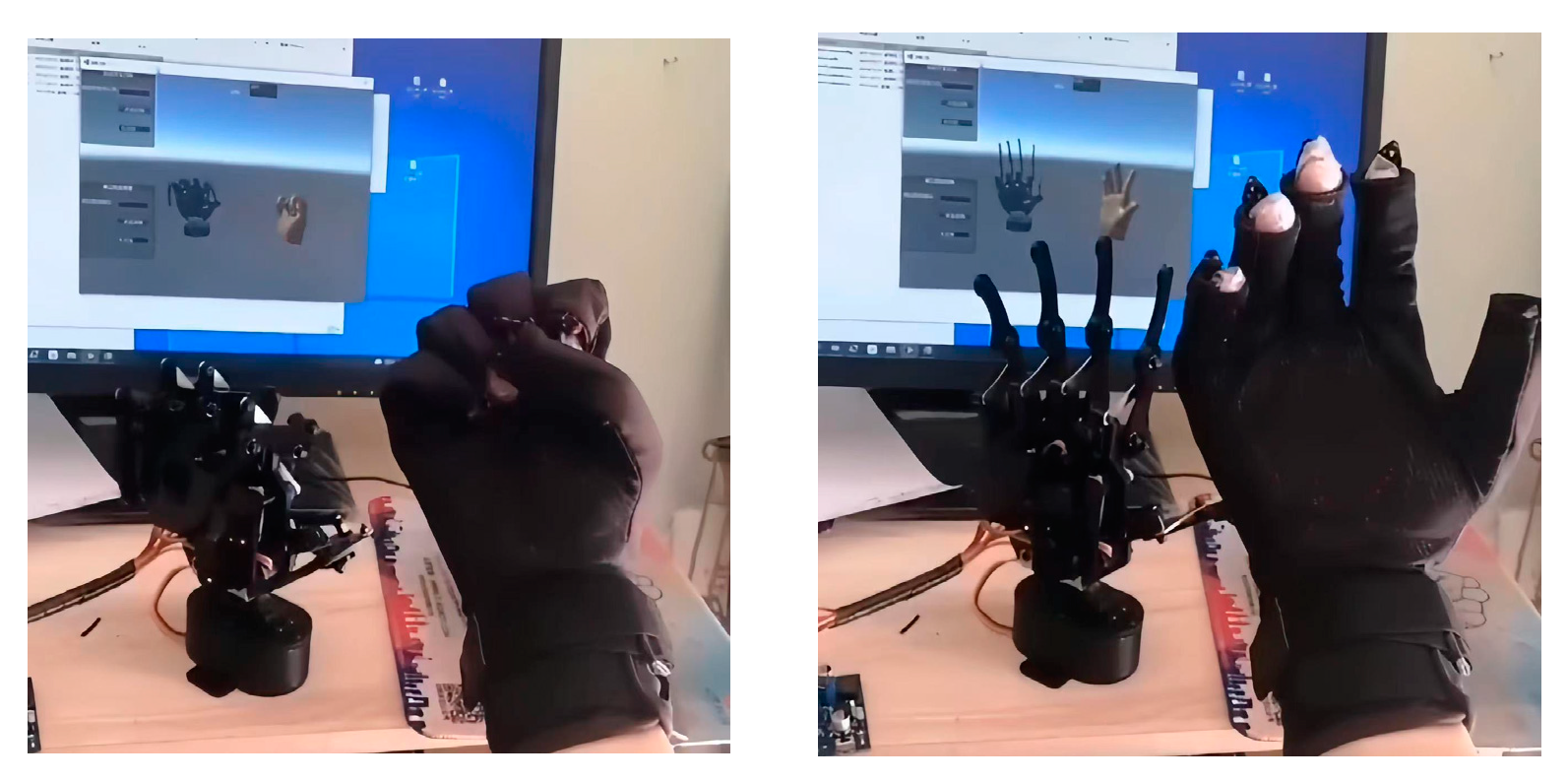

6.2. Testing the Interactive Virtual Reality System

6.3. Debugging of the Overall System

7. Conclusions

- (1) Given the urgent need for hand protection and the common shortcomings of existing hand master–slave control technologies, we proposed a design scheme for a hand master–slave control system based on virtual reality technology. We analyzed and identified the four main components of the system, namely, the hand data collection platform, the back-end data management platform, the Unity3D virtual–physical interaction platform, and the master–slave control platform, and explained the working principle and design of each in detail.

- (2) In line with the overall design scheme and requirements, we designed and described in detail the system’s hardware structure and its constituent components. The hardware comprised a data glove and a five-fingered bionic mechanical hand, while the software comprised data management software and a virtual interaction program; the overall system was then debugged and put into operation.

- (3) For hand posture calculation, we designed a data analysis model for the finger-bending degrees and the palm posture angles. For data processing, we proposed combining complementary filtering with Kalman filtering, so that the strengths of each algorithm compensate for the shortcomings of the other.

- (4) For virtual–real hand interaction, we proposed a proxy hand solution, which solved the problem of mutual penetration between the virtual hand and virtual objects during virtual interaction. To judge whether a grasp is stable, we proposed two judgement conditions, which address the lack of immersion caused by the absence of physical constraints in the virtual world.
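Conclusion (3) mentions combining complementary and Kalman filtering but does not give the fusion scheme in this excerpt. The sketch below therefore shows only the two building blocks on a single roll angle, a fixed-gain complementary filter and a one-state Kalman filter, each blending a gyroscope rate with an accelerometer-derived angle. The function names, the gain `alpha`, and the noise variances `q` and `r` are assumptions for illustration, not values from the paper.

```python
import math


def accel_roll(ay: float, az: float) -> float:
    """Roll angle in degrees from the gravity components seen by the accelerometer."""
    return math.degrees(math.atan2(ay, az))


def complementary_step(angle: float, gyro_rate: float, accel_angle: float,
                       dt: float, alpha: float = 0.98) -> float:
    """Fixed-gain blend: trust the integrated gyro short-term, the accelerometer long-term."""
    return alpha * (angle + gyro_rate * dt) + (1.0 - alpha) * accel_angle


class ScalarKalman:
    """One-state Kalman filter for a single angle in degrees.

    Predicts with the gyroscope rate and corrects with the accelerometer angle.
    q and r are assumed process and measurement noise variances.
    """

    def __init__(self, q: float = 1e-3, r: float = 3e-2,
                 angle: float = 0.0, p: float = 1.0) -> None:
        self.q, self.r = q, r
        self.angle, self.p = angle, p

    def step(self, gyro_rate: float, accel_angle: float, dt: float) -> float:
        # Predict: integrate the rate; the estimate's variance grows by q.
        self.angle += gyro_rate * dt
        self.p += self.q
        # Update: the gain k sets the gyro/accelerometer weighting from p and r.
        k = self.p / (self.p + self.r)
        self.angle += k * (accel_angle - self.angle)
        self.p *= 1.0 - k
        return self.angle
```

The design difference is in the gain: the complementary filter uses a constant `alpha`, so a constant gyro bias `b` leaves a steady error of about `alpha * b * dt / (1 - alpha)`, whereas the Kalman gain is computed each step from the assumed noise variances.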



| Number of Fingers | Joints per Finger | Total Degrees of Freedom | Advantages | Disadvantages |
|---|---|---|---|---|
| 2 | 3 | 6 | Simple control; no redundancy | Poor grasping effect |
| 3 | 3 | 9 | Good grasping effect | Poor adaptive grasping |
| 5 | 3 | 5 | Strong adaptive effect; no redundancy | Average grasping effect |
| 5 | 3 | 15 | Strong grasping ability; good grasping effect | Complex control; redundancy |
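The degrees-of-freedom column in the table above follows from the finger and joint counts. Assuming, as the numbers imply, that every joint of a fully actuated finger is driven independently, the total is the product of the number of fingers $n_\text{f}$ and joints per finger $n_\text{j}$; the 5-DOF five-finger row is consistent with an underactuated hand whose three joints per finger are coupled to a single drive (an inference from the figures, not stated in this excerpt):

$$
N_\text{DOF} = n_\text{f}\, n_\text{j}: \quad 2 \times 3 = 6, \qquad 3 \times 3 = 9, \qquad 5 \times 3 = 15; \qquad
N_\text{DOF}^\text{underactuated} = n_\text{f} = 5 .
$$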

| Drive System | Advantages | Disadvantages |
|---|---|---|
| Electric drive system | Quick response; high precision of movement | Complex circuit; susceptible to interference |
| Pneumatic drive system | Simple structure; ample power source | Unsteady movement; prone to impact |
| Hydraulic drive system | Smooth transmission; strong interference resistance | High design and maintenance cost |

| Rotation | Roll1 (°) | Pitch1 (°) | Yaw1 (°) | Roll2 (°) | Pitch2 (°) | Yaw2 (°) |
|---|---|---|---|---|---|---|
| Rotate 45° around the x-axis | 45.106 | −0.242 | 0.142 | 45.096 | 0.283 | 0.172 |
| Rotate 45° around the y-axis | −0.176 | 44.861 | 0.217 | 0.109 | 45.163 | 0.071 |
| Rotate 45° around the z-axis | 0.093 | −0.079 | 45.213 | 0.366 | −0.062 | 45.122 |
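Read as a static accuracy check against the nominal 45° rotation given in the row labels, the Euler-angle table can be summarized by the on-axis error of each reading and the largest cross-axis reading (which should ideally be 0). The sketch below only re-tabulates the published numbers; what the suffixes "1" and "2" denote is not stated in this excerpt, and the `deviations` helper is hypothetical.

```python
# Readings in degrees, copied from the table; each row is a 45° rotation about one axis.
READINGS = {
    "x": {"Roll1": 45.106, "Pitch1": -0.242, "Yaw1": 0.142,
          "Roll2": 45.096, "Pitch2": 0.283, "Yaw2": 0.172},
    "y": {"Roll1": -0.176, "Pitch1": 44.861, "Yaw1": 0.217,
          "Roll2": 0.109, "Pitch2": 45.163, "Yaw2": 0.071},
    "z": {"Roll1": 0.093, "Pitch1": -0.079, "Yaw1": 45.213,
          "Roll2": 0.366, "Pitch2": -0.062, "Yaw2": 45.122},
}
# The angle expected to change for a rotation about each axis.
ON_AXIS = {"x": "Roll", "y": "Pitch", "z": "Yaw"}


def deviations(readings, reference=45.0):
    """Return on-axis errors (reading - reference) and the largest cross-axis magnitude."""
    on_axis = {}
    cross_max = 0.0
    for axis, row in readings.items():
        for name, value in row.items():
            if name.startswith(ON_AXIS[axis]):
                on_axis[(axis, name)] = round(value - reference, 3)
            else:
                # Ideally 0: any reading here is leakage into another angle.
                cross_max = max(cross_max, abs(value))
    return on_axis, cross_max


on_axis, cross_max = deviations(READINGS)
```

On these readings the largest on-axis deviation is 0.213° (Yaw1, rotation about the z-axis) and the largest cross-axis reading is 0.366° (Roll2, rotation about the z-axis).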


| Gesture | a1 | a2 | a3 | a4 | a5 |
|---|---|---|---|---|---|
| V | 191 | 1 | 0 | 2 | 185 |
| OK | 182 | 193 | 186 | 3 | 5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, S.; Sun, C. Master–Slave Control System for Virtual–Physical Interactions Using Hands. Sensors 2023, 23, 7107. https://doi.org/10.3390/s23167107