Estimation of Wind Speed Based on Schlieren Machine Vision System Inspired by Greenhouse Top Vent

Abstract

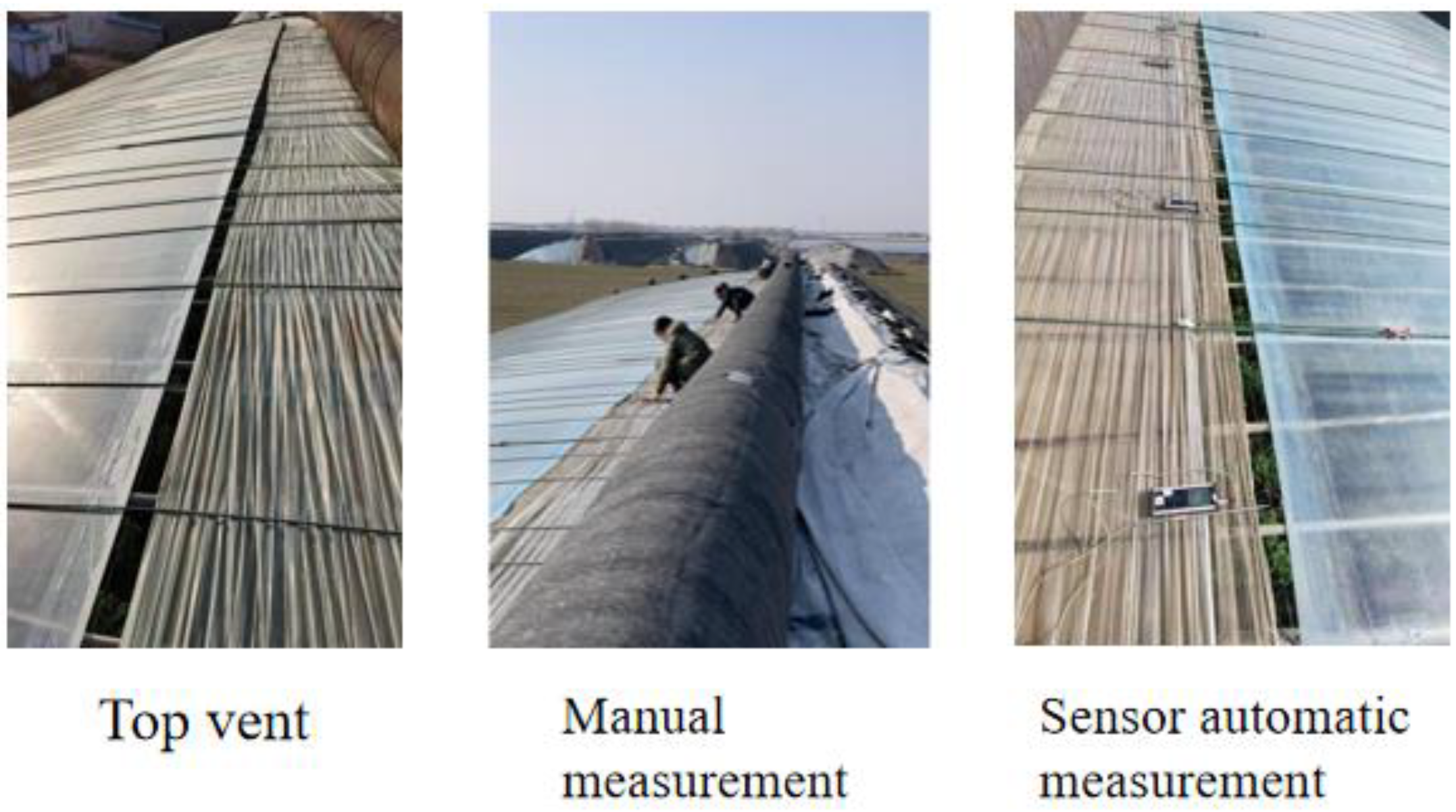

1. Introduction

2. Principle and Experiment

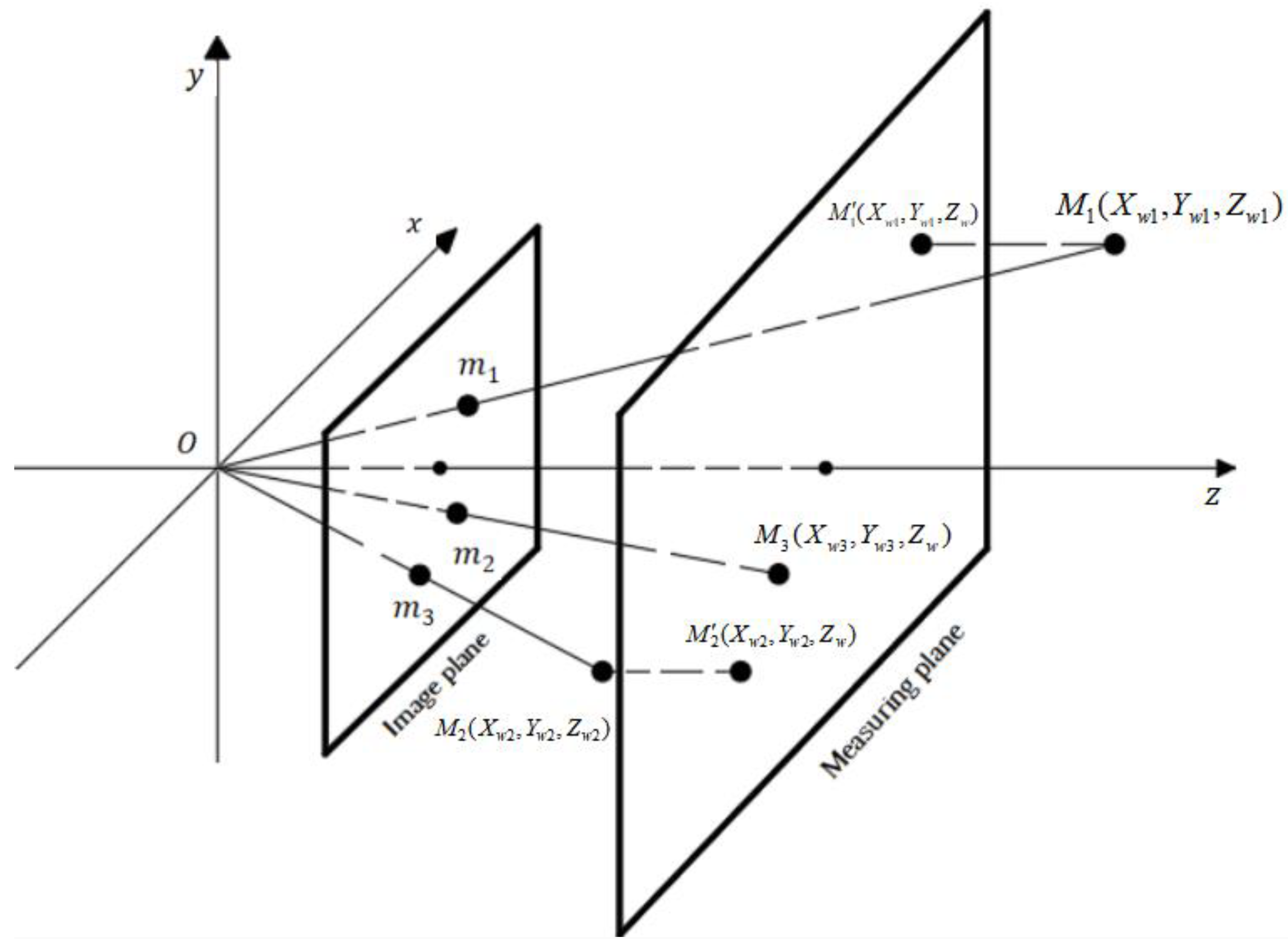

2.1. Principle

2.2. Experiment

2.2.1. Experiment Method

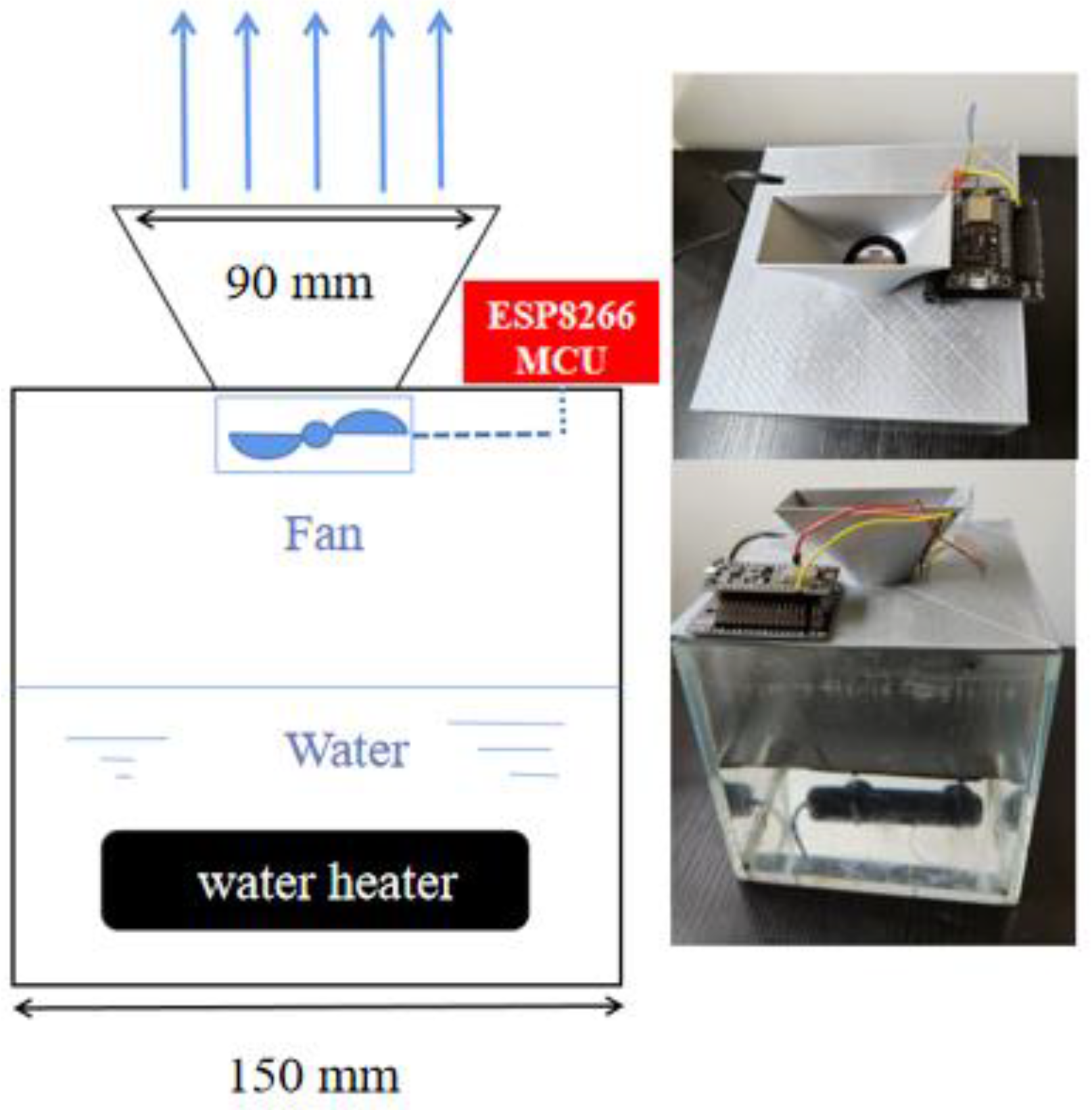

2.2.2. Experiment Equipment

3. Results and Discussion

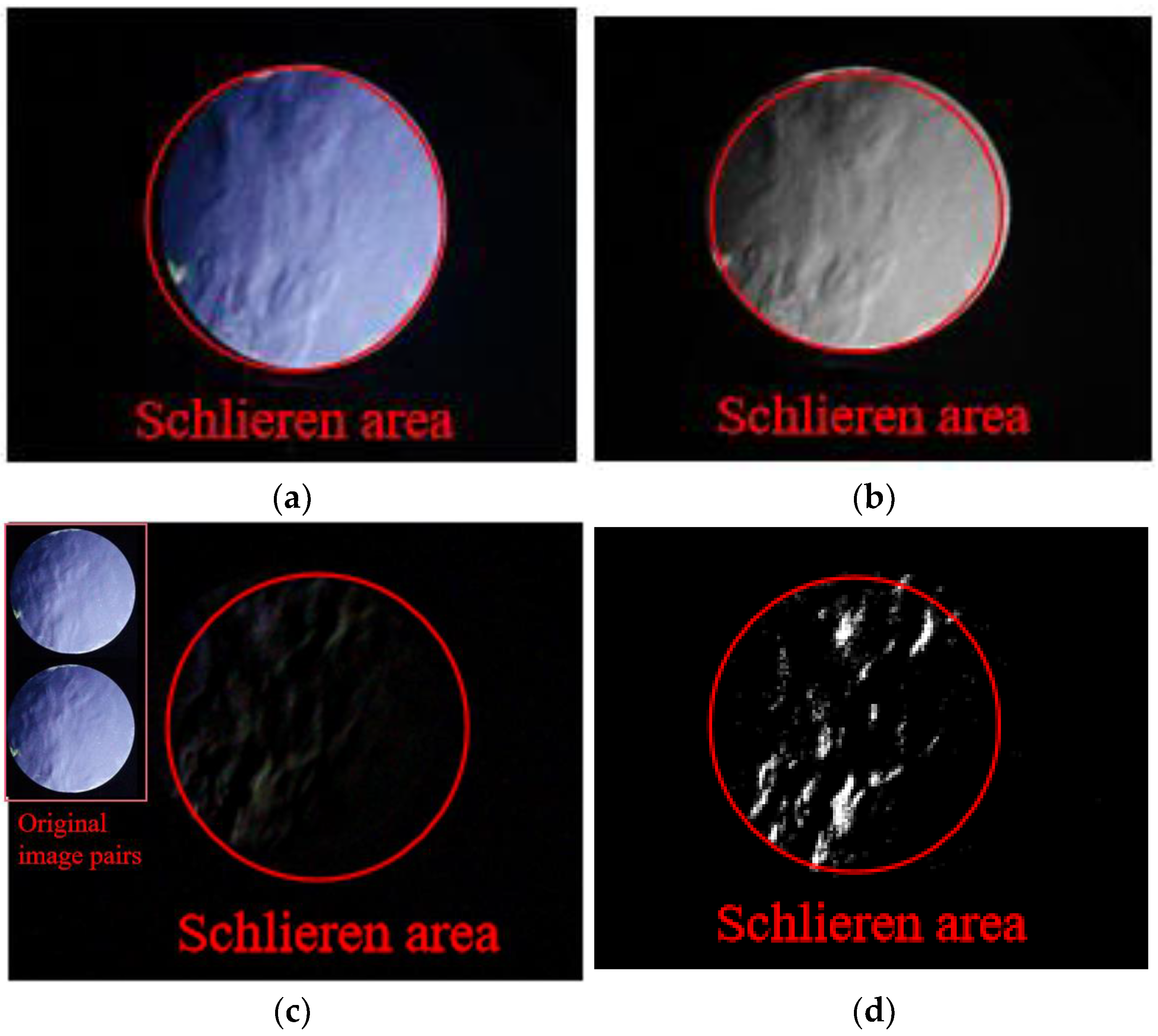

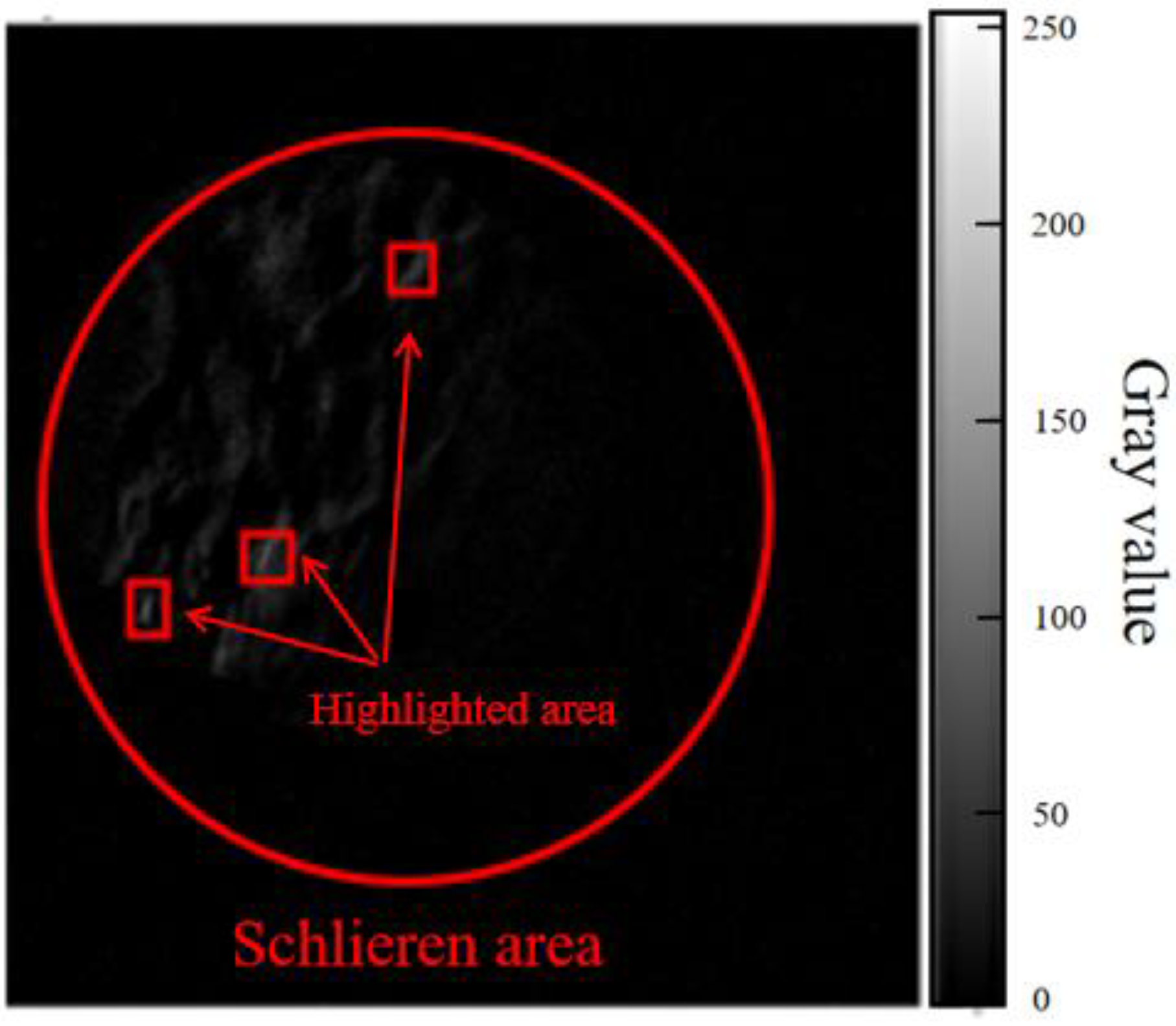

3.1. Schlieren Image Morphology Analysis

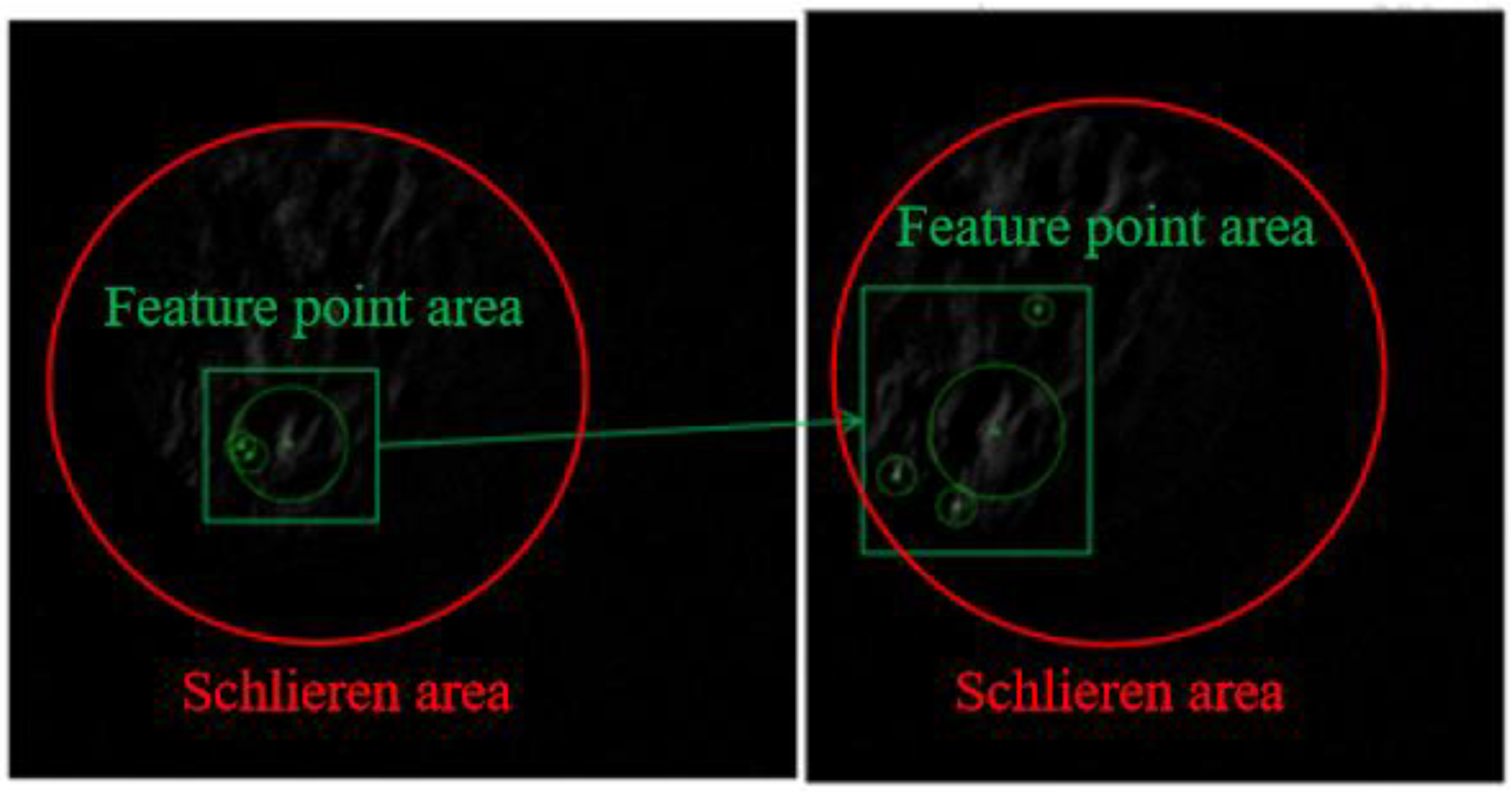

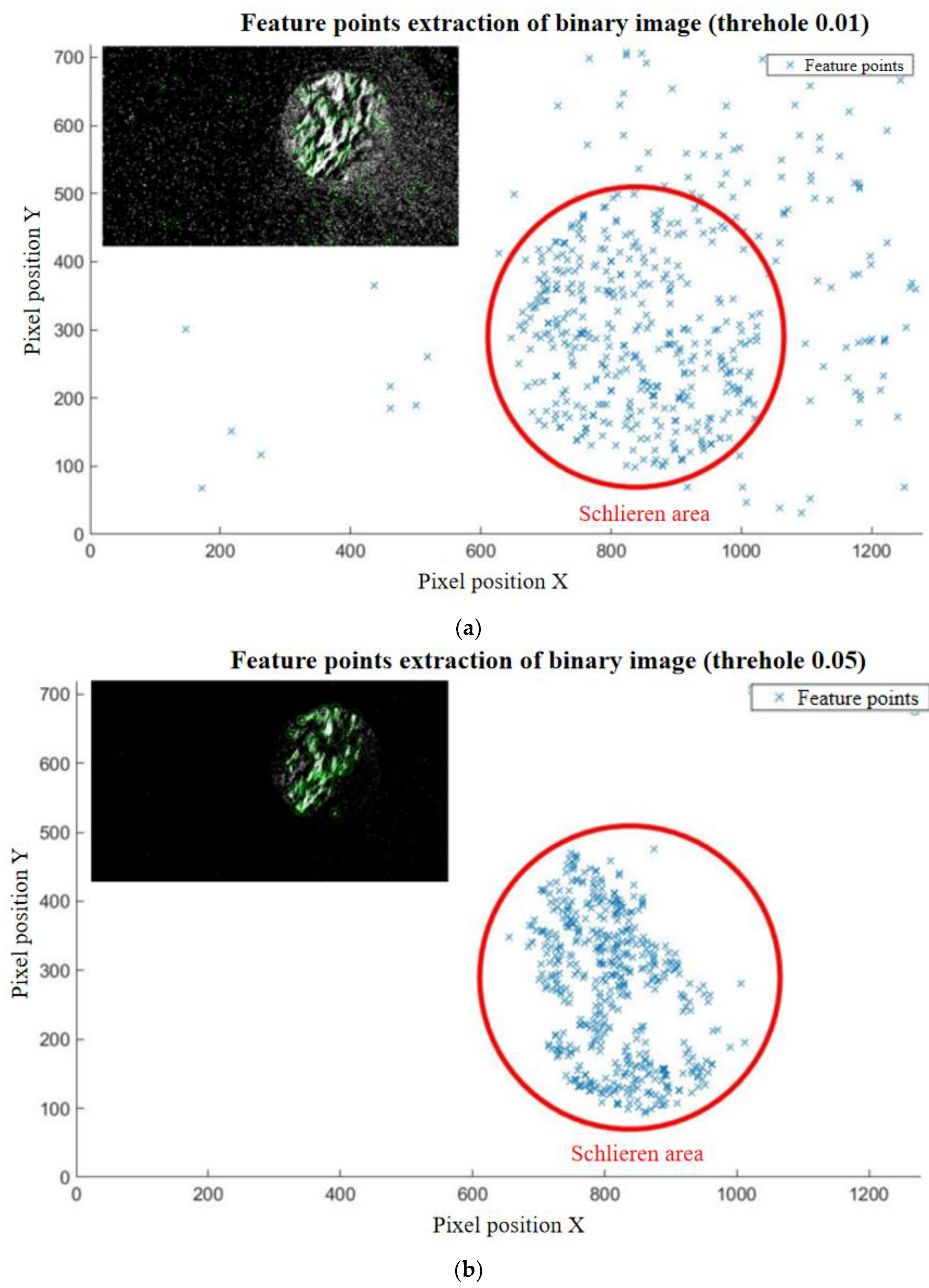

3.2. SURF Feature Point Matching

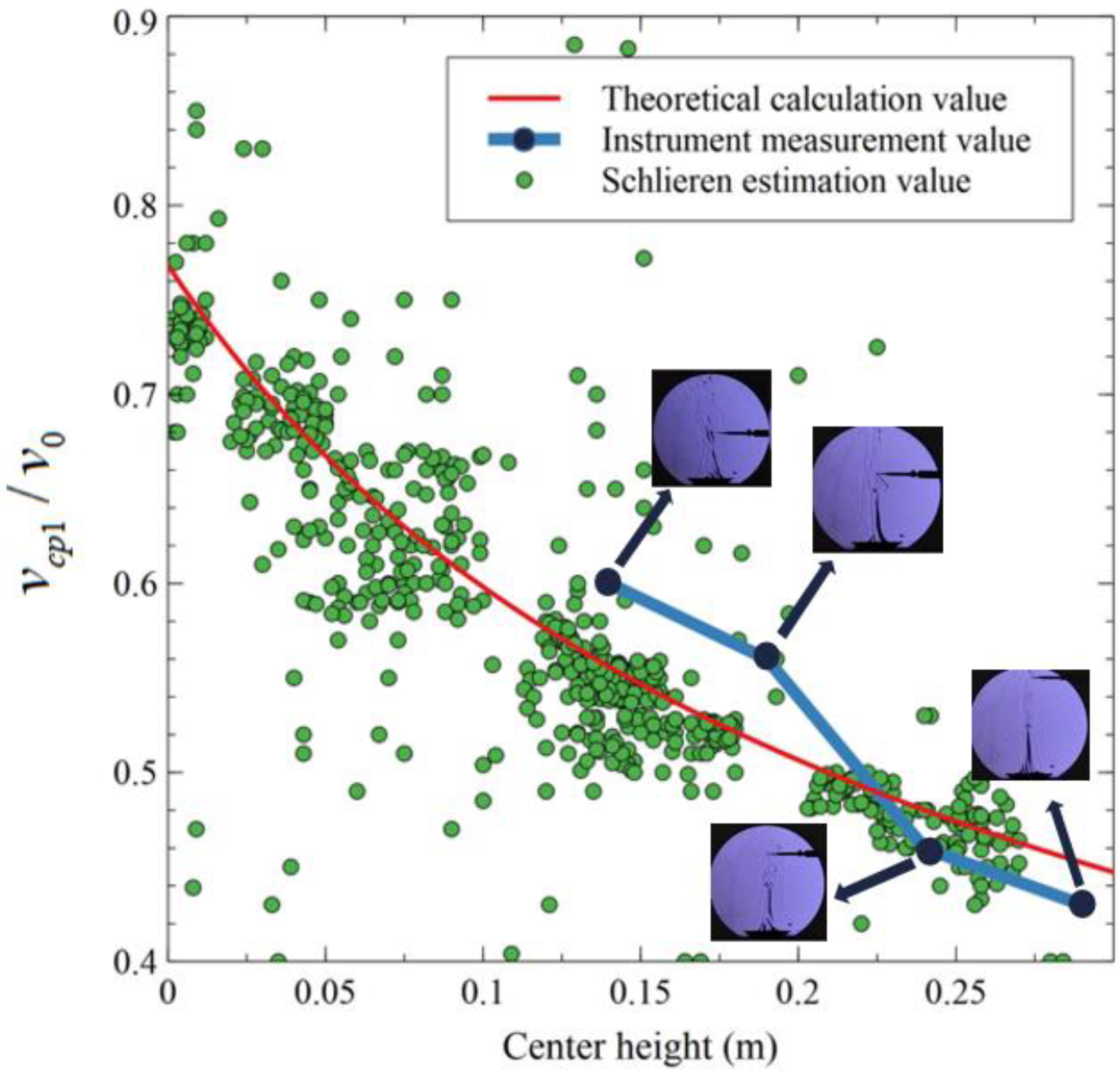

3.3. Wind Speed Estimation Results

3.3.1. Wind Speed Field Measurement

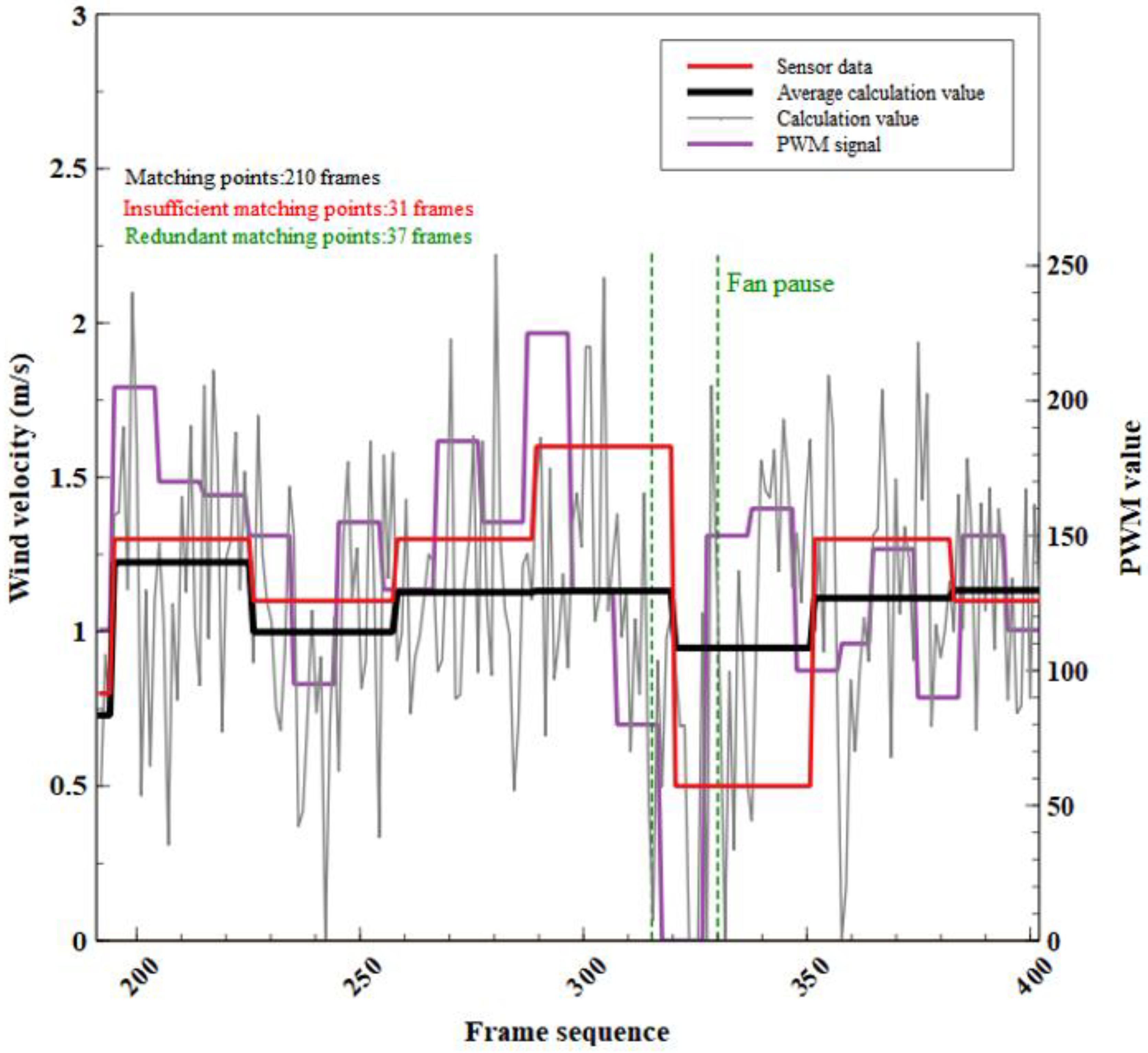

3.3.2. Average Wind Speed Measurement

4. Conclusions

1. The three-dimensional flow of two gases with different densities can be projected onto a hypothetical measurement plane and approximated as a two-dimensional flow image by using the schlieren (shading) device and a machine vision calculation method.

2. By combining pixel transformation with fluid kinematics, the relationship between the fluid motion in a two-dimensional shadow image and the change in image pixels is obtained theoretically.

3. Removing duplicate image information with a motion difference image, and setting a reasonable binarization threshold for fast noise reduction, are discussed as ways to obtain more reasonable feature point extraction and to improve computation speed.

4. The SURF feature matching results for two adjacent processed images, and the wind speed calculated from them, are discussed. The results show that most of the relative errors between the calculated values and the sensor measurements can be kept within 15%. When the wind speed drops to 0 m/s for a short time, the result of this method is seriously affected: although a wind speed of 0 m/s can be calculated from two adjacent images, it is not reflected in the final output. Future studies need to find a solution to this problem.

5. Apart from the industrial camera and the machine vision software platform, all other parts are built with open source hardware and 3D printing. The equipment bracket of the shading system is 3D printed from PLA, and an open source development board controls the fan. The self-written control program can simulate random wind speeds and record the PWM signal. The PWM value is also an important reference for the trend of wind speed change.

6. Although the method proposed in this article can estimate wind speed in theory, many sources of error remain to be addressed in future research. Complex environments and ever-changing airflow conditions affect the final calculation results, and further research is needed on more effective statistical methods for eliminating erroneous calculation points.
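
The image processing steps named in the conclusions (motion difference image, threshold binarization, and conversion of a pixel displacement into a speed) can be illustrated with a minimal sketch. It is not the authors' implementation: the threshold value and the metres-per-pixel scale are hypothetical placeholders, the linear scale is a simplifying assumption in place of the paper's pixel-to-flow relationship, and only the 30 fps frame rate comes from the equipment table.

```python
import math

FRAME_RATE_FPS = 30.0      # camera frame rate listed in the equipment table
METRES_PER_PIXEL = 0.0005  # hypothetical calibration scale (assumption)
BINARY_THRESHOLD = 20      # hypothetical grey-level threshold (assumption)


def motion_difference(prev_frame, curr_frame):
    """Absolute per-pixel difference of two equally sized grayscale frames."""
    return [
        [abs(curr - prev) for prev, curr in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev_frame, curr_frame)
    ]


def binarize(image, threshold=BINARY_THRESHOLD):
    """Map pixels strictly above the threshold to 1 and all others to 0."""
    return [[1 if pixel > threshold else 0 for pixel in row] for row in image]


def speed_from_displacement(du, dv,
                            metres_per_pixel=METRES_PER_PIXEL,
                            fps=FRAME_RATE_FPS):
    """Convert a pixel displacement between adjacent frames to a speed in m/s."""
    return math.hypot(du, dv) * metres_per_pixel * fps


# Two tiny synthetic frames: a bright feature moves one pixel to the right.
prev_frame = [[10, 10, 10], [10, 200, 10]]
curr_frame = [[10, 10, 10], [10, 10, 200]]

# Static background cancels out; only the changed pixels survive the threshold.
mask = binarize(motion_difference(prev_frame, curr_frame))
print(mask)
print(speed_from_displacement(3.0, 4.0))
```

Differencing before feature extraction means the feature detector only sees pixels that changed between frames, which is the reason the conclusions give for the faster computation.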

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Device | Parameters | Cost |
|---|---|---|
| Concave mirror | Focal length: 700 mm; Diameter: 300 mm | USD 60 |
| Knife edge | Tuning range: 0~5 mm; Blade length: 30 mm; Angle: 0~30°; Edge direction: upward | USD 0.5 |
| Light source | Type: LED; Diameter: 4 mm; Power: 0.5 W | USD 2 |
| Trestle | | USD 23 |
| 3D printing attachments | | USD 10 |
| CCD (including lens) | Interface: USB 2.0; Resolution ratio: 1280 × 720; Frame rate: 30 fps; Sensitivity: 0~50 °C 1.8 V/lux-sec at 550 nm; Signal-to-noise ratio: 42.3 dB; Focal length: 55 mm; Effective focal length: 82.5 mm | USD 45 |
| Total cost | | USD 140.5 |

| Device | Parameters |
|---|---|
| SWA 32 | Measurement range: 0.1–10 m/s; Measurement accuracy at 23 °C ± 3 °C: ±0.03 m/s at 0.1–0.4 m/s, ±0.04 m/s at 0.4–1.33 m/s, ±3% read value at 1.33–30 m/s; Full operating range 10–45 °C: ±0.05 m/s at 0.1–10 m/s; Resolution: 0.01 m/s |

| Insufficient Matching (matching point location) | | | | Redundant Matching (matching point location) | | | |
|---|---|---|---|---|---|---|---|
| u (221-222) | v (221-222) | u (222-223) | v (222-223) | u (284-285) | v (284-285) | u (285-286) | v (285-286) |
| 815.38263 | 427.07428 | 667.79425 | 371.96356 | 798.28131 | 478.50363 | 799.00092 | 472.76312 |
| | | | | 687.51044 | 368.84271 | 770.16553 | 289.96793 |
| | | | | 758.77820 | 346.49329 | 731.72919 | 231.26047 |
| | | | | 767.06598 | 327.19189 | 695.40533 | 329.61893 |
| | | | | 687.54504 | 356.99612 | 695.40533 | 329.61893 |
| | | | | 692.10498 | 341.34375 | 693.94812 | 325.99188 |
| | | | | 762.82733 | 395.07770 | 785.15594 | 403.35800 |
| | | | | 688.82349 | 344.70959 | 740.08984 | 321.66934 |
| | | | | 742.68695 | 246.68456 | 837.17480 | 234.00017 |
| | | | | 739.84436 | 327.85013 | 759.47424 | 426.84286 |
| | | | | 746.35211 | 315.70590 | 690.93073 | 331.17929 |
| | | | | 771.53284 | 326.28329 | 690.93073 | 331.17929 |
| | | | | 786.75708 | 469.63852 | 854.80908 | 297.54007 |
| | | | | 746.98755 | 403.53232 | 795.7077 | 392.96216 |
| | | | | 696.28992 | 324.26947 | 695.82886 | 324.27798 |
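
The table separates frame pairs with insufficient matching from frame pairs with redundant matching, and it lists the coordinates (695.40533, 329.61893) and (690.93073, 331.17929) twice each. One plausible reading, which is an assumption here rather than something the text confirms, is that a redundant match occurs when several points are matched to the same location. Under that reading, a simple screening step could look like the following sketch. The `MIN_MATCHES` bound and the function name are hypothetical.

```python
from collections import Counter

MIN_MATCHES = 3  # hypothetical lower bound for a usable frame pair (assumption)


def screen_matches(matches, min_matches=MIN_MATCHES):
    """Screen a list of (source_point, target_point) matches.

    Returns a tuple (is_insufficient, duplicated_targets), where
    duplicated_targets lists every target point hit by more than one match.
    """
    target_counts = Counter(target for _, target in matches)
    duplicated = sorted(pt for pt, n in target_counts.items() if n > 1)
    return len(matches) < min_matches, duplicated


# A few rows copied from the table; the selection is illustrative only.
redundant_sample = [
    ((767.06598, 327.19189), (695.40533, 329.61893)),
    ((687.54504, 356.99612), (695.40533, 329.61893)),
    ((692.10498, 341.34375), (693.94812, 325.99188)),
    ((746.35211, 315.70590), (690.93073, 331.17929)),
    ((771.53284, 326.28329), (690.93073, 331.17929)),
]
# The single-row case from the table.
sparse_sample = [((815.38263, 427.07428), (667.79425, 371.96356))]

too_few, duplicated = screen_matches(redundant_sample)
print(too_few, duplicated)
print(screen_matches(sparse_sample))
```

Frame pairs flagged either way could then be excluded or down-weighted before averaging, which is one candidate for the statistical filtering the conclusions call for.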

| Data Sequence | Frame Sequence | Sensor Data (m/s) | Average Calculation Data (m/s) | Relative Error |
|---|---|---|---|---|
| 1 | 191–194 | 0.8 | 0.72 | 8.8% |
| 2 | 194–225 | 1.3 | 1.22 | 5.8% |
| 3 | 225–257 | 1.1 | 0.99 | 9.2% |
| 4 | 257–288 | 1.3 | 1.13 | 13.3% |
| 5 | 288–319 | 1.6 | 1.13 | 29.2% |
| 6 | 319–350 | 0.5 | 0.95 | 90% |
| 7 | 350–381 | 1.3 | 1.10 | 14.7% |
| 8 | 381–401 | 1.1 | 1.13 | 3.1% |
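
The relative error column can be checked against the 15% bound mentioned in the conclusions with a short sketch. The definition used below, |calculated − sensor| / sensor, is an assumption, since the formula is not given in this text. Recomputing from the rounded table values gives slightly different percentages from the published column (for example about 10% instead of 8.8% for sequence 1), presumably because the published figures come from unrounded data, so the count uses the published column.

```python
# (data sequence, sensor m/s, calculated m/s, published relative error in %)
ROWS = [
    (1, 0.8, 0.72, 8.8),
    (2, 1.3, 1.22, 5.8),
    (3, 1.1, 0.99, 9.2),
    (4, 1.3, 1.13, 13.3),
    (5, 1.6, 1.13, 29.2),
    (6, 0.5, 0.95, 90.0),
    (7, 1.3, 1.10, 14.7),
    (8, 1.1, 1.13, 3.1),
]
ERROR_BOUND_PERCENT = 15.0  # bound quoted in the conclusions


def relative_error(sensor, calculated):
    """Relative error of the calculated value against the sensor reading.

    Assumed definition: |calculated - sensor| / sensor.
    """
    return abs(calculated - sensor) / sensor


# Sequences whose published relative error stays within the bound.
within_bound = [seq for seq, _, _, published in ROWS
                if published <= ERROR_BOUND_PERCENT]
print(within_bound, f"{len(within_bound)} of {len(ROWS)}")

# Recomputed values from the rounded table entries, for comparison only.
for seq, sensor, calculated, published in ROWS:
    recomputed = 100.0 * relative_error(sensor, calculated)
    print(seq, f"published {published}%", f"recomputed {recomputed:.1f}%")
```

With the published column, six of the eight sequences fall within the bound; sequences 5 and 6 are the exceptions.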

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, H.; Li, A.; Zhang, L.; Hou, Y.; Yang, C.; Chen, L.; Lu, N. Estimation of Wind Speed Based on Schlieren Machine Vision System Inspired by Greenhouse Top Vent. Sensors 2023, 23, 6929. https://doi.org/10.3390/s23156929