An Automatic Classification System for Environmental Sound in Smart Cities

Abstract

1. Introduction

2. Related Work

3. Proposed Method

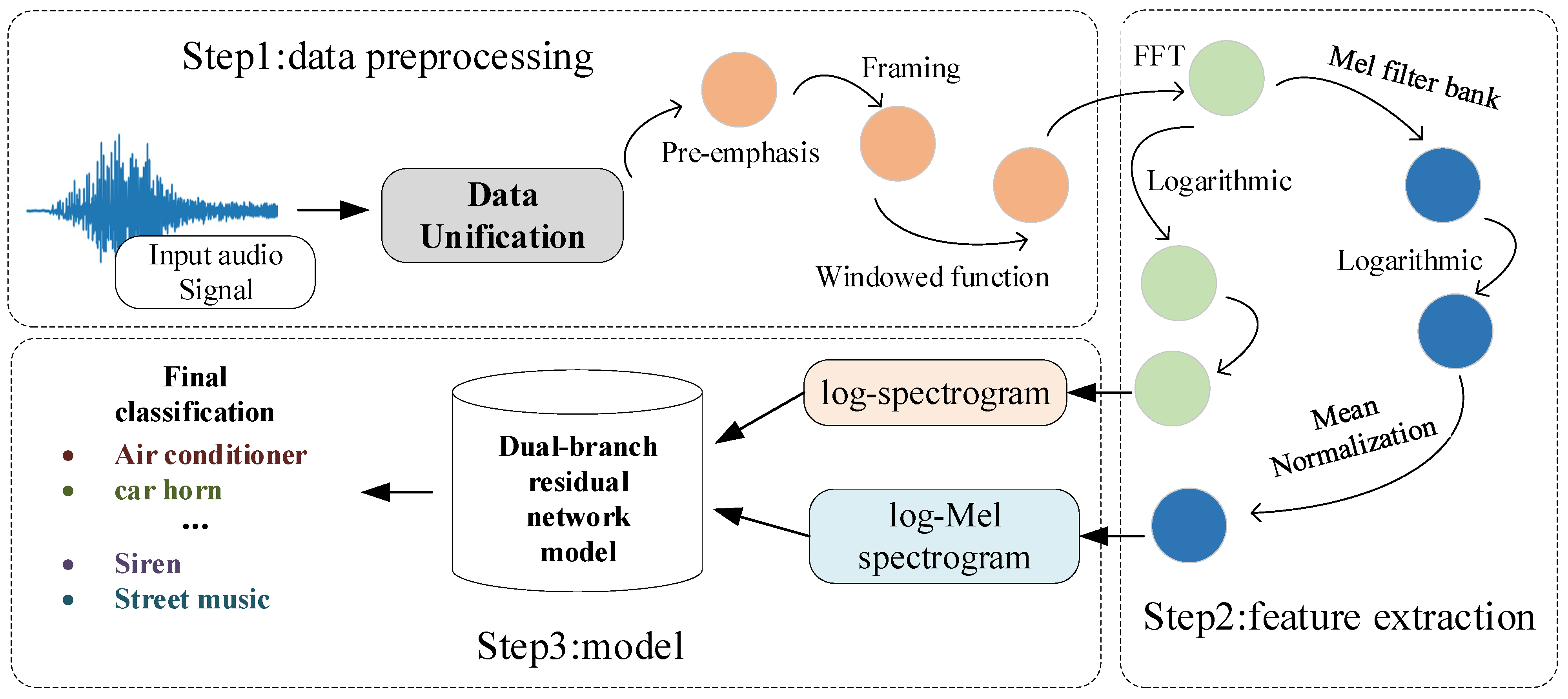

3.1. Data Pre-Processing

Algorithm 1. Loop padding

3.2. Feature Extraction

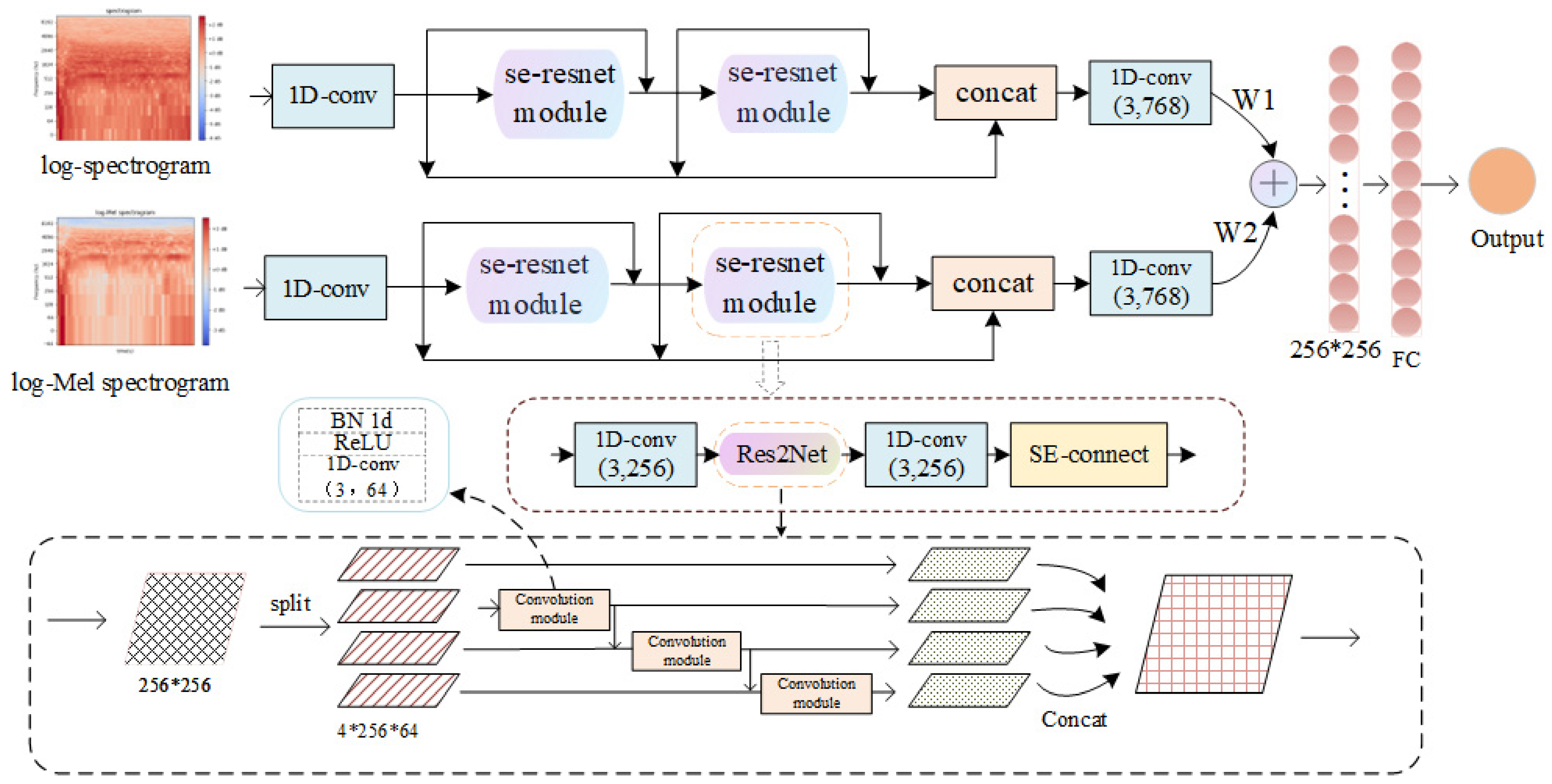

3.3. Dual-Branch Residual Network

3.4. Training the Network

4. Experiment and Result

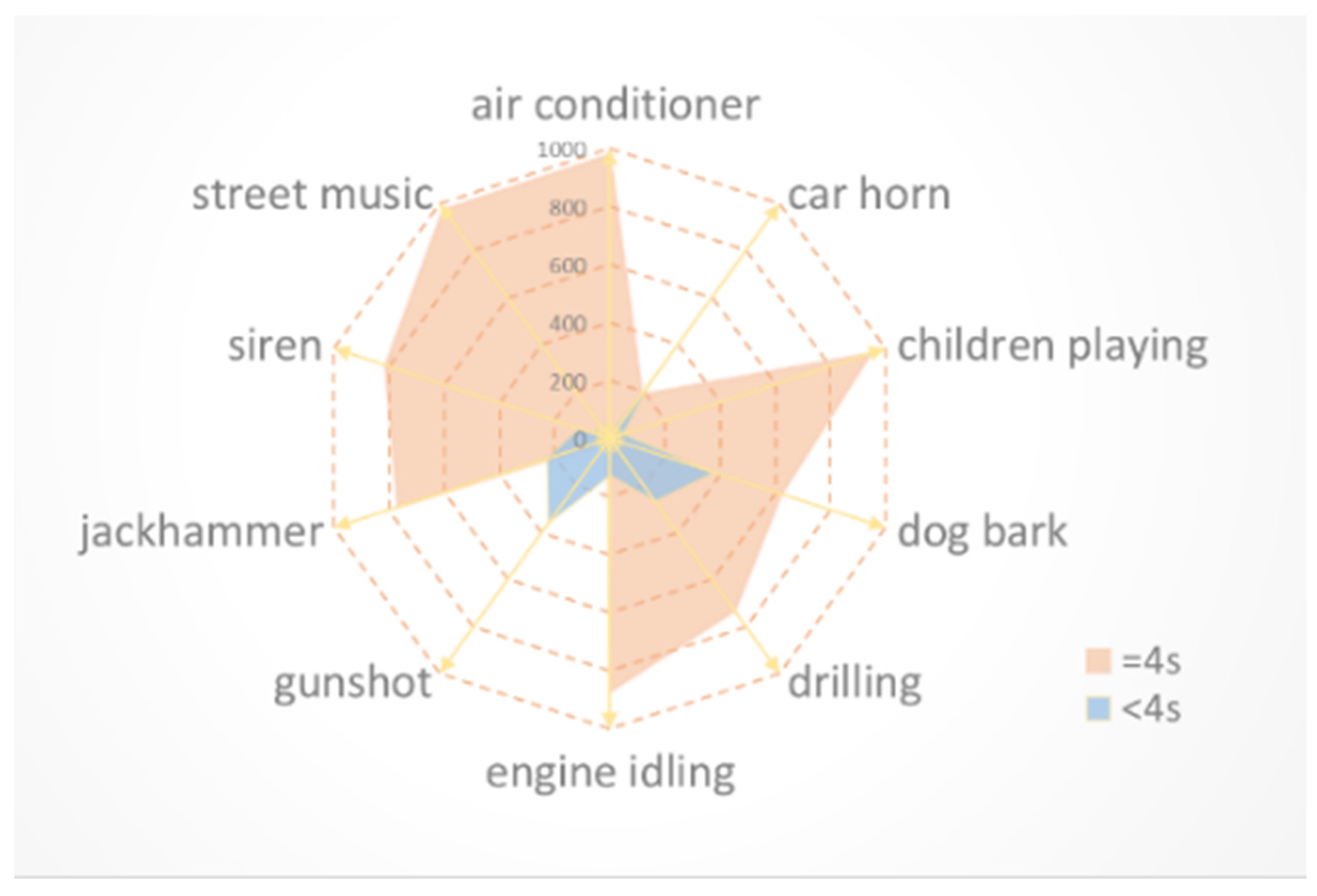

4.1. Datasets

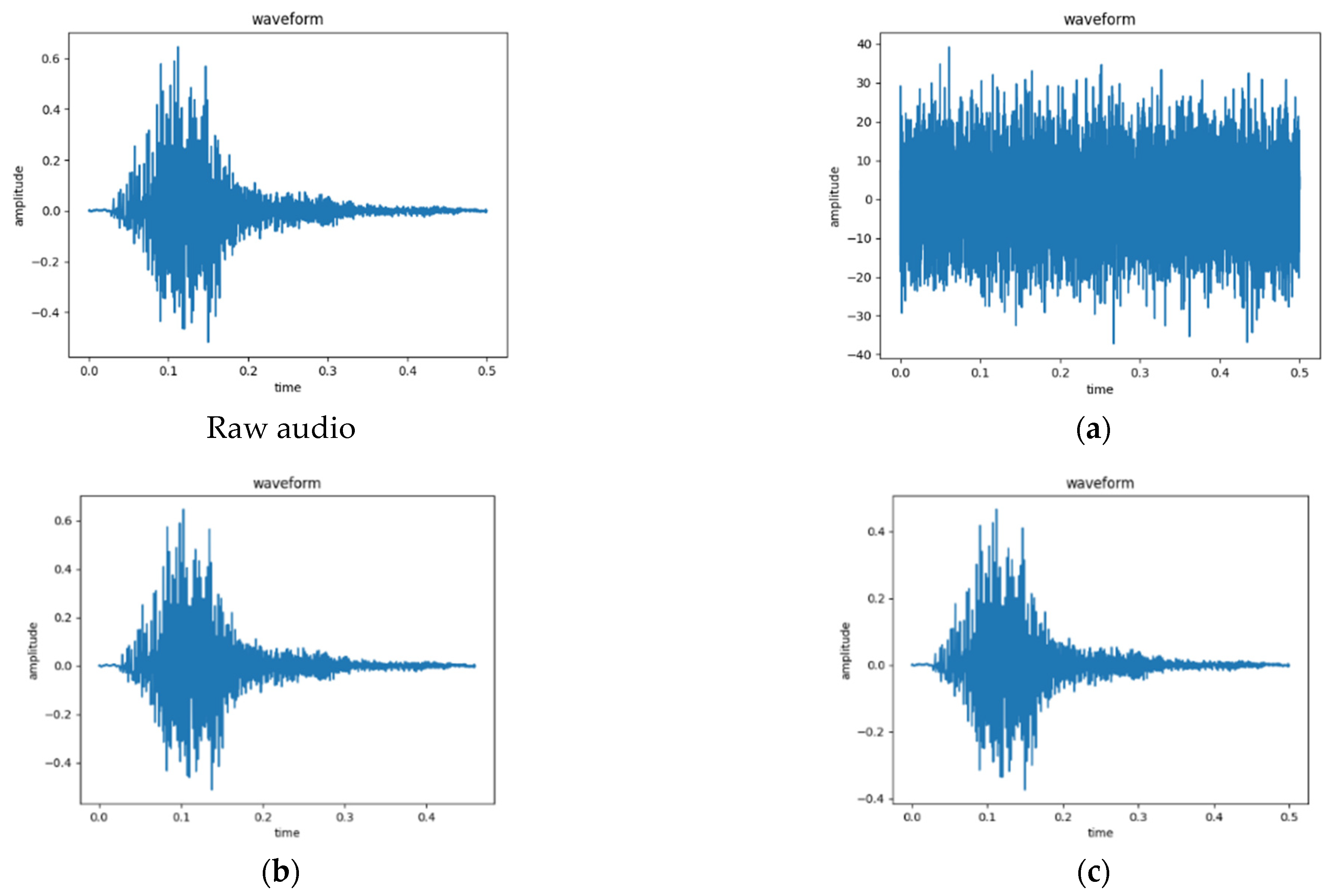

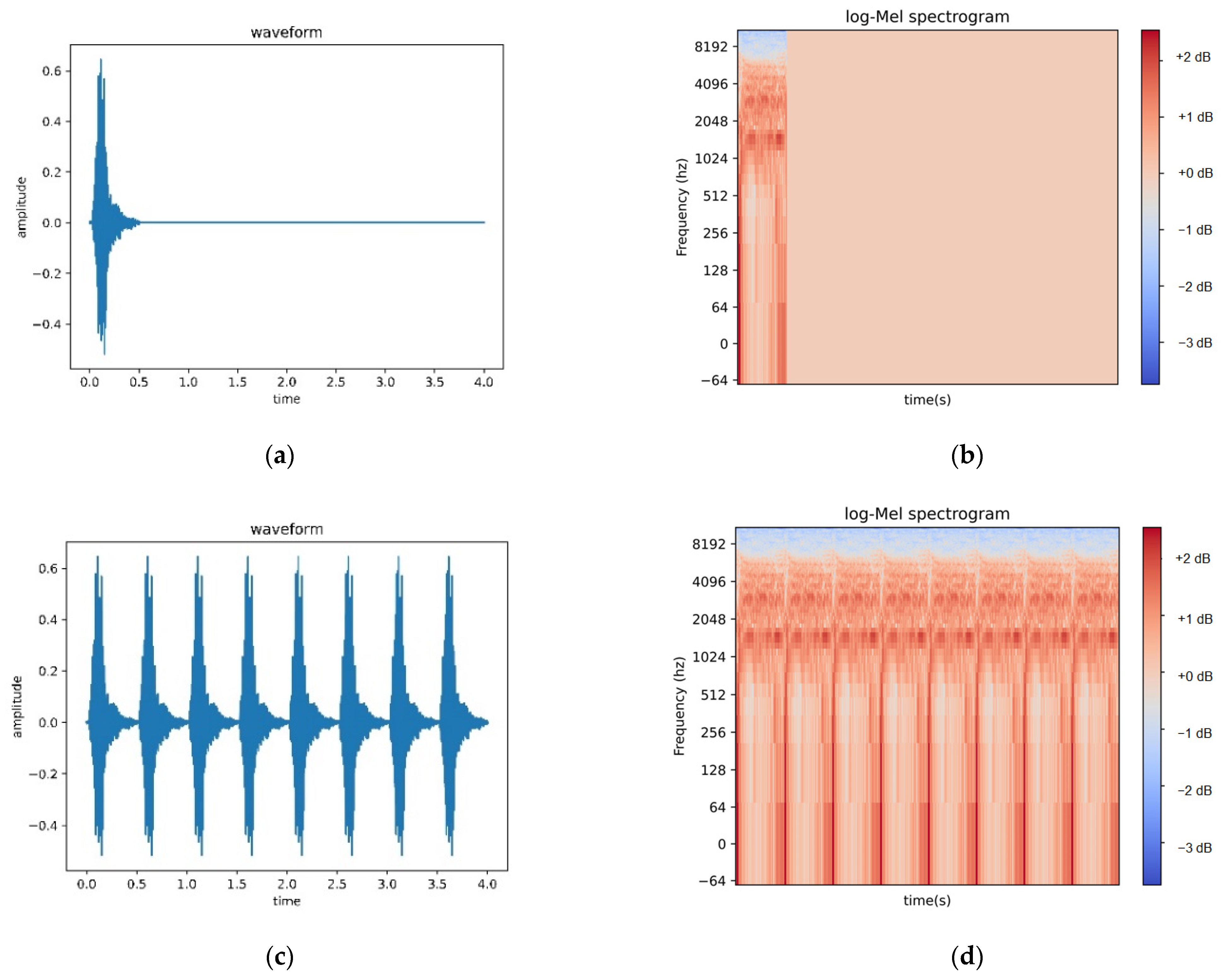

4.2. Comparison between Loop Padding and Zero Padding

- (a) Time-domain waveform of the original data after zero padding; the `numpy.pad()` function is used to zero-pad the original signal to a uniform length of 4 s.

- (b) Log-Mel spectrogram after zero padding, obtained by applying the log-Mel spectrogram feature extraction described above to the waveform in Figure 5a.

- (c) Time-domain waveform of the original data after loop padding; the original signal is loop-padded with Algorithm 1, proposed above, to a uniform length of 4 s.

- (d) Log-Mel spectrogram after loop padding, obtained by applying the log-Mel spectrogram feature extraction described above to the waveform in Figure 5c.
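The difference between the two padding strategies in panels (a) and (c) can be sketched in a few lines of NumPy. This is a minimal illustration and not a reproduction of Algorithm 1: it assumes that loop padding means repeating the clip from its beginning until the target length is reached. The function names `zero_pad` and `loop_pad` are hypothetical.

```python
import numpy as np


def zero_pad(signal, target_len):
    """Truncate or right-pad a 1-D signal with zeros to target_len samples."""
    signal = np.asarray(signal)[:target_len]
    # numpy.pad with mode="constant" appends zeros by default.
    return np.pad(signal, (0, target_len - len(signal)), mode="constant")


def loop_pad(signal, target_len):
    """Truncate or extend a 1-D signal to target_len by repeating it.

    Assumption: the clip is looped from its start, and the final
    repetition is cut off once target_len samples are reached.
    """
    signal = np.asarray(signal)
    if len(signal) == 0:
        raise ValueError("cannot loop-pad an empty signal")
    repeats = -(-target_len // len(signal))  # ceiling division
    return np.tile(signal, repeats)[:target_len]


if __name__ == "__main__":
    clip = np.array([1, 2, 3])
    print(zero_pad(clip, 8))  # zeros fill the tail
    print(loop_pad(clip, 8))  # the clip repeats to fill the tail
```

For a 4 s target, `target_len` would be four times the sampling rate of the recording. Under this assumption, the loop-padded signal carries the sound event through the whole window instead of leaving a silent tail.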

4.3. Proposed Model

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

2. Pan, X.; Ge, C.; Lu, R.; Song, S.; Chen, G.; Huang, Z.; Huang, G. On the Integration of Self-Attention and Convolution. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 805–815.

3. Yu, R.; Du, D.; LaLonde, R.; Davila, D.; Funk, C.; Hoogs, A.; Clipp, B. Cascade Transformers for End-to-End Person Search. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 7257–7266.

4. Yan, K.; Zhou, X. Chiller faults detection and diagnosis with sensor network and adaptive 1D CNN. Digit. Commun. Netw. 2022, 8, 531–539.

5. Nagrani, A.; Albanie, S.; Zisserman, A. Seeing voices and hearing faces: Cross-modal biometric matching. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

6. Tran, V.-T.; Tsai, W.-H. Acoustic-Based Emergency Vehicle Detection Using Convolutional Neural Networks. IEEE Access 2020, 8, 75702–75713.

7. Wang, C.-Y.; Tai, T.-C.; Wang, J.-C.; Santoso, A.; Mathulaprangsan, S.; Chiang, C.-C.; Wu, C.-H. Sound Events Recognition and Retrieval Using Multi-Convolutional-Channel Sparse Coding Convolutional Neural Networks. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 1875–1887.

8. Avramidis, K.; Kratimenos, A.; Garoufis, C.; Zlatintsi, A.; Maragos, P. Deep Convolutional and Recurrent Networks for Polyphonic Instrument Classification from Monophonic Raw Audio Waveforms. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 3010–3014.

9. Piczak, K.J. Environmental sound classification with convolutional neural networks. In Proceedings of the Machine Learning for Signal Processing (MLSP), Boston, MA, USA, 17–20 September 2015; pp. 1–6.

10. Zhang, J.; Liu, W.; Lan, J.; Hu, Y.; Zhang, F. Audio Fault Analysis for Industrial Equipment Based on Feature Metric Engineering with CNNs. In Proceedings of the 2021 4th International Conference on Robotics, Control and Automation Engineering (RCAE), Wuhan, China, 4–6 November 2021; pp. 409–416.

11. Abdoli, S.; Cardinal, P.; Koerich, A.L. End-to-End Environmental Sound Classification using a 1D Convolutional Neural Network. arXiv 2019, arXiv:1904.08990.

12. Mu, W.; Yin, B.; Huang, X.; Xu, J.; Du, Z. Environmental sound classification using temporal-frequency attention based convolutional neural network. Sci. Rep. 2021, 11, 21552.

13. Wang, Y.; Feng, C.; Anderson, D.V. A Multi-Channel Temporal Attention Convolutional Neural Network Model for Environmental Sound Classification. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 930–934.

14. Barchiesi, D.; Giannoulis, D.; Stowell, D.; Plumbley, M.D. Acoustic scene classification: Classifying environments from the sounds they produce. IEEE Signal Process. Mag. 2015, 32, 16–34.

15. Phan, H.; Maaß, M.; Mazur, R.; Mertins, A. Random regression forests for acoustic event detection and classification. IEEE/ACM Trans. Audio Speech Lang. Process. 2015, 23, 20–31.

16. Khunarsal, P.; Lursinsap, C.; Raicharoen, T. Very short time environmental sound classification based on spectrogram pattern matching. Inf. Sci. 2013, 243, 57–74.

17. Huang, J.; Ren, L.; Feng, L.; Yang, F.; Yang, L.; Yan, K. AI Empowered Virtual Reality Integrated Systems for Sleep Stage Classification and Quality Enhancement. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 1494–1503.

18. Yan, K.; Zhou, X.; Yang, B. AI and IoT Applications of Smart Buildings and Smart Environment Design, Construction and Maintenance. Build. Environ. 2022, 109968.

19. Zaw, T.H.; War, N. The combination of spectral entropy, zero crossing rate, short time energy and linear prediction error for voice activity detection. In Proceedings of the 2017 20th International Conference of Computer and Information Technology (ICCIT), Dhaka, Bangladesh, 22–24 December 2017; pp. 1–5.

20. Lartillot, O.; Toiviainen, P. A Matlab toolbox for musical feature extraction from audio. In Proceedings of the 10th International Conference on Digital Audio Effects (DAFx-07), Bordeaux, France, 10–15 September 2007; pp. 237–244.

21. Cotton, C.V.; Ellis, D.P. Spectral vs. spectro-temporal features for acoustic event detection. In Proceedings of the 2011 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, 16–19 October 2011; pp. 69–72.

22. Giannoulis, D.; Benetos, E.; Stowell, D.; Rossignol, M.; Lagrange, M.; Plumbley, M.D. Detection and classification of acoustic scenes and events: An IEEE AASP challenge. In Proceedings of the Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, 20–23 October 2013; Volume 10, pp. 1–4.

23. Chen, J.; Wang, Y.; Wang, D. A feature study for classification-based speech separation at low signal-to-noise ratios. IEEE/ACM Trans. Audio Speech Lang. Process. 2014, 22, 1993–2002.

24. Li, R.; Yin, B.; Cui, Y.; Du, Z.; Li, K. Research on Environmental Sound Classification Algorithm Based on Multi-feature Fusion. In Proceedings of the 2020 IEEE 9th Joint International Information Technology and Artificial Intelligence Conference (ITAIC), Chongqing, China, 11–13 December 2020; pp. 522–526.

25. Salamon, J.; Jacoby, C.; Bello, J.P. A dataset and taxonomy for urban sound research. In Proceedings of the 22nd ACM International Conference on Multimedia, Orlando, FL, USA, 3–7 November 2014; pp. 1041–1044.

26. Agrawal, P.; Ganapathy, S. Interpretable representation learning for speech and audio signals based on relevance weighting. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 2823–2836.

27. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

28. Piczak, K.J. ESC: Dataset for environmental sound classification. In Proceedings of the 23rd ACM Multimedia Conference, Brisbane, Australia, 26–30 October 2015; pp. 1015–1018.

29. Park, D.S.; Chan, W.; Zhang, Y.; Chiu, C.C.; Zoph, B.; Cubuk, E.D.; Le, Q.V. SpecAugment: A simple data augmentation method for automatic speech recognition. Proc. Interspeech 2019, 2613–2617.

30. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

31. Chen, Y.; Guo, Q.; Liang, X.; Wang, J.; Qian, Y. Environmental sound classification with dilated convolutions. Appl. Acoust. 2018, 148, 123–132.

32. Tokozume, Y.; Harada, T. Learning environmental sounds with end-to-end convolutional neural network. In Proceedings of the ICASSP 2017—2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017.

33. Sang, J.; Park, S.; Lee, J. Convolutional Recurrent Neural Networks for Urban Sound Classification Using Raw Waveforms. In Proceedings of the 2018 26th European Signal Processing Conference (EUSIPCO), Rome, Italy, 3–7 September 2018; pp. 2444–2448.

34. Hojjati, H.; Armanfard, N. Self-Supervised Acoustic Anomaly Detection Via Contrastive Learning. In Proceedings of the ICASSP 2022—2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 7–13 May 2022; pp. 3253–3257.

35. Chen, H.; Song, Y.; Dai, L.-R.; McLoughlin, I.; Liu, L. Self-Supervised Representation Learning for Unsupervised Anomalous Sound Detection Under Domain Shift. In Proceedings of the ICASSP 2022—2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 7–13 May 2022; pp. 471–475.

| Padding Method | Model | Sample Data Proportion | Accuracy (%) |
|---|---|---|---|
| Zero padding | Dual-resnet | 7:2:1 | 75.8 |
| Loop padding | Dual-resnet | 7:2:1 | 82.6 |
| Zero padding | CNN | 7:2:1 | 73.9 |
| Loop padding | CNN | 7:2:1 | 76.1 |
| Zero padding | Dilated convolutions | 7:2:1 | 77.4 |
| Loop padding | Dilated convolutions | 7:2:1 | 79.6 |
| Zero padding | EnvNet | 7:2:1 | 78.2 |
| Loop padding | EnvNet | 7:2:1 | 80.3 |

| Method | Single Branch Accuracy (%) | Double Branch Accuracy (%) |
|---|---|---|
| Log-Mel spectrogram | 74.9 | - |
| Log-spectrogram | 71.4 | - |
| Feature concatenation | 76.8 | - |
| Feature fusion | - | 82.6 |

| Model | Representation | UrbanSound8k Accuracy (%) | UrbanSound8k Inference Time (s) | ESC-50 Accuracy (%) |
|---|---|---|---|---|
| Baseline system [25] | Mel-Frequency Cepstral Coefficients (MFCC) | 69.1 | - | - |
| CNN [9] | Mel spectrogram | 73.2 | 129.73 | 65.1 |
| Dilated CNN [31] | Mel spectrogram | 78.3 | 127.31 | 83.9 |
| EnvNet [32] | Original waveform | 77.6 | 118.56 | 83.5 |
| CRNN [33] | Original waveform | 79.0 | 115.60 | - |
| Ours | Log-Mel spectrogram, log-spectrogram | 82.6 | 123.39 | 86.3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, D.; Zhong, Z.; Xia, Y.; Wang, Z.; Xiong, W. An Automatic Classification System for Environmental Sound in Smart Cities. Sensors 2023, 23, 6823. https://doi.org/10.3390/s23156823
