MSA-YOLO: A Remote Sensing Object Detection Model Based on Multi-Scale Strip Attention

Abstract

1. Introduction

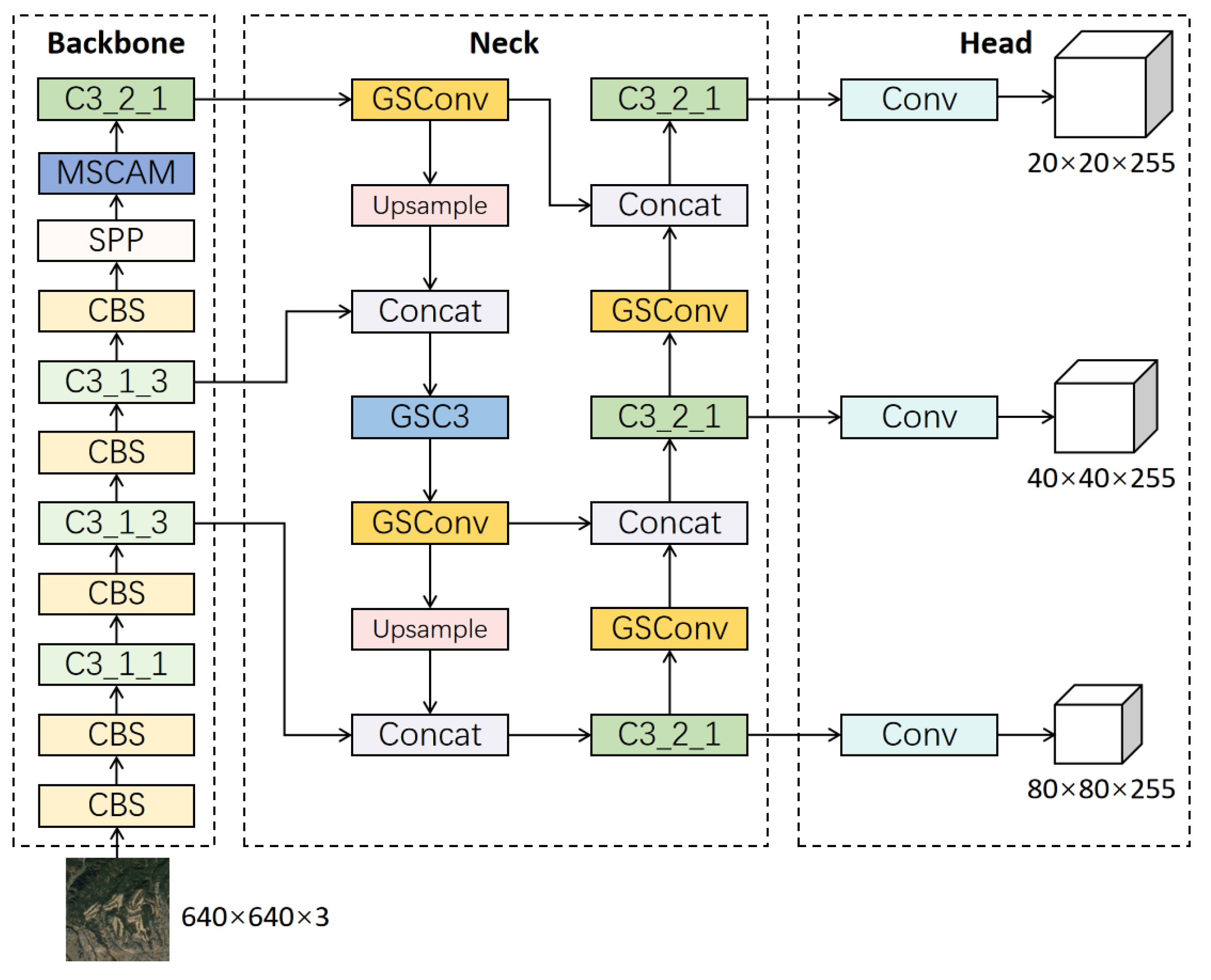

- We propose a multi-scale attention mechanism based on strip convolution to address the complex background noise and large differences in object scale found in remote sensing images. Adding this attention module to the baseline model reduces the effect of background noise and fuses multi-scale features.

- We introduce GSConv into the feature fusion layer of the model and propose a new GS-PANet. It improves detection accuracy while reducing the number of model parameters, which makes the model better suited to practical remote sensing image detection tasks.

- We optimize the original loss function in YOLOv5 and propose a new Wise-Focal CIoU loss function. It lets the model better balance the contributions of different samples to the loss during training, which improves the regression performance of the model.

- We conduct multiple experiments on large-scale public remote sensing image datasets. The results show that MSA-YOLO performs well in remote sensing object detection, demonstrating the effectiveness and feasibility of the proposed methods.
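The Wise-Focal CIoU loss named in the third contribution builds on the CIoU bounding box regression term that YOLOv5 uses. As a rough illustration only, the sketch below computes the standard CIoU loss for two axis-aligned boxes in plain Python. The function name `ciou_loss`, the `(x1, y1, x2, y2)` box format, and the `eps` constant are assumptions made for this example, and the Wise-Focal weighting proposed in this article is not reproduced here.

```python
import math


def ciou_loss(box_pred, box_gt, eps=1e-9):
    """Standard CIoU loss for two boxes given as (x1, y1, x2, y2).

    Illustrative sketch only: this is the plain CIoU term, not the
    Wise-Focal CIoU variant proposed in the article.
    """
    px1, py1, px2, py2 = box_pred
    gx1, gy1, gx2, gy2 = box_gt
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Intersection over union.
    inter_w = max(0.0, min(px2, gx2) - max(px1, gx1))
    inter_h = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = inter_w * inter_h
    union = pw * ph + gw * gh - inter + eps
    iou = inter / union

    # Squared diagonal of the smallest box enclosing both boxes.
    enclose_w = max(px2, gx2) - min(px1, gx1)
    enclose_h = max(py2, gy2) - min(py1, gy1)
    c2 = enclose_w ** 2 + enclose_h ** 2 + eps

    # Squared distance between the two box centers.
    rho2 = ((px1 + px2 - gx1 - gx2) ** 2 + (py1 + py2 - gy1 - gy2) ** 2) / 4.0

    # Aspect-ratio consistency term and its trade-off weight.
    v = (4.0 / math.pi ** 2) * (
        math.atan(gw / (gh + eps)) - math.atan(pw / (ph + eps))
    ) ** 2
    alpha = v / (1.0 - iou + v + eps)

    return 1.0 - iou + rho2 / c2 + alpha * v


if __name__ == "__main__":
    # A partially overlapping pair is penalized less than a disjoint pair.
    print(ciou_loss((0, 0, 2, 2), (1, 1, 3, 3)))
    print(ciou_loss((0, 0, 1, 1), (2, 2, 3, 3)))
```

The loss is close to zero for identical boxes and exceeds 1 for disjoint boxes, because the center-distance term keeps providing a signal even when the IoU is zero.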

2. Related Work

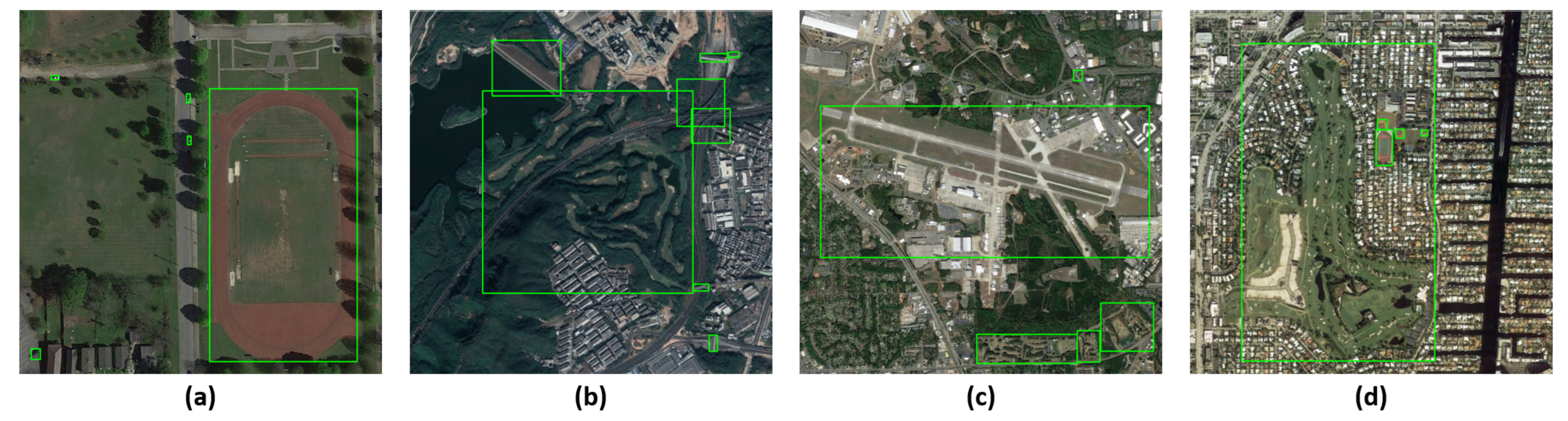

2.1. Object Detection for Remote Sensing Images

2.2. Attention Mechanism

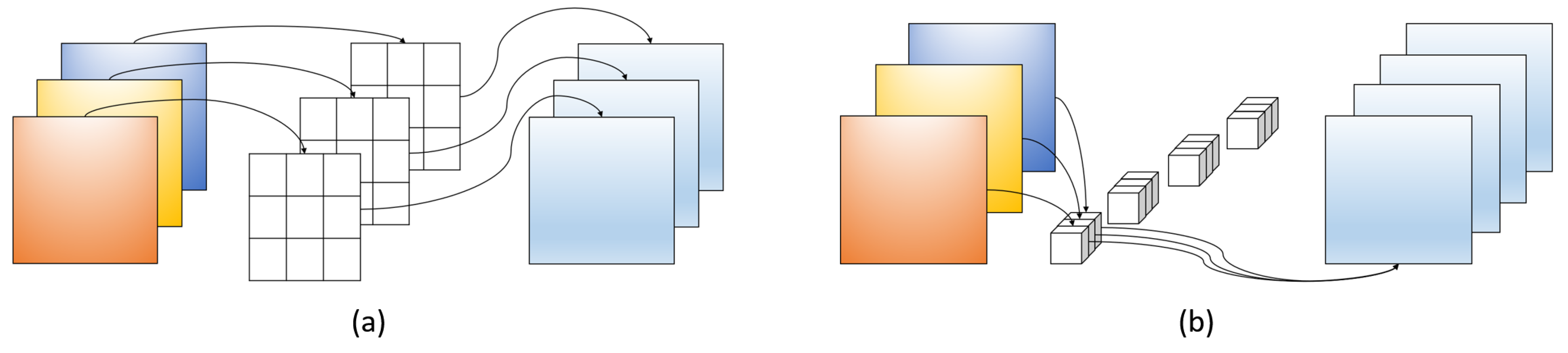

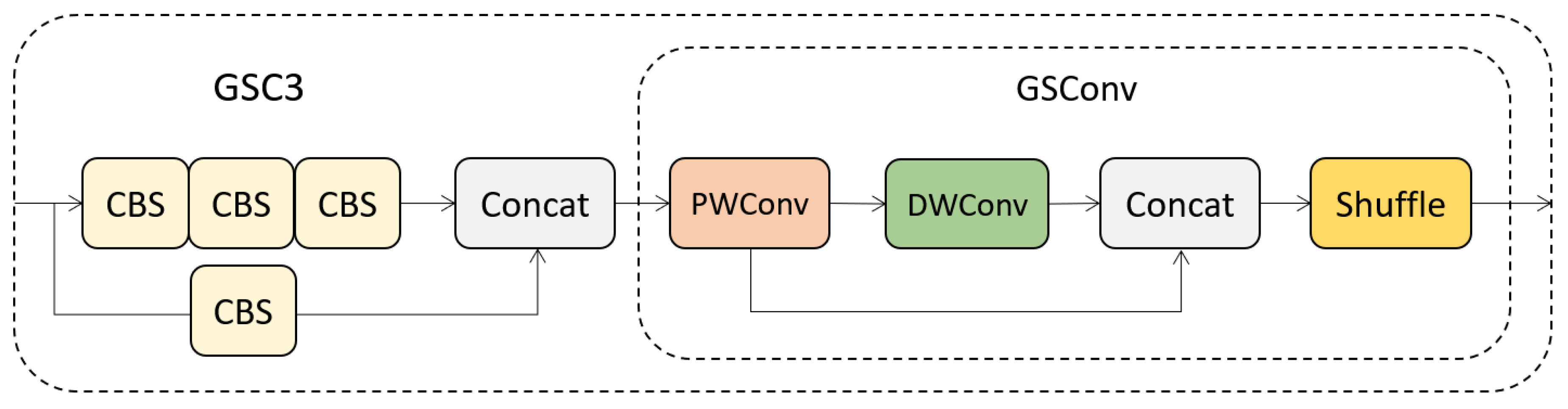

2.3. GSConv

3. Methods

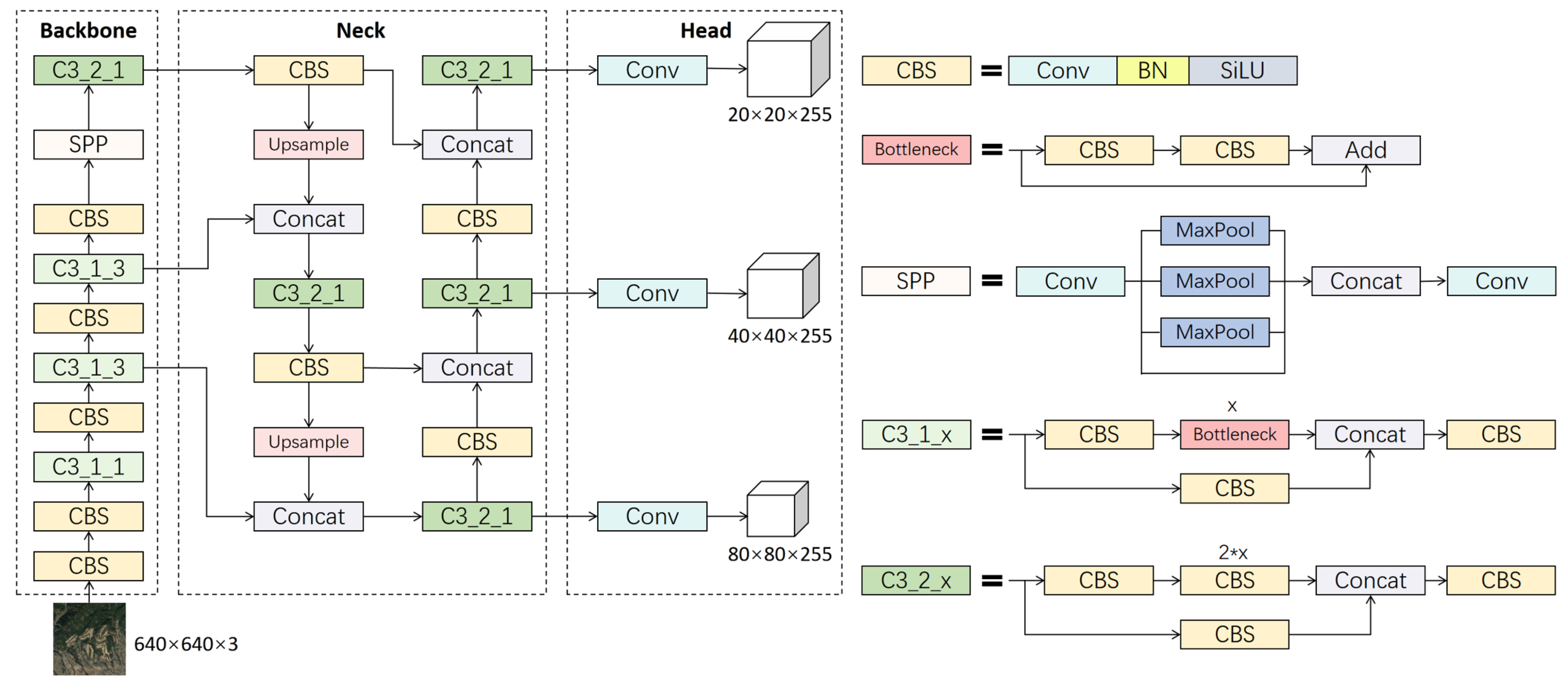

3.1. Review of YOLOv5s

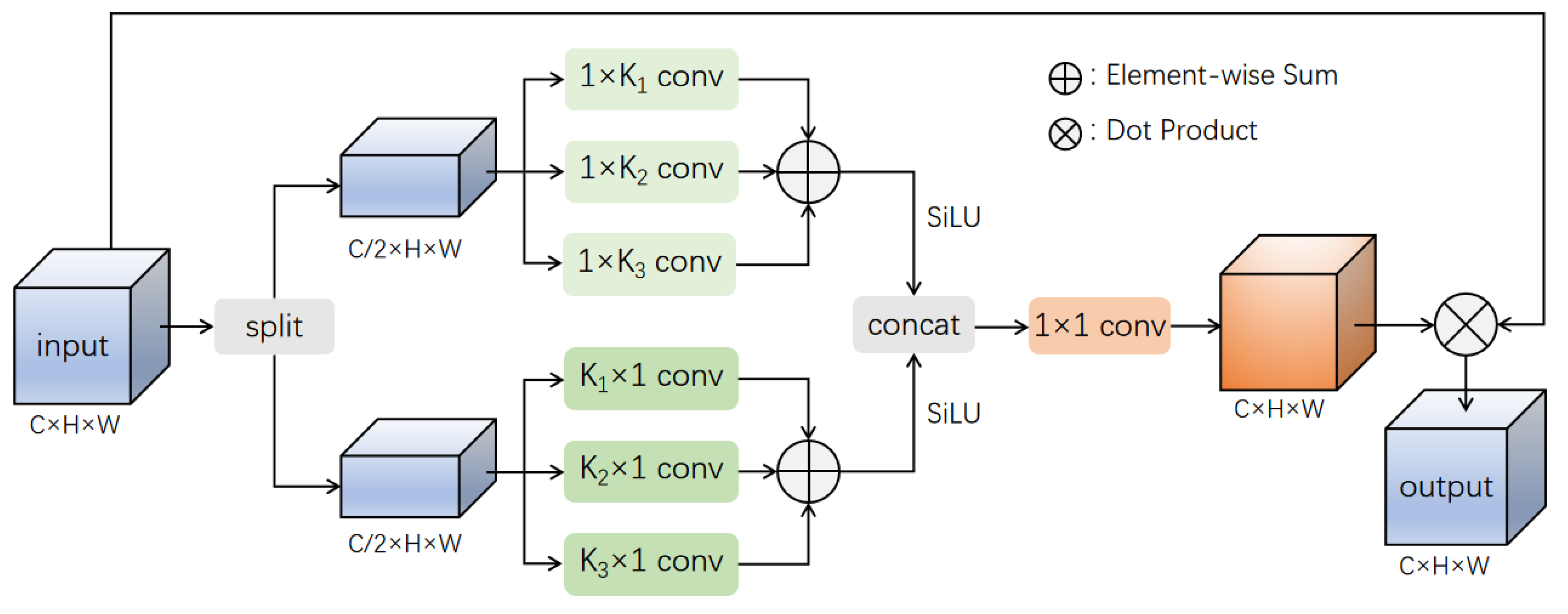

3.2. Multi-Scale Strip Convolution Attention Mechanism

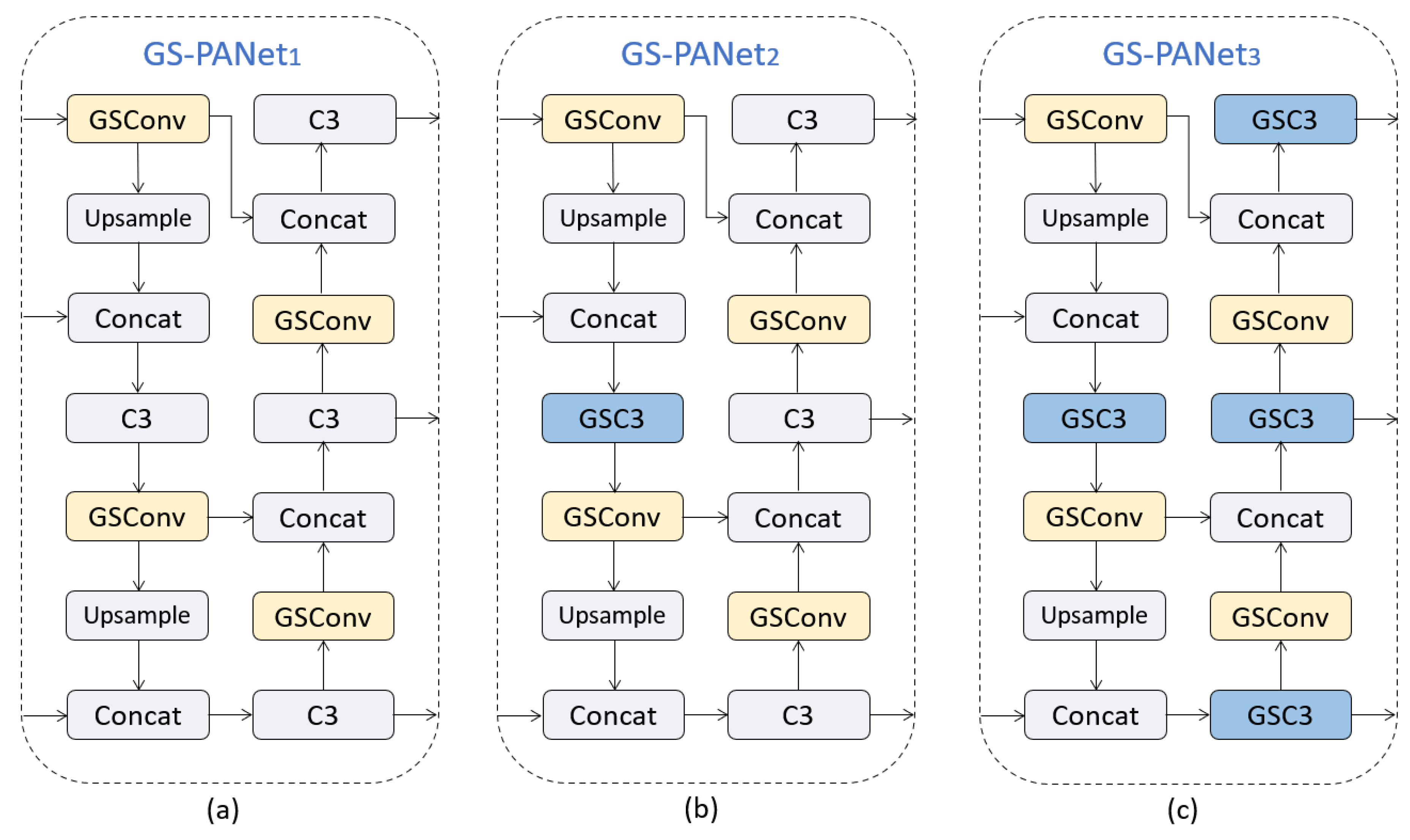

3.3. Improved PANet by GSConv

3.4. Wise-Focal CIoU Loss

3.5. Discussion

4. Experiments

4.1. Datasets

4.2. Experiments Settings

4.3. Evaluation Metrics

4.4. Experiment Results and Discussion

4.4.1. Ablation Experiments

4.4.2. Comparative Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Cheng, G.; Han, J. A Survey on Object Detection in Optical Remote Sensing Images. arXiv 2016, arXiv:1603.06201.

2. Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

3. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99.

4. Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving Into High Quality Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2017; pp. 6154–6162.

5. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R.B. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 42, 386–397.

6. Lin, T.Y.; Goyal, P.; Girshick, R.B.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 42, 318–327.

7. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.E.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision, Santiago, Chile, 7–13 December 2015.

8. Redmon, J.; Divvala, S.K.; Girshick, R.B.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2015; pp. 779–788.

9. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

10. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

11. Jocher, G.; Stoken, G.; Borovec, A.; Chaurasia, J.; Changyu, A.; Hogan, L.; Hajek, A.; Diaconu, J.; Kwon, L.; Defretin, Y.; et al. Ultralytics/yolov5: V5.0—YOLOv5-P6 1280 Models, AWS, Supervise.ly and YouTube Integrations. Zenodo 2021.

12. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

13. Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully Convolutional One-Stage Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9626–9635.

14. Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430.

15. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. arXiv 2020, arXiv:2005.12872.

16. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. arXiv 2020, arXiv:2010.04159.

17. SushmaLeela, T.; Chandrakanth, R.; Saibaba, J.; Varadan, G.; Mohan, S.S. Mean-shift based object detection and clustering from high resolution remote sensing imagery. In Proceedings of the 2013 Fourth National Conference on Computer Vision, Pattern Recognition, Image Processing and Graphics (NCVPRIPG), Jodhpur, India, 18–21 December 2013; pp. 1–4.

18. Paul, S.B.; Pati, U.C. Remote Sensing Optical Image Registration Using Modified Uniform Robust SIFT. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1300–1304.

19. Wang, Y.; Xu, C.; Liu, C.; Li, Z. Context Information Refinement for Few-Shot Object Detection in Remote Sensing Images. Remote Sens. 2022, 14, 3255.

20. Niu, R.; Zhi, X.; Jiang, S.; Gong, J.; Zhang, W.; Yu, L. Aircraft Target Detection in Low Signal-to-Noise Ratio Visible Remote Sensing Images. Remote Sens. 2023, 15, 1971.

21. Xie, Y.; Cai, J.; Bhojwani, R.; Shekhar, S.; Knight, J.F. A locally-constrained YOLO framework for detecting small and densely-distributed building footprints. Int. J. Geogr. Inf. Sci. 2020, 34, 777–801.

22. Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-captured Scenarios. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, QC, Canada, 11–17 October 2021; pp. 2778–2788.

23. Sun, Y.; Liu, W.; Gao, Y.; Hou, X.; Bi, F. A Dense Feature Pyramid Network for Remote Sensing Object Detection. Appl. Sci. 2022, 12, 4997.

24. Wan, X.; Yu, J.; Tan, H.; Wang, J. LAG: Layered Objects to Generate Better Anchors for Object Detection in Aerial Images. Sensors 2022, 22, 3891.

25. Dong, X.; Qin, Y.; Fu, R.; Gao, Y.; Liu, S.; Ye, Y.; Li, B. Multiscale Deformable Attention and Multilevel Features Aggregation for Remote Sensing Object Detection. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

26. Yu, D.; Ji, S. A new spatial-oriented object detection framework for remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–16.

27. Wang, J.; Yang, W.; Li, H.; Zhang, H.; Xia, G. Learning Center Probability Map for Detecting Objects in Aerial Images. IEEE Trans. Geosci. Remote Sens. 2020, 59, 4307–4323.

28. Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 42, 2011–2023.

29. Wang, Q.; Wu, B.; Zhu, P.F.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2019; pp. 11531–11539.

30. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

31. Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Online, 19–25 June 2021; pp. 13708–13717.

32. Misra, D.; Nalamada, T.; Arasanipalai, A.U.; Hou, Q. Rotate to Attend: Convolutional Triplet Attention Module. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2020; pp. 3138–3147.

33. Wang, X.; Girshick, R.B.; Gupta, A.K.; He, K. Non-local Neural Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2017; pp. 7794–7803.

34. Liu, J.; Hou, Q.; Cheng, M.M.; Wang, C.; Feng, J. Improving Convolutional Networks With Self-Calibrated Convolutions. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10093–10102.

35. Zhu, L.; Wang, X.; Ke, Z.; Zhang, W.; Lau, R.W.H. BiFormer: Vision Transformer with Bi-Level Routing Attention. arXiv 2023, arXiv:2303.08810.

36. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

37. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2017; pp. 6848–6856.

38. Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2019, arXiv:1905.11946.

39. Li, H.; Li, J.; Wei, H.; Liu, Z.; Zhan, Z.; Ren, Q. Slim-neck by GSConv: A better design paradigm of detector architectures for autonomous vehicles. arXiv 2022, arXiv:2206.02424.

40. Lin, T.Y.; Dollár, P.; Girshick, R.B.; He, K.; Hariharan, B.; Belongie, S.J. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

41. Cao, Y.; Chen, K.; Loy, C.C.; Lin, D. Prime Sample Attention in Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2019; pp. 11580–11588.

42. Pang, J.; Chen, K.; Shi, J.; Feng, H.; Ouyang, W.; Lin, D. Libra R-CNN: Towards Balanced Learning for Object Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 821–830.

43. Zhang, H.; Chang, H.; Ma, B.; Wang, N.; Chen, X. Dynamic R-CNN: Towards High Quality Object Detection via Dynamic Training. In Proceedings of the European Conference on Computer Vision, Online, 23–28 August 2020.

44. Girshick, R.B. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

45. Zhang, Y.F.; Ren, W.; Zhang, Z.; Jia, Z.; Wang, L.; Tan, T. Focal and efficient IOU loss for accurate bounding box regression. Neurocomputing 2022, 506, 146–157.

46. Jaderberg, M.; Simonyan, K.; Zisserman, A.; Kavukcuoglu, K. Spatial Transformer Networks. In Proceedings of the NIPS, Montreal, QC, Canada, 7–10 December 2015.

47. Zhang, Y.; Yuan, Y.; Feng, Y.; Lu, X. Hierarchical and robust convolutional neural network for very high-resolution remote sensing object detection. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5535–5548.

48. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Part V 13. Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

| Method | AP50 | AP50:95 | Params (M) |
|---|---|---|---|
| GS-PANet1 | 78.0 | 56.3 | 6.67 |
| GS-PANet2 | 78.2 | 56.5 | 6.63 |
| GS-PANet3 | 77.9 | 56.3 | 6.47 |

| Method | AP50 | | AP50:95 |
|---|---|---|---|
| Wise-Focal CIoU =2 | 77.4 | 60.9 | 56.2 |
| Wise-Focal CIoU =3 | 77.3 | 60.6 | 56.2 |
| Wise-Focal CIoU =4 | 77.2 | 60.3 | 56.1 |
| Wise-Focal CIoU =5 | 76.9 | 60.4 | 56.1 |
| Wise-Focal CIoU =6 | 76.2 | 60.0 | 55.7 |

| Method | MSCAM | GS-PANet | Wise-Focal CIoU Loss | AP50 | AP50:95 | Params (M) |
|---|---|---|---|---|---|---|
| YOLOv5s (Baseline) | | | | 77.7 | 55.7 | 7.11 |
| 1 | ✓ | | | 79.2 | 57.7 | 7.38 |
| 2 | | ✓ | | 78.2 | 56.5 | 6.63 |
| 3 | | | ✓ | 77.4 | 56.2 | 7.11 |
| 4 | | ✓ | ✓ | 78.3 | 57.2 | 6.63 |
| MSA-YOLO | ✓ | ✓ | ✓ | 79.3 | 58.6 | 6.91 |

| Attention Mechanism | AP50 | | | | | AP50:95 | Params (M) |
|---|---|---|---|---|---|---|---|
| No (Baseline) | 77.7 | 60.4 | 11.4 | 38.4 | 67.5 | 55.7 | 7.11 |
| SENet [28] | 78.0 | 60.4 | 11.2 | 38.2 | 67.2 | 55.7 | 7.15 |
| CBAM [30] | 77.4 | 60.2 | 10.5 | 38.4 | 67.3 | 55.6 | 7.18 |
| TAM [32] | 78.4 | 60.5 | 10.8 | 38.6 | 67.7 | 56.1 | 7.11 |
| CA [31] | 78.6 | 61.2 | 12.1 | 39.3 | 56.4 | 67.9 | 7.22 |
| Non-Local [33] | 78.2 | 60.7 | 10.7 | 39.5 | 67.4 | 56.0 | 7.63 |
| BRA [35] | 78.3 | 60.6 | 11.5 | 38.6 | 67.6 | 56.2 | 8.16 |
| MSCAM | 79.2 | 62.7 | 11.8 | 39.5 | 69.7 | 57.7 | 7.38 |

| Model | AP50 | | | | | AP50:95 | Params (M) |
|---|---|---|---|---|---|---|---|
| Faster RCNN [3] | 73.6 | 56.5 | 7.4 | 30.9 | 63.0 | 51.2 | 41.22 |
| Cascade RCNN [4] | 74.1 | 59.8 | 7.7 | 33.6 | 67.2 | 54.5 | 68.96 |
| YOLOv3 [9] | 75.7 | 60.6 | 10.1 | 36.5 | 67.6 | 55.5 | 62.67 |
| YOLOv5s [11] | 77.7 | 60.4 | 11.4 | 38.4 | 67.5 | 55.7 | 7.11 |
| YOLOX-S [14] | 76.5 | 58.2 | 10.6 | 37.6 | 65.3 | 53.9 | 8.96 |
| YOLOv7-tiny [12] | 78.9 | 62.3 | 10.1 | 39.0 | 69.6 | 57.6 | 6.06 |
| MSA-YOLO (ours) | 79.3 | 63.3 | 12.6 | 40.1 | 70.3 | 58.6 | 6.91 |

| Model | | | | | | | Params (M) |
|---|---|---|---|---|---|---|---|
| Faster RCNN [3] | 88.4 | 67.9 | 34.4 | 50.3 | 56.9 | 59.1 | 41.22 |
| Cascade RCNN [4] | 88.3 | 71.0 | 34.4 | 51.8 | 59.8 | 62.0 | 68.96 |
| YOLOv3 [9] | 89.5 | 68.9 | 36.9 | 51.4 | 59.5 | 61.3 | 62.67 |
| YOLOv5s [11] | 91.7 | 74.8 | 41.7 | 53.7 | 62.8 | 65.5 | 7.11 |
| YOLOX-S [14] | 91.4 | 73.2 | 42.8 | 54.2 | 62.0 | 64.8 | 8.96 |
| YOLOv7-tiny [12] | 91.5 | 73.3 | 41.3 | 53.3 | 62.3 | 64.7 | 6.06 |
| MSA-YOLO (ours) | 93.0 | 77.0 | 47.1 | 54.5 | 64.8 | 67.4 | 6.91 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Su, Z.; Yu, J.; Tan, H.; Wan, X.; Qi, K. MSA-YOLO: A Remote Sensing Object Detection Model Based on Multi-Scale Strip Attention. Sensors 2023, 23, 6811. https://doi.org/10.3390/s23156811
