Interactive Attention Learning on Detection of Lane and Lane Marking on the Road by Monocular Camera Image

Abstract

1. Introduction

- We propose a novel multi-task encoder–decoder architecture. It is the first to introduce the concept of interactive attention learning into the joint detection of lane and lane marking.

- We propose a Deformable Feature Fusion (DFF) module to compute discriminative encoding features, and employ a Cross-Context module to transfer information between the prediction heads, thus shifting the focus of learning onto the spatial correlation between lane and lane marking.

- We propose an enhanced loss function that combines a Cross-IoU (CIoU) loss, which emphasizes the interaction between lane and lane marking, with an adaptive, focal-style pixel-level loss weighting that alleviates data imbalance.
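The loss described above pairs a Dice segmentation loss with an adaptive, focal-style pixel weighting (Sections 3.5.1 and 3.5.2). Their exact form is not reproduced in this section, so the following is only a minimal sketch, assuming binary masks, per-pixel sigmoid probabilities, the standard soft Dice loss of V-Net [51], and the focal weight (1 − p_t)^γ of Lin et al. [52] applied to binary cross-entropy. The helper names, the default γ = 2, and the equal-weight sum in `combined_loss` are illustrative assumptions rather than the authors' settings; the Cross-IoU term is omitted because its definition is not given here.

```python
import numpy as np


def soft_dice_loss(prob, target, eps=1e-6):
    """Standard soft Dice loss for a binary mask (V-Net style)."""
    prob = np.asarray(prob, dtype=float).ravel()
    target = np.asarray(target, dtype=float).ravel()
    inter = np.sum(prob * target)
    return 1.0 - (2.0 * inter + eps) / (np.sum(prob) + np.sum(target) + eps)


def focal_bce(prob, target, gamma=2.0, eps=1e-7):
    """Binary cross-entropy scaled per pixel by the focal weight (1 - p_t)^gamma."""
    prob = np.clip(np.asarray(prob, dtype=float), eps, 1.0 - eps)
    target = np.asarray(target, dtype=float)
    p_t = np.where(target > 0.5, prob, 1.0 - prob)  # probability of the true class
    weight = (1.0 - p_t) ** gamma                   # confident pixels -> weight near 0
    return float(np.mean(-weight * np.log(p_t)))


def combined_loss(prob, target, lam=1.0, gamma=2.0):
    """Hypothetical total: Dice + lam * focal BCE (lam and gamma are assumptions)."""
    return soft_dice_loss(prob, target) + lam * focal_bce(prob, target, gamma)
```

With γ = 0 the focal term reduces to plain binary cross-entropy; larger γ shrinks the contribution of pixels that are already classified confidently, which is how focal-style weighting keeps the abundant, easy background pixels from dominating thin structures such as lane markings.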

2. Related Works

2.1. Lane Marking Detection

2.2. Lane Detection

2.3. Multi-Task Approaches

3. Methodology

3.1. Architecture Overview

3.2. Encoder

3.2.1. Input

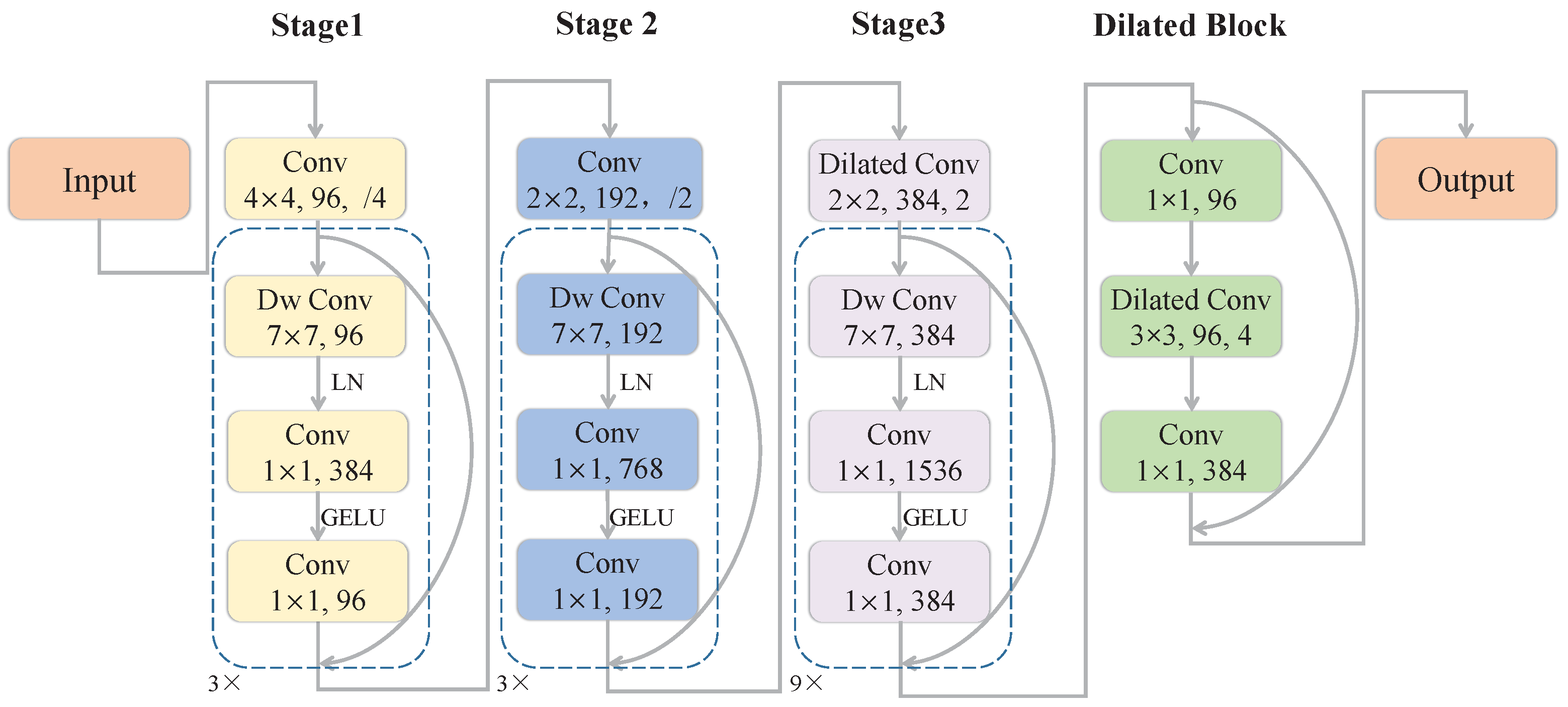

3.2.2. Backbone

3.2.3. Neck

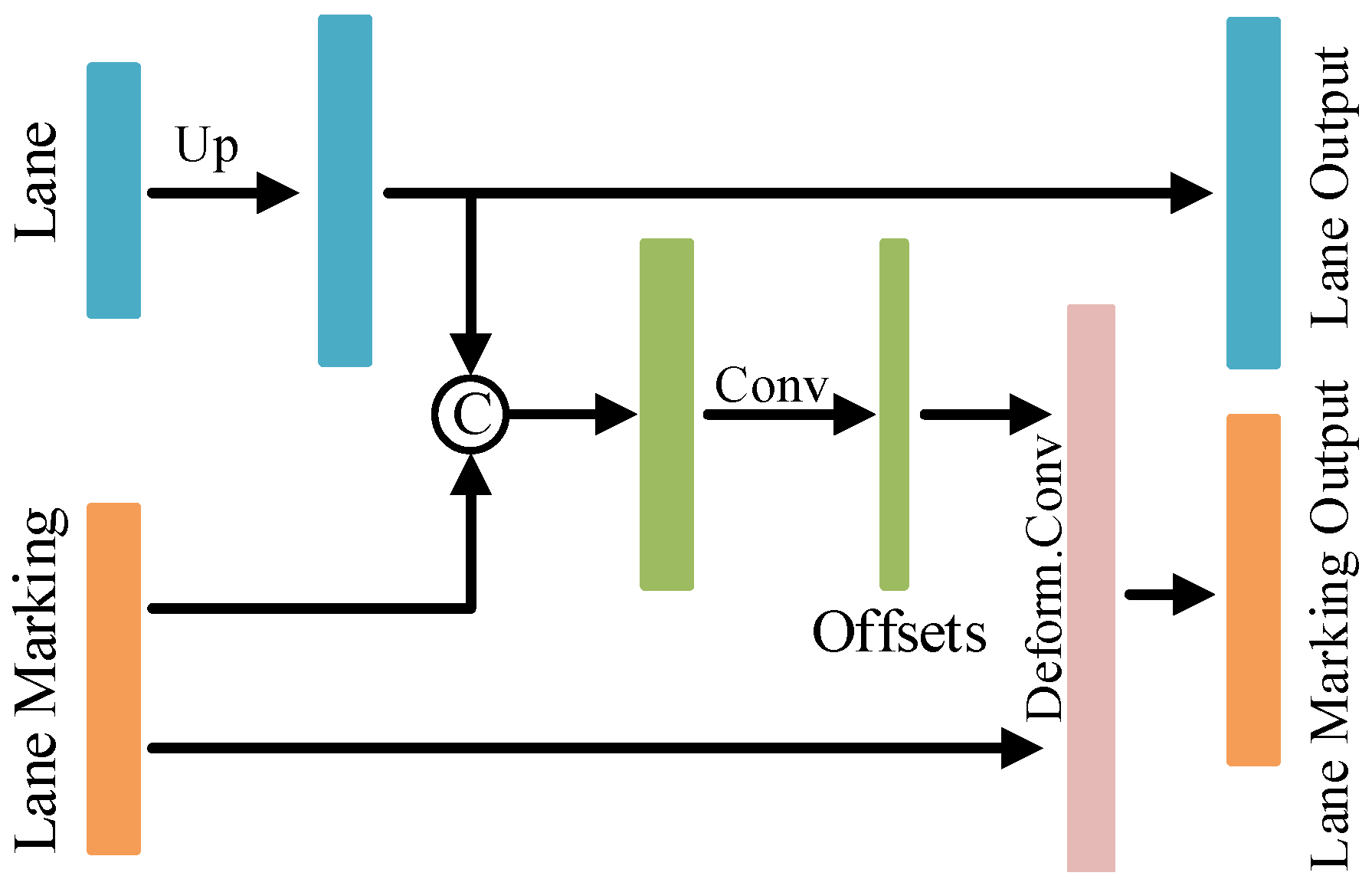

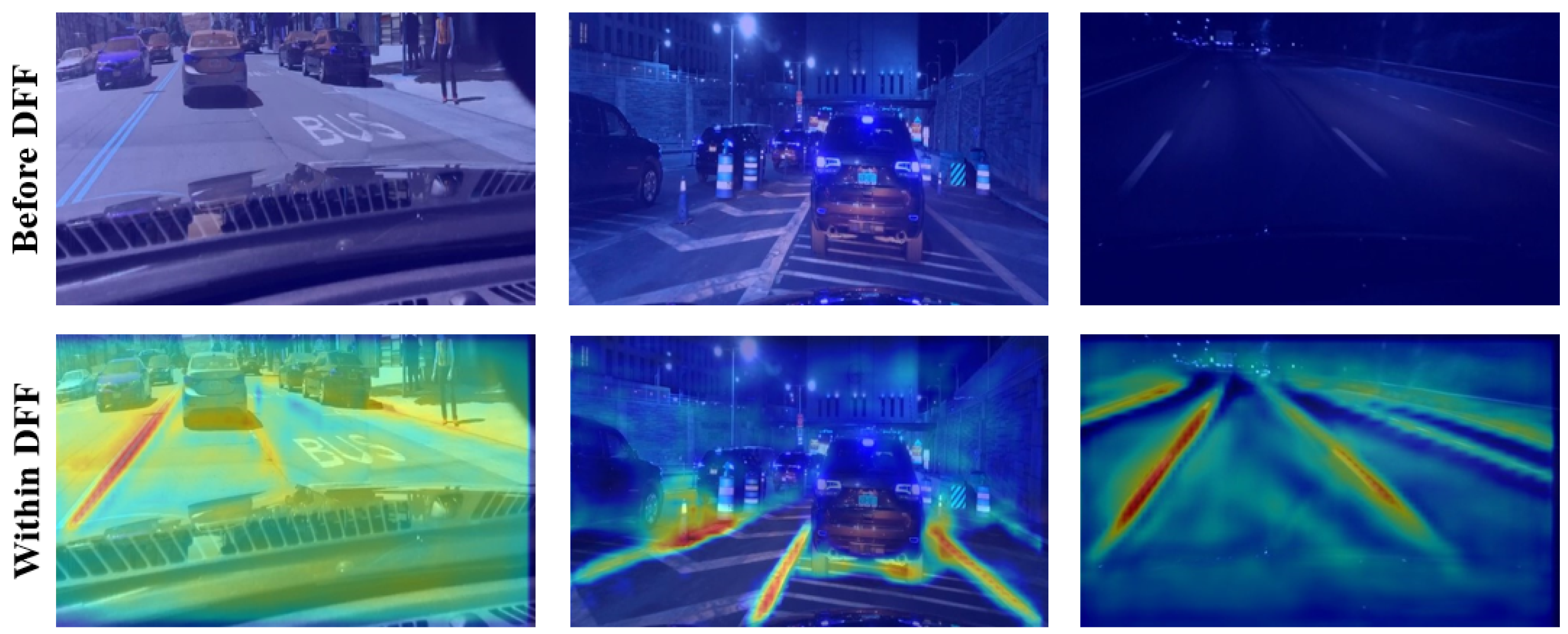

3.3. Deformable Feature Fusion Module

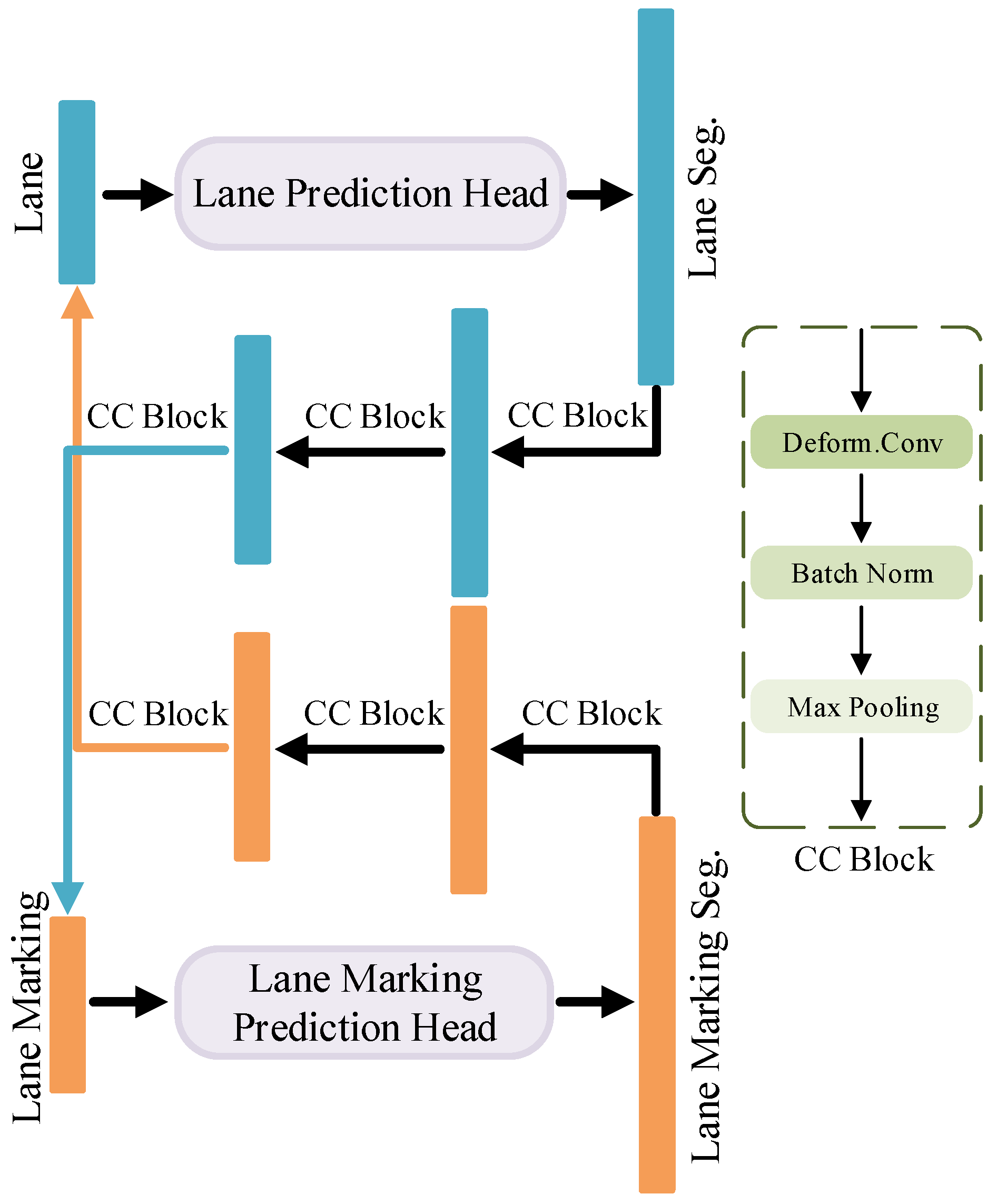

3.4. Decoder

3.4.1. Lane Marking Prediction Head

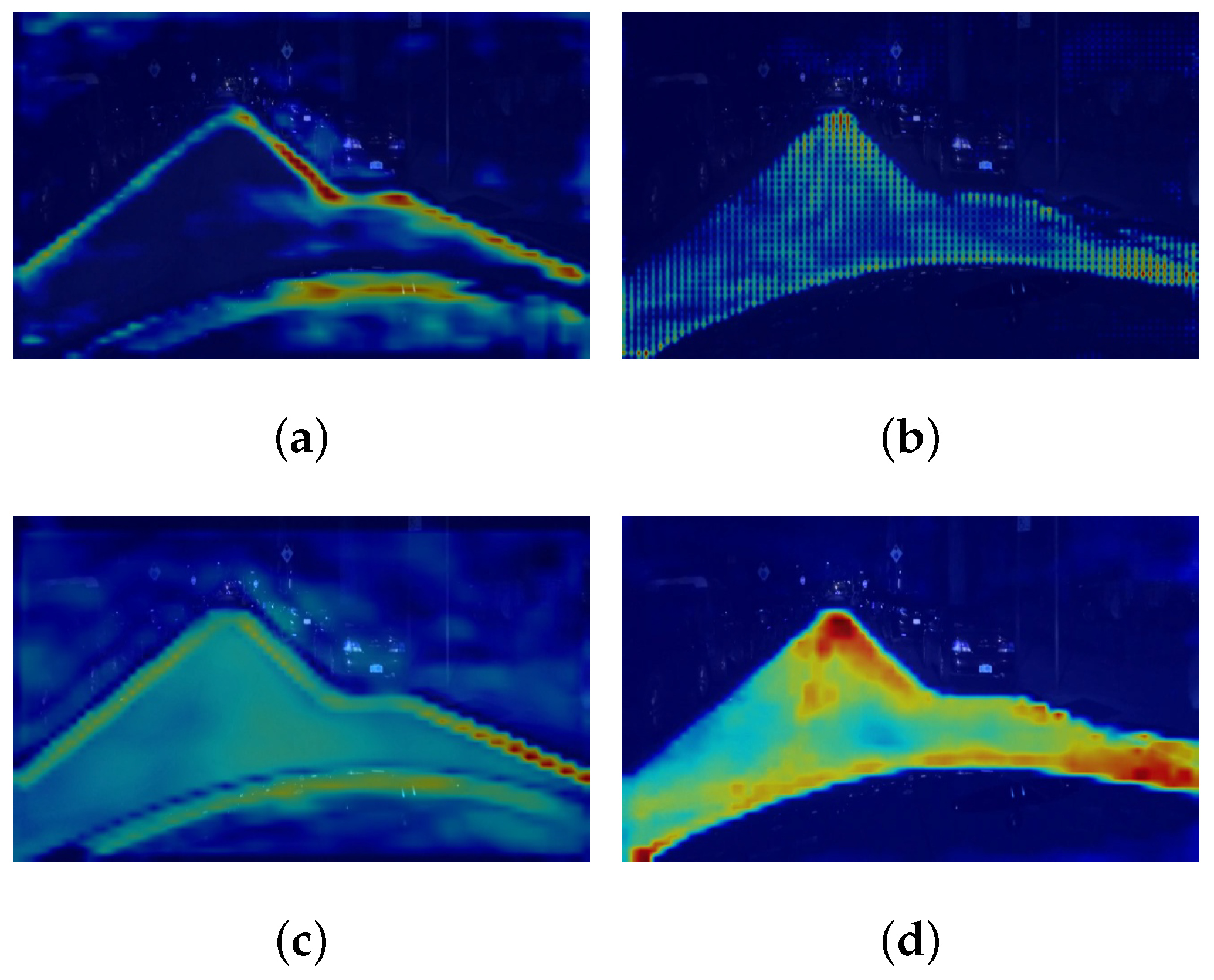

3.4.2. Lane Prediction Head

3.4.3. Cross-Context Module

3.5. Loss Function

3.5.1. Segmentation Dice Loss

3.5.2. Focal-Style Loss Weighting

3.5.3. Cross-IoU Loss

3.5.4. Total Learning Loss

4. Experiments and Evaluation

4.1. Implementation Details

4.1.1. Datasets

4.1.2. Metric

4.1.3. Training

4.2. Comparison with State-of-the-Arts

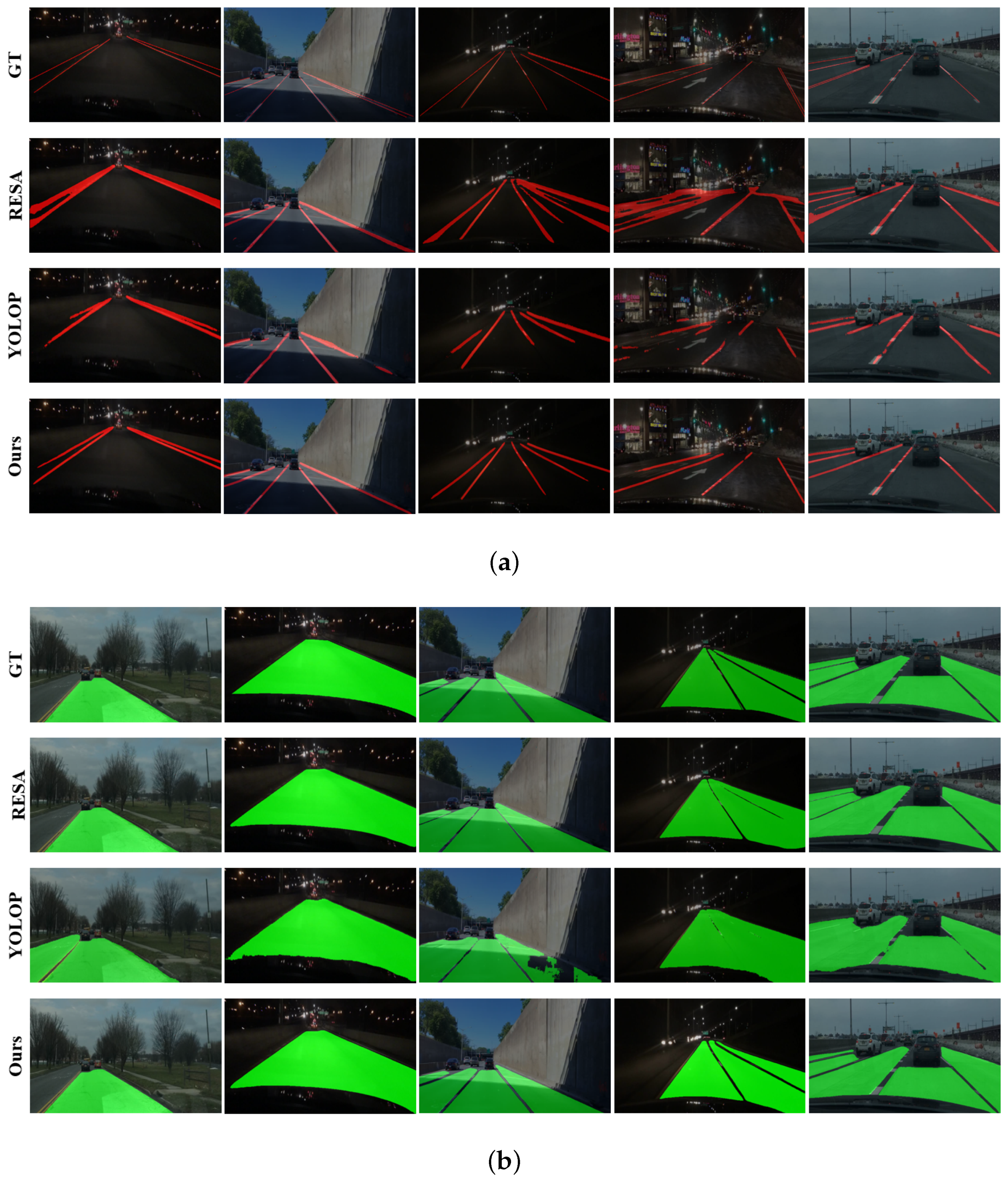

4.2.1. Lane Marking Detection

4.2.2. Lane Detection

4.3. Exploration on Interaction Learning Modules

4.3.1. DFF Module

4.3.2. Cross-Context Module

4.3.3. Focal-Style Loss Weighting

4.4. Further Exploration on Architecture Design

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. HERE. HERE HD Live Map: The Most Intelligent Sensor for Autonomous Driving. 2022. Available online: https://www.here.com/platform/automotive-services/hd-maps (accessed on 1 December 2022).

2. TomTom. HD Maps—Highly Accurate Border-to-Border Model of the Road. 2022. Available online: https://www.tomtom.com/products/hd-map (accessed on 1 December 2022).

3. Homayounfar, N.; Ma, W.C.; Liang, J.; Wu, X.; Fan, J.; Urtasun, R. DAGMapper: Learning to map by discovering lane topology. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2911–2920.

4. Chiu, K.Y.; Lin, S.F. Lane detection using color-based segmentation. In Proceedings of the IEEE Intelligent Vehicles Symposium, Las Vegas, NV, USA, 6–8 June 2005; pp. 706–711.

5. Satzoda, R.K.; Sathyanarayana, S.; Srikanthan, T.; Sathyanarayana, S. Hierarchical additive Hough transform for lane detection. IEEE Embed. Syst. Lett. 2010, 2, 23–26.

6. Hou, Y.; Ma, Z.; Liu, C.; Loy, C.C. Learning lightweight lane detection CNNs by self attention distillation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1013–1021.

7. Chen, Z.; Liu, Q.; Lian, C. PointLaneNet: Efficient end-to-end CNNs for accurate real-time lane detection. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 2563–2568.

8. Tian, W.; Ren, X.; Yu, X.; Wu, M.; Zhao, W.; Li, Q. Vision-based mapping of lane semantics and topology for intelligent vehicles. Int. J. Appl. Earth Obs. Geoinf. 2022, 111, 102851.

9. Pan, X.; Shi, J.; Luo, P.; Wang, X.; Tang, X. Spatial as deep: Spatial CNN for traffic scene understanding. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

10. Zheng, T.; Fang, H.; Zhang, Y.; Tang, W.; Yang, Z.; Liu, H.; Cai, D. RESA: Recurrent feature-shift aggregator for lane detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; Volume 35, pp. 3547–3554.

11. Li, X.; Li, J.; Hu, X.; Yang, J. Line-CNN: End-to-end traffic line detection with line proposal unit. IEEE Trans. Intell. Transp. Syst. 2019, 21, 248–258.

12. Tabelini, L.; Berriel, R.; Paixao, T.M.; Badue, C.; De Souza, A.F.; Oliveira-Santos, T. Keep your eyes on the lane: Real-time attention-guided lane detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 294–302.

13. Tabelini, L.; Berriel, R.; Paixao, T.M.; Badue, C.; De Souza, A.F.; Oliveira-Santos, T. PolyLaneNet: Lane estimation via deep polynomial regression. In Proceedings of the International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2021; pp. 6150–6156.

14. Liu, R.; Yuan, Z.; Liu, T.; Xiong, Z. End-to-end lane shape prediction with transformers. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Online, 5–9 January 2021; pp. 3694–3702.

15. Feng, Z.; Guo, S.; Tan, X.; Xu, K.; Wang, M.; Ma, L. Rethinking Efficient Lane Detection via Curve Modeling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17062–17070.

16. Yu, F.; Chen, H.; Wang, X.; Xian, W.; Chen, Y.; Liu, F.; Madhavan, V.; Darrell, T. BDD100K: A diverse driving dataset for heterogeneous multitask learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2636–2645.

17. TuSimple. 2022. Available online: http://benchmark.tusimple.ai/ (accessed on 1 December 2022).

18. Loose, H.; Franke, U.; Stiller, C. Kalman particle filter for lane recognition on rural roads. In Proceedings of the IEEE Intelligent Vehicles Symposium, Xi’an, China, 3–5 June 2009; pp. 60–65.

19. Teng, Z.; Kim, J.H.; Kang, D.J. Real-time lane detection by using multiple cues. In Proceedings of the International Conference on Control Automation and Systems (ICCAS 2010), Suwon, Republic of Korea, 27–30 October 2010; pp. 2334–2337.

20. Aly, M. Real time detection of lane markers in urban streets. In Proceedings of the IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; pp. 7–12.

21. Assidiq, A.A.; Khalifa, O.O.; Islam, M.R.; Khan, S. Real time lane detection for autonomous vehicles. In Proceedings of the International Conference on Computer and Communication Engineering, Kuala Lumpur, Malaysia, 13–15 May 2008; pp. 82–88.

22. Azimi, S.M.; Fischer, P.; Körner, M.; Reinartz, P. Aerial LaneNet: Lane-marking semantic segmentation in aerial imagery using wavelet-enhanced cost-sensitive symmetric fully convolutional neural networks. IEEE Trans. Geosci. Remote Sens. 2018, 57, 2920–2938.

23. Guan, H.; Lei, X.; Yu, Y.; Zhao, H.; Peng, D.; Junior, J.M.; Li, J. Road marking extraction in UAV imagery using attentive capsule feature pyramid network. Int. J. Appl. Earth Obs. Geoinf. 2022, 107, 102677.

24. Xu, H.; Wang, S.; Cai, X.; Zhang, W.; Liang, X.; Li, Z. CurveLane-NAS: Unifying lane-sensitive architecture search and adaptive point blending. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 689–704.

25. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; Volume 28.

26. Qin, Z.; Wang, H.; Li, X. Ultra fast structure-aware deep lane detection. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 276–291.

27. Yoo, S.; Lee, H.S.; Myeong, H.; Yun, S.; Park, H.; Cho, J.; Kim, D.H. End-to-end lane marker detection via row-wise classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 1006–1007.

28. Liu, L.; Chen, X.; Zhu, S.; Tan, P. CondLaneNet: A top-to-down lane detection framework based on conditional convolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3773–3782.

29. Wang, Q.; Wang, L.; Chi, Y.; Shen, T.; Song, J.; Gao, J.; Shen, S. Dynamic Data Augmentation Based on Imitating Real Scene for Lane Line Detection. Remote Sens. 2023, 15, 1212.

30. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

31. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

32. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

33. Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.

34. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

35. He, X.; Zhou, Y.; Zhao, J.; Zhang, D.; Yao, R.; Xue, Y. Swin Transformer Embedding UNet for Remote Sensing Image Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

36. Xie, W.; Liu, P.X.; Zheng, M. Moving object segmentation and detection for robust RGBD-SLAM in dynamic environments. IEEE Trans. Instrum. Meas. 2020, 70, 1–8.

37. Meyer, A.; Salscheider, N.O.; Orzechowski, P.F.; Stiller, C. Deep semantic lane segmentation for mapless driving. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 869–875.

38. Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

39. Sun, T.; Di, Z.; Che, P.; Liu, C.; Wang, Y. Leveraging crowdsourced GPS data for road extraction from aerial imagery. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7509–7518.

40. Fontanelli, D.; Moro, F.; Rizano, T.; Palopoli, L. Vision-based robust path reconstruction for robot control. IEEE Trans. Instrum. Meas. 2013, 63, 826–837.

41. Teichmann, M.; Weber, M.; Zoellner, M.; Cipolla, R.; Urtasun, R. MultiNet: Real-time joint semantic reasoning for autonomous driving. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Suzhou, China, 26–30 June 2018; pp. 1013–1020.

42. Qian, Y.; Dolan, J.M.; Yang, M. DLT-Net: Joint detection of drivable areas, lane lines, and traffic objects. IEEE Trans. Intell. Transp. Syst. 2019, 21, 4670–4679.

43. Chen, Z.; Chen, Z. RBNet: A deep neural network for unified road and road boundary detection. In Proceedings of the International Conference on Neural Information Processing, Long Beach, CA, USA, 4–9 December 2017; pp. 677–687.

44. Zhang, J.; Xu, Y.; Ni, B.; Duan, Z. Geometric constrained joint lane segmentation and lane boundary detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 486–502.

45. Liu, Y.; Yao, J.; Lu, X.; Xia, M.; Wang, X.; Liu, Y. RoadNet: Learning to comprehensively analyze road networks in complex urban scenes from high-resolution remotely sensed images. IEEE Trans. Geosci. Remote Sens. 2018, 57, 2043–2056.

46. Meng, Z.; Xia, X.; Xu, R.; Liu, W.; Ma, J. HYDRO-3D: Hybrid Object Detection and Tracking for Cooperative Perception Using 3D LiDAR. IEEE Trans. Intell. Veh. 2023, 1–13.

47. Xia, X.; Meng, Z.; Han, X.; Li, H.; Tsukiji, T.; Xu, R.; Zheng, Z.; Ma, J. An automated driving systems data acquisition and analytics platform. Transp. Res. Part C Emerg. Technol. 2023, 151, 104120.

48. Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11976–11986.

49. Wu, D.; Liao, M.; Zhang, W.; Wang, X. YOLOP: You only look once for panoptic driving perception. arXiv 2021, arXiv:2108.11250.

50. Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 764–773.

51. Milletari, F.; Navab, N.; Ahmadi, S.A. V-Net: Fully convolutional neural networks for volumetric medical image segmentation. In Proceedings of the International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 565–571.

52. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

53. Rahman, M.A.; Wang, Y. Optimizing intersection-over-union in deep neural networks for image segmentation. In Proceedings of the International Symposium on Visual Computing, Las Vegas, NV, USA, 12–14 December 2016; pp. 234–244.

54. Paszke, A.; Chaurasia, A.; Kim, S.; Culurciello, E. ENet: A deep neural network architecture for real-time semantic segmentation. arXiv 2016, arXiv:1606.02147.

55. Romera, E.; Alvarez, J.M.; Bergasa, L.M.; Arroyo, R. ERFNet: Efficient residual factorized ConvNet for real-time semantic segmentation. IEEE Trans. Intell. Transp. Syst. 2017, 19, 263–272.

56. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

| Network | Accuracy (%) ↑ | IoU (%) ↑ | Speed (fps) ↑ |
|---|---|---|---|
| ENet [54] | 34.12 | 14.64 | 100 |
| SCNN [9] | 35.79 | 15.84 | 19.8 |
| ENet-SAD [6] | 36.56 | 16.02 | 50.6 |
| RESA [10] | 61.26 | 16.71 | 47.4 |
| YOLOP [49] | 70.50 | 26.20 | 41 |
| Ours | 81.61 | 32.53 | 26 |

| Network | mIoU (%) ↑ | Speed (fps) ↑ |
|---|---|---|
| ERFNet [55] | 68.7 | 22.8 |
| MultiNet [41] | 71.6 | 8.6 |
| DLT-Net [42] | 72.1 | 9.3 |
| PSPNet [34] | 89.6 | 11.1 |
| RESA [10] | 89.3 | 47.4 |
| YOLOP [49] | 91.5 | 41 |
| Ours | 91.72 | 26 |
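The two comparison tables above report IoU for lane-marking detection and mIoU for lane detection. The exact evaluation protocol of Section 4.1.2 is not reproduced here; under the common definition, the IoU of a class is TP / (TP + FP + FN) accumulated over all pixels, and mIoU is the mean of the per-class IoU values. The minimal sketch below computes both on integer label maps; treating the background as one of the averaged classes and skipping classes absent from both maps are assumptions, and the function names are illustrative.

```python
import numpy as np


def iou(pred, gt, cls):
    """IoU = TP / (TP + FP + FN) for one class label over all pixels."""
    p = np.asarray(pred) == cls
    g = np.asarray(gt) == cls
    union = np.logical_or(p, g).sum()
    if union == 0:
        return float("nan")  # class absent from both maps: excluded from the mean
    return np.logical_and(p, g).sum() / union


def mean_iou(pred, gt, num_classes):
    """Mean of per-class IoU, ignoring classes that appear in neither map."""
    scores = [iou(pred, gt, c) for c in range(num_classes)]
    return float(np.nanmean(scores))


pred = [[0, 1, 1], [0, 2, 2]]
gt = [[0, 1, 0], [0, 2, 2]]
print(mean_iou(pred, gt, num_classes=3))
```

In this toy example, class 0 scores 2/3, class 1 scores 1/2 (one correct pixel, one false positive), and class 2 scores 1, so the mIoU is about 0.722. Because IoU ignores true negatives, it is far less forgiving than pixel accuracy for thin, sparse targets such as lane markings.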

| Baseline | Multi-Scale | DFF | Cross-Context | CIoU | Focal-Style | Lane Marking IoU (%) ↑ | Lane mIoU (%) ↑ |
|---|---|---|---|---|---|---|---|
| ✓ | | | | | | 19.14 | 87.40 |
| ✓ | ✓ | | | | | 21.49 (+2.35) | 87.47 (+0.07) |
| ✓ | ✓ | ✓ | | | | 21.83 (+2.69) | 88.85 (+1.45) |
| ✓ | ✓ | ✓ | ✓ | | | 23.20 (+4.06) | 89.47 (+2.07) |
| ✓ | ✓ | ✓ | ✓ | ✓ | | 23.49 (+4.35) | 89.54 (+2.14) |
| ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 23.96 (+4.82) | 90.07 (+2.67) |
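In the ablation table, each row appears to add one module to the configuration of the row before it, and the parenthetical gains are measured against the baseline row rather than the preceding row. Assuming that cumulative reading, the contribution of each individual module can be recovered by differencing consecutive rows. The short script below does this with the values copied from the table; the names `ROWS` and `module_gains` are illustrative.

```python
# (configuration, lane marking IoU %, lane mIoU %) copied from the ablation table;
# assumption: each row adds exactly one module to the row before it.
ROWS = [
    ("Baseline", 19.14, 87.40),
    ("+ Multi-Scale", 21.49, 87.47),
    ("+ DFF", 21.83, 88.85),
    ("+ Cross-Context", 23.20, 89.47),
    ("+ CIoU", 23.49, 89.54),
    ("+ Focal-Style", 23.96, 90.07),
]


def module_gains(rows):
    """Return (name, gains vs. baseline, gains vs. previous row) for each added module."""
    _, base_marking, base_lane = rows[0]
    result = []
    for (_, prev_marking, prev_lane), (name, marking, lane) in zip(rows, rows[1:]):
        cumulative = (round(marking - base_marking, 2), round(lane - base_lane, 2))
        incremental = (round(marking - prev_marking, 2), round(lane - prev_lane, 2))
        result.append((name, cumulative, incremental))
    return result


for name, cum, inc in module_gains(ROWS):
    print(f"{name:16s} cumulative {cum}  incremental {inc}")
```

The cumulative column reproduces the parenthetical values in the table. The incremental column shows, for example, that adding DFF raises lane mIoU by 1.38 points over the multi-scale configuration, and adding the Cross-Context module raises lane-marking IoU by 1.37 points over the DFF configuration.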

| Backbone | Lane Marking IoU (%) ↑ | Lane mIoU (%) ↑ | Params (M) ↓ | FLOPs (G) ↓ | Speed (fps) ↑ |
|---|---|---|---|---|---|
| ResNet-18 | 30.39 | 90.54 | 17.05 | 89.83 | 58 |
| ResNet-34 | 30.46 | 90.61 | 27.16 | 139.46 | 40 |
| ConvNeXt-tiny | 31.48 | 91.29 | 18.35 | 96.52 | 39 |
| ConvNeXt-small | 32.53 | 91.72 | 39.97 | 200.07 | 26 |
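The backbone table reports speed as throughput in frames per second. Converting it to per-frame latency (1000 / fps) and relating accuracy gains to compute makes the trade-off between backbones easier to read. This conversion assumes the reported speed is sequential single-image inference on the authors' hardware, which this section does not specify; the values below are copied from the table, and the helper names are illustrative.

```python
# (backbone, lane marking IoU %, lane mIoU %, params M, FLOPs G, speed fps) from the table
BACKBONES = [
    ("ResNet-18", 30.39, 90.54, 17.05, 89.83, 58),
    ("ResNet-34", 30.46, 90.61, 27.16, 139.46, 40),
    ("ConvNeXt-tiny", 31.48, 91.29, 18.35, 96.52, 39),
    ("ConvNeXt-small", 32.53, 91.72, 39.97, 200.07, 26),
]


def latency_ms(fps):
    """Mean time per frame in milliseconds implied by a throughput in fps."""
    return 1000.0 / fps


def relative_cost(a, b):
    """Accuracy gains and cost increases of backbone b over backbone a."""
    _, iou_a, miou_a, _, flops_a, fps_a = a
    _, iou_b, miou_b, _, flops_b, fps_b = b
    return {
        "lane_marking_iou_gain": round(iou_b - iou_a, 2),
        "lane_miou_gain": round(miou_b - miou_a, 2),
        "flops_ratio": round(flops_b / flops_a, 2),
        "extra_latency_ms": round(latency_ms(fps_b) - latency_ms(fps_a), 1),
    }


for name, *_, fps in BACKBONES:
    print(f"{name:15s} {latency_ms(fps):5.1f} ms/frame")

print(relative_cost(BACKBONES[2], BACKBONES[3]))
```

For the step from ConvNeXt-tiny to ConvNeXt-small, this gives +1.05 lane-marking IoU and +0.43 lane mIoU for roughly 2.07 times the FLOPs and about 12.8 ms of extra latency per frame.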

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tian, W.; Yu, X.; Hu, H. Interactive Attention Learning on Detection of Lane and Lane Marking on the Road by Monocular Camera Image. Sensors 2023, 23, 6545. https://doi.org/10.3390/s23146545

Tian W, Yu X, Hu H. Interactive Attention Learning on Detection of Lane and Lane Marking on the Road by Monocular Camera Image. Sensors. 2023; 23(14):6545. https://doi.org/10.3390/s23146545

Chicago/Turabian Style: Tian, Wei, Xianwang Yu, and Haohao Hu. 2023. "Interactive Attention Learning on Detection of Lane and Lane Marking on the Road by Monocular Camera Image" Sensors 23, no. 14: 6545. https://doi.org/10.3390/s23146545

APA Style: Tian, W., Yu, X., & Hu, H. (2023). Interactive Attention Learning on Detection of Lane and Lane Marking on the Road by Monocular Camera Image. Sensors, 23(14), 6545. https://doi.org/10.3390/s23146545