1. Introduction

Bearings-only target tracking is widely applied in wireless sensor networks in both civilian and military areas [1,2]. Unlike other sensors such as range-only sensors and time difference of arrival (TDOA) sensors, bearings-only sensors work in passive mode and are therefore less likely to be detected and attacked. However, their accuracy is highly sensitive to range: at long range, even a small angle measurement error may lead to a large tracking error. Therefore, bearings-only target tracking has been a research area of considerable interest for decades. Meanwhile, with the development of unmanned vehicles, traditional stationary sensor platforms have evolved into mobile ones characterized by high speed and long endurance. Accordingly, flexible sensor motion coordination can be achieved, which significantly improves both tracking accuracy and survivability.

Much previous work has been dedicated to developing estimators for target tracking based on bearings-only measurements in two- and three-dimensional space [3,4,5,6]. The extended Kalman filter (EKF) is a classical method for the nonlinear tracking problem [7] but often diverges when the model nonlinearity is strong. The pseudolinear Kalman filter (PLKF) was introduced in [8,9], with better convergence than the EKF. However, its estimate is biased, and the bias depends strongly on the sensor geometry [10]. Furthermore, other estimation algorithms such as the unscented Kalman filter (UKF) [11], cubature Kalman filter (CKF) [12,13,14,15], and particle filter (PF) [16,17] have been applied to bearings-only target tracking, each with different estimation performance advantages.

Beyond the improvement in tracking accuracy produced by the estimation algorithms, sensor–target geometry plays a fundamental role in determining the accuracy of target tracking systems [18,19,20,21]. The Fisher information matrix (FIM) is a commonly used criterion for assessing target tracking accuracy. The inverse of the FIM, called the Cramér–Rao lower bound (CRLB), indicates the best achievable performance of a tracking system. Three popular optimality criteria are adopted to achieve the optimal sensor configuration based on the FIM [19]. D-optimality minimizes the area of the uncertainty ellipse by maximizing the determinant of the FIM [18,20,22]; A-optimality suppresses the average variance by minimizing the trace of the CRLB [23,24]; and E-optimality minimizes the length of the largest axis of the same ellipse by minimizing the maximum eigenvalue of the CRLB [19]. In [25], D-optimality was adopted to optimize sensor placement for range-based target tracking. In [26], the conditions for optimal placement of heterogeneous sensors were derived by maximizing the information matrix, and the optimal placement for paired sensors was developed using a “divide-and-conquer” strategy. In [27], A-optimality was used to solve sensor placement for 3D angle-of-arrival (AOA) target localization. Geometric dilution of precision (GDOP) [28] is another criterion used to evaluate tracking accuracy. GDOP is defined as the root mean square position error and illustrates how an estimate is influenced by sensor–target geometry [29]. The optimal deployment for multitarget localization was developed in [30] by minimizing the GDOP.

In addition to the above theoretical analysis of sensor–target geometries, some sensor path optimization methods have been proposed for target tracking to avoid the difficulty of finding a closed-form solution. A gradient-descent-based motion planning algorithm was presented for decentralized target tracking [31]. In [32], a gradient descent optimization algorithm was proposed for single- and multisensor path planning by minimizing the mean square error in 2D space. In [33], the path optimization for passive emitter localization in 2D space was transformed into a nonlinear programming problem with the FIM as the cost function. In [34], a path optimization strategy for 3D AOA target tracking was developed by minimizing the trace of covariance matrices with gradient descent optimization and a grid search method. In [35], the optimal sensor placement for AOA sensors was derived with a Gaussian prior using D- and A-optimality, and the result was extended to path optimization based on a projection algorithm.

Most of the existing work has focused on optimal deployment using multiple bearings-only sensors for target localization. Some closed-form solutions have been derived with equal angular distribution. Inspired by the “divide-and-conquer” strategy in [26], the continuum of the optimal solution for bearings-only measurement has the potential to be extended to general circumstances. Moreover, for bearings-only target tracking problems using mobile sensors, some studies in the literature have adopted optimization methods such as gradient descent and Gauss–Seidel relaxation. Nevertheless, the solution space for optimization is complex due to the high nonlinearity of the cost functions related to the FIM. As a result, these numerical methods may become trapped in local optima and fail to reach the globally optimal tracking performance. Motivated by the aforementioned aspects, this paper focuses on the optimal sensor–target geometry and motion coordination problem of mobile bearings-only sensors for target tracking. The sensors are driven to approach the target from a distance and eventually move in a circular formation to track the target.

The contributions of this paper are summarized as follows. (1) The suboptimality of approaching the target for bearings-only sensors to improve tracking performance is analyzed. (2) A continuum solution to the optimal sensor–target geometry is derived with uniform sensor–target distance using D-optimality for an arbitrary number n (n ≥ 2) of bearings-only sensors. The optimal geometry is characterized by the partition cases dividing n into a sum of integers no less than two. (3) A motion coordination algorithm is developed, based on matching the optimal geometry and motion optimization, to achieve globally optimal target tracking performance.

The remainder of this paper is organized as follows: Section 2 presents the problem formulation. The CKF and FIM are introduced in Section 3. Section 4 reformulates the problem and presents the optimality analysis. In Section 5, we design a motion coordination strategy based on the results in Section 4. The proposed method is verified by simulations in Section 6. Section 7 concludes this paper.

Notations: Define , . The two-norm of a vector is defined as . indicates the Cholesky decomposition of . and denote the trace and the determinant of the matrix contained in the bracket, respectively. represents the cardinality of the set S. .

2. Problem Formulation

This paper focuses on the problem of sensor motion and coordination for single-moving-target tracking with

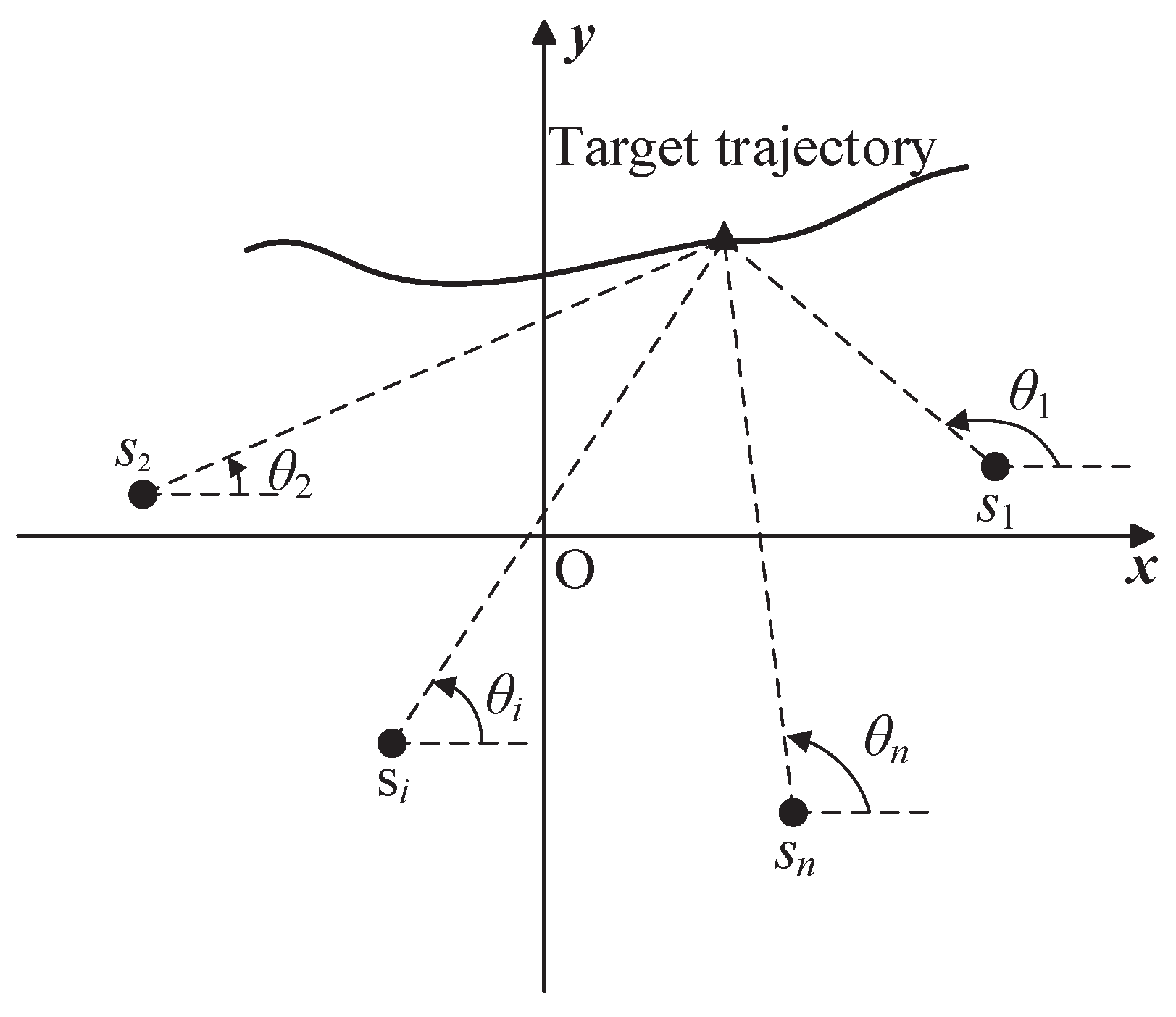

bearings-only sensors in 2D space. The target tracking geometry is depicted in

Figure 1.

is the angle of the line of sight (LOS) from sensor

i at discrete time

k. Define

as the measurement of

; then, the measurement function is

where

is the position of the target at time

k;

is the four-quadrant inverse tangent function and

;

is the location of sensor

i;

is the measurement noise and assumed to be i.i.d Gaussian noise with zero mean and variance

,

. The sensors are homogeneous, i.e.,

. Write the measurements in a compact form as

, and

is measurement Gaussian noise with zero mean and covariance

, where

is an identity matrix.
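The measurement model described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function names `bearing` and `measure` and the numerical values are hypothetical, and the noise is drawn as i.i.d. zero-mean Gaussian with a common standard deviation `sigma`, matching the homogeneous-sensor description.

```python
import numpy as np

def bearing(target_xy, sensor_xy):
    """Noise-free LOS angle from a sensor to the target, via the
    four-quadrant inverse tangent (result lies in [-pi, pi])."""
    dx, dy = np.asarray(target_xy, float) - np.asarray(sensor_xy, float)
    return np.arctan2(dy, dx)

def measure(target_xy, sensors_xy, sigma, rng):
    """Stacked noisy bearings of n homogeneous sensors.

    Assumption: additive i.i.d. zero-mean Gaussian noise with standard
    deviation sigma (so the stacked covariance is sigma**2 * identity).
    """
    angles = np.array([bearing(target_xy, s) for s in sensors_xy])
    return angles + rng.normal(0.0, sigma, size=angles.shape)

# Illustrative values only (not taken from the paper).
rng = np.random.default_rng(0)
sensors = np.array([[0.0, 0.0], [100.0, 0.0]])
z = measure([50.0, 50.0], sensors, sigma=0.01, rng=rng)
```

The noisy angles are not wrapped back into the principal interval here; an estimator that forms innovations from these measurements would normally wrap the angle difference.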

Consider the target whose motion is described by a nonlinear dynamic discrete system

where

is the state vector of the dynamic system at discrete time

k;

is process Gaussian noise with zero mean and covariance

;

is the dimension of the state vector. Meanwhile,

and

are mutually independent processes.

The dynamic model of the mobile sensors is given by

where

is the position of sensor

i at discrete time

k;

is the control input for sensor

i at time

k;

is the designed velocity of sensor

i at time

k; and

T is the sampling time.

The state parameters of the target are unknown. We assume that the states of the mobile sensors and the measurements taken by them are known. Because the scenario is noncooperative, a minimum distance between the target and the sensors must be maintained. We aim to estimate the target state from the bearings-only measurements and to improve the tracking accuracy by optimizing the sensor–target geometry of the cooperative mobile sensors under practical constraints.

Assumption A1. At the beginning of the tracking process, at least two sensors are deployed in positions that are not collinear with the target to ensure the observability of the target by the sensors [19,36].

Assumption A2. The mobile sensors are homogeneous with a maximum speed and a maximum turn rate due to the limitations of the mechanical properties. The maximum speed of the sensors is greater than that of the target to ensure that they can catch up with the target. The minimum distance between sensors and the target is denoted as .

4. Optimality Analysis

The problem of path planning and motion coordination for improving tracking performance is equivalent to finding the next waypoints at each time step by maximizing the determinant of the FIM. Two kinds of parameters influence this determinant: the distances between the sensors and the target, and the angles among the sensors. We can therefore maximize it by simultaneously reducing the sensor–target distances and configuring the angles among the sensors.

To satisfy the minimum distance constraint, the sensors move on a circular trajectory around the target at a prescribed radius. Before that, the path for reaching this circular radius orbit (CRO) while improving the tracking accuracy is studied. Thus, the design of the motion coordination for multiple sensors is divided into two stages: outside the CRO distance and on the CRO distance.

4.1. Outside the CRO Distance

Consider the bearings-only tracking problem. When the range between the target and the sensor is greater than

, the problem of the optimal sensor movement is equivalent to the following optimization problem:

where

is the angle of the velocity vector at time

k, and the difference between

and

is bounded by

due to the limited turn rate.

Obviously, the difficulty of solving problem (12) grows with the number of mobile sensors, although it can be solved numerically. We therefore turn to a suboptimal motion strategy to reduce the computational complexity.

When the sensors are far away from the target, the sensors are expected to move with maximum speed

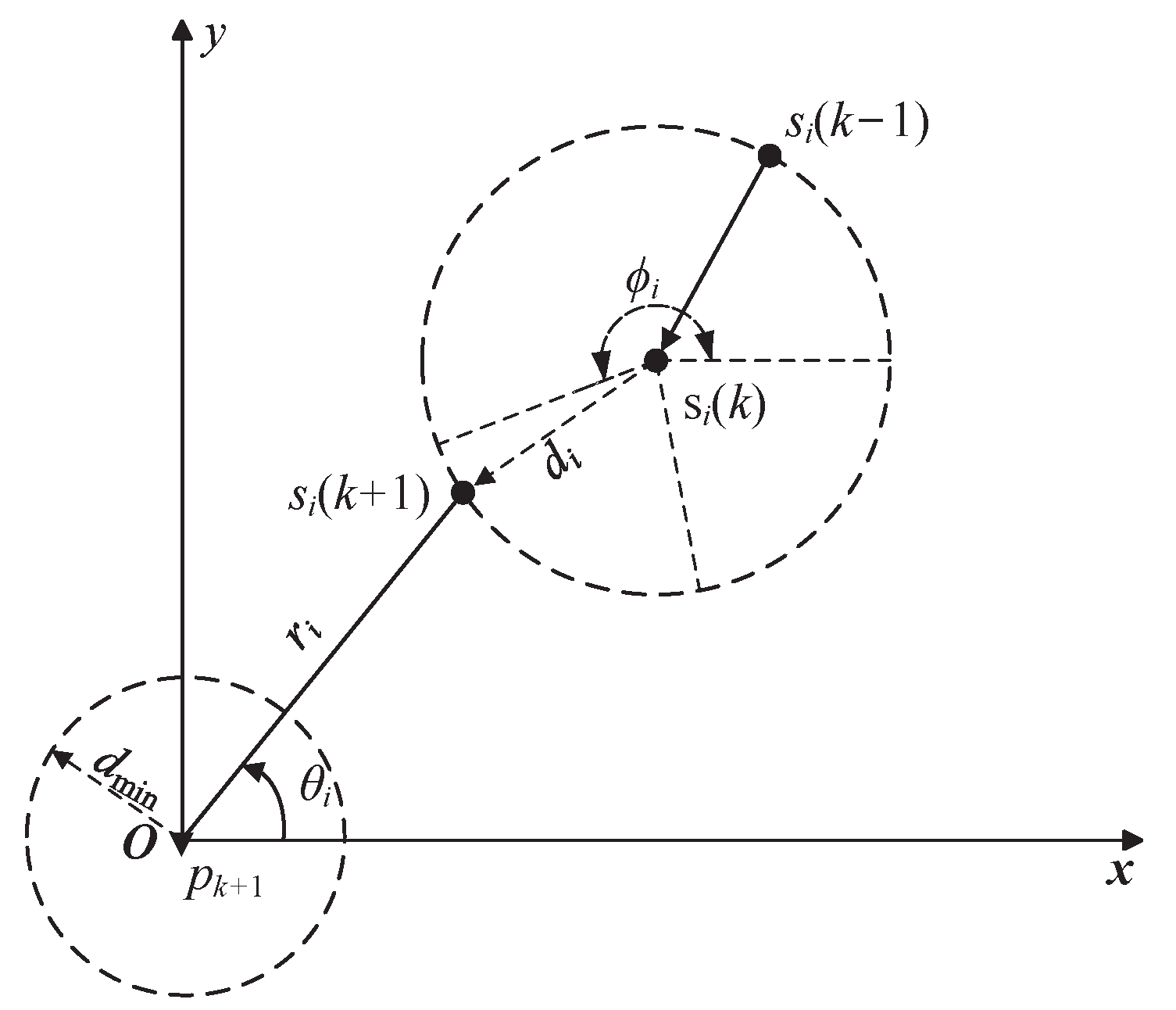

to approach the target. As shown in

Figure 2, the location

that sensor

i is able to reach can be expressed by

where

is the heading direction of sensor

i at time

k. For convenience, denote

,

and

.

Theorem 1. Consider the bearings-only tracking problem. When the range between the target and the sensor is greater than , and the position of the target is at time , the suboptimal heading direction of sensor i at time k is

Proof. According to the Cauchy inequality,

Consider the function

where

.

To achieve the maximum of

, take the partial derivatives of

with respect to

. Then, we have

Let

, we obtain

Additionally, let

denote the Hessian matrix of

at

, with elements

We obtain

where

. Obviously,

is a negative definite matrix, and as a consequence,

is the maximum point. □

Furthermore, taking the limitation of the turn rate into consideration, the heading direction of sensor

i at time

k is

where

and

.
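A minimal sketch of a turn-rate-limited approach step follows. It assumes, in line with the discussion of Theorem 1, that the desired heading points along the LOS toward the (predicted or estimated) target position, and that the heading change per step is clamped to a bound `max_turn` (for example, the maximum turn rate multiplied by the sampling time). The function names and the clamping form are illustrative assumptions, not the paper's exact expression.

```python
import math

def wrap(angle):
    """Wrap an angle to [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi

def next_heading(sensor_xy, target_est_xy, prev_heading, max_turn):
    """Heading toward the estimated target, limited to a change of at most
    max_turn (rad) relative to the previous heading."""
    desired = math.atan2(target_est_xy[1] - sensor_xy[1],
                         target_est_xy[0] - sensor_xy[0])
    delta = wrap(desired - prev_heading)          # shortest signed turn
    delta = max(-max_turn, min(max_turn, delta))  # turn-rate limit
    return wrap(prev_heading + delta)

def step(sensor_xy, heading, speed, T):
    """Advance the sensor position by one sampling period T at the given speed."""
    return (sensor_xy[0] + speed * T * math.cos(heading),
            sensor_xy[1] + speed * T * math.sin(heading))
```

Wrapping the heading difference before clamping makes the sensor turn the short way around when the desired and previous headings straddle the ±π boundary.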

Note that the determinant of the FIM increases as the range between the sensors and the target decreases when the angles among the sensors remain unchanged. In other words, the optimal heading direction is always toward the target, so we can drive the sensors to approach the CRO around the target directly. The tracking accuracy is thereby improved but does not yet reach the optimum.

4.2. On the CRO Distance

When all sensors reach the CRO around the target, which is a circle centered on the target and with a radius of

, we have

. Define

,

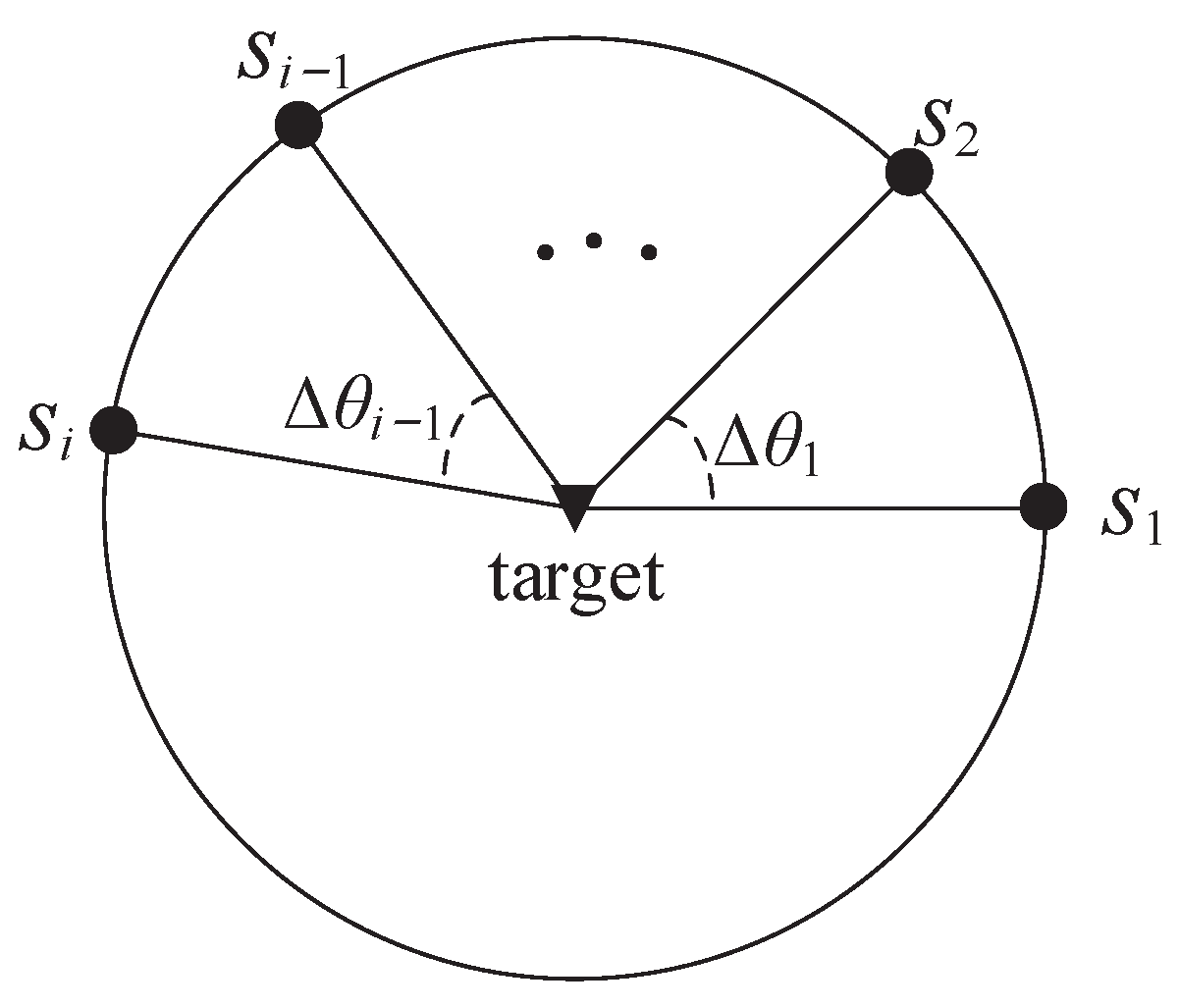

. The sensor–target geometry is depicted in

Figure 3. In this section, the time step

k is omitted for the convenience of description.

To simplify the analysis of the optimal sensor–target geometry, the related propositions are restated here.

Proposition 1. The determinant of the FIM in (11) remains unchanged under the following three operations:

- 1. Switching the positions of any two sensors;

- 2. Rotating all the sensors around the target;

- 3. Flipping arbitrary sensors about the target.

Remark 1. Proposition 1 originated in [18] and was recognized in [20]. It implies that the determinant of the FIM is invariant to these geometric operations. Without loss of generality, the sensors are assumed to be renumbered counterclockwise with through the geometric operations according to Proposition 1, which is equivalent to flipping the sensors with the actual angles of a LOS ranging from to 0.
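The invariances in Proposition 1, and the range dependence noted in Section 4.1, can be checked numerically. The sketch below assumes the standard position FIM for bearings-only measurements with i.i.d. Gaussian noise, in which each sensor contributes the outer product of the bearing gradient, (−sin θ, cos θ)/r, scaled by 1/σ²; Equation (11) is not reproduced in this section, so this form and all numerical values are assumptions for illustration.

```python
import numpy as np

def fim(thetas, ranges, sigma):
    """2x2 position FIM for bearings-only sensors (assumed standard form).

    thetas: LOS angles (rad); ranges: sensor-target distances;
    sigma: common bearing noise standard deviation.
    """
    thetas = np.asarray(thetas, float)
    ranges = np.asarray(ranges, float)
    # Gradient of each bearing with respect to the target position (x, y).
    g = np.stack([-np.sin(thetas), np.cos(thetas)], axis=1) / ranges[:, None]
    return (g.T @ g) / sigma**2

# Illustrative geometry: 5 sensors at equal range, random LOS angles.
rng = np.random.default_rng(1)
theta = rng.uniform(-np.pi, np.pi, size=5)
r = np.full(5, 200.0)
sigma = 0.02

base = np.linalg.det(fim(theta, r, sigma))

# 1. Switch the positions of two sensors.
swapped = np.linalg.det(fim(theta[[1, 0, 2, 3, 4]], r, sigma))
# 2. Rotate all sensors around the target.
rotated = np.linalg.det(fim(theta + 0.7, r, sigma))
# 3. Flip some sensors about the target (LOS angle shifted by pi).
flipped_theta = theta.copy()
flipped_theta[[0, 3]] += np.pi
flipped = np.linalg.det(fim(flipped_theta, r, sigma))
# Halving every range with fixed angles scales the determinant by 2**4.
closer = np.linalg.det(fim(theta, r / 2.0, sigma))
```

Under this assumed form, `swapped`, `rotated`, and `flipped` agree with `base` up to rounding, while `closer` is sixteen times larger, which is consistent with driving the sensors toward the target before arranging the angles.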

The target tracking system achieves optimal estimation performance when all sensors move at the same speed as the target on the CRO in formation, as confirmed by the following results.

Lemma 2 ([30]). Consider n bearings-only sensors tracking a single target. When all sensors are on the CRO around the target (), , the Fisher information determinant given in (11) has the upper bound . The upper bound is achieved when .

Remark 2. When , there are two solutions for optimal geometry with equal angular distribution in [30], i.e., . However, the optimal geometry when can be obtained by flipping part of the sensors about the target in the optimal geometry when . Therefore, we consider them as identical optimal geometries for n sensors and retain the solution of , which avoids the complexity arising from two alternative solutions.

For more general circumstances, there is less limitation to . Denote as the set of all sensors and as the subset of S, . Denote , where . Then, we have the following result:

Theorem 2. Consider the bearings-only tracking problem. When all sensors are on the CRO around the target (), the Fisher information determinant given in (11) has the upper bound . The upper bound is achieved if the following conditions hold true

Proof. The sensors in each subset are placed as in Lemma 2. Then,

where

is the set of all combinations of

a and

b with

and

. Since

(

is arbitrary,

), for

Finally, consider the following function

where

.

□
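The statement of Theorem 2 can be illustrated numerically. The sketch below assumes equal ranges r and a common noise standard deviation σ, for which the determinant of the standard bearings-only FIM reduces to a sum of squared sines of pairwise LOS differences divided by σ⁴r⁴, with the classical upper bound n²/(4σ⁴r⁴). It further assumes that each subset of size m is placed with equal angular spacing π/m, as in Lemma 2, with an arbitrary offset per subset. The helper names and numerical values are hypothetical.

```python
import numpy as np
from itertools import combinations

def det_fim_equal_range(thetas, r, sigma):
    """det(FIM) for sensors at a common range r (assumed closed form):
    sum over pairs of sin^2(theta_a - theta_b), divided by sigma^4 r^4."""
    s = sum(np.sin(a - b) ** 2 for a, b in combinations(thetas, 2))
    return s / (sigma**4 * r**4)

def partitioned_geometry(sizes, offsets):
    """LOS angles for subsets of the given sizes (each >= 2); within a
    subset of size m the angles are spaced by pi/m, starting at its offset."""
    angles = []
    for m, phi in zip(sizes, offsets):
        angles.extend(phi + np.pi * np.arange(m) / m)
    return np.array(angles)

r, sigma, n = 200.0, 0.02, 5
bound = n**2 / (4 * sigma**4 * r**4)

geo_5 = partitioned_geometry([5], [0.3])              # single subset {5}
geo_2_3 = partitioned_geometry([2, 3], [0.3, 1.1])    # subsets {2, 3}

det_5 = det_fim_equal_range(geo_5, r, sigma)
det_2_3 = det_fim_equal_range(geo_2_3, r, sigma)
```

Both `det_5` and `det_2_3` equal `bound` up to rounding, and changing either offset leaves the value unchanged, which reflects the observation that angles between sensors in different subsets do not affect optimality.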

In view of (25) in the proof of Theorem 2, the angles between sensors that are not in the same subset do not affect the optimal sensor–target geometry. In addition, the geometry remains optimal when the sensors undergo the geometric operations in Proposition 1. Therefore, we can classify the optimal sensor–target geometry by the set

, which is recognized as the partition case dividing

n into the sum of integers no less than 2. In other words, the optimal sensor–target geometries are regarded as identical for equivalent

.

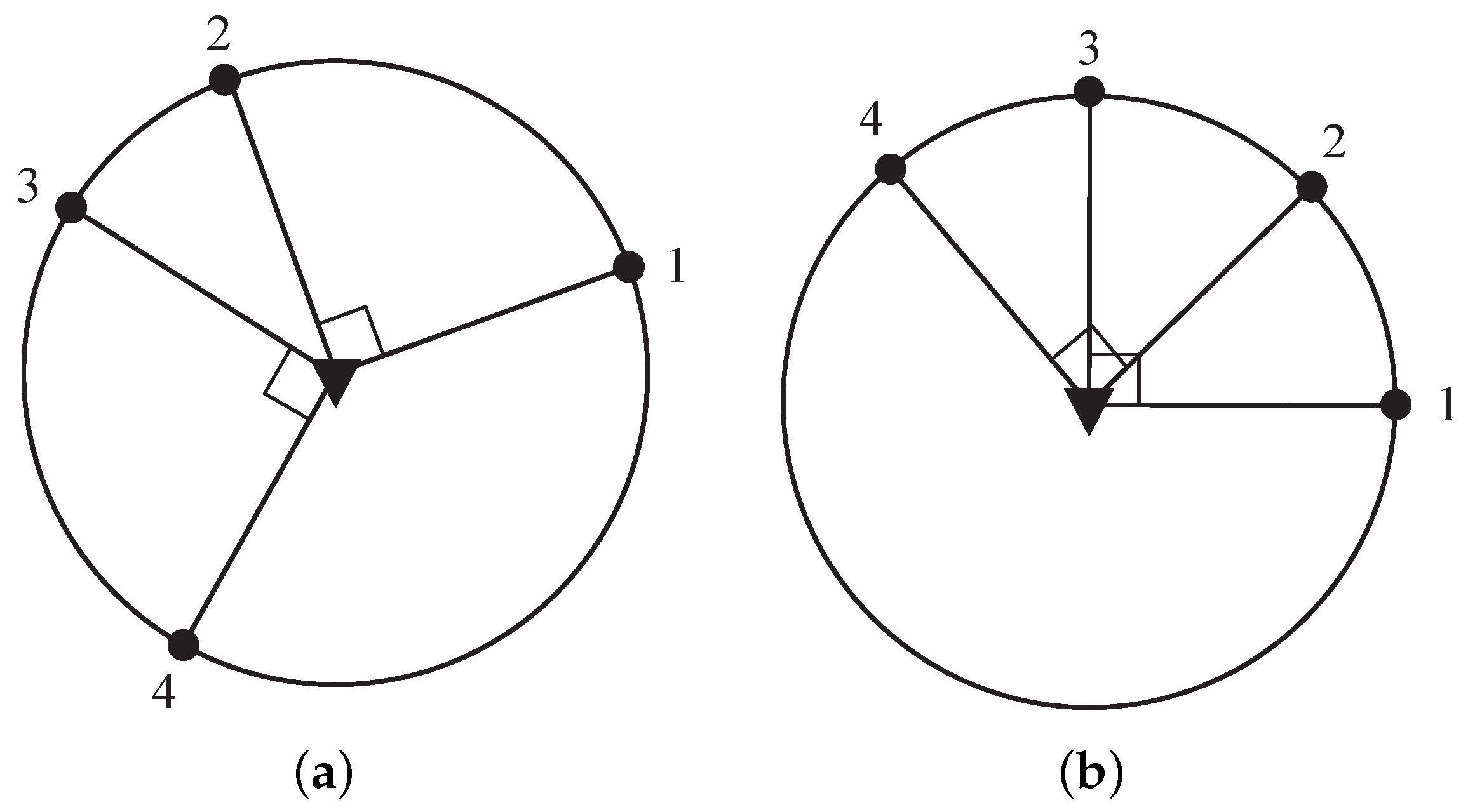

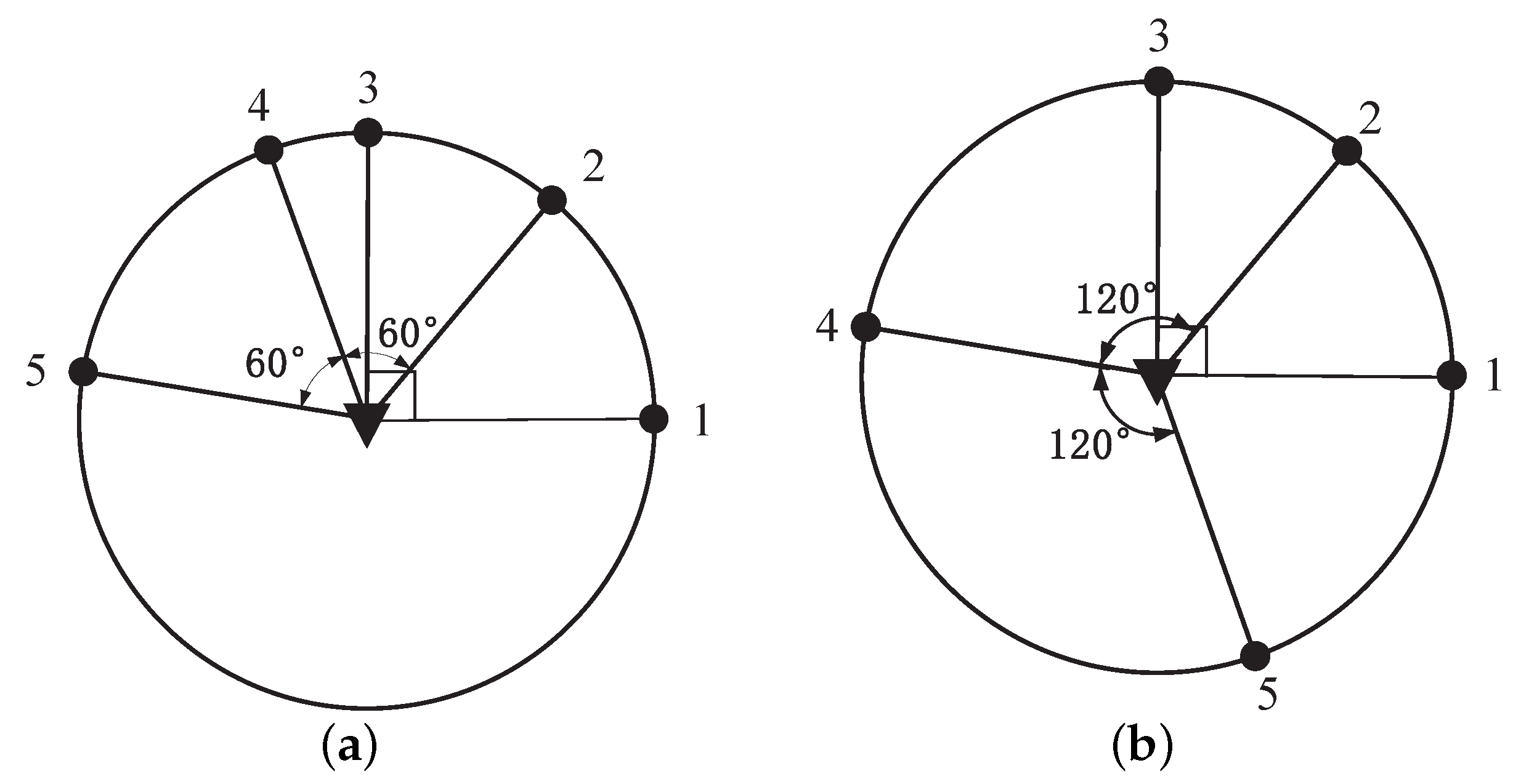

Figure 4 and

Figure 5 illustrate some examples of the optimal sensor–target geometry for

. In

Figure 4a,b, two sensor–target geometries are considered the same because the sensors are both divided into two subsets with

. Additionally, the sensors with the same

in

Figure 5a,b are also regarded as having identical sensor–target geometry, because the optimal sensor–target geometry in

Figure 5b can be obtained by flipping sensor 4 about the target in

Figure 5a. Additionally, the optimal sensor–target geometry with another partition case for

is shown in

Figure 5c,d, which is regarded as identical optimal geometry with

, but they differ from the optimal geometry in

Figure 5a,b due to different partition cases.
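The partition cases used for this classification, i.e., the ways of writing n as a sum of integers no less than 2, can be enumerated with a short recursive generator. This is an illustrative sketch; the function name is hypothetical.

```python
def partitions_min2(n, smallest=2):
    """Yield the partitions of n into non-decreasing parts, each >= smallest."""
    if n == 0:
        yield []
        return
    for part in range(smallest, n + 1):
        # Remaining parts must be at least as large as the current one,
        # so each partition is generated exactly once.
        for rest in partitions_min2(n - part, part):
            yield [part] + rest

cases_4 = list(partitions_min2(4))   # [[2, 2], [4]]
cases_5 = list(partitions_min2(5))   # [[2, 3], [5]]
```

For four and five sensors this gives two partition cases each, which matches the number of cases considered in the simulation scenarios.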

Remark 3. Although the number of optimal sensor–target geometries described in Theorem 2 is infinite due to rotation invariance, we are only concerned with the partition cases of the set S according to the classification method in this paper. The number of the partition cases dividing n into a sum of positive integers no less than 2, denoted as , asymptotically equals [37].

6. Simulation Experiments

In this section, we illustrate the proposed sensor motion coordination algorithm with some simulation examples. By default, all variables used in the simulation are in SI units. As introduced in Section 3.1, we used a CKF method to estimate the state of the target. For comparison, the gradient descent method in [34] and the projection method in [35] were adopted to optimize the sensor motion under the same conditions.

To compare the tracking performance, we used the root mean square error (RMSE) of the position of the target. The RMSE of position at time

k is defined as

where

is the total number of Monte Carlo runs;

and

are the true and estimated positions at the

nth Monte Carlo run, respectively.
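As a sketch of this metric, the position RMSE at each time step can be computed as the square root of the squared position error norm averaged over the Monte Carlo runs. The array layout (runs, time steps, 2) and the function name are assumptions for illustration.

```python
import numpy as np

def position_rmse(true_pos, est_pos):
    """Position RMSE per time step over Monte Carlo runs.

    true_pos, est_pos: arrays of shape (M, K, 2) holding the true and
    estimated 2D positions for M runs and K time steps.
    Returns an array of shape (K,).
    """
    err = np.asarray(true_pos, float) - np.asarray(est_pos, float)
    sq_norm = np.sum(err**2, axis=-1)          # squared error norm, shape (M, K)
    return np.sqrt(np.mean(sq_norm, axis=0))   # average over runs, then root
```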

Scenario 1: We consider a problem of tracking a moving target using 5 mobile sensors in 2D space. The dynamic function of the target is described by the constant velocity model

where

and

is the sampling time. The process noise

is a zero-mean Gaussian with a covariance matrix

, where

The scalar parameter

denotes the process noise intensity. The measurement taken by sensor i at time k is given in (1) and

.
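A constant velocity target of this kind can be simulated as sketched below. The state ordering [x, y, vx, vy], the transition matrix, and the process noise covariance (the common discretized white-noise-acceleration form scaled by an intensity q) are assumptions, since the matrices are not reproduced in this section; the numerical values are illustrative only.

```python
import numpy as np

def cv_model(T, q):
    """Transition matrix F and process noise covariance Q of a 2D
    constant velocity model, assuming the state [x, y, vx, vy]."""
    F = np.array([[1.0, 0.0, T,   0.0],
                  [0.0, 1.0, 0.0, T  ],
                  [0.0, 0.0, 1.0, 0.0],
                  [0.0, 0.0, 0.0, 1.0]])
    Q = q * np.array([[T**3 / 3, 0.0,      T**2 / 2, 0.0     ],
                      [0.0,      T**3 / 3, 0.0,      T**2 / 2],
                      [T**2 / 2, 0.0,      T,        0.0     ],
                      [0.0,      T**2 / 2, 0.0,      T       ]])
    return F, Q

def simulate(x0, F, Q, steps, rng):
    """Propagate x_{k+1} = F x_k + w_k with w_k ~ N(0, Q); returns (steps+1, 4)."""
    x = np.asarray(x0, float)
    traj = [x]
    for _ in range(steps):
        x = F @ x + rng.multivariate_normal(np.zeros(4), Q)
        traj.append(x)
    return np.array(traj)

# Illustrative values only (not the paper's parameters).
F, Q = cv_model(T=0.5, q=0.1)
traj = simulate([0.0, 0.0, 1.0, 2.0], F, Q, steps=10, rng=np.random.default_rng(0))
```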

The true initial state of the target is , and its associated covariance is . The initial state estimate is randomly chosen from in each run. The initial positions of the 5 sensors are , , , , and . The maximum velocity and turn rate are and , respectively. The minimum restriction is , and the minimum distance among the sensors is . Set .

There are two partition cases for

with

and

. We first compared the tracking performance and the distance traveled by the mobile sensors when the sensors were steered to achieve these two kinds of optimal sensor–target geometries. Additionally, the comparative experiment included static sensors and mobile sensors whose waypoints were computed by the methods in [34,35].

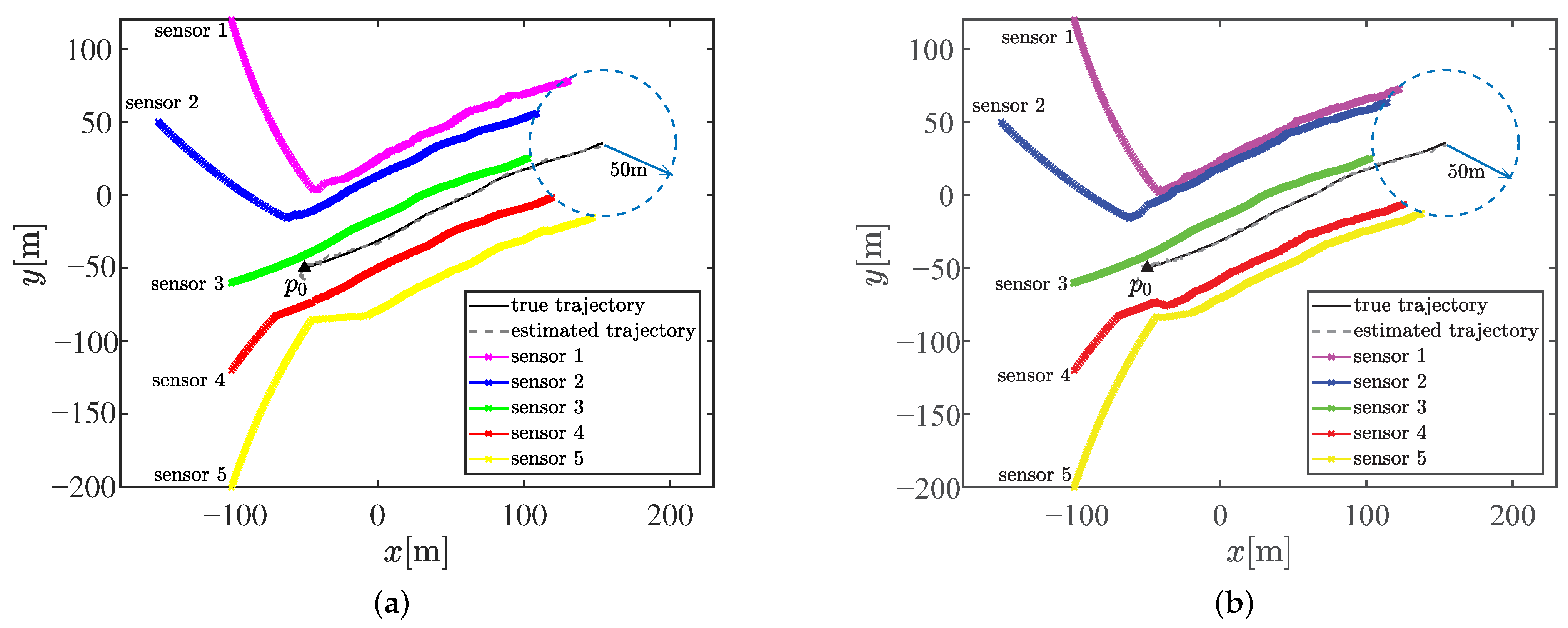

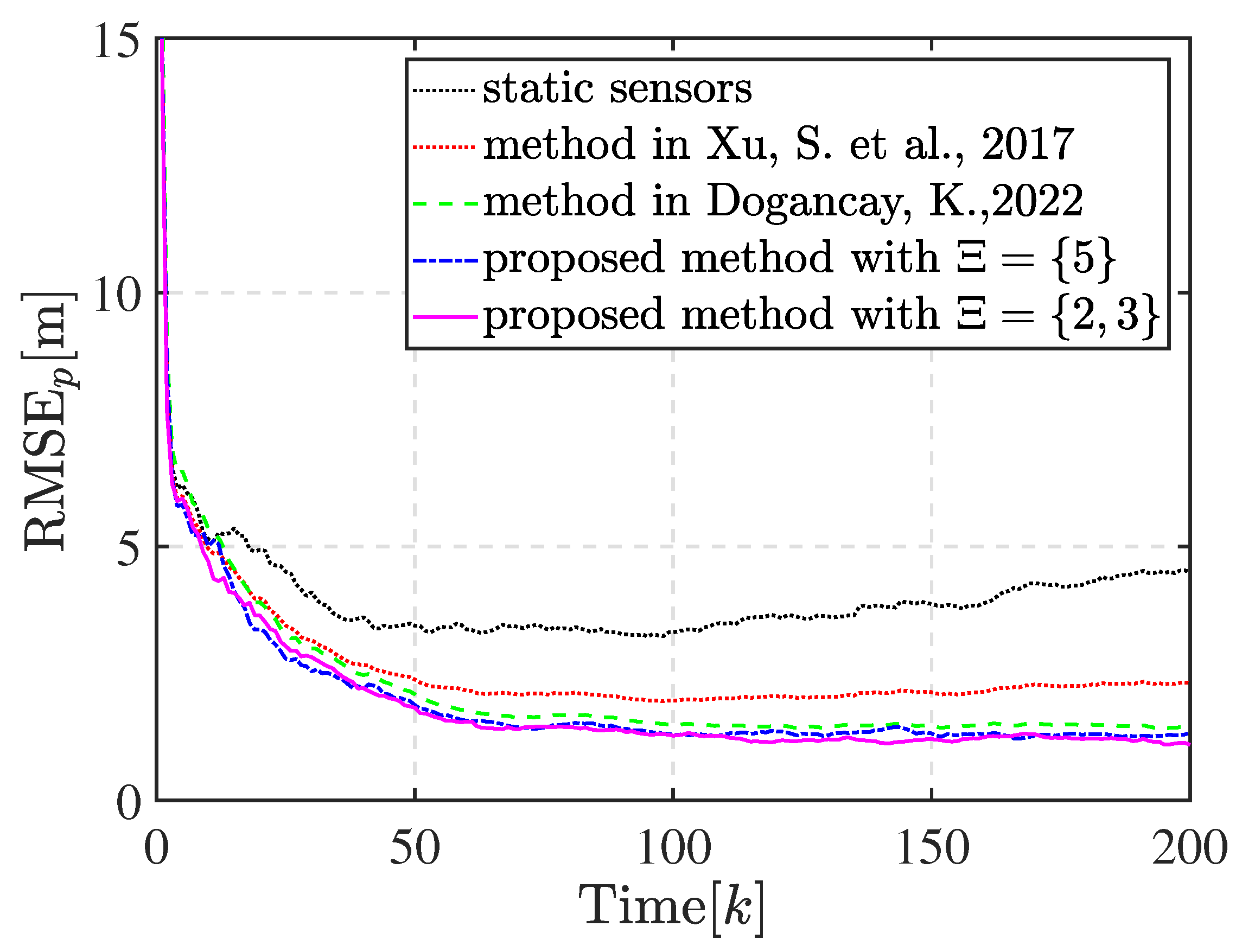

Figure 7a,b show the trajectory of the 5 bearings-only sensors achieving the optimal geometry with partition cases

and

, respectively. As shown in

Figure 7b, sensor 1, sensor 3, and sensor 5 are assigned to the subset with three sensors and the others to the subset with two sensors after matching the optimal geometry. The sensors eventually move with the target in the optimal geometry, as expected. The optimal geometry is referenced to the estimated target position and therefore deviates from the true optimal sensor–target geometry. This discrepancy is unavoidable in practical applications since the true target position is unknown. However, the proposed motion coordination method enhances the estimation performance, so the circular formation moves closer to the true optimal geometry and the estimation accuracy approaches the theoretical optimum, as shown by the RMSEs of the position compared in Figure 8. The tracking performance achieved with mobile sensors is clearly better than that achieved with static sensors. The proposed method significantly improves the tracking performance and exhibits lower estimation error than the method in [34]. Meanwhile, the tracking performance of the method in [35] is close to that of the proposed method in this scenario. There is a negligible difference in the tracking performance between the two kinds of optimal geometries with

and

. Additionally, the sums of the distance traveled by all mobile sensors to achieve the optimal geometry with

and

are

and

, respectively. The reduction in distance between

and

is attributed to the fact that the sensor–target geometry is closer to the optimal geometry with

, whose

is smaller, when the sensors reach the CRO.

Scenario 2: We consider a problem of tracking a moving target using 4 mobile sensors in 2D space. The dynamic function of the target is described by

where

and

. The process noise

is a zero-mean Gaussian with a covariance matrix

, where

and

and

denote the process noise intensity. The true initial state of the target is

, and its associated covariance is

. The 4 sensors are initially deployed at random positions. The remaining parameters are:

,

,

,

,

and

.

There are two partition cases for

with

and

. However, the optimal geometry with

can be obtained by rotating the sensors in one subset in the optimal geometry with

as a whole by a proper angle. Thus, the partition case for

is selected as

in Scenario 2.

Figure 9 shows the trajectories of the 4 bearings-only sensors tracking a target. In this run, sensors 1 and 3 are assigned to one subset and the others to another subset after matching the optimal geometry. Figure 10 compares the RMSEs of the position. The tracking performance achieved with static sensors is the poorest, and it continues to degrade as the distance from the target increases. The proposed method improves the tracking performance and exhibits lower estimation error than the methods in [34,35] when tracking a maneuvering, turning target.