In this section, we present the statistics of our dataset of SWIR images, and the object detectors used in the research. In addition, the proposed tracking algorithm (MCDTrack) is explained.

3.1. Edge Computing

Deep neural networks require massive addition and multiplication operations that can be accelerated by GPU computing. As a result, training and inference of deep neural networks are typically performed on GPU-based servers. However, network bandwidth limitations or privacy issues often arise in city-surveillance scenarios, limiting the use of GPU server computing in such cases. Edge computing, which performs analysis close to the source of the data rather than on centralized servers, can be used in these situations.

Our city-surveillance scenario is one of the representative scenarios that require edge computing, as the complexity of resource management increases dramatically when streaming hundreds or thousands of high-resolution videos to central servers, and network bandwidth is often limited. In this study, we use the Raspberry Pi 4B, one of the most popular edge-computing devices, for object detection and tracking on SWIR images.

TFLite is a machine learning library developed by the TensorFlow community [30] for edge computing on devices with limited computing power. The TFLite framework optimizes a machine learning model to run on edge-computing devices: it converts a TensorFlow model into a compressed flat buffer and quantizes 32-bit floating-point (FP32) values into 8-bit integers (INT8). We use the 8-bit quantized TFLite models and measure the running speed of the quantized object detection models on the Raspberry Pi 4B. With an input size of 320 × 320 pixels, the TFLite-optimized lightweight YOLO object detectors YOLOv5s and YOLOv7-tiny run at an average of 2.94 fps and 3.65 fps, respectively, on the Raspberry Pi 4B. Data collection was designed around the speed of these object detectors on the Raspberry Pi 4B, and the object detection and tracking experiments were also performed on this device.
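To illustrate what FP32-to-INT8 quantization means in general, the following is a minimal sketch of affine quantization in plain Python. It is not TFLite's internal implementation; the function names and the sample values are assumptions chosen for illustration only.

```python
def quantize_int8(values):
    """Affine-quantize a list of floats to INT8.

    Returns (quantized values, scale, zero_point). Illustrative sketch only.
    """
    # The represented range must contain 0.0 so that zero maps to an exact integer.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 if all values are zero
    zero_point = round(-128 - lo / scale)
    quantized = [
        max(-128, min(127, round(v / scale) + zero_point)) for v in values
    ]
    return quantized, scale, zero_point


def dequantize_int8(quantized, scale, zero_point):
    """Map INT8 values back to approximate floats."""
    return [(q - zero_point) * scale for q in quantized]


# Hypothetical weights, used only to exercise the functions.
weights = [-1.0, 0.0, 0.5, 2.0]
q, scale, zp = quantize_int8(weights)
restored = dequantize_int8(q, scale, zp)
```

Each restored value differs from the original by at most about one quantization step (`scale`), which is the precision traded for a fourfold reduction in storage per value.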

3.2. Dataset

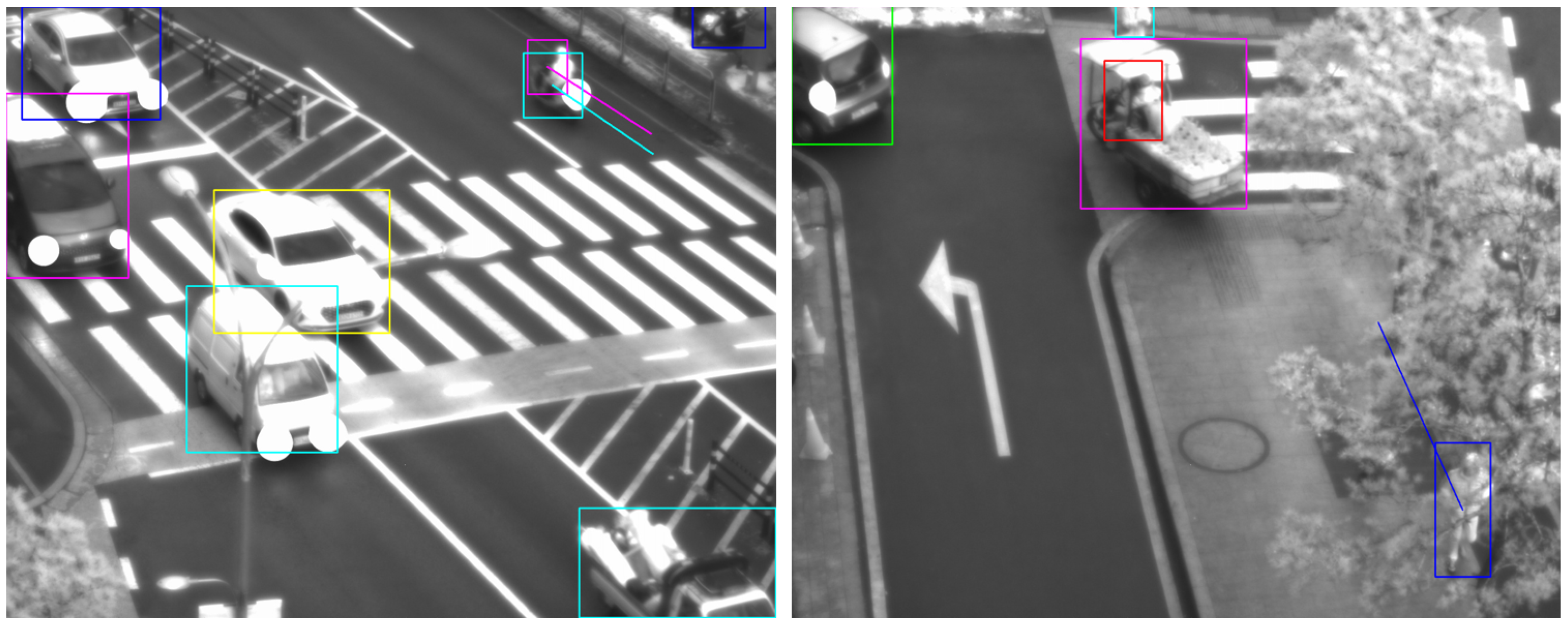

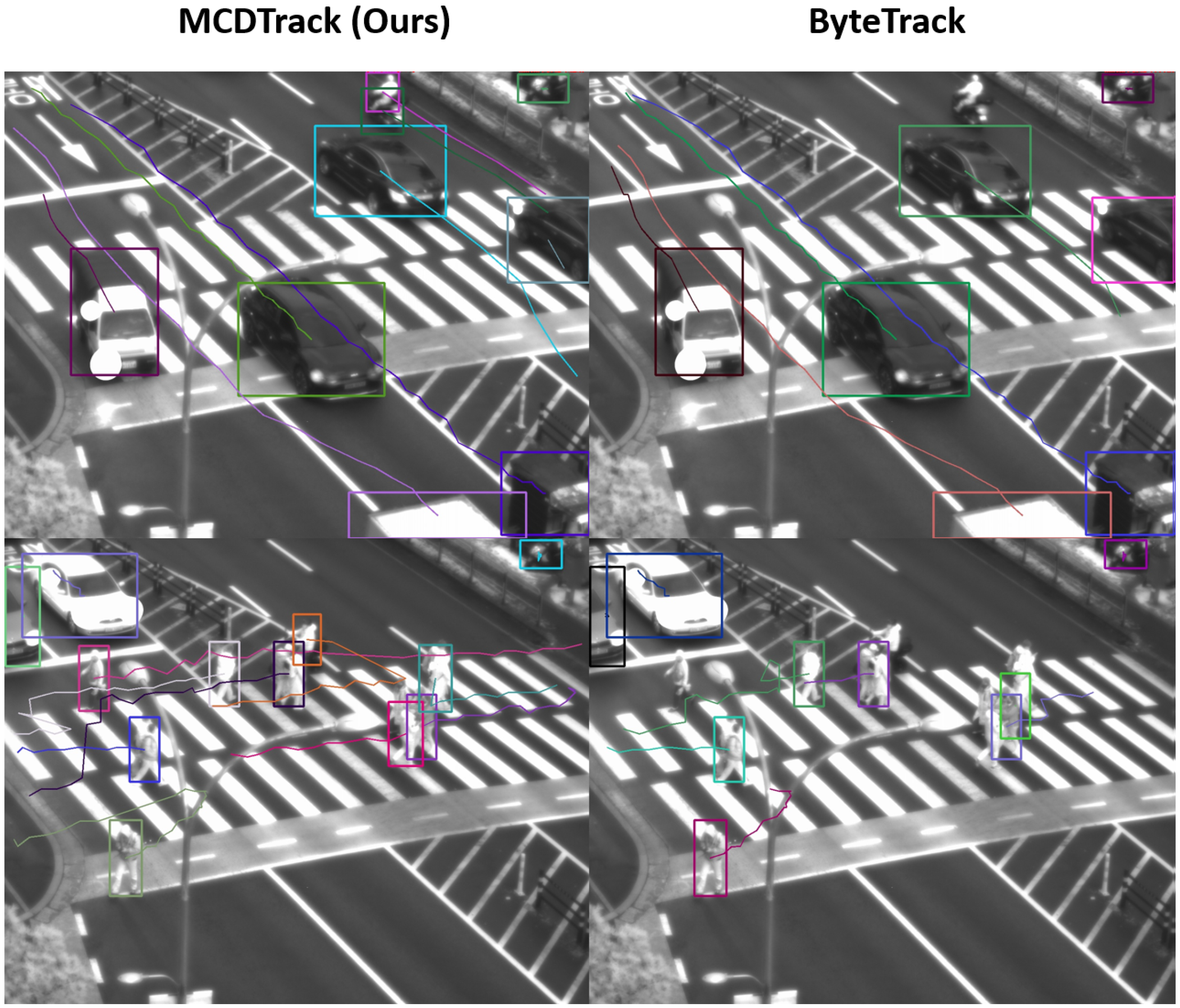

As SWIR sensors have rarely been investigated for automated object detection and tracking, we built a new SWIR dataset for city-surveillance scenarios. In the city center, a SWIR camera captured images of people, cars, trucks, buses, and motorcycles in a variety of environments, including streets, pavements, and crosswalks. The SWIR camera was installed in a fixed position with a top-down lateral angle, like standard surveillance cameras, and we varied the angle of view to capture different scenes.

Objects of the target classes were manually labeled using a labeling tool.

Table 1 shows the statistics of our training and testing data. In total, 7309 images with 45,925 objects are used for training and 2689 images with 11,296 objects are used for testing. The resolution of SWIR images is 1296 by 1032 pixels.

In the test dataset, we also link the object boxes of successive time frames to create tracking labels. A total of 13 tracking scenarios are used to test the tracking algorithms in our research. A tracking scenario consists of consecutive image frames with a minimum duration of one minute. The average time gap between image frames is 327 ms (an average of 3.1 frames per second), which is relatively large compared to previously studied tracking datasets. To validate that our algorithm works well on edge-computing devices, which are slow in image acquisition, object detection inference, and object tracking, we sample the images at a low frame rate for experimental scenarios. This low frame rate is appropriate for the speed of the object detection algorithm on the Raspberry Pi 4B. The number of image frames and the number of objects in each scenario are shown in Table 2.

3.4. Tracking Object in SWIR Images

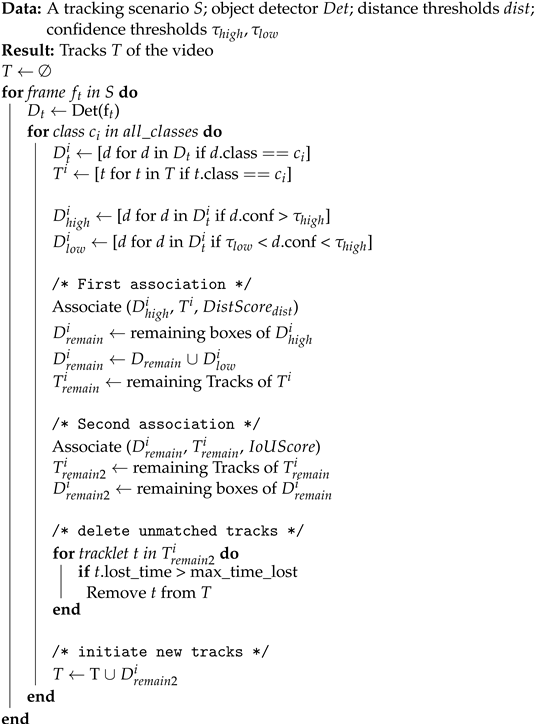

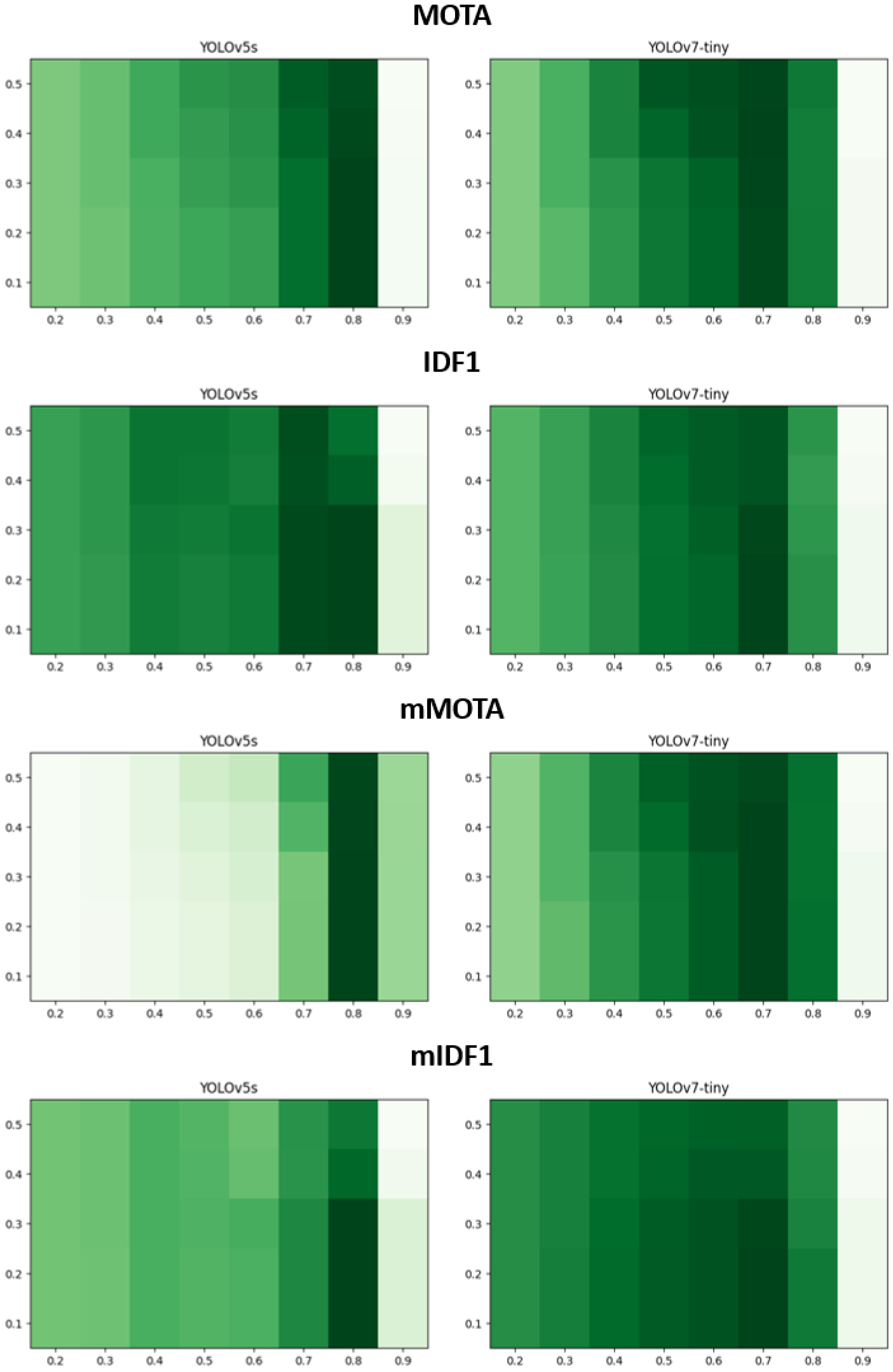

Algorithm 1 shows the pseudo-code of our MCDTrack (Multi-class Distance-based Tracking). MCDTrack is given a tracking scenario consisting of consecutive image frames, an object detector, a distance threshold for each class, and two confidence thresholds representing the high and low confidence levels used in the algorithm. The object detector receives an image frame and returns object box information, which includes box coordinates, a class, and a confidence score.

For each frame in a tracking scenario, MCDTrack iterates over the classes and associates the bounding boxes of each class separately. Similar to previous multi-object tracking approaches [6, 28, 29], the detection boxes of a given class are divided into high-confidence boxes and low-confidence boxes according to the two confidence thresholds. The high-confidence boxes are object boxes with confidence scores higher than the high threshold, and the low-confidence boxes are those with confidence scores between the low and high thresholds. The first association uses the high-confidence boxes, and the second association uses the low-confidence boxes.
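The per-class split into high- and low-confidence boxes can be sketched as follows. This is a minimal illustration rather than the authors' code: the dictionary keys `cls` and `score`, the function name, and the threshold values 0.6 and 0.1 are all assumptions, since the threshold values are not stated here.

```python
from collections import defaultdict


def split_by_class_and_confidence(detections, high_thresh, low_thresh):
    """Group detections by class, then by confidence band.

    Returns two dicts mapping class name -> list of detections:
    one for scores above high_thresh, one for scores between the thresholds.
    Detections at or below low_thresh are discarded.
    """
    high = defaultdict(list)
    low = defaultdict(list)
    for det in detections:
        if det["score"] > high_thresh:
            high[det["cls"]].append(det)
        elif det["score"] > low_thresh:
            low[det["cls"]].append(det)
    return high, low


# Hypothetical detector output for one frame.
frame_detections = [
    {"cls": "person", "score": 0.90},
    {"cls": "person", "score": 0.30},
    {"cls": "car", "score": 0.70},
    {"cls": "car", "score": 0.05},
]
high_boxes, low_boxes = split_by_class_and_confidence(
    frame_detections, high_thresh=0.6, low_thresh=0.1  # assumed values
)
```

Grouping by class first means that each association step only ever compares boxes and tracks of the same class.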

Algorithm 1: Pseudo-code of MCDTrack.

In MCDTrack, a distance-based similarity score is used for the first association as shown in Algorithm 1. The distance-based similarity score measures the distance of the centers between object boxes (

D) and tracks (

T). The center of each object box and track is represented by

. We then calculate the Euclidean distances of the centers, and the result is a matrix of

, where each cell in

represents the distance between

i’th object box and

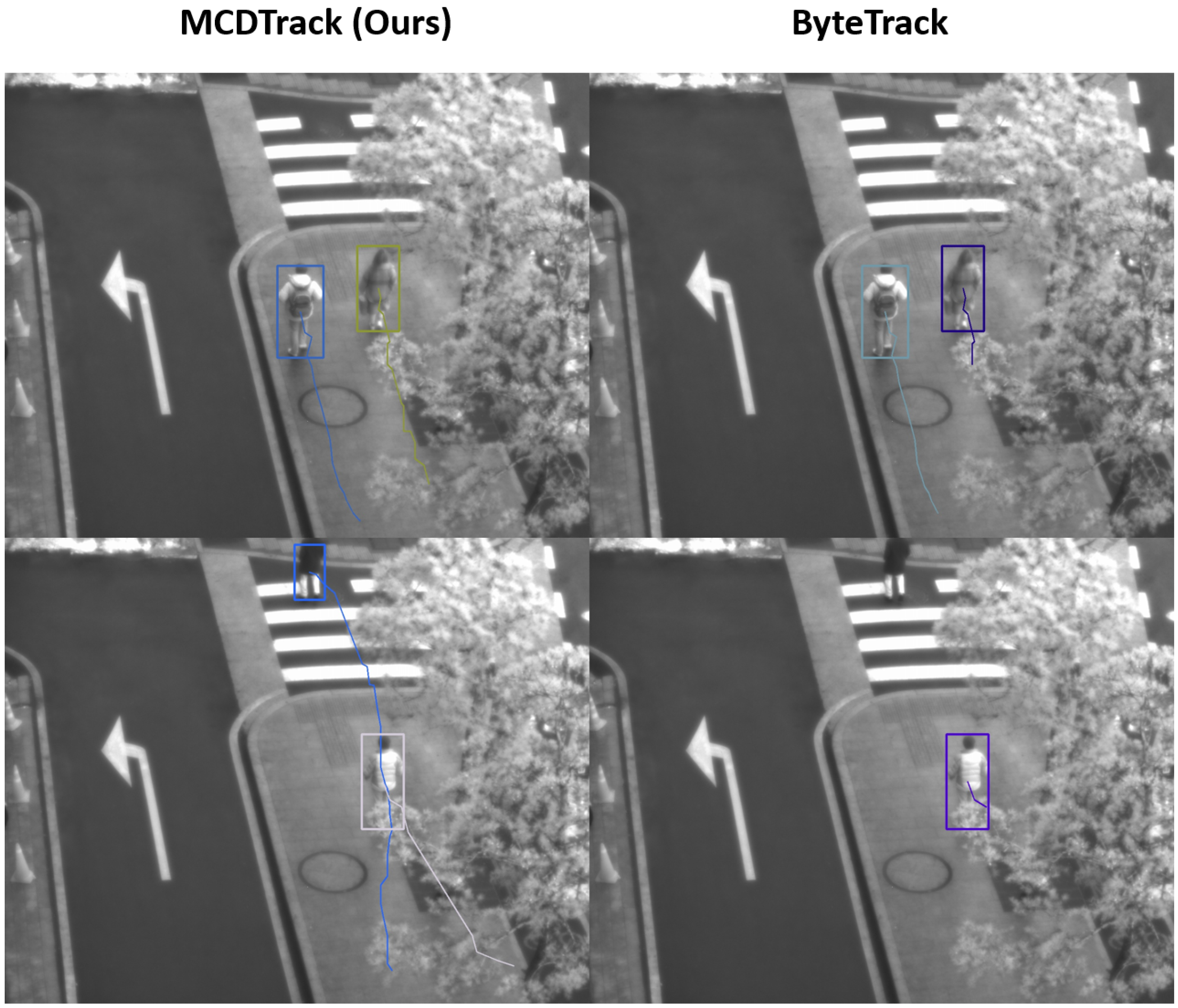

j’th track. We then divide the distance matrix by the distance threshold of each class, and the pairs that exceed the distance thresholds receive the highest distance cost and are not assigned to each other in the association phase. The distance threshold for each class is determined empirically, and the values in pixels are as follows—Person: 350, Car: 100, Motorcycle: 250, Bus: 100, Truck: 100. We found that the person and motorcycle classes should have longer matching distance thresholds because they are more likely to be occluded and move faster than objects of other classes, as shown in the example of

Figure 1. The distance-based score assumes that the boxes of the same object are closer together in successive time frames than boxes of different objects.
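The class-normalized distance cost described above can be sketched in a few lines of Python. The per-class thresholds are the values given in the text; the `(x1, y1, x2, y2)` box format, the function names, and the choice of 1.0 as the maximum cost are assumptions made for this illustration.

```python
import math

# Per-class distance thresholds in pixels, as listed in the text.
DIST_THRESH = {"person": 350, "car": 100, "motorcycle": 250, "bus": 100, "truck": 100}
MAX_COST = 1.0  # assumed value for "the highest distance cost"


def center(box):
    """Center point of a box given as (x1, y1, x2, y2) (assumed format)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def distance_cost_matrix(det_boxes, track_boxes, cls):
    """Rows are detections, columns are tracks, all of the same class.

    Each cell is the center distance divided by the class threshold;
    pairs farther apart than the threshold are set to MAX_COST.
    """
    thresh = DIST_THRESH[cls]
    cost = []
    for det in det_boxes:
        dx, dy = center(det)
        row = []
        for trk in track_boxes:
            tx, ty = center(trk)
            normalized = math.hypot(dx - tx, dy - ty) / thresh
            row.append(normalized if normalized <= 1.0 else MAX_COST)
        cost.append(row)
    return cost


# Hypothetical boxes: one detection, one nearby track, one distant track.
detections = [(0, 0, 10, 10)]
tracks = [(30, 40, 40, 50), (1000, 0, 1010, 10)]
car_cost = distance_cost_matrix(detections, tracks, "car")
```

In this example the nearby track is 50 pixels away, so its cost for the car class is 0.5, while the distant track exceeds the 100-pixel threshold and receives the maximum cost. The same 50-pixel gap would cost only about 0.14 for the person class, which reflects the longer threshold.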

In conjunction with the distance-based score, the IoU-based score is used for the second association. The IoU score is used in many previous multi-object tracking approaches [6, 25], and it measures the intersection over the union of detected boxes and previous tracks. It assumes that boxes of the same object in consecutive time frames overlap more than boxes of different objects. The IoU score is simple and effective in the multi-object tracking problem, but there may be no overlap if a target object is moving fast. The distance and IoU methods are simple to implement and do not require additional feature extraction or region-of-interest cropping from feature maps of convolutional neural networks, which could reduce tracking speed. Using the above similarity scores, a matrix is created showing how likely it is that object boxes and tracks are from the same object. The Hungarian algorithm is then used to find an optimal match in the matrix.
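The IoU score and the matching step can be sketched as follows. The IoU function follows the standard definition. The matcher is an exhaustive search over all assignments, used here only as a dependency-free stand-in for the Hungarian algorithm on very small matrices; in practice a solver such as SciPy's `scipy.optimize.linear_sum_assignment` would be used instead. The box format `(x1, y1, x2, y2)`, the cost `1 - IoU`, and the function names are assumptions.

```python
from itertools import permutations


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def best_assignment(cost, max_cost=1.0):
    """Minimum-cost one-to-one matching by brute force (small inputs only).

    Returns (detection index, track index) pairs whose cost is below max_cost.
    """
    n_det = len(cost)
    n_trk = len(cost[0]) if cost else 0
    if n_det == 0 or n_trk == 0:
        return []
    if n_det <= n_trk:
        candidates = (list(enumerate(p)) for p in permutations(range(n_trk), n_det))
    else:
        candidates = (
            [(i, j) for j, i in enumerate(p)]
            for p in permutations(range(n_det), n_trk)
        )
    best = min(candidates, key=lambda pairs: sum(cost[i][j] for i, j in pairs))
    # Pairs at the maximum cost (for example, no overlap) stay unmatched.
    return sorted((i, j) for i, j in best if cost[i][j] < max_cost)


# Hypothetical boxes for one class in two consecutive frames.
detections = [(0, 0, 10, 10), (100, 100, 120, 120)]
tracks = [(101, 101, 121, 121), (1, 1, 11, 11)]
iou_cost = [[1.0 - iou(d, t) for t in tracks] for d in detections]
matches = best_assignment(iou_cost)
```

Here the first detection is matched to the second track and the second detection to the first track, because those are the only overlapping pairs. The brute-force search grows factorially with the number of boxes, which is why a polynomial-time assignment solver is the usual choice.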

Compared to previous multi-object tracking approaches, the characteristics of our MCDTrack algorithm can be summarized as follows: (1) The Kalman filter is not used to predict the location and size of the boxes. Previous tracking approaches used the Kalman filter with a single predefined setting of mean and covariance matrices, which is not appropriate in our multi-class multi-object tracking scenario. The sizes, speeds, and width/height ratios vary for each class, and a single Kalman filter was unable to accurately predict the tracking box information. (2) Instead of visual feature-based re-identification scores, we use distance and IoU scores for the similarity measure. Visual feature-based methods require additional neural network computation. In addition, cropping the region of interest and comparing the high-dimensional features can increase the latency of the algorithm, which makes such methods unsuitable for edge computing on regional surveillance cameras. (3) We adopt a multi-class tracking approach, which has not been thoroughly investigated in previous multi-object tracking approaches. In our MCDTrack, tracks and bounding boxes are matched only within the same class. In addition, we investigate the best setting of distance thresholds for each class, which is an effective way to improve tracking performance.

The key factors in our MCDTrack are that it uses a two-phase association method as in previous work [6], and that it uses distance-based tracking for the first association, which is fast and effective, especially in an edge-computing environment with a low frame rate and low computing power. The Euclidean distance computation requires only a small number of multiplications, and the distance computation between multiple tracks and multiple object boxes can be further accelerated by libraries such as SciPy [31]. The distance-based similarity measure allows the association of fast-moving objects that do not overlap in successive frames, and our class-specific distance thresholds also account for objects that move at different speeds.

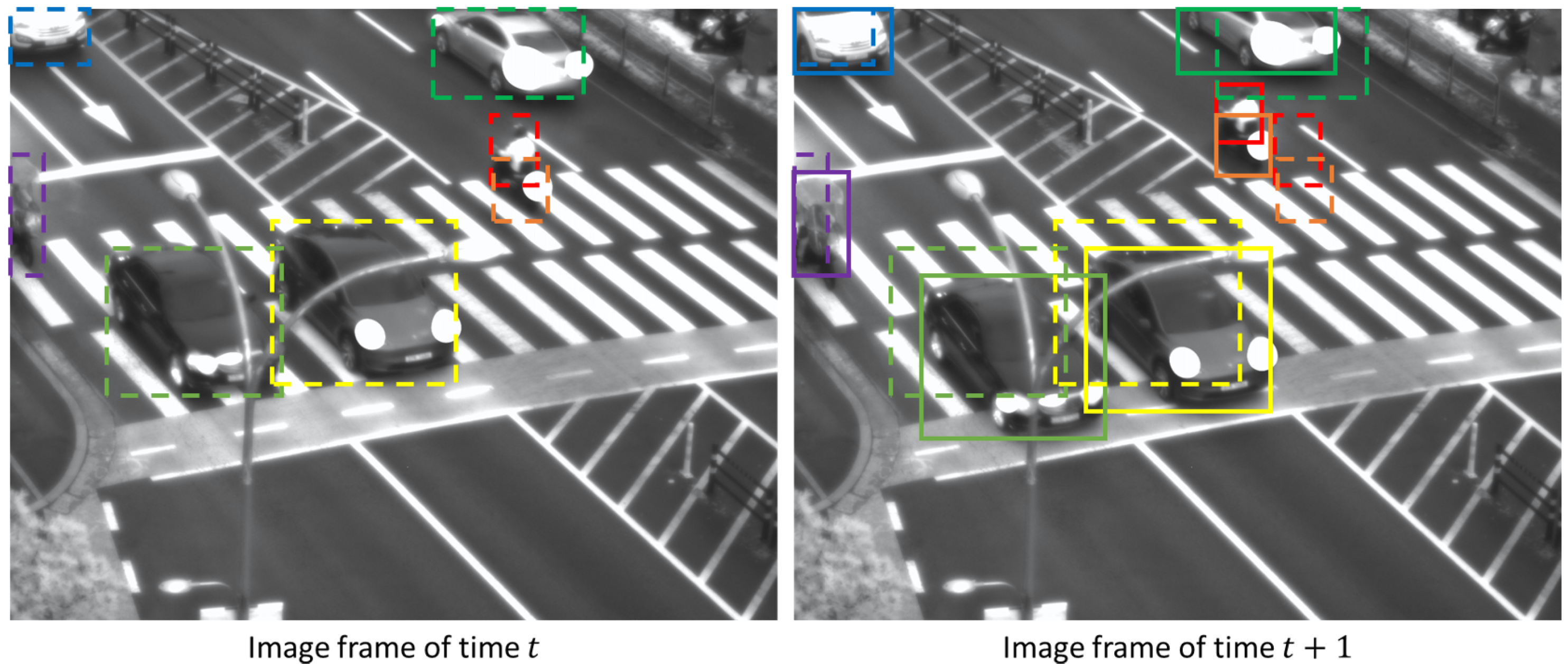

Figure 2 shows an example of tracking objects in images of consecutive time frames. Most of the objects have a high overlap between the boxes in the t and t + 1 time frames, but the red (a person) and orange (a motorcycle) boxes have no overlap between them. Because these objects are faster and smaller than other objects, it is difficult to track them using only the IoU measure.

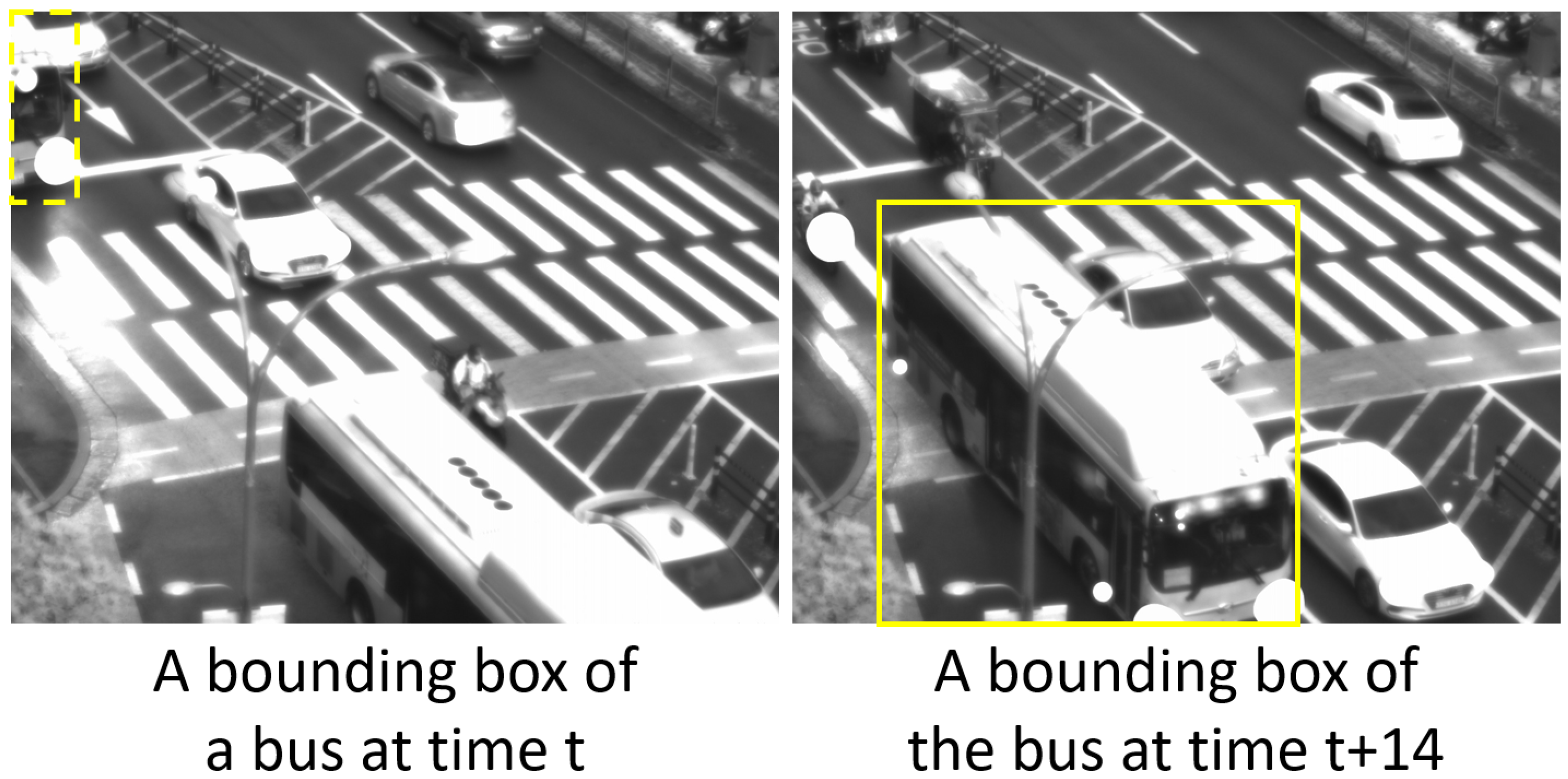

Figure 3 also gives an example of how the width-to-height ratios of identical objects can vary significantly. The bus appears as a vertically long bounding box, but after 14 time steps, it becomes almost square in shape. Unlike the pedestrian tracking dataset, the urban surveillance scenarios in our research contain such significant changes in width-to-height ratio that it is difficult to apply a fixed Kalman filter setting.

Similar to previous work on object tracking [6, 28], we use representative multi-object tracking metrics [32, 33], such as Multi-Object Tracking Accuracy (MOTA), the number of false positives, the number of false negatives, the number of identity switches, identification precision (IDP), identification recall (IDR), and the identification F1 score (IDF1). Among these, MOTA and IDF1 are used as the main metrics. MOTA is defined by subtracting the proportion of false negatives, the proportion of false positives, and the proportion of mismatches from one [32]. IDF1 is defined as the harmonic mean of identification precision and identification recall [33]. MOTA focuses more on object detection accuracy, whereas IDF1 places more weight on how well the tracking association matches the ground truth. Since MOTA and IDF1 are defined in the single-class experimental setting, we also measure the mean of MOTA (mMOTA) and the mean of IDF1 (mIDF1) by averaging the MOTA and IDF1 results over the classes.
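The metric definitions above can be written out as a short Python sketch. The formulas follow the verbal definitions in the text; the function names, argument names, and all counts in the example are hypothetical and are not results from this work.

```python
def mota(false_negatives, false_positives, id_switches, num_gt):
    """MOTA = 1 - (FN + FP + identity switches) / number of ground-truth objects."""
    return 1.0 - (false_negatives + false_positives + id_switches) / num_gt


def idf1(idtp, idfp, idfn):
    """Harmonic mean of identification precision (IDP) and recall (IDR)."""
    idp = idtp / (idtp + idfp) if (idtp + idfp) > 0 else 0.0
    idr = idtp / (idtp + idfn) if (idtp + idfn) > 0 else 0.0
    return 2 * idp * idr / (idp + idr) if (idp + idr) > 0 else 0.0


def class_mean(per_class_scores):
    """Average a per-class metric, as done for mMOTA and mIDF1."""
    return sum(per_class_scores.values()) / len(per_class_scores)


# Hypothetical per-class counts, for illustration only.
per_class_mota = {
    "person": mota(false_negatives=10, false_positives=5, id_switches=5, num_gt=100),
    "car": mota(false_negatives=4, false_positives=4, id_switches=2, num_gt=100),
}
m_mota = class_mean(per_class_mota)
```

Because every class contributes equally to the mean, rare classes such as buses weigh as much in mMOTA and mIDF1 as frequent ones such as cars.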