Unsupervised Video Anomaly Detection Based on Similarity with Predefined Text Descriptions

Abstract

1. Introduction

- We propose a simple unsupervised video anomaly detection method based on an improved similarity measure, text-conditional similarity, computed with CLIP between the input frame and multiple predefined text descriptions of normal and abnormal situations.

- We propose a label-free training process for the proposed similarity measure that uses a triplet loss and a regularization loss.

- We leverage large language models (LLMs) to obtain text descriptions of normal and abnormal situations with little effort.

- We demonstrate the feasibility of the proposed approach by comparing it with existing methods. The proposed method outperforms existing unsupervised methods and achieves results comparable to those of weakly supervised methods, which incur additional data-labeling costs.
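
The contributions above rest on two building blocks: scoring a frame by its similarity to normal and abnormal text descriptions, and training that similarity with a triplet loss. This section does not reproduce the text-conditional similarity or how triplets are formed without labels, so the following is only a minimal NumPy sketch of the generic pieces the method starts from: plain CLIP-style zero-shot scoring (cosine similarity, temperature-scaled softmax, probability mass on the abnormal descriptions) and a standard hinge triplet loss. The embeddings are random stand-ins for CLIP outputs, the temperature reuses the value T = 0.01 that the parameter table lists for Equation (1) on the assumption that it plays this role, the margin of 0.1 is an arbitrary illustrative choice, and all function names are hypothetical.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale vectors along the last axis to unit length, so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def zero_shot_anomaly_score(frame_emb: np.ndarray,
                            normal_text_embs: np.ndarray,
                            abnormal_text_embs: np.ndarray,
                            T: float = 0.01) -> float:
    """Plain CLIP-style zero-shot score (NOT the paper's text-conditional similarity).

    frame_emb: (D,) image embedding; normal/abnormal_text_embs: (N, D) text embeddings.
    Returns the softmax probability mass that falls on the abnormal descriptions.
    """
    texts = l2_normalize(np.concatenate([normal_text_embs, abnormal_text_embs], axis=0))
    sims = texts @ l2_normalize(frame_emb)   # cosine similarity to every description
    logits = sims / T                         # temperature-scaled logits (T is an assumption)
    probs = np.exp(logits - logits.max())     # numerically stable softmax
    probs /= probs.sum()
    return float(probs[len(normal_text_embs):].sum())


def triplet_loss(anchor: np.ndarray, positive: np.ndarray, negative: np.ndarray,
                 margin: float = 0.1) -> float:
    """Generic hinge triplet loss on cosine similarity: asks for sim(a, p) >= sim(a, n) + margin."""
    a, p, n = (l2_normalize(v) for v in (anchor, positive, negative))
    return max(0.0, float(n @ a - p @ a + margin))


rng = np.random.default_rng(0)
normal = rng.normal(size=(3, 8))      # hypothetical stand-ins for CLIP text embeddings
abnormal = rng.normal(size=(2, 8))
frame = abnormal[0] + 0.01 * rng.normal(size=8)  # a frame embedding lying near an abnormal description
print(zero_shot_anomaly_score(frame, normal, abnormal))  # close to 1
```

With such a small temperature the softmax is nearly one-hot, so the score is driven almost entirely by the single closest description; that sharpness is one plausible reason a learned, text-conditional similarity could help.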

2. Related Work

2.1. Anomaly Detection in Surveillance Video

2.2. Large VLMs

2.3. Adaptation of VLMs

3. Proposed Method

3.1. Acquisition of Text Descriptions

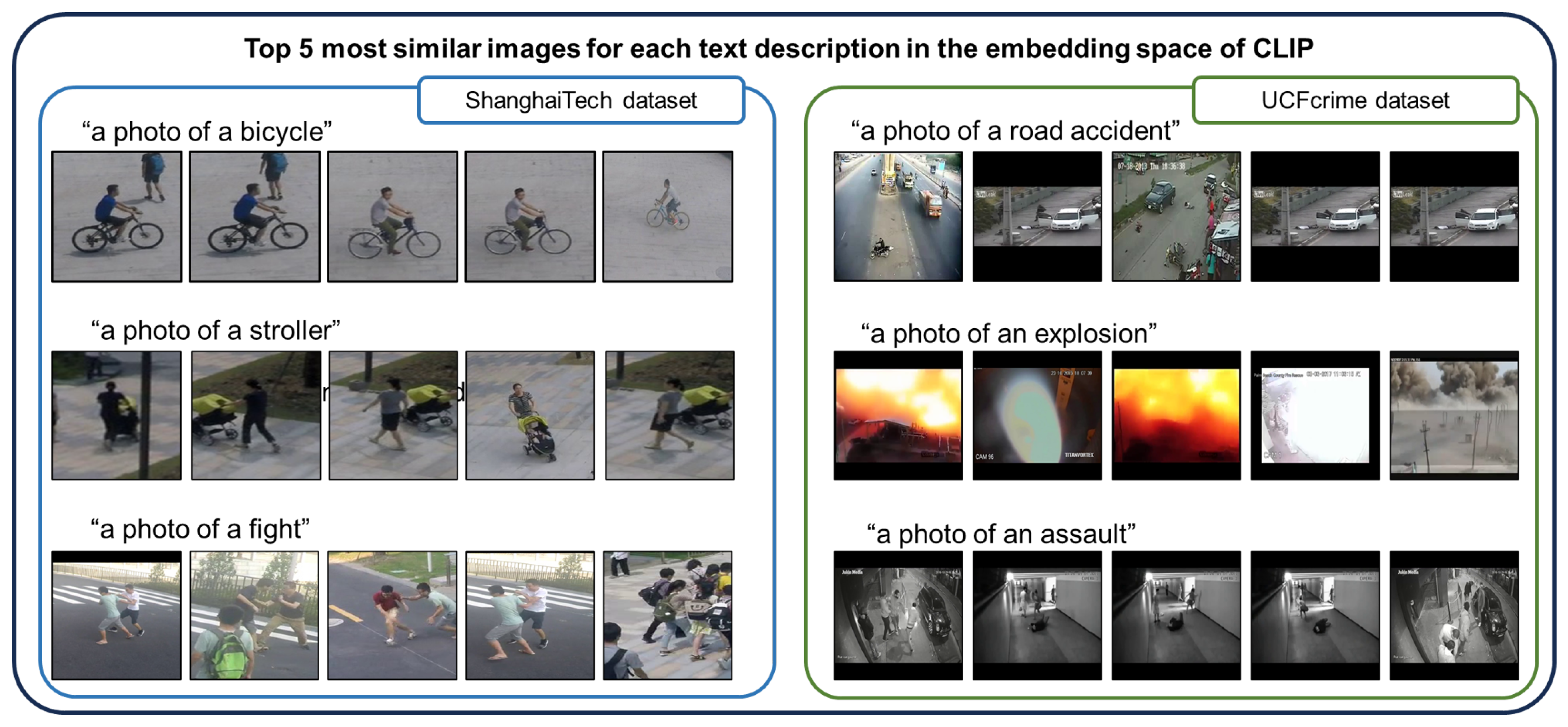

3.2. Proposed Anomaly Detector Using the Similarity Measure between Image and Text Descriptions

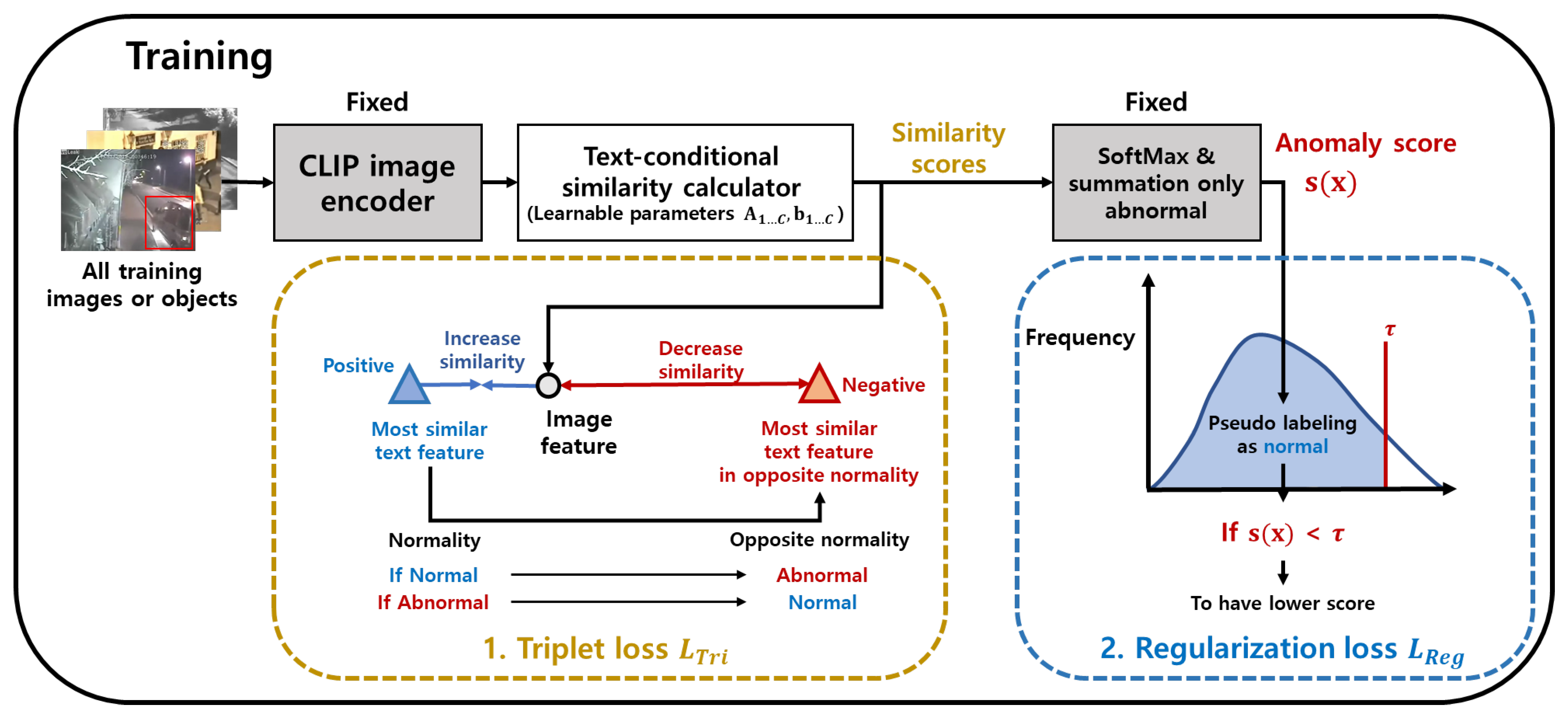

3.3. Training of the Parameters of the Proposed Similarity Measure with Text Descriptions

3.3.1. Triplet Loss

3.3.2. Regularization Loss

4. Experiments

4.1. Datasets and Text Descriptions

4.2. Implementation Details

4.2.1. Object Detector in the ShanghaiTech Dataset

4.2.2. Temporal Difference in UCFcrime Dataset

4.2.3. Temporal Smoothing of the Anomaly Score

4.2.4. Various Parameters in Use

4.3. Comparison with Existing Anomaly Detection Methods

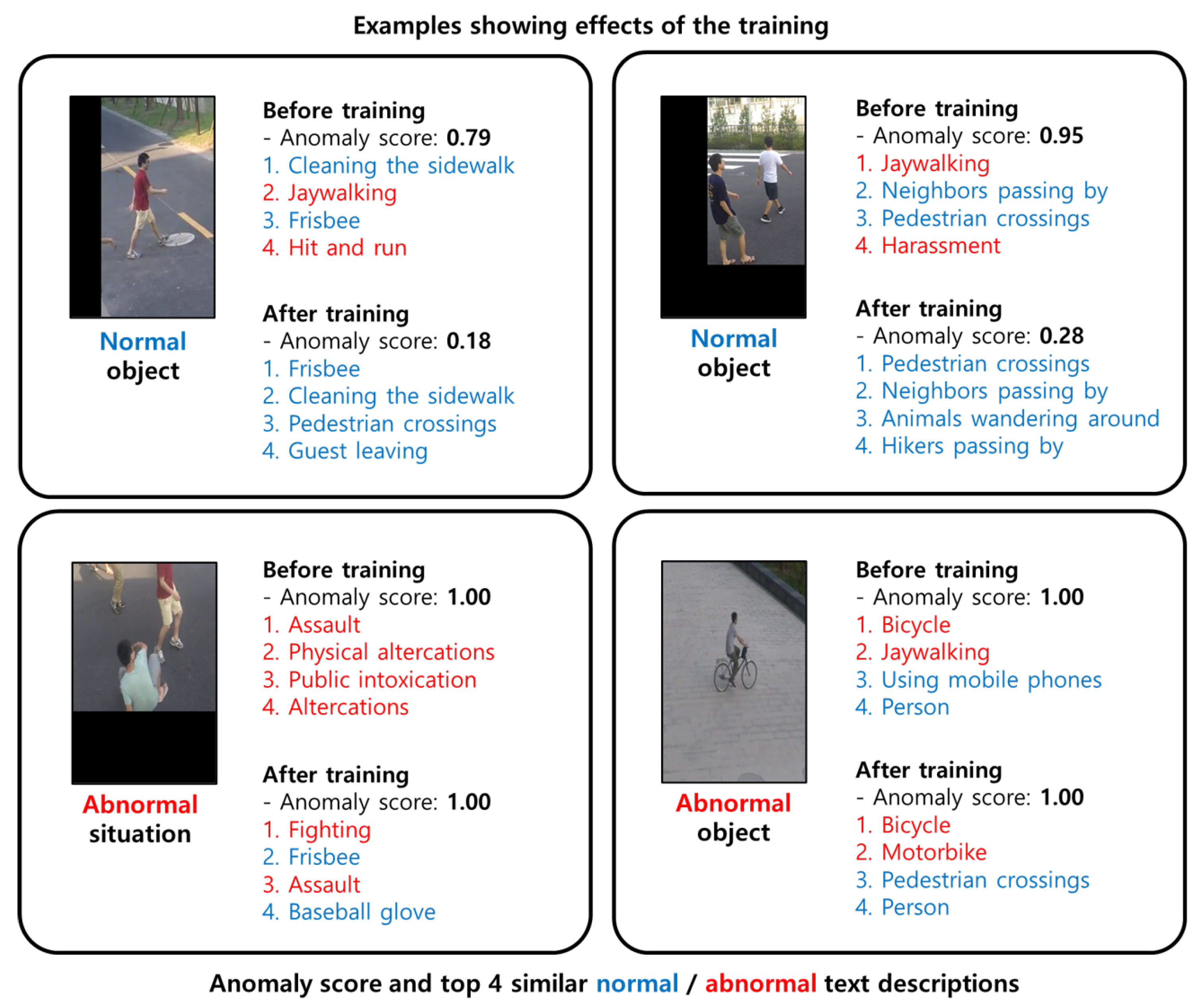

4.4. Performance Analysis

4.5. Ablation Study

4.5.1. Text-Conditional Similarity

4.5.2. Noise in the Obtained Text Descriptions

4.5.3. Ablation Study for Each Proposed Component

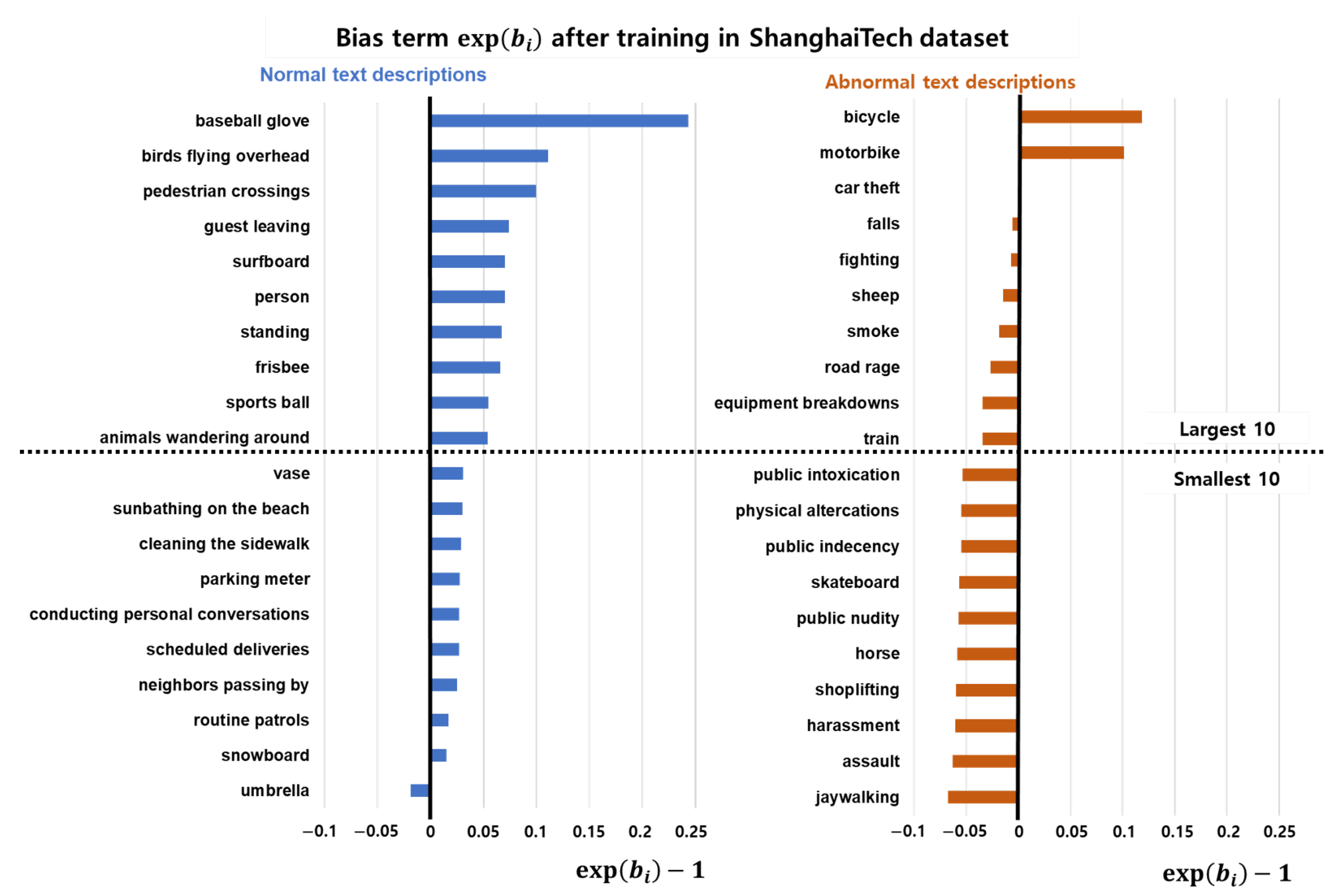

4.5.4. Bias Terms after Training

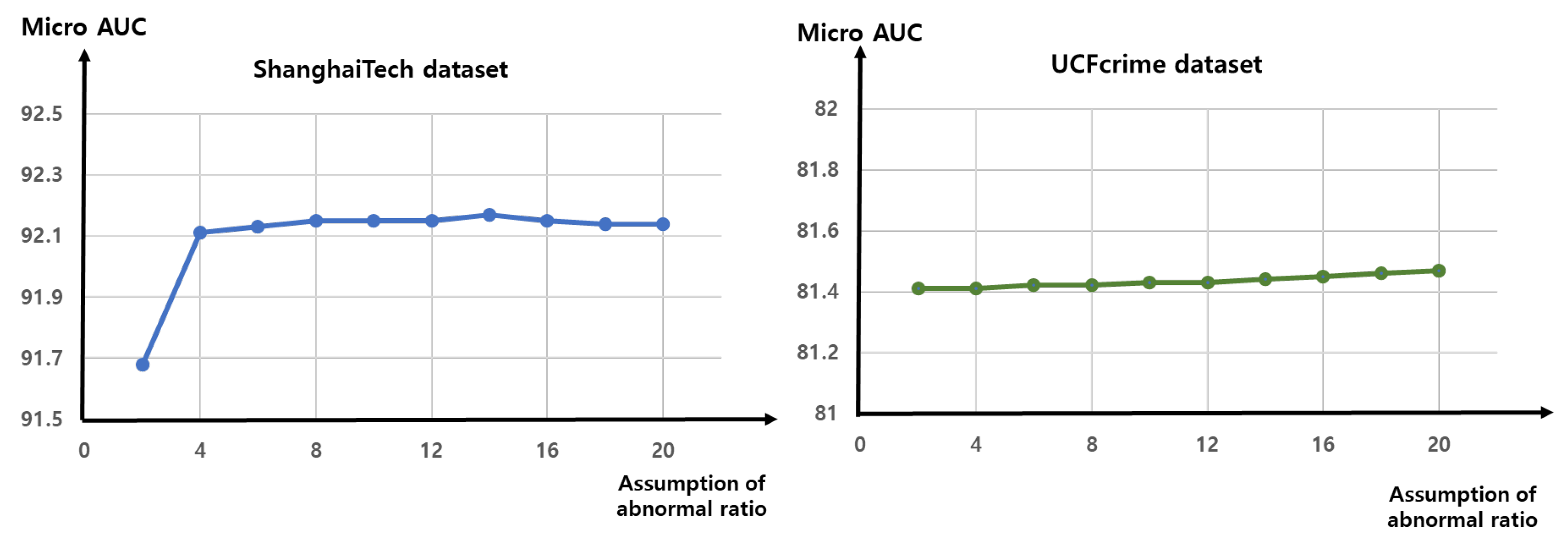

4.5.5. Assumption of Abnormal Ratio

4.5.6. Other Various Parameters

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Zaheer, M.Z.; Mahmood, A.; Khan, M.H.; Segu, M.; Yu, F.; Lee, S.I. Generative cooperative learning for unsupervised video anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 14744–14754.
2. Zhao, B.; Fei-Fei, L.; Xing, E.P. Online detection of unusual events in videos via dynamic sparse coding. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 3313–3320.
3. Hasan, M.; Choi, J.; Neumann, J.; Roy-Chowdhury, A.K.; Davis, L.S. Learning temporal regularity in video sequences. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 733–742.
4. Cheng, K.W.; Chen, Y.T.; Fang, W.H. Video anomaly detection and localization using hierarchical feature representation and Gaussian process regression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2909–2917.
5. Qiao, M.; Wang, T.; Li, J.; Li, C.; Lin, Z.; Snoussi, H. Abnormal event detection based on deep autoencoder fusing optical flow. In Proceedings of the 2017 36th Chinese Control Conference (CCC), Dalian, China, 26–28 July 2017; pp. 11098–11103.
6. Xu, D.; Yan, Y.; Ricci, E.; Sebe, N. Detecting anomalous events in videos by learning deep representations of appearance and motion. Comput. Vis. Image Underst. 2017, 156, 117–127.
7. Zaheer, M.Z.; Lee, J.H.; Astrid, M.; Lee, S.I. Old is gold: Redefining the adversarially learned one-class classifier training paradigm. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 14183–14193.
8. Wang, X.; Che, Z.; Jiang, B.; Xiao, N.; Yang, K.; Tang, J.; Ye, J.; Wang, J.; Qi, Q. Robust unsupervised video anomaly detection by multipath frame prediction. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 2301–2312.
9. Georgescu, M.I.; Ionescu, R.T.; Khan, F.S.; Popescu, M.; Shah, M. A background-agnostic framework with adversarial training for abnormal event detection in video. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 4505–4523.
10. Reiss, T.; Hoshen, Y. Attribute-Based Representations for Accurate and Interpretable Video Anomaly Detection. arXiv 2022, arXiv:2212.00789.
11. Sultani, W.; Chen, C.; Shah, M. Real-world anomaly detection in surveillance videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6479–6488.
12. Tian, Y.; Pang, G.; Chen, Y.; Singh, R.; Verjans, J.W.; Carneiro, G. Weakly-supervised video anomaly detection with robust temporal feature magnitude learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 4975–4986.
13. Sapkota, H.; Yu, Q. Bayesian nonparametric submodular video partition for robust anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 3212–3221.
14. Wu, P.; Liu, J. Learning causal temporal relation and feature discrimination for anomaly detection. IEEE Trans. Image Process. 2021, 30, 3513–3527.
15. Zhang, C.; Li, G.; Qi, Y.; Wang, S.; Qing, L.; Huang, Q.; Yang, M.H. Exploiting Completeness and Uncertainty of Pseudo Labels for Weakly Supervised Video Anomaly Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 16271–16280.
16. Lv, H.; Yue, Z.; Sun, Q.; Luo, B.; Cui, Z.; Zhang, H. Unbiased Multiple Instance Learning for Weakly Supervised Video Anomaly Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 8022–8031.
17. Cho, M.; Kim, M.; Hwang, S.; Park, C.; Lee, K.; Lee, S. Look Around for Anomalies: Weakly-Supervised Anomaly Detection via Context-Motion Relational Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 12137–12146.
18. Yu, G.; Wang, S.; Cai, Z.; Liu, X.; Xu, C.; Wu, C. Deep anomaly discovery from unlabeled videos via normality advantage and self-paced refinement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13987–13998.
19. Tur, A.O.; Dall’Asen, N.; Beyan, C.; Ricci, E. Exploring Diffusion Models for Unsupervised Video Anomaly Detection. arXiv 2023, arXiv:2304.05841.
20. Sato, F.; Hachiuma, R.; Sekii, T. Prompt-Guided Zero-Shot Anomaly Action Recognition Using Pretrained Deep Skeleton Features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 6471–6480.
21. Available online: chat.openai.com (accessed on 20 March 2023).
22. Menon, S.; Vondrick, C. Visual Classification via Description from Large Language Models. arXiv 2022, arXiv:2210.07183.
23. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 8748–8763.
24. Jia, C.; Yang, Y.; Xia, Y.; Chen, Y.T.; Parekh, Z.; Pham, H.; Le, Q.; Sung, Y.H.; Li, Z.; Duerig, T. Scaling up visual and vision-language representation learning with noisy text supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 4904–4916.
25. Li, J.; Li, D.; Xiong, C.; Hoi, S. BLIP: Bootstrapping language-image pre-training for unified vision-language understanding and generation. In Proceedings of the International Conference on Machine Learning, PMLR, Baltimore, MD, USA, 17–23 July 2022; pp. 12888–12900.
26. Singh, A.; Hu, R.; Goswami, V.; Couairon, G.; Galuba, W.; Rohrbach, M.; Kiela, D. FLAVA: A foundational language and vision alignment model. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 15638–15650.
27. Zeng, Y.; Zhang, X.; Li, H. Multi-grained vision language pre-training: Aligning texts with visual concepts. arXiv 2021, arXiv:2111.08276.
28. Yao, L.; Huang, R.; Hou, L.; Lu, G.; Niu, M.; Xu, H.; Liang, X.; Li, Z.; Jiang, X.; Xu, C. FILIP: Fine-grained interactive language-image pre-training. arXiv 2021, arXiv:2111.07783.
29. Zhang, H.; Zhang, P.; Hu, X.; Chen, Y.C.; Li, L.H.; Dai, X.; Wang, L.; Yuan, L.; Hwang, J.N.; Gao, J. GLIPv2: Unifying localization and vision-language understanding. In Advances in Neural Information Processing Systems 35, Proceedings of the Annual Conference on Neural Information Processing Systems 2022, New Orleans, LA, USA, 28 November–9 December 2022; Curran Associates, Inc.: Red Hook, NY, USA, 2022.
30. Wu, P.; Liu, J.; Shen, F. A deep one-class neural network for anomalous event detection in complex scenes. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 2609–2622.
31. Liu, W.; Luo, W.; Lian, D.; Gao, S. Future frame prediction for anomaly detection–a new baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6536–6545.
32. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.
33. Wortsman, M.; Ilharco, G.; Kim, J.W.; Li, M.; Kornblith, S.; Roelofs, R.; Lopes, R.G.; Hajishirzi, H.; Farhadi, A.; Namkoong, H.; et al. Robust fine-tuning of zero-shot models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 7959–7971.
34. Zhou, K.; Yang, J.; Loy, C.C.; Liu, Z. Learning to prompt for vision-language models. Int. J. Comput. Vis. 2022, 130, 2337–2348.
35. Zhou, K.; Yang, J.; Loy, C.C.; Liu, Z. Conditional prompt learning for vision-language models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16816–16825.
36. Lu, Y.; Liu, J.; Zhang, Y.; Liu, Y.; Tian, X. Prompt distribution learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5206–5215.
37. Gao, P.; Geng, S.; Zhang, R.; Ma, T.; Fang, R.; Zhang, Y.; Li, H.; Qiao, Y. CLIP-Adapter: Better vision-language models with feature adapters. arXiv 2021, arXiv:2110.04544.
38. Zhang, R.; Fang, R.; Zhang, W.; Gao, P.; Li, K.; Dai, J.; Qiao, Y.; Li, H. Tip-Adapter: Training-free CLIP-Adapter for better vision-language modeling. arXiv 2021, arXiv:2111.03930.
39. Lazebnik, S.; Schmid, C.; Ponce, J. A sparse texture representation using local affine regions. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1265–1278.
40. Nilsback, M.E.; Zisserman, A. A visual vocabulary for flower classification. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; Volume 2, pp. 1447–1454.
41. Dietterich, T.G.; Lathrop, R.H.; Lozano-Pérez, T. Solving the multiple instance problem with axis-parallel rectangles. Artif. Intell. 1997, 89, 31–71.
42. Teh, Y.; Jordan, M.; Beal, M.; Blei, D. Sharing clusters among related groups: Hierarchical Dirichlet processes. In Advances in Neural Information Processing Systems 17, Proceedings of the Annual Conference on Neural Information Processing Systems 2004, Vancouver, BC, Canada, 13–18 December 2004; Curran Associates, Inc.: Red Hook, NY, USA, 2004.
43. Kumar, M.; Packer, B.; Koller, D. Self-paced learning for latent variable models. In Advances in Neural Information Processing Systems 23, Proceedings of the Annual Conference on Neural Information Processing Systems 2010, Vancouver, BC, Canada, 6–9 December 2010; Curran Associates, Inc.: Red Hook, NY, USA, 2010.
44. Hara, K.; Kataoka, H.; Satoh, Y. Can spatiotemporal 3D CNNs retrace the history of 2D CNNs and ImageNet? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6546–6555.
45. Purwanto, D.; Chen, Y.T.; Fang, W.H. Dance with self-attention: A new look of conditional random fields on anomaly detection in videos. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 173–183.
46. Rao, Y.; Zhao, W.; Chen, G.; Tang, Y.; Zhu, Z.; Huang, G.; Zhou, J.; Lu, J. DenseCLIP: Language-guided dense prediction with context-aware prompting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 18082–18091.
47. Wang, C.; Chai, M.; He, M.; Chen, D.; Liao, J. CLIP-NeRF: Text-and-image driven manipulation of neural radiance fields. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 3835–3844.
48. Shi, H.; Hayat, M.; Wu, Y.; Cai, J. ProposalCLIP: Unsupervised open-category object proposal generation via exploiting CLIP cues. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 9611–9620.
49. Wang, Z.; Lu, Y.; Li, Q.; Tao, X.; Guo, Y.; Gong, M.; Liu, T. CRIS: CLIP-driven referring image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11686–11695.
50. Shen, S.; Li, L.H.; Tan, H.; Bansal, M.; Rohrbach, A.; Chang, K.W.; Yao, Z.; Keutzer, K. How much can CLIP benefit vision-and-language tasks? arXiv 2021, arXiv:2107.06383.
51. Li, M.; Xu, R.; Wang, S.; Zhou, L.; Lin, X.; Zhu, C.; Zeng, M.; Ji, H.; Chang, S.F. CLIP-Event: Connecting text and images with event structures. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16420–16429.
52. Khandelwal, A.; Weihs, L.; Mottaghi, R.; Kembhavi, A. Simple but effective: CLIP embeddings for embodied AI. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 14829–14838.
53. Qian, Q.; Shang, L.; Sun, B.; Hu, J.; Li, H.; Jin, R. SoftTriple loss: Deep metric learning without triplet sampling. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6450–6458.
54. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.
55. Ionescu, R.T.; Khan, F.S.; Georgescu, M.I.; Shao, L. Object-centric auto-encoders and dummy anomalies for abnormal event detection in video. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7842–7851.
56. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32, Proceedings of the Annual Conference on Neural Information Processing Systems 2019, Vancouver, BC, Canada, 8–14 December 2019; Curran Associates, Inc.: Red Hook, NY, USA, 2019; pp. 8024–8035.
57. Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for large-scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), Savannah, GA, USA, 2–4 November 2016; pp. 265–283.
58. Carreira, J.; Zisserman, A. Quo vadis, action recognition? A new model and the kinetics dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6299–6308.
59. Liu, P.; Lyu, M.; King, I.; Xu, J. SelFlow: Self-supervised learning of optical flow. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4571–4580.

| Category | Method | Explanation | Limitation |
|---|---|---|---|
| OCC | Georgescu et al. [9] | Uses pseudo-abnormal data | Requires optical flow |
| | Reiss et al. [10] | kNN distance with training data | Cannot be applied to unsupervised datasets |
| Weakly sup. | RTFM [12] | Pseudo-labeling using top-k | Requires frame-level labeled normal videos and abnormal videos |
| | Sapkota et al. [13] | Pseudo-labeling using HDP-HMM | |
| | Wu et al. [14] | Tightly captures temporal relations | |
| | Purwanto et al. [45] | Considers relational propagation | |
| Unsupervised | Yu et al. [18] | Pseudo-labeling with self-paced learning | Requires a significant amount of computation for feature extraction using 16 frames |
| | Zaheer et al. [1] | Pseudo-labeling with cooperative learning | |
| | Tur et al. [19] | Reconstruction with a diffusion model | |

| Normal | Abnormal |
|---|---|
| pedestrian traffic | vandalism |
| building access | theft |
| street performers | assault |
| outdoor dining | trespassing |
| tourists | property damage |
| dog walkers | fire |
| customer browsing | smoke |
| customer shopping | tornado |
| stocking shelves | hurricane |
| employee arrivals | medical emergencies |
| production line operations | break-in |
| scheduled deliveries | loitering |
| pet movement | drug use |
| neighbors passing by | graffiti |
| maintenance crew working | public intoxication |
| cleaning crew working | unruly crowds |
| pedestrian crossings | shoplifting |
| children playing | drug dealing |
| cleaning the sidewalk | hiding merchandise |
| hikers passing by | altercations |
| animals wandering around | fighting |
| birds flying overhead | injuries |
| sunrise | accidents |
| sunset | slips |
| routine patrols | falls |
| sitting | intruder |
| standing | equipment malfunctions |
| reading newspapers | equipment breakdowns |
| reading books | chemical spills |
| using mobile phones | chemical leaks |
| conducting personal conversations | power outages |
| walking through the station | vehicle accidents |
| guest arriving | vehicle collisions |
| guest leaving | physical altercations |
| cashier scanning | hit and run |
| cashier bagging items | car theft |
| restocking shelves | robbery |
| sunbathing on the beach | road rage |
| lounging on the beach | jaywalking |
| buying a ticket | aggressive behavior |
| delivery trucks | harassment |
| bicyclists | public indecency |
| joggers | public nudity |
| cars driving by | acts of terrorism |
| emergency vehicles | acts of violence |
| running | burglary |
| motorbike | rockslides |
| people entering parked vehicles | avalanches |
| traffic flow | dangerous wildlife |
| vehicle traffic | weapons possession |

| Normal | Normal (cont.) | Normal (cont.) | Abnormal |
|---|---|---|---|
| person | traffic light | remote | bicycle |
| fire hydrant | stop sign | mouse | car |
| parking meter | bench | tvmonitor | motorbike |
| bird | cat | dog | aeroplane |
| backpack | umbrella | handbag | bus |
| tie | suitcase | frisbee | train |
| skis | snowboard | sports ball | truck |
| kite | baseball bat | baseball glove | boat |
| surfboard | tennis racket | bottle | horse |
| wine glass | cup | fork | sheep |
| knife | spoon | bowl | cow |
| banana | apple | sandwich | elephant |
| orange | broccoli | carrot | bear |
| hot dog | pizza | donut | zebra |
| cake | chair | sofa | giraffe |
| potted plant | bed | dining table | skateboard |
| toilet | | | |

| Notation | Value Used in the Proposed Method | Reason for the Value |
|---|---|---|
| T in Equation (1) | 0.01 | Gives the best performance (Section 4.5.6) |
| in Equation (6) | 1 if 0.1 otherwise | Gives the best performance (Section 4.5.6) |
| in Equation (8) | 0.1 | Has little effect on performance (Section 4.5.6) |
| in Equation (9) | 0.1 | Has little effect on performance (Section 4.5.6) |
| in Equation (8) | The value at which 20% of the data are classified as abnormal by Equation (8) | Has little effect on performance (Section 4.5.5) |
| of 1D Gaussian filter in Section 4.2.3 | 21 | Same value as used by Georgescu et al. [9] |
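
Two rows of the parameter table describe post-processing that is easy to sketch: a 1D Gaussian filter for temporal smoothing of the anomaly score (Section 4.2.3) with the value 21, and a parameter chosen so that 20% of the data count as abnormal. The extracted table drops the symbol of the filter parameter and does not show Equation (8), so the NumPy sketch below assumes that 21 is the standard deviation σ and replaces Equation (8) with a plain percentile threshold over the scores. The 4σ truncation and mirrored edge padding are illustrative choices, and the function names are hypothetical.

```python
import numpy as np


def gaussian_smooth(scores, sigma: float = 21.0, truncate: float = 4.0) -> np.ndarray:
    """Smooth a per-frame score sequence with a normalized 1D Gaussian kernel.

    sigma=21 mirrors the table's value, ASSUMING it denotes the standard deviation.
    """
    scores = np.asarray(scores, dtype=float)
    radius = int(truncate * sigma + 0.5)               # kernel half-width, cut off at truncate * sigma
    offsets = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (offsets / sigma) ** 2)
    kernel /= kernel.sum()                             # weights sum to 1, so a constant input stays constant
    padded = np.pad(scores, radius, mode="symmetric")  # mirror the ends to avoid edge drop-off
    return np.convolve(padded, kernel, mode="valid")   # output has the same length as the input


def ratio_threshold(scores, abnormal_ratio: float = 0.2) -> float:
    """Threshold above which about `abnormal_ratio` of the scores lie (a simple percentile)."""
    return float(np.quantile(np.asarray(scores, dtype=float), 1.0 - abnormal_ratio))


raw = np.random.default_rng(0).random(500)  # hypothetical per-frame anomaly scores
smoothed = gaussian_smooth(raw)
thr = ratio_threshold(smoothed)
flags = smoothed > thr                       # frames flagged as abnormal
print(len(smoothed), flags.mean())
```

A large σ such as 21 frames averages out single-frame spikes, which suits events that last for many frames but blurs very short ones.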

| Dataset | Metric | Unsupervised: GCL [1] | Unsupervised: Georgescu et al. [9] | Unsupervised with text descriptions: Proposed (rel. diff. vs. best unsup. / score / rel. diff. vs. best weakly sup.) | Weakly supervised: RTFM [12] (impl./paper) | Weakly supervised: Wu and Liu [14] | Weakly supervised: Purwanto et al. [45] |
|---|---|---|---|---|---|---|---|
| ShanghaiTech [31] | Micro AUC | 78.93 | 84.77 | 8%/92.14/−6% | 97.39/97.21 | 97.48 | 96.85 |
| | Micro AP | - | 46.64 | 18%/57.22/−11% | 59.63/- | 63.26 | - |
| | Micro AUC-AbnVideos | - | 80.49 | 5%/84.50/17% | 70.35/- | 68.47 | - |
| | Macro AUC | - | 97.21 | 1%/97.82/2% | 96.06/- | - | - |
| | Macro AP | - | 97.68 | 0%/97.85/3% | 95.15/- | - | - |
| UCFcrime [11] | Micro AUC | 71.04 | - | 13%/81.27/−5% | 83.63/84.30 | 84.89 | 85.00 |
| | Micro AP | - | - | -/27.91/−11% | 24.90/- | 31.10 | - |
| | Micro AUC-AbnVideos | - | - | -/67.72/5% | 64.38/- | - | - |
| | Macro AUC | - | - | -/85.76/3% | 83.26/- | - | - |
| | Macro AP | - | - | -/71.97/2% | 70.32/- | - | - |
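
The percentages in the Proposed column are not defined in the table itself. Checking them against the scores, they match (proposed − best competing score in the group) / proposed, rounded to a whole percent: for ShanghaiTech Micro AUC, (92.14 − 84.77) / 92.14 ≈ 8%, whereas dividing by 84.77 would give 9%. The helper below is a sketch under that inferred definition; `rel_diff_pct` is a hypothetical name.

```python
def rel_diff_pct(proposed: float, other: float) -> int:
    """Relative difference (proposed - other) / proposed, in whole percent (inferred definition)."""
    return round(100 * (proposed - other) / proposed)


# (proposed, best unsupervised, best weakly supervised), copied from the table above
rows = {
    "ShanghaiTech Micro AUC": (92.14, 84.77, 97.48),
    "ShanghaiTech Micro AP": (57.22, 46.64, 63.26),
    "UCFcrime Micro AUC": (81.27, 71.04, 85.00),
}
for name, (prop, unsup, weak) in rows.items():
    print(f"{name}: {rel_diff_pct(prop, unsup)}% / {prop} / {rel_diff_pct(prop, weak)}%")
```

The three printed lines reproduce the table entries 8%/92.14/−6%, 18%/57.22/−11%, and 13%/81.27/−5%.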

| Dataset | Georgescu et al. [9] | Proposed Method | RTFM [12] |
|---|---|---|---|
| ShanghaiTech | 0.13 | 0.13 | 2.00 |
| UCFcrime | - | 1.02 | 2.52 |

| Object-Centric Method (20 Objects per Frame) | | | | | |
|---|---|---|---|---|---|
| Proposed | | Georgescu et al. [9] | | | |
| CLIP feature calc. [23] | 20.49 ms | Optical flow calc. (SelFlow [59]) | 57.90 ms | | |
| Similarity calc. | 0.18 ms | Reconstruction and classification | 4.37 ms | | |
| Total | 20.67 ms (67% faster) | Total | 62.27 ms | | |
| Non-Object-Centric Method (Frame as Input) | | | | | |
| Proposed | | GCL [1] | | RTFM [12] | |
| CLIP feature calc. | 4.79 ms | ResNeXt [44] feature calc. | 18.79 ms | I3D [58] feature calc. (10-crop) | 68.15 ms |
| Similarity calc. | 0.18 ms | Classification | 0.11 ms | Classification | 2.51 ms |
| Total | 4.97 ms (74% and 93% faster than GCL and RTFM, respectively) | Total | 18.90 ms | Total | 70.66 ms |
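
The "faster" figures in the run-time table can be checked in the same way. Unlike the accuracy table, the reduction here is measured against the baseline's total time, (baseline − proposed) / baseline, which reproduces the 67%, 74%, and 93% entries. The helper name is hypothetical.

```python
def percent_faster(proposed_ms: float, baseline_ms: float) -> int:
    """Run-time reduction relative to the baseline, (baseline - proposed) / baseline, in whole percent."""
    return round(100 * (baseline_ms - proposed_ms) / baseline_ms)


proposed_obj = 20.49 + 0.18    # CLIP features + similarity, object-centric setting
proposed_frame = 4.79 + 0.18   # CLIP features + similarity, frame-as-input setting
print(percent_faster(proposed_obj, 57.90 + 4.37))    # vs. Georgescu et al. [9]: 67
print(percent_faster(proposed_frame, 18.79 + 0.11))  # vs. GCL [1]: 74
print(percent_faster(proposed_frame, 68.15 + 2.51))  # vs. RTFM [12]: 93
```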

| Dataset | Metric | WiseFT-0.2 | WiseFT-0.4 | WiseFT-0.6 | WiseFT-0.8 | WiseFT-1.0 | Proposed |
|---|---|---|---|---|---|---|---|
| ShanghaiTech | Micro AUC | 87.25 | 88.54 | 89.59 | 91.03 | 92.12 | 92.14 |
| | Micro AP | 45.03 | 47.43 | 52.8 | 54.89 | 56.07 | 57.22 |
| | Micro AUC-AbnVideos | 75.75 | 75.93 | 78.38 | 78.31 | 78.35 | 84.50 |
| | Macro AUC | 97.15 | 97.25 | 97.26 | 97.19 | 97.11 | 97.82 |
| | Macro AP | 97.33 | 97.68 | 97.78 | 97.85 | 97.79 | 97.85 |
| UCFcrime | Micro AUC | 81.95 | 80.85 | 79.45 | 78.90 | 78.90 | 81.27 |
| | Micro AP | 26.77 | 24.94 | 22.03 | 21.23 | 23.62 | 27.91 |
| | Micro AUC-AbnVideos | 67.41 | 66.89 | 64.36 | 63.88 | 65.61 | 67.72 |
| | Macro AUC | 85.58 | 85.04 | 84.68 | 84.27 | 86.30 | 85.76 |
| | Macro AP | 71.98 | 71.31 | 71.35 | 71.14 | 72.57 | 71.97 |
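
The WiseFT columns compare against WiSE-FT [33], which ensembles a zero-shot model and its fine-tuned counterpart in weight space: θ = (1 − α)·θ_zero-shot + α·θ_fine-tuned, so that α = 0 recovers the zero-shot weights and α = 1 the fine-tuned weights in Wortsman et al. The extracted table does not state how its numeric suffix is defined, so reading it as α is an assumption. The sketch below applies the interpolation to toy NumPy arrays standing in for model parameters; `wise_ft` and the parameter names are hypothetical.

```python
import numpy as np


def wise_ft(zero_shot: dict, fine_tuned: dict, alpha: float) -> dict:
    """Weight-space ensemble of two models with identical parameter names and shapes:
    theta = (1 - alpha) * theta_zero_shot + alpha * theta_fine_tuned."""
    if zero_shot.keys() != fine_tuned.keys():
        raise ValueError("models must share parameter names")
    return {name: (1.0 - alpha) * zero_shot[name] + alpha * fine_tuned[name] for name in zero_shot}


zs = {"proj": np.zeros((2, 2)), "bias": np.array([0.0, 1.0])}  # toy "zero-shot" weights
ft = {"proj": np.ones((2, 2)), "bias": np.array([2.0, 3.0])}   # toy "fine-tuned" weights
print(wise_ft(zs, ft, 0.4)["bias"])
```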

| Dataset | Metric | w/o omitting | w/ omitting |
|---|---|---|---|
| ShanghaiTech | Micro AUC | 92.14 | 91.91 ± 1.02 |
| | Macro AUC | 97.82 | 97.70 ± 0.20 |
| UCFcrime | Micro AUC | 81.27 | 81.20 ± 0.81 |
| | Macro AUC | 85.76 | 86.16 ± 1.21 |

| Module | Temporal difference | - | ✓ | ✓ | ✓ | ✓ | - |
|---|---|---|---|---|---|---|---|
| | Regularization loss | - | - | ✓ | - | ✓ | ✓ |
| | Triplet loss | - | - | - | ✓ | ✓ | ✓ |
| ShanghaiTech | Micro AUC | 76.98 | 75.04 | 79.99 | 90.81 | 91.58 | 92.14 |
| | Micro AP | 19.14 | 20.19 | 27.46 | 58.30 | 60.86 | 57.22 |
| | Macro AUC | 96.43 | 97.00 | 96.98 | 97.60 | 97.73 | 97.82 |
| | Macro AP | 95.61 | 96.69 | 96.67 | 97.68 | 97.85 | 97.85 |
| UCFcrime | Micro AUC | 77.60 | 78.90 | 81.51 | 81.47 | 81.47 | 78.56 |
| | Micro AP | 23.00 | 23.62 | 27.88 | 27.91 | 27.87 | 23.59 |
| | Macro AUC | 83.21 | 86.30 | 86.01 | 86.01 | 86.00 | 83.02 |
| | Macro AP | 69.83 | 72.57 | 72.25 | 72.27 | 72.25 | 69.82 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, J.; Yoon, S.; Choi, T.; Sull, S. Unsupervised Video Anomaly Detection Based on Similarity with Predefined Text Descriptions. Sensors 2023, 23, 6256. https://doi.org/10.3390/s23146256


Chicago/Turabian Style: Kim, Jaehyun, Seongwook Yoon, Taehyeon Choi, and Sanghoon Sull. 2023. "Unsupervised Video Anomaly Detection Based on Similarity with Predefined Text Descriptions" Sensors 23, no. 14: 6256. https://doi.org/10.3390/s23146256
