Vision-Based Methods for Food and Fluid Intake Monitoring: A Literature Review

Abstract

1. Introduction

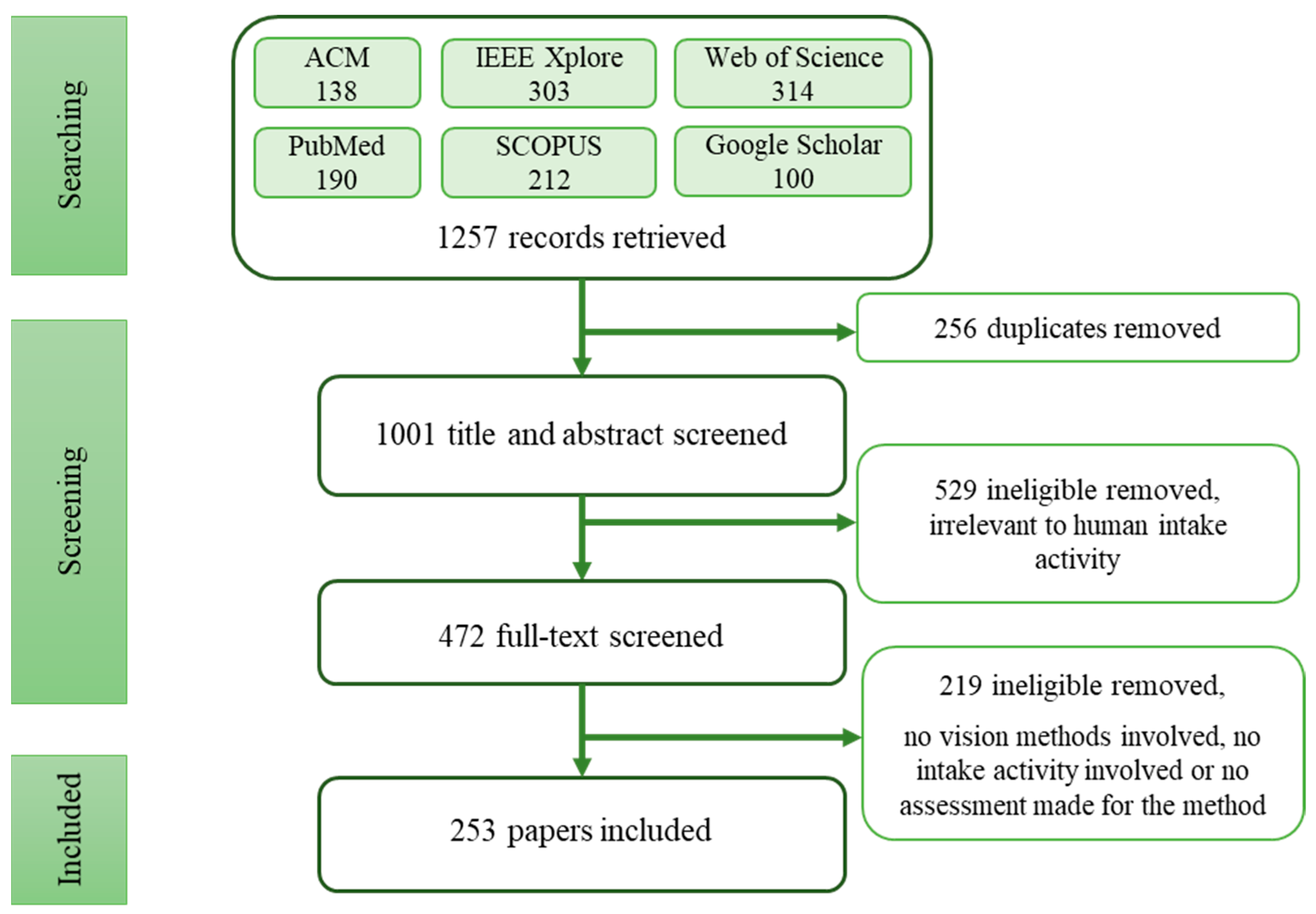

2. Methods

2.1. Literature Search

2.2. Screening

3. Overview of Vision-Based Intake Monitoring

3.1. Active and Passive Methods

3.2. Environmental Settings

3.3. Privacy Issue

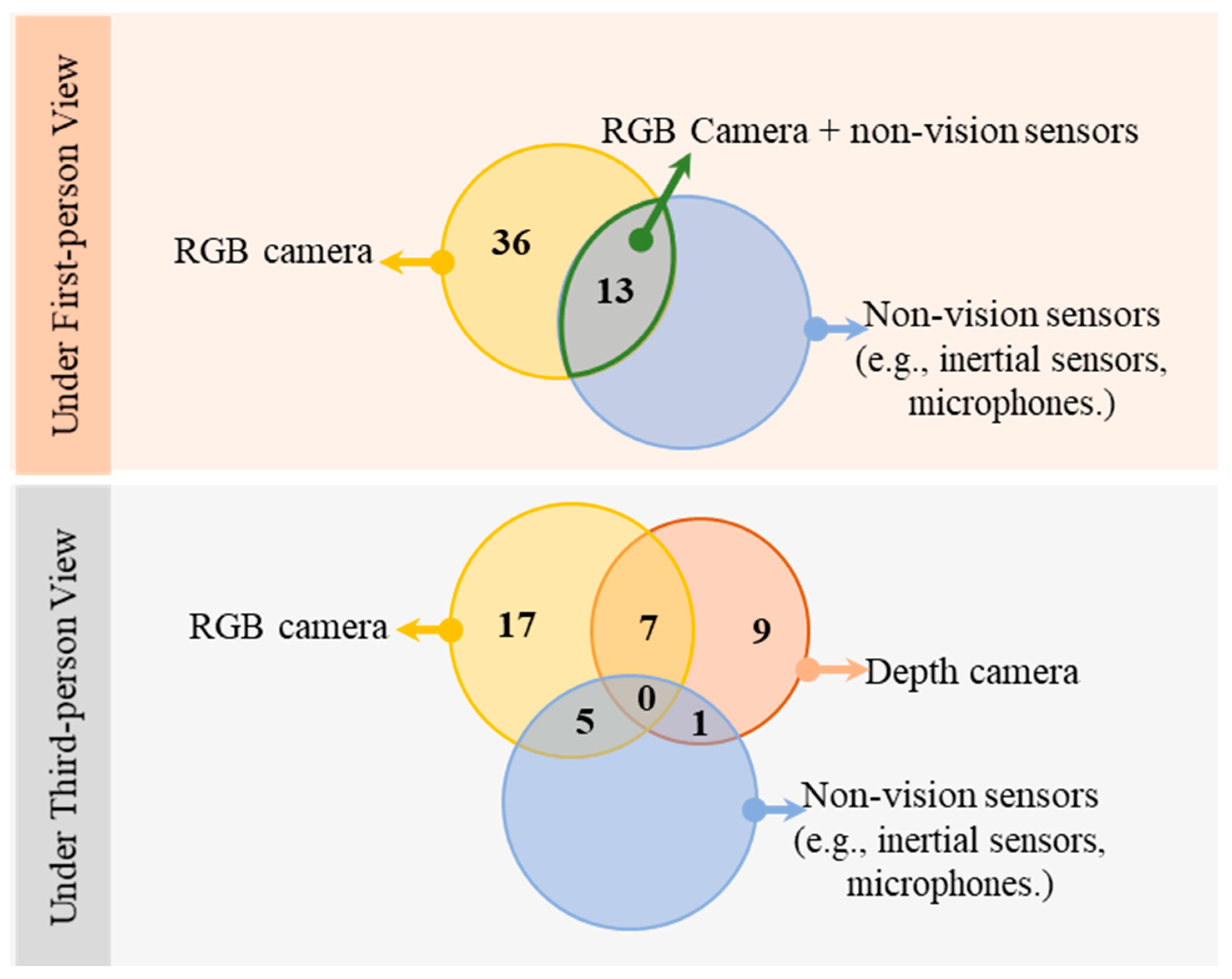

4. Viewing Angles and Devices in Monitoring Systems

4.1. First-Person Approaches

4.2. Third-Person Approaches

5. Algorithms by Task

5.1. Binary Classification

5.2. Food/Drink Type Classification

| (a) Part 1 | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Reference | Citation No. | Method: ML | Method: DL | Method: Other | Details of the Methods | Assessment | Drinking Included (Y/N) | Viewing Angle: First-Person | Viewing Angle: Third-Person | Viewing Angle: Not Mentioned |

| Qiu et al., 2021 | [110] | 1 | DNN | Bite counting: 74.15% top-1 accuracy and an MSE value of 0.312 when using regression. | Y | 1 | ||||

| Martinez et al., 2020 | [102] | 1 | 1 | Compared with VGG365, FV (fine-tuning VGG365) + RF, SVMTree, FV + SVM, FV + KNN, MACNet, and EnsembleCNN. | Food classification: accuracy of 56% and F-score of 65% on the EgoFoodPlaces dataset. | Y | 1 | |||

| Du et al., 2019 | [46] | 1 | 1 | SVM on the phone for the hand-raising action; Faster R-CNN on the server to confirm the detected drinking activity and identify the type of drink. | Drinking activity detection reached 85.64% and liquid type classification reached 84%. | Y | 1 | |||

| Fuchs et al., 2020 | [89] | 1 | CNN (Inception ResNet V2, ResNet50 V2, and MobileNet V2) for beverage recognition. | A mAP of over 95% was observed when 100 images per product were used for training. | Y | 1 | ||||

| Zhu et al., 2010 | [27] | 1 | Food pictures taken before and after eating; SVM with a Gaussian radial basis kernel for classification; camera parameter estimation and model reconstruction for volume estimation. | Up to 97.2% for classification and 1% misreported nutrition information (with 50% training data). | Y | 1 | ||||

| Zhang et al., 2018 | [69] | 1 | 1 | 1 | Segmentation of point cloud on colour and depth information using region growing algorithm; CNN for recognition and PCA for orientation estimation. | Average of 90.3% success rate when detecting and estimating the orientation of handle-less cups. | Y | 1 | ||

| Bellandi et al., 2012 | [108] | 1 | Halcon “Matching Assistant” tool: template matching. | Available for both object detection and Locate Glasses procedure. | Y | 1 | ||||

| Sadeq et al., 2018 | [111] | 1 | CNN. | 96% on food recognition on the FooDD dataset. | Y | 1 | ||||

| Rouast et al., 2018 | [24] | 1 | CNN. | 70% accuracy on action classification with class-balanced test data. | Y | 1 | ||||

| Chae et al., 2011 | [107] | 1 | 1 | Medial axis to determine the potential width of the template shape (shape boundary); the active contour methodology. | For 17 beverage images average relative error and standard deviation were about 11% and eight, respectively. For bread slices, the volume was 8% overestimated. | Y | 1 | |||

| Hafiz et al., 2016 | [40] | 1 | 1 | Colour-based BoF; Speeded-Up Robust Features (SURF); mean-shift segmentation. | >89% accuracy. | Y | 1 | |||

| Park et al., 2019 | [112] | 1 | A hierarchical multi-task learning framework. | Sugar level prediction with over 85% accuracy and alcoholic drink recognition over 90% accuracy. | Y | 1 | ||||

| Mezgec et al., 2017 | [78] | 1 | A new architecture; Compared with four different deep learning architectures (AlexNet, GoogLeNet, ResNet and NutriNet) and three solver types (SGD, NAG, and AdaGrad). | A classification accuracy of 86.72%, a detection accuracy of 94.47% (the binary task). | Y | 1 | ||||

| Mezgec et al., 2019 | [92] | 1 | DL-FCN-8s network, namely NutriNet. | Accuracy of 92.18%. | Y | 1 | ||||

| Mezgec et al., 2021 | [86] | 1 | DNN, namely NutriNet for food recognition (tested against AlexNet, GoogLeNet and ResNet); FCNs and ResNet for food segmentation. | AP up to 63.4% on food recognition (result from food recognition challenge). | Y | 1 | ||||

| Schiboni et al., 2018 | [55] | 1 | 1 | Image Processing and SVM for recognition and basic mathematical equation for calculating calories. | 90% recall on food detection. | N | 1 | |||

| Liu et al., 2018 | [113] | 1 | Food segmentation by an improved C-V model; 3D virtual object construction. | MAPE values for food volume measurement were mostly below 7%; the average MAPE over all objects was 4.48%. | N | 1 | ||||

| Zhou et al., 2022 | [114] | 1 | Cross-modal retrieval on diabetogenic food (CMRDF), a graph-based cross-modal retrieval method. | The mAP is up to 75.9% using CMRDF-3M. | N | 1 | ||||

| Qiu, Lo, Gu, et al., 2021 | [104] | 1 | Transformer-based captioning model (using Faster R-CNN + ResNet); compared with four other algorithms. | 42.0 on Volume Estimation, 47.5 on Food Recognition and 62.7 on Action Recognition. | N | 1 | ||||

| Jiang et al., 2018 | [81] | 1 | AR overlay application: object tracking based on content-based image retrieval (CBIR) by RIS. | The average recognition rate is 75.9% in the supermarket scene and 87.9% on the FIDS30 database. | N | 1 | ||||

| Rachakonda et al., 2020 | [75] | 1 | Machine learning for food classification; Principal Component Analysis (PCA). | 97% total accuracy for object detection (not for energy estimation or action detection). | N | 1 | ||||

| Rachakonda et al., 2019 | [115] | 1 | TensorFlow and object detection interface; Firebase Database for nutrition estimation. | 97% accuracy for calorie counts. | N | 1 | ||||

| Matei et al., 2021 | [82] | 1 | 1 | 1 | CNN for food and place detection (ResNet50, DenseNet161, and VGG16 combined with SVM, KNN, RF for binary classification); Object detector: ResNet50, DenseNet161, VGG16, GoogLeNet Inception V3 architectures pre-trained on ImageNet; Place recognition: ResNet50 pre-trained on Places365; DTW for nutritional activity analysis, which outputs the similarity of two time series; Isolation Forest for grouping days with the same nutritional habits. | A weighted accuracy and F-score of 70% and 63%, respectively, on food/non-food classification. | N | 1 | ||

| Konstantakopoulos et al., 2021 | [116] | 1 | Two-view 3D food reconstruction. | A mean absolute percentage error of 4.6–11.1% per food dish. | N | 1 | ||||

| Rahmana et al., 2012 | [117] | 1 | Generating texture features from food images using Gabor filters. | MAP values remain above 90% for the extreme scale factor values of 0.7 and 1.4; see the paper's tables on food classification and image retrieval. | N | 1 | ||||

| Iizuka et al., 2018 | [68] | 1 | NN and CNN. | Estimate the weight of each meal element within a 10% error rate. | N | 1 | ||||

| Qiu et al., 2019 | [118] | 1 | 1 | Mask R-CNN + a mechanism for hand-face distance. | See Table 2 in the literature and the description. | N | 1 | |||

| Lei et al., 2021 | [119] | 1 | Mask R-CNN for dish detection + OpenPose for pose estimation (set threshold for the 75 values obtained). | Eating state estimation 87.7% average accuracy; bite error percentage 26.2%. | N | 1 | ||||

| Sarapisto et al., 2022 | [103] | 1 | ResNet for food recognition, deeper ResNet with MenuProd for weight estimation. | 90% F1 score (averaged over classes) in a multi-label classification task of detecting the food items; approximately 15 g error per food item over all items. | N | 1 | ||||

| Rhyner et al., 2016 | [120] | Assessing the accuracy of the GoCARB app. | 85.1% successfully recognised by the app. | N | 1 | |||||

| (b) Part 2 | ||||||||||

| Esfahani et al., 2020 | [121] | 1 | SVM (Support Vector Machine) and Logistic Regression classifiers. | Classification accuracy 0.5119 on hyperspectral and 0.4558 on RGB using SVM. | N | 1 | ||||

| Kong et al., 2011 | [122] | 1 | SVM. | 76% when recognizing an arbitrary number of food items and 84% when recognizing a single food item. | N | 1 | ||||

| Tomescu, 2020 | [105] | 1 | EfficientNet for food recognition and depth map fusion for estimating volume. | A slight volume overestimation of 0–10%. | N | 1 | ||||

| Myers et al., 2015 | [73] | 1 | A CNN-based classifier (GoogLeNet). | 99.02% on meal detection; an average relative error of 0.18 m on volume estimation. | N | 1 | ||||

| Pouladzadeh et al., 2013 | [123] | 1 | K-means clustering for features; SVM. | 92.2% accuracy for food recognition (with all features used); area measurement error of 6.39% on average; no results on calorie estimation. | N | 1 | ||||

| Jayakumar et al., 2020 | [124] | 1 | CNN for food and face detection. | Food detection accuracy was not clearly reported; face detection results were given in a table. | N | 1 | ||||

| Lee et al., 2016 | [79] | 1 | Extract HOG features from IR and RGB, then SVM + PCA + kPCA. | Isolate food parts with an accuracy of 97.5% and determine the type of food with an accuracy of 88.93%. | N | 1 | ||||

| Gao et al., 2019 | [94] | 1 | 1 | Maximum Length Sequence (MLS) in sound signal and single-task FCN (VGG-16) for image; (neither training images with volume information nor placing a reference object of known size). | Relative error of −0.27% to 12.37% on different objects. | N | 1 | |||

| Ravì et al., 2015 | [125] | 1 | A Fisher Vector representation together with a set of linear classifiers is used to categorize food intake based on color and texture; this is then integrated with an activity recognition app. | Classification rate of 0.73–0.78 on Top-5 candidates on UEC-FOOD100; recognition rate over 84% for the 6 activities. | N | 1 | ||||

| Zhu et al., 2010 | [126] | 1 | Image analysis (CIELAB color space) and SVM for classification; 3D reconstruction for volume estimation. | Mean classification accuracy of 95.8% with 50% training data; volume estimation error of 3.4–7.0% for large items but 36.6–56.4% for small items. | N | 1 | ||||

| Singla et al., 2016 | [74] | 1 | GoogLeNet. | 99.2% on food/non-food classification; 83.6% on food categorization. | N | 1 | ||||

| Anthimopoulos et al., 2014 | [84] | 1 | Bag-of-features (BoF) model. | Classification accuracy of the order of 78%. | N | 1 | ||||

| Khan et al., 2019 | [127] | 1 | CNN. | Accuracy of 90.47%. | N | 1 | ||||

| Lu et al., 2018 | [128] | 1 | CNN for food segmentation, recognition, depth prediction and volume estimation. | Significant improvement on all results compared with another paper, ‘A Multimedia Database for Automatic Meal Assessment System’ (see tables in the paper). | N | 1 | ||||

| Almaghrabi et al., 2012 | [129] | 1 | Image Processing and SVM for recognition and basic mathematical equation for calculating calories. | 89% accuracy for food recognition using SVM. 4.22% error for calories estimation. | N | 1 | ||||

| Pouladzadeh et al., 2012 | [130] | 1 | SVM. | 92.6% on food categories recognition. | N | 1 | ||||

| Martinel et al., 2015 | [98] | 1 | 1 | Extreme Learning Machine + SVM to select features. | Score improvement of up to +28.98 (score: accuracy of the proposed method). | N | 1 | |||

| Martinel et al., 2016 | [99] | 1 | Extreme Learning Machines, each specialized on a single feature type (e.g., color); structured SVM for feature filtering. | Significantly higher accuracy on different datasets for food classification. | N | 1 | ||||

| Ruenin et al., 2020 | [90] | 1 | 1. Faster R-CNN with ResNet-50 as the pre-trained model; 2. CNN with InceptionResNetV2 as the pre-trained model. | mAP = 73.354 for the first part; MAPE = 16.9729 for the second part. | N | 1 | ||||

| Islam et al., 2018 | [131] | 1 | 1. Transfer learning and re-train the DCNNs on food images; 2. extract features from pre-trained DCNN to train classifiers. | Up to 99.4% on Food-5K. | N | 1 | ||||

| Pfisterer et al., 2022 | [132] | 1 | Deep convolutional encoder-decoder food network with depth-refinement (EDFN-D). | IOU: EDFN-D 0.879; Depth-refined graph cut 0.887. Intake errors well below typical 50% (mean percent intake error: −4.2%). | N | 1 | ||||

| Chen et al., 2017 | [133] | 1 | Bilinear CNN models. | 84.92–99.28% classification rate on UECFOOD-100 and UECFOOD-256 dataset. | N | 1 | ||||

| Tammachat et al., 2014 | [134] | 1 | SVM. | Overall accuracy 70% on food type recognition and 50% on calorie estimation. | N | 1 | ||||

| McAllister et al., 2018 | [80] | 1 | 1 | Pre-trained ResNet-152 and GoogLeNet CNNs for feature extraction + classification based on machine learning using ANN, SVM, Random Forest, fully connected NN, Naïve Bayes. | ResNet-152 deep features with an RBF-kernel SVM detect food items with 99.4% accuracy on the Food-5K food image dataset. | N | 1 | |||

| Liu et al., 2022 | [106] | 1 | EfficientDet deep learning (DL) model. | mAP = 0.92 considering 87 types of dishes. | N | 1 | ||||

| Liu et al., 2016 | [135] | 1 | CNN. | Top-5 Accuracy 94.8% on UEC-100 and 87.2% on UEC-256. | N | 1 | ||||

| (c) Part 3 | ||||||||||

| Li et al., 2022 | [96] | 1 | YOLOv5 for food recognition. | 89.7% for food recognition; 90.1% for average nutrition composition perception accuracy. | N | 1 | ||||

| Tahir et al., 2021 | [136] | 1 | MobileNetV3 with weights pre-trained on ImageNet. | 99.1 F1 score on food/non-food classification; 81.46–91.93% Top-3 food recognition F1-score. | N | 1 | ||||

| Martinel et al., 2018 | [137] | 1 | DNN, a new architecture using a slice convolution block to capture the specific vertical food traits; tested on UECFood100, UECFood256 and Food-101. | A top-1 accuracy of 90.27% on the Food-101 dataset. | N | 1 | ||||

| Christodoulidis et al., 2015 | [138] | 1 | 6-layer deep CNN. | Overall accuracy of 84.9%. | N | 1 | ||||

| Yang et al., 2010 | [139] | 1 | Pairwise features computed over 8 different ingredient types form the feature vector for a discriminative classifier; baseline algorithms: colour histogram + SVM and bag of SIFT features + SVM. | Nearly 80% with OM (a joint feature of orientation and midpoint). | N | 1 | ||||

| Miyano et al., 2012 | [140] | 1 | Bag-of-Features representation using local descriptors and color feature; Histogram intersection approach and SVM as classifier. | With both BOF and color feature, HI achieved 89.3% and SVM 98.7%. | N | 1 | ||||

| Zhao et al., 2021 | [100] | 1 | Graph Convolutional Network (GCN) to learn inter-class relations. | See the paper's tables for different feature extractors and different methods (one-shot, few-shot, fusion) on Food-101 and UECFood-256. | N | 1 | ||||

| Lu et al., 2020 | [91] | 1 | DNN (Inception-V3 for food recognition). | For food recognition: highest 78.2% Top-3 on Hyper2-MADiMa database; see tables for food segmentation and nutrition estimation. | N | 1 | ||||

| Aguilar et al., 2018 | [93] | 1 | FCN (Tiramisu model) for food segmentation, YOLOv2 for food detection. | 90% F-measure on UNIMIB2016. | N | 1 | ||||

| Bettadapura et al., 2015 | [141] | 1 | Geo-localizing images; image processing (Color Moment Invariants, Hue Histograms, C-SIFT, OpponentSIFT, RGB-SIFT, SIFT); weakly supervised learning to train an SMO-MKL multi-class SVM classification framework. | Average performance increased by 47.66% when the location prior was included (from 15.67% to 63.33%). | N | 1 | ||||

| Martinel et al., 2016 | [97] | 1 | CNN, Extreme Learning Machines (ELM) and Neural Trees. | 69.3%, a significant improvement over the 60.2% of PMTS (from the paper “Real-Time Photo Mining from the Twitter Stream: Event Photo Discovery and Food Photo Detection”). | N | 1 | ||||

| Zhu et al., 2011 | [142] | 1 | Salient Region Detection based on color and multiscale segmentation by SVM. | Average classification accuracy for 32 food classes is 44%. | N | 1 | ||||

| Yumang et al., 2021 | [95] | 1 | YOLO trained with PyImageSearch for classification, and a formula for distance calculation. | Device accuracy of 0.77 (77%) on classification and a small discrepancy of roughly 0.1–0.9 on distance estimation. | N | 1 | ||||

| Wang et al., 2015 | [143] | 1 | 1 | Bag-of-Words Histogram (BoW) + SIFT for vision; Bossanova Image Pooling Representation; deep CNN and very deep CNN. | The fusion of visual and textual information achieves a better average precision of 85.1%. | N | 1 | |||

| Teng et al., 2019 | [144] | 1 | A 5-layer deep CNN. | Top-1 accuracy of 97.12% and the top-5 accuracy of 99.86%. | N | 1 | ||||

| Matsuda et al., 2012 | [83] | 1 | 1 | Candidate region detection by DPM (deformable part model), a circle detector and the JSEG region segmentation; feature-fusion-based food recognition method using features including bag-of-features of SIFT and CSIFT with spatial pyramid (SP-BoF), histogram of oriented gradient (HoG), and Gabor texture features. | 55.8% classification rate for a multiple food dataset. | N | 1 | |||

| Poply et al., 2021 | [145] | 1 | CNN for object detection and semantic segmentation. | mAP of 89.3% for object detection; accuracy of 93.06% for calorie prediction. | N | 1 | ||||

| Nguyen et al., 2022 | [101] | 1 | Deep CNN namely ‘SibNet’. | MAE 0.13–0.15 for counting; PQ (panoptic quality) 81.68–89.83% for segmentation. | N | 1 | ||||

| Ege et al., 2017 | [146] | 1 | Multi-task CNN. | Average classification accuracy on the top-200 samples with the larger error was 71%, with the smaller error 86%; Table 3: The results on calorie and category estimation. | N | 1 | ||||

| Bolaños et al., 2016 | [87] | 1 | CNN + a Global Average Pooling (GAP) layer + Food Activation Maps (FAM) (food heat map) on food detection (food/non-food), namely GoogleNet-GAP. | Validation accuracy up to 95.64% on food/non-food; up to 91.5% on food recognition; on simultaneous test on localization and recognition see Table 2 of mean accuracy. | N | 1 | ||||

| Ciocca et al., 2020 | [88] | 1 | CNN (GoogLeNet, Inception-v3, MobileNet-v2, and ResNet50) + SVM; combined deep-based and hand-crafted features. | Details are in the tables of the paper; Inc-V3 + LBP-RI + SVM (RBF) achieved the best performance. | N | 1 | ||||

| Park et al., 2019 | [85] | 1 | DCNN (namely K-foodNet), compared with AlexNet, GoogLeNet, Very Deep Convolutional Neural Network, VGG and ResNet. | Test accuracy 91.3% and recognition time 0.4 ms. | N | 1 | ||||
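The Assessment column of the table above mixes several evaluation metrics, including top-k accuracy, mean absolute percentage error (MAPE), and intersection over union (IoU), so the reported figures are not directly comparable across rows. As a reading aid, the following is a minimal Python sketch of how these three metrics are commonly defined. It is not taken from any of the reviewed papers; the function names are hypothetical and the toy inputs are invented purely for illustration.

```python
from typing import Sequence, Set, Tuple


def top_k_accuracy(scores: Sequence[Sequence[float]],
                   labels: Sequence[int], k: int = 1) -> float:
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        # Rank class indices from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


def mape(true_values: Sequence[float], estimates: Sequence[float]) -> float:
    """Mean absolute percentage error, expressed in percent."""
    errors = [abs(e - t) / abs(t) for t, e in zip(true_values, estimates)]
    return 100.0 * sum(errors) / len(errors)


def iou(mask_a: Set[Tuple[int, int]], mask_b: Set[Tuple[int, int]]) -> float:
    """Intersection over union of two binary masks given as sets of pixel coordinates."""
    union = mask_a | mask_b
    return len(mask_a & mask_b) / len(union) if union else 1.0


# Toy data (illustrative only, not results from any reviewed study).
toy_scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
toy_labels = [1, 1, 2]
top1 = top_k_accuracy(toy_scores, toy_labels, k=1)
top2 = top_k_accuracy(toy_scores, toy_labels, k=2)
volume_mape = mape([100.0, 200.0], [110.0, 180.0])
mask_iou = iou({(0, 0), (0, 1), (1, 0)}, {(0, 1), (1, 0), (1, 1)})
```

With these definitions, a top-5 figure is always at least as high as the corresponding top-1 figure on the same data, which is one reason the two should not be compared directly across studies.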

5.3. Intake Action Recognition

5.4. Intake Amount Estimation

6. Discussion

7. Conclusions on Research Gaps

Author Contributions

Funding

Conflicts of Interest

References

- Vu, T.; Lin, F.; Alshurafa, N.; Xu, W. Wearable Food Intake Monitoring Technologies: A Comprehensive Review. Computers 2017, 6, 4.

- Jayatilaka, A.; Ranasinghe, D.C. Towards unobtrusive real-time fluid intake monitoring using passive UHF RFID. In Proceedings of the 2016 IEEE International Conference on RFID (RFID), Orlando, FL, USA, 3–5 May 2016; IEEE: Orlando, FL, USA, 2016; pp. 1–4.

- Burgess, R.A.; Hartley, T.; Mehdi, Q.; Mehdi, R. Monitoring of patient fluid intake using the Xbox Kinect. In Proceedings of the CGAMES’2013 USA, Louisville, KY, USA, 30 July–1 August 2013; pp. 60–64.

- Cao, E.; Watt, M.J.; Nowell, C.J.; Quach, T.; Simpson, J.S.; De Melo Ferreira, V.; Agarwal, S.; Chu, H.; Srivastava, A.; Anderson, D.; et al. Mesenteric Lymphatic Dysfunction Promotes Insulin Resistance and Represents a Potential Treatment Target in Obesity. Nat. Metab. 2021, 3, 1175–1188.

- Lauby-Secretan, B.; Scoccianti, C.; Loomis, D.; Grosse, Y.; Bianchini, F.; Straif, K. Body Fatness and Cancer—Viewpoint of the IARC Working Group. N. Engl. J. Med. 2016, 375, 794–798.

- Doulah, A.; Mccrory, M.A.; Higgins, J.A.; Sazonov, E. A Systematic Review of Technology-Driven Methodologies for Estimation of Energy Intake. IEEE Access 2019, 7, 49653–49668.

- Schoeller, D.A.; Thomas, D. Energy balance and body composition. In Nutrition for the Primary Care Provider; Karger Publishers: Basel, Switzerland, 2014.

- Wang, W.; Min, W.; Li, T.; Dong, X.; Li, H.; Jiang, S. A Review on Vision-Based Analysis for Automatic Dietary Assessment. Trends Food Sci. Technol. 2022, 122, 223–237.

- Lacey, J.; Corbett, J.; Forni, L.; Hooper, L.; Hughes, F.; Minto, G.; Moss, C.; Price, S.; Whyte, G.; Woodcock, T.; et al. A Multidisciplinary Consensus on Dehydration: Definitions, Diagnostic Methods and Clinical Implications. Ann. Med. 2019, 51, 232–251.

- Volkert, D.; Beck, A.M.; Cederholm, T.; Cruz-Jentoft, A.; Goisser, S.; Hooper, L.; Kiesswetter, E.; Maggio, M.; Raynaud-Simon, A.; Sieber, C.C.; et al. ESPEN Guideline on Clinical Nutrition and Hydration in Geriatrics. Clin. Nutr. 2019, 38, 10–47.

- Armstrong, L. Challenges of Linking Chronic Dehydration and Fluid Consumption to Health Outcomes. Nutr. Rev. 2012, 70, S121–S127.

- Manz, F.; Wentz, A. 24-h Hydration Status: Parameters, Epidemiology and Recommendations. Eur. J. Clin. Nutr. 2003, 57, S10–S18.

- Hooper, L.; Bunn, D.; Jimoh, F.O.; Fairweather-Tait, S.J. Water-Loss Dehydration and Aging. Mech. Ageing Dev. 2014, 136–137, 50–58.

- El-Sharkawy, A.M.; Sahota, O.; Maughan, R.J.; Lobo, D.N. The Pathophysiology of Fluid and Electrolyte Balance in the Older Adult Surgical Patient. Clin. Nutr. 2014, 33, 6–13.

- El-Sharkawy, A.M.; Watson, P.; Neal, K.R.; Ljungqvist, O.; Maughan, R.J.; Sahota, O.; Lobo, D.N. Hydration and Outcome in Older Patients Admitted to Hospital (The HOOP Prospective Cohort Study). Age Ageing 2015, 44, 943–947.

- Kim, S. Preventable Hospitalizations of Dehydration: Implications of Inadequate Primary Health Care in the United States. Ann. Epidemiol. 2007, 17, 736.

- Warren, J.L.; Bacon, W.E.; Harris, T.; McBean, A.M.; Foley, D.J.; Phillips, C. The Burden and Outcomes Associated with Dehydration among US Elderly, 1991. Am. J. Public Health 1994, 84, 1265–1269.

- Jimoh, F.O.; Bunn, D.; Hooper, L. Assessment of a Self-Reported Drinks Diary for the Estimation of Drinks Intake by Care Home Residents: Fluid Intake Study in the Elderly (FISE). J. Nutr. Health Aging 2015, 19, 491–496.

- Edmonds, C.J.; Harte, N.; Gardner, M. How Does Drinking Water Affect Attention and Memory? The Effect of Mouth Rinsing and Mouth Drying on Children’s Performance. Physiol. Behav. 2018, 194, 233–238.

- Cohen, R.; Fernie, G.; Roshan Fekr, A. Fluid Intake Monitoring Systems for the Elderly: A Review of the Literature. Nutrients 2021, 13, 2092.

- Dalakleidi, K.V.; Papadelli, M.; Kapolos, I.; Papadimitriou, K. Applying Image-Based Food-Recognition Systems on Dietary Assessment: A Systematic Review. Adv. Nutr. 2022, 13, 2590–2619.

- Neves, P.A.; Simões, J.; Costa, R.; Pimenta, L.; Gonçalves, N.J.; Albuquerque, C.; Cunha, C.; Zdravevski, E.; Lameski, P.; Garcia, N.M.; et al. Thought on Food: A Systematic Review of Current Approaches and Challenges for Food Intake Detection. Sensors 2022, 22, 6443.

- Doulah, A.; Ghosh, T.; Hossain, D.; Imtiaz, M.H.; Sazonov, E. “Automatic Ingestion Monitor Version 2”—A Novel Wearable Device for Automatic Food Intake Detection and Passive Capture of Food Images. IEEE J. Biomed. Health Inf. 2021, 25, 568–576.

- Rouast, P.; Adam, M.; Burrows, T.; Chiong, R.; Rollo, M. Using deep learning and 360 video to detect eating behavior for user assistance systems. In Proceedings of the Twenty-Sixth European Conference on Information Systems (ECIS2018), Portsmouth, UK, 23–28 June 2018.

- Block, G. A Review of Validations of Dietary Assessment Methods. Am. J. Epidemiol. 1982, 115, 492–505.

- Fluid Balance Charts: Do They Measure Up? Available online: https://www.magonlinelibrary.com/doi/epdf/10.12968/bjon.1994.3.16.816 (accessed on 5 April 2022).

- Zhu, F.; Bosch, M.; Boushey, C.J.; Delp, E.J. An image analysis system for dietary assessment and evaluation. In Proceedings of the 2010 IEEE International Conference on Image Processing, Hong Kong, China, 26–29 September 2010; pp. 1853–1856.

- Kong, F.; Tan, J. DietCam: Automatic Dietary Assessment with Mobile Camera Phones. Pervasive Mob. Comput. 2012, 8, 147–163.

- Seiderer, A.; Flutura, S.; André, E. Development of a mobile multi-device nutrition logger. In Proceedings of the 2nd ACM SIGCHI International Workshop on Multisensory Approaches to Human-Food Interaction, Glasgow, UK, 13 November 2017; ACM: New York, NY, USA, 2017; pp. 5–12.

- Jia, W.; Chen, H.-C.; Yue, Y.; Li, Z.; Fernstrom, J.; Bai, Y.; Li, C.; Sun, M. Accuracy of Food Portion Size Estimation from Digital Pictures Acquired by a Chest-Worn Camera. Public Health Nutr. 2014, 17, 1671–1681.

- Sun, M.; Burke, L.E.; Mao, Z.-H.; Chen, Y.; Chen, H.-C.; Bai, Y.; Li, Y.; Li, C.; Jia, W. eButton: A wearable computer for health monitoring and personal assistance. In Proceedings of the DAC ‘14: The 51st Annual Design Automation Conference 2014, San Francisco, CA, USA, 1–5 June 2014; pp. 1–6.

- Krebs, P.; Duncan, D.T. Health App Use Among US Mobile Phone Owners: A National Survey. JMIR mHealth uHealth 2015, 3, e101.

- Sen, S.; Subbaraju, V.; Misra, A.; Balan, R.; Lee, Y. Annapurna: Building a real-world smartwatch-based automated food journal. In Proceedings of the 2018 IEEE 19th International Symposium on “A World of Wireless, Mobile and Multimedia Networks” (WoWMoM), Chania, Greece, 12–15 June 2018; pp. 1–6.

- Sen, S.; Subbaraju, V.; Misra, A.; Balan, R.; Lee, Y. Annapurna: An Automated Smartwatch-Based Eating Detection and Food Journaling System. Pervasive Mob. Comput. 2020, 68, 101259.

- Thomaz, E.; Parnami, A.; Essa, I.; Abowd, G.D. Feasibility of identifying eating moments from first-person images leveraging human computation. In Proceedings of the SenseCam ‘13: 4th International SenseCam & Pervasive Imaging Conference, San Diego, CA, USA, 18–19 November 2013; ACM Press: New York, NY, USA, 2013; pp. 26–33.

- Bedri, A.; Li, D.; Khurana, R.; Bhuwalka, K.; Goel, M. FitByte: Automatic diet monitoring in unconstrained situations using multimodal sensing on eyeglasses. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; ACM: New York, NY, USA, 2020; pp. 1–12.

- Cunha, A.; Pádua, L.; Costa, L.; Trigueiros, P. Evaluation of MS Kinect for Elderly Meal Intake Monitoring. Procedia Technol. 2014, 16, 1383–1390.

- Obaid, A.K.; Abdel-Qader, I.; Mickus, M. Automatic food-intake monitoring system for persons living with Alzheimer’s-vision-based embedded system. In Proceedings of the 2018 9th IEEE Annual Ubiquitous Computing, Electronics Mobile Communication Conference (UEMCON), New York, NY, USA, 8–10 November 2018; pp. 580–584.

- Hossain, D.; Ghosh, T.; Sazonov, E. Automatic Count of Bites and Chews from Videos of Eating Episodes. IEEE Access 2020, 8, 101934–101945.

- Hafiz, R.; Islam, S.; Khanom, R.; Uddin, M.S. Image based drinks identification for dietary assessment. In Proceedings of the 2016 International Workshop on Computational Intelligence (IWCI), Dhaka, Bangladesh, 12–13 December 2016; pp. 192–197.

- Automatic Meal Intake Monitoring Using Hidden Markov Models | Elsevier Enhanced Reader. Available online: https://reader.elsevier.com/reader/sd/pii/S1877050916322980?token=11F68A53BC12E070DB5891E8BEA3ACDCC32BEB1B24D82F1AAEFBBA65AA6016F2E9009A4B70146365DDA53B667BB161D7&originRegion=eu-west-1&originCreation=20220216180556 (accessed on 16 February 2022).

- Al-Anssari, H.; Abdel-Qader, I. Vision based monitoring system for Alzheimer’s patients using controlled bounding boxes tracking. In Proceedings of the 2016 IEEE International Conference on Electro Information Technology (EIT), Grand Forks, ND, USA, 19–21 May 2016; pp. 821–825.

- Davies, A.; Chan, V.; Bauman, A.; Signal, L.; Hosking, C.; Gemming, L.; Allman-Farinelli, M. Using Wearable Cameras to Monitor Eating and Drinking Behaviours during Transport Journeys. Eur. J. Nutr. 2021, 60, 1875–1885.

- Jia, W.; Li, Y.; Qu, R.; Baranowski, T.; Burke, L.E.; Zhang, H.; Bai, Y.; Mancino, J.M.; Xu, G.; Mao, Z.-H.; et al. Automatic Food Detection in Egocentric Images Using Artificial Intelligence Technology. Public Health Nutr. 2019, 22, 1168–1179.

- Gemming, L.; Doherty, A.; Utter, J.; Shields, E.; Ni Mhurchu, C. The Use of a Wearable Camera to Capture and Categorise the Environmental and Social Context of Self-Identified Eating Episodes. Appetite 2015, 92, 118–125.

- Du, B.; Lu, C.X.; Kan, X.; Wu, K.; Luo, M.; Hou, J.; Li, K.; Kanhere, S.; Shen, Y.; Wen, H. HydraDoctor: Real-Time liquids intake monitoring by collaborative sensing. In Proceedings of the 20th International Conference on Distributed Computing and Networking, Bangalore, India, 4–7 January 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 213–217.

- Hossain, D.; Imtiaz, M.H.; Ghosh, T.; Bhaskar, V.; Sazonov, E. Real-Time food intake monitoring using wearable egocnetric camera. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 4191–4195.

- Iosifidis, A.; Marami, E.; Tefas, A.; Pitas, I. Eating and drinking activity recognition based on discriminant analysis of fuzzy distances and activity volumes. In Proceedings of the 2012 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Kyoto, Japan, 25–30 March 2012; pp. 2201–2204.

- Das, S.; Dai, R.; Koperski, M.; Minciullo, L.; Garattoni, L.; Bremond, F.; Francesca, G. Toyota smarthome: Real-World activities of daily living. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

- Raju, V.; Sazonov, E. Processing of egocentric camera images from a wearable food intake sensor. In Proceedings of the 2019 SoutheastCon, Huntsville, AL, USA, 11–14 April 2019; pp. 1–6.

- Kassim, M.F.; Mohd, M.N.H.; Tomari, M.R.M.; Suriani, N.S.; Zakaria, W.N.W.; Sari, S. A Non-Invasive and Non-Wearable Food Intake Monitoring System Based on Depth Sensor. Bull. Electr. Eng. Inform. 2020, 9, 2342–2349.

- bin Kassim, M.F.; Mohd, M.N.H. Food Intake Gesture Monitoring System Based-on Depth Sensor. Bull. Electr. Eng. Inform. 2019, 8, 470–476.

- Cippitelli, E.; Gambi, E.; Spinsante, S.; Gasparrini, S.; Florez-Revuelta, F. Performance analysis of self-organising neural networks tracking algorithms for intake monitoring using Kinect. In Proceedings of the IET International Conference on Technologies for Active and Assisted Living (TechAAL), London, UK, 5 November 2015; Institution of Engineering and Technology: London, UK, 2015; p. 6.

- Tham, J.S.; Chang, Y.C.; Ahmad Fauzi, M.F. Automatic identification of drinking activities at home using depth data from RGB-D camera. In Proceedings of the 2014 International Conference on Control, Automation and Information Sciences (ICCAIS 2014), Gwangju, Republic of Korea, 2–5 December 2014; pp. 153–158.

- Schiboni, G.; Wasner, F.; Amft, O. A privacy-preserving wearable camera setup for dietary event spotting in free-living. In Proceedings of the 2018 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Athens, Greece, 19–23 March 2018; pp. 872–877.

- Hongu, N.; Pope, B.T.; Bilgiç, P.; Orr, B.J.; Suzuki, A.; Kim, A.S.; Merchant, N.C.; Roe, D.J. Usability of a Smartphone Food Picture App for Assisting 24-Hour Dietary Recall: A Pilot Study. Nutr. Res. Pract. 2015, 9, 207–212.

- Ahmad, Z.; Khanna, N.; Kerr, D.A.; Boushey, C.J.; Delp, E.J. A mobile phone user interface for image-based dietary assessment. In Mobile Devices and Multimedia: Enabling Technologies, Algorithms, and Applications 2014; SPIE—The International Society for Optical Engineering: Bellingham, WA, USA, 2014; Volume 9030, p. 903007.

- Vinay Chandrasekhar, K.; Imtiaz, M.H.; Sazonov, E. Motion-Adaptive image capture in a body-worn wearable sensor. In Proceedings of the 2018 IEEE SENSORS, New Delhi, India, 28–31 October 2018; pp. 1–4.

- Gemming, L.; Rush, E.; Maddison, R.; Doherty, A.; Gant, N.; Utter, J.; Mhurchu, C.N. Wearable Cameras Can Reduce Dietary Under-Reporting: Doubly Labelled Water Validation of a Camera-Assisted 24 h Recall. Br. J. Nutr. 2015, 113, 284–291.

- Sen, S.; Subbaraju, V.; Misra, A.; Balan, R.K.; Lee, Y. Experiences in building a real-world eating recogniser. In Proceedings of the 4th International on Workshop on Physical Analytics—WPA ‘17, Niagara Falls, NY, USA, 19 June 2017; ACM Press: New York, NY, USA, 2017; pp. 7–12.

- O’Loughlin, G.; Cullen, S.J.; McGoldrick, A.; O’Connor, S.; Blain, R.; O’Malley, S.; Warrington, G.D. Using a Wearable Camera to Increase the Accuracy of Dietary Analysis. Am. J. Prev. Med. 2013, 44, 297–301.

- Cippitelli, E.; Gasparrini, S.; Gambi, E.; Spinsante, S. Unobtrusive intake actions monitoring through RGB and depth information fusion. In Proceedings of the 2016 IEEE 12th International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 8–10 September 2016; pp. 19–26.

- Blechert, J.; Liedlgruber, M.; Lender, A.; Reichenberger, J.; Wilhelm, F.H. Unobtrusive Electromyography-Based Eating Detection in Daily Life: A New Tool to Address Underreporting? Appetite 2017, 118, 168–173.

- Maekawa, T. A sensor device for automatic food lifelogging that is embedded in home ceiling light: A preliminary investigation. In Proceedings of the 2013 7th International Conference on Pervasive Computing Technologies for Healthcare and Workshops, Venice, Italy, 5–8 May 2013. [Google Scholar]

- Lo, F.P.-W.; Sun, Y.; Qiu, J.; Lo, B. A Novel Vision-Based Approach for Dietary Assessment Using Deep Learning View Synthesis. In Proceedings of the 2019 IEEE 16th International Conference on Wearable and Implantable Body Sensor Networks (BSN), Chicago, IL, USA, 19–22 May 2019; pp. 1–4. [Google Scholar]

- Farooq, M.; Doulah, A.; Parton, J.; McCrory, M.A.; Higgins, J.A.; Sazonov, E. Validation of Sensor-Based Food Intake Detection by Multicamera Video Observation in an Unconstrained Environment. Nutrients 2019, 11, 609. [Google Scholar] [CrossRef]

- qm13 Azure Kinect Body Tracking Joints. Available online: https://docs.microsoft.com/en-us/azure/kinect-dk/body-joints (accessed on 23 June 2022).

- Iizuka, K.; Morimoto, M. A nutrient content estimation system of buffet menu using RGB-D sensor. In Proceedings of the 2018 Joint 7th International Conference on Informatics, Electronics & Vision (ICIEV) and 2018 2nd International Conference on Imaging, Vision & Pattern Recognition (icIVPR), Kitakyushu, Japan, 25–29 June 2018; pp. 165–168. [Google Scholar]

- Zhang, Z.; Mao, S.; Chen, K.; Xiao, L.; Liao, B.; Li, C.; Zhang, P. CNN and PCA based visual system of a wheelchair manipulator robot for automatic drinking. In Proceedings of the 2018 IEEE International Conference on Robotics and Biomimetics (ROBIO), Kuala Lumpur, Malaysia, 12–15 December 2018; pp. 1280–1286. [Google Scholar]

- Chang, M.-J.; Hsieh, J.-T.; Fang, C.-Y.; Chen, S.-W. A Vision-Based Human Action Recognition System for Moving Cameras Through Deep Learning. In Proceedings of the 2019 2nd International Conference on Signal Processing and Machine Learning, Hangzhou, China, 27–29 November 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 85–91. [Google Scholar]

- Gambi, E.; Ricciuti, M.; De Santis, A. Food Intake Actions Detection: An Improved Algorithm Toward Real-Time Analysis. J. Imaging 2020, 6, 12. [Google Scholar] [CrossRef]

- Raju, V.B.; Sazonov, E. FOODCAM: A Novel Structured Light-Stereo Imaging System for Food Portion Size Estimation. Sensors 2022, 22, 3300. [Google Scholar] [CrossRef]

- Myers, A.; Johnston, N.; Rathod, V.; Korattikara, A.; Gorban, A.; Silberman, N.; Guadarrama, S.; Papandreou, G.; Huang, J.; Murphy, K. Im2Calories: Towards an automated mobile vision food diary. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; IEEE: New York, NY, USA, 2015; pp. 1233–1241. [Google Scholar]

- Singla, A.; Yuan, L.; Ebrahimi, T. Food/Non-Food image classification and food categorization using pre-trained GoogLeNet model. In Proceedings of the 2nd International Workshop on Multimedia Assisted Dietary Management, Amsterdam, The Netherlands, 16 October 2016; ACM: New York, NY, USA, 2016; pp. 3–11. [Google Scholar]

- Rachakonda, L.; Mohanty, S.P.; Kougianos, E. iLog: An Intelligent Device for Automatic Food Intake Monitoring and Stress Detection in the IoMT. IEEE Trans. Consum. Electron. 2020, 66, 115–124. [Google Scholar] [CrossRef]

- Beltran, A.; Dadabhoy, H.; Chen, T.A.; Lin, C.; Jia, W.; Baranowski, J.; Yan, G.; Sun, M.; Baranowski, T. Adapting the eButton to the Abilities of Children for Diet Assessment. Proc. Meas. Behav. 2016, 2016, 72–81. [Google Scholar]

- Ramesh, A.; Raju, V.B.; Rao, M.; Sazonov, E. Food detection and segmentation from egocentric camera images. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Guadalajara, Mexico, 1–5 November 2021; pp. 2736–2740. [Google Scholar]

- Mezgec, S.; Koroušić Seljak, B. NutriNet: A Deep Learning Food and Drink Image Recognition System for Dietary Assessment. Nutrients 2017, 9, 657. [Google Scholar] [CrossRef] [PubMed]

- Lee, J.; Banerjee, A.; Gupta, S.K.S. MT-Diet: Automated smartphone based diet assessment with infrared images. In Proceedings of the 2016 IEEE International Conference on Pervasive Computing and Communications (PerCom), Sydney, NSW, Australia, 14–19 March 2016; pp. 1–6. [Google Scholar]

- McAllister, P.; Zheng, H.; Bond, R.; Moorhead, A. Combining Deep Residual Neural Network Features with Supervised Machine Learning Algorithms to Classify Diverse Food Image Datasets. Comput. Biol. Med. 2018, 95, 217–233. [Google Scholar] [CrossRef] [PubMed]

- Jiang, H.; Starkman, J.; Liu, M.; Huang, M.-C. Food Nutrition Visualization on Google Glass: Design Tradeoff and Field Evaluation. IEEE Consum. Electron. Mag. 2018, 7, 21–31. [Google Scholar] [CrossRef]

- Matei, A.; Glavan, A.; Radeva, P.; Talavera, E. Towards Eating Habits Discovery in Egocentric Photo-Streams. IEEE Access 2021, 9, 17495–17506. [Google Scholar] [CrossRef]

- Matsuda, Y.; Hoashi, H.; Yanai, K. Recognition of multiple-food images by detecting candidate regions. In Proceedings of the 2012 IEEE International Conference on Multimedia and Expo, Melbourne, VIC, Australia, 9–13 July 2012; pp. 25–30. [Google Scholar]

- Anthimopoulos, M.M.; Gianola, L.; Scarnato, L.; Diem, P.; Mougiakakou, S.G. A Food Recognition System for Diabetic Patients Based on an Optimized Bag-of-Features Model. IEEE J. Biomed. Health Inform. 2014, 18, 1261–1271. [Google Scholar] [CrossRef]

- Park, S.-J.; Palvanov, A.; Lee, C.-H.; Jeong, N.; Cho, Y.-I.; Lee, H.-J. The Development of Food Image Detection and Recognition Model of Korean Food for Mobile Dietary Management. Nutr. Res. Pract. 2019, 13, 521–528. [Google Scholar] [CrossRef]

- Mezgec, S.; Koroušić Seljak, B. Deep Neural Networks for Image-Based Dietary Assessment. J. Vis. Exp. 2021, 169, e61906. [Google Scholar] [CrossRef]

- Bolaños, M.; Radeva, P. Simultaneous food localization and recognition. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 3140–3145. [Google Scholar]

- Ciocca, G.; Micali, G.; Napoletano, P. State Recognition of Food Images Using Deep Features. IEEE Access 2020, 8, 32003–32017. [Google Scholar] [CrossRef]

- Fuchs, K.; Haldimann, M.; Grundmann, T.; Fleisch, E. Supporting Food Choices in the Internet of People: Automatic Detection of Diet-Related Activities and Display of Real-Time Interventions via Mixed Reality Headsets. Futur. Gener. Comp. Syst. 2020, 113, 343–362. [Google Scholar] [CrossRef]

- Ruenin, P.; Bootkrajang, J.; Chawachat, J. A system to estimate the amount and calories of food that elderly people in the hospital consume. In Proceedings of the 11th International Conference on Advances in Information Technology, Bangkok, Thailand, 1–3 July 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–7. [Google Scholar]

- Lu, Y.; Stathopoulou, T.; Vasiloglou, M.F.; Pinault, L.F.; Kiley, C.; Spanakis, E.K.; Mougiakakou, S. goFOOD™: An Artificial Intelligence System for Dietary Assessment. Sensors 2020, 20, 4283. [Google Scholar] [CrossRef] [PubMed]

- Mezgec, S.; Seljak, B.K. Using deep learning for food and beverage image recognition. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; pp. 5149–5151. [Google Scholar]

- Aguilar, E.; Remeseiro, B.; Bolaños, M.; Radeva, P. Grab, Pay, and Eat: Semantic Food Detection for Smart Restaurants. IEEE Trans. Multimed. 2018, 20, 3266–3275. [Google Scholar] [CrossRef]

- Gao, J.; Tan, W.; Ma, L.; Wang, Y.; Tang, W. MUSEFood: Multi-Sensor-Based food volume estimation on smartphones. In Proceedings of the 2019 IEEE SmartWorld, Ubiquitous Intelligence & Computing, Advanced & Trusted Computing, Scalable Computing & Communications, Cloud & Big Data Computing, Internet of People and Smart City Innovation (SmartWorld/SCALCOM/UIC/ATC/CBDCom/IOP/SCI), Leicester, UK, 19–23 August 2019; pp. 899–906. [Google Scholar]

- Yumang, A.N.; Banguilan, D.E.S.; Veneracion, C.K.S. Raspberry PI based food recognition for visually impaired using YOLO algorithm. In Proceedings of the 2021 5th International Conference on Communication and Information Systems (ICCIS), Chongqing, China, 15–17 October 2021; pp. 165–169. [Google Scholar]

- Li, H.; Yang, G. Dietary Nutritional Information Autonomous Perception Method Based on Machine Vision in Smart Homes. Entropy 2022, 24, 868. [Google Scholar] [CrossRef] [PubMed]

- Martinel, N.; Piciarelli, C.; Foresti, G.L.; Micheloni, C. Mobile food recognition with an extreme deep tree. In Proceedings of the ICDSC 2016: 10th International Conference on Distributed Smart Camera, Paris, France, 12–15 September 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 56–61. [Google Scholar]

- Martinel, N.; Piciarelli, C.; Micheloni, C.; Foresti, G.L. A structured committee for food recognition. In Proceedings of the 2015 IEEE International Conference on Computer Vision Workshop (ICCVW), Santiago, Chile, 7–13 December 2015; pp. 484–492. [Google Scholar]

- Martinel, N.; Piciarelli, C.; Micheloni, C. A Supervised Extreme Learning Committee for Food Recognition. Comput. Vis. Image Underst. 2016, 148, 67–86. [Google Scholar] [CrossRef]

- Zhao, H.; Yap, K.-H.; Chichung Kot, A. Fusion learning using semantics and graph convolutional network for visual food recognition. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2021; pp. 1710–1719. [Google Scholar]

- Nguyen, H.-T.; Ngo, C.-W.; Chan, W.-K. SibNet: Food Instance Counting and Segmentation. Pattern Recognit. 2022, 124, 108470. [Google Scholar] [CrossRef]

- Martinez, E.T.; Leyva-Vallina, M.; Sarker, M.M.K.; Puig, D.; Petkov, N.; Radeva, P. Hierarchical Approach to Classify Food Scenes in Egocentric Photo-Streams. IEEE J. Biomed. Health Inform. 2020, 24, 866–877. [Google Scholar] [CrossRef]

- Sarapisto, T.; Koivunen, L.; Mäkilä, T.; Klami, A.; Ojansivu, P. Camera-Based meal type and weight estimation in self-service lunch line restaurants. In Proceedings of the 2022 12th International Conference on Pattern Recognition Systems (ICPRS), Saint-Etienne, France, 7–10 June 2022; pp. 1–7. [Google Scholar]

- Qiu, J.; Lo, F.P.-W.; Gu, X.; Jobarteh, M.L.; Jia, W.; Baranowski, T.; Steiner-Asiedu, M.; Anderson, A.K.; McCrory, M.A.; Sazonov, E.; et al. Egocentric image captioning for privacy-preserved passive dietary intake monitoring. In IEEE Transactions on Cybernetics; IEEE: New York, NY, USA, 2021. [Google Scholar]

- Tomescu, V.-I. FoRConvD: An approach for food recognition on mobile devices using convolutional neural networks and depth maps. In Proceedings of the 2020 IEEE 14th International Symposium on Applied Computational Intelligence and Informatics (SACI), Timisoara, Romania, 21–23 May 2020; pp. 000129–000134. [Google Scholar]

- Liu, Y.-C.; Onthoni, D.D.; Mohapatra, S.; Irianti, D.; Sahoo, P.K. Deep-Learning-Assisted Multi-Dish Food Recognition Application for Dietary Intake Reporting. Electronics 2022, 11, 1626. [Google Scholar] [CrossRef]

- Chae, J.; Woo, I.; Kim, S.; Maciejewski, R.; Zhu, F.; Delp, E.J.; Boushey, C.J.; Ebert, D.S. Volume estimation using food specific shape templates in mobile image-based dietary assessment. In Computational Imaging IX; Bouman, C.A., Pollak, I., Wolfe, P.J., Eds.; SPIE—The International Society for Optical Engineering: Bellingham, WA, USA, 2011; Volume 7873, p. 78730K. [Google Scholar]

- Bellandi, P.; Sansoni, G.; Vertuan, A. Development and Characterization of a Multi-Camera 2D-Vision System for Enhanced Performance of a Drink Serving Robotic Cell. Robot. Comput.-Integr. Manuf. 2012, 28, 35–49. [Google Scholar] [CrossRef]

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651. [Google Scholar]

- Qiu, J.; Lo, F.P.-W.; Jiang, S.; Tsai, Y.-Y.; Sun, Y.; Lo, B. Counting Bites and Recognizing Consumed Food from Videos for Passive Dietary Monitoring. IEEE J. Biomed. Health Inform. 2021, 25, 1471–1482. [Google Scholar] [CrossRef]

- Sadeq, N.; Rahat, F.R.; Rahman, A.; Ahamed, S.I.; Hasan, M.K. Smartphone-Based calorie estimation from food image using distance information. In Proceedings of the 2018 5th International Conference on Networking, Systems and Security (NSysS), Dhaka, Bangladesh, 18–20 December 2018; pp. 1–8. [Google Scholar]

- Park, H.; Bharadhwaj, H.; Lim, B.Y. Hierarchical multi-task learning for healthy drink classification. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–8. [Google Scholar]

- Liu, Z.; Xiang, C.-Q.; Chen, T. Automated Binocular Vision Measurement of Food Dimensions and Volume for Dietary Evaluation. Comput. Sci. Eng. 2018, 1, 1. [Google Scholar] [CrossRef]

- Zhou, P.; Bai, C.; Xia, J.; Chen, S. CMRDF: A Real-Time Food Alerting System Based on Multimodal Data. IEEE Internet Things J. 2022, 9, 6335–6349. [Google Scholar] [CrossRef]

- Rachakonda, L.; Kothari, A.; Mohanty, S.P.; Kougianos, E.; Ganapathiraju, M. Stress-Log: An IoT-Based smart system to monitor stress-eating. In Proceedings of the 2019 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 11–13 January 2019; pp. 1–6. [Google Scholar]

- Konstantakopoulos, F.; Georga, E.I.; Fotiadis, D.I. 3D reconstruction and volume estimation of food using stereo vision techniques. In Proceedings of the 2021 IEEE 21st International Conference on Bioinformatics and Bioengineering (BIBE), Kragujevac, Serbia, 25–27 October 2021; pp. 1–4. [Google Scholar]

- Rahman, M.H.; Pickering, M.R.; Kerr, D.; Boushey, C.J.; Delp, E.J. A new texture feature for improved food recognition accuracy in a mobile phone based dietary assessment system. In Proceedings of the 2012 IEEE International Conference on Multimedia and Expo Workshops, Melbourne, VIC, Australia, 9–13 July 2012; pp. 418–423. [Google Scholar]

- Qiu, J.; Lo, F.P.-W.; Lo, B. Assessing individual dietary intake in food sharing scenarios with a 360 camera and deep learning. In Proceedings of the 2019 IEEE 16th International Conference on Wearable and Implantable Body Sensor Networks (BSN), Chicago, IL, USA, 19–22 May 2019; pp. 1–4. [Google Scholar]

- Lei, J.; Qiu, J.; Lo, F.P.-W.; Lo, B. Assessing individual dietary intake in food sharing scenarios with food and human pose detection. In Pattern Recognition. ICPR International Workshops and Challenges; Del Bimbo, A., Cucchiara, R., Sclaroff, S., Farinella, G.M., Mei, T., Bertini, M., Escalante, H.J., Vezzani, R., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 549–557. [Google Scholar]

- Rhyner, D.; Loher, H.; Dehais, J.; Anthimopoulos, M.; Shevchik, S.; Botwey, R.H.; Duke, D.; Stettler, C.; Diem, P.; Mougiakakou, S. Carbohydrate Estimation by a Mobile Phone-Based System Versus Self-Estimations of Individuals With Type 1 Diabetes Mellitus: A Comparative Study. J. Med. Internet Res. 2016, 18, e101. [Google Scholar] [CrossRef] [PubMed]

- Esfahani, S.N.; Muthukumar, V.; Regentova, E.E.; Taghva, K.; Trabia, M. Complex food recognition using hyper-spectral imagery. In Proceedings of the 2020 10th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 6–8 January 2020; pp. 0662–0667. [Google Scholar]

- Kong, F.; Tan, J. DietCam: Regular shape food recognition with a camera phone. In Proceedings of the 2011 International Conference on Body Sensor Networks, Dallas, TX, USA, 23–25 May 2011; pp. 127–132. [Google Scholar]

- Pouladzadeh, P.; Shirmohammadi, S.; Arici, T. Intelligent SVM based food intake measurement system. In Proceedings of the 2013 IEEE International Conference on Computational Intelligence and Virtual Environments for Measurement Systems and Applications (CIVEMSA), Milan, Italy, 15–17 July 2013; pp. 87–92. [Google Scholar]

- Jayakumar, D.; Pragathie, S.; Ramkumar, M.O.; Rajmohan, R. Mid day meals scheme monitoring system in school using image processing techniques. In Proceedings of the 2020 7th IEEE International Conference on Smart Structures and Systems (ICSSS 2020), Chennai, India, 23–24 July 2020; IEEE: New York, NY, USA, 2020; pp. 344–348. [Google Scholar]

- Ravì, D.; Lo, B.; Yang, G.-Z. Real-Time food intake classification and energy expenditure estimation on a mobile device. In Proceedings of the 2015 IEEE 12th International Conference on Wearable and Implantable Body Sensor Networks (BSN), Cambridge, MA, USA, 9–12 June 2015; pp. 1–6. [Google Scholar]

- Zhu, F.; Bosch, M.; Woo, I.; Kim, S.; Boushey, C.J.; Ebert, D.S.; Delp, E.J. The Use of Mobile Devices in Aiding Dietary Assessment and Evaluation. IEEE J. Sel. Top. Signal Process. 2010, 4, 756–766. [Google Scholar] [CrossRef]

- Khan, T.A.; Islam, M.S.; Ullah, S.M.A.; Rabby, A.S.A. A machine learning approach to recognize junk food. In Proceedings of the 2019 10th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kanpur, India, 6–8 July 2019; pp. 1–6. [Google Scholar]

- Lu, Y.; Allegra, D.; Anthimopoulos, M.; Stanco, F.; Farinella, G.M.; Mougiakakou, S. A multi-task learning approach for meal assessment. In Proceedings of the CEA/MADiMa2018: Joint Workshop on Multimedia for Cooking and Eating Activities and Multimedia Assisted Dietary Management in conjunction with the 27th International Joint Conference on Artificial Intelligence IJCAI, Stockholm, Sweden, 15 July 2018; pp. 46–52. [Google Scholar]

- Almaghrabi, R.; Villalobos, G.; Pouladzadeh, P.; Shirmohammadi, S. A novel method for measuring nutrition intake based on food image. In Proceedings of the 2012 IEEE International Instrumentation and Measurement Technology Conference Proceedings, Graz, Austria, 13–16 May 2012; pp. 366–370. [Google Scholar]

- Pouladzadeh, P.; Villalobos, G.; Almaghrabi, R.; Shirmohammadi, S. A Novel SVM based food recognition method for calorie measurement applications. In Proceedings of the 2012 IEEE International Conference on Multimedia and Expo Workshops (ICMEW), Melbourne, VIC, Australia, 9–13 July 2012; IEEE: New York, NY, USA, 2012; pp. 495–498. [Google Scholar]

- Islam, K.T.; Wijewickrema, S.; Pervez, M.; O’Leary, S. An exploration of deep transfer learning for food image classification. In Proceedings of the 2018 Digital Image Computing: Techniques and Applications (DICTA), Canberra, ACT, Australia, 10–13 December 2018; pp. 1–5. [Google Scholar]

- Pfisterer, K.J.; Amelard, R.; Chung, A.G.; Syrnyk, B.; MacLean, A.; Keller, H.H.; Wong, A. Automated Food Intake Tracking Requires Depth-Refined Semantic Segmentation to Rectify Visual-Volume Discordance in Long-Term Care Homes. Sci. Rep. 2022, 12, 83. [Google Scholar] [CrossRef]

- Chen, H.; Wang, J.; Qi, Q.; Li, Y.; Sun, H. Bilinear CNN models for food recognition. In Proceedings of the 2017 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Sydney, NSW, Australia, 29 November 2017–1 December 2017; pp. 1–6. [Google Scholar]

- Tammachat, N.; Pantuwong, N. Calories analysis of food intake using image recognition. In Proceedings of the 2014 6th International Conference on Information Technology and Electrical Engineering (ICITEE), Yogyakarta, Indonesia, 7–8 October 2014; pp. 1–4. [Google Scholar]

- Liu, C.; Cao, Y.; Luo, Y.; Chen, G.; Vokkarane, V.; Ma, Y. DeepFood: Deep learning-based food image recognition for computer-aided dietary assessment. In Inclusive Smart Cities and Digital Health; Chang, C.K., Chiari, L., Cao, Y., Jin, H., Mokhtari, M., Aloulou, H., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 37–48. [Google Scholar]

- Tahir, G.A.; Loo, C.K. Explainable Deep Learning Ensemble for Food Image Analysis on Edge Devices. Comput. Biol. Med. 2021, 139, 104972. [Google Scholar] [CrossRef] [PubMed]

- Martinel, N.; Foresti, G.L.; Micheloni, C. Wide-Slice residual networks for food recognition. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 567–576. [Google Scholar]

- Christodoulidis, S.; Anthimopoulos, M.; Mougiakakou, S. Food recognition for dietary assessment using deep convolutional neural networks. In New Trends in Image Analysis and Processing—ICIAP 2015 Workshops; Murino, V., Puppo, E., Sona, D., Cristani, M., Sansone, C., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2015; Volume 9281, pp. 458–465. ISBN 978-3-319-23221-8. [Google Scholar]

- Yang, S.; Chen, M.; Pomerleau, D.; Sukthankar, R. Food recognition using statistics of pairwise local features. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2249–2256. [Google Scholar]

- Miyano, R.; Uematsu, Y.; Saito, H. Food region detection using bag-of-features representation and color feature. In Proceedings of the International Conference on Computer Vision Theory and Applications, Rome, Italy, 24–26 February 2012; SciTePress—Science and Technology Publications: Rome, Italy, 2012; pp. 709–713. [Google Scholar]

- Bettadapura, V.; Thomaz, E.; Parnami, A.; Abowd, G.D.; Essa, I. Leveraging context to support automated food recognition in restaurants. In Proceedings of the 2015 IEEE Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 5–9 January 2015; pp. 580–587. [Google Scholar]

- Zhu, F.; Bosch, M.; Khanna, N.; Boushey, C.J.; Delp, E.J. Multilevel segmentation for food classification in dietary assessment. In Proceedings of the 2011 7th International Symposium on Image and Signal Processing and Analysis (ISPA), Dubrovnik, Croatia, 4–6 September 2011; pp. 337–342. [Google Scholar]

- Wang, X.; Kumar, D.; Thome, N.; Cord, M.; Precioso, F. Recipe recognition with large multimodal food dataset. In Proceedings of the 2015 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Turin, Italy, 29 June–3 July 2015; pp. 1–6. [Google Scholar]

- Teng, J.; Zhang, D.; Lee, D.-J.; Chou, Y. Recognition of Chinese Food Using Convolutional Neural Network. Multimed. Tools Appl. 2019, 78, 11155–11172. [Google Scholar] [CrossRef]

- Poply, P.; Arul Jothi, J.A. Refined image segmentation for calorie estimation of multiple-dish food items. In Proceedings of the 2021 International Conference on Computing, Communication, and Intelligent Systems (ICCCIS), Greater Noida, India, 19–20 February 2021; pp. 682–687. [Google Scholar]

- Ege, T.; Yanai, K. Simultaneous estimation of food categories and calories with multi-task CNN. In Proceedings of the 2017 Fifteenth IAPR International Conference on Machine Vision Applications (MVA), Nagoya, Japan, 8–12 May 2017; pp. 198–201. [Google Scholar]

- Berndt, D.J.; Clifford, J. Using dynamic time warping to find patterns in time series. In Proceedings of the 3rd International Conference on Knowledge Discovery and Data Mining, Seattle, WA, USA, 14–17 August 1997. [Google Scholar]

- Kohonen, T. The Self-Organizing Map. Proc. IEEE 1990, 78, 1464–1480. [Google Scholar] [CrossRef]

- Coleca, F.; State, A.; Klement, S.; Barth, E.; Martinetz, T. Self-Organizing Maps for Hand and Full Body Tracking. Neurocomputing 2015, 147, 174–184. [Google Scholar] [CrossRef]

- Fritzke, B. A Growing neural gas network learns topologies. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 1994; Volume 7. [Google Scholar]

- bin Kassim, M.F.; Haji Mohd, M.N. Tracking and Counting Motion for Monitoring Food Intake Based-On Depth Sensor and UDOO Board: A Comprehensive Review. IOP Conf. Ser. Mater. Sci. Eng. 2017, 226, 012089. [Google Scholar] [CrossRef]

- Kelly, P.; Thomas, E.; Doherty, A.; Harms, T.; Burke, Ó.; Gershuny, J.; Foster, C. Developing a Method to Test the Validity of 24 Hour Time Use Diaries Using Wearable Cameras: A Feasibility Pilot. PLoS ONE 2015, 10, e0142198. [Google Scholar] [CrossRef] [PubMed]

- Fang, S.; Liu, C.; Zhu, F.; Delp, E.J.; Boushey, C.J. Single-View food portion estimation based on geometric models. In Proceedings of the 2015 IEEE International Symposium on Multimedia (ISM), Miami, FL, USA, 14–16 December 2015; pp. 385–390. [Google Scholar]

- Puri, M.; Zhu, Z.; Yu, Q.; Divakaran, A.; Sawhney, H. Recognition and volume estimation of food intake using a mobile device. In Proceedings of the 2009 Workshop on Applications of Computer Vision (WACV), Snowbird, UT, USA, 7–8 December 2009; pp. 1–8. [Google Scholar]

- Woo, I.; Otsmo, K.; Kim, S.; Ebert, D.S.; Delp, E.J.; Boushey, C.J. Automatic portion estimation and visual refinement in mobile dietary assessment. In Proceedings of the Computational Imaging VIII, San Jose, CA, USA, 4 February 2010; Bouman, C.A., Pollak, I., Wolfe, P.J., Eds.; SPIE—The International Society for Optical Engineering: Bellingham, WA, USA, 2010; pp. 188–197. [Google Scholar]

- Chiu, M.-C.; Chang, S.-P.; Chang, Y.-C.; Chu, H.-H.; Chen, C.C.-H.; Hsiao, F.-H.; Ko, J.-C. Playful bottle: A mobile social persuasion system to motivate healthy water intake. In Proceedings of the 11th International Conference on Ubiquitous Computing, Orlando, FL, USA, 30 September–3 October 2009; Association for Computing Machinery: New York, NY, USA, 2009; pp. 185–194. [Google Scholar]

| Database | Stage | Search Items Used in the Advanced Searching Function | Records |

|---|---|---|---|

| PubMed | Ⅰ | (((fluid[Title/Abstract]) OR (drink[Title/Abstract]) OR (water[Title/Abstract]) OR (liquid[Title/Abstract]) OR (food[Title/Abstract]) OR (nutrition[Title/Abstract]) OR (energy[Title/Abstract]) OR (dietary[Title/Abstract])) AND (((vision[Title/Abstract]) OR (camera[Title/Abstract])) AND ((monitoring[Title/Abstract]) OR (detection[Title/Abstract]) OR (recognition [Title/Abstract])))) AND (intake) | 64/64 |

| PubMed | Ⅱ | (vision[Title/Abstract] OR camera[Title/Abstract] OR image[Title/Abstract]) AND (action[Title/Abstract] OR gesture[Title/Abstract] OR activity[Title/Abstract] OR motion[Title/Abstract]) AND (detection[Title/Abstract] OR monitoring[Title/Abstract] OR recognition[Title/Abstract]) AND (drink[Text Word] OR water[Text Word] OR liquid[Text Word] OR food[Text Word] OR nutrition[Text Word] OR energy[Text Word] OR dietary[Text Word]) AND (human[Text Word]) | 126/126 |

| SCOPUS | Ⅰ | TITLE-ABS-KEY (((fluid) OR (drink) OR (water) OR (liquid) OR (food) OR (nutrition) OR (energy) OR (dietary)) AND ((vision) OR (camera)) AND ((monitoring) OR (detection) OR (recognition))) AND (intake) AND (LIMIT-TO (SUBJAREA, “COMP”) OR LIMIT-TO (SUBJAREA, “ENGI”) OR LIMIT-TO (SUBJAREA, “NURS”)) AND (EXCLUDE (SUBJAREA, “MATH”) OR EXCLUDE (SUBJAREA, “AGRI”) OR EXCLUDE (SUBJAREA, “PHYS”) OR EXCLUDE (SUBJAREA, “BIOC”) OR EXCLUDE (SUBJAREA, “MATE”) OR EXCLUDE (SUBJAREA, “CHEM”)) AND (EXCLUDE (SUBJAREA, “PSYC”) OR EXCLUDE (SUBJAREA, “DECI”) OR EXCLUDE (SUBJAREA, “SOCI”) OR EXCLUDE (SUBJAREA, “ENER”) OR EXCLUDE (SUBJAREA, “EART”) OR EXCLUDE (SUBJAREA, “ENVI”)) | 112/125 |

| SCOPUS | Ⅱ | (TITLE-ABS-KEY ((action OR gesture OR activity OR motion) AND (detection OR monitoring OR recognition) AND (vision OR camera OR image)) AND ALL (human) AND ALL (drink OR water OR liquid OR food OR nutrition OR energy OR dietary)) AND (LIMIT-TO (SUBJAREA, “COMP”) OR LIMIT-TO (SUBJAREA, “ENGI”) OR LIMIT-TO (SUBJAREA, “BIOC”) OR LIMIT-TO (SUBJAREA, “HEAL”) OR LIMIT-TO (SUBJAREA, “NURS”)) AND (EXCLUDE (SUBJAREA, “MATH”) OR EXCLUDE (SUBJAREA, “PHYS”) OR EXCLUDE (SUBJAREA, “MATE”) OR EXCLUDE (SUBJAREA, “CHEM”) OR EXCLUDE (SUBJAREA, “AGRI”) OR EXCLUDE (SUBJAREA, “ENVI”) OR EXCLUDE (SUBJAREA, “SOCI”) OR EXCLUDE (SUBJAREA, “CENG”) OR EXCLUDE (SUBJAREA, “DECI”) OR EXCLUDE (SUBJAREA, “EART”) OR EXCLUDE (SUBJAREA, “ENER”) OR EXCLUDE (SUBJAREA, “PHAR”) OR EXCLUDE (SUBJAREA, “IMMU”) OR EXCLUDE (SUBJAREA, “MULT”) OR EXCLUDE (SUBJAREA, “BUSI”) OR EXCLUDE (SUBJAREA, “PSYC”) OR EXCLUDE (SUBJAREA, “ARTS”) OR EXCLUDE (SUBJAREA, “ECON”) OR EXCLUDE (SUBJAREA, “VETE”) OR EXCLUDE (SUBJAREA, “DENT”)) AND (EXCLUDE (SUBJAREA, “MEDI”) OR EXCLUDE (SUBJAREA, “BIOC”)) | 100/3334 |

| IEEE Xplore | Ⅰ | (“All Metadata”: fluid OR “All Metadata”: drink OR “All Metadata”: water OR “All Metadata”: liquid OR “All Metadata”: food OR “All Metadata”: nutrition OR “All Metadata”: energy OR “All Metadata”: dietary) AND (“All Metadata”: vision OR “All Metadata”: camera) AND (“All Metadata”: monitoring OR “All Metadata”: detection OR “All Metadata”: recognition) AND (“Full Text and Metadata”: intake) | 203/203 |

| IEEE Xplore | Ⅱ | (“All Metadata”: vision OR “All Metadata”: camera OR “All Metadata”: image) AND (“All Metadata”: action OR “All Metadata”: gesture OR “All Metadata”: activity OR “All Metadata”: motion) AND (“All Metadata”: detection OR “All Metadata”: monitoring OR “All Metadata”: recognition) AND (“Full Text and Metadata”: human) AND (“Full Text and Metadata”: drink OR “Full Text and Metadata”: water OR “Full Text and Metadata”: liquid OR “Full Text and Metadata”: food OR “Full Text and Metadata”: nutrition OR “Full Text and Metadata”: energy OR “Full Text and Metadata”: dietary) | 100/11463 |

| ACM Digital Library | Ⅰ | [[[Title: fluid] OR [Title: drink] OR [Title: water] OR [Title: liquid] OR [Title: food] OR [Title: nutrition] OR [Title: energy] OR [Title: dietary]] AND [[Title: vision] OR [Title: camera]] AND [[Title: monitoring] OR [Title: detection] OR [Title: recognition]] AND [[Abstract: or((((fluid] OR [Abstract: drink] OR [Abstract: water] OR [Abstract: liquid] OR [Abstract: food] OR [Abstract: nutrition] OR [Abstract: energy] OR [Abstract: dietary]] AND [[All: vision] OR [All: camera]] AND [[All: monitoring] OR [All: detection] OR [All: recognition]]] OR [All:)))) and (intake)] | 100/2983 |

| Ⅱ | [[Title: action] OR [Title: gesture] OR [Title: activity] OR [Title: motion]] AND [[Title: detection] OR [Title: monitoring] OR [Title: recognition]] AND [[Title: vision] OR [Title: camera] OR [Title: image]] AND [[Abstract: or] OR [[[Abstract: action] OR [Abstract: gesture] OR [Abstract: activity] OR [Abstract: motion]] AND [[Abstract: detection] OR [Abstract: monitoring] OR [Abstract: recognition]] AND [[Abstract: vision] OR [Abstract: camera] OR [Abstract: image]]]] AND [[Full Text: drink] OR [Full Text: water] OR [Full Text: liquid] OR [Full Text: food] OR [Full Text: nutrition] OR [Full Text: energy] OR [Full Text: dietary]] AND [Full Text: human] | 38/38 | |

| Web of Science | Ⅰ | In Topic: fluid OR drink OR water OR liquid OR food OR nutrition OR energy OR dietary And in Topic: vision OR camera And in Topic: monitoring OR detection OR recognition And in All Fields: intake | 214/214 |

| Ⅱ | ((((TS = (action OR gesture OR activity OR motion)) AND TS= (detection OR monitoring OR recognition)) AND TS = (vision OR camera OR image)) AND ALL = (drink OR water OR liquid OR food OR nutrition OR energy OR dietary)) AND ALL = (human) | 100/1295 | |

| Google Scholar | Ⅰ | ((fluid) OR (drink) OR (water) OR (liquid) OR (food) OR (nutrition) OR (energy) OR (dietary)) AND ((vision) OR (camera)) AND ((monitoring) OR (detection) OR (recognition)) AND (intake) | 50/? |

| Ⅱ | (Action OR gesture OR activity OR motion) AND (detection OR monitoring OR recognition) AND (vision OR camera OR image) AND (human) AND (drink OR water OR liquid OR food OR nutrition OR energy OR dietary) | 50/? | |

| Total number of retrieved records | 1257 | ||

| Number after duplicates removed | 1001 | ||
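
The last two rows of the search table imply that 256 of the 1257 retrieved records (1257 − 1001) were removed as duplicates across databases. The review does not say how duplicates were matched, so the following is only a minimal sketch of one common approach: treat two records as the same item when they share a DOI or, failing that, a normalised title. The record fields (`title`, `doi`, `source`), the helper names, and the example entries are all hypothetical.

```python
import re


def normalise_title(title):
    """Lower-case a title and collapse runs of non-alphanumerics to one space."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def deduplicate(records):
    """Keep the first record seen for each DOI or normalised title.

    Assumption: a shared DOI or a shared normalised title marks a duplicate.
    This is an illustrative rule, not the procedure used in the review.
    """
    seen = set()
    unique = []
    for rec in records:
        keys = {("title", normalise_title(rec["title"]))}
        doi = (rec.get("doi") or "").strip().lower()
        if doi:
            keys.add(("doi", doi))
        if seen.isdisjoint(keys):
            unique.append(rec)
        seen |= keys
    return unique


# Hypothetical records standing in for exports from different databases.
records = [
    {"title": "Vision-Based Intake Monitoring", "doi": "10.0000/example.1", "source": "Scopus"},
    {"title": "Vision-based intake monitoring.", "doi": None, "source": "Google Scholar"},
    {"title": "Another Study", "doi": "10.0000/EXAMPLE.2", "source": "IEEE Xplore"},
    {"title": "Another study (extended)", "doi": "10.0000/example.2", "source": "Web of Science"},
]
unique = deduplicate(records)
print(len(records), "retrieved,", len(unique), "after duplicates removed")
```

Matching on either key catches records that lack a DOI in one export (as is common for Google Scholar results) but carry the same title elsewhere; stricter or fuzzier matching rules would change the final count.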

| References | Tasks | Usage of the Devices | Devices Model | Methods (Algorithms) | Assessment | |||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Binary Classification | Type Classification | Intake Action Recognition | Amount Estimation | Single Camera | Multiple Camera | Collaborative with Non-Vision | Fusion | Placement of the Camera | ||||

| Cippitelli et al., 2016 [62] | ✓ | ✓ | ✓ | mounted on ceiling, top-down view | Kinect | 1. SOM (Self-Organizing Map) on the defined skeleton model for movement tracking; 2. Glass detection (circular property) from RGB stream based on Hough function; 3. RGB-D fusion based on stereo calibration theory. | Correct rate of 98.3% for intake action classification. | |||||

| Iizuka et al., 2018 [68] | ✓ | ✓ | ✓ | top-down view | Intel RealSense F200 | 1. Separately using a 3-layer perceptron NN and a VGG16 network-based CNN with data augmentation for meal type classification; 2. Felzenszwalb’s segmentation algorithm for segmenting each food element; 3. Using the weight density information for each meal type to estimate the weight. | 1. More than 90% recognition accuracy on meal type; 2. About 4% error rate for estimating the weight of each meal element. | |||||

| Zhang et al., 2018 [69] | Orientation estimation | ✓ | one from above and one from the front, pointing at the subject | Kinect 2.0 | 1. CNN for container recognition; 2. Region growing algorithm for object segmentation from point cloud on color and depth information; 3. PCA (Principal Component Analysis) for object orientation estimation. | Average success rate of 90.3% when detecting and estimating the orientation of handleless cups. | ||||||

| Lo et al., 2019 [65] | ✓ | ✓ | ✓ | from any convenient viewing angle | RealSense | 1. Unsupervised segmentation method for segmenting each food item; 2. Point completion deep Neural Network for 3D reconstruction and portion size estimation of food items. | Up to 95.41% accuracy for food volume estimation. | |||||

| Chang et al., 2019 [70] | ✓ | ✓ | ✓ | from various viewpoints | Kinect 2.0 | 1. Human region detection based on SVM from histogram of oriented gradient features extracted from the RGB streams; 2. Farnebäck method for obtaining corresponding frames of the optical flow video from RGB stream; 3. Three modified 3D CNNs to extract the spatiotemporal features of human actions and recognize them. | Average human action recognition rate of 96.4%. | |||||

| Gambi et al., 2020 [71] | ✓ | ✓ | mounted on ceiling, top-down view | Kinect v1 | 1. An improved SOM Ex algorithm to trace the movements of the subject using a model of 50 nodes; 2. Setting threshold for distance of the joints to detect the start frame (SF) and the end frame (EF) for eating. | Action detection MAE (mean absolute error) of 0.424% and an MRE (mean relative error) of 5.044%. | ||||||

| Raju et al., 2022 [72] | ✓ | ✓ | all pointing at the food | OV5640 cameras, Panasonic PIR sensor, IR dot projector | 1. Semiglobal matching (SGM) for stereo matching to find correspondence between the pixels of the stereo images; 2. 3D reconstruction for a dense disparity map and then point cloud to calculate the volume. | Average accuracy of 94.4% on food portion size estimation. | ||||||
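
The table entry for Gambi et al. [71] describes detecting the start frame (SF) and end frame (EF) of an eating action by thresholding the distance between tracked joints. The table does not state which joints or threshold value were used, so the sketch below is only an illustration under stated assumptions: it uses the hand-to-head distance, a single fixed threshold, and hypothetical 3D coordinates in metres. The function name `intake_segments` is invented for this example.

```python
import math


def intake_segments(hand_track, head_track, threshold):
    """Return (start_frame, end_frame) pairs where the hand stays near the head.

    Assumption: an intake action spans the frames in which the hand-to-head
    distance is below `threshold`. The joint choice and the threshold are
    illustrative, not taken from the cited work.
    """
    segments = []
    start = None
    frame = -1
    for frame, (hand, head) in enumerate(zip(hand_track, head_track)):
        near = math.dist(hand, head) < threshold
        if near and start is None:
            start = frame  # start frame (SF): hand enters the near zone
        elif not near and start is not None:
            segments.append((start, frame - 1))  # end frame (EF): last near frame
            start = None
    if start is not None:
        segments.append((start, frame))  # action still running at the last frame
    return segments


# Hypothetical tracks: the head is static and the hand approaches it twice.
head_track = [(0.0, 0.0, 0.0)] * 7
hand_track = [
    (0.5, 0.0, 0.0), (0.4, 0.0, 0.0), (0.1, 0.0, 0.0), (0.1, 0.0, 0.0),
    (0.4, 0.0, 0.0), (0.5, 0.0, 0.0), (0.1, 0.0, 0.0),
]
segments = intake_segments(hand_track, head_track, threshold=0.2)
print(segments)
```

In practice a single threshold is sensitive to tracking noise, so a real system would likely smooth the distance signal or require a minimum segment length before counting an intake action.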

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, X.; Kamavuako, E.N. Vision-Based Methods for Food and Fluid Intake Monitoring: A Literature Review. Sensors 2023, 23, 6137. https://doi.org/10.3390/s23136137
