Abstract

This paper presents a focused investigation into real-time segmentation in unstructured environments, a crucial aspect for enabling autonomous navigation in off-road robots. To address this challenge, an improved variant of the DDRNet23-slim model is proposed, which includes a lightweight network architecture and reclassifies ten different categories, including drivable roads, trees, high vegetation, obstacles, and buildings, based on the RUGD dataset. The model’s design includes the integration of the semantic-aware normalization and semantic-aware whitening (SAN–SAW) module into the main network to improve generalization ability beyond the visible domain. The model’s segmentation accuracy is improved through the fusion of channel attention and spatial attention mechanisms in the low-resolution branch to enhance its ability to capture fine details in complex scenes. Additionally, to tackle the issue of category imbalance in unstructured scene datasets, a rare class sampling strategy (RCS) is employed to mitigate the negative impact of low segmentation accuracy for rare classes on the overall performance of the model. Experimental results demonstrate that the improved model achieves a significant 14% increase in mIoU in the invisible domain, indicating its strong generalization ability. With a parameter count of only 5.79M, the model achieves mAcc of 85.21% and mIoU of 77.75%. The model has been successfully deployed on a Jetson Xavier NX ROS robot and tested in both real and simulated orchard environments. Speed optimization using TensorRT increased the segmentation speed to 30.17 FPS. The proposed model strikes a desirable balance between inference speed and accuracy and has good domain migration ability, making it applicable in various domains such as forestry rescue and intelligent agricultural orchard harvesting.

1. Introduction

The sensing module plays a crucial role in autonomous driving systems as it empowers the vehicle to sense and comprehend its surroundings in real time [1]. By converting environmental data, including images and LiDAR data, into meaningful information, the sensing module enhances the vehicle’s perception capabilities [2]. Compared to LiDAR, camera sensors are affordable, easy to install, and offer better real-time performance, which is why they are used extensively in environmental perception. Additionally, cameras offer more detailed and accurate information, making them better suited to identify road markings, vehicles, pedestrians, and other traffic participants, especially in complex environments [3].

Presently, the majority of research has concentrated on the perception of structured roads, which refer to standardized road systems in cities and highways featuring clear markings, lane lines, traffic signals, and road signs [4]. However, unstructured environments exhibit diverse topographic surfaces, ambiguous semantic categories of natural objects with varying shapes, obstacles, and changing terrain conditions, such as high vegetation, grass, and rocks [5]. Hence, it is considerably challenging to perceive these complex unstructured environments accurately.

Among traditional road scene recognition algorithms, Hoang used fast local Laplacian filtering to assess the condition of asphalt pavement [6]. Zhao et al. proposed an improved Canny edge detection algorithm and an edge-preserving filter for detecting pavement edges [7]. Huang et al. studied the road centerline extracted from high-resolution images and proposed a road detection system based on multi-scale structural features and a support vector machine (SVM) [8]. What these studies have in common is that they extract surface-level features, i.e., texture, color, and shape. Such methods fall short in representing and extracting high-level semantic information and deep features, resulting in inferior performance when it comes to recognizing intricate unstructured road scenes.

2. Related Work

2.1. Semantic Segmentation

In recent years, traditional algorithms have been gradually replaced by deep learning techniques. One of the earliest deep learning semantic segmentation algorithms, FCN [9], leveraged fully convolutional networks for pixel-level classification. However, its output has a low resolution. U-Net [10] utilizes a U-shaped network architecture, but it faces limitations in handling class imbalance, leading to inaccurate predictions for classes with fewer samples in unevenly distributed datasets. The DeepLab [11] approach uses dilated convolution to enhance output resolution while maintaining the receptive field size, resulting in more accurate outputs. However, this method incurs high computational and storage costs, making it challenging to execute the model on devices with limited resources.

2.2. Semantic Segmentation in Unstructured Environments

Semantic segmentation has emerged as a popular method for environment perception in unstructured environments for robot navigation [12]. Liu introduced the hybrid attentional semantic segmentation (HASS) network designed to function in completely unstructured environments on Mars [13]. Jin et al. proposed a novel semantic segmentation network that incorporates a transformer (TrSeg) to adaptively capture multi-scale information [14]. In addition, Guan et al. proposed a new grouped attention mechanism to identify safe and navigable areas in coarse-grained unstructured environments from RGB images [15].

2.3. Domain Migration Techniques

However, most studies focus on specific datasets and scenarios, limiting the generalizability of their findings to broader domains and unseen environments. This limitation arises mainly due to the influence of various factors, such as scene variations, lighting conditions, and weather variations. To overcome this problem, researchers have proposed various techniques, including domain adaptation [16] and domain generalization [17], in recent years. These techniques aim to alleviate the domain gap of models and boost their generalization ability. Domain adaptation techniques are employed to improve the adaptability of the model by narrowing the gap between the source and target domains. In contrast, domain generalization techniques are specifically designed to operate on data from completely unseen domains.

Domain generalization techniques are considered more adaptable and generalizable compared to domain adaptation techniques due to their ability to operate without requiring access to target domain samples. This study used a fused domain generalization approach to minimize the cost of semantic segmentation annotations and improve the resilience of the machine learning model in diverse environments. This approach also boosts the generalization performance of the model when operating in multiple domains and complex, unstructured scenes, without being constrained by specific datasets or scenarios.

This paper proposes a lightweight semantic segmentation model based on DDRNet23-slim [18] that incorporates domain generalization and attention mechanisms to enhance the model’s capabilities in complex, unstructured scenes. This paper aims to demonstrate the following advantages of the proposed approach:

- The semantic-aware normalization and semantic-aware whitening (SAN–SAW) [19] module was implemented in the backbone network to improve the model’s generalization capability. This module is less computationally intensive and significantly improves the model’s feature representation in the target domains, resulting in enhanced accuracy in real-world scenarios.

- The convolutional block attention module (CBAM) [20] was incorporated into the model’s structure to advance its feature representation capability at each stage and capture finer details.

- The rare class sampling strategy (RCS) [21] was adopted to address the class imbalance problem in unstructured scenes, thereby mitigating the accuracy degradation caused by rare classes.

- Our model has been successfully deployed on an agricultural robot equipped with a Jetson Xavier NX and utilizing the robot operating system (ROS). Through training on the RUGD dataset [5] and testing in both real and virtual orchard environments, the experimental results demonstrate the model’s strong domain migration capabilities. It has proven to be a valuable tool in the field of precision agriculture, striking a balance between speed and accuracy.

3. Improved DDRNet23-Slim Lightweight Network

3.1. DDRNet23-Slim Network

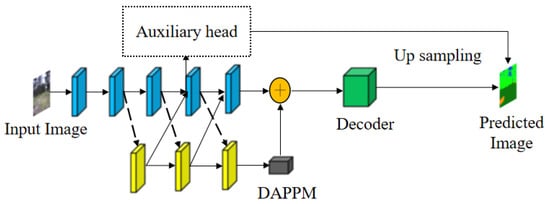

DDRNet23-slim is a deep dual-resolution network that comprises two deep branches with multiple bilateral fusions, as depicted in Figure 1. The network follows a general principle where the encoder is divided into two branches with distinct resolutions. One branch is responsible for generating feature maps with varying resolutions, while the other branch focuses on extracting rich semantic information. Additionally, the deep aggregation pyramid pooling module (DAPPM) can effectively expand the receptive field and fuse multi-scale contexts using low-resolution feature maps, resulting in high segmentation accuracy and speed in structured environments. However, further improvement is necessary to address the challenges posed by unstructured scene segmentation in complex environments.

Figure 1.

DDRNet23-slim model flowchart. Note: Blue branch is the high-resolution branch, yellow is the low-resolution branch.

3.2. Improved DDRNet23-Slim Network with SAN–SAW Module

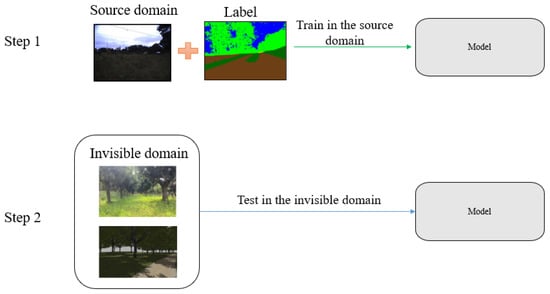

In numerous applications, target-domain data can be difficult to acquire, or even unknown, prior to model deployment. For instance, in biomedical applications, there may be domain shifts between data from different patients, making it impractical to collect each new patient’s data in advance. In traffic scene semantic segmentation, it is infeasible to collect data that encompass all diverse scenes and weather conditions [22]. As shown in Figure 2, we used only RUGD as the training dataset; the unstructured environments in which our model is deployed, including real and virtual orchard environments, remained unseen (invisible) during training. To enhance the robustness of our model in handling various complex environments, we incorporated SAN–SAW into DDRNet23-slim. The purpose of this module is to improve the model’s adaptability and performance in unseen and diverse environments.

Figure 2.

Domain generalization flowchart. Step 1: The source domain contains both data and labels, and the model is initially trained on the source domain. Step 2: The model is then evaluated on the unseen or invisible domain.

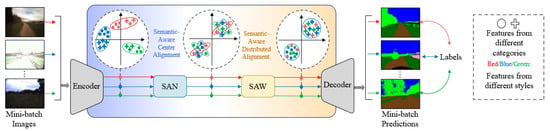

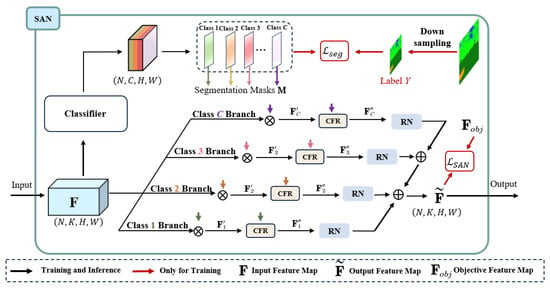

The SAN–SAW module is a type of domain-generalized semantic segmentation (DGSS) technique that enhances the model’s generalization capability without requiring access to target domain data. As illustrated in Figure 3, the SAN–SAW module collaboratively aligns category-level distributions to enhance the discriminative strength of features. It encourages both intra-category compactness and inter-category separability through category-level feature alignment, resulting in effective style elimination and powerful feature discrimination.

Figure 3.

Schematic diagram of SAN–SAW module [19].

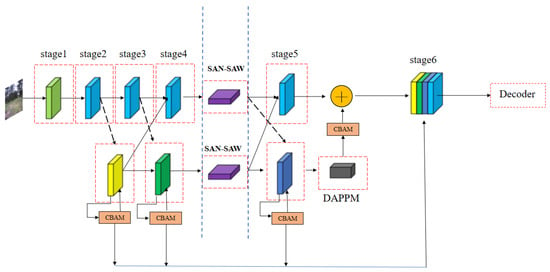

In complex unstructured environments, roads have blurred edges, large curvature variations, and irregular shapes. Notably, roads of the same material can differ in color and texture under varying lighting and weather conditions. To improve the generalization in the invisible domain without increasing the computational effort of the model, this work improved the DDRNet23-slim model, as shown in Figure 4, by incorporating SAN–SAW modules into both the low-resolution and high-resolution branches.

Figure 4.

The improved DDRNet23-slim overall framework diagram. Note: The black dotted line represents the downsampling stage, while the black solid line represents the upsampling stage. The CBAM refers to the channel attention and spatial attention. SAN–SAW denotes the domain generalization module. The “+” symbol is the feature map direct addition operation, and the concatenation is the dimension splicing operation. The blue line is the multi-scale fusion stage.

3.2.1. Semantic-Aware Normalization (SAN)

SAN aims to achieve semantic-aware center alignment. As depicted in Figure 5, it operates by manipulating the intermediate mini-batch feature map F and transforming it into a category-level centered feature map. With the aid of segmentation labels Y, the desired objective feature map can be easily obtained as:

where and are the mean and standard deviation computed from c-th category features of k-th channel, n-th sample, and the c-th category label . Features belong to c-th category in channel . The weights for scaling and shifting are denoted by and , respectively, which are both learnable parameters. The process of SAN can be briefly expressed by the following equation:

where denotes regional normalization in c-th branch, and denotes the category-level feature refinement block. and are affine parameters as per Equation (1). The loss function of the SAN process is:

where denotes the set of predicted segmentation masks.

Figure 5.

The detailed architecture of the SAN module [19].

3.2.2. Semantic-Aware Whitening (SAW)

As shown in Figure 6, after the SAN module has aligned the semantic centers of the feature map, the SAW module further enhances channel decorrelation for the distributed alignment of the already semantic-centered features. Instance whitening (IW) [23] is capable of integrating the joint distribution, which proves valuable in achieving distributed alignment. Nonetheless, employing IW directly is impractical because it can whiten the data excessively, eliminating correlations across all channels. This unintended consequence may compromise the semantic content, resulting in the loss of crucial domain-invariant information. For the segmentation model, the result for each category is obtained by multiplying all channels by their respective weights and summing them. The weight value plays a crucial role in determining the impact of each channel on the corresponding category. As shown in Figure 6, for each category, there are K weights corresponding to K channels, i.e., where . The weights of each category are ranked in descending order after converting them into absolute values. In each category, the first weight indexes are selected, corresponding to the highest weights. Therefore, the group instance whitening (GIW) [24] after channel weight regrouping optimization can be expressed as:

where denotes the m-th group of n-th sample, denotes the feature map of the SAN output. represents the j-th selected index of i-th category.

where denotes the covariance between the i-th channel and the j-th channel of . and I denote the channel correlation and identity matrix. The loss function of the SAW module is:

Figure 6.

The detailed architecture of the SAW module [19].

In this study, SAN–SAW was used as a base module to improve DDRNet23-slim, thereby improving the model’s generalization capability while mitigating the impact of color variation in pavement materials caused by varying illumination conditions in unstructured environments.
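The channel-selection step described for SAW (rank each category's classifier weights by absolute value, then keep the highest-ranked channel indexes) can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `select_top_channels`, the parameter `top_k` (standing in for the number of selected indexes, whose symbol is not recoverable from the text), and the toy weights are all assumptions made for illustration.

```python
def select_top_channels(class_weights, top_k):
    """Return, for each category, the indexes of the top_k channels by |weight|.

    class_weights: list of C rows, one per category, each holding K weights
                   (one weight per channel).
    top_k:         hypothetical name for the number of indexes kept per category.
    """
    selected = []
    for weights in class_weights:
        # Rank channel indexes by absolute weight, largest first.
        ranked = sorted(range(len(weights)),
                        key=lambda k: abs(weights[k]),
                        reverse=True)
        selected.append(ranked[:top_k])
    return selected


# Toy example: 2 categories, 4 channels (values are made up).
toy_weights = [
    [0.9, -0.1, 0.3, -0.7],   # category 0
    [-0.2, 0.8, -0.6, 0.1],   # category 1
]
print(select_top_channels(toy_weights, top_k=2))
```

Only the selection step is shown; how the selected indexes are regrouped and whitened follows the GIW formulation in [19] and [24] and is not reproduced here.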

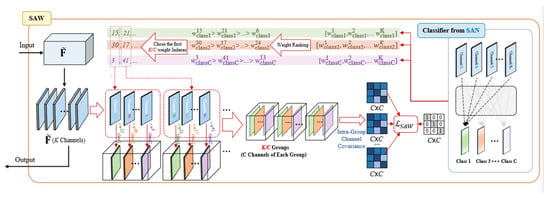

3.3. Incorporating Channel Attention and Spatial Attention Mechanisms

Channel attention and spatial attention are techniques used to adjust the importance of different channels and spatial locations in a deep learning model. Channel attention improves feature representation by learning the weight of each channel and dynamically scaling the feature map of each channel to help the network better focus on the important feature channels. Spatial attention, on the other hand, dynamically scales the feature representation of each pixel point by learning the weight of each location, enabling the network to better emphasize crucial spatial locations. The combination of channel attention and spatial attention facilitates deep learning models in capturing key features more effectively.

As shown in Figure 4, this study improved the DDRNet23-slim encoder by employing a channel attention mechanism and a spatial attention mechanism in the low-resolution branch. This integration allows for the fusion of features from both the high-resolution and low-resolution branches, leading to a significant enhancement in the model’s performance. Notably, this study adopted the CBAM module as the base module for model improvement. The advantage of the CBAM module lies in its ability to enhance model performance with negligible additional network parameters and computational cost.

As shown in Figure 7, the spatial information of the feature map is first aggregated using average-pooling and max-pooling operations. This process produces two different spatial context descriptors: $F^{c}_{avg}$ and $F^{c}_{max}$. Both descriptors are subsequently passed through a shared network to generate the channel attention map, denoted as $M_c \in \mathbb{R}^{C \times 1 \times 1}$. This shared network consists of a multi-layer perceptron (MLP) with a single hidden layer. After the shared network has been applied to each descriptor, the resulting feature vectors are combined through element-wise summation. In summary, the computation of the channel attention can be expressed as:

$$M_c(F) = \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big) = \sigma\big(W_1(W_0(F^{c}_{avg})) + W_1(W_0(F^{c}_{max}))\big)$$

where $\sigma$ denotes the sigmoid function, and $W_0$ and $W_1$ denote the MLP weights, which are shared between both inputs. Furthermore, the ReLU activation function is applied after the multiplication with $W_0$.

Figure 7.

Channel attention and spatial attention structure in CBAM [20].

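The spatial attention mechanism applies average-pooling and max-pooling operations along the channel axis and concatenates the results to create a compact and informative feature descriptor. The two pooling operations aggregate the channel information of a feature map into two 2D maps: $F^{s}_{avg} \in \mathbb{R}^{1 \times H \times W}$ and $F^{s}_{max} \in \mathbb{R}^{1 \times H \times W}$. Subsequently, the two 2D maps are concatenated and convolved by a standard convolution layer. The outcome of this process is a 2D spatial attention map:

$$M_s(F) = \sigma\big(f^{7 \times 7}([\mathrm{AvgPool}(F); \mathrm{MaxPool}(F)])\big) = \sigma\big(f^{7 \times 7}([F^{s}_{avg}; F^{s}_{max}])\big)$$

where $\sigma$ denotes the sigmoid function and $f^{7 \times 7}$ represents a convolution operation with the filter size of $7 \times 7$. Given an intermediate feature map $F \in \mathbb{R}^{C \times H \times W}$, the overall attention process can be summarized as:

$$F' = M_c(F) \otimes F, \qquad F'' = M_s(F') \otimes F'$$

where $\otimes$ denotes element-wise multiplication and $F''$ is the final refined output.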
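To make the data flow of the attention process concrete, the following is a minimal pure-Python sketch of channel attention followed by spatial attention on a tiny feature map stored as nested lists. It is an illustration under stated assumptions, not the model code: the function names, the bias-free MLP, the zero padding in the convolution, and the toy weights are all assumptions, and a real implementation would use a deep learning framework.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(feat, w0, w1):
    """feat: C x H x W nested lists; w0: (C/r) x C; w1: C x (C/r). Returns C weights."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feat]  # average pooling
    mx = [max(map(max, ch)) for ch in feat]                            # max pooling

    def mlp(v):
        # Shared MLP (bias-free here): ReLU is applied after multiplying by w0.
        hidden = [max(0.0, sum(w * x for w, x in zip(row, v))) for row in w0]
        return [sum(w * h for w, h in zip(row, hidden)) for row in w1]

    return [sigmoid(a + m) for a, m in zip(mlp(avg), mlp(mx))]


def spatial_attention(feat, kernel):
    """kernel: 2 x k x k with odd k, applied with zero padding. Returns H x W weights."""
    C, H, W = len(feat), len(feat[0]), len(feat[0][0])
    avg = [[sum(feat[c][i][j] for c in range(C)) / C for j in range(W)] for i in range(H)]
    mx = [[max(feat[c][i][j] for c in range(C)) for j in range(W)] for i in range(H)]
    k = len(kernel[0])
    pad = k // 2
    out = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            s = 0.0
            for m, plane in enumerate((avg, mx)):  # the two concatenated 2D maps
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < H and 0 <= x < W:
                            s += kernel[m][di][dj] * plane[y][x]
            out[i][j] = sigmoid(s)
    return out


def cbam(feat, w0, w1, kernel):
    """Sequential refinement: channel attention first, then spatial attention."""
    mc = channel_attention(feat, w0, w1)
    refined = [[[mc[c] * v for v in row] for row in ch] for c, ch in enumerate(feat)]
    ms = spatial_attention(refined, kernel)
    return [[[ms[i][j] * v for j, v in enumerate(row)] for i, row in enumerate(ch)]
            for ch in refined]


# Toy input: 2 channels, 2 x 2 pixels, reduction to 1 hidden unit, 7 x 7 kernel.
toy_feat = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, -1.0], [2.0, 1.0]]]
toy_w0 = [[0.5, -0.5]]
toy_w1 = [[1.0], [-1.0]]
toy_kernel = [[[0.01] * 7 for _ in range(7)] for _ in range(2)]
toy_out = cbam(toy_feat, toy_w0, toy_w1, toy_kernel)
print(len(toy_out), len(toy_out[0]), len(toy_out[0][0]))
```

The output keeps the shape of the input, which is why such a block can be dropped into an existing branch without changing the surrounding layers.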

3.4. Rare Class Sampling Strategy

The class imbalance problem is particularly pronounced in a dataset such as RUGD, which makes semantic segmentation in unstructured environments more challenging than in structured urban environments. It has been observed that the later a class is learned in training, the worse it performs at the end of training [21]. Even when samples containing rare classes are present, the model tends to predict common classes, thus reducing the prediction of rare classes. The frequency of occurrence $f_c$ of each class $c$ in the dataset is calculated from the number of pixels labeled with class $c$:

$$f_c = \frac{\sum_{i=1}^{N} \sum_{j=1}^{H \times W} \left[ y^{(i,j,c)} \right]}{N \cdot H \cdot W} \tag{15}$$

where $N$ denotes the total number of images in the dataset, $H$ and $W$ denote the height and width of the image, and $[y^{(i,j,c)}]$ indicates whether pixel $j$ of image $i$ belongs to category $c$. Once the frequency of occurrence of each category is obtained, the sampling probability of each category is:

$$P(c) = \frac{e^{(1 - f_c)/T}}{\sum_{c'=1}^{C} e^{(1 - f_{c'})/T}} \tag{16}$$

where $T$ is the temperature coefficient, $C$ is the number of classes, and $f_{c'}$ indicates the frequency of occurrence of the other classes. Thus, the smaller the frequency of a class, the higher its sampling probability, and the model focuses more on learning samples from rare classes.
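The sampling rule above can be sketched in a few lines of standard-library Python. This is an illustrative sketch rather than the training code used in the paper: the function names, the toy label maps, and the assumption that every class occurs in at least one image are introduced here for the example.

```python
import math
import random


def class_frequencies(label_maps, num_classes):
    """Fraction of all pixels that carry each class label."""
    counts = [0] * num_classes
    total = 0
    for label_map in label_maps:
        for row in label_map:
            for c in row:
                counts[c] += 1
                total += 1
    return [n / total for n in counts]


def rcs_probabilities(freqs, temperature):
    """Softmax over (1 - f_c) / T: rarer classes get higher probability."""
    logits = [(1.0 - f) / temperature for f in freqs]
    top = max(logits)                      # subtract the max for numerical stability
    exps = [math.exp(v - top) for v in logits]
    norm = sum(exps)
    return [e / norm for e in exps]


def sample_image(label_maps, probs, rng):
    """Draw a class from P(c), then a random image that contains that class.

    Assumes every class occurs in at least one image.
    """
    c = rng.choices(range(len(probs)), weights=probs, k=1)[0]
    candidates = [i for i, lm in enumerate(label_maps)
                  if any(c in row for row in lm)]
    return c, rng.choice(candidates)


# Toy dataset: three 2 x 2 label maps with classes 0 (common) to 2 (rare).
toy_maps = [[[0, 0], [0, 1]], [[0, 0], [0, 0]], [[0, 2], [1, 1]]]
toy_freqs = class_frequencies(toy_maps, num_classes=3)
for T in (0.5, 0.01):
    print(T, [round(p, 4) for p in rcs_probabilities(toy_freqs, T)])
print(sample_image(toy_maps, rcs_probabilities(toy_freqs, 0.01), random.Random(0)))
```

Lowering the temperature concentrates almost all of the sampling probability on the rarest class, while a larger temperature spreads it more evenly across classes.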

3.5. Loss Function

The literature [25] analyzed the common loss functions used in semantic segmentation models. To address the category imbalance problem, Lin et al. proposed focal loss [26], which improves the prediction accuracy for classes with few samples by introducing an adjustable “focusing factor”, namely:

$$FL(p_t) = -\alpha_t (1 - p_t)^{\gamma} \log(p_t)$$

where $p_t$ is the probability that the model assigns to the correct class: for a predicted probability $p$ of the positive class, $p_t = p$ when the sample is positive; otherwise, $p_t = 1 - p$. $\alpha_t$ and $\gamma$ are hyperparameters for modulating the positive and negative sample weights and controlling the hard and easy sample weights, respectively. consists of two parts and . All loss functions of the model in this paper are

4. Experiment

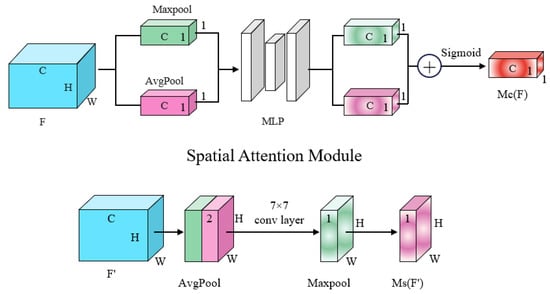

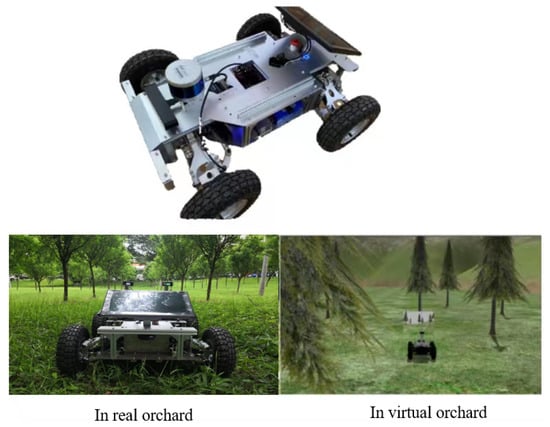

4.1. Experimental Platform

The deep learning hardware platform utilized in this study consists of a computer featuring an Intel(R) Xeon(R) Gold 6330 CPU with 16 GB RAM and an NVIDIA RTX 3090 GPU, with the CUDA 11.3 and cuDNN 7.0 deep neural network acceleration libraries installed. The model was developed on the Linux platform using the PyTorch framework. The segmentation model was trained, validated, and tested within this environment. To verify the model’s real-time performance, it was deployed on a ROS robot equipped with a Jetson Xavier NX and accelerated using TensorRT, as shown in Figure 8.

Figure 8.

ROS-based off-road vehicles equipped with a Jetson Xavier NX deployed in real orchards and virtual orchards. Lighting and ground materials differ between the real and simulated environments.

4.2. Dataset

Recent datasets on semantic segmentation for autonomous driving primarily focus on urban environments, such as Cityscapes [4], CamVid [27], and SemanticKITTI [28], serving as the main datasets for training semantic segmentation models for urban street autonomous navigation. However, due to the difficulty of unstructured environment annotation, the main existing datasets for semantic segmentation models for off-road environment navigation are RUGD, RELLIS-3D [29], DeepScene [30], and YCOR [31]. These unstructured datasets exhibit variations in label categories and styles. For example, an area with shrubs is labeled as “shrub” in RUGD and RELLIS-3D but as “low vegetation” in YCOR, while DeepScene labels both trees and shrubs as “vegetation”. As such, to combine different datasets for training and evaluation purposes, label mapping is required for each dataset. This study addressed this issue by redefining the mapping based on the classification proposed in [32], considering the appearance and traversability characteristics of the datasets.
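A label mapping of this kind is typically implemented as one lookup table per dataset. The sketch below is purely illustrative: only the source label names (“shrub”, “low vegetation”, “vegetation”) come from the text above, while the unified class list is taken from the ten classes evaluated in this paper and the target assignment of each label is an assumption, not the mapping actually used.

```python
# Unified classes: the ten classes evaluated in this paper (order is arbitrary here).
UNIFIED_CLASSES = ["road", "grass", "tree", "high vegetation", "obstacle",
                   "building", "wood", "sky", "rock", "water"]

# Hypothetical per-dataset tables; the target classes are assumptions.
LABEL_MAPS = {
    "RUGD":      {"shrub": "high vegetation"},
    "RELLIS-3D": {"shrub": "high vegetation"},
    "YCOR":      {"low vegetation": "high vegetation"},
    "DeepScene": {"vegetation": "high vegetation"},
}


def remap(dataset, label_names):
    """Translate dataset-specific label names into unified class indexes."""
    table = LABEL_MAPS[dataset]
    return [UNIFIED_CLASSES.index(table[name]) for name in label_names]


print(remap("RUGD", ["shrub"]), remap("YCOR", ["low vegetation"]))
```

Keeping the tables as data rather than code makes it easy to review or change a single assignment without touching the training pipeline.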

4.3. Model Training and Evaluation Methods

In this study, we used the RUGD dataset as the experimental benchmark and applied several data augmentation techniques, including histogram equalization, random flipping, random translation, and random cropping, to improve the robustness of the model. For training, we used a stochastic gradient descent optimizer with a batch size of 16, a learning rate of 0.01, a learning rate decay of 0.0005, and a polynomial learning rate strategy with a power of 0.9. The loss function shown in Equation (19) was employed during training. Images were cropped to a size of 640 × 640, which represents the median value among the four different dataset sizes. The data were split into training, validation, and test sets at a ratio of 6:2:2. In addition, a model-freezing training strategy was used: the stages before stage 2 of DDRNet23-slim were first frozen, and the weight parameters of the underlying structure were shared to mitigate variability across different datasets. Subsequently, the remaining layers were unfrozen, and the whole model was trained until the training loss reached a low level. Finally, the model was benchmarked on standard segmentation metrics: the overall accuracy (aAcc), which is the ratio of correctly predicted pixels to all predicted pixels over the whole test set; the per-class intersection over union (IoU) and its mean over all classes (mIoU); and the single-class pixel accuracy (acc) and its mean over all classes (mAcc). Performance was evaluated on 10 classes, namely roads, grass, trees, high vegetation, obstacles, buildings, wood, sky, rocks, and water.
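The evaluation metrics listed above all derive from a single confusion matrix. The following sketch, written for this explanation and not taken from the paper's code, shows one common way to compute them; the function names and the toy labels are assumptions, and classes that never occur in the ground truth are skipped when averaging.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Rows are ground-truth classes, columns are predicted classes."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def segmentation_metrics(cm):
    n = len(cm)
    total = sum(map(sum, cm))
    correct = sum(cm[c][c] for c in range(n))
    acc, iou = {}, {}
    for c in range(n):
        gt = sum(cm[c])                          # pixels labeled c
        if gt == 0:
            continue                             # class absent: skipped in the means
        pred = sum(cm[r][c] for r in range(n))   # pixels predicted as c
        acc[c] = cm[c][c] / gt
        iou[c] = cm[c][c] / (gt + pred - cm[c][c])
    return {
        "aAcc": correct / total,
        "acc": acc,
        "IoU": iou,
        "mAcc": sum(acc.values()) / len(acc),
        "mIoU": sum(iou.values()) / len(iou),
    }


# Toy example with flattened pixel labels for three classes.
toy_true = [0, 0, 0, 1, 1, 2]
toy_pred = [0, 0, 1, 1, 1, 0]
print(segmentation_metrics(confusion_matrix(toy_true, toy_pred, 3)))
```

Because mAcc and mIoU average over classes rather than pixels, a rare class that is segmented poorly lowers them as much as a common class would, which is why they are sensitive to class imbalance.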

4.4. Rare Class Sampling Strategy

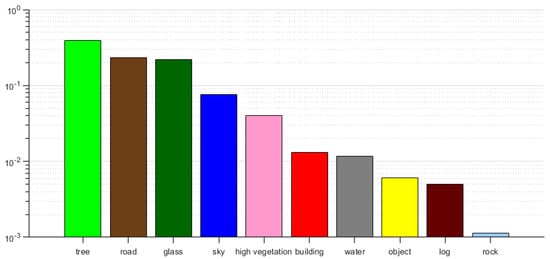

Figure 9 shows the number of pixels in each class of the RUGD dataset. Given that rare classes typically coexist with several common classes within an image, it is better to increase the sampling frequency for the rare class than for the common class. For instance, roads and grass are commonly observed in images that contain logs. As per Equation (16), classes with lower frequencies are assigned higher sampling probabilities. The temperature parameter T controls the smoothness of the distribution. A higher T value leads to a more uniform distribution, while a lower T value places stronger emphasis on rare classes with smaller frequencies. In this study, the RCS temperature T was set to 0.01 to maximize the sampled pixels of the class with the fewest pixels.

Figure 9.

The number of pixels in each category in the RUGD training dataset after reclassification.

4.5. Analysis of Experimental Results

To evaluate the effectiveness of the semantic segmentation model proposed in this paper, a series of ablation tests were performed to analyze the impact of each functional module on the model’s performance. The basic DDRNet23-slim semantic segmentation model was constructed, and the SAN–SAW module and the channel and spatial attention module were incorporated to evaluate their effects on performance metrics such as aAcc, acc, mAcc, IoU, mIoU, segmentation speed, and number of parameters. The objective of the first ablation experiment was to demonstrate the effectiveness of introducing SAN–SAW, while the aim of the second ablation experiment was to highlight the effectiveness of the attention mechanism and the RCS. The data in the tables were measured on the NVIDIA RTX 3090 GPU platform.

4.5.1. Ablation Experiment I

According to the data in Table 1, integrating the SAN–SAW module into the DDRNet23-slim model resulted in a significant improvement in both aAcc and mIoU within the invisible domain. For this evaluation, training and validation were conducted exclusively on the RUGD dataset, while testing was performed on the RUGD, DeepScene, YCOR, and RELLIS-3D datasets. Compared with the unimproved model, the number of parameters and the computation of the model with the integrated SAN–SAW module remained nearly unchanged, and performance was almost the same on the source domain RUGD dataset, but the average improvement in overall mIoU reached approximately 14%.

Table 1.

Performance on different datasets after integrating SAN–SAW.

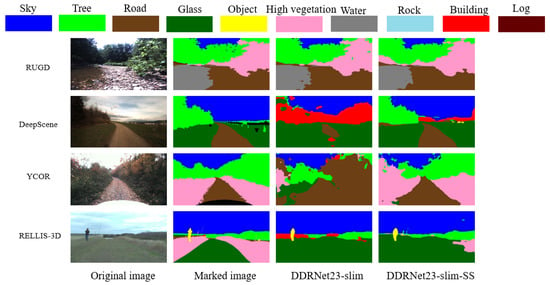

As can be seen from Figure 10, the DDRNet23-slim-SS model with integrated SAN–SAW demonstrates similar performance to the original model without integration on the RUGD dataset. When tested on the other three datasets, the DDRNet23-slim-SS model can segment the pavement and high vegetation more effectively than the original model while reducing the category misclassification and having better generalization ability. These findings underscore the efficacy of our improved model in augmenting its generalization capacity without reliance on the target domain. It is worth mentioning that the modules we incorporated consume almost no computing resources.

Figure 10.

Scene segmentation results on different unstructured environment datasets before and after integrating SAN–SAW.

4.5.2. Ablation Experiment II

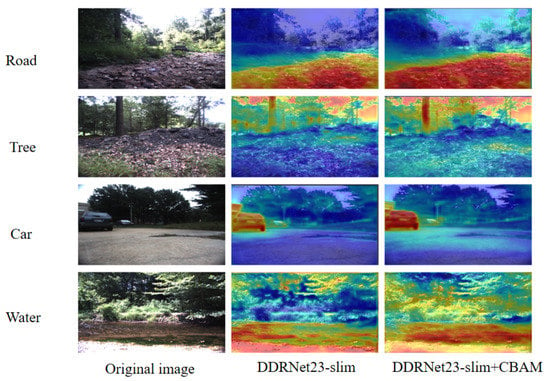

To validate the effectiveness of the fused channel attention and spatial attention, this study first incorporated the attention mechanism into the low-resolution branch of the standard DDRNet23-slim. The model was pre-trained on the ImageNet [33] dataset to obtain the initial weight parameters. It was then trained on the RUGD dataset and tested on the 833 test images of RUGD. The results, depicted in Figure 11, demonstrate a notable enhancement of the model’s ability to recognize roads, trees, cars, and water in the images. This is because, after fusing channel and spatial attention, the neural network is able to understand the spatial relationship between objects or regions in the image more accurately and focus on local regions, thus improving its perception ability. Furthermore, spatial attention helps the network distinguish adjacent objects or regions, consequently bolstering segmentation accuracy. Channel attention helps the network understand the relationship between different channels and capture the detailed features in the image to further refine the segmentation accuracy. Table 2 showcases the improvements in acc and IoU. As observed from the data in Table 2 and Table 3, the integration of channel attention and spatial attention into the base model increases the mAcc and mIoU by 3.26% and 3.02%, respectively. Furthermore, the results in Table 2 and Table 3 also show that adding the RCS significantly improves the evaluation metrics of rare classes such as obstacles, wood, rocks, and water by prioritizing the learning of uncommon classes with fewer samples. As such, the performance degradation caused by category imbalance in the dataset is mitigated, leading to improved overall performance of the model: the mAcc and mIoU are improved by 0.61% and 1.56%, respectively.

Figure 11.

Feature map visualization results before and after incorporating channel spatial attention.

Table 2.

Single-category pixel accuracy and intersection over union for different functional unit configurations (CBAM denotes the channel attention and spatial attention modules, and RCS denotes the rare class sampling strategy).

Table 3.

Segmentation performance of different functional unit configurations.

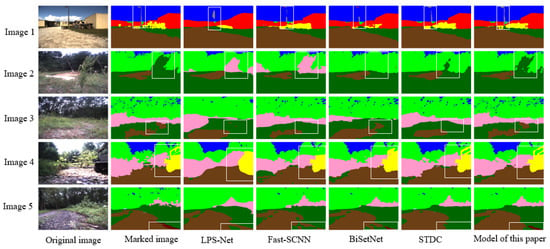

In this paper, we compare our proposed model with several lightweight semantic segmentation models, namely Fast-SCNN [34], BiSeNet [35], LPS-Net [36], and STDC [37]. All models are trained exclusively on the RUGD dataset. As depicted in Figure 12, our model exhibits superior performance in segmenting unstructured scenes compared to the other models. To elaborate further, LPS-Net is prone to information loss due to the fusion of high- and low-resolution features, resulting in blurred segmentation outcomes and limited perception of rare classes, as evidenced by the third column in the results. Fast-SCNN is mainly applicable to semantic segmentation of urban road scenes and has a network structure with a limited receptive field. As such, it may fail to capture enough contextual information in complex scenes, as demonstrated by the first row’s fourth column and the fifth row’s fourth column, where it struggles to accurately segment street lights and wood. BiSeNet incorporates the bilateral convolution module, which can balance global context and local details. However, the insufficient interaction between the two parallel branches can lead to confusion and loss of feature information, resulting in incomplete segmentation. For instance, the fourth column fails to segment street lights and high vegetation successfully. The STDC model uses an edge-based segmentation method. When the image edge is blurred or noisy, its segmentation quality is affected, which may lead to an inaccurate demarcation line between the foreground and background. As exemplified by the second row’s fifth column, the segmentation of high vegetation is not successful.

Figure 12.

Comparison between semantic segmentation results of the model presented in this paper and other models.

4.5.3. Model Performance Comparison

According to Table 4, it can be inferred that the model proposed in this paper achieves an mAcc of 85.21% and mIoU of 77.75%. This notable improvement can be attributed to the incorporation of channel attention and spatial attention, enabling the model to effectively capture detailed feature information and learn rare classes. The model also maintains a relatively low number of parameters, requiring only 5.75M parameters, thereby achieving real-time performance. Furthermore, when deployed on a ROS robot equipped with a Jetson Xavier NX, the model generates promising results with a frame rate of 30.17 FPS, achieving a balance of speed and accuracy for embedded devices.

Table 4.

Performance comparison of different network models.

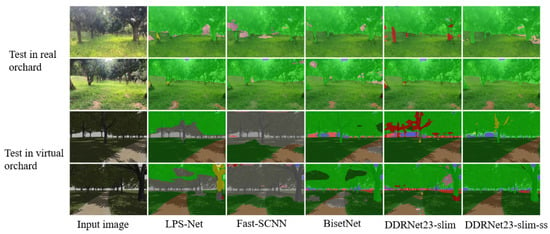
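The mAcc and mIoU values reported above are not defined in this section. As a minimal sketch, assuming the common confusion-matrix definitions (per-class accuracy and per-class intersection over union, each averaged over the classes that occur in the ground truth), they could be computed as follows. The function names and the toy labels are illustrative assumptions and are not taken from the authors' code.

```python
from typing import List, Sequence, Tuple


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int],
                     num_classes: int) -> List[List[int]]:
    """Count pixels; rows are ground-truth classes, columns are predictions."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def miou_and_macc(cm: List[List[int]]) -> Tuple[float, float]:
    """Return (mIoU, mAcc) averaged over classes present in the ground truth."""
    n = len(cm)
    ious, accs = [], []
    for c in range(n):
        tp = cm[c][c]                            # correctly labelled pixels of class c
        gt = sum(cm[c])                          # pixels whose true label is c
        pred = sum(cm[r][c] for r in range(n))   # pixels predicted as c
        if gt == 0:
            continue  # assumption: classes absent from the ground truth are skipped
        ious.append(tp / (gt + pred - tp))       # intersection over union
        accs.append(tp / gt)                     # per-class pixel accuracy
    return sum(ious) / len(ious), sum(accs) / len(accs)


# Toy example with three classes and six "pixels" (hypothetical labels).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
miou, macc = miou_and_macc(confusion_matrix(y_true, y_pred, num_classes=3))
print(f"mIoU={miou:.4f}, mAcc={macc:.4f}")
```

Because both metrics average over classes rather than pixels, a rare class that is segmented poorly lowers the score as much as a frequent one, which is why class imbalance matters for these numbers.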

4.5.4. Deployment on a ROS Robot and Testing in Real-World and Simulated Environments

To evaluate the generalization ability of the proposed model in an orchard environment, we deployed it on a ROS robot equipped with a Jetson Xavier NX and accelerated it using TensorRT. It is important to note that the model was not trained with any orchard-domain-specific information. We conducted tests in both real and virtual orchard environments. As depicted in Figure 13, the proposed model generalized better than the other models, segmenting trees and road contours more accurately and producing fewer misclassified regions. These results indicate that the proposed model has strong generalization ability, which can reduce the cost of manual labeling, and that it can serve as a transfer learning model for road recognition in orchard environments.

Figure 13.

Test results in real and virtual orchard environments.
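The section reports a frame rate but does not describe how it was measured. The sketch below shows one common way to time an inference loop, assuming a generic `infer` callable that stands in for the deployed model; `measure_fps`, `infer`, and the dummy frames are hypothetical names, and no TensorRT or ROS API is used here.

```python
import time
from typing import Callable, Iterable


def measure_fps(infer: Callable[[object], object],
                frames: Iterable[object],
                warmup: int = 5) -> float:
    """Average frames per second of `infer`, excluding warm-up calls."""
    frames = list(frames)
    if len(frames) <= warmup:
        raise ValueError("need more frames than warm-up iterations")
    for frame in frames[:warmup]:
        infer(frame)  # warm-up: let caches and lazy initialisation settle
    timed = frames[warmup:]
    start = time.perf_counter()
    for frame in timed:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(timed) / elapsed


def dummy_infer(frame: object) -> int:
    """Stand-in workload; a real deployment would call the segmentation model."""
    return sum(range(10_000))


fps = measure_fps(dummy_infer, range(50))
print(f"{fps:.2f} FPS")
```

On a GPU, inference calls are often asynchronous, so `infer` would typically need to wait for the result before returning; otherwise the loop measures launch time rather than inference time.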

5. Conclusions

Without adding computational overhead, DDRNet23-slim-SS improves generalization while preserving the real-time performance of the original model. Furthermore, it remains effective even with a limited amount of training data. It not only sustains performance on the source-domain RUGD dataset but also yields an average mIoU improvement of approximately 14% when evaluated on other unseen (invisible) unstructured domains.

Integrating channel attention and spatial attention into the network architecture of DDRNet23-slim improves mAcc and mIoU by 3.26% and 3.02%, respectively. The experiments show that the lightweight semantic segmentation model presented in this paper compares favorably with other lightweight semantic segmentation models in accuracy, segmentation speed, and number of parameters, striking a desirable balance between speed and accuracy. The experimental results also show that RCS mitigates, to a certain extent, the accuracy degradation caused by data imbalance.

Finally, we deployed our model on an agricultural robot running ROS and tested its generalization capability in both real and virtual orchard environments. Our model was trained exclusively on the RUGD dataset. The experimental results show that, although neither real nor virtual orchard data were used for training, our model generalizes across domains better than the alternative models. This mitigates the high cost of labeling to some extent. The applicability of this model extends beyond autonomous driving in mountainous terrain, including but not limited to forestry rescue and intelligent harvesting in agricultural orchards.

Author Contributions

N.L. conceptualized the experiment, selected the algorithms, collected and analyzed the data, and wrote the manuscript. M.Z. and S.L. trained the algorithms, collected and analyzed data, and wrote the manuscript. W.Z. supervised the project. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the open competition program of top ten critical priorities of Agricultural Science and Technology Innovation for the 14th Five-Year Plan of Guangdong Province (2022SDZG03) and the Guangdong Provincial Science and Technology Innovation Strategy Special Funds Project (pdjh2022b0078).

Data Availability Statement

The experimental dataset is a publicly available dataset, which can be obtained from the references. The model used in this study is not publicly available as it is part of the team’s upcoming work plan.

Acknowledgments

N.L. thanks S.L. for support and help, W.Z. for supervision, and M.Z. for revising the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Grigorescu, S.; Trasnea, B.; Cocias, T.; Macesanu, G. A survey of deep learning techniques for autonomous driving. J. Field Robot. 2020, 37, 362–386.

- Gupta, A.; Anpalagan, A.; Guan, L.; Khwaja, A.S. Deep learning for object detection and scene perception in self-driving cars: Survey, challenges, and open issues. Array 2021, 10, 100057.

- De Silva, V.; Roche, J.; Kondoz, A. Fusion of LiDAR and camera sensor data for environment sensing in driverless vehicles. arXiv 2018, arXiv:1710.06230v2.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

- Wigness, M.; Eum, S.; Rogers, J.G.; Han, D.; Kwon, H. A rugd dataset for autonomous navigation and visual perception in unstructured outdoor environments. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 5000–5007.

- Hoang, N.D.; Nguyen, Q.L. Fast local laplacian-based steerable and sobel filters integrated with adaptive boosting classification tree for automatic recognition of asphalt pavement cracks. Adv. Civ. Eng. 2018, 2018, 5989246.

- Zhao, H.; Qin, G.; Wang, X. Improvement of canny algorithm based on pavement edge detection. In Proceedings of the 2010 3rd International Congress on Image and Signal Processing, Yantai, China, 16–18 October 2010.

- Huang, X.; Zhang, L. Road centreline extraction from high-resolution imagery based on multiscale structural features and support vector machines. Int. J. Remote Sens. 2009, 30, 1977–1987.

- Shelhamer, E.; Long, J.; Darrell, T. Fully convolutional networks for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18. Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Baheti, B.; Innani, S.; Gajre, S.; Talbar, S. Eff-unet: A novel architecture for semantic segmentation in unstructured environment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 358–359.

- Liu, H.; Yao, M.; Xiao, X.; Cui, H. A hybrid attention semantic segmentation network for unstructured terrain on Mars. Acta Astronaut. 2023, 204, 492–499.

- Jin, Y.; Han, D.; Ko, H. Trseg: Transformer for semantic segmentation. Pattern Recognit. Lett. 2021, 148, 29–35.

- Guan, T.; Kothandaraman, D.; Chandra, R.; Sathyamoorthy, A.J.; Weerakoon, K.; Manocha, D. Ga-nav: Efficient terrain segmentation for robot navigation in unstructured outdoor environments. IEEE Robot. Autom. Lett. 2022, 7, 8138–8145.

- Ganin, Y.; Lempitsky, V. Unsupervised domain adaptation by backpropagation. In Proceedings of the International Conference on Machine Learning, PMLR, Lille, France, 6–11 July 2015; pp. 1180–1189.

- Gan, C.; Yang, T.; Gong, B. Learning attributes equals multi-source domain generalization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 87–97.

- Pan, H.; Hong, Y.; Sun, W.; Jia, Y. Deep dual-resolution networks for real-time and accurate semantic segmentation of traffic scenes. IEEE Trans. Intell. Transp. Syst. 2022, 24, 3448–3460.

- Peng, D.; Lei, Y.; Hayat, M.; Guo, Y.; Li, W. Semantic-aware domain generalized segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2594–2605.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Hoyer, L.; Dai, D.; Van Gool, L. Daformer: Improving network architectures and training strategies for domain-adaptive semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 9924–9935.

- Zhou, K.; Liu, Z.; Qiao, Y.; Xiang, T.; Loy, C.C. Domain generalization in vision: A survey. arXiv 2021, arXiv:2103.02503.

- Li, Y.; Fang, C.; Yang, J.; Wang, Z.; Lu, X.; Yang, M.H. Universal style transfer via feature transforms. In Proceedings of the Advances in Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; 30.

- Cho, W.; Choi, S.; Park, D.K.; Shin, I.; Choo, J. Image-to-image translation via group-wise deep whitening-and-coloring transformation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10639–10647.

- Jadon, S. A survey of loss functions for semantic segmentation. In Proceedings of the 2020 IEEE Conference on Computational Intelligence in Bioinformatics and Computational Biology (CIBCB), Virtual, 27–29 October 2020; pp. 1–7.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Brostow, G.J.; Fauqueur, J.; Cipolla, R. Semantic object classes in video: A high-definition ground truth database. Pattern Recognit. Lett. 2009, 30, 88–97.

- Behley, J.; Garbade, M.; Milioto, A.; Quenzel, J.; Behnke, S.; Stachniss, C.; Gall, J. Semantickitti: A dataset for semantic scene understanding of lidar sequences. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9297–9307.

- Jiang, P.; Osteen, P.; Wigness, M.; Saripalli, S. Rellis-3d dataset: Data, benchmarks and analysis. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 1110–1116.

- Valada, A.; Oliveira, G.L.; Brox, T.; Burgard, W. Deep multispectral semantic scene understanding of forested environments using multimodal fusion. In Proceedings of the 2016 International Symposium on Experimental Robotics, Tokyo, Japan, 3–6 October 2016; Springer: Berlin/Heidelberg, Germany, 2017; pp. 465–477.

- Maturana, D.; Chou, P.W.; Uenoyama, M.; Scherer, S. Real-time semantic mapping for autonomous off-road navigation. In Proceedings of the Field and Service Robotics: Results of the 11th International Conference, Zurich, Switzerland, 12–15 September 2017; Springer: Berlin/Heidelberg, Germany, 2018; pp. 335–350.

- Yang, Z.; Tan, Y.; Sen, S.; Reimann, J.; Karigiannis, J.; Yousefhussien, M.; Virani, N. Uncertainty-aware Perception Models for Off-road Autonomous Unmanned Ground Vehicles. arXiv 2022, arXiv:2209.11115.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Poudel, R.P.; Liwicki, S.; Cipolla, R. Fast-scnn: Fast semantic segmentation network. arXiv 2019, arXiv:1902.04502.

- Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. Bisenet: Bilateral segmentation network for real-time semantic segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 325–341.

- Zhang, Y.; Yao, T.; Qiu, Z.; Mei, T. Lightweight and Progressively-Scalable Networks for Semantic Segmentation. arXiv 2022, arXiv:2207.13600.

- Fan, M.; Lai, S.; Huang, J.; Wei, X.; Chai, Z.; Luo, J.; Wei, X. Rethinking bisenet for real-time semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 9716–9725.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).