Abstract

Optimization approaches that determine sensitive sensor nodes in a large-scale, linear time-invariant, and discrete-time dynamical system are examined under the assumption of independent and identically distributed measurement noise. This study offers two novel selection algorithms, namely an approximate convex relaxation method with the Newton method and a gradient greedy method, and confirms the performance of the selection methods, including a convex relaxation method with semidefinite programming (SDP) and a pure greedy optimization method proposed in previous studies. The matrix determinant of the observability Gramian was employed to evaluate the sensor subsets, while its gradient and Hessian were derived for the proposed methods. In the demonstration using numerical and real-world examples, the proposed gradient greedy method showed superiority in the run time when the sensor numbers were roughly the same as the dimensions of the latent system. The relaxation method with SDP is confirmed to be the most reasonable approach for a system with randomly generated matrices of higher dimensions. However, the degradation of the optimization results was also confirmed in the case of real-world datasets, while the pure greedy selection obtained the most stable optimization results.

1. Introduction

Collecting information from sensor measurements is often the only viable approach when estimating the internal state or hidden physical quantities. The optimization of sensor positions has been intensively discussed in order to determine the most representative sensors and to reduce the resulting estimation error, such as when monitoring sensor networks [1,2,3,4], fluid flows around objects [5,6,7,8,9,10,11,12,13,14,15], plants and factories [16,17,18], infrastructures [19,20,21], circuits [22], and biological systems [23], estimating physical fields [24,25,26,27], and localizing sources [28,29]. Recent advances in data science techniques have enabled us to extract reduced-order models from vastly large-scale measurements of complex phenomena [30,31,32,33,34,35,36,37,38,39]. Therefore, optimized sensor measurement is gaining importance for the reconstruction of complex phenomena from sensor measurements based on data-driven reduced-order models [40,41], as well as for model-free machine learning [42,43] and data assimilation [44,45,46].

The physical aspects of the phenomena are interpreted as state space models, while the assumed system representations are often nominal. Our study implements optimization frameworks for sensor node selections based on the criteria of expected estimation errors defined for these models. Sensor measurements in many nondynamical configurations are statistically described by the Fisher information matrix (FIM), which originated in the parameter sensitivity analysis [47,48]. This measure addresses the expected error ellipsoid of the estimation using instantaneous measurements, which corresponds to the inverse of the Cramér–Rao bound, see Chapter 8 of [49,50]. The combinatorial problem structure is transformed into small subproblems in a greedy formulation [40,41,51], whose solution quality is known to be ensured by the submodularity property in such optimization problems [52,53,54,55,56]. This property of the optimization problem helps to exploit other criteria from information theory, including entropy and various types of information distances [3,57,58]. Moreover, greedy selections are empirically confirmed to work effectively even in the absence of submodularity [51,59,60]. There are also other approaches that seek the global optimum by relaxing the integer constraints on the selection variable [50,61], or by employing proximal algorithms that form proximity operators [62,63,64].

The subset selection of sensor nodes for dynamical systems is similarly conducted based on the estimation error covariance, while the measurement quality evaluation tends to be more cumbersome. The observability Gramian is the counterpart of the FIM for linear time-invariant (LTI) representations with deterministic dynamics, as shown in [65]. The actuator node selection, which is a dual problem of the sensor node selection, is similarly obtained using the controllability Gramian [66,67]. It is worth emphasizing that previous analyses used examples of random and regular networks with only tens or hundreds of nodes due to the computational cost. For example, a previous study reported a tremendous increase in the computation time of the greedy method and the SDP relaxation method for a power grid system of such a size [66]. There are likely to be computational issues when the optimization objective is further extended to the observability of nonlinear state space models [44,68,69], the error covariance of the Kalman filter [70,71,72,73,74], and the norm of an LTI system, which can be reduced through balanced truncation [75,76,77]. Accordingly, the main interest of this study is the applicability of the Gramian-based methods to larger-scale systems constructed by data-driven modeling methods.

The main claims of this paper are listed below:

Novel selection methods proposed: Two novel selection methods are proposed and implemented. These two methods utilize the gradients of the objective functions while relaxing them to continuous functions. Computations in both methods are expected to be remarkably accelerated as compared to those of existing greedy or relaxation-based algorithms since the gradients of objective functions are mere quadratic forms of the dimensions of sensor candidates.

Comparison using high-dimensional and low-rank systems: A comparison of selection strategies is also provided in Section 4 to illustrate the characteristics of each selection method in terms of the execution time and the acquired optimization measure. This comparison also examines the effective computational complexity of each selection method, which differs from the results of the previous study.

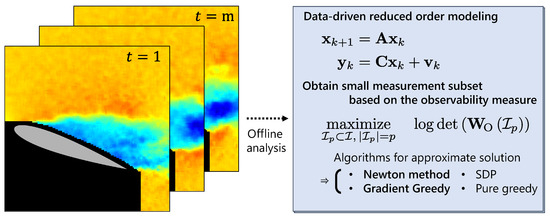

Figure 1 depicts the main framework of this manuscript. In the following sections, the basic formulation of the optimization problem is presented in Section 2 after providing the dynamical system of interest. Section 3 subsequently addresses our novel algorithms for optimization, while the previously presented approaches are briefly included. The systems are constructed in two different ways for comparison: one is constructed via random numbers as a general case in Section 4.1, and in the other case, a data-driven method using proper orthogonal decomposition (POD) [78] is applied to real-world datasets, as seen in Section 4.2 and Section 4.3.

Figure 1.

Brief description of this manuscript. (Left) The representative data point is revealed from “rich” measurement data by use of a data-driven model and optimization procedure. (Right) A data-driven method constructs linear reduced-order models before the optimization is conducted by approximate algorithms, including our novel methods, denoted by bold types.

2. LTI State Space and the Observability Gramian

This section provides rudimentary formulations that represent dynamics and optimization tasks based on the observability of the system. Problem settings are first presented with basic equations while notations for our formulation are defined. The term “observability” is omitted hereafter unless it causes confusion with the controllability Gramian or other Gram matrices. Note that the methodologies presented here apply similarly to controllability optimization since these properties are dual for linear systems. A discrete-time LTI state space model is considered with $r$ states and $n$ measurements. Let $(\mathbf{A}, \mathbf{C})$ be an observable pair, with $\mathbf{A} \in \mathbb{R}^{r \times r}$ and $\mathbf{C} \in \mathbb{R}^{n \times r}$, and an autonomous system

$$\mathbf{x}_{k+1} = \mathbf{A}\mathbf{x}_k, \tag{1}$$

$$\mathbf{y}_k = \mathbf{C}\mathbf{x}_k \tag{2}$$

generates a trajectory of state variables $\mathbf{x}_k \in \mathbb{R}^r$ and observations $\mathbf{y}_k \in \mathbb{R}^n$. The subscript $k$ refers to a snapshot at the instance k. Assume the observation Equation (2) is corrupted by a Gaussian noise $\mathbf{v}_k$, which is independent of the state and has the variance of the same amplitude, $\mathrm{E}[\mathbf{v}_k \mathbf{v}_k^{\top}] = \sigma^2 \mathbf{I}_n$, where $\mathbf{I}_n$ is the identity matrix of size n. Here, $\mathrm{E}[\cdot]$ takes the expectation over the random variable. Some data-driven techniques systematically provide this linear representation from the measured data, such as proper orthogonal decomposition and dynamic mode decomposition [37,38]. In the state space representation obtained by the data-driven techniques, the state vector represents coordinates of a low-dimensional subspace inherent in the high-dimensional space of the measurement vector and, therefore, $r < n$ is assumed hereafter. The observability of the system is quantified by the Gramian

$$\mathbf{W}_o = \sum_{k=0}^{\infty} (\mathbf{A}^{\top})^k \mathbf{C}^{\top} \mathbf{C} \mathbf{A}^k. \tag{3}$$

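In our formulation, this matrix is characterized by the linear least-squares estimation of the initial state vector $\mathbf{x}_0$ that utilizes time-series measurements. The measurement equation is extended first, using $\mathbf{y}_0$ to $\mathbf{y}_{m-1}$ of Equations (1) and (2):

$$\tilde{\mathbf{y}} := \begin{bmatrix} \mathbf{y}_0 \\ \mathbf{y}_1 \\ \vdots \\ \mathbf{y}_{m-1} \end{bmatrix} = \begin{bmatrix} \mathbf{C} \\ \mathbf{C}\mathbf{A} \\ \vdots \\ \mathbf{C}\mathbf{A}^{m-1} \end{bmatrix} \mathbf{x}_0 =: \mathcal{O}\,\mathbf{x}_0, \tag{4}$$

where $\tilde{\mathbf{y}}$ and $\mathcal{O}$ are the stacked components in the brackets in Equation (4). An estimate of $\mathbf{x}_0$ is obtained by the linear inversion as follows:

$$\hat{\mathbf{x}}_0 = \mathcal{O}^{+} \tilde{\mathbf{y}}, \tag{5}$$

where $\hat{\mathbf{x}}_0$ and $(\cdot)^{+}$ stand for the estimate of $\mathbf{x}_0$ and the Moore–Penrose pseudo inverse, respectively. The matrix $\mathcal{O}$ is assumed to have full column rank, which holds for an observable pair $(\mathbf{A}, \mathbf{C})$ and a sufficiently long horizon $m$. An error covariance matrix under the estimation is proportional to the inverse matrix of the Gramian, as shown in the following equation:

$$\mathrm{E}\!\left[(\mathbf{x}_0 - \hat{\mathbf{x}}_0)(\mathbf{x}_0 - \hat{\mathbf{x}}_0)^{\top}\right] = \sigma^2 \left(\mathcal{O}^{\top}\mathcal{O}\right)^{-1} = \sigma^2 \left(\sum_{k=0}^{m-1} (\mathbf{A}^{\top})^k \mathbf{C}^{\top}\mathbf{C}\mathbf{A}^k\right)^{-1} \;\xrightarrow{\,m \to \infty\,}\; \sigma^2 \mathbf{W}_o^{-1}. \tag{6}$$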
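The link between the stacked least-squares problem and the Gramian can be checked numerically. The following is a minimal sketch in Python with NumPy (not the authors' MATLAB code); the system matrices, sizes, and variable names are illustrative assumptions. It builds the stacked matrix of the extended measurement equation, recovers the initial state by the pseudo-inverse from noise-free data, and confirms that the normal matrix equals the Gramian series truncated at the same horizon.

```python
import numpy as np

rng = np.random.default_rng(0)
r, n, m = 3, 6, 5                      # states, sensors, stacked snapshots (illustrative)

# Hypothetical stable system: random A rescaled to spectral radius 0.9, random C
A = rng.standard_normal((r, r))
A *= 0.9 / np.max(np.abs(np.linalg.eigvals(A)))
C = rng.standard_normal((n, r))

# Stacked matrix [C; CA; ...; CA^(m-1)] of the extended measurement equation
blocks, Ak = [], np.eye(r)
for _ in range(m):
    blocks.append(C @ Ak)
    Ak = Ak @ A
O = np.vstack(blocks)

x0 = rng.standard_normal(r)
y_stacked = O @ x0                      # noise-free measurements, for a clean check
x0_hat = np.linalg.pinv(O) @ y_stacked  # linear inversion by the pseudo-inverse

# O^T O is the Gramian series truncated after m terms
W_m = np.zeros((r, r))
for k in range(m):
    Ak = np.linalg.matrix_power(A, k)
    W_m += Ak.T @ C.T @ C @ Ak
```

With noisy measurements of variance $\sigma^2$, the covariance of `x0_hat` would be $\sigma^2$ times the inverse of `W_m`, which is why a "large" Gramian corresponds to a small estimation error.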

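Here, let a small number of sensors be deployed while maintaining the quality of state estimation. The optimization problem is formulated as a selection of a subset $\mathcal{S}$ of $p$ sensors from all available n measurement nodes, labeled as $\{1, \dots, n\}$, where a measure of the Gramian is optimized under some constraints. A permutation matrix that extracts a part of the measurement corresponding to the sensor indices $\mathcal{S} = \{i_1, \dots, i_p\}$ is defined by

$$\mathbf{P}_{\mathcal{S}} = \begin{bmatrix} \mathbf{e}_{i_1} & \mathbf{e}_{i_2} & \cdots & \mathbf{e}_{i_p} \end{bmatrix}^{\top} \in \mathbb{R}^{p \times n}, \tag{7}$$

where a unit vector $\mathbf{e}_i \in \mathbb{R}^n$ has unity in the i-th entry, and the rest is zero. The Gramian given by the selected sensors is rewritten as a subset function

$$\mathbf{W}_{\mathcal{S}} = \sum_{k=0}^{\infty} (\mathbf{A}^{\top})^k \mathbf{C}^{\top} \mathbf{P}_{\mathcal{S}}^{\top} \mathbf{P}_{\mathcal{S}} \mathbf{C} \mathbf{A}^k \tag{8}$$

and, therefore, $\mathbf{W}_{\mathcal{S}} = \sum_{i \in \mathcal{S}} \mathbf{W}_{\{i\}}$. Here, $\mathbf{W}_{\mathcal{S}}$ can be calculated by solving the following Lyapunov equation:

$$\mathbf{A}^{\top} \mathbf{W}_{\mathcal{S}} \mathbf{A} - \mathbf{W}_{\mathcal{S}} = -\mathbf{C}^{\top} \mathbf{P}_{\mathcal{S}}^{\top} \mathbf{P}_{\mathcal{S}} \mathbf{C}. \tag{9}$$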
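As an illustration of how the Gramian of a sensor subset follows from the Lyapunov equation, the sketch below solves the equation by Kronecker vectorization and cross-checks the result against the truncated series. It is written in Python with NumPy as an assumption of this example; the helper name `gramian`, the random system, and the index set are hypothetical, and a dedicated solver (such as the `dlyap` function used later in the paper) would be preferable for large r.

```python
import numpy as np

def gramian(A, C):
    """Solve A^T W A - W + C^T C = 0 by Kronecker vectorization (small r only)."""
    r = A.shape[0]
    # Row-major vectorization: vec(A^T W A) = kron(A^T, A^T) vec(W)
    K = np.eye(r * r) - np.kron(A.T, A.T)
    return np.linalg.solve(K, (C.T @ C).reshape(-1)).reshape(r, r)

rng = np.random.default_rng(1)
r, n = 3, 8
A = rng.standard_normal((r, r))
A *= 0.8 / np.max(np.abs(np.linalg.eigvals(A)))   # make A stable
C = rng.standard_normal((n, r))

S = [1, 4, 6]                    # hypothetical selected sensor indices
C_S = C[S, :]                    # applying the selection matrix picks rows of C
W_S = gramian(A, C_S)

# Cross-check against the defining series, truncated after many terms
W_series, Ak = np.zeros((r, r)), np.eye(r)
for _ in range(400):
    W_series += Ak.T @ C_S.T @ C_S @ Ak
    Ak = Ak @ A

residual = A.T @ W_S @ A - W_S + C_S.T @ C_S
```

The Kronecker system has size r² by r², so this approach is only meant for small illustrative problems.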

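Some measures for the optimization of the Gramian were presented in [66]. A maximization of the determinant of the Gramian is configured in the current study. The maximization problem is as follows:

$$\underset{\mathcal{S} \subset \{1, \dots, n\},\; |\mathcal{S}| = p}{\text{maximize}} \;\; \log\det \mathbf{W}_{\mathcal{S}}, \tag{10}$$

where $\mathbf{W}_{\mathcal{S}}$ denotes the Gramian given by the sensor subset $\mathcal{S}$. The logarithmic form, which is monotone, is considered for the ease of calculations in the algorithms. This determinant maximization strategy is commonly used in the sensor placement and optimal design of interpolation methods [40,79].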

3. Sensor Selection Strategies

The optimization problem (10) is a combinatorial problem and, therefore, finding the true optimum is computationally prohibitive. This section introduces four methods, as shown in Table 1, for obtaining a suboptimal yet reasonable solution to (10). Section 3.1 deals with convex relaxation approaches to (10), where the notations of SDP and approximate convex relaxation stand for the selection problems based on the semidefinite programming (SDP) of [67] and an approximate smooth convex relaxation for the Newton method, which is extended from [50], respectively. Subsequently, Section 3.2 provides formulations of the pure greedy and gradient greedy methods; the former is a simplified greedy implementation of [66] for the matrix determinant maximization, while the latter is its linear approximation. The computational complexities are discussed in Section 3.3.

Table 1.

Selection algorithms for each relaxed problem and the expected arithmetic complexity order based on the basic matrix operations. Several approaches are proposed in the presented paper.

3.1. Convex Relaxation Methods

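This strategy replaces the Gramian by the weighted sum of the Gramians of the individual sensor candidates, namely the relaxed Gramian. Let the sum of the selection weights be p; the relaxed optimization problem of interest is:

$$\underset{\mathbf{z} \in \mathbb{R}^n}{\text{maximize}} \;\; \log\det\left(\sum_{i=1}^{n} z_i \mathbf{W}_i\right) \quad \text{subject to} \quad \mathbf{1}^{\top}\mathbf{z} = p, \quad 0 \le z_i \le 1 \;\; (i = 1, \dots, n), \tag{11}$$

where $z_i$ is the selection weight of the i-th sensor candidate and $\mathbf{W}_i$ is the Gramian given by the i-th sensor alone.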

This “weight” formulation is widely used in various types of sensor selection, including linear inverse and Kalman filter estimations [50,61]. The “gain” formulation, on the other hand, is employed to optimize the gains for yielding state variables from measurements. The group regularization is therein adopted to distinguish the representative measurement locations, which can also be found in the literature on sensor selection for linear inverse and Kalman filter estimations [62,63,64,80]. To the best of our knowledge, there is no existing study in which the gain formulation is applied to the sensor selection based on the observability Gramian. This may be because the Gramian is related to an infinite series of temporal measurements, and so are the gains for such measurements. Truncating Equation (6) to a finite time horizon is obviously a possible option, but dealing with a large solution vector and defining an appropriate finite time horizon as a hyperparameter are practically infeasible. Accordingly, the implementation of the gain formulation remains an interesting challenge.
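The weight formulation relies on the Gramian being linear in the measurement term, so a weighted sum of single-sensor Gramians reproduces the subset Gramian when the weights are 0 or 1. A minimal sketch of this property follows, in Python with NumPy; the helper `lyap`, the random system, and the names `relaxed_gramian`, `z_ind`, and `z_frac` are assumptions made for illustration only.

```python
import numpy as np

def lyap(A, Q):
    """Solve A^T W A - W + Q = 0 by Kronecker vectorization (small r only)."""
    r = A.shape[0]
    K = np.eye(r * r) - np.kron(A.T, A.T)
    return np.linalg.solve(K, Q.reshape(-1)).reshape(r, r)

rng = np.random.default_rng(2)
r, n, p = 3, 6, 3
A = rng.standard_normal((r, r))
A *= 0.8 / np.max(np.abs(np.linalg.eigvals(A)))
C = rng.standard_normal((n, r))

# Gramian of each single sensor; c_i is the i-th row of C
W_single = [lyap(A, np.outer(C[i], C[i])) for i in range(n)]

def relaxed_gramian(z):
    """Weighted sum of single-sensor Gramians (the relaxed Gramian)."""
    return sum(zi * Wi for zi, Wi in zip(z, W_single))

S = [0, 2, 5]
z_ind = np.zeros(n)
z_ind[S] = 1.0                               # indicator weights of the subset S
W_S = lyap(A, C[S].T @ C[S])                 # Gramian of the subset, computed directly

z_frac = np.full(n, p / n)                   # a fractional point with sum(z) = p
logdet_frac = np.linalg.slogdet(relaxed_gramian(z_frac))[1]
```

Fractional weights such as `z_frac` are feasible for the relaxed problem but do not correspond to any sensor subset, which is why a rounding step (taking the p largest weights) is needed afterwards.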

3.1.1. Semidefinite Programming-Based Selection (SDP)

Convex relaxation for Gramian optimization was previously introduced in Section II-B of [67]; readers should refer to the original work for more details. Here, the optimization problem is briefly revisited in the discrete-time form and is included in Section 4 for a comparison of selection methods. The optimization problem (11) is transformed into the following SDP representation:

$$\underset{\mathbf{z},\, \mathbf{W}}{\text{maximize}} \;\; \log\det \mathbf{W} \quad \text{subject to} \quad \mathbf{A}^{\top}\mathbf{W}\mathbf{A} - \mathbf{W} + \sum_{i=1}^{n} z_i \mathbf{c}_i^{\top}\mathbf{c}_i = \mathbf{O}, \quad \mathbf{1}^{\top}\mathbf{z} = p, \quad 0 \le z_i \le 1, \tag{12}$$

where $\mathbf{c}_i$ is the i-th row of the measurement matrix $\mathbf{C}$, and $\mathbf{O}$ is a zero matrix with appropriate dimensions. It should be noted that the Lyapunov equation, imposed as a constraint in the problem, can be satisfied by the SDP solvers introduced later. The complexity in Table 1 is based on a path-following method for a general primal–dual interior point method [81].

3.1.2. Newton Method for Approximate Convex Relaxation, and Its Customized Algorithm with Randomized Subspace Sampling (Approximate Convex Relaxation)

This novel method solves the convex relaxation problem (11) using the Newton method and a customized randomization technique, with an added penalty term that bounds the weight variables.

The description of the proposed method starts with the extension of the formulation of sensor selection for static systems first introduced in [50].

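In their approach, the Newton method solved a weight formulation of a determinant optimization for the FIM of the linear inverse problem, and the indices of the p largest weights were returned as the result of a heuristic sensor node selection. In this study, the idea above is straightforwardly extended to the Gramian. A smooth convex objective function approximates Equation (10) as follows:

$$\underset{\mathbf{z} \in \mathbb{R}^n}{\text{maximize}} \;\; f(\mathbf{z}) = \log\det\left(\sum_{i=1}^{n} z_i \mathbf{W}_i\right) + \kappa \sum_{i=1}^{n} \left(\log z_i + \log(1 - z_i)\right) \quad \text{subject to} \quad \mathbf{1}^{\top}\mathbf{z} = p, \tag{13}$$

with $\kappa > 0$, which adjusts the smoothness of the objective function, and with $\mathbf{W}_i$ denoting the Gramian given by the i-th sensor alone. A Newton step $\delta\mathbf{z}$ is determined by minimizing the second-order approximation of the objective function under the constraint $\mathbf{1}^{\top}\delta\mathbf{z} = 0$, as discussed in Section 10.2 of [50,81], with notation f referring to the objective function in Equation (13), as shown below:

$$\delta\mathbf{z} = -\left(\nabla^2 f\right)^{-1} \nabla f + \frac{\mathbf{1}^{\top} \left(\nabla^2 f\right)^{-1} \nabla f}{\mathbf{1}^{\top} \left(\nabla^2 f\right)^{-1} \mathbf{1}} \left(\nabla^2 f\right)^{-1} \mathbf{1}. \tag{14}$$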

The first and second derivatives, with respect to the selection weight , are given by

where is the Kronecker delta, and

is the solution of the following Lyapunov equation;

The procedure is carried out according to Algorithm 1, where the iteration is completed when the norm of the solution update falls below the threshold given.

| Algorithm 1 Newton algorithm for Equation (13) |

| Input: |

| Output: Indices of chosen p sensor positions |

| Set an initial weight |

| while convergence condition not satisfied do |

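| Calculate the gradient by Equation (15) and the Hessian by Equation (16) |

| Calculate the Newton step by Equation (14) |

| Obtain step size t by backtracking line search |

| Update the weight by adding the Newton step scaled by t |

| end while |

| Return the indices of the p-largest components of the weight as the selected sensor positions |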
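The Newton iteration described above can be sketched as follows. This is a minimal Python/NumPy illustration, not the authors' MATLAB implementation: it assumes the log-barrier form of the smoothed objective in the style of [50], writes the derivatives in trace form rather than through Lyapunov solutions, and uses hypothetical names and default values (`newton_select`, `kappa`, `tol`, the line-search constants) throughout.

```python
import numpy as np

def sensor_gramians(A, C):
    """Gramian W_i of each single sensor: A^T W_i A - W_i + c_i^T c_i = 0."""
    r = A.shape[0]
    K = np.eye(r * r) - np.kron(A.T, A.T)          # row-major vectorization
    Q = np.stack([np.outer(c, c).ravel() for c in C], axis=1)
    return np.linalg.solve(K, Q).T.reshape(-1, r, r)

def objective(z, Wi, kappa):
    """Smoothed objective; -inf outside the open box 0 < z < 1."""
    if np.any(z <= 0) or np.any(z >= 1):
        return -np.inf
    sign, logdet = np.linalg.slogdet(np.tensordot(z, Wi, axes=1))
    if sign <= 0:
        return -np.inf
    return logdet + kappa * np.sum(np.log(z) + np.log(1 - z))

def newton_select(A, C, p, kappa=1e-2, tol=1e-8, max_iter=100):
    n = C.shape[0]
    Wi = sensor_gramians(A, C)
    z = np.full(n, p / n)                           # feasible start: sum(z) = p
    ones = np.ones(n)
    for _ in range(max_iter):
        W = np.tensordot(z, Wi, axes=1)             # relaxed Gramian
        M = np.linalg.inv(W) @ Wi                   # M[i] = W^{-1} W_i
        g = np.trace(M, axis1=1, axis2=2) + kappa * (1 / z - 1 / (1 - z))
        H = -np.einsum('iab,jba->ij', M, M)         # -tr(W^{-1} W_i W^{-1} W_j)
        H -= np.diag(kappa * (1 / z**2 + 1 / (1 - z)**2))
        Hg, H1 = np.linalg.solve(H, g), np.linalg.solve(H, ones)
        dz = -Hg + (ones @ Hg) / (ones @ H1) * H1   # step that keeps sum(z) = p
        # Backtracking line search for a maximization problem
        t, f0, slope = 1.0, objective(z, Wi, kappa), g @ dz
        while t > 1e-12 and objective(z + t * dz, Wi, kappa) < f0 + 0.01 * t * slope:
            t *= 0.5
        z = z + t * dz
        if np.linalg.norm(t * dz) < tol:            # update small enough: stop
            break
    return np.sort(np.argsort(z)[-p:]), z

rng = np.random.default_rng(0)
r, n, p = 3, 12, 4
A = rng.standard_normal((r, r))
A *= 0.8 / np.max(np.abs(np.linalg.eigvals(A)))
C = rng.standard_normal((n, r))
selected, z_opt = newton_select(A, C, p)
```

Forming and factorizing the n-by-n Hessian dominates the cost of each iteration in this sketch, which is the step the randomized variant below is meant to relieve.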

One of the most computationally demanding steps of the Newton method in Section 3.1.2 is the inverse of the Hessian in Equation (14), approximately reaching . The rest of this section describes an efficiency improvement for the Newton method of Algorithm 1, as the computational cost was relaxed using small sketches, as in [82,83]. The dimension of the sketched Newton step is , where the results for in [83] showed a drastic reduction in the total computation time for convergence in spite of the increase in the number of iterations. In this study, a sketching matrix is constructed using a permutation matrix for ease of computation, as summarized in the following and in Algorithm 2. The subspace referring to the permutation is randomly assigned from the n components, which is indexed by;

This random selection is biased according to the selection weights in the previous iteration. This heuristic leads to a reasonable acceleration of the convergence because the sensors that gain higher weights in the first few iterations are more likely to be updated frequently. In this study, half of the permutation coordinates correspond to the indices of the highest weights. The other half of the permutation coordinates are randomly selected from the remaining dimensions, which further accelerates the exploration of sensor candidates.

Accordingly, the calculations of the gradient (the first term in Equation (15)) and the Hessian (also the first term in Equation (16)) are simplified to the subspace indexed by . These subsampled derivatives and the derived Newton step are denoted by , , and The criterion of the convergence is modified from that in Algorithm 1 due to the randomness, where the algorithm stops if the update size is less than a given threshold in consecutive iterations.

| Algorithm 2 Customized algorithm of Algorithm 1 (BRS-Newton) |

| Input: |

| Output: Indices of chosen p sensor positions |

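| Set an initial weight |

| while convergence condition not satisfied do |

| Select the index subset by Equation (19) and set the corresponding permutation matrix |

| Calculate the subsampled gradient and Hessian |

| Calculate the subsampled Newton step |

| Obtain step size t by backtracking line search |

| Update the weights in the selected subspace by the subsampled Newton step scaled by t |

| end while |

| Return the indices of the p-largest components of the weight as the selected sensor positions |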
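The biased random choice of the subspace can be illustrated with a few lines of Python. This is a sketch under assumptions: the function name `biased_random_subset`, the argument `n_sub` for the sketch dimension, and the handling of odd sizes are hypothetical, and only the half-and-half rule stated in the text is taken from the source.

```python
import numpy as np

def biased_random_subset(z, n_sub, rng):
    """Pick n_sub indices: half with the largest weights, half at random from the rest."""
    n_top = n_sub // 2
    order = np.argsort(z)[::-1]                 # indices sorted by decreasing weight
    top = order[:n_top]
    rest = rng.choice(order[n_top:], size=n_sub - n_top, replace=False)
    return np.sort(np.concatenate([top, rest]))

rng = np.random.default_rng(0)
z = np.arange(10) / 10.0                        # toy weights; index 9 is the largest
idx = biased_random_subset(z, 4, rng)

# The subsampled Newton system only involves the selected coordinates
g = rng.standard_normal(10)                     # placeholder gradient
H = -np.eye(10)                                 # placeholder (negative definite) Hessian
g_sub, H_sub = g[idx], H[np.ix_(idx, idx)]
```

Because the selection changes at every iteration, a single small update is not a reliable sign of convergence, which matches the modified stopping rule that requires small updates over consecutive iterations.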

3.2. Greedy Algorithms

This type of algorithm sequentially adds the most effective sensor to the subset determined in the previous greedy iterations. The greedy algorithms, therefore, approximate the combinatorial aspect of the original optimization problem, yet preserve the discrete optimization structure. However, even the greedy selection is empirically known to be prohibitive for systems with high-dimensional state vectors and observation vectors; therefore, acceleration techniques are often applied [55,56]. Section 3.2.2 provides an approximated form of the greedy selection.

3.2.1. Greedy Selection with Simple Evaluation (Pure Greedy)

In the selection of the q-th sensor, where $q \in \{1, \dots, p\}$, the Gramian is obtained from the algebraic Lyapunov Equation (9). The submodularity of the objective function guarantees the quality of greedy solutions [52,53,84], and the derivation of the submodularity of several metrics related to the Gramian is discussed in detail in [66]. This method starts with finding the most effective single sensor; therefore, the determinant can be zero if observability is not achieved by any single sensor. As mentioned in [66], one may evaluate the objective function on the observable subspace on such occasions. Algorithm 3 starts by decomposing the Gramian for each sensor candidate and identifying the candidates that result in the highest dimension of the observable subspace; it then evaluates the determinant of the Gramian restricted to the observable subspace by multiplying its nonzero eigenvalues. Once a full-rank Gramian is achieved by an obtained subset, the greedy algorithm skips the computation of the rank of the candidate Gramian since the rank is monotonically increasing [66].

| Algorithm 3 Determinant-based greedy algorithm (pure greedy) |

| Input: |

| Output: Indices of chosen p sensor positions |

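| for each of the p sensors to be selected do |

| Calculate the Gramian by Equation (9) for each remaining candidate added to the current subset |

| Select the candidate that gives the highest rank of the Gramian and, among those, the largest product of nonzero eigenvalues |

| Add the selected candidate to the subset |

| end for |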
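A compact version of the pure greedy selection, including the rank-aware evaluation on the observable subspace, might look like the following. It is a Python/NumPy sketch with assumed names (`lyap`, `pure_greedy`, `tol`) and a simplification: the rank is recomputed at every step rather than skipped once full rank is reached.

```python
import numpy as np

def lyap(A, Q):
    """Solve A^T W A - W + Q = 0 by Kronecker vectorization (small r only)."""
    r = A.shape[0]
    K = np.eye(r * r) - np.kron(A.T, A.T)
    return np.linalg.solve(K, Q.reshape(-1)).reshape(r, r)

def pure_greedy(A, C, p, tol=1e-10):
    n = C.shape[0]
    selected = []
    for _ in range(p):
        best, best_key = None, None
        for i in range(n):
            if i in selected:
                continue
            rows = selected + [i]
            W = lyap(A, C[rows].T @ C[rows])           # one Lyapunov solve per candidate
            eig = np.linalg.eigvalsh((W + W.T) / 2)
            nz = eig[eig > tol * max(eig.max(), 1.0)]  # eigenvalues treated as nonzero
            # Compare by rank first, then by log-determinant on the observable subspace
            key = (len(nz), float(np.sum(np.log(nz))))
            if best_key is None or key > best_key:
                best, best_key = i, key
        selected.append(best)
    return selected

rng = np.random.default_rng(3)
r, n, p = 3, 8, 3
A = rng.standard_normal((r, r))
A *= 0.8 / np.max(np.abs(np.linalg.eigvals(A)))
C = rng.standard_normal((n, r))
sel = pure_greedy(A, C, p)
sign, logdet = np.linalg.slogdet(lyap(A, C[sel].T @ C[sel]))
```

Each greedy step solves one Lyapunov equation and one eigenvalue problem per remaining candidate, which is the cost the gradient greedy variant aims to avoid.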

3.2.2. Greedy Selection with Gradient Approximation (Gradient Greedy Proposed in This Study)

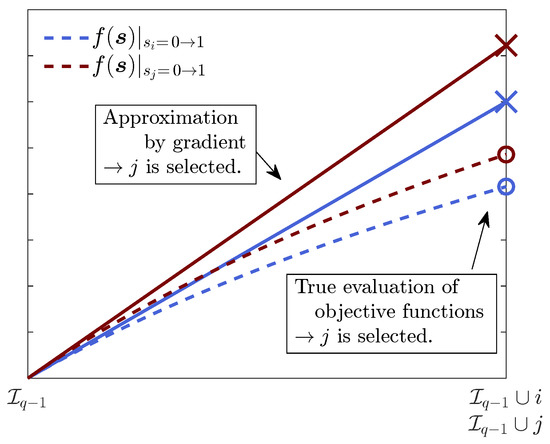

Algorithm 4 proposed in this paper is expected to accelerate the greedy evaluations by the linear approximation of the objective function, which is schematically explained in Figure 2.

Figure 2.

Schematic of the linear approximation of the gradient greedy algorithm for a convex function .

The gradient greedy algorithm selects, in the current step q, a sensor corresponding to the element of the highest gradient of the objective function in the prior step. Recall the gradient with respect to the i-th sensor candidate of Equation (15);

where is the same notation as used in (13). In the selection of the q-th sensor, the i-th component of is given by replacing as follows:

This approximation reduces the number of evaluations of the Lyapunov equation and removes the matrix determinant from the greedy selection; instead, it computes inner products of vectors weighted by a matrix that includes the Gramian determined in the previous search. Note that the Gramian to be inverted in Equation (20) is singular when the system is unobservable. A small regularization term is therefore added to the Gramian to ensure that it is invertible.
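The reduction of the gradient to a weighted inner product can be seen from the cyclic property of the trace. The following derivation is a sketch in assumed notation: $\mathbf{W}$ is the (regularized) Gramian of the current subset, $\mathbf{W}_i$ is the Gramian of the i-th sensor alone, $\mathbf{c}_i$ is the i-th row of $\mathbf{C}$, and $\mathbf{X}$ is an auxiliary matrix introduced here for illustration; the authors' Equation (20) may be arranged differently.

```latex
\begin{aligned}
\frac{\partial}{\partial z_i}\log\det\mathbf{W}
 &= \operatorname{tr}\!\left(\mathbf{W}^{-1}\mathbf{W}_i\right)
  = \operatorname{tr}\!\left(\mathbf{W}^{-1}\sum_{k=0}^{\infty}(\mathbf{A}^{\top})^{k}\,\mathbf{c}_i^{\top}\mathbf{c}_i\,\mathbf{A}^{k}\right) \\
 &= \mathbf{c}_i\left(\sum_{k=0}^{\infty}\mathbf{A}^{k}\,\mathbf{W}^{-1}(\mathbf{A}^{\top})^{k}\right)\mathbf{c}_i^{\top}
  = \mathbf{c}_i\,\mathbf{X}\,\mathbf{c}_i^{\top},
 \qquad
 \mathbf{A}\mathbf{X}\mathbf{A}^{\top}-\mathbf{X}+\mathbf{W}^{-1}=\mathbf{O}.
\end{aligned}
```

Under these assumptions, a single Lyapunov solve for $\mathbf{X}$ serves all n candidates, and each candidate then costs one quadratic form with an r-by-r matrix.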

| Algorithm 4 Determinant-based gradient greedy algorithm (Gradient greedy) |

| Input: |

| Output: Indices of chosen p sensor positions |

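| for each of the p sensors to be selected do |

| Find the remaining candidate with the largest gradient component given by Equation (20), and add it to the subset |

| Calculate the Gramian of the updated subset by Equation (9) |

| end for |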
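The gradient greedy selection can be sketched as below, again in Python with NumPy and with assumed names (`lyap`, `gradient_scores`, `gradient_greedy`, `eps`). The quadratic-form evaluation of the gradient is one possible arrangement and is labeled here as an assumption; the regularization weight is an illustrative value.

```python
import numpy as np

def lyap(A, Q):
    """Solve A^T X A - X + Q = 0 by Kronecker vectorization (small r only)."""
    r = A.shape[0]
    K = np.eye(r * r) - np.kron(A.T, A.T)
    return np.linalg.solve(K, Q.reshape(-1)).reshape(r, r)

def gradient_scores(A, C, W, eps=1e-6):
    """Gradient of log det at the current Gramian W for every candidate row of C."""
    r = A.shape[0]
    Winv = np.linalg.inv(W + eps * np.eye(r))     # regularized inverse
    X = lyap(A.T, Winv)                           # solves A X A^T - X + Winv = 0
    return np.einsum('ij,jk,ik->i', C, X, C)      # c_i X c_i^T for all i at once

def gradient_greedy(A, C, p, eps=1e-6):
    r = A.shape[0]
    selected, W = [], np.zeros((r, r))
    for _ in range(p):
        scores = gradient_scores(A, C, W, eps)
        if selected:
            scores[selected] = -np.inf            # never pick a sensor twice
        selected.append(int(np.argmax(scores)))
        W = lyap(A, C[selected].T @ C[selected])  # one Gramian update per step
    return selected

rng = np.random.default_rng(4)
r, n, p = 3, 8, 3
A = rng.standard_normal((r, r))
A *= 0.8 / np.max(np.abs(np.linalg.eigvals(A)))
C = rng.standard_normal((n, r))
sel = gradient_greedy(A, C, p)

# Reference: the same gradient in trace form, one Lyapunov solve per candidate
W_sel = lyap(A, C[sel].T @ C[sel])
ref = np.array([np.trace(np.linalg.inv(W_sel + 1e-6 * np.eye(r)) @ lyap(A, np.outer(c, c)))
                for c in C])
```

Only two Lyapunov solves are needed per greedy step here, independent of the number of candidates, in contrast to one solve per candidate in the pure greedy sketch.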

3.3. Expected Computational Complexity

This section describes the expected computational complexity of the selection methods based on the matrix operations found in textbooks of basic linear algebra. The leading terms, with respect to the system dimension parameters $(r, n, p)$, are summarized in Table 1. This also gives the expected leading term of the run time for each algorithm, while in practice, more compact expressions can be obtained, depending on the libraries employed for computation and the problem structures considered. Readers should also note that the complexity notation conventionally omits constant factors for simplicity and, therefore, lower-order terms might dominate the others in actual computations.

Although the optimal subset for Equation (10) can be found by calculating the objective function for all subsets of which the member size is p, the computational complexity of this brute-force search will be and barely amenable. As for the existing approaches, a naive implementation of the linear convex relaxation method assuming the semidefinite problem (SDP) structure is a simplified form of [67]. The interior point method and path-following iterations should require per iteration to construct the Newton direction. This is due to the linear matrix inequality (LMI) constraints, such as the Gramian, being semidefinite, or the selection variable being bounded, while it is known that certain problem structures ameliorate the overall costs ([81], Section 11.8). A simple greedy algorithm (pure greedy) requires solving the Lyapunov equation and calculating the determinant of the observability Gramian, of which, the cost is , for all n sensor candidates and p sensor increment iterations. The overall computational complexity is, therefore, .
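To give a feeling for the gap between the brute-force search and a greedy search, the number of objective evaluations can be counted directly. The sketch below uses only the Python standard library; the function names and the example sizes are illustrative assumptions, and the counts ignore the cost of each individual evaluation.

```python
import math

def brute_force_evaluations(n, p):
    """Number of subsets of size p among n candidates."""
    return math.comb(n, p)

def greedy_evaluations(n, p):
    """Candidates evaluated by a greedy search: n, then n - 1, and so on for p steps."""
    return sum(n - q for q in range(p))

n, p = 100, 10
n_brute = brute_force_evaluations(n, p)
n_greedy = greedy_evaluations(n, p)
ratio = n_brute / n_greedy
```

Even for this modest problem size, the brute-force count exceeds the greedy count by many orders of magnitude, which is why only approximate methods are compared in this study.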

The proposed methods will accelerate both of the existing approaches so far by comparing the theoretical complexities. The proposed convex relaxation method (denoted by approximate convex relaxation) utilizes the Newton method iterations for the sensor selection. The algorithm requires computations of the inversion of Hessian. Furthermore, Equation (18) will be solved for every for Equation (16), which requires computational complexities of for solving the algebraic Lyapunov Equation (18) and obtaining the matrix multiplication inside, respectively. The first term in Equation (16) requires ; therefore, the leading terms will be the sum per iteration. The sketching matrix, in Algorithm 2, compresses the dimension of the Newton system to , and reduces the costs of the above-mentioned computations by ratio. However, its computational complexity notation does not change. The proposed gradient greedy algorithm (gradient greedy) replaces the evaluations of the Lyapunov equation over n sensor candidates with inner products of vectors weighted by an matrix. The overall computational complexity will be , where an evaluation of the algebraic Lyapunov equation is taken only p times.

4. Comparison and Discussion

Numerical examples in this section illustrate the efficiency of the considered algorithms. The tested systems, which are represented by $(\mathbf{A}, \mathbf{C})$, are generated from synthetic datasets of random numbers and from real-world large-scale measurements. As in Section 2, the systems of interest are in discrete-time LTI form. All computations were conducted in MATLAB R2022a with CVX 2.2 [85,86]; the MOSEK solver was used for the SDP-based selection, as noted in Appendix A. The dlyap function was used to solve the discrete-time Lyapunov equations, such as Equations (9) and (18), adopting the subroutines from the Subroutine Library in Control Theory (SLICOT) [87,88,89,90]. It should also be noted that there are other approaches to solving the equation, such as [91]. The MATLAB programs are available through the GitHub repository of the present authors [92].

4.1. Results of the Randomly Generated System

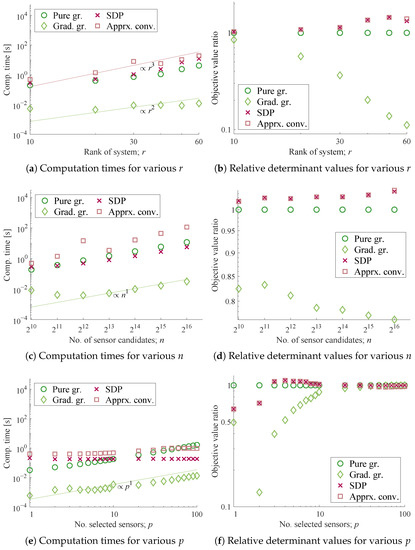

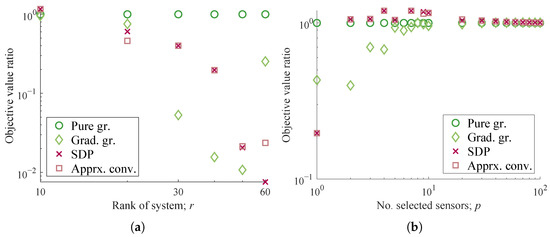

The characteristics of the sensor selection methods are investigated for different sets of system dimension parameters by varying one parameter while fixing the others. The abscissa of Figure 3a,b is the rank $r$, which is the dimension of the reduced state variable vector and, thus, of the Gramian. In Figure 3c,d, $n$ denotes the number of nodes comprising the original measurements and, accordingly, the number of sensor candidates. In Figure 3e,f, $p$ represents the varying number of sensors selected.

Figure 3.

Computation times and optimization results for randomly generated systems, for (a,b) varying the number of state variables r, i.e., the size of matrix $\mathbf{A}$ (average of 100 trials); (c,d) varying the number of sensor candidates n, i.e., the number of rows of matrix $\mathbf{C}$ (average of 20 trials); (e,f) varying the number of sensors selected p (average of 100 trials).

The problem setting for the system of random numbers is found in [39]. First, complex-conjugate pairs whose real parts are negative are assigned to the eigenvalues of a damped continuous-time system matrix. A discrete-time system matrix $\mathbf{A}$, which is stable and full-rank, is then obtained as the matrix exponential of the continuous-time matrix multiplied by the time step of the discrete system. The observation matrix $\mathbf{C}$ is a column-orthogonal matrix generated by the singular value decomposition of a matrix of Gaussian random numbers of appropriate dimensions. Sensors up to p are selected using the algorithms presented in the previous section, and the objective function, i.e., the logarithm of the determinant of the Gramian, is calculated for each selected subset. Figure 3 shows the performance of the selection methods applied to the system of random numbers.
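A random test system of this kind can be generated along the following lines. This is a Python/NumPy sketch under several assumptions that are not taken from the source: the continuous-time matrix is block diagonal with 2-by-2 real blocks, the ranges of the real and imaginary parts are arbitrary, and the names `random_system` and `dt` are hypothetical. The block-diagonal structure allows the matrix exponential to be written in closed form.

```python
import numpy as np

def random_system(r, n, dt=0.1, seed=0):
    """Stable discrete-time A (r even) and column-orthogonal C of size n-by-r."""
    assert r % 2 == 0, "this sketch pairs eigenvalues, so r must be even"
    rng = np.random.default_rng(seed)
    A = np.zeros((r, r))
    for j in range(r // 2):
        sigma = -rng.uniform(0.05, 1.0)      # negative real part (assumed range)
        omega = rng.uniform(0.5, 5.0)        # imaginary part (assumed range)
        # exp(dt * [[sigma, omega], [-omega, sigma]]) in closed form
        c, s = np.cos(omega * dt), np.sin(omega * dt)
        A[2*j:2*j+2, 2*j:2*j+2] = np.exp(sigma * dt) * np.array([[c, s], [-s, c]])
    # Column-orthogonal C from the SVD of a Gaussian random matrix
    U, _, _ = np.linalg.svd(rng.standard_normal((n, r)), full_matrices=False)
    return A, U

A, C = random_system(r=6, n=40)
spectral_radius = np.max(np.abs(np.linalg.eigvals(A)))
```

Each block contributes a complex-conjugate pair of eigenvalues with modulus below one, so the resulting discrete-time system is stable and full-rank by construction.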

Figure 3a,c,e illustrate the total computation time of each algorithm in the tests, where the gradient greedy method is the least time-consuming, followed by the pure greedy and the SDP-based methods, which produce similar results. Unfortunately, the proposed approximate convex relaxation method took the longest time to solve the optimization in almost all of the conditions tested despite its acceleration owing to the customized randomization. However, it is also expected that the computation time of the SDP-based method and the pure greedy selection will exceed the others for even larger r and p, respectively.

The empirical orders of the computation time with regard to each parameter are analyzed herein. The growth rates of the computation time of each algorithm are evaluated by solely changing the system dimension parameters r, n, and p, as summarized in Table 2, based on the results shown in Figure 3a,c,e. First, the gradient greedy method ran in time proportional to n, but it is not clear regarding r. Since the number of sensor candidates, n, is much larger than r in the first experiment, the term with is not significant for the gradient greedy method. The increase against p is on the order of unity, which is clearly reasonable. Second, the empirical orders of the pure greedy method when solely changing r, n, and p are , n, and p, respectively, which agrees with the expected leading order. One can see a term with becomes dominant as r grows in Figure 3a. Meanwhile, the estimated orders of the SDP-based method when solely changing r and n are and n, while those of the approximate convex relaxation method are and , respectively. Here, let the notation stand for (despite an obvious abuse) a real number x bounded by and j for an arbitrary positive integer j. These noninteger orders may be due to the optimized arithmetic employed in the software, such as the Strassen-like algorithm [93]. The dependency regarding p was not clear in the SDP-based method, while a slight increase was observed for the approximate convex relaxation method.

Table 2.

Practical orders of the computation times of selection methods, investigated with respect to each parameter individually.

The interesting aspect is that the dominating order of the SDP-based method with respect to n was not , which was initially expected but is approximately proportional to n. This is perhaps because the constraint posed as in Appendix A was simplified in the tested implementation as a mere diagonal block of the semidefinite linear matrix inequality (LMI), and the MOSEK solver should have taken advantage of its structure during the Newton step calculation, despite the large n assumed. It should also be noted that this efficacy was not observed for other solvers, such as the SDPT3, although the CVX parser does not seem to change its output. Nonetheless, the complexity of solving the SDP would be enormous, as expected, if the LMI included such a semidefinite relaxation [61,94] of the selection variable vector , such as being a semidefinite matrix. This agrees well with the observations from the experiment for the SDP-based method regarding r, where the LMI of the dimension of is included.

In addition to the computation time per step, the iteration numbers before convergence, as shown in Table 3, also illustrate the computationally friendly features for the high-dimensional problems. The iteration numbers of the SDP-based and the approximate convex relaxation methods do not increase significantly as r, n, and p individually increase. Interestingly, the iteration numbers of these convex relaxation methods change in a different fashion. That of the SDP-based method slightly increases with increases in n and p, and slightly decreases with an increase in r, while that of the approximate convex relaxation shows opposite results. This leads to similar growth rates against r between these two methods, as shown in Figure 3a, where the empirical computational complexity is less than for the SDP-based method and more than for the approximate convex relaxation method. Moreover, Figure 3c shows that this difference leads to growth rates that are slightly larger than for the SDP-based method and smaller than for the approximate convex relaxation method, against n. This unexpected efficiency of the SDP-based method leads to better scalability with respect to n over the approximate convex relaxation, which is the other convex relaxation method. With regard to the increase in p, the iteration numbers of the SDP-based method increased only slightly, while those of the approximate convex relaxation method exhibited an increase around , corresponding to the increase in the total run time in Figure 3e.

Table 3.

Iteration numbers of the convex relaxation approaches, investigated with respect to each parameter individually. Averages are rounded to integers.

As shown in Figure 3b,d,f, almost the same objective values were obtained by the SDP-based and the approximate convex relaxation methods, which implies a good agreement between the solutions of these relaxation methods. They yielded better or comparable objective function values as compared to the greedy methods, which were up to twice as high, except for cases. Those obtained by the gradient greedy method, on the other hand, give an inferior impression as a selection method as compared with the other methods for cases. This is possibly due to the difficulty in ensuring the observability with a limited number of sensors, especially , which should lead to an unstable calculation of the gradient Equation (20). The gradient greedy algorithm is concluded to have poor performance in achieving observability, especially when the convex objective function is hardly approximated by its linear tangent due to large r.

The discussion above illustrates that the use of the SDP-based method seems to be the most favorable among the compared methods in this experiment. The use of the pure greedy method is also a reasonable choice due to its shorter computation time, straightforward implementation, and reasonable performance, which returns half the objective function value of the convex relaxation methods in the experiments.

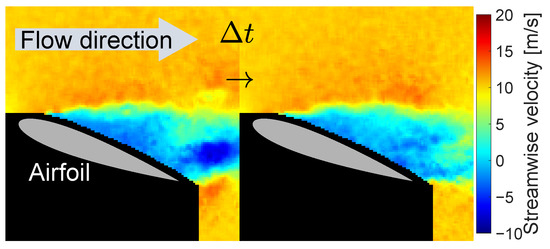

4.2. Results for Data-Driven System Derived from Real-World Experiment

An example of a practical application was conducted using an experimental dataset of the flow velocity distribution around an airfoil. The data used herein are found in [95,96] and were previously acquired by particle image velocimetry (PIV) in a wind tunnel. A brief description of the experiment is given in Table 4; refer to the original article by the authors for more specific information. The snapshots taken in the experiment quantify velocity vectors that span the visualized plane, i.e., two components on a two-dimensional grid of points, as depicted in Figure 4.

Table 4. Brief descriptions of PIV data [95,96].

Figure 4. Visualized flow around NACA0015 airfoil [95,96]. Streamwise components in a time-series measurement are shown and used in the demonstration to construct the reduced-order state variables, Equation (21). ( s in this figure).

Only the streamwise components (the direction shown by the arrow in Figure 4) are used, and the ensemble average over the m snapshots is subtracted at each measurement location, that is, at each pixel of the calculated velocity image.

As attempted in Section 4.1, a linear representation is derived first. The data-driven system identification procedure is based on the modeling method of [30,78]. Here, the snapshots of the velocity field are reshaped into m vectors of dimension n, and the data matrix is formed by arranging these vectors as its columns. The proper orthogonal decomposition (POD) is then adopted and the data are projected onto the subspace of the leading r POD modes [37], which yields a rank-r truncated singular value decomposition of the data matrix. The measurement matrix C consists of the r left singular vectors associated with the r largest singular values. These left singular vectors represent the dominant spatial coherent structures, while the right singular vectors represent temporal coherence, and the singular values are the amplitudes of these modes. The use of POD in our study is intended for a more fundamental discussion based on a linear system representation where arbitrary-order low-rank systems are derived from high-dimensional measurement data.

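The r-dimensional state variable vector is given by the coefficients of each snapshot on the leading r POD modes, Equation (21).

After introducing two state matrices that collect consecutive snapshots offset by one time step, the system matrix A is obtained by linear least squares via the pseudo-inverse operation. The system matrix is then modified so that the magnitudes of its eigenvalues are less than unity and, therefore, the considered systems are stable.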
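The model construction described in this subsection can be sketched as follows. This is an illustrative sketch, not the authors' implementation (their codes are in [92]): the synthetic data, the sizes, the names `Z1`, `Z2`, and `rho_max`, and in particular the eigenvalue rescaling used for stabilization are assumptions, since the text does not specify how the system matrix is modified.

```matlab
% Synthetic snapshot data (placeholder for the PIV snapshots; sizes are hypothetical)
n = 60; m = 120; r = 4;          % sensor candidates, snapshots, state dimension
s = linspace(0, 1, n)';          % spatial coordinate
t = 0:m-1;                       % time-step index
X = sin(2*pi*s) * (0.98.^t .* cos(0.3*t)) ...
  + cos(2*pi*s) * (0.98.^t .* sin(0.3*t)) ...
  + sin(4*pi*s) * (0.95.^t .* cos(0.7*t)) ...
  + cos(4*pi*s) * (0.95.^t .* sin(0.7*t));
X = X - mean(X, 2);              % subtract the ensemble average at each location

% POD via the economy-size SVD
[U, S, V] = svd(X, 'econ');
C = U(:, 1:r);                   % measurement matrix: leading r left singular vectors
Z = S(1:r, 1:r) * V(:, 1:r)';    % state variables, one column per snapshot

% Least-squares system matrix from one-step-shifted state matrices
Z1 = Z(:, 1:end-1);
Z2 = Z(:, 2:end);
A  = Z2 * pinv(Z1);

% Stabilization (assumed approach): pull eigenvalues back inside the unit circle
[Vec, D] = eig(A);
lam      = diag(D);
rho_max  = 0.999;                % hypothetical bound on the spectral radius
outside  = abs(lam) > rho_max;
lam(outside) = rho_max * lam(outside) ./ abs(lam(outside));
A = real(Vec * diag(lam) / Vec);
```

With `C` of size n-by-r and `A` of size r-by-r, the pair can be passed directly to a selection routine that works on the observability Gramian.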

As discussed in Section 4.1, the comparisons are based on the objective function values obtained. The sensor candidate set corresponds to the pixels obtained by the experimental visualization and, thus, its size n is fixed. The dimension r of the state variable vector is varied through the order reduction at different truncation thresholds, while p is fixed to 20. Results for various values of p with r fixed are also provided. The original dataset is divided into five segments of consecutive snapshots and, therefore, the following results are five-fold averages.

The values of the objective function for the gradient greedy algorithm show trends similar to those in Figure 3b,f, while a large degradation is observed for the convex relaxation methods. Surprisingly, the values of the convex relaxation methods are 2–100 times lower than that of the pure greedy method. This trend is also partially observed in Figure 5b for the single-sensor case (p = 1), where the pure greedy selection corresponds to the true optimum by definition. This clear degradation in the convex relaxations has not yet been explained in this study and may be due to ill-conditioning of the low-order approximation or to numerical error. A similar issue was discussed in a previous study [66]. The cause should be investigated in detail in a future study toward practical applications with real-world data.

Figure 5. Optimization results for a system based on the experimental data of fluid dynamics. (a) Relative determinant values for various r. (b) Relative determinant values for various p.

The results above, together with those in the previous subsection, illustrate that the pure greedy method performs more stably, while the performance of the convex relaxation methods varies widely. Therefore, the pure greedy method can be considered the most appropriate method when the computation time is also taken into account. It should be noted that the present study also shows the strong performance of the SDP-based method in terms of the objective function and the computation time in the randomly generated, general problem settings. As long as the additional computation time is acceptable, the present authors recommend trying both the pure greedy and the SDP-based methods and using the better of the two sensor sets when selecting sensors based on the observability Gramian.

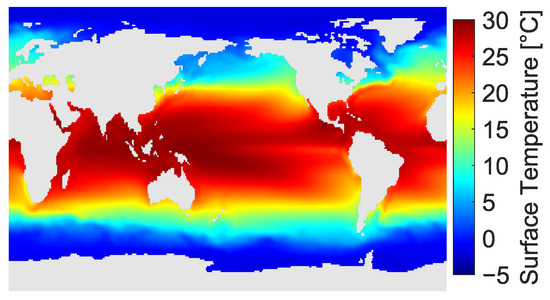

4.3. Results for Data-Driven System Derived from Weather Observation

A data-driven linear model is obtained from time-series data of the global sea surface temperature (SST), in the same manner as in Section 4.2. Table 5 and Figure 6 briefly describe the processing conditions for the SST dataset. The SST distribution was interpolated from satellite observations combined with in situ corrections, and the weekly mean distributions were stacked in the data matrix as a time-series measurement spanning from 1989 to 2023. The data matrix consists of 1727 snapshots of 44,219 spatial components, which correspond to the numbers of columns and rows, respectively. Sensor selection for the SST dataset is a common benchmark, as found in [2,40,41,63,97,98].

Table 5. Brief descriptions of sea surface temperature data [99,100].

Figure 6. Mean value distribution of the global sea surface temperature (from 31 December 1989 to 29 January 2023).

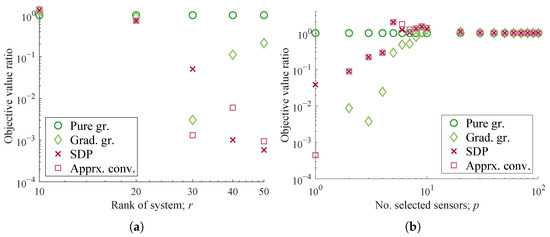

The linear model was constructed in the same data-driven manner as explained in Section 4.2. With a baseline set of system dimensions given by r = 10, n = 44,219, and p = 10, the evaluation was conducted as in the previous section by varying r and p individually. The whole duration was used for the construction of the reduced-order model in this example.

The sensor selection results in Figure 7a,b support the observations for the real-world PIV example in Section 4.2: the objective values of the SDP-based method and the approximate relaxation method were similarly degraded as compared with those of the pure greedy method under some of the tested conditions.

Figure 7. Optimization results for a system based on the experimental data. (a) Relative determinant values for various r (p = 10, n = 44,219). (b) Relative determinant values for various p (r = 10, n = 44,219).

5. Conclusions

This research investigated efficient algorithms that give beneficial sensor positions based on the observability Gramian of a discrete-time linear dynamical system and that are essential to realizing effective state estimation and control. The selection methods were characterized using synthetic numerical examples and data-driven system representations of real-world datasets. An analysis of the increase in computation time with respect to the system dimension parameters showed the effectiveness of each selection method, along with comparisons of their optimization performance.

This study offers two novel approaches for sensor selection: an approximate convex relaxation solved by a customized Newton method and a linear approximation of the pure greedy evaluation. A comparison was conducted against conventional methods, including a convex relaxation formulated as a semidefinite program and the pure greedy method. The proposed gradient greedy method achieved a moderate solution with orders-of-magnitude speedups compared to the other methods when the dimension of the system's state variables did not exceed the number of sensors deployed. Meanwhile, this method was found to be incapable of ensuring observability for larger dimensions of the state variable. The convex relaxation methods, including the SDP-based and the approximate convex relaxation methods, achieved better solutions than the greedy methods for synthetic data, while the real-world examples revealed their unstable optimization results, especially for a large-scale system. In less observable situations, the pure greedy selection is expected to be the most reliable choice in terms of the optimization metric obtained.

Author Contributions

Conceptualization, D.T. and T.N. (Taku Nonomura); methodology, K.Y., Y.S., D.T. and T.N. (Takayuki Nagata); software, K.Y. and T.N. (Takayuki Nagata); validation, Y.S. and T.N. (Takayuki Nagata); formal analysis, K.Y.; investigation, K.Y.; resources, K.Y. and K.N.; data curation, K.Y. and K.N.; writing—original draft preparation, K.Y.; writing—review and editing, T.N. (Takayuki Nagata), T.N. (Taku Nonomura) and Y.S.; visualization, K.Y.; supervision, T.N. (Taku Nonomura); project administration, T.N. (Taku Nonomura); funding acquisition, K.Y. and T.N. (Taku Nonomura). All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by JSPS KAKENHI (21J20671), JST Moonshot (JPMJMS2287), JST CREST (JPMJCR1763), and JST FOREST (JPMJFR202C).

Data Availability Statement

The MATLAB codes used for this manuscript are available in the GitHub repository [92]. The real-world experimental data used in the results section are available in a separate repository [96].

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. CVX Implementation for Equation (12)

Thanks to the parser CVX [85,86], the SDP formulation defined by Equation (12) is easily implemented in MATLAB and solved with the MOSEK solver, both in [67] and in our case. The actual implementation is shown below, and MATLAB codes are provided in [92].

    [r,~] = size(A);   % state dimension
    [n,~] = size(C);   % number of sensor candidates
    cvx_solver('MOSEK')
    cvx_begin sdp
        variable z(n) nonnegative
        variable X(r,r) symmetric semidefinite
        maximize( det_rootn(X) )
        subject to
            % matrix inequality
            A'*X*A-X+C'*(repmat(z,1,r).*C) >= zeros(r,r);
            % bounds selection variables
            z <= 1;
            sum(z) == p;
    cvx_end
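The listing above returns a relaxed selection vector z with entries between 0 and 1, so a discrete sensor set still has to be extracted and evaluated. The following sketch is one possible post-processing step under stated assumptions: keeping the p largest entries of z is a common rounding rule assumed here for illustration, and the small matrices, the values of z, and the names `CS`, `Q`, and `W` are hypothetical. The Gramian is obtained from the discrete Lyapunov equation A'WA − W + CS'CS = 0, which is the equality counterpart of the matrix inequality in the listing.

```matlab
% Hypothetical small stable system and relaxed solution (placeholders only)
A = [0.9 0.1; 0 0.8];
C = [1 0; 0 1; 1 1; 0.5 -0.5];
z = [0.9; 0.2; 0.7; 0.1];
p = 2;

% Rounding (assumed rule): keep the p largest entries of z
[~, order] = sort(z, 'descend');
S  = sort(order(1:p));           % selected sensor indices
CS = C(S, :);                    % rows of C for the selected sensors

% Observability Gramian W solving A'*W*A - W + Q = 0,
% using vec(A'*W*A) = kron(A', A') * vec(W)
r = size(A, 1);
Q = CS' * CS;
w = (eye(r^2) - kron(A', A')) \ Q(:);
W = reshape(w, r, r);
W = (W + W') / 2;                % symmetrize against round-off

logdetW = log(det(W));           % objective value of the rounded selection
fprintf('Selected sensors: %s, log det = %.4f\n', mat2str(S'), logdetW);
```

The Kronecker-product solve scales poorly with r and is used here only to keep the sketch free of toolbox dependencies; a dedicated discrete Lyapunov solver would be preferable for larger systems.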

References

- Sakiyama, A.; Tanaka, Y.; Tanaka, T.; Ortega, A. Eigendecomposition-Free Sampling Set Selection for Graph Signals. IEEE Trans. Signal Process. 2019, 67, 2679–2692.

- Nomura, S.; Hara, J.; Tanaka, Y. Dynamic Sensor Placement Based on Graph Sampling Theory. arXiv 2022.

- Sun, C.; Yu, Y.; Li, V.O.K.; Lam, J.C.K. Multi-Type Sensor Placements in Gaussian Spatial Fields for Environmental Monitoring. Sensors 2019, 19, 189.

- Li, B.; Liu, H.; Wang, R. Efficient Sensor Placement for Signal Reconstruction Based on Recursive Methods. IEEE Trans. Signal Process. 2021, 69, 1885–1898.

- Natarajan, M.; Freund, J.B.; Bodony, D.J. Actuator selection and placement for localized feedback flow control. J. Fluid Mech. 2016, 809, 775–792.

- Inoue, T.; Ikami, T.; Egami, Y.; Nagai, H.; Naganuma, Y.; Kimura, K.; Matsuda, Y. Data-Driven Optimal Sensor Placement for High-Dimensional System Using Annealing Machine. arXiv 2022.

- Inoba, R.; Uchida, K.; Iwasaki, Y.; Nagata, T.; Ozawa, Y.; Saito, Y.; Nonomura, T.; Asai, K. Optimization of sparse sensor placement for estimation of wind direction and surface pressure distribution using time-averaged pressure-sensitive paint data on automobile model. J. Wind. Eng. Ind. Aerodyn. 2022, 227, 105043.

- DeVries, L.; Paley, D.A. Observability-based optimization for flow sensing and control of an underwater vehicle in a uniform flowfield. In Proceedings of the 2013 American Control Conference, Washington, DC, USA, 17–19 June 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1386–1391.

- Kanda, N.; Nakai, K.; Saito, Y.; Nonomura, T.; Asai, K. Feasibility study on real-time observation of flow velocity field using sparse processing particle image velocimetry. Trans. Jpn. Soc. Aeronaut. Space Sci. 2021, 64, 242–245.

- Kaneko, S.; Ozawa, Y.; Nakai, K.; Saito, Y.; Nonomura, T.; Asai, K.; Ura, H. Data-Driven Sparse Sampling for Reconstruction of Acoustic-Wave Characteristics Used in Aeroacoustic Beamforming. Appl. Sci. 2021, 11, 4216.

- Carter, D.W.; De Voogt, F.; Soares, R.; Ganapathisubramani, B. Data-driven sparse reconstruction of flow over a stalled aerofoil using experimental data. Data-Centric Eng. 2021, 2, e5.

- Tiwari, N.; Uchida, K.; Inoba, R.; Saito, Y.; Asai, K.; Nonomura, T. Simultaneous measurement of pressure and temperature on the same surface by sensitive paints using the sensor selection method. Exp. Fluids 2022, 63, 171.

- Li, S.; Li, W.; Noack, B.R. Least-order representation of control-oriented flow estimation exemplified for the fluidic pinball. J. Phys. Conf. Ser. 2022, 2367, 012024.

- Kanda, N.; Abe, C.; Goto, S.; Yamada, K.; Nakai, K.; Saito, Y.; Asai, K.; Nonomura, T. Proof-of-concept Study of Sparse Processing Particle Image Velocimetry for Real Time Flow Observation. Exp. Fluids 2022, 63, 143.

- Inoue, T.; Ikami, T.; Egami, Y.; Nagai, H.; Naganuma, Y.; Kimura, K.; Matsuda, Y. Data-driven optimal sensor placement for high-dimensional system using annealing machine. Mech. Syst. Signal Process. 2023, 188, 109957.

- Alonso, A.A.; Frouzakis, C.E.; Kevrekidis, I.G. Optimal sensor placement for state reconstruction of distributed process systems. AIChE J. 2004, 50, 1438–1452.

- Ren, Y.; Ding, Y.; Liang, F. Adaptive evolutionary Monte Carlo algorithm for optimization with applications to sensor placement problems. Stat. Comput. 2008, 18, 375.

- Hoseyni, S.M.; Di Maio, F.; Zio, E. Subset simulation for optimal sensors positioning based on value of information. Proc. Inst. Mech. Eng. Part O J. Risk Reliab. 2022, 1748006X221118432.

- Castro-Triguero, R.; Murugan, S.; Gallego, R.; Friswell, M.I. Robustness of optimal sensor placement under parametric uncertainty. Mech. Syst. Signal Process. 2013, 41, 268–287.

- Krause, A.; Leskovec, J.; Guestrin, C.; VanBriesen, J.; Faloutsos, C. Efficient sensor placement optimization for securing large water distribution networks. J. Water Resour. Plan. Manag. 2008, 134, 516–526.

- Lee, E.T.; Eun, H.C. Optimal Sensor Placement in Reduced-Order Models Using Modal Constraint Conditions. Sensors 2022, 22, 589.

- Bates, R.; Buck, R.; Riccomagno, E.; Wynn, H. Experimental design and observation for large systems. J. R. Stat. Soc. Ser. B Methodol. 1996, 58, 77–94.

- Tzoumas, V.; Xue, Y.; Pequito, S.; Bogdan, P.; Pappas, G.J. Selecting sensors in biological fractional-order systems. IEEE Trans. Control. Netw. Syst. 2018, 5, 709–721.

- Yildirim, B.; Chryssostomidis, C.; Karniadakis, G. Efficient sensor placement for ocean measurements using low-dimensional concepts. Ocean Model. 2009, 27, 160–173.

- Kraft, T.; Mignan, A.; Giardini, D. Optimization of a large-scale microseismic monitoring network in northern Switzerland. Geophys. J. Int. 2013, 195, 474–490.

- Nagata, T.; Nakai, K.; Yamada, K.; Saito, Y.; Nonomura, T.; Kano, M.; Ito, S.i.; Nagao, H. Seismic Wavefield Reconstruction based on Compressed Sensing using Data-Driven Reduced-Order Model. Geophys. J. Int. 2022, 322, 33–50.

- Nakai, K.; Nagata, T.; Yamada, K.; Saito, Y.; Nonomura, T.; Kano, M.; Ito, S.i.; Nagao, H. Observation Site Selection for Physical Model Parameter Estimation toward Process-Driven Seismic Wavefield Reconstruction. arXiv 2022, arXiv:2206.04273.

- Doğançay, K.; Hmam, H. On optimal sensor placement for time-difference-of-arrival localization utilizing uncertainty minimization. In Proceedings of the 2009 17th European Signal Processing Conference, Glasgow, UK, 24–28 August 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 1136–1140.

- Yeo, W.J.; Taulu, S.; Kutz, J.N. Efficient magnetometer sensor array selection for signal reconstruction and brain source localization. arXiv 2022, arXiv:2205.10925.

- Brunton, S.L.; Kutz, J.N. Data-Driven Science and Engineering: Machine Learning, Dynamical Systems, and Control; Cambridge University Press: Cambridge, UK, 2019.

- Kutz, J.N.; Brunton, S.L.; Brunton, B.W.; Proctor, J.L. Dynamic Mode Decomposition: Data-Driven Modeling of Complex Systems; SIAM: Philadelphia, PA, USA, 2016; Volume 149.

- Baddoo, P.J.; Herrmann, B.; McKeon, B.J.; Kutz, J.N.; Brunton, S.L. Physics-informed dynamic mode decomposition (piDMD). arXiv 2021, arXiv:2112.04307.

- Scherl, I.; Strom, B.; Shang, J.K.; Williams, O.; Polagye, B.L.; Brunton, S.L. Robust principal component analysis for modal decomposition of corrupt fluid flows. Phys. Rev. Fluids 2020, 5, 054401.

- Jovanović, M.R.; Schmid, P.J.; Nichols, J.W. Sparsity-promoting dynamic mode decomposition. Phys. Fluids 2014, 26, 024103.

- Iwasaki, Y.; Nonomura, T.; Nakai, K.; Nagata, T.; Saito, Y.; Asai, K. Evaluation of Optimization Algorithms and Noise Robustness of Sparsity-Promoting Dynamic Mode Decomposition. IEEE Access 2022, 10, 80748–80763.

- Zhang, X.; Ji, T.; Xie, F.; Zheng, H.; Zheng, Y. Unsteady flow prediction from sparse measurements by compressed sensing reduced order modeling. Comput. Methods Appl. Mech. Eng. 2022, 393, 114800.

- Berkooz, G.; Holmes, P.; Lumley, J.L. The proper orthogonal decomposition in the analysis of turbulent flows. Annu. Rev. Fluid Mech. 1993, 25, 539–575.

- Schmid, P.J. Dynamic mode decomposition of numerical and experimental data. J. Fluid Mech. 2010, 656, 5–28.

- Rowley, C.; Williams, M.; Kevrekidis, I. Dynamic Mode Decomposition and the Koopman Operator: Algorithms and Applications; IPAM, UCLA: Los Angeles, CA, USA, 2014; p. 47.

- Manohar, K.; Brunton, B.W.; Kutz, J.N.; Brunton, S.L. Data-driven sparse sensor placement for reconstruction: Demonstrating the benefits of exploiting known patterns. IEEE Control Syst. Mag. 2018, 38, 63–86.

- Saito, Y.; Nonomura, T.; Yamada, K.; Nakai, K.; Nagata, T.; Asai, K.; Sasaki, Y.; Tsubakino, D. Determinant-based fast greedy sensor selection algorithm. IEEE Access 2021, 9, 68535–68551.

- Paris, R.; Beneddine, S.; Dandois, J. Robust flow control and optimal sensor placement using deep reinforcement learning. J. Fluid Mech. 2021, 913, A25.

- Fukami, K.; Maulik, R.; Ramachandra, N.; Fukagata, K.; Taira, K. Global field reconstruction from sparse sensors with Voronoi tessellation-assisted deep learning. Nat. Mach. Intell. 2021, 3, 945–951.

- Yoshimura, R.; Yakeno, A.; Misaka, T.; Obayashi, S. Application of observability Gramian to targeted observation in WRF data assimilation. Tellus A Dyn. Meteorol. Oceanogr. 2020, 72, 1–11.

- Misaka, T.; Obayashi, S. Sensitivity Analysis of Unsteady Flow Fields and Impact of Measurement Strategy. Math. Probl. Eng. 2014, 2014, 359606.

- Mons, V.; Marquet, O. Linear and nonlinear sensor placement strategies for mean-flow reconstruction via data assimilation. J. Fluid Mech. 2021, 923, A1.

- Fisher, R.A. Theory of Statistical Estimation. Math. Proc. Camb. Philos. Soc. 1925, 22, 700–725.

- Martin, M.; Perez, J.; Plastino, A. Fisher information and nonlinear dynamics. Phys. A Stat. Mech. Its Appl. 2001, 291, 523–532.

- Kay, S.M. Fundamentals of Statistical Signal Processing: Estimation Theory; Prentice-Hall, Inc.: Hoboken, NJ, USA, 1993.

- Joshi, S.; Boyd, S. Sensor selection via convex optimization. IEEE Trans. Signal Process. 2009, 57, 451–462.

- Nakai, K.; Yamada, K.; Nagata, T.; Saito, Y.; Nonomura, T. Effect of Objective Function on Data-Driven Greedy Sparse Sensor Optimization. IEEE Access 2021, 9, 46731–46743.

- Nemhauser, G.L.; Wolsey, L.A.; Fisher, M.L. An analysis of approximations for maximizing submodular set functions. Math. Program. 1978, 14, 265–294.

- Lovász, L. Submodular functions and convexity. In Mathematical Programming the State of the Art; Springer: Berlin/Heidelberg, Germany, 1983; pp. 235–257.

- Krause, A.; Golovin, D. Submodular Function Maximization. In Tractability; Cambridge University Press: Cambridge, UK, 2014; pp. 71–104.

- Mirzasoleiman, B.; Badanidiyuru, A.; Karbasi, A.; Vondrák, J.; Krause, A. Lazier than lazy greedy. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; Volume 29.

- Hashemi, A.; Ghasemi, M.; Vikalo, H.; Topcu, U. Randomized greedy sensor selection: Leveraging weak submodularity. IEEE Trans. Autom. Control 2020, 66, 199–212.

- Krause, A.; Singh, A.; Guestrin, C. Near-optimal sensor placements in Gaussian processes: Theory, efficient algorithms and empirical studies. J. Mach. Learn. Res. 2008, 9, 235–284.

- Chepuri, S.P.; Leus, G. Sparse sensing for distributed detection. IEEE Trans. Signal Process. 2015, 64, 1446–1460.

- Clark, E.; Askham, T.; Brunton, S.L.; Kutz, J.N. Greedy sensor placement with cost constraints. IEEE Sens. J. 2018, 19, 2642–2656.

- Yamada, K.; Saito, Y.; Nankai, K.; Nonomura, T.; Asai, K.; Tsubakino, D. Fast greedy optimization of sensor selection in measurement with correlated noise. Mech. Syst. Signal Process. 2021, 158, 107619.

- Liu, S.; Chepuri, S.P.; Fardad, M.; Maşazade, E.; Leus, G.; Varshney, P.K. Sensor selection for estimation with correlated measurement noise. IEEE Trans. Signal Process. 2016, 64, 3509–3522.

- Dhingra, N.K.; Jovanović, M.R.; Luo, Z.Q. An ADMM algorithm for optimal sensor and actuator selection. In Proceedings of the 53rd IEEE Conference on Decision and Control, Los Angeles, CA, USA, 15–17 December 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 4039–4044.

- Nagata, T.; Nonomura, T.; Nakai, K.; Yamada, K.; Saito, Y.; Ono, S. Data-driven sparse sensor selection based on A-optimal design of experiment with ADMM. IEEE Sens. J. 2021, 21, 15248–15257.

- Nagata, T.; Yamada, K.; Nonomura, T.; Nakai, K.; Saito, Y.; Ono, S. Data-driven sensor selection method based on proximal optimization for high-dimensional data with correlated measurement noise. IEEE Trans. Signal Process. 2022, 70, 5251–5264.

- Wouwer, A.V.; Point, N.; Porteman, S.; Remy, M. An approach to the selection of optimal sensor locations in distributed parameter systems. J. Process Control 2000, 10, 291–300.

- Summers, T.H.; Cortesi, F.L.; Lygeros, J. On Submodularity and Controllability in Complex Dynamical Networks. IEEE Trans. Control. Netw. Syst. 2016, 3, 91–101.

- Summers, T.; Shames, I. Convex relaxations and Gramian rank constraints for sensor and actuator selection in networks. In Proceedings of the 2016 IEEE International Symposium on Intelligent Control (ISIC), Buenos Aires, Argentina, 19–22 September 2016; IEEE: Piscataway, NJ, USA, 2016.

- DeVries, L.; Majumdar, S.J.; Paley, D.A. Observability-based optimization of coordinated sampling trajectories for recursive estimation of a strong, spatially varying flowfield. J. Intell. Robot. Syst. 2013, 70, 527–544.

- Montanari, A.N.; Freitas, L.; Proverbio, D.; Gonçalves, J. Functional observability and subspace reconstruction in nonlinear systems. Phys. Rev. Res. 2022, 4, 043195.

- Shamaiah, M.; Banerjee, S.; Vikalo, H. Greedy sensor selection: Leveraging submodularity. In Proceedings of the 49th IEEE Conference on Decision and Control (CDC), Atlanta, GA, USA, 15–17 December 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 2572–2577.

- Zhang, X.; Tian, Y.; Cheng, R.; Jin, Y. An Efficient Approach to Nondominated Sorting for Evolutionary Multiobjective Optimization. IEEE Trans. Evol. Comput. 2015, 19, 201–213.

- Zhang, H.; Ayoub, R.; Sundaram, S. Sensor selection for Kalman filtering of linear dynamical systems: Complexity, limitations and greedy algorithms. Automatica 2017, 78, 202–210.

- Ye, L.; Roy, S.; Sundaram, S. On the complexity and approximability of optimal sensor selection for Kalman filtering. In Proceedings of the 2018 Annual American Control Conference (ACC), Milwaukee, WI, USA, 27–29 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 5049–5054.

- Tzoumas, V.; Jadbabaie, A.; Pappas, G.J. Sensor placement for optimal Kalman filtering: Fundamental limits, submodularity, and algorithms. In Proceedings of the 2016 American Control Conference (ACC), Boston, MA, USA, 6–8 July 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 191–196.

- Manohar, K.; Kutz, J.N.; Brunton, S.L. Optimal Sensor and Actuator Selection Using Balanced Model Reduction. IEEE Trans. Autom. Control 2021, 67, 2108–2115.

- Clark, E.; Kutz, J.N.; Brunton, S.L. Sensor selection with cost constraints for dynamically relevant bases. IEEE Sens. J. 2020, 20, 11674–11687.

- Zhou, K.; Salomon, G.; Wu, E. Balanced realization and model reduction for unstable systems. Int. J. Robust Nonlinear Control 1999, 9, 183–198.

- Nankai, K.; Ozawa, Y.; Nonomura, T.; Asai, K. Linear Reduced-order Model Based on PIV Data of Flow Field around Airfoil. Trans. Jpn. Soc. Aeronaut. Space Sci. 2019, 62, 227–235.

- Drmač, Z.; Saibaba, A.K. The Discrete Empirical Interpolation Method: Canonical Structure and Formulation in Weighted Inner Product Spaces. SIAM J. Matrix Anal. Appl. 2018, 39, 1152–1180.

- Zare, A.; Mohammadi, H.; Dhingra, N.K.; Georgiou, T.T.; Jovanovic, M.R. Proximal algorithms for large-scale statistical modeling and sensor/actuator selection. IEEE Trans. Autom. Control 2019, 65, 3441–3456.

- Boyd, S.P.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, UK, 2004.

- Gower, R.; Kovalev, D.; Lieder, F.; Richtarik, P. RSN: Randomized Subspace Newton. In Advances in Neural Information Processing Systems 32; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2019; pp. 616–625.

- Nonomura, T.; Ono, S.; Nakai, K.; Saito, Y. Randomized Subspace Newton Convex Method Applied to Data-Driven Sensor Selection Problem. IEEE Signal Processing Lett. 2021, 28, 284–288.

- Feige, U.; Mirrokni, V.S.; Vondrák, J. Maximizing non-monotone submodular functions. SIAM J. Comput. 2011, 40, 1133–1153.

- CVX Research, I. CVX: Matlab Software for Disciplined Convex Programming, (Version 2.0); CVX Research, Inc.: Austin, TX, USA, 2012; Available online: http://cvxr.com/cvx (accessed on 29 April 2023).

- Grant, M.; Boyd, S. Graph implementations for nonsmooth convex programs. In Recent Advances in Learning and Control; Blondel, V., Boyd, S., Kimura, H., Eds.; Lecture Notes in Control and Information Sciences; Springer: Berlin/Heidelberg, Germany, 2008; pp. 95–110. Available online: http://stanford.edu/~boyd/graph_dcp.html (accessed on 29 April 2023).

- Benner, P.; Mehrmann, V.; Sima, V.; Van Huffel, S.; Varga, A. SLICOT—A Subroutine Library in Systems and Control Theory: Bucharest 1, Romania. 1999. Available online: http://www.slicot.org/ (accessed on 29 April 2023).

- Van Huffel, S.; Sima, V.; Varga, A.; Hammarling, S.; Delebecque, F. High-performance numerical software for control. IEEE Control Syst. Mag. 2004, 24, 60–76.

- Barraud, A.Y. A numerical algorithm to solve A⊤XA − X = Q. In Proceedings of the 1977 IEEE Conference on Decision and Control including the 16th Symposium on Adaptive Processes and A Special Symposium on Fuzzy Set Theory and Applications, New Orleans, LA, USA, 7–9 December 1977; pp. 420–423.

- Hammarling, S.J. Numerical solution of the stable, non-negative definite Lyapunov equation. IMA J. Numer. Anal. 1982, 2, 303–323.

- Kitagawa, G. An algorithm for solving the matrix equation X = FXF⊤ + S. Int. J. Control 1977, 25, 745–753.

- Yamada, K. Sensor Placement Based on Observability Gramian. 2023. Available online: https://github.com/Aerodynamics-Lab/Sensor-Placement-Based-on-Observability-Gramian (accessed on 29 April 2023).

- Strassen, V. Gaussian elimination is not optimal. Numer. Math. 1969, 13, 354–356.

- Luo, Z.Q.; Ma, W.K.; So, A.; Ye, Y.; Zhang, S. Semidefinite Relaxation of Quadratic Optimization Problems. IEEE Signal Process. Mag. 2010, 27, 20–34.

- Nonomura, T.; Nankai, K.; Iwasaki, Y.; Komuro, A.; Asai, K. Quantitative evaluation of predictability of linear reduced-order model based on particle-image-velocimetry data of separated flow field around airfoil. Exp. Fluids 2021, 62, 112.

- Nonomura, T.; Nankai, K.; Iwasaki, Y.; Komuro, A.; Asai, K. Airfoil PIV Data for Linear ROM. 2021. Available online: https://github.com/Aerodynamics-Lab/Airfoil-PIV-data-for-linear-ROM (accessed on 29 April 2023).

- Saito, Y.; Yamada, K.; Kanda, N.; Nakai, K.; Nagata, T.; Nonomura, T.; Asai, K. Data-Driven Determinant-Based Greedy Under/Oversampling Vector Sensor Placement. CMES-Comput. Model. Eng. Sci. 2021, 129, 1–30.

- Yamada, K.; Saito, Y.; Nonomura, T.; Asai, K. Greedy Sensor Selection for Weighted Linear Least Squares Estimation under Correlated Noise. IEEE Access 2022, 10, 79356–79364.

- Reynolds, R.W.; Rayner, N.A.; Smith, T.M.; Stokes, D.C.; Wang, W. An improved in situ and satellite SST analysis for climate. J. Clim. 2002, 15, 1609–1625.

- NOAA. NOAA Optimal Interpolation (OI) Sea Surface Temperature (SST) V2; NOAA: Boulder, CO, USA, 2023. Available online: https://www.esrl.noaa.gov/psd/data/gridded/data.noaa.oisst.v2.html (accessed on 29 April 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).