Abstract

To mitigate the influence of satellite platform vibrations on space camera imaging quality, a novel approach is proposed to detect vibration parameters based on correlation imaging of rolling-shutter CMOS. In addition, a restoration method is proposed to address the image degradation of rolling-shutter CMOS caused by such vibrations. The vibration parameter detection method exploits the time-sharing, row-by-row imaging principle of rolling-shutter CMOS to obtain the relative offset by comparing two consecutive frames of correlation images. The space camera’s vibration parameters are then derived from the parameters of the curve fitted to the relative offset. From the detected vibration parameters, the discrete point spread function is obtained, and the rolling-shutter CMOS image degraded by vibration is restored row by row. The verification experiments demonstrate that the proposed detection method for two-dimensional vibration achieves a relative error of less than 1% in period detection and less than 2% in amplitude detection. Additionally, the proposed restoration method improves the MTF by over 20%. The experimental results show that the detection method can detect high-frequency vibrations from low-frame-rate image sequences and is applicable to both push-scan and staring cameras. The restoration method effectively improves the image quality evaluation parameters and restores degraded images well.

1. Introduction

During the operation of a remote sensing satellite in orbit, both internal and external factors can disturb the attitude of the satellite platform, producing vibrations that are transmitted to the space camera [1,2]. These vibrations displace the projection of objects on the focal plane during the integration time of the space camera, thereby degrading image quality [3,4]. With continuing progress in the design and manufacture of remote sensing optical systems and in the performance of optical imaging devices, the resolution of space cameras is steadily improving. However, these advances have also increased the sensitivity of cameras to vibration, making it an important factor affecting the image quality of high-resolution remote sensors [5]. Detecting vibration parameters is therefore important both for studying the vibration behavior of satellite platforms and for improving the imaging performance of space cameras. It also provides the basic data for vibration suppression and degraded image restoration.

Vibration parameter detection methods can be classified into two categories: direct detection using precision sensors, and detection based on digital image processing. Direct detection offers a high sampling frequency and high detection accuracy, and it has been widely adopted on space platforms such as Landsat-7, Pleiades, Yaogan-26, and others [6,7,8,9,10]. However, due to spatial constraints, a sensor cannot be installed directly on the focal plane. Vibrations measured at other locations are attenuated by internal damping measures and by structural conduction within the space camera, so the collected vibration information differs to some degree from the actual focal plane vibration [11,12]. Early detection methods based on digital image processing were developed primarily for single-frame images blurred by vibration [13,14]. These methods can only compute the point spread function under specific motion patterns and are susceptible to noise, which reduces their accuracy. Building on single-frame detection, multi-frame image sequence detection methods were developed [15,16,17,18]. In these methods, a reference image captured by a high-speed imaging device is compared with the image from the main imaging device to detect the vibration parameters. However, the reference image is noisy because of its short exposure time, which degrades the final detection accuracy. Moreover, according to the sampling theorem, the sampling frequency must be more than twice the vibration frequency to prevent information loss, so the high-speed imaging device must have a high frame rate. For certain space cameras with particular structures, such as those with overlapping imaging areas, techniques have been developed that use parallax observation images for detection [19,20,21,22]. These techniques require overlapping pixels in the camera imagery, and the number of overlapping pixels is influenced by the attitude of the satellite.

For images captured by a global-shutter array camera, all pixels are exposed simultaneously, so the vibration affects the entire image consistently. A single point spread function (PSF) can therefore be used for image restoration [10,23]. For a rolling-shutter array camera, the time-sharing, row-by-row imaging introduces differences and coupling between image rows, so a single PSF cannot be used to restore the whole image [24]. Currently, there is no specialized method for restoring vibration degradation in rolling-shutter array cameras. The only available reference is image restoration technology for linear-array push-scan sensors. Wolberg and Loce formulated an imaging model based on the operating principle of a linear-array push-scan camera, proposed a method for calculating the vibration PSF of linear-array cameras, and restored images blurred by vibration [25]. Researchers at Zhejiang University proposed a TDICCD vibration image restoration method that restores degraded images row by row by calculating the PSF of each row when the instantaneous speed of motion is known [26].

This paper proposes a method for detecting vibration parameters based on correlation imaging of rolling-shutter CMOS, together with a restoration method for images degraded by vibration in rolling-shutter CMOS cameras. Both methods are applicable to push-scan and staring cameras. The detection method exploits the correlation between two consecutive frames of rolling-shutter CMOS images: displacement information is extracted by comparing the frames, and the vibration parameters at the focal plane are obtained from it. Owing to the imaging characteristics of the rolling shutter, the minimum sampling period for detecting vibration parameters is set by the interval between the exposure start times of successive rows. The proposed detection method can therefore detect high-frequency vibration parameters from low-frame-rate image sequences. Based on the detected vibration parameters, the proposed point spread function discretization method and restoration method for rolling-shutter CMOS can effectively improve the image quality evaluation indices of degraded images.

In summary, the proposed vibration detection method makes full use of the imaging characteristics of rolling-shutter CMOS and effectively extends the detectable frequency range, while the proposed restoration method for vibration degradation in rolling-shutter CMOS effectively improves image quality. The remainder of this article is organized as follows: Section 2 presents the principle and derivation of the vibration detection method, Section 3 presents the principle and derivation of the degraded image restoration method, Section 4 describes the experimental method and results, and Section 5 presents the conclusions.

2. Vibration Parameter Detection

2.1. Influence of Vibration

The satellite’s vibration can be decomposed into a component along the optical axis and two mutually perpendicular components in the plane orthogonal to the optical axis. Because the object distance of a space camera is far larger than its focal length, vibration along the optical axis has a negligible impact on image quality. In the directions orthogonal to the optical axis, low-frequency vibrations cause significant jitter in the camera output, which appears as shifts, stretches, or compressions of the image, while high-frequency vibrations blur the image. Both low-frequency and high-frequency vibrations in these directions reduce the Modulation Transfer Function (MTF) and other image quality parameters. This paper focuses on detecting the vibration parameters in the directions orthogonal to the optical axis.

2.2. Principle of Detection Method

The rolling-shutter CMOS image sensor operates on the principle of time-sharing, row-by-row imaging. The rows begin and end their exposure in sequence, and every row has the same exposure duration. Therefore, although each row starts its exposure independently, the integration times of neighboring rows overlap, which couples the rows. The exposure intervals of the rows are thus staggered and coupled on the time axis. Meanwhile, for the same row of pixels in two consecutive frames, the interval between the exposure start times is constant and equals the time required to capture one frame. In this paper, we refer to this interrelated imaging process as correlation imaging.

In essence, sensor image capture involves the systematic sampling of scene information. The time-sharing and row-by-row imaging of the rolling-shutter CMOS is equivalent to sequentially sampling the scene according to the direction of the shutter. The duration of each sampling corresponds to the exposure time of a single row, while the sampling interval represents the temporal difference between the initial time of two consecutive rows’ exposure. Therefore, each row is subjected to varying magnitudes of vibration-induced impact during the continuous imaging process of a rolling-shutter CMOS. This implies that the corresponding image captures the effects of vibration throughout the time period, while the continuous sequence of images documents all vibrational data during the entire imaging process. The entire array of pixels in the global-shutter sensor is simultaneously exposed during a single time period, resulting in a sampling frequency equivalent to the frame frequency. In contrast, the rolling-shutter sensor significantly enhances the sampling frequency, expands the detection range of vibration frequencies, and enables high-frequency vibration detection through low-frame-rate image sequences.

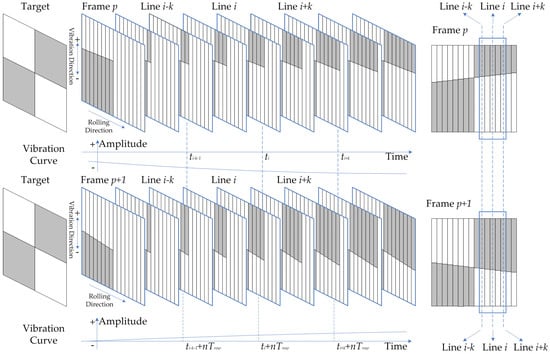

For analytical purposes, it is assumed that the space camera operates as a staring camera and that there is no relative motion with respect to the rolling-shutter CMOS image sensor other than vibration. The relationship between two consecutive frames is analyzed as illustrated in Figure 1: row i of frame p and row i of frame p + 1 capture the same scene with a fixed time interval of , where m represents the number of rows on the CMOS image sensor and denotes the temporal difference between the exposure start times of two consecutive rows, also known as the row time. If the imaging is affected by vibration and the period of vibration satisfies condition , , a relative offset appears in the scene captured at row i of the two consecutive frames. However, the data contained in a single row are insufficient for detecting the vibration offset, so rows adjacent to row i must also be used. In practical engineering projects, the camera is mounted on a flexible device, and the vibration transmitted to the camera is typically below 200 Hz, meaning that the vibration period exceeds 5 ms. Because the row time of the rolling-shutter CMOS is on the order of microseconds, the vibration can be regarded as affecting adjacent rows identically, which allows a single-row image to be expanded into a small image block suitable for vibration detection. Image registration methods, such as the gray projection algorithm and the normalized cross-correlation algorithm [27,28], can be employed to determine the relative offset between images captured at time and time of the same scene by comparing rows i − k − 1 to i + k in frame p with those in frame p + 1.

Figure 1.

Correlation imaging of rolling-shutter CMOS.

By segmenting an image frame into multiple blocks by modifying , the relative offset sequence can be obtained by comparing corresponding blocks between two consecutive frames, and the parameters of the offset curve can be fitted. The sampling interval is determined by the distance between image blocks, whereas the sampling frequency can be adjusted through the modification of this distance.
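As a rough illustration of the block comparison described above, the following Python sketch estimates the integer offset between one image block in frame p and the corresponding block in frame p + 1 using a simple gray projection. The function names, the squared-difference matching criterion, and the synthetic scene are assumptions made for illustration and are not taken from the paper; a practical implementation would also need sub-pixel refinement.

```python
def column_projection(block):
    """Gray projection: sum the gray values of each column of a block."""
    n_cols = len(block[0])
    return [sum(row[c] for row in block) for c in range(n_cols)]


def estimate_shift(ref_proj, cur_proj, max_shift):
    """Integer shift s such that cur_proj[i + s] best matches ref_proj[i].

    The match is scored by the mean squared difference over the overlap
    (an assumed criterion; other similarity measures could be used).
    """
    n = len(ref_proj)
    best_shift, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        lo, hi = max(0, -s), min(n, n - s)  # indices where both signals exist
        cost = sum((ref_proj[i] - cur_proj[i + s]) ** 2
                   for i in range(lo, hi)) / (hi - lo)
        if cost < best_cost:
            best_shift, best_cost = s, cost
    return best_shift


# Synthetic scene (hypothetical data): 8 rows x 70 columns, non-repeating pattern.
scene = [[(c * 37) % 101 for c in range(70)] for _ in range(8)]
block_p = [row[5:55] for row in scene]    # block in frame p
block_p1 = [row[2:52] for row in scene]   # same block in frame p + 1, content moved by 3 px

shift = estimate_shift(column_projection(block_p),
                       column_projection(block_p1), max_shift=8)
print("estimated horizontal offset (pixels):", shift)
```

Projecting along rows instead of columns gives the offset in the other direction, and repeating the comparison for every block pair yields the relative offset sequence that is subsequently fitted.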

Within a specific time interval, any vibration can be considered a periodic function that can be decomposed into a Fourier series. Therefore, this paper focuses primarily on sinusoidal vibrations. Assuming a sinusoidal vibration, the vibration equation is expressed as follows:

where . Within the integration time , the average offset of row i in frame p, which is affected by vibration, can be expressed as follows:

where , .

In a similar manner, the average offset of row i in frame p + 1, which is affected by vibration, can be expressed as follows:

Then, the relative offset between the row i of frame p and that of frame p + 1 can be expressed as:

Equation (4) represents the equation for relative offset. Meanwhile, it is assumed that the fitting equation for the detected offset is:

By comparing Equation (4) with Equation (5), vibration parameters can be obtained from the parameters , , and of the fitting equation for the detected offset. The specific calculation formula is:

Equations (6) to (8) give the vibration parameters. They apply to each of the two mutually perpendicular vibration components in the plane orthogonal to the optical axis.
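The chain from fitted offset curve to vibration parameters can be illustrated with a small Python sketch. It is an independent reconstruction under stated assumptions, not a transcription of Equations (6) to (8). It assumes a single sinusoid of amplitude A and angular frequency ω, averages it over the exposure time, and differences two frames one frame period apart. The relative offset is then a sinusoid of the same frequency whose amplitude B equals 2A·|sin(ω·t_exp/2)/(ω·t_exp/2)|·|sin(ω·t_frame/2)|. All function names, the grid-search fit, the 24 fps frame period, the 50-row block spacing, and the simulated vibration values are hypothetical.

```python
import math


def mean_offset(t_start, amp, freq, phase, t_exp):
    """Average of amp*sin(2*pi*freq*t + phase) over [t_start, t_start + t_exp]."""
    w = 2.0 * math.pi * freq
    return amp / (w * t_exp) * (math.cos(w * t_start + phase)
                                - math.cos(w * (t_start + t_exp) + phase))


def fit_sinusoid(times, values, freqs):
    """Grid search over freqs; linear least squares for a*sin + b*cos at each one.

    Returns (frequency, fitted amplitude) with the smallest squared residual.
    """
    best = None
    for f in freqs:
        w = 2.0 * math.pi * f
        s = [math.sin(w * t) for t in times]
        c = [math.cos(w * t) for t in times]
        ss = sum(x * x for x in s)
        cc = sum(x * x for x in c)
        sc = sum(x * y for x, y in zip(s, c))
        sy = sum(x * y for x, y in zip(s, values))
        cy = sum(x * y for x, y in zip(c, values))
        det = ss * cc - sc * sc
        if abs(det) < 1e-12:
            continue  # sin and cos columns are not independent for this frequency
        a = (sy * cc - cy * sc) / det
        b = (cy * ss - sy * sc) / det
        res = sum((y - a * si - b * ci) ** 2 for y, si, ci in zip(values, s, c))
        if best is None or res < best[0]:
            best = (res, f, math.hypot(a, b))
    return best[1], best[2]


def vibration_amplitude(fit_amp, freq, t_exp, t_frame):
    """Undo the exposure-averaging gain and the frame-differencing gain."""
    w = 2.0 * math.pi * freq
    half = w * t_exp / 2.0
    return fit_amp / (2.0 * abs(math.sin(half) / half)
                      * abs(math.sin(w * t_frame / 2.0)))


T_ROW = 20.52e-6                 # row time quoted in the experiment section
T_EXP = 20 * T_ROW               # exposure time of 20 row times
T_FRAME = 1.0 / 24.0             # assumed frame period (24 fps)
times = [k * 50 * T_ROW for k in range(20)]        # assumed: one block every 50 rows
true_amp, true_freq, true_phase = 2.0, 50.0, 0.3   # hypothetical vibration (px, Hz, rad)

relative = [mean_offset(t + T_FRAME, true_amp, true_freq, true_phase, T_EXP)
            - mean_offset(t, true_amp, true_freq, true_phase, T_EXP) for t in times]
freq_grid = [10.0 + 0.5 * i for i in range(381)]   # 10 Hz to 200 Hz in 0.5 Hz steps
f_est, b_est = fit_sinusoid(times, relative, freq_grid)
a_est = vibration_amplitude(b_est, f_est, T_EXP, T_FRAME)
print("estimated frequency (Hz):", f_est, " estimated amplitude (px):", a_est)
```

In this sketch the frame-differencing gain vanishes when the vibration frequency is an integer multiple of the frame rate, so such vibrations would produce no relative offset and could not be recovered this way.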

2.3. Influence of Image Motion

The preceding section examined vibration detection for a staring camera. This section examines vibration detection for a push-scan camera and evaluates the impact of image motion on the detection method. For a rolling-shutter CMOS sensor, every row has the same exposure time even though the rows start imaging at different times. Therefore, when the image motion is uniform, the image motion distance of each row is the same, and the point spread functions are consistent across all rows. It is assumed that the flight direction of the satellite is the x axis, the direction perpendicular to the flight direction is the y axis, and the imaging direction of the rolling shutter is perpendicular to the flight direction. We therefore need to investigate whether the shift along the x axis affects vibration detection. According to linear system theory, the degraded image under the influence of vibration and image motion is expressed as:

where represents the motion-free image and denotes the degradation function whose Fourier spectrum corresponds to the point spread function of motion blur. and denote the amount of vibration between the camera and object in the x and y directions at time t, while represents the degree of image movement in the flight direction.

According to Equation (1), the maximum vibration velocity of sinusoidal function is . Based on the vibration data obtained from the Yaogan-26 satellite, the maximum vibration velocity can be calculated to be of magnitude m/s. The image motion speed on the focal plane can be estimated to be of magnitude m/s for a space camera with an orbital altitude of 760 km and a focal length of 10 m. Due to the significant disparity between the two, the vibration offset is relatively negligible compared to the image displacement during the exposure time, and it can be considered a constant value throughout the imaging process. Formula (9) can be transformed as follows:

The Fourier spectrum of the degraded image is represented as follows:

Substitute Equation (10) into Equation (11) and transform it as follows:

Substitute and into Equation (12) to obtain:

Applying the inverse Fourier transform to both sides of Equation (13), we obtain:

Take ; then, Equation (14) can be transformed as follows:

Meanwhile, can be transformed as follows:

Due to , Equation (16) is transformed into:

Image motion is characterized by uniform motion, which can be mathematically expressed as and subsequently substituted into Equation (17):

According to Equation (18), function is a normalized function.

The vibration offset of a specific image row, as shown in Equation (15), is the result of synthesizing multiple rows involved in . In other words, the offset of an image affected by vibration under the influence of image motion can be expressed as:

Moreover, as the vibration offset remains constant over a period of time , where is a fixed value and , Equation (19) can be transformed as follows:

As serves as a normalization function, Equation (20) is thereby transformed as follows:

According to Equation (21), if the velocity of image motion greatly exceeds that of the vibration, the offset caused by vibration in the image is not affected by the image motion. Image motion therefore does not significantly affect the proposed correlation-imaging detection method for rolling-shutter CMOS, and vibration parameters can be detected directly without taking image motion into account. In conclusion, the proposed method is applicable to both push-scan and staring space cameras.

3. Degraded Image Restoration

After the vibration parameters have been obtained with the proposed detection method, the degraded images can be restored. However, because of the time-sharing, row-by-row exposure of rolling-shutter CMOS, the restoration procedure differs from that for global-shutter CMOS. The following subsections describe the discretization of the point spread function and the restoration method for vibration-degraded images.

3.1. Method of PSF Discretization

In order to facilitate the calculation, the influence of noise and image motion is not considered in the derivation of the formula. Then, the degraded image can be obtained from Equation (9) as follows:

Equation (22) is a continuous function; however, digital images are discrete in nature. Therefore, prior to any digital image processing, discretization must be performed. For rolling-shutter CMOS, the exposure time is an integral multiple () of the line time, namely, , which can be obtained by substituting into Equation (22):

For the vibration with a vibration function of , the maximum distance of vibration within is:

Taking the vibration parameters of the Yaogan-26 satellite as an example, when substituted into Equation (24), the resulting vibration distance within time will not exceed 0.1 pixel. In the range , can be regarded as a constant value.

If we define that , then Equation (23) can be transformed as follows:

Define and . Since and may not be integers, and pixel coordinates in digital images must be integer values, the linear interpolation method is employed for fitting. Define and , where represents rounding down to the nearest integer, and define:

By utilizing linear interpolation fitting, we can obtain:

Here it is defined:

If Equations (31) to (34) are substituted into Equation (30), the following results can be obtained:

Equation (35) is then extended to the whole image range: except at the above four pixel positions, the PSF is 0 everywhere. Equation (35) can thus be transformed as follows:

where is:

Substituting Equation (36) into Equation (25), we obtain:

Comparing this with Equation (22), it is evident that:

Based on known parameters of vibration, the discrete point spread function of vibration can be derived utilizing Equation (39).
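The discretization described in this subsection can be sketched in Python as follows. The exposure is split into row-time sub-intervals, the displacement is treated as constant within each one, each non-integer displacement is spread over its four neighboring pixels by linear interpolation, and the contributions are averaged. The function name, the grid layout, the sign convention of the displacement, and the example vibration values are assumptions for illustration rather than the paper's exact formulation.

```python
import math


def discrete_psf(displacements, radius):
    """Average the bilinear footprints of per-sub-interval displacements.

    displacements: list of (dx, dy) in pixels, one per row-time sub-interval.
    radius: half-size of the (2*radius + 1) x (2*radius + 1) PSF grid;
            the centre element corresponds to zero displacement.
    """
    size = 2 * radius + 1
    psf = [[0.0] * size for _ in range(size)]
    n = len(displacements)
    for dx, dy in displacements:
        x0, y0 = math.floor(dx), math.floor(dy)   # integer parts (rounded down)
        fx, fy = dx - x0, dy - y0                 # fractional parts in [0, 1)
        if not (-radius <= x0 < radius and -radius <= y0 < radius):
            raise ValueError("displacement does not fit in the PSF grid")
        corners = ((0, 0, (1 - fx) * (1 - fy)), (1, 0, fx * (1 - fy)),
                   (0, 1, (1 - fx) * fy), (1, 1, fx * fy))
        for ox, oy, weight in corners:
            psf[y0 + oy + radius][x0 + ox + radius] += weight / n
    return psf


# Hypothetical example: 20 sub-intervals of one row time each, 50 Hz vibration.
T_ROW = 20.52e-6
N_SUB = 20
AMP_X, AMP_Y, FREQ = 2.0, 1.0, 50.0   # assumed amplitudes (pixels) and frequency (Hz)
T_START = 0.004                       # assumed exposure start time of the row (s)

disp = []
for j in range(N_SUB):
    phase = 2.0 * math.pi * FREQ * (T_START + j * T_ROW)
    disp.append((AMP_X * math.sin(phase), AMP_Y * math.sin(phase)))

psf = discrete_psf(disp, radius=3)
print("PSF sum:", sum(sum(row) for row in psf))
```

Because the four interpolation weights of each sub-interval sum to one, the resulting PSF is normalized by construction.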

3.2. Degraded Image Restoration of Rolling-Shutter CMOS

In the rolling-shutter CMOS camera, the exposure start time of each row varies, resulting in a unique point spread function for each row. Therefore, the utilization of a unified PSF for complete image restoration is unfeasible, necessitating row-by-row restoration.

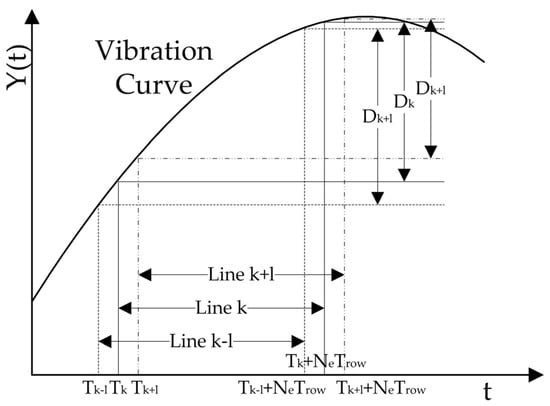

Figure 2 illustrates the operation of a rolling-shutter CMOS camera: the pixel located in row k performs exposure imaging within the range of to , and undergoes a focal plane displacement of during the exposure process; in a similar manner, the pixel located in row k − l performs exposure imaging within the range of to , and undergoes a focal plane displacement of during the exposure process; the pixel located in row k + l performs exposure imaging within the range of to , and undergoes a focal plane displacement of during the exposure process. It is evident that the vibration distance varies during the imaging process of different rows, indicating a distinct PSF for each row. However, the vibration effects overlap in several adjacent rows. By appropriately selecting l, we can approximate that the vibration effect on the image remains consistent from row k − l to row k + l. The vibration effect of these rows is consistent with that of row k, indicating that the point spread functions within this range are identical to those of row k. Thus, the point spread function of row k can be utilized for image restoration of rows k − l to k + l. The central row of the restored image is then designated as the rolling-shutter CMOS restored image’s row k. Following this process, the same operation is applied to each row of the image, resulting in a restored rolling-shutter CMOS image of the entire frame.

Figure 2.

The impact of vibration on various rows of rolling-shutter CMOS.
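The row-by-row procedure can be outlined in Python as a minimal sketch. For each row k, the block of rows k − l to k + l is restored with the PSF of row k, and only the row corresponding to k is kept. The deconvolution step is passed in as a caller-supplied function because the text does not specify the algorithm at this point. The function names and the clamping of the block at the image borders are assumptions.

```python
def restore_rolling_shutter(image, row_psfs, half_window, deconvolve):
    """Restore each row k from rows k-l..k+l using the PSF of row k.

    image: list of rows (lists of gray values).
    row_psfs: row_psfs[k] is the PSF assumed valid around row k.
    half_window: the value l from the text.
    deconvolve: callable (block, psf) -> restored block of the same shape.
    """
    n_rows = len(image)
    restored = []
    for k in range(n_rows):
        top = max(0, k - half_window)              # clamp the block at the borders
        bottom = min(n_rows, k + half_window + 1)
        block = [list(row) for row in image[top:bottom]]
        restored_block = deconvolve(block, row_psfs[k])
        restored.append(restored_block[k - top])   # keep only the row that was row k
    return restored


def identity_deconvolve(block, psf):
    """Placeholder that returns the block unchanged (no real restoration)."""
    return block


# Hypothetical 5 x 3 image; the "PSFs" are plain tags so the data flow is visible.
image = [[r * 3 + c for c in range(3)] for r in range(5)]
unchanged = restore_rolling_shutter(image, [None] * 5, 1, identity_deconvolve)
print(unchanged == image)
```

Replacing `identity_deconvolve` with an actual deconvolution routine turns this skeleton into a restoration pipeline. The cost grows with the window size, since every row triggers a deconvolution of 2l + 1 rows.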

4. Experiment

4.1. Platform of Vibration Detection

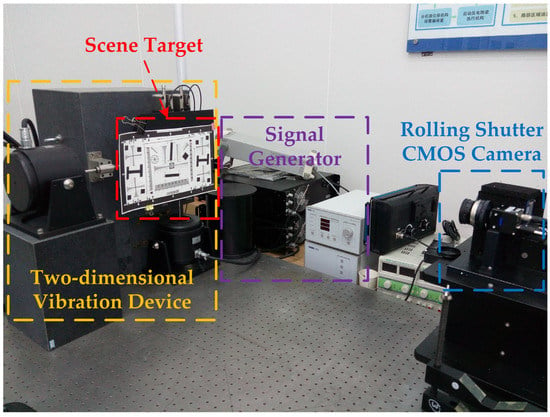

The experimental equipment mainly included an optical platform, two-dimensional vibration device, signal generator, rolling-shutter CMOS camera, scene target, etc. The experimental platform is shown in Figure 3.

Figure 3.

Platform of vibration detection.

4.2. Experiment of Vibration Detection and Result Analysis

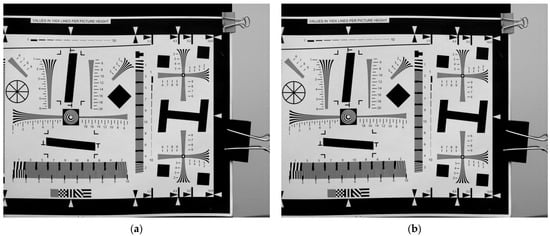

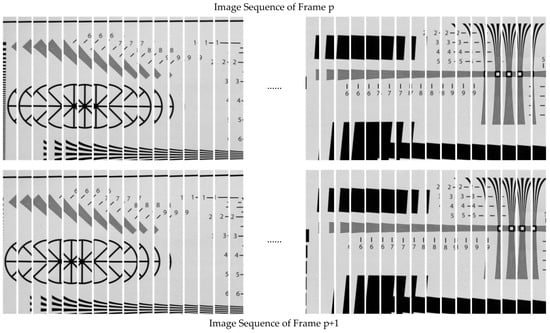

Since the motion is relative, the target motion was used in the experiment to simulate camera vibration. A two-dimensional vibration device was used to add vibration excitation to the target in both horizontal and vertical directions. The equivalent vibration parameters on the focal plane after conversion are shown in Table 1. The direction of the camera rolling shutter was horizontal. First, we selected the ISO 12233 camera resolution chart as the scene target. The rolling-shutter CMOS camera has a row time of 20.52 μs, with an exposure time that is 20 times the row time (410.4 μs). The correlation images captured in two consecutive frames are presented in Figure 4. It can be seen from Figure 4a,b that under the influence of vibration, the straight line in the horizontal direction distorted, while the straight line in the vertical direction shifted in the transversal direction. There were also some differences in vibration effects between frame p and frame p + 1.

Table 1.

The equivalent vibration parameters of the focal plane.

Figure 4.

Correlation image sequence impacted by vibration (ISO 12233). (a) Frame p; (b) frame p + 1.

The two consecutively exposed frames were windowed with a suitable window size and window interval. The window interval sets the sampling interval, and hence the sampling frequency, of the vibration detection. The two groups of windowed image sequences are shown in Figure 5. Comparing the image sequences shows displacements in both the horizontal and vertical directions between the two frames, indicating that they were affected by vibration, which caused twisting and stretching. Furthermore, the vibration affected frame p and frame p + 1 differently.

Figure 5.

Windowing of correlation image.

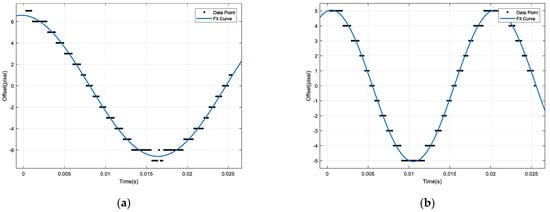

A vibration parameter detection algorithm based on rolling-shutter CMOS correlation imaging was utilized to compute the gray projection of corresponding image pairs in two sets of windowing image sequences. The relative offset array of vibration between two continuous exposure rolling-shutter CMOS correlation images was subsequently obtained. By performing Fourier fitting on the array, we can obtain the fitting curve and its corresponding parameters for vibration offset. The offset fitting curves for the horizontal and vertical directions are depicted in Figure 6.

Figure 6.

Relative offset fitting curve. (a) Horizontal; (b) vertical.

According to the fitting curve parameters, the horizontal and vertical vibration parameters can be obtained from Equations (6) to (8), and the results are shown in Table 2.

Table 2.

Vibration detection results and analysis (ISO 12233).

To simulate the in-orbit imaging state, the scene target was replaced by a satellite remote sensing image printed with a high-definition printer. The two consecutively exposed correlation images are shown in Figure 7.

Figure 7.

Correlation image sequence impacted by vibration (in-orbit simulation). (a) Frame p; (b) frame p + 1.

Applying the same processing yields the results shown in Table 3.

Table 3.

Vibration detection results and analysis (in-orbit simulation).

Table 2 and Table 3 show that the proposed detection method detects the vibration parameters of space cameras accurately, with a relative error of less than 1% in period detection and less than 2% in amplitude detection. By the sampling theorem, detecting a 50 Hz vibration with a global-shutter imaging device requires a frame rate above 100 fps. The rolling-shutter CMOS, however, requires only 24 fps to detect such vibrations accurately, thereby achieving the objective of detecting high-frequency vibrations from a low-frame-rate image sequence.
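The sampling-rate comparison can be made concrete with a short Python calculation. The row time is the value quoted for the experimental camera. The spacing of 50 rows between image blocks is an assumed value chosen for illustration, and the function names are hypothetical.

```python
def min_global_shutter_fps(vibration_hz):
    """Sampling-theorem bound: the frame rate must exceed twice the vibration frequency."""
    return 2.0 * vibration_hz


def rolling_shutter_sampling_hz(row_time_s, rows_between_blocks):
    """Sampling rate when one offset sample is taken every rows_between_blocks rows."""
    return 1.0 / (row_time_s * rows_between_blocks)


ROW_TIME = 20.52e-6    # row time reported for the experimental camera (s)
BLOCK_STEP = 50        # assumed spacing between image blocks, in rows

fs = rolling_shutter_sampling_hz(ROW_TIME, BLOCK_STEP)
print("global shutter needs more than", min_global_shutter_fps(50.0), "fps")
print("rolling-shutter offset samples per second:", round(fs, 1))
print("highest vibration frequency below the Nyquist limit:", round(fs / 2.0, 1), "Hz")
```

Under these assumptions the within-frame sampling rate is set by the row time and block spacing rather than by the frame rate, which is why a 24 fps sequence can still resolve a 50 Hz vibration.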

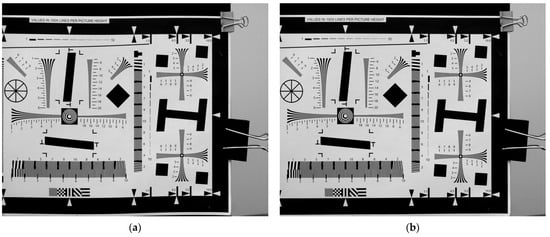

4.3. Experiment of Image Restoration and Result Analysis

According to the proposed image restoration method in this paper, a degraded image can be restored by utilizing detected vibration parameters. The pre- and post-restoration comparison of ISO 12233 as the scene target is illustrated in Figure 8, while the pre- and post-restoration comparison of a satellite remote sensing image as the scene target is depicted in Figure 9.

Figure 8.

Pre- and post-restoration comparison of ISO 12233 as the scene target. (a) Pre-restoration; (b) post-restoration.

Figure 9.

Pre- and post-restoration comparison of in-orbit simulation as the scene target. (a) Pre-restoration; (b) post-restoration.

The twisting and stretching of the image are visibly reduced after restoration. To assess the restoration quantitatively, the evaluation parameters of the correlation images were computed before and after restoration. The imaging quality evaluation parameters used in this paper are the Peak Signal-to-Noise Ratio (PSNR), the Structural Similarity Index Measure (SSIM), and the MTF. PSNR quantifies the level of signal distortion between images; higher values indicate less distortion. SSIM measures the similarity between two images; its values range from 0 to 1, and the closer the value is to 1, the more similar the images are. MTF is a crucial parameter for the objective assessment of camera imaging quality, as it reflects the camera’s ability to transfer spatial frequency information. In this paper, the MTF is determined using the slanted-edge method, and the MTF values in the horizontal and vertical directions are computed separately. The imaging quality evaluation parameters before and after restoration are presented in Table 4.

Table 4.

Imaging quality evaluation parameters pre- and post-restoration.
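For reference, PSNR can be computed with the standard definition, 10·log10(MAX² / MSE). The Python sketch below assumes 8-bit gray images stored as nested lists; the function name and the tiny example images are illustrative only and are unrelated to the experimental data.

```python
import math


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized gray images."""
    count = 0
    squared_error = 0.0
    for ref_row, test_row in zip(reference, test):
        for r, t in zip(ref_row, test_row):
            squared_error += (r - t) ** 2
            count += 1
    if squared_error == 0:
        return float("inf")          # identical images: no distortion
    mse = squared_error / count
    return 10.0 * math.log10(max_value ** 2 / mse)


# Hypothetical 2 x 2 example: one pixel differs by the full 8-bit range.
reference = [[0, 0], [0, 0]]
distorted = [[0, 0], [0, 255]]
print("PSNR (dB):", round(psnr(reference, distorted), 4))
```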

The experimental results demonstrate that the proposed restoration method significantly improves the imaging quality evaluation parameters. In particular, it improves the MTF by over 20%, indicating its efficacy in mitigating the image quality degradation caused by vibration. This also indirectly validates the accuracy of the vibration parameter detection method.

5. Conclusions

A vibration parameter detection method based on correlation imaging of rolling-shutter CMOS is proposed in this paper. The method detects the vibration parameters of space cameras effectively and accurately, with a relative error of less than 1% in period detection and less than 2% in amplitude detection. Furthermore, by exploiting the time-sharing, row-by-row imaging principle of rolling-shutter CMOS, it detects high-frequency vibration from a low-frame-rate image sequence. After the vibration parameters have been detected and the discrete point spread function obtained, the proposed restoration method for rolling-shutter CMOS effectively improves the image quality evaluation parameters. In particular, it improves the MTF by over 20%, indicating its effectiveness in restoring images degraded by vibration. The proposed methods are applicable to both push-scan and staring space cameras and provide useful support for vibration suppression in space cameras.

Author Contributions

Conceptualization, H.L. (Hailong Liu); methodology, H.L. (Hailong Liu); software, H.L. (Hailong Liu); validation, H.L. (Hengyi Lv), Y.Z. and C.H.; formal analysis, H.L. (Hailong Liu); investigation, H.L. (Hailong Liu); resources, H.L. (Hailong Liu); data curation, H.L. (Hailong Liu); writing—original draft preparation, H.L. (Hailong Liu); writing—review and editing, H.L. (Hengyi Lv); visualization, H.L. (Hailong Liu); supervision, H.L. (Hengyi Lv) and Y.Z.; project administration, C.H.; funding acquisition, H.L. (Hailong Liu). All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (62005269).

Data Availability Statement

No new data were created or analyzed in this study. Data sharing does not apply to this article.

Acknowledgments

We sincerely appreciate the editors and reviewers for their helpful comments and constructive suggestions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zaman, I.U.; Boyraz, O. Impact of receiver architecture on small satellite optical link in the presence of pointing jitter. Appl. Opt. 2020, 59, 10177–10184.

- Yu, Z.; Jiang, L.; Ling, K.; Yao, Z. Study on the Influence of Random Vibration of Space-Based Payload on Area-Array Camera Frame-by-Frame Imaging. Photonics 2022, 9, 455.

- Yang, L.; Wang, Y.S.; Wei, L.; Hu, Z.Q. Study on microvibration effect of an optical satellite based on the imaging absence method. Opt. Eng. 2021, 60, 013107.

- Xu, J.; Wang, D.; Nie, K.; Gao, J. Digital domain dynamic path accumulation method to compensate for image vibration distortion for CMOS-time-delay-integration image sensor. Opt. Eng. 2020, 59, 103101.

- Kim, S.; Youk, Y. Suppressing effects of micro-vibration for MTF measurement of high-resolution electro-optical satellite payload in an optical alignment ground facility. Opt. Express 2023, 31, 4942–4953.

- Dial, G.; Bowen, H.; Gerlach, F.; Grodecki, J.; Oleszczuk, R. IKONOS satellite, imagery, and products. Remote Sens. Environ. 2003, 88, 23–36.

- Aguilar, M.A.; del Mar Saldana, M.; Aguilar, F.J. Assessing geometric accuracy of the orthorectification process from GeoEye-1 and WorldView-2 panchromatic images. Int. J. Appl. Earth Obs. Geoinf. 2013, 21, 427–435.

- Wang, M.; Fan, C.; Pan, J.; Jin, S.; Chang, X. Image jitter detection and compensation using a high-frequency angular displacement method for Yaogan-26 remote sensing satellite. ISPRS J. Photogramm. Remote Sens. 2017, 130, 32–43.

- Wang, X.; Li, C.; Jia, J.; Wu, J.; Shu, R.; Zhang, L.; Wang, J. Angular micro-vibration of the Micius satellite measured by an optical sensor and the method for its suppression. Appl. Opt. 2021, 60, 1881–1887.

- Yue, R.; Wang, H.; Jin, T.; Gao, Y.; Sun, X.; Yan, T.; Zang, J.; Yin, K.; Wang, S. Image Motion Measurement and Image Restoration System Based on an Inertial Reference Laser. Sensors 2021, 21, 3309.

- Huang, J.; Qiu, M.J.; Hou, P. Determination of satellite structural modes of vibration using machine vision. Opt. Eng. 2020, 59, 014101.

- Park, Y.-H.; Kwon, S.-C.; Koo, K.-R.; Oh, H.-U. High Damping Passive Launch Vibration Isolation System Using Superelastic SMA with Multilayered Viscous Lamina. Aerospace 2021, 8, 201.

- Yitzhaky, Y.; Boshusha, G.; Levi, Y.; Kopeika, N.S. Restoration of an image degraded by vibrations using only a single frame. Opt. Eng. 2000, 39, 2083–2091.

- Stern, A.; Kempner, E.; Shukrun, A.; Kopeika, N.S. Restoration and resolution enhancement of a single image from a vibration-distorted image sequence. Opt. Eng. 2000, 39, 2451–2457.

- Stiller, C.; Konrad, J. Estimating motion in image sequences. IEEE Signal Process. Mag. 1999, 16, 70–91.

- Timoner, S.J.; Freeman, D.M. Multi-Image Gradient-Based Algorithms for Motion Estimation. Opt. Eng. 2001, 40, 2003–2006.

- Liu, H.; Li, X.; Xue, X.; Han, C.; Hu, C.; Sun, X. Vibration parameter detection of space camera by taking advantage of CMOS self-correlation Imaging of plane array of roller shutter. Opt. Precis. Eng. 2016, 24, 1474–1481.

- Zhu, Y.; Yang, T.; Wang, M.; Hong, H.; Zhang, Y.; Wang, L.; Rao, Q. Jitter Detection Method Based on Sequence CMOS Images Captured by Rolling Shutter Mode for High-Resolution Remote Sensing Satellite. Remote Sens. 2022, 14, 342.

- Hochman, G.; Yitzhaky, Y.; Kopeika, N.S.; Lauber, Y.; Citroen, M.; Stern, A. Restoration of images captured by a staggered time delay and integration camera in the presence of mechanical vibrations. Appl. Opt. 2004, 43, 4345–4354.

- Liu, H.; Han, C.; Li, X.; Jiang, X.; Sun, X.; Fu, Y. Vibration parameter measurement of TDICCD space camera with mechanical assembly. Opt. Precis. Eng. 2015, 23, 720–737.

- Zhu, Y.; Wang, M.; Cheng, Y.; He, L.; Xue, L. An Improved Jitter Detection Method Based on Parallax Observation of Multispectral Sensors for Gaofen-1 02/03/04 Satellites. Remote Sens. 2019, 11, 16.

- Liu, H.; Ma, H.; Jiang, Z.; Yan, D. Jitter detection based on parallax observations and attitude data for Chinese Heavenly Palace-1 satellite. Opt. Express 2019, 27, 1099–1123.

- He, L.; Cui, G.; Feng, H.; Xu, Z.; Li, Q.; Chen, Y. Fast image restoration method based on coded exposure and vibration detection. J. Opt. Eng. 2015, 54, 103107.

- Zhang, S.; Xing, F.; Sun, T.; You, Z. Variable Angular Rate Measurement for a Spacecraft Based on the Rolling Shutter Mode of a Star Tracker. Electronics 2023, 12, 1875.

- Wolberg, G.; Loce, R. Restoration of images scanned in the presence of vibrations. J. Electron. Imaging 1996, 5, 50–61.

- Wu, J.; Zheng, Z.; Feng, H.; Xu, Z.; Qi, L.; Chen, Y. Restoration of TDI camera images with motion distortion and blur. Opt. Laser Technol. 2010, 42, 1198–1203.

- Zhang, C.; Li, D. Mechanical and Electronic Video Stabilization Strategy of Mortars with Trajectory Correction Fuze Based on Infrared Image Sensor. Sensors 2020, 20, 2461.

- Wang, J.; Lv, X.; Huang, Z.; Fu, X. An Epipolar HS-NCC Flow Algorithm for DSM Generation Using GaoFen-3 Stereo SAR Images. Remote Sens. 2023, 15, 129.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).