Towards Recognition of Human Actions in Collaborative Tasks with Robots: Extending Action Recognition with Tool Recognition Methods

Abstract

1. Introduction

1.1. Application Domain

1.2. Research Gap

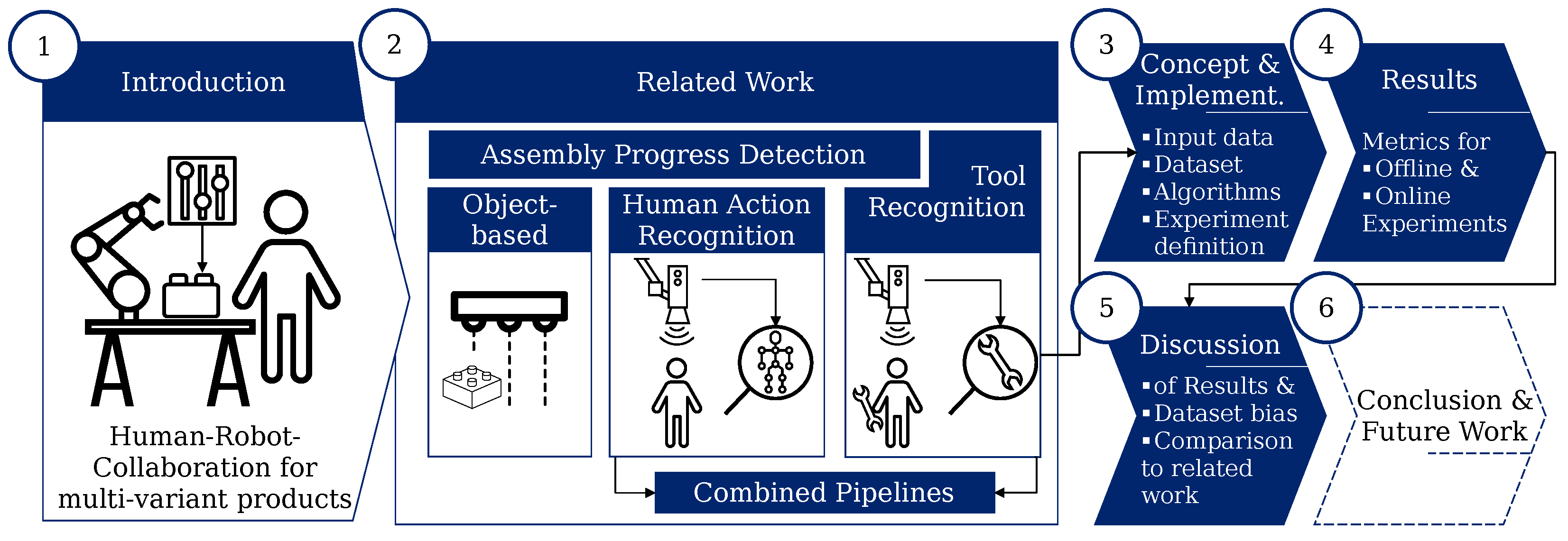

1.3. Outline of This Work

2. Related Work

2.1. Progress Detection in Manual Assembly

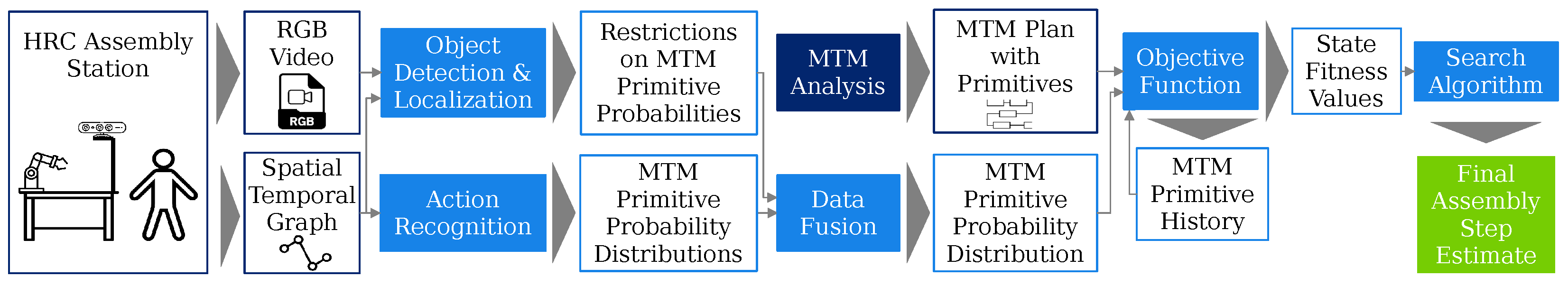

2.1.1. Assembly Step Estimation

2.1.2. Human Action Recognition Pipeline

2.2. Visual Tool Recognition

2.2.1. Tool-Recognition Work

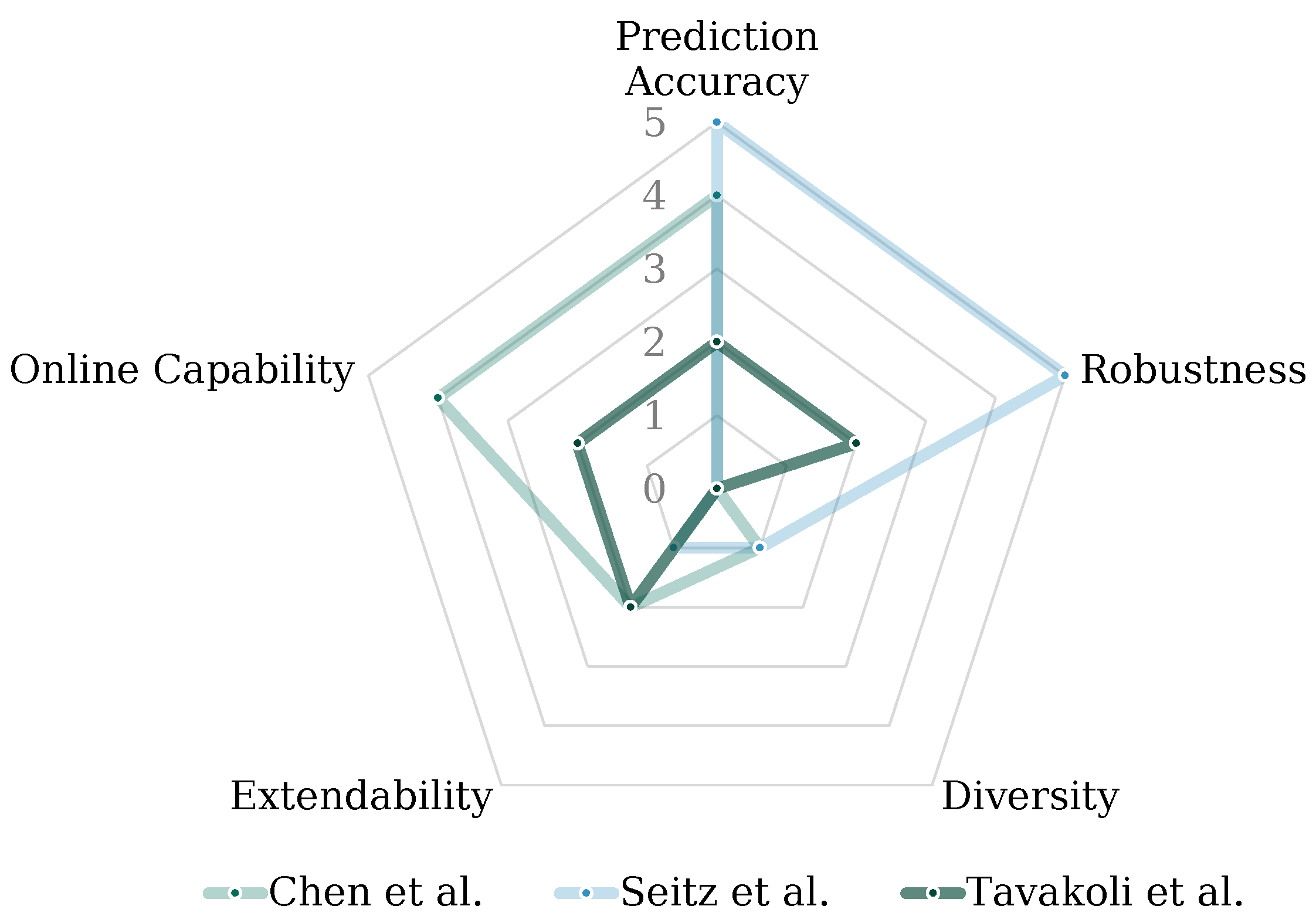

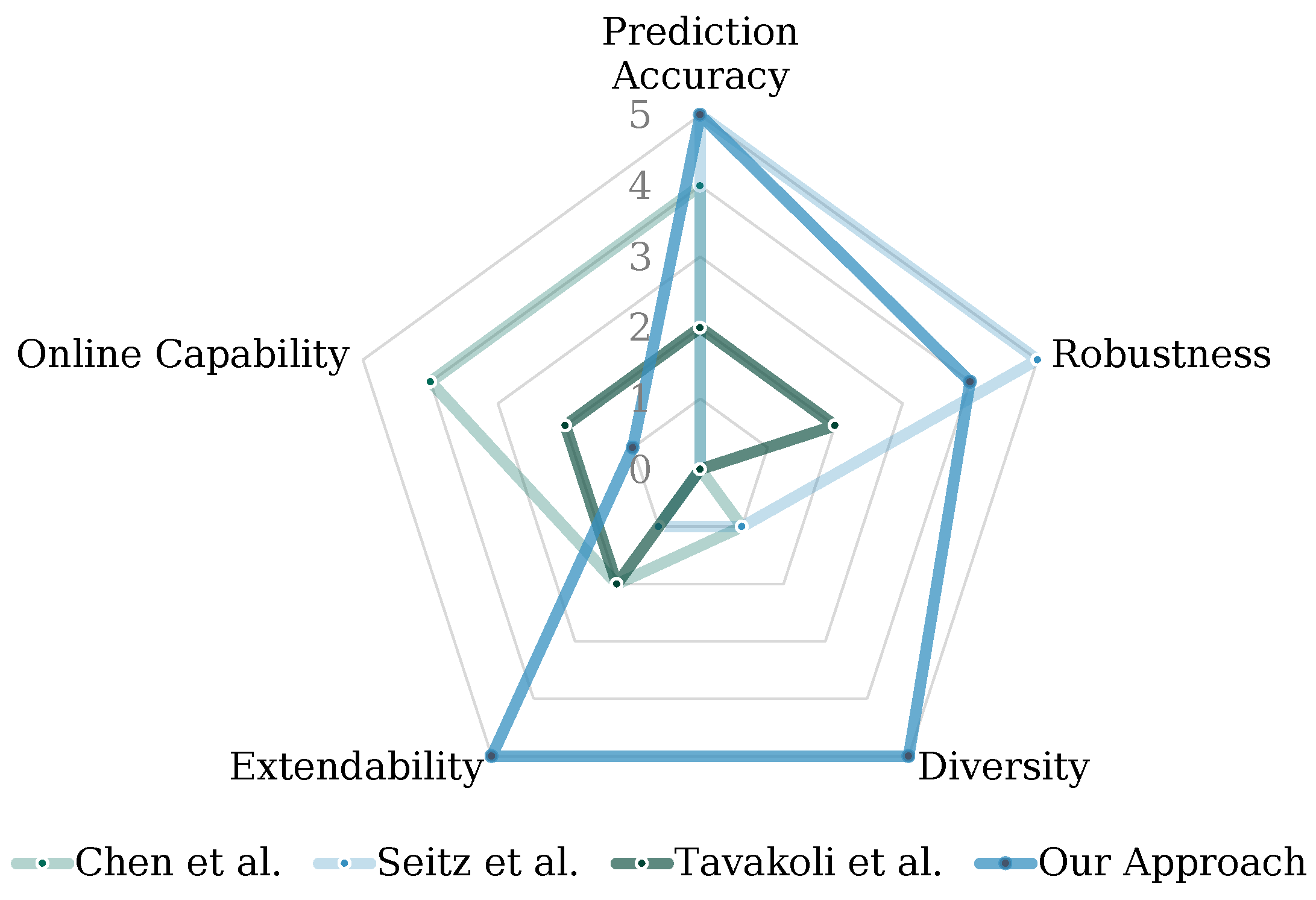

- Prediction accuracy: Sufficient tool prediction accuracy is necessary to avoid misclassifications of detected tools.

- Robustness: Robustness of the tool-recognition applications is necessary to account for changes in the application environment, such as backgrounds and lighting.

- Tool/object/action diversity: Diversity of the tool spectrum considered.

- Extendability and flexibility: If new components/processes/tools are introduced, extensions of the tool-recognition applications are necessary. This criterion evaluates whether the considered approach can be extended with new classes and how it handles non-tool-related manual processes.

- Online capability: Capability of the approach to perform at 30 FPS as it is to be used in near real-time environments.
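The online-capability criterion above can be checked with a simple throughput measurement. The following is a minimal sketch using only the Python standard library; the helper names (`time_frames`, `achieved_fps`, `meets_online_target`) are hypothetical and not part of the described pipeline, and `process_frame` stands in for whatever per-frame processing is being evaluated.

```python
import time
from typing import Callable, Iterable, List

TARGET_FPS = 30.0  # target named in the online-capability criterion


def time_frames(process_frame: Callable[[object], object],
                frames: Iterable[object]) -> List[float]:
    """Return the wall-clock processing time in seconds for each frame."""
    durations = []
    for frame in frames:
        start = time.perf_counter()
        process_frame(frame)  # hypothetical per-frame pipeline call
        durations.append(time.perf_counter() - start)
    return durations


def achieved_fps(durations: List[float]) -> float:
    """Average frames per second over the measured frames."""
    total = sum(durations)
    if not durations or total <= 0:
        raise ValueError("need at least one frame with positive duration")
    return len(durations) / total


def meets_online_target(durations: List[float],
                        target_fps: float = TARGET_FPS) -> bool:
    """True if the average throughput reaches the target frame rate."""
    return achieved_fps(durations) >= target_fps
```

Averaging over many frames is a deliberate simplification; a stricter check could also look at the slowest frames, since occasional stalls matter in near real-time use.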

2.2.2. Data Availability for Tool Recognition

3. Tool-Recognition Pipeline—Concept and Implementation

3.1. Acquisition of Input Data

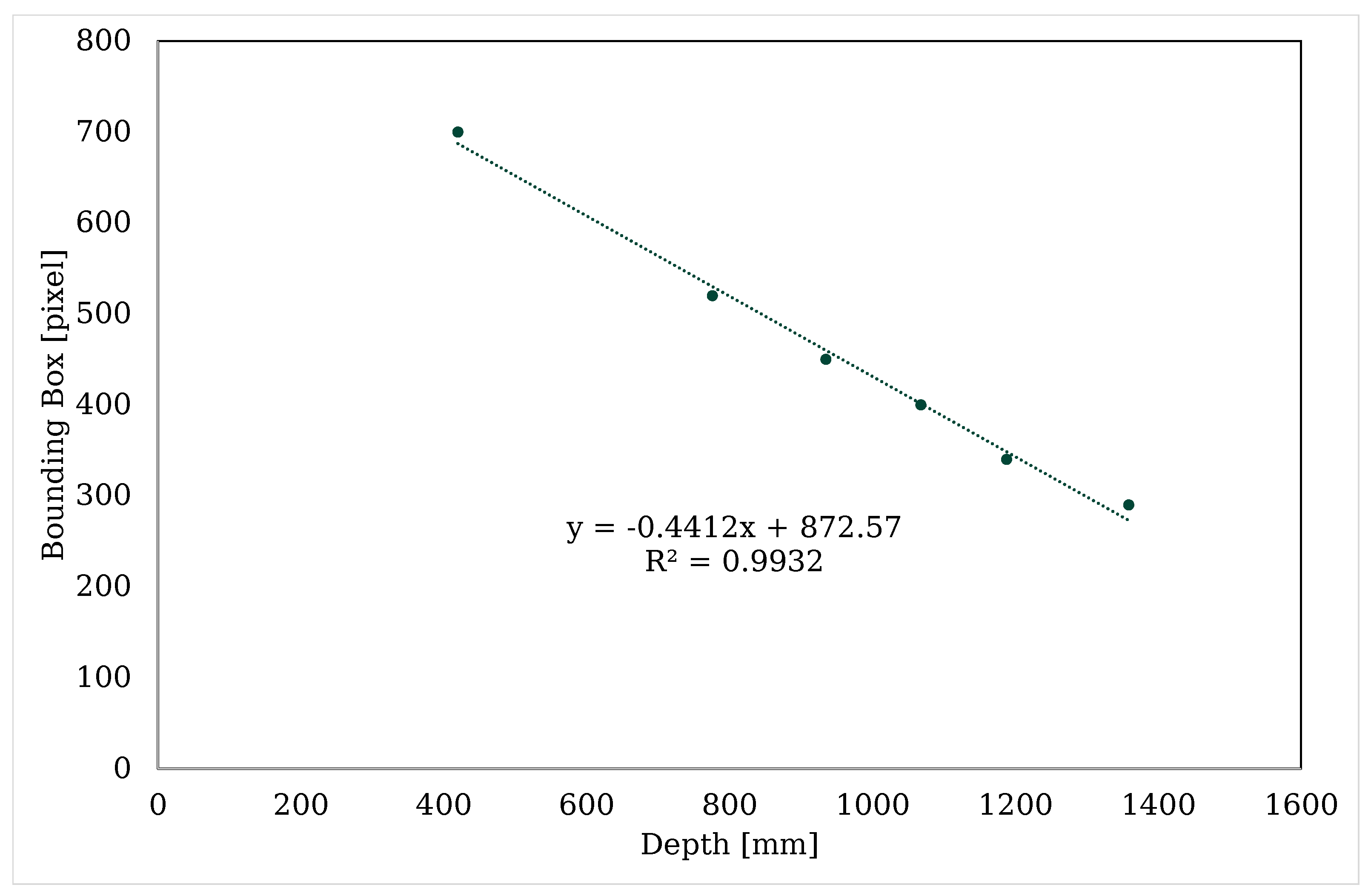

3.2. Region of Interest

3.3. Image Classification

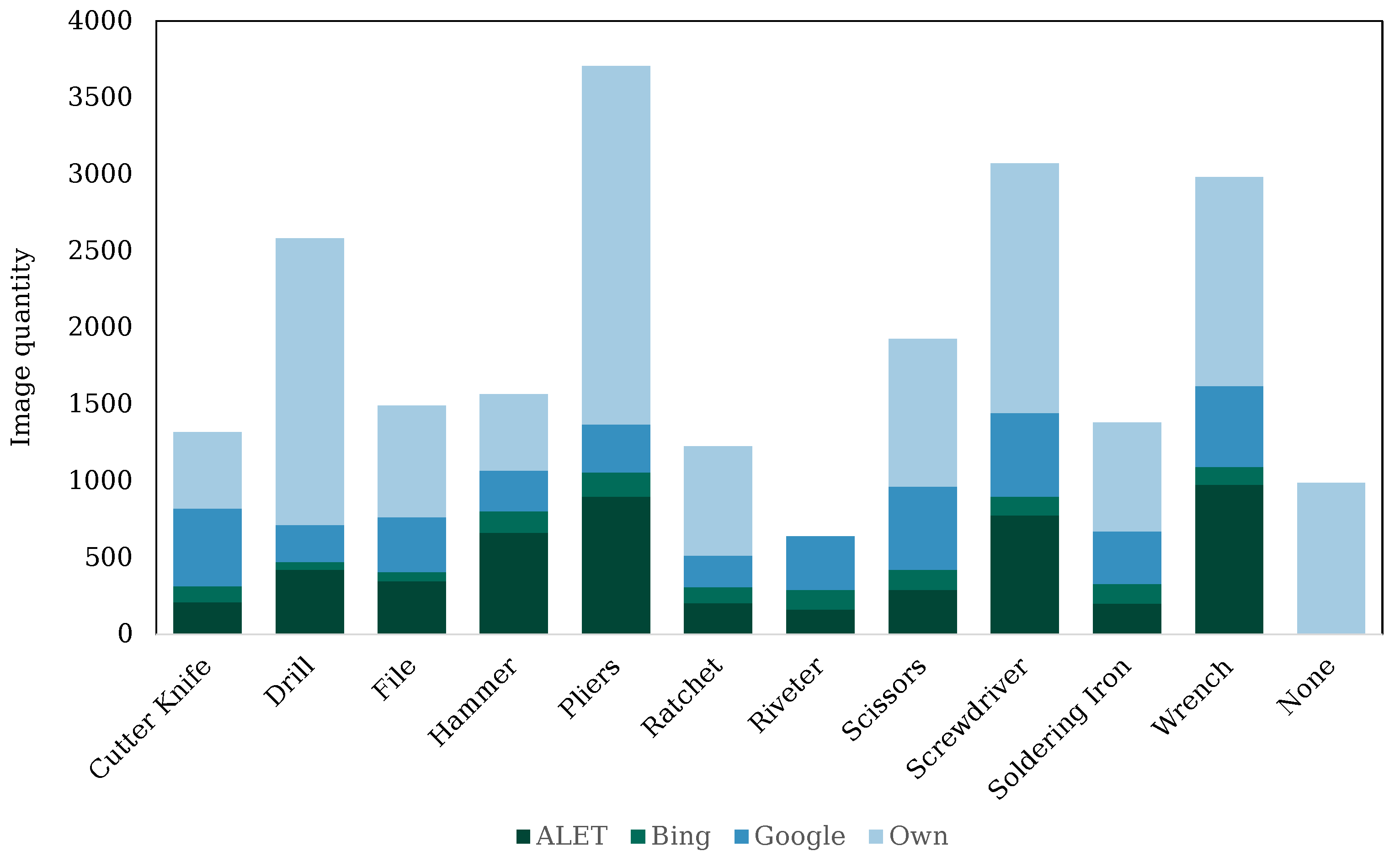

3.3.1. Dataset

- Extracted images from the ALET dataset [31].

- Scraped images from popular search engines, in our case Bing Images (bing.com/images) and Google Images (images.google.com).

- Newly captured photos of real tools held in the hand.

3.3.2. Image Classification Algorithm—Network Used

- DCNN-based classification uses convolutional layers to extract features from the input image by convolving it with filters (kernels) of various sizes. Stacking such layers lets the network learn hierarchical representations of the input data that become increasingly abstract and complex. This principle is state of the art in many image-processing tasks and is well established through networks such as VGG [40], ResNet [41], MobileNet [42], and InceptionNet [43]. Of these, ResNet50 was chosen as an exemplary DCNN-based model because its residual (skip) connections allow considerable network depth while mitigating the vanishing gradient problem. We presumed the objects to be classified to be similar to one another, differing only subtly in shape, texture, or appearance, so the model must extract highly detailed features to differentiate them accurately. The depth of ResNet enables it to capture such intricate, fine-grained features.

- Transformer-based classification, on the other hand, relies on a self-attention mechanism to encode the patches of the input image and to capture long-range dependencies between the elements of the resulting sequence. This allows the model to incorporate differentiated features in its final decision-making. Following their established success in natural language processing, transformer networks are becoming increasingly popular for image processing. Architectures such as the Vision Transformer (ViT) [44] have shown initial success in outperforming DCNN-based networks in various applications; ViT was therefore chosen as an alternative to the ResNet model.
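To make the patch-based input of a vision transformer more concrete, the sketch below splits an image into non-overlapping square patches and flattens each one, which is the sequence that self-attention then operates on. It is an illustration in plain Python on nested lists, not the implementation used in this work; the function names and the 224 × 224 image with 16 × 16 patches in the usage note are assumptions for the example only.

```python
from typing import List, Sequence


def split_into_patches(image: Sequence[Sequence[float]],
                       patch_size: int) -> List[List[float]]:
    """Split a 2D image into non-overlapping square patches.

    Patches are returned in row-major order, each flattened to a list.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image size must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [image[row][col]
                     for row in range(top, top + patch_size)
                     for col in range(left, left + patch_size)]
            patches.append(patch)
    return patches


def num_patches(height: int, width: int, patch_size: int) -> int:
    """Sequence length produced by the patch split above."""
    return (height // patch_size) * (width // patch_size)
```

For example, `num_patches(224, 224, 16)` gives 196, so an image of that (assumed) size becomes a sequence of 196 patch tokens before any embedding or attention is applied.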

3.4. Experiments’ Description

- First, an offline test was conducted to display the capability of the above-outlined tool classification network as a stand-alone application. The two model architectures were trained with the training dataset and evaluated against the test dataset, introduced in Section 3.3.1.

- Afterwards, experiments with different live scenarios were conducted to demonstrate and test the capability of the entire tool-recognition pipeline in combination with the action recognition. For this, several scenarios are defined:

- (a)

- All classes: This experiment was performed to test the principal applicability of the integrated image classificator in the pipeline. For this, all eleven tool classes and the none class were included in the test.

- (b)

- All classes—challenging backgrounds: To test the robustness of the approach in challenging environments, this scenario took place in front of chaotic backgrounds that pose distractions for typical vision applications. Backgrounds are considered challenging with multiple objects in view and/or complex shadow and light situations. This test was also performed with all classes.

- (c)

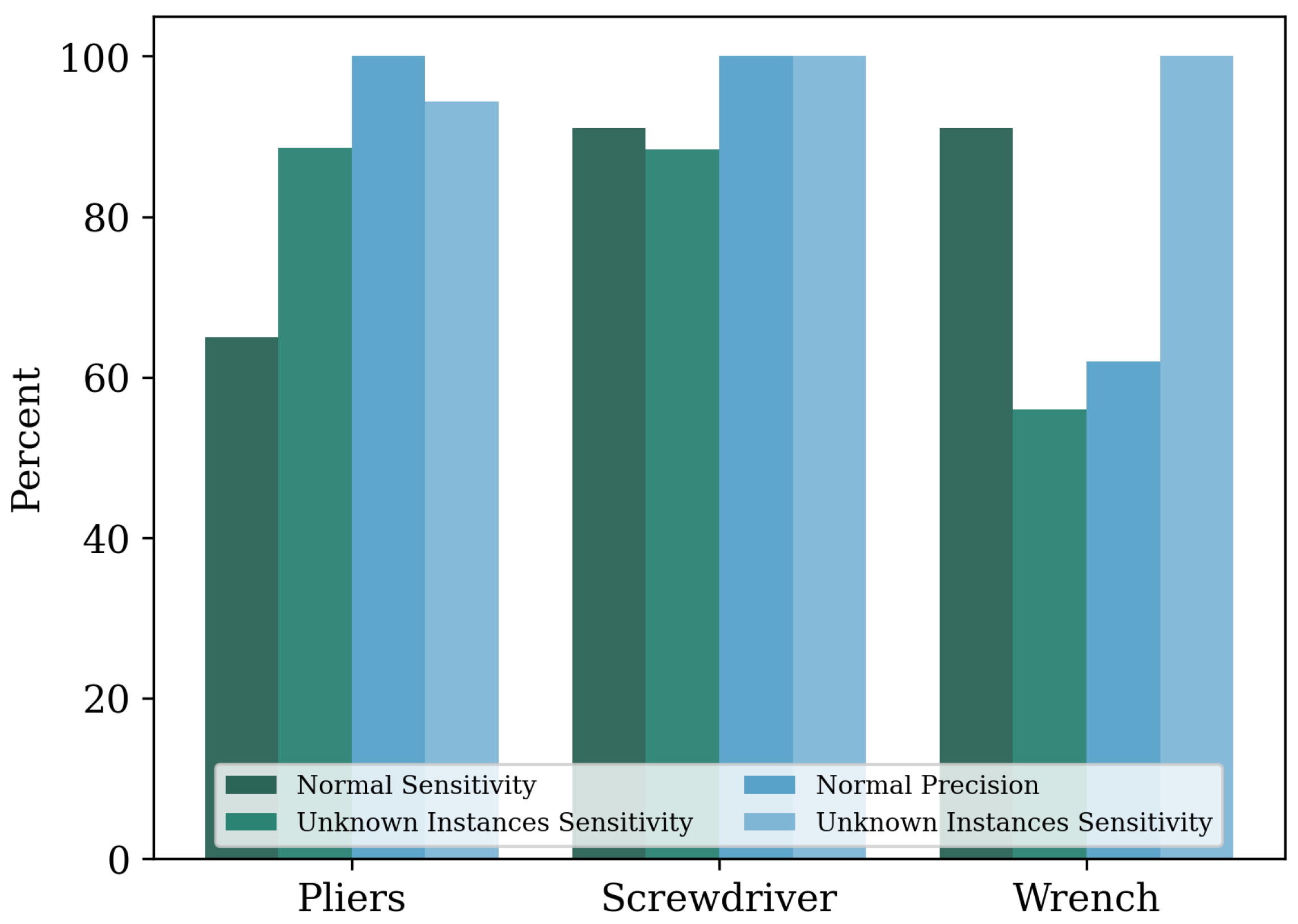

- Three known classes—unknown instances of tools: To test the transferability of the approach towards different instances of a tool class, additional tools of the three classes (1) pliers, (2) screwdriver, and (3) wrench were used. Those new instances distinguish themselves from the instances represented in the training dataset by different features such as color schemes and geometric individualities.

- (d)

- Specific assembly scenarios

- One tool class and the none class: Assuming prior knowledge about a specific assembly step and anticipation of that step, a tool-recognition scenario would not include a manifold of tools in that step, but rather a single tool that was either used for that specific assembly step or not. Thus, a binary classification can take place between the none class and the specific tool class. To test the behavior of the AI detector between those two variants, this experiment was conducted.

- Two tool classes: Similar to the above case, now, instead of the none class, another tool class was used in this test to demonstrate the increasing feasibility of the approach for reduced task complexities.
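One way to realize the reduced-class scenarios is to keep the trained multi-class classifier and restrict its output to the classes expected in the current assembly step. The sketch below is an assumption about how such a restriction could look, not a description of the authors' implementation; `restrict_to_classes` and `predict_restricted` are hypothetical helpers, and the probabilities in the usage note are made up.

```python
from typing import Dict, Iterable


def restrict_to_classes(probs: Dict[str, float],
                        allowed: Iterable[str]) -> Dict[str, float]:
    """Keep only the allowed classes and renormalize their probabilities."""
    allowed_set = set(allowed)
    kept = {label: p for label, p in probs.items() if label in allowed_set}
    if not kept:
        raise ValueError("none of the allowed classes are in the prediction")
    total = sum(kept.values())
    if total == 0:
        # No probability mass left: fall back to a uniform distribution.
        return {label: 1.0 / len(kept) for label in kept}
    return {label: p / total for label, p in kept.items()}


def predict_restricted(probs: Dict[str, float],
                       allowed: Iterable[str]) -> str:
    """Most likely class among the allowed ones."""
    restricted = restrict_to_classes(probs, allowed)
    return max(restricted, key=restricted.get)
```

With a made-up prediction `{"none": 0.2, "screwdriver": 0.3, "drill": 0.5}` and the allowed classes `{"none", "screwdriver"}`, the restricted decision is `"screwdriver"`, even though the unrestricted classifier would have preferred the drill class.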

4. Results

4.1. Hyperparameter Settings and Training

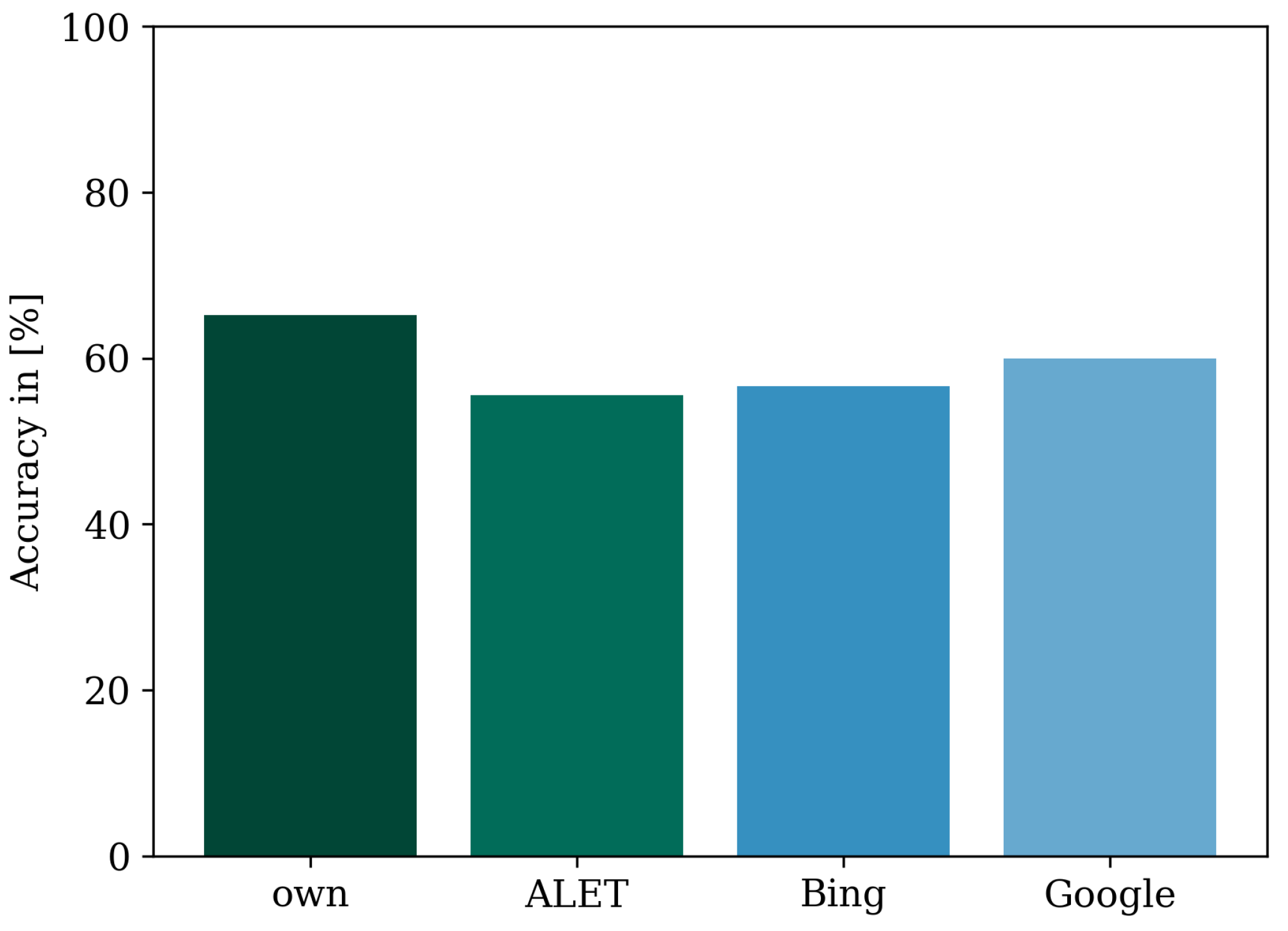

4.2. Classification Results—Offline Test Data

| ResNet50 | 0.61 |

| ViT | 0.98 |

Reducing the FP Quota of the ResNet

4.3. Results of Live Scenarios—Online Pipeline

4.3.1. All Classes—Normal and Challenging Backgrounds

| Normal | 0.81 |

| Modified BG | 0.88 |

4.3.2. Three Known Classes—Unknown Instances

4.3.3. Two Classes—Assembly Scenarios

| Screwdriver and none | 98.3% |

| Drill and screwdriver | 96.5% |

4.3.4. Measuring Online Usability of Toolbox—FPS

5. Discussion

5.1. Discussion of the Offline Image Classification Results

5.2. Discussion of the Online Pipeline Experiments

5.3. Discussion of the Resulting Frame Rate

5.4. Discussion of Bias in the Dataset

5.5. Comparison of the Approach to Related Work

5.6. Discussion of the Assembly Step Estimation

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| HAR | Human Action Recognition |
| HMI | Human–Machine Interface |
| HRC | Human–Robot Collaboration |
| ASE | Assembly Step Estimation |
| HMM | Hidden Markov Model |
| FSM | Finite-State Machine |
| MTM | Methods-Time Measurement |
| ROI | Region of Interest |
| SDK | Software Development Kit |
| BB | Bounding Box |
| ViT | Vision Transformer |
| TP | True Positive |
| FP | False Positive |

Appendix A

| Tool | Model Quantity |
|---|---|
| Scissors | 3 |
| Hammer | 1 |
| Soldering iron | 1 |
| Drill | 2 |
| Cutter Knife | 1 |
| Screwdriver | 4 |
| Wrench | 4 |
| Ratchet | 2 |
| File | 2 |
| Pliers | 5 |

References

1. Buxbaum, H.J. Mensch-Roboter-Kollaboration; Springer Fachmedien Wiesbaden: Wiesbaden, Germany, 2020.

2. Müller, R.; Vette, M.; Geenen, A. Skill-based Dynamic Task Allocation in Human-Robot-Cooperation with the Example of Welding Application. Procedia Manuf. 2017, 11, 13–21.

3. Masiak, T. Entwicklung Eines Mensch-Roboter-Kollaborationsfähigen Nietprozesses unter Verwendung von KI-Algorithmen und Blockchain-Technologien: Unter Randbedingungen der Flugzeugstrukturmontage. Doctoral Thesis, Universität des Saarlandes, Saarbrücken, Germany, 2020.

4. Rautiainen, S.; Pantano, M.; Traganos, K.; Ahmadi, S.; Saenz, J.; Mohammed, W.M.; Martinez Lastra, J.L. Multimodal Interface for Human–Robot Collaboration. Machines 2022, 10, 957.

5. Pérez, L.; Rodríguez-Jiménez, S.; Rodríguez, N.; Usamentiaga, R.; García, D.F.; Wang, L. Symbiotic human–robot collaborative approach for increased productivity and enhanced safety in the aerospace manufacturing industry. Int. J. Adv. Manuf. Technol. 2020, 106, 851–863.

6. Kalscheuer, F.; Eschen, H.; Schüppstuhl, T. (Eds.) Towards Semi Automated Pre-Assembly for Aircraft Interior Production; Springer: Berlin/Heidelberg, Germany, 2022.

7. Adler, P.; Syniawa, D.; Jakschik, M.; Christ, L.; Hypki, A.; Kuhlenkötter, B. Automated Assembly of Large-Scale Water Electrolyzers. Ind. 4.0 Manag. 2022, 2022, 12–16.

8. Gierecker, J.; Schüppstuhl, T. Assembly specific viewpoint generation as part of a simulation based sensor planning pipeline. Procedia CIRP 2021, 104, 981–986.

9. Chen, C.; Wang, T.; Li, D.; Hong, J. Repetitive assembly action recognition based on object detection and pose estimation. J. Manuf. Syst. 2020, 55, 325–333.

10. Goto, H.; Miura, J.; Sugiyama, J. Human-robot collaborative assembly by on-line human action recognition based on an FSM task model. In Human-Robot Interaction 2013: Workshop on Collaborative Manipulation; IEEE Press: New York, NY, USA, 2013; ISBN 9781467330558.

11. Koch, J.; Büsch, L.; Gomse, M.; Schüppstuhl, T. A Methods-Time-Measurement based Approach to enable Action Recognition for Multi-Variant Assembly in Human-Robot Collaboration. Procedia CIRP 2022, 106, 233–238.

12. Reining, C.; Niemann, F.; Moya Rueda, F.; Fink, G.A.; ten Hompel, M. Human Activity Recognition for Production and Logistics—A Systematic Literature Review. Information 2019, 10, 245.

13. Rückert, P.; Papenberg, B.; Tracht, K. Classification of assembly operations using machine learning algorithms based on visual sensor data. Procedia CIRP 2021, 97, 110–116.

14. Xue, J.; Hou, X.; Zeng, Y. Review of Image-Based 3D Reconstruction of Building for Automated Construction Progress Monitoring. Appl. Sci. 2021, 11, 7840.

15. Wang, L.; Du Huynh, Q.; Koniusz, P. A Comparative Review of Recent Kinect-Based Action Recognition Algorithms. IEEE Trans. Image Process. Publ. IEEE Signal Process. Soc. 2020, 29, 15–28.

16. Dallel, M.; Havard, V.; Baudry, D.; Savatier, X. InHARD—Industrial Human Action Recognition Dataset in the Context of Industrial Collaborative Robotics. In Proceedings of the 2020 IEEE International Conference on Human-Machine Systems (ICHMS), Rome, Italy, 7–9 September 2020; pp. 1–6.

17. Gierecker, J.; Schoepflin, D.; Schmedemann, O.; Schüppstuhl, T. Configuration and Enablement of Vision Sensor Solutions Through a Combined Simulation Based Process Chain. In Annals of Scientific Society for Assembly, Handling and Industrial Robotics 2021; Schüppstuhl, T., Tracht, K., Raatz, A., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 313–324.

18. Berg, J.; Reckordt, T.; Richter, C.; Reinhart, G. Action Recognition in Assembly for Human-Robot-Cooperation using Hidden Markov Models. Procedia CIRP 2018, 76, 205–210.

19. Berger, J.; Lu, S. A Multi-camera System for Human Detection and Activity Recognition. Procedia CIRP 2022, 112, 191–196.

20. Rückert, P.; Birgy, K.; Tracht, K. Image Based Classification of Methods-Time Measurement Operations in Assembly Using Recurrent Neuronal Networks. In Advances in System-Integrated Intelligence; Lecture Notes in Networks and Systems; Valle, M., Lehmhus, D., Gianoglio, C., Ragusa, E., Seminara, L., Bosse, S., Ibrahim, A., Thoben, K.D., Eds.; Springer: Cham, Switzerland, 2023; Volume 546, pp. 53–62.

21. Gomberg, W.; Maynard, H.B.; Stegemerten, G.J.; Schwab, J.L. Methods-Time Measurement. Ind. Labor Relations Rev. 1949, 2, 456–458.

22. Dallel, M.; Havard, V.; Dupuis, Y.; Baudry, D. A Sliding Window Based Approach With Majority Voting for Online Human Action Recognition using Spatial Temporal Graph Convolutional Neural Networks. In Proceedings of the 7th International Conference on Machine Learning Technologies (ICMLT), Rome, Italy, 11–13 March 2022; pp. 155–163.

23. Dallel, M.; Havard, V.; Dupuis, Y.; Baudry, D. Digital twin of an industrial workstation: A novel method of an auto-labeled data generator using virtual reality for human action recognition in the context of human–robot collaboration. Eng. Appl. Artif. Intell. 2023, 118, 105655.

24. Delamare, M.; Laville, C.; Cabani, A.; Chafouk, H. Graph Convolutional Networks Skeleton-based Action Recognition for Continuous Data Stream: A Sliding Window Approach. In Proceedings of the 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Online Streaming, 8–10 February 2021; SCITEPRESS—Science and Technology Publications: Setúbal, Portugal, 2021; pp. 427–435.

25. Seitz, J.; Nickel, C.; Christ, T.; Karbownik, P.; Vaupel, T. Location awareness and context detection for handheld tools in assembly processes. In Proceedings of the International Conference on Indoor Positioning and Indoor Navigation (IPIN), Nantes, France, 24–27 September 2018.

26. Tavakoli, H.; Walunj, S.; Pahlevannejad, P.; Plociennik, C.; Ruskowski, M. Small Object Detection for Near Real-Time Egocentric Perception in a Manual Assembly Scenario. arXiv 2021, arXiv:2106.06403.

27. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

28. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision—ECCV 2014; Lecture Notes in Computer Science; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014; Volume 8693, pp. 740–755.

29. Kuznetsova, A.; Rom, H.; Alldrin, N.; Uijlings, J.; Krasin, I.; Pont-Tuset, J.; Kamali, S.; Popov, S.; Malloci, M.; Kolesnikov, A.; et al. The Open Images Dataset V4. Int. J. Comput. Vis. 2020, 128, 1956–1981.

30. Shilkrot, R.; Narasimhaswamy, S.; Vazir, S.; Hoai, M. WorkingHands: A Hand-Tool Assembly Dataset for Image Segmentation and Activity Mining. In Proceedings of the British Machine Vision Conference, Cardiff, Wales, 9–12 September 2019.

31. Kurnaz, F.C.; Hocaoglu, B.; Yılmaz, M.K.; Sülo, İ.; Kalkan, S. ALET (Automated Labeling of Equipment and Tools): A Dataset for Tool Detection and Human Worker Safety Detection. In Computer Vision—ECCV 2020 Workshops; Lecture Notes in Computer Science; Bartoli, A., Fusiello, A., Eds.; Springer International Publishing: Cham, Switzerland, 2020; Volume 12538, pp. 371–386.

32. Qin, Z.; Liu, Y.; Perera, M.; Gedeon, T.; Ji, P.; Kim, D.; Anwar, S. ANUBIS: Skeleton Action Recognition Dataset, Review, and Benchmark. arXiv 2022, arXiv:2205.02071.

33. Albert, J.A.; Owolabi, V.; Gebel, A.; Brahms, C.M.; Granacher, U.; Arnrich, B. Evaluation of the Pose Tracking Performance of the Azure Kinect and Kinect v2 for Gait Analysis in Comparison with a Gold Standard: A Pilot Study. Sensors 2020, 20, 5104.

34. Tölgyessy, M.; Dekan, M.; Chovanec, Ľ.; Hubinský, P. Evaluation of the Azure Kinect and Its Comparison to Kinect V1 and Kinect V2. Sensors 2021, 21, 413.

35. Romeo, L.; Marani, R.; Malosio, M.; Perri, A.G.; D’Orazio, T. Performance Analysis of Body Tracking with the Microsoft Azure Kinect. In Proceedings of the 2021 29th Mediterranean Conference on Control and Automation (MED), Puglia, Italy, 22–25 June 2021; pp. 572–577.

36. ibaiGorordo. pyKinectAzure. Available online: https://github.com/ibaiGorordo/pyKinectAzure (accessed on 10 June 2023).

37. Use Azure Kinect Calibration Functions. Available online: https://learn.microsoft.com/en-us/azure/kinect-dk/use-calibration-functions (accessed on 10 June 2023).

38. Bradski, G. The OpenCV Library. Dr. Dobb’s J. Softw. Tools 2000, 25, 120–123.

39. Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning; Springer: New York, NY, USA, 2009.

40. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

41. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

42. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

43. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

44. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

45. Chollet, F. Keras, GitHub. 2015. Available online: https://github.com/fchollet/keras (accessed on 14 June 2023).

46. TensorFlow Developers. TensorFlow. 2023. Available online: https://zenodo.org/record/7987192 (accessed on 10 June 2023).

47. Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. HuggingFace’s Transformers: State-of-the-art Natural Language Processing. arXiv 2019, arXiv:1910.03771.

48. Akiba, T.; Sano, S.; Yanase, T.; Ohta, T.; Koyama, M. Optuna: A Next-generation Hyperparameter Optimization Framework. arXiv 2019, arXiv:1907.10902.

49. Torralba, A.; Efros, A.A. Unbiased look at dataset bias. In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, 20–25 June 2011; pp. 1521–1528.

| Layer | Hyperparameter | Search Space |
|---|---|---|
| ResNet50 Base Model | Trainable Layers | [0…197] |
| Flatten | - | - |
| Dense | Number of Neurons | [50…5000] |
| Dense | Activation Function | [ReLU, tanh] |
| Dropout | Option | [True, False] |
| Dropout | Dropout Rate | [0.1…0.2] |
| - | Optimizer | [Adam, SGD, RMSprop, AdaDelta] |
| - | Learning Rate | [ …] |
| - | Batch Size | 32 |
| - | Epochs | 25 |
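To illustrate how one trial could be drawn from the ResNet50 search space above, the sketch below samples a configuration with plain random search from the Python standard library. This is a stand-in for illustration only and is not the search procedure used in this work; the dictionary keys are hypothetical names, and the learning rate is left out because its range is elided in the table.

```python
import random
from typing import Any, Dict


def sample_resnet_config(rng: random.Random) -> Dict[str, Any]:
    """Draw one configuration from the tabulated ResNet50 search space."""
    use_dropout = rng.choice([True, False])
    return {
        # Number of trainable base-model layers, range [0...197]
        "trainable_layers": rng.randint(0, 197),
        # Size of the dense layer, range [50...5000]
        "dense_neurons": rng.randint(50, 5000),
        "activation": rng.choice(["relu", "tanh"]),
        "use_dropout": use_dropout,
        # The dropout rate only matters when the dropout option is enabled
        "dropout_rate": rng.uniform(0.1, 0.2) if use_dropout else None,
        "optimizer": rng.choice(["Adam", "SGD", "RMSprop", "AdaDelta"]),
        # Fixed values from the table (no search)
        "batch_size": 32,
        "epochs": 25,
    }


if __name__ == "__main__":
    rng = random.Random(0)  # seeded for reproducible sampling
    for trial in range(3):
        print(trial, sample_resnet_config(rng))
```

Each sampled configuration would then be used to build and train a model, with the validation score deciding which trial is kept; a dedicated optimization framework can replace the random sampling with a guided search over the same ranges.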

| Hyperparameter | Search Space |
|---|---|
| Epochs | [1, 5, 10, 15] |
| Learning Rate | […] |
| Batch Size | [8, 16, 32, 64, 128] |

| Layer | Hyperparameter | Best Trial |
|---|---|---|
| ResNet50 Base Model | Not Trainable Layers | 0–12 |
| ResNet50 Base Model | Trainable Layers | 120–197 |
| Dense | Number of Neurons | 3296 |
| Dense | Activation Function | ReLU |
| Dropout | Option | True |
| Dropout | Dropout Rate | 0.10 |
| - | Optimizer | RMSprop |
| - | Learning Rate | |
| - | Epochs | 55 |
| - | Batch Size | 32 |

| Hyperparameter | Best Trial |
|---|---|
| Number of Trials | 50 |
| Epochs | 10 |
| Learning Rate | |
| Batch Size | 32 |

| Confidence Threshold | Accuracy |
|---|---|
| 10% | 0.65 |
| 15% | 0.84 |
| 17% | 0.88 |
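A confidence threshold of this kind can be applied as a post-processing step on the classifier output. The sketch below shows one plausible variant, under the assumption that predictions whose top confidence falls below the threshold are mapped to a fallback class; how the threshold was actually applied is not stated in this extract, so the function name, the fallback to the none class, and the example probabilities are all hypothetical.

```python
from typing import Dict


def classify_with_threshold(probs: Dict[str, float],
                            threshold: float,
                            fallback: str = "none") -> str:
    """Return the top class, or the fallback if its confidence is too low.

    `threshold` is a fraction, e.g. 0.17 for the 17% row in the table.
    """
    label, confidence = max(probs.items(), key=lambda item: item[1])
    return label if confidence >= threshold else fallback
```

For instance, a made-up prediction `{"file": 0.16, "wrench": 0.14}` is accepted as `"file"` at a threshold of 0.15 but rejected (mapped to `"none"`) at 0.17, which is the mechanism by which a higher threshold trades false positives for rejections.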

| Class | Sensitivity: Normal | Sensitivity: Mod. BG | Sensitivity: Delta | Precision: Normal | Precision: Mod. BG | Precision: Delta |
|---|---|---|---|---|---|---|
| Cutter | 99.2 | 100.0 | −0.8 | 99.7 | 100.0 | −0.3 |
| Drill | 100.0 | 100.0 | 0.0 | 85.3 | 79.1 | 6.2 |
| File | 32.6 | 0.0 | 32.6 | 62.8 | - | - |
| Hammer | 51.5 | 89.6 | −38.0 | 99.6 | 100.0 | −0.4 |
| None | 82.8 | 97.8 | −15.0 | 60.4 | 100.0 | −39.6 |
| Pliers | 65.6 | 76.4 | −10.8 | 100.0 | 99.2 | 0.8 |
| Ratchet | 87.0 | 100.0 | −13.0 | 82.9 | 78.4 | 4.5 |
| Scissors | 95.0 | 100.0 | −5.0 | 78.8 | 52.2 | 26.6 |
| Screwdriver | 92.9 | 97.6 | −4.8 | 99.7 | 100.0 | −0.3 |
| Soldering Iron | 96.6 | 100.0 | −3.4 | 98.7 | 100.0 | −1.3 |
| Wrench | 92.8 | 84.8 | 8.0 | 63.2 | 100.0 | −36.8 |
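Per-class sensitivity and precision of the kind tabulated above follow the standard definitions, sensitivity = TP / (TP + FN) and precision = TP / (TP + FP). The sketch below computes both from lists of true and predicted labels; it is a generic illustration rather than the evaluation code used in this work, and the function names and example labels are hypothetical. Precision is returned as `None` when a class is never predicted, which corresponds to the "-" entry for the File class. The Delta columns appear to be the Normal value minus the Mod. BG value (for example, 85.3 − 79.1 = 6.2 for Drill precision).

```python
from typing import Dict, List, Optional, Tuple


def ratio(numerator: int, denominator: int) -> Optional[float]:
    """Safe division that returns None when the metric is undefined."""
    return numerator / denominator if denominator else None


def per_class_metrics(
    y_true: List[str], y_pred: List[str]
) -> Dict[str, Tuple[Optional[float], Optional[float]]]:
    """Map each class to (sensitivity, precision)."""
    metrics = {}
    for cls in sorted(set(y_true) | set(y_pred)):
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == cls and p == cls)
        fn = sum(1 for t, p in pairs if t == cls and p != cls)
        fp = sum(1 for t, p in pairs if t != cls and p == cls)
        metrics[cls] = (ratio(tp, tp + fn), ratio(tp, tp + fp))
    return metrics


if __name__ == "__main__":
    # Made-up labels: one file sample is misclassified as a drill.
    truth = ["drill", "drill", "file", "none"]
    predicted = ["drill", "drill", "drill", "none"]
    for cls, (sens, prec) in per_class_metrics(truth, predicted).items():
        print(cls, sens, prec)
```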

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Büsch, L.; Koch, J.; Schoepflin, D.; Schulze, M.; Schüppstuhl, T. Towards Recognition of Human Actions in Collaborative Tasks with Robots: Extending Action Recognition with Tool Recognition Methods. Sensors 2023, 23, 5718. https://doi.org/10.3390/s23125718
