NISQE: Non-Intrusive Speech Quality Evaluator Based on Natural Statistics of Mean Subtracted Contrast Normalized Coefficients of Spectrogram

Abstract

1. Introduction

Related Work

- NSS features based on the gradient magnitude and Laplacian of Gaussian are used for SQA;

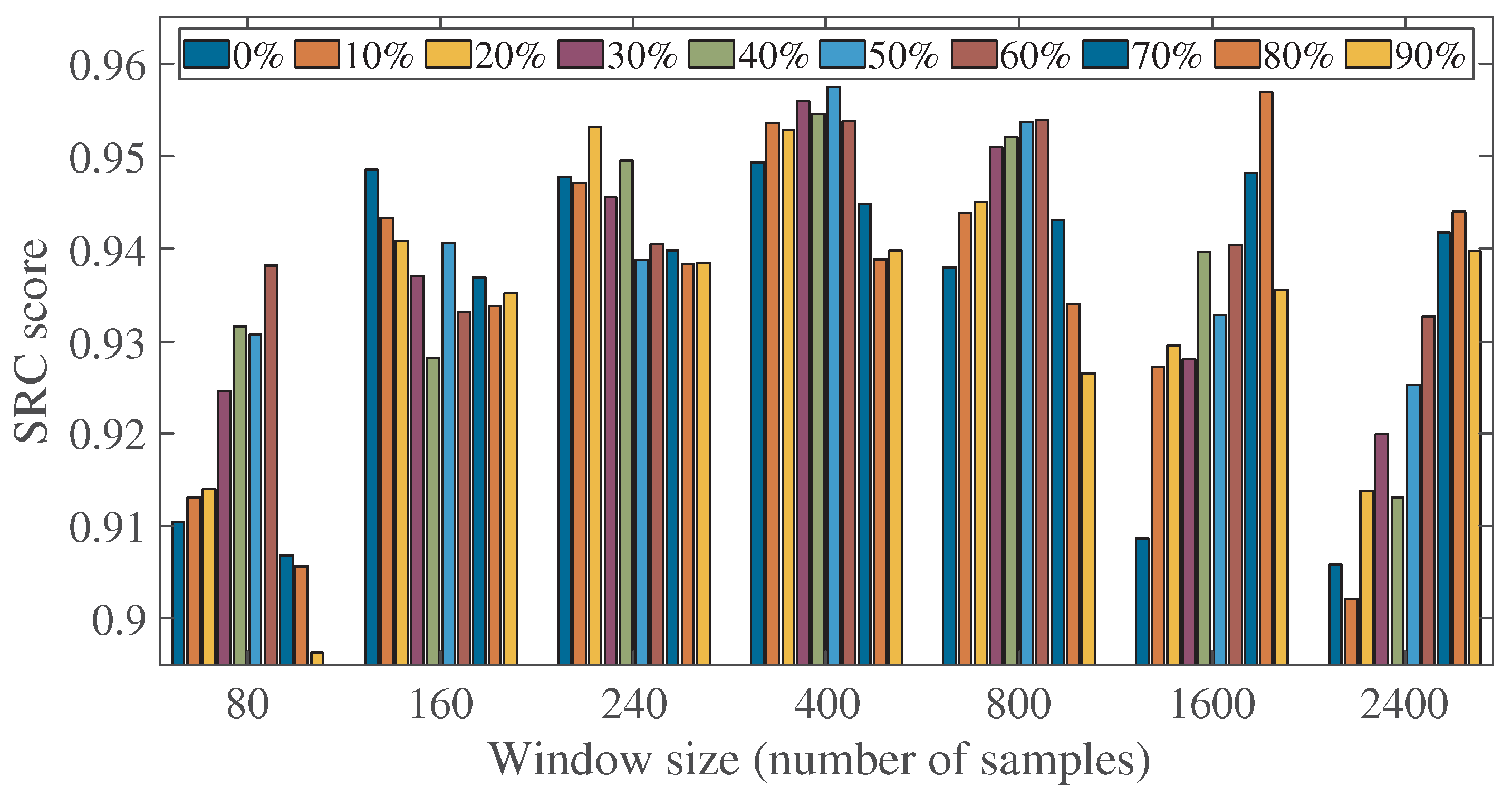

- The impact of the spectrogram window size is investigated in detail to assess the optimum window size and percentage of signal overlap.

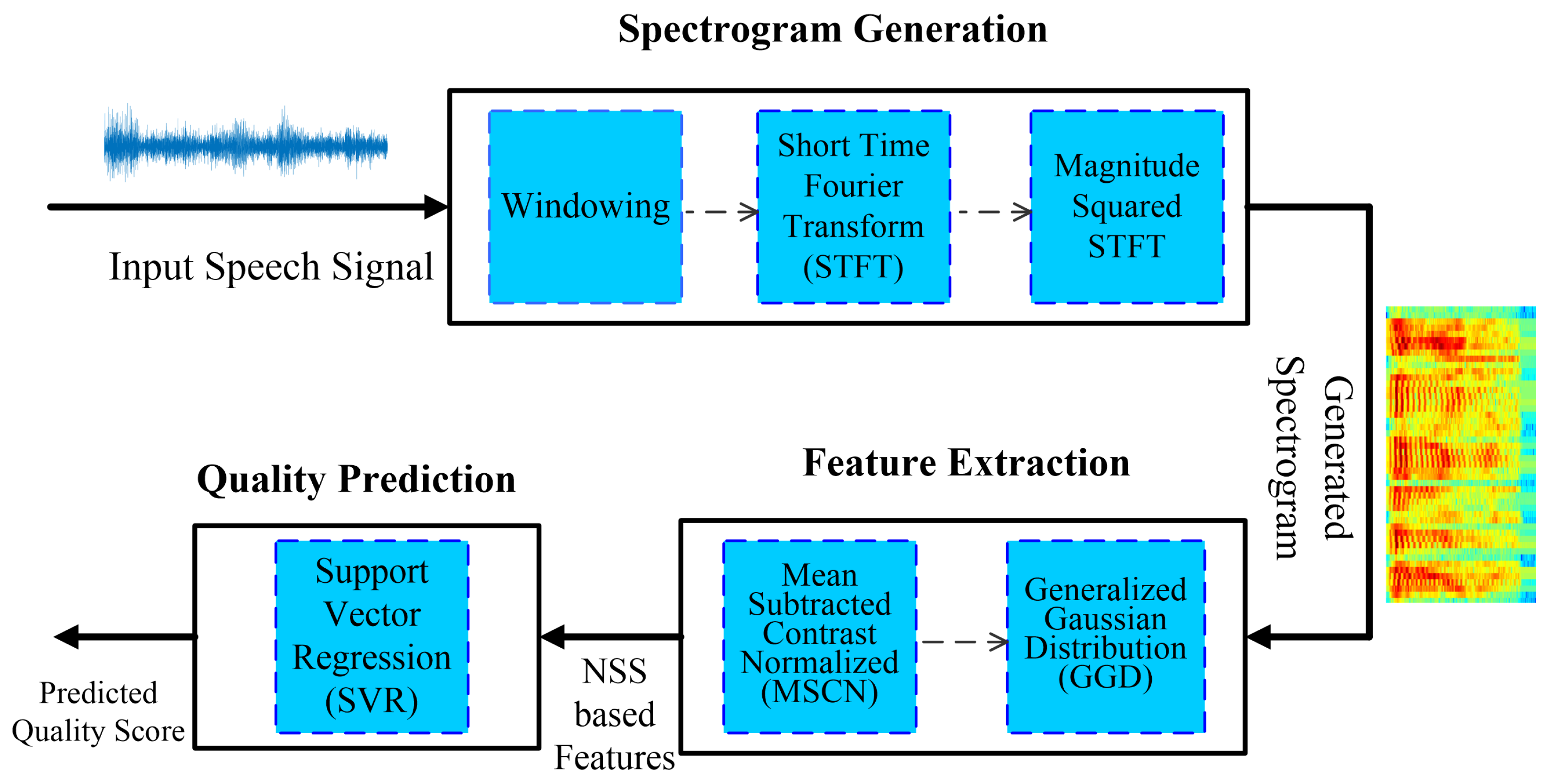
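The gradient magnitude (GM) and Laplacian of Gaussian (LoG) maps mentioned above are standard image operators, applied here to the spectrogram treated as a 2-D image. The following is a minimal NumPy sketch, not the authors' implementation: it assumes a separable Gaussian prefilter with a hypothetical `sigma=0.5`, central-difference gradients, and a 5-point discrete Laplacian of the smoothed map as the LoG approximation. The function names `smooth` and `gm_log_maps` are illustrative.

```python
import numpy as np


def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian kernel truncated at about +/- 3 sigma."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()


def smooth(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian smoothing with edge padding; output has the input's shape."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="edge")
    # 'valid' convolution along each axis removes exactly the r padded samples per side.
    partial = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, partial)


def gm_log_maps(spec: np.ndarray, sigma: float = 0.5):
    """Return (GM, LoG) maps of a spectrogram (frequency x time); sigma is hypothetical."""
    s = smooth(np.asarray(spec, dtype=float), sigma)
    d_freq, d_time = np.gradient(s)  # central differences along each axis
    gm = np.hypot(d_freq, d_time)
    # 5-point Laplacian of the Gaussian-smoothed map approximates the LoG response.
    p = np.pad(s, 1, mode="edge")
    log_map = p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * s
    return gm, log_map
```

Statistics of `gm` and `log_map` (for example, their empirical distributions) would then serve as quality-aware features; which statistics are used is not specified in this section.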

2. Proposed Methodology for NI-SQA

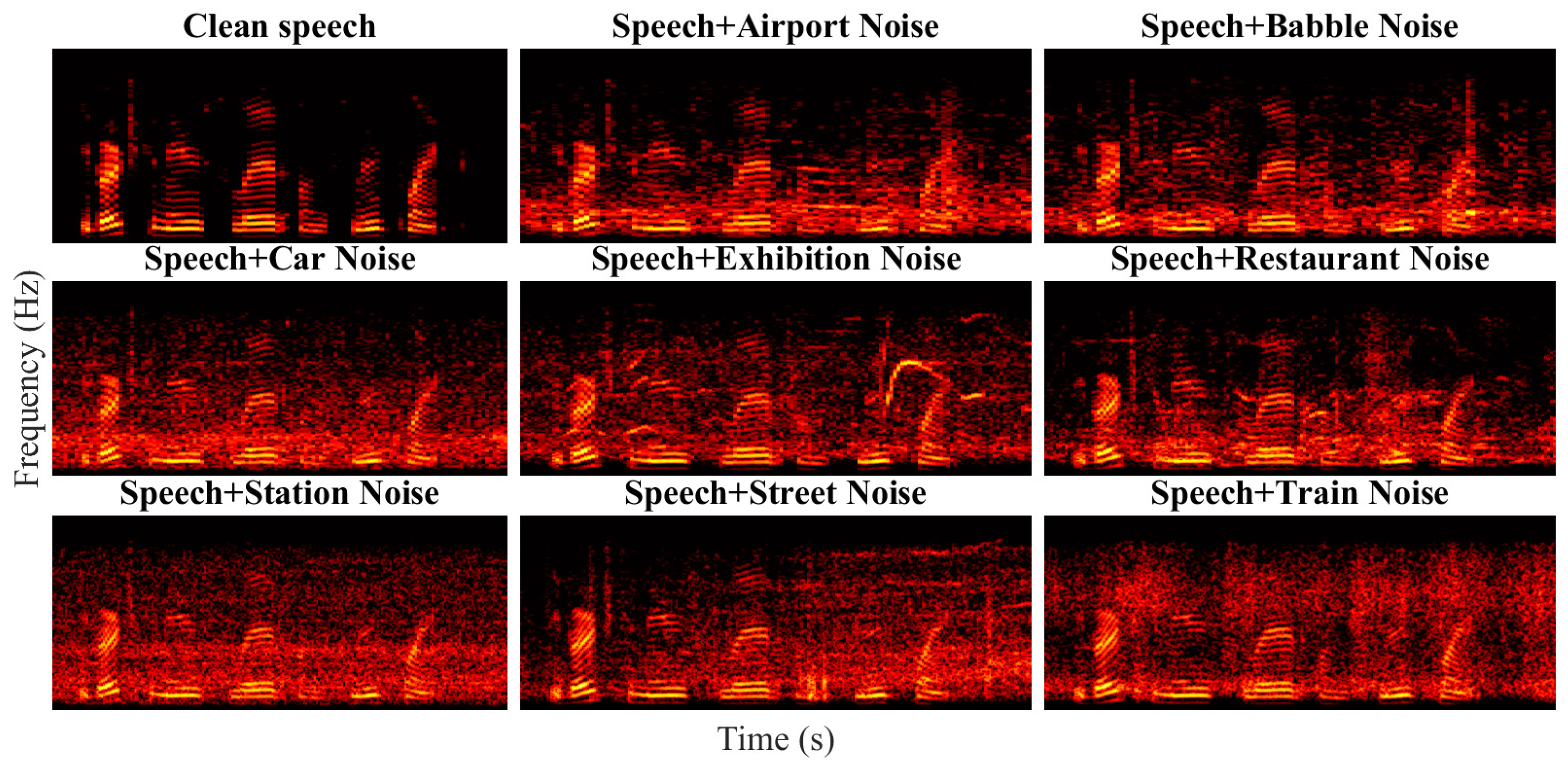

2.1. Spectrogram Generation
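Because the window length and the overlap percentage are the parameters whose impact is studied, a spectrogram routine should expose both. The sketch below is a plain NumPy short-time Fourier transform with a Hann window; the 32 ms window, 50% overlap, dB scaling and the name `spectrogram_db` are illustrative assumptions rather than the settings chosen in this work.

```python
import numpy as np


def spectrogram_db(signal, fs, win_ms=32.0, overlap=0.5, eps=1e-10):
    """Log-magnitude STFT spectrogram (frequency x time) in dB.

    win_ms and overlap are illustrative defaults, not values taken from the paper.
    """
    win_len = int(round(fs * win_ms / 1000.0))
    hop = max(1, int(round(win_len * (1.0 - overlap))))
    if len(signal) < win_len:
        raise ValueError("signal shorter than one analysis window")
    n_frames = 1 + (len(signal) - win_len) // hop
    window = np.hanning(win_len)
    # Index matrix of overlapping frames: row f covers samples [f*hop, f*hop + win_len).
    idx = np.arange(win_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = np.asarray(signal, dtype=float)[idx] * window
    mag = np.abs(np.fft.rfft(frames, axis=1))  # shape (n_frames, win_len // 2 + 1)
    return 20.0 * np.log10(mag.T + eps)  # transpose to frequency x time
```

For example, at a hypothetical 8 kHz sampling rate a 32 ms window is 256 samples; raising `overlap` from 0.5 to 0.75 halves the hop from 128 to 64 samples and roughly doubles the number of time frames, at a proportionally higher cost for the subsequent feature extraction.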

2.2. Feature Extraction
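Mean subtracted contrast normalized (MSCN) coefficients follow the spatial-domain normalization of Mittal et al. [41]: each spectrogram value S is reduced by a local weighted mean mu and divided by a local weighted standard deviation, giving (S - mu) / (sigma + C). In natural-scene-statistics models the resulting coefficients are commonly summarized by fitting a generalized Gaussian distribution (GGD). The sketch below assumes the 7x7 Gaussian window with sigma = 7/6 and C = 1 found in common implementations of [41], plus a moment-matching GGD fit; these parameter values and the helper names are assumptions, not necessarily what NISQE uses.

```python
import math

import numpy as np


def gaussian_window(size: int = 7, sigma: float = 7.0 / 6.0) -> np.ndarray:
    """2-D Gaussian weighting window normalized to unit sum."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    w = np.outer(g, g)
    return w / w.sum()


def local_filter(img: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Weighted local sum of img under window w (edge padding, same-size output)."""
    r = w.shape[0] // 2
    padded = np.pad(img, r, mode="edge")
    h, wd = img.shape
    out = np.zeros((h, wd))
    for i in range(w.shape[0]):
        for j in range(w.shape[1]):
            out += w[i, j] * padded[i:i + h, j:j + wd]
    return out


def mscn(spec: np.ndarray, c: float = 1.0) -> np.ndarray:
    """Mean subtracted contrast normalized coefficients of a spectrogram."""
    s = np.asarray(spec, dtype=float)
    w = gaussian_window()
    mu = local_filter(s, w)
    var = local_filter(s * s, w) - mu * mu
    sigma = np.sqrt(np.maximum(var, 0.0))  # clip tiny negative round-off
    return (s - mu) / (sigma + c)


def fit_ggd(x: np.ndarray):
    """Moment-matching GGD fit; returns (shape alpha, variance sigma^2)."""
    x = np.ravel(np.asarray(x, dtype=float))
    sigma_sq = float(np.mean(x ** 2))
    rho = np.mean(np.abs(x)) ** 2 / sigma_sq  # sample (E|x|)^2 / E[x^2]
    alphas = np.arange(0.2, 10.0, 0.001)
    # For a GGD with shape a this ratio equals Gamma(2/a)^2 / (Gamma(1/a) * Gamma(3/a)).
    ratios = np.array(
        [math.gamma(2.0 / a) ** 2 / (math.gamma(1.0 / a) * math.gamma(3.0 / a)) for a in alphas]
    )
    alpha = float(alphas[np.argmin(np.abs(ratios - rho))])
    return alpha, sigma_sq
```

A Gaussian-distributed input yields alpha close to 2 and a Laplacian one alpha close to 1, which is how distortions that reshape the MSCN histogram become visible in the feature vector.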

2.3. Quality Prediction
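The reference list includes LIBSVM [43], which suggests that the extracted features are mapped to quality scores with support vector regression. To keep the sketch dependency-free, it substitutes RBF kernel ridge regression, a closely related kernel regressor with a closed-form fit; it is not the authors' model, and `gamma`, `lam` and the class name `RBFKernelRidge` are hypothetical.

```python
import numpy as np


class RBFKernelRidge:
    """RBF kernel ridge regressor mapping feature vectors to quality scores.

    A stand-in for SVR; gamma and lam are illustrative hyperparameters.
    """

    def __init__(self, gamma: float = 0.5, lam: float = 1e-3):
        self.gamma = gamma
        self.lam = lam

    def _kernel(self, a: np.ndarray, b: np.ndarray) -> np.ndarray:
        # Squared Euclidean distances between every row of a and every row of b.
        d2 = (a ** 2).sum(1)[:, None] + (b ** 2).sum(1)[None, :] - 2.0 * a @ b.T
        return np.exp(-self.gamma * np.maximum(d2, 0.0))

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y, dtype=float)
        # Standardize features using training-set statistics only.
        self.mean_ = X.mean(0)
        self.std_ = X.std(0) + 1e-12
        self.X_ = (X - self.mean_) / self.std_
        self.y_mean_ = y.mean()
        K = self._kernel(self.X_, self.X_)
        # Closed-form solution of (K + lam * I) alpha = y - mean(y).
        self.alpha_ = np.linalg.solve(K + self.lam * np.eye(len(y)), y - self.y_mean_)
        return self

    def predict(self, X) -> np.ndarray:
        Xs = (np.asarray(X, dtype=float) - self.mean_) / self.std_
        return self._kernel(Xs, self.X_) @ self.alpha_ + self.y_mean_
```

Swapping in an actual epsilon-SVR (for example through the LIBSVM bindings) changes only the fitting step; standardizing with training-set statistics should be kept either way so that test features never leak into the scaling.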

3. Experimental Results

3.1. Database Description and Training Setup

3.2. Performance Evaluation Criteria
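The comparison tables report Spearman's rank correlation (SRC), the Pearson correlation coefficient (PCC) [47], and the root mean square error (RMSE) between predicted and reference quality scores. A minimal NumPy sketch of the three criteria follows; tied values receive their average rank, and no nonlinear mapping is applied before computing PCC, which is an assumption since some studies fit a logistic function first.

```python
import numpy as np


def pcc(pred, ref) -> float:
    """Pearson linear correlation coefficient."""
    return float(np.corrcoef(pred, ref)[0, 1])


def rankdata_avg(x) -> np.ndarray:
    """Ranks starting at 1, with tied values receiving their average rank."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    ranks = np.empty(len(x), dtype=float)
    ranks[order] = np.arange(1, len(x) + 1)
    # Replace the ranks inside each group of equal values by the group mean.
    for v in np.unique(x):
        mask = x == v
        ranks[mask] = ranks[mask].mean()
    return ranks


def src(pred, ref) -> float:
    """Spearman rank correlation: Pearson correlation of the two rank vectors."""
    return pcc(rankdata_avg(pred), rankdata_avg(ref))


def rmse(pred, ref) -> float:
    """Root mean square error between predicted and reference scores."""
    d = np.asarray(pred, dtype=float) - np.asarray(ref, dtype=float)
    return float(np.sqrt(np.mean(d ** 2)))
```

SRC only checks whether the predictions order the test files correctly, while PCC and RMSE also penalize scale and offset errors, which is why the tables report all three.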

3.3. Performance Analysis

3.4. Discussion

4. Summary, Conclusions, and Future Work

Author Contributions

Funding

Conflicts of Interest

References

1. Cisco Visual Networking Index: Global Mobile Data Traffic Forecast Update, 2017–2022. Available online: http://media.mediapost.com/uploads/CiscoForecast.pdf (accessed on 1 May 2023).

2. Avila, A.R.; Gamper, H.; Reddy, C.; Cutler, R.; Tashev, I.; Gehrke, J. Non-intrusive speech quality assessment using neural networks. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 631–635.

3. Zequeira Jiménez, R.; Llagostera, A.; Naderi, B.; Möller, S.; Berger, J. Intra- and Inter-rater Agreement in a Subjective Speech Quality Assessment Task in Crowdsourcing. In Proceedings of the 2019 World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 1138–1143.

4. Streijl, R.C.; Winkler, S.; Hands, D.S. Mean opinion score (MOS) revisited: Methods and applications, limitations and alternatives. Multimed. Syst. 2016, 22, 213–227.

5. ITU-R Recommendation BS.1534. Method for the subjective assessment of intermediate quality level of audio systems. In International Telecommunication Union Radiocommunication Assembly; International Telecommunication Union (ITU): Geneva, Switzerland, 2014.

6. Malfait, L.; Berger, J.; Kastner, M. P.563—The ITU-T standard for single-ended speech quality assessment. IEEE Trans. Audio Speech Lang. Process. 2006, 14, 1924–1934.

7. Affonso, E.T.; Rosa, R.L.; Rodriguez, D.Z. Speech quality assessment over lossy transmission channels using deep belief networks. IEEE Signal Process. Lett. 2017, 25, 70–74.

8. Affonso, E.T.; Nunes, R.D.; Rosa, R.L.; Pivaro, G.F.; Rodriguez, D.Z. Speech quality assessment in wireless VoIP communication using deep belief network. IEEE Access 2018, 6, 77022–77032.

9. Rodríguez, D.Z.; Rosa, R.L.; Almeida, F.L.; Mittag, G.; Möller, S. Speech quality assessment in wireless communications with MIMO systems using a parametric model. IEEE Access 2019, 7, 35719–35730.

10. Wang, J.; Shan, Y.; Xie, X.; Kuang, J. Output-based speech quality assessment using autoencoder and support vector regression. Speech Commun. 2019, 110, 13–20.

11. Jassim, W.A.; Zilany, M.S. NSQM: A non-intrusive assessment of speech quality using normalized energies of the neurogram. Comput. Speech Lang. 2019, 58, 260–279.

12. Fu, S.W.; Liao, C.F.; Tsao, Y. Learning with learned loss function: Speech enhancement with quality-net to improve perceptual evaluation of speech quality. IEEE Signal Process. Lett. 2019, 27, 26–30.

13. Kin, M.J.; Brachmański, S. Quality assessment of musical and speech signals broadcasted via Single Frequency Network DAB+. Int. J. Electron. Telecommun. 2020, 66, 139–144.

14. Naderi, B.; Möller, S.; Neubert, F.; Höller, V.; Köster, F.; Fernández Gallardo, L. Influence of environmental background noise on speech quality assessments task in crowdsourcing microtask platform. J. Acoust. Soc. Am. 2017, 141, 3909–3910.

15. Sharma, D.; Wang, Y.; Naylor, P.A.; Brookes, M. A data-driven non-intrusive measure of speech quality and intelligibility. Speech Commun. 2016, 80, 84–94.

16. Avila, A.R.; Alam, J.; O’Shaughnessy, D.; Falk, T.H. Intrusive Quality Measurement of Noisy and Enhanced Speech based on i-Vector Similarity. In Proceedings of the 2019 Eleventh International Conference on Quality of Multimedia Experience (QoMEX), Berlin, Germany, 5–7 June 2019; pp. 1–5.

17. Terekhov, A.; Korchagina, A. Improved Accuracy Intrusive Method for Speech Quality Evaluation Based on Consideration of Intonation Impact. In Proceedings of the 2020 IEEE Systems of Signals Generating and Processing in the Field of on Board Communications, Moscow, Russia, 19–20 March 2020; pp. 1–4.

18. ITU-T Recommendation P.800: Methods for Subjective Determination of Transmission Quality; International Telecommunication Union: Geneva, Switzerland, 1996; p. 22.

19. Zafar, S.; Nizami, I.F.; Majid, M. Non-intrusive Speech Quality Assessment using Natural Spectrogram Statistics. In Proceedings of the 2020 IEEE 3rd International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 29–30 January 2020; pp. 1–4.

20. Cauchi, B.; Siedenburg, K.; Santos, J.F.; Falk, T.H.; Doclo, S.; Goetze, S. Non-Intrusive Speech Quality Prediction Using Modulation Energies and LSTM-Network. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 27, 1151–1163.

21. Zafar, S.; Nizami, I.F.; Majid, M. Speech Quality Assessment using Mel Frequency Spectrograms of Speech Signals. In Proceedings of the 2021 IEEE International Conference on Digital Futures and Transformative Technologies (ICoDT2), Islamabad, Pakistan, 20–21 May 2021; pp. 1–5.

22. Soni, M.H.; Patil, H.A. Non-intrusive quality assessment of noise-suppressed speech using unsupervised deep features. Speech Commun. 2021, 130, 27–44.

23. ITU-T Recommendation. Perceptual evaluation of speech quality (PESQ): An objective method for end-to-end speech quality assessment of narrow-band telephone networks and speech codecs. In Proceedings of the 2001 IEEE International Conference on Acoustics, Speech, and Signal Processing. Proceedings (Cat. No. 01CH37221), Salt Lake City, UT, USA, 7–11 May 2001.

24. Beerends, J.G.; Schmidmer, C.; Berger, J.; Obermann, M.; Ullmann, R.; Pomy, J.; Keyhl, M. Perceptual objective listening quality assessment (POLQA), the third generation ITU-T standard for end-to-end speech quality measurement part I—Temporal alignment. J. Audio Eng. Soc. 2013, 61, 366–384.

25. Rix, A.W.; Beerends, J.G.; Kim, D.S.; Kroon, P.; Ghitza, O. Objective assessment of speech and audio quality—Technology and applications. IEEE Trans. Audio Speech Lang. Process. 2006, 14, 1890–1901.

26. Mittag, G.; Möller, S. Full-Reference Speech Quality Estimation with Attentional Siamese Neural Networks. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 346–350.

27. Möller, S.; Köster, F. Review of recent standardization activities in speech quality of experience. Qual. User Exp. 2017, 2, 9.

28. Parmar, N.; Dubey, R.K. Comparison of performance of the features of speech signal for non-intrusive speech quality assessment. In Proceedings of the 2015 IEEE International Conference on Signal Processing and Communication (ICSC), New Delhi, India, 16–18 March 2015; pp. 243–248.

29. Mohammed, R.A.; Ali, A.E.; Hassan, N.F. Advantages and disadvantages of automatic speaker recognition systems. J. Al-Qadisiyah Comput. Sci. Math. 2019, 11, 21.

30. Dubey, R.K.; Kumar, A. Lyon’s auditory features and MRAM features comparison for non-intrusive speech quality assessment in narrowband speech. In Proceedings of the 2016 IEEE 3rd International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 11–12 February 2016; pp. 28–33.

31. Jiang, Y.; Sabitha, R.; Shankar, A. An IoT Technology for Development of Smart English Language Translation and Grammar Learning Applications. Arab. J. Sci. Eng. 2021, 48, 2601.

32. Yang, H.; Byun, K.; Kang, H.G.; Kwak, Y. Parametric-based non-intrusive speech quality assessment by deep neural network. In Proceedings of the 2016 IEEE International Conference on Digital Signal Processing (DSP), Beijing, China, 16–18 October 2016; pp. 99–103.

33. Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional neural networks: An overview and application in radiology. Insights Imaging 2018, 9, 611–629.

34. Dubey, R.K.; Kumar, A. Non-intrusive speech quality assessment using multi-resolution auditory model features for degraded narrowband speech. IET Signal Process. 2015, 9, 638–646.

35. Vincent, P.; Larochelle, H.; Bengio, Y.; Manzagol, P.A. Extracting and composing robust features with denoising autoencoders. In Proceedings of the 25th International Conference on Machine Learning, Helsinki, Finland, 5–9 July 2008; pp. 1096–1103.

36. Vincent, P.; Larochelle, H.; Lajoie, I.; Bengio, Y.; Manzagol, P.A.; Bottou, L. Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion. J. Mach. Learn. Res. 2010, 11, 3371–3408.

37. Kumawat, P.; Manikandan, M.S. SSQA: Speech Signal Quality Assessment Method using Spectrogram and 2-D Convolutional Neural Networks for Improving Efficiency of ASR Devices. In Proceedings of the 2019 IEEE Seventh International Conference on Digital Information Processing and Communications (ICDIPC), Trabzon, Turkey, 2–4 May 2019; pp. 29–34.

38. Naderi, B.; Zequeira Jiménez, R.; Hirth, M.; Möller, S.; Metzger, F.; Hoßfeld, T. Towards speech quality assessment using a crowdsourcing approach: Evaluation of standardized methods. Qual. User Exp. 2021, 6, 2.

39. Zhou, W.; Zhu, Z. A novel BNMF-DNN based speech reconstruction method for speech quality evaluation under complex environments. Int. J. Mach. Learn. Cybern. 2021, 12, 959–972.

40. Kim, D.S.; Tarraf, A. ANIQUE+: A new American national standard for non-intrusive estimation of narrowband speech quality. Bell Labs Tech. J. 2007, 12, 221–236.

41. Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

42. Ruderman, D.L. The statistics of natural images. Netw. Comput. Neural Syst. 1994, 5, 517–548.

43. Chang, C.C.; Lin, C.J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 27.

44. Veaux, C.; Yamagishi, J.; MacDonald, K. CSTR VCTK Corpus: English Multi-Speaker Corpus for CSTR Voice Cloning Toolkit; The Centre for Speech Technology Research (CSTR), University of Edinburgh: Edinburgh, UK, 2017.

45. Hu, Y.; Loizou, P.C. A comparative intelligibility study of single-microphone noise reduction algorithms. J. Acoust. Soc. Am. 2007, 122, 1777–1786.

46. Rix, A.W.; Hollier, M.P.; Hekstra, A.P.; Beerends, J.G. Perceptual Evaluation of Speech Quality (PESQ) The New ITU Standard for End-to-End Speech Quality Assessment Part I—Time-Delay Compensation. J. Audio Eng. Soc. 2002, 50, 755–764.

47. Benesty, J.; Chen, J.; Huang, Y.; Cohen, I. Pearson correlation coefficient. In Noise Reduction in Speech Processing; Springer: Berlin/Heidelberg, Germany, 2009; pp. 1–4.

48. Dubey, R.K.; Kumar, A. Non-intrusive objective speech quality evaluation using multiple time-scale estimates of multi-resolution auditory model (MRAM) features. In Proceedings of the 2016 IEEE Second International Innovative Applications of Computational Intelligence on Power, Energy and Controls with their Impact on Humanity (CIPECH), Ghaziabad, India, 18–19 November 2016; pp. 249–253.

49. Avila, A.R.; Alam, J.; O’Shaughnessy, D.; Falk, T.H. On the use of the i-vector speech representation for instrumental quality measurement. Qual. User Exp. 2020, 5, 6.

| Database | Types of Distortions | ITU-T Rec. P.563 [6] | Lyon + MRAM [30] | MRAM + Features [48] | MRAM, MFCC and LSF [10] | Proposed Model |
|---|---|---|---|---|---|---|
| NOIZEUS-960 | Airport | 0.694 | 0.770 | 0.892 | 0.874 | 0.957 |
| | Babble | 0.790 | 0.829 | 0.924 | 0.924 | 0.941 |
| | Car | 0.788 | 0.819 | 0.890 | 0.909 | 0.950 |
| | Exhibition | 0.725 | 0.705 | 0.855 | 0.847 | 0.923 |
| | Restaurant | 0.622 | 0.798 | 0.894 | 0.885 | 0.932 |
| | Station | 0.597 | 0.745 | 0.864 | 0.864 | 0.921 |
| | Street | 0.736 | 0.751 | 0.855 | 0.830 | 0.920 |
| | Train | 0.813 | 0.808 | 0.894 | 0.873 | 0.842 |

| Technique | Database | SRC | PCC | RMSE |
|---|---|---|---|---|
| ITU-T Rec. P.563 [6] | NOIZEUS-960 | - | 0.717 | - |
| Lyon + MRAM [30] | NOIZEUS-960 | - | 0.883 | 0.326 |
| i-Vector Framework [16] | NOIZEUS-960 | 0.900 | - | 0.300 |
| MRAM + MFCC [34] | NOIZEUS-960 | 0.854 | - | 0.368 |
| NSQM [11] | NOIZEUS-960 | - | 0.880 | 0.210 |
| i-vector average model [49] | NOIZEUS-960 | - | 0.890 | 0.240 |
| i-vector VQ model [49] | NOIZEUS-960 | - | 0.950 | 0.210 |
| ANIQUE+ [40] | NOIZEUS-960 | 0.886 | 0.890 | 0.324 |
| ANIQUE+ [40] | VCTK-Corpus | 0.842 | 0.849 | 0.301 |
| NSS SQA [19] | NOIZEUS-960 | 0.920 | 0.922 | 0.159 |
| NSS SQA [19] | VCTK-Corpus | 0.894 | 0.894 | 0.213 |
| Proposed | NOIZEUS-960 | 0.958 | 0.960 | 0.114 |
| Proposed | VCTK-Corpus | 0.902 | 0.891 | 0.206 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zafar, S.; Nizami, I.F.; Rehman, M.U.; Majid, M.; Ryu, J. NISQE: Non-Intrusive Speech Quality Evaluator Based on Natural Statistics of Mean Subtracted Contrast Normalized Coefficients of Spectrogram. Sensors 2023, 23, 5652. https://doi.org/10.3390/s23125652
