eWaSR—An Embedded-Compute-Ready Maritime Obstacle Detection Network

Abstract

1. Introduction

2. Related Work

2.1. Maritime Obstacle Detection

2.2. Efficient Neural Networks

3. WaSR Architecture Analysis

4. Embedded-Compute-Ready Obstacle Detection Network eWaSR

5. Results

5.1. Implementation Details

5.2. Training and Evaluation Hardware

5.3. Datasets

5.4. Influence of Lightweight Backbones on WaSR Performance

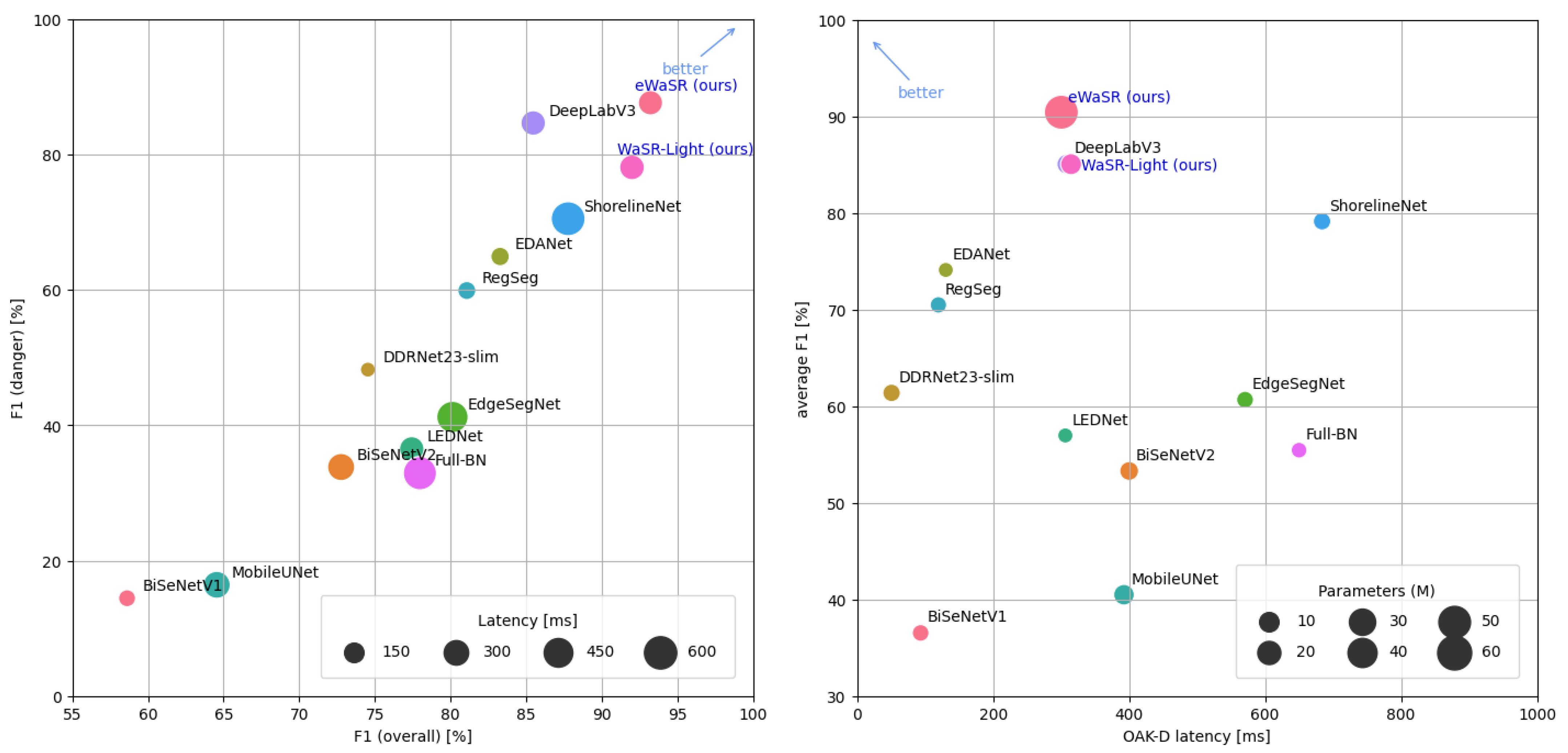

5.5. Comparison with the State of the Art

5.6. Ablation Studies

5.6.1. Influence of Backbones

5.6.2. Token Mixer Analysis

5.6.3. Channel Reduction Speedup

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

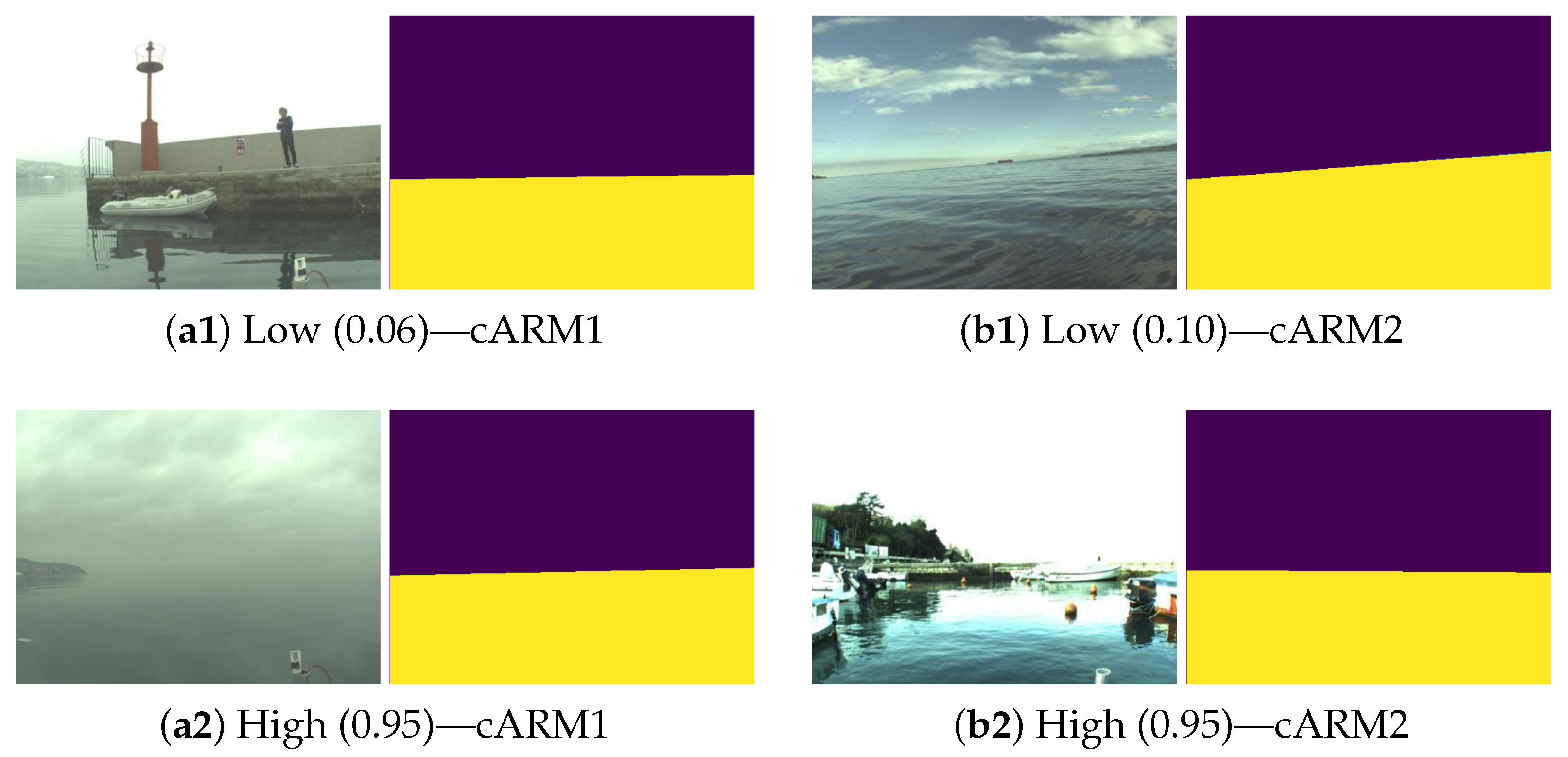

Appendix A. IMU Channel Weights in cARM Blocks

References

1. Bovcon, B.; Kristan, M. WaSR—A Water Segmentation and Refinement Maritime Obstacle Detection Network. IEEE Trans. Cybern. 2021, 52, 12661–12674.

2. Žust, L.; Kristan, M. Temporal Context for Robust Maritime Obstacle Detection. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022.

3. Yao, L.; Kanoulas, D.; Ji, Z.; Liu, Y. ShorelineNet: An efficient deep learning approach for shoreline semantic segmentation for unmanned surface vehicles. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 5403–5409.

4. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

5. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

6. Google. Google Coral Edge TPU. Available online: https://coral.ai/ (accessed on 25 May 2023).

7. NVIDIA. Jetson Nano: A Powerful Low-Cost Platform for AI at the Edge. Available online: https://developer.nvidia.com/embedded/jetson-nano-developer-kit (accessed on 25 May 2023).

8. Intel. Intel Movidius Myriad™ X Vision Processing Units. 2020. Available online: https://www.intel.com/content/www/us/en/products/details/processors/movidius-vpu/movidius-myriad-x.html (accessed on 25 May 2023).

9. Luxonis. OAK-D. Available online: https://www.luxonis.com/ (accessed on 25 May 2023).

10. Steccanella, L.; Bloisi, D.D.; Castellini, A.; Farinelli, A. Waterline and obstacle detection in images from low-cost autonomous boats for environmental monitoring. Robot. Auton. Syst. 2020, 124, 103346.

11. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

12. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

13. Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 1580–1589.

14. Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. ShuffleNet V2: Practical guidelines for efficient CNN architecture design. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 116–131.

15. Radosavovic, I.; Kosaraju, R.P.; Girshick, R.; He, K.; Dollár, P. Designing network design spaces. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 10428–10436.

16. Li, Y.; Chen, Y.; Dai, X.; Chen, D.; Liu, M.; Yuan, L.; Liu, Z.; Zhang, L.; Vasconcelos, N. MicroNet: Improving Image Recognition with Extremely Low FLOPs. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montréal, QC, Canada, 11–17 October 2021; pp. 468–477.

17. Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. RepVGG: Making VGG-style ConvNets Great Again. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 13733–13742.

18. Vasu, P.K.A.; Gabriel, J.; Zhu, J.; Tuzel, O.; Ranjan, A. MobileOne: An Improved One millisecond Mobile Backbone. arXiv 2022, arXiv:2206.04040.

19. Lee, J.M.; Lee, K.H.; Nam, B.; Wu, Y. Study on image-based ship detection for AR navigation. In Proceedings of the 2016 6th International Conference on IT Convergence and Security (ICITCS), Prague, Czech Republic, 26–29 September 2016; pp. 1–4.

20. Bloisi, D.D.; Previtali, F.; Pennisi, A.; Nardi, D.; Fiorini, M. Enhancing automatic maritime surveillance systems with visual information. IEEE Trans. Intell. Transp. Syst. 2016, 18, 824–833.

21. Loomans, M.J.; de With, P.H.; Wijnhoven, R.G. Robust automatic ship tracking in harbours using active cameras. In Proceedings of the 2013 IEEE International Conference on Image Processing, Melbourne, Australia, 15–18 September 2013; pp. 4117–4121.

22. Kristan, M.; Kenk, V.S.; Kovačič, S.; Perš, J. Fast image-based obstacle detection from unmanned surface vehicles. IEEE Trans. Cybern. 2015, 46, 641–654.

23. Prasad, D.K.; Prasath, C.K.; Rajan, D.; Rachmawati, L.; Rajabally, E.; Quek, C. Object detection in a maritime environment: Performance evaluation of background subtraction methods. IEEE Trans. Intell. Transp. Syst. 2018, 20, 1787–1802.

24. Cane, T.; Ferryman, J. Saliency-based detection for maritime object tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 18–25.

25. Bovcon, B.; Kristan, M. Obstacle detection for USVs by joint stereo-view semantic segmentation. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 5807–5812.

26. Muhovic, J.; Mandeljc, R.; Perš, J.; Bovcon, B. Depth fingerprinting for obstacle tracking using 3D point cloud. In Proceedings of the 23rd Computer Vision Winter Workshop, Český Krumlov, Czech Republic, 5–7 February 2018; pp. 71–78.

27. Muhovič, J.; Bovcon, B.; Kristan, M.; Perš, J. Obstacle tracking for unmanned surface vessels using 3-D point cloud. IEEE J. Ocean. Eng. 2019, 45, 786–798.

28. Zhou, B.; Zhao, H.; Puig, X.; Fidler, S.; Barriuso, A.; Torralba, A. Scene parsing through ADE20K dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 633–641.

29. Zhang, W.; Huang, Z.; Luo, G.; Chen, T.; Wang, X.; Liu, W.; Yu, G.; Shen, C. TopFormer: Token Pyramid Transformer for Mobile Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 12083–12093.

30. Kuric, I.; Kandera, M.; Klarák, J.; Ivanov, V.; Więcek, D. Visual product inspection based on deep learning methods. In Proceedings of the Advanced Manufacturing Processes: Selected Papers from the Grabchenko’s International Conference on Advanced Manufacturing Processes (InterPartner-2019), Odessa, Ukraine, 10–13 September 2019; pp. 148–156.

31. Lee, S.J.; Roh, M.I.; Lee, H.W.; Ha, J.S.; Woo, I.G. Image-based ship detection and classification for unmanned surface vehicle using real-time object detection neural networks. In Proceedings of the 28th International Ocean and Polar Engineering Conference, Sapporo, Japan, 10–15 June 2018.

32. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

33. Prasad, D.K.; Rajan, D.; Rachmawati, L.; Rajabally, E.; Quek, C. Video processing from electro-optical sensors for object detection and tracking in a maritime environment: A survey. IEEE Trans. Intell. Transp. Syst. 2017, 18, 1993–2016.

34. Yang, J.; Li, Y.; Zhang, Q.; Ren, Y. Surface vehicle detection and tracking with deep learning and appearance feature. In Proceedings of the 2019 5th International Conference on Control, Automation and Robotics (ICCAR), Beijing, China, 19–22 April 2019; pp. 276–280.

35. Ma, L.Y.; Xie, W.; Huang, H.B. Convolutional neural network based obstacle detection for unmanned surface vehicle. Math. Biosci. Eng. MBE 2019, 17, 845–861.

36. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99.

37. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

38. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

39. Cane, T.; Ferryman, J. Evaluating deep semantic segmentation networks for object detection in maritime surveillance. In Proceedings of the 2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Auckland, New Zealand, 27–30 November 2018; pp. 1–6.

40. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

41. Paszke, A.; Chaurasia, A.; Kim, S.; Culurciello, E. ENet: A deep neural network architecture for real-time semantic segmentation. arXiv 2016, arXiv:1606.02147.

42. Mehta, S.; Rastegari, M.; Caspi, A.; Shapiro, L.; Hajishirzi, H. ESPNet: Efficient spatial pyramid of dilated convolutions for semantic segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 552–568.

43. Bovcon, B.; Muhovič, J.; Perš, J.; Kristan, M. The MaSTr1325 dataset for training deep USV obstacle detection models. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), The Venetian Macao, Macau, 3–8 November 2019; pp. 3431–3438.

44. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

45. Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. BiSeNet: Bilateral Segmentation Network for Real-time Semantic Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 325–341.

46. Bovcon, B.; Muhovič, J.; Vranac, D.; Mozetič, D.; Perš, J.; Kristan, M. MODS—A USV-oriented object detection and obstacle segmentation benchmark. IEEE Trans. Intell. Transp. Syst. 2021, 23, 13403–13418.

47. Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, South Korea, 27 October–2 November 2019; pp. 1314–1324.

48. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

49. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6848–6856.

50. Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; pp. 6105–6114.

51. Tan, M.; Le, Q. EfficientNetV2: Smaller Models and Faster Training. In Proceedings of the International Conference on Machine Learning, Vienna, Austria, 18–24 July 2021; pp. 10096–10106.

52. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2015, arXiv:1409.1556v6.

53. Yu, W.; Luo, M.; Zhou, P.; Si, C.; Zhou, Y.; Wang, X.; Feng, J.; Yan, S. MetaFormer is Actually What You Need for Vision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 10819–10829.

54. Zhao, H.; Qi, X.; Shen, X.; Shi, J.; Jia, J. ICNet for real-time semantic segmentation on high-resolution images. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 405–420.

55. Wang, H.; Jiang, X.; Ren, H.; Hu, Y.; Bai, S. SwiftNet: Real-time video object segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 1296–1305.

56. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

57. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.

58. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

59. Krogh, A.; Hertz, J. A simple weight decay can improve generalization. Adv. Neural Inf. Process. Syst. 1991, 4, 950–957.

60. Popel, M.; Bojar, O. Training tips for the transformer model. arXiv 2018, arXiv:1804.00247.

61. PyTorch. TorchVision. Available online: https://github.com/pytorch/vision (accessed on 25 May 2023).

62. Yu, C.; Gao, C.; Wang, J.; Yu, G.; Shen, C.; Sang, N. BiSeNet V2: Bilateral Network with Guided Aggregation for Real-time Semantic Segmentation. arXiv 2020, arXiv:2004.02147.

63. Hong, Y.; Pan, H.; Sun, W.; Jia, Y. Deep Dual-resolution Networks for Real-time and Accurate Semantic Segmentation of Road Scenes. arXiv 2021, arXiv:2101.06085.

64. Pan, H.; Hong, Y.; Sun, W.; Jia, Y. Deep Dual-Resolution Networks for Real-Time and Accurate Semantic Segmentation of Traffic Scenes. IEEE Trans. Intell. Transp. Syst. 2022, 24, 3448–3460.

65. Lo, S.Y.; Hang, H.M.; Chan, S.W.; Lin, J.J. Efficient dense modules of asymmetric convolution for real-time semantic segmentation. In Proceedings of the ACM Multimedia Asia, Beijing, China, 16–18 December 2019; pp. 1–6.

66. Lin, Z.Q.; Chwyl, B.; Wong, A. EdgeSegNet: A compact network for semantic segmentation. arXiv 2019, arXiv:1905.04222.

67. Wang, Y.; Zhou, Q.; Liu, J.; Xiong, J.; Gao, G.; Wu, X.; Latecki, L.J. LEDNet: A lightweight encoder-decoder network for real-time semantic segmentation. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1860–1864.

68. Jing, J.; Wang, Z.; Rätsch, M.; Zhang, H. Mobile-Unet: An efficient convolutional neural network for fabric defect detection. Text. Res. J. 2022, 92, 30–42.

69. Gao, R. Rethink dilated convolution for real-time semantic segmentation. arXiv 2021, arXiv:2111.09957.

70. Rolls-Royce. RR and Finferries Demonstrate World’s First Fully Autonomous Ferry. 2018. Available online: https://www.rolls-royce.com/media/press-releases/2018/03-12-2018-rr-and-finferries-demonstrate-worlds-first-fully-autonomous-ferry.aspx (accessed on 25 May 2023).

71. FLIR Systems. Automatic Collision Avoidance System (OSCAR) Brings Peace of Mind to Sailors. 2018. Available online: https://www.flir.com/discover/cores-components/automatic-collision-avoidance-system-oscar-brings-peace-of-mind-to-sailors/ (accessed on 25 May 2023).

| WaSR | Original | −ASPP1 | −cARM1 | −FFM | −cARM2 | −ASPP | −FFM1 |
|---|---|---|---|---|---|---|---|
| F1 (%) | 93.5 | | | | | | |

| Block | Parameters (M) | FLOPs (B) | Total Execution Time (ms) | Slowest Operation (ms) |
|---|---|---|---|---|
| ASPP1 | 1.77 | 5.436 | 48.83 | 18.06 |
| cARM1 | 4.20 | 0.0105 | 12.36 | 3.99 |
| FFM | 21.28 | 58.928 | 279.40 | 266.32 |
| cARM2 | 0.79 | 1.617 | 19.81 | 10.54 |
| ASPP | 0.11 | 1.359 | 66.75 | 17.16 |
| FFM1 | 13.91 | 145.121 | 641.23 | 589.55 |
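To see which blocks dominate the cost, the execution times in the table above can be normalized by their sum. A minimal sketch: the numbers are copied from the table, and treating these six blocks as the whole measured budget is an assumption, since other parts of the network are not listed.

```python
# Per-block cost copied from the table above:
# name -> (parameters [M], FLOPs [B], total execution time [ms]).
BLOCKS = {
    "ASPP1": (1.77, 5.436, 48.83),
    "cARM1": (4.20, 0.0105, 12.36),
    "FFM": (21.28, 58.928, 279.40),
    "cARM2": (0.79, 1.617, 19.81),
    "ASPP": (0.11, 1.359, 66.75),
    "FFM1": (13.91, 145.121, 641.23),
}


def time_shares(blocks):
    """Fraction of the summed execution time spent in each block, largest first."""
    total_ms = sum(time_ms for _, _, time_ms in blocks.values())
    shares = {name: time_ms / total_ms for name, (_, _, time_ms) in blocks.items()}
    return dict(sorted(shares.items(), key=lambda item: item[1], reverse=True))


if __name__ == "__main__":
    for name, share in time_shares(BLOCKS).items():
        print(f"{name:<6} {share:6.1%}")
```

With these figures, FFM1 alone accounts for about 60% of the listed time, and FFM1 and FFM together for about 86%.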

| Encoder | W-E | Overall Pr | Overall Re | Overall F1 | Danger (<15 m) Pr | Danger (<15 m) Re | Danger (<15 m) F1 | OAK latency | GPU latency | chs |
|---|---|---|---|---|---|---|---|---|---|---|
| ResNet-18 [37] | 20 px | 93.46 | 91.67 | | 66.98 | 93.61 | | | | 960 |
| RepVGG-A0 [17] | 19 px | 91.71 | 91.9 | | 70.49 | 94.44 | | | | 1616 |
| MobileOne-S0 [18] | 18 px | 92.2 | 90.33 | | 73.59 | 93.64 | | | | 1456 |
| MobileNetV2 [12] | 24 px | 90.05 | 85.99 | | 63.71 | 91.69 | | | | 472 |
| GhostNet [13] | 21 px | 90.47 | 89.72 | | 59.4 | 92.96 | | | | |
| MicroNet [16] | 43 px | 63.59 | 74.22 | | 15.28 | 71.8 | | | | 436 |
| RegNetX [15] | 18 px | 91.89 | 89.55 | | 76.75 | 92.65 | | | | 1152 |
| ShuffleNet [49] | 23 px | 90.14 | 87.38 | | 61.18 | 90.95 | | | | 1192 |
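The tables report precision (Pr) and recall (Re) both over all obstacles and only inside a danger zone closer than 15 m to the boat. A minimal sketch of that split, assuming each ground-truth obstacle has already been marked as detected (TP) or missed (FN) and each false-positive detection carries a distance; the class and field names are hypothetical, and the actual MODS matching rules are not reproduced here.

```python
from dataclasses import dataclass

DANGER_ZONE_M = 15.0  # danger-zone radius from the table header


@dataclass
class GroundTruthObstacle:
    distance_m: float  # hypothetical: distance of the obstacle from the boat
    detected: bool     # hypothetical: True if matched by the prediction (TP), else FN


def precision_recall(obstacles, false_positive_distances, max_distance_m=None):
    """Precision and recall in percent, optionally only for objects closer than max_distance_m."""

    def in_scope(distance_m):
        return max_distance_m is None or distance_m < max_distance_m

    kept = [o for o in obstacles if in_scope(o.distance_m)]
    tp = sum(1 for o in kept if o.detected)
    fn = len(kept) - tp
    fp = sum(1 for d in false_positive_distances if in_scope(d))
    precision = 100.0 * tp / (tp + fp) if tp + fp else 0.0
    recall = 100.0 * tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


if __name__ == "__main__":
    gt = [
        GroundTruthObstacle(5.0, True),
        GroundTruthObstacle(12.0, False),
        GroundTruthObstacle(40.0, True),
        GroundTruthObstacle(80.0, True),
    ]
    fps = [8.0, 30.0, 60.0]  # distances of false-positive detections
    print(precision_recall(gt, fps))                 # overall: (50.0, 75.0)
    print(precision_recall(gt, fps, DANGER_ZONE_M))  # danger zone: (50.0, 50.0)
```

Both the ground-truth obstacles and the false positives are filtered with the same threshold, so anything beyond 15 m neither helps nor hurts the danger-zone scores.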

| Model | W-E | Overall Pr | Overall Re | Overall F1 | Danger (<15 m) Pr | Danger (<15 m) Re | Danger (<15 m) F1 | OAK latency | GPU latency |
|---|---|---|---|---|---|---|---|---|---|
| BiSeNetV1 (MobileNetV2) [12,45] | 46 px | 45.94 | 80.94 | | 7.9 | 83.32 | | | |
| BiSeNetV2 [62] | 36 px | 64.96 | 82.71 | | 21.55 | 78.54 | | | |
| DDRNet23-Slim [63,64] | 54 px | 74.82 | 74.24 | | 35.5 | 75.08 | | | |
| EDANet [65] | 34 px | 82.06 | 84.53 | | 52.82 | 84.22 | | | |
| EdgeSegNet [66] | 58 px | 75.49 | 85.39 | | 27.48 | 82.33 | | | |
| LEDNet [67] | 92 px | 74.64 | 80.46 | | 24.33 | 73.01 | | | |
| MobileUNet [68] | 47 px | 52.54 | 83.68 | | 9.21 | 75.36 | | | |
| RegSeg [69] | 54 px | 84.98 | 77.53 | | 48.44 | 78.44 | | | |
| ShorelineNet [3] | 19 px | 90.25 | 85.44 | | 57.26 | 91.72 | | | |
| DeepLabV3 (MobileNetV2) [5,12] | 29 px | 90.95 | 80.62 | | 82.54 | 86.84 | | | |
| ENet [41] | 34 px | 46.12 | 83.24 | | 7.08 | 78.07 | | / | 7.52 |
| Full-BN [10] | 33 px | 71.79 | 85.34 | | 20.43 | 84.53 | | | |
| TopFormer [29] | 20 px | 93.72 | 90.82 | | 75.53 | 94.38 | | | 9.53 |
| WaSR [1] | 18 px | 95.22 | 91.92 | | 82.69 | 94.87 | | / | |
| WaSR-Light (ours) | 20 px | 93.46 | 91.67 | | 66.98 | 93.61 | | | |
| eWaSR (ours) | | 95.63 | 90.55 | | 82.09 | 93.98 | | | |
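The F1 columns combine precision and recall as their harmonic mean, F1 = 2·Pr·Re / (Pr + Re). A minimal sketch, assuming the reported F1 is computed from the same aggregate Pr and Re listed in the tables:

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall, both given in percent."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # WaSR [1] row of the comparison table: overall and danger-zone Pr/Re.
    print(round(f1_score(95.22, 91.92), 1))  # 93.5
    print(round(f1_score(82.69, 94.87), 1))  # 88.4
```

The overall value agrees with the 93.5% F1 given for the original WaSR in the block-removal table. Because the inputs are the rounded table entries, a recomputed F1 can differ from a published one in the last digit.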

| Backbone | W-E | Overall Pr | Overall Re | Overall F1 | Danger (<15 m) Pr | Danger (<15 m) Re | Danger (<15 m) F1 | OAK latency | GPU latency | chs |
|---|---|---|---|---|---|---|---|---|---|---|
| MobileOne-S0 [18] | 20 px | 92.83 | 91.23 | | 73.18 | 94.56 | | | | 1456 |
| RepVGG-A0 [17] | 20 px | 95.33 | 90.49 | | 80.1 | 94.26 | | | | 1616 |
| RegNetX [15] | 21 px | 94.64 | 89.2 | | 72.08 | 94.01 | | | | 1152 |
| MobileNetV2 [12] | 21 px | 93.44 | 88.35 | | 68.91 | 92.96 | | | | 472 |
| GhostNet [13] | 23 px | 88.96 | 86.4 | | 56.39 | 91.41 | | | | 304 |
| ResNet-18 [37] | | 95.63 | 90.55 | | 82.09 | 93.98 | | | | 960 |
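The W-E column gives a water-edge error in pixels. A minimal sketch, assuming the error is the root-mean-square difference between the predicted and the annotated water-edge row in each image column, and that the predicted edge is the topmost water pixel of that column (a simplification; the class id `WATER` and both helper functions are hypothetical, not the benchmark's code):

```python
import math

WATER = 1  # hypothetical class id of water in a segmentation mask


def water_edge_rows(mask, water_id=WATER):
    """For each column, the row index of the topmost water pixel (None if the column has no water)."""
    height, width = len(mask), len(mask[0])
    return [
        next((row for row in range(height) if mask[row][col] == water_id), None)
        for col in range(width)
    ]


def water_edge_rmse(pred_mask, gt_edge_rows, water_id=WATER):
    """Root-mean-square distance in pixels between predicted and annotated edge rows."""
    pred_rows = water_edge_rows(pred_mask, water_id)
    squared = [
        (p - g) ** 2
        for p, g in zip(pred_rows, gt_edge_rows)
        if p is not None and g is not None
    ]
    return math.sqrt(sum(squared) / len(squared)) if squared else float("nan")


if __name__ == "__main__":
    # 4 x 3 toy mask: 0 = not water, 1 = water.
    mask = [
        [0, 0, 0],
        [0, 1, 0],
        [1, 1, 0],
        [1, 1, 1],
    ]
    print(water_edge_rows(mask))             # [2, 1, 3]
    print(water_edge_rmse(mask, [2, 2, 2]))  # sqrt(2/3), about 0.816
```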

| Modification | W-E | Overall F1 | Danger F1 | OAK latency | GPU latency |
|---|---|---|---|---|---|
| eWaSR | | | | | |
| ¬long-skip | 21 px | | | | |
| ¬SRM | 19 px | | | | |
| reduction | 18 px | | | | |
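Section 5.6.3 examines the speedup gained from channel reduction. As a generic illustration (a standard cost model, not the authors' measurement code), the multiply-accumulate count of a k × k convolution grows with the product of input and output channels, so halving both cuts a dense convolution's cost by a factor of four, whereas a depthwise convolution only halves:

```python
def conv2d_macs(h_out, w_out, c_in, c_out, kernel, groups=1):
    """Multiply-accumulate operations of a kernel x kernel 2D convolution (bias ignored)."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    return h_out * w_out * c_out * (c_in // groups) * kernel * kernel


def conv2d_flops(*args, **kwargs):
    """FLOPs under the common convention of two operations per multiply-accumulate."""
    return 2 * conv2d_macs(*args, **kwargs)


if __name__ == "__main__":
    h, w = 48, 64  # hypothetical feature-map size
    dense = conv2d_macs(h, w, 256, 256, 3) / conv2d_macs(h, w, 128, 128, 3)
    depthwise = conv2d_macs(h, w, 256, 256, 3, groups=256) / conv2d_macs(
        h, w, 128, 128, 3, groups=128
    )
    print(dense, depthwise)  # 4.0 2.0
```

Profiling tools differ on whether they count MACs or FLOPs, so figures such as those in the block table can differ by a factor of two depending on the convention. Measured latency, as in the OAK and GPU columns, need not scale in proportion either, since memory traffic and operator support on the target device also matter.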

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Teršek, M.; Žust, L.; Kristan, M. eWaSR—An Embedded-Compute-Ready Maritime Obstacle Detection Network. Sensors 2023, 23, 5386. https://doi.org/10.3390/s23125386
