Unsupervised Monocular Depth and Camera Pose Estimation with Multiple Masks and Geometric Consistency Constraints

Abstract

1. Introduction

2. Related Works

2.1. Supervised Learning of Scene Depth and Camera Pose

2.2. Unsupervised Learning of Scene Depth and Camera Pose

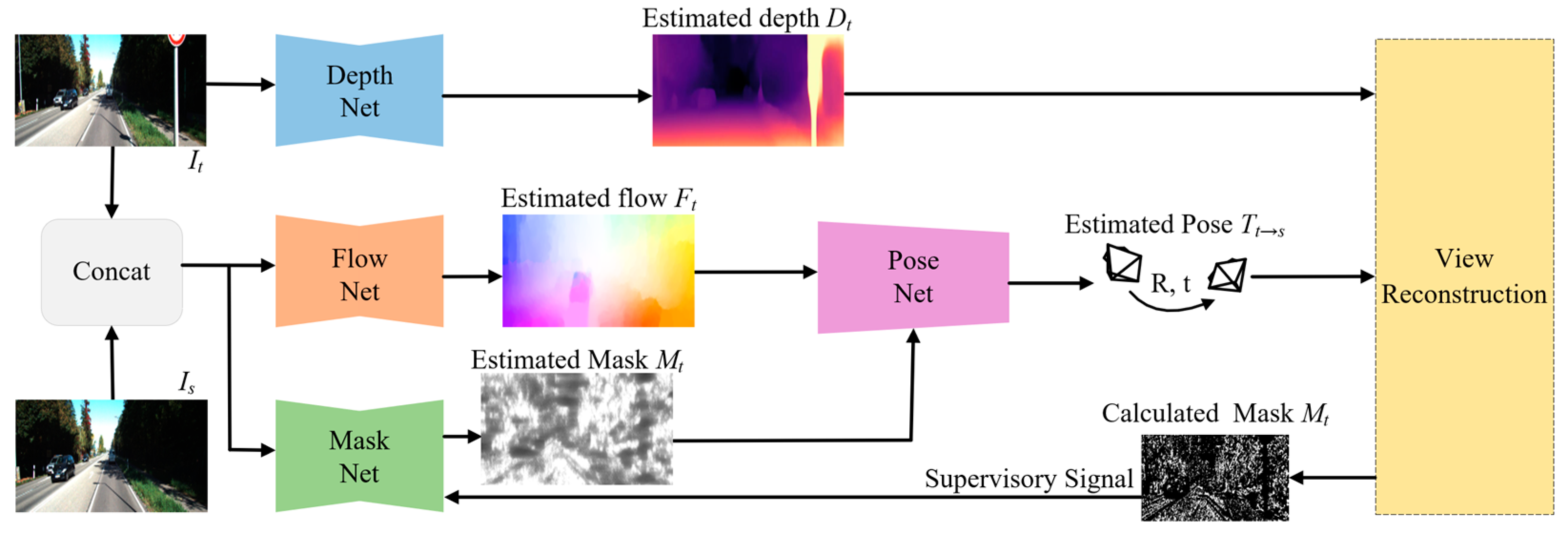

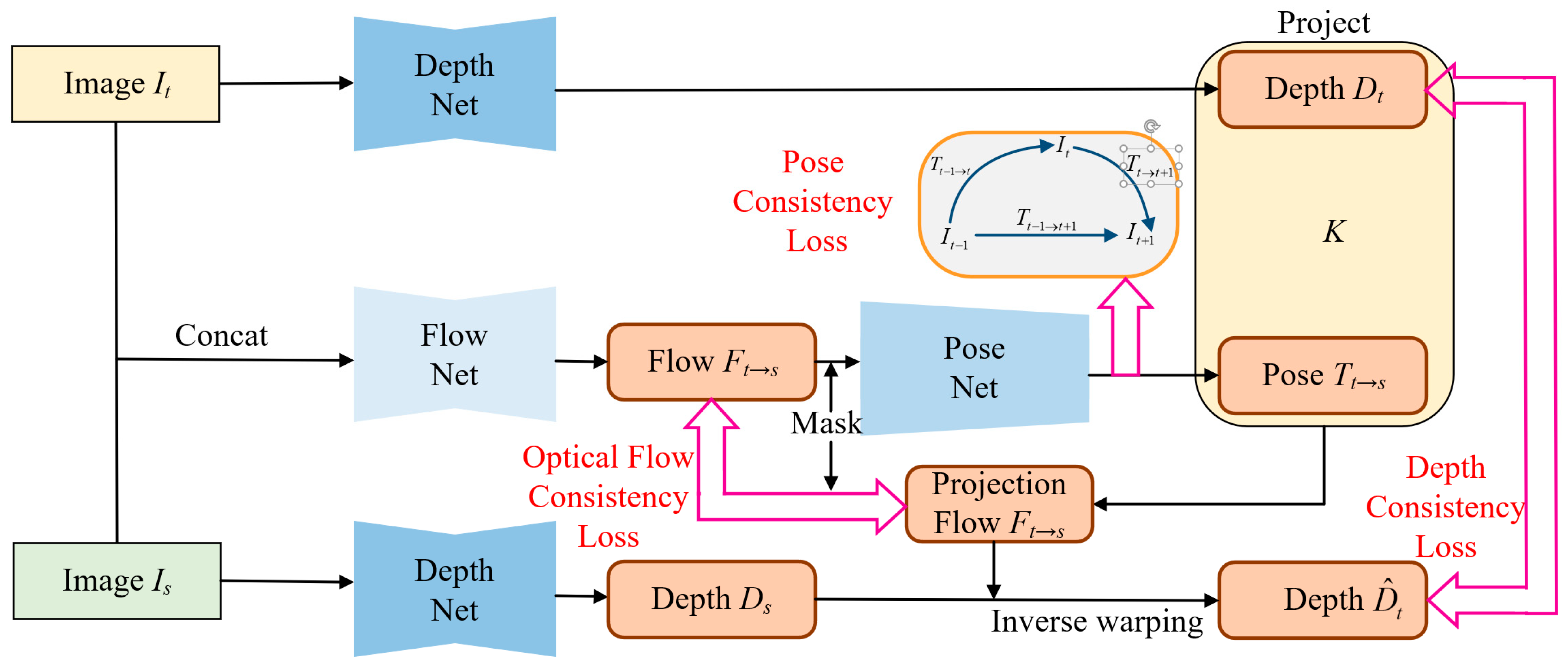

3. Methods

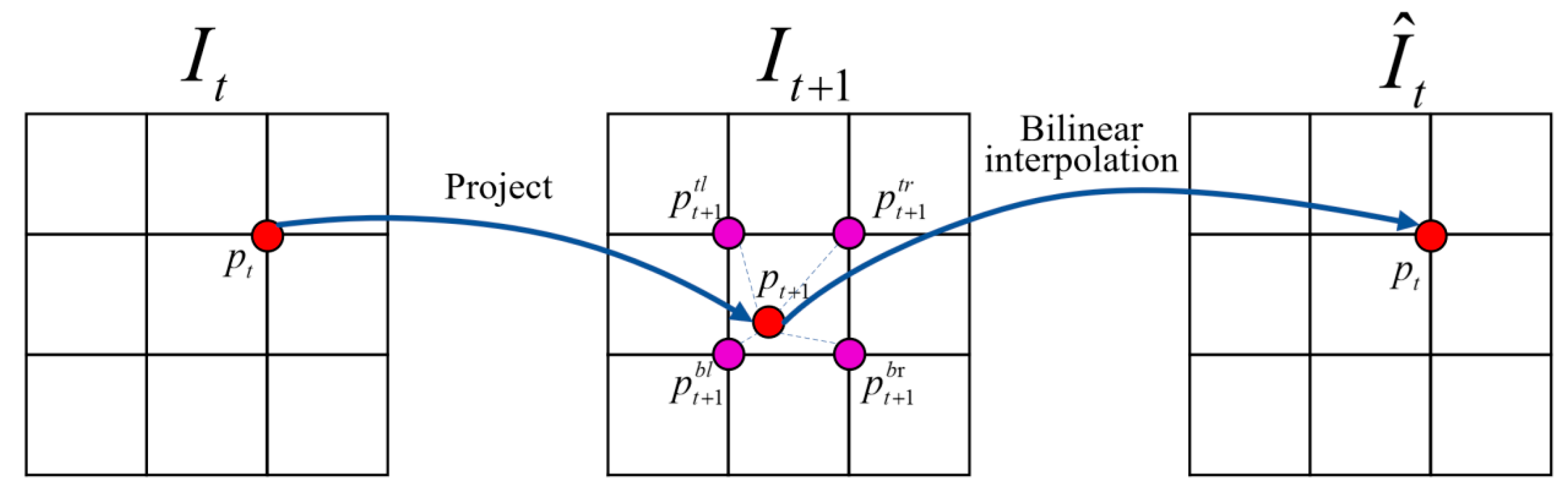

3.1. Photometric Consistency Loss and Smoothness Loss

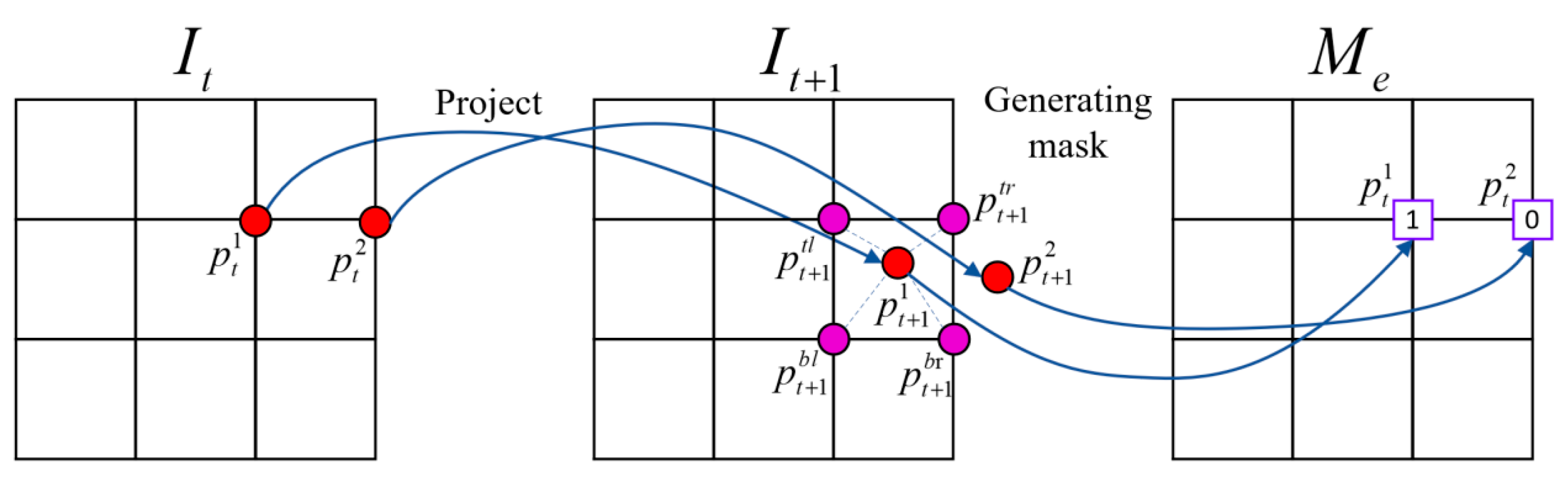

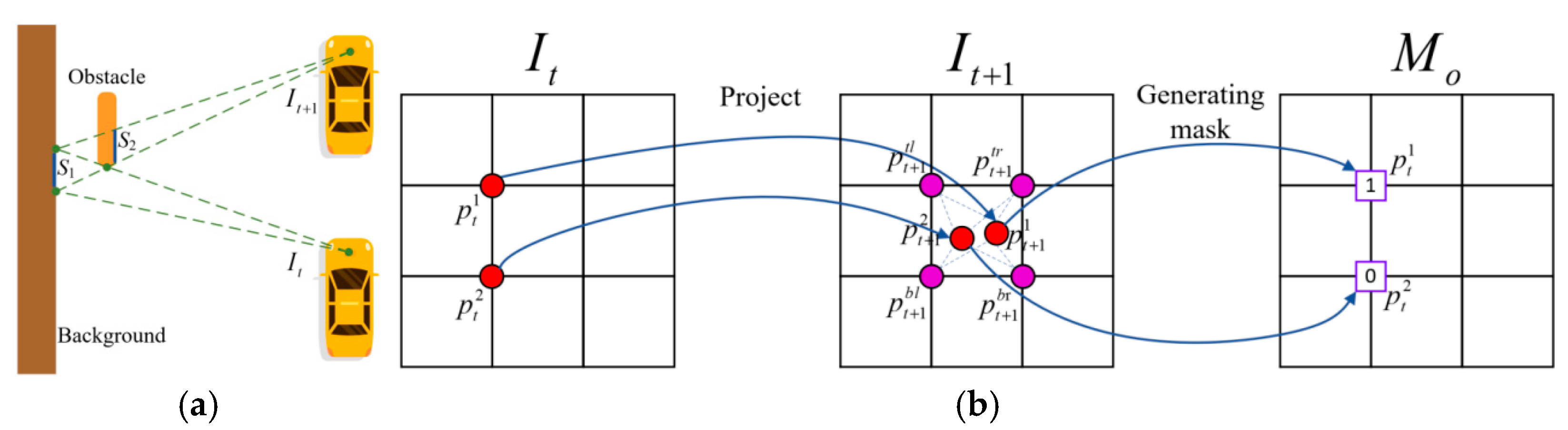

3.2. Calculated Mask and Mask Loss

3.3. Geometric Consistency Loss

4. Experiments

4.1. Implementation Details

4.2. Datasets and Metrics

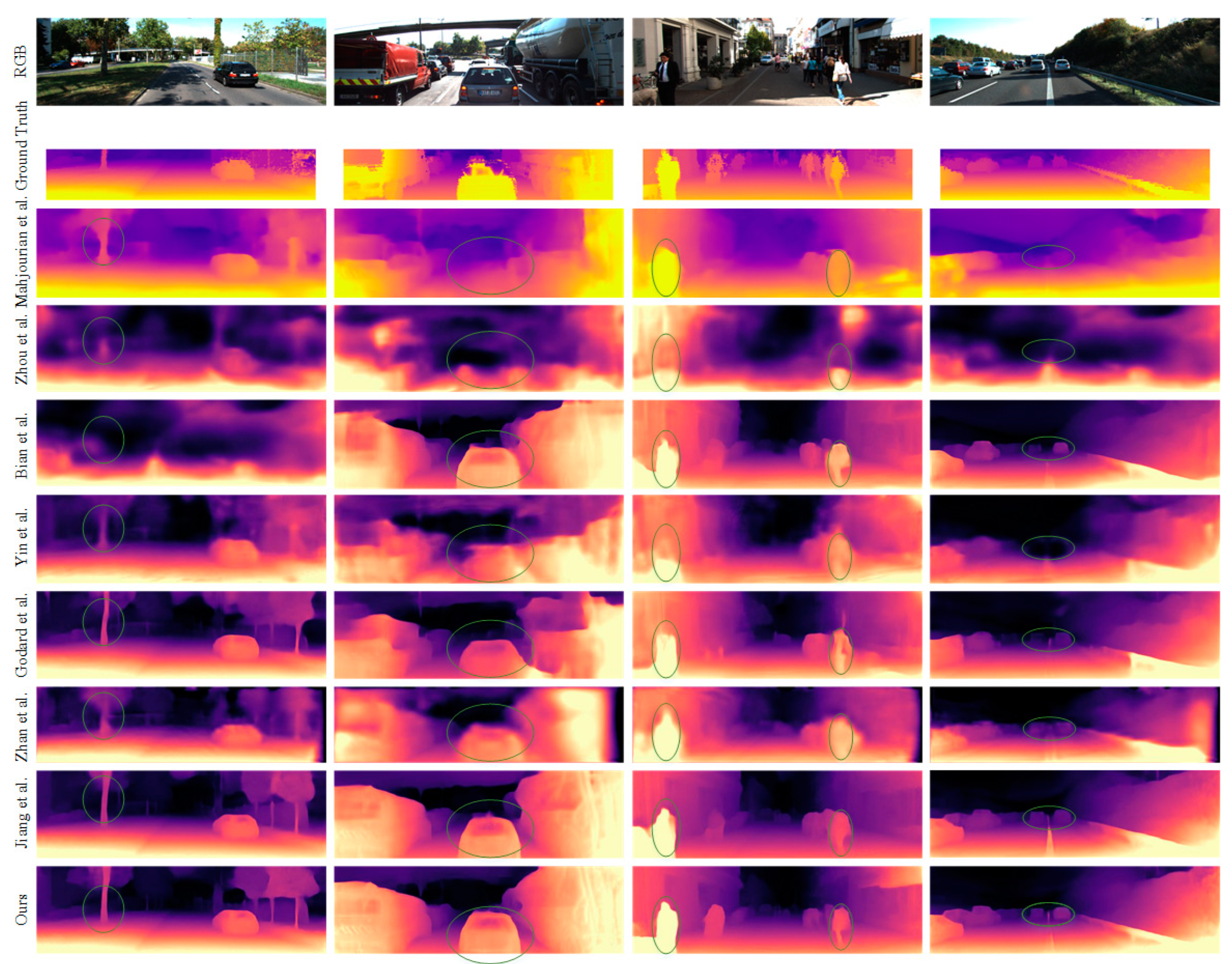

4.3. Evaluation of Depth Estimation

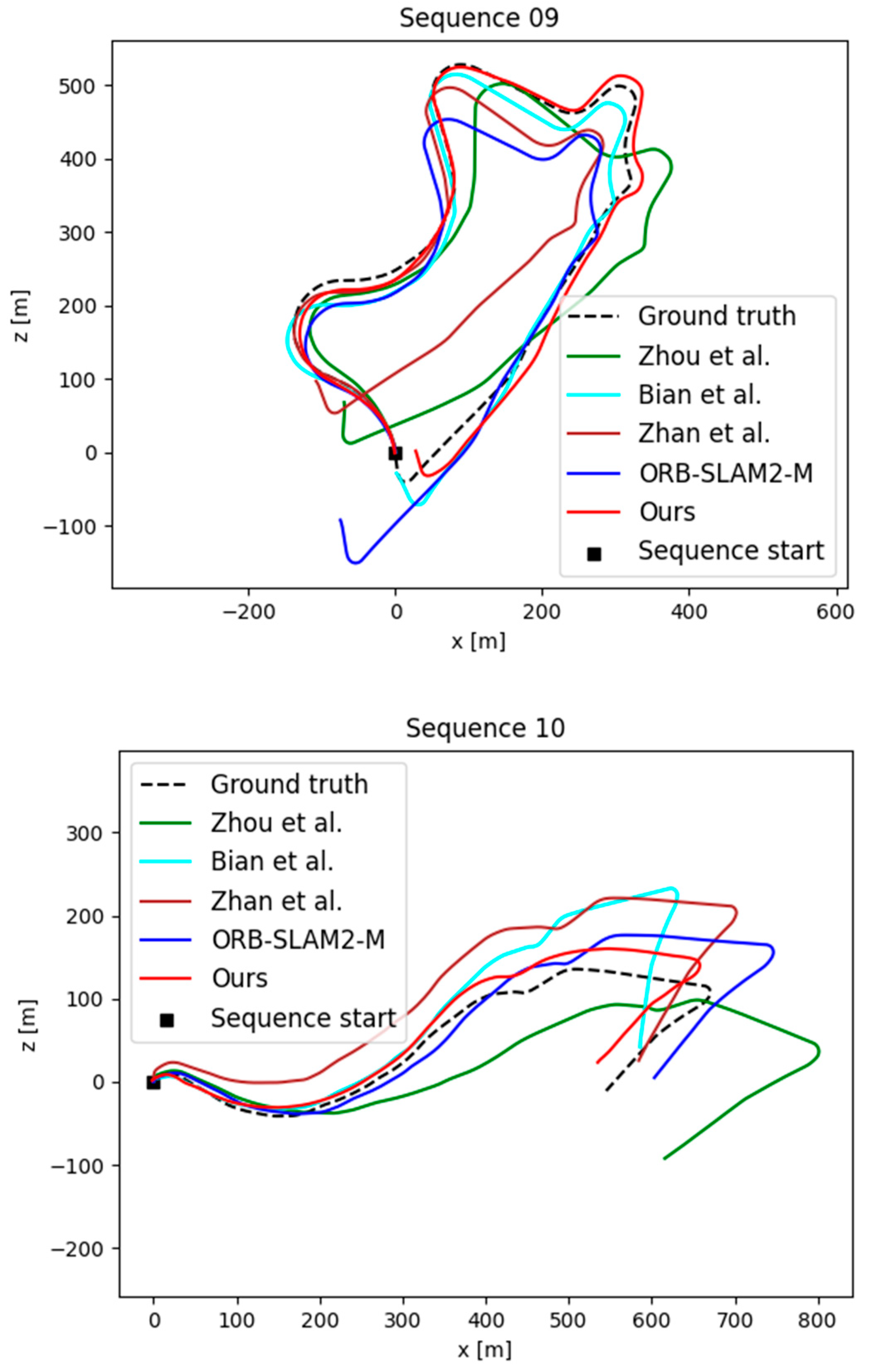

4.4. Evaluation of VO Estimation

4.5. Ablation Study

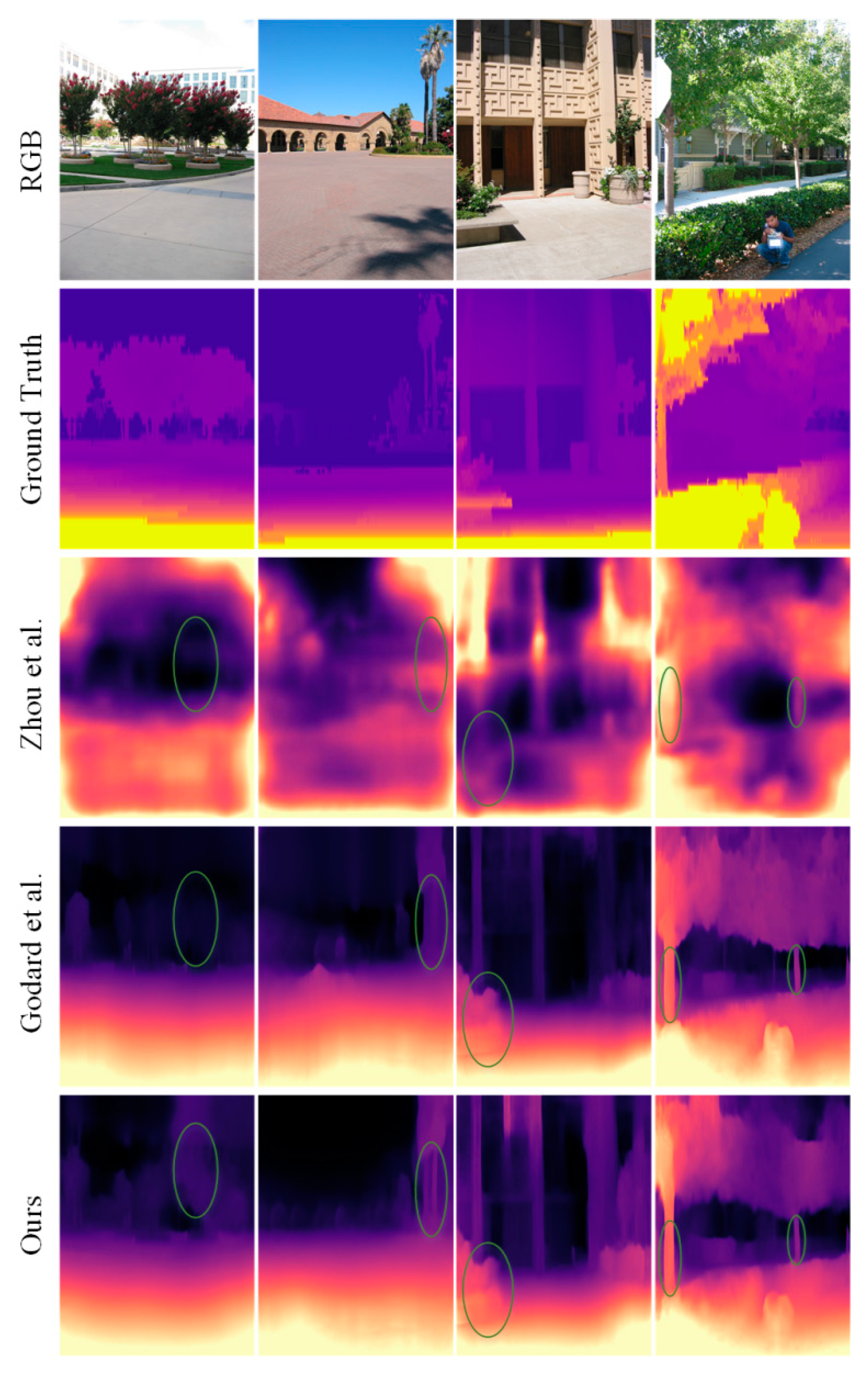

4.6. Generalization on Make3D Dataset

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Wei, H.; Huang, Y.; Hu, F.; Zhao, B.; Guo, Z.; Zhang, R. Motion Estimation Using Region-Level Segmentation and Extended Kalman Filter for Autonomous Driving. Remote Sens. 2021, 13, 1828.

2. Rosique, F.; Navarro, P.J.; Miller, L.; Salas, E. Autonomous Vehicle Dataset with Real Multi-Driver Scenes and Biometric Data. Sensors 2023, 23, 2009.

3. Luo, G.; Xiong, G.; Huang, X.; Zhao, X.; Tong, Y.; Chen, Q.; Zhu, Z.; Lei, H.; Lin, J. Geometry Sampling-Based Adaption to DCGAN for 3D Face Generation. Sensors 2023, 23, 1937.

4. Zou, Y.; Eldemiry, A.; Li, Y.; Chen, W. Robust RGB-D SLAM Using Point and Line Features for Low Textured Scene. Sensors 2020, 20, 4984.

5. Romero-Ramirez, F.J.; Muñoz-Salinas, R.; Marín-Jiménez, M.J.; Cazorla, M.; Medina-Carnicer, R. sSLAM: Speeded-Up Visual SLAM Mixing Artificial Markers and Temporary Keypoints. Sensors 2023, 23, 2210.

6. Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163.

7. Liang, Y.; Yang, Y.; Fan, X.; Cui, T. Efficient and Accurate Hierarchical SfM Based on Adaptive Track Selection for Large-Scale Oblique Images. Remote Sens. 2023, 15, 1374.

8. Liu, Z.; Qv, W.; Cai, H.; Guan, H.; Zhang, S. An Efficient and Robust Hybrid SfM Method for Large-Scale Scenes. Remote Sens. 2023, 15, 769.

9. Fang, X.; Li, Q.; Li, Q.; Ding, K.; Zhu, J. Exploiting Graph and Geodesic Distance Constraint for Deep Learning-Based Visual Odometry. Remote Sens. 2022, 14, 1854.

10. Wan, Y.; Zhao, Q.; Guo, C.; Xu, C.; Fang, L. Multi-Sensor Fusion Self-Supervised Deep Odometry and Depth Estimation. Remote Sens. 2022, 14, 1228.

11. Garg, R.; Bg, V.K.; Carneiro, G.; Reid, I. Unsupervised CNN for Single View Depth Estimation: Geometry to the Rescue. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 740–756.

12. Godard, C.; Aodha, O.M.; Firman, M.; Brostow, G. Digging into Self-Supervised Monocular Depth Estimation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Seoul, Republic of Korea, 2019; pp. 3827–3837.

13. Zhan, H.; Garg, R.; Weerasekera, C.S.; Li, K.; Agarwal, H.; Reid, I.M. Unsupervised Learning of Monocular Depth Estimation and Visual Odometry with Deep Feature Reconstruction. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; IEEE: Salt Lake City, UT, USA, 2018; pp. 340–349.

14. Van Dis, E.A.; Bollen, J.; Zuidema, W.; van Rooij, R.; Bockting, C.L. ChatGPT: Five Priorities for Research. Nature 2023, 614, 224–226.

15. Zhou, T.; Brown, M.; Snavely, N.; Lowe, D.G. Unsupervised Learning of Depth and Ego-Motion from Video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6612–6619.

16. Klodt, M.; Vedaldi, A. Supervising the New with the Old: Learning SFM from SFM. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 713–728.

17. Chen, S.; Han, J.; Tang, M.; Dong, R.; Kan, J. Encoder-Decoder Structure with Multiscale Receptive Field Block for Unsupervised Depth Estimation from Monocular Video. Remote Sens. 2022, 14, 2906.

18. Bian, J.-W.; Li, Z.; Wang, N.; Zhan, H.; Shen, C.; Cheng, M.-M.; Reid, I. Unsupervised Scale-Consistent Depth and Ego-Motion Learning from Monocular Video. arXiv 2019, arXiv:1908.10553.

19. Zhao, B.; Huang, Y.; Ci, W.; Hu, X. Unsupervised Learning of Monocular Depth and Ego-Motion with Optical Flow Features and Multiple Constraints. Sensors 2022, 22, 1383.

20. Wang, G.; Wang, H.; Liu, Y.; Chen, W. Unsupervised Learning of Monocular Depth and Ego-Motion Using Multiple Masks. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; IEEE: Montreal, QC, Canada, 2019; pp. 4724–4730.

21. Jiang, H.; Ding, L.; Sun, Z.; Huang, R. Unsupervised Monocular Depth Perception: Focusing on Moving Objects. IEEE Sensors J. 2021, 21, 27225–27237.

22. Eigen, D.; Puhrsch, C.; Fergus, R. Depth Map Prediction from a Single Image Using a Multi-Scale Deep Network. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 13 December 2014; pp. 2366–2374.

23. Liu, F.; Shen, C.; Lin, G.; Reid, I. Learning Depth from Single Monocular Images Using Deep Convolutional Neural Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 2024–2039.

24. Muller, P.; Savakis, A. Flowdometry: An Optical Flow and Deep Learning Based Approach to Visual Odometry. In Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 24–31 March 2017; IEEE: Santa Rosa, CA, USA, 2017; pp. 624–631.

25. Costante, G.; Ciarfuglia, T.A. LS-VO: Learning Dense Optical Subspace for Robust Visual Odometry Estimation. IEEE Robot. Autom. Lett. 2018, 3, 1735–1742.

26. Zhao, B.; Huang, Y.; Wei, H.; Hu, X. Ego-Motion Estimation Using Recurrent Convolutional Neural Networks through Optical Flow Learning. Electronics 2021, 10, 222.

27. Mahjourian, R.; Wicke, M.; Angelova, A. Unsupervised Learning of Depth and Ego-Motion from Monocular Video Using 3D Geometric Constraints. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; IEEE: Salt Lake City, UT, USA, 2018; pp. 5667–5675.

28. Jin, F.; Zhao, Y.; Wan, C.; Yuan, Y.; Wang, S. Unsupervised Learning of Depth from Monocular Videos Using 3D–2D Corresponding Constraints. Remote Sens. 2021, 13, 1764.

29. Sun, Q.; Tang, Y.; Zhang, C.; Zhao, C.; Qian, F.; Kurths, J. Unsupervised Estimation of Monocular Depth and VO in Dynamic Environments via Hybrid Masks. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 2023–2033.

30. Mahdy, A.M.S. A Numerical Method for Solving the Nonlinear Equations of Emden-Fowler Models. J. Ocean. Eng. Sci. 2022, 1–8.

31. Yin, Z.; Shi, J. GeoNet: Unsupervised Learning of Dense Depth, Optical Flow and Camera Pose. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; IEEE: Salt Lake City, UT, USA, 2018; pp. 1983–1992.

32. Zhang, J.-N.; Su, Q.-X.; Liu, P.-Y.; Ge, H.-Y.; Zhang, Z.-F. MuDeepNet: Unsupervised Learning of Dense Depth, Optical Flow and Camera Pose Using Multi-View Consistency Loss. Int. J. Control. Autom. Syst. 2019, 17, 2586–2596.

33. Zou, Y.; Luo, Z.; Huang, J.-B. DF-Net: Unsupervised Joint Learning of Depth and Flow Using Cross-Task Consistency. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 38–55.

34. Ranjan, A.; Jampani, V.; Balles, L.; Kim, K.; Sun, D.; Wulff, J.; Black, M.J. Competitive Collaboration: Joint Unsupervised Learning of Depth, Camera Motion, Optical Flow and Motion Segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; IEEE: Long Beach, CA, USA, 2019; pp. 12232–12241.

35. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

36. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention, MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Springer: Munich, Germany, 2015; pp. 234–241.

37. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; IEEE: Las Vegas, NV, USA, 2016; pp. 770–778.

38. Zhao, S.; Sheng, Y.; Dong, Y.; Chang, E.I.; Xu, Y. MaskFlownet: Asymmetric Feature Matching with Learnable Occlusion Mask. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 6277–6286.

39. Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic Differentiation in PyTorch. In Proceedings of the Advances in Neural Information Processing Systems Workshop, Long Beach, CA, USA, 9 December 2017.

40. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

41. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

42. Geiger, A.; Lenz, P.; Urtasun, R. Are We Ready for Autonomous Driving? The KITTI Vision Benchmark Suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

43. Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 3213–3223.

44. Saxena, A.; Sun, M.; Ng, A.Y. Learning 3-D Scene Structure from a Single Still Image. In Proceedings of the 2007 IEEE International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–8.

45. Zhang, Z.; Scaramuzza, D. A Tutorial on Quantitative Trajectory Evaluation for Visual(-Inertial) Odometry. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: Madrid, Spain, 2018; pp. 7244–7251.

| Method | Supervised Signal | Training Dataset | Abs.Rel (error) | Sq.Rel (error) | RMSE (error) | RMSE (log) (error) | δ < 1.25 (accuracy) | δ < 1.25² (accuracy) | δ < 1.25³ (accuracy) |
|---|---|---|---|---|---|---|---|---|---|
| Eigen et al. [22] Coarse | Depth | K | 0.214 | 1.605 | 6.563 | 0.292 | 0.673 | 0.884 | 0.957 |
| Eigen et al. [22] Fine | Depth | K | 0.203 | 1.548 | 6.307 | 0.282 | 0.702 | 0.890 | 0.958 |
| Liu et al. [23] | Depth | K | 0.202 | 1.614 | 6.523 | 0.275 | 0.678 | 0.895 | 0.965 |
| Zhan et al. [13] | Stereo | K | 0.144 | 1.391 | 5.869 | 0.241 | 0.803 | 0.928 | 0.969 |
| Godard et al. [12] | Stereo | K | 0.148 | 1.344 | 5.927 | 0.247 | 0.803 | 0.922 | 0.964 |
| Zhou et al. [15] | Mono | K | 0.208 | 1.768 | 6.856 | 0.283 | 0.678 | 0.885 | 0.957 |
| Zhou et al. [15] updated | Mono | K | 0.183 | 1.595 | 6.709 | 0.270 | 0.734 | 0.902 | 0.959 |
| Mahjourian et al. [27] | Mono | K | 0.163 | 1.240 | 6.220 | 0.250 | 0.762 | 0.916 | 0.968 |
| Jin et al. [28] | Mono | K | 0.169 | 1.387 | 6.670 | 0.267 | 0.748 | 0.904 | 0.960 |
| Yin et al. [31] | Mono | K | 0.155 | 1.296 | 5.857 | 0.233 | 0.793 | 0.931 | 0.973 |
| Ranjan et al. [34] | Mono | K | 0.140 | 1.070 | 5.326 | 0.217 | 0.826 | 0.941 | 0.975 |
| Wang et al. [20] | Mono | K | 0.147 | 0.889 | 4.290 | 0.214 | 0.808 | 0.942 | 0.979 |
| Bian et al. [18] | Mono | K | 0.137 | 1.089 | 5.439 | 0.217 | 0.830 | 0.942 | 0.975 |
| Jiang et al. [21] | Mono | K | 0.112 | 0.875 | 4.795 | 0.190 | 0.880 | 0.960 | 0.981 |
| Godard et al. [12] | Mono | K | 0.154 | 1.218 | 5.699 | 0.231 | 0.798 | 0.932 | 0.973 |
| Ours | Mono | K | 0.110 | 0.793 | 4.553 | 0.184 | 0.886 | 0.964 | 0.983 |
| Zhou et al. [15] | Mono | CS + K | 0.198 | 1.836 | 6.565 | 0.275 | 0.718 | 0.901 | 0.960 |
| Mahjourian et al. [27] | Mono | CS + K | 0.159 | 1.231 | 5.912 | 0.243 | 0.784 | 0.923 | 0.970 |
| Jin et al. [28] | Mono | CS + K | 0.162 | 1.039 | 4.851 | 0.244 | 0.767 | 0.920 | 0.969 |
| Yin et al. [31] | Mono | CS + K | 0.153 | 1.328 | 5.737 | 0.232 | 0.802 | 0.934 | 0.972 |
| Ranjan et al. [34] | Mono | CS + K | 0.139 | 1.032 | 5.199 | 0.213 | 0.827 | 0.943 | 0.977 |
| Wang et al. [20] | Mono | CS + K | 0.155 | 1.184 | 5.765 | 0.229 | 0.790 | 0.933 | 0.975 |
| Ours | Mono | CS + K | 0.103 | 0.634 | 3.367 | 0.178 | 0.899 | 0.969 | 0.984 |
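The column names in the depth table above (Abs.Rel, Sq.Rel, RMSE, RMSE (log), and three accuracy columns) match the metric set commonly used for monocular depth evaluation. The following is a minimal sketch of how such numbers are typically computed, not the authors' evaluation code. It assumes the three accuracy columns are the fractions of pixels whose ratio max(pred/gt, gt/pred) falls below 1.25, 1.25² and 1.25³. The function name `depth_metrics` and the flat-list inputs are hypothetical, and any rescaling of monocular predictions to the ground-truth scale is assumed to have been done beforehand.

```python
import math


def depth_metrics(pred, gt):
    """Compute common depth metrics over valid pixels.

    pred, gt: equal-length sequences of strictly positive depths
    (hypothetical inputs: invalid pixels are assumed already removed).
    """
    if len(pred) != len(gt) or len(gt) == 0:
        raise ValueError("pred and gt must be non-empty and the same length")
    n = len(gt)
    pairs = list(zip(pred, gt))

    # Error metrics (lower is better).
    abs_rel = sum(abs(p - g) / g for p, g in pairs) / n
    sq_rel = sum((p - g) ** 2 / g for p, g in pairs) / n
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n)
    rmse_log = math.sqrt(
        sum((math.log(p) - math.log(g)) ** 2 for p, g in pairs) / n
    )

    # Accuracy metrics (higher is better): share of pixels whose
    # symmetric ratio stays under each threshold (assumed definition).
    ratios = [max(p / g, g / p) for p, g in pairs]
    delta1, delta2, delta3 = (
        sum(r < 1.25 ** k for r in ratios) / n for k in (1, 2, 3)
    )

    return {
        "abs_rel": abs_rel,
        "sq_rel": sq_rel,
        "rmse": rmse,
        "rmse_log": rmse_log,
        "delta1": delta1,
        "delta2": delta2,
        "delta3": delta3,
    }
```

For example, `depth_metrics([2.0, 4.0], [2.0, 5.0])` gives an Abs.Rel of 0.1, because only the second pixel contributes a relative error of 1/5 and the mean is taken over two pixels.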

| Method | ATE of Seq.09 | ATE of Seq.10 |
|---|---|---|
| ORB-SLAM [6] | 0.014 ± 0.008 | 0.012 ± 0.011 |
| Zhou et al. [15] | 0.016 ± 0.009 | 0.013 ± 0.009 |
| Bian et al. [18] | 0.016 ± 0.007 | 0.015 ± 0.015 |
| Mahjourian et al. [27] | 0.013 ± 0.010 | 0.012 ± 0.011 |
| Yin et al. [31] | 0.012 ± 0.007 | 0.012 ± 0.009 |
| Ranjan et al. [34] | 0.012 ± 0.007 | 0.012 ± 0.008 |
| Ours | 0.008 ± 0.005 | 0.007 ± 0.005 |
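The table above reports absolute trajectory error (ATE) for two sequences, but this section does not state the exact evaluation protocol. As a rough illustration only, the sketch below assumes one common convention for monocular methods: the predicted camera positions are aligned to the ground truth with a single least-squares scale factor, and the error is the root mean square of the remaining position differences. The function name `ate_rmse` and the tuple-based trajectory format are hypothetical, and both trajectories are assumed to be expressed relative to the same starting frame.

```python
import math


def ate_rmse(pred_xyz, gt_xyz):
    """Scale-aligned ATE between two trajectories.

    pred_xyz, gt_xyz: equal-length sequences of (x, y, z) positions
    (hypothetical format). Only a global scale is aligned; rotation and
    translation alignment are assumed unnecessary because both
    trajectories share the same origin frame.
    """
    if len(pred_xyz) != len(gt_xyz) or len(gt_xyz) == 0:
        raise ValueError("trajectories must be non-empty and the same length")

    # Least-squares scale s minimising sum ||s * pred - gt||^2.
    num = sum(p * g for P, G in zip(pred_xyz, gt_xyz) for p, g in zip(P, G))
    den = sum(p * p for P in pred_xyz for p in P)
    scale = num / den if den > 0 else 1.0

    # Root mean square of the per-frame position error after scaling.
    sq_err = sum(
        (scale * p - g) ** 2
        for P, G in zip(pred_xyz, gt_xyz)
        for p, g in zip(P, G)
    )
    return math.sqrt(sq_err / len(gt_xyz))
```

A prediction that differs from the ground truth only by a global scale, such as `[(0, 0, 0), (1, 0, 0), (2, 0, 0)]` against `[(0, 0, 0), (2, 0, 0), (4, 0, 0)]`, yields an error of zero under this convention, which is why scale alignment matters when comparing monocular methods.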

| Method | ATE of Seq.09 | ATE of Seq.10 |
|---|---|---|
| Baseline | 0.018 ± 0.011 | 0.017 ± 0.011 |
| | 0.014 ± 0.010 | 0.013 ± 0.010 |
| | 0.012 ± 0.008 | 0.011 ± 0.008 |
| + | 0.012 ± 0.007 | 0.010 ± 0.007 |
| + | 0.011 ± 0.009 | 0.009 ± 0.006 |
| + | 0.009 ± 0.005 | 0.007 ± 0.005 |
| + | 0.008 ± 0.005 | 0.007 ± 0.005 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, X.; Zhao, B.; Yao, J.; Wu, G. Unsupervised Monocular Depth and Camera Pose Estimation with Multiple Masks and Geometric Consistency Constraints. Sensors 2023, 23, 5329. https://doi.org/10.3390/s23115329
