An Assessment of In-the-Wild Datasets for Multimodal Emotion Recognition

Abstract

1. Introduction

- A review of recent works on multimodal emotion recognition that use in-the-wild datasets, covering both the methods proposed and the datasets used in their experiments. A detailed description of these datasets is also provided.

- A descriptive analysis of the four datasets selected for our study. This description includes the frequency distribution of emotions, visualizations of some samples and details of the original sources from which the data were extracted.

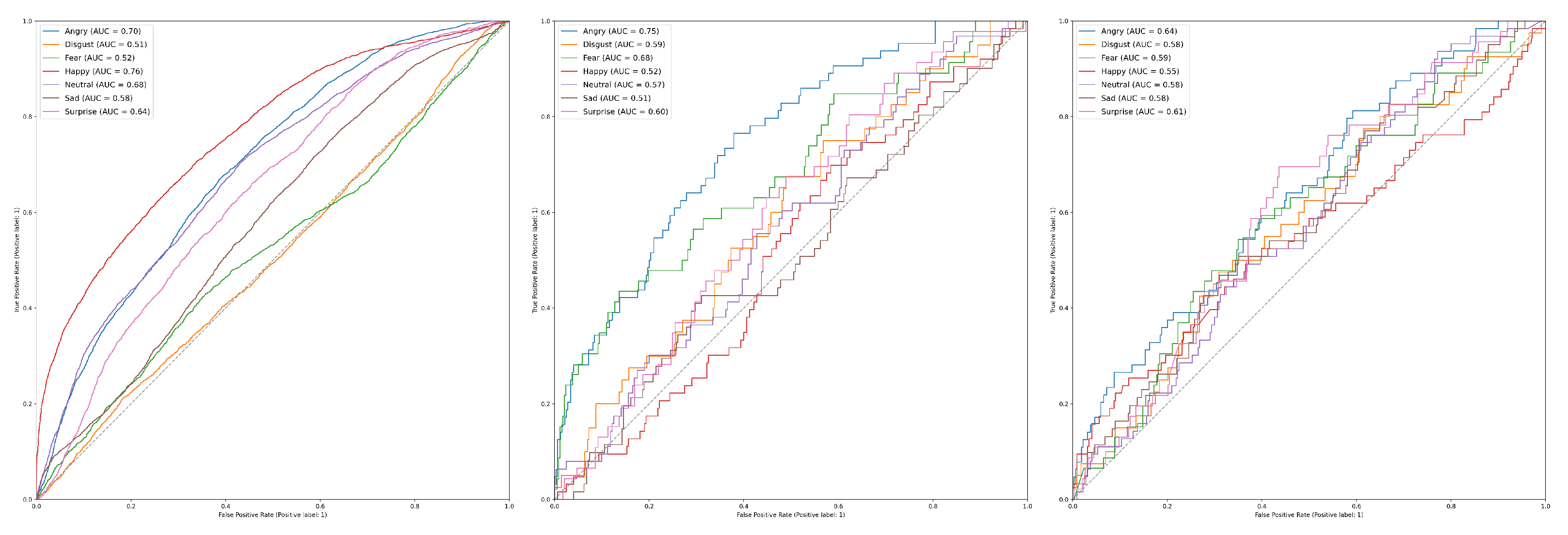

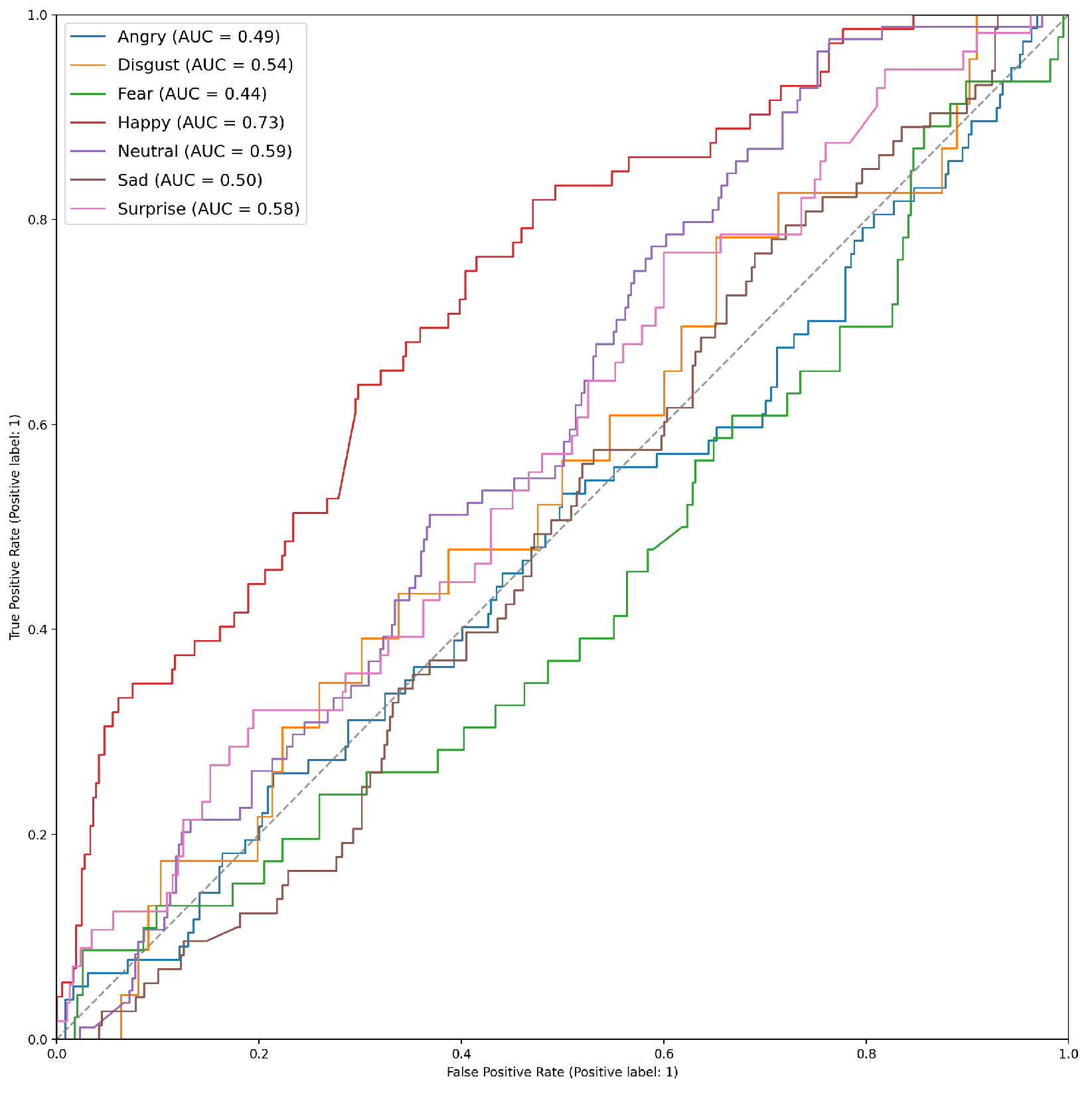

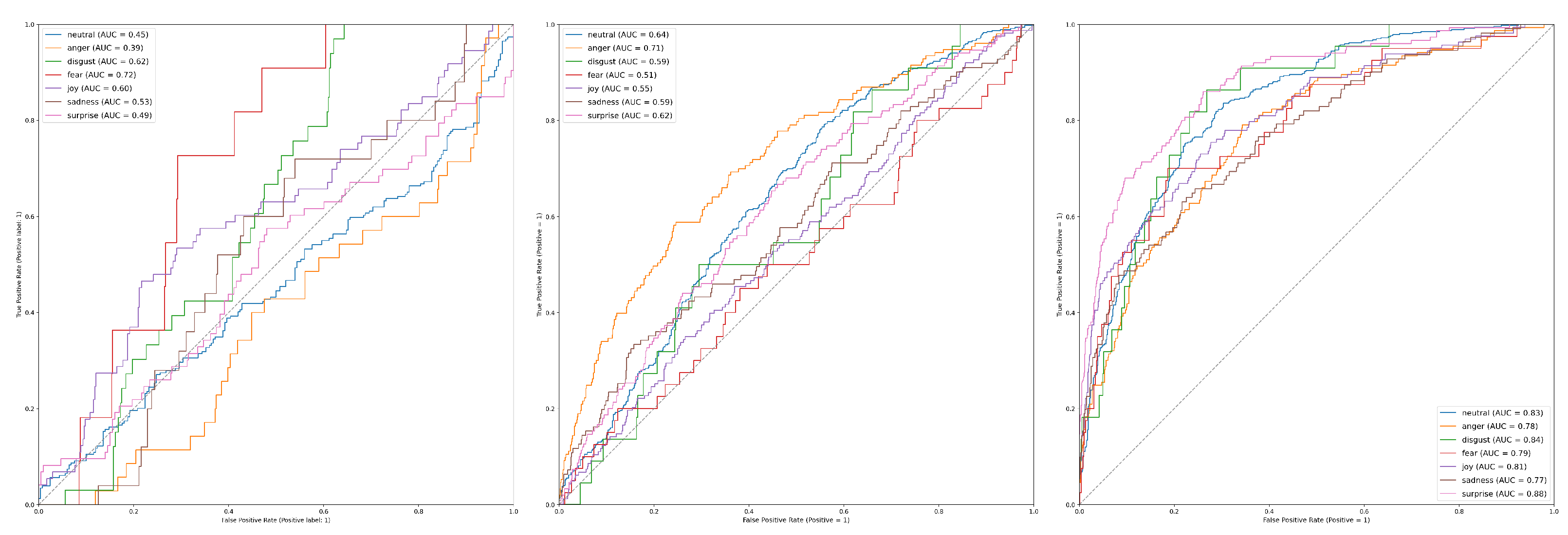

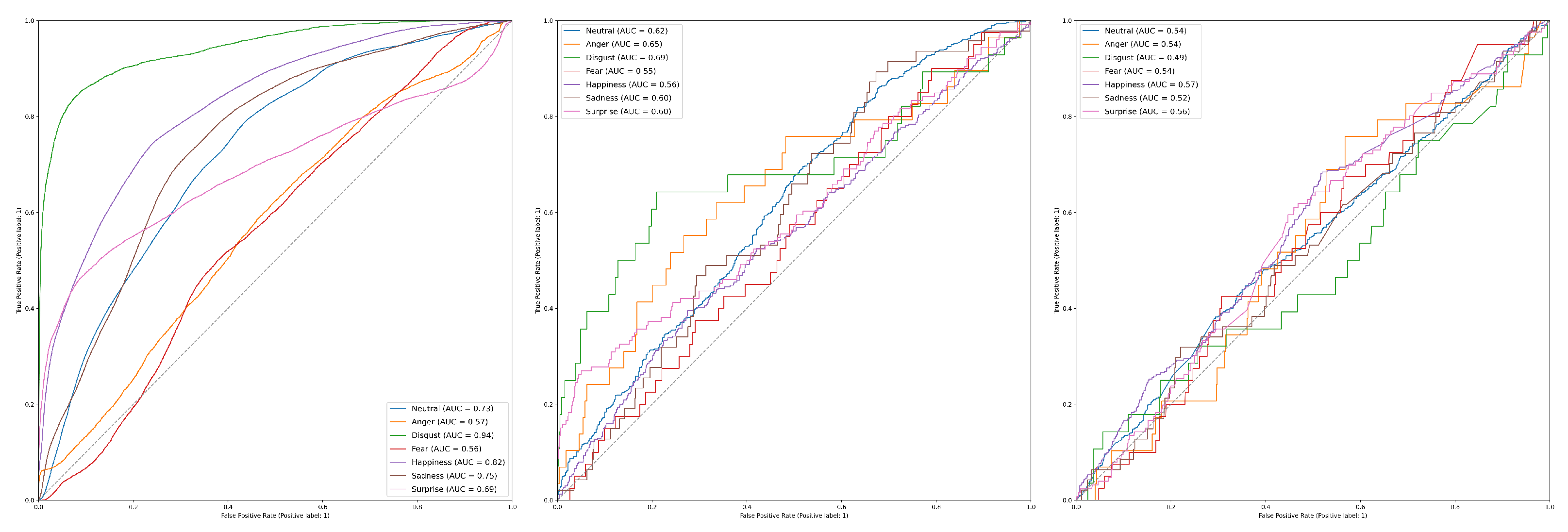

- A performance evaluation using an ensemble of Deep Learning architectures and fusion methods. The tests include ablation experiments that report results for each individual modality as well as for their fusion.

2. Related Work

3. A Selection of In-the-Wild Datasets

- AFEW: This is a dynamic temporal facial expression data corpus proposed by A. Dhall et al. in 2011 [77]. It has been used as the database for the Emotion Recognition in-the-wild Challenge (EmotiW) since 2013, with a new version released for each year's challenge. For the sake of simplicity, the most recent version is referred to as AFEW in the following. It consists of close-to-real-world instances extracted from movies and reality TV shows: 1809 video clips of 300–5400 ms with various head poses, occlusions and lighting conditions. The subjects range in age from 1 to 70 years and are of various races and genders, and some scenes contain multiple subjects. Around 330 subjects have been labeled with information such as name, age of character, age of actor, gender, pose and individual facial expressions. AFEW is divided into separate training (773), validation (383) and test (653) video clips, whose samples are tagged with discrete emotion labels: the six universal emotions (angry, disgust, fear, happy, sad and surprise) and neutral. Audio and video are provided in WAV and AVI formats, respectively. Modalities to explore in this database include facial, audio and posture. Figure 1 shows some samples from this dataset. Each emotion is represented by a set of short videos in which different actors show the emotion in different situations, e.g., a person whose face shows anger (Figure 2). It should be noted that the videos in the test folder are not categorized by emotion and that video quality varies, with some clips containing scenes with different levels of image quality (Figure 3). The official site of this dataset is the School of Computing, Australian National University (https://cs.anu.edu.au/few/, accessed on 27 May 2023).

- SFEW: The Static Facial Expressions in the Wild (SFEW) database was created from frames containing facial expressions extracted from AFEW. It includes unconstrained facial expressions, varied head poses, a large age range, occlusions, varied focus, different face resolutions and close-to-real-world illumination. The extracted frames were labeled by two independent labelers, based on the label of the corresponding AFEW sequence, with one of seven expression categories: the six basic expressions (angry, disgust, fear, happy, sad and surprise) and neutral. The database is composed of 1766 images, with 958 for training, 436 for validation and 372 for testing. The expression labels of the training and validation sets are provided, while those of the test set are held back by the challenge organizers [73]. Figure 4 shows some samples of this database. This corpus is a widely used benchmark for facial expression recognition in the wild. Its official website is the same as that of AFEW. Table 3 shows the number of images available in SFEW per emotion.

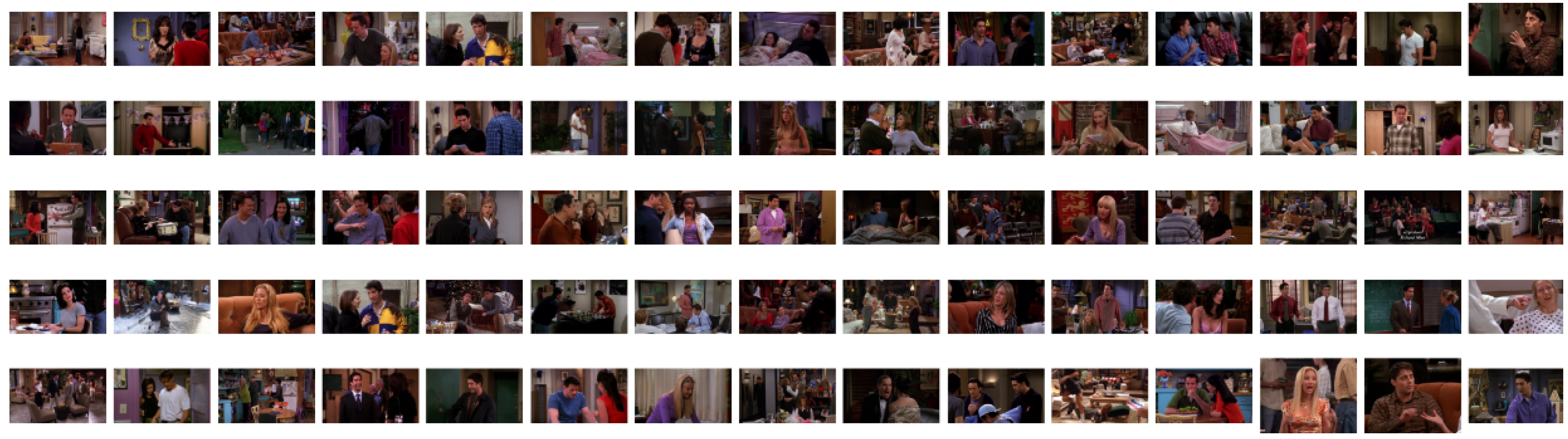

- MELD: The Multimodal EmotionLines Dataset (MELD) is an extension and enhancement of EmotionLines [78]. The MELD corpus was constructed by extracting the starting and ending timestamps of all utterances from every dialog in the EmotionLines dataset, subject to two constraints: the timestamps of the utterances in a dialog must be in increasing order, and all the utterances in a dialog must belong to the same episode and scene. After the timestamp of each utterance was obtained, the corresponding audiovisual clip was extracted from the source episode, and the audio content was then extracted from these clips and stored as 16-bit PCM WAV files. The final dataset therefore includes visual, audio and textual modalities for each utterance. MELD contains more than 13,000 utterances from 1433 dialogs of the TV series Friends, involving multiple speakers. Each utterance is annotated with emotion and sentiment labels. The emotion labels correspond to Ekman’s six universal emotions (joy, sadness, fear, anger, surprise and disgust), plus an additional neutral label. For sentiment, three classes are distinguished: negative, positive and neutral [52]. Figure 5 shows an extract of this dataset. Each frame is an extract from a video, and most of these frames contain several people expressing different emotions (Figure 6). The dataset contains only the raw data for the complete video frames; individual faces are neither cropped nor tagged. On the MELD site (https://github.com/declare-lab/MELD, accessed on 27 May 2023), the data are structured in different folders. In particular, the data folder contains three CSV files, corresponding to the train, dev and test sets, with 9990, 1110 and 2611 lines, respectively.
Each file has the same structure, containing utterance, speaker, emotion and sentiment information, as well as data concerning the source.

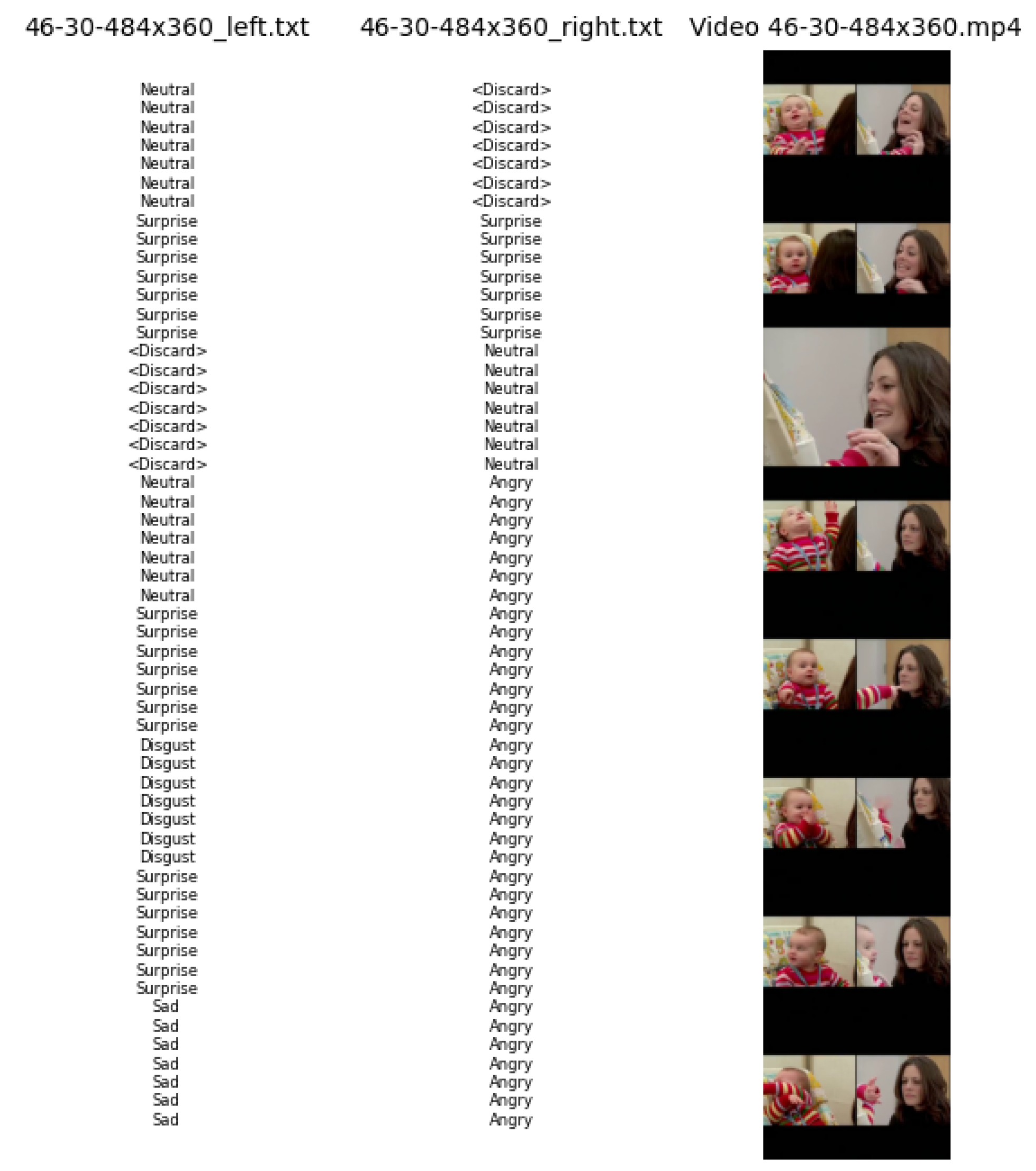

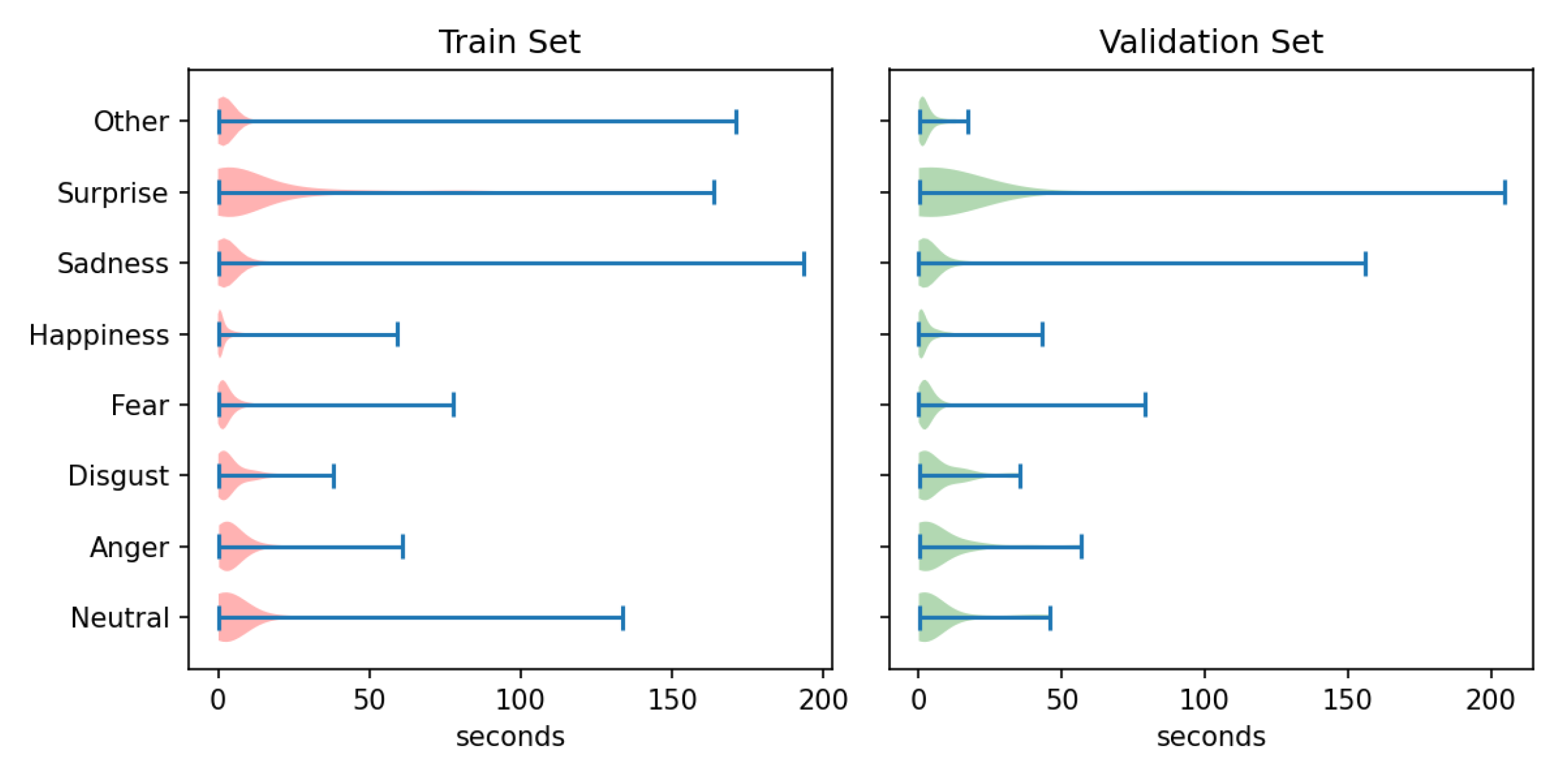

- AffWild2: This is an extension of the AffWild dataset, which was designed for the First Affect-in-the-Wild Challenge [79]. AffWild collected data available on video-sharing websites such as YouTube, selecting videos that display the affective behavior of people, for example, while watching a trailer, a movie or a disturbing clip, or when reacting to pranks. It was designed to train and test an end-to-end deep neural architecture for estimating continuous emotion dimensions from visual cues [56] and was annotated in terms of the valence-arousal dimensions. A total of 298 videos displaying the reactions of 200 subjects, with a total duration of more than 30 h, were collected. AffWild2 later extended the data with 260 more subjects and 1,413,000 new video frames [57]. The videos were downloaded from YouTube and show people reacting to events or audiovisual content, or speaking to the camera. The resulting set contains 558 videos with more than a million frames in total, covering a wide range of subjects’ ages, ethnicities and professions, with large variations in head pose, illumination conditions, occlusions and emotions. The videos were processed, trimmed and reformatted to MP4. Frames were annotated for three tasks: valence-arousal estimation, emotion classification and action unit detection [80]. This dataset is also distributed as a set of cropped frames from each video, centered on the face. The number of images available is shown in Table 4. For emotion classification, each frame was annotated with one of the six basic emotions (anger, disgust, fear, happiness, sadness and surprise), a neutral label or an additional “other” category. Some frames are labeled as discarded for this task.
The official site of AffWild2 belongs to the Intelligent Behaviour Understanding Group (iBUG), Department of Computing at Imperial College London (https://ibug.doc.ic.ac.uk/resources/aff-wild2/, accessed on 27 May 2023). Figure 7 shows some samples from the AffWild2 dataset.
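
As a quick consistency check on the figures above, the AFEW and SFEW split sizes can be summed and compared with the reported totals. The following Python sketch uses only the counts stated in the dataset descriptions; the dictionary layout and the helper name `split_proportions` are illustrative choices, not part of either dataset's tooling.

```python
from typing import Dict

# Split sizes as reported in the dataset descriptions above.
SPLITS: Dict[str, Dict[str, int]] = {
    "AFEW": {"train": 773, "validation": 383, "test": 653},
    "SFEW": {"train": 958, "validation": 436, "test": 372},
}

# Totals stated in the text for each dataset.
REPORTED_TOTALS: Dict[str, int] = {"AFEW": 1809, "SFEW": 1766}


def split_proportions(splits: Dict[str, int]) -> Dict[str, float]:
    """Return the fraction of samples in each split (hypothetical helper)."""
    total = sum(splits.values())
    return {name: count / total for name, count in splits.items()}


for dataset, splits in SPLITS.items():
    total = sum(splits.values())
    # The per-split counts should add up to the reported dataset size.
    assert total == REPORTED_TOTALS[dataset], dataset
    shares = ", ".join(f"{k}: {v:.1%}" for k, v in split_proportions(splits).items())
    print(f"{dataset} ({total} samples) -> {shares}")
```

Both datasets pass the check; the comparison also makes visible that the test split is proportionally larger in AFEW (about 36%) than in SFEW (about 21%).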
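
The AFEW description notes that training and validation clips are grouped by emotion, whereas the test folder is not. A minimal sketch of how such a layout could be indexed into (clip, label) pairs is shown below. It assumes a hypothetical layout of `<split>/<Emotion>/<clip>.avi` for labeled splits and `<split>/<clip>.avi` for the test split; the folder names in `EMOTIONS` and the function `index_split` are assumptions for illustration, not the official AFEW distribution format.

```python
from pathlib import Path
from typing import List, Optional, Tuple

# Seven AFEW classes (six universal emotions plus neutral); folder names are assumed.
EMOTIONS = ["Angry", "Disgust", "Fear", "Happy", "Neutral", "Sad", "Surprise"]


def index_split(split_dir: Path) -> List[Tuple[Path, Optional[str]]]:
    """Index the .avi clips of one split directory (hypothetical layout).

    A clip inside a per-emotion subfolder receives that emotion as its label;
    a clip stored directly in the split folder (as in the unlabeled test set)
    receives None.
    """
    items: List[Tuple[Path, Optional[str]]] = []
    for clip in sorted(split_dir.rglob("*.avi")):
        parent = clip.parent.name
        label = parent if parent in EMOTIONS else None
        items.append((clip, label))
    return items
```

Whether a downloaded copy of AFEW follows exactly this layout should be checked first; only the `EMOTIONS` list and the glob pattern would need to change for a different naming scheme.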
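
The MELD description mentions per-utterance emotion labels and the requirement that utterance timestamps within a dialog be increasing. The following sketch reads rows with Python's `csv.DictReader`, counts the emotion distribution and flags dialogs whose start times do not increase. It assumes column names `Emotion`, `Dialogue_ID`, `Utterance_ID` and `StartTime`, with timestamps formatted as `HH:MM:SS,mmm`; these names and the format are assumptions to be checked against the actual CSV files, and the inline sample rows are invented for illustration, not taken from MELD.

```python
import csv
import io
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple


def to_seconds(timestamp: str) -> float:
    """Convert an 'HH:MM:SS,mmm' timestamp (assumed format) to seconds."""
    hms, millis = timestamp.split(",")
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds + int(millis) / 1000.0


def summarize(rows: Iterable[Dict[str, str]]) -> Tuple[Counter, List[str]]:
    """Count emotion labels and list dialogs whose start times do not increase."""
    emotions: Counter = Counter()
    starts: Dict[str, List[Tuple[int, float]]] = defaultdict(list)
    for row in rows:
        emotions[row["Emotion"]] += 1
        starts[row["Dialogue_ID"]].append(
            (int(row["Utterance_ID"]), to_seconds(row["StartTime"]))
        )
    unordered: List[str] = []
    for dialog_id, items in starts.items():
        times = [t for _, t in sorted(items)]  # order utterances by their ID
        if any(later <= earlier for earlier, later in zip(times, times[1:])):
            unordered.append(dialog_id)
    return emotions, unordered


# Invented sample rows in the assumed column layout (not real MELD data).
SAMPLE = """Utterance,Speaker,Emotion,Sentiment,Dialogue_ID,Utterance_ID,StartTime
Hi!,A,joy,positive,0,0,"00:00:01,000"
Oh no.,B,sadness,negative,0,1,"00:00:03,500"
Okay.,A,neutral,neutral,1,0,"00:00:09,000"
What?,B,surprise,negative,1,1,"00:00:08,000"
"""

emotions, unordered = summarize(csv.DictReader(io.StringIO(SAMPLE)))
print(emotions.most_common())
print("dialogs with non-increasing timestamps:", unordered)
```

Applied to the three MELD CSV files, the same counter would give the per-split emotion frequencies, and an empty `unordered` list would confirm the timestamp constraint.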

3.1. Preprocessing

3.1.1. Face Modality

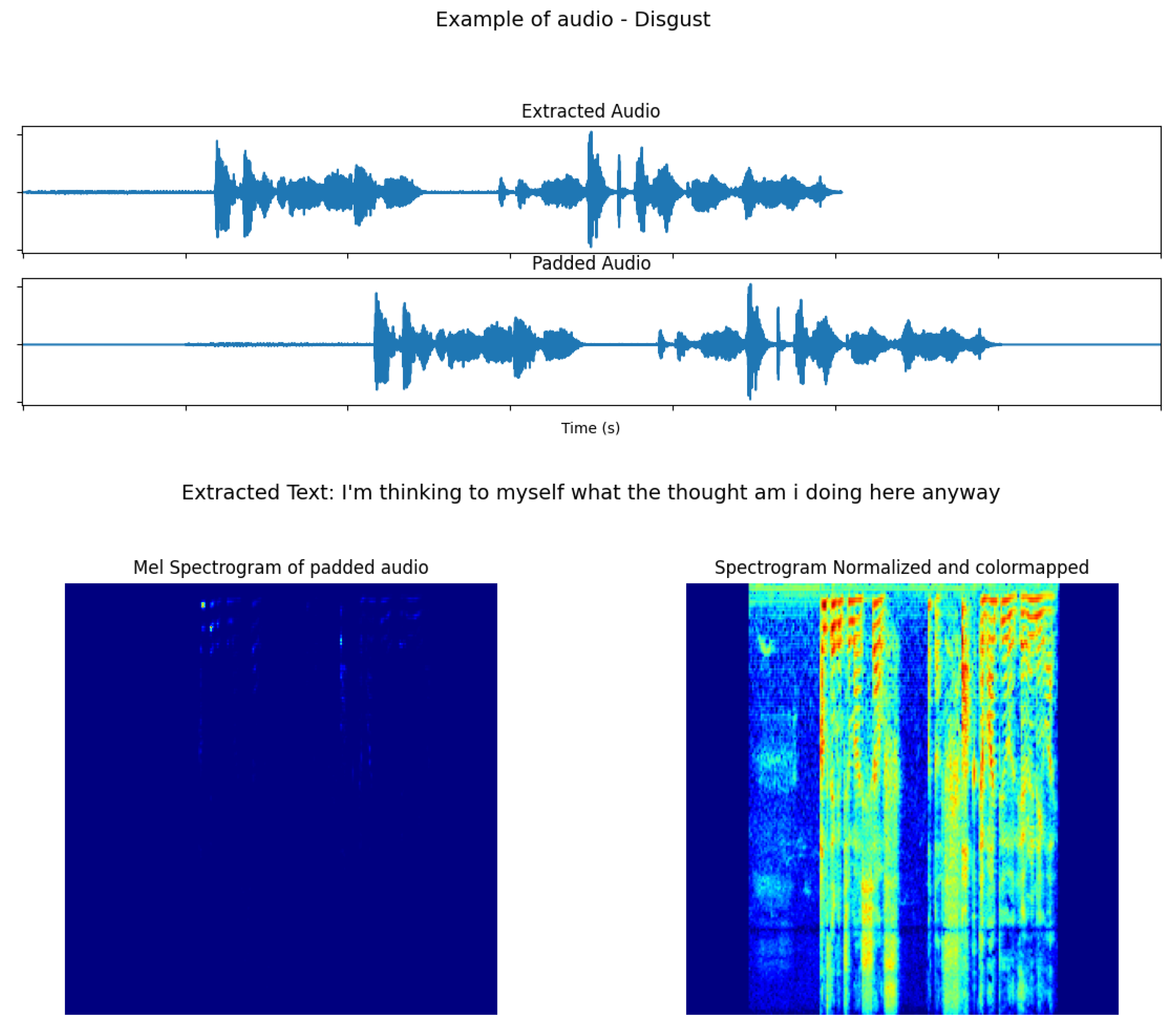

3.1.2. Audio Modality

3.1.3. Text Modality

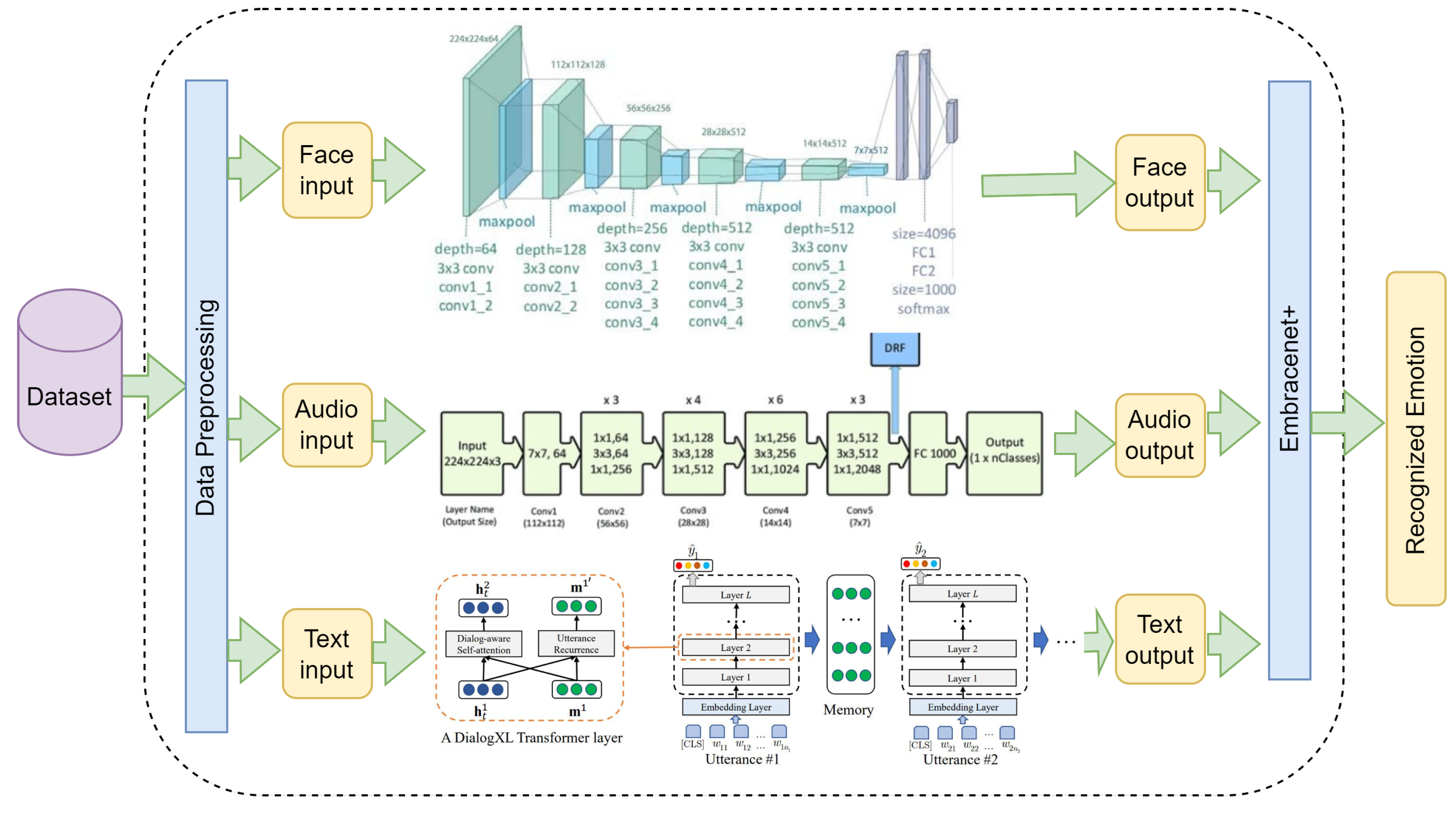

4. A Multimodal Framework for Emotion Recognition

- Face Modality processing. We used a VGG19 architecture [86] as our classifier. The model contains 16 convolutional layers with filters of size 3 × 3 which, together with its 3 fully connected layers, make up the 19 weight layers of its name. After the first 2 convolutional layers, with 64 channels each, the output is reduced by a 2 × 2 max pooling operation. The network continues by alternating pooling operations with groups of 2 layers of 128 channels, 4 layers of 256 channels, 4 layers of 512 channels and 4 layers of 512 channels. After a final max pooling operation, the output goes to an MLP network with 3 dense layers of sizes 4096, 4096 and 1000, followed by a final layer with a Softmax activation function.

- Audio Modality processing. We used a ResNet50 architecture [60] trained from scratch, replacing the architecture proposed by Venkataramanan and Rajamohan [87] that was used in our previous work. The expected input is an image representing the spectrogram of the input audio sample. After an initial 7 × 7 convolutional layer with 64 channels and a max pooling layer, the input is passed through a series of residual blocks. Each residual block is composed of three convolutional layers with filter sizes of 1 × 1, 3 × 3 and 1 × 1, and the input of the block is added to the block output through a shortcut connection, so that each block learns a residual with respect to its input. In ResNet50, these blocks are organized in four stages of 3, 4, 6 and 3 blocks, and the spatial resolution of the feature maps is reduced at the start of each stage after the first. Finally, the output is average pooled, creating a 2048-length feature vector. This vector is then passed to a dense layer and an output layer with a Softmax activation, whose number of neurons equals the number of emotions. This is shown in the audio segment of Figure 12.

- Text Modality processing. We used DialogXL [85], an XLNet-based model for Emotion Recognition in Conversation (ERC) implemented in PyTorch. It consists of an embedding layer, 12 Transformer layers and a feed-forward neural network. DialogXL has an enhanced memory that stores a longer historical context and a Dialog-Aware Self-Attention component that deals with the multi-party structure of conversations. The recurrence mechanism of XLNet was modified from segment level to utterance level in order to better model conversational data, and Dialog-Aware Self-Attention replaces the vanilla self-attention of XLNet to capture useful intra- and inter-speaker dependencies. Every utterance (sentence) made by a speaker is tokenized and passed through the embedding layer, which maps it to a sequence of vectors. This representation is then fed into the stack of Transformer layers, each of which outputs vectors that are fed into the next layer. Each layer of the stack has a Dialog-Aware Self-Attention component and an Utterance Recurrence component. At the end of the last layer, the hidden state of the classification token and the historical context are fed through a feed-forward neural network to produce the recognized emotion.

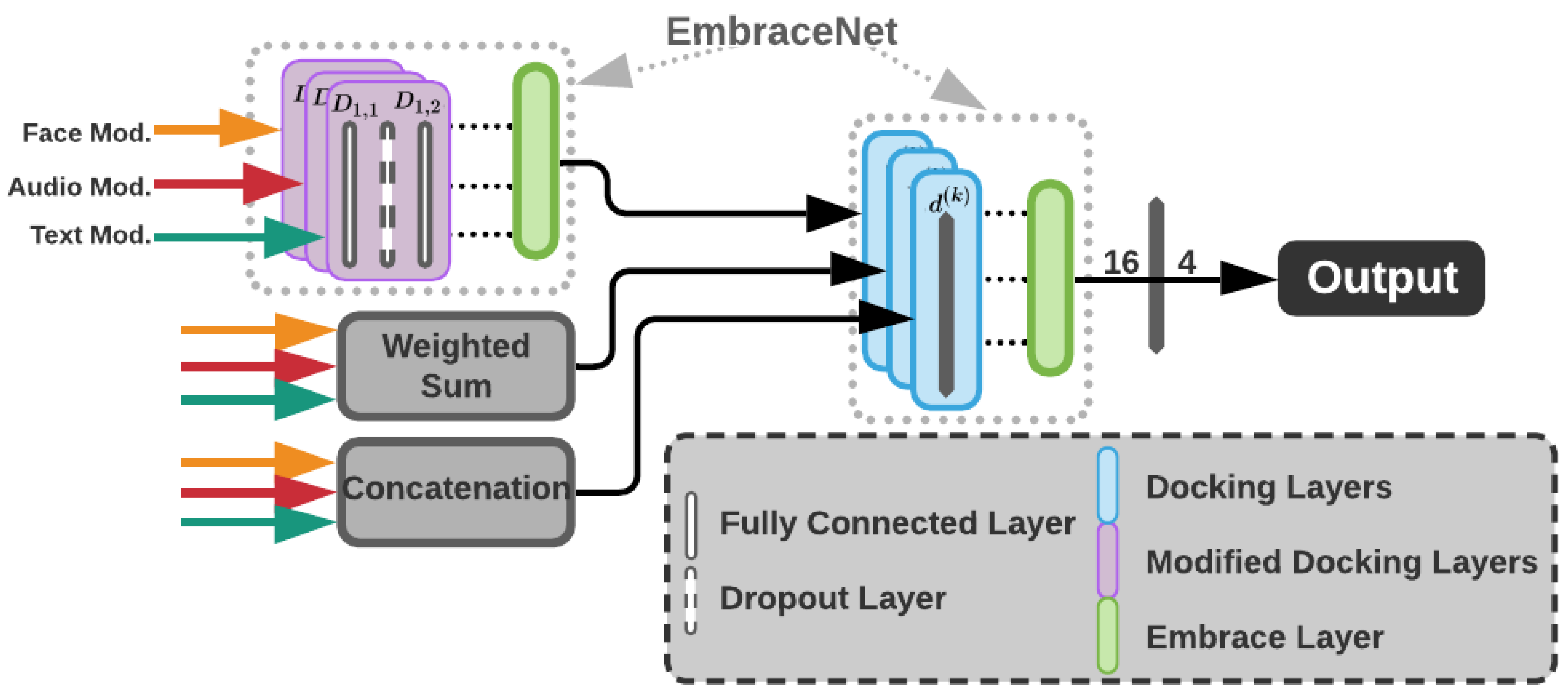

- Fusion method. Individual modalities were fused using Embracenet+, which is presented in [15] as an improvement of the Embracenet approach. The architecture involves three simple Embracenet models that work together to improve the learning of correlations between modalities as well as the final results. Compared with the original, each Embracenet model has an additional linear layer and a dropout layer, which regularizes the model slightly to improve learning. Figure 13 shows the Embracenet+ architecture. In it, each of the modified docking layers is composed of a linear layer of 32 neurons (D1,1), a dropout layer with a dropout probability of 0.5 and another linear layer of 16 neurons (D1,2). Additionally, two further fusion techniques are used: a weighted sum, whose output is a vector of n probabilities (n being the number of emotion categories), and a concatenation, whose output is a vector of n × m values, m being the number of modalities. Afterwards, another Embracenet receives three vectors of 16, n and n × m values, which act as its modalities. These vectors are handled by docking layers consisting of one linear layer of 16 neurons each, followed by an extra linear layer of n neurons that outputs the final prediction.
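
To make the VGG19 layer count concrete, the following Python sketch walks through the standard VGG19 configuration: the convolutional groups listed for the face classifier (2 + 2 + 4 + 4 + 4 layers, each 3 × 3, with 2 × 2 max pooling after every group) add up to 16 convolutional layers, and the three dense layers bring the total number of weight layers to 19. The 224 × 224 RGB input and the 1000-unit last dense layer are those of the original VGG19 and are assumptions here; the emotion output layer used in this work would change only the final term of the parameter count.

```python
from typing import List, Tuple, Union

# Standard VGG19 layout: integers are 3x3 convolutions (output channels),
# "M" is a 2x2 max pooling with stride 2.
VGG19_CFG: List[Union[int, str]] = [
    64, 64, "M",
    128, 128, "M",
    256, 256, 256, 256, "M",
    512, 512, 512, 512, "M",
    512, 512, 512, 512, "M",
]
DENSE_SIZES = [4096, 4096, 1000]  # fully connected layers of the original VGG19


def walk_vgg19(height: int = 224, width: int = 224,
               in_channels: int = 3) -> Tuple[int, int, int]:
    """Return (convolutional layers, dense layers, trainable parameters)."""
    convs, params, channels = 0, 0, in_channels
    for layer in VGG19_CFG:
        if layer == "M":
            height, width = height // 2, width // 2  # pooling halves each side
        else:
            params += 3 * 3 * channels * layer + layer  # weights plus biases
            channels = layer
            convs += 1
    features = channels * height * width  # flattened input to the MLP
    for size in DENSE_SIZES:
        params += features * size + size
        features = size
    return convs, len(DENSE_SIZES), params


convs, dense, params = walk_vgg19()
print(f"{convs} conv + {dense} dense = {convs + dense} weight layers, {params:,} parameters")
```

For this configuration the walk reports 16 convolutional and 3 dense layers and 143,667,240 trainable parameters, roughly 86% of which sit in the dense layers, since the first dense layer receives the flattened 7 × 7 × 512 feature map.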
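
The following sketch traces the standard ResNet50 layout of He et al. [60] (a 7 × 7 stem convolution and max pooling, then four stages of 3, 4, 6 and 3 bottleneck blocks built from 1 × 1, 3 × 3 and 1 × 1 convolutions) to show where the 50 layers and the 2048-length feature vector used for the audio modality come from. The square input size of 224 and the use of stride-2 convolutions for downsampling follow the common reference design and are assumptions; the spectrogram size used in this work may differ, and the extra dense layer placed on top of the pooled features is not counted.

```python
from typing import List, Tuple

BLOCKS_PER_STAGE = [3, 4, 6, 3]  # bottleneck blocks in each of the four stages
LAST_STAGE_WIDTH = 512  # channels of the 3x3 convolution in the last stage
EXPANSION = 4  # the final 1x1 convolution of a bottleneck expands channels 4x


def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output size of a convolution or pooling window along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


def walk_resnet50(size: int = 224) -> Tuple[int, int, List[int]]:
    """Return (weight layers, pooled feature length, map size after each stage)."""
    layers = 1  # initial 7x7 convolution, 64 channels, stride 2
    size = conv_out(size, kernel=7, stride=2, padding=3)
    size = conv_out(size, kernel=3, stride=2, padding=1)  # 3x3 max pooling, stride 2
    stage_sizes: List[int] = []
    for stage, blocks in enumerate(BLOCKS_PER_STAGE):
        if stage > 0:
            # The first block of stages 2-4 halves the resolution with a stride-2 conv.
            size = conv_out(size, kernel=3, stride=2, padding=1)
        layers += 3 * blocks  # 1x1, 3x3 and 1x1 convolutions per bottleneck block
        stage_sizes.append(size)
    features = LAST_STAGE_WIDTH * EXPANSION  # length after global average pooling
    layers += 1  # final fully connected layer of the reference design
    return layers, features, stage_sizes


print(walk_resnet50())
```

For a 224 × 224 input the walk gives 50 weight layers (1 + 3 × 16 + 1), a 2048-length feature vector and feature maps of 56, 28, 14 and 7 pixels per side after the four stages.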
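
To make the intra- and inter-speaker dependencies mentioned for DialogXL concrete, the sketch below builds two utterance-level attention masks from a sequence of speakers. The first lets each utterance attend to its own and earlier utterances by the same speaker; the second lets it attend to earlier utterances by other speakers. This is a simplified illustration assuming causal, utterance-level attention; it is not DialogXL's implementation, and the function name `speaker_masks` and the speaker names are hypothetical.

```python
from typing import List, Sequence, Tuple

Mask = List[List[bool]]


def speaker_masks(speakers: Sequence[str]) -> Tuple[Mask, Mask]:
    """Build (intra_speaker, inter_speaker) causal masks over utterances.

    mask[i][j] is True when utterance i may attend to utterance j.
    """
    n = len(speakers)
    intra = [[j <= i and speakers[j] == speakers[i] for j in range(n)]
             for i in range(n)]
    inter = [[j < i and speakers[j] != speakers[i] for j in range(n)]
             for i in range(n)]
    return intra, inter


# Hypothetical four-utterance, multi-party dialog.
dialog = ["Ross", "Rachel", "Ross", "Monica"]
intra, inter = speaker_masks(dialog)
for name, mask in (("intra", intra), ("inter", inter)):
    print(name)
    for row in mask:
        print(" ".join("1" if allowed else "." for allowed in row))
```

Splitting attention this way lets some heads focus on how a speaker's own state evolves and others on how the remaining participants influence it, which is the motivation behind replacing the vanilla self-attention in a multi-party setting.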
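
The weighted-sum and concatenation fusion steps of Embracenet+ operate on the n-class probability vectors produced by the m modalities: a weighted sum keeps length n, while concatenating the m vectors yields n × m values. The sketch below shows both operations and the sizes of the three vectors received by the final Embracenet (16, n and n × m). The modality outputs and the equal weights are hypothetical placeholders, and normalizing by the total weight (so that the result remains a probability distribution) is a choice made here, not a detail taken from Embracenet+.

```python
from typing import List, Sequence

Vector = List[float]


def weighted_sum(probs: Sequence[Vector], weights: Sequence[float]) -> Vector:
    """Fuse m probability vectors of length n into one vector of length n."""
    n = len(probs[0])
    total = sum(weights)
    return [sum(w * p[k] for w, p in zip(weights, probs)) / total for k in range(n)]


def concatenate(probs: Sequence[Vector]) -> Vector:
    """Stack m probability vectors of length n into one vector of length n * m."""
    return [value for p in probs for value in p]


# Hypothetical outputs of three modality classifiers over n = 3 emotions.
face = [0.7, 0.2, 0.1]
audio = [0.4, 0.4, 0.2]
text = [0.5, 0.1, 0.4]
modalities = [face, audio, text]

fused = weighted_sum(modalities, weights=[1.0, 1.0, 1.0])  # equal weights (assumed)
stacked = concatenate(modalities)
embracenet_output = [0.0] * 16  # placeholder for the 16-unit output of D1,2

# Sizes of the three inputs to the final Embracenet: 16, n and n * m.
print([len(embracenet_output), len(fused), len(stacked)])
print([round(v, 3) for v in fused])
```

The weighted sum discards which modality voted for what, whereas the concatenation preserves it at the cost of a longer vector; feeding both to the final Embracenet lets it use either view.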
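
For readers unfamiliar with the underlying Embracenet approach: in the original EmbraceNet design, each modality is first projected by its docking layer to a vector of a common size d, and an embracement step then builds the fused vector by choosing, for every index k, which modality supplies component k, drawing that choice from a multinomial distribution over modalities. The plain-Python sketch below illustrates that step with equal selection probabilities by default. It is a simplified illustration of the general technique, not the Embracenet+ code; `embrace` and the example vectors are hypothetical.

```python
import random
from typing import List, Optional, Sequence

Vector = List[float]


def embrace(docked: Sequence[Vector], probs: Optional[Sequence[float]] = None,
            rng: Optional[random.Random] = None) -> Vector:
    """Fuse m docked vectors of equal length d by per-index modality sampling.

    For each index k, one modality i is drawn with probability proportional
    to probs[i], and component k of the output is copied from its vector.
    """
    rng = rng or random.Random()
    m, d = len(docked), len(docked[0])
    if any(len(v) != d for v in docked):
        raise ValueError("all docked vectors must have the same length")
    weights = list(probs) if probs is not None else [1.0] * m  # equal by default
    choices = rng.choices(range(m), weights=weights, k=d)  # one draw per index
    return [docked[i][k] for k, i in enumerate(choices)]


# Hypothetical 4-dimensional docked features for face, audio and text.
face = [1.0, 1.0, 1.0, 1.0]
audio = [2.0, 2.0, 2.0, 2.0]
text = [3.0, 3.0, 3.0, 3.0]

print(embrace([face, audio, text], rng=random.Random(0)))
# A zero probability excludes a modality, e.g. when no transcript is available.
print(embrace([face, audio, text], probs=[0.5, 0.5, 0.0], rng=random.Random(0)))
```

Setting a modality's probability to zero is how this scheme can tolerate a missing input, which is relevant for datasets such as AFEW that provide no text modality.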

5. Dataset Evaluation

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Dzedzickis, A.; Kaklauskas, A.; Bucinskas, V. Human Emotion Recognition: Review of Sensors and Methods. Sensors 2020, 20, 592.

- Wang, Z.; Ho, S.B.; Cambria, E. A Review of Emotion Sensing: Categorization Models and Algorithms. Multimed. Tools Appl. 2020, 79, 35553–35582.

- Shaver, P.; Schwartz, J.; Kirson, D.; O’Connor, C. Emotion Knowledge: Further Exploration of a Prototype Approach. J. Pers. Soc. Psychol. 1987, 52, 1061–1086.

- Ekman, P. An Argument for Basic Emotions. Cogn. Emot. 1992, 6, 169–200.

- Stahelski, A.; Anderson, A.; Browitt, N.; Radeke, M. Facial Expressions and Emotion Labels Are Separate Initiators of Trait Inferences from the Face. Front. Psychol. 2021, 12, 749933.

- Schulz, A.; Thanh, T.D.; Paulheim, H.; Schweizer, I. A Fine-Grained Sentiment Analysis Approach for Detecting Crisis Related Microposts. In Proceedings of the 10th International ISCRAM Conference, Baden-Baden, Germany, 12–15 May 2013.

- Latinjak, A. The Underlying Structure of Emotions: A Tri-Dimensional Model of Core Affect and Emotion Concepts for Sports. Rev. Iberoam. Psicol. Ejercicio Deporte (Iberoam. J. Exerc. Sport Psychol.) 2012, 7, 71–87.

- Feng, K.; Chaspari, T. A Review of Generalizable Transfer Learning in Automatic Emotion Recognition. Front. Comput. Sci. 2020, 2, 9.

- Lhommet, M.; Marsella, S.C. Expressing Emotion through Posture and Gesture. In Oxford Handbook of Affective Computing; Calvo, R.A., D’Mello, S.K., Gratch, J., Kappas, A., Eds.; Oxford University Press: Oxford, UK, 2014; pp. 273–285.

- Pease, A.; Chandler, J. Body Language; Sheldon Press: Hachette, UK, 1997.

- Cowen, A.S.; Keltner, D. What the Face Displays: Mapping 28 Emotions Conveyed by Naturalistic Expression. Am. Psychol. 2020, 75, 349–364.

- Mittal, T.; Guhan, P.; Bhattacharya, U.; Chandra, R.; Bera, A.; Manocha, D. EmotiCon: Context-Aware Multimodal Emotion Recognition Using Frege’s Principle. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 14222–14231.

- Mittal, T.; Bhattacharya, U.; Chandra, R.; Bera, A.; Manocha, D. M3ER: Multiplicative Multimodal Emotion Recognition using Facial, Textual and Speech Cues. Proc. AAAI Conf. Artif. Intell. 2020, 34, 1359–1367.

- Subramanian, G.; Cholendiran, N.; Prathyusha, K.; Balasubramanain, N.; Aravinth, J. Multimodal Emotion Recognition Using Different Fusion Techniques. In Proceedings of the 2021 Seventh International Conference on Bio Signals, Images and Instrumentation (ICBSII), Chennai, India, 25–27 March 2021; pp. 1–6.

- Heredia, J.; Lopes-Silva, E.; Cardinale, Y.; Diaz-Amado, J.; Dongo, I.; Graterol, W.; Aguilera, A. Adaptive Multimodal Emotion Detection Architecture for Social Robots. IEEE Access 2022, 10, 20727–20744.

- Poria, S.; Chaturvedi, I.; Cambria, E.; Hussain, A. Convolutional MKL Based Multimodal Emotion Recognition and Sentiment Analysis. In Proceedings of the 2016 IEEE 16th International Conference on Data Mining (ICDM), Barcelona, Spain, 12–15 December 2016; pp. 439–448.

- Kratzwald, B.; Ilić, S.; Kraus, M.; Feuerriegel, S.; Prendinger, H. Deep Learning for Affective Computing: Text-Based Emotion Recognition in Decision Support. Decis. Support Syst. 2018, 115, 24–35.

- Soleymani, M.; Garcia, D.; Jou, B.; Schuller, B.; Chang, S.F.; Pantic, M. A Survey of Multimodal Sentiment Analysis. Image Vis. Comput. 2017, 65, 3–14.

- Ahmed, N.; Aghbari, Z.A.; Girija, S. A Systematic Survey on Multimodal Emotion Recognition using Learning Algorithms. Intell. Syst. Appl. 2023, 17, 200171.

- Xu, H.; Zhang, H.; Han, K.; Wang, Y.; Peng, Y.; Li, X. Learning Alignment for Multimodal Emotion Recognition from Speech. arXiv 2019, arXiv:1909.05645.

- Salama, E.S.; El-Khoribi, R.A.; Shoman, M.E.; Wahby Shalaby, M.A. A 3D-convolutional Neural Network Framework with Ensemble Learning Techniques for Multi-Modal Emotion Recognition. Egypt. Inform. J. 2021, 22, 167–176.

- Cimtay, Y.; Ekmekcioglu, E.; Caglar-Ozhan, S. Cross-Subject Multimodal Emotion Recognition Based on Hybrid Fusion. IEEE Access 2020, 8, 168865–168878.

- Tripathi, S.; Tripathi, S.; Beigi, H. Multi-Modal Emotion Recognition on IEMOCAP Dataset using Deep Learning. arXiv 2018, arXiv:1804.05788.

- Li, C.; Bao, Z.; Li, L.; Zhao, Z. Exploring Temporal Representations by Leveraging Attention-Based Bidirectional LSTM-RNNs for Multi-Modal Emotion Recognition. Inf. Process. Manag. 2020, 57, 102185.

- Liu, W.; Qiu, J.L.; Zheng, W.L.; Lu, B.L. Comparing Recognition Performance and Robustness of Multimodal Deep Learning Models for Multimodal Emotion Recognition. IEEE Trans. Cogn. Develop. Syst. 2021, 14, 715–729.

- Ranganathan, H.; Chakraborty, S.; Panchanathan, S. Multimodal Emotion Recognition Using Deep Learning Architectures. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; pp. 1–9.

- Abdullah, S.M.S.A.; Ameen, S.Y.A.; Sadeeq, M.A.; Zeebaree, S. Multimodal Emotion Recognition Using Deep Learning. J. Appl. Sci. Technol. Trends 2021, 2, 52–58.

- Tzirakis, P.; Trigeorgis, G.; Nicolaou, M.A.; Schuller, B.W.; Zafeiriou, S. End-to-End Multimodal Emotion Recognition Using Deep Neural Networks. IEEE J. Sel. Top. Signal Process. 2017, 11, 1301–1309.

- Alaba, S.Y.; Nabi, M.M.; Shah, C.; Prior, J.; Campbell, M.D.; Wallace, F.; Ball, J.E.; Moorhead, R. Class-Aware Fish Species Recognition Using Deep Learning for an Imbalanced Dataset. Sensors 2022, 22, 8268.

- Zhao, M.; Liu, Q.; Jha, A.; Deng, R.; Yao, T.; Mahadevan-Jansen, A.; Tyska, M.J.; Millis, B.A.; Huo, Y. VoxelEmbed: 3D Instance Segmentation and Tracking with Voxel Embedding based Deep Learning. In Machine Learning in Medical Imaging; Springer: Cham, Switzerland, 2021; pp. 437–446.

- Jin, B.; Cruz, L.; Gonçalves, N. Pseudo RGB-D Face Recognition. IEEE Sens. J. 2022, 22, 21780–21794.

- Yao, T.; Qu, C.; Liu, Q.; Deng, R.; Tian, Y.; Xu, J.; Jha, A.; Bao, S.; Zhao, M.; Fogo, A.B.; et al. Compound Figure Separation of Biomedical Images with Side Loss. In Proceedings of the Deep Generative Models and Data Augmentation, Labelling and Imperfections: First Workshop, DGM4MICCAI 2021 and First Workshop, DALI 2021, Held in Conjunction with MICCAI 2021, Strasbourg, France, 1 October 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 173–183.

- Jin, B.; Cruz, L.; Gonçalves, N. Deep Facial Diagnosis: Deep Transfer Learning from Face Recognition to Facial Diagnosis. IEEE Access 2020, 8, 123649–123661.

- Zheng, Q.; Zhao, P.; Li, Y.; Wang, H.; Yang, Y. Spectrum Interference-based Two-Level Data Augmentation Method in Deep Learning for Automatic Modulation Classification. Neural Comput. Appl. 2020, 33, 7723–7745.

- Garcia-Garcia, J.M.; Lozano, M.D.; Penichet, V.M.R.; Law, E.L.C. Building a Three-Level Multimodal Emotion Recognition Framework. Multimed. Tools Appl. 2023, 82, 239–269.

- Samadiani, N.; Huang, G.; Luo, W.; Shu, Y.; Wang, R.; Kocaturk, T. A Novel Video Emotion Recognition System in the Wild Using a Random Forest Classifier. In Data Science; Springer: Singapore, 2020; pp. 275–284.

- Samadiani, N.; Huang, G.; Luo, W.; Shu, Y.; Wang, R.; Kocaturk, T. A Multiple Feature Fusion Framework for Video Emotion Recognition in the Wild. Concurr. Computat. Pract. Exper. 2022, 34, e5764.

- Liu, T.; Wang, J.; Yang, B.; Wang, X. Facial Expression Recognition Method with Multi-Label Distribution Learning for Non-Verbal Behavior Understanding in the Classroom. Infrared Phys. Technol. 2021, 112, 103594.

- Li, X.; Li, T.; Li, S.; Tian, B.; Ju, J.; Liu, T.; Liu, H. Learning Fusion Feature Representation for Garbage Image Classification Model in Human–Robot Interaction. Infrared Phys. Technol. 2023, 128, 104457.

- Kollias, D.; Zafeiriou, S. Exploiting Multi-CNN Features in CNN-RNN based Dimensional Emotion Recognition on the OMG in-the-Wild Dataset. arXiv 2019, arXiv:1910.01417.

- Chen, J.; Wang, C.; Wang, K.; Yin, C.; Zhao, C.; Xu, T.; Zhang, X.; Huang, Z.; Liu, M.; Yang, T. HEU Emotion: A Large-Scale Database for Multimodal Emotion Recognition in the Wild. Neural Comput. Applic. 2021, 33, 8669–8685.

- Li, S.; Deng, W. Deep Facial Expression Recognition: A Survey. IEEE Trans. Affect. Comput. 2020, 13, 1195–1215.

- Riaz, M.N.; Shen, Y.; Sohail, M.; Guo, M. eXnet: An Efficient Approach for Emotion Recognition in the Wild. Sensors 2020, 20, 1087.

- Dhall, A.; Sharma, G.; Goecke, R.; Gedeon, T. EmotiW 2020: Driver Gaze, Group Emotion, Student Engagement and Physiological Signal based Challenges. In Proceedings of the ICMI ’20: 2020 International Conference on Multimodal Interaction, Virtual Event, The Netherlands, 25–29 October 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 784–789.

- Hu, P.; Cai, D.; Wang, S.; Yao, A.; Chen, Y. Learning Supervised Scoring Ensemble for Emotion Recognition in the Wild. In Proceedings of the ICMI’17: 19th ACM International Conference on Multimodal Interaction, Glasgow, UK, 13–17 November 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 553–560.

- Li, S.; Zheng, W.; Zong, Y.; Lu, C.; Tang, C.; Jiang, X.; Liu, J.; Xia, W. Bi-modality Fusion for Emotion Recognition in the Wild. In Proceedings of the ICMI’19: 2019 International Conference on Multimodal Interaction, Suzhou, China, 14–18 October 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 589–594.

- Salah, A.A.; Kaya, H.; Gürpınar, F. Video-Based Emotion Recognition in the Wild. In Multimodal Behavior Analysis in the Wild; Academic Press: Cambridge, MA, USA, 2019; pp. 369–386.

- Yu, Z.; Zhang, C. Image Based Static Facial Expression Recognition with Multiple Deep Network Learning. In Proceedings of the 2015 ACM on International Conference on Multimodal Interaction, Seattle, WA, USA, 9–13 November 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 435–442.

- Munir, A.; Hussain, A.; Khan, S.A.; Nadeem, M.; Arshid, S. Illumination Invariant Facial Expression Recognition using Selected Merged Binary Patterns for Real World Images. Optik 2018, 158, 1016–1025.

- Cai, J.; Meng, Z.; Khan, A.S.; Li, Z.; O’Reilly, J.; Tong, Y. Island Loss for Learning Discriminative Features in Facial Expression Recognition. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; pp. 302–309.

- Ruan, D.; Yan, Y.; Lai, S.; Chai, Z.; Shen, C.; Wang, H. Feature Decomposition and Reconstruction Learning for Effective Facial Expression Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 7660–7669.

- Poria, S.; Hazarika, D.; Majumder, N.; Naik, G.; Cambria, E.; Mihalcea, R. MELD: A Multimodal Multi-Party Dataset for Emotion Recognition in Conversations. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; Association for Computational Linguistics: Florence, Italy, 2019; pp. 527–536.

- Xie, B.; Sidulova, M.; Park, C.H. Robust Multimodal Emotion Recognition from Conversation with Transformer-Based Crossmodality Fusion. Sensors 2021, 21, 4913.

- Ho, N.H.; Yang, H.J.; Kim, S.H.; Lee, G. Multimodal Approach of Speech Emotion Recognition Using Multi-Level Multi-Head Fusion Attention-Based Recurrent Neural Network. IEEE Access 2020, 8, 61672–61686.

- Hu, J.; Liu, Y.; Zhao, J.; Jin, Q. MMGCN: Multimodal Fusion via Deep Graph Convolution Network for Emotion Recognition in Conversation. arXiv 2021, arXiv:2107.06779.

- Kollias, D.; Tzirakis, P.; Nicolaou, M.A.; Papaioannou, A.; Zhao, G.; Schuller, B.; Kotsia, I.; Zafeiriou, S. Deep Affect Prediction in-the-Wild: AffWild Database and Challenge, Deep Architectures and Beyond. arXiv 2018, arXiv:1804.10938.

- Kollias, D.; Zafeiriou, S. Aff-Wild2: Extending the AffWild Database for Affect Recognition. arXiv 2019, arXiv:1811.07770.

- Barros, P.; Sciutti, A. The FaceChannelS: Strike of the Sequences for the AffWild 2 Challenge. arXiv 2020, arXiv:2010.01557.

- Liu, Y.; Zhang, X.; Kauttonen, J.; Zhao, G. Uncertain Facial Expression Recognition via Multi-task Assisted Correction. arXiv 2022, arXiv:2212.07144.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Guo, Y.; Zhang, L.; Hu, Y.; He, X.; Gao, J. MS-Celeb-1M: A Dataset and Benchmark for Large-Scale Face Recognition. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part III 14. Springer: Cham, Switzerland, 2016; pp. 87–102.

- Yu, J.; Cai, Z.; He, P.; Xie, G.; Ling, Q. Multi-Model Ensemble Learning Method for Human Expression Recognition. arXiv 2022, arXiv:2203.14466.

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In International Conference on Machine Learning; PMLR: Long Beach, CA, USA, 2019; pp. 6105–6114.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Zhang, W.; Qiu, F.; Wang, S.; Zeng, H.; Zhang, Z.; An, R.; Ma, B.; Ding, Y. Transformer-based Multimodal Information Fusion for Facial Expression Analysis. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), New Orleans, LA, USA, 19–20 June 2022.

- Mollahosseini, A.; Hasani, B.; Mahoor, M.H. AffectNet: A Database for Facial Expression, Valence and Arousal Computing in the Wild. arXiv 2017, arXiv:1708.03985.

- Dhall, A.; Goecke, R.; Lucey, S.; Gedeon, T. Collecting Large, Richly Annotated Facial-Expression Databases from Movies. IEEE Multimed. 2012, 19, 34–41. [Google Scholar] [CrossRef]

- Dhall, A.; Ramana Murthy, O.V.; Goecke, R.; Joshi, J.; Gedeon, T. Video and Image Based Emotion Recognition Challenges in the Wild: EmotiW 2015. In Proceedings of the ICMI ’15: 2015 ACM on International Conference on Multimodal Interaction, Seattle, WA, USA, 9–13 November 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 423–426. [Google Scholar]

- Dhall, A.; Goecke, R.; Joshi, J.; Hoey, J.; Gedeon, T. EmotiW 2016: Video and Group-Level Emotion Recognition Challenges. In Proceedings of the ICMI ’16: 18th ACM International Conference on Multimodal Interaction, Tokyo, Japan, 12–16 November 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 427–432. [Google Scholar]

- Lucey, P.; Cohn, J.F.; Kanade, T.; Saragih, J.; Ambadar, Z.; Matthews, I. The Extended Cohn-Kanade Dataset (CK+): A Complete Dataset for Action Unit and Emotion-Specified Expression. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition—Workshops, San Francisco, CA, USA, 13–18 June 2010; pp. 94–101. [Google Scholar]

- Goodfellow, I.J.; Erhan, D.; Carrier, P.L.; Courville, A.; Mirza, M.; Hamner, B.; Cukierski, W.; Tang, Y.; Thaler, D.; Lee, D.H.; et al. Challenges in Representation Learning: A Report on Three Machine Learning Contests. arXiv 2013, arXiv:1307.0414. [Google Scholar]

- Dhall, A.; Goecke, R.; Lucey, S.; Gedeon, T. Static Facial Expression Analysis in Tough Conditions: Data, Evaluation Protocol and Benchmark. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain, 6–13 November 2011; pp. 2106–2112. [Google Scholar]

- Li, S.; Deng, W.; Du, J. Reliable Crowdsourcing and Deep Locality-Preserving Learning for Expression Recognition in the Wild. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2584–2593. [Google Scholar]

- Zadeh, A.; Liang, P.P.; Poria, S.; Cambria, E.; Morency, L.P. Multimodal Language Analysis in the Wild: CMU-MOSEI Dataset and Interpretable Dynamic Fusion Graph. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; Association for Computational Linguistics: Melbourne, Australia, 2018; pp. 2236–2246. [Google Scholar]

- Busso, C.; Bulut, M.; Lee, C.C.; Kazemzadeh, A.; Mower, E.; Kim, S.; Chang, J.N.; Lee, S.; Narayanan, S.S. IEMOCAP: Interactive Emotional Dyadic Motion Capture Database. Lang. Resour. Eval. 2008, 42, 335–359. [Google Scholar] [CrossRef]

- Dhall, A.; Goecke, R.; Lucey, S.; Gedeon, T. Acted Facial Expressions in the Wild Database. In Technical Report TR-CS-11-02; Australian National University: Canberra, Australia, 2011. [Google Scholar]

- Chen, S.Y.; Hsu, C.C.; Kuo, C.C.; Huang, T.-H.; Ku, L.W. EmotionLines: An Emotion Corpus of Multi-Party Conversations. arXiv 2018, arXiv:1802.08379. [Google Scholar]

- Kollias, D.; Nicolaou, M.A.; Kotsia, I.; Zhao, G.; Zafeiriou, S. Recognition of Affect in the Wild Using Deep Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1972–1979. [Google Scholar]

- Kollias, D. Abaw: Valence-Arousal Estimation, Expression Recognition, Action Unit Detection & Multi-Task Learning Challenges. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 2328–2336. [Google Scholar]

- Tomar, S. Converting Video Formats with FFmpeg. Linux J. 2006, 2006, 10.

- McFee, B.; Raffel, C.; Liang, D.; Ellis, D.P.; McVicar, M.; Battenberg, E.; Nieto, O. Librosa: Audio and Music Signal Analysis in Python. In Proceedings of the 14th Python in Science Conference, Austin, TX, USA, 6–12 July 2015; Volume 8, pp. 18–25.

- Lech, M.; Stolar, M.; Best, C.; Bolia, R. Real-Time Speech Emotion Recognition Using a Pre-trained Image Classification Network: Effects of Bandwidth Reduction and Companding. Front. Comput. Sci. 2020, 2, 14.

- Ravanelli, M.; Parcollet, T.; Plantinga, P.; Rouhe, A.; Cornell, S.; Lugosch, L.; Subakan, C.; Dawalatabad, N.; Heba, A.; Zhong, J.; et al. SpeechBrain: A General-Purpose Speech Toolkit. arXiv 2021, arXiv:2106.04624.

- Shen, W.; Chen, J.; Quan, X.; Xie, Z. DialogXL: All-in-One XLNet for Multi-Party Conversation Emotion Recognition. arXiv 2020, arXiv:2012.08695.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations (ICLR 2015), San Diego, CA, USA, 7–9 May 2015.

- Venkataramanan, K.; Rajamohan, H.R. Emotion Recognition from Speech. arXiv 2019, arXiv:1912.10458.

| Author | Method | Dataset/Database Name | Details | Accuracy |
|---|---|---|---|---|
| Riaz et al. [43] | Convolutional Neural Network (eXnet) | FER-2013, CK+, RAF-DB | 12 Convolution, Batch-normalization, ReLU + 2 fully connected layers | 71.67%, 95.63%, 84% |
| Chen et al. [41] | Chen’s method | HEU-part1, HEU-part2, CK+, AFEW 7.0 | CNN + GRU + 3D ResNeXt + OpenSMILE + MMA | 49.22% (HEU-part1), 55.04% (HEU-part2) |
| Samadiani et al. [36] | Random Forest (RF) | AFEW 4.0 | Generalized Procrustes analysis + RF | 39.71% |
| Hu et al. [45] | Deep CNNs with top-layer supervision (SSE) | AFEW 7.0 | Fusion of 1 SSE-ResNet + 1 SSE-DenseNet + 1 SSE-HoloNet + 1 hand-crafted model + 1 audio model | 60.34% |
| Li et al. [46] | Bimodality fusion method | AFEW 7.0, AffectNet | VGG-Face, BLSTM, ResNet-18, DenseNet-121, VGG-AffectNet | 62.8% |
| Salah et al. [47] | Salah’s method | AFEW 5.0, AFEW 7.0, FER-2013 | VGG-Face + Kernel Extreme Learning Machine + Partial Least Squares regression | 54.55% (AFEW 5.0), 52.11% (AFEW 7.0) |
| Yu and Zhang [48] | CNN, ReLU, log-likelihood loss, hinge loss | FER-2013, SFEW 2.0 | 5 convolutional layers, 3 stochastic pooling layers and 3 fully connected layers; JDA, DCNN and MoT detectors | 52.29% (validation), 58.06% (test) |
| Munir et al. [49] | SMO, KNN, simple logistic, MLP | SFEW | Fast Fourier transform, contrast-limited adaptive histogram equalisation and merged binary pattern code | 96.2% (holistic based), 65.7% (division based) |
| Cai et al. [50] | CNN + IL | CK+, MMI, Oulu-CASIA, SFEW | 3 convolutional layers, fully connected layers with an IL and the Softmax loss at the decision layer | SFEW results: 51.83% (validation), 56.99% (test) |
| Ruan et al. [51] | ResNet-18, fully connected layers | CK+, MMI, Oulu-CASIA, RAF-DB, SFEW | Ensemble of networks (FDN, FRN) | 89.47% (RAF-DB), 62.16% (SFEW) |
| Xie et al. [53] | Transformer-based cross-modality fusion with the Embracenet architecture | MELD | GPT, WaveRNN, FaceNet + GRU, transformer-based fusion mechanism with Embracenet | 64% |
| Ho et al. [54] | Multi-Level Multi-Head Fusion Attention mechanism and recurrent neural network (RNN) | IEMOCAP, MELD, CMU-MOSEI | MFCC, BERT, multi-head attention technique | MELD results: 63.26% (accuracy), 60.59% (F1-score) |
| Hu et al. [55] | Multimodal fused graph convolutional network (MMGCN) | IEMOCAP, MELD | Modality encoder (fully connected networks + LSTM), multimodal graph convolutional network, emotion classifier (fully connected network) | 66.22% (IEMOCAP), 58.65% (MELD) |
| Barros and Sciutti [58] | FaceChannelS | AffWild2 | VGG16 base with fewer parameters and LSTM for temporal information | 34% (per frame), 41% (per sequence) |
| Liu et al. [59] | Multi-Task Assisted Correction (MTAC) | AffWild2, RAF-DB, AffectNet | ResNet-18, DenseNet | 62.78% (ResNet-18), 63.51% (DenseNet) |
| Yu et al. [63] | Ensemble of models and multi-fold training | AffWild2 | ResNet50, EfficientNet, InceptionNet | F1-score: ResNet50 0.263, EfficientNet 0.296, InceptionNet 0.27 |
| Zhang et al. [66] | Unified transformer-based multimodal framework | AffWild2 | 3 GRU + MLP | F1-score 39.4% (official AffWild2 fold) |
| This work | Adaptive multimodal framework | AFEW, SFEW, MELD, AffWild2 | VGG19, ResNet50, DialogXL, Embracenet+ | 18.87% (AFEW), 21.81% (SFEW), 45.63% (MELD), 58.94% (AffWild2) |

| Acronym | Dataset/Database Name | Environment | Modality | Samples | Emotions |
|---|---|---|---|---|---|
| AffectNet [67] | Facial Affect from the InterNet | Wild | Facial | 456,000 images | 80,276 Neutral, 146,198 Happy, 29,487 Sad, 16,288 Surprise, 8191 Fear, 5264 Disgust, 28,130 Anger, 5135 Contempt, 35,322 None, 13,163 Uncertain, 88,895 Non-Face |
| AFEW 4.0 [68] | Acted Facial Expressions in the Wild | Wild | Facial, audio and posture | 1426 video clips | 194 Anger, 123 Disgust, 156 Fear, 165 Sadness, 387 Happiness, 257 Neutral, 144 Surprise |
| AFEW 5.0 [69] | Acted Facial Expressions in the Wild | Wild | Facial, audio and posture | 1645 video clips | Angry, Disgust, Fear, Sadness, Happiness, Neutral, Surprise |
| AFEW 7.0 [70] | Acted Facial Expressions in the Wild | Wild | Facial, audio and posture | 1809 video clips | 295 Angry, 154 Disgust, 197 Fear, 258 Sadness, 357 Happiness, 400 Neutral, 148 Surprise |
| AffWild2 [57] | AffWild2 database | Wild | Facial | 558 videos with M frames | 22,699 Anger, 16,067 Disgust, 17,488 Fear, 129,974 Happiness, 259,456 Neutral, 272,310 Other, 103,908 Sadness, 43,947 Surprise |
| CK+ [71] | Extended Cohn-Kanade Dataset (CK+) | Lab | Facial | 327 labeled facial videos | 45 Angry, 18 Contempt, 59 Disgust, 25 Fear, 69 Happy, 28 Sadness, 83 Surprise |
| FER-2013 [72] | Facial Expression Recognition 2013 (FER-2013) | Wild | Facial | 35,887 images | 4953 Anger, 547 Disgust, 5121 Fear, 8989 Happiness, 6077 Sadness, 4002 Surprise, 6198 Neutral |
| HEU-part1 [41] | Multimodal emotion | Wild | Face and posture | 16,569 videos | 1631 Anger, 691 Bored, 1662 Confused, 1097 Disappointed, 881 Disgust, 1268 Fear, 4313 Happy, 2568 Neutral, 1529 Sad, 929 Surprise |
| HEU-part2 [41] | Multimodal emotion | Wild | Face, speech and posture | 2435 videos | 389 Anger, 50 Bored, 131 Confused, 108 Disappointed, 162 Disgust, 221 Fear, 460 Happy, 350 Neutral, 364 Sad, 243 Surprise |
| SFEW 2.0 [73] | Static Facial Expressions in the Wild | Wild | Facial | 1322 samples | 255 anger, 75 disgust, 124 fear, 228 neutral, 256 happiness, 234 sadness, 150 surprise |
| RAF-DB [74] | Real-world Affective Faces Database (RAF-DB) | Wild | Facial | 30,000 facial images | 1619 Surprised, 355 Fearful, 877 Disgusted, 5957 Happy, 2460 Sad, 867 Angry, 560 Fearfully surprised, 148 Disgustedly surprised, 697 Happily surprised, 86 Sadly surprised, 176 Angrily surprised, 129 Sadly fearful, 8 Fearfully disgusted, 266 Happily disgusted, 738 Sadly disgusted, 841 Angrily disgusted, 150 Fearfully angry, 163 Sadly angry |
| MELD [52] | Multimodal EmotionLines Dataset | Wild | Audio, visual and textual | 13,158 videos | 1607 anger, 361 disgust, 1002 sadness, 2308 joy, 6436 neutral, 1636 surprise, 358 fear |
| CMU-MOSEI [75] | CMU Multimodal Opinion Sentiment and Emotion Intensity | Wild | Language, vision and audio | 23,453 annotated sentences from more than 1000 online speakers and 250 different topics | 1438 happy, 502 sad, 384 angry, 55 fear, 150 disgust, 78 surprise |
| IEMOCAP [76] | Interactive Emotional Dyadic Motion Capture Database | Lab | Audio, video and facial | 10,038 samples | 1229 anger, 1182 sadness, 495 happiness, 575 neutral, 2505 excited, 24 surprise, 135 fear, 4 disgust, 3830 frustration, 59 other |

| Emotion | Train | Validation | Total |
|---|---|---|---|
| Angry | 178 | 77 | 255 |
| Disgust | 52 | 23 | 75 |
| Fear | 78 | 46 | 124 |
| Happy | 184 | 72 | 256 |
| Neutral | 144 | 84 | 228 |
| Sad | 161 | 73 | 234 |
| Surprise | 94 | 56 | 150 |
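The per-class totals in the split table above add up to the 1322 samples listed for SFEW 2.0 in the dataset table, and the split sizes can be checked with a few lines of arithmetic. The following is a minimal sketch; `SPLITS`, `split_summary` and `per_class_val_share` are hypothetical names introduced only for illustration, and the counts are copied from the table.

```python
# Hypothetical helper names; (train, validation) counts copied from the table above.
SPLITS = {
    "Angry": (178, 77),
    "Disgust": (52, 23),
    "Fear": (78, 46),
    "Happy": (184, 72),
    "Neutral": (144, 84),
    "Sad": (161, 73),
    "Surprise": (94, 56),
}


def split_summary(splits):
    """Return train total, validation total, grand total and validation share."""
    train_total = sum(train for train, _ in splits.values())
    val_total = sum(val for _, val in splits.values())
    grand_total = train_total + val_total
    return train_total, val_total, grand_total, val_total / grand_total


def per_class_val_share(splits):
    """Fraction of each emotion's samples that falls in the validation split."""
    return {emotion: val / (train + val) for emotion, (train, val) in splits.items()}


if __name__ == "__main__":
    train, val, total, share = split_summary(SPLITS)
    print(train, val, total, f"{share:.3f}")  # 891 431 1322 0.326
    for emotion, s in per_class_val_share(SPLITS).items():
        print(f"{emotion:<8} {s:.2f}")
```

The 431 validation samples match the total support of the SFEW face results table. Per class, the validation share ranges from about 0.28 (Happy) to about 0.37 (Fear, Neutral, Surprise), so the split is not perfectly stratified.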

| Emotion | Train | Validation | Total |
|---|---|---|---|
| Anger | 16,573 | 6126 | 22,699 |
| Disgust | 10,771 | 5296 | 16,067 |
| Fear | 9080 | 8408 | 17,488 |
| Happiness | 95,463 | 34,511 | 129,974 |
| Neutral | 177,198 | 82,258 | 259,456 |
| Other | 165,866 | 106,444 | 272,310 |
| Sadness | 78,751 | 25,157 | 103,908 |
| Surprise | 31,615 | 12,332 | 43,947 |
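The AffWild2 split table above is strongly imbalanced, and its train and validation columns together account for the 865,849 images listed for AffWild2 in the dataset summary table. As a minimal sketch (the names `COUNTS`, `class_shares` and `imbalance_ratio` are hypothetical, and the counts are copied from the table), the class proportions and the ratio between the largest and smallest class can be computed per split:

```python
# Hypothetical helper names; (train, validation) counts copied from the table above.
COUNTS = {
    "Anger": (16_573, 6_126),
    "Disgust": (10_771, 5_296),
    "Fear": (9_080, 8_408),
    "Happiness": (95_463, 34_511),
    "Neutral": (177_198, 82_258),
    "Other": (165_866, 106_444),
    "Sadness": (78_751, 25_157),
    "Surprise": (31_615, 12_332),
}

TRAIN, VAL = 0, 1  # column indices into each (train, validation) tuple


def class_shares(counts, split):
    """Proportion of the chosen split that belongs to each class."""
    total = sum(pair[split] for pair in counts.values())
    return {label: pair[split] / total for label, pair in counts.items()}


def imbalance_ratio(counts, split):
    """Ratio between the largest and the smallest class in the chosen split."""
    sizes = [pair[split] for pair in counts.values()]
    return max(sizes) / min(sizes)


if __name__ == "__main__":
    for name, split in (("train", TRAIN), ("validation", VAL)):
        print(name, f"ratio={imbalance_ratio(COUNTS, split):.1f}")
        shares = class_shares(COUNTS, split)
        for label, share in sorted(shares.items(), key=lambda kv: -kv[1]):
            print(f"  {label:<10} {share:.3f}")
```

With these counts the largest class is roughly 19.5 times the smallest in the training split (Neutral vs. Fear) and roughly 20.1 times in the validation split (Other vs. Disgust).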

| Dataset | Samples | Modalities | # Emotions | Size |
|---|---|---|---|---|
| AFEW | 1154 faces, 1154 audios, 747 texts | Face, Audio, Text | 7 | 2.65 GB |
| SFEW | 1007 faces | Face | 7 | 995 MB |
| MELD | 7487 audios, 7487 texts | Face, Audio, Text | 7 | 10.1 GB |
| AffWild2 | 865,849 images extracted from 548 videos | Face | 8 | 17.5 GB |

| Emotion | Face Acc. | Face F1-Score | Face Support | Audio Acc. | Audio F1-Score | Audio Support | Text Acc. | Text F1-Score | Text Support |
|---|---|---|---|---|---|---|---|---|---|
| Angry |  |  | 3216 |  |  | 64 |  |  | 64 |
| Disgust |  |  | 2404 |  |  | 40 |  |  | 40 |
| Fear |  |  | 1805 |  |  | 46 |  |  | 46 |
| Happy |  |  | 3389 |  |  | 63 |  |  | 63 |
| Neutral |  |  | 3614 |  |  | 63 |  |  | 63 |
| Sad |  |  | 3287 |  |  | 61 |  |  | 61 |
| Surprise |  |  | 2130 |  |  | 46 |  |  | 46 |
| Weighted Average |  |  | 19845 |  |  | 383 |  |  | 383 |
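The per-modality results tables report an F1-Score and a Support value per emotion, followed by a Weighted Average row. The paper does not state which tool produced these rows; a common convention (for example the "weighted avg" line of scikit-learn's `classification_report`) weights each class's score by its support, i.e. its number of true samples. The following is a minimal pure-Python sketch of that convention, assuming single-label predictions; `per_class_f1` and `weighted_f1` are hypothetical names, and the toy labels are made up rather than taken from the paper.

```python
from collections import Counter


def per_class_f1(y_true, y_pred):
    """Map each label to (F1, support) using one-vs-rest counts."""
    labels = sorted(set(y_true) | set(y_pred))
    support = Counter(y_true)  # labels never seen in y_true get support 0
    pairs = list(zip(y_true, y_pred))
    scores = {}
    for label in labels:
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        scores[label] = (f1, support[label])
    return scores


def weighted_f1(y_true, y_pred):
    """Support-weighted mean of the per-class F1 scores."""
    scores = per_class_f1(y_true, y_pred)
    total = sum(support for _, support in scores.values())
    return sum(f1 * support for f1, support in scores.values()) / total


if __name__ == "__main__":
    # Toy labels for illustration only; these are not results from the paper.
    y_true = ["angry", "angry", "happy", "sad"]
    y_pred = ["angry", "happy", "happy", "sad"]
    print(per_class_f1(y_true, y_pred))
    print(f"{weighted_f1(y_true, y_pred):.2f}")  # 0.75
```

Because the weights are supports, frequent classes (for instance the large face-frame supports above) dominate the weighted average, which is why it can diverge noticeably from an unweighted macro average on imbalanced data.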

| Emotion Category | Face Accuracy | Face F1-Score | Face Support |
|---|---|---|---|
| Angry |  |  | 77 |
| Disgust |  |  | 23 |
| Fear |  |  | 46 |
| Happy |  |  | 72 |
| Neutral |  |  | 84 |
| Sad |  |  | 73 |
| Surprise |  |  | 56 |
| Weighted Average |  |  | 431 |

| Emotion | Face Acc. | Face F1-Score | Face Support | Audio Acc. | Audio F1-Score | Audio Support | Text Acc. | Text F1-Score | Text Support |
|---|---|---|---|---|---|---|---|---|---|
| Neutral |  |  | 229 |  |  | 469 |  |  | 469 |
| Anger |  |  | 35 |  |  | 153 |  |  | 153 |
| Disgust |  |  | 33 |  |  | 22 |  |  | 22 |
| Fear |  |  | 11 |  |  | 40 |  |  | 40 |
| Happiness |  |  | 73 |  |  | 163 |  |  | 163 |
| Sadness |  |  | 25 |  |  | 111 |  |  | 111 |
| Surprise |  |  | 73 |  |  | 150 |  |  | 150 |
| Weighted average |  |  | 479 |  |  | 1108 |  |  | 1108 |

| Emotion | Face Acc. | Face F1-Score | Face Support | Audio Acc. | Audio F1-Score | Audio Support | Text Acc. | Text F1-Score | Text Support |
|---|---|---|---|---|---|---|---|---|---|
| Neutral |  |  |  |  |  | 897 |  |  | 897 |
| Anger |  |  | 6126 |  |  | 29 |  |  | 29 |
| Disgust |  |  | 5296 |  |  | 28 |  |  | 28 |
| Fear |  |  | 8408 |  |  | 40 |  |  | 40 |
| Happiness |  |  |  |  |  | 301 |  |  | 301 |
| Sadness |  |  |  |  |  | 47 |  |  | 47 |
| Surprise |  |  |  |  |  | 126 |  |  | 126 |
| Weighted Average |  |  |  |  |  | 1468 |  |  | 1468 |

| Face | Audio | Text | AFEW: Li et al. [46] | AFEW: Ours | MELD: MMGCN [55] | MELD: Ours | AffWild2: Fusion Transformer [66] | AffWild2: Ours |
|---|---|---|---|---|---|---|---|---|
| x |  |  | 53.91% | 19.58% | 33.27% | 45.63% | - | 62.01% |
|  | x |  | 25.59% | 16.17% | 42.63% | 45.63% | - | 40.52% |
|  |  | x | - | 14.82% | 57.72% | 46.60% | - | 61.05% |
| x | x |  | 54.30% | 18.06% | - | 45.63% | 32.60% | 48.91% |
| x |  | x | - | 18.87% | 57.92% | 45.63% | 36.30% | 60.78% |
|  | x | x | - | 15.90% | 58.02% | 20.39% | 51.20% | 52.52% |
| x | x | x | - | 18.87% | 58.65% | 45.63% | 39.40% | 58.94% |
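The seven rows of the modality ablation table correspond to the 2^3 - 1 = 7 non-empty combinations of Face, Audio and Text. The sketch below enumerates those combinations and pairs each one with a simple score-averaging late fusion. This averaging step is a hypothetical baseline chosen for illustration only; it is not the Embracenet+ fusion used in this work, and `modality_subsets`, `average_fusion` and the probability values are assumptions.

```python
from itertools import combinations

MODALITIES = ("Face", "Audio", "Text")


def modality_subsets(modalities=MODALITIES):
    """Non-empty subsets ordered as singles, then pairs, then all three."""
    return [
        combo
        for size in range(1, len(modalities) + 1)
        for combo in combinations(modalities, size)
    ]


def average_fusion(probs, subset):
    """Hypothetical late-fusion baseline (NOT Embracenet+): average the
    per-modality class probabilities and return (argmax index, fused vector)."""
    n_classes = len(probs[subset[0]])
    fused = [sum(probs[m][i] for m in subset) / len(subset) for i in range(n_classes)]
    return max(range(n_classes), key=lambda i: fused[i]), fused


if __name__ == "__main__":
    # Made-up probabilities over three classes, for illustration only.
    probs = {
        "Face": [0.6, 0.3, 0.1],
        "Audio": [0.2, 0.7, 0.1],
        "Text": [0.1, 0.2, 0.7],
    }
    for subset in modality_subsets():
        label, _ = average_fusion(probs, subset)
        print(" + ".join(subset), "->", label)
```

Averaging treats every modality as equally reliable; a learned fusion such as the one evaluated in the table can instead down-weight a weak modality, which is one reason combined results do not always exceed the best single modality.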

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Aguilera, A.; Mellado, D.; Rojas, F. An Assessment of In-the-Wild Datasets for Multimodal Emotion Recognition. Sensors 2023, 23, 5184. https://doi.org/10.3390/s23115184
