Generating Defective Epoxy Drop Images for Die Attachment in Integrated Circuit Manufacturing via Enhanced Loss Function CycleGAN

Abstract

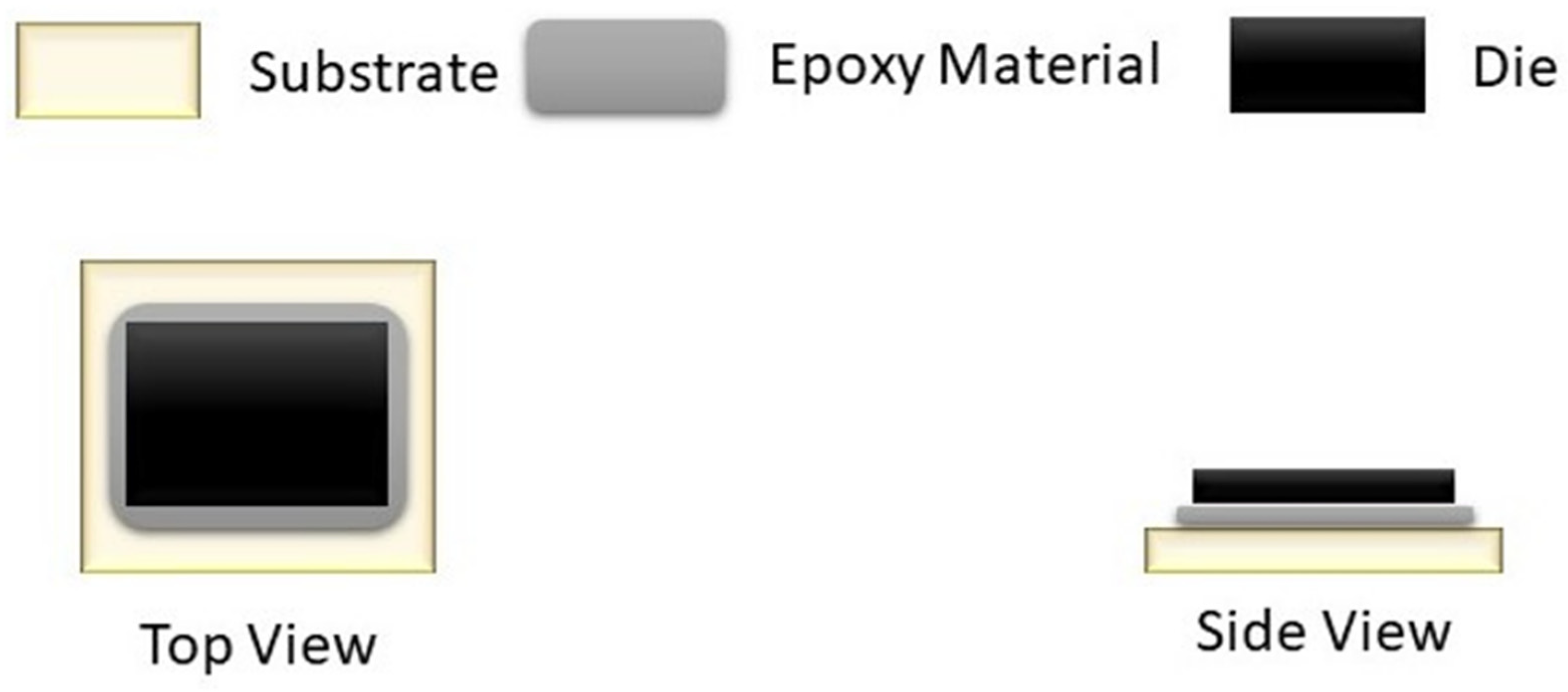

1. Introduction

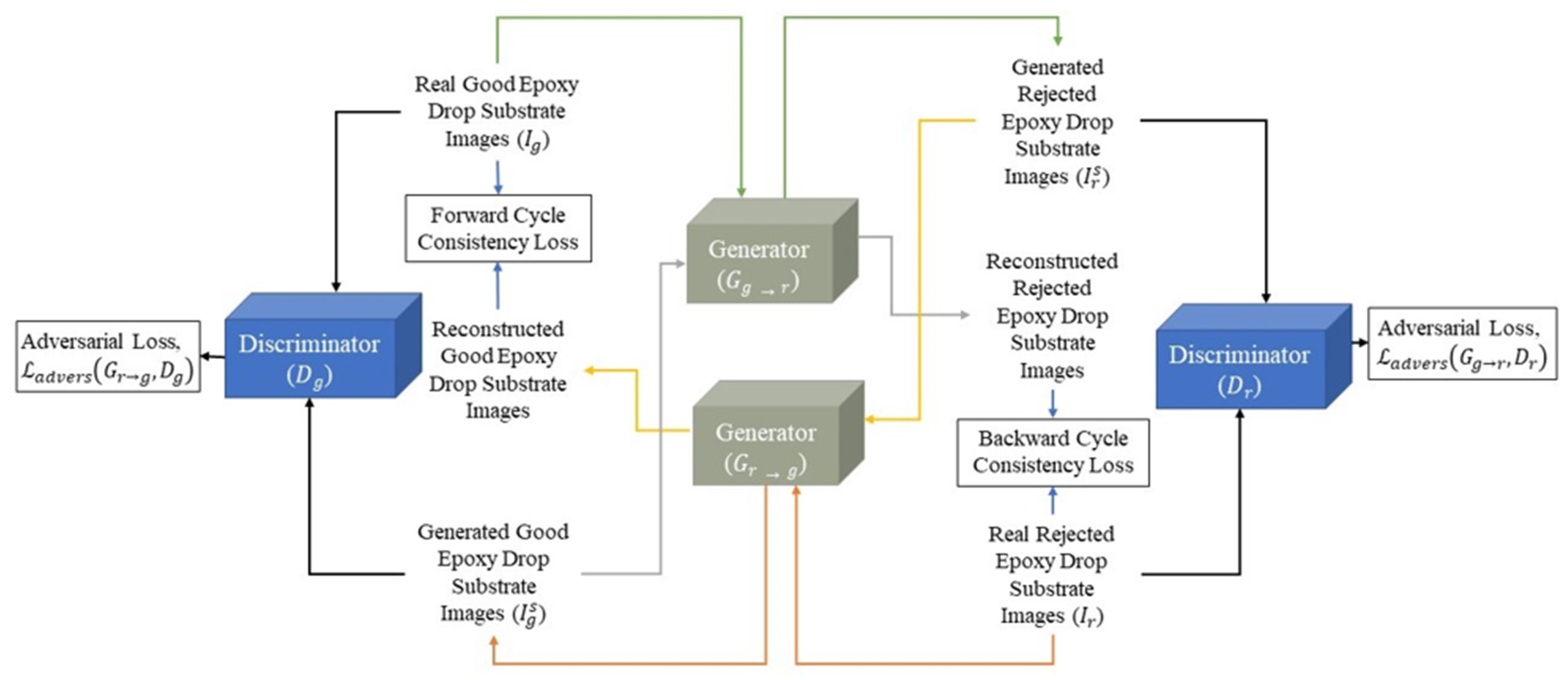

2. Previous Works on Data Augmentation Using a GAN

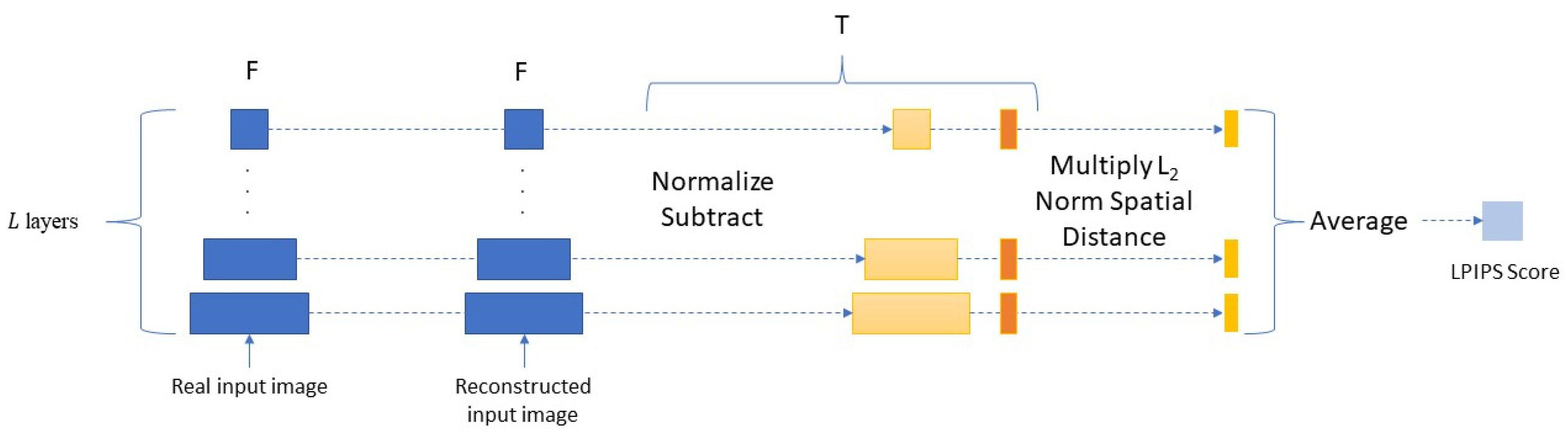

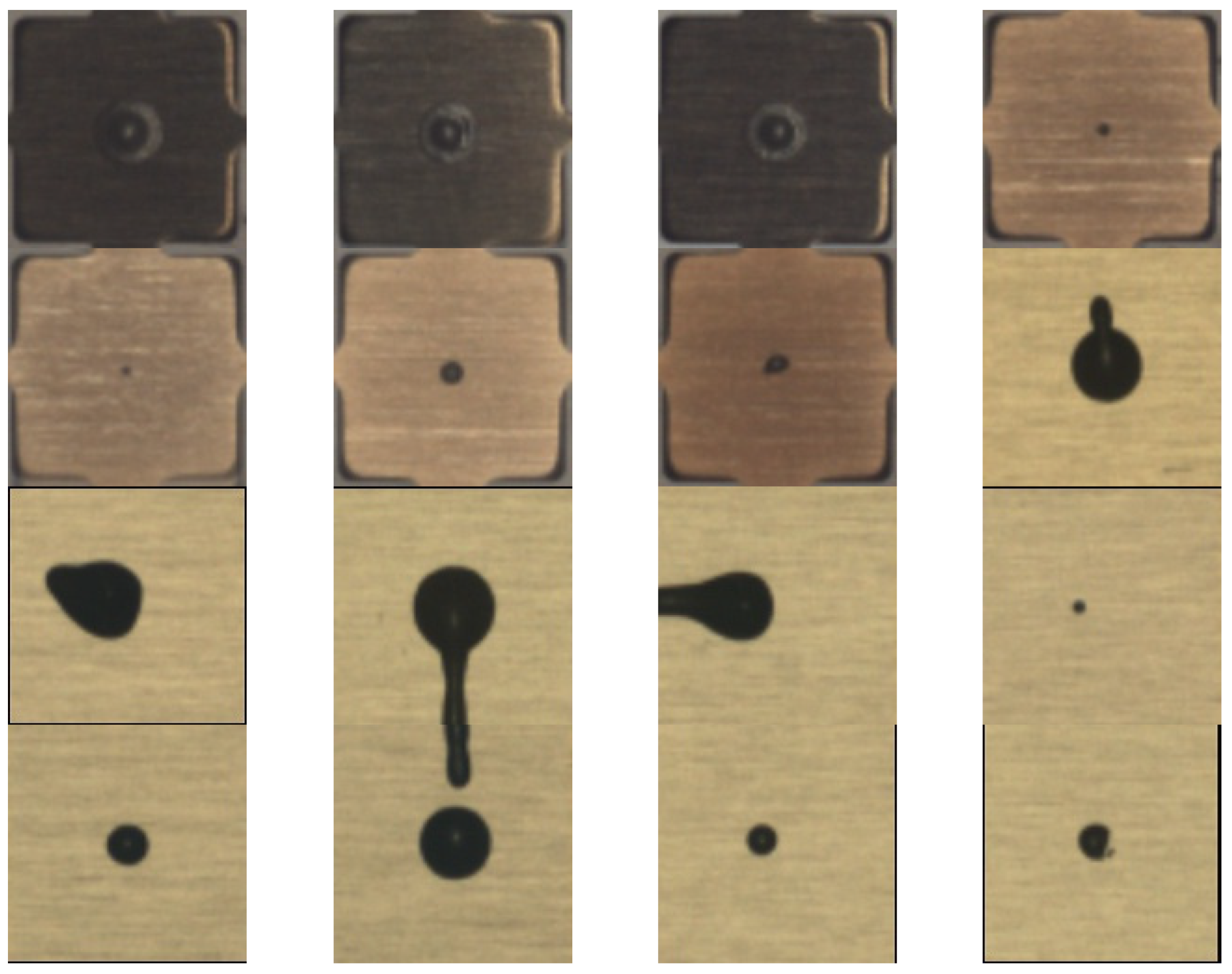

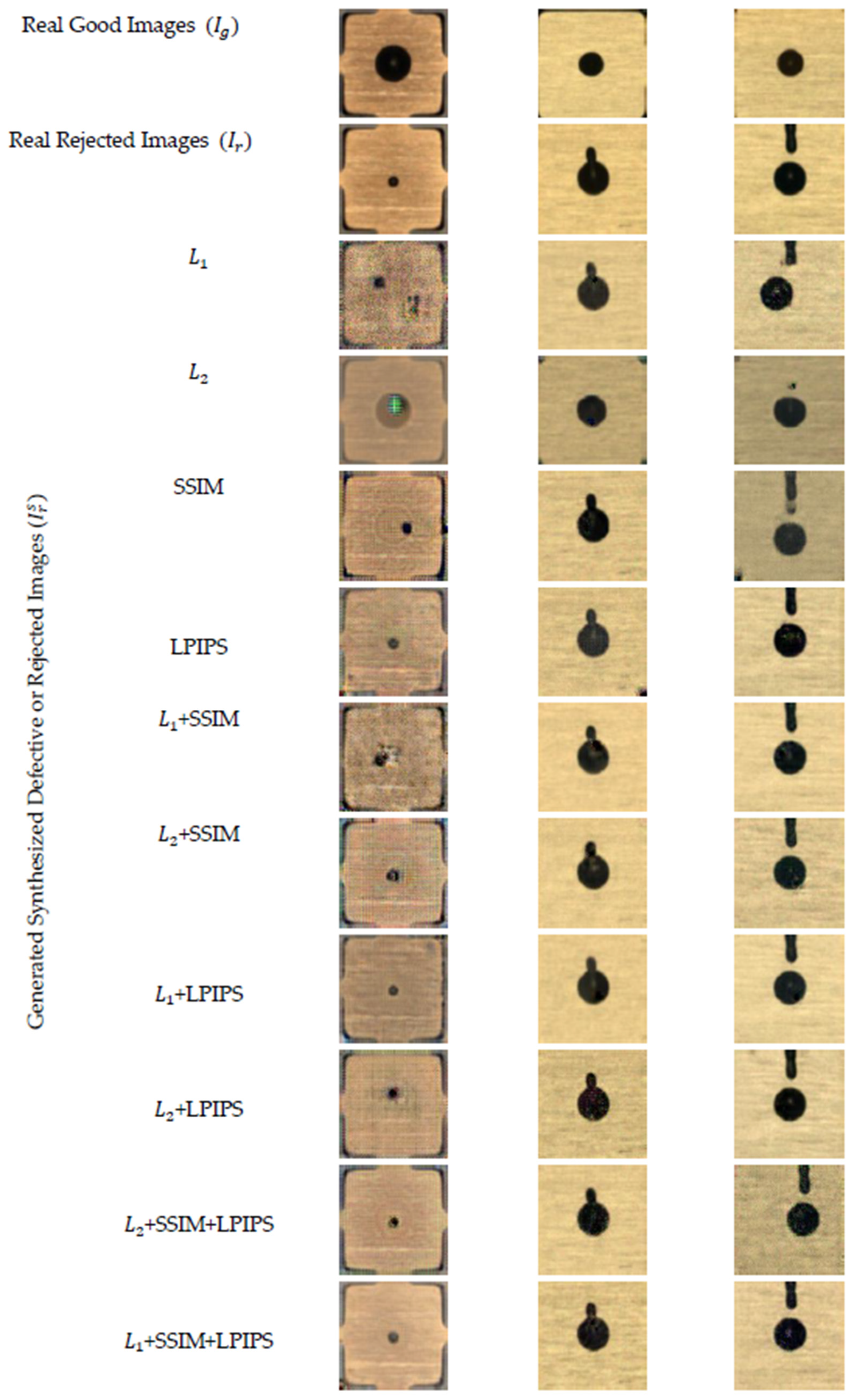

3. Generating Synthesized Defective Images via CycleGAN

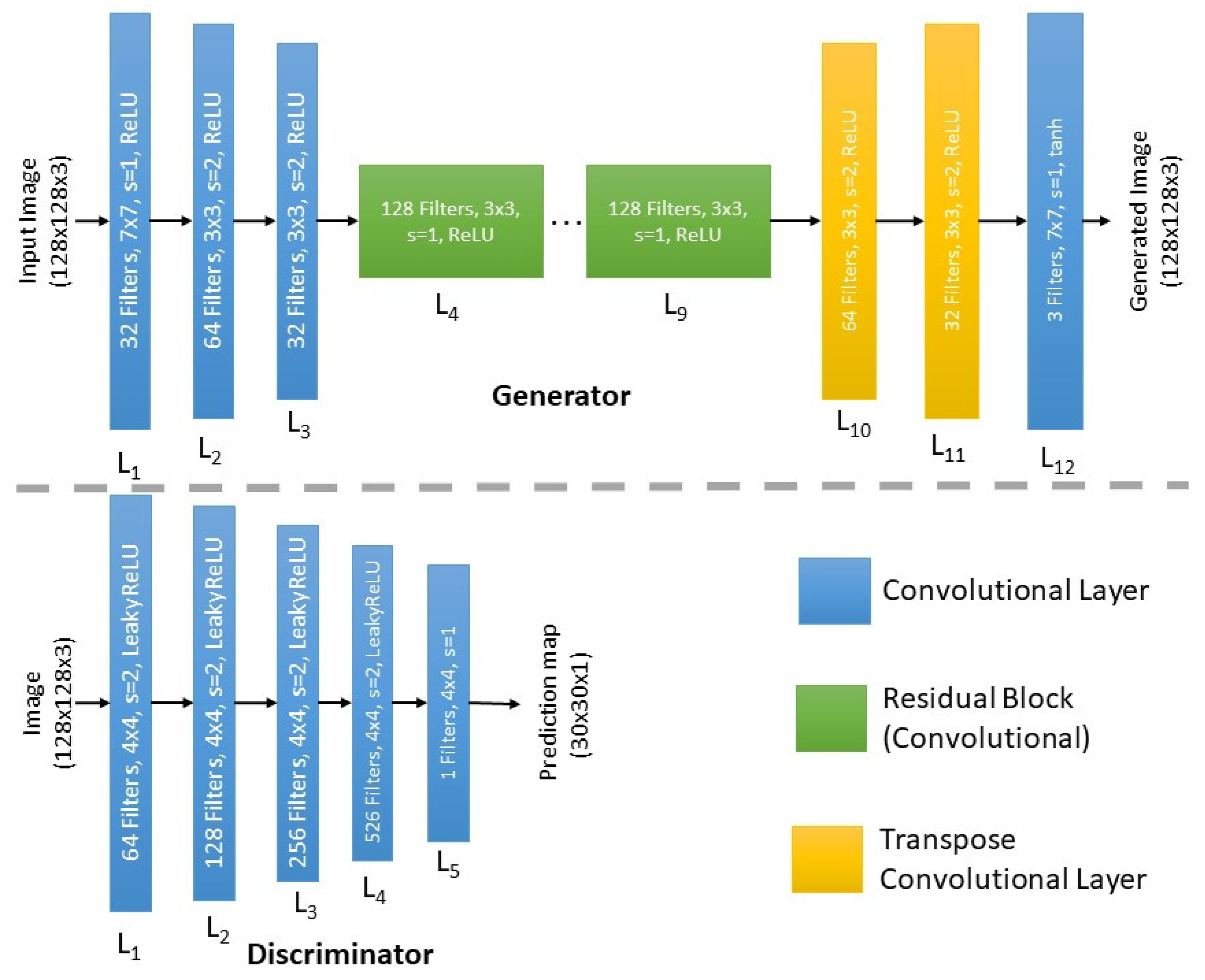

Model Architecture and Training

| Algorithm 1. CycleGAN Training |
|---|
| 1: for number of epochs do |
| 2: for k iterations do |
| 3: Draw a minibatch of samples from the data distribution of the first domain |
| 4: Draw a minibatch of samples from the data distribution of the second domain |
| 5: Generate m synthetic samples |
| 6: Compute adversarial losses // Combination of discriminator loss on both real and fake images |
| 7: Update the two discriminators |
| 8: Generate m cycle samples |
| 9: Compute cycle consistency loss |
| /* Different loss functions, separately and in combination, were used to calculate the cycle consistency losses */ |
| 10: Compute total generator loss |
| 11: Update the two generators |
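The loss bookkeeping in steps 5 to 10 of Algorithm 1 can be sketched in plain Python as follows. This is an illustrative sketch under stated assumptions, not the authors' implementation: images are flat lists of pixel values, the generators and discriminators are stand-in callables, the names `G_ab`, `G_ba`, `D_a`, `D_b`, `cycle_terms`, and `lam` are hypothetical, the adversarial term is assumed to take the least-squares form, and the weight `lam = 10.0` is an assumed default. No parameter update (steps 7 and 11) is performed.

```python
from typing import Callable, Dict, List, Sequence

Image = List[float]  # toy stand-in: an image is a flat list of pixel values


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference between two equally sized images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared difference between two equally sized images."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def lsgan_discriminator_loss(D: Callable[[Image], float],
                             real: List[Image], fake: List[Image]) -> float:
    """Discriminator loss on real and fake images (least-squares form, assumed)."""
    real_term = sum((D(x) - 1.0) ** 2 for x in real) / len(real)
    fake_term = sum(D(x) ** 2 for x in fake) / len(fake)
    return 0.5 * (real_term + fake_term)


def cyclegan_losses(G_ab, G_ba, D_a, D_b,
                    batch_a: List[Image], batch_b: List[Image],
                    cycle_terms, lam: float = 10.0) -> Dict[str, float]:
    """Compute the losses of one iteration; no weights are updated here."""
    # Step 5: generate synthetic samples in each direction.
    fake_b = [G_ab(x) for x in batch_a]
    fake_a = [G_ba(y) for y in batch_b]
    # Step 6: adversarial losses of both discriminators.
    d_loss = (lsgan_discriminator_loss(D_a, batch_a, fake_a)
              + lsgan_discriminator_loss(D_b, batch_b, fake_b))
    # Step 8: map the synthetic samples back to their source domain.
    cycled_a = [G_ba(y) for y in fake_b]
    cycled_b = [G_ab(x) for x in fake_a]
    # Step 9: cycle consistency loss as a sum of the chosen distance terms,
    # averaged over all (real, reconstructed) pairs.
    pairs = list(zip(batch_a, cycled_a)) + list(zip(batch_b, cycled_b))
    cycle = sum(term(real, rec) for real, rec in pairs
                for term in cycle_terms) / len(pairs)
    # Step 10: total generator loss = adversarial part + weighted cycle part.
    g_adv = (sum((D_b(y) - 1.0) ** 2 for y in fake_b) / len(fake_b)
             + sum((D_a(x) - 1.0) ** 2 for x in fake_a) / len(fake_a))
    return {"discriminator": d_loss, "cycle": cycle,
            "generator_total": g_adv + lam * cycle}
```

Passing `cycle_terms=[l1_distance]` or `cycle_terms=[l1_distance, l2_distance]` mirrors the idea of using different loss functions separately and in combination; perceptual or structural terms would be plugged in the same way as additional callables.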

4. Experimental Results and Discussion

4.1. Quantitative Evaluation of Generated Images

4.1.1. Evaluation Metrics

4.1.2. Dataset

4.1.3. Results and Discussion

4.2. Impact of Generated Images on Defect Identification

4.2.1. Identification Metrics

4.2.2. Datasets

4.2.3. Identification Outcomes

- By using only real rejected epoxy drop substrate images;

- By using real rejected epoxy drop substrate images together with rejected epoxy drop substrate images generated by the standard loss function CycleGAN;

- By using real rejected epoxy drop substrate images together with rejected epoxy drop substrate images generated by our enhanced loss function CycleGAN.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Loss function | PSNR (Mean ± STD) | UQI (Mean ± STD) | VIF (Mean ± STD) |
|---|---|---|---|
| (Standard CycleGAN) | 18.77 ± 4.88 | 0.86 ± 0.16 | 0.19 ± 0.08 |
| | 16.65 ± 1.66 | 0.89 ± 0.09 | 0.08 ± 0.05 |
| SSIM | 21.06 ± 2.23 | 0.93 ± 0.05 | 0.20 ± 0.06 |
| LPIPS | 22.51 ± 4.23 | 0.94 ± 0.09 | 0.26 ± 0.02 |
| + SSIM | 22.94 ± 4.50 | 0.93 ± 0.07 | 0.24 ± 0.02 |
| + SSIM | 23.39 ± 4.46 | 0.93 ± 0.08 | 0.25 ± 0.02 |
| + LPIPS | 23.26 ± 3.48 | 0.93 ± 0.07 | 0.32 ± 0.03 |
| + LPIPS | 22.24 ± 4.98 | 0.90 ± 0.15 | 0.27 ± 0.02 |
| + SSIM + LPIPS | 20.81 ± 6.75 | 0.90 ± 0.10 | 0.17 ± 0.02 |
| + SSIM + LPIPS | 29.86 ± 5.11 | 0.98 ± 0.01 | 0.44 ± 0.03 |
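As a reminder of how a "Mean ± STD" PSNR entry can be obtained, the sketch below computes PSNR in dB for one image pair and then summarizes a list of per-image scores. It is a minimal sketch under assumptions, not the evaluation code behind the table: images are flat lists of pixel values, the peak value is assumed to be 255 (8-bit images), the standard deviation is taken as the population form, and the function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def psnr(reference: Sequence[float], generated: Sequence[float],
         max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    if len(reference) != len(generated):
        raise ValueError("images must have the same number of pixels")
    # Mean squared error over all pixels.
    mse = sum((r - g) ** 2 for r, g in zip(reference, generated)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def mean_and_std(values: Sequence[float]) -> Tuple[float, float]:
    """Mean and population standard deviation (assumed form of 'Mean ± STD')."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, math.sqrt(variance)
```

A higher PSNR means the generated image is closer, pixel by pixel, to its reference; UQI and VIF would need their own implementations and are not sketched here.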

| | Correct Label: Rejected | Correct Label: Good |
|---|---|---|
| Predicted Label: Rejected | TP | FP |
| Predicted Label: Good | FN | TN |

| Data Augmentation Method | Predicted Label | Correct Label: Rejected | Correct Label: Good | Precision | Recall | Accuracy |
|---|---|---|---|---|---|---|
| No augmentation (only real data) | Rejected | 23 | 2 | 0.92 | 0.04 | 36% |
| | Good | 540 | 278 | | | |
| CycleGAN with standard loss function | Rejected | 381 | 5 | 0.99 | 0.68 | 78% |
| | Good | 182 | 275 | | | |
| CycleGAN with enhanced loss function | Rejected | 458 | 13 | 0.97 | 0.81 | 86% |
| | Good | 105 | 267 | | | |
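The precision, recall, and accuracy values in the table follow directly from the confusion matrix counts, treating "Rejected" as the positive class. The short sketch below recomputes them using the standard definitions; the function name and the dictionary layout are hypothetical, and the counts are copied from the table as (TP, FP, FN, TN).

```python
from typing import Dict, Tuple


def identification_metrics(tp: int, fp: int, fn: int, tn: int) -> Tuple[float, float, float]:
    """Precision, recall, and accuracy from confusion matrix counts."""
    precision = tp / (tp + fp)           # correct among predicted "Rejected"
    recall = tp / (tp + fn)              # found among truly "Rejected"
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return precision, recall, accuracy


# Counts taken from the table: (TP, FP, FN, TN).
counts: Dict[str, Tuple[int, int, int, int]] = {
    "No augmentation (only real data)": (23, 2, 540, 278),
    "CycleGAN with standard loss function": (381, 5, 182, 275),
    "CycleGAN with enhanced loss function": (458, 13, 105, 267),
}

for method, (tp, fp, fn, tn) in counts.items():
    p, r, a = identification_metrics(tp, fp, fn, tn)
    print(f"{method}: precision={p:.2f}, recall={r:.2f}, accuracy={a:.0%}")
```

With only real data, precision is high but recall is very low: almost every image flagged as rejected is truly rejected, yet most rejected images are missed, which is why accuracy stays low despite the high precision.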

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alam, L.; Kehtarnavaz, N. Generating Defective Epoxy Drop Images for Die Attachment in Integrated Circuit Manufacturing via Enhanced Loss Function CycleGAN. Sensors 2023, 23, 4864. https://doi.org/10.3390/s23104864
