Autoencoders Based on 2D Convolution Implemented for Reconstruction Point Clouds from Line Laser Sensors

Abstract

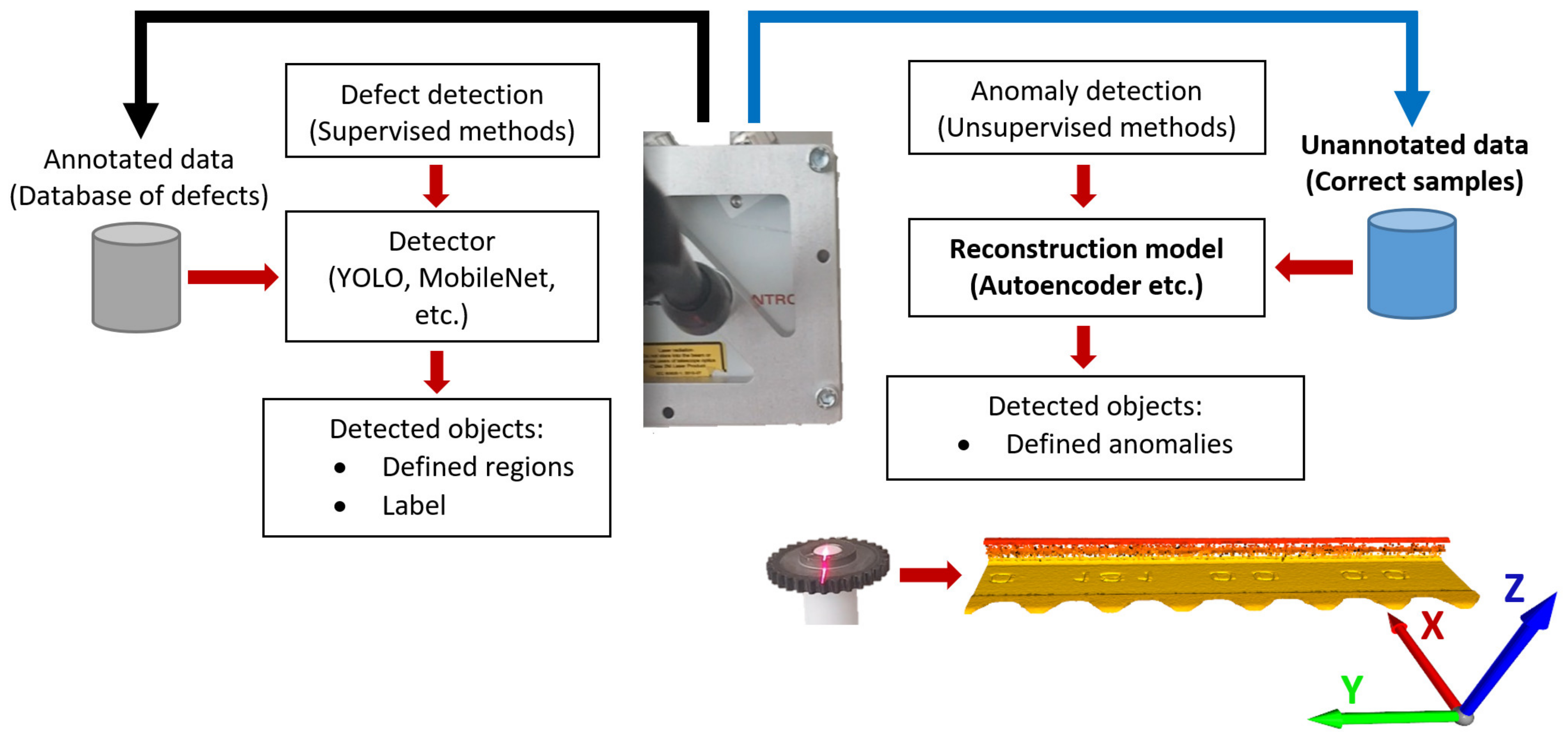

1. Introduction

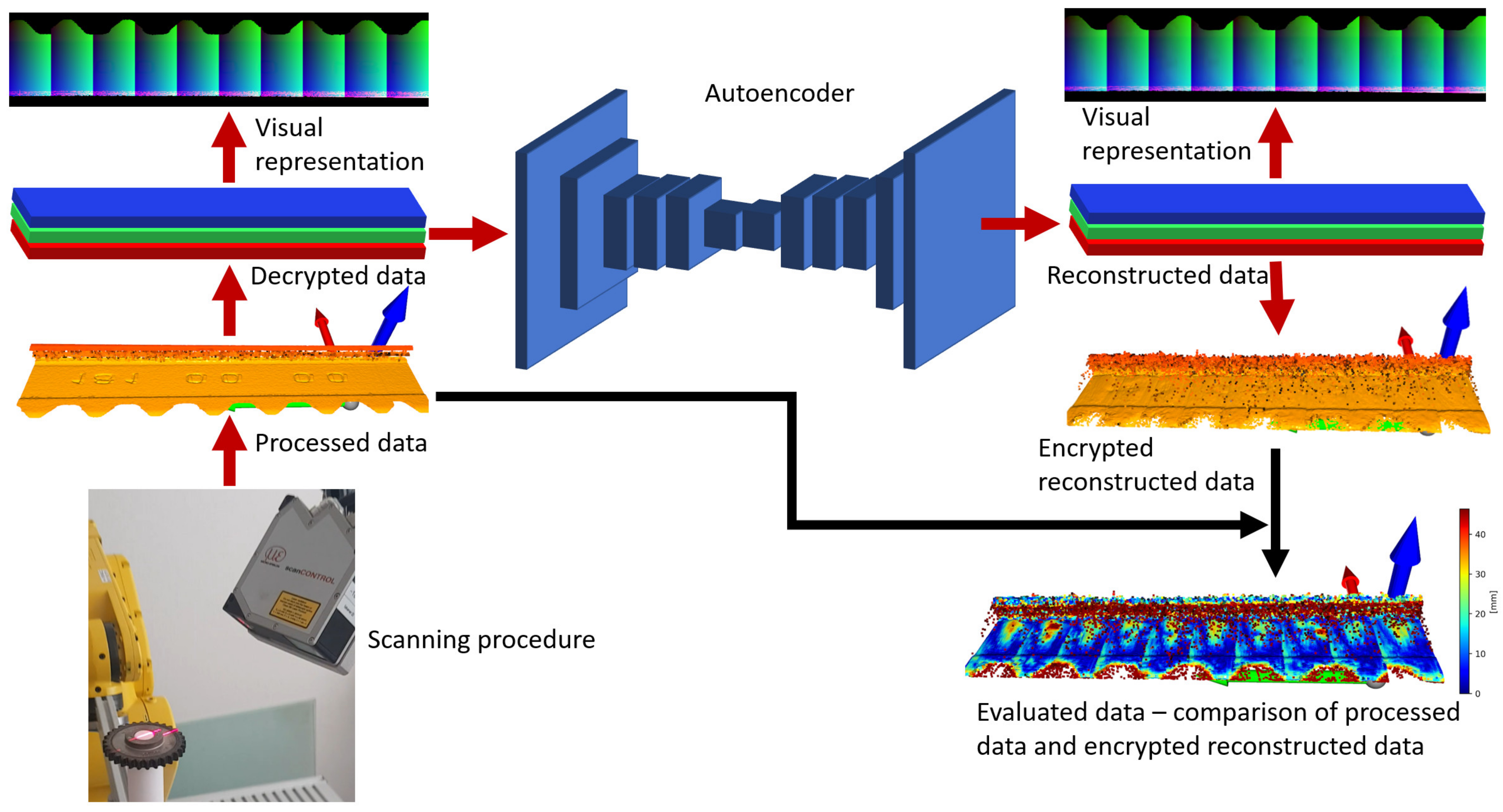

2. Point Cloud Reconstruction Based on 2D Convolution

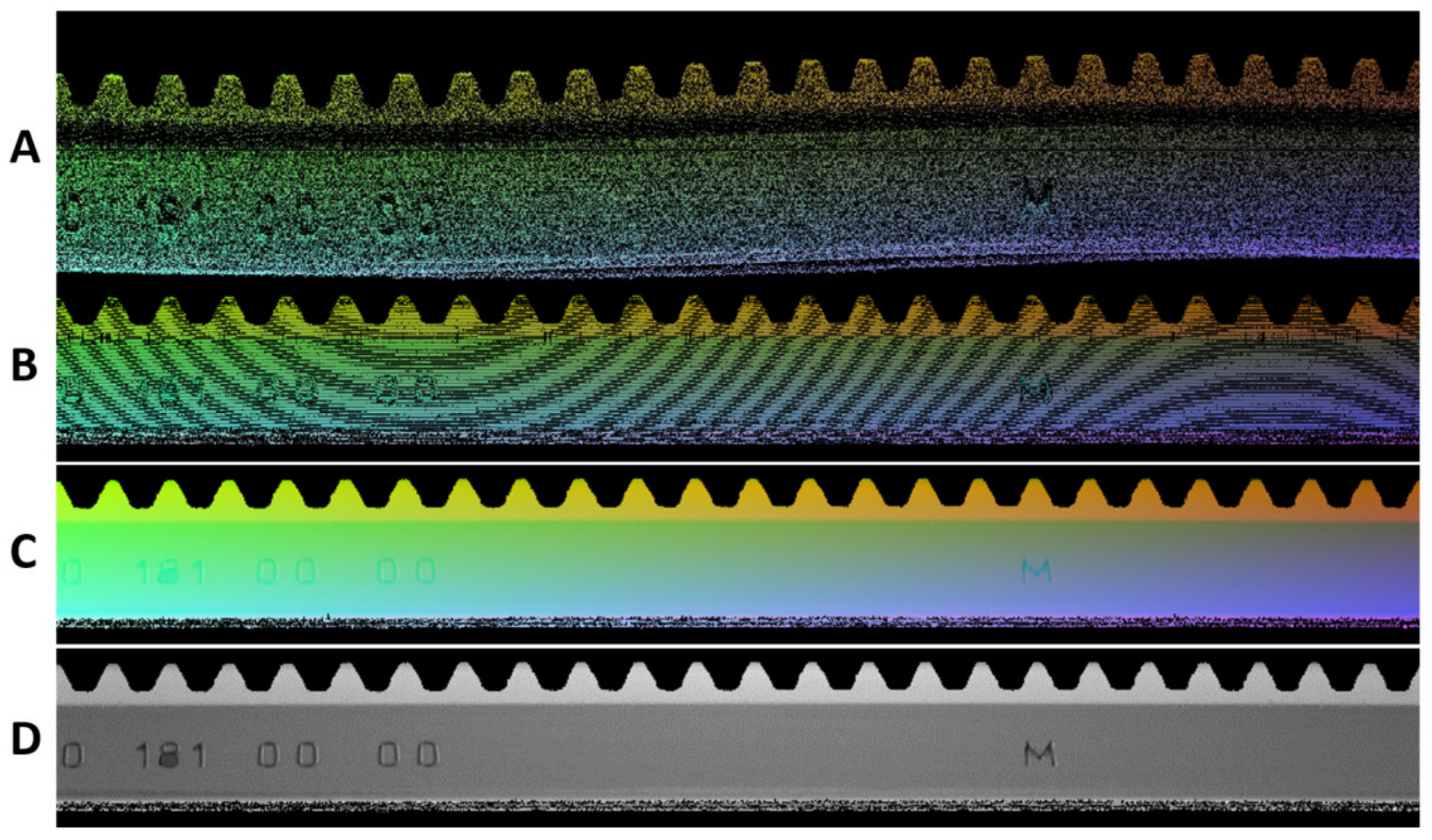

2.1. Preprocessing Data

2.2. Architectures of Autoencoders

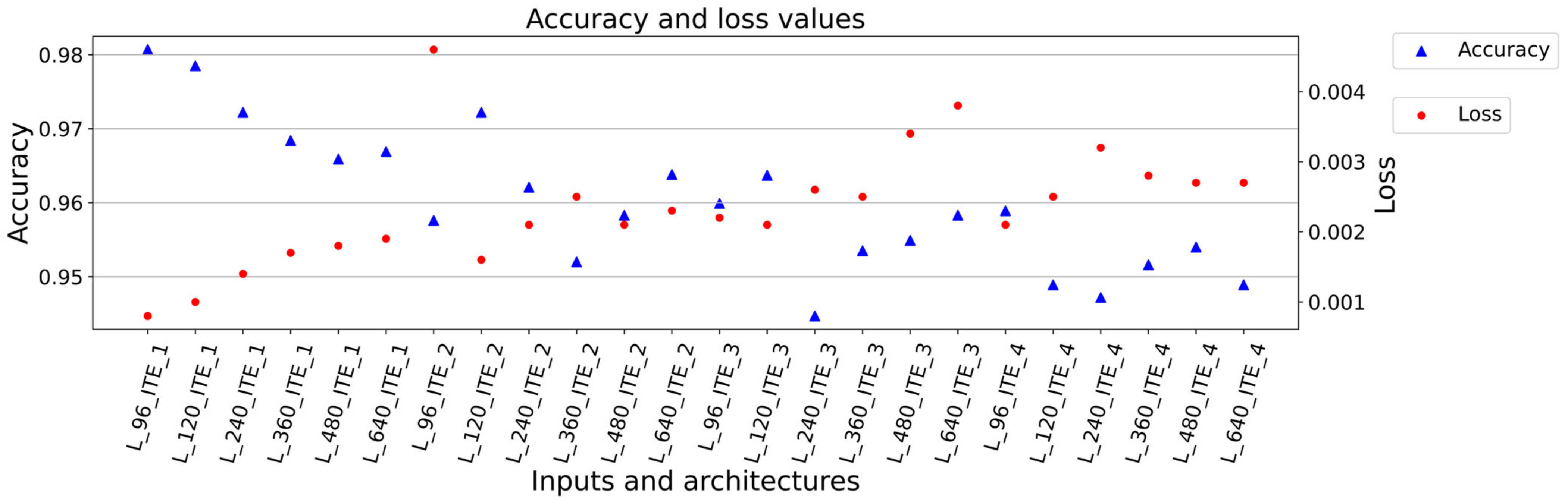

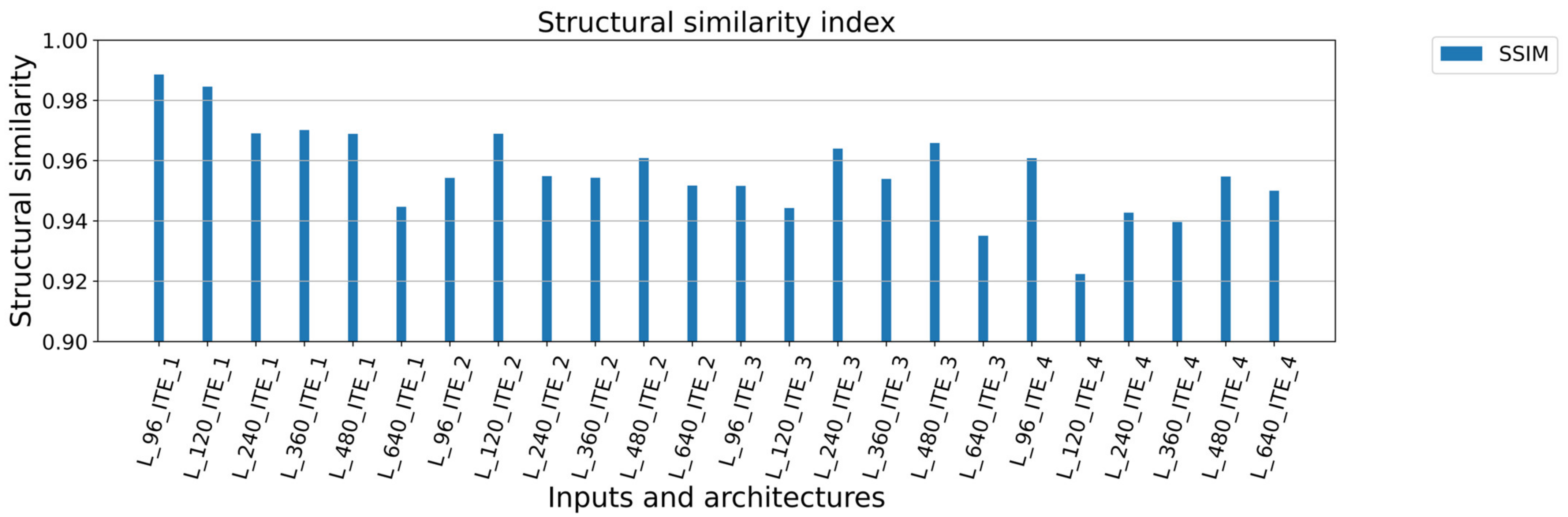

3. Results

4. Conclusions

5. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| No. | Architecture | Total Parameters | Trainable Parameters | Non-Trainable Parameters | Input Size | Train. Dataset | Val. Dataset | Accuracy | Loss | Val. Accuracy | Val. Loss | Mean of Square Error [mm] | Standard Deviation of Square Error [mm] | Structural Similarity Metric (SSIM) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | L_96_ITE_1 | 896,227 | 894,429 | 1798 | 640 × 96 | 679 | 227 | 0.9807 | 0.0008 | 0.9826 | 0.0008 | 0.015829 | 0.047211 | 0.988568 |
| 2 | L_120_ITE_1 | 896,227 | 894,429 | 1798 | 640 × 120 | 541 | 181 | 0.9785 | 0.0010 | 0.9801 | 0.0010 | 0.016108 | 0.053263 | 0.984566 |
| 3 | L_240_ITE_1 | 896,227 | 894,429 | 1798 | 640 × 240 | 265 | 89 | 0.9722 | 0.0014 | 0.9747 | 0.0015 | 0.025456 | 0.062530 | 0.969051 |
| 4 | L_360_ITE_1 | 896,227 | 894,429 | 1798 | 640 × 360 | 175 | 59 | 0.9684 | 0.0017 | 0.9675 | 0.0016 | 0.021630 | 0.066946 | 0.970153 |
| 5 | L_480_ITE_1 | 896,227 | 894,429 | 1798 | 640 × 480 | 127 | 43 | 0.9659 | 0.0018 | 0.9690 | 0.0017 | 0.025276 | 0.067987 | 0.968888 |
| 6 | L_640_ITE_1 | 896,227 | 894,429 | 1798 | 640 × 640 | 97 | 33 | 0.9669 | 0.0019 | 0.9664 | 0.0021 | 0.033767 | 0.071704 | 0.944652 |
| 7 | L_96_ITE_2 | 7,996,899 | 7,991,517 | 5382 | 640 × 96 | 679 | 227 | 0.9576 | 0.0046 | 0.9632 | 0.0037 | 0.038118 | 0.090202 | 0.954267 |
| 8 | L_120_ITE_2 | 7,996,899 | 7,991,517 | 5382 | 640 × 120 | 541 | 181 | 0.9722 | 0.0016 | 0.9584 | 0.0019 | 0.033286 | 0.069123 | 0.968925 |
| 9 | L_240_ITE_2 | 7,996,899 | 7,991,517 | 5382 | 640 × 240 | 265 | 89 | 0.9621 | 0.0021 | 0.9628 | 0.0020 | 0.030853 | 0.072560 | 0.954858 |
| 10 | L_360_ITE_2 | 7,996,899 | 7,991,517 | 5382 | 640 × 360 | 175 | 59 | 0.9520 | 0.0025 | 0.9317 | 0.0025 | 0.040637 | 0.075443 | 0.954289 |
| 11 | L_480_ITE_2 | 7,996,899 | 7,991,517 | 5382 | 640 × 480 | 127 | 43 | 0.9583 | 0.0021 | 0.9627 | 0.0020 | 0.028388 | 0.070746 | 0.960838 |
| 12 | L_640_ITE_2 | 7,996,899 | 7,991,517 | 5382 | 640 × 640 | 97 | 33 | 0.9638 | 0.0023 | 0.9456 | 0.0031 | 0.049036 | 0.080666 | 0.951701 |
| 13 | L_96_ITE_3 | 26,442,243 | 26,429,763 | 12,480 | 640 × 96 | 679 | 227 | 0.9599 | 0.0022 | 0.9614 | 0.0022 | 0.034843 | 0.073919 | 0.951601 |
| 14 | L_120_ITE_3 | 26,442,243 | 26,429,763 | 12,480 | 640 × 120 | 541 | 181 | 0.9637 | 0.0021 | 0.9544 | 0.0023 | 0.036443 | 0.075231 | 0.944264 |
| 15 | L_240_ITE_3 | 26,442,243 | 26,429,763 | 12,480 | 640 × 240 | 265 | 89 | 0.9447 | 0.0026 | 0.9553 | 0.0024 | 0.037398 | 0.074976 | 0.963985 |
| 16 | L_360_ITE_3 | 26,442,243 | 26,429,763 | 12,480 | 640 × 360 | 175 | 59 | 0.9535 | 0.0025 | 0.9467 | 0.0028 | 0.044122 | 0.079939 | 0.953952 |
| 17 | L_480_ITE_3 | 26,442,243 | 26,429,763 | 12,480 | 640 × 480 | 127 | 43 | 0.9549 | 0.0034 | 0.9579 | 0.0032 | 0.045042 | 0.086710 | 0.965870 |
| 18 | L_640_ITE_3 | 26,442,243 | 26,429,763 | 12,480 | 640 × 640 | 97 | 33 | 0.9583 | 0.0038 | 0.9603 | 0.0026 | 0.035180 | 0.081573 | 0.935048 |
| 19 | L_96_ITE_4 | 18,923,011 | 18,914,819 | 8192 | 640 × 96 | 679 | 227 | 0.9589 | 0.0021 | 0.9623 | 0.0022 | 0.027502 | 0.075493 | 0.960815 |
| 20 | L_120_ITE_4 | 18,923,011 | 18,914,819 | 8192 | 640 × 120 | 541 | 181 | 0.9489 | 0.0025 | 0.9551 | 0.0026 | 0.043441 | 0.078784 | 0.922372 |
| 21 | L_240_ITE_4 | 18,923,011 | 18,914,819 | 8192 | 640 × 240 | 265 | 89 | 0.9472 | 0.0032 | 0.9547 | 0.0027 | 0.039939 | 0.079776 | 0.942701 |
| 22 | L_360_ITE_4 | 18,923,011 | 18,914,819 | 8192 | 640 × 360 | 175 | 59 | 0.9516 | 0.0028 | 0.9443 | 0.0031 | 0.033598 | 0.088179 | 0.939626 |
| 23 | L_480_ITE_4 | 18,923,011 | 18,914,819 | 8192 | 640 × 480 | 127 | 43 | 0.9540 | 0.0027 | 0.9619 | 0.0022 | 0.028731 | 0.075250 | 0.954734 |
| 24 | L_640_ITE_4 | 18,923,011 | 18,914,819 | 8192 | 640 × 640 | 97 | 33 | 0.9489 | 0.0027 | 0.9631 | 0.0036 | 0.059413 | 0.085243 | 0.950013 |
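The dataset columns of the table above follow a simple pattern: the number of samples falls roughly in inverse proportion to the input height N, so N × (train + val) stays between about 81,600 and 87,000, and the training share stays close to 75 %. The sketch below recomputes both quantities from the table; reading N as the number of stacked laser profiles per sample is an assumption, not something the table states.

```python
# Input heights N and (training, validation) sample counts from Appendix A.
# Treating N as the number of stacked line-laser profiles is an assumption.
DATASETS = {
    96: (679, 227),
    120: (541, 181),
    240: (265, 89),
    360: (175, 59),
    480: (127, 43),
    640: (97, 33),
}


def summarize(datasets):
    """Map N to (N * total samples, share of samples used for training)."""
    summary = {}
    for n, (train, val) in datasets.items():
        total = train + val
        summary[n] = (n * total, train / total)
    return summary


if __name__ == "__main__":
    for n, (lines, share) in summarize(DATASETS).items():
        print(f"640 x {n:>3}: {lines:>6} profiles covered, training share {share:.3f}")
```

Because every row covers a similar number of profiles, the larger inputs trade sample count for context per sample rather than using more raw data.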

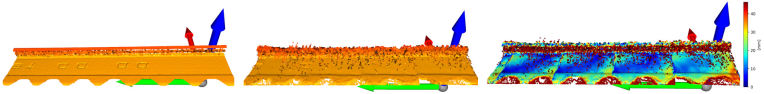

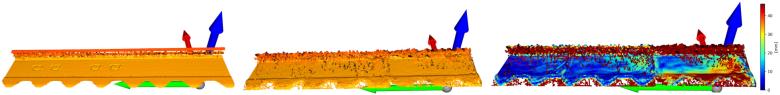

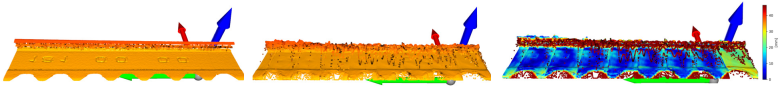

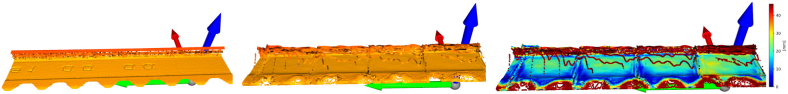

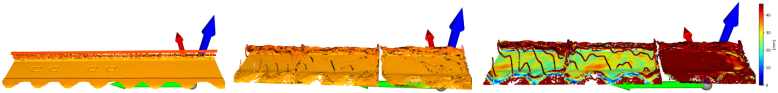

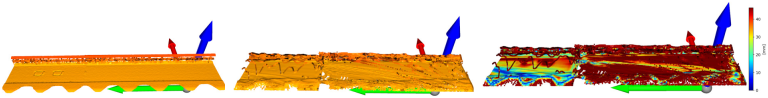

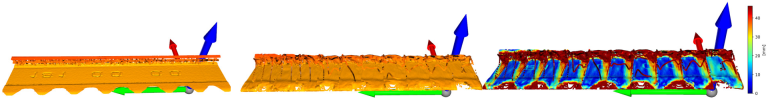

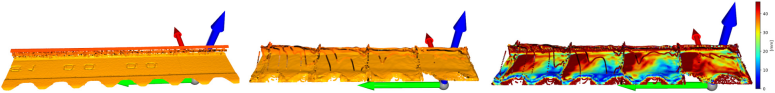

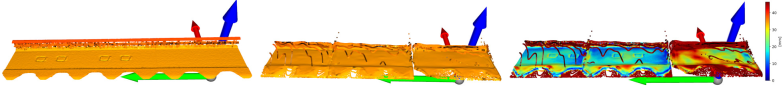

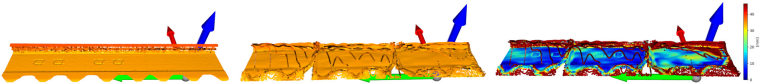

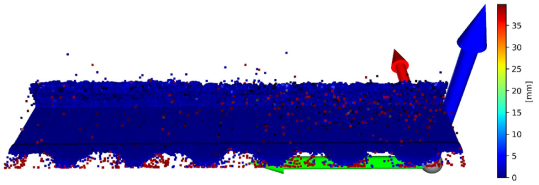

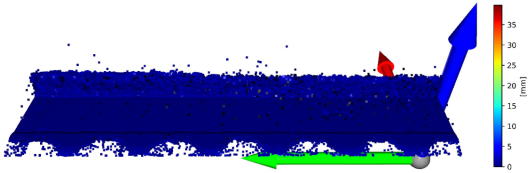

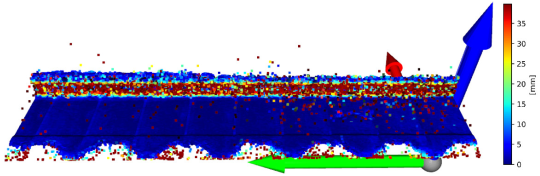

[Table: input point cloud, reconstructed 3D point cloud, and 3D difference for models No. 1–24]

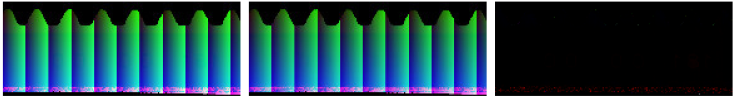

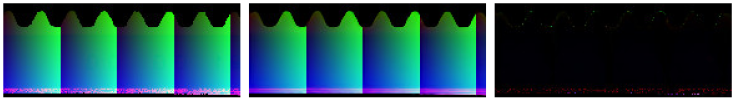

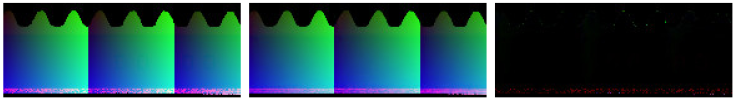

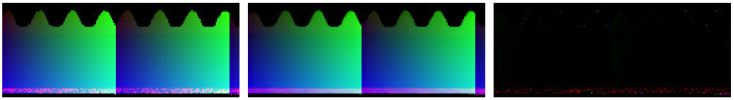

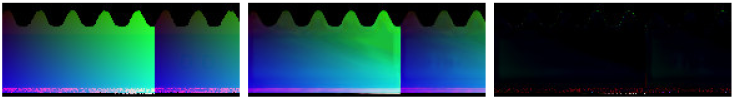

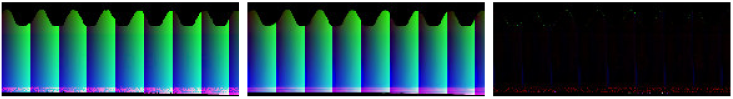

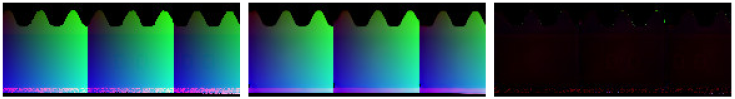

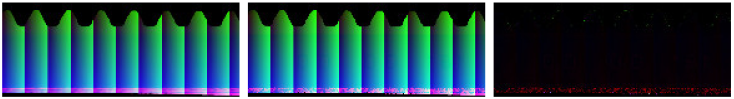

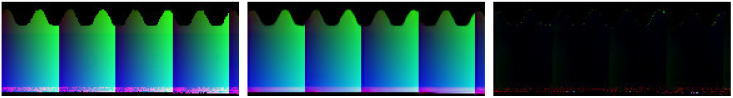

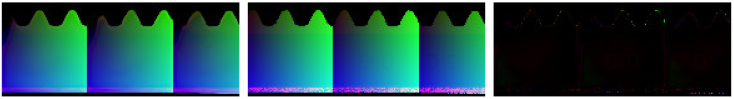

[Table: input 2D, reconstructed 2D, and difference between input 2D and reconstructed 2D for models No. 1–24]

Appendix B

L_640_ITE_1

| Layer (type) | Output Shape | Param # |
|---|---|---|
| conv2d_36 (Conv2D) | (None, 640, 640, 3) | 84 |
| batch_normalization_32 (BatchNormalization) | (None, 640, 640, 3) | 12 |
| max_pooling2d_12 (MaxPooling2D) | (None, 320, 320, 3) | 0 |
| conv2d_37 (Conv2D) | (None, 320, 320, 128) | 3584 |
| batch_normalization_33 (BatchNormalization) | (None, 320, 320, 128) | 512 |
| max_pooling2d_13 (MaxPooling2D) | (None, 160, 160, 128) | 0 |
| conv2d_38 (Conv2D) | (None, 160, 160, 128) | 147,584 |
| batch_normalization_34 (BatchNormalization) | (None, 160, 160, 128) | 512 |
| max_pooling2d_14 (MaxPooling2D) | (None, 80, 80, 128) | 0 |
| conv2d_39 (Conv2D) | (None, 80, 80, 128) | 147,584 |
| batch_normalization_35 (BatchNormalization) | (None, 80, 80, 128) | 512 |
| conv2d_40 (Conv2D) | (None, 80, 80, 128) | 147,584 |
| batch_normalization_36 (BatchNormalization) | (None, 80, 80, 128) | 512 |
| up_sampling2d_12 (UpSampling2D) | (None, 160, 160, 128) | 0 |
| conv2d_41 (Conv2D) | (None, 160, 160, 128) | 147,584 |
| batch_normalization_37 (BatchNormalization) | (None, 160, 160, 128) | 512 |
| up_sampling2d_13 (UpSampling2D) | (None, 320, 320, 128) | 0 |
| conv2d_42 (Conv2D) | (None, 320, 320, 128) | 147,584 |
| batch_normalization_38 (BatchNormalization) | (None, 320, 320, 128) | 512 |
| up_sampling2d_14 (UpSampling2D) | (None, 640, 640, 128) | 0 |
| conv2d_43 (Conv2D) | (None, 640, 640, 128) | 147,584 |
| batch_normalization_39 (BatchNormalization) | (None, 640, 640, 128) | 512 |
| conv2d_44 (Conv2D) | (None, 640, 640, 3) | 3459 |
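The L_640_ITE_1 summary contains three 2 × 2 max-pooling layers in the encoder and three 2 × 2 up-sampling layers in the decoder, while every Conv2D keeps the spatial size of its input; the ITE_2 to ITE_4 summaries in this appendix have the same three-down, three-up structure. The reconstruction can therefore match the input size only when both input dimensions are divisible by 2³ = 8, which holds for all six input sizes in Appendix A. A minimal shape-tracing sketch, assuming Keras default pooling behaviour and that the smaller inputs use the same layer order as the 640 × 640 summary (only that one is listed):

```python
# Spatial-shape trace through the L_640_ITE_1 layer order from Appendix B.
# BatchNormalization layers are omitted because they never change the shape.
# Assumes Keras defaults: MaxPooling2D(2) floors odd sizes, UpSampling2D(2) doubles.
ITE_1_LAYERS = [
    "conv", "pool",  # conv2d_36, max_pooling2d_12
    "conv", "pool",  # conv2d_37, max_pooling2d_13
    "conv", "pool",  # conv2d_38, max_pooling2d_14
    "conv", "conv",  # conv2d_39, conv2d_40 (bottleneck at 1/8 resolution)
    "up", "conv",    # up_sampling2d_12, conv2d_41
    "up", "conv",    # up_sampling2d_13, conv2d_42
    "up", "conv",    # up_sampling2d_14, conv2d_43
    "conv",          # conv2d_44, 3 output channels
]


def trace(height, width, layers=ITE_1_LAYERS):
    """Return (height, width) after each layer; Conv2D keeps the size ('same' padding)."""
    shapes = []
    for kind in layers:
        if kind == "pool":
            height, width = height // 2, width // 2
        elif kind == "up":
            height, width = height * 2, width * 2
        shapes.append((height, width))
    return shapes


if __name__ == "__main__":
    for n in (96, 120, 240, 360, 480, 640):
        shapes = trace(640, n)
        print(f"640 x {n}: bottleneck {shapes[7]}, output {shapes[-1]}")
    # A hypothetical height that is not divisible by 8 does not round-trip:
    print("640 x 100 ->", trace(640, 100)[-1])
```

The hypothetical 640 × 100 input shows the failure mode: 100 → 50 → 25 → 12 in the encoder, so the decoder returns only 96 columns.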

L_640_ITE_2

| Layer (type) | Output Shape | Param # |
|---|---|---|
| conv2d_195 (Conv2D) | (None, 640, 640, 3) | 84 |
| batch_normalization_173 (BatchNormalization) | (None, 640, 640, 3) | 12 |
| max_pooling2d (MaxPooling2D) | (None, 320, 320, 3) | 0 |
| conv2d_1 (Conv2D) | (None, 320, 320, 384) | 10,752 |
| batch_normalization_1 (BatchNormalization) | (None, 320, 320, 384) | 1536 |
| max_pooling2d_1 (MaxPooling2D) | (None, 160, 160, 384) | 0 |
| conv2d_2 (Conv2D) | (None, 160, 160, 384) | 1,327,488 |
| batch_normalization_2 (BatchNormalization) | (None, 160, 160, 384) | 1536 |
| max_pooling2d_2 (MaxPooling2D) | (None, 80, 80, 384) | 0 |
| conv2d_3 (Conv2D) | (None, 80, 80, 384) | 1,327,488 |
| batch_normalization_3 (BatchNormalization) | (None, 80, 80, 384) | 1536 |
| conv2d_4 (Conv2D) | (None, 80, 80, 384) | 1,327,488 |
| batch_normalization_4 (BatchNormalization) | (None, 80, 80, 384) | 1536 |
| up_sampling2d (UpSampling2D) | (None, 160, 160, 384) | 0 |
| conv2d_5 (Conv2D) | (None, 160, 160, 384) | 1,327,488 |
| batch_normalization_5 (BatchNormalization) | (None, 160, 160, 384) | 1536 |
| up_sampling2d_1 (UpSampling2D) | (None, 320, 320, 384) | 0 |
| conv2d_6 (Conv2D) | (None, 320, 320, 384) | 1,327,488 |
| batch_normalization_6 (BatchNormalization) | (None, 320, 320, 384) | 1536 |
| up_sampling2d_2 (UpSampling2D) | (None, 640, 640, 384) | 0 |
| conv2d_7 (Conv2D) | (None, 640, 640, 384) | 1,327,488 |
| batch_normalization_7 (BatchNormalization) | (None, 640, 640, 384) | 1536 |
| conv2d_8 (Conv2D) | (None, 640, 640, 3) | 10,371 |

L_640_ITE_3

| Layer (type) | Output Shape | Param # |
|---|---|---|
| conv2d_81 (Conv2D) | (None, 640, 640, 96) | 2688 |
| batch_normalization_72 (BatchNormalization) | (None, 640, 640, 96) | 384 |
| max_pooling2d_27 (MaxPooling2D) | (None, 320, 320, 96) | 0 |
| conv2d_82 (Conv2D) | (None, 320, 320, 512) | 442,880 |
| batch_normalization_73 (BatchNormalization) | (None, 320, 320, 512) | 2048 |
| conv2d_83 (Conv2D) | (None, 320, 320, 512) | 2,359,808 |
| batch_normalization_74 (BatchNormalization) | (None, 320, 320, 512) | 2048 |
| max_pooling2d_28 (MaxPooling2D) | (None, 160, 160, 512) | 0 |
| conv2d_84 (Conv2D) | (None, 160, 160, 512) | 2,359,808 |
| batch_normalization_75 (BatchNormalization) | (None, 160, 160, 512) | 2048 |
| conv2d_85 (Conv2D) | (None, 160, 160, 512) | 2,359,808 |
| batch_normalization_76 (BatchNormalization) | (None, 160, 160, 512) | 2048 |
| max_pooling2d_29 (MaxPooling2D) | (None, 80, 80, 512) | 0 |
| conv2d_86 (Conv2D) | (None, 80, 80, 512) | 2,359,808 |
| batch_normalization_77 (BatchNormalization) | (None, 80, 80, 512) | 2048 |
| conv2d_87 (Conv2D) | (None, 80, 80, 512) | 2,359,808 |
| batch_normalization_78 (BatchNormalization) | (None, 80, 80, 512) | 2048 |
| up_sampling2d_27 (UpSampling2D) | (None, 160, 160, 512) | 0 |
| conv2d_88 (Conv2D) | (None, 160, 160, 512) | 2,359,808 |
| batch_normalization_79 (BatchNormalization) | (None, 160, 160, 512) | 2048 |
| conv2d_89 (Conv2D) | (None, 160, 160, 512) | 2,359,808 |
| batch_normalization_80 (BatchNormalization) | (None, 160, 160, 512) | 2048 |
| up_sampling2d_28 (UpSampling2D) | (None, 320, 320, 512) | 0 |
| conv2d_90 (Conv2D) | (None, 320, 320, 512) | 2,359,808 |
| batch_normalization_81 (BatchNormalization) | (None, 320, 320, 512) | 2048 |
| conv2d_91 (Conv2D) | (None, 320, 320, 512) | 2,359,808 |
| batch_normalization_82 (BatchNormalization) | (None, 320, 320, 512) | 2048 |
| up_sampling2d_29 (UpSampling2D) | (None, 640, 640, 512) | 0 |
| conv2d_92 (Conv2D) | (None, 640, 640, 512) | 2,359,808 |
| batch_normalization_83 (BatchNormalization) | (None, 640, 640, 512) | 2048 |
| conv2d_93 (Conv2D) | (None, 640, 640, 512) | 2,359,808 |
| batch_normalization_84 (BatchNormalization) | (None, 640, 640, 512) | 2048 |
| conv2d_94 (Conv2D) | (None, 640, 640, 3) | 13,827 |

L_640_ITE_4

| Layer (type) | Output Shape | Param # |
|---|---|---|
| conv2d_78 (Conv2D) | (None, 640, 640, 512) | 14,336 |
| conv2d_79 (Conv2D) | (None, 640, 640, 512) | 2,359,808 |
| batch_normalization_64 (BatchNormalization) | (None, 640, 640, 512) | 2048 |
| max_pooling2d_24 (MaxPooling2D) | (None, 320, 320, 512) | 0 |
| conv2d_80 (Conv2D) | (None, 320, 320, 512) | 2,359,808 |
| batch_normalization_65 (BatchNormalization) | (None, 320, 320, 512) | 2048 |
| max_pooling2d_25 (MaxPooling2D) | (None, 160, 160, 512) | 0 |
| conv2d_81 (Conv2D) | (None, 160, 160, 512) | 2,359,808 |
| batch_normalization_66 (BatchNormalization) | (None, 160, 160, 512) | 2048 |
| max_pooling2d_26 (MaxPooling2D) | (None, 80, 80, 512) | 0 |
| conv2d_82 (Conv2D) | (None, 80, 80, 512) | 2,359,808 |
| batch_normalization_67 (BatchNormalization) | (None, 80, 80, 512) | 2048 |
| conv2d_83 (Conv2D) | (None, 80, 80, 512) | 2,359,808 |
| batch_normalization_68 (BatchNormalization) | (None, 80, 80, 512) | 2048 |
| up_sampling2d_24 (UpSampling2D) | (None, 160, 160, 512) | 0 |
| conv2d_84 (Conv2D) | (None, 160, 160, 512) | 2,359,808 |
| batch_normalization_69 (BatchNormalization) | (None, 160, 160, 512) | 2048 |
| up_sampling2d_25 (UpSampling2D) | (None, 320, 320, 512) | 0 |
| conv2d_85 (Conv2D) | (None, 320, 320, 512) | 2,359,808 |
| batch_normalization_70 (BatchNormalization) | (None, 320, 320, 512) | 2048 |
| up_sampling2d_26 (UpSampling2D) | (None, 640, 640, 512) | 0 |
| conv2d_86 (Conv2D) | (None, 640, 640, 512) | 2,359,808 |
| batch_normalization_71 (BatchNormalization) | (None, 640, 640, 512) | 2048 |
| conv2d_87 (Conv2D) | (None, 640, 640, 3) | 13,827 |
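The Param # columns above can be reproduced from two standard Keras counting rules: a Conv2D layer with a k × k kernel and bias has k²·C_in·C_out weights plus C_out biases, and a BatchNormalization layer stores four values per channel (γ, β, moving mean, moving variance), of which the two moving statistics are non-trainable. The kernel size k = 3 is inferred from the counts (for example, 84 = 3·3·3·3 + 3 for conv2d_36) rather than stated in the summaries, as are the 3 input channels. The sketch below rebuilds the Appendix A totals from (input channels, filters) pairs read off the output shapes:

```python
KERNEL = 3  # inferred from the counts, e.g. 84 = 3*3*3*3 + 3 for conv2d_36


def conv_params(c_in, c_out, k=KERNEL):
    """Conv2D weights (k*k*c_in*c_out) plus one bias per filter."""
    return k * k * c_in * c_out + c_out


def count(convs, bn_channels):
    """Return (total, non_trainable) for Conv2D (c_in, c_out) pairs and BN widths."""
    total = sum(conv_params(c_in, c_out) for c_in, c_out in convs)
    total += sum(4 * c for c in bn_channels)          # gamma, beta, moving mean/variance
    non_trainable = sum(2 * c for c in bn_channels)   # moving mean/variance only
    return total, non_trainable


# Every Conv2D as (input channels, filters) and every BatchNormalization width,
# in the order of the Appendix B summaries; 3 input channels are assumed.
ARCHITECTURES = {
    "ITE_1": ([(3, 3), (3, 128)] + [(128, 128)] * 6 + [(128, 3)], [3] + [128] * 7),
    "ITE_2": ([(3, 3), (3, 384)] + [(384, 384)] * 6 + [(384, 3)], [3] + [384] * 7),
    "ITE_3": ([(3, 96), (96, 512)] + [(512, 512)] * 11 + [(512, 3)], [96] + [512] * 12),
    "ITE_4": ([(3, 512)] + [(512, 512)] * 8 + [(512, 3)], [512] * 8),
}

if __name__ == "__main__":
    for name, (convs, bns) in ARCHITECTURES.items():
        total, non_trainable = count(convs, bns)
        print(f"{name}: {len(convs)} Conv2D, {total:,} total, {non_trainable:,} non-trainable")
```

The totals and non-trainable counts it prints agree with the Total Parameters and Non-Trainable Parameters columns of Appendix A, and the Conv2D counts (9, 9, 14, 10) agree with the architecture comparison table.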

| Type of Architecture | No. of All Parameters | No. of Filters per Conv. Layer | No. of Conv. Layers | Comment |
|---|---|---|---|---|
| ITE_1 | 896,227 | 128 | 9 | Basic architecture with a small number of filters. |
| ITE_2 | 7,996,899 | 384 | 9 | Same architecture as ITE_1, but with the medium number of filters per convolution layer used in this work. |
| ITE_3 | 26,442,243 | 512 | 14 | More convolution layers and a higher number of filters in the first convolution layer (96, compared with 3 in ITE_1 and ITE_2); the last two convolution layers before the output layer have the shape (None, 640, 640, 512). |
| ITE_4 | 18,923,011 | 512 | 10 | Balanced architecture: the start (encoder) and end (decoder) of the network have similar convolution layers and similar numbers of parameters. |

| Type of Architecture | Results |
|---|---|
| ITE_1 | Basic architecture with fast training and low GPU memory consumption. The results are sufficient. |
| ITE_2 | Slightly worse results than ITE_1. High sensitivity to overfitting, so 30 epochs were used for training; for L_480 and L_640, 50 epochs were used. |
| ITE_3 | 30 epochs for L_96 and 30–50 epochs for the other input sizes. High sensitivity to overfitting. The results are average compared with the other architectures; presumably, there is not enough data for architectures with more convolution layers to reach better results. |
| ITE_4 | Trained for 30 epochs. The results are below average. |
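The qualitative ranking in the table above can be checked against the Appendix A metrics by averaging each architecture over its six input sizes. The sketch below copies the SSIM and mean-of-square-error columns from Appendix A; averaging over input sizes is an illustrative choice, not a procedure from the original evaluation.

```python
from statistics import mean

# (mean of square error [mm], SSIM) for input heights 96, 120, 240, 360, 480, 640,
# copied from the Appendix A table.
RESULTS = {
    "ITE_1": [(0.015829, 0.988568), (0.016108, 0.984566), (0.025456, 0.969051),
              (0.021630, 0.970153), (0.025276, 0.968888), (0.033767, 0.944652)],
    "ITE_2": [(0.038118, 0.954267), (0.033286, 0.968925), (0.030853, 0.954858),
              (0.040637, 0.954289), (0.028388, 0.960838), (0.049036, 0.951701)],
    "ITE_3": [(0.034843, 0.951601), (0.036443, 0.944264), (0.037398, 0.963985),
              (0.044122, 0.953952), (0.045042, 0.965870), (0.035180, 0.935048)],
    "ITE_4": [(0.027502, 0.960815), (0.043441, 0.922372), (0.039939, 0.942701),
              (0.033598, 0.939626), (0.028731, 0.954734), (0.059413, 0.950013)],
}


def family_means(results):
    """Average error and SSIM over the input sizes of each architecture."""
    return {
        name: (mean(err for err, _ in rows), mean(ssim for _, ssim in rows))
        for name, rows in results.items()
    }


if __name__ == "__main__":
    means = family_means(RESULTS)
    for name in sorted(means, key=lambda n: means[n][1], reverse=True):
        err, ssim = means[name]
        print(f"{name}: mean error {err:.4f}, mean SSIM {ssim:.4f}")
```

ITE_1 has both the highest mean SSIM and the lowest mean error, and ITE_4 the lowest mean SSIM, in line with the comments above; by mean error alone, ITE_3 and ITE_4 are nearly tied.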

| Axis | Mean Square Error [mm] (L_96_ITE_1) |
|---|---|
| X axis | 0.091116 |
| Y axis | 0.000000 |
| Z axis | 0.101825 |
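A per-axis error such as the values in the table above can be obtained by comparing each coordinate channel of the input with the reconstruction. The sketch below assumes each sample is available as a list of (X, Y, Z) points in millimetres and averages the squared differences per channel (so the raw values are in mm²); the toy points are made up for illustration, and the original evaluation code may aggregate the errors differently.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # (X, Y, Z) in mm; assumed layout


def per_axis_mse(original: Sequence[Point], reconstructed: Sequence[Point]) -> List[float]:
    """Mean squared difference for each of the X, Y and Z channels."""
    if len(original) != len(reconstructed) or not original:
        raise ValueError("point lists must be non-empty and of equal length")
    sums = [0.0, 0.0, 0.0]
    for p, q in zip(original, reconstructed):
        for axis in range(3):
            sums[axis] += (p[axis] - q[axis]) ** 2
    return [s / len(original) for s in sums]


if __name__ == "__main__":
    # Hypothetical toy data; the Y values are identical, so the Y error is zero.
    inp = [(0.0, 0.0, 1.0), (0.1, 0.0, 1.2), (0.2, 0.0, 1.1)]
    rec = [(0.0, 0.0, 1.1), (0.1, 0.0, 1.2), (0.5, 0.0, 1.0)]
    print(per_axis_mse(inp, rec))
```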

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Klarák, J.; Klačková, I.; Andok, R.; Hricko, J.; Bulej, V.; Tsai, H.-Y. Autoencoders Based on 2D Convolution Implemented for Reconstruction Point Clouds from Line Laser Sensors. Sensors 2023, 23, 4772. https://doi.org/10.3390/s23104772
