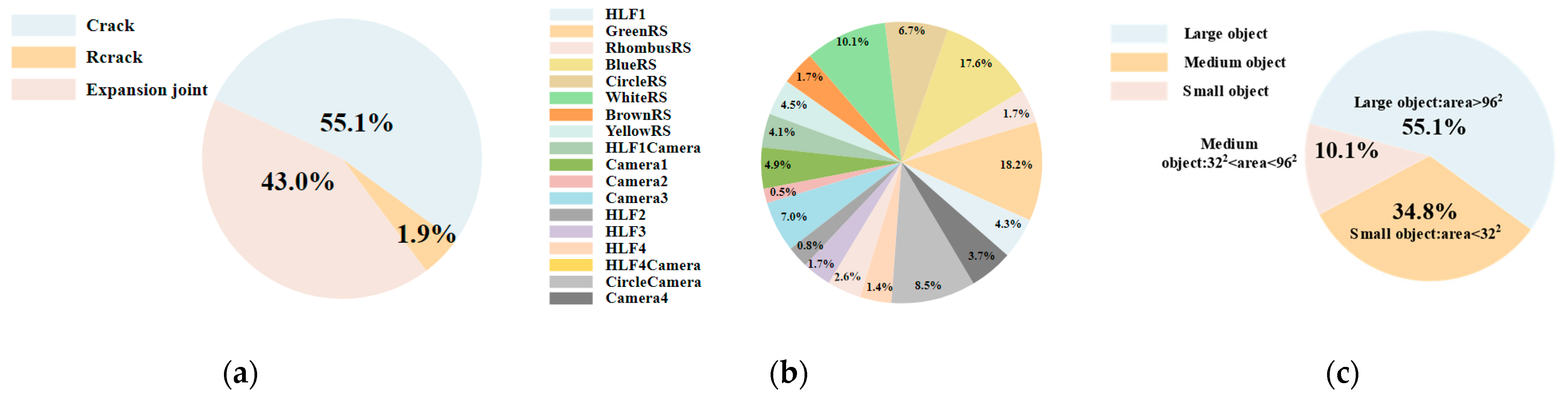

3.1. CBAM Attention Module

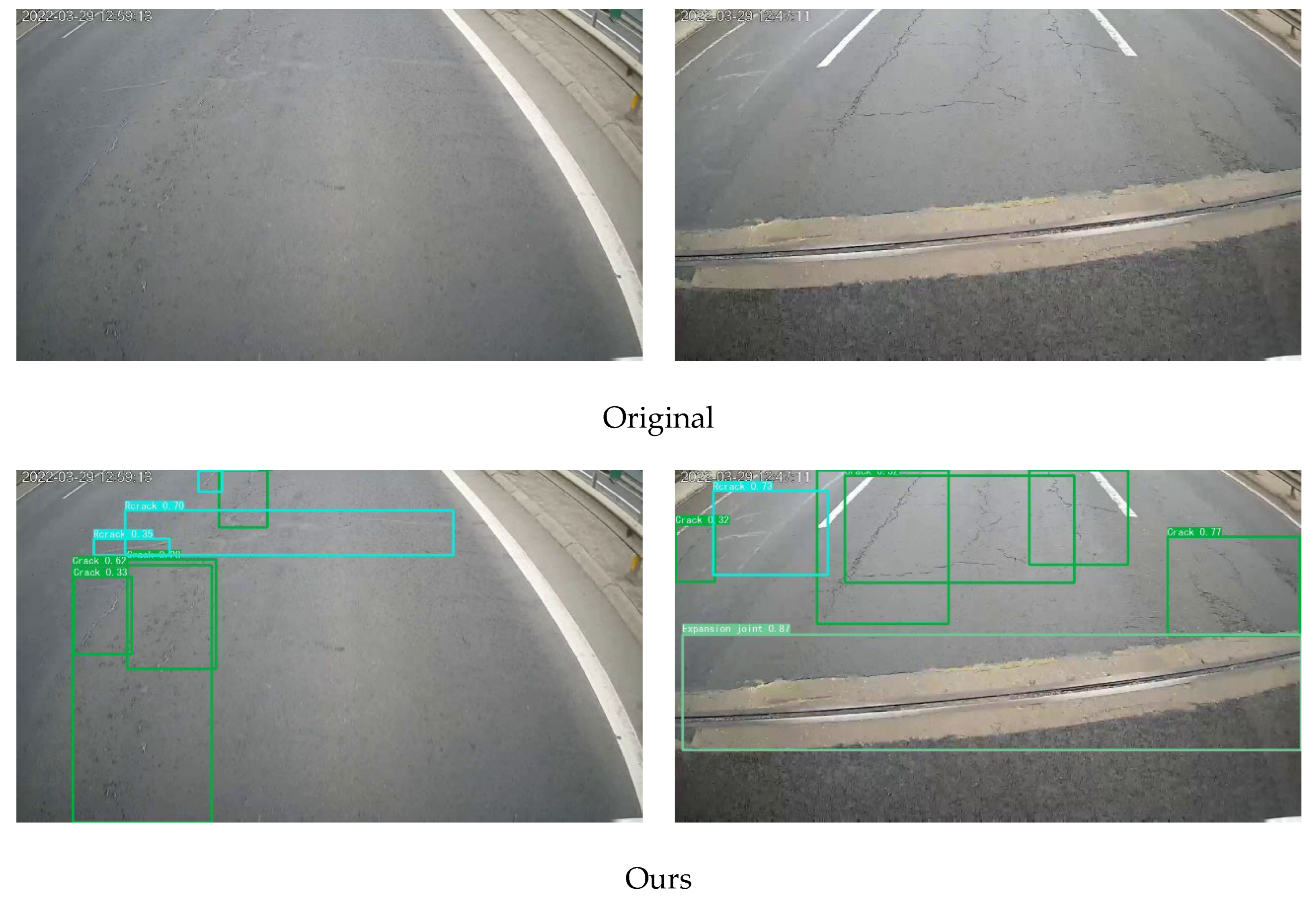

Cracks occupy only a small proportion of the image, their gray values overlap with those of road materials, and their shape resembles that of repaired cracks, all of which directly lowers recognition accuracy. The CBAM attention module [30] is therefore introduced to improve the feature extraction ability of the network. The module contains a channel attention module and a spatial attention module, which weigh the importance of different channels and of different locations within the same channel. Together they localize and identify the target, reduce the redundant information that overwhelms the target after convolution, and refine the extracted features. To avoid biasing the network's focus by introducing the attention mechanism too early, this module is added to the last layer of the backbone network. CBAM is shown in Figure 2.

The operation of the module and its output can be expressed as Equations (1) and (2), where $F$ is the input feature map, $M_c$ is the channel attention mechanism, and $M_s$ is the spatial attention mechanism. $\oplus$ denotes the add operation, $\otimes$ denotes element-wise multiplication, and $F''$ denotes the final output feature map after the CBAM attention module:

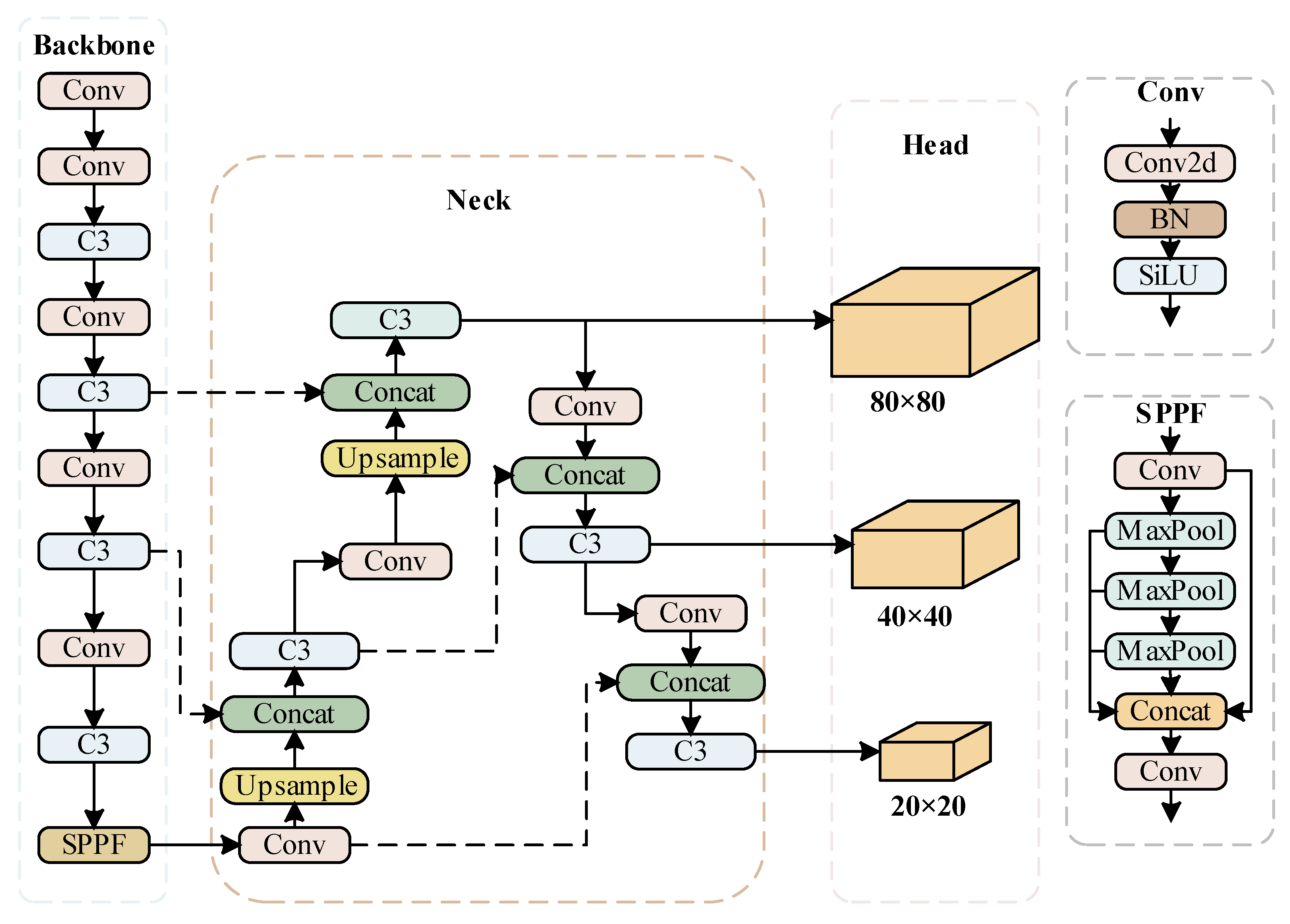

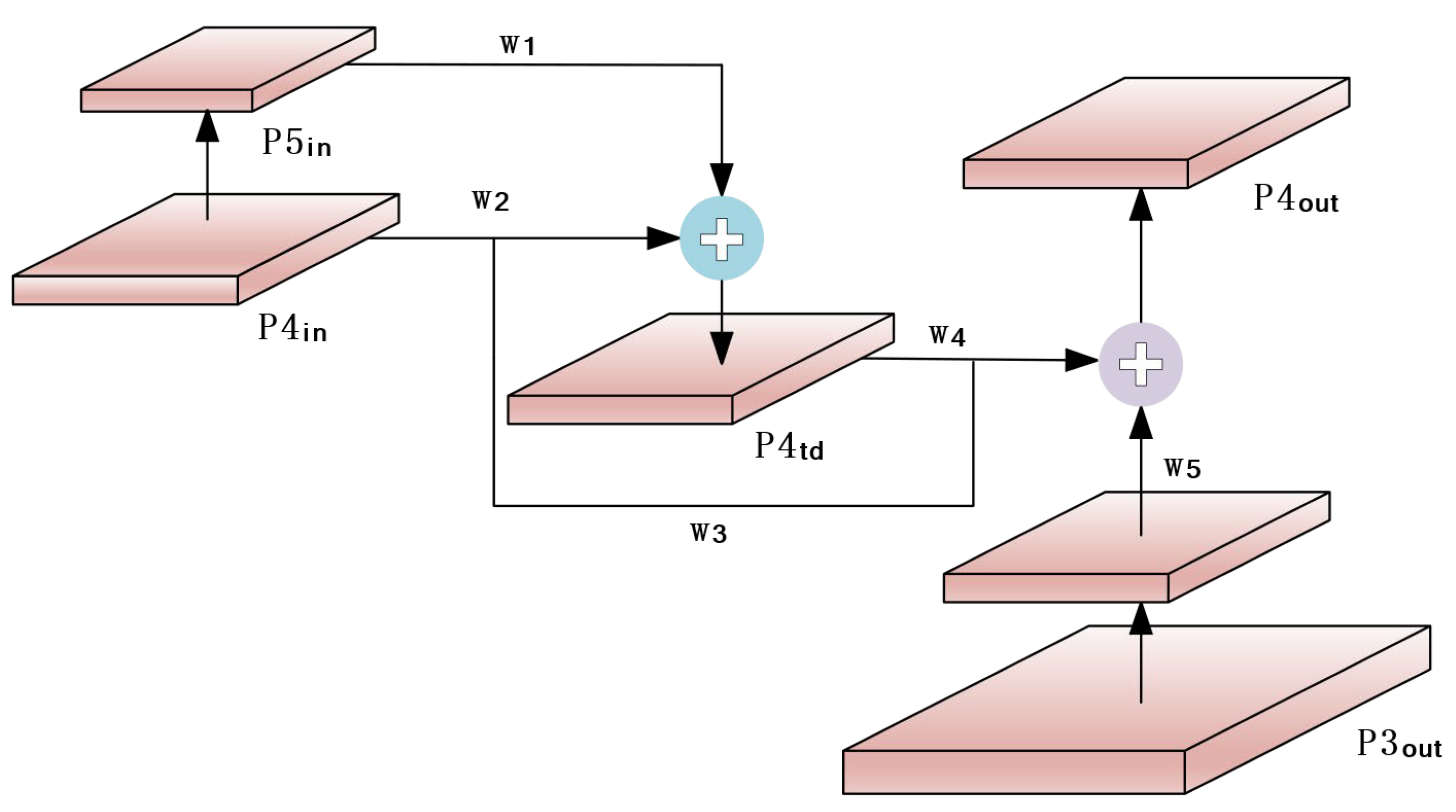

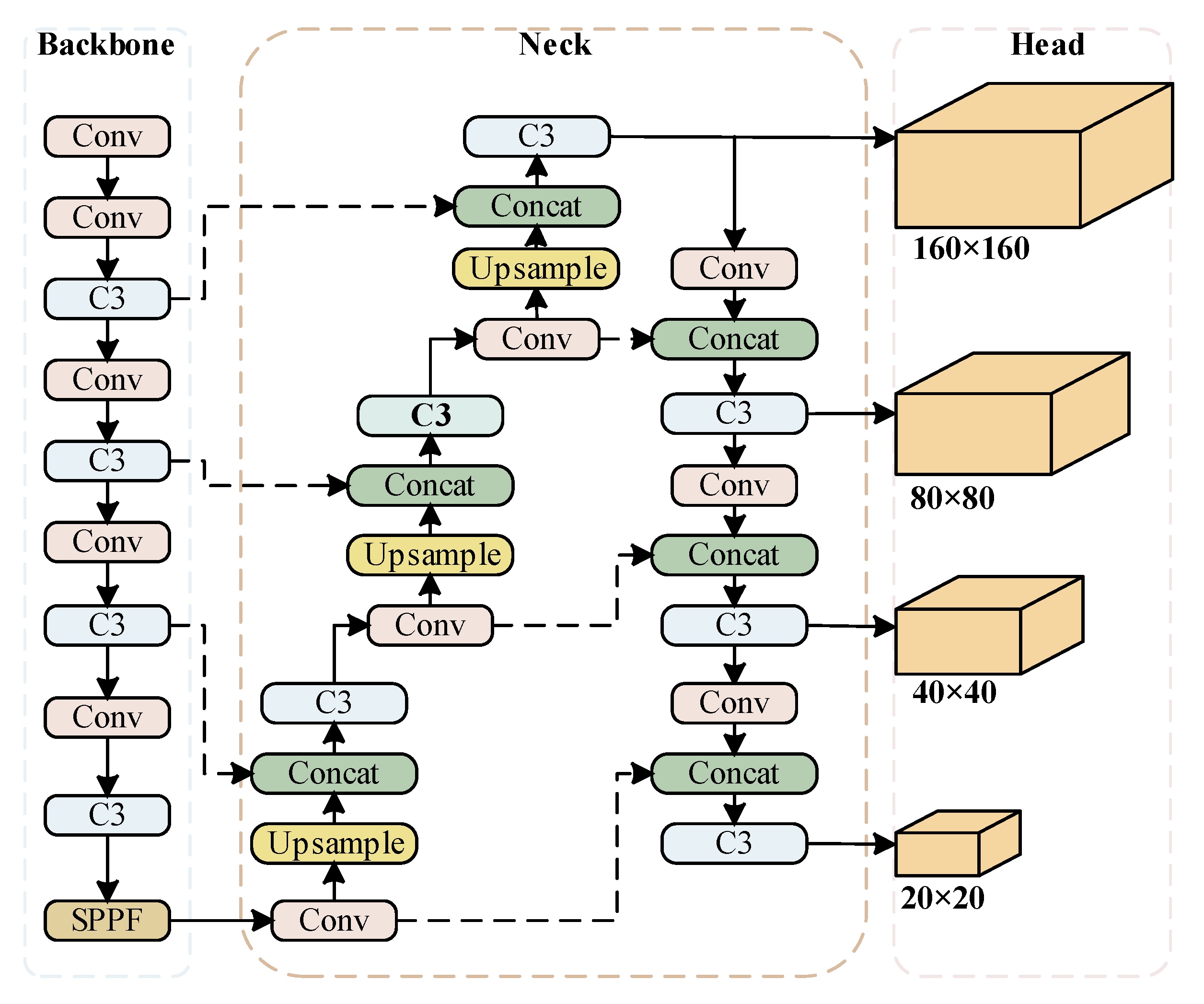
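In the notation of the original CBAM paper [30], the two-step refinement described above is commonly written as $F' = M_c(F) \otimes F$ followed by $F'' = M_s(F') \otimes F'$. The following is a minimal pure-Python sketch of that flow, not the authors' implementation. The function names, the nested-list layout (channels × height × width), and the weights `w1`, `w2`, and `kernel` are assumptions introduced for illustration.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def shared_mlp(v, w1, w2):
    """Two-layer MLP with ReLU; w1 is (hidden x C), w2 is (C x hidden). Hypothetical weights."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, v))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]

def channel_attention(feat, w1, w2):
    """M_c(F): one weight per channel from global average- and max-pooled descriptors."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feat]
    mx = [max(map(max, ch)) for ch in feat]
    a, m = shared_mlp(avg, w1, w2), shared_mlp(mx, w1, w2)
    return [sigmoid(x + y) for x, y in zip(a, m)]  # add the two branches, then sigmoid

def spatial_attention(feat, kernel):
    """M_s(F'): one weight per location; kernel has shape 2 x k x k (k odd), zero padding."""
    c, h, w = len(feat), len(feat[0]), len(feat[0][0])
    avg = [[sum(feat[k][i][j] for k in range(c)) / c for j in range(w)] for i in range(h)]
    mx = [[max(feat[k][i][j] for k in range(c)) for j in range(w)] for i in range(h)]
    pad = len(kernel[0]) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for m, plane in enumerate((avg, mx)):  # the two pooled maps act as input channels
                for di, krow in enumerate(kernel[m]):
                    for dj, kv in enumerate(krow):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < h and 0 <= x < w:
                            s += kv * plane[y][x]
            out[i][j] = sigmoid(s)
    return out

def cbam(feat, w1, w2, kernel):
    """Channel attention first, then spatial attention, each applied by element-wise product."""
    mc = channel_attention(feat, w1, w2)
    f1 = [[[mc[k] * v for v in row] for row in ch] for k, ch in enumerate(feat)]
    ms = spatial_attention(f1, kernel)
    return [[[ms[i][j] * v for j, v in enumerate(row)] for i, row in enumerate(ch)] for ch in f1]
```

The output has the same shape as the input, which is why the module can be inserted at the end of the backbone without changing the layers that follow it.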

3.2. Bi-FPN Structure

In the feature fusion process, deep and shallow features have different resolutions when they are fused across scales, and this difference directly affects the output. The FPN-PAN structure of YOLOv5 treats input feature maps from different scales equally when fusing them. The Bi-FPN structure instead introduces adaptive weights into the fusion at each scale. These weights are adjusted gradually as training proceeds, so the network learns to distinguish the importance of its input features and to suppress or enhance each input accordingly, balancing the feature information between scales. The weighting formula is shown in Equation (3):

$$O = \sum_{i} \frac{w_i}{\epsilon + \sum_{j} w_j} \cdot I_i \tag{3}$$

where $O$ is the fused output; $w_i$ represents the learnable weights, which are initialized to 1 and updated by the optimizer during training to minimize the loss function; $I_i$ represents the input feature map in the network structure; and $\epsilon$ is a constant set to 0.0001 to keep the weight values stable. The ReLU function keeps each weight non-negative, so the normalized weight coefficients lie between 0 and 1. For a layer in the middle of the network, the fusion is shown in Figure 3.

In Figure 3, “$\oplus$” denotes the add operation, and each path into a fusion node carries its own feature fusion weight: separate weight values are assigned to the two directly connected paths and to the cross-scale path. Finally, the process and output of feature fusion can be expressed as Equations (4) and (5), which follow from Equation (3).
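The weighted fusion of Equation (3) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions rather than the authors' implementation: the function name `fuse` and the sample inputs are hypothetical, feature maps are flattened to equal-length lists (so any resizing is assumed to have happened already), and the convolution that normally follows a Bi-FPN fusion node is omitted.

```python
EPS = 1e-4  # the constant 0.0001 given in the text

def fuse(features, weights, eps=EPS):
    """Weighted fusion: sum_i relu(w_i) / (eps + sum_j relu(w_j)) * I_i."""
    w = [max(0.0, wi) for wi in weights]  # ReLU keeps every weight non-negative
    total = eps + sum(w)
    size = len(features[0])
    return [sum(wi * f[k] for wi, f in zip(w, features)) / total for k in range(size)]

# Hypothetical three-input node: two directly connected paths and one cross-scale path.
p_direct_a, p_direct_b, p_cross = [1.0, 2.0], [3.0, 6.0], [5.0, 1.0]
fused = fuse([p_direct_a, p_direct_b, p_cross], [1.0, 1.0, 1.0])
```

With all weights at their initial value of 1, the node returns values very close to the plain average of its inputs; training then shifts the balance between the paths.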

To investigate the feature fusion effect of the Bi-FPN structure and whether the CBAM attention mechanism works, three sets of experiments were designed. All three used the same training settings: a batch size of 16, 100 epochs, an initial learning rate of 0.01, and the SGD algorithm. The experimental data are shown in Table 1.

After the network structure was changed, the number of network parameters and the FLOPs increased by 15.5% and 6.1%, respectively; mAP@0.5 increased by 1.8%, and mAP@0.5:0.95 decreased by 2.2%. The experiments show that the CBAM module sends better feature maps to the neck layer, so Bi-FPN can better complete the cross-layer fusion of multi-scale features. The CBAM module is inserted at the last layer of the backbone and at each feature fusion structure in the Bi-FPN structure. The additional modules increase the data read and write operations, which raises the GPU computing cost and slightly reduces detection speed. To meet the needs of high-precision, low-cost industrial tasks, this study goes on to replace standard convolutional kernels with depth-wise separable convolutional kernels, reducing model complexity while improving detection capability relative to the current results.
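The saving offered by depth-wise separable convolution can be estimated by simple parameter counting. The sketch below assumes bias-free layers and uses hypothetical channel counts (256 in, 256 out, 3 × 3 kernel) that are not taken from the paper.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out

def depthwise_separable_params(c_in, c_out, k):
    """Depth-wise k x k convolution followed by a 1 x 1 point-wise convolution."""
    depthwise = k * k * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out  # 1 x 1 convolution that mixes channels
    return depthwise + pointwise

std = standard_conv_params(256, 256, 3)        # 589824
dws = depthwise_separable_params(256, 256, 3)  # 67840
ratio = dws / std                              # equals 1/c_out + 1/k**2
```

For these assumed sizes the separable layer needs roughly 11.5% of the weights of the standard layer, and the ratio depends only on the output width and the kernel size.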

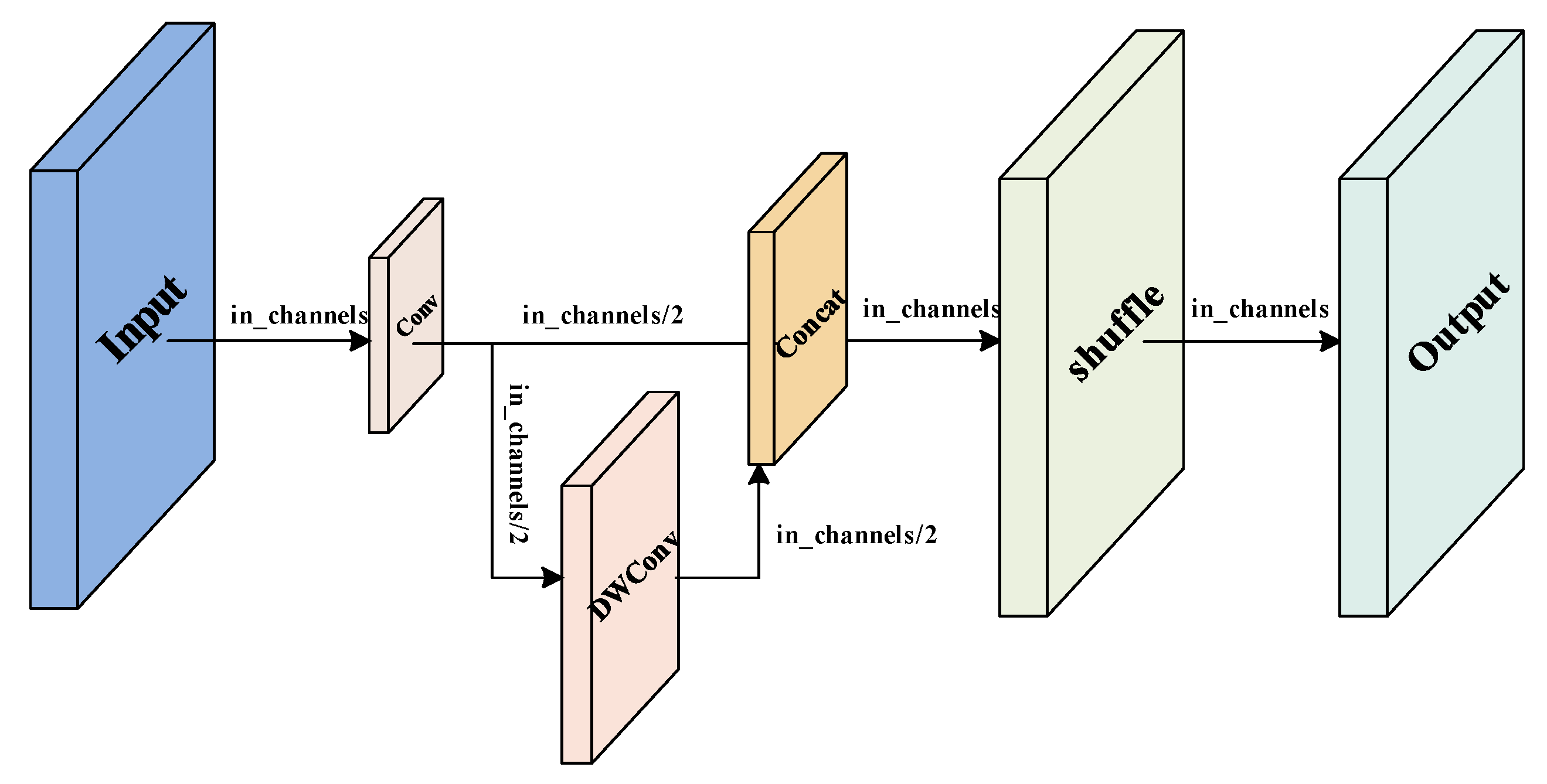

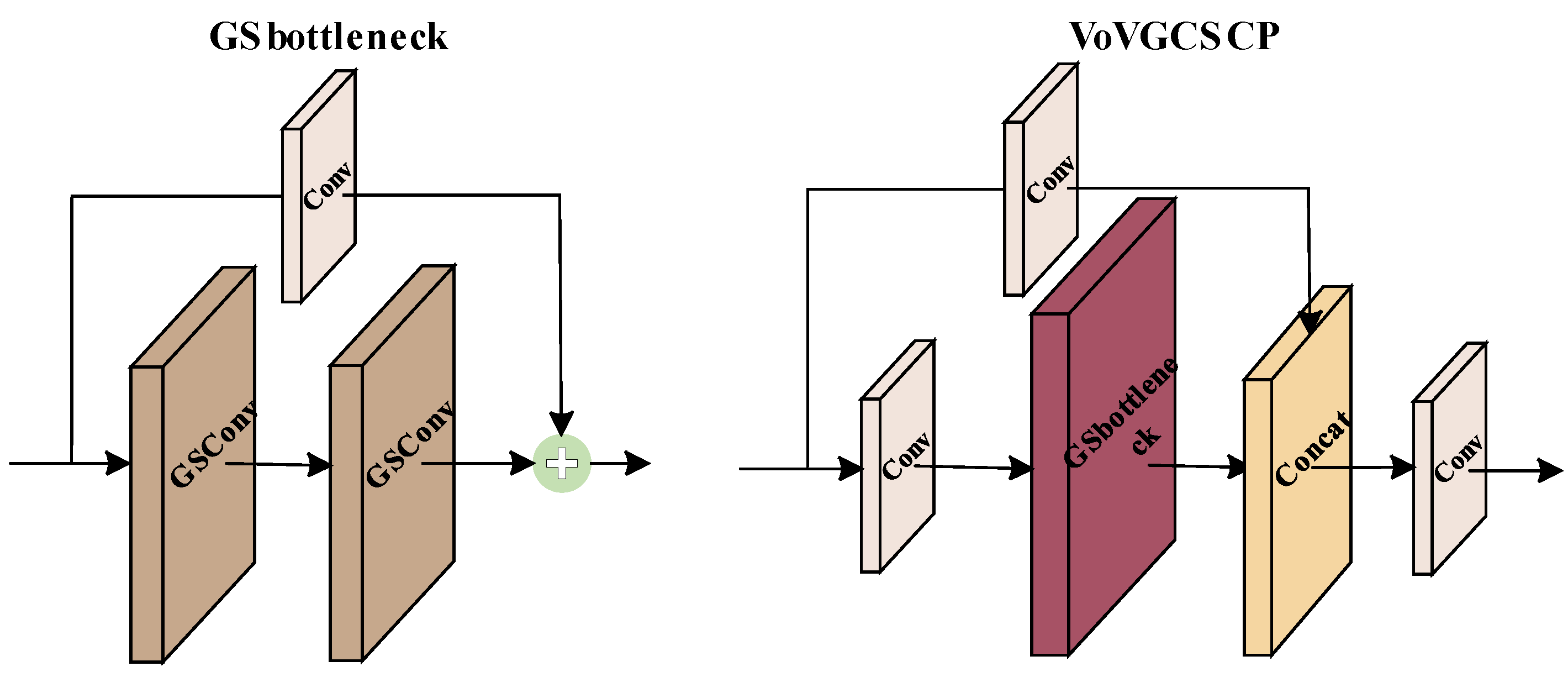

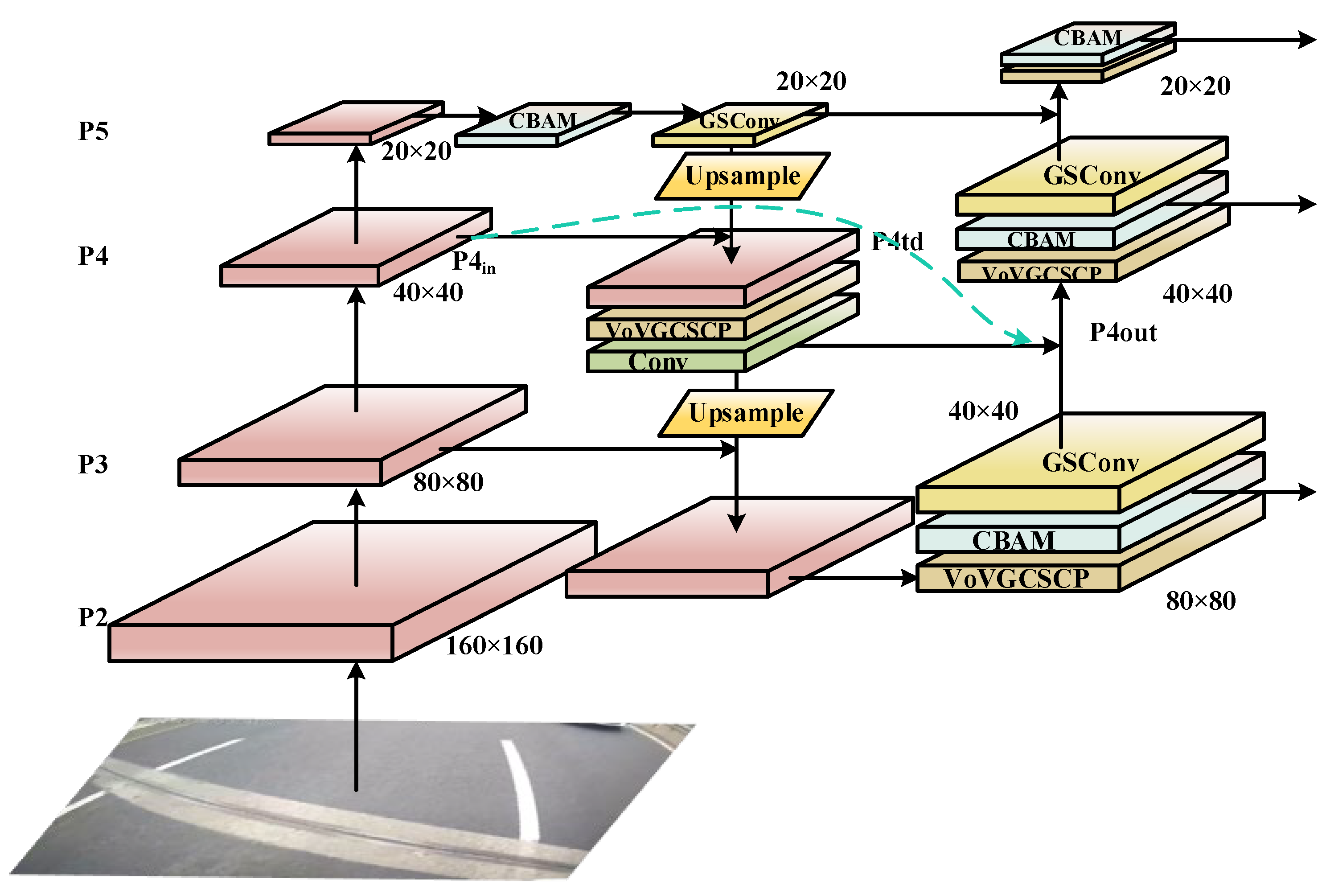

3.3. GS-BiFPN Structure

The GS-BiFPN structure modifies the Bi-FPN structure by replacing the original Conv module with GSConv and the C3 module with VoVGCSCP, which improves the feature fusion effect, speeds up network inference, and effectively reduces network complexity. The GSConv module is composed of a standard convolutional kernel, a depth-wise separable convolutional (DWConv) module, and a shuffle module [31]. The traditional DWConv module convolves each channel separately, which significantly reduces the computational effort and the number of parameters but also loses the feature information shared across channels at the same spatial location, reducing the ability to extract features. To make up for this defect, the GSConv module combines the feature maps of the standard convolutional block and the DWConv module through the Concat operation and applies a shuffle strategy to the fused feature maps. The shuffle strategy mixes the feature information from the DWConv module and the standard convolution module evenly and exchanges feature information locally, so that the final feature map is as close as possible to the result of standard convolution. This reduces the number of parameters and FLOPs of the model while maintaining accuracy. In addition, the VoVGCSCP module was designed based on the GSConv module, which reduces the complexity of the network. The structure of GSConv is shown in Figure 4, and the VoVGCSCP module is shown in Figure 5.
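The Concat-then-shuffle step can be illustrated on channel lists. The sketch below assumes a ShuffleNet-style two-group shuffle (reshape to groups, transpose, flatten); the exact shuffle used in [31] may differ, and the function names are hypothetical. Each list element stands for one channel's feature map.

```python
def channel_shuffle(channels, groups=2):
    """Interleave channels across groups: reshape (groups, n) -> transpose -> flatten."""
    n = len(channels) // groups
    return [channels[g * n + i] for i in range(n) for g in range(groups)]

def gsconv_mix(standard_out, depthwise_out):
    """Concatenate the two branches along the channel axis, then shuffle them."""
    return channel_shuffle(standard_out + depthwise_out, groups=2)

mixed = gsconv_mix(["s0", "s1"], ["d0", "d1"])
```

After the shuffle, channels from the standard branch and the depth-wise branch alternate, so any later layer that reads a contiguous block of channels receives information from both branches.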

To verify the feature extraction ability of GSConv and how well it reduces the parameter count of the model, this study compared three configurations: GSConv added to both the backbone and the neck, GSConv added only to the neck layer, and the original YOLOv5 model. In these three experiments, the input image size was set to 640 × 640, the batch size to 16, the number of epochs to 100, and the initial learning rate to 0.01, and the SGD algorithm was adopted.

Comparing the three sets of experimental data shows that the feature extraction ability of GSConv is indeed inferior to that of the standard convolution kernel, and mAP@0.5:0.95 drops noticeably when GSConv is applied to the backbone network. However, once the backbone has extracted features effectively with standard convolution, the feature map entering the neck layer has its minimum size and its maximum number of channels. At this point, depth-wise separable convolution loses the least information during feature extraction and therefore extracts features more effectively. The improvement in mAP@0.5 and mAP@0.5:0.95 shows that the GSConv module effectively exchanges local feature information in the neck layer.
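The difference between the two metrics can be made concrete. mAP@0.5 counts a prediction as correct when its IoU with the ground truth is at least 0.5, whereas mAP@0.5:0.95 averages over ten IoU thresholds from 0.5 to 0.95 in steps of 0.05, so it rewards tighter localization. The sketch below is illustrative only; the helper names and the example boxes are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]  # 0.50, 0.55, ..., 0.95

def matched_thresholds(pred, truth):
    """IoU thresholds at which this prediction would count as a true positive."""
    overlap = iou(pred, truth)
    return [t for t in THRESHOLDS if overlap >= t]

# A box that covers most of the target passes the loose thresholds but not the strict ones.
hits = matched_thresholds((0, 0, 10, 10), (0, 0, 10, 8.2))
```

A model can therefore gain on mAP@0.5 while losing on mAP@0.5:0.95 if its boxes find the cracks but fit them less tightly.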

The number of parameters did not change significantly between group 1 and group 3 because the neck layer is much narrower than the backbone network.

To further improve the detection ability of the network, this study compared the feature extraction ability of the standard convolution module and the GSConv module in the neck layer. Group 1, which uses GSConv only in the neck layer, and group 4, which uses GSConv throughout the network, were included for comparison. In groups 2 and 3, the standard convolution module replaces the GSConv module as the convolution module that feeds the small and medium object detection heads, respectively. The same training settings were used in all four sets of experiments: a batch size of 16, 100 epochs, an initial learning rate of 0.01, and the SGD algorithm.

In the neck layer, the feature map feeding the medium object detection head is smaller and has more channels than the one feeding the small object detection head. Comparing the experimental data of groups 1 and 3 shows that the GSConv module is comparable to the standard convolution module in feature extraction ability in the deep layers of the network. Comparing groups 1 and 2 shows that, although standard convolution does not extract features as softly as GSConv in deep layers, using standard convolution early provides the later layers with high-quality feature maps with more distinct features and improves the localization ability of the network at a high threshold. The results of the four groups of experiments are broadly in line with the conclusions in Table 2. Considering these results together, this study uses group 2 as the improved network. In terms of detection speed, although GSConv does reduce the complexity of the model, modules such as depth-wise separable convolution add data processing steps, which directly reduces detection speed. However, the FPS is still adequate for real-time detection tasks. In this study, the CBAM attention module and the improved GS-BiFPN feature fusion structure are introduced into the network to improve the low accuracy of the model. The improved GS-BiFPN structure is shown in Figure 6, where the pink square is the feature map after feature extraction.

The Bi-FPN provides a feasible approach for this study, but for the objective of this study the performance of the original Bi-FPN structure is not good enough, and its number of parameters and amount of computation do not meet the expected requirements. Therefore, while retaining the idea of cross-scale connection, we modified the Bi-FPN structure to ease the excessive resource consumption of the feature fusion structure, and we adopted the GSConv structure to make the feature fusion process softer. This avoids the overly aggressive convolution operations of the original Bi-FPN structure, which lose target feature information and make the network focus too much on background information. Finally, according to Table 1 and Table 3, the number of network parameters and the GFLOPs decreased by 4.7% and 11%, respectively, while mAP@0.5 and mAP@0.5:0.95 increased by 1.7% and 2.3%, respectively.