1. Introduction

Image stitching remains an active research topic and is widely used in computer vision, unmanned aerial vehicle (UAV) reconnaissance and other fields [1,2]. Image stitching consists of synthesizing different types of images, captured by different imaging devices at different shooting positions, into a single image with a larger field of view [3,4]. On the one hand, image stitching can synthesize panoramic and ultra-wide-view images, so that ordinary cameras can capture large scenes. On the other hand, image stitching can synthesize fragmented images into a complete image; for example, it can combine medical images, scientific microscope fragments or local images from seabed exploration. In addition, image mosaicking is a basic technology in scene rendering methods, which use panoramic images instead of 3D scenes for modeling and drawing. However, image stitching still faces many challenges, such as parallax caused by viewpoint change, ghosting, distortion, detail distortion and image unevenness [5,6,7].

Many image stitching methods have been proposed by researchers. Li et al. [8] proposed a model of appearance and motion variation based on the traditional speeded up robust features (SURF) algorithm, whose key steps are Hessian matrix construction, approximation of the Hessian matrix determinant and determination of feature points by non-maximum suppression. To improve matching accuracy and robustness, Liu et al. [9] introduced an improved random sample consensus (RANSAC) feature matching method based on SURF. First, the SURF method is used to detect and extract image features, and a matcher based on the Fast Library for Approximate Nearest Neighbors (FLANN) performs the initial matching of the feature points. The RANSAC algorithm is then improved to increase the probability of sampling correct matching points. In 2017, Guan et al. [10] presented an interest point detector and binary feature descriptor for spherical images. Inspired by Binary Robust Invariant Scalable Keypoints (BRISK), they adapted the method to operate on spherical images. All of the processing is intrinsic to the sphere and avoids the distortion inherent in storing and indexing spherical images in a 2D representation. Liu et al. [11] developed the BRISK_D algorithm, which combines the Features from Accelerated Segment Test (FAST) and BRISK methods. The keypoints are detected by the FAST algorithm and their locations are refined in scale and space. The scale factor of each keypoint is computed directly from the depth information of the image. Zhang et al. [12] proposed a screening method based on binary mutual information for the mismatch problem. The feature points extracted by the ORB algorithm are distributed in a color change area. Then, the new feature points are obtained by internal points. In this way, mismatched feature points can be eliminated and the best transformation matrix can be obtained iteratively. In 2020, Ordóñez et al. [13] introduced a registration method for hyperspectral remote sensing images based on maximally stable extremal regions (MSER), which effectively utilizes the information contained in different spectral bands. Elgamal et al. [14] proposed an improved Harris hawks optimization that uses elite opposition-based learning and a new search mechanism. This method avoids falling into local optima, improves calculation accuracy and accelerates convergence. Debnath et al. [15] used minimum eigenvalue (MinEigen) feature extraction, based on the Shi-Tomasi corner detection method for detecting interest points, to identify image forgery.

With the continuous development of machine learning, many scholars have applied it to image stitching. However, learning-based image stitching solutions are rarely studied because labeled data are scarce, which makes supervised methods unreliable. To address this limitation, Nie et al. [16] proposed an unsupervised deep image stitching framework consisting of two stages: unsupervised coarse image alignment and unsupervised image reconstruction. The stitching effect of this method is more obvious for images with few features or low resolution. However, stitching algorithms based on machine learning require extensive training, consume considerable resources and are time consuming.

Alcantarilla et al. [17] introduced the KAZE feature in 2012. Their results revealed that it performs better than other feature-based detection methods in terms of detection and description. Pourfard et al. [18] proposed an improved version of the KAZE algorithm with the SURF descriptor for SAR image registration. Discrete random second-order nonlinear partial differential equations (PDEs) are used to model the edge structure of SAR images. The KAZE algorithm uses nonlinear diffusion filtering to build up the scale levels of the SIFT descriptor. It preserves edges while smoothing the image and reduces speckle noise.

To alleviate the parameter sensitivity of KAZE and improve the stitching effect, we propose an efficient stitching method based on color difference and KAZE with a fast guided filter. The contributions of this paper are as follows. Firstly, we introduce a fast guided filter into the KAZE algorithm to reduce mismatched information and improve the matching efficiency. Secondly, we use the improved RANSAC algorithm to increase the probability of sampling correct matching points and to eliminate wrong matching point pairs. Thirdly, we introduce color difference and brightness difference to compensate the whole image when fusing and stitching images. This compensation not only eliminates seams effectively, but also yields more uniform color and luminance.

The remainder of the paper is organized as follows. Section 2 briefly describes the fast guided filter and the Additive Operator Splitting (AOS) algorithm. Section 3 details the proposed method. Section 4 presents the experimental results and assessments. Finally, the conclusions and outstanding issues are listed in Section 5.

3. Methods

This section presents our proposed stitching method in full. To address inaccurate matching and obvious seams in stitched images, we propose an effective stitching method based on color difference and an improved KAZE with a fast guided filter.

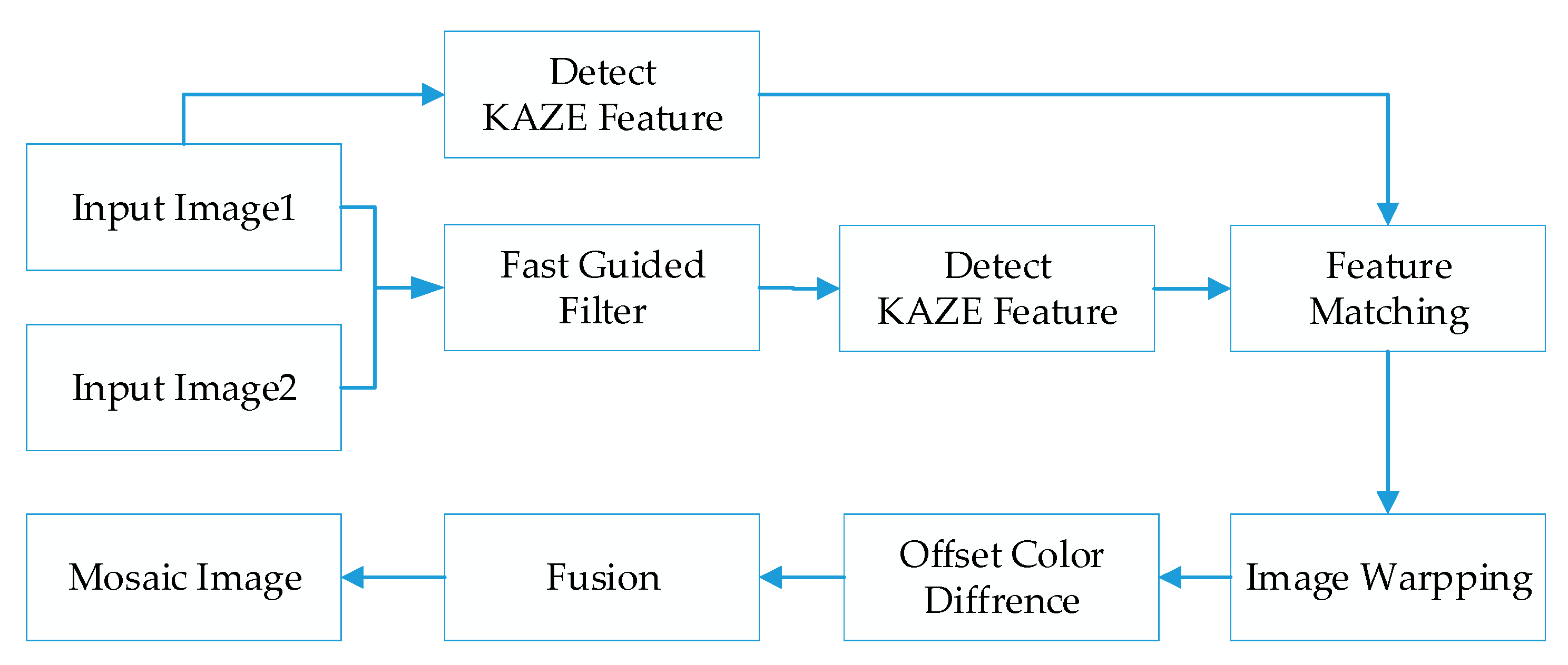

Figure 1 describes the overall process of the proposed method. Because the feature points detected by KAZE are usually numerous and dense, we introduce a fast guided filter, which uses one image as a guide map to filter the other image. This step reduces the number of mismatched point pairs while preserving the original visual features. During feature matching, the KAZE algorithm based on RANSAC is used to match the feature points, which further eliminates mismatched points. Since the color and luminance of the images may differ, clear seams can appear in the stitching result. To improve the splicing effect, we introduce a compensation method based on color and brightness that calculates the color difference in the overlapping area. Then, the obtained local color and luminance compensation values are applied to the whole image to be spliced. In addition, our proposed method can stitch multiple images. To avoid excessive variation, we use the middle image as the reference image when warping the images.

3.1. Fast Guided Filtering

The first image is imported as a guide map to repair the texture information in the other image. The purpose of introducing fast guided filtering is to reduce the mismatch rate of the KAZE algorithm. Here, we select a window size of 8 pixels and set the regularization parameter that controls the smoothness level to 0.01. The subsampling ratio of the fast guided filter is set to 4.

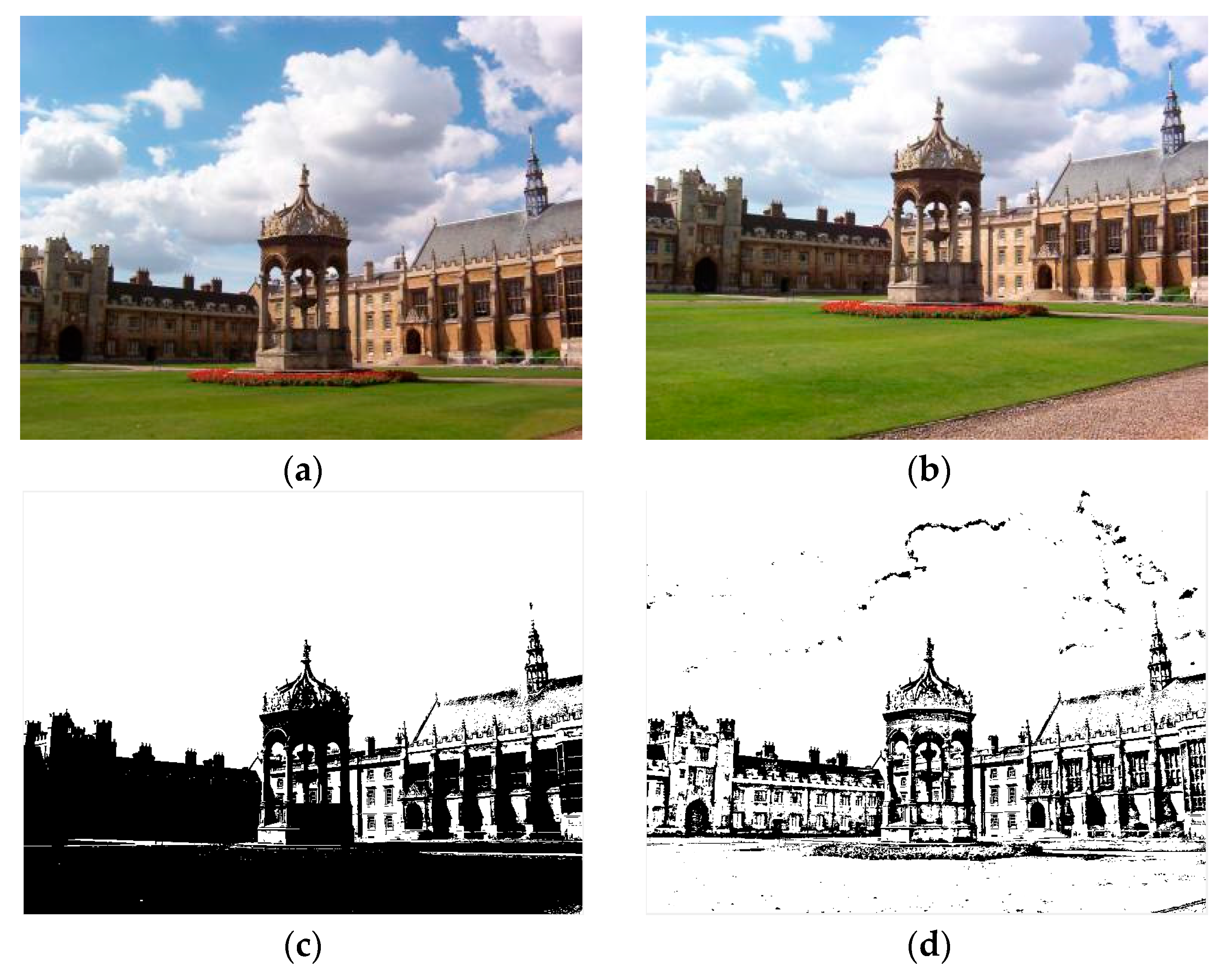

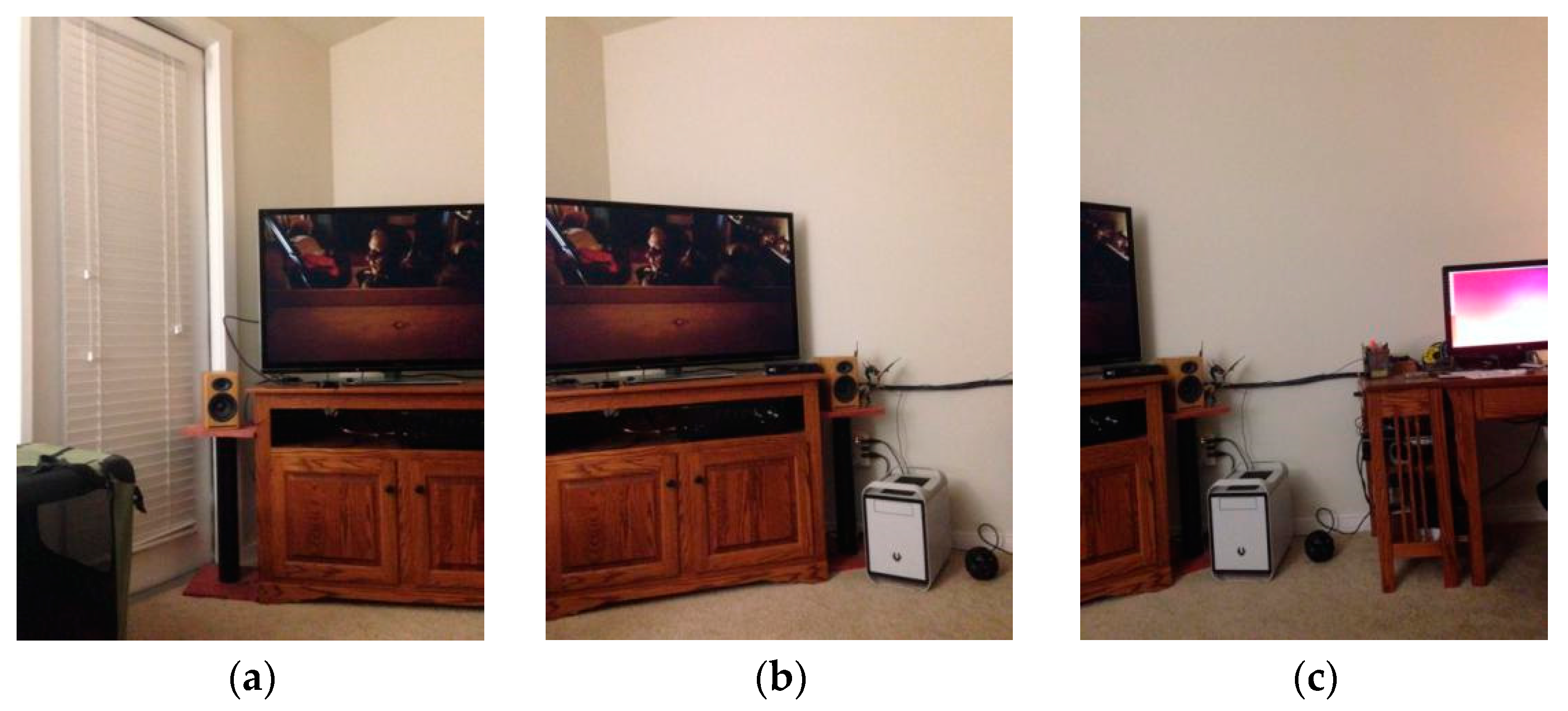

Figure 2 shows the results of fast guided filtering. Figure 2a,b contains the input images, where (a) is the original image and (b) is the guide image. The fast guided filter removes redundant information by analyzing the textures and spatial features common to the original image and the guide image. It then restores the boundaries while smoothing the original image under the guidance of the guide map. This greatly reduces the number of feature matching pairs, which results in a lower mismatch rate. After guided filtering, much of the invalid information in the image is filtered out, which substantially improves the iterative efficiency during matching.
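To make the role of the three parameters concrete, the following is a minimal sketch of a fast guided filter on a 1D signal, written in pure Python for brevity. It is not the implementation used in this paper: the 1D setting, the function names (`box_mean`, `upsample`, `fast_guided_filter_1d`) and the reading of the 8-pixel window as a radius are assumptions made for illustration. The linear coefficients are estimated on a subsampled copy of the signals and then interpolated back to full resolution, which is what makes the filter fast.

```python
def box_mean(x, r):
    """Mean of x over a window of radius r, truncated at the borders."""
    n = len(x)
    out = []
    for i in range(n):
        lo, hi = max(0, i - r), min(n, i + r + 1)
        out.append(sum(x[lo:hi]) / (hi - lo))
    return out


def upsample(x, n, s):
    """Linearly interpolate samples taken at positions 0, s, 2s, ... to length n."""
    out = []
    for i in range(n):
        pos = i / s
        j = min(int(pos), len(x) - 1)
        k = min(j + 1, len(x) - 1)
        f = pos - j
        out.append((1 - f) * x[j] + f * x[k])
    return out


def fast_guided_filter_1d(guide, src, radius=8, eps=0.01, s=4):
    """Filter src under the guidance of guide (assumed parameters: radius, eps, s)."""
    g, p = guide[::s], src[::s]          # subsample both signals by the ratio s
    r = max(1, radius // s)              # shrink the window accordingly
    mean_g, mean_p = box_mean(g, r), box_mean(p, r)
    corr_gp = box_mean([gi * pi for gi, pi in zip(g, p)], r)
    corr_gg = box_mean([gi * gi for gi in g], r)
    # Local linear model q = a * guide + b, regularized by eps.
    a = [(cgp - mg * mp) / (cgg - mg * mg + eps)
         for cgp, cgg, mg, mp in zip(corr_gp, corr_gg, mean_g, mean_p)]
    b = [mp - ai * mg for ai, mg, mp in zip(a, mean_g, mean_p)]
    # Average the coefficients, then bring them back to full resolution.
    mean_a = upsample(box_mean(a, r), len(guide), s)
    mean_b = upsample(box_mean(b, r), len(guide), s)
    return [ma * gi + mb for ma, mb, gi in zip(mean_a, mean_b, guide)]


step = [0.0] * 16 + [1.0] * 16
filtered = fast_guided_filter_1d(step, step)
print([round(v, 3) for v in filtered])
```

A larger `eps` smooths more strongly, while a larger `s` trades accuracy of the coefficients for speed.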

3.2. KAZE Algorithm Based on RANSAC

Both the SIFT algorithm and the SURF algorithm detect feature points in a linear scale space, which can cause blurred boundaries and loss of detail. The KAZE algorithm instead constructs a nonlinear scale space and detects feature points in it, which retains more image detail. In the KAZE algorithm, AOS techniques and variable conductance diffusion are used to establish a nonlinear scale space for the input images. Then, the 2D features of interest are detected as the maxima of the scale-normalized determinant of the Hessian response through the nonlinear scale space. Finally, scale- and rotation-invariant descriptors based on first-order image derivatives are obtained by calculating the principal direction of each key point.

We introduce the RANSAC algorithm on the basis of the traditional KAZE algorithm, which can further eliminate mismatched point pairs. The flow of the KAZE algorithm based on RANSAC is shown in Figure 3.

3.2.1. Construction of Nonlinear Scale Space

The KAZE algorithm constructs a nonlinear scale space through nonlinear diffusion filtering and the AOS algorithm [26]. The scale of KAZE features increases logarithmically. Each level of the KAZE algorithm adopts the same resolution as the original image. According to the principle of the difference of Gaussian (DoG) pyramid model [27], each group needs $S+3$ layers of images to detect the extreme points of $S$ scales. Then, the parameter of scale space, $\sigma$, can be described as follows:

$$\sigma(o, s) = \sigma_0 \, 2^{\,o + s/S} \tag{10}$$

where $\sigma_0$ is the baseline scale, $o$ is the index of the group octave and $s$ represents the index of the intra-group layer. The scale parameters of key points are calculated according to the group of key points and the number of layers in the group in combination with Equation (10).

The scale parameters of each layer in the group for constructing the Gaussian pyramid are calculated according to the following formulas:

From the above formulas, the scale parameters of the same layer in different groups are the same. The scale calculation formula of a layer of images in the group is shown in Equation (13).

To ensure the continuity of the scale space, the first image of a group is obtained by sampling the penultimate layer of the previous group. We assume that the initial scale of the first group is $\sigma_0$. Then, the scale parameters of each layer in the first group are $\sigma_0$, $2^{1/S}\sigma_0$, $2^{2/S}\sigma_0$ and so on. The scale of the penultimate layer can be defined as follows:

Since the nonlinear diffusion filter model is based on time, it is necessary to convert the scale parameters into evolution time. We suppose that the standard deviation used in Gaussian scale space is $\sigma$. Then, the convolution with the Gaussian is equivalent to filtering the image for a duration of $t = \sigma^2/2$. Given a set of evolution times, all images in the nonlinear scale space can be obtained by the AOS algorithm as follows:
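As an illustration of the scale-to-time conversion, the sketch below enumerates the scale levels $\sigma_0 2^{o+s/S}$ and the corresponding evolution times $t = \sigma^2/2$. The default values ($\sigma_0 = 1.6$, four octaves, four sub-levels) and the function name `kaze_scale_levels` are assumptions chosen for the example, not settings reported in this paper.

```python
def kaze_scale_levels(sigma0=1.6, octaves=4, sublevels=4):
    """Return (octave, sublevel, sigma, evolution_time) for every scale level."""
    levels = []
    for o in range(octaves):
        for s in range(sublevels):
            sigma = sigma0 * 2.0 ** (o + s / sublevels)
            # Gaussian smoothing with std sigma corresponds to diffusion time sigma^2 / 2.
            t = 0.5 * sigma ** 2
            levels.append((o, s, sigma, t))
    return levels


levels = kaze_scale_levels()
for o, s, sigma, t in levels:
    print(f"octave={o} sublevel={s} sigma={sigma:.3f} t={t:.3f}")
```

The differences between consecutive evolution times are the step sizes that an AOS scheme would integrate over to move from one level to the next.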

3.2.2. Detection and Location of Feature Points

The feature points are obtained by seeking the local maxima of the scale-normalized determinant of the Hessian. For multi-scale feature detection, we normalize the set of differential operators for scale:

$$L_{\mathrm{Hessian}} = \sigma^2 \left( L_{xx} L_{yy} - L_{xy}^2 \right)$$

where $L_{xx}$ and $L_{yy}$ are the second-order horizontal derivative and vertical derivative, respectively, and $L_{xy}$ expresses the second-order cross derivative. For the filtered image set in a nonlinear scale space, we analyze the response of the detector at different scale levels $\sigma_i$. The maximum values of scale and spatial position are searched in all filtered images except $i = 0$ and $i = N$. Then, we check the response in a $3 \times 3$ pixels window to quickly find the maximum value.

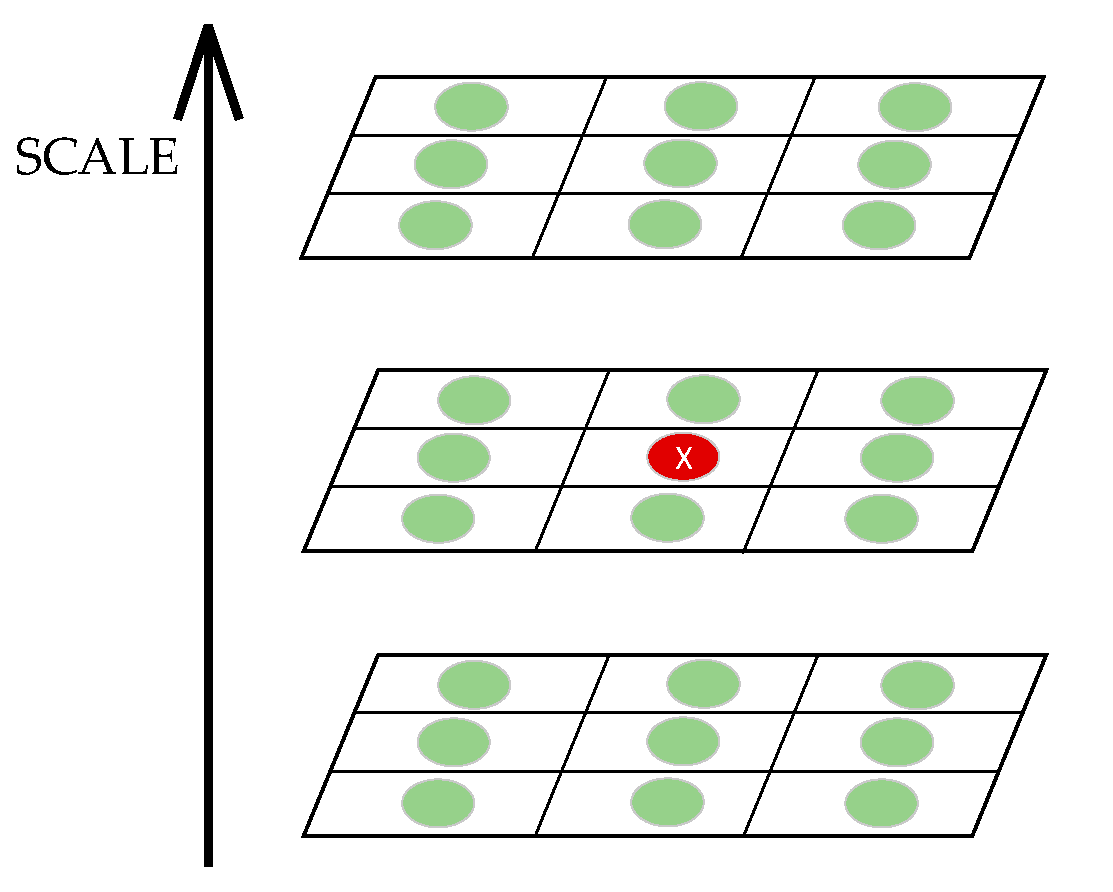

As shown in Figure 4, each feature point is compared with 26 pixel points from the same layer and two adjacent layers. All pixels are traversed in this way until the maximum point is found. The derivative is calculated by the Taylor expression.

We take the derivative of the above equation and make it equal to zero. Then, the solution can be obtained by the following.

One of the feature points that meets is selected as the key point.
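The detection step of this subsection can be sketched as follows: a scale-normalized Hessian determinant is computed from the second-order derivatives, and a candidate is accepted only if its response exceeds those of its 26 neighbours in the same layer and the two adjacent layers. The nested-list layout `stack[k][y][x]` and the function names are assumptions for illustration; sub-pixel refinement by the Taylor expansion is not included.

```python
def hessian_response(lxx, lyy, lxy, sigma):
    """Scale-normalized determinant of the Hessian at one pixel."""
    return sigma ** 2 * (lxx * lyy - lxy ** 2)


def is_local_maximum(stack, k, y, x):
    """True if stack[k][y][x] is strictly larger than its 26 neighbours.

    stack[k][y][x] holds the detector response at scale level k; the caller
    must keep k, y and x away from the borders of the stack.
    """
    centre = stack[k][y][x]
    for dk in (-1, 0, 1):
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                if dk == 0 and dy == 0 and dx == 0:
                    continue  # skip the candidate itself
                if stack[k + dk][y + dy][x + dx] >= centre:
                    return False
    return True


# Three 3x3 response layers with a single peak in the middle layer.
stack = [[[0.0] * 3 for _ in range(3)] for _ in range(3)]
stack[1][1][1] = hessian_response(2.0, 3.0, 1.0, 2.0)
print(is_local_maximum(stack, 1, 1, 1))
```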

3.2.3. Determination of Main Direction

Assuming that the scale parameter of the feature point is $\sigma_i$, the search radius is set to $6\sigma_i$. We divide the circular area of this radius into six 60-degree sectors and sum the Haar wavelet features in each sector. The direction with the largest sum of wavelet features is the main direction.
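A minimal sketch of this sector vote is given below. It assumes that the responses inside the search radius have already been collected as `(dx, dy)` pairs; the function name `main_direction` and the choice of returning the angle of the summed response vector of the winning sector are illustrative assumptions.

```python
import math


def main_direction(responses):
    """Return the orientation (radians in [0, 2*pi)) of the strongest 60-degree sector.

    responses: iterable of (dx, dy) horizontal and vertical responses.
    """
    sums = [[0.0, 0.0] for _ in range(6)]
    for dx, dy in responses:
        angle = math.atan2(dy, dx) % (2 * math.pi)
        idx = min(int(angle // (math.pi / 3)), 5)  # guard against rounding at 2*pi
        sums[idx][0] += dx
        sums[idx][1] += dy
    best = max(sums, key=lambda v: v[0] ** 2 + v[1] ** 2)
    return math.atan2(best[1], best[0]) % (2 * math.pi)


samples = [(1.0, 0.5), (2.0, 1.0), (-0.1, 0.3)]
print(math.degrees(main_direction(samples)))
```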

3.2.4. Generation of Feature Descriptors

For feature points whose scale parameter is $\sigma_i$, we take a window of $24\sigma_i \times 24\sigma_i$ in the gradient image centered on the feature point. Then, the window is divided into 4 × 4 sub-regions with a size of $9\sigma_i \times 9\sigma_i$. Adjacent sub-regions have an overlapping zone with a width of $2\sigma_i$. In the process of calculating the sub-region description vector, a Gaussian kernel with $\sigma_1 = 2.5\sigma_i$ is used to weight each sub-region to obtain the description vector as Equation (20):

$$d_v = \left( \sum L_x, \; \sum L_y, \; \sum |L_x|, \; \sum |L_y| \right) \tag{20}$$

After that, the vector of each sub-region is weighted by another 4 × 4 Gaussian window where $\sigma_2 = 1.5\sigma_i$. Finally, a 64-dimensional description vector is obtained through normalization.

3.2.5. Eliminate Mismatched Point Pairs

In the case of information loss caused by image blur, noise interference and compression reconstruction, the robustness of KAZE feature point detection is significantly better than that of other features. In addition, the nonlinear scale space does not cause boundary blurring and detail loss, unlike the linear scale space. However, the matching of KAZE features is sensitive to parameter settings, which can easily cause mismatches. To address the excessive concentration and mismatch of feature points, the RANSAC method is introduced to eliminate mismatched points. First, KAZE feature matching is performed on the previous input image and the filtered image. Then, the RANSAC algorithm is applied to eliminate the mismatched point pairs.

The RANSAC algorithm is used to find an optimal homography matrix. The standardized matrix satisfies Equation (21).

To calculate the homography matrix, we randomly extract four non-collinear samples from the matching dataset. Then, the resulting model is applied to the whole dataset to calculate the number of interior points and the projection error. The optimal model is the one with the smallest loss function. The loss function can be calculated by Equation (22).

The process of the RANSAC algorithm is shown in Algorithm 1.

Algorithm 1: The process of RANSAC

Input: The set S of feature point pairs of the images to be spliced and the maximum iteration number k.

Output: The set D of feature point pairs with the mismatched point pairs removed.

1: S → D.

2: Repeat:

3: Randomly extract four sample pairs from all detected feature point pairs; the sampled points must not be collinear.

4: Use Equations (21) and (22) to calculate the transformation matrix H, and record it as model M.

5: Calculate the projection error between all data in the dataset and model M. If the error of a point pair is less than the threshold, add the pair to the interior point set I.

6: If the number of elements of the current interior point set I is greater than that of the optimal interior point set I_Best, update I_Best = I and update the number of iterations k at the same time.

7: Until the number of iterations is greater than the iteration number k.
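The loop of Algorithm 1 can be sketched in pure Python as follows. This is a simplified illustration rather than the improved RANSAC of this paper: the iteration count `k` is fixed instead of updated, degenerate samples are simply skipped when the linear system is singular, and the helper names (`solve_linear`, `homography_from_4`, `project`, `ransac`), the threshold and the synthetic data are assumptions.

```python
import math
import random


def solve_linear(a, b):
    """Solve a*x = b by Gauss-Jordan elimination; return None if singular."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(m[r][c]))
        if abs(m[piv][c]) < 1e-12:
            return None
        m[c], m[piv] = m[piv], m[c]
        for r in range(n):
            if r != c:
                f = m[r][c] / m[c][c]
                for j in range(c, n + 1):
                    m[r][j] -= f * m[c][j]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography_from_4(pairs):
    """Homography (9 values, last one fixed to 1) from four point pairs."""
    a, b = [], []
    for (x, y), (u, v) in pairs:
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return None if h is None else h + [1.0]


def project(h, pt):
    """Apply homography h to the point pt."""
    x, y = pt
    w = h[6] * x + h[7] * y + h[8]
    if abs(w) < 1e-12:
        return (math.inf, math.inf)
    return ((h[0] * x + h[1] * y + h[2]) / w, (h[3] * x + h[4] * y + h[5]) / w)


def ransac(pairs, k=200, threshold=2.0, seed=0):
    """Return the largest set of pairs consistent with one homography."""
    rng = random.Random(seed)
    best = []
    for _ in range(k):
        h = homography_from_4(rng.sample(pairs, 4))
        if h is None:  # degenerate sample, e.g. collinear points
            continue
        inliers = []
        for src, dst in pairs:
            px, py = project(h, src)
            if math.hypot(px - dst[0], py - dst[1]) < threshold:
                inliers.append((src, dst))
        if len(inliers) > len(best):
            best = inliers
    return best


# Synthetic example: eight pairs related by a pure translation plus two mismatches.
src = [(0, 0), (100, 0), (0, 100), (100, 100), (50, 20), (20, 70), (80, 45), (35, 30)]
pairs = [(p, (p[0] + 10, p[1] + 5)) for p in src]
pairs += [((15, 40), (300, -50)), ((60, 90), (0, 0))]
kept = ransac(pairs)
print(len(kept), "of", len(pairs), "pairs kept")
```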

The iteration number k is constantly updated rather than fixed, as long as it does not exceed the maximum iteration number. The iteration number is calculated as follows:

$$k = \frac{\log(1 - p)}{\log(1 - w^{m})}$$

where $p$ expresses the confidence level, $w$ is the proportion of interior points and $m$ is the minimum number of samples required to calculate the model.
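The adaptive iteration count can be illustrated with the standard RANSAC bound $k = \log(1-p)/\log(1-w^m)$, capped by the maximum iteration number. The function name `ransac_iterations` and the handling of the edge cases $w \le 0$ and $w \ge 1$ are assumptions of this sketch.

```python
import math


def ransac_iterations(p, w, m, k_max):
    """Iterations needed to draw one all-inlier sample with confidence p.

    p: confidence level, w: inlier ratio, m: minimal sample size,
    k_max: upper bound on the number of iterations.
    """
    if w <= 0.0:
        return k_max          # no inliers observed yet: use the upper bound
    if w >= 1.0:
        return 1              # every pair is an inlier: one sample suffices
    k = math.log(1.0 - p) / math.log(1.0 - w ** m)
    return min(k_max, math.ceil(k))


# With half of the pairs being inliers and four pairs per homography sample:
print(ransac_iterations(p=0.99, w=0.5, m=4, k_max=2000))
```

As the interior point set grows during the iterations, `w` increases and the required number of iterations drops, which is why updating k can stop the loop early.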

The RANSAC algorithm performs precise matching of feature points through the above iterative process, which eliminates mismatched point pairs. The interior point pairs obtained in this way are the matches best supported by the estimated model. Matching accuracy is the foundation for accurately estimating the warping model. If there are many mismatched pairs, problems such as ghosting and distortion easily occur in the splicing result.

3.3. Color Difference Compensation

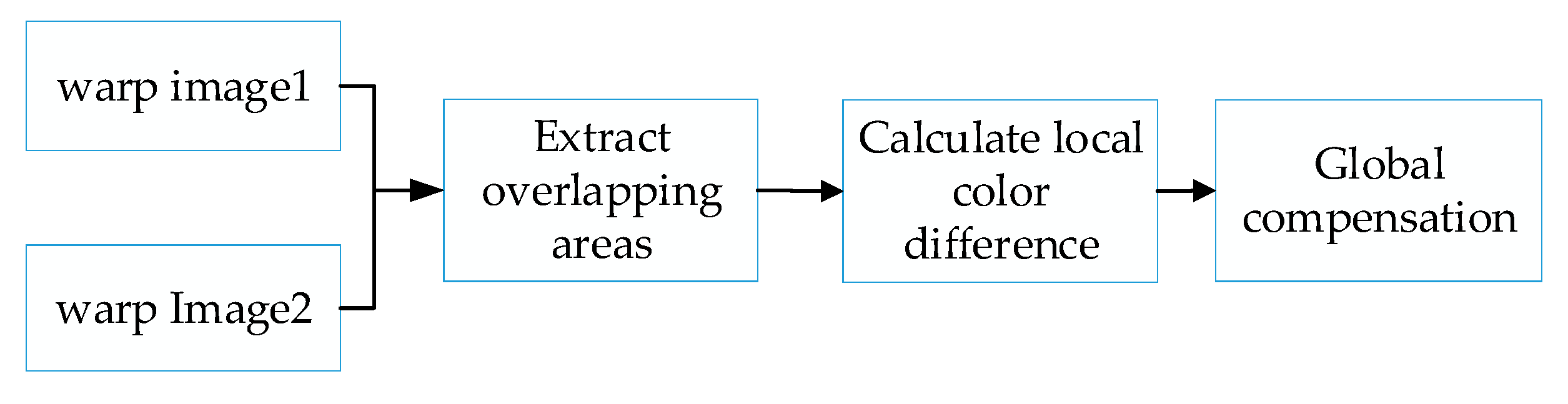

The luminance and color of the images to be stitched may be uneven, which can easily produce obvious seams in the stitching result. To mitigate this problem, we put forward a color difference compensation method. The process is shown in Figure 5.

We calculate the color difference and luminance difference of the overlapping area of the two warped images. Here, we choose the LAB color model. The average color difference is calculated as follows:

where $\Delta L$ represents the average brightness difference, $L_1$ is the brightness of the overlapping area of the first image and $L_2$ is the brightness of the overlapping area of the second image. Similarly, $\Delta a$ and $\Delta b$ are the average color differences, $a_1$ and $b_1$ are the colors of the overlapping area of the first image and $a_2$ and $b_2$ are the colors of the overlapping area of the second image. We choose the image with the higher brightness as the standard and compensate the global brightness and color of the other image. Finally, the compensated warped images are fused to obtain the final mosaic image.
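The compensation step can be sketched as follows, assuming that each image is available as a list of `(L, a, b)` pixel tuples and that the overlapping pixels of both images have already been extracted. The function names and the plain mean-offset model are assumptions for illustration; clipping to the valid LAB range is omitted.

```python
def mean_lab(pixels):
    """Per-channel mean of a list of (L, a, b) pixels."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def compensate(image1, overlap1, image2, overlap2):
    """Shift the darker image so its overlap statistics match the brighter one.

    Returns the two images after compensation (the brighter one is unchanged).
    """
    m1, m2 = mean_lab(overlap1), mean_lab(overlap2)
    if m1[0] >= m2[0]:
        # Image 1 is the brighter standard: add (dL, da, db) to image 2.
        delta = tuple(r - o for r, o in zip(m1, m2))
        return image1, [tuple(v + d for v, d in zip(p, delta)) for p in image2]
    delta = tuple(r - o for r, o in zip(m2, m1))
    return [tuple(v + d for v, d in zip(p, delta)) for p in image1], image2


overlap1 = [(60.0, 10.0, 20.0), (62.0, 12.0, 18.0)]
overlap2 = [(50.0, 8.0, 25.0), (54.0, 6.0, 23.0)]
image1 = overlap1 + [(70.0, 5.0, 5.0)]
image2 = overlap2 + [(40.0, 0.0, 30.0)]
out1, out2 = compensate(image1, overlap1, image2, overlap2)
print(out2)
```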

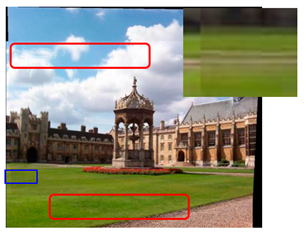

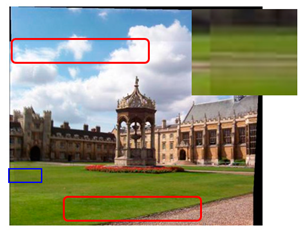

Figure 6 shows the original images and Figure 7 compares the splicing results with and without color compensation.

It can be seen from Figure 7 that the stitching result after color compensation is smoother. The result has no obvious seams and its color and brightness are more uniform.

4. Experiments

This section introduces some experiments to evaluate our proposed splicing method. The images used in the experiments are mainly from the ground truth database of the University of Washington [28] and the USC-SIPI image database of the University of Southern California [29]. The ground truth database of the University of Washington addresses the need for experimental data to quantitatively evaluate emerging algorithms. The high-quality and high-resolution color images in the database represent valuable extended duration digitized footage to those interested in driving scenarios or ego-motion. The images in the USC-SIPI image database have been provided for research purposes. The USC-SIPI image database contains multiple types of image sets, including texture images, aerial images, miscellaneous images and sequence images. Most of the material was scanned many years ago in the research group from a variety of sources.

All tested methods are run on a 2.60 GHz CPU with 16 GB RAM under the same experimental settings. To better evaluate the algorithm, we consider both subjective visual results and quantitative metrics, and compare our results with several popular splicing methods.

4.1. Intuitive Effect

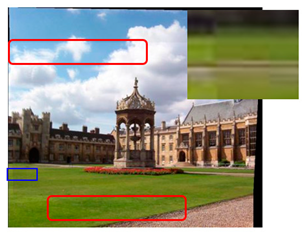

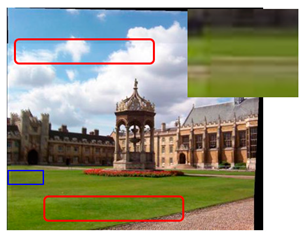

We took a group of images in the USC-SIPI image database of the University of Southern California as an example to compare our method with the SURF, BRISK, Harris, MinEigen, MSER and ORB matching algorithms. The splicing effect is shown in Table 1.

The upper right corner of each stitched image in the table shows a magnification of the area marked in blue. The table shows that the proposed algorithm not only preserves detail better than the other algorithms, but also produces a mosaic image with more uniform color and brightness. As shown in the red marked area in the table, the proposed stitching method has no obvious stitching seams, while the other algorithms have several obvious seams.

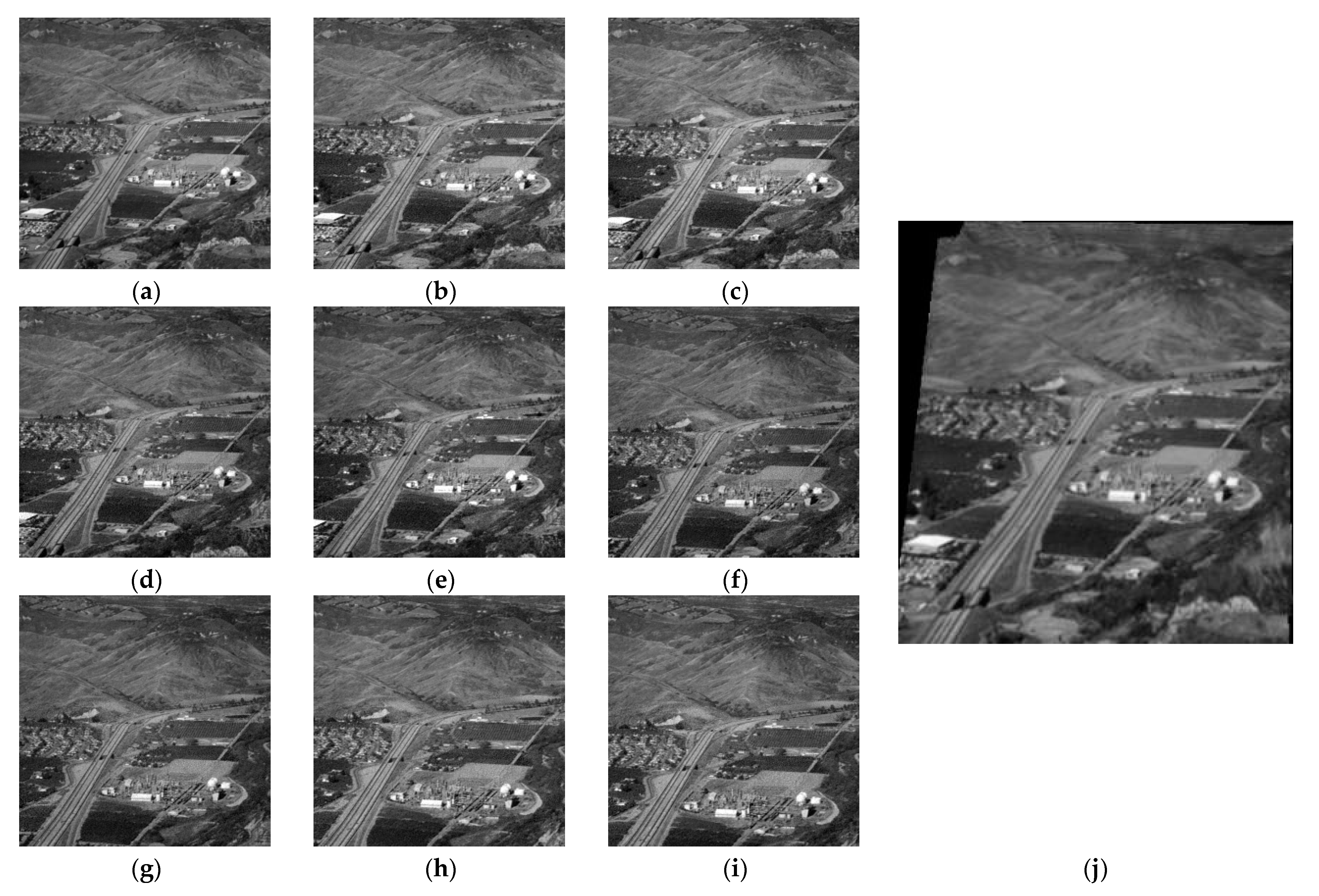

In addition, our method can be applied to multiple image stitching. We selected multiple UAV images from the image library, and the splicing effect is shown in Figure 8.

4.2. Quantitative Evaluation

To evaluate the effectiveness of the algorithm, we quantitatively evaluated the above algorithms from four aspects: the number of matching pairs, the correct matching rate, the root mean square error (RMSE) and the mean absolute error (MAE) [30,31,32].

Assuming that the total number of matches is N and the number of correct matching pairs is A, the correct matching rate can be defined as Equation (27).

$$\text{correct matching rate} = \frac{A}{N} \tag{27}$$

We randomly selected eight groups of data (Table 2) from the two databases for feature matching. The table shows the numbers of matching pairs and the matching accuracy of several comparison algorithms.

It can be seen from the table that ORB yields the largest number of feature matches. Although the overall matching of ORB is relatively stable, its matching rate is lower than that of the proposed method. The other comparison algorithms detect fewer feature points. Even though they achieve high matching rates on individual data, their performance in terms of the number of feature points, matching accuracy and stability is poor. The improved KAZE algorithm based on a fast guided filter is second only to the ORB algorithm in the number of feature matching pairs. Our proposed method further filters out the mismatched point pairs, thereby improving the matching efficiency and matching stability.

To judge the stability of the matching algorithms more intuitively, we compared the accuracy of several algorithms in a line chart (Figure 9).

The red line in the figure shows the matching accuracy of our proposed algorithm. The figure shows that the proposed algorithm is not only more accurate, but also more stable.

For two images, $I_1$ and $I_2$, to be spliced, given N matching pairs $(p_{i1}, p_{i2})$, where $i = 1, 2, \ldots, N$, the RMSE and the MAE are defined as follows:
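A common way to compute these two errors is sketched below. It assumes that the first point of every pair has already been mapped into the coordinate frame of the second image by the estimated transformation, so that the residual of a pair is the Euclidean distance between the two points; this convention and the function name `rmse_mae` are assumptions of the example.

```python
import math


def rmse_mae(pairs):
    """Return (RMSE, MAE) of the point-to-point distances of matched pairs.

    pairs: list of ((x1, y1), (x2, y2)) with both points in the same frame.
    """
    dists = [math.hypot(x1 - x2, y1 - y2) for (x1, y1), (x2, y2) in pairs]
    n = len(dists)
    rmse = math.sqrt(sum(d * d for d in dists) / n)
    mae = sum(dists) / n
    return rmse, mae


rmse, mae = rmse_mae([((0.0, 0.0), (3.0, 4.0)), ((1.0, 1.0), (1.0, 1.0))])
print(rmse, mae)
```

Because the RMSE squares the residuals before averaging, it penalizes a few large misalignments more strongly than the MAE does.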

Taking the selected eight groups of data as an example for splicing, Table 3 shows the comparison results of the RMSE and the MAE of several different algorithms.

The table shows that the RMSE and the MAE values of our proposed method are lower than those of the other stitching algorithms, which indicates a better overall stitching effect.

In addition, we compared the processing time of the proposed algorithm with that of the other algorithms. The comparison results are shown in Table 4.

Table 4 shows that the processing time of the SURF algorithm is relatively low and that the ORB algorithm is the most time consuming among the studied algorithms. The processing time of the proposed method is similar to that of the other methods.

5. Conclusions

In this paper, we propose an improved image stitching algorithm based on color difference and KAZE with a fast guided filter, which addresses the problems of a high mismatch rate in feature matching and obvious seams in the stitched image. A fast guided filter is introduced to reduce the mismatch rate before matching. The KAZE algorithm based on RANSAC is used for feature matching of the images to be spliced, and the matrix transformation of the images is performed. Then, the color difference and brightness difference of the overlapping area of the images to be spliced are calculated, and the images are adjusted globally to reduce the nonuniformity of the spliced image. Finally, the transformed images are fused to obtain the stitched image.

Our proposed method was evaluated visually and quantitatively on images from the ground truth database and the USC-SIPI image database and compared with other popular algorithms. On the one hand, the proposed stitching method achieves smoother and more detailed stitched images. On the other hand, the proposed algorithm is superior to the other algorithms in terms of matching accuracy, the RMSE and the MAE.

The proposed stitching method can be used in many fields, such as UAV panoramic stitching and virtual reality. However, it has the following limitations. Firstly, the method is prone to ghosting when splicing moving objects in close proximity. Secondly, although the processing time of the proposed algorithm is similar to that of other algorithms, it cannot achieve real-time stitching. In the future, we will further improve the efficiency of image stitching.