Encoder–Decoder Architecture for 3D Seismic Inversion

Abstract

1. Introduction

2. Problem Formulation

3. The Deep Learning Approach

3.1. Encoder–Decoder Architecture

3.2. Computational Considerations

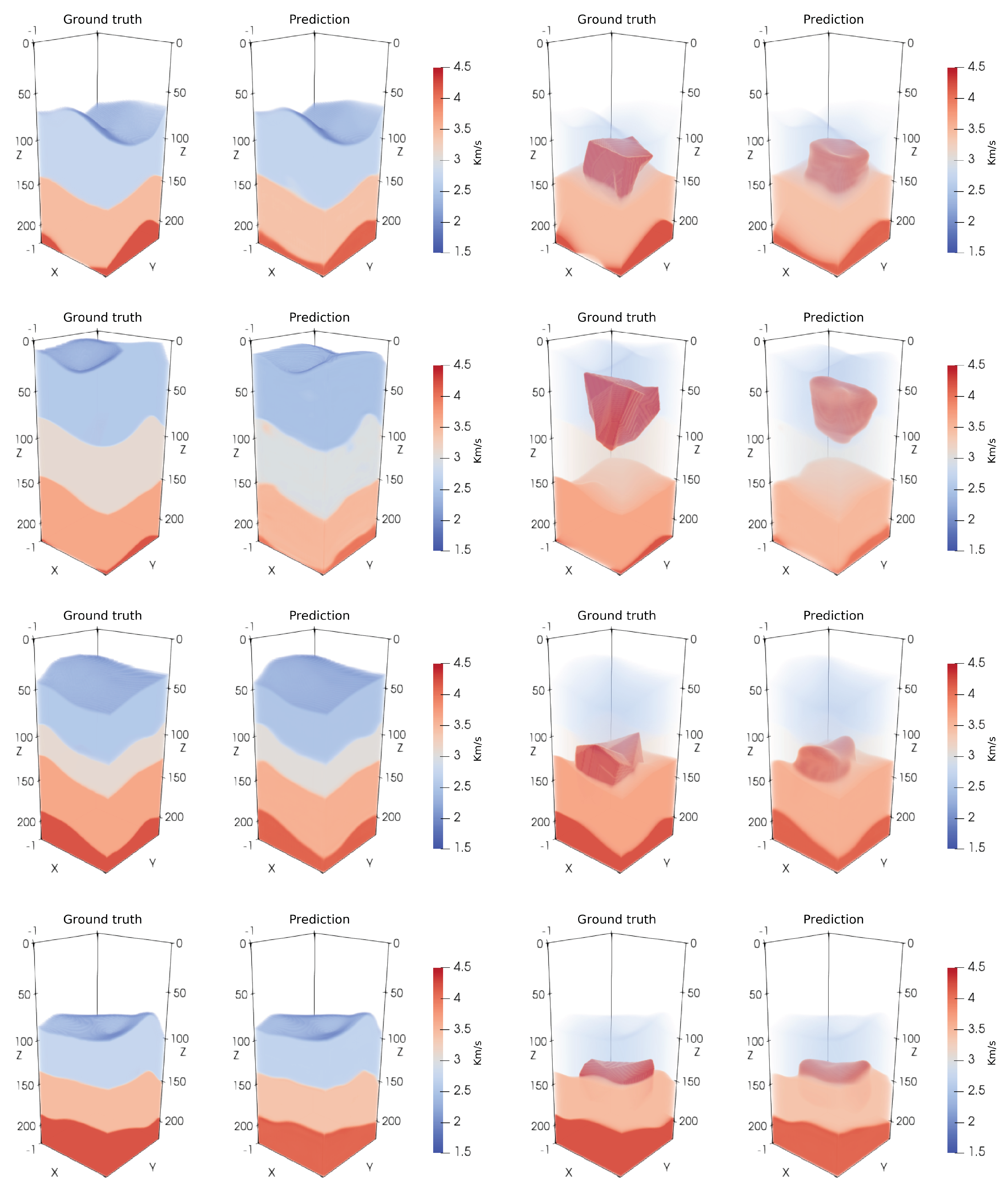

4. Performance Evaluation

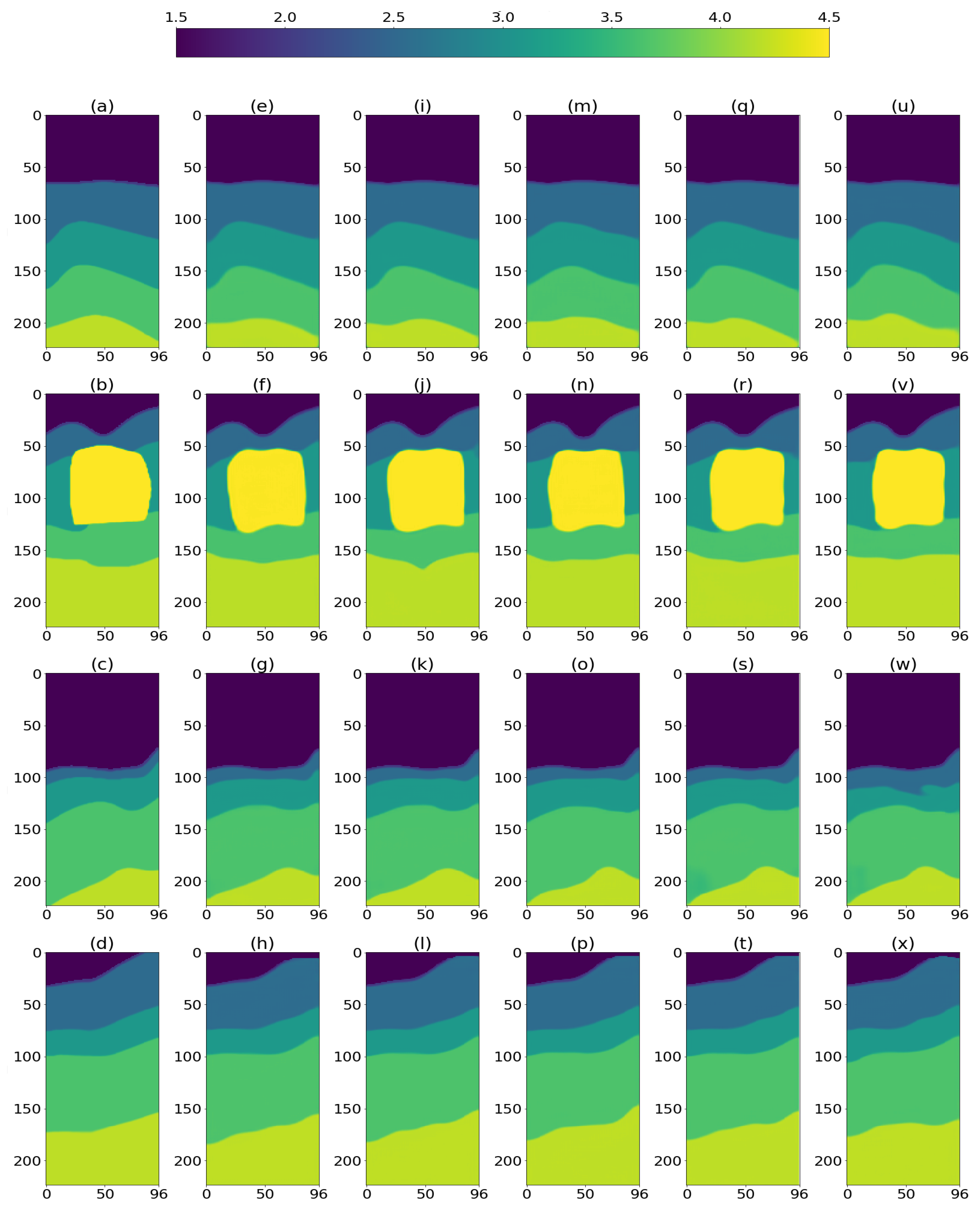

4.1. Data Preparation

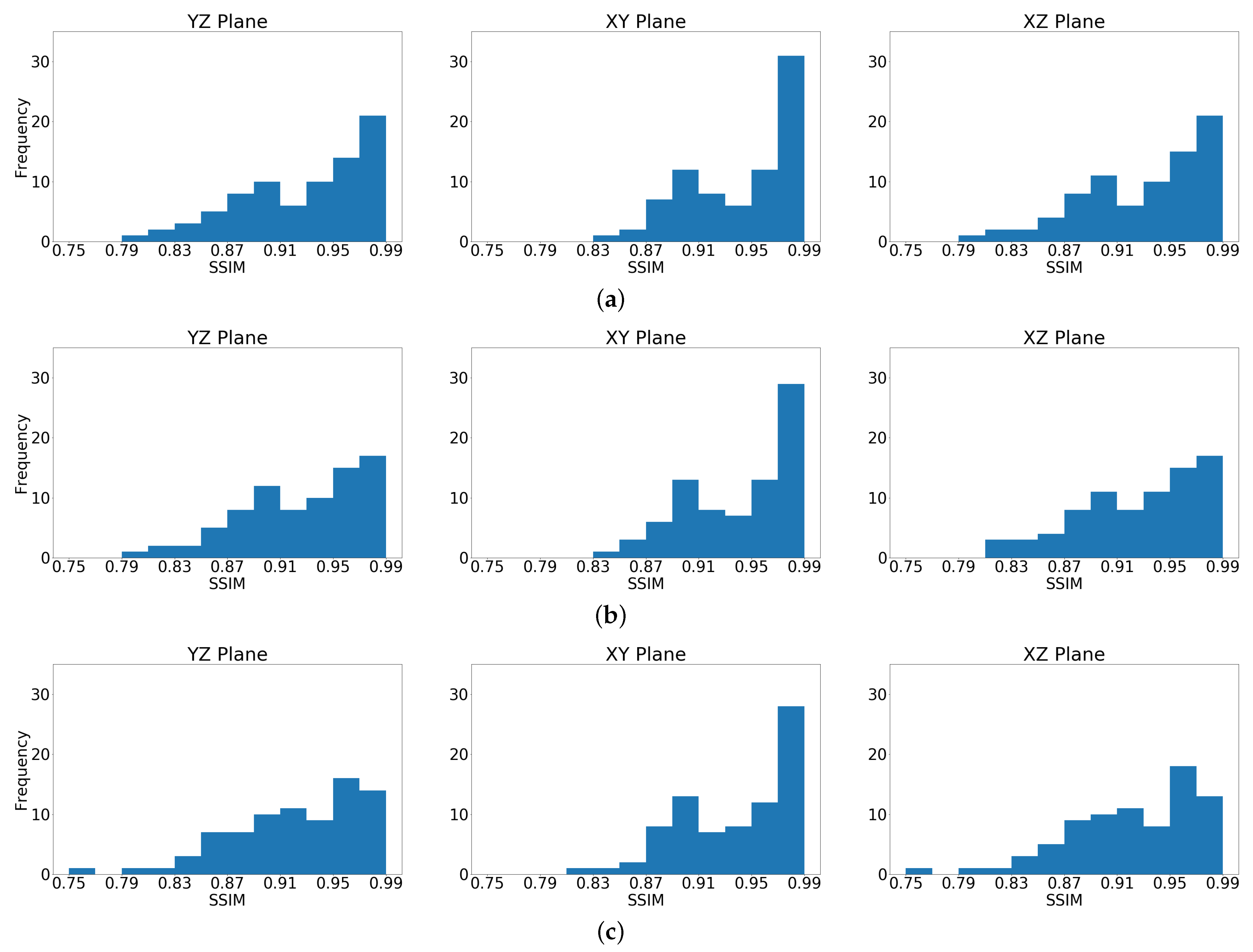

4.2. Evaluation Metrics

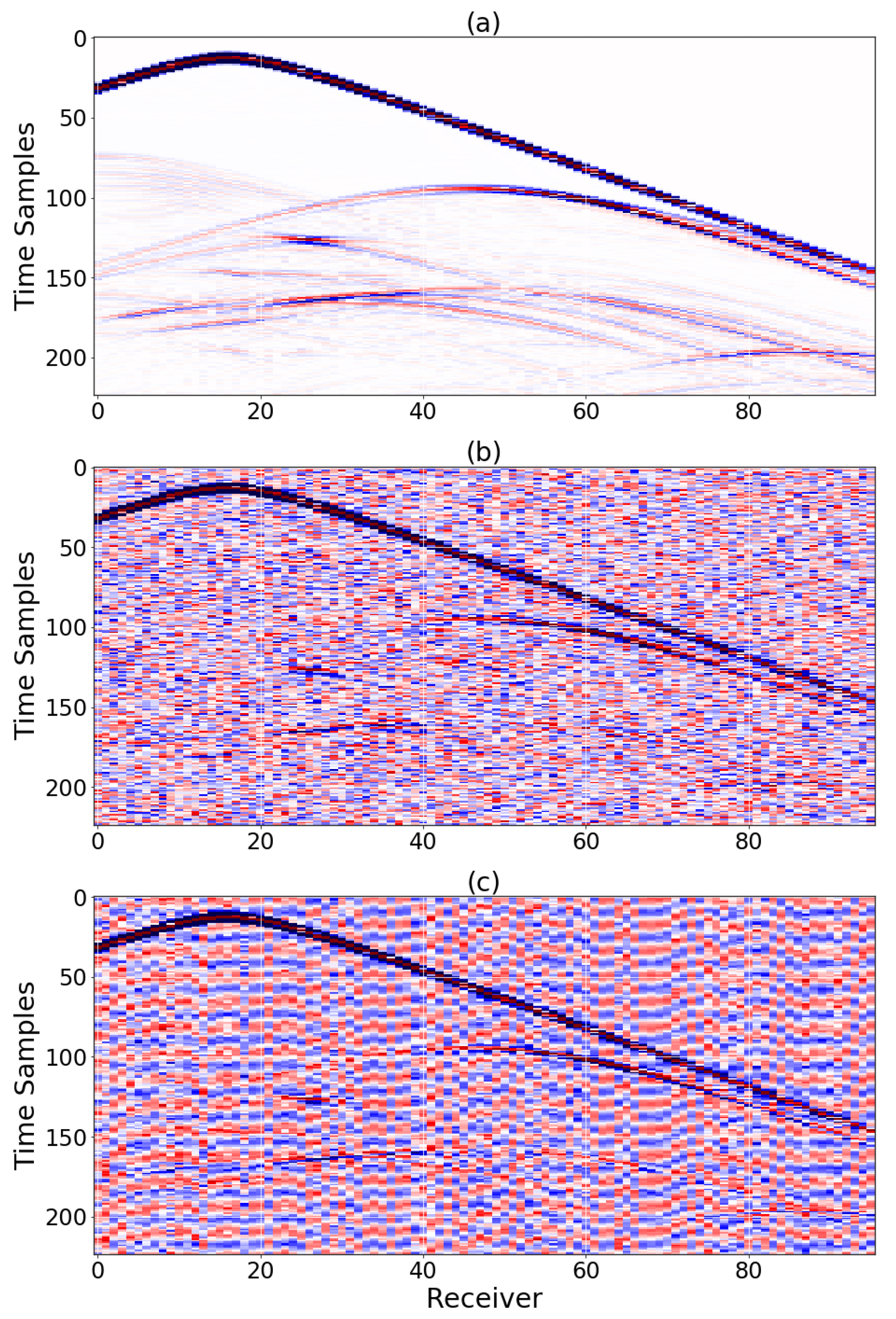

4.3. Noisy Data Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Block | Layer | Unit | Comments |
|---|---|---|---|
| Input | 0 | Seismic Cube | grid points |
| Enc1 | 1 | Conv3D(32, (), ReLU) | + InstanceNormalization |
| | 2 | Conv3D(32, (), ReLU) | + InstanceNormalization |
| | 3 | MaxPool3D | + Dropout(0.2) |
| Enc2 | 4–6 | Enc1(64) | |
| Enc3 | 7–9 | Enc1(128) | |
| Enc4 | 10–12 | Enc1(256) | |
| Enc5 | 13–14 | Enc1(512) | without MaxPool3D |
| Dec1 | 15 | ConvTrans3D(256, (), ReLU) | + InstanceNormalization |
| | 16 | Conv3D(256, (), ReLU) | + InstanceNormalization |
| | 17 | Conv3D(256, (), ReLU) | + InstanceNormalization |
| Dec2 | 18–20 | Dec1(128) | |
| Dec3 | 21–23 | Dec1(64) | |
| Dec4 | 24–26 | Dec1(32) | |
| | 27 | Conv3D(1, (), ReLU) | final reconstruction layer |
| Output | 28 | Velocity Model | grid points |
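The table gives each block's output channels but not how the grid size evolves through the network. The following is a minimal sketch, not the authors' code, that traces channel count and grid size block by block. It assumes (none of this is stated in the table) "same"-padded Conv3D layers, 2×2×2 MaxPool3D with stride 2 in Enc1 to Enc4, and stride-2 ConvTrans3D in Dec1 to Dec4; under these assumptions the elided kernel sizes do not affect the result. The function name `trace_shapes`, the `in_channels` default and the 64×64×64 example grid are hypothetical.

```python
# Hypothetical shape trace for the encoder-decoder table above.
# Assumed (not given in the table): 'same' padding for every Conv3D,
# MaxPool3D with a 2x2x2 window and stride 2, ConvTrans3D with stride 2.

ENC_CHANNELS = [32, 64, 128, 256, 512]  # output channels of Enc1..Enc5
DEC_CHANNELS = [256, 128, 64, 32]       # output channels of Dec1..Dec4


def trace_shapes(nx, ny, nz, in_channels=1):
    """Return (block, channels, grid) after the input and after each block.

    in_channels is a hypothetical default for the seismic cube.
    """
    grid = (nx, ny, nz)
    trace = [("Input", in_channels, grid)]
    for i, ch in enumerate(ENC_CHANNELS, start=1):
        # Two 'same'-padded Conv3D layers keep the grid; MaxPool3D then
        # halves it in Enc1..Enc4 (Enc5 has no pooling).
        if i < len(ENC_CHANNELS):
            if any(n % 2 for n in grid):
                raise ValueError(f"odd grid {grid} before pooling in Enc{i}")
            grid = tuple(n // 2 for n in grid)
        trace.append((f"Enc{i}", ch, grid))
    for i, ch in enumerate(DEC_CHANNELS, start=1):
        # Stride-2 ConvTrans3D doubles the grid; the two Conv3D layers keep it.
        grid = tuple(n * 2 for n in grid)
        trace.append((f"Dec{i}", ch, grid))
    # Layer 27: Conv3D with one filter yields the single-channel velocity model.
    trace.append(("Output", 1, grid))
    return trace


if __name__ == "__main__":
    for block, ch, grid in trace_shapes(64, 64, 64):
        print(f"{block:<7}{ch:>4} channels  grid {grid}")
```

Under these assumptions the four pooling stages require every grid dimension to be divisible by 2^4 = 16 for the output volume to land back on the input grid: `trace_shapes(64, 64, 64)` bottoms out at a 4×4×4 grid with 512 channels in Enc5 and ends at `("Output", 1, (64, 64, 64))`, while a dimension such as 40 raises `ValueError` before the Enc4 pooling step.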

| Metric | Noiseless Data | White Noise (SNR = 20 dB) | White Noise (SNR = 10 dB) | White Noise (SNR = 0 dB) | Field Noise (SNR = 20 dB) | Field Noise (SNR = 10 dB) | Field Noise (SNR = 0 dB) |
|---|---|---|---|---|---|---|---|
| SSIM(3D) | 0.9335 (0.0449) | 0.9316 (0.0440) | 0.9192 (0.0465) | 0.8621 (0.0528) | 0.9271 (0.0461) | 0.9143 (0.0493) | 0.8605 (0.0488) |
| SSIM(XZ) | 0.9294 (0.0458) | 0.9272 (0.0446) | 0.9135 (0.0467) | 0.8475 (0.0484) | 0.9222 (0.0470) | 0.9078 (0.0495) | 0.8455 (0.0425) |
| SSIM(XY) | 0.9433 (0.0388) | 0.9417 (0.0394) | 0.9329 (0.0409) | 0.8960 (0.0425) | 0.9385 (0.0407) | 0.9296 (0.0427) | 0.8953 (0.0401) |
| SSIM(YZ) | 0.9280 (0.0471) | 0.9257 (0.0460) | 0.9113 (0.0484) | 0.8426 (0.0497) | 0.9207 (0.0481) | 0.9054 (0.0515) | 0.8407 (0.0438) |
| MAE(3D) | 0.0380 (0.0349) | 0.0394 (0.0343) | 0.0490 (0.0428) | 0.1214 (0.0716) | 0.0426 (0.0375) | 0.0550 (0.0510) | 0.1206 (0.0667) |
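The table compares SSIM over the full volume and over XZ, XY and YZ planes, plus MAE over the volume, for noiseless inputs and for white and field noise at 20, 10 and 0 dB SNR. As a hedged sketch of how numbers of this kind can be produced (not the authors' evaluation code), the Python below rescales an additive noise record to a target SNR, computes MAE, and computes a simplified SSIM that is averaged over 2D slices for the plane-wise scores. Assumptions: SNR is the ratio of mean signal power to mean noise power in decibels; volumes are nested lists indexed `vol[x][y][z]`; each plane score is the mean over all slices of that orientation; and SSIM is evaluated with one window spanning the whole slice instead of the sliding Gaussian window of the standard definition. All function names are hypothetical.

```python
import math
import random

# Hypothetical evaluation helpers; the conventions are assumptions, not the paper's code.


def add_noise_at_snr(signal, snr_db, noise=None, rng=None):
    """Return signal + noise, with noise scaled so 10*log10(Ps/Pn) == snr_db.

    If `noise` is None, white Gaussian noise is drawn; otherwise the given
    record (e.g. recorded field noise, same length as `signal`) is rescaled.
    """
    if noise is None:
        rng = rng if rng is not None else random.Random(0)
        noise = [rng.gauss(0.0, 1.0) for _ in signal]
    p_signal = sum(s * s for s in signal) / len(signal)
    p_noise = sum(n * n for n in noise) / len(noise)
    scale = math.sqrt(p_signal / (p_noise * 10 ** (snr_db / 10)))
    return [s + scale * n for s, n in zip(signal, noise)]


def mae(pred, true):
    """Mean absolute error between two flat sequences."""
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(true)


def ssim_global(x, y, data_range=1.0):
    """Simplified SSIM from global means, variances and covariance (one window)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    return ((2 * mx * my + c1) * (2 * cov + c2)) / (
        (mx ** 2 + my ** 2 + c1) * (vx + vy + c2))


def flatten(vol):
    """Flatten a vol[x][y][z] nested list into one list."""
    return [v for plane in vol for row in plane for v in row]


def plane_slices(vol, plane):
    """Yield flattened 2D slices of vol[x][y][z] in the given orientation."""
    nx, ny, nz = len(vol), len(vol[0]), len(vol[0][0])
    if plane == "XY":    # one slice per z index
        for k in range(nz):
            yield [vol[i][j][k] for i in range(nx) for j in range(ny)]
    elif plane == "XZ":  # one slice per y index
        for j in range(ny):
            yield [vol[i][j][k] for i in range(nx) for k in range(nz)]
    elif plane == "YZ":  # one slice per x index
        for i in range(nx):
            yield [vol[i][j][k] for j in range(ny) for k in range(nz)]
    else:
        raise ValueError(f"unknown plane {plane!r}")


def ssim_plane(pred, true, plane, data_range=1.0):
    """Average the simplified SSIM over all slices of one orientation."""
    scores = [ssim_global(p, t, data_range)
              for p, t in zip(plane_slices(pred, plane), plane_slices(true, plane))]
    return sum(scores) / len(scores)
```

Because `ssim_global` uses a single global window, it only illustrates the luminance, contrast and structure terms. A windowed implementation such as `skimage.metrics.structural_similarity`, which accepts 3D arrays and a `data_range` argument, is the more faithful choice for reproducing scores like those in the table.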


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gelboim, M.; Adler, A.; Sun, Y.; Araya-Polo, M. Encoder–Decoder Architecture for 3D Seismic Inversion. Sensors 2023, 23, 61. https://doi.org/10.3390/s23010061
