1. Introduction

Civil infrastructure systems, such as bridges, can be subjected to excessive loads and adverse environmental conditions over their service life. As a result, cracks and other surface defects begin to appear over time. Routine inspection and maintenance are therefore required to ensure satisfactory serviceability and safe operation. Visual inspection of structures for surface defects is the conventional approach widely adopted in practice. In concrete structures, cracks are considered an early sign of structural deterioration and therefore require careful monitoring. Geometric characteristics of concrete cracks, such as their length, width, direction, and pattern of propagation, are usually used to determine their severity. These are the parameters commonly estimated and recorded by an inspection crew during on-site investigations. However, on-site inspection can be labor-intensive, costly, error-prone, and unsafe, and it can disrupt traffic. As a result, there is growing interest in automated inspection systems in which optical sensors detect and locate visual defects and computer-based image processing techniques measure their properties.

In recent years, researchers have shown considerable interest in using machine learning techniques to predict visual surface defects, e.g., [1], as well as the mechanical strength of concrete structural components, e.g., [2]. With regard to crack detection, researchers have conventionally adopted various computer-vision algorithms to visualize crack locations in images. Edge detection techniques such as the Canny and Sobel filters are traditionally used for crack detection [3]. However, these methods are sensitive to image conditions such as camera distance, lighting, and background [4], so their outcomes can be inconsistent. In search of more reliable techniques, recent studies have focused on the power of deep convolutional neural networks (CNNs) for crack detection and segmentation. These studies demonstrated that fully convolutional neural networks (FCNs) achieve remarkable results for crack segmentation, i.e., crack prediction at the pixel level [5,6,7,8,9,10]. The great advantage of FCNs is that they can process raw inspection images and produce high-resolution predicted crack masks. Effectively, when trained with large datasets, FCNs can generate accurate predictions without pre-processing. However, when FCNs for concrete crack segmentation are trained with small datasets, such as the publicly available labeled images for crack segmentation, they may perform poorly on images with different scenes and backgrounds. Thus, even though researchers are developing new architectures customized for various surface defects (e.g., SDDNet [11] and SrcNet [12]), the practical application of segmentation networks may remain limited until more labeled datasets for crack segmentation become available. For small training datasets, an effective way to improve the accuracy and generalization of networks is Transfer Learning (TL). The concept of TL is to use the skills that a network learned when trained for a source task with a large dataset to improve its performance on a target task with a small dataset. A common approach in TL is to reuse the parameters of a network pre-trained for the source task to initialize the parameters of the new network. The initialized network can then be fine-tuned for the target task using the relevant dataset.
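To make the parameter-reuse idea concrete, the following Python sketch models a network as a dictionary of named parameter lists. It is a hypothetical illustration, not the procedure used in this study: the function names `init_from_pretrained` and `sgd_step` and the matching rule (same layer name and same size) are assumptions. Matching layers are copied from the pre-trained source network, the remaining layers keep their fresh initialization, and every parameter stays trainable during fine-tuning.

```python
from typing import Dict, List

# Hypothetical representation: layer name -> flat list of parameter values.
Params = Dict[str, List[float]]


def init_from_pretrained(new_params: Params, pretrained: Params) -> Params:
    """Copy each pre-trained layer whose name and size match the new network.

    Layers without a match (e.g., a new output head) keep their fresh
    initialization. Nothing is frozen: all returned parameters stay trainable.
    """
    initialized: Params = {}
    for name, value in new_params.items():
        source = pretrained.get(name)
        if source is not None and len(source) == len(value):
            initialized[name] = list(source)  # reuse source-task knowledge
        else:
            initialized[name] = list(value)   # keep the random initialization
    return initialized


def sgd_step(params: Params, grads: Params, lr: float) -> Params:
    """One plain gradient-descent update applied to every layer (fine-tuning)."""
    return {
        name: [w - lr * g for w, g in zip(params[name], grads[name])]
        for name in params
    }
```

For example, `init_from_pretrained({"enc.conv1": [0.0, 0.0], "head": [0.1]}, {"enc.conv1": [0.5, -0.2], "head": [1.0, 1.0, 1.0]})` reuses the encoder weights and keeps the freshly initialized head, whose size differs from the source network's.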

When dealing with small datasets, CNNs trained using TL have been shown in many applications to outperform networks trained from scratch [13]. For surface damage detection, it is common to employ networks pre-trained on general-purpose visual recognition datasets such as ImageNet [14]. For example, Gopalakrishnan et al. [15] used VGG-16, a popular CNN classifier pre-trained on ImageNet, to detect cracks on concrete and asphalt pavements. Gao and Mosalam [16] also used CNN classifiers pre-trained on ImageNet for structural damage classification. Zhang et al. [17] adopted a similar approach for pavement crack classification. Similarly, Dais et al. [18] adopted popular image classification networks pre-trained on ImageNet, such as MobileNet [19], for crack detection on masonry surfaces. In a similar approach, Yang et al. [20] fine-tuned the weights resulting from training on ImageNet for crack classification. For road crack segmentation, Bang et al. [21] used ImageNet to pre-train the convolutional part of an encoder-decoder network. Using a different dataset, Choi and Cha [11] employed a modified version of the semantic segmentation dataset Cityscapes [22] to pre-train a new crack segmentation network called SDDNet. Networks pre-trained on large-scale datasets with many different categories (e.g., cars, cats, chairs), such as ImageNet and Cityscapes, have the skills necessary for detecting objects in a scene. However, these networks do not specifically learn the features associated with cracks. Additionally, these datasets may lack the types of background that commonly appear in crack images.

A more efficient solution is to pre-train segmentation networks with the available crack datasets and to fine-tune them with synthesized crack images. The synthesized crack images should include the unique features that appear in actual images. In a method called CutMix, a random patch is cropped from one image in the dataset and pasted onto another image to generate new training samples that resemble real conditions. This method was first introduced by Yun et al. [23] as a regularization strategy to improve the generalization of CNN classifiers. They empirically demonstrated that CutMix data augmentation can significantly boost the performance of classifiers. However, randomly selecting the location of the cropped patches may result in non-descriptive images and can limit the performance gain. Therefore, Walawalkar et al. [24] proposed an enhanced version of this method called Attentive CutMix, in which only the most important regions of the image are cropped instead of random ones. These regions are selected based on the feature maps of another trained CNN classifier. Li et al. [25] incorporated Adaptive CutMix into their TL pipeline to expand their dataset of road defects. Following a similar mixing concept, the Mosaic data augmentation method combines four images to form new training data. Yi et al. [26] used the Mosaic augmentation technique to increase the size of their training dataset for defect detection inside sewer systems. For crack segmentation, however, the random selection of image patches may produce images with no distinctive features associated with cracks, because cracks consist of thin linear shapes that occupy only a small fraction of the image. Additionally, methods such as Attentive CutMix require an additional feature extractor network to identify the regions with the most relevant information, which increases the complexity of the method. Therefore, in this study, a simple yet effective data synthesis method based on CutMix is proposed in which the cropped patch is selected by considering the spread and distribution of cracks. This reduces the chance of generating non-descriptive crack images. Given the significant discrepancy between the backgrounds of images in publicly available crack datasets and those of images encountered in practice, background information from uncracked scenes is employed in this study, in an automated manner, to boost the performance of segmentation networks. This can reduce false detections of background objects that resemble cracks and thus improve the precision of detection.

Another way to improve the accuracy, and hence the performance, of existing segmentation networks is to incorporate post-processing algorithms. In particular, for a sequence of crack images captured from the same structure at different points in time, the crack segmentation outcomes can be aggregated to improve the accuracy of the segmentation network. This is because cracks grow gradually, and their length and width increase over time. Therefore, detections from a future step can inform the positions of cracks in previous steps. Additionally, previous steps can be used to correct segmentation errors caused by unexpected changes in image conditions. Following this idea, a temporal data fusion method is proposed in this study to enhance the outcome of segmentation networks. The method is applicable when images of a concrete structure are captured sequentially over time. It is shown that the segmentation results of such images can be fused to improve the detection recall.

The remainder of this paper is organized as follows. Section 2 states the objective and significance of the research. Section 3 provides an overview of the proposed improvements. In Section 4, the details and training procedure of the segmentation networks are described. In Section 5, the proposed data synthesis technique is explained and validated. Section 6 describes the temporal data fusion and its effect on segmentation accuracy. Lastly, a summary and concluding remarks are given in Section 7.

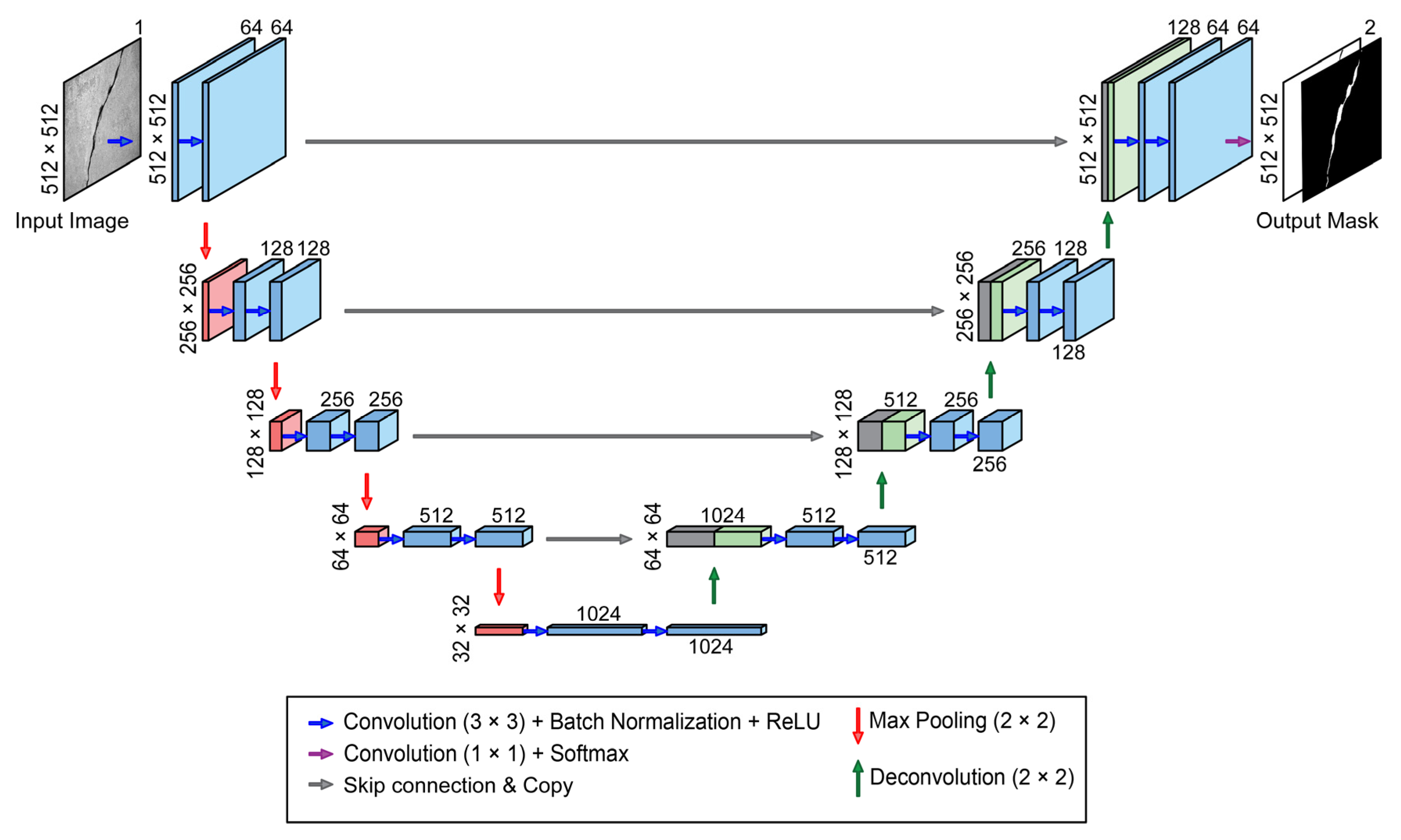

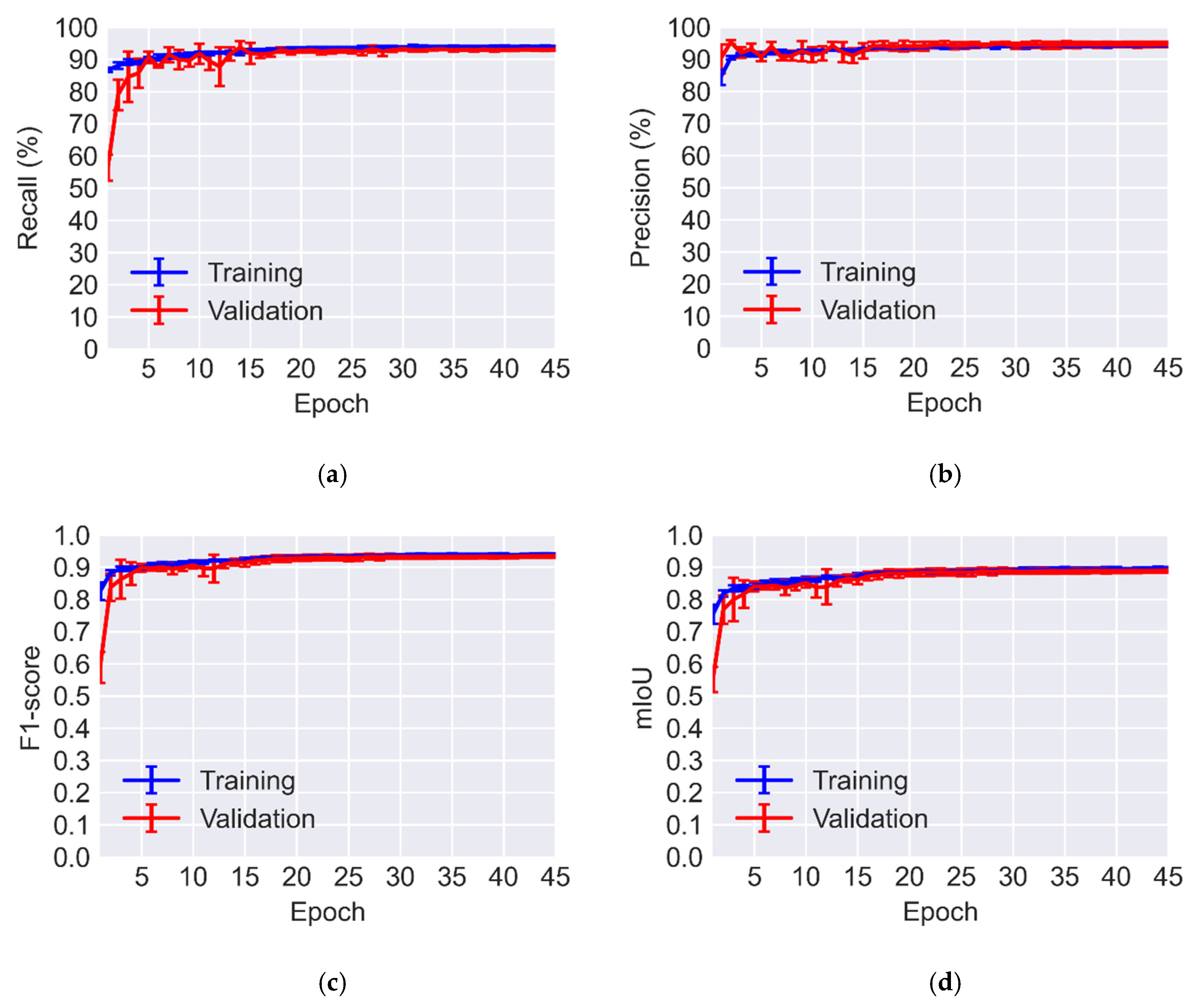

5. Fine-Tuning with Synthetic CutMix Data

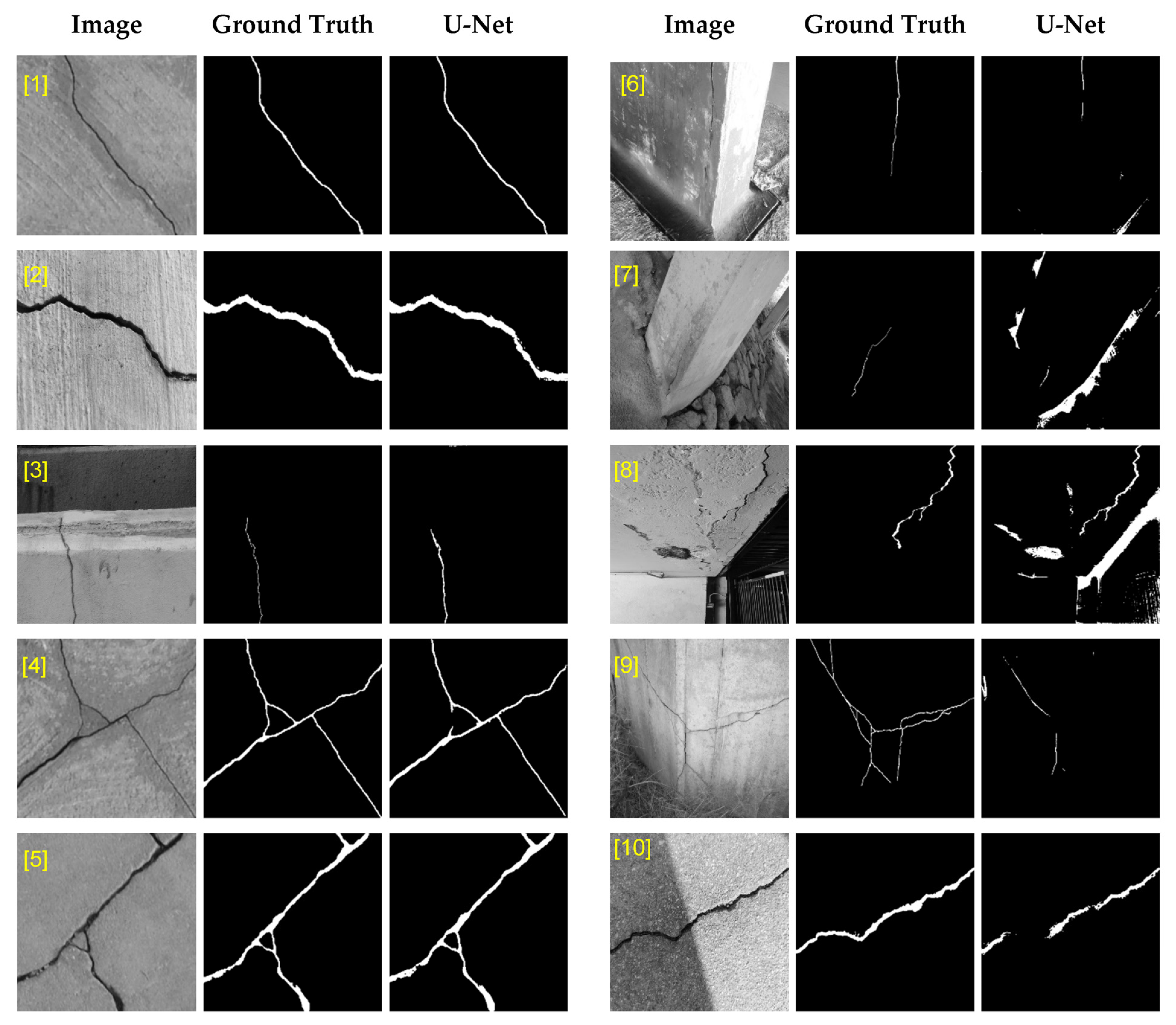

As shown in Figure 9, the Base Model struggles to distinguish actual cracks from background objects and surfaces. This is because the scene of the target dataset is new and has no representation in the training dataset. One way to address this problem is to fine-tune the model with new images whose backgrounds are similar to those of the target dataset. In accordance with the Transfer Learning procedure, the Base Model is used as a pre-trained model whose parameters initialize the new model before fine-tuning. This helps the network learn the features that are specific to the target dataset while maintaining its crack segmentation skills. In this study, all layers of the U-Net are allowed to be updated during re-training, and none of the parameters are frozen.

One way to capture background information is to use an image of a scene with no cracks. For the beam-column joint specimen used in this study, an image taken before the beginning of the experiment can serve this purpose. A major problem with re-training the U-Net on a dataset with no crack pixels is that the network can converge to a trivial solution in which every pixel is assigned to the background. As a result, the U-Net can rapidly lose the skills it learned for detecting cracks. A more effective solution is to combine the background information of the target dataset with the crack images in the public dataset. In this way, the network is trained with a dataset that contains crack information along with the features specific to the target dataset. The method proposed here to synthesize such a dataset is an extension of the CutMix data augmentation technique proposed by Yun et al. [23]. The CutMix technique was intended to improve the generalization capability of CNN classifiers. Here, it is repurposed to generate a dataset that mixes a public labeled dataset with the background information of the target dataset.

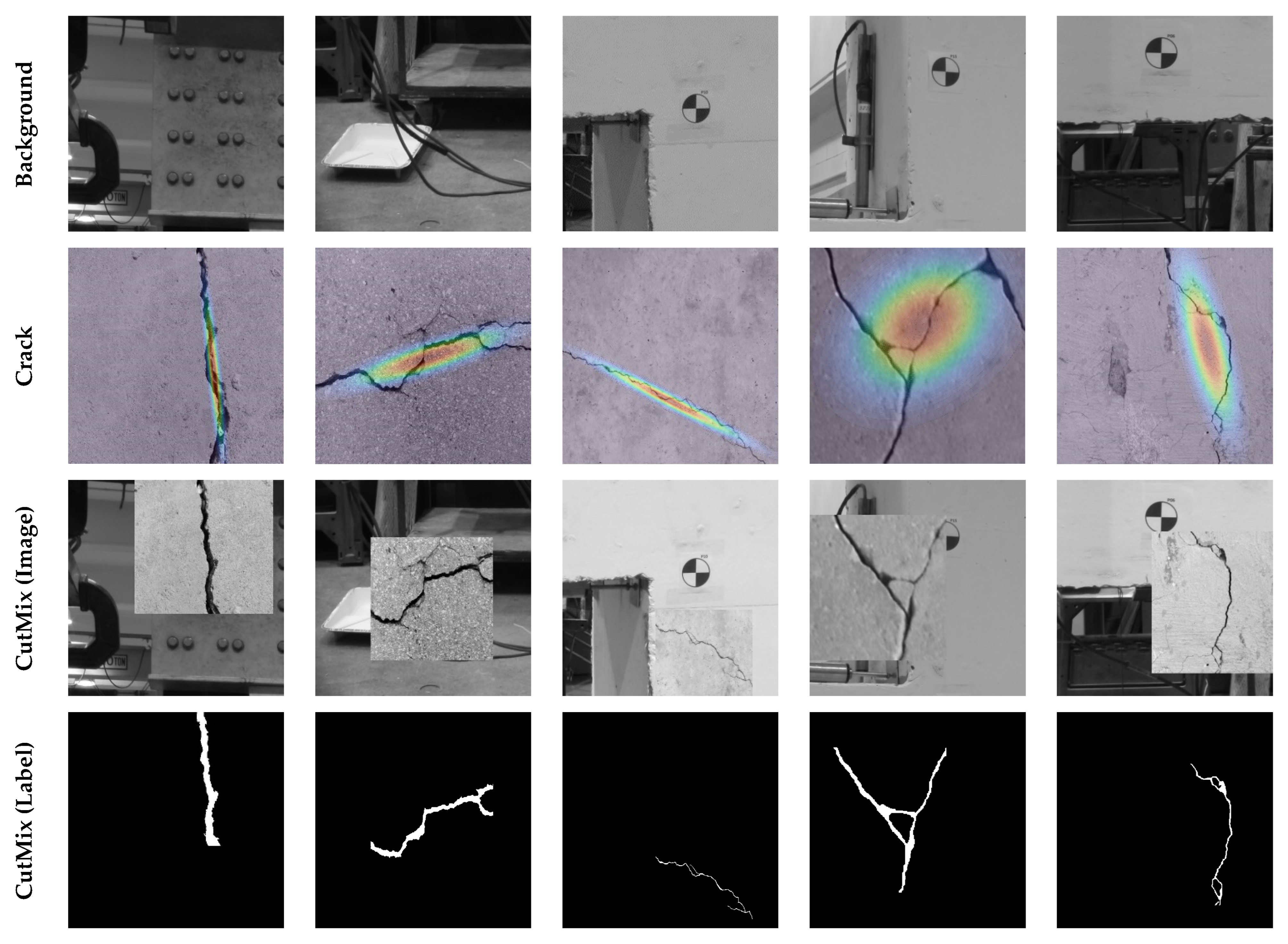

As illustrated in Figure 11, a pair of images is randomly selected in this technique. One image, Image (A), is a crack image drawn from the public dataset together with its corresponding label. The other image, Image (B), shows a scene of the target structure before cracks have initiated; therefore, its label, Label (B), contains no crack pixels, and all pixels in Image (B) can be labeled as background. Here, both images have equal dimensions of 512 × 512 pixels. To combine the two images, a rectangular patch is randomly generated, defined by the coordinates of its center (displayed by the “+” symbol in Figure 11), its width, and its height. As shown in Figure 11, in the new combined Image (C), the area inside the rectangle is cropped from Image (A) and pasted into Image (B). Following the same procedure, the corresponding Label (C) for the new image is produced as well.
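The cut-and-paste step can be sketched as follows, assuming images and labels are stored as equally sized row-major grids and the rectangle is given by hypothetical corner coordinates `(x0, y0, x1, y1)` with exclusive upper bounds. Applying the same rectangle to both the image and its label keeps Label (C) aligned with Image (C).

```python
from typing import List, Tuple

Image = List[List[float]]  # row-major grid of pixel intensities
Label = List[List[int]]    # 1 = crack pixel, 0 = background pixel


def cutmix_paste(image_a: Image, label_a: Label,
                 image_b: Image, label_b: Label,
                 box: Tuple[int, int, int, int]) -> Tuple[Image, Label]:
    """Paste the rectangle box = (x0, y0, x1, y1) from A into copies of B.

    Rows are indexed by y and columns by x; x1 and y1 are exclusive.
    The inputs are not modified.
    """
    x0, y0, x1, y1 = box
    image_c = [row[:] for row in image_b]  # start from the background image
    label_c = [row[:] for row in label_b]  # all-background label
    for y in range(y0, y1):
        for x in range(x0, x1):
            image_c[y][x] = image_a[y][x]  # crack-image pixels
            label_c[y][x] = label_a[y][x]  # matching crack labels
    return image_c, label_c
```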

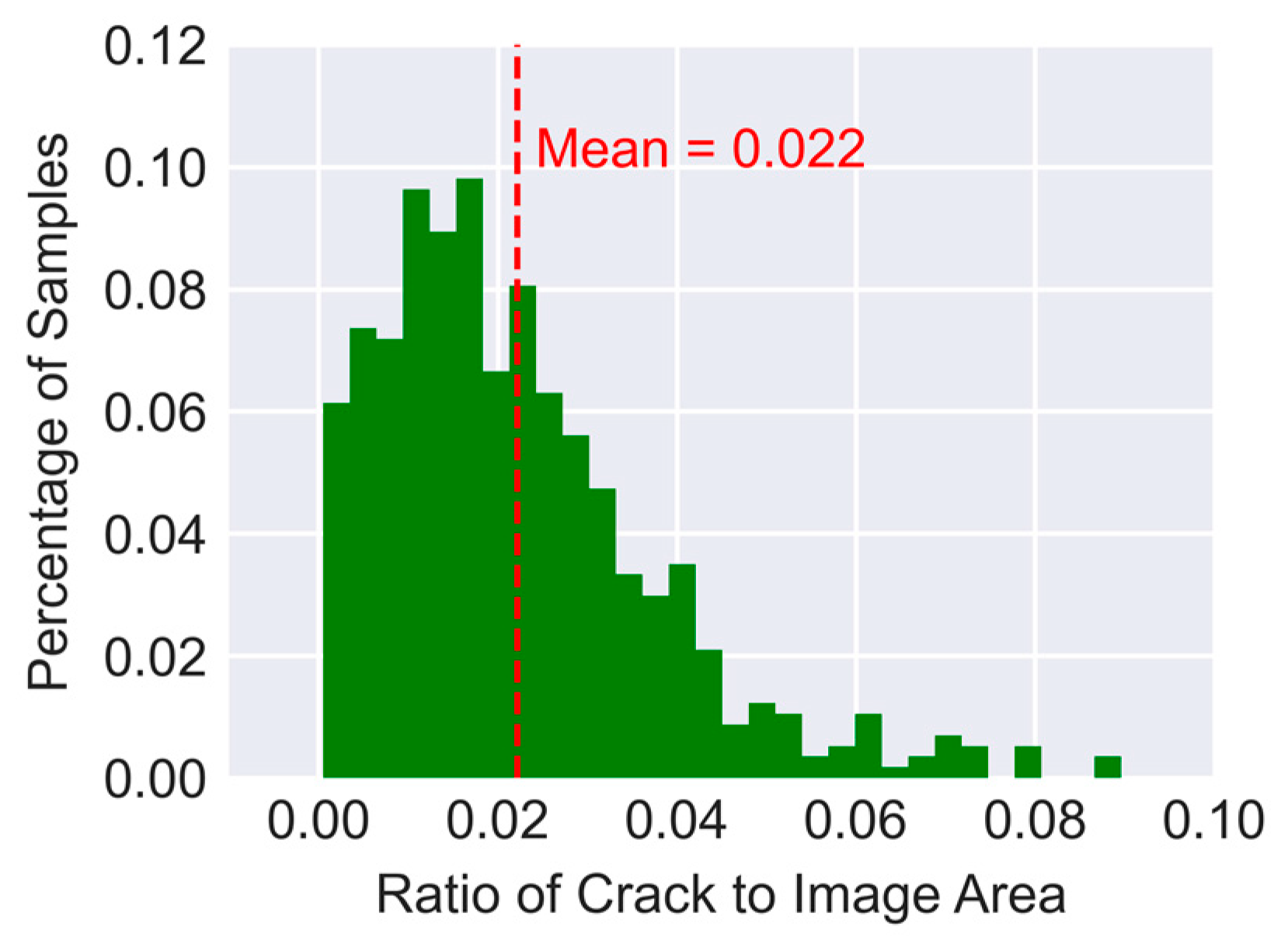

In the original CutMix technique, the coordinates of the center of the rectangular patch are drawn from a uniform distribution. For a general image classification task, where objects occupy a large portion of any given image, a uniform distribution for selecting the center point is a reasonable choice. For crack images, however, this approach can be problematic: since cracks are made up of thin lines, the odds of selecting a rectangle that contains no crack are high. As a result, the new dataset may have a significant number of samples with no crack information. To avoid this issue, a better approach is to draw the center point from a multivariate normal distribution whose mean vector and covariance matrix are determined from Label (A). These parameters are calculated as in Equations (9) and (10), in which the value of each pixel in Label (A) is equal to 1 for crack pixels and 0 for background pixels. Equation (9) calculates the mean vector, which gives the coordinates of the center of the crack, and Equation (10) finds the covariance matrix, which reflects the spread of the crack in both directions. An additional scaling parameter can be used to tighten the spread of the distribution: the lower its value, the higher the chance of positioning the center of the rectangle close to the center of the crack.
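A minimal sketch of crack-aware center sampling is given below. Because Equations (9) and (10) are not reproduced here, the sketch assumes the natural label-weighted forms: the mean is the average coordinate of the crack pixels, and the covariance is their population covariance. It further assumes that the tightening parameter, called `alpha` here, multiplies the covariance matrix. Both choices are assumptions rather than the exact equations of this study. Sampling uses a 2 × 2 Cholesky factor so that only the Python standard library is needed.

```python
import math
import random
from typing import List, Tuple

Label = List[List[int]]  # 1 = crack pixel, 0 = background pixel


def crack_moments(label: Label) -> Tuple[Tuple[float, float], List[List[float]]]:
    """Mean (x, y) and 2x2 covariance of the crack-pixel coordinates."""
    pts = [(x, y) for y, row in enumerate(label) for x, v in enumerate(row) if v == 1]
    if not pts:
        raise ValueError("label contains no crack pixels")
    n = len(pts)
    mx = sum(p[0] for p in pts) / n
    my = sum(p[1] for p in pts) / n
    sxx = sum((p[0] - mx) ** 2 for p in pts) / n
    syy = sum((p[1] - my) ** 2 for p in pts) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in pts) / n
    return (mx, my), [[sxx, sxy], [sxy, syy]]


def sample_center(label: Label, alpha: float, rng: random.Random) -> Tuple[float, float]:
    """Draw a patch center from N(mean, alpha * cov); alpha is an assumed scale."""
    (mx, my), cov = crack_moments(label)
    a, b, c = alpha * cov[0][0], alpha * cov[0][1], alpha * cov[1][1]
    # Cholesky factor L of [[a, b], [b, c]] so that L @ L.T equals the scaled covariance.
    l11 = math.sqrt(max(a, 0.0))
    l21 = b / l11 if l11 > 0 else 0.0
    l22 = math.sqrt(max(c - l21 * l21, 0.0))
    z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    return mx + l11 * z1, my + l21 * z1 + l22 * z2
```

For a perfectly horizontal crack, the covariance has no vertical spread, so every sampled center lies on the crack's row; a smaller `alpha` pulls samples toward the crack center along the row as well.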

As shown in Equation (11), the width and height of the rectangle are calculated from a single variable that is sampled from a uniform distribution (Equation (12)). Because the same variable scales both dimensions, the resulting rectangle maintains the aspect ratio of the underlying image.

The lower and upper bounds of this uniform distribution are introduced to control the fraction of the background image that the crack patch is allowed to cover. Lastly, when the generated rectangular patch extends beyond the image boundaries, only the part inside the image is used, and the outside region is cropped out.
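The patch-size and clipping rules can be sketched as follows. Since Equations (11) and (12) are not reproduced here, the sketch assumes that the uniformly sampled variable is the fraction of the image area covered by the patch, bounded by the hypothetical parameters `ratio_low` and `ratio_high`, so that both sides are scaled by its square root. The original CutMix formulation instead scales both sides by the square root of one minus the sampled value. In either case, using one factor for both sides preserves the aspect ratio.

```python
import math
import random
from typing import Tuple


def patch_box(cx: float, cy: float, width: int, height: int,
              ratio_low: float, ratio_high: float,
              rng: random.Random) -> Tuple[int, int, int, int]:
    """Return a clipped box (x0, y0, x1, y1) centered at (cx, cy).

    Assumption: the sampled ratio is the covered area fraction, so both sides
    are scaled by sqrt(ratio). Upper bounds x1 and y1 are exclusive. If the
    center lies far outside the image, the clipped box may be empty.
    """
    ratio = rng.uniform(ratio_low, ratio_high)
    scale = math.sqrt(ratio)
    rw, rh = width * scale, height * scale
    x0, x1 = int(round(cx - rw / 2)), int(round(cx + rw / 2))
    y0, y1 = int(round(cy - rh / 2)), int(round(cy + rh / 2))
    # Keep only the part of the rectangle that lies inside the image.
    x0, x1 = max(0, x0), min(width, x1)
    y0, y1 = max(0, y0), min(height, y1)
    return x0, y0, x1, y1
```

With a 512 × 512 image and a sampled ratio of 0.25, a patch centered at (256, 256) spans half of each side, while the same patch centered at the corner (0, 0) is clipped to its in-image quarter.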

Figure 12 illustrates examples of images generated through the CutMix synthesis. The images in the first row are from the background of the target structure, and the images in the second row show examples of crack images from the public dataset. The contours overlaid on the crack images represent the multivariate normal distributions derived from their corresponding binary masks. As shown, images with cracks propagating in multiple directions have wider distributions, and images with thin linear cracks have narrow distributions elongated along the major direction of the crack. These distributions determine the probability of the center points of the rectangles used to create the CutMix images shown in the third row. In this study, it is assumed that

,

, and

.

The CutMix data synthesis is performed “on the fly” during fine-tuning of the Base Model. Following a similar tiling method described in

Section 4.3, an image of the concrete specimen before the beginning of the experiment is selected and divided into 81 images. These background images are randomly mixed with a crack image from the public dataset of 540 images to generate new training samples. Meanwhile, the standard data augmentation techniques described in

Section 4.2 are also performed on the crack images prior to CutMix synthesis. The Base Model was fine-tuned for 60 epochs with the learning rates of 10

−5, 10

−6, and 10

−7 for the first, second, and third 20 epochs. Other hyperparameters remained unchanged during fine-tuning. In

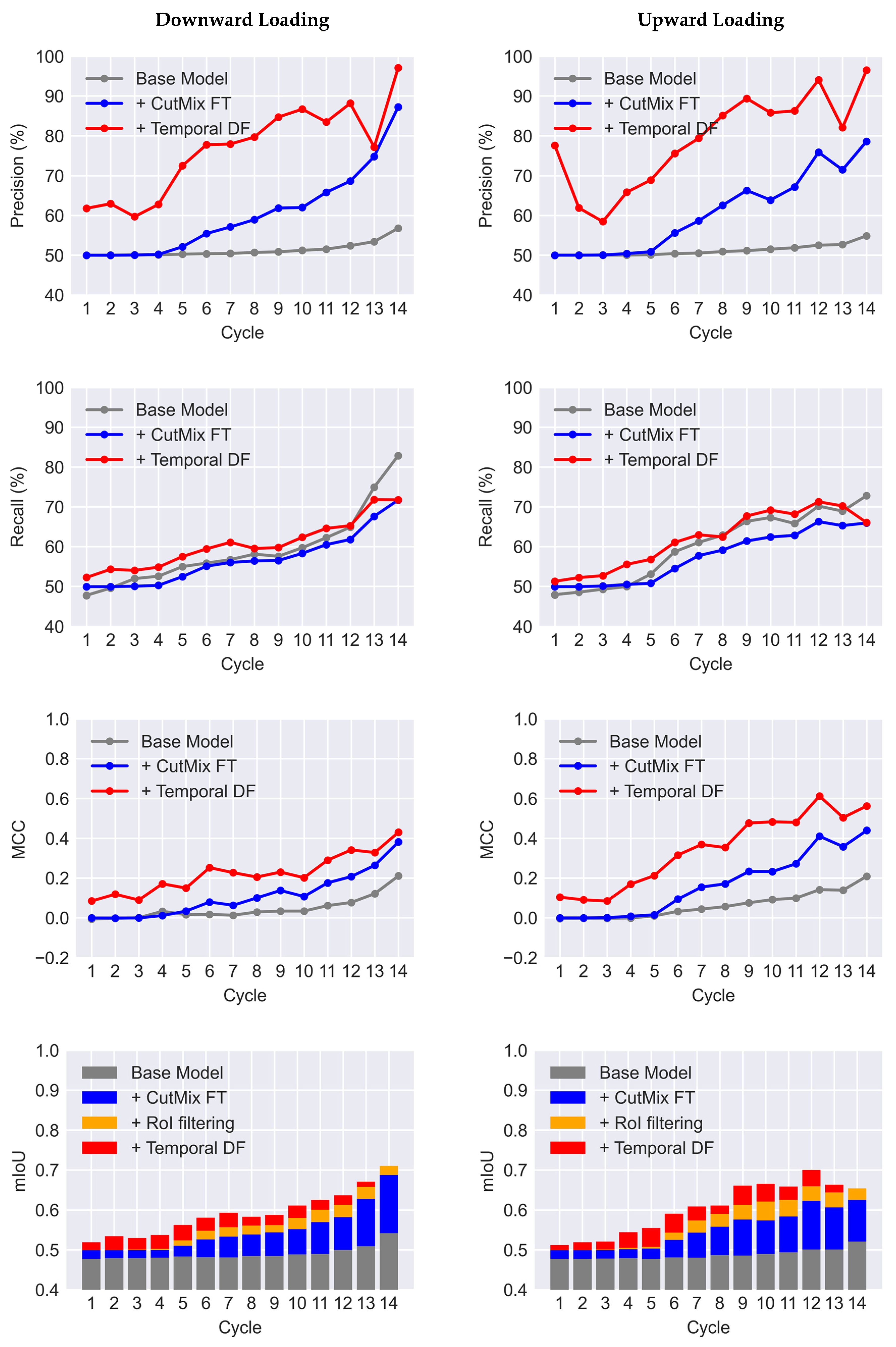

Figure 9, results of the fine-tuned model are demonstrated on samples of the target dataset. As can be seen, after fine-tuning with the CutMix synthetic data, the number of false positives is remarkably reduced. The network could effectively filter out background objects from the initial detections of the Base Model. As a result, the precision improves 8.94% (see

Table 1). According to

Figure 10, the improvement in precision becomes more pronounced for the later cycles of the experiment in such a way that for Cycle 14 of downward loading it jumps from 56.76% to 87.26%. The recall, however, has a slight 2% average reduction.

Figure 10 confirms that for most cycles the recall decreases. Nonetheless, the F1-score, mIoU, and MCC have an increase of up to 0.17, 0.14, and 0.27, with an average gain of 0.07, 0.06, and 0.08, respectively.
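The metrics reported above can be computed from pixel-wise confusion counts. The following sketch assumes binary masks and takes mIoU as the mean of the crack-class and background-class IoU; that averaging convention is an assumption and may differ from the definition used in this study. The function name `binary_metrics` is hypothetical.

```python
import math
from typing import Dict, List


def binary_metrics(pred: List[List[int]], truth: List[List[int]]) -> Dict[str, float]:
    """Pixel-wise precision, recall, F1, IoU, mIoU, and MCC for binary crack masks."""
    tp = fp = fn = tn = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            if p and t:
                tp += 1  # crack predicted as crack
            elif p:
                fp += 1  # background predicted as crack
            elif t:
                fn += 1  # crack missed (predicted as background)
            else:
                tn += 1  # background predicted as background
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    iou_crack = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    iou_background = tn / (tn + fp + fn) if tn + fp + fn else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "iou": iou_crack,
        "miou": (iou_crack + iou_background) / 2,  # assumed two-class mean
        "mcc": mcc,
    }
```

Unlike precision and recall, MCC uses all four confusion counts, which is why it reacts strongly when many background pixels are misclassified as cracks.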

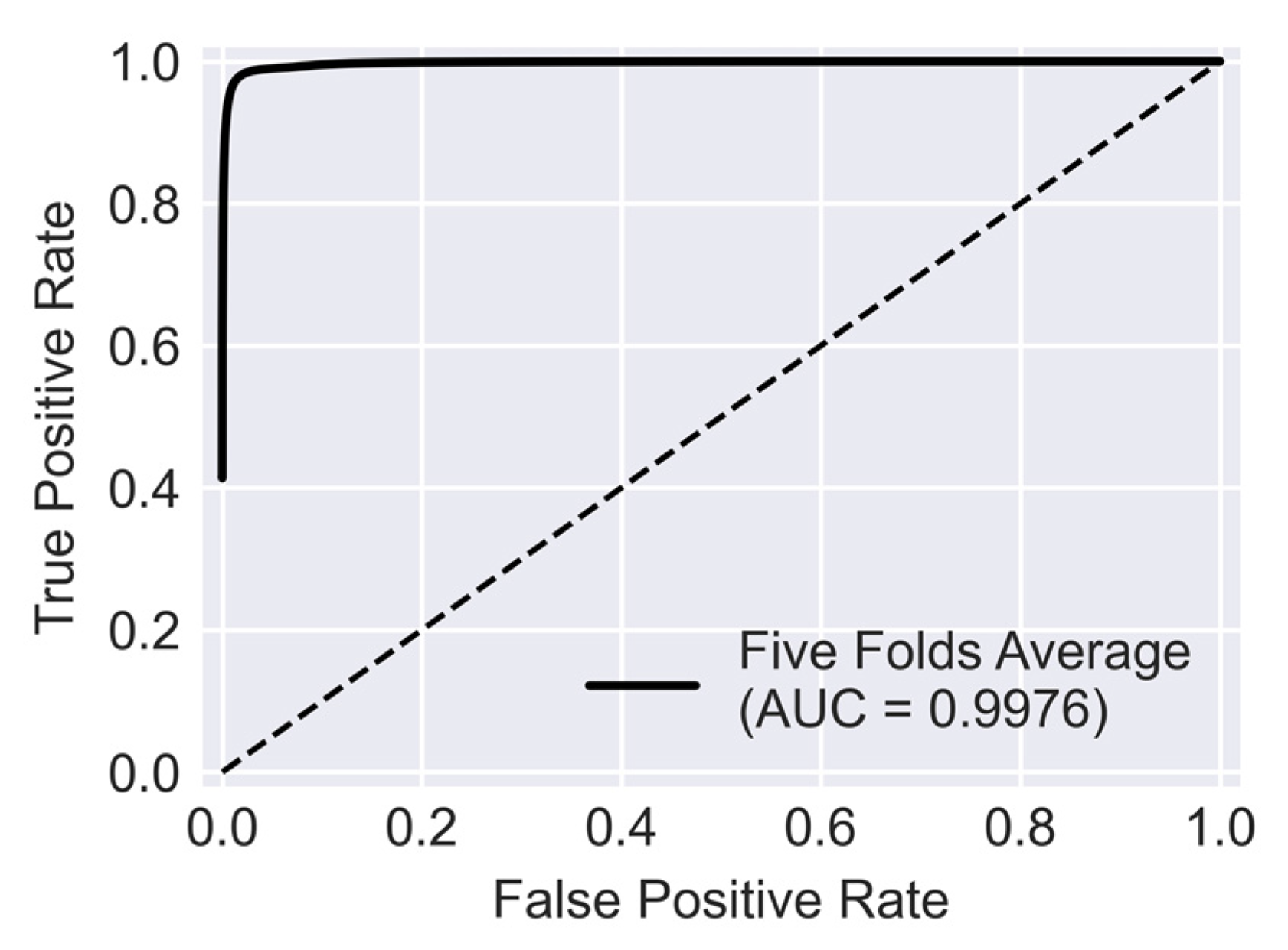

In

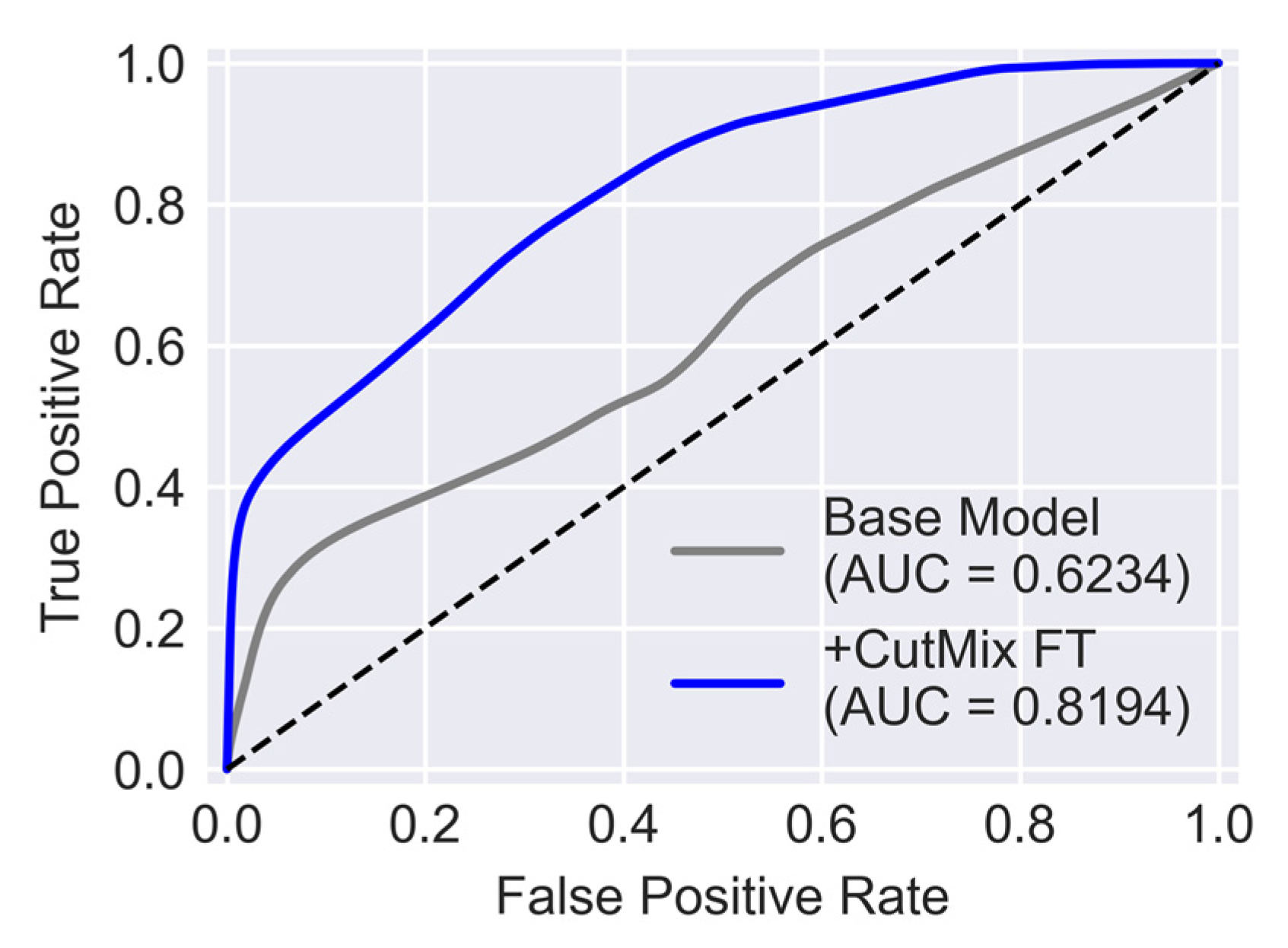

Figure 13, the ROC curve for the Base Model is compared with that of the model trained with CutMix synthesized data. Poor performance of the Base model when evaluated with the target dataset is evident by the fact that its ROC curve is closer to the diagonal line. Meanwhile, the model trained with CutMix data is relatively more skillful in separating crack pixels from background pixels. As a result, this model has an AUC equal to 0.8194 which, compared to the AUC of the Base Model equal to 0.6234, is a significant improvement.
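For reference, the AUC of a pixel-wise ROC curve equals the probability that a randomly chosen crack pixel receives a higher score than a randomly chosen background pixel, with ties counted as one half. The sketch below computes it with the rank-sum (Mann-Whitney) formulation, averaging ranks over tied scores. It is an illustrative implementation with a hypothetical function name, not the tool used to produce Figure 13.

```python
from typing import Sequence


def roc_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUC from per-pixel crack scores and binary labels (1 = crack)."""
    pairs = sorted(zip(scores, labels))
    n = len(pairs)
    n_pos = sum(1 for _, y in pairs if y == 1)
    n_neg = n - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need both crack and background pixels")
    rank_sum_pos = 0.0
    i = 0
    while i < n:
        j = i
        while j < n and pairs[j][0] == pairs[i][0]:
            j += 1  # pairs[i:j] share the same score
        avg_rank = (i + 1 + j) / 2.0  # mean of the 1-based ranks i+1 .. j
        rank_sum_pos += avg_rank * sum(1 for k in range(i, j) if pairs[k][1] == 1)
        i = j
    # Mann-Whitney U statistic of the crack pixels, normalized to [0, 1].
    return (rank_sum_pos - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)
```

A score vector that ranks every crack pixel above every background pixel gives an AUC of 1.0, and uninformative scores give about 0.5, which corresponds to the diagonal line of the ROC plot.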

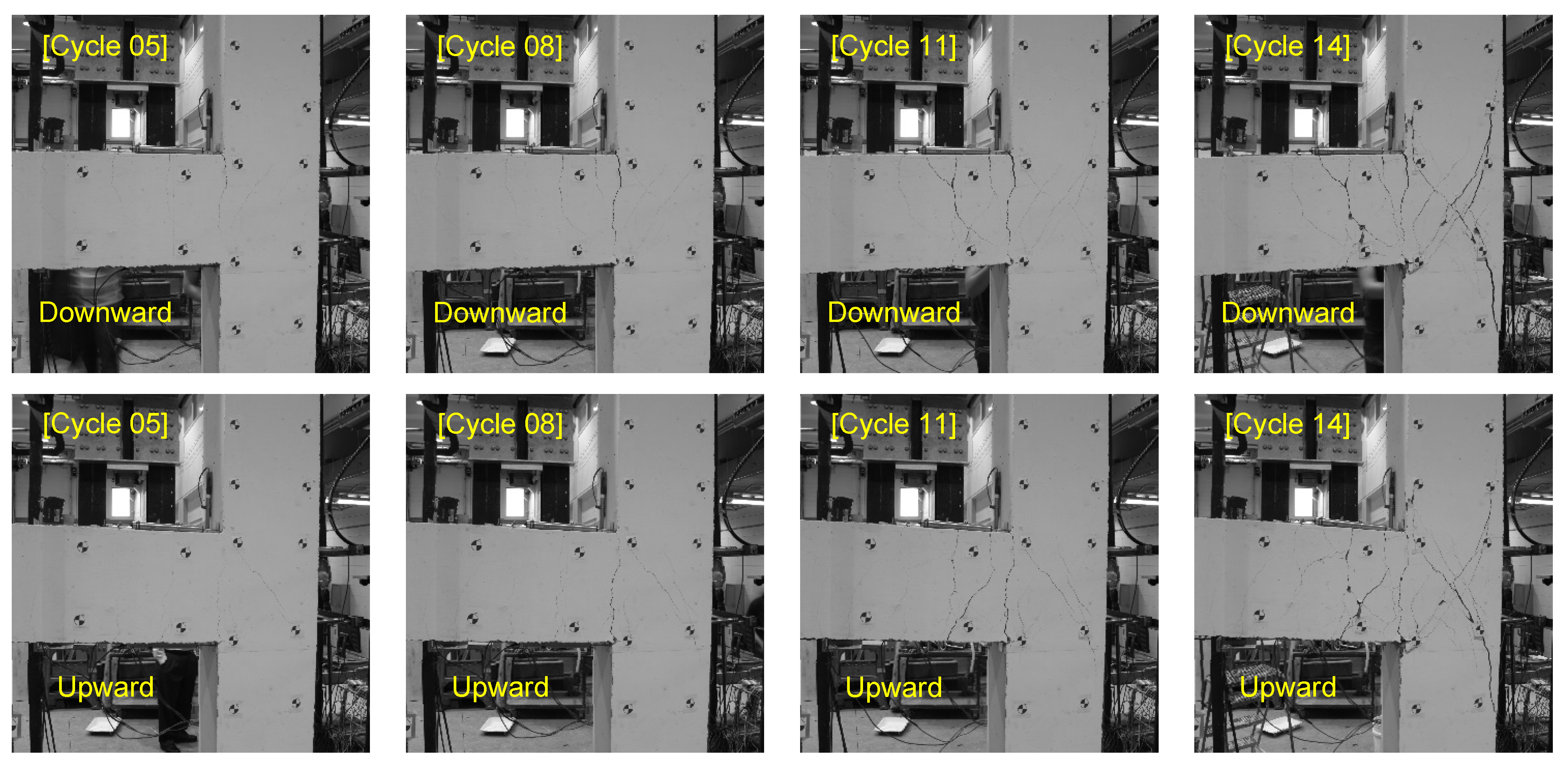

6. Temporal Data Fusion

Segmentation networks can easily miss thin cracks which results in a reduction in their recall. However, when a set of images from various cycles of a developing crack is available, detected thick cracks in future steps can be employed to locate thin cracks in the past steps. That is because crack propagation is a gradual process and thin cracks in images of earlier cycles turn into larger and more distinct cracks later. Thus, by fusing the data of multiple steps in the sequence of images crack pixels that are initially detected as background can be retrieved. The first step to aggregate the segmentation outcomes of multiple cycles is to correct the relative movements due to the structural deformations. Doing so requires tracking unique features in the images. In this study, as can be seen in

Figure 1, there are 14 fiducial circular markers attached to the surface of the concrete specimen [

37]. These markers can be utilized to track feature points in various images. The precise center point of each marker is found through the Normalized Cross-Correlation (NCC) method [

38]. Based on the center points of these markers a region of interest (ROI) can be defined similar to the green polygon shown in

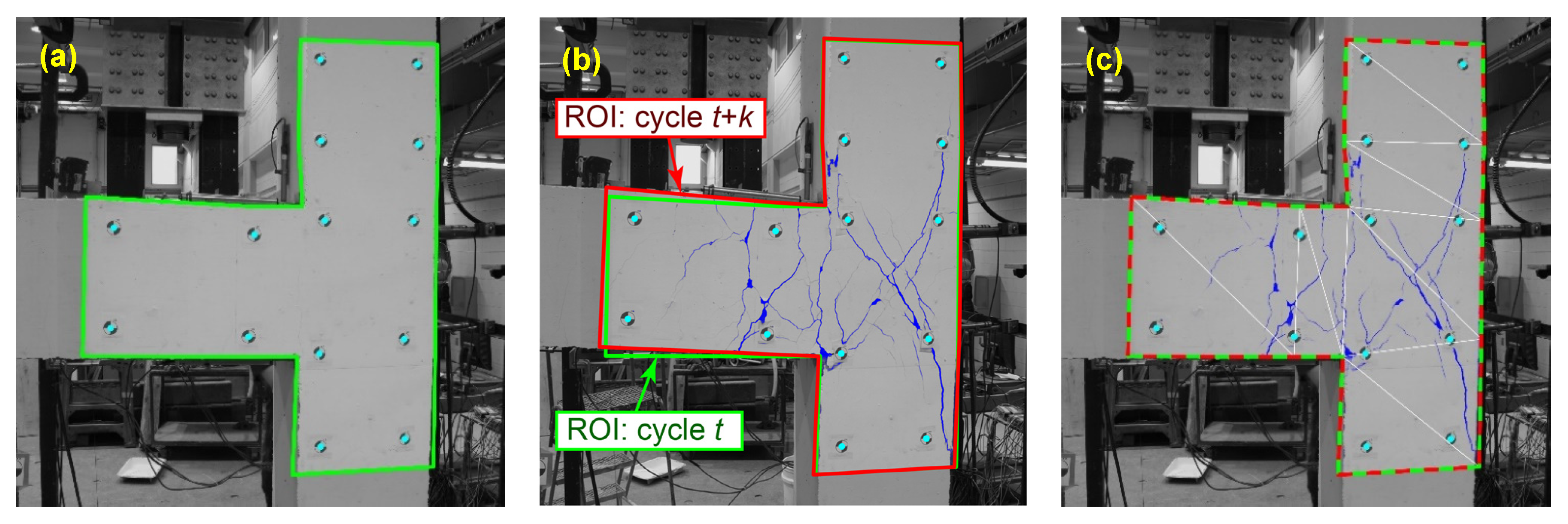

Figure 14a. In the defined ROI, each corner of the polygon has a fixed distance relative to the closest marker’s center point. Given that the position of the camera remained unchanged for all images, the center points can be used as key points to make a transformation between ROIs in different cycles and thus cancel the effect of structural deformations.
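The marker-localization step can be illustrated with a brute-force NCC template search. This sketch is a simplification under stated assumptions: it works on small grayscale grids, returns only integer positions (no sub-pixel refinement), and uses the hypothetical function name `ncc_match`. Practical implementations rely on optimized library routines.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]


def ncc_match(image: Grid, template: Grid) -> Tuple[int, int, float]:
    """Slide the template over the image and return (x, y, score) of the best NCC.

    (x, y) is the top-left corner of the best window; for a template centered on
    a marker, the marker center is approximately (x + tw // 2, y + th // 2).
    """
    th, tw = len(template), len(template[0])
    t_vals = [v for row in template for v in row]
    t_mean = sum(t_vals) / len(t_vals)
    t_dev = [v - t_mean for v in t_vals]
    t_norm = math.sqrt(sum(d * d for d in t_dev))
    best = (0, 0, -2.0)  # NCC lies in [-1, 1], so any valid window beats this
    for y in range(len(image) - th + 1):
        for x in range(len(image[0]) - tw + 1):
            w_vals = [image[y + i][x + j] for i in range(th) for j in range(tw)]
            w_mean = sum(w_vals) / len(w_vals)
            w_dev = [v - w_mean for v in w_vals]
            w_norm = math.sqrt(sum(d * d for d in w_dev))
            if w_norm == 0 or t_norm == 0:
                continue  # flat window or template: NCC is undefined
            score = sum(a * b for a, b in zip(t_dev, w_dev)) / (t_norm * w_norm)
            if score > best[2]:
                best = (x, y, score)
    return best
```

Because the window and template are both mean-centered and normalized, the score is insensitive to uniform changes in brightness and contrast between cycles.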

Figure 14b illustrates the image and crack mask of cycle t + k. In this image, the ROIs corresponding to steps t and t + k are drawn in green and red, respectively. By matching the key points of these two ROIs, a transformation can be created that maps the crack mask of cycle t + k back onto cycle t. Since the concrete specimen underwent a non-rigid deformation, a piecewise linear transformation was used in this study: the ROI of cycle t + k is divided into 12 triangles, and the key points of each triangle are matched with the corresponding points in step t via an affine transformation. Figure 14c displays the transformed mask overlaid on the image of step t. The affine transformations are performed using the MATLAB Image Processing Toolbox.
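The piecewise affine mapping can be sketched in plain Python. Each triangle pair defines one affine transform, solved exactly from its three vertex correspondences with Cramer's rule, and a point is mapped by the transform of the source triangle that contains it. The function names are hypothetical, and warping a full mask would normally use inverse mapping (iterating over destination pixels), which this point-wise sketch omits.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Triangle = Sequence[Point]


def affine_from_triangles(src: Triangle, dst: Triangle) -> List[List[float]]:
    """2x3 matrix A with A @ [x, y, 1] = [x', y'] mapping src vertices onto dst."""
    (x1, y1), (x2, y2), (x3, y3) = src
    det = x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)
    if det == 0:
        raise ValueError("degenerate source triangle")
    rows = []
    for k in range(2):  # k = 0 solves for x', k = 1 for y'
        u1, u2, u3 = dst[0][k], dst[1][k], dst[2][k]
        a = (u1 * (y2 - y3) + u2 * (y3 - y1) + u3 * (y1 - y2)) / det
        b = (x1 * (u2 - u3) + x2 * (u3 - u1) + x3 * (u1 - u2)) / det
        c = (x1 * (y2 * u3 - y3 * u2) + x2 * (y3 * u1 - y1 * u3)
             + x3 * (y1 * u2 - y2 * u1)) / det
        rows.append([a, b, c])
    return rows


def apply_affine(A: List[List[float]], p: Point) -> Point:
    x, y = p
    return (A[0][0] * x + A[0][1] * y + A[0][2], A[1][0] * x + A[1][1] * y + A[1][2])


def _edge(p: Point, a: Point, b: Point) -> float:
    return (p[0] - b[0]) * (a[1] - b[1]) - (a[0] - b[0]) * (p[1] - b[1])


def in_triangle(p: Point, tri: Triangle) -> bool:
    """True if p lies inside or on the boundary of tri (sign test on the edges)."""
    d = [_edge(p, tri[0], tri[1]), _edge(p, tri[1], tri[2]), _edge(p, tri[2], tri[0])]
    return not (any(v < 0 for v in d) and any(v > 0 for v in d))


def piecewise_map(p: Point, pairs: List[Tuple[Triangle, Triangle]]) -> Optional[Point]:
    """Map p (in ROI t + k) to ROI t using the triangle that contains it."""
    for src, dst in pairs:
        if in_triangle(p, src):
            return apply_affine(affine_from_triangles(src, dst), p)
    return None  # p lies outside every triangle of the ROI
```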

Following the described mapping process, as shown in Figure 15, a new projected crack mask can be defined for cycle t as the crack mask of cycle t + k, i.e., k steps ahead, mapped onto the ROI of the current step t. Figure 15 also shows the crack mask of the current step. Using Equation (13), the effect of multiple future steps can then be accumulated by defining an aggregated mask as the weighted average of all projected future predictions.

In this equation, each considered step is assigned a weight. Since closer steps are more strongly correlated with the current step, it is reasonable for them to receive higher weights. Thus, following a linear decay rule, the weight of step k is calculated as in Equation (14).
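Because Equations (13) and (14) are not reproduced here, the sketch below assumes one common linear-decay form: with n future steps, step k receives a weight proportional to n − k + 1, normalized so that the weights sum to one. The aggregated mask is then the element-wise weighted average of the projected masks. Both the normalization and the function names are assumptions.

```python
from typing import List

Grid = List[List[float]]


def linear_decay_weights(n: int) -> List[float]:
    """Assumed rule: step k = 1..n gets weight (n - k + 1), normalized to sum to 1."""
    total = n * (n + 1) / 2
    return [(n - k + 1) / total for k in range(1, n + 1)]


def weighted_future_mask(projected: List[Grid]) -> Grid:
    """Element-wise weighted average of projected masks for steps t+1, ..., t+n.

    projected[k - 1] is the crack mask of cycle t + k already mapped onto the
    ROI of step t (e.g., with a piecewise affine transformation).
    """
    n = len(projected)
    weights = linear_decay_weights(n)
    h, w = len(projected[0]), len(projected[0][0])
    return [[sum(weights[k] * projected[k][i][j] for k in range(n))
             for j in range(w)] for i in range(h)]
```

With three future steps, for instance, the weights are 1/2, 1/3, and 1/6, so a pixel must be marked as crack in the nearest step or in several later steps to reach a high aggregated value.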

The purpose of the proposed data fusion is to capture crack pixels that were falsely detected as background. Therefore, the future steps should affect only the background pixels of the current mask. Equation (15) is the mathematical representation of this constraint. In this equation, a matrix of ones with dimensions W × H and element-wise multiplication are used to confine the update to the background pixels. Additionally, the indicator function 𝕀(·) is equal to one for elements of the weighted-average mask that are greater than 0.5 and zero otherwise. The output of Equation (15) is the final crack mask resulting from the proposed temporal data fusion procedure.
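A form consistent with the description of Equation (15) is M_f = M_t + (J − M_t) ⊙ 𝕀(M̄_t), where M_t is the current mask, M̄_t the weighted future mask, J the matrix of ones, and ⊙ element-wise multiplication. These symbols are assumptions introduced here for illustration, since the equation itself is not reproduced. The sketch keeps every crack pixel of the current mask and switches a background pixel to crack only when the weighted future evidence exceeds 0.5.

```python
from typing import List


def fuse_masks(current: List[List[int]], weighted: List[List[float]],
               threshold: float = 0.5) -> List[List[int]]:
    """Assumed form M_f = M_t + (J - M_t) * I(M_bar > threshold), element-wise.

    current:  binary mask of step t (1 = crack, 0 = background).
    weighted: weighted average of the projected future masks for step t.
    """
    return [
        [c + (1 - c) * (1 if m > threshold else 0)  # only background can change
         for c, m in zip(crow, mrow)]
        for crow, mrow in zip(current, weighted)
    ]
```

Because the term (1 − c) vanishes for crack pixels, future steps can add crack pixels but never remove existing ones, so this step can only raise recall.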

In the current study, all future cycles are included in computing the weighted average used in Equation (15). Additionally, only images whose loading direction is consistent with that of the current step are considered in data fusion, because the propagation of cracks in concrete elements depends on the loading direction; the geometry of the crack patterns differs between upward and downward loading. As shown in Table 1, applying the described data fusion method to the target dataset has a significant effect on the accuracy of the resulting crack masks. The average precision and recall increase by 17.28% and 4.03%, respectively. Additionally, the average F1-score and mIoU improve to 0.6564 and 0.5975, respectively. It should be noted that the increase in precision can be attributed to the fact that false detections outside the defined ROI are filtered out during the data fusion process. The increase in recall, by contrast, is directly due to the data fusion itself: it captures false negatives using the information from future steps. As shown in Figure 10, data fusion improves the recall of all cycles from 1 to 13. Cycle 14, the final step in the sequence, cannot benefit from data fusion; thus, all of its metrics remain unchanged. To better evaluate the proposed data fusion method, the mIoU plot separates the increase due to ROI filtering from the improvement resulting from data fusion. As shown, data fusion has a significant impact, especially for the earlier cycles, with a relative improvement of up to 8.6%.

The weight defined in Equation (14) puts more emphasis on closer steps and discounts the effect of more distant steps. In this study, the weights are assigned based on a linear decay rule because of its simplicity. Under this rule, the only hyperparameter that needs to be selected is the total number of steps considered for data fusion, which depends on the rate at which cracks grow between cycles.

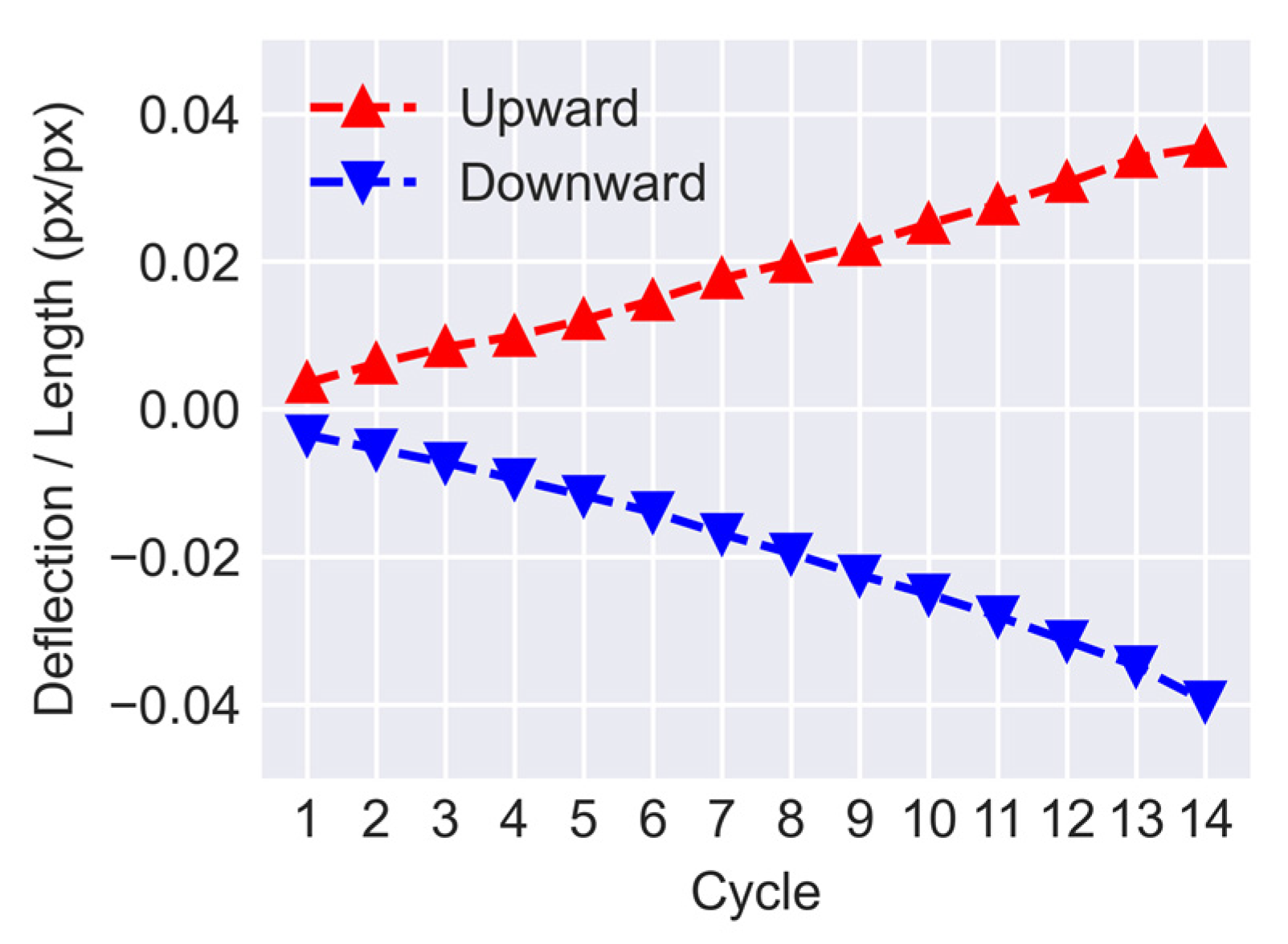

Figure 16 displays the ratio of the beam’s deflection to its length for cycles 1 to 14. The displacements and lengths are measured in the pixel domain using the positions of the circular markers attached to the specimen. As can be seen, for both downward and upward loading, the deflection ratio of the beam changes approximately linearly over time, with an average difference of 0.003 between consecutive cycles. A large interval between cycles can affect the outcome of crack mask transformations. Typically, transformed masks from more distant steps have larger misalignment errors relative to the current step, owing to larger deformations of the structural elements and the relative out-of-plane motion of the markers. The effect of such errors can be reduced either by using shorter intervals or by using more markers, which yields a finer mesh and a more accurate transformation.

Lastly, it should be noted that the proposed framework can detect cracks as narrow as approximately 2.0 pixels. Based on the camera parameters and the configuration of the setup, this corresponds to a minimum detectable crack width of about 1.0 mm. The ability of the framework to detect thinner cracks can be enhanced by using a camera with a longer focal length or by capturing images from a shorter distance.
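The conversion from pixels to millimeters follows from a pinhole-camera approximation with the camera axis roughly perpendicular to the surface: one pixel covers about distance × pixel pitch / focal length on the surface. The camera values below are hypothetical, chosen only so that 2 pixels correspond to 1.0 mm; they are not the parameters of this study's setup. The example also shows why a longer focal length or a shorter distance reduces the minimum detectable width.

```python
def ground_sampling_distance_mm(distance_mm: float, pixel_pitch_mm: float,
                                focal_length_mm: float) -> float:
    """Pinhole estimate of the surface length covered by one pixel (mm/pixel)."""
    return distance_mm * pixel_pitch_mm / focal_length_mm


def min_crack_width_mm(min_pixels: float, distance_mm: float,
                       pixel_pitch_mm: float, focal_length_mm: float) -> float:
    """Smallest detectable crack width in mm for a given pixel-level limit."""
    return min_pixels * ground_sampling_distance_mm(
        distance_mm, pixel_pitch_mm, focal_length_mm)


# Hypothetical setup: 2 m distance, 5 um pixels, 20 mm lens -> 0.5 mm per pixel.
baseline = min_crack_width_mm(2.0, 2000.0, 0.005, 20.0)
# Doubling the focal length halves the footprint of each pixel on the surface.
longer_lens = min_crack_width_mm(2.0, 2000.0, 0.005, 40.0)
```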

7. Summary and Conclusions

This study focuses on enhancing the accuracy of segmentation networks for concrete crack detection. Given the small size of publicly available datasets for concrete crack segmentation, it is demonstrated that directly applying trained networks to a new dataset with different characteristics can result in poor performance. The U-Net architecture, a popular segmentation network, was trained on a public dataset to establish a Base Model. It is shown that this model yields low precision when tested on a target dataset. The target dataset employed in this study consists of images obtained during a cyclic loading test of a concrete beam-column joint specimen in a laboratory setting. The low accuracy of the Base Model is mainly due to an overwhelming number of false positives. Therefore, to improve the precision of the network, the Base Model is fine-tuned with a synthetic dataset generated using the proposed CutMix method, in which background images from the target structure are combined with crack images from the public dataset. During the combination process, the distributions of the crack masks are taken into account, so that only the most relevant region of each crack image is mixed with the background and non-descriptive synthetic crack images are avoided. Fine-tuning the Base Model with the synthetic data increases the average precision from 51.21% to 60.53%, a significant improvement. It can therefore be concluded that, with the proposed method, the segmentation network can learn the unique background features of a target dataset while maintaining its crack segmentation ability. It should be noted that the proposed data synthesis technique assumes that images of the intact states of structures are available. This assumption is valid for long-term monitoring systems in which the history of a structure's visual information is recorded. However, it may be a limitation in circumstances where images of a structure's initial state cannot be acquired.

Moreover, a temporal data fusion method is developed in this study for cases where a sequence of crack images of the same concrete structure is available. The proposed data fusion method is a post-processing approach that leverages the information shared among multiple images to aggregate detection outcomes from other time steps. Doing so improves the accuracy of detection by retrieving pixels that were falsely detected as background. For the target dataset used in this study, this method is shown to improve the recall from 57.24% to 61.27%. It should be noted that in this study, the deformations of the specimen components, i.e., the beam and the column, are corrected using markers attached to the specimen. In more practical situations with no designated markers, feature detection algorithms such as the scale-invariant feature transform (SIFT) [39] can be employed to find key points on the structure. Overall, the proposed techniques are shown to improve the F1-score and mIoU of U-Net crack segmentation from 0.5113 and 0.4888 to 0.6564 and 0.5975, i.e., by 28.4% and 22.2%, respectively. Meanwhile, MCC increases from 0.0541 to 0.2831, a remarkable improvement for this accuracy metric. The achieved accuracy metrics remain below those obtained when U-Net is tested on a dataset similar to its training dataset; nevertheless, applying the proposed improvements boosts the performance of the network significantly.

The methods proposed in this study can be applied to long-term continuous inspection or laboratory testing of concrete structures. Here, images from a fixed camera are used to validate the methods. However, the methods are also applicable when images are captured by a non-stationary camera, as long as the camera's path is known or can be retrieved accurately. Furthermore, a direction for future study is the application of the proposed techniques to target datasets composed of images captured in the field.