Study on an Assembly Prediction Method of RV Reducer Based on IGWO Algorithm and SVR Model

Abstract

1. Introduction

1.1. Literature Review

1.2. Major Contributions

- An improved grey wolf optimization (IGWO) algorithm is proposed, with three improvements:

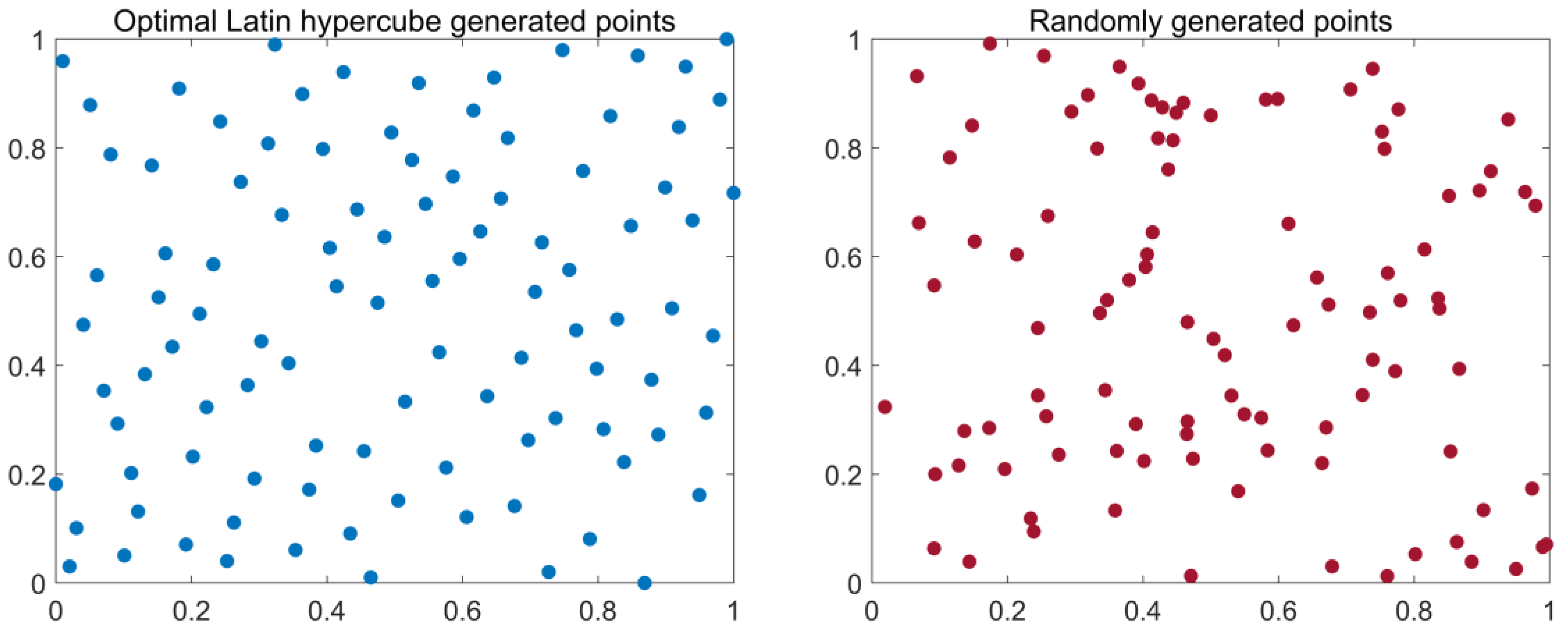

- The initial population is generated by optimal Latin hypercube sampling (OLHS) to increase its diversity.

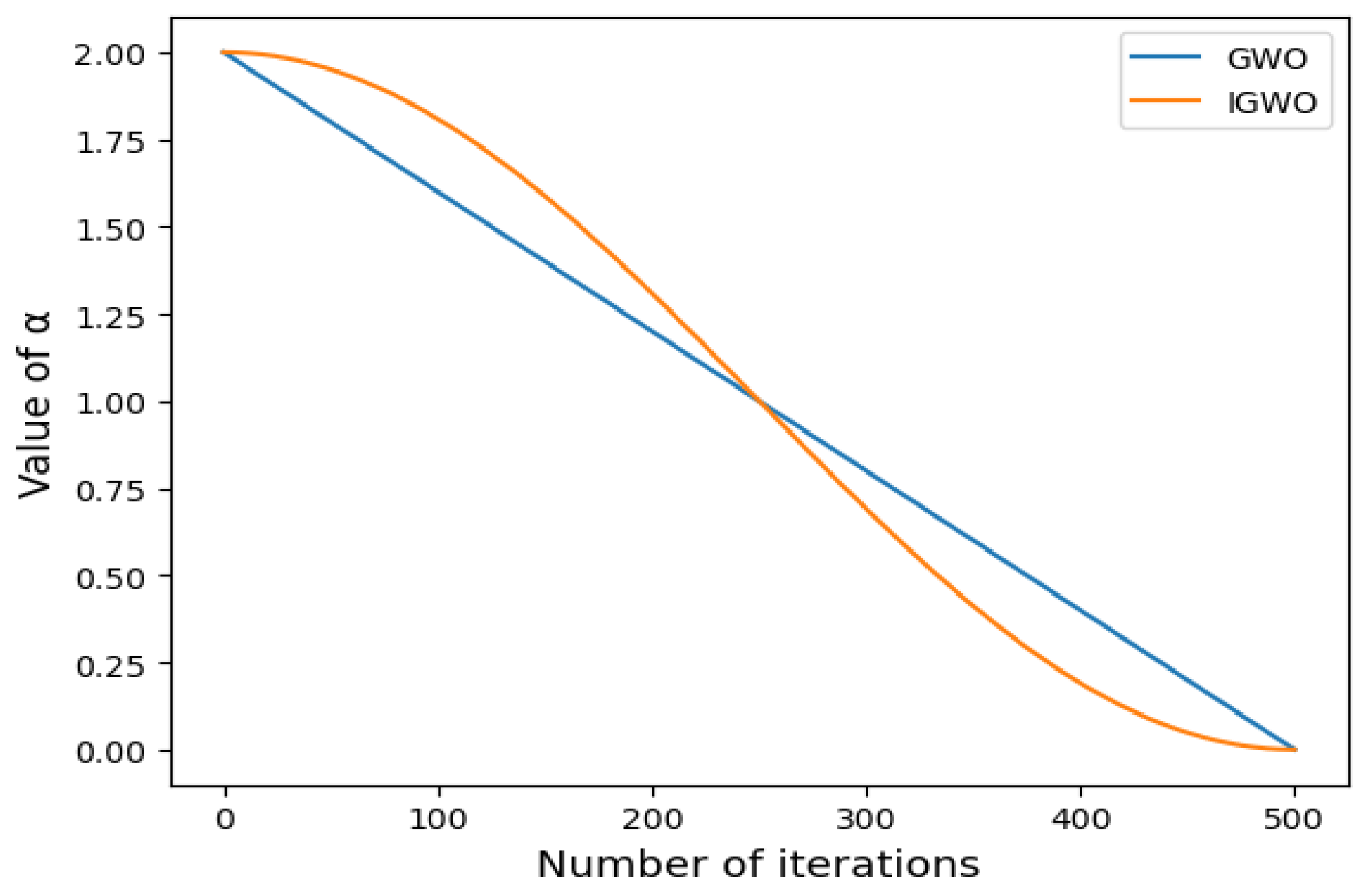

- The convergence factor is made nonlinear by using a cosine function, which strengthens global search in the early stage of the algorithm and speeds up convergence in the later stage.

- A dynamic weighting strategy in the position update speeds up convergence to the optimal solution.

- A new rotation error prediction method based on the IGWO algorithm and the support vector regression (SVR) model is established to predict rotation errors quickly and accurately.

- As verified on an RV reducer example, the IGWO-SVR method predicts rotation errors better than other rotation error prediction methods, and the IGWO algorithm also shows good parameter optimization performance.

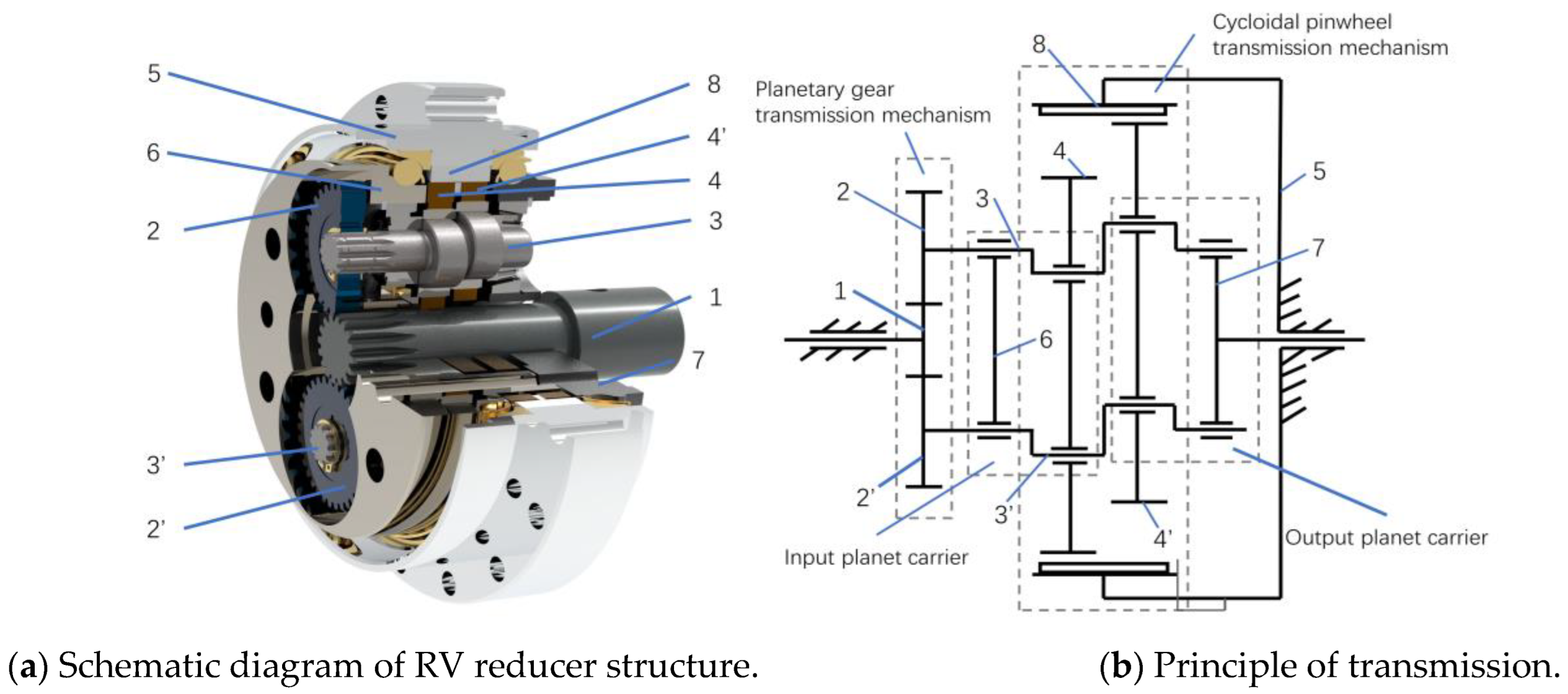

2. Structural Principle and Rotation Error of RV Reducer

2.1. Structural Principle Analysis of RV Reducer

2.2. Analysis of Influencing Factors of Rotation Error

3. The Improvement of the GWO Algorithm

3.1. GWO Algorithm
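
The standard GWO can be summarised in a short loop. The sketch below is a minimal NumPy implementation of the textbook update rules (α, β and δ leaders; coefficients A = 2a·r₁ − a and C = 2r₂; distance D = |C·X_leader − X|; new position equal to the mean of the three leader-guided estimates; a decreasing linearly from 2 to 0). It is an illustration, not the authors' code, and the function name `gwo` and its arguments are hypothetical.

```python
import numpy as np


def gwo(fitness, bounds, n_wolves=20, max_iter=100, seed=0):
    """Minimise `fitness` over a box using the standard Grey Wolf Optimizer."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(bounds, dtype=float).T
    dim = lb.size
    # Baseline GWO: uniform random initial pack.
    wolves = lb + rng.random((n_wolves, dim)) * (ub - lb)
    leaders = np.zeros((3, dim))        # alpha, beta, delta positions
    leader_fit = np.full(3, np.inf)     # their fitness values

    for t in range(max_iter + 1):
        fit = np.array([fitness(w) for w in wolves])
        # Keep the three best positions seen so far as alpha, beta, delta.
        pool = np.vstack([leaders, wolves])
        pool_fit = np.concatenate([leader_fit, fit])
        best3 = np.argsort(pool_fit)[:3]
        leaders, leader_fit = pool[best3], pool_fit[best3]
        if t == max_iter:
            break

        a = 2.0 - 2.0 * t / max_iter    # linear convergence factor: 2 -> 0
        moves = np.zeros_like(wolves)
        for leader in leaders:
            A = 2.0 * a * rng.random((n_wolves, dim)) - a
            C = 2.0 * rng.random((n_wolves, dim))
            D = np.abs(C * leader - wolves)  # distance to this leader
            moves += leader - A * D
        # New position = mean of the three leader-guided estimates, kept in bounds.
        wolves = np.clip(moves / 3.0, lb, ub)

    return leaders[0], leader_fit[0]
```

For example, `gwo(lambda x: float(np.sum(x ** 2)), [(-100, 100)] * 5)` minimises a five-dimensional sphere function; the defaults of 20 wolves and 100 iterations mirror the IGWO settings listed in the parameter table.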

3.2. Improved GWO Algorithm

3.2.1. Wolf Pack Initialization by the OLHS Method
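
Optimal Latin hypercube sampling can be approximated in several ways. The sketch below assumes a simple maximin criterion in the spirit of Johnson et al. and Morris and Mitchell: it draws several random Latin hypercube designs and keeps the one whose smallest pairwise distance is largest. The paper's exact design-optimization procedure may differ, and `olhs_init` and `n_candidates` are hypothetical names.

```python
import numpy as np


def latin_hypercube(n, dim, rng):
    """One random Latin hypercube design with n points in [0, 1)^dim."""
    # Each column is a random permutation of the n strata, jittered inside each stratum.
    strata = np.column_stack([rng.permutation(n) for _ in range(dim)])
    return (strata + rng.random((n, dim))) / n


def min_pairwise_distance(points):
    """Smallest Euclidean distance between any two points of a design."""
    diff = points[:, None, :] - points[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    return dist[np.triu_indices(len(points), k=1)].min()


def olhs_init(n_wolves, bounds, n_candidates=50, seed=0):
    """Initial wolf pack from the best (maximin) of several random LHS designs."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(bounds, dtype=float).T
    best, best_score = None, -np.inf
    for _ in range(n_candidates):
        design = latin_hypercube(n_wolves, lb.size, rng)
        score = min_pairwise_distance(design)
        if score > best_score:
            best, best_score = design, score
    # Map the unit-cube design onto the search box.
    return lb + best * (ub - lb)
```

For instance, `olhs_init(20, [(0.01, 100)] * 2)` yields 20 wolves for two parameters searched in [0.01, 100], the scope and pack size given in the parameter table. Every stratum of every dimension is occupied exactly once, which is what gives the pack its even coverage.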

3.2.2. Nonlinear Convergence Factor
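
The cosine formula is not spelled out in this text, so the sketch below assumes one common form, a(t) = 2·cos(πt / (2T)), and compares it with the linear factor of the standard GWO. Because cos(πx/2) ≥ 1 − x on [0, 1], the cosine factor stays larger early on (|A| can exceed 1 for longer, favouring global search) and its slope at t = T is −π/T instead of −2/T, so it drops faster at the end (favouring convergence).

```python
import math


def linear_factor(t, max_iter):
    """Convergence factor of the standard GWO: decreases linearly from 2 to 0."""
    return 2.0 * (1.0 - t / max_iter)


def cosine_factor(t, max_iter):
    """Assumed cosine nonlinear factor: 2 -> 0, slow at first, fast at the end."""
    return 2.0 * math.cos(math.pi * t / (2.0 * max_iter))


if __name__ == "__main__":
    T = 100
    for t in (0, 25, 50, 75, 100):
        print(t, round(linear_factor(t, T), 3), round(cosine_factor(t, T), 3))
```

Swapping `cosine_factor` for the linear schedule is the only change this improvement makes to the GWO loop; the rest of the update is untouched.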

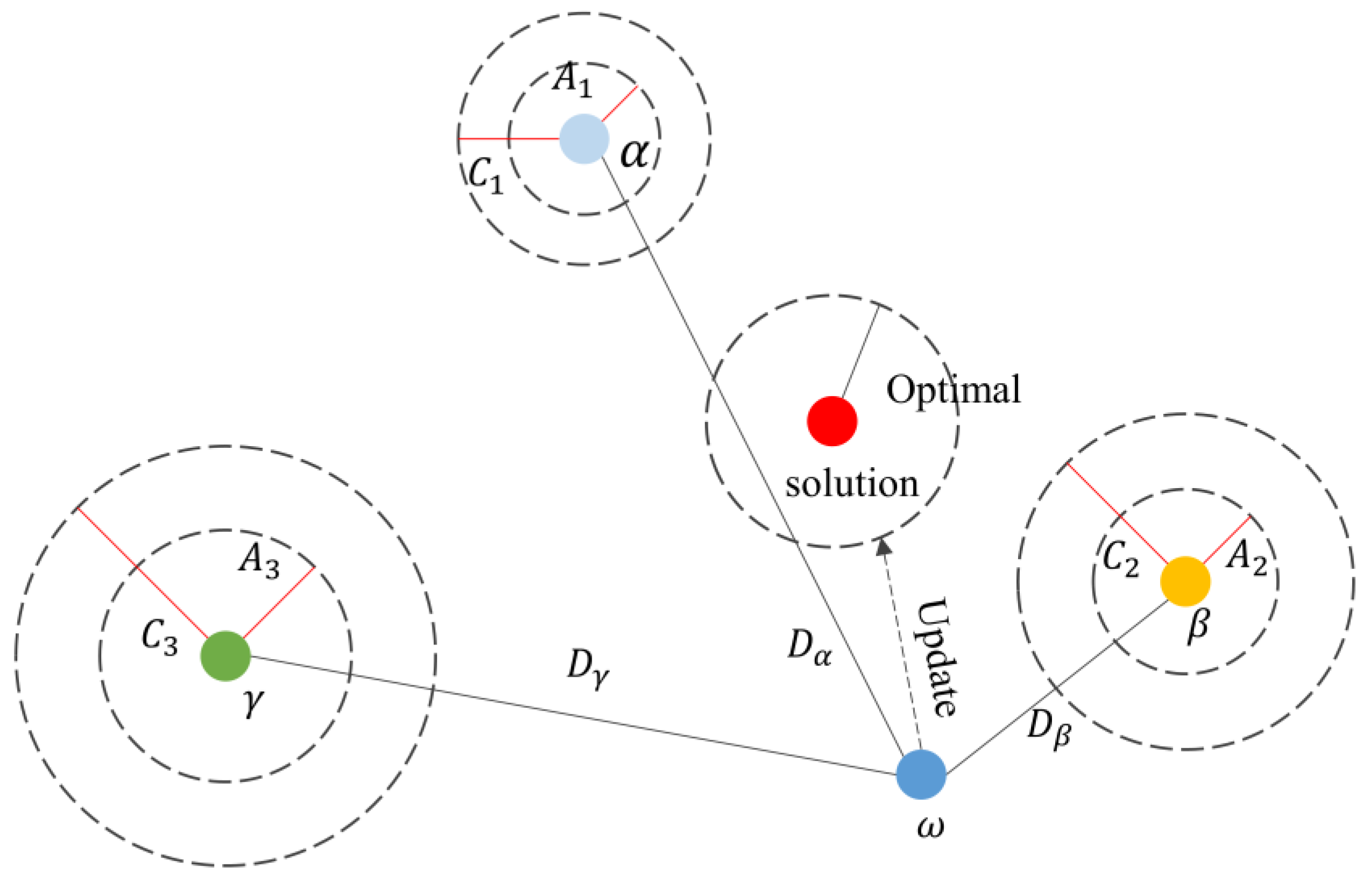

3.2.3. Weight-Based Grey Wolf Position Update
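
In the standard GWO the new position is the plain mean (X₁ + X₂ + X₃)/3 of the three leader-guided estimates; a dynamic weighting replaces this mean with a weighted sum whose weights change from iteration to iteration. The exact weights of the paper are not reproduced here, so the sketch below assumes one simple variant in which each leader's weight is proportional to the inverse of its (non-negative) fitness, so that the α wolf pulls hardest. `leader_weights` and `weighted_position` are hypothetical helpers.

```python
def leader_weights(f_alpha, f_beta, f_delta, eps=1e-12):
    """Assumed dynamic weights: inverse fitness, normalised to sum to 1.

    Assumes non-negative fitness values (e.g. a cross-validated MSE), so a
    smaller fitness gives a larger weight.
    """
    fits = (f_alpha, f_beta, f_delta)
    if min(fits) < 0:
        raise ValueError("this weighting assumes non-negative fitness values")
    inv = [1.0 / (f + eps) for f in fits]
    total = sum(inv)
    return [v / total for v in inv]


def weighted_position(x1, x2, x3, fits):
    """Replace the plain mean (X1 + X2 + X3) / 3 with a fitness-weighted sum."""
    w1, w2, w3 = leader_weights(*fits)
    return [w1 * a + w2 * b + w3 * c for a, b, c in zip(x1, x2, x3)]
```

With equal fitness values the weights reduce to 1/3 each, recovering the standard GWO mean; as the leaders' fitness values separate, the update leans towards α.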

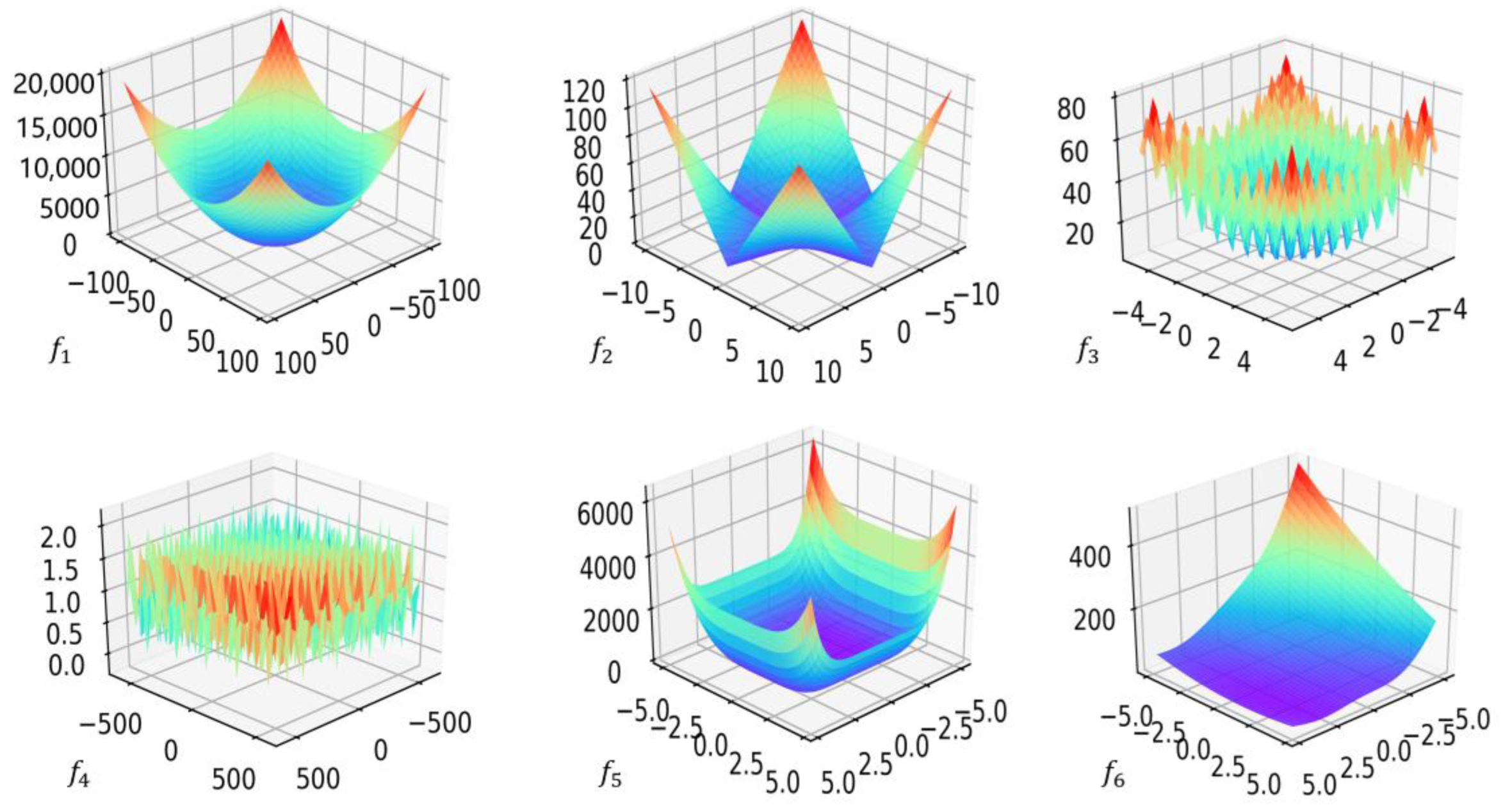

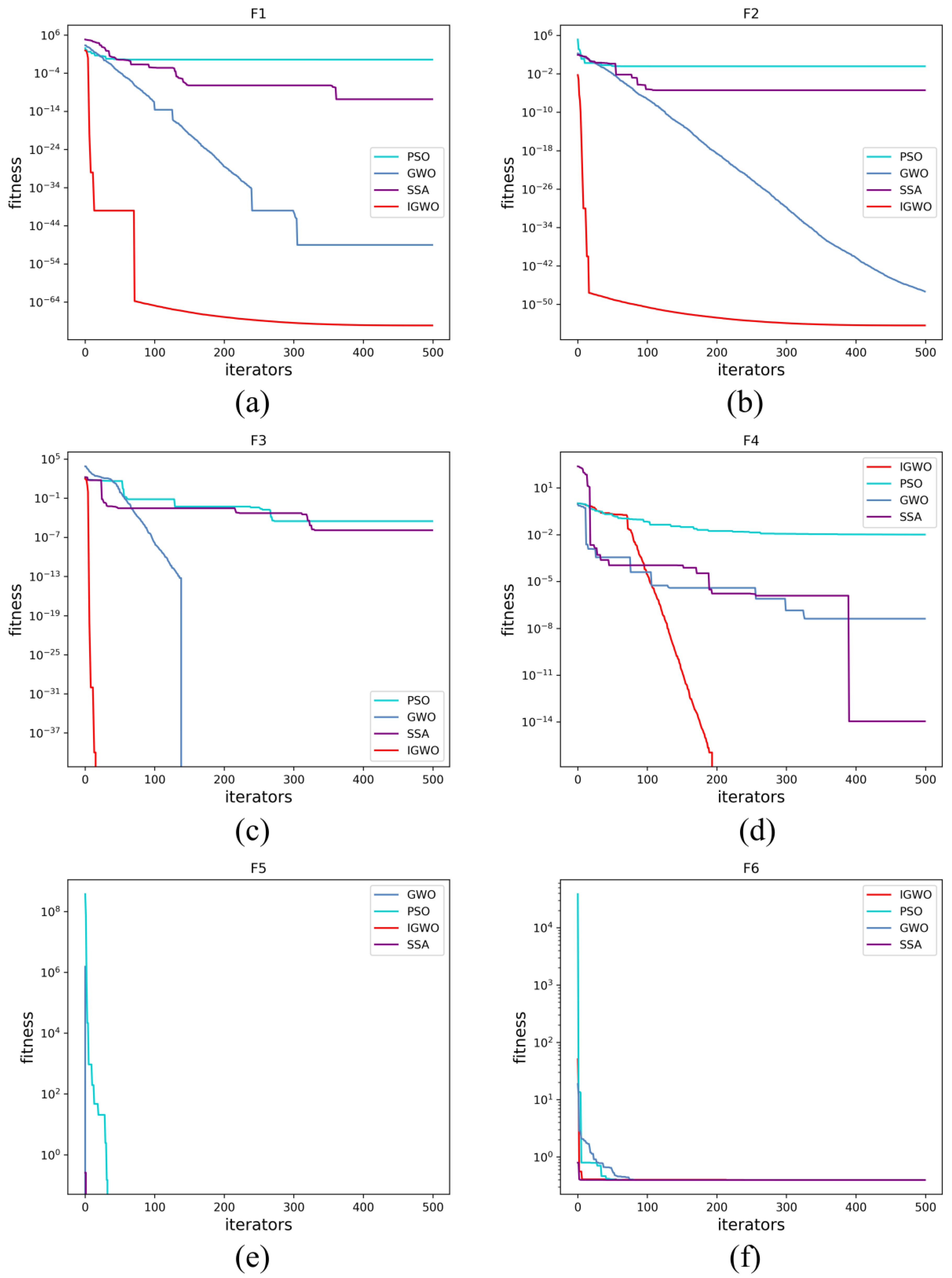

3.2.4. Validation of IGWO Algorithm

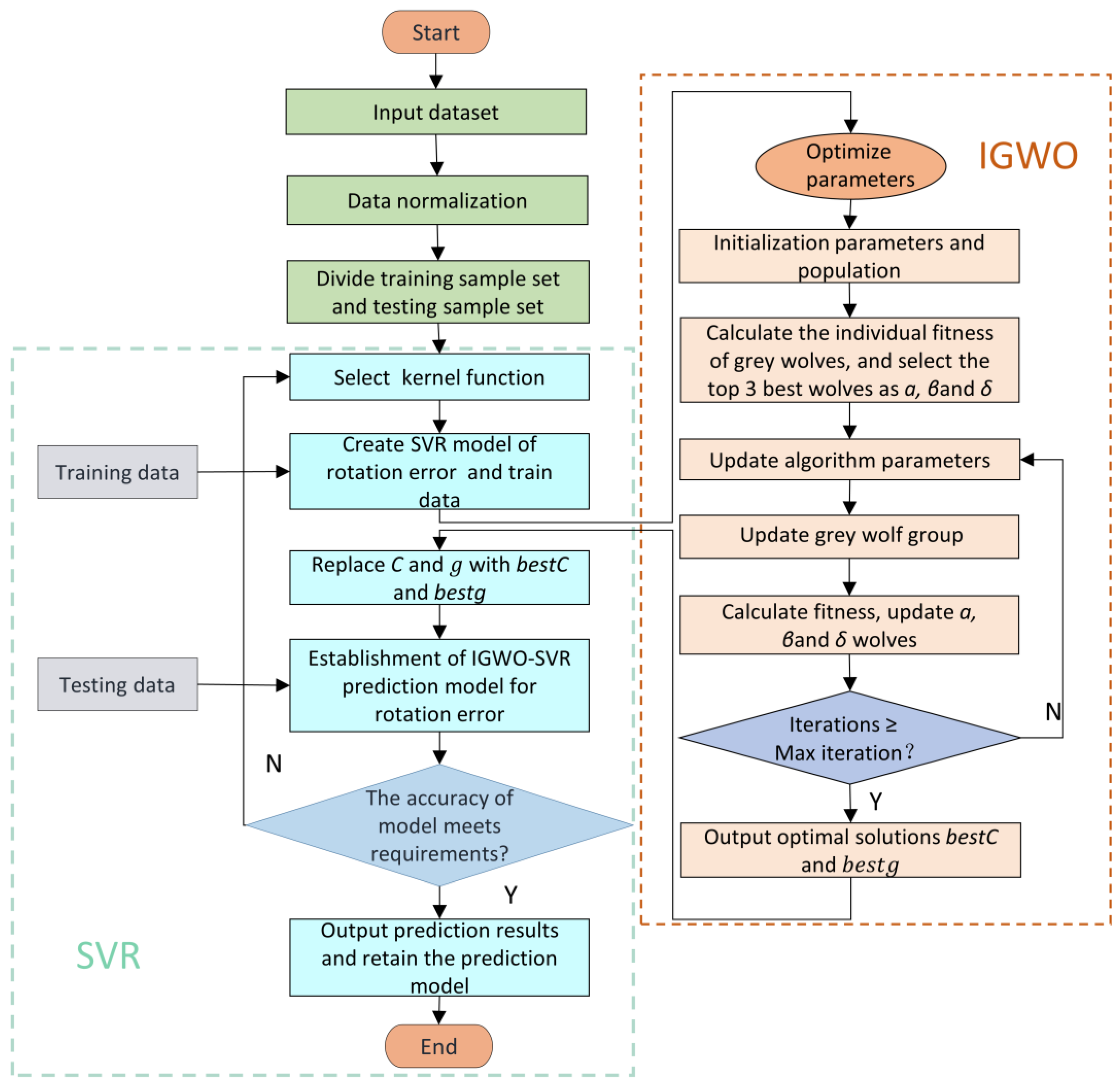

4. Rotation Error Prediction Model Based on IGWO-SVR

4.1. SVR Model
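
A trained ε-SVR predicts f(x) = Σᵢ (αᵢ − αᵢ*)·K(xᵢ, x) + b and is fitted with the ε-insensitive loss, which ignores errors smaller than ε; the penalty factor C trades that loss against model flatness. The sketch below assumes an RBF kernel K(u, v) = exp(−γ‖u − v‖²), a common choice that this text does not confirm, and only evaluates these pieces for given dual coefficients; it does not solve the training problem. The names `gamma`, `eps` and `dual_coef` are illustrative.

```python
import math


def rbf_kernel(u, v, gamma):
    """K(u, v) = exp(-gamma * ||u - v||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(u, v)))


def svr_predict(x, support_vectors, dual_coef, intercept, gamma):
    """f(x) = sum_i (alpha_i - alpha_i*) K(x_i, x) + b for an already-trained SVR."""
    return sum(c * rbf_kernel(sv, x, gamma)
               for sv, c in zip(support_vectors, dual_coef)) + intercept


def eps_insensitive_loss(y_true, y_pred, eps):
    """Zero inside the epsilon tube, linear outside it."""
    return max(0.0, abs(y_true - y_pred) - eps)
```

In scikit-learn, a fitted `sklearn.svm.SVR` exposes the same quantities as `support_vectors_`, `dual_coef_` and `intercept_`.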

4.2. Process of Building Rotation Error Prediction Model

5. Results and Discussion

5.1. Preprocessing of Data
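
If the inputs are min-max scaled before training, a common preprocessing step for an RBF-kernel SVR so that no single manufacturing error dominates the distance ‖xᵢ − x‖², the transform and its inverse can be sketched as follows. This is an assumption rather than the paper's stated procedure, and the helper names are hypothetical; the ranges must be fitted on the training samples only and then reused for the test samples.

```python
def fit_min_max(rows):
    """Return per-column (min, max) pairs computed from training rows."""
    return [(min(col), max(col)) for col in zip(*rows)]


def transform(rows, ranges):
    """Scale every column to [0, 1]; constant columns are mapped to 0."""
    return [[(v - lo) / (hi - lo) if hi > lo else 0.0
             for v, (lo, hi) in zip(row, ranges)]
            for row in rows]


def inverse_transform(rows, ranges):
    """Undo `transform`, e.g. to report predictions in the original units."""
    return [[v * (hi - lo) + lo for v, (lo, hi) in zip(row, ranges)]
            for row in rows]
```

For illustration, fitting on the first two input columns of samples 1 to 3 in the sample table, `fit_min_max([[0.156, 0.500], [0.250, 0.350], [0.750, 0.650]])` returns the ranges `[(0.156, 0.75), (0.35, 0.65)]`.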

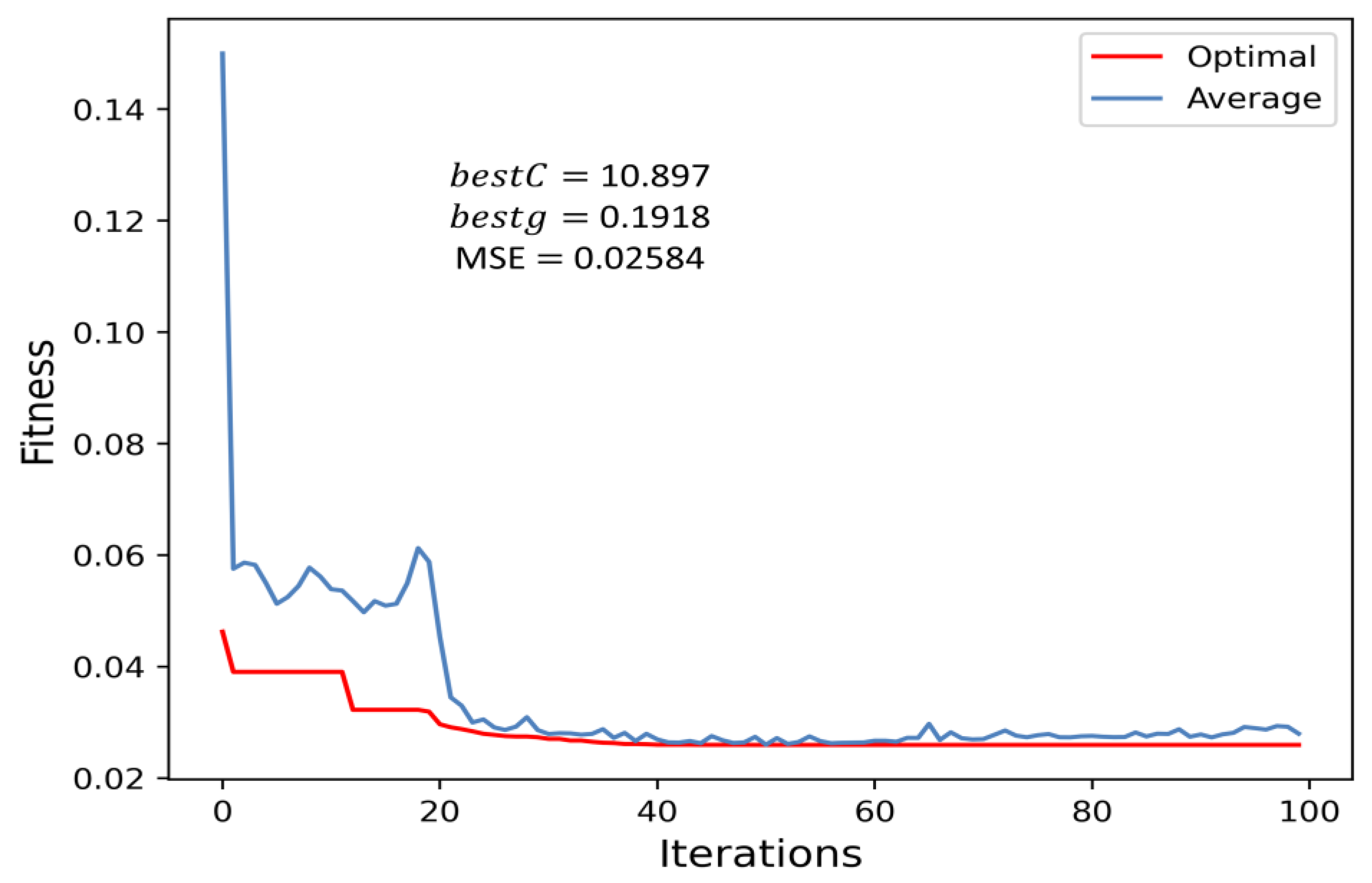

5.2. Optimization Results of Parameters

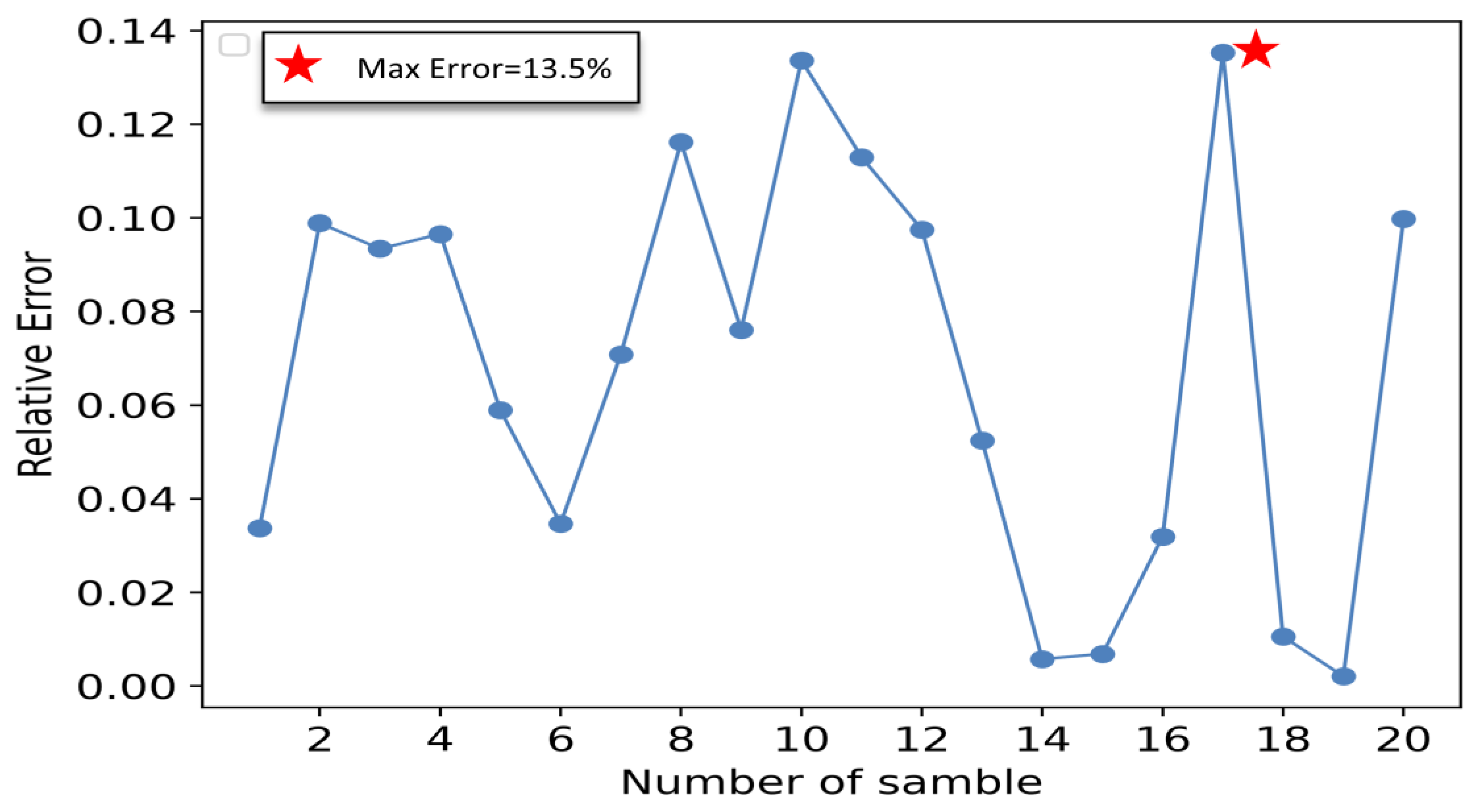

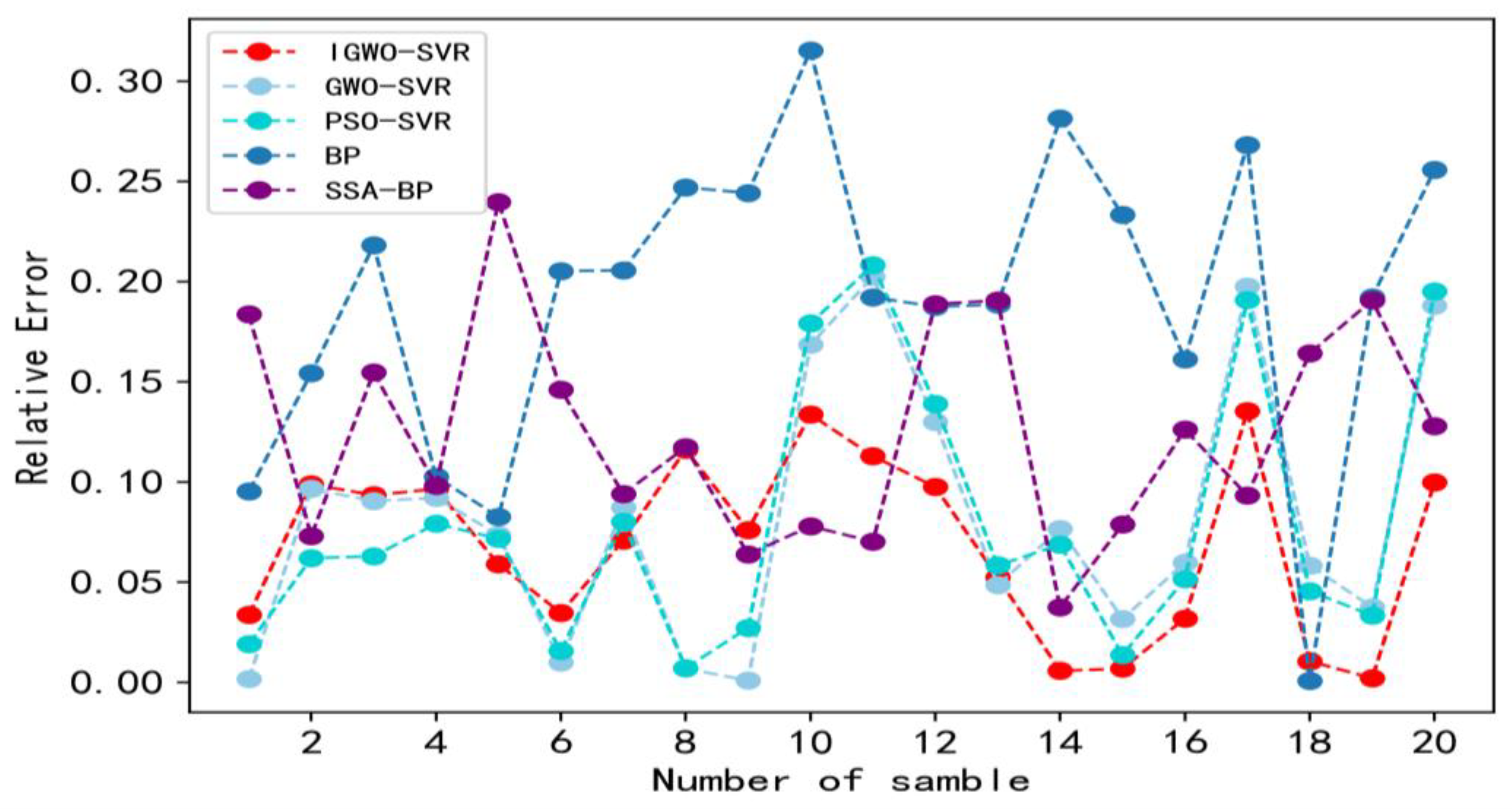

5.3. Analysis of Predictive Effect of the IGWO-SVR Model

5.4. Performance Evaluation of Model
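
The evaluation table reports MSE, MRE and MAE. The sketch below assumes the usual definitions, with MRE taken as the mean relative error |yᵢ − ŷᵢ| / |yᵢ|; under that reading, the maximum relative error of 13.5% quoted in the conclusions is the largest of the same per-sample ratios. The function names are illustrative.

```python
def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def relative_errors(y_true, y_pred):
    """Per-sample |y - y_hat| / |y|; assumes no true value is zero."""
    return [abs(t - p) / abs(t) for t, p in zip(y_true, y_pred)]


def mre(y_true, y_pred):
    """Mean relative error (assumed definition of MRE)."""
    errs = relative_errors(y_true, y_pred)
    return sum(errs) / len(errs)


def max_relative_error(y_true, y_pred):
    """Largest per-sample relative error."""
    return max(relative_errors(y_true, y_pred))
```

Because MSE squares the residuals, it penalises the occasional large miss more heavily than MAE does, which is why both are reported alongside the relative measure.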

6. Conclusions

- (1) The traditional GWO algorithm is enhanced with the OLHS method, a cosine nonlinear convergence factor, and a dynamic weight strategy. Verification shows that the resulting IGWO algorithm has good optimization performance.

- (2) The IGWO-SVR prediction model for the rotation error of the RV reducer is established by using the IGWO algorithm to optimize the C and of the SVR. Its MSE is 0.026, its running time is 7.843 s, and its maximum relative error is 13.5%, which meets the enterprise's requirements for production takt time and product quality.

- (3) A comparison of the IGWO-SVR method with other methods shows that it provides better prediction performance and that the IGWO algorithm has better parameter optimization performance.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Yin, Y. Research on On-line Monitoring and Rating of Transmission Accuracy of Industrial Robot RV Reducer. Master’s Thesis, China University of Mining and Technology, Xuzhou, China, 2021.

- Zhao, L.; Zhang, F.; Li, P.; Zhu, P.; Yang, X.; Jiang, W.; Xavior, A.; Cai, J.; You, L. Analysis on Dynamic Transmission Accuracy for RV Reducer. In MATEC Web of Conferences; EDP Sciences: Les Ulis, France, 2017; Volume 100.

- Blanche, J.G.; Yang, D.C.H. Cycloid drives with machining tolerances. J. Mech. Transm. Autom. Des. 1989, 111, 337–344.

- Ishida, T.; Wang, H.; Hidaka, T. A study on the turning error of the K-H-V planetary gear device using cycloid gears (2nd report, effects of various processing and assembly errors on turning error). Trans. Jpn. Soc. Mech. Eng. 1994, 60, 278–285.

- Zhang, Y.H.; Chen, Z.; He, W.D. Virtual Prototype Simulation and Transmission Error Analysis for RV Reducer. Appl. Mech. Mater. 2015, 789–790, 226–230.

- Tong, X.T. Research on Dynamic Transmission Error of RV Reducer based on Virtual Prototype technology. Master’s Thesis, Zhejiang University of Technology, Hangzhou, China, 2019.

- Sun, H.Z.; Yuan, H.B.; Yu, B. The RV reducer transmission error prediction based on SSA-BP. J. Mech. Transm. 2022, 46, 149–154.

- Dai, W.; Zhang, C.Y.; Meng, L.L.; Li, J.H.; Xiao, P.F. A support vector machine milling cutter wear prediction model based on deep learning and feature post-processing. Comput. Integr. Manuf. Syst. 2022, 26, 2331–2343.

- Balogun, A.L.; Rezaie, F.; Pham, Q.B.; Gigović, L.; Drobnjak, S.; Aina, Y.A.; Lee, S. Spatial prediction of landslide susceptibility in western Serbia using hybrid support vector regression (SVR) with GWO, BAT and COA algorithms. Geosci. Front. 2021, 12, 101104.

- Zhang, Z.; Hong, W.C. Application of variational mode decomposition and chaotic grey wolf optimizer with support vector regression for forecasting electric loads. Knowl.-Based Syst. 2021, 228, 107297.

- Peng, S.; Zhang, Z.; Liu, E.; Liu, W.; Qiao, W. A new hybrid algorithm model for prediction of internal corrosion rate of multiphase pipeline. J. Nat. Gas Sci. Eng. 2021, 85, 103716.

- Nguyen, H.; Choi, Y.; Bui, X.N.; Nguyen-Thoi, T. Predicting Blast-Induced Ground Vibration in Open-Pit Mines Using Vibration Sensors and Support Vector Regression-Based Optimization Algorithms. Sensors 2019, 20, 132.

- Zhang, B.; Li, K.; Hu, Y.; Ji, K.; Han, B. Prediction of Backfill Strength Based on Support Vector Regression Improved by Grey Wolf Optimization. J. Shanghai Jiaotong Univ. (Sci.) 2022, 1–9. Available online: https://link.springer.com/article/10.1007/s12204-022-2408-7 (accessed on 3 November 2022).

- Tang, J.; Liu, G.; Pan, Q. A review on representative swarm intelligence algorithms for solving optimization problems: Applications and trends. IEEE/CAA J. Autom. Sin. 2021, 8, 1627–1643.

- Liu, Z.F.; Li, L.L.; Liu, Y.W.; Liu, J.Q.; Li, H.Y.; Shen, Q. Dynamic economic emission dispatch considering renewable energy generation: A novel multi-objective optimization approach. Energy 2021, 235, 121407.

- Li, L.L.; Liu, Z.F.; Tseng, M.L.; Zheng, S.J.; Lim, M.K. Improved tunicate swarm algorithm: Solving the dynamic economic emission dispatch problems. Appl. Soft Comput. 2021, 108, 107504.

- Tubishat, M.; Ja’afar, S.; Alswaitti, M.; Mirjalili, S.; Idris, N.; Ismail, M.A.; Omar, M.S. Dynamic salp swarm algorithm for feature selection. Expert Syst. Appl. 2021, 164, 113873.

- Zhang, H.; Liu, T.; Ye, X.; Heidari, A.A.; Liang, G.; Chen, H.; Pan, Z. Differential evolution-assisted salp swarm algorithm with chaotic structure for real-world problems. Eng. Comput. Germany 2022, 1–35.

- Yildiz, A.R.; Abderazek, H.; Mirjalili, S. A comparative study of recent non-traditional methods for mechanical design optimization. Arch. Comput. Methods Eng. 2020, 27, 1031–1048.

- Abderazek, H.; Hamza, F.; Yildiz, A.R.; Gao, L.; Sait, S.M. A comparative analysis of the queuing search algorithm, the sine-cosine algorithm, the ant lion algorithm to determine the optimal weight design problem of a spur gear drive system. Mater. Test. 2021, 63, 442–447.

- Wang, Y.; Ni, C.; Fan, X.; Qian, Q.; Jin, S. Cellular differential evolutionary algorithm with double-stage external population-leading and its application. Eng. Comput. Germany 2022, 38, 2101–2120.

- Kamarzarrin, M.; Refan, M.H. Intelligent Sliding Mode Adaptive Controller Design for Wind Turbine Pitch Control System Using PSO-SVM in Presence of Disturbance. J. Control Autom. Electr. Syst. 2020, 31, 912–925.

- Yang, Z.; Wang, Y.; Kong, C. Remaining Useful Life Prediction of Lithium-Ion Batteries Based on a Mixture of Ensemble Empirical Mode Decomposition and GWO-SVR Model. IEEE Trans. Instrum. Meas. 2021, 70, 1–11.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Zhang, X.F.; Wang, X.Y. A Survey of Gray Wolf Optimization Algorithms. Comput. Sci. 2022, 46, 30–38.

- Zhao, X.; Ren, S.; Quan, H.; Gao, Q. Routing Protocol for Heterogeneous Wireless Sensor Networks Based on a Modified Grey Wolf Optimizer. Sensors 2020, 20, 820.

- Jin, S.S.; Tong, X.T.; Wang, Y.L. Influencing Factors on Rotate Vector Reducer Dynamic Transmission Error. Int. J. Autom. Technol. 2019, 13, 545–556.

- Liu, T.D.; Lu, M.; Shao, G.F.; Wang, R.Y. Transmission Error Modeling and Optimization of Robot Reducer. Control Theory Appl. 2022, 37, 215–221.

- Lei, B.; Jin, Y.T.; Liu, H.L. Job Scheduling for Cross-layer Shuttle Vehicle Storage System with FJSP Problem. Comput. Integr. Manuf. Syst. 2022, 1, 14.

- Johnson, M.E.; Moore, L.M.; Ylvisaker, D. Minimax and maximin distance designs. J. Stat. Plan. Inference 1990, 26, 131–148.

- Morris, M.D.; Mitchell, T.J. Exploratory designs for computational experiments. J. Stat. Plan. Inference 1995, 43, 381–402.

- Lin, L.; Chen, F.J.; Xie, J.L.; Li, F. Online Public Opinion Prediction Based on Improved Grey Wolf Optimized Support Vector Regression. Syst. Eng. Theory Pract. 2022, 42, 487–498.

- Hou, Y.; Gao, H.; Wang, Z.; Du, C. Improved Grey Wolf Optimization Algorithm and Application. Sensors 2022, 22, 3810.

- Zhu, A.J.; Xu, C.P.; Li, Z.; Wu, J.; Liu, Z.B. Hybridizing grey wolf optimization with differential evolution for global optimization and test scheduling for 3D stacked SoC. J. Syst. Eng. Electron. 2015, 26, 317–328.

- Yan, J.W.; Zhong, X.H.; Fan, Y.; Guo, S.M. Residual life prediction of high power semiconductor lasers based on cluster sampling and support vector regression model. China Mech. Eng. 2022, 32, 1523–1529.

| Manufacturing Errors of Key Components | Index of Sensitivity | Weight (%) |
|---|---|---|
| Cycloid gear isometric modification error | 1.6131 | 23.040 |
| Radius error of needle tooth center circle | 1.102 | 15.746 |
| Cycloid gear shift modification error | −1.1024 | 15.746 |
| Needle tooth radius error | −0.8065 | 11.519 |
| Crankshaft eccentricity error | 0.00007 | 0.001 |
| Accumulated pitch error of cycloidal gear | −0.589 | 8.410 |
| Needle hole circumferential position error | 0.587 | 8.341 |
| Cycloid ring gear radial runout error | 0.201 | 2.871 |
| Crank-bearing clearance | 1.000 | 14.283 |
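
The weights in the sensitivity table appear to be the absolute sensitivity indices normalised to 100%, that is wᵢ = |Sᵢ| / Σⱼ|Sⱼ| × 100. This reading is inferred from the numbers rather than stated in the text: the sketch below reproduces every listed weight to within about 0.01 percentage points except the needle hole circumferential position error, which computes to about 8.38% rather than 8.341%. The dictionary name `SENSITIVITY` and the helper `weights_percent` are illustrative.

```python
# Sensitivity indices from the table (the sign gives the direction of influence).
SENSITIVITY = {
    "Cycloid gear isometric modification error": 1.6131,
    "Radius error of needle tooth center circle": 1.102,
    "Cycloid gear shift modification error": -1.1024,
    "Needle tooth radius error": -0.8065,
    "Crankshaft eccentricity error": 0.00007,
    "Accumulated pitch error of cycloidal gear": -0.589,
    "Needle hole circumferential position error": 0.587,
    "Cycloid ring gear radial runout error": 0.201,
    "Crank-bearing clearance": 1.000,
}


def weights_percent(indices):
    """Normalise absolute sensitivity indices so that the weights sum to 100 %."""
    total = sum(abs(s) for s in indices.values())
    return {name: 100.0 * abs(s) / total for name, s in indices.items()}


if __name__ == "__main__":
    ranked = sorted(weights_percent(SENSITIVITY).items(), key=lambda kv: -kv[1])
    for name, w in ranked:
        print(f"{name:45s} {w:7.3f}")
```

Taking absolute values matters here: a negative index still means the error affects the rotation error, only in the opposite direction.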

| Test Functions | Dimension | Range | Minimum |
|---|---|---|---|
| | 30 | [−100, 100] | 0 |
| | 30 | [−10, 10] | 0 |
| | 30 | [−5.12, 5.12] | 0 |
| | 30 | [−600, 600] | 0 |
| | 2 | [−5, 5] | −1.0316 |
| | 2 | [−5, 5] | 0.3979 |
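
The test-function table gives dimensions, ranges and minima but not the function definitions. These values match, in order, six classic benchmarks: Sphere, Schwefel 2.22, Rastrigin, Griewank, the six-hump camel-back function and Branin. The sketch below assumes those standard definitions; treat the mapping as an assumption rather than the paper's stated choice.

```python
import math


def sphere(x):
    return sum(v * v for v in x)


def schwefel_2_22(x):
    return sum(abs(v) for v in x) + math.prod(abs(v) for v in x)


def rastrigin(x):
    return sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) + 10.0 for v in x)


def griewank(x):
    s = sum(v * v for v in x) / 4000.0
    p = math.prod(math.cos(v / math.sqrt(i)) for i, v in enumerate(x, start=1))
    return s - p + 1.0


def six_hump_camel(x):
    a, b = x  # two-dimensional function
    return (4.0 - 2.1 * a ** 2 + a ** 4 / 3.0) * a ** 2 + a * b + (-4.0 + 4.0 * b ** 2) * b ** 2


def branin(x):
    a, b = x  # two-dimensional function
    return ((b - 5.1 / (4.0 * math.pi ** 2) * a ** 2 + 5.0 / math.pi * a - 6.0) ** 2
            + 10.0 * (1.0 - 1.0 / (8.0 * math.pi)) * math.cos(a) + 10.0)
```

The first four reach 0 at the origin; the six-hump camel-back function reaches about −1.0316 near (0.0898, −0.7126), and Branin reaches about 0.3979 at (π, 2.275), which lies inside the [−5, 5] box.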

| Function | Algorithm | Average | Std. dev. |
|---|---|---|---|
| | PSO | 3.73 × 10⁻¹² | 5.45 × 10⁻¹² |
| | GWO | 3.88 × 10⁻⁴⁸ | 6.79 × 10⁻⁴⁸ |
| | SSA | 2.76 × 10⁻⁷ | 6.27 × 10⁻⁷ |
| | IGWO | 1.69 × 10⁻⁷⁷ | 1.97 × 10⁻⁷⁸ |
| | PSO | 1.59 × 10⁻³ | 1.84 × 10⁻² |
| | GWO | 8.65 × 10⁻⁴⁵ | 5.89 × 10⁻⁴⁴ |
| | SSA | 5.54 × 10⁻⁶ | 1.59 × 10⁻⁵ |
| | IGWO | 4.07 × 10⁻⁵⁶ | 1.43 × 10⁻⁵⁸ |
| | PSO | 3.67 × 10⁻² | 5.32 × 10⁻² |
| | GWO | 5.44 × 10⁻¹⁵ | 1.09 × 10⁻¹⁶ |
| | SSA | 7.98 × 10⁻⁶ | 2.06 × 10⁻⁵ |
| | IGWO | 0 | 0 |
| | PSO | 0.0098 | 0.0105 |
| | GWO | 0.0025 | 0.0189 |
| | SSA | 3.75 × 10⁻⁸ | 9.42 × 10⁻⁸ |
| | IGWO | 0 | 0 |
| | PSO | −1.0316 | 4.66 × 10⁻⁸ |
| | GWO | −1.0316 | 7.77 × 10⁻⁸ |
| | SSA | −1.0316 | 7.54 × 10⁻⁵ |
| | IGWO | −1.0316 | 3.57 × 10⁻⁸ |
| | PSO | 0.3979 | 5.29 × 10⁻⁷ |
| | GWO | 0.3979 | 1.82 × 10⁻⁸ |
| | SSA | 0.3979 | 1.97 × 10⁻⁴ |
| | IGWO | 0.3979 | 1.83 × 10⁻⁸ |

| Sample | | | | | | |
|---|---|---|---|---|---|---|
| 1 | 0.156 | 0.500 | 0.903 | 0.850 | 0.800 | 1.133 |
| 2 | 0.250 | 0.350 | 0.288 | 0.350 | 0.400 | 1.231 |
| 3 | 0.750 | 0.650 | 0.711 | 0.350 | 0.600 | 1.938 |
| 4 | 0.750 | 0.500 | 0.288 | 0.500 | 0.400 | 1.452 |
| 5 | 0.500 | 0.650 | 0.288 | 0.650 | 0.400 | 1.564 |
| 6 | 0.843 | 0.150 | 0.903 | 0.850 | 0.500 | 1.272 |
| 7 | 0.500 | 0.150 | 0.903 | 0.500 | 0.800 | 1.464 |
| 8 | 0.843 | 0.850 | 0.500 | 0.150 | 0.800 | 1.473 |
| 9 | 0.312 | 0.500 | 0.807 | 0.750 | 0.700 | 1.190 |
| 10 | 0.500 | 0.250 | 0.500 | 0.500 | 0.700 | 1.240 |

| Number of Optimizations | Scope of Optimizations | Number of Wolves | Maximum Iterations | Mode Norm of Space |
|---|---|---|---|---|
| 2 | [0.01, 100] | 20 | 100 | 10 |

| Model | Parameter | Value |
|---|---|---|
| BP neural network | Learning rate | 0.01 |
| | Optimizer | Stochastic gradient descent |
| SSA-BP neural network | Learning rate | 0.01 |
| | Optimizer | Stochastic gradient descent |
| IGWO-SVR | | 10.897 |
| | | 0.1918 |
| GWO-SVR | | 1.275 |
| | | 6.183 |
| PSO-SVR | | 1.059 |
| | | 7.532 |

| Prediction Model | MSE | MRE | MAE | Time (s) |
|---|---|---|---|---|
| IGWO-SVR | 0.0260 | 0.0784 | 0.1195 | 7.843 |
| PSO-SVR | 0.0358 | 0.0883 | 0.1339 | 8.926 |
| GWO-SVR | 0.0364 | 0.0911 | 0.1368 | 6.542 |
| BP neural network | 0.1211 | 0.1915 | 0.2809 | 10.508 |
| SSA-BP neural network | 0.0363 | 0.1258 | 0.1771 | 11.851 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jin, S.; Cao, M.; Qian, Q.; Zhang, G.; Wang, Y. Study on an Assembly Prediction Method of RV Reducer Based on IGWO Algorithm and SVR Model. Sensors 2023, 23, 366. https://doi.org/10.3390/s23010366

Jin S, Cao M, Qian Q, Zhang G, Wang Y. Study on an Assembly Prediction Method of RV Reducer Based on IGWO Algorithm and SVR Model. Sensors. 2023; 23(1):366. https://doi.org/10.3390/s23010366

Chicago/Turabian Style: Jin, Shousong, Mengyi Cao, Qiancheng Qian, Guo Zhang, and Yaliang Wang. 2023. "Study on an Assembly Prediction Method of RV Reducer Based on IGWO Algorithm and SVR Model" Sensors 23, no. 1: 366. https://doi.org/10.3390/s23010366

APA Style: Jin, S., Cao, M., Qian, Q., Zhang, G., & Wang, Y. (2023). Study on an Assembly Prediction Method of RV Reducer Based on IGWO Algorithm and SVR Model. Sensors, 23(1), 366. https://doi.org/10.3390/s23010366