BioTouch: Reliable Re-Authentication via Finger Bio-Capacitance and Touching Behavior

Abstract

1. Introduction

- Accurate. Re-authentication schemes should have a low False Alarm Rate (FAR). Otherwise, an authorized user may be frequently flagged as anomalous, which interrupts continuous use of the device.

- Robust. Re-authentication schemes should work in the diverse scenarios encountered in practice, where users may operate the device in different emotional states, while moving, or in different body postures.

- We propose BioTouch, a novel scheme for reliable user re-authentication that provides continuous security assurance for the device by transparently verifying the legitimacy of the current user in every on-screen operation. It closes a security gap that traditional authentication schemes leave open, namely preventing an attacker from using the device after it has been unlocked, and offers a new approach to improving the security of computing devices as well as of systems based on the Zero-Trust model.

- Our system is transparent during use: it continuously checks the user’s identity without interfering with their normal operations, thereby ensuring the continuous security of the device while maintaining a good user experience.

- We design a novel data extraction algorithm (Section 5.1) that effectively eliminates the impact of different types of touching operations on the sensed user data, ensuring the robustness of BioTouch in practical applications.

- We construct a user-legitimacy model that describes the user’s legitimacy more accurately, further improving re-authentication accuracy.

2. Related Works

2.1. Behavior-Based User Re-Authentication

2.2. Biometric-Based User Re-Authentication

3. BioTouch in a Nutshell

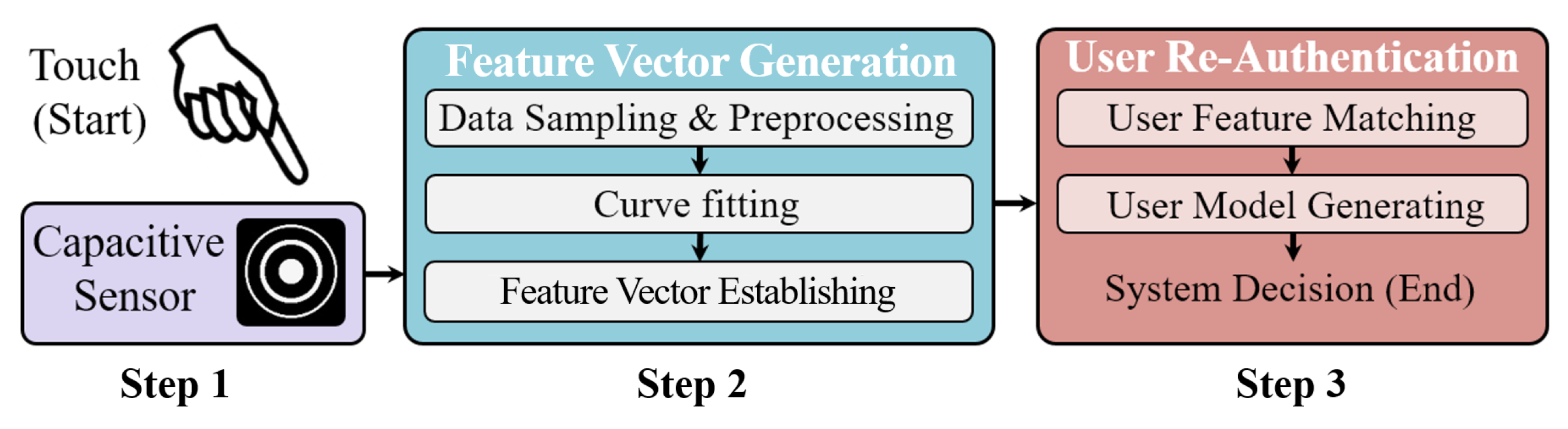

- Step 1: Combined user feature collection. In the first step, BioTouch collects both the bio-capacitance and touching behavior features as the user freely operates the device. The collection process is transparent: both features are collected simultaneously via the sensor without attracting the user’s attention. The details are presented in Section 4.

- Step 2: Feature vector generation. In the second step, BioTouch generates a four-dimensional feature vector as the user’s identity characteristic from the information collected in Step 1. In this process, BioTouch samples the sensor and pre-processes the sampled data to remove the impact of different types of touching operations. Then, BioTouch fits a curve to the pre-processed data and builds the feature vector from the coefficients of the fitted curve function. The design details are presented in Section 5.

- Step 3: User re-authentication. In the final step, BioTouch continuously authenticates the user by comparing the generated feature vector with the stored legitimate database. If they match, the system judges the current user’s identity as legal; otherwise, it is judged illegal. Based on multiple authentication results during use, BioTouch generates a user-legitimacy model with a user legal score, which reduces the effect of individual misjudgments and describes the user’s legitimacy more accurately. The score is updated dynamically during the usage session: it increases when a result is legal and drops when a result is illegal. If the score drops below the legitimacy threshold, BioTouch locks the device and forces the user to log out to prevent malicious access to private data. The design details are presented in Section 6.
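The matching decision in Step 3 can be illustrated with a minimal Python sketch. It assumes, purely for illustration, that the stored legitimate database is a set of four-dimensional feature vectors and that a new vector "matches" when its Euclidean distance to the nearest stored vector is at most a threshold. The function name `match_feature` and the threshold value are hypothetical; the matcher actually specified in Section 6.1 may differ.

```python
import numpy as np


def match_feature(vector, legit_db, threshold):
    """Return True ("legal") if `vector` lies within `threshold` (Euclidean
    distance) of at least one stored legitimate feature vector.

    Hypothetical stand-in: the distance metric and threshold are illustrative
    assumptions, not the published BioTouch matcher.
    """
    vector = np.asarray(vector, dtype=float)
    db = np.asarray(legit_db, dtype=float)            # shape (n_templates, 4)
    distances = np.linalg.norm(db - vector, axis=1)   # distance to each stored template
    return bool(distances.min() <= threshold)
```

For example, with two enrolled templates, a vector close to either one is accepted, while a distant vector is rejected. A nearest-template test is chosen here only because it is the simplest matcher that fits the description; a trained classifier could replace it without changing the interface.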

4. Combined Feature Collection

5. Feature Vector Generating

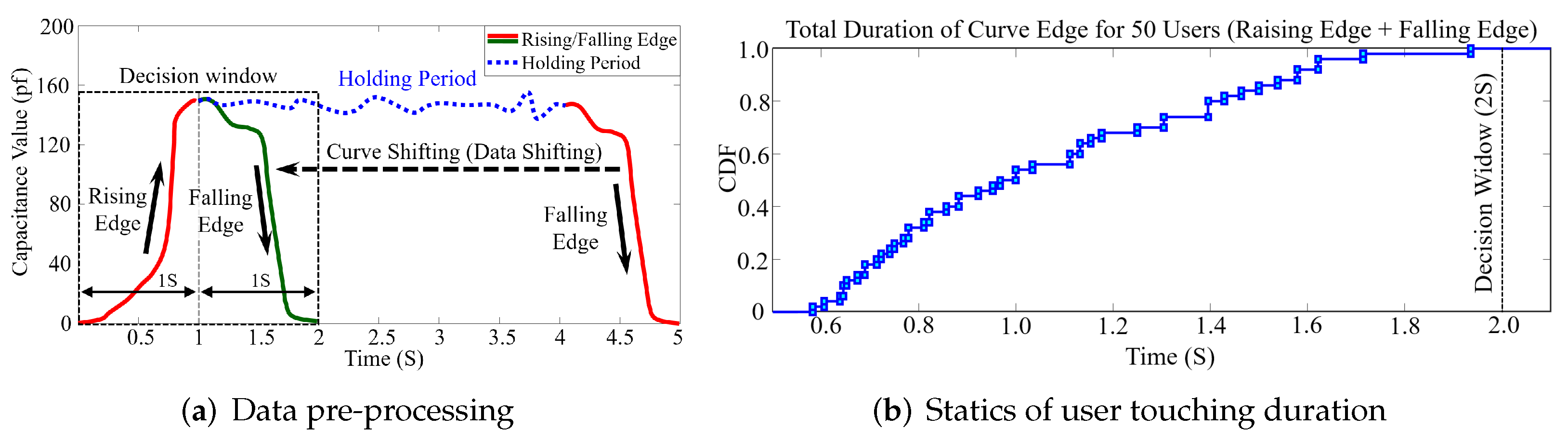

5.1. Data Sampling and Pre-Processing

Algorithm 1. Capacitance Extraction

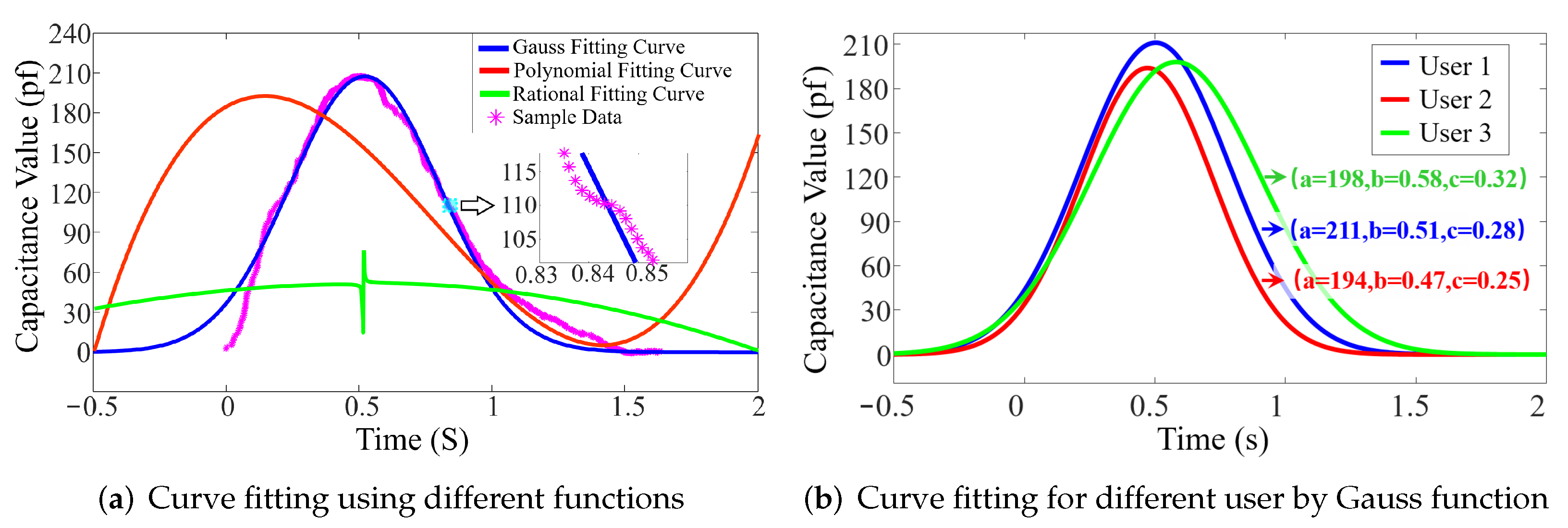
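Algorithm 1 is available only as a figure, so the following is not that algorithm. It is a minimal Python sketch of the pre-processing described in Step 2, under two assumptions: the 16-sample averaging comes from the "Filter Threshold" entry of the parameter table, and outlier removal uses the box-plot (Tukey, 1.5 × IQR) rule. The box-plot works in the reference list suggest this technique, but the excerpt does not confirm where or how it is applied. `average_filter` and `remove_outliers_iqr` are hypothetical names.

```python
import numpy as np

FILTER_WINDOW = 16  # "Filter Threshold" in the parameter table: average every 16 samples


def average_filter(raw, window=FILTER_WINDOW):
    """Average each non-overlapping block of `window` raw samples.

    A trailing partial block (fewer than `window` samples) is dropped.
    """
    raw = np.asarray(raw, dtype=float)
    n_blocks = len(raw) // window
    return raw[: n_blocks * window].reshape(n_blocks, window).mean(axis=1)


def remove_outliers_iqr(values, k=1.5):
    """Box-plot (Tukey) rule: keep values inside [Q1 - k*IQR, Q3 + k*IQR]."""
    values = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(values, [25, 75])
    iqr = q3 - q1
    mask = (values >= q1 - k * iqr) & (values <= q3 + k * iqr)
    return values[mask]
```

For instance, `average_filter(range(40))` yields two filtered values (7.5 and 23.5) and drops the last eight raw samples, and `remove_outliers_iqr([1, 2, 2, 3, 3, 3, 4, 50])` discards the spike at 50.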

5.2. Curve Fitting

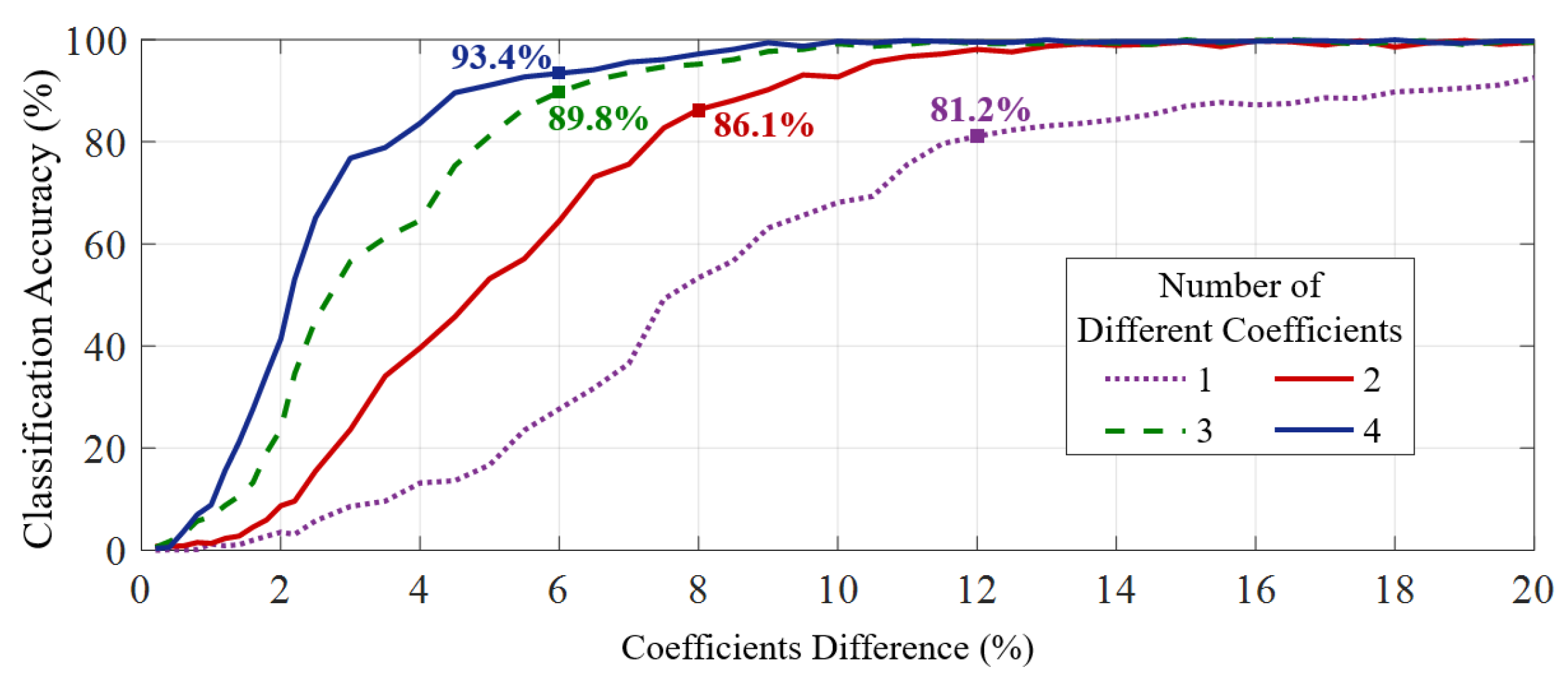
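The curve-fitting step can be sketched in Python under an explicit assumption: a cubic polynomial is fitted to the filtered trace, so its four coefficients form the four-dimensional feature vector mentioned in Step 2. This is a guess, not the authors' stated function. A Gaussian with an offset also has four parameters, and the reference list cites both polynomial and Gaussian fitting. The normalized time axis is another assumption, meant to make touches of different durations comparable. `feature_vector` is a hypothetical name. `numpy.polyfit` returns coefficients from highest order to lowest.

```python
import numpy as np


def feature_vector(samples, degree=3):
    """Fit a polynomial to a filtered capacitance trace; return its coefficients.

    Assumption: degree 3, giving a 4-D vector [a3, a2, a1, a0]. The fitting
    function actually used by BioTouch may differ.
    """
    y = np.asarray(samples, dtype=float)
    t = np.linspace(0.0, 1.0, len(y))   # normalized time axis, independent of touch duration
    return np.polyfit(t, y, degree)     # least-squares fit, highest-order coefficient first
```

On noise-free data generated from a cubic, the fit recovers the generating coefficients. On real traces, the coefficients summarize the shape of the capacitance curve during a touch.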

5.3. Feature Vector Establishing

6. User Re-Authentication

6.1. Feature Matching

6.2. User Model Generating

Algorithm 2. User-Legitimacy Module

Input: Authentication result of each finger-touching operation.

Output: User’s legitimacy score and system decision (keep the user logged in or log the user out).

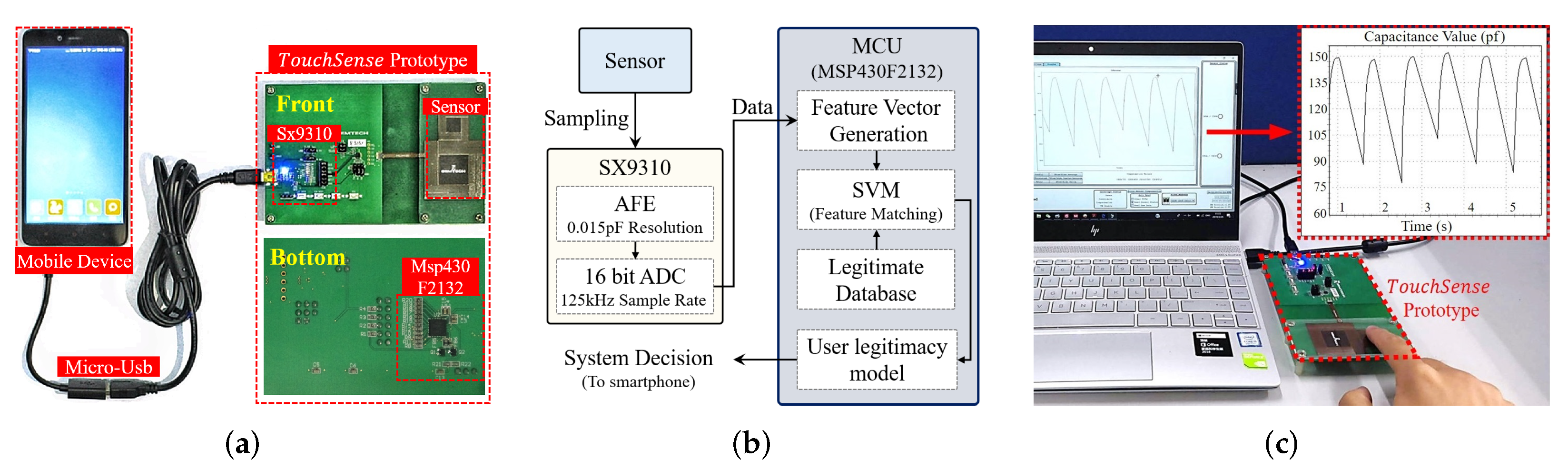
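Algorithm 2 takes one authentication result per touch and outputs a score together with a keep/log-out decision. The Python sketch below is a hypothetical model of the behavior described in Step 3: the score rises after each legal result, falls after each illegal one, and the user is logged out once the score drops below a threshold. The class name and every numeric value (initial score, reward, penalty, cap, threshold) are assumptions for illustration. The actual settings are the subject of Section 7.2.

```python
from dataclasses import dataclass


@dataclass
class LegitimacyModel:
    """Hypothetical user-legitimacy score; all parameter values are assumptions."""
    score: float = 1.0       # fully trusted right after a successful unlock
    reward: float = 0.1      # added after a touch judged legal
    penalty: float = 0.3     # subtracted after a touch judged illegal
    max_score: float = 1.0   # cap, so a long legal session cannot bank unlimited trust
    threshold: float = 0.0   # log the user out once the score drops below this

    def update(self, is_legal: bool) -> str:
        """Consume one per-touch authentication result; return the system decision."""
        if is_legal:
            self.score = min(self.max_score, self.score + self.reward)
        else:
            self.score -= self.penalty
        return "keep_login" if self.score >= self.threshold else "log_out"
```

With these values, a single misjudged touch leaves the owner logged in, while four consecutive illegal results log the user out. Making the penalty larger than the reward biases the model toward security, so an attacker is removed faster than an owner regains trust.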

7. Implementation

7.1. Hardware Implementation

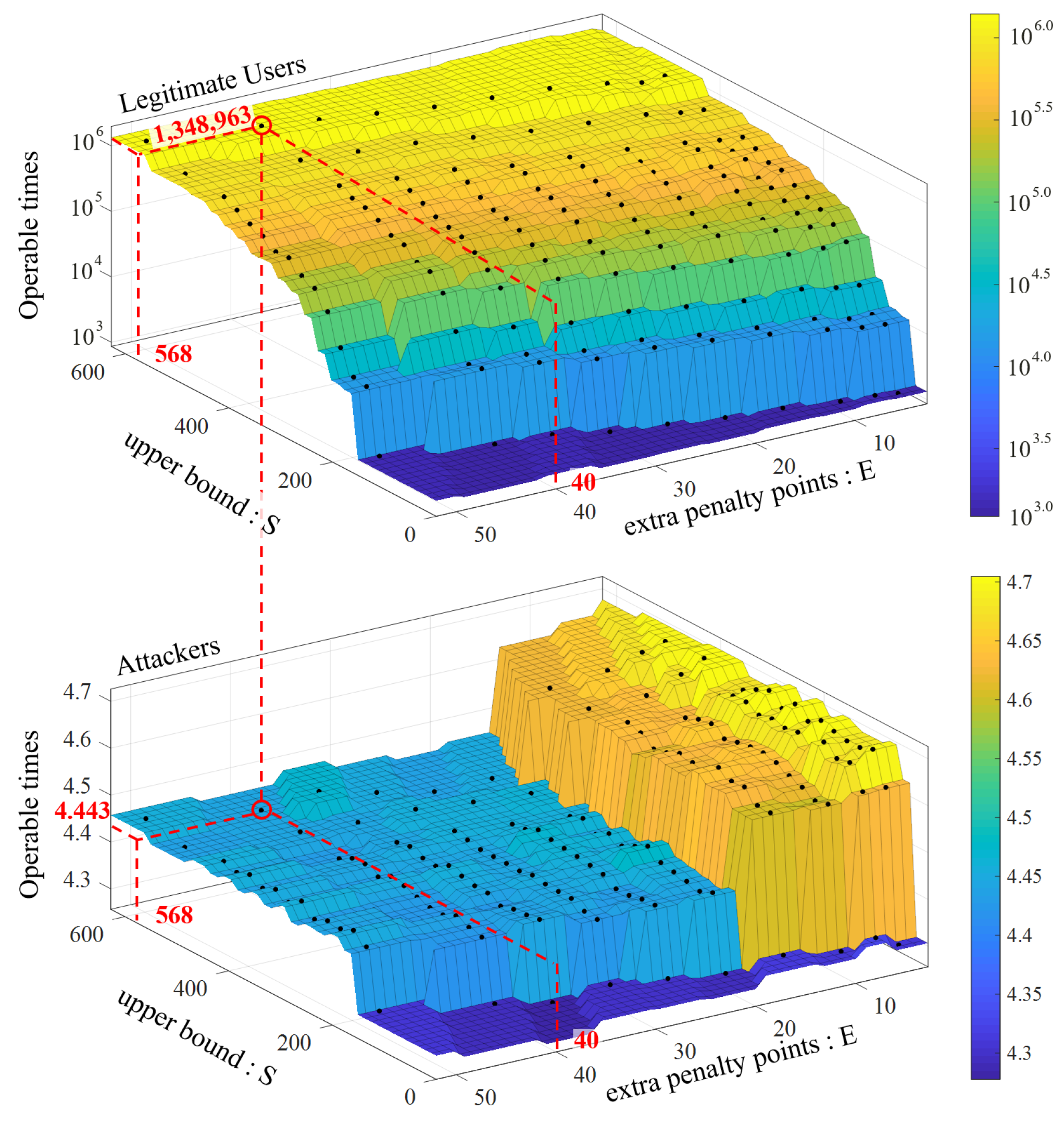

7.2. User Model Parameter Setting

8. Evaluation Results

8.1. Touching Detection Rate

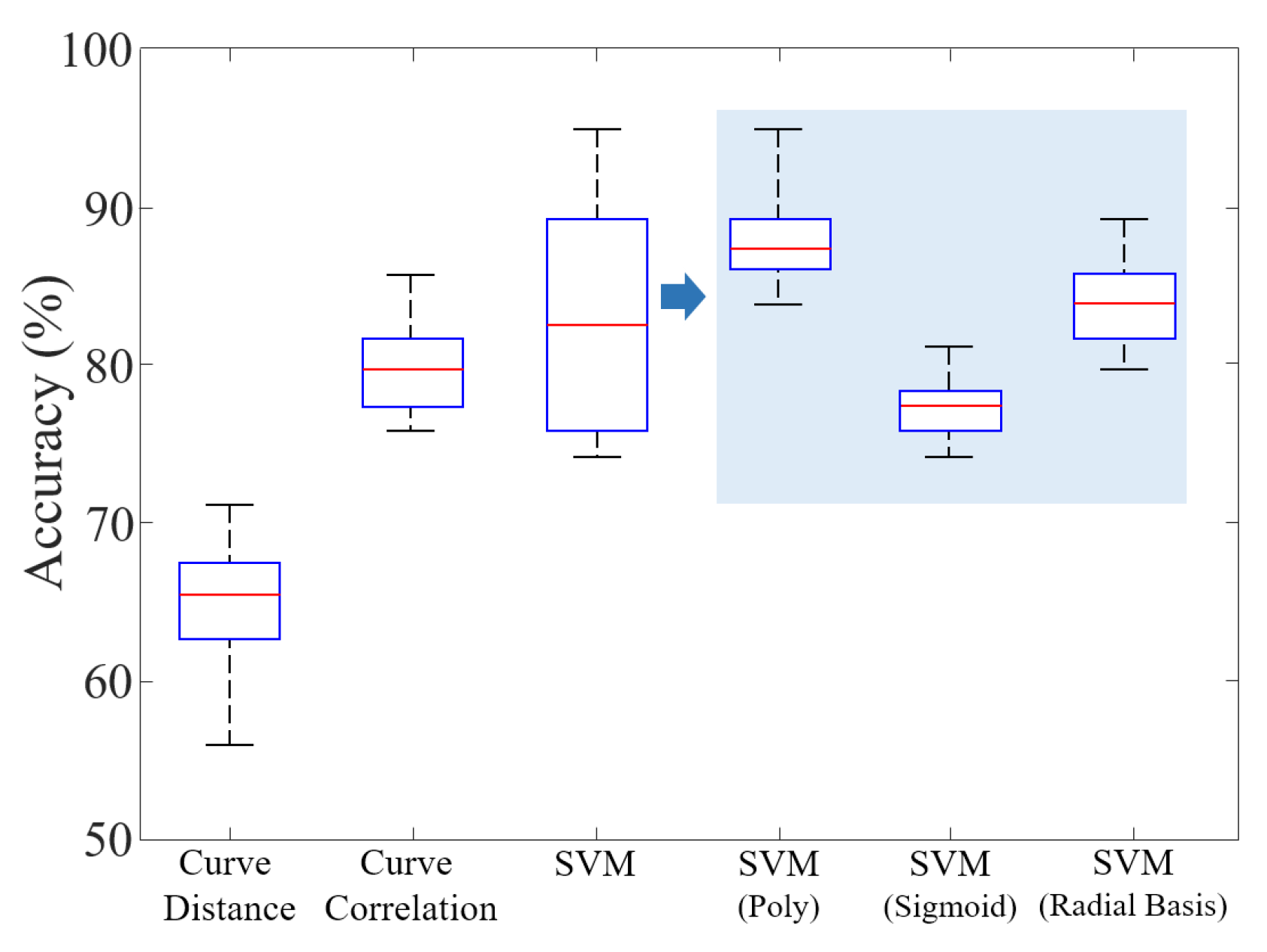

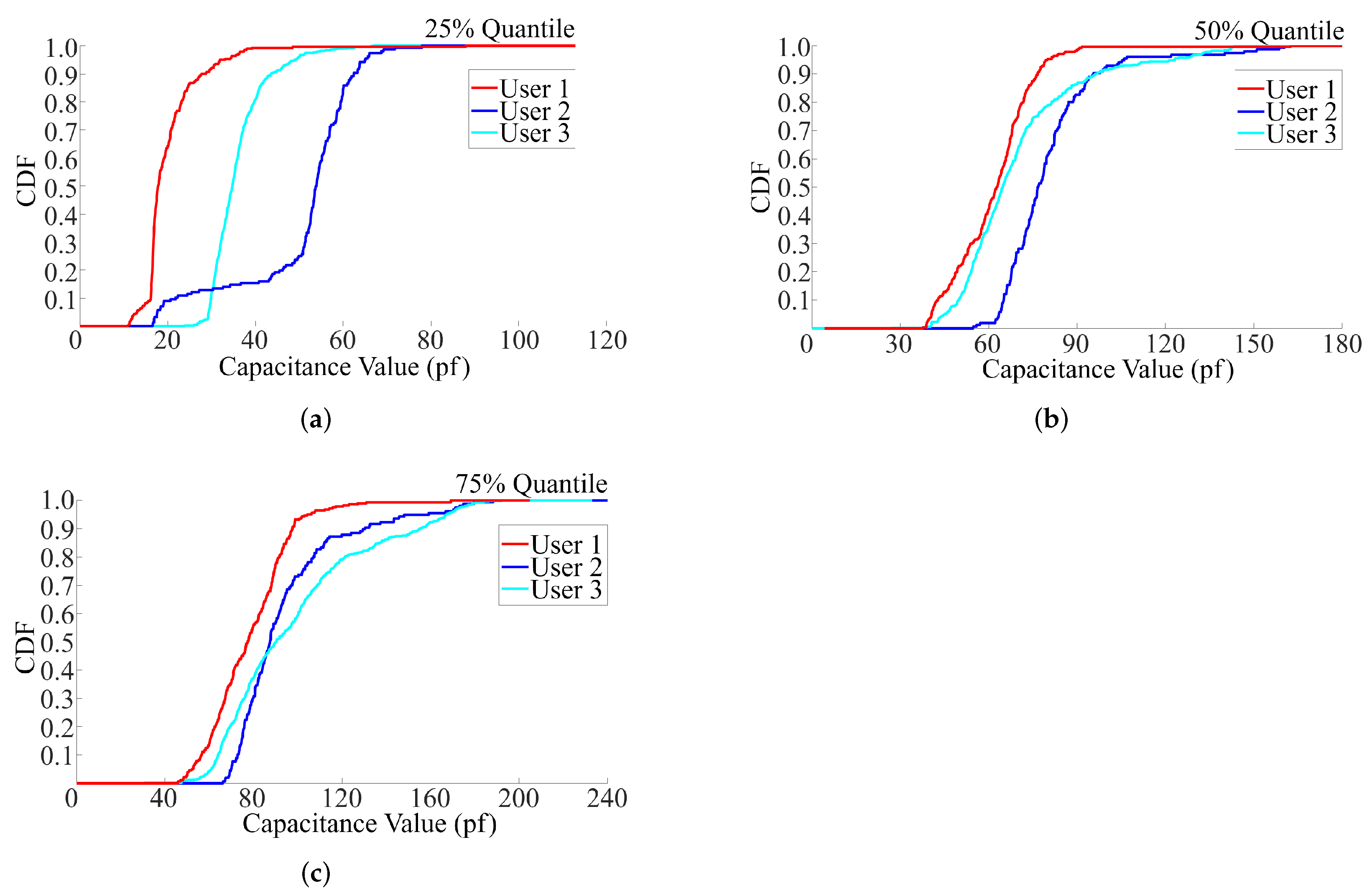

8.2. User Differentiation Rate

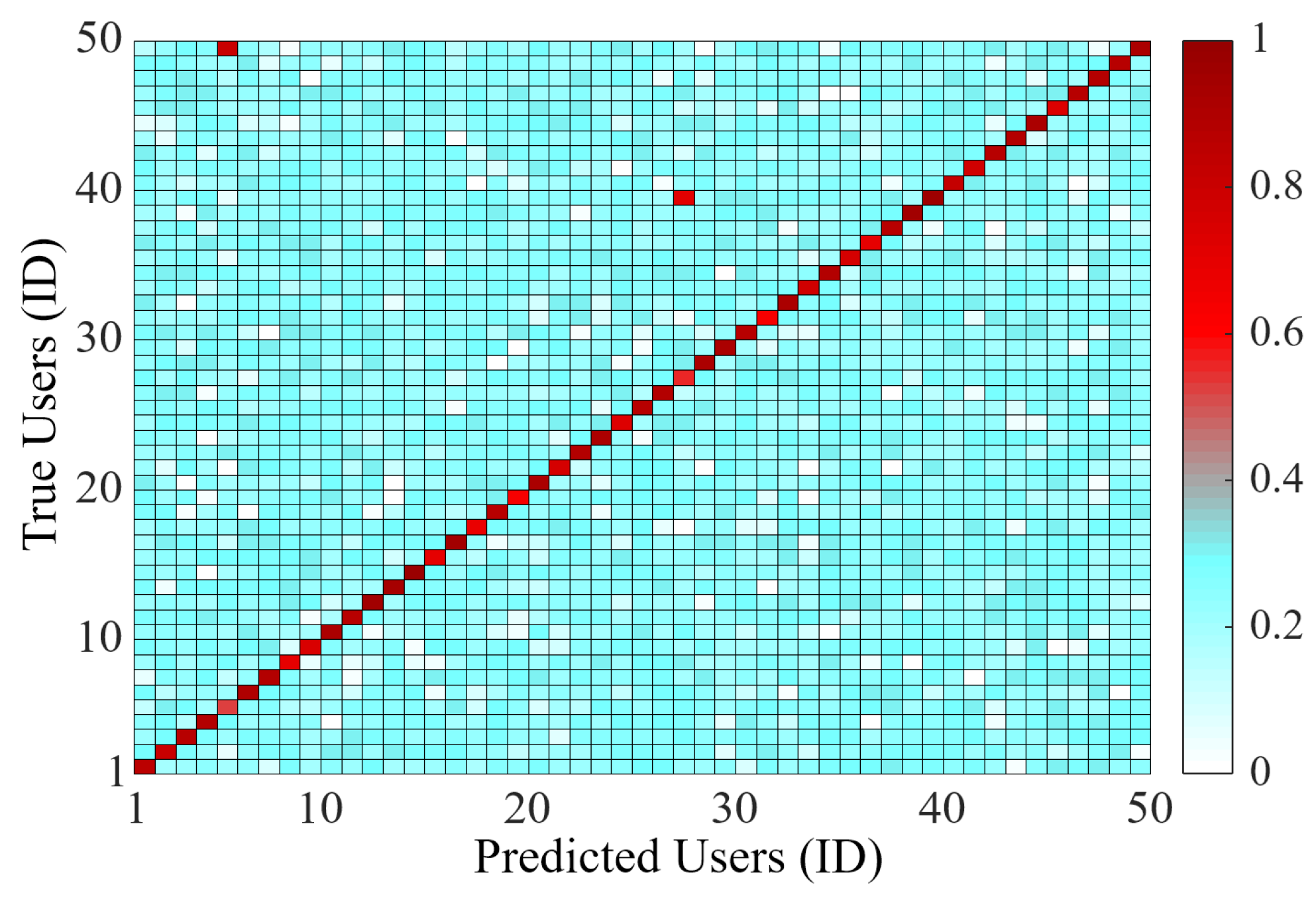

8.3. Confusion Matrix of Feature Vector for 50 Users

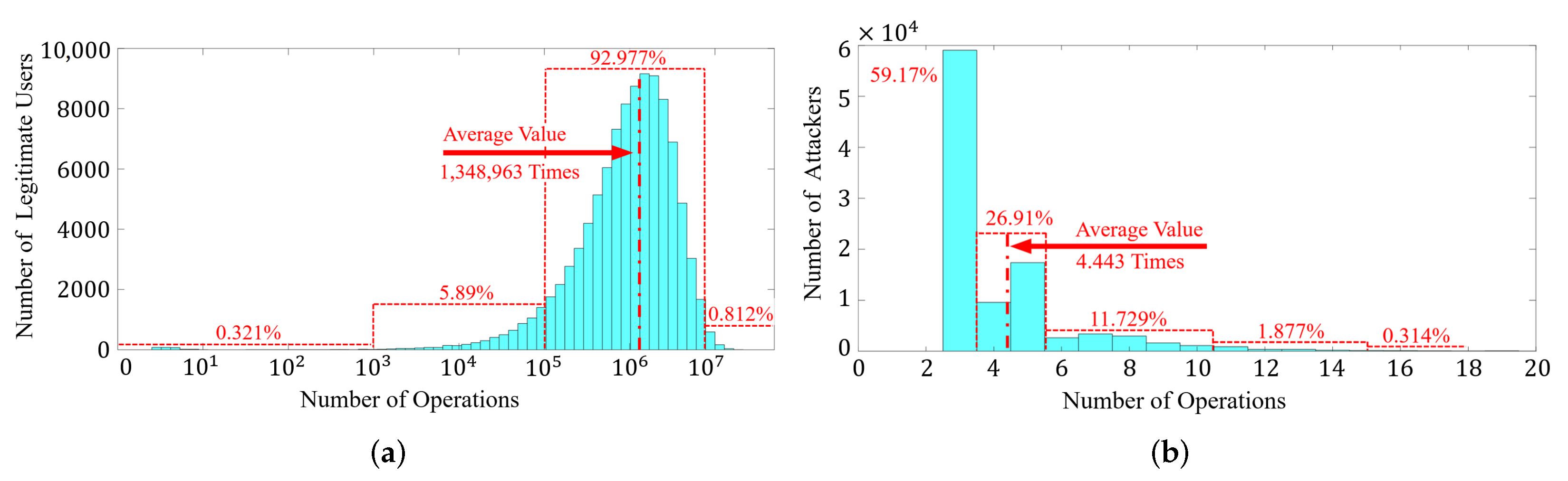

8.4. Re-Authentication Performance under 100,000 Samples of Legitimate Users and Attackers

8.5. Motion Influence

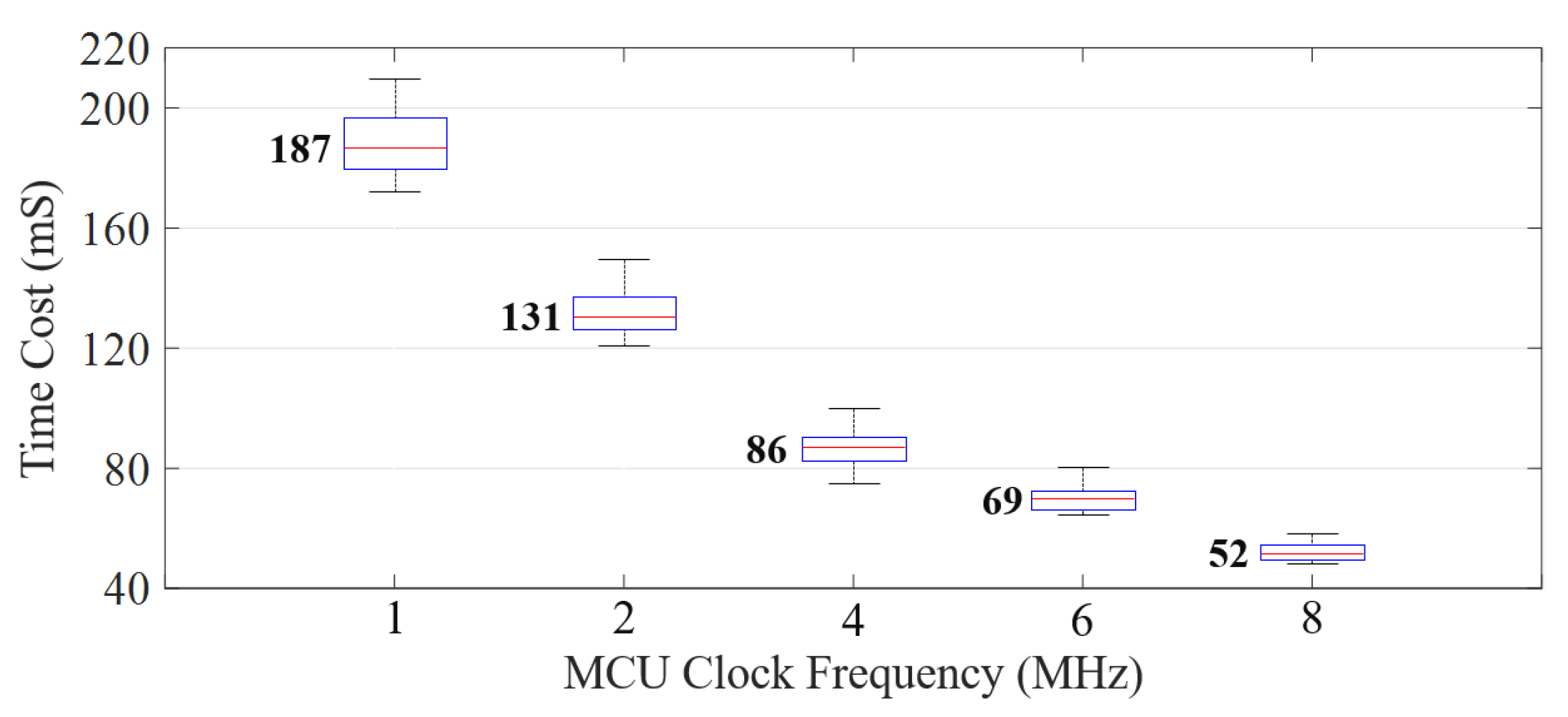

8.6. Time Cost to Identify a User

8.7. Long-Time Usage Simulation for 100,000 Samples of Legitimate Users and Attackers

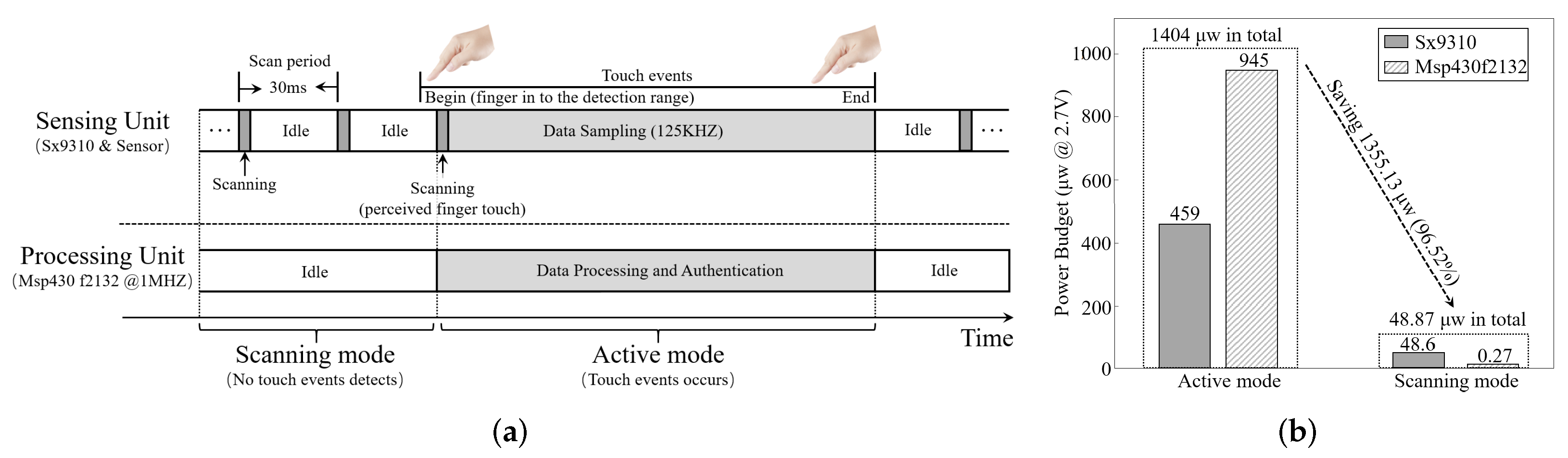

8.8. System Power Consumption

9. Discussion

9.1. External Influence

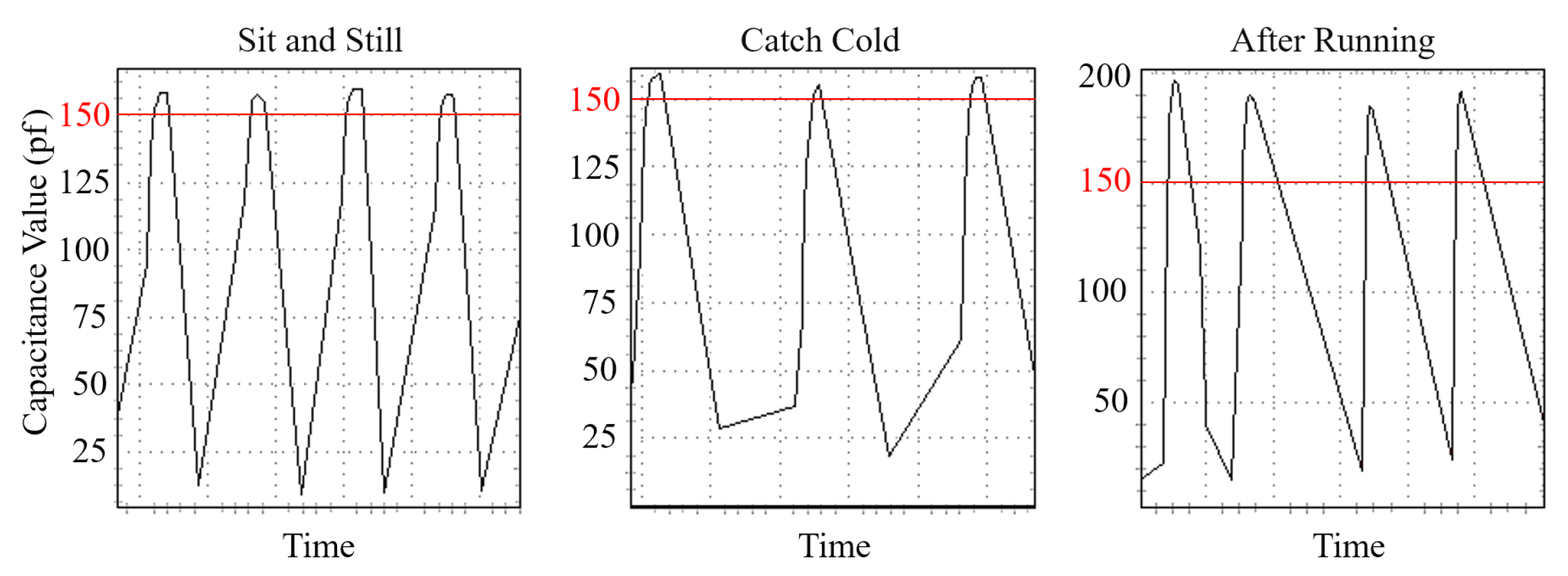

- User body condition. If a user is ill or has just exercised strenuously, their physical condition may affect their behavior and thus the accuracy of re-authentication. Such changes vary from person to person and are hard to predict. Figure 16 shows the sensed bio-capacitance of a user under different body conditions; the user’s characteristics clearly change across these states. A limitation of BioTouch is therefore that it cannot cover such special scenarios.

- Wet finger. We also considered the possible influence of wet fingers on performance. We asked ten users to wet their fingers and operate the device. All users (including the device owner) were identified as attackers and logged out by the system. This is because a wet finger leaves water on the sensor during touching, which changes the sensed capacitance far more than the differences between users do. Under such conditions, neither the owner’s nor the attackers’ features match the stored legitimate data set. Although this disrupts the owner’s usage, it introduces no security risk of falsely accepting attackers.

- Static electricity. Finally, we considered the possible effect of static electricity, which may occur in dry environments such as in winter. Our tests show that static electricity affects only the first touch, during which it discharges, and does not affect subsequent operations. In addition, we added a Zener diode to the prototype circuit to protect the hardware from electrostatic discharge.

9.2. Future Works

9.3. Technical Specifications Comparison

9.4. Ethical Concerns

10. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| HMI | Human-Machine Interface |
| CDF | Cumulative Distribution Function |
| SVM | Support Vector Machine |
| FAR | False Alarm Rate |
| FPR | False Positive Rate |
| AFE | Analog Front-End |
| ADC | Analog-to-Digital Converter |
| PPG | Photoplethysmography |

References

- Wikipedia. Zero Trust Security Model. Available online: https://en.wikipedia.org/wiki/Zero_trust_security_model (accessed on 5 February 2021).

- Buck, C.; Olenberger, C.; Schweizer, A.; Völter, F.; Eymann, T. Never trust, always verify: A multivocal literature review on current knowledge and research gaps of zero-trust. Comput. Secur. 2021, 110, 102436.

- Wylde, A. Zero trust: Never trust, always verify. In Proceedings of the 2021 International Conference on Cyber Situational Awareness, Data Analytics and Assessment (CyberSA), Dublin, Ireland, 14–18 June 2021; pp. 1–4.

- Darlene, M. Easy Way to Bypass Passcode Lock Screens on iPhones, iPads Running iOS 12. Available online: https://www.computerworld.com/article/3041302/4-new-ways-to-bypass-passcode-lock-screen-on-iphones-ipads-running-ios-9.html (accessed on 18 September 2018).

- TycoyokeI. How to Fool a Fingerprint Security System as Easy as ABC. Available online: https://www.instructables.com/id/How-To-Fool-a-Fingerprint-Security-System-As-Easy-/ (accessed on 12 January 2019).

- Matsumoto, T.; Matsumoto, H.; Yamada, K.; Hoshino, S. Impact of artificial “gummy” fingers on fingerprint systems. Electron. Imaging 2002, 4677, 275–289.

- Patel, V.M.; Chellappa, R.; Chandra, D.; Barbello, B. Continuous User Authentication on Mobile Devices: Recent progress and remaining challenges. IEEE Signal Process. Mag. 2016, 33, 49–61.

- Mantyjarvi, J.; Lindholm, M.; Vildjiounaite, E.; Makela, S.; Ailisto, H. Identifying users of portable devices from gait pattern with accelerometers. IEEE Int. Conf. Acoust. Speech Signal Process. 2005, 2, 973–976.

- Gabor, B. Method and System for Re-Authentication in IP Multimedia Core Network System (IMS). US Patent 6,859,651, 22 February 2005.

- Crouse, D.; Han, H.; Chandra, D.; Barbello, B.; Jain, A.K. Continuous authentication of mobile user: Fusion of face image and inertial measurement unit data. In Proceedings of the 2015 International Conference, Phuket, Thailand, 19–22 May 2015; pp. 135–142.

- Dong, Y.; Su, H.; Wu, B.; Li, Z.; Liu, W.; Zhang, T.; Zhu, J. Efficient Decision-Based Black-Box Adversarial Attacks on Face Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Lovejoy, B. 3D Mask or Photo Fools Airport and Payment Face-Recognition, But Not Face ID. Available online: https://www.reddit.com/r/apple/comments/ebfl6x/3d_mask_or_photo_fools_airport_facerecognition/ (accessed on 16 December 2019).

- Feng, H.; Fawaz, K.; Shin, K.G. Continuous authentication for voice assistants. In Proceedings of the 23rd Annual International Conference on Mobile Computing and Networking, Snowbird, UT, USA, 16–20 August 2017; pp. 343–355.

- Mufandaidza, M.; Ramotsoela, T.; Hancke, G. Continuous User Authentication in Smartphones Using Gait Analysis. In Proceedings of the IECON 2018—44th Annual Conference of the IEEE Industrial Electronics Society, Washington, DC, USA, 20–23 October 2018; pp. 4656–4661.

- Papavasileiou, I.; Qiao, Z.; Zhang, C.; Zhang, W.; Bi, J.; Han, S. GaitCode: Gait-based continuous authentication using multimodal learning and wearable sensors. Smart Health 2021, 19, 100162.

- Wu, G.; Wang, J.; Zhang, Y.; Jiang, S. A Continuous Identity Authentication Scheme Based on Physiological and Behavioral Characteristics. Sensors 2018, 18, 179.

- Mondal, S.; Bours, P. A study on continuous authentication using a combination of keystroke and mouse biometrics. Neurocomputing 2017, 230, 1–22.

- Almalki, S.; Chatterjee, P.; Roy, K. Continuous authentication using mouse clickstream data analysis. In International Conference on Security, Privacy and Anonymity in Computation, Communication and Storage; Springer: Berlin/Heidelberg, Germany, 2019; pp. 76–85.

- Murphy, C.; Huang, J.; Hou, D.; Schuckers, S. Shared dataset on natural human-computer interaction to support continuous authentication research. In Proceedings of the 2017 IEEE International Joint Conference on Biometrics (IJCB), Denver, CO, USA, 1–4 October 2017; pp. 525–530.

- Li, B.; Wang, W.; Gao, Y.; Phoha, V.V.; Jin, Z. Wrist in Motion: A Seamless Context-Aware Continuous Authentication Framework Using Your Clickings and Typings. IEEE Trans. Biom. Behav. Identity Sci. 2020, 2, 294–307.

- Li, L.; Zhao, X.; Xue, G. Unobservable re-authentication for smartphones. NDSS 2013, 56, 57–59.

- Amini, S.; Noroozi, V.; Pande, A.; Gupte, S.; Yu, P.S.; Kanich, C. DeepAuth: A Framework for Continuous User Re-Authentication in Mobile Apps. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management; Association for Computing Machinery, New York, NY, USA, 22–26 October 2018; pp. 2027–2035.

- Zhang, C.; Li, S.; Song, Y.; Lu, L.; Hou, M. TouchSense: Accurate and Transparent User Re-authentication via Finger Touching. In Edge Computing and IoT: Systems, Management and Security; Jiang, H., Wu, H., Zeng, F., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 105–125.

- Fujiwara, O.; Ikawa, T. Numerical calculation of human-body capacitance by surface charge method. Electron. Commun. 2002, 85, 38–44.

- Sălceanu, A.; Neacşu, O.; David, V.; Luncă, E. Measurements Upon Human Body Capacitance: Theory and Experimental Setup; Faculty of Electrical Engineering: Iasi, Romania, 2004; Available online: http://home.mit.bme.hu/~kollar/IMEKO-procfiles-for-web/tc4/TC4-15th-Iasi-2007/Final_Papers/F191.pdf (accessed on 7 December 2004).

- Lorenzo, A.D.; Andreoli, A.; Battisti, P.; Talluri, T.; Yasumura, S. Total Body Capacitance Correlates with Total Body Potassium. Ann. N. Y. Acad. Sci. 2010, 904, 259–262.

- Semtech. Semtech SX9310, Ultra-Low Power Smart Proximity Sensor for SAR. Available online: https://semtech.my.salesforce.com/sfc/p/#E0000000JelG/a/44000000MDi2/DkrQo3QO5lhnCZQaRN2Zew2lb9U2YxNS5GJgtBtrNy0 (accessed on 20 January 2015).

- Texas Instruments. MSP430F2132 16-bit Ultra-Low-Power Microcontroller, 8kB Flash, 512B RAM, 10 bit ADC, 1 USCI. Available online: http://www.ti.com/lit/ds/symlink/msp430f22-ep.pdf (accessed on 18 January 2012).

- Mahfouz, A.; Mahmoud, T.M.; Eldin, A.S. A Survey on Behavioral Biometric Authentication on Smartphones. Inf. Secur. Tech. Rep. 2018, 37, 28–37.

- Hao, Z.; Li, Q. Towards user re-authentication on mobile devices via on-screen keyboard. Hot Topics in Web Systems and Technologies (HotWeb). In Proceedings of the 2016 Fourth IEEE Workshop, Washington, DC, USA, 24–25 October 2016; pp. 78–83.

- Frank, M.; Biedert, R.; Ma, E.; Martinovic, I.; Song, D. Touchalytics: On the applicability of touchscreen input as a behavioral biometric for continuous authentication. IEEE Trans. Inf. Forensics Secur. 2013, 8, 136–148.

- Dascalescu, A. What Is Biometric Authentication? A Complete Overview. Available online: https://heimdalsecurity.com/blog/biometric-authentication/ (accessed on 15 July 2021).

- Sampath, K.; Balaji, P.; Balaji, P. Biometric Methods—A Secure Survey. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=1493905 (accessed on 10 March 2022).

- Zhao, N.; Fu, C.X. A Sub-Pixel Localization Algorithm on the Basis of Gauss Curve Fitting in Gradient Direction. Adv. Mater. Res. 2011, 219–220, 1149–1152.

- Dong, W.; Bie, H. Edge Detection Algorithm at Sub-pixel Level Based on Curve Fitting of Gauss Function. In Proceedings of the 2015 World Conference on Control, Electronics and Electrical Engineering (WCEE 2015), Shanghai, China, 17–18 January 2015.

- Horstman, R.; Peters, K.; Meltzer, R.; Vieth, M.; Hirayama, H. An Estimation of Hardness of Hardened Steels by X-ray Diffraction Using a Gaussian Curve-Fitting Method. J. Test. Eval. 1984, 12, 13–19.

- Mustafa, E.K.; Liu, G.; Hassan, A.; Damos, M.A.; Tarawally, M. Predicting of Land Surface Temperature Distribution in Freetown City, Sierra Leone by Using Polynomial Curve Fitting Model. J. Geogr. Inf. Syst. 2020, 12, 531–544.

- Iik, A.; Zkan, K.; Ergn, S. Biometric person authentication framework using polynomial curve fitting-based ECG feature extraction. Turk. J. Electr. Eng. Comput. Sci. 2019, 27, 3682–3698.

- Xiang, W.; Gong, J.; Nie, Z. Random generation of convex granule packing based on weighted Voronoi tessellation and cubic-polynomial-curve fitting. Comput. Geotech. 2019, 113, 103088.

- Paluzo-Hidalgo, E.; Gonzalez-Díaz, R.; Mainar, E.; Rubio, B. Neural-Network-Based Curve Fitting Using Totally Positive Rational Bases. Mathematics 2020, 8, 2197.

- Xu, M.; Yan, X.; Lin, W.; Huang, B.; Wang, Y.; Dong, D.; Wang, D. Early Warning of Lithium Battery Progressive Fault Based on Box Plot. In Proceedings of the 2019 3rd International Conference on Electronic Information Technology and Computer Engineering (EITCE), Xiamen, China, 18–20 October 2019; pp. 1072–1075.

- Thirumalai, C.; Kanimozhi, R.; Vaishnavi, B. Data analysis using box plot on electricity consumption. In Proceedings of the 2017 International conference of Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 20–22 April 2017; Volume 2, pp. 598–600.

- Thirumalai, C.; Auxilia, L.A.; Sowmiya, K.; Kavitha, E. Examination of sea-surface temperature, salinity and water level predicted by coastal ocean models utilizing box-plot method. In Proceedings of the 2017 International Conference on Trends in Electronics and Informatics (ICEI), Tirunelveli, India, 11–12 May 2017; pp. 1044–1047.

- Gong, M.; Jiao, L.; Yang, D. Corrections on the Box Plots of the Coverage Metric in “Multiobjective Immune Algorithm with Nondominated Neighbor-based Selection”. Evol. Comput. 2009, 17, 131–134.

- Praveen, V.; Delhi Narendran, T.; Pavithran, R.; Thirumalai, C. Data analysis using box plot and control chart for air quality. In Proceedings of the 2017 International Conference on Trends in Electronics and Informatics (ICEI), Tirunelveli, India, 11–12 May 2017; pp. 1082–1085.

- Scikit-Learn. Support Vector Machines. Available online: https://scikit-learn.org/stable/modules/svm.html (accessed on 23 June 2017).

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction; Springer Science & Business Media: Berlin, Germany, 2009.

- Barrett, G.; Omote, R. Projected-Capacitive Touch Technology. Inf. Disp. 2010, 26, 16–21.

- Liu, S.Y.; Wang, Y.J.; Lu, J.G.; Shieh, H.P.D. 38.3: One Glass Solution with a Single Layer of Sensors for Projected-Capacitive Touch Panels. In Sid Symposium Digest of Technical Papers; Society for Information Display: Santa Ana, CA, USA, 2014; Volume 45, pp. 548–550.

- Mi, D. Single-Layer Projected Capacitive Touch Panel and Method of Manufacturing the Same. U.S. Patent 20,150,317,031, 3 October 2017.

- Gray, T. Projected Capacitive Touch Basics: A Practical Guide for Engineers; Springer: Berlin, Germany, 2019.

- Zhang, S. Main Construction Of Capacitive Touch Screen. Available online: https://www.vtouchscreen.com/news/main-construction-of-capacitive-touch-screen-28265581.html (accessed on 15 June 2019).

- Mo, M.; Li, H.; Zhang, J. Capacitance Touch Screen with Mesh Electrodes. US Patent Application 13/226,902, 15 March 2012.

- Ting, K.M. Confusion Matrix. In Encyclopedia of Machine Learning; Sammut, C., Webb, G.I., Eds.; Springer: Boston, MA, USA, 2010; p. 209.

- Milijic, M. 29+ Smartphone Usage Statistics: Around the World in 2020. Available online: https://leftronic.com/smartphone-usage-statistics/ (accessed on 7 February 2021).

- How Much Time Does the Average Person Spend on Their Phone? Available online: https://kommandotech.com/statistics/how-much-time-does-the-average-person-spend-on-their-phone/ (accessed on 13 April 2020).

- Fact Check: Is Smartphone Battery Capacity Growing or Staying the Same? Available online: https://www.androidauthority.com/smartphone-battery-capacity-887305/ (accessed on 21 July 2018).

| Feature/Approach | Required User Cooperation | FAR | Robustness |
|---|---|---|---|
| Facial [10] | Face the camera | 0.5% | ✓ |
| Voice [13] | Talk | 0.1% | ✓ |
| Gait [14,15,16] | Walk | 2.13% | ✓ |
| Mouse and Keyboard Operation [17,18,19,20] | None | 4.75% | × |
| Touching Track [21] | None | 4.32% | × |
| Vibration [22] | None | 3.3% | × |
| BioTouch (This Work) | None | 0.32% | ✓ |

| Parameter | Value | Description |
|---|---|---|
| Scan Period | 30 ms | Interval at which touch events are scanned while the system is in standby (see Section 8.8). |
| Sampling Frequency | 125 kHz | Frequency at which the sensor is sampled once a touching event is detected. |
| Filter Threshold | 16 samples | The system filters the sampled data by averaging every 16 consecutive samples. |
| Resolution | 0.015 pF | Resolution configured for the prototype to sense bio-capacitance during user touching. |
| Signal Gain | 32× | The collected signal is amplified 32 times to better distinguish between users. |
| Sensing Range | 240 pF | With gain, the capacitance sensing range extends up to 240 pF (7.5 pF × 32). |
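The derived values below follow directly from the parameter table. They assume that the 16-sample average is taken over non-overlapping blocks, as "every 16 consecutive samples" suggests. A sliding average would instead emit one value per raw sample.

$$
C_{\text{range}} = 7.5\,\text{pF} \times 32 = 240\,\text{pF}
$$

$$
f_{\text{filtered}} = \frac{f_s}{N} = \frac{125\,\text{kHz}}{16} \approx 7.81\,\text{kHz},
\qquad
T_{\text{filtered}} = \frac{N}{f_s} = \frac{16}{125\,\text{kHz}} = 128\,\mu\text{s}
$$

$$
f_{\text{scan}} = \frac{1}{30\,\text{ms}} \approx 33.3\ \text{scans/s (standby)}
$$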

| User ID | Gender | Environment Temperature | Motion Type | Actual Motions | Detected Motions | Missed Detections |
|---|---|---|---|---|---|---|
| 1 | Male | 30 °C | Tapping | 68 | 68 | 0 |
| 6 | Female | 30 °C | Sliding | 57 | 56 | 1 |
| 10 | Female | 10 °C | Holding | 49 | 49 | 0 |
| 12 | Male | 30 °C | Tapping | 93 | 92 | 1 |
| 20 | Male | 10 °C | Holding | 41 | 41 | 0 |
| 25 | Female | 10 °C | Tapping | 61 | 59 | 2 |
| 32 | Female | 30 °C | Sliding | 96 | 91 | 1 |
| 38 | Male | 30 °C | Tapping | 71 | 71 | 0 |
| 43 | Female | 10 °C | Holding | 56 | 56 | 0 |
| 47 | Male | 10 °C | Sliding | 64 | 64 | 0 |

Motion detection rate among 50 users: 98.9%.

| Scheme | Technical Specifications | Application Requirements |
|---|---|---|
| Facial-Based [10] | Logs out 73 of 82 attackers (89%) within 2 min. | Requires users to keep facing the front camera without obstruction during use. |
| Voice-Based [13] | Achieves 97% accuracy with 30 voice commands. | Requires speaking to a voice assistant, so it does not suit quiet environments or long-term usage. |
| Gait-Based [16] | Achieves 98.5% accuracy using acceleration, angular velocity, and PPG information while walking. | Requires users to wear customized equipment for data collection; performs well only while walking. |
| Keystroke- and Mouse-Movement-Based [17] | Logs out attackers after 252 actions on average. | Applicable to computers operated with a mouse and keyboard. |
| Touching-Track-Based [21] | Achieves 79% to 95% accuracy across a variety of touching gestures. | Works on finger movement trajectories; suitable for touchscreen devices. |
| Vibration-Based [22] | Achieves 96.7% accuracy within 20 s of usage. | Works on device vibration during use; suitable for handheld scenarios. |
| BioTouch (Our Design) | Logs out attackers after 4.43 operations on average and achieves 99.84% accuracy after 18 operations. | Works on touching operations and remains transparent throughout touch-based use. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, C.; Li, S.; Song, Y.; Meng, Q.; Lu, L.; Hou, M. BioTouch: Reliable Re-Authentication via Finger Bio-Capacitance and Touching Behavior. Sensors 2022, 22, 3583. https://doi.org/10.3390/s22093583
