Research on UAV Robust Adaptive Positioning Algorithm Based on IMU/GNSS/VO in Complex Scenes

Abstract

1. Introduction

2. Multi-Source Fusion Model

2.1. ESKF (Error State Kalman Filter)

2.1.1. Predictive Model

2.1.2. Measurement Update

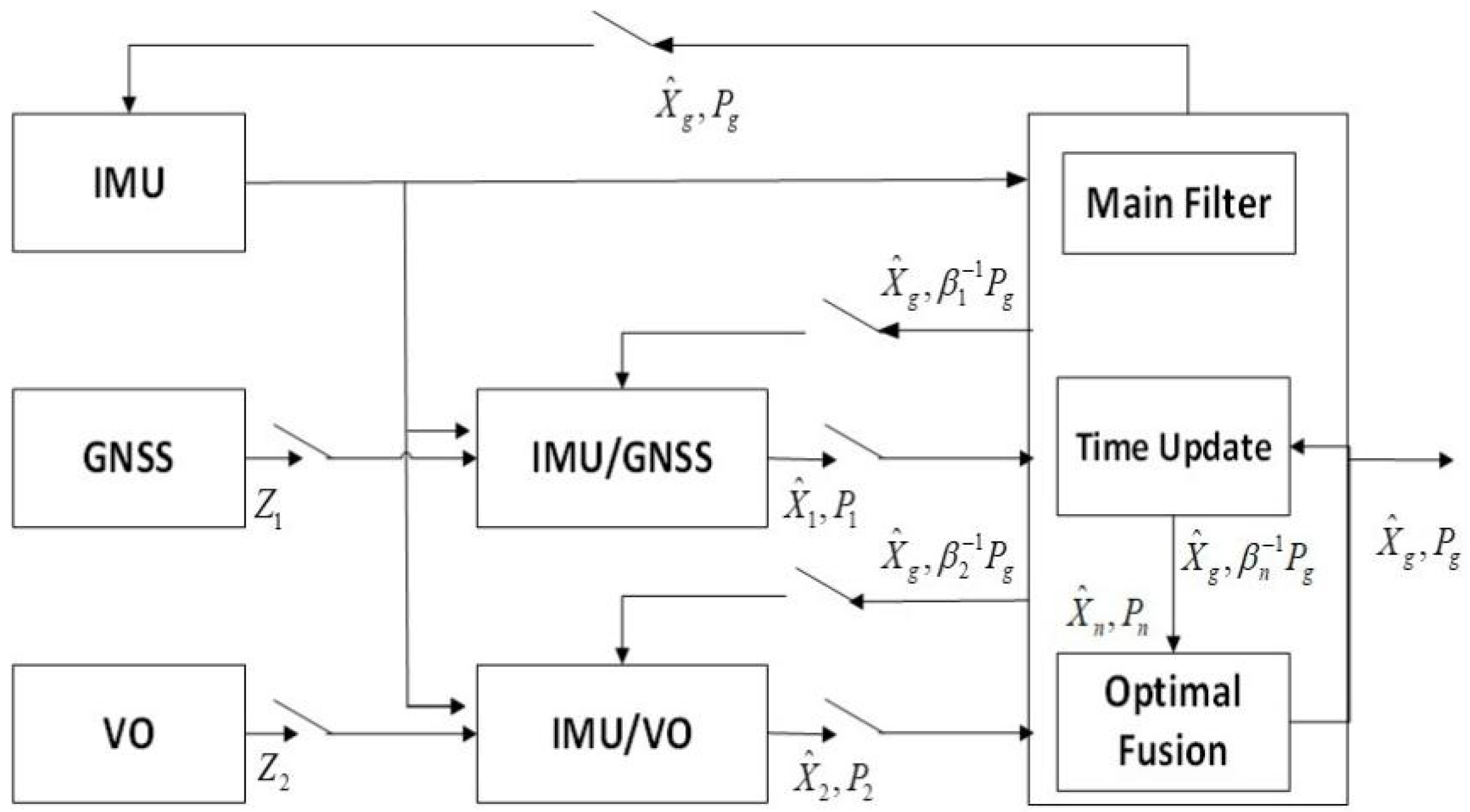
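
The ESKF predict/update cycle can be shown in a reduced setting. What follows is a minimal sketch, not the paper's 3-D IMU error model. It assumes a one-dimensional nominal state [p, v] driven by an accelerometer and an error state [dp, dv] corrected by a position fix (a stand-in for a GNSS position). The simplified process noise Q = diag(0, (σa·dt)²) is also an assumption. The class `Eskf1D` and all of its parameter names are hypothetical. The nominal state is integrated without noise, and only the error-state covariance grows. At a measurement, the estimated error is injected into the nominal state and the error state returns to zero.

```python
from dataclasses import dataclass

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product for small matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


@dataclass
class Eskf1D:
    """Hypothetical 1-D ESKF: nominal state [p, v], error state [dp, dv]."""
    p: float            # nominal position (m)
    v: float            # nominal velocity (m/s)
    P: Matrix           # 2x2 covariance of the error state [dp, dv]
    accel_noise: float  # assumed accelerometer noise std (m/s^2)

    def predict(self, accel: float, dt: float) -> None:
        # Nominal state: integrate the IMU acceleration directly (no noise terms)
        self.p += self.v * dt + 0.5 * accel * dt * dt
        self.v += accel * dt
        # Error state stays zero; only its covariance propagates: P = F P F^T + Q
        F = [[1.0, dt], [0.0, 1.0]]
        FPFt = matmul(matmul(F, self.P), transpose(F))
        q = (self.accel_noise * dt) ** 2  # simplified Q = diag(0, q), an assumption
        self.P = [[FPFt[0][0], FPFt[0][1]], [FPFt[1][0], FPFt[1][1] + q]]

    def update_position(self, z: float, r: float) -> None:
        # Position fix with H = [1, 0] and measurement variance r
        innovation = z - self.p
        s = self.P[0][0] + r                          # H P H^T + R
        k0, k1 = self.P[0][0] / s, self.P[1][0] / s   # K = P H^T / s
        # Inject the estimated error [dp, dv] into the nominal state
        self.p += k0 * innovation
        self.v += k1 * innovation
        # P = (I - K H) P; for additive errors the reset Jacobian is the identity
        P = self.P
        self.P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
```

In use, `f = Eskf1D(p=0.0, v=0.0, P=[[1.0, 0.0], [0.0, 1.0]], accel_noise=0.1)` is followed by alternating calls to `f.predict(accel, dt)` at the IMU rate and `f.update_position(z, r)` whenever a fix arrives.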

2.2. Fusion Model

2.2.1. Time Update

2.2.2. Measurement Update

2.2.3. Information Fusion
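
This sketch assumes that the fusion model follows Carlson's federated Kalman filter. Under that assumption, the information-fusion step combines the local estimates by information weighting: P_g = (Σ P_i⁻¹)⁻¹ and x_g = P_g Σ P_i⁻¹ x_i. The paper's exact master/local filter structure may differ. To keep the example stdlib-only, it also assumes diagonal covariances, so each state component is fused independently. The function name `fuse_diagonal` is hypothetical.

```python
def fuse_diagonal(estimates: list[list[float]],
                  variances: list[list[float]]) -> tuple[list[float], list[float]]:
    """Information-weighted fusion of local filter estimates.

    Diagonal covariances are assumed, so each component j is fused as
    P_g = 1 / sum(1 / P_i) and x_g = P_g * sum(x_i / P_i).
    """
    if not estimates or len(estimates) != len(variances):
        raise ValueError("need one variance vector per local estimate")
    fused_x: list[float] = []
    fused_var: list[float] = []
    for j in range(len(estimates[0])):
        info = sum(1.0 / var[j] for var in variances)  # total information
        weighted = sum(x[j] / var[j] for x, var in zip(estimates, variances))
        fused_var.append(1.0 / info)
        fused_x.append(weighted / info)
    return fused_x, fused_var
```

With two local filters, `fuse_diagonal([[1.0, 0.0], [3.0, 4.0]], [[1.0, 1.0], [1.0, 3.0]])` returns the global estimate and its variances. The more certain filter dominates each component.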

2.2.4. Information Sharing and Feedback
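
In a fusion-reset federated filter (assumed here), information sharing and feedback reset every filter that receives a share to the global estimate. The global covariance and process noise are split using sharing coefficients β_i, with β_i > 0 and Σ β_i = 1:

- x_i = x_g
- P_i = P_g / β_i
- Q_i = Q_g / β_i

The minimal sketch below assumes diagonal covariances. The function `share_information` and its argument names are hypothetical.

```python
def share_information(x_global: list[float], p_global: list[float],
                      q_global: list[float], betas: list[float]) -> list[dict]:
    """Reset each filter from the global solution (diagonal covariances assumed).

    x_i = x_g, P_i = P_g / beta_i, Q_i = Q_g / beta_i.
    """
    if any(b <= 0.0 for b in betas) or abs(sum(betas) - 1.0) > 1e-9:
        raise ValueError("sharing coefficients must be positive and sum to 1")
    return [
        {
            "x": list(x_global),
            "P": [p / b for p in p_global],
            "Q": [q / b for q in q_global],
        }
        for b in betas
    ]
```

Because Σ β_i / P_g = 1 / P_g, the reset conserves the total information of the global solution. Each local filter, however, starts its next cycle with an inflated covariance that reflects its share.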

3. Robust Adaptive Filtering

3.1. Robust Equivalent Weight Filtering
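
Equivalent-weight robust filtering in the sense of Yang's IGG-III scheme reduces a measurement's weight according to its standardized residual |ṽ|. One commonly used three-segment factor γ is:

- γ = 1 for |ṽ| ≤ k0
- γ = (k0/|ṽ|)·((k1 − |ṽ|)/(k1 − k0))² for k0 < |ṽ| ≤ k1
- γ = 0 for |ṽ| > k1

The equivalent measurement variance is then R/γ. The paper's specific weight function and thresholds may differ: the values k0 = 1.5 and k1 = 3.0 below are assumed typical choices, and all function names are hypothetical.

```python
import math


def standardized_residual(innovation: float, innovation_var: float) -> float:
    """Scalar standardized innovation v / sqrt(H P H^T + R)."""
    return innovation / math.sqrt(innovation_var)


def igg3_factor(std_residual: float, k0: float = 1.5, k1: float = 3.0) -> float:
    """IGG-III weight reduction factor gamma in [0, 1]; k0 and k1 are assumed."""
    v = abs(std_residual)
    if v <= k0:
        return 1.0                                     # keep full weight
    if v <= k1:
        return (k0 / v) * ((k1 - v) / (k1 - k0)) ** 2  # down-weight
    return 0.0                                         # reject as outlier


def equivalent_variance(r: float, std_residual: float,
                        k0: float = 1.5, k1: float = 3.0) -> float:
    """Equivalent measurement variance R / gamma; infinity means rejected."""
    gamma = igg3_factor(std_residual, k0, k1)
    return math.inf if gamma == 0.0 else r / gamma
```

The factor is continuous at both thresholds: it equals 1 at |ṽ| = k0 and 0 at |ṽ| = k1. Weights therefore change smoothly as a GNSS or VO measurement degrades in a complex scene.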

3.2. Adaptive Information Sharing Coefficient
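
One common adaptive rule in the federated-filter literature sets each sharing coefficient in proportion to the local filter's accuracy: β_i = (1/tr P_i) / Σ_j (1/tr P_j). This is an illustrative choice and not necessarily the rule derived in this paper. The sketch assumes diagonal covariances, and the function name `sharing_coefficients` is hypothetical.

```python
def sharing_coefficients(local_variances: list[list[float]]) -> list[float]:
    """beta_i proportional to 1 / tr(P_i); diagonal covariances assumed."""
    inv_traces = [1.0 / sum(var) for var in local_variances]
    total = sum(inv_traces)
    return [w / total for w in inv_traces]  # positive and summing to 1
```

Under this rule, a subsystem whose covariance grows receives a smaller share. An example is a GNSS filter in an occluded area, or one whose variance has been inflated by the robust step.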

3.3. Robust Adaptive Multi-Source Model

4. Simulation Experiment

4.1. Simulation Settings

4.1.1. Track Settings

4.1.2. Scene Settings

4.1.3. Sensor Parameter Settings

4.1.4. Simulation Scheme

4.2. Experimental Results and Discussions

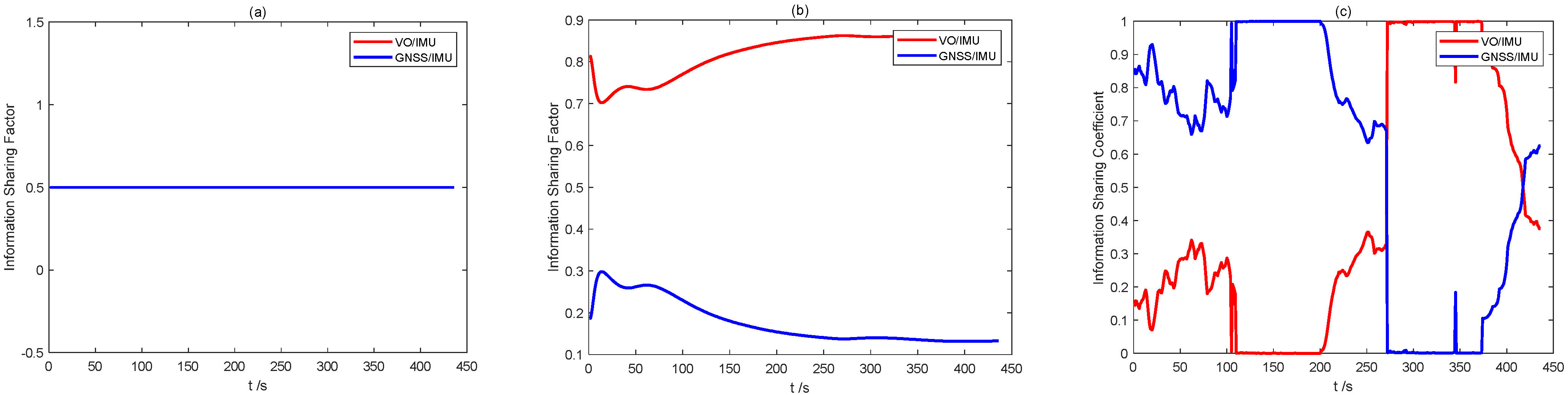

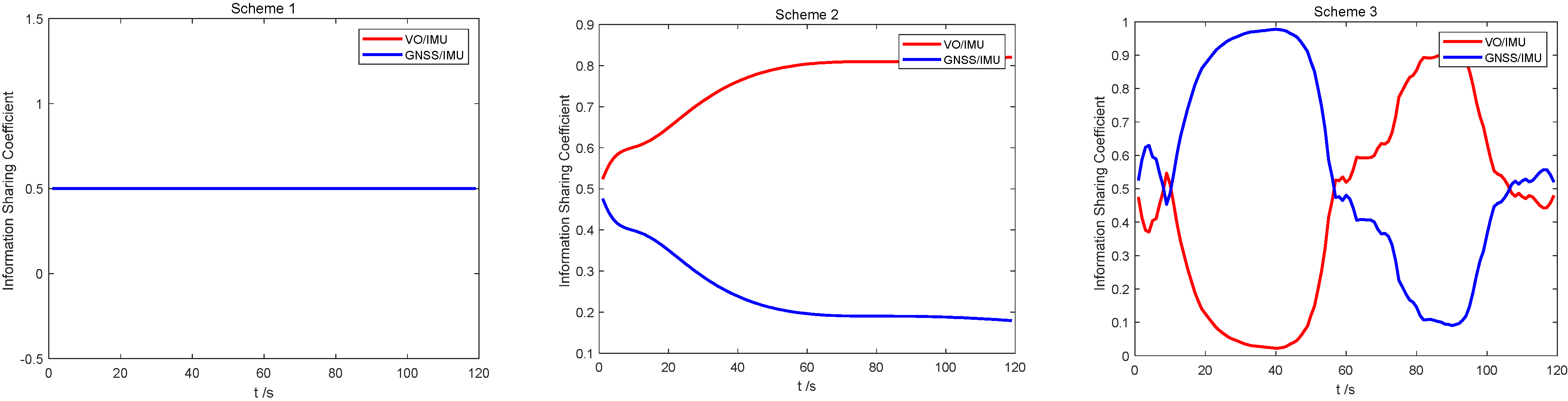

4.2.1. Information Sharing Coefficient Simulation

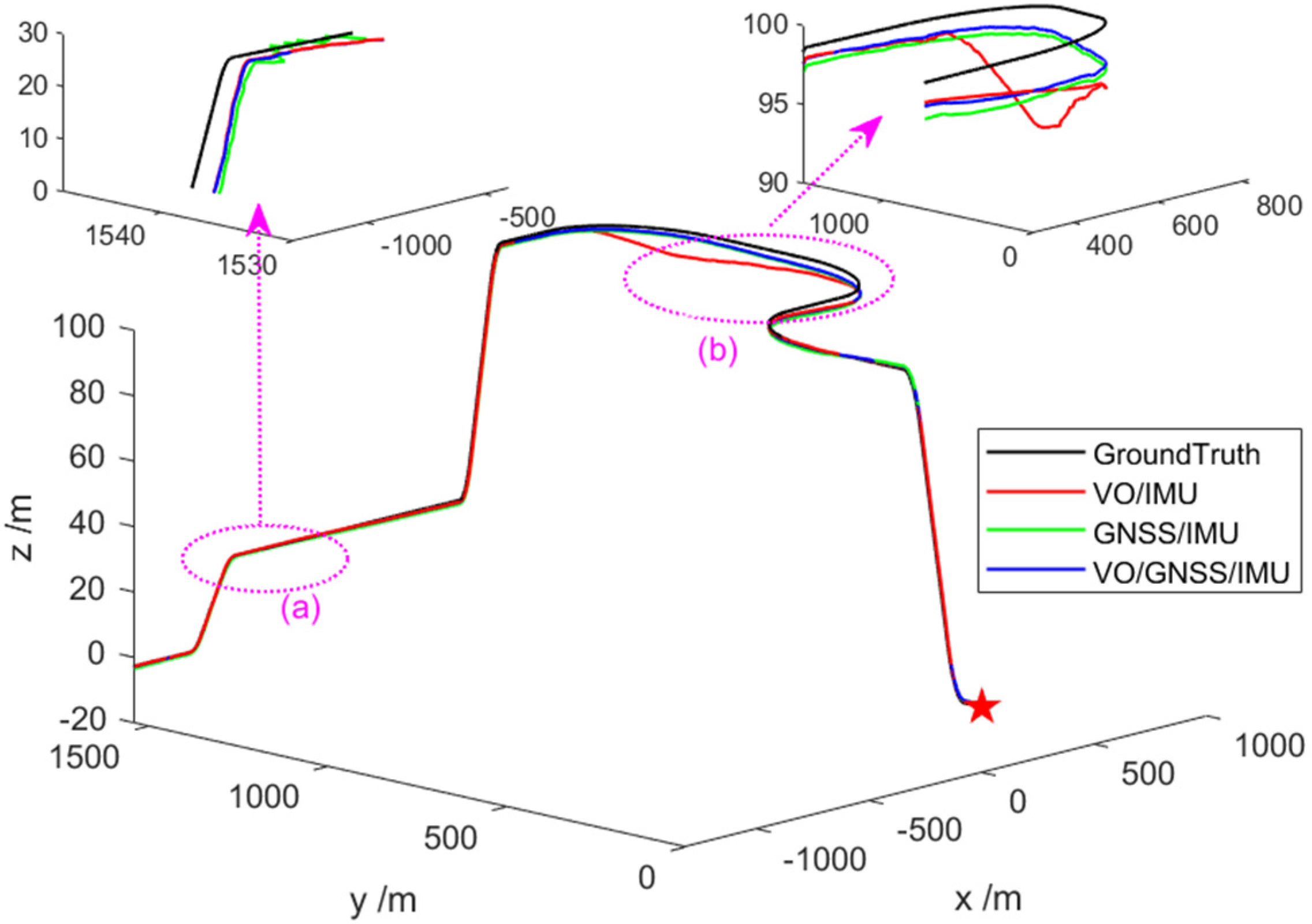

4.2.2. Comparison of State Estimation of Different Combined Systems

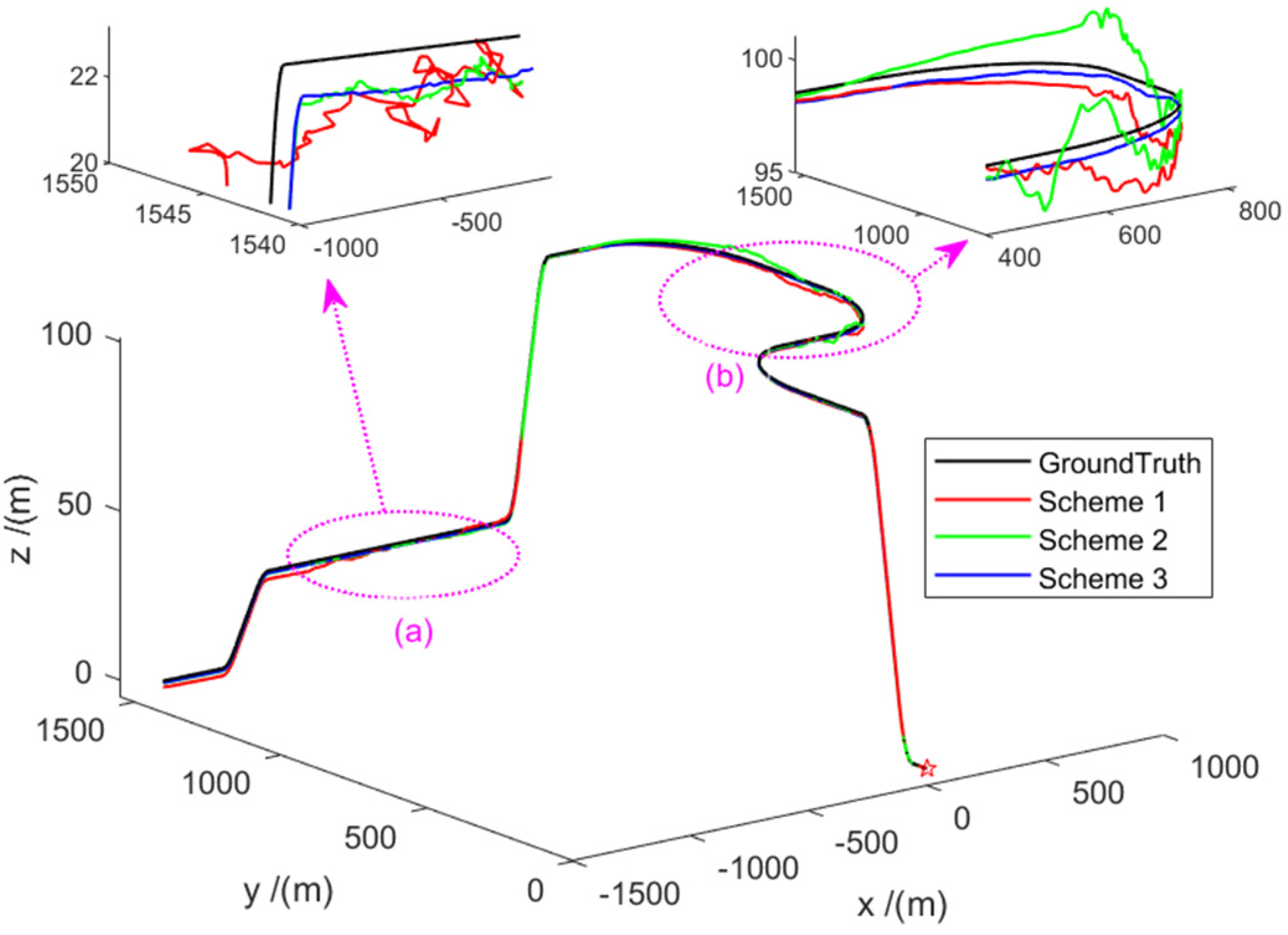

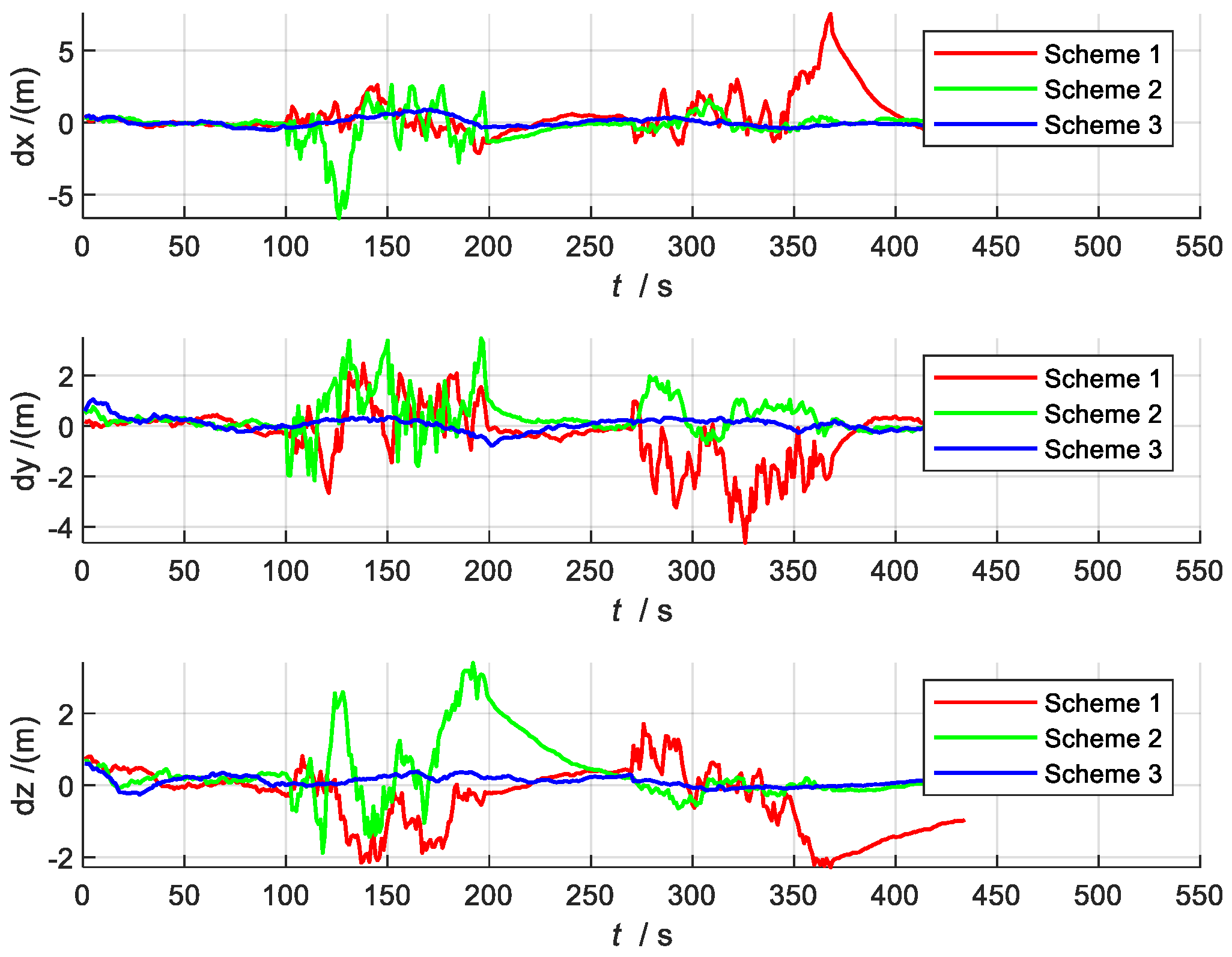

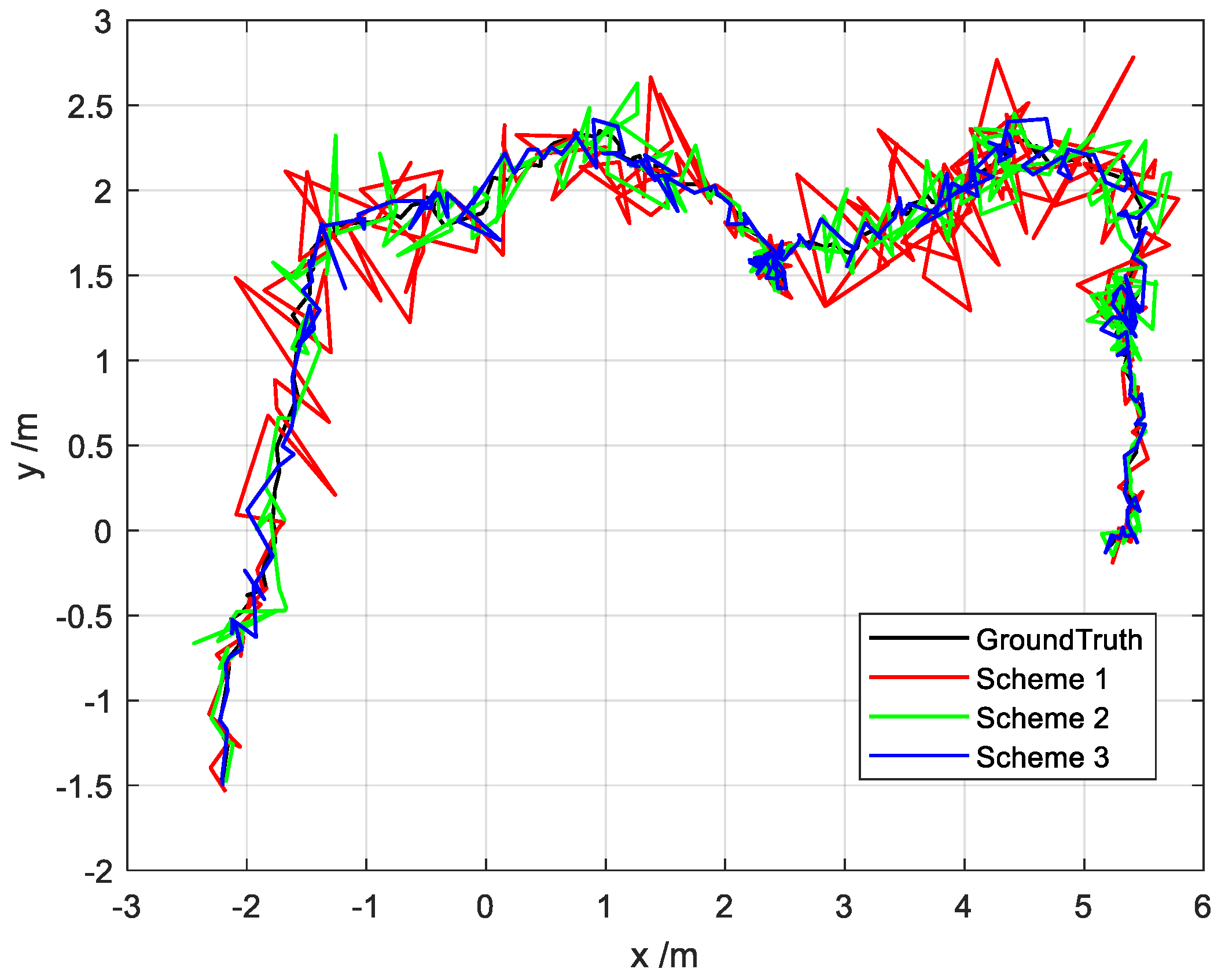

4.2.3. Comparison of the Results of Different Schemes

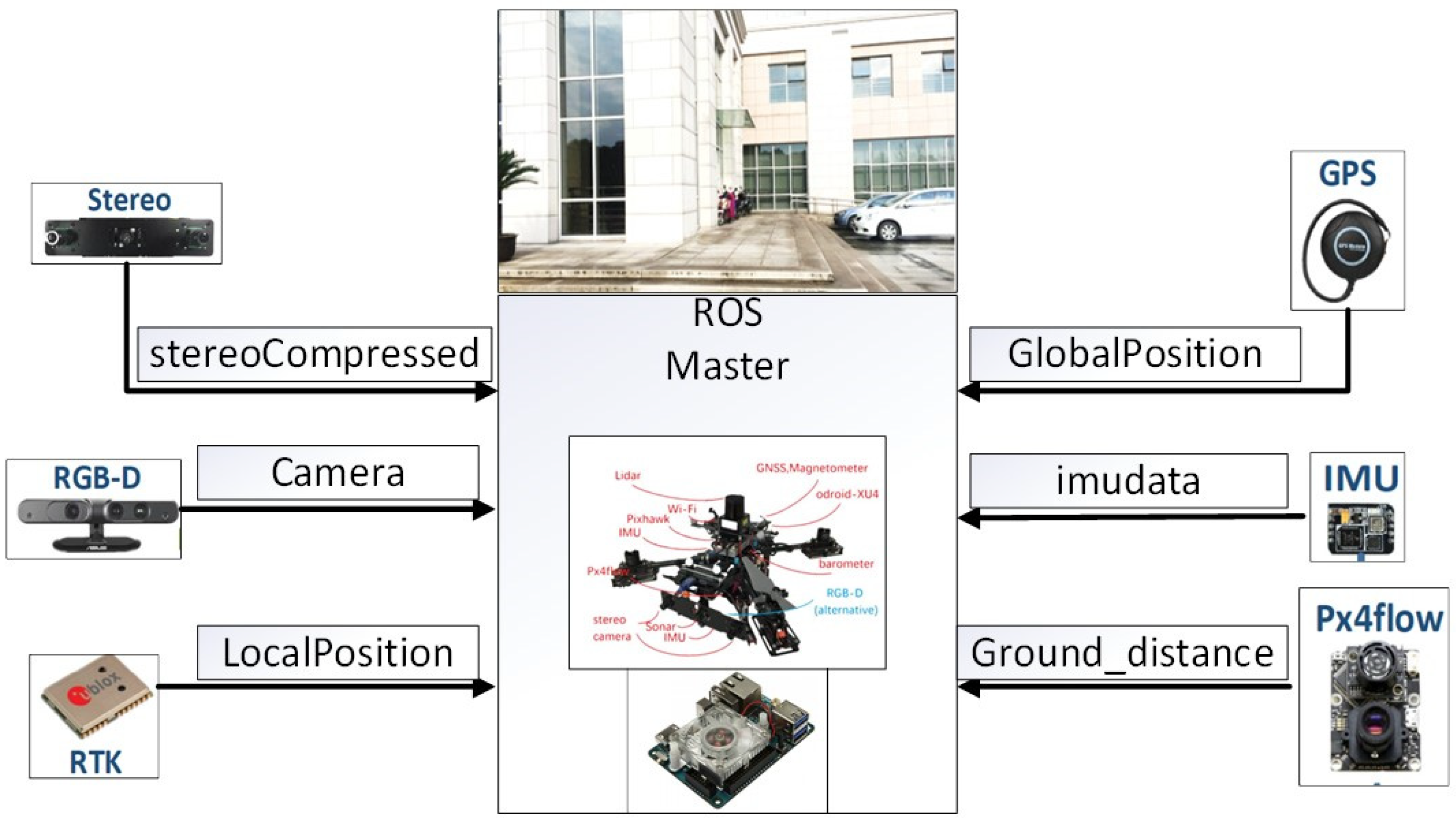

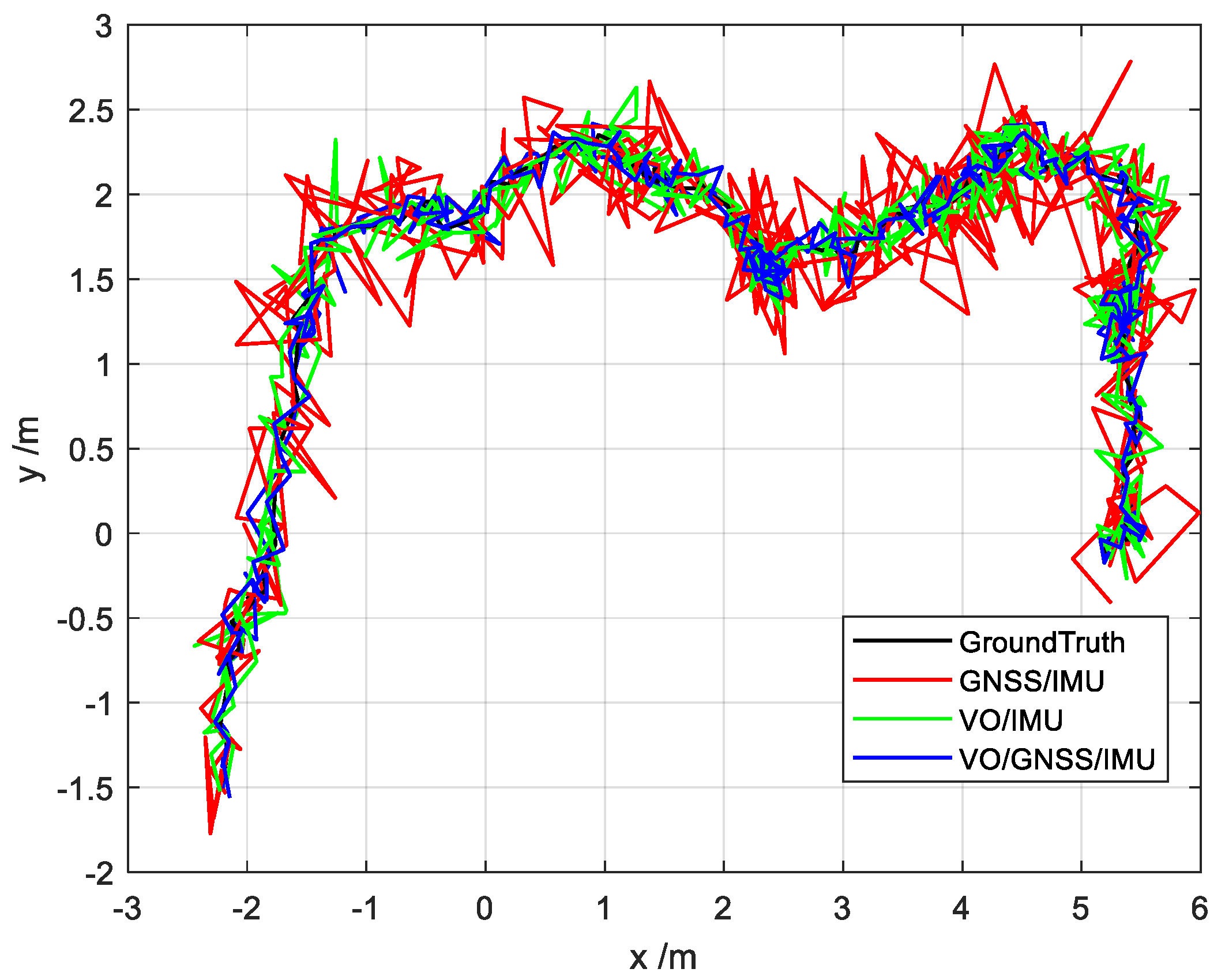

5. Dataset Validation

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Sensor Type | Parameter | Value |
|---|---|---|
| IMU | Gyro bias error | |
| | Gyro random walk error | |
| | Accelerometer bias error | |
| | Accelerometer random walk error | |
| | Frequency | 100 Hz |
| GNSS | Position error | [1 m; 1 m; 3 m] |
| | Speed error | [0.1 m/s; 0.1 m/s; 0.1 m/s] |
| | Frequency | 1 Hz |
| VO | Position error | [0.5 m; 0.5 m; 0.5 m] |
| | Attitude error | [0.5°; 0.5°; 0.5°] |
| | Frequency | 2 Hz |

| Number | Scheme 1 | Scheme 2 | Scheme 3 |
|---|---|---|---|
| 1 | 0.4818 | 0.1813 | 0.1470 |
| 2 | 0.3065 | 0.1891 | 0.0755 |
| 3 | 0.3805 | 0.2339 | 0.1125 |
| 4 | 0.3480 | 0.1894 | 0.0616 |
| 5 | 0.3754 | 0.1758 | 0.0641 |
| 6 | 0.4136 | 0.1953 | 0.0983 |
| 7 | 0.4064 | 0.2052 | 0.0929 |
| 8 | 0.3724 | 0.2280 | 0.0258 |
| 9 | 0.4319 | 0.1735 | 0.0093 |
| 10 | 0.4257 | 0.2243 | 0.0267 |
| 11 | 0.4267 | 0.2148 | 0.0140 |
| 12 | 0.3838 | 0.2646 | 0.0730 |
| 13 | 0.4428 | 0.2031 | 0.0542 |
| 14 | 0.4273 | 0.2063 | 0.0559 |
| 15 | 0.5012 | 0.1648 | 0.0162 |
| 16 | 0.4434 | 0.1562 | 0.1018 |
| 17 | 0.4471 | 0.2241 | 0.0882 |
| 18 | 0.4030 | 0.2017 | 0.0671 |
| 19 | 0.3493 | 0.2318 | 0.1115 |
| 20 | 0.3860 | 0.1759 | 0.0357 |
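
As a quick check on the 20-row table above, the per-scheme mean and sample standard deviation can be computed directly from the listed values. `RUNS` simply transcribes the table, and no units or interpretation of the entries are assumed beyond what the table shows.

```python
from statistics import mean, stdev

# Columns: Scheme 1, Scheme 2, Scheme 3 (transcribed from the 20-row table)
RUNS = [
    (0.4818, 0.1813, 0.1470), (0.3065, 0.1891, 0.0755),
    (0.3805, 0.2339, 0.1125), (0.3480, 0.1894, 0.0616),
    (0.3754, 0.1758, 0.0641), (0.4136, 0.1953, 0.0983),
    (0.4064, 0.2052, 0.0929), (0.3724, 0.2280, 0.0258),
    (0.4319, 0.1735, 0.0093), (0.4257, 0.2243, 0.0267),
    (0.4267, 0.2148, 0.0140), (0.3838, 0.2646, 0.0730),
    (0.4428, 0.2031, 0.0542), (0.4273, 0.2063, 0.0559),
    (0.5012, 0.1648, 0.0162), (0.4434, 0.1562, 0.1018),
    (0.4471, 0.2241, 0.0882), (0.4030, 0.2017, 0.0671),
    (0.3493, 0.2318, 0.1115), (0.3860, 0.1759, 0.0357),
]


def summarize(rows: list[tuple[float, ...]]) -> list[tuple[float, float]]:
    """Return (mean, sample standard deviation) for each column."""
    return [(mean(col), stdev(col)) for col in zip(*rows)]


for name, (m, s) in zip(("Scheme 1", "Scheme 2", "Scheme 3"), summarize(RUNS)):
    print(f"{name}: mean = {m:.4f}, std = {s:.4f}")
```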

| | Scheme 1 | Scheme 2 | Scheme 3 |
|---|---|---|---|
| Time (s) | 7.56 × 10⁻³ | 9.01 × 10⁻³ | 2.12 × 10⁻² |

| Sensor | Product Model | Collection Frequency (Hz) |
|---|---|---|
| Optical flow | Px4flow v1.3.1 | 20 |
| Stereo camera | 640 × 480 × 2 OV7725 | 30 |
| IMU | MPU9250 | 40 |
| RGB-D Camera | ASUS Xtion Pro Live | 40 |
| Vicon | Vero 360 | 100 |
| RTK GNSS receiver | Ublox M8P | 10 |

| Scheme | Error | Pitch (°) | Roll (°) | Yaw (°) | VX (m/s) | VY (m/s) | VZ (m/s) | X (m) | Y (m) | Z (m) |
|---|---|---|---|---|---|---|---|---|---|---|
| Scheme 1 | MAE | 1.56 | 1.62 | 3.01 | 0.30 | 0.25 | 0.22 | 0.26 | 0.24 | 0.19 |
| | STD | 0.95 | 0.97 | 1.97 | 0.23 | 0.22 | 0.19 | 0.50 | 0.55 | 0.64 |
| Scheme 2 | MAE | 1.08 | 1.12 | 1.78 | 0.15 | 0.14 | 0.12 | 0.13 | 0.12 | 0.11 |
| | STD | 0.81 | 0.85 | 1.25 | 0.15 | 0.11 | 0.09 | 0.32 | 0.45 | 0.84 |
| Scheme 3 | MAE | 0.62 | 0.55 | 0.95 | 0.07 | 0.07 | 0.06 | 0.06 | 0.07 | 0.06 |
| | STD | 0.41 | 0.23 | 0.52 | 0.10 | 0.08 | 0.07 | 0.15 | 0.18 | 0.14 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dai, J.; Hao, X.; Liu, S.; Ren, Z. Research on UAV Robust Adaptive Positioning Algorithm Based on IMU/GNSS/VO in Complex Scenes. Sensors 2022, 22, 2832. https://doi.org/10.3390/s22082832
