Abstract

Foot progression angle (FPA) analysis is one of the core methods for detecting gait pathologies and provides basic information for preventing foot injury from excessive in-toeing and out-toeing. Deep learning-based object detection can assist in measuring the FPA from plantar pressure images. This study aims to establish a precise model for determining the FPA. Precise detection of the FPA can provide information on in-toeing, out-toeing, and rearfoot kinematics to evaluate the effect of physical therapy programs on knee pain and knee osteoarthritis. We analyzed a total of 1424 plantar images with three You Only Look Once (YOLO) networks, YOLOv3, YOLOv4, and YOLOv5x, to obtain a suitable model for FPA detection. YOLOv4 showed the highest performance on the profile-box, with an average precision of 100.00% for the left foot and 99.78% for the right foot. In detecting the foot angle-box, the FPA from YOLOv4 did not differ significantly from the ground-truth at the 0.01 significance level (5.58 ± 0.10° vs. 5.86 ± 0.09°, p = 0.013). In contrast, the FPA differed significantly between the ground-truth and YOLOv3 (5.58 ± 0.10° vs. 6.07 ± 0.06°, p < 0.001) and between the ground-truth and YOLOv5x (5.58 ± 0.10° vs. 6.75 ± 0.06°, p < 0.001). These results imply that deep learning with YOLOv4 can enhance the detection of the FPA.

1. Introduction

Plantar image analysis is an effective tool for assessing pathological gait and rehabilitation effectiveness and is widely used in clinical practice [1]. Plantar pressure patterns and distributions, including the foot progression angle (FPA), provide detailed information for evaluating walking abnormalities [2,3,4]. The FPA is defined as the angle between the line of walking progression and the long axis of the foot; it represents the placement angle of the longitudinal foot axis during gait [5,6,7]. In-toeing and out-toeing, the most common types of FPA deviation, are associated with knee pain and fall risk [8,9]. In-toeing and out-toeing are identified when the FPA is <0° and >20°, respectively [10,11]. In addition, detecting the FPA can accelerate the rehabilitation process and reduce knee pain [12], for example through step-width modifications of various amplitudes and gait retraining in everyday walking [13,14], and it supports the correction of in-toeing and out-toeing by demonstrating the effectiveness of medial-wedge insoles and smart shoes [15,16]. Because the FPA guides the treatment progression of gait pathology, obtaining a precise FPA makes the rehabilitation process more efficient [17,18].

In addition, the FPA may be an important indicator of plantar pressure changes that can be attributed to chronic disease [19]. Such chronic disease is related to knee injury due to excessive toe-in or toe-out [20]. Furthermore, an externally rotated FPA and increased medial loading play important roles in flatfoot [21,22]. Moreover, foot placement angle was the best single predictor of total rearfoot motion, and the FPA may be useful for correcting atypical rearfoot kinematics [23,24]. Classifying the left and right foot before measuring the FPA may play an important role in providing information on postural changes [25]. For example, a decrease in the percentage of body weight on the left heel in asthmatic patients may be related to the postural changes characteristic of asthma [26].

Successful detection of the FPA is required to calculate the abnormality angle [27]. FPA abnormalities may go undetected when clinical experience is limited, especially during data acquisition and calculation in remote areas [28]. One-dimensional plantar pressure signals [29,30] and two-dimensional plantar pressure images [31] are two ways to capture information on pressure patterns, which provide detailed information about foot movement [32,33]. Moreover, two-dimensional plantar pressure images can be used to reliably determine the long axis of the foot during walking [34]. Although plantar pressure software can identify the FPA with a masking algorithm on a foot scan, masking algorithms have limitations: the software may not learn the specific classification of foot profiles or detect FPA abnormalities in in-toeing and out-toeing [35,36]. This limitation is an opportunity for deep learning object detection to predict the foot profiles and the diagonal FPA in plantar pressure images and thereby obtain an accurate FPA [37].

This study examines the effectiveness of deep learning for FPA measurement, which can be beneficial in excessive in-toeing or out-toeing foot rotation that alters gait appearance [38]. A more out-toeing gait might reduce pain in patients with knee osteoarthritis. However, extreme out-toeing reduces patients’ ankle power, potentially mitigating the forces and knee adduction moment while reducing gait speed and efficiency [39]. In-toeing also reduces the knee adduction moment, but it increases the knee flexion angle. Therefore, the variation of the knee flexion angle with in-toeing should be monitored, because an increased knee flexion angle has undesirable effects on knee osteoarthritis progression [36].

Deep learning for object detection is widely used in biomedical applications [40,41,42]. For example, a deep learning model can identify plantar pressure patterns for early detection of foot abnormalities [43]. In addition, deep learning-based approaches have achieved state-of-the-art performance in image classification, segmentation, object detection, and tracking tasks [44]. Object detection determines where objects are located in a given image and which category each object belongs to [45]. Object detection has become more streamlined, accurate, and faster as the technology has progressed from the Region-based Convolutional Neural Network (R-CNN) to the Region-based Fully Convolutional Network (R-FCN). However, these algorithms are region-based [46], so region proposals must be generated before they can be applied. You Only Look Once (YOLO) is not a region-based algorithm and provides an end-to-end approach that makes it more efficient for measuring the FPA. YOLO uses a single neural network to predict bounding boxes and class probabilities directly from entire images, which may be essential for classifying the left and right foot [47]. Kim et al. found that YOLO outperformed R-CNN, Fast R-CNN, and the single-shot detector (SSD) [48]. In addition, YOLO has shown good performance in detecting two-dimensional signals in medical images [49].

YOLO is a deep learning model commonly used for prediction on image data such as plantar images [50,51]. It is one of the most powerful and fastest deep learning-based object identification algorithms, providing fast and precise solutions for medical image detection and classification [52,53]. Several versions of the YOLO network are available that may help detect the FPA accurately. Because precise FPA results require calculations with minimal error, this study compares several YOLO versions to determine their performance in detecting the FPA. The YOLO network is a one-stage object detection algorithm that outputs both classification results and position coordinates [54]. Clinical examination of the FPA by the human eye has been beneficial for evaluating in-toeing and out-toeing as a basis for postural information [18]. Evaluating in-toeing and out-toeing is also essential for assessing knee pain and the effect of knee pain rehabilitation [20]. In addition, changes in the FPA affect rearfoot eversion and the normalization of rearfoot kinematics [55]. This study uses deep learning-based object detection to localize the coordinates of the FPA. Deep learning may improve precision over reported clinical screening results and human accuracy by 10–27% [56]. Precise detection of the FPA can provide information on in-toeing [38], out-toeing [57], and rearfoot kinematics [55] to evaluate the effect of physical therapy programs on knee pain and knee osteoarthritis [5].

2. Materials and Methods

Data used to prepare this article were obtained from the AIdea platform provided by Industrial Technology Research Institute (ITRI) of Taiwan (https://aidea-web.tw, accessed on 21 February 2021). This study used 1424 plantar pressure images as datasets, with each image of 120 pixels × 400 pixels. A professional data annotator from the data provider labeled the dataset to classify the foot axis point coordinates in the plantar pressure dataset. The image data were divided into a training set with 900 images, a validation set with 100 images, and a prediction test with 424 images. The labeled prediction test images were used as the ground-truth dataset in this study. However, the ground-truth dataset only provided the front and rear points of the foot axis in pixel coordinates.

The FPA was therefore calculated from these two points using the arctangent formula. All calculations were performed on a computer with the following hardware: Intel Core i7-10700 CPU, 32 GB RAM, and an NVIDIA RTX 3080 GPU with 10 GB of memory. This study was reported according to the STROBE guideline recommendations for reporting observational studies [58], which were applied during study design, training, validation, and reporting of the prediction model.

YOLO is a state-of-the-art deep learning framework for real-time object recognition and supports object detection significantly faster than earlier detection networks [50]. The model can run at various resolutions, balancing speed and precision, which can be beneficial in measuring the FPA. YOLOv3 became one of the state-of-the-art object detection algorithms [59]. Instead of using mean square error to calculate the classification loss, YOLOv3 uses multi-label classification with a binary cross-entropy loss for each label. YOLOv3’s backbone is DarkNet-53, which replaces DarkNet-19 as the feature extractor. The DarkNet-53 network is a chain of blocks with stride-2 convolution layers in between to reduce the spatial dimension. Each block has a bottleneck structure of 1 × 1 filters followed by 3 × 3 filters, with skip connections [60]. Alexey Bochkovskiy et al. introduced YOLOv4, the successor to YOLOv3, which runs twice as fast as EfficientDet while providing comparable performance [61]. Rather than using DarkNet-53 for feature extraction, YOLOv4 uses a modified CSPDarknet-53 backbone, in which cross-stage-partial (CSP) connections split the feature extraction path into two parts [62]. Instead of the leaky ReLU function used in YOLOv3 and YOLOv4-tiny, YOLOv4 uses the Mish activation function. YOLOv5 was first released on GitHub in May 2020, and its maintainer named the network YOLOv5 to avoid confusion with the earlier release of YOLOv4 [63]. The key new features and enhancements in YOLOv5 are the adoption of state-of-the-art deep learning techniques, such as activation functions and data augmentation, and the use of CSPNet as its backbone [64]. This study used YOLOv3, YOLOv4, and YOLOv5x to measure the FPA.

The training images were fed into the YOLO models for training. The predicted bounding boxes were obtained based on the anchor boxes in each YOLO model. This study compared three versions, i.e., YOLOv3, YOLOv4, and YOLOv5x, which solve object detection efficiently and straightforwardly [65]. The hyperparameters were as follows: the batch size and mini-batch size were 16 and 4, respectively; the momentum and weight decay were 0.9 and 0.0005, respectively; the initial learning rate was 0.001; and training ran for 300 epochs. The detectors were implemented with Python 3.7.6, PyTorch 1.7.0 (for the YOLOv5x model), and the Darknet framework (for the YOLOv3 and YOLOv4 models) on Windows 10.

2.1. Regular FPA Detection Procedure

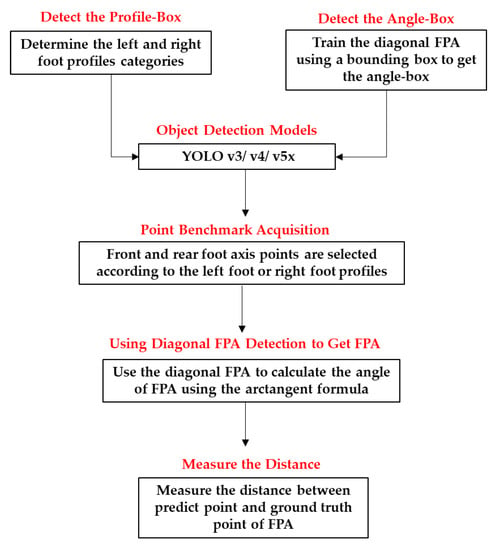

We conducted five steps to obtain the FPA, from training on the data to calculating the angles (Figure 1). First, we determined the foot profile, because the direction of the diagonal FPA differs between the left and right foot. Second, we trained the models to detect the diagonal FPA with a bounding box, called the angle-box, which has four corner points. Third, from the four corner points of the angle-box, we selected two points (the front and rear foot axis points) according to the left or right foot profile. Fourth, we used the diagonal FPA to calculate the angle of the FPA with the arctangent formula. Fifth, to confirm the predicted coordinates of the two foot axis points, we checked the distance between the predicted and ground-truth points.

Figure 1.

Flowchart illustrating the proposed method of foot progression angle (FPA) detection using YOLOv3, YOLOv4, and YOLOv5x.

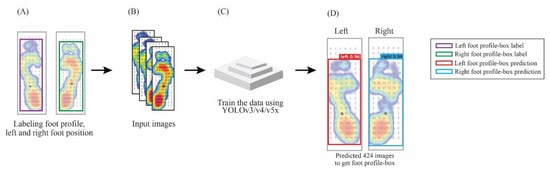

2.1.1. Determine the Left and Right Foot Profiles Categories

In the first training stage, the foot profile was labeled as left or right using a bounding box in the dataset (Figure 2A). The labeled dataset was then input to three YOLO models, i.e., YOLOv3, v4, and v5x (Figure 2B,C). For the prediction test, we used 424 images to obtain the foot profile-box of the left and right feet (Figure 2D). The foot profile-box was used to determine whether an image shows a left or right foot, since the foot profile determines the direction of the FPA.

Figure 2.

The illustration of profile-box for left and right foot in plantar pressure image detection with the YOLO model. (A) Labeling the foot profile in left and right categories using the bounding-box. (B) Input the data labeled for training. (C) Training the dataset using YOLOv3, v4, and v5x. (D) The prediction test used 424 images to get the foot profile-box.

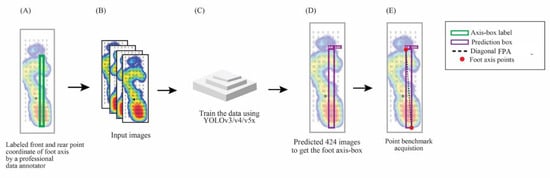

2.1.2. Angle-Box

We used a bounding-box, the angle-box, to capture the diagonal FPA (Figure 3A). For training, we input the data labeled by a professional data annotator (Figure 3B) into the three YOLO models, namely v3, v4, and v5x (Figure 3C). We tested 424 images to obtain the angle-box predictions and determined the foot axis points according to the foot profile-box (Figure 3D). For the left foot, the diagonal FPA runs from the top-left to the bottom-right corner of the angle-box (Figure 3E), whereas for the right foot it runs from the top-right to the bottom-left corner.

Figure 3.

Illustration of the angle-box for the foot progression angle (FPA) in a plantar pressure image with the YOLO model. (A) The dataset is labeled by the professional annotator. (B) Input the labeled images. (C) Training using YOLOv3, v4, and v5x. (D) Testing the 424 images to get the foot angle-box. (E) Determine the foot axis point acquisition based on the foot profile-box.

2.1.3. Point Benchmark Acquisition

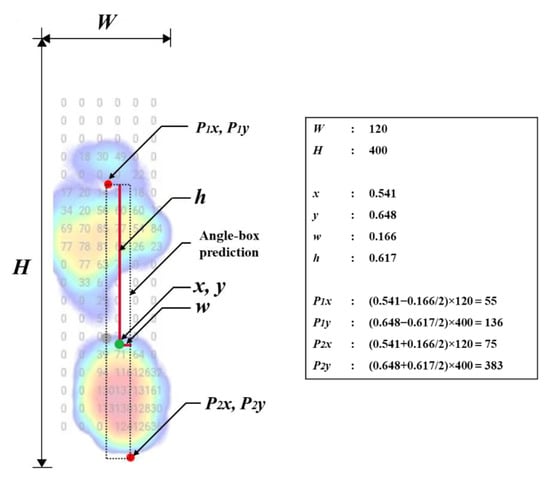

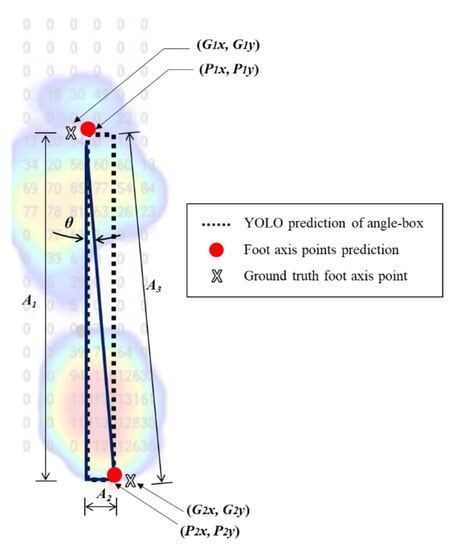

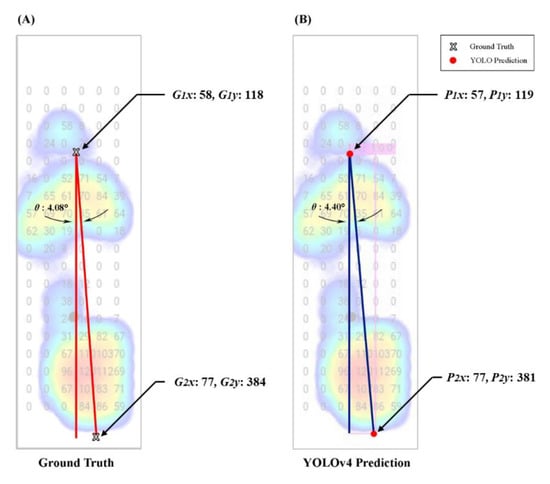

After obtaining the angle-box, the foot axis points were used to compute the distance between the ground-truth and the three YOLO models (Figure 4). The YOLO models record the four corner coordinates of the predicted angle-box, which are obtained by converting the YOLO coordinates (x, y, w, h) into pixel coordinates (P1x, P1y, P2x, P2y) [66]. In detail, the horizontal value of the front foot axis point was calculated using Equation (1), the horizontal value of the rear foot axis point using Equation (2), the vertical value of the front foot axis point using Equation (3), and the vertical value of the rear foot axis point using Equation (4).

where P1x and P1y are the coordinates of the front foot axis point and P2x and P2y are the coordinates of the rear foot axis point; x and y are the coordinates of the box center; w and h are the width and height of the bounding box; and W and H are the width and height of the image (Figure 4). P1x and P1y correspond to the top-left corner for the left foot and the top-right corner for the right foot, and P2x and P2y correspond to the lower-right corner for the left foot and the lower-left corner for the right foot.
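The conversion described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: it assumes that x, y, w, and h are normalized to the range 0 to 1, that the image origin is the top-left corner with y increasing downward, and that the corner selection follows the left/right rule stated in the text. The function name `angle_box_to_axis_points` and the `foot` argument are hypothetical.

```python
from typing import Tuple

Point = Tuple[float, float]


def angle_box_to_axis_points(
    x: float, y: float, w: float, h: float,
    img_w: int, img_h: int, foot: str,
) -> Tuple[Point, Point]:
    """Return the front (P1) and rear (P2) foot axis points in pixels.

    Assumes normalized YOLO coordinates (box center x, y and size w, h)
    and a top-left image origin; both are assumptions of this sketch.
    """
    left = (x - w / 2) * img_w
    right = (x + w / 2) * img_w
    top = (y - h / 2) * img_h
    bottom = (y + h / 2) * img_h

    if foot == "left":
        # Left foot: front point at top-left, rear point at bottom-right.
        return (left, top), (right, bottom)
    if foot == "right":
        # Right foot: front point at top-right, rear point at bottom-left.
        return (right, top), (left, bottom)
    raise ValueError("foot must be 'left' or 'right'")


# Illustrative box centered in a 120 x 400 pixel image (values are made up).
print(angle_box_to_axis_points(0.5, 0.5, 0.5, 0.5, 120, 400, "left"))
# -> ((30.0, 100.0), (90.0, 300.0))
```

Selecting the corner pair from the foot profile keeps the conversion itself identical for both feet; only the diagonal that is read off the box changes.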

Figure 4.

Example of converting YOLO coordinates (i.e., the angle-box) into pixel coordinates. The YOLO coordinates are x, y, w, and h; the pixel coordinates are P1x, P1y, P2x, and P2y. x and y, the coordinates of the box center; w, the width of the bounding-box; h, the height of the bounding-box; W, the width of the image; H, the height of the image.

2.1.4. Using Diagonal FPA Detection to Get the FPA

After obtaining the foot axis points (P1x, P1y) and (P2x, P2y), we used the diagonal from (P1x, P1y) to (P2x, P2y) to get the FPA. We then calculated the FPA with the arctangent formula [67] from A1 and A2 (Figure 5), using Equation (5).

where θ is the FPA of each image in degrees. θ is used to compare the ground-truth with the three YOLO prediction results. Here, A1 is the height of the angle-box, and A3 is the diagonal FPA of the angle-box. The YOLO version with the smallest angle difference from the ground-truth is considered the most suitable model in this study.
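As a hedged illustration of the arctangent step, the sketch below assumes that the angle is θ = arctan(A2/A1) converted to degrees, i.e., the angle between the box diagonal (A3) and the vertical image axis, with the line of progression taken to run along the image height. This form is an assumption of the sketch, chosen to be consistent with the definitions of A1 (height) and A2 (width); the function name `fpa_degrees` is hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def fpa_degrees(front: Point, rear: Point) -> float:
    """Angle in degrees between the front-rear diagonal and the vertical axis.

    Assumes theta = arctan(A2 / A1), where A1 is the box height and
    A2 is the box width (an assumption of this sketch).
    """
    a2 = abs(rear[0] - front[0])  # A2: horizontal extent (box width)
    a1 = abs(rear[1] - front[1])  # A1: vertical extent (box height)
    # atan2 avoids a division by zero when the box height is zero.
    return math.degrees(math.atan2(a2, a1))


# Illustrative points (made up): a diagonal 60 px wide and 200 px tall.
theta = fpa_degrees((30.0, 100.0), (90.0, 300.0))
print(round(theta, 2))
```

Because only the absolute width and height are used, this sketch returns an unsigned angle; distinguishing in-toeing from out-toeing would need the foot profile as additional input.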

Figure 5.

Example of the diagonal foot progression angle (FPA) of the angle-box in the YOLO model. G1 (G1x and G1y), ground-truth for the front foot axis point; G2 (G2x and G2y), ground-truth for the rear foot axis point; P1 (P1x and P1y), YOLO model prediction for the front foot axis point; P2 (P2x and P2y), YOLO model prediction for the rear foot axis point; A1, the height of the angle-box; A2, the width of the angle-box; A3, the diagonal FPA of the angle-box; θ, the FPA in degrees.

2.1.5. Measure the Distance

Errors in the front or rear foot axis point can affect the diagonal FPA. This study therefore applied the two-point distance formula [68] to each image’s ground-truth coordinates and the coordinates predicted by YOLOv3, YOLOv4, and YOLOv5x. We calculated the distance between Gi (i.e., G1 and G2) and Pi (i.e., P1 and P2) (Figure 4) using Equation (6).

Equation (6) gives the distance between the ground-truth diagonal FPA point coordinates and YOLO’s diagonal FPA point coordinates. We applied this formula three times to obtain the distance between the ground-truth and each of the three YOLO models.
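The two-point distance can be illustrated with a short sketch. It assumes the standard Euclidean distance between a ground-truth point Gi and a predicted point Pi, both in pixel coordinates; the function name `point_distance` and the sample coordinates are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def point_distance(ground_truth: Point, predicted: Point) -> float:
    """Euclidean distance in pixels between a ground-truth and a predicted point."""
    return math.hypot(
        ground_truth[0] - predicted[0],
        ground_truth[1] - predicted[1],
    )


# Illustrative coordinates (made up): the points differ by 3 px and 4 px.
print(point_distance((30.0, 100.0), (33.0, 104.0)))  # -> 5.0
```

Applying the function separately to the front pair (G1, P1) and the rear pair (G2, P2) yields the two distances per image that are compared across the YOLO models.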

2.2. Statistical Analysis

After obtaining the FPA values for the ground-truth and the three YOLO models in each image, we compared the front and rear foot axis points in the three YOLO models using a paired t-test. The paired t-test was used to describe the differences between the points, to determine which point affected the detection of the FPA, and to obtain the angle difference between the ground-truth and the YOLO models. Finally, we used one-way ANOVA with LSD post hoc tests at a significance level of 0.01 to describe the differences between the YOLO models and the ground-truth. The data were processed using SPSS 26 (IBM, Somers, NY, USA).
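For readers who want to see what the paired comparison computes, the following sketch derives the paired t statistic from per-image front and rear distances using only the Python standard library. It is an illustration, not a reproduction of the SPSS analysis: the function name `paired_t_statistic` and the sample values are hypothetical, and the p-value (which requires the t distribution) is deliberately left out.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t_statistic(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Return the paired t statistic and its degrees of freedom.

    t = mean(d) / (sd(d) / sqrt(n)), where d are the per-image differences.
    """
    if len(a) != len(b):
        raise ValueError("paired samples must have the same length")
    if len(a) < 2:
        raise ValueError("at least two pairs are required")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("differences have zero variance")
    return mean(diffs) / (sd / math.sqrt(n)), n - 1


# Made-up per-image distances (pixels) at the front and rear points.
front = [10.0, 12.0, 9.0, 11.0]
rear = [8.0, 9.0, 8.0, 9.0]
t, df = paired_t_statistic(front, rear)
print(round(t, 3), df)
```

The statistic is then compared against the t distribution with n − 1 degrees of freedom at the chosen significance level (0.01 in this study).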

3. Results

3.1. Training Results

Average precision (AP) and mean average precision (mAP) are the most popular metrics for evaluating object detection models [69]. A high mAP means that the trained model performs well [60]. The AP and loss values of YOLOv3, YOLOv4, and YOLOv5x are shown in Table 1. For the profile-box, we used the AP to obtain detailed results for each class of the foot profile-box prediction, which determines the left and right foot position [70]. For example, YOLOv4 reached an AP of 100.00% for the left foot and a similarly high AP of 99.78% for the right foot. For the foot angle-box, we used the mAP; here, the mAP equals the AP because there is only one object class. The mAP of YOLOv4 for the foot angle-box (97.98%) was 14.38% higher than that of YOLOv5x (96.90%) and 11.88% higher than that of YOLOv3 (86.32%).
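The relation between AP and mAP can be made concrete with a short sketch: the mAP is the mean of the per-class AP values, so it coincides with the AP when there is a single class. The AP values below are the YOLOv4 figures quoted in the text; the function name `mean_average_precision` and the dictionary layout are hypothetical.

```python
from typing import Dict


def mean_average_precision(ap_by_class: Dict[str, float]) -> float:
    """Mean of the per-class average precision values (in percent)."""
    if not ap_by_class:
        raise ValueError("at least one class is required")
    return sum(ap_by_class.values()) / len(ap_by_class)


# Profile-box: two classes (left and right foot), YOLOv4 AP values from the text.
profile_ap = {"left": 100.00, "right": 99.78}
print(round(mean_average_precision(profile_ap), 2))  # -> 99.89

# Angle-box: one class, so the mAP equals the AP.
angle_ap = {"angle-box": 97.98}
print(mean_average_precision(angle_ap))  # -> 97.98
```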

Table 1.

YOLOv3, v4, and v5x performance in bounding box training on the foot profile (profile-box) and FPA (angle-box).

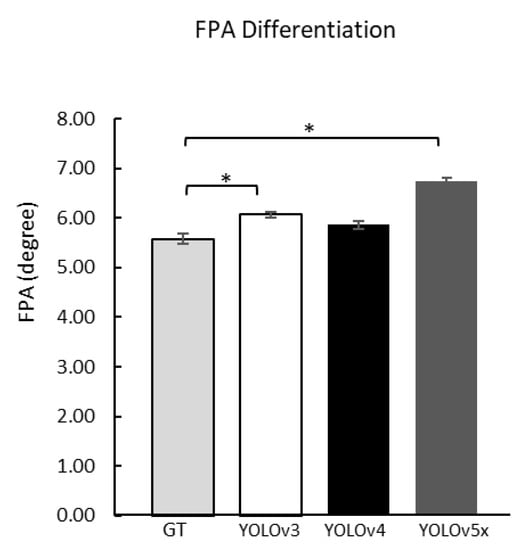

3.2. FPA Comparison

The prediction test set comprised 424 images, of which 367 were usable. The remaining 57 images had missing values because the deep learning model could not recognize the image, and they were excluded from further analysis [71,72]. The FPAs from the three YOLO versions were compared with the ground-truth FPA using one-way ANOVA with LSD post hoc tests to obtain the angle differences. The YOLOv4 FPA (5.86 ± 0.09°) did not differ significantly from the ground-truth (5.58 ± 0.10°) (Table 2). However, the YOLOv3 and YOLOv5x FPAs differed significantly from the ground-truth (Figure 6).
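The exclusion of images with missing values can be sketched as a simple filter. This sketch assumes that an image is dropped when any of the compared models returns no angle-box for it; the text does not state the exact rule, so this is an assumption, and the function name `usable_image_ids`, the data layout, and the sample entries are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # YOLO box as (x, y, w, h)


def usable_image_ids(predictions: Dict[str, Dict[str, Optional[Box]]]) -> List[str]:
    """Keep only images for which every model produced an angle-box.

    `predictions` maps image id -> model name -> box, with None marking
    a missing detection (assumed exclusion rule, not confirmed by the text).
    """
    return [
        image_id
        for image_id, by_model in predictions.items()
        if all(box is not None for box in by_model.values())
    ]


# Made-up example: the second image has no YOLOv3 detection and is excluded.
sample = {
    "img_001": {"v3": (0.5, 0.5, 0.4, 0.8), "v4": (0.5, 0.5, 0.4, 0.8)},
    "img_002": {"v3": None, "v4": (0.5, 0.5, 0.4, 0.8)},
}
print(usable_image_ids(sample))  # -> ['img_001']
```

Excluding an image for all models at once keeps the groups in the later comparison based on the same set of images.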

Table 2.

One-way ANOVA of FPA comparison between the ground-truth angle and different YOLO versions.

Figure 6.

FPA comparison between GT with YOLOv3, v4, and v5x. GT, Ground-truth; *, a significant difference (p < 0.01).

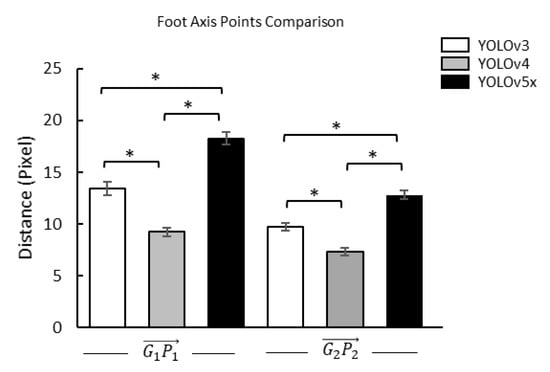

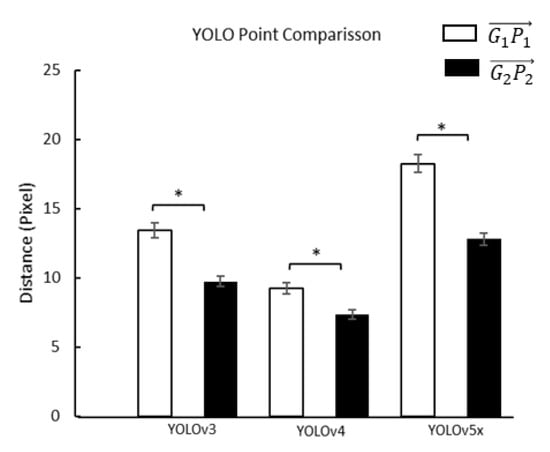

3.3. Distance between Ground-Truth Point and Prediction Point

To confirm the foot axis points, we used a paired t-test to compare the distances at the front and rear foot axis points in the three YOLO models. Furthermore, we used one-way ANOVA with Fisher’s LSD post hoc tests to compare the distances among the three YOLO models at the front and rear points (Table 3 and Table 4). All comparisons were significantly different (Figure 7 and Figure 8).

Table 3.

Effect of different YOLO models on the distance between the ground-truth point and prediction point.

Table 4.

Effect of different points of FPA on the distance between the ground-truth point and prediction point.

Figure 7.

Comparisons of the distances between ground-truth and prediction points at the front and rear foot axis points of the angle-box in different YOLO models. Front-point distance, the distance between the front points of the ground-truth (G1) and YOLO model prediction (P1); rear-point distance, the distance between the rear points of the ground-truth (G2) and YOLO model prediction (P2); *, a significant difference (p < 0.01).

Figure 8.

Comparisons of the effect of different YOLO models on the distances between the ground-truth point and prediction point at the front point and the rear point of the angle-box. *, a significant difference (p < 0.01).

To evaluate the FPA results from the YOLO model predictions, we tested an example plantar image by measuring angles in digital image software (Photoshop CS.5, Adobe Inc., San Jose, CA, USA) and comparing the ground-truth with the YOLO prediction [73]. First, we measured the FPA from the ground-truth coordinates. Second, we measured the FPA from our predicted foot axis point coordinates. The predicted angle was close to the ground-truth angle (Figure 9).

Figure 9.

Examples of validation using Photoshop software for the left foot profile. (A) The ground-truth angle of 4.08° was compared with (B) the same image from the YOLOv4 prediction with an angle of 4.40°. Note: G1x and G1y are the front foot axis point of the ground-truth. G2x and G2y are the rear foot axis point of the ground-truth. P1x and P1y are the front foot axis point of the YOLO model. P2x and P2y are the rear foot axis point of the YOLO model.

4. Discussion

This study used two labels, the profile-box and the angle-box, to obtain the FPA. The profile-box covers the whole plantar pressure image and determines the left and right foot profiles. The angle-box lies inside the plantar pressure image, extending from the heel to the metatarsal heads without the toe region, and is used to predict the FPA.

This study shows the effectiveness of deep learning on a small-scale test set containing 367 plantar images. For the profile-box, the training results showed that YOLOv4 had the highest mAP (99.89%), with an AP of 100.00% for the left foot profile and 99.78% for the right foot profile. For the angle-box, YOLOv4 also had the highest mAP (97.98%). Moreover, the FPA did not differ significantly between the YOLOv4 prediction and the ground-truth, indicating that YOLOv4 and the ground-truth give similar results (Figure 6). In addition, the front foot axis point may affect the accuracy of FPA detection (Figure 7).

Therefore, the YOLO model is suitable for detecting the FPA from plantar pressure images based on object detection. These results may indicate that YOLO can help predict the FPA. In addition, the precision of YOLO models on the FPA may contribute to clinical practice by providing information on in-toeing, out-toeing, and rearfoot kinematics, in evaluating the effect of physical therapy programs on knee pain and knee osteoarthritis.

4.1. YOLO Deep Learning Performance

The normal FPA is an out-toeing angle that ranges from 5° to 13° in children [21]. For the adult population, a normal FPA is defined as between 0° and 20° [10]. Our results indicate that the data used in this study fell within the normal FPA range (Table 2). The FPA differed between the ground-truth (5.58 ± 0.10°) and the three YOLO models (v3: 6.07 ± 0.06°, v4: 5.86 ± 0.09°, and v5x: 6.75 ± 0.06°) by an estimated 1.3° to 1.9°. The YOLO model can detect and estimate the precise FPA direction in the plantar pressure image. Deep learning can also detect and estimate spinal curve angles as well as angles related to trunk and limb kinematics. For spinal disorders and deformities, Galbusera et al. trained a deep learning model to predict the kyphosis angle, lordosis angle, and Cobb angle; the automated method yielded standard errors of the estimate between 2.7° and 9.5° [74]. Alharbi et al. used deep learning object detection to automatically measure the scoliosis angle from X-ray images, with an estimated difference of 5°–10° [75].

Furthermore, Hernandez et al. used deep learning to predict lower limb joint angles from inertial measurement units and obtained an average difference of 2.1° between the ground-truth and predicted joint angles [76]. Pei et al. used deep learning to detect hip–knee–ankle angles in X-ray images and, in a comparison with another deep learning model, obtained angles that deviated from the ground-truth by an estimated 1.5° [77]. In our results, the difference in FPA between the YOLO predictions and the ground-truth ranged from 1.3° to 1.9°, similar to the results for the lower limb in other studies. Therefore, the YOLO models are suitable for detecting the FPA from plantar pressure images based on object detection.

4.2. YOLOv4 Showed Superior Results

In our results, YOLOv4 showed excellent performance in detecting the FPA from plantar pressure images, which is a single-frame task. A likely reason is that YOLOv4’s backbone modifications optimize accuracy for image-based object detection, especially in single-frame tasks [78], whereas YOLOv5 is advantageous for video-based detection in multi-frame tasks [64]. For example, Zheng et al. detected concealed cracks using YOLOv3, v4, and v5x, and YOLOv4 gave superior predictions in this single-frame task [79]. Furthermore, Andhy et al. applied YOLOv4 to detect waste in images and obtained results that agreed closely with the actual data [62]. Therefore, YOLOv4’s good performance on the FPA of plantar pressure images may be due to the single-frame nature of the task.

The profile-box training results showed that YOLOv4 reached an AP of 99.78% (right foot) to 100.00% (left foot), owing to the characteristics of plantar images, which contain one class and one object per image. Because the labels follow the boundaries of the plantar images, it is easier for YOLO to detect the foot profiles [80]. Our result was similar to that of Gao et al., who used boundary-labeled objects for a robotic arm grasping system in nonlinear and non-Gaussian environments, reported a YOLOv4 AP of 96.70% to 99.50%, and therefore chose YOLOv4 rather than YOLOv3 and YOLOv5 [81].

In addition, the angle-box mAP of YOLOv4 (97.98%) was lower than its profile-box mAP of 99.89% (AP of 100.00% for the left foot and 99.78% for the right foot). Detection of the angle-box may be limited because the angle-box lies inside the pressure image, where the background has a similar color and density to the pressure region. Similar background color and density have also been a problem in detecting clusters of flowers and in detecting the eyes, nose, and mouth in a face. Wu et al. detected apple flowers in natural environments and obtained an mAP of 97.31% with YOLOv4, where the bounding-boxes on the flowers had a background similar in color and density to the flower clusters [11]. Dagher et al. reported that detecting the eyes, nose, and mouth for face recognition was more complex than detecting the whole face [82]. We conclude that YOLO may be particularly good at profile detection.

4.3. Foot Profiles Prediction and Foot Axis Points Distance

Specific markers in two small bounding-boxes could be used to predict the front and rear points of the FPA. However, the two small bounding-boxes at the front and rear would be very similar, and YOLO does not perform well on similar objects in one image [83]. The low performance arises because just two small boxes in the grid are predicted and they belong to new classes of objects within the same category, resulting in abnormal aspect ratios and other problems such as low generalization capacity [84]. For these reasons, we used one bounding-box that includes both the front and rear points to obtain the FPA.

Similar background color and density were problems in the angle-box and may have affected FPA accuracy in object detection. FPA accuracy depends on the two points of the diagonal FPA (the front and rear foot axis points), so the distance between the predicted and ground-truth points needs to be investigated. The distance between the three YOLO model predictions and the ground-truth was larger at the front foot axis point (9.23 to 18.25 pixels) than at the rear foot axis point (7.34 to 12.80 pixels). The front foot axis point lies in a dense area where the pressure background is similar to that of the metatarsophalangeal joints and neighboring bones, which affects detection in the plantar pressure images [85,86]. High density and a similar background can lead to low performance in predicting bounding boxes [87]. In contrast, the rear foot axis point is clearer than the front foot axis point. It receives pressure from the calcaneus, allowing the YOLO model to detect it optimally with its non-maximum suppression feature [88,89]. In addition, the rear foot axis point lies near the boundary of the plantar pressure distribution area, where density is minimal [90]. These results suggest that the front foot axis point, because of the increased density from the metatarsophalangeal joints and neighboring bones, may affect detection of the FPA.

4.4. Limitation in Diagonal FPA Acquisitions

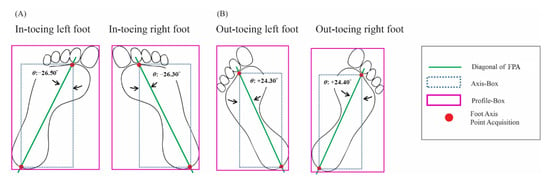

The main limitation of our study was that the analysis dataset contained no in-toeing data. In-toeing is an FPA abnormality that needs further intervention. Although we did not have plantar images with an in-toeing FPA in this study, our standard method can be used to measure out-toeing. Measuring in-toeing, however, requires additions to the “regular FPA detection procedure” in the steps “labeling the foot profile in left and right categories” and “point benchmark acquisition.”

“Labeling the foot profile in left and right categories” needs to be extended to four classes: “labeling the foot profile in left-in-toeing, left-out-toeing, right-in-toeing, and right-out-toeing categories.” To determine foot profiles associated with in-toeing by labeling plantar images, YOLO can perform the first classification to obtain the left and right foot profiles of in-toeing conditions, i.e., left-in-toeing and right-in-toeing. The FPA of an in-toeing foot profile may need to be measured differently from that of an out-toeing profile. For out-toeing, the diagonal FPA acquisition is the same as in the “regular FPA detection procedure.” In contrast, the diagonal FPA acquisition for in-toeing is based on the patient’s normal foot profile (Figure 10). Therefore, it is necessary to classify the foot position before detecting the foot axis points.

Figure 10.

In-toeing and out-toeing foot positions yield different foot axis points. (A) Method for diagonal FPA acquisition in in-toeing. (B) Method for diagonal FPA acquisition in out-toeing.

Furthermore, “point benchmark acquisition” was based on the angle-box. YOLO can detect the 4-corner coordinates of the predicted angle-box through the conversion stage and then acquire the 2-point benchmark according to the direction of the in-toeing foot (Figure 10) [91]. The left and right in-toeing foot profiles determine the front and rear axis points, which define the diagonal FPA used to measure the angle of the FPA [92].
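The step from a predicted angle-box to a signed FPA can be sketched as follows. This is a sketch under stated assumptions, not the authors' implementation: the box is assumed to be given as center and size in pixels, the toes are assumed to point toward the top of the image, the line of progression is assumed to be the vertical image axis, and the mapping from class label to box diagonal (`FRONT_CORNER`) is hypothetical. In-toeing is returned as a negative angle, in line with the convention that in-toeing corresponds to FPAs below 0°.

```python
import math

# Hypothetical mapping: which box corner holds the front foot axis point.
# Image coordinates are assumed: x grows rightward, y grows downward.
FRONT_CORNER = {
    "left-out-toeing": "top-left",
    "left-in-toeing": "top-right",
    "right-out-toeing": "top-right",
    "right-in-toeing": "top-left",
}
# The rear foot axis point is taken as the diagonally opposite corner.
OPPOSITE = {"top-left": "bottom-right", "top-right": "bottom-left"}


def box_corners(xc, yc, w, h):
    """Convert a center/size box into its four corner coordinates."""
    x0, x1 = xc - w / 2, xc + w / 2
    y0, y1 = yc - h / 2, yc + h / 2
    return {
        "top-left": (x0, y0),
        "top-right": (x1, y0),
        "bottom-left": (x0, y1),
        "bottom-right": (x1, y1),
    }


def axis_points(label, xc, yc, w, h):
    """Return the (front, rear) foot axis points for a labeled angle-box."""
    corners = box_corners(xc, yc, w, h)
    front_name = FRONT_CORNER[label]
    return corners[front_name], corners[OPPOSITE[front_name]]


def fpa_degrees(label, xc, yc, w, h):
    """Angle between the box diagonal and the vertical axis, in degrees."""
    front, rear = axis_points(label, xc, yc, w, h)
    dx = abs(front[0] - rear[0])
    dy = abs(front[1] - rear[1])
    angle = math.degrees(math.atan2(dx, dy))
    _side, toeing = label.split("-", 1)  # e.g. "left", "in-toeing"
    return -angle if toeing == "in-toeing" else angle
```

For example, `fpa_degrees("right-out-toeing", 50, 100, 10, 100)` evaluates the diagonal of a box 10 pixels wide and 100 pixels tall, that is, atan(10/100) expressed in degrees. Classifying the foot position first, as described above, is what selects the diagonal in this sketch.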

In addition, a larger validation set of more than 3500 images may increase YOLO performance [93]. However, the current study, which used a small-scale validation set of fewer than 350 images, showed good performance [42]. Thus, a small-scale validation set of plantar pressure images was sufficient to achieve suitable YOLO performance in this study.

5. Conclusions

This study compared three YOLO models to find a suitable model for detecting the FPA. YOLOv4 showed superior results in detecting the left and right foot profiles. Deep learning with YOLOv4 has the advantage of improving predictions of the FPA without significant differences from the ground truth. In addition, YOLOv4 has a reliable detection accuracy of the FPA from plantar pressure images. FPA accuracy may be affected mainly by the front foot axis point. Precise detection of the FPA can provide information on in-toeing, out-toeing, and rearfoot kinematics for evaluating the effect of physical therapy programs on knee pain and knee osteoarthritis.

Author Contributions

Conceptualization, P.A. and C.-W.L.; methodology, C.-Y.L. and J.-Y.T.; writing—original draft preparation, P.A. and R.B.R.S.; writing—review and editing, C.-W.L. and V.B.H.A.; supervision, B.-Y.L. and Y.-K.J. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by a grant from the Ministry of Science and Technology of the Republic of China (MOST 110-2221-E-468-005, MOST 110-2637-E-241-002, and MOST 110-2221-E-155-039-MY3). The funding agency was not involved in data collection, data analysis, or data interpretation.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Acknowledgments

Data collection and sharing for this project were funded by SHUI-MU International Co., Ltd., Taiwan; the data are available on the AIdea platform (https://aidea-web.tw (accessed on 13 March 2022)) provided by the Industrial Technology Research Institute (ITRI) of Taiwan. The authors wish to express gratitude to Sunardi, Fahni Haris, Jifeng Wang, Taufiq Ismail, Jovi Sulistiawan, Wei-Cheng Shen, and Quanxin Lin for their assistance.

Conflicts of Interest

The authors declare that they have no conflict of interest.

References

- Ramirez-Bautista, J.A.; Hernández-Zavala, A.; Chaparro-Cárdenas, S.L.; Huerta-Ruelas, J.A. Review on plantar data analysis for disease diagnosis. Biocybern. Biomed. Eng. 2018, 38, 342–361.

- Rai, D.; Aggarwal, L. The study of plantar pressure distribution in normal and pathological foot. Pol. J. Med. Phys. Eng. 2006, 12, 25–34.

- Wafai, L.; Zayegh, A.; Woulfe, J.; Aziz, S.M.; Begg, R. Identification of foot pathologies based on plantar pressure asymmetry. Sensors 2015, 15, 20392–20408.

- Buldt, A.K.; Allan, J.J.; Landorf, K.B.; Menz, H.B. The relationship between foot posture and plantar pressure during walking in adults: A systematic review. Gait Posture 2018, 62, 56–67.

- Simic, M.; Wrigley, T.; Hinman, R.; Hunt, M.; Bennell, K. Altering foot progression angle in people with medial knee osteoarthritis: The effects of varying toe-in and toe-out angles are mediated by pain and malalignment. Osteoarthr. Cartil. 2013, 21, 1272–1280.

- Lösel, S.; Burgess-Milliron, M.J.; Micheli, L.J.; Edington, C.J. A simplified technique for determining foot progression angle in children 4 to 16 years of age. J. Pediatr. Orthop. 1996, 16, 570–574.

- Huang, Y.; Jirattigalachote, W.; Cutkosky, M.R.; Zhu, X.; Shull, P.B. Novel foot progression angle algorithm estimation via foot-worn, magneto-inertial sensing. IEEE Trans. Biomed. Eng. 2016, 63, 2278–2285.

- Yan, S.-h.; Zhang, K.; Tan, G.-q.; Yang, J.; Liu, Z.-c. Effects of obesity on dynamic plantar pressure distribution in Chinese prepubescent children during walking. Gait Posture 2013, 37, 37–42.

- Tokunaga, K.; Nakai, Y.; Matsumoto, R.; Kiyama, R.; Kawada, M.; Ohwatashi, A.; Fukudome, K.; Ohshige, T.; Maeda, T. Effect of foot progression angle and lateral wedge insole on a reduction in knee adduction moment. J. Appl. Biomech. 2016, 32, 454–461.

- Lerch, T.D.; Eichelberger, P.; Baur, H.; Schmaranzer, F.; Liechti, E.F.; Schwab, J.M.; Siebenrock, K.A.; Tannast, M. Prevalence and diagnostic accuracy of in-toeing and out-toeing of the foot for patients with abnormal femoral torsion and femoroacetabular impingement: Implications for hip arthroscopy and femoral derotation osteotomy. Bone Jt. J. 2019, 101, 1218–1229.

- Wu, D.; Lv, S.; Jiang, M.; Song, H. Using channel pruning-based YOLO v4 deep learning algorithm for the real-time and accurate detection of apple flowers in natural environments. Comput. Electron. Agric. 2020, 178, 105742.

- Müller, M.; Schwachmeyer, V.; Tohtz, S.; Taylor, W.R.; Duda, G.N.; Perka, C.; Heller, M.O. The direct lateral approach: Impact on gait patterns, foot progression angle and pain in comparison with a minimally invasive anterolateral approach. Arch. Orthop. Trauma Surg. 2012, 132, 725–731.

- Ulrich, B.; Edd, S.; Bennour, S.; Jolles, B.; Favre, J. Ranges of modifications in step width and foot progression angle for everyday walking. Ann. Phys. Rehabil. Med. 2018, 61, e485.

- Chen, D.K.; Haller, M.; Besier, T.F. Wearable lower limb haptic feedback device for retraining foot progression angle and step width. Gait Posture 2017, 55, 177–183.

- Mouri, H.; Kim, W.-C.; Arai, Y.; Yoshida, T.; Oka, Y.; Ikoma, K.; Fujiwara, H.; Kubo, T. Effectiveness of medial-wedge insoles for children with intoeing gait who fall easily. Turk. J. Phys. Med. Rehabil. 2019, 65, 9.

- Xia, H.; Xu, J.; Wang, J.; Hunt, M.A.; Shull, P.B. Validation of a smart shoe for estimating foot progression angle during walking gait. J. Biomech. 2017, 61, 193–198.

- Young, J.; Simic, M.; Simic, M. A Novel foot Progression Angle Detection Method. In Computer Vision in Control Systems-4; Springer: Berlin/Heidelberg, Germany, 2018; pp. 299–317.

- Ranawat, A.S.; Gaudiani, M.A.; Slullitel, P.A.; Satalich, J.; Rebolledo, B.J. Foot progression angle walking test: A dynamic diagnostic assessment for femoroacetabular impingement and hip instability. Orthop. J. Sports Med. 2017, 5, 2325967116679641.

- Kim, H.-j.; Park, I.; Lee, H.-j.; Lee, O. The reliability and validity of gait speed with different walking pace and distances against general health, physical function, and chronic disease in aged adults. J. Exerc. Nutr. Biochem. 2016, 20, 46.

- Rutherford, D.; Hubley-Kozey, C.; Deluzio, K.; Stanish, W.; Dunbar, M. Foot progression angle and the knee adduction moment: A cross-sectional investigation in knee osteoarthritis. Osteoarthr. Cartil. 2008, 16, 883–889.

- Lai, Y.-C.; Lin, H.-S.; Pan, H.-F.; Chang, W.-N.; Hsu, C.-J.; Renn, J.-H. Impact of foot progression angle on the distribution of plantar pressure in normal children. Clin. Biomech. 2014, 29, 196–200.

- Tareco, J.M.; Miller, N.H.; MacWilliams, B.A.; Michelson, J.D. Defining flatfoot. Foot Ankle Int. 1999, 20, 456–460.

- Kernozek, T.W.; Ricard, M.D. Foot placement angle and arch type: Effect on rearfoot motion. Arch. Phys. Med. Rehabil. 1990, 71, 988–991.

- Mousavi, S.H.; van Kouwenhove, L.; Rajabi, R.; Zwerver, J.; Hijmans, J.M. The effect of changing foot progression angle using real-time visual feedback on rearfoot eversion during running. PLoS ONE 2021, 16, e0246425.

- Hertel, J.; Gay, M.R.; Denegar, C.R. Differences in postural control during single-leg stance among healthy individuals with different foot types. J. Athl. Train. 2002, 37, 129.

- Painceira-Villar, R.; García-Paz, V.; Becerro de Bengoa-Vallejo, R.; Losa-Iglesias, M.E.; López-López, D.; Martiniano, J.; Pereiro-Buceta, H.; Martínez-Jiménez, E.M.; Calvo-Lobo, C. Impact of Asthma on Plantar Pressures in a Sample of Adult Patients: A Case-Control Study. J. Pers. Med. 2021, 11, 1157.

- Charlton, J.M.; Xia, H.; Shull, P.B.; Hunt, M.A. Validity and reliability of a shoe-embedded sensor module for measuring foot progression angle during over-ground walking. J. Biomech. 2019, 89, 123–127.

- Franklyn-Miller, A.; Bilzon, J.; Wilson, C.; McCrory, P. Can RSScan footscan® D3D™ software predict injury in a military population following plantar pressure assessment? A prospective cohort study. Foot 2014, 24, 6–10.

- Hua, X.; Ono, Y.; Peng, L.; Cheng, Y.; Wang, H. Target detection within nonhomogeneous clutter via total bregman divergence-based matrix information geometry detectors. IEEE Trans. Signal. Process. 2021, 69, 4326–4340.

- Li, H.; Wang, F.; Zeng, C.; Govoni, M.A. Signal Detection in Distributed MIMO Radar With Non-Orthogonal Waveforms and Sync Errors. IEEE Trans. Signal. Process. 2021, 69, 3671–3684.

- Im Yi, T.; Lee, G.E.; Seo, I.S.; Huh, W.S.; Yoon, T.H.; Kim, B.R. Clinical characteristics of the causes of plantar heel pain. Ann. Rehabil. Med. 2011, 35, 507.

- Liu, W.; Xiao, Y.; Wang, X.; Deng, F. Plantar Pressure Detection System Based on Flexible Hydrogel Sensor Array and WT-RF. Sensors 2021, 21, 5964.

- Hagan, M.; Teodorescu, H.-N. Sensors for foot plantar pressure signal acquisition. In Proceedings of the 2021 International Symposium on Signals, Circuits and Systems (ISSCS), Iasi, Romania, 15–16 July 2021; pp. 1–4.

- Caderby, T.; Begue, J.; Dalleau, G.; Peyrot, N. Measuring Foot Progression Angle during Walking Using Force-Plate Data. Appl. Mech. 2022, 3, 174–181.

- Nieuwenhuys, A.; Papageorgiou, E.; Desloovere, K.; Molenaers, G.; De Laet, T. Statistical parametric mapping to identify differences between consensus-based joint patterns during gait in children with cerebral palsy. PLoS ONE 2017, 12, e0169834.

- Xia, H.; Charlton, J.M.; Shull, P.B.; Hunt, M.A. Portable, automated foot progression angle gait modification via a proof-of-concept haptic feedback-sensorized shoe. J. Biomech. 2020, 107, 109789.

- Su, K.-H.; Kaewwichit, T.; Tseng, C.-H.; Chang, C.-C. Automatic footprint detection approach for the calculation of arch index and plantar pressure in a flat rubber pad. Multimed. Tools Appl. 2016, 75, 9757–9774.

- Schelhaas, R.; Hajibozorgi, M.; Hortobágyi, T.; Hijmans, J.M.; Greve, C. Conservative interventions to improve foot progression angle and clinical measures in orthopedic and neurological patients–A systematic review and meta-analysis. J. Biomech. 2022, 130, 110831.

- Chang, A.; Hurwitz, D.; Dunlop, D.; Song, J.; Cahue, S.; Hayes, K.; Sharma, L. The relationship between toe-out angle during gait and progression of medial tibiofemoral osteoarthritis. Ann. Rheum. Dis. 2007, 66, 1271–1275.

- Yang, W.; Jiachun, Z. Real-time face detection based on YOLO. In Proceedings of the 2018 1st IEEE International Conference on Knowledge Innovation and Invention (ICKII), Jeju, Korea, 23–27 July 2018; pp. 221–224.

- Ardhianto, P.; Tsai, J.-Y.; Lin, C.-Y.; Liau, B.-Y.; Jan, Y.-K.; Akbari, V.B.H.; Lung, C.-W. A Review of the Challenges in Deep Learning for Skeletal and Smooth Muscle Ultrasound Images. Appl. Sci. 2021, 11, 4021.

- Tsai, J.-Y.; Hung, I.Y.-J.; Guo, Y.L.; Jan, Y.-K.; Lin, C.-Y.; Shih, T.T.-F.; Chen, B.-B.; Lung, C.-W. Lumbar Disc Herniation Automatic Detection in Magnetic Resonance Imaging Based on Deep Learning. Front. Bioeng. Biotechnol. 2021, 9, 691.

- Chen, H.-C.; Sunardi; Jan, Y.-K.; Liau, B.-Y.; Lin, C.-Y.; Tsai, J.-Y.; Li, C.-T.; Lung, C.-W. Using Deep Learning Methods to Predict Walking Intensity from Plantar Pressure Images. In Proceedings of the International Conference on Applied Human Factors and Ergonomics, Virtual Conference, 25–29 July 2021; pp. 270–277.

- Zhou, T.; Ruan, S.; Canu, S. A review: Deep learning for medical image segmentation using multi-modality fusion. Array 2019, 3, 100004.

- Zhao, Z.-Q.; Zheng, P.; Xu, S.-T.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

- Shaodan, L.; Chen, F.; Zhide, C. A ship target location and mask generation algorithms base on mask rcnn. Int. J. Comput. Intell. Syst. 2019, 12, 1134–1143.

- Tao, J.; Wang, H.; Zhang, X.; Li, X.; Yang, H. An object detection system based on YOLO in traffic scene. In Proceedings of the 2017 6th International Conference on Computer Science and Network Technology (ICCSNT), Dalian, China, 21–22 October 2017; pp. 315–319.

- Kim, J.-A.; Sung, J.-Y.; Park, S.-H. Comparison of Faster-RCNN, YOLO, and SSD for real-time vehicle type recognition. In Proceedings of the 2020 IEEE International Conference on Consumer Electronics-Asia (ICCE-Asia), Seoul, Korea, 1–3 November 2020; pp. 1–4.

- Zhuang, Z.; Liu, G.; Ding, W.; Raj, A.N.J.; Qiu, S.; Guo, J.; Yuan, Y. Cardiac VFM visualization and analysis based on YOLO deep learning model and modified 2D continuity equation. Comput. Med. Imaging Graph. 2020, 82, 101732.

- Li, G.; Song, Z.; Fu, Q. A new method of image detection for small datasets under the framework of YOLO network. In Proceedings of the 2018 IEEE 3rd Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 12–14 October 2018; pp. 1031–1035.

- Ahmad, T.; Ma, Y.; Yahya, M.; Ahmad, B.; Nazir, S. Object detection through modified YOLO neural network. Sci. Program. 2020, 2020, 8403262.

- Ünver, H.M.; Ayan, E. Skin lesion segmentation in dermoscopic images with combination of YOLO and grabcut algorithm. Diagnostics 2019, 9, 72.

- Baccouche, A.; Garcia-Zapirain, B.; Olea, C.C.; Elmaghraby, A.S. Breast lesions detection and classification via yolo-based fusion models. Comput. Mater. Contin. 2021, 69, 1407–1425.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Mousavi, S.H.; van Kouwenhove, L.; Rajabi, R.; Zwerver, J.; Hijmans, J.M. The effect of changing mediolateral center of pressure on rearfoot eversion during treadmill running. Gait Posture 2021, 83, 201–209.

- Zhou, H.; Li, L.; Liu, Z.; Zhao, K.; Chen, X.; Lu, M.; Yin, G.; Song, L.; Zhao, S.; Zheng, H. Deep learning algorithm to improve hypertrophic cardiomyopathy mutation prediction using cardiac cine images. Eur. Radiol. 2021, 31, 3931–3940.

- He, Z.; Liu, T.; Yi, J. A wearable sensing and training system: Towards gait rehabilitation for elderly patients with knee osteoarthritis. IEEE Sens. J. 2019, 19, 5936–5945.

- Fu, Q.; Chen, Y.; Li, Z.; Jing, Q.; Hu, C.; Liu, H.; Bao, J.; Hong, Y.; Shi, T.; Li, K. A deep learning algorithm for detection of oral cavity squamous cell carcinoma from photographic images: A retrospective study. EClinicalMedicine 2020, 27, 100558.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Kumar, C.; Punitha, R. YOLOv3 and YOLOv4: Multiple Object Detection for Surveillance Applications. In Proceedings of the 2020 Third International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 20–22 August 2020; pp. 1316–1321.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Andhy Panca Saputra, K. Waste Object Detection and Classification using Deep Learning Algorithm: YOLOv4 and YOLOv4-tiny. Turk. J. Comput. Math. Educ. (TURCOMAT) 2021, 12, 5583–5595.

- Zhou, F.; Zhao, H.; Nie, Z. Safety Helmet Detection Based on YOLOv5. In Proceedings of the 2021 IEEE International Conference on Power Electronics, Computer Applications (ICPECA), Shenyang, China, 22–24 January 2021; pp. 6–11.

- Xiaoping, Z.; Jiahui, J.; Li, W.; Zhonghe, H.; Shida, L. People’s Fast Moving Detection Method in Buses Based on YOLOv5. Int. J. Sens. Sens. Netw. 2021, 9, 30.

- Du, J. Understanding of object detection based on CNN family and YOLO. In Proceedings of the Journal of Physics: Conference Series, Hong Kong, China, 23–25 February 2018; p. 012029.

- Gandhi, J.; Jain, P.; Kurup, L. YOLO Based Recognition of Indian License Plates. In Advanced Computing Technologies and Applications; Springer Singapore: Singapore, 2020; pp. 411–421.

- Herschel, J.F.W. On a remarkable application of Cotes’s theorem. Philos. Trans. R. Soc. Lond. 1813, 103, 8–26.

- Weisstein, E.W. Pythagorean Theorem. Available online: https://mathworld.wolfram.com/ (accessed on 13 March 2022).

- Nasirahmadi, A.; Sturm, B.; Edwards, S.; Jeppsson, K.-H.; Olsson, A.-C.; Müller, S.; Hensel, O. Deep learning and machine vision approaches for posture detection of individual pigs. Sensors 2019, 19, 3738.

- Li, S.; Gu, X.; Xu, X.; Xu, D.; Zhang, T.; Liu, Z.; Dong, Q. Detection of concealed cracks from ground penetrating radar images based on deep learning algorithm. Constr. Build. Mater. 2021, 273, 121949.

- Zhao-zhao, J.; Yu-fu, Z. Research on Application of Improved YOLO V3 Algorithm in Road Target Detection. In Proceedings of the Journal of Physics: Conference Series, Xi’an, China, 28–30 August 2020; p. 012060.

- Lan, W.; Dang, J.; Wang, Y.; Wang, S. Pedestrian detection based on YOLO network model. In Proceedings of the 2018 IEEE International Conference on Mechatronics and Automation (ICMA), Changchun, China, 5–8 August 2018; pp. 1547–1551.

- Lee, Y.S.; Kim, M.G.; Byun, H.W.; Kim, S.B.; Kim, J.G. Reliability of the imaging software in the preoperative planning of the open-wedge high tibial osteotomy. Knee Surg. Sports Traumatol. Arthrosc. 2015, 23, 846–851.

- Galbusera, F.; Niemeyer, F.; Wilke, H.-J.; Bassani, T.; Casaroli, G.; Anania, C.; Costa, F.; Brayda-Bruno, M.; Sconfienza, L.M. Fully automated radiological analysis of spinal disorders and deformities: A deep learning approach. Eur. Spine J. 2019, 28, 951–960.

- Alharbi, R.H.; Alshaye, M.B.; Alkanhal, M.M.; Alharbi, N.M.; Alzahrani, M.A.; Alrehaili, O.A. Deep Learning Based Algorithm For Automatic Scoliosis Angle Measurement. In Proceedings of the 2020 3rd International Conference on Computer Applications & Information Security (ICCAIS), Irbid, Jordan, 7–9 April 2020; pp. 1–5.

- Hernandez, V.; Dadkhah, D.; Babakeshizadeh, V.; Kulić, D. Lower body kinematics estimation from wearable sensors for walking and running: A deep learning approach. Gait Posture 2021, 83, 185–193.

- Pei, Y.; Yang, W.; Wei, S.; Cai, R.; Li, J.; Guo, S.; Li, Q.; Wang, J.; Li, X. Automated measurement of hip–knee–ankle angle on the unilateral lower limb X-rays using deep learning. Phys. Eng. Sci. Med. 2021, 44, 53–62.

- Jiang, J.; Fu, X.; Qin, R.; Wang, X.; Ma, Z. High-Speed Lightweight Ship Detection Algorithm Based on YOLO-V4 for Three-Channels RGB SAR Image. Remote. Sens. 2021, 13, 1909.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2020; pp. 12993–13000.

- Jeong, H.-J.; Park, K.-S.; Ha, Y.-G. Image preprocessing for efficient training of YOLO deep learning networks. In Proceedings of the 2018 IEEE International Conference on Big Data and Smart Computing (BigComp), Shanghai, China, 15 January 2018; pp. 635–637.

- Gao, M.; Cai, Q.; Zheng, B.; Shi, J.; Ni, Z.; Wang, J.; Lin, H. A Hybrid YOLO v4 and Particle Filter Based Robotic Arm Grabbing System in Nonlinear and Non-Gaussian Environment. Electronics 2021, 10, 1140.

- Dagher, I.; Al-Bazzaz, H. Improving the component-based face recognition using enhanced viola–jones and weighted voting technique. Model. Simul. Eng. 2019, 2019, 8234124.

- Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of Yolo Algorithm Developments. Procedia Comput. Sci. 2022, 199, 1066–1073.

- Qiao, X.; Zhu, W.; Guo, D.; Jiang, T.; Chang, X.; Zhou, Y.; Zhu, D.; Cao, N. Design of Abnormal Behavior Detection System in the State Grid Business Office. In Proceedings of the International Conference on Artificial Intelligence and Security, Dublin, Ireland, 19–23 July 2021; pp. 510–520.

- Day, E.M.; Hahn, M.E. A comparison of metatarsophalangeal joint center locations on estimated joint moments during running. J. Biomech. 2019, 86, 64–70.

- Hu, X.; Liu, Y.; Zhao, Z.; Liu, J.; Yang, X.; Sun, C.; Chen, S.; Li, B.; Zhou, C. Real-time detection of uneaten feed pellets in underwater images for aquaculture using an improved YOLO-V4 network. Comput. Electron. Agric. 2021, 185, 106135.

- Yap, M.H.; Hachiuma, R.; Alavi, A.; Brüngel, R.; Cassidy, B.; Goyal, M.; Zhu, H.; Rückert, J.; Olshansky, M.; Huang, X. Deep learning in diabetic foot ulcers detection: A comprehensive evaluation. Comput. Biol. Med. 2021, 135, 104596.

- Yu, J.; Zhang, W. Face mask wearing detection algorithm based on improved YOLO-v4. Sensors 2021, 21, 3263.

- Yu, T.; Yang, Y.; Li, B.; Sah, S.; Chen, K.; Yu, G. Importance of assistant intra-operative medial distraction technique for intraarticular calcaneus fractures. Acta Orthop. Belg. 2019, 85, 130–136.

- Abd-Elaziz, H.; Rahman, S.A.; Olama, K.; Thabet, N.; El-Din, S.N. Correlation between Foot Progression Angle and Balance in Cerebral Palsied Children. Trends Appl. Sci. Res. 2015, 10, 54.

- Chen, R.-C. Automatic License Plate Recognition via sliding-window darknet-YOLO deep learning. Image Vis. Comput. 2019, 87, 47–56.

- Naraghi, R.; Slack-Smith, L.; Bryant, A. Plantar pressure measurements and geometric analysis of patients with and without Morton’s neuroma. Foot Ankle Int. 2018, 39, 829–835.

- Ling, X.; Liang, J.; Wang, D.; Yang, J. A Facial Expression Recognition System for Smart Learning Based on YOLO and Vision Transformer. In Proceedings of the 2021 7th International Conference on Computing and Artificial Intelligence, Tianjin, China, 23–26 April 2021; pp. 178–182.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).