Intercomparison of PurpleAir Sensor Performance over Three Years Indoors and Outdoors at a Home: Bias, Precision, and Limit of Detection Using an Improved Algorithm for Calculating PM2.5

Abstract

1. Introduction

2. Materials and Methods

The ALT-CF3 Algorithm

3. Results

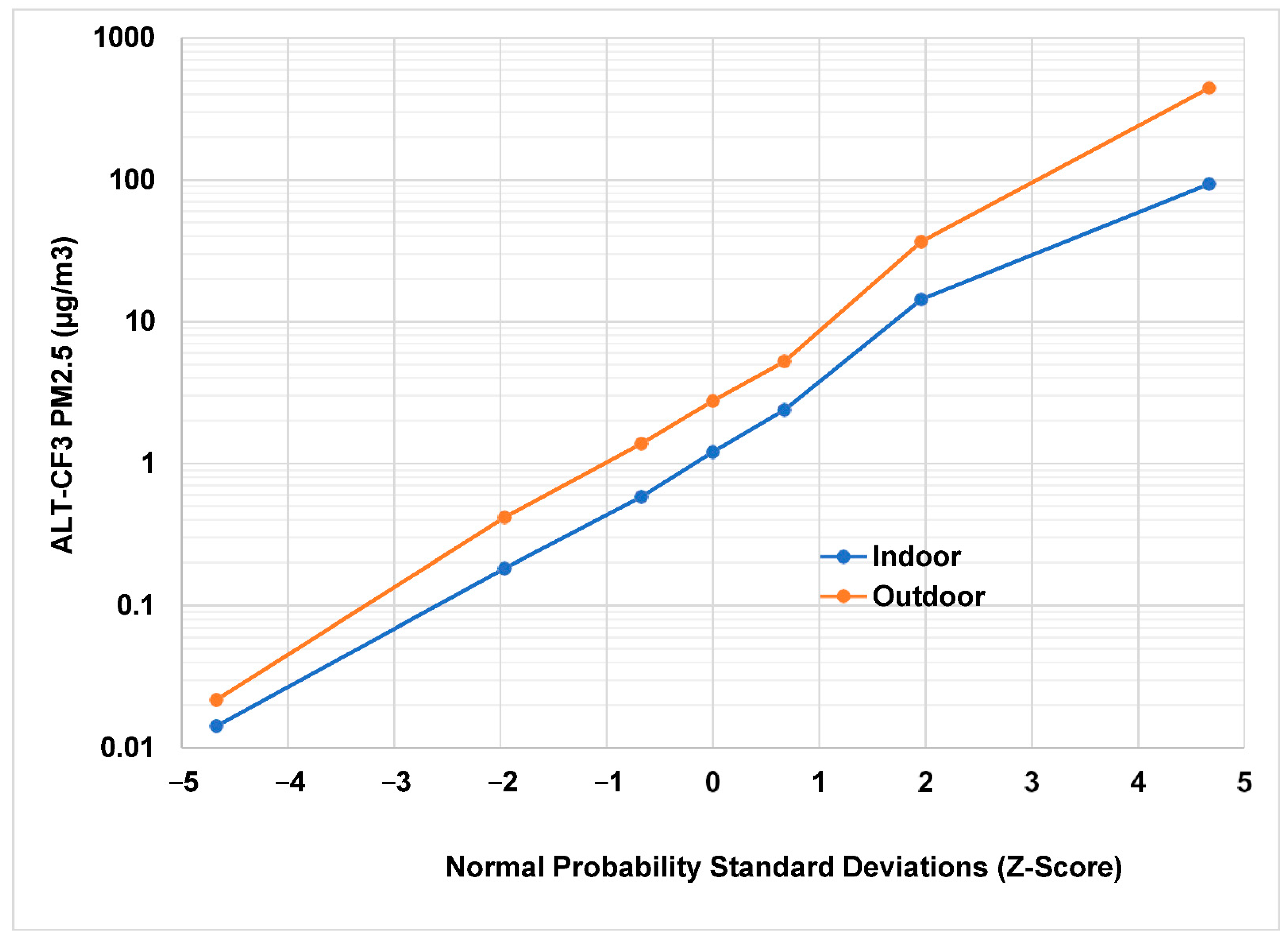

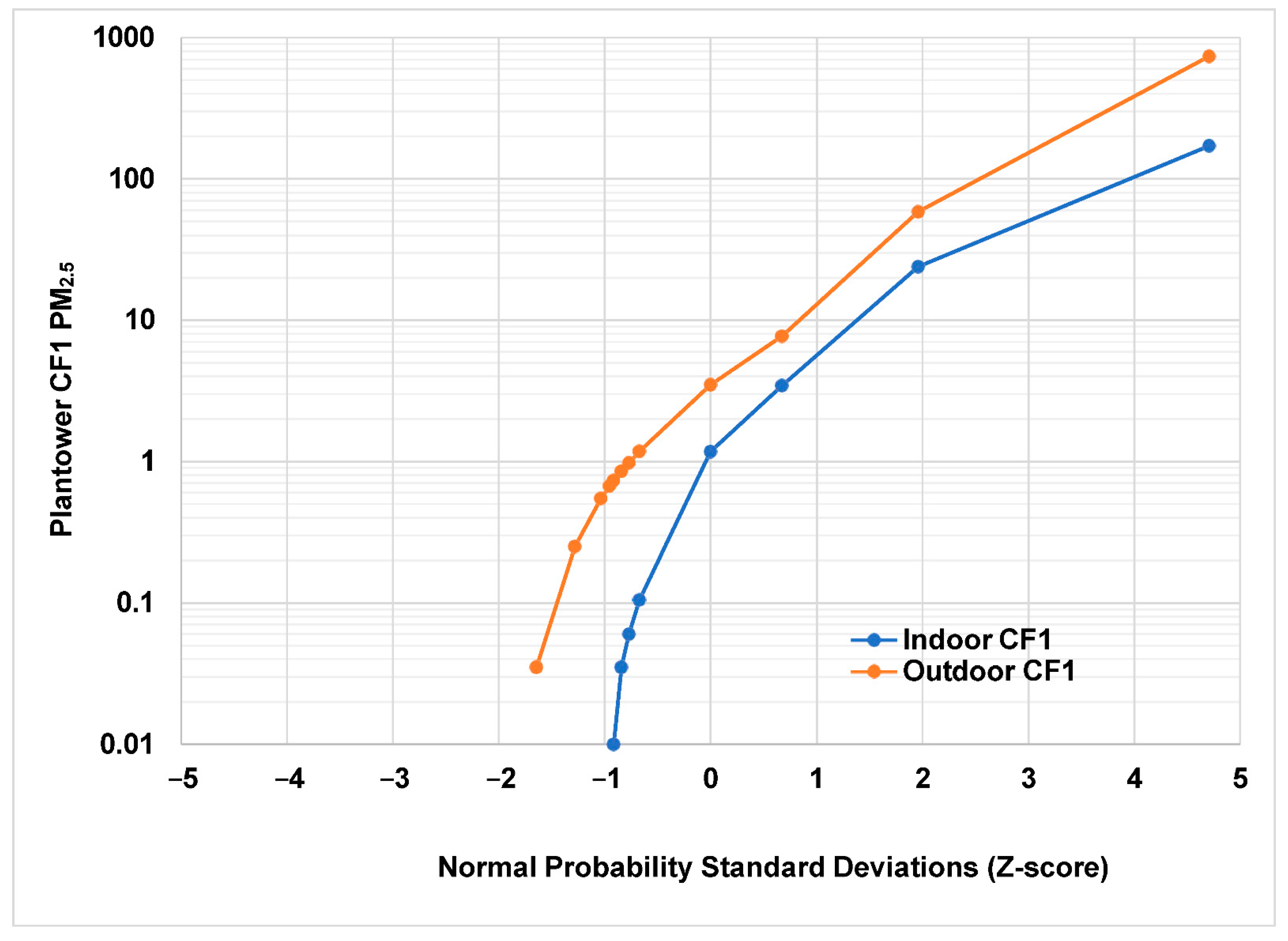

3.1. Relative Bias

Comparison with FEM Bias

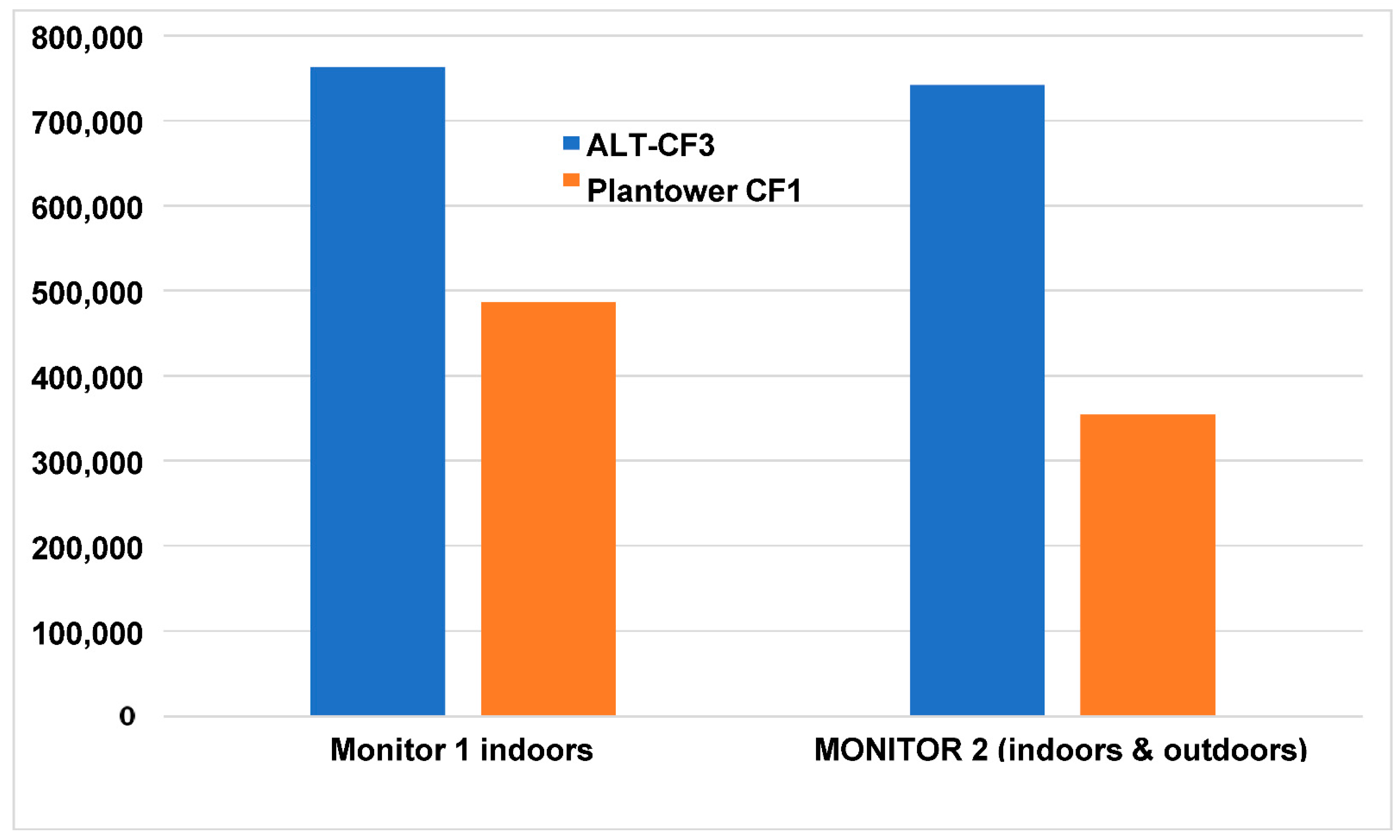

3.2. Precision

3.3. PM2.5 Concentrations of Zero

3.4. Variation of Precision over Time

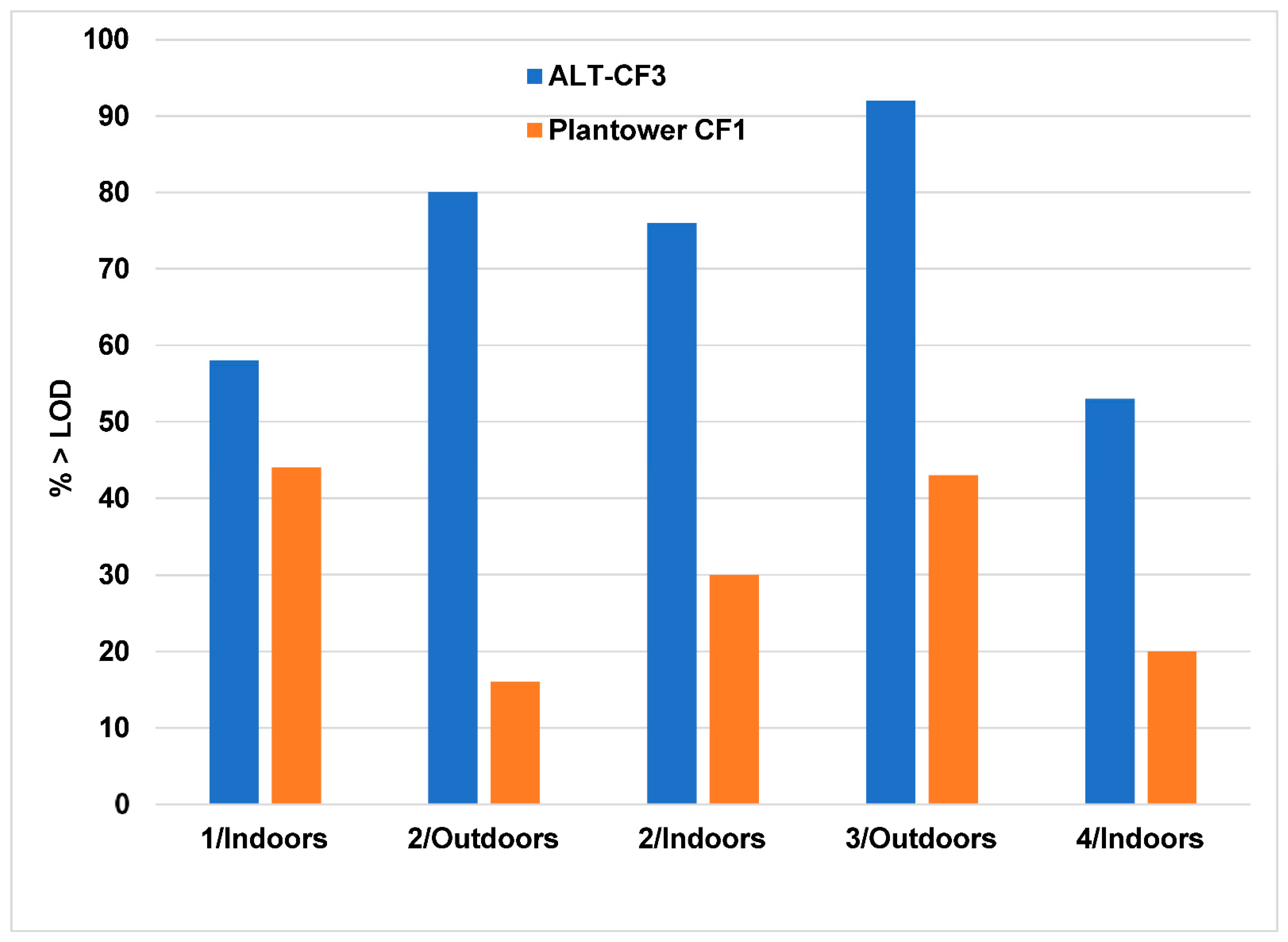

3.5. Limit of Detection (LOD)

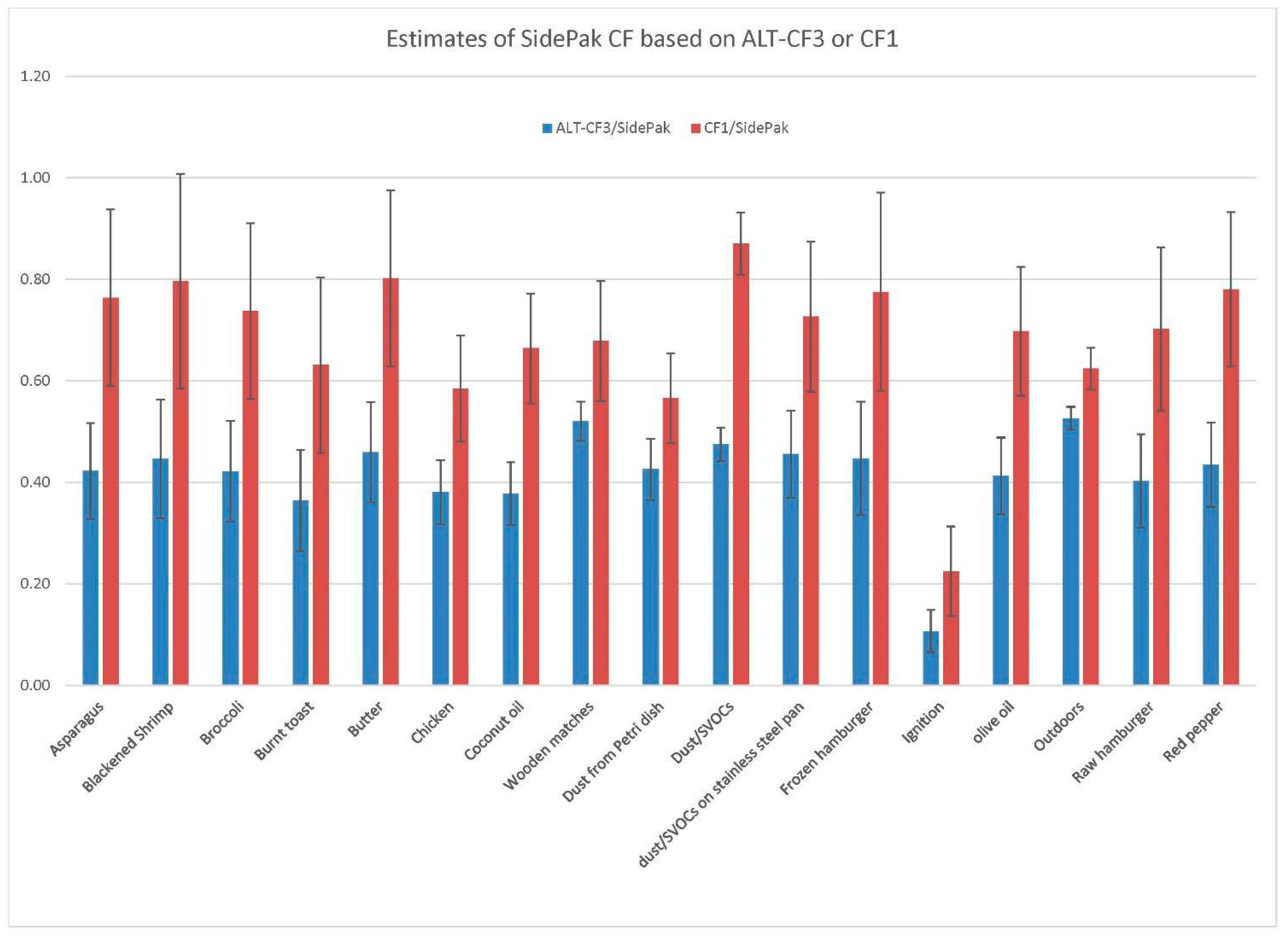

3.6. Comparison with Co-Located Research-Grade SidePak Monitors

3.7. Limitations

4. Discussion

“The evaluated versions of the AirBeam, AirVisual, Foobot, and Purple Air II monitors were of sufficient accuracy and reliability in detecting large sources that they appear suitable for measurement-based control to reduce exposures to PM2.5 mass in homes. The logical next steps in evaluating these monitors are to study their performance in occupied homes and to quantify their performance after months of deployment.”

5. Conclusions

Supplementary Materials

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- AQ-SPEC. Field Evaluation Purple Air PM Sensor. 2016. Available online: http://www.aqmd.gov/docs/default-source/aq-spec/field-evaluations/purpleair---field-evaluation.pdf (accessed on 30 March 2022).

- He, M.; Kuerbanjiang, N.; Dhaniyala, S. Performance characteristics of the low-cost Plantower PMS optical sensor. Aerosol Sci. Technol. 2020, 54, 232–241.

- Kelly, K.E.; Whitaker, J.; Petty, A.; Widmer, C.; Dybwad, A.; Sleeth, D.; Martin, R.; Butterfield, A. Ambient and laboratory evaluation of a low-cost particulate matter sensor. Environ. Pollut. 2017, 221, 491–500.

- Singer, B.C.; Delp, W.W. Response of consumer and research grade indoor air quality monitors to residential sources of fine particles. Indoor Air 2018, 28, 624–639.

- Tryner, J.; Quinn, C.; Windom, B.C.; Volckens, J. Design and evaluation of a portable PM2.5 monitor featuring a low-cost sensor in line with an active filter sampler. Environ. Sci. Process. Impacts 2019, 21, 1403–1415.

- Wang, Z.; Delp, W.W.; Singer, B.C. Performance of low-cost indoor air quality monitors for PM2.5 and PM10 from residential sources. Build. Environ. 2020, 171, 106654.

- Bi, J.; Wildani, A.; Chang, H.H.; Liu, Y. Incorporating low-cost sensor measurements into high-resolution PM2.5 modeling at a large spatial scale. Environ. Sci. Technol. 2020, 54, 2152–2162.

- Gupta, P.; Doraiswamy, P.; Levy, R.; Pikelnaya, O.; Maibach, J.; Feenstra, B.; Polidori, A.; Kiros, F.; Mills, K.C. Impact of California fires on local and regional air quality: The role of a low-cost sensor network and satellite observations. GeoHealth 2018, 2, 172–181.

- Levy Zamora, M.; Xiong, F.; Gentner, D.; Kerkez, B.; Kohrman-Glaser, J.; Koehler, K. Field and laboratory evaluations of the low-cost Plantower particulate matter sensor. Environ. Sci. Technol. 2018, 53, 838–849.

- Magi, B.I.; Cupini, C.; Francis, J.; Green, M.; Hauser, C. Evaluation of PM2.5 measured in an urban setting using a low-cost optical particle counter and a Federal Equivalent Method Beta Attenuation Monitor. Aerosol Sci. Technol. 2019, 54, 147–159.

- Sayahi, T.; Butterfield, A.; Kelly, K.E. Long-term field evaluation of the Plantower PMS low-cost particulate matter sensors. Environ. Pollut. 2019, 245, 932–940.

- Zusman, M.; Schumacher, C.S.; Gassett, A.J.; Spalt, E.W.; Austin, E.; Larson, T.V.; Carvlin, G.C.; Seto, E.; Kaufman, J.D.; Sheppard, L. Calibration of low-cost particulate matter sensors: Model development for a multi-city epidemiological study. Environ. Int. 2020, 134, 105329.

- US EPA. 2017. Available online: https://www.epa.gov/air-sensor-toolbox/how-use-air-sensors-air-sensor-guidebook (accessed on 30 March 2022).

- Jayaratne, R.; Liu, X.; Ahn, K.H.; Asumadu-Sakyi, A.; Fisher, G.; Gao, J.; Mabon, A.; Mazaheri, M.; Mullins, B.; Nyaku, M.; et al. Low-cost PM2.5 sensors: An assessment of their suitability for various applications. Aerosol Air Qual. Res. 2020, 20, 520–532.

- Bi, J.; Wallace, L.; Sarnat, J.A.; Liu, Y. Characterizing outdoor infiltration and indoor contribution of PM2.5 with citizen-based low-cost monitoring data. Environ. Pollut. 2021, 276, 116763.

- Kaduwela, A.P.; Kaduwela, A.P.; Jrade, E.; Brusseau, M.; Morris, S.; Morris, J.; Risk, V. Development of a low-cost air sensor package and indoor air quality monitoring in a California middle school: Detection of a distant wildfire. J. Air Waste Manag. Assoc. 2019, 69, 1015–1022.

- Klepeis, N.E.; Bellettiere, J.; Hughes, S.C.; Nguyen, B.; Berardi, V.; Liles, S.; Obayashi, S.; Hofstetter, C.R.; Blumberg, E.; Hovell, M.F. Fine particles in homes of predominantly low-income families with children and smokers: Key physical and behavioral determinants to inform indoor-air-quality interventions. PLoS ONE 2017, 12, e0177718.

- Wallace, L.A.; Wheeler, A.; Kearney, J.; Van Ryswyk, K.; You, H.; Kulka, R.; Rasmussen, P.; Brook, J.; Xu, X. Validation of continuous particle monitors for personal, indoor, and outdoor exposures. J. Expo. Sci. Environ. Epidemiol. 2010, 21, 49–64.

- Wallace, L.A.; Ott, W.R.; Zhao, T.; Cheng, K.-C.; Hildemann, L. Secondhand exposure from vaping marijuana: Concentrations, emissions, and exposures determined using both research-grade and low-cost monitors. Atmos. Environ. X 2020, 8, 100093. Available online: https://www.sciencedirect.com/science/article/pii/S2590162120300332?via%3Dihub (accessed on 9 January 2022).

- Wang, K.; Chen, F.E.; Au, W.; Zhao, Z.; Xia, Z.-L. Evaluating the feasibility of a personal particle exposure monitor in outdoor and indoor microenvironments in Shanghai, China. Int. J. Environ. Health Res. 2019, 29, 209–220.

- Zheng, T.; Bergin, M.H.; Johnson, K.K.; Tripathi, S.N.; Shirodkar, S.; Landis, M.S.; Sutaria, R.; Carlson, D.E. Field evaluation of low-cost particulate matter sensors in high- and low-concentration environments. Atmos. Meas. Tech. 2018, 11, 4823–4846.

- Badura, M.; Batog, P.; Drzeniecka-Osiadacz, A.; Modzel, P. Evaluation of low-cost sensors for ambient PM2.5 monitoring. J. Sens. 2018, 2018, 5096540.

- Klepeis, N.E.; Nelson, W.C.; Ott, W.R.; Robinson, J.; Tsang, A.M.; Switzer, P.; Behar, J.V.; Hern, S.; Engelmann, W. The National Human Activity Pattern Survey (NHAPS): A resource for assessing exposure to environmental pollutants. J. Expo. Sci. Environ. Epidemiol. 2001, 11, 231–252.

- Apte, J.S.; Brauer, M.; Cohen, A.J.; Ezzati, M.; Pope, C.A., III. Ambient PM2.5 reduces global and regional life expectancy. Environ. Sci. Technol. Lett. 2018, 5, 546–551.

- Cohen, A.J.; Brauer, M.; Burnett, R.; Anderson, H.R.; Frostad, J.; Estep, K.; Balakrishnan, K.; Brunekreef, B.; Dandona, L.; Dandona, R.; et al. Estimates and 25-year trends of the global burden of disease attributable to ambient air pollution: An analysis of data from the Global Burden of Diseases Study 2015. Lancet 2017, 389, 1907–1918.

- Hystad, P.; Larkin, A.; Rangarajan, S.; Al Habib, K.F.; Avezum, Á.; Calik, K.B.T.; Chifamba, J.; Dans, A.; Diaz, R.; du Plessis, J.L.; et al. Associations of outdoor fine particulate air pollution and cardiovascular disease in 157 436 individuals from 21 high-income, middle-income, and low-income countries (PURE): A prospective cohort study. Lancet Planet. Health 2020, 4, e235–e245.

- Dockery, D.W.; Pope, C.A.; Xu, X.; Spengler, J.D.; Ware, J.H.; Fay, M.E.; Ferris, B.G.; Speizer, F.E. An association between air pollution and mortality in six U.S. cities. N. Engl. J. Med. 1993, 329, 1753–1759.

- Özkaynak, H.; Xue, J.; Spengler, J.; Wallace, L.; Pellizzari, E.; Jenkins, P. Personal exposure to airborne particles and metals: Results from the Particle TEAM study in Riverside, California. J. Expo. Anal. Environ. Epidemiol. 1996, 6, 57–78.

- Kearney, J.; Wallace, L.; MacNeill, M.; Xu, X.; Van Ryswyk, K.; You, H.; Kulka, R.; Wheeler, A. Residential indoor and outdoor ultrafine particles in Windsor, Ontario. Atmos. Environ. 2011, 45, 7583–7593.

- Wallace, L.; Williams, R.; Rea, A.; Croghan, C. Continuous weeklong measurements of personal exposures and indoor concentrations of fine particles for 37 health-impaired North Carolina residents for up to four seasons. Atmos. Environ. 2006, 40, 399–414.

- Wallace, L.; Williams, R.; Suggs, J.; Jones, P. Estimating Contributions of Outdoor Fine Particles to Indoor Concentrations and Personal Exposures: Effects of Household and Personal Activities. APM-214; Office of Research and Development Research Triangle Park: Durham, NC, USA, 2006.

- Wallace, L.; Bi, J.; Ott, W.R.; Sarnat, J.A.; Liu, Y. Calibration of low-cost PurpleAir outdoor monitors using an improved method of calculating PM2.5. Atmos. Environ. 2021, 256, 118432. Available online: https://www.sciencedirect.com/science/article/abs/pii/S135223102100251X (accessed on 2 February 2022).

- Helsel, D. Much ado about next to nothing: Incorporating nondetects in science. Ann. Occup. Hyg. 2010, 54, 257–262.

- International Organization for Standardization (ISO). Capability of Detection; Report No. ISO 11843-1; ISO: Geneva, Switzerland, 1997.

- Jiang, R.; Acevedo-Bolton, V.; Cheng, K.; Klepeis, N.; Ott, W.R.; Hildemann, L. Determination of response of real-time SidePak AM510 monitor to secondhand smoke, other common indoor aerosols, and outdoor aerosol. J. Environ. Monit. 2011, 13, 1695–1702.

- Zhu, K.; Zhang, J.; Lioy, P. Evaluation and comparison of continuous fine particulate matter monitors for measurement of ambient aerosols. J. Air Waste Manag. Assoc. 2007, 57, 1499–1506.

- Liang, Y.; Sengupta, D.; Campmier, M.J.; Lunderberg, D.M.; Apte, J.S.; Goldstein, A.H. Wildfire smoke impacts on indoor air quality assessed using crowdsourced data in California. Proc. Natl. Acad. Sci. USA 2021, 118, e2106478118.

- Delp, W.W.; Singer, B.C. Wildfire smoke adjustment factors for low-cost and professional PM2.5 monitors with optical sensors. Sensors 2020, 20, 3683.

| | Valid N | Mean | Std. Err. | Lower Quartile | Median | Upper Quartile | 90th Percentile | Max |
|---|---|---|---|---|---|---|---|---|
| ALT-CF3 algorithm (using precision cutoff of 0.2) | | | | | | | | |
| Monitor 1 indoors | 763,102 | 0.064 | 0.000055 | 0.025 | 0.053 | 0.094 | 0.14 | 0.2 |
| Monitor 2 indoors | 499,296 | 0.067 | 0.000068 | 0.027 | 0.057 | 0.097 | 0.14 | 0.2 |
| Monitor 2 outdoors | 242,663 | 0.058 | 0.000093 | 0.021 | 0.046 | 0.084 | 0.13 | 0.2 |
| Plantower CF1 algorithm (using ALT-CF3 cutoff of 0.2) | | | | | | | | |
| Monitor 1 indoors | 647,757 | 0.192 | 0.000334 | 0.034 | 0.084 | 0.20 | 0.57 | 1 |
| Monitor 2 indoors | 448,867 | 0.337 | 0.000495 | 0.072 | 0.205 | 0.51 | 1 | 1 |
| Monitor 2 outdoors | 234,814 | 0.293 | 0.000631 | 0.065 | 0.172 | 0.41 | 0.92 | 1 |
| Plantower CF1 algorithm (using precision cutoff of 0.2) | | | | | | | | |
| Monitor 1 indoors | 486,614 | 0.067 | 0.000074 | 0.025 | 0.055 | 0.10 | 0.15 | 0.2 |
| Monitor 2 indoors | 224,877 | 0.081 | 0.000118 | 0.033 | 0.071 | 0.13 | 0.17 | 0.2 |
| Monitor 2 outdoors | 129,081 | 0.082 | 0.000157 | 0.033 | 0.073 | 0.13 | 0.17 | 0.2 |
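
The precision table summarizes precision values that pass a cutoff of 0.2. The CF1 rows selected with the ALT-CF3 cutoff reach a maximum of exactly 1. That is consistent with, though not proof of, precision being the relative difference |A − B| / (A + B) between a monitor's two Plantower channels, which equals 1 whenever one channel reads zero. The sketch below assumes that definition and reproduces the table's summary columns. The helpers `precision` and `summarize` and the sample readings are hypothetical.

```python
import numpy as np


def precision(a, b):
    """Assumed precision metric: |A - B| / (A + B) for paired channel readings.
    Pairs where A + B == 0 are returned as NaN (undefined)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    total = a + b
    out = np.full_like(total, np.nan)
    np.divide(np.abs(a - b), total, out=out, where=total > 0)
    return out


def summarize(p, cutoff=0.2):
    """Summary statistics, matching the table columns, for precision values <= cutoff."""
    p = np.asarray(p, dtype=float)
    kept = p[np.isfinite(p) & (p <= cutoff)]
    n = kept.size
    return {
        "valid_n": n,
        "mean": kept.mean(),
        "std_err": kept.std(ddof=1) / np.sqrt(n),  # standard error of the mean
        "lower_quartile": np.percentile(kept, 25),
        "median": np.median(kept),
        "upper_quartile": np.percentile(kept, 75),
        "p90": np.percentile(kept, 90),
        "max": kept.max(),
    }


# Hypothetical paired readings (µg/m³) from channels A and B of one monitor
a = [10.0, 5.0, 0.0, 8.0, 20.0]
b = [11.0, 6.0, 0.0, 4.0, 18.0]
print(summarize(precision(a, b)))
```

Because the cutoff is applied before summarizing, every row filtered on its own precision has a maximum no larger than 0.2. Rows filtered on a different algorithm's precision, such as CF1 under the ALT-CF3 cutoff, can reach 1.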

| Sensor | Location | N Obs. | N Zeros | Fraction = 0 |
|---|---|---|---|---|
| 1a | Indoors | 815,558 | 165,732 | 0.20 |
| 1b | Indoors | 817,696 | 164,399 | 0.20 |
| 2a | Indoors | 530,781 | 63,867 | 0.12 |
| 2b | Indoors | 558,322 | 130,263 | 0.23 |
| 4a | Indoors | 406,059 | 61,444 | 0.15 |
| 4b | Indoors | 406,068 | 69,435 | 0.17 |
| 2a | Outdoors | 252,532 | 10,324 | 0.04 |
| 2b | Outdoors | 253,439 | 35,374 | 0.14 |
| 3a | Outdoors | 363,786 | 23,516 | 0.06 |
| 3b | Outdoors | 363,783 | 18,757 | 0.05 |
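
The Fraction = 0 column is N Zeros divided by N Obs. A short check using the counts copied from the zeros table reproduces that column to two decimals. `count_zeros` and `fraction_zero` are illustrative helper names, not part of any PurpleAir tool.

```python
# Counts copied from the zeros table: (sensor, location) -> (N obs, N zeros)
ZERO_COUNTS = {
    ("1a", "Indoors"): (815_558, 165_732),
    ("1b", "Indoors"): (817_696, 164_399),
    ("2a", "Indoors"): (530_781, 63_867),
    ("2b", "Indoors"): (558_322, 130_263),
    ("4a", "Indoors"): (406_059, 61_444),
    ("4b", "Indoors"): (406_068, 69_435),
    ("2a", "Outdoors"): (252_532, 10_324),
    ("2b", "Outdoors"): (253_439, 35_374),
    ("3a", "Outdoors"): (363_786, 23_516),
    ("3b", "Outdoors"): (363_783, 18_757),
}


def count_zeros(readings):
    """Number of readings reported as exactly 0 (hypothetical helper)."""
    return sum(1 for r in readings if r == 0)


def fraction_zero(n_obs, n_zeros):
    """Fraction of observations equal to 0."""
    return n_zeros / n_obs


for (sensor, location), (n_obs, n_zeros) in ZERO_COUNTS.items():
    print(f"{sensor} {location:<8} {fraction_zero(n_obs, n_zeros):.2f}")
```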

| | 3 Year Period (10 January 2019 to 14 January 2022) | | | 18 Month Period (18 June 2020 to 14 January 2022) | | |
|---|---|---|---|---|---|---|
| Monitor | 1 IN | 2 IN | 2 OUT | 3 OUT | 3 IN | 4 IN |
| Location | Indoors | Indoors | Outdoors | Outdoors | Indoors | Indoors |
| N | 763,102 | 499,296 | 242,663 | 356,484 | 42,204 | 370,906 |
| Intercept | −0.28 | −0.33 | 0.61 | −0.27 | 1.6 | 0.1 |
| SE (intercept) | 0.007 | 0.010 | 0.040 | 0.019 | 0.039 | 0.022 |
| Slope | 7.8 × 10⁻⁶ | 9.0 × 10⁻⁶ | −1.2 × 10⁻⁵ | 7.4 × 10⁻⁶ | −3.4 × 10⁻⁵ | −8.7 × 10⁻⁷ |
| SE (slope) | 1.7 × 10⁻⁷ | 2.3 × 10⁻⁷ | 9.1 × 10⁻⁷ | 4.3 × 10⁻⁷ | 8.8 × 10⁻⁷ | 4.8 × 10⁻⁷ |
| R² (adj.) | 0.0028 | 0.00319 | 0.00076 | 0.00082 | 0.034 | 0.00006 |
| SE of estimate | 0.048 | 0.048 | 0.046 | 0.042 | 0.032 | 0.050 |
| F-value | 2181 | 1599 | 186 | 296 | 1500 | 3.2 |
| z | 47 | 40 | −14 | 17 | −39 | −2 |
| p-value | 0 | 0 | 0 | 0 | 0 | 0.072 |
| Starting precision | 0.060 | 0.062 | 0.083 | 0.054 | 0.058 | 0.068 |
| Ending precision | 0.068 | 0.072 | 0.070 | 0.058 | 0.038 | 0.067 |
| Relative annual increase (%) | 4.8 | 5.3 | −5.3 | 5.3 | −22.6 | −0.49 |
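
The regression table fits precision against time. The reported numbers look consistent with time expressed as a spreadsheet-style serial date in days. For Monitor 1, −0.28 + 7.8 × 10⁻⁶ × 43,475 (10 January 2019) ≈ 0.06. The relative annual increase then matches 100 × 365.25 × slope / starting precision ≈ 4.8%. This reading would also explain the negative intercepts, which become extrapolations to day zero. It is an inference from the numbers: it matches the three 3-year columns within rounding but not every 18-month column, so treat it as an assumption. The sketch fits ordinary least squares with `numpy.polyfit`. `precision_trend` and the synthetic data are hypothetical.

```python
import numpy as np

DAYS_PER_YEAR = 365.25


def precision_trend(days, precision_values):
    """Ordinary least-squares fit: precision = intercept + slope * days.

    Returns intercept, slope (per day), fitted start and end precision, and the
    relative annual change (%) taken relative to the fitted starting precision
    (an assumed convention)."""
    t = np.asarray(days, dtype=float)
    y = np.asarray(precision_values, dtype=float)
    slope, intercept = np.polyfit(t, y, 1)  # degree-1 fit returns [slope, intercept]
    start = intercept + slope * t.min()
    end = intercept + slope * t.max()
    rel_annual_pct = 100.0 * slope * DAYS_PER_YEAR / start
    return intercept, slope, start, end, rel_annual_pct


# Synthetic example (not the study's data): precision starting at 0.060 and
# rising by 5% of that value per year, with time as serial day numbers.
rng = np.random.default_rng(0)
t = np.arange(43_475, 44_575, 1.0)
true_slope = 0.05 * 0.060 / DAYS_PER_YEAR
y = 0.060 + true_slope * (t - t[0]) + rng.normal(0, 0.002, t.size)
print(precision_trend(t, y)[-1])  # approximately 5 (%)
```

Measuring the change relative to the fitted starting value, rather than to the mean precision, is an assumed convention. Substitute the mean if that matches the source more closely.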

| Sensor | Location | Valid N | CF3 LOD (µg/m³) | # Obs with CF3 > LOD | % Obs with CF3 > LOD | CF1 LOD (µg/m³) | # Obs with CF1 > LOD | % Obs with CF1 > LOD |
|---|---|---|---|---|---|---|---|---|
| 1 | Indoors | 406,108 | 0.99 | 233,900 | 58 | 2.9 | 177,908 | 44 |
| 2 | Outdoors | 253,454 | 0.92 | 203,384 | 80 | 9.9 | 39,487 | 16 |
| 2 | Indoors | 146,229 | 0.72 | 110,674 | 76 | 3.2 | 44,289 | 30 |
| 3 | Outdoors | 363,797 | 0.6 | 334,973 | 92 | 4.4 | 156,850 | 43 |
| 4 | Indoors | 406,092 | 1.32 | 215,872 | 53 | 5.3 | 79,371 | 20 |
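
The LOD table reports, for each sensor, how many valid observations exceed the LOD. Below is a minimal sketch of that count. It takes the LOD as a given input and does not reproduce the method used to estimate the LOD itself. `fraction_above_lod` and the sample values are hypothetical.

```python
import numpy as np


def fraction_above_lod(values, lod):
    """Return (valid N, count above LOD, percent above LOD) for one sensor.

    Non-finite readings (NaN, inf) are treated as invalid and dropped."""
    v = np.asarray(values, dtype=float)
    v = v[np.isfinite(v)]
    n_above = int(np.count_nonzero(v > lod))  # strictly above the LOD
    pct = 100.0 * n_above / v.size if v.size else float("nan")
    return v.size, n_above, pct


# Hypothetical ALT-CF3 readings (µg/m³) with an LOD taken from the table
readings = [0.5, 1.2, float("nan"), 3.0, 0.99]
print(fraction_above_lod(readings, lod=0.99))  # (4, 2, 50.0)
```

As a check against the table, 233,900 of 406,108 valid ALT-CF3 observations for Sensor 1 indoors is 57.6%, which rounds to the tabulated 58%.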

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wallace, L. Intercomparison of PurpleAir Sensor Performance over Three Years Indoors and Outdoors at a Home: Bias, Precision, and Limit of Detection Using an Improved Algorithm for Calculating PM2.5. Sensors 2022, 22, 2755. https://doi.org/10.3390/s22072755
