Geometric Calibration for Cameras with Inconsistent Imaging Capabilities

Abstract

1. Introduction

- We proposed a method to estimate the inverse covariance matrix of the detected control points;

- We incorporated the measured uncertainties into the optimization of camera calibration and demonstrated the effectiveness of our method on eight imaging systems;

- We measured the modulation transfer function (MTF) of the imaging systems and analyzed the MTF results for the systems with poor imaging consistency.
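The second contribution can be read as replacing the unweighted sum of squared reprojection errors with an inverse-covariance (Mahalanobis) weighted cost, `E = sum_i r_i^T Omega_i r_i`, where `r_i` is the 2D reprojection residual of control point `i` and `Omega_i` its estimated inverse covariance. The following is a minimal NumPy sketch of that cost, assuming the inverse covariances are already available. The function names and toy values are hypothetical, and this is not the authors' implementation.

```python
import numpy as np


def whiten_residuals(residuals, info_matrices):
    """Transform 2D residuals so that their squared norm equals r_i^T Omega_i r_i.

    residuals:     (N, 2) detected minus projected control point positions
    info_matrices: (N, 2, 2) symmetric positive-definite inverse covariances Omega_i
    """
    # Batched Cholesky factorization: Omega_i = L_i @ L_i.T (L_i lower-triangular).
    L = np.linalg.cholesky(info_matrices)
    # r_i' = L_i.T @ r_i, hence ||r_i'||^2 = r_i.T @ L_i @ L_i.T @ r_i = r_i.T @ Omega_i @ r_i.
    return np.einsum("nji,nj->ni", L, residuals)


def weighted_cost(residuals, info_matrices):
    """Sum of Mahalanobis-weighted squared reprojection errors."""
    w = whiten_residuals(residuals, info_matrices)
    return float(np.sum(w ** 2))


# Toy example (hypothetical values): a sharp point (std 0.1 px) and a blurry one (std 1.0 px)
# with the same 0.5 px residual. The blurry point contributes far less to the cost.
sigmas = np.array([0.1, 1.0])
omega = np.eye(2)[None] / sigmas[:, None, None] ** 2  # isotropic inverse covariances
r = np.array([[0.5, 0.0], [0.5, 0.0]])
print(weighted_cost(r, omega))  # approximately 25.25 (25 from the sharp point, 0.25 from the blurry one)
```

Whitening with the Cholesky factor, rather than multiplying squared errors by scalar weights, keeps the plain residual-vector form that nonlinear least-squares solvers such as `scipy.optimize.least_squares` expect, so the weighted cost can be minimized without modifying the solver.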

2. Related Works

3. Preliminary

3.1. Notations

3.2. Control Point Detection

3.3. Canonical Camera Calibration

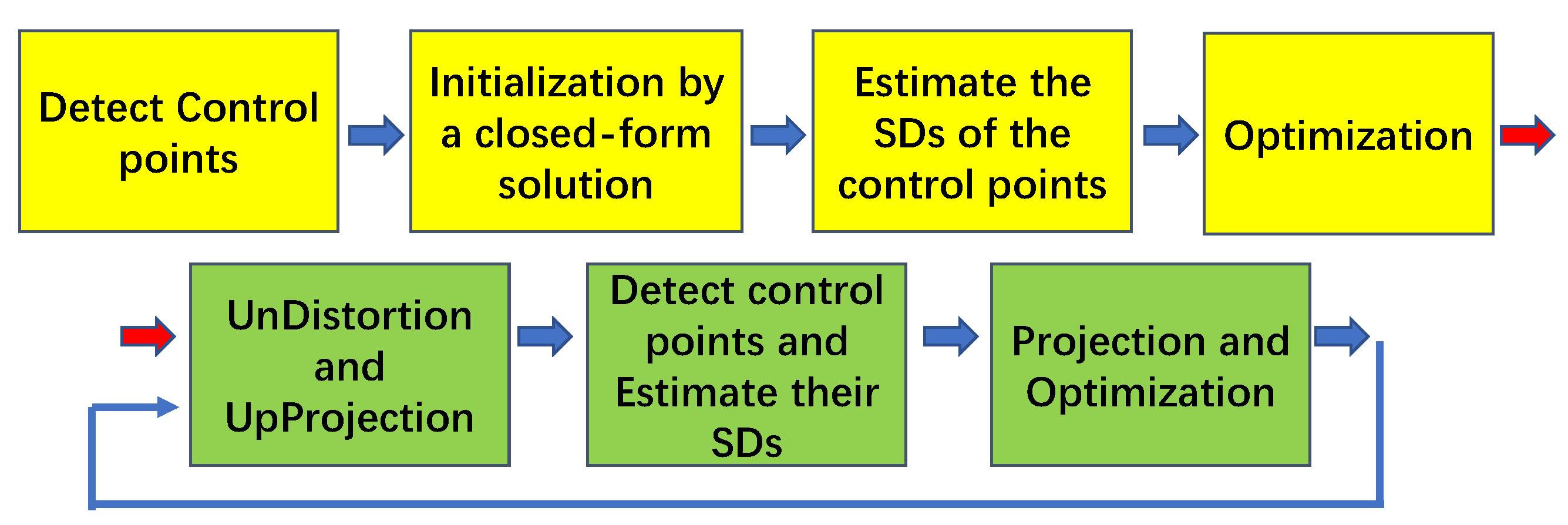

4. Approach

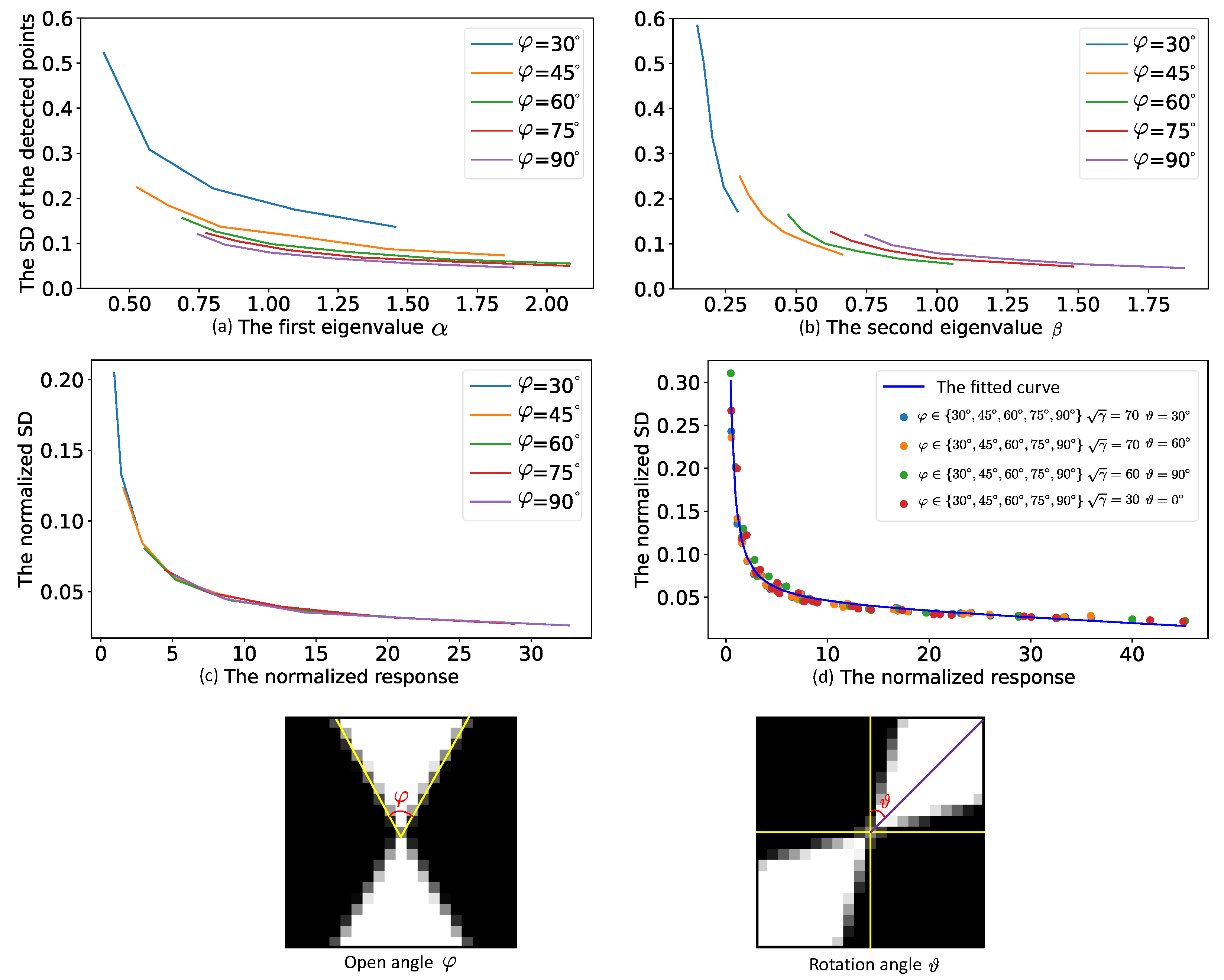

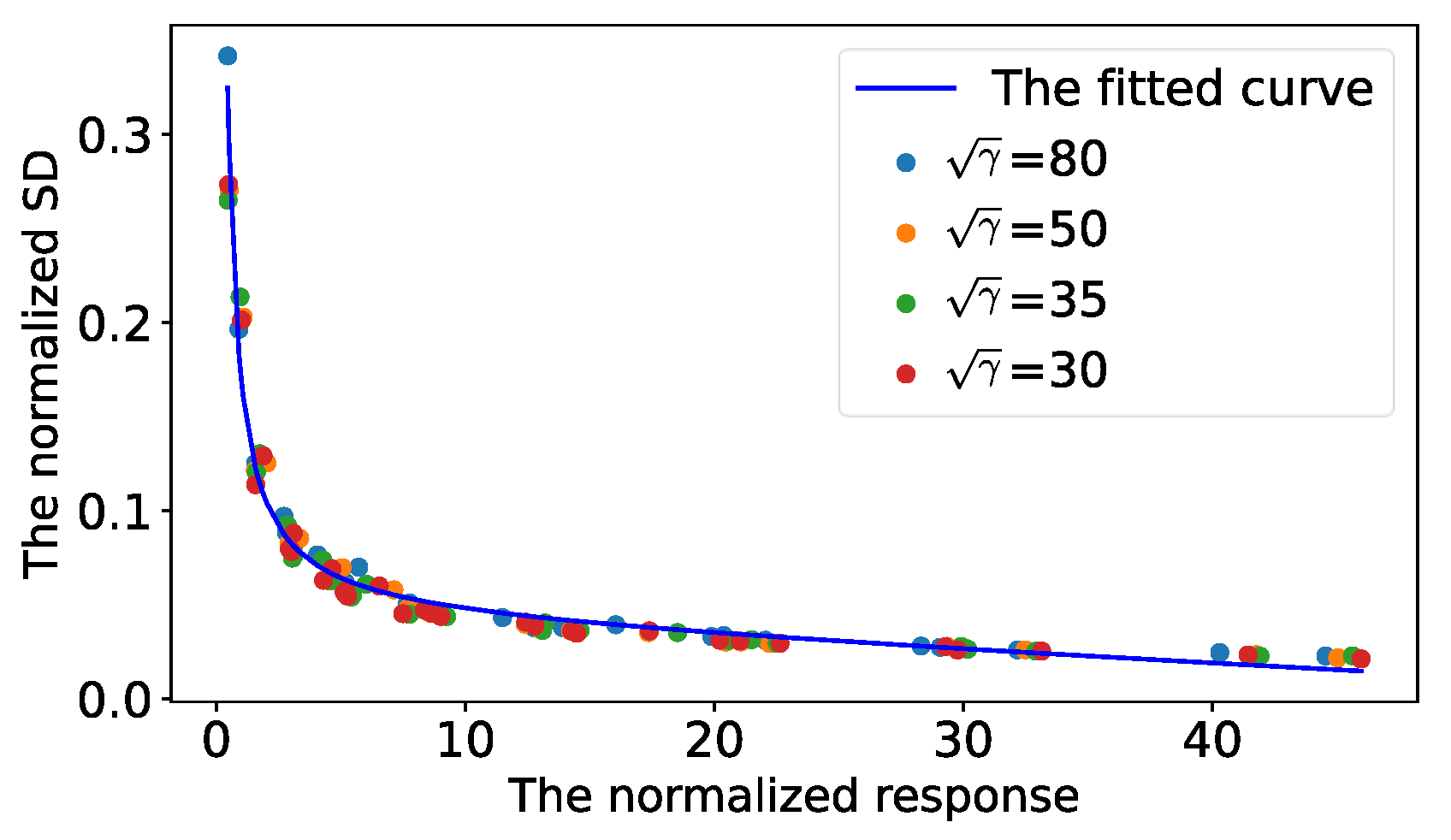

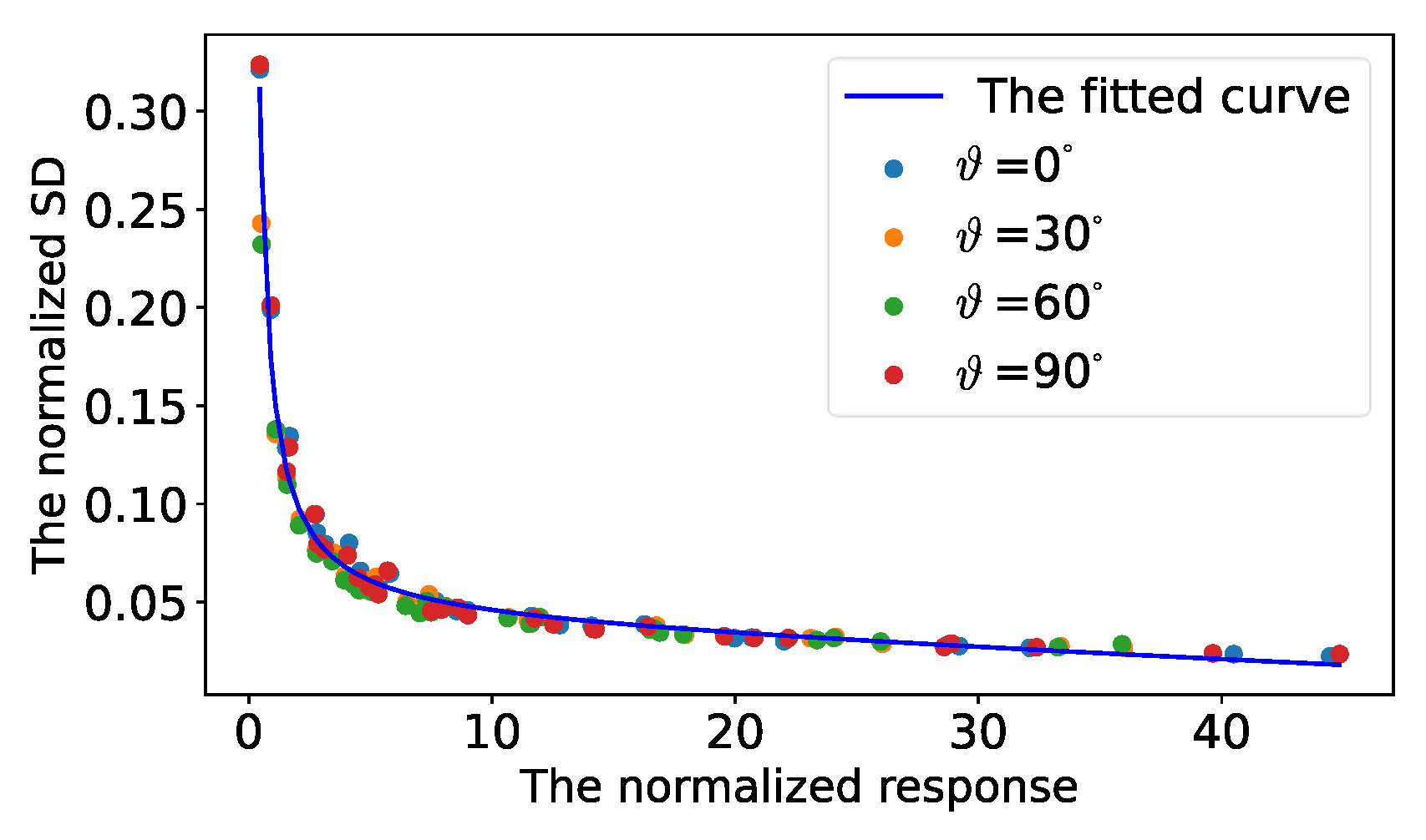

4.1. Uncertainty Measurement for Detected Control Points

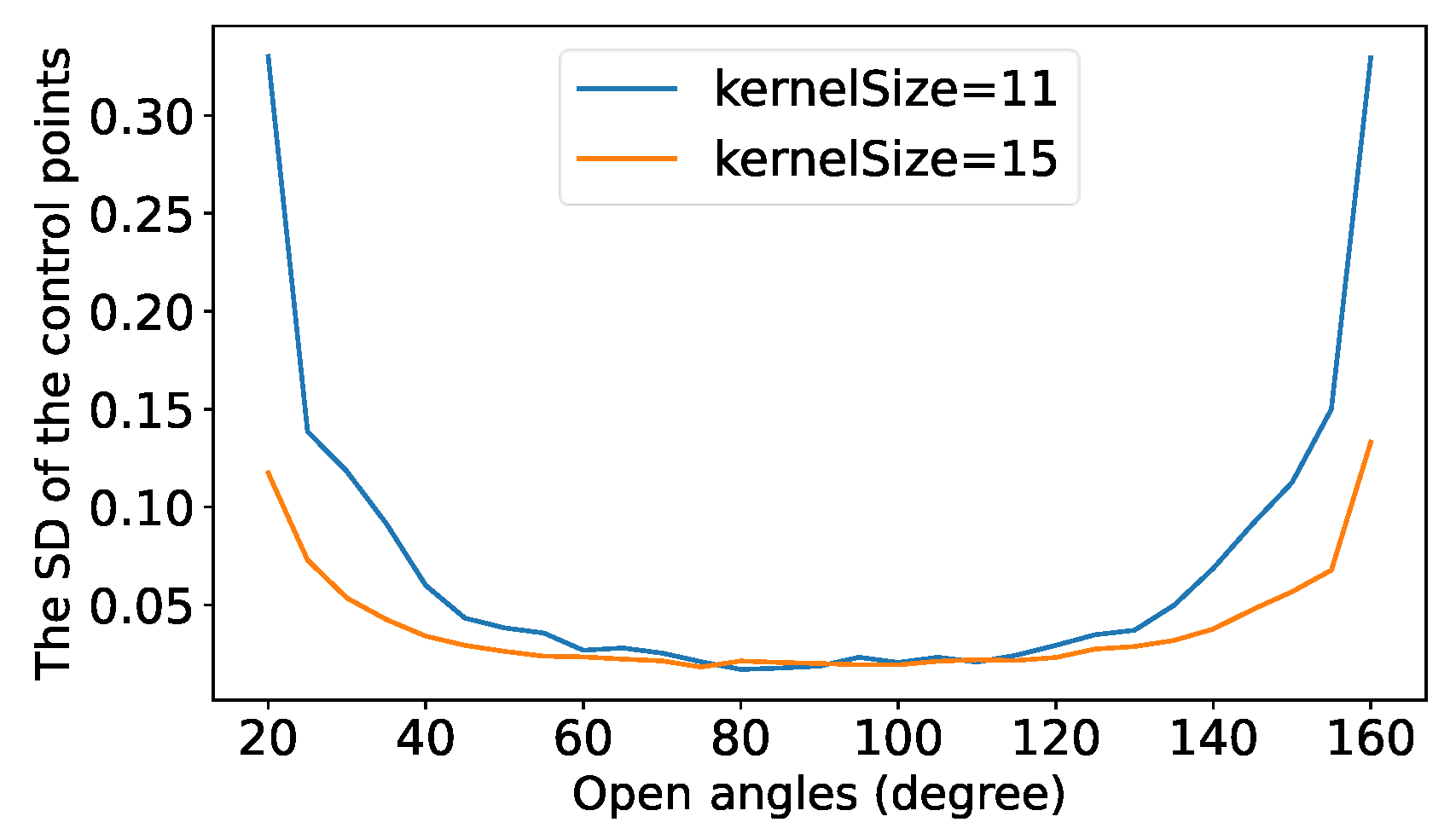

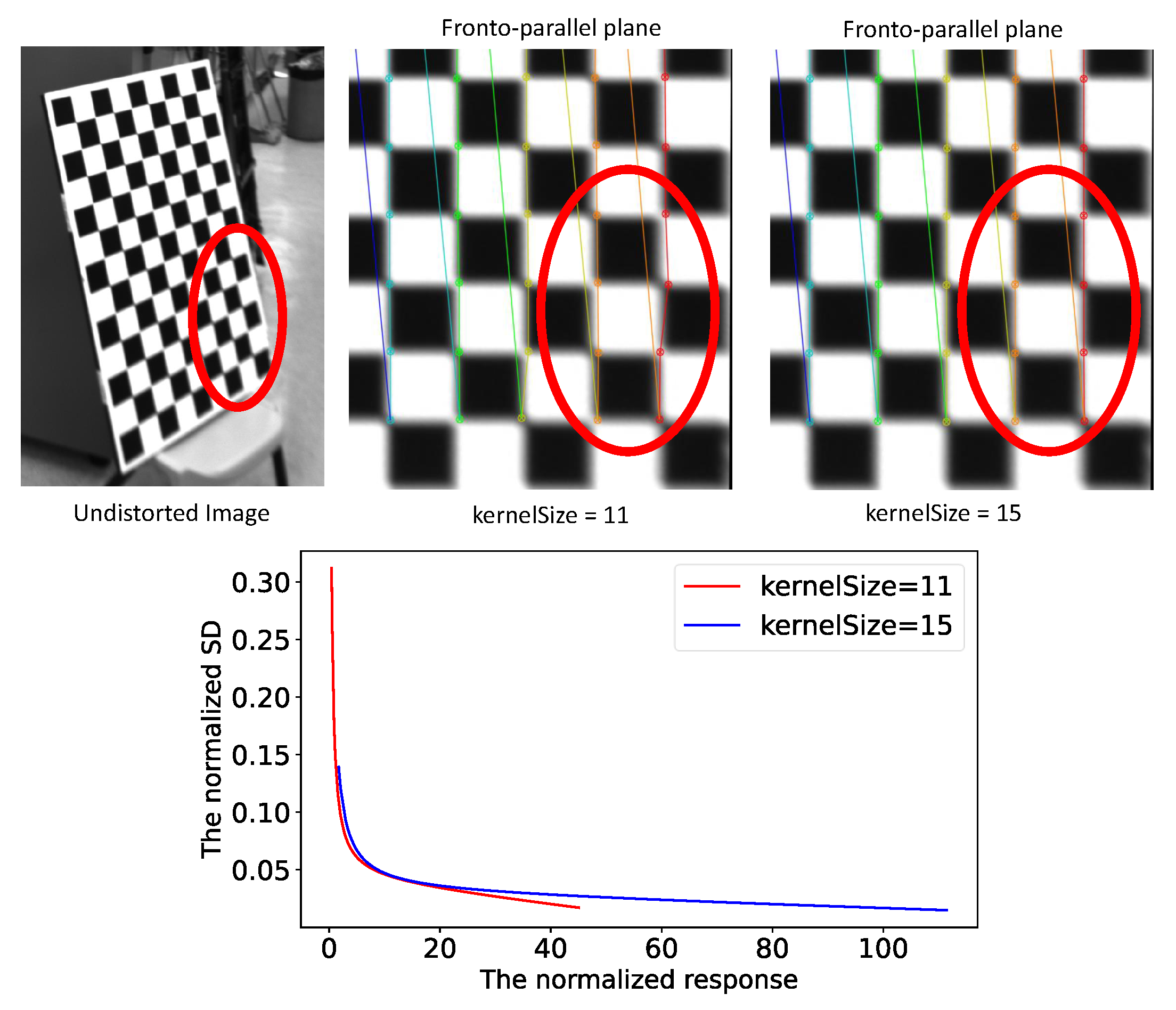

4.2. Point Standard Deviation vs. Kernel Size

4.3. Iterative Refinement

5. Experiments

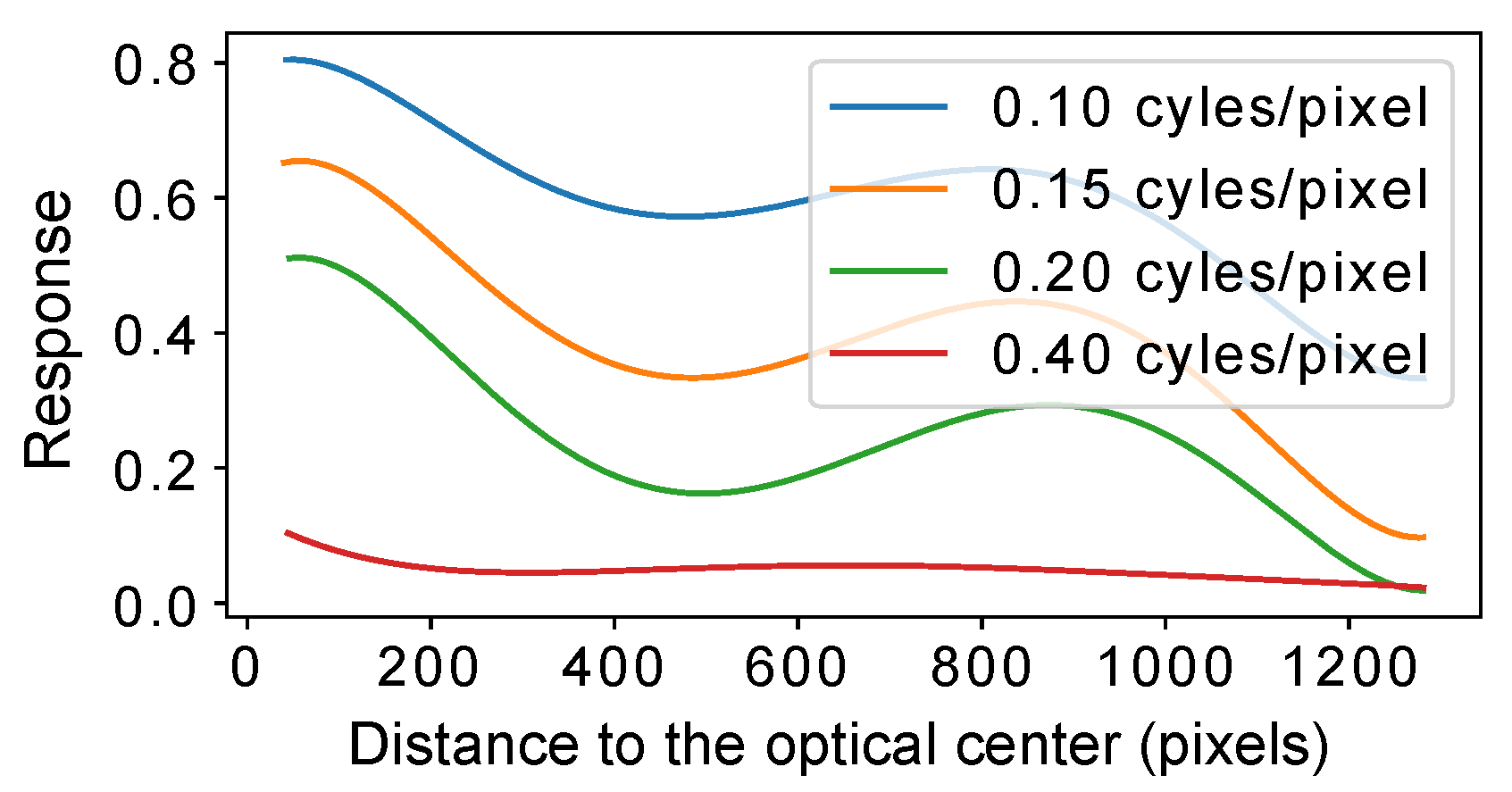

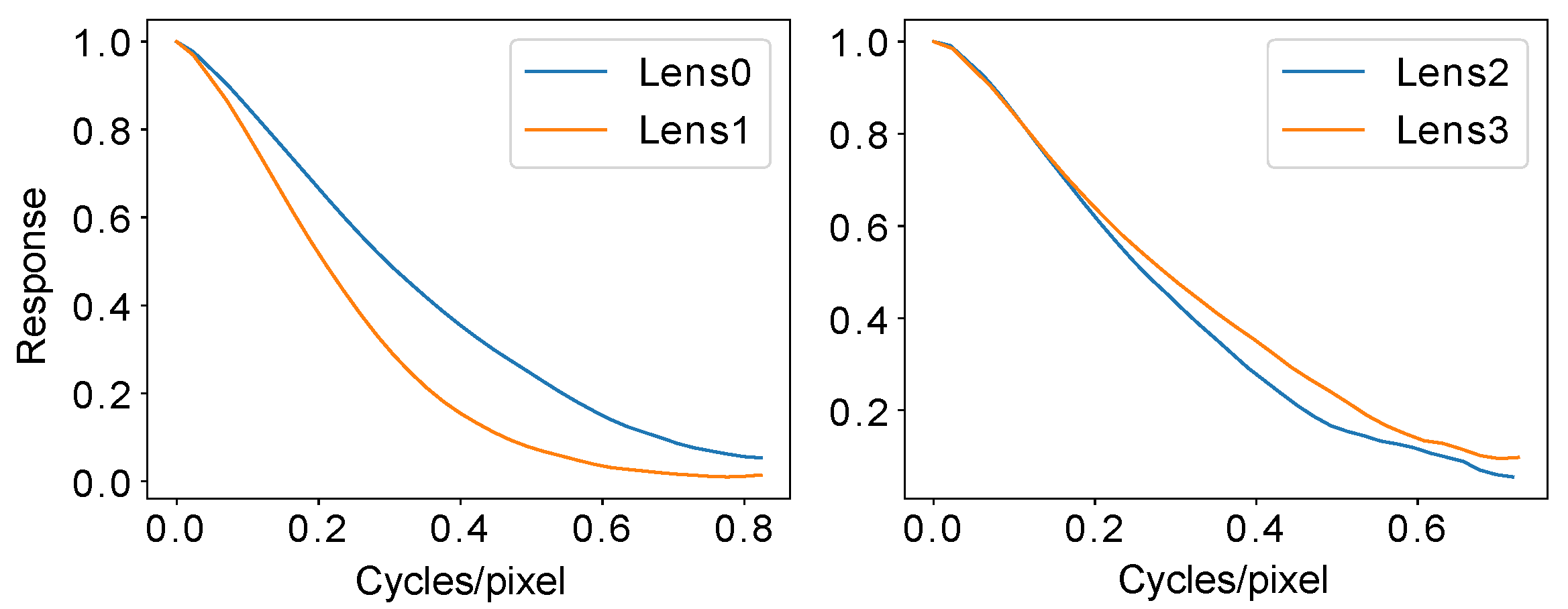

5.1. Consistency Analysis for Imaging Systems

5.2. Generation of Training and Testing Datasets

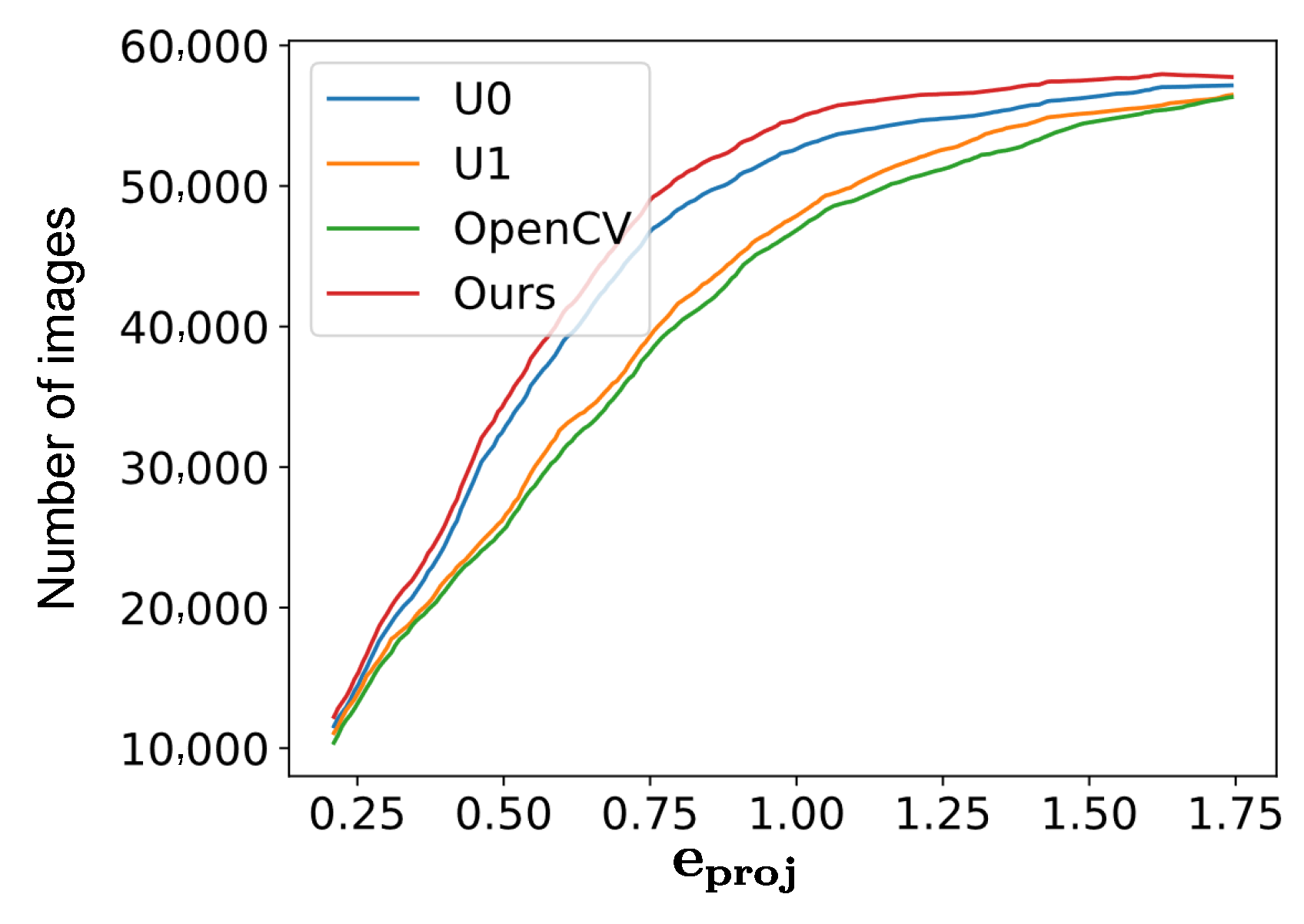

5.3. Evaluation of Our Method

5.4. Switching Kernel Size during Point Detection

5.5. Calibration for Stereo

5.6. Notice

5.7. Runtime Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Id | Resolution | Distortion | Consistency | OpenCV | U0 | U1 | Ours |
|---|---|---|---|---|---|---|---|
| 1 | High | Large | Poor | 1.173 | 0.935 | 0.906 | 0.815 |
| 2 | High | Large | Poor | 0.941 | 0.796 | 0.925 | 0.764 |
| 3 | High | Small | Good | 0.359 | 0.358 | 0.394 | 0.356 |
| 4 | High | Small | Good | 0.204 | 0.211 | 0.237 | 0.205 |
| 5 | Low | Large | Poor | 0.857 | 0.705 | 0.862 | 0.689 |
| 6 | Low | Large | Poor | 0.936 | 0.832 | 0.871 | 0.713 |
| 7 | Low | Large | Good | 0.301 | 0.279 | 0.323 | 0.278 |
| 8 | Low | Large | Good | 0.485 | 0.431 | 0.517 | 0.442 |
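The relative change of the Ours column against the OpenCV baseline can be computed directly from the table. The following is a small sketch. This excerpt does not state the metric or its units, so the values are treated as unitless errors where lower is assumed to be better. The helper names are hypothetical.

```python
# Values copied from the table: system id -> (consistency, OpenCV, Ours).
# Assumption: the metric is an error, so lower values are better.
ROWS = {
    1: ("Poor", 1.173, 0.815),
    2: ("Poor", 0.941, 0.764),
    3: ("Good", 0.359, 0.356),
    4: ("Good", 0.204, 0.205),
    5: ("Poor", 0.857, 0.689),
    6: ("Poor", 0.936, 0.713),
    7: ("Good", 0.301, 0.278),
    8: ("Good", 0.485, 0.442),
}


def relative_reduction(baseline, ours):
    """Fraction by which `ours` is lower than `baseline` (negative if it is higher)."""
    return (baseline - ours) / baseline


def mean_reduction(rows, consistency):
    """Average relative reduction over the systems with the given consistency label."""
    vals = [relative_reduction(b, o) for c, b, o in rows.values() if c == consistency]
    return sum(vals) / len(vals)


if __name__ == "__main__":
    for sid, (c, b, o) in ROWS.items():
        print(f"system {sid} ({c}): {100 * relative_reduction(b, o):+.1f}%")
    print(f"mean reduction, Poor: {100 * mean_reduction(ROWS, 'Poor'):.1f}%")
    print(f"mean reduction, Good: {100 * mean_reduction(ROWS, 'Good'):.1f}%")
```

On these values, the mean reduction is roughly 23% for the four systems labelled Poor and roughly 4% for the four labelled Good. System 4 is the only row where Ours is marginally above OpenCV.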


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, K.; Liu, C.; Shen, S. Geometric Calibration for Cameras with Inconsistent Imaging Capabilities. Sensors 2022, 22, 2739. https://doi.org/10.3390/s22072739
