UWB and IMU-Based UAV’s Assistance System for Autonomous Landing on a Platform

Abstract

1. Introduction

2. State of the Art of UWB-Based Systems

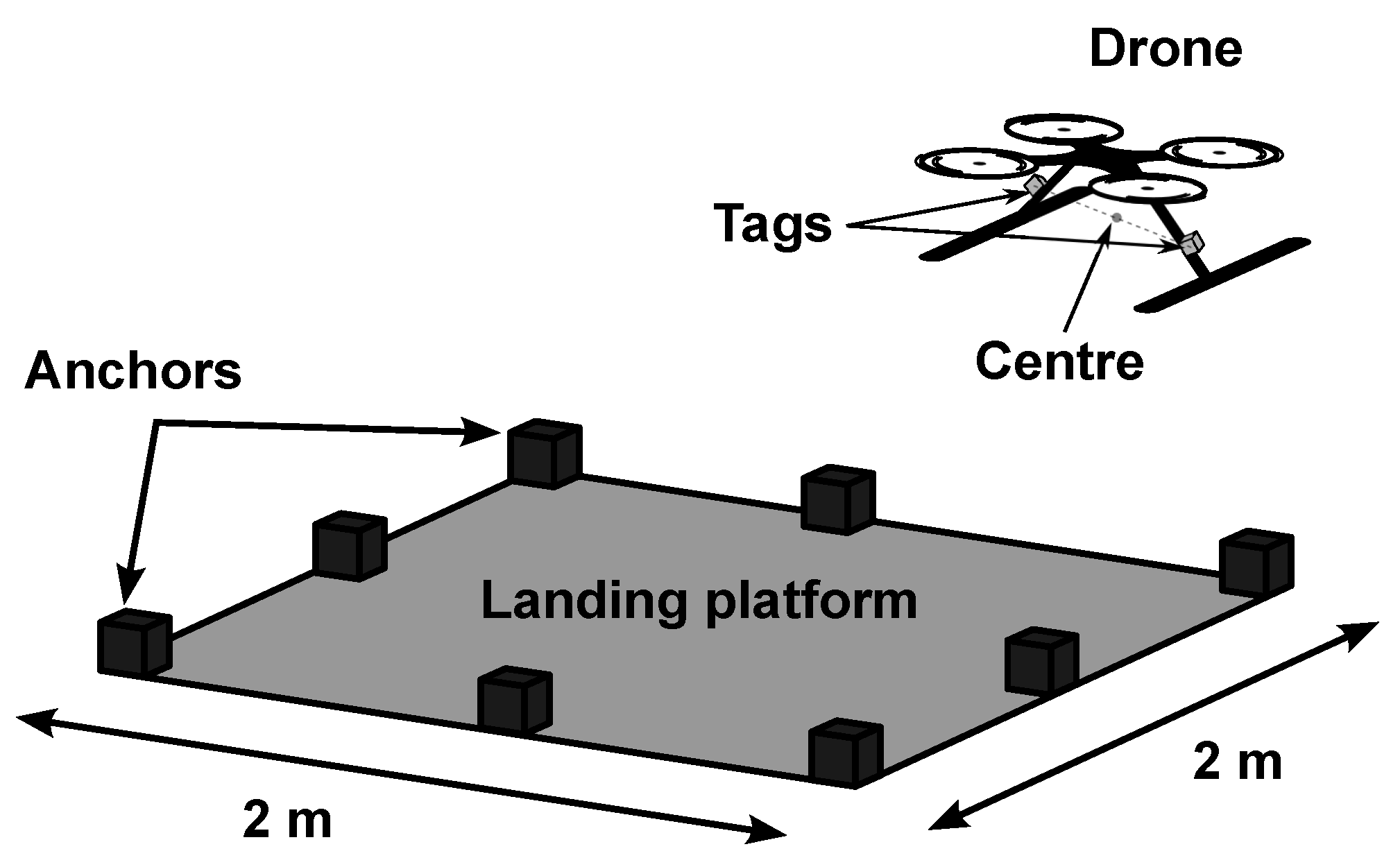

3. Proposed LAS

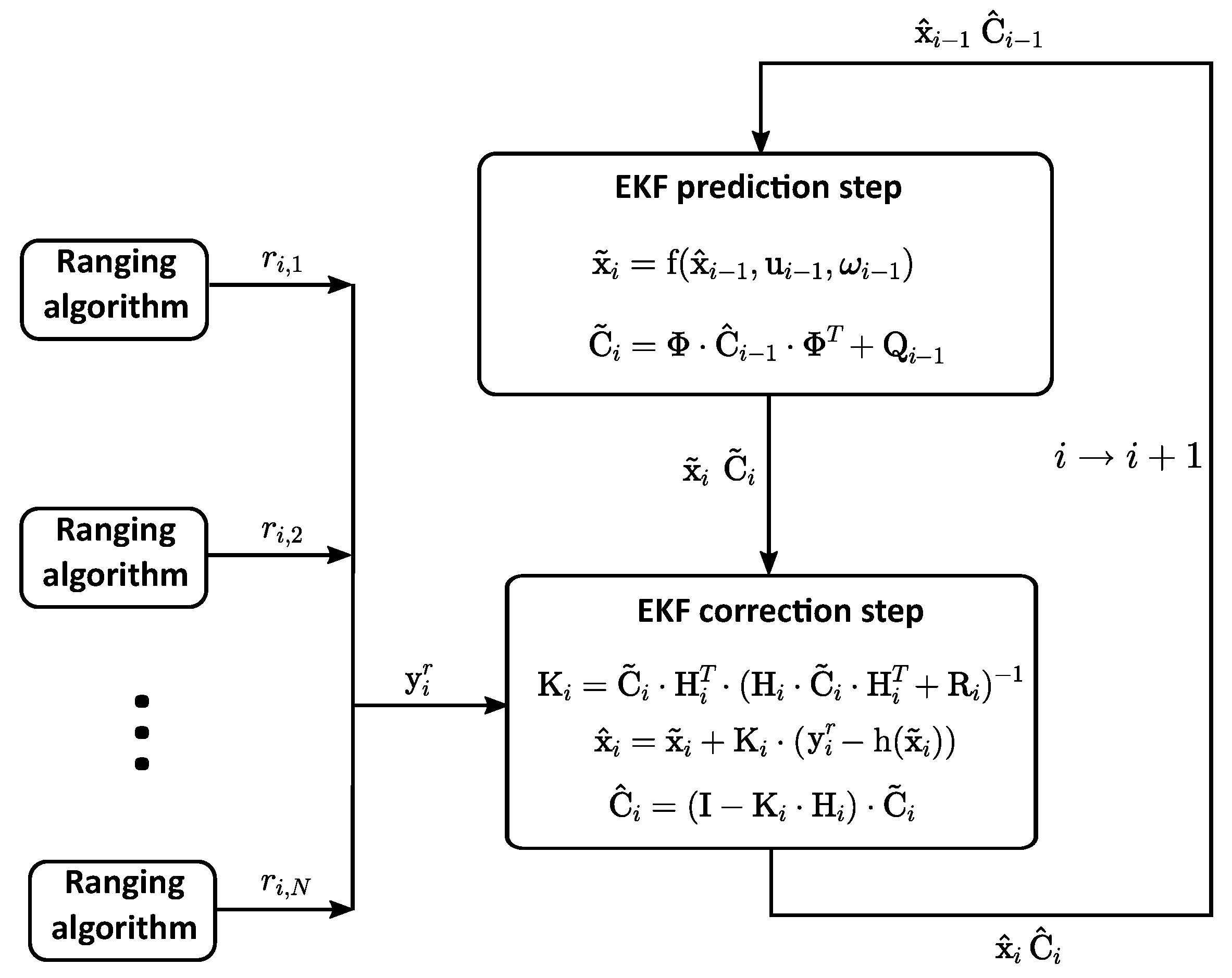

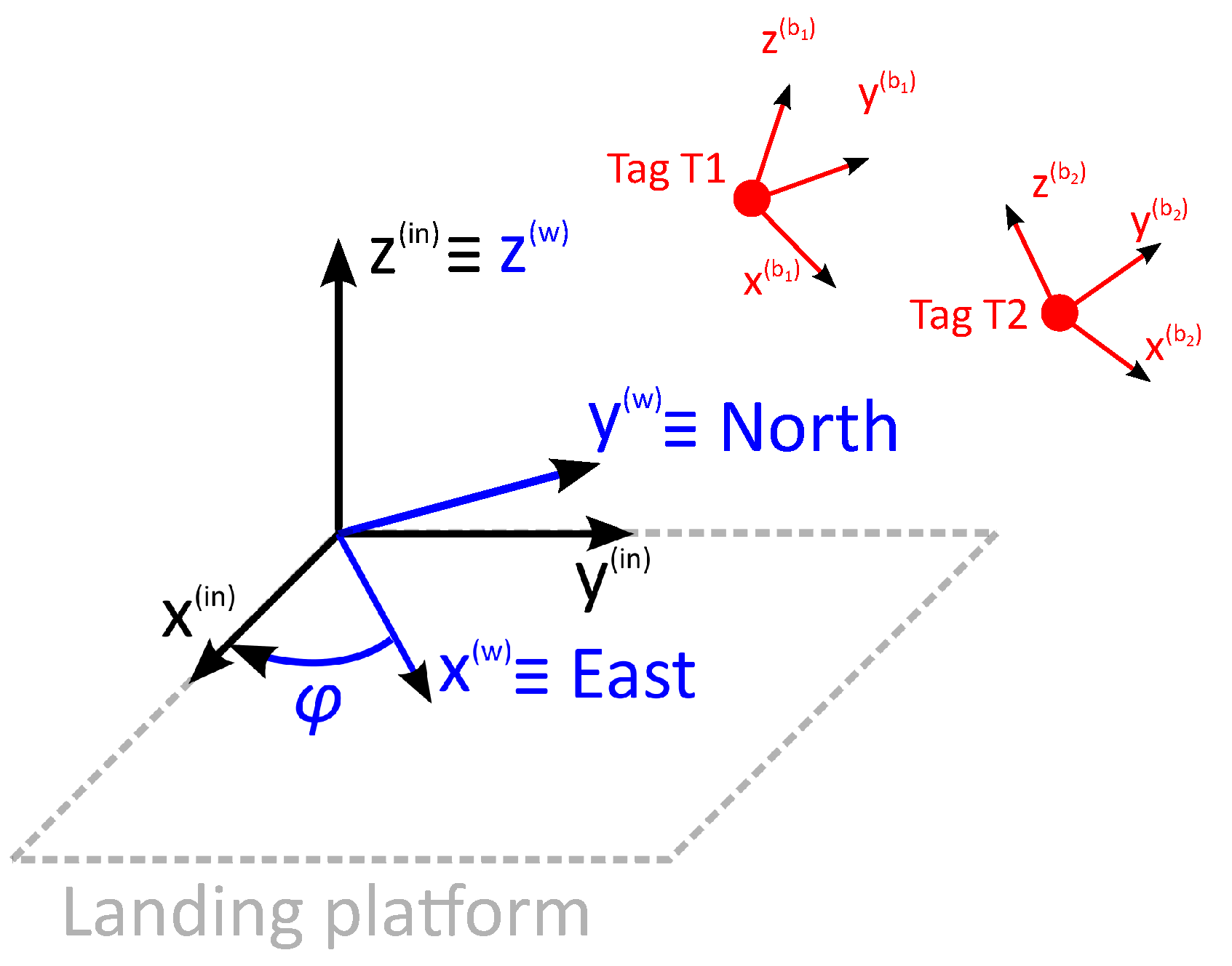

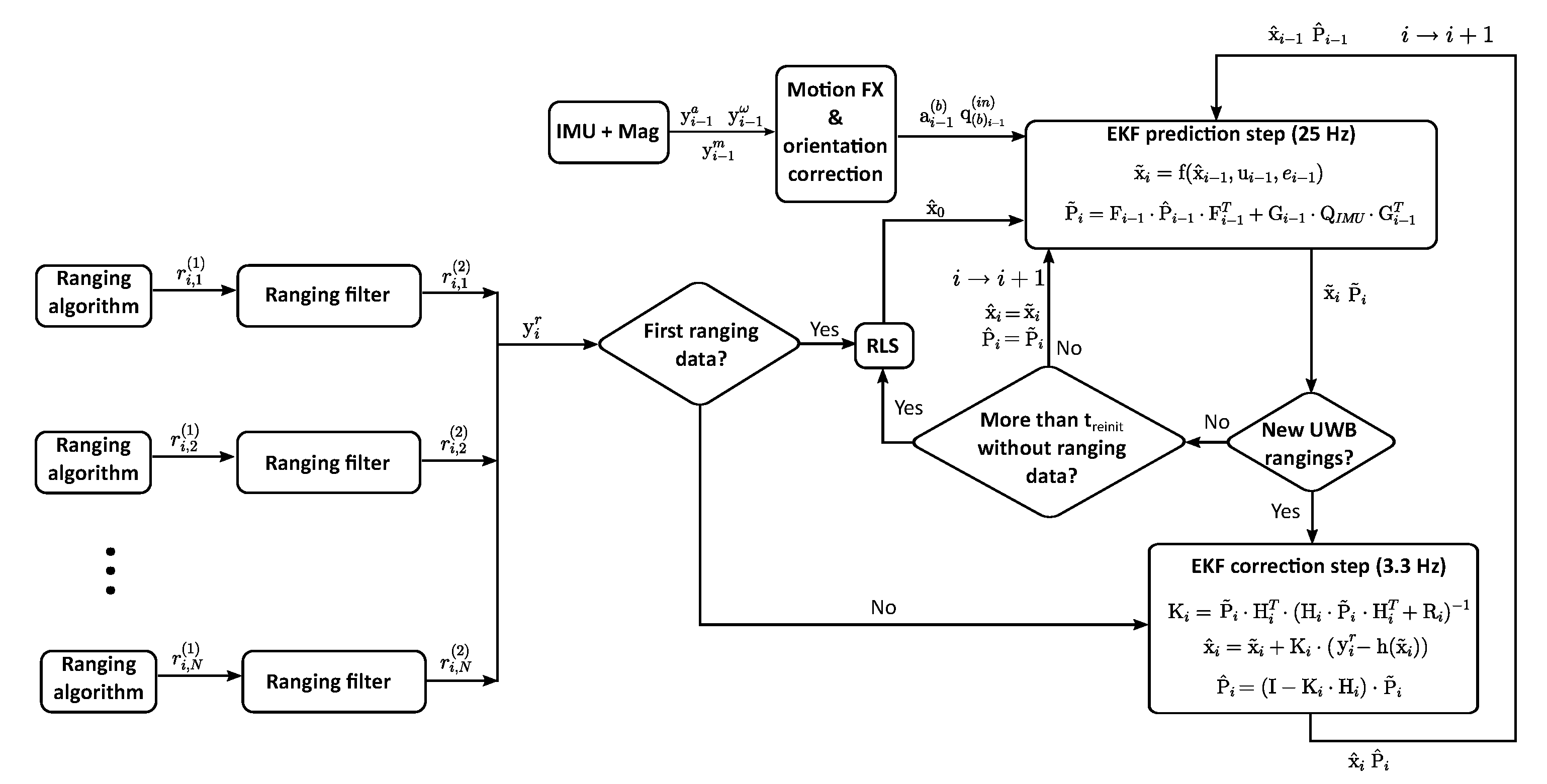

3.1. EKF with Fusion of Sensors

3.2. Combination of Tags

4. Methodology

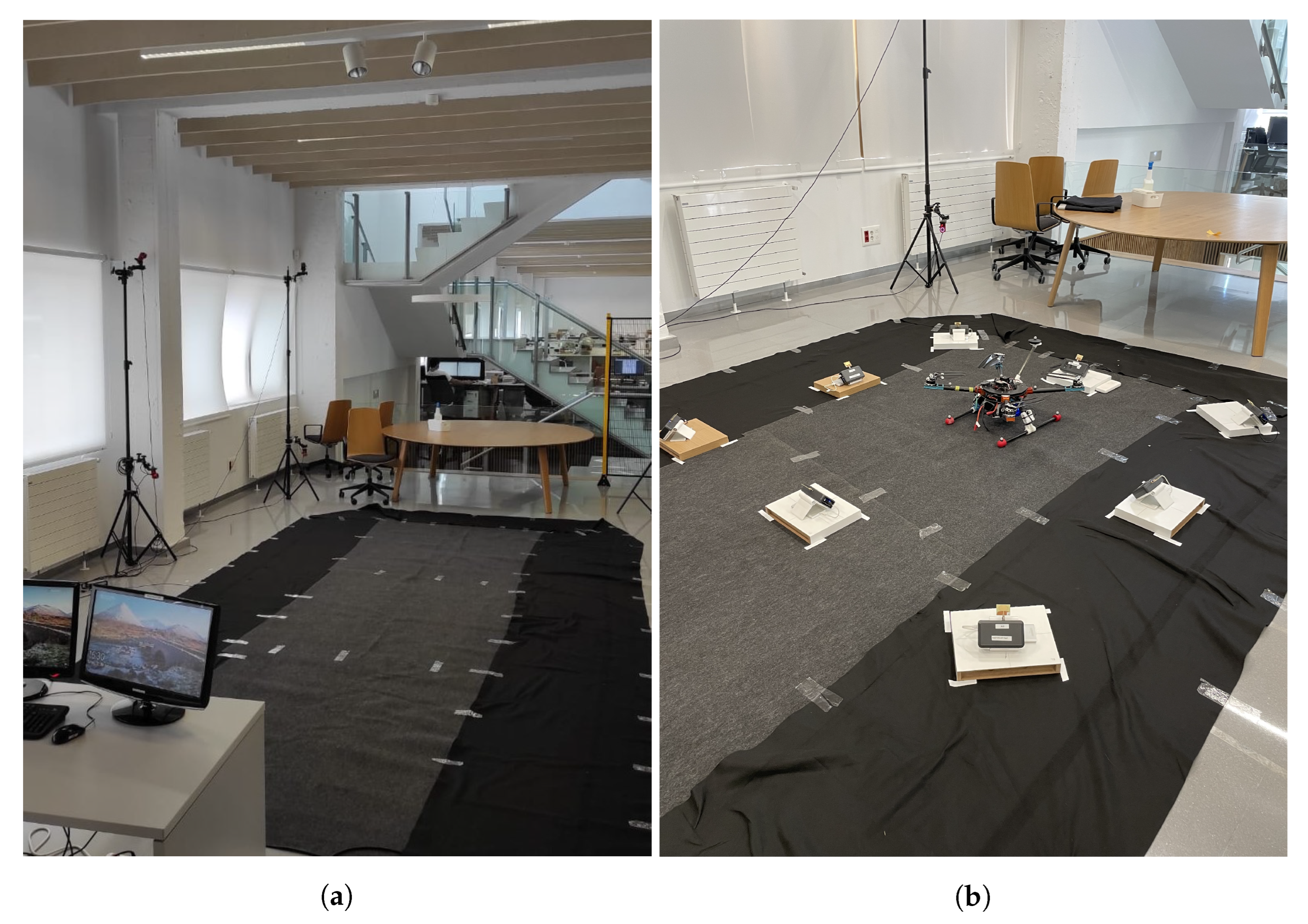

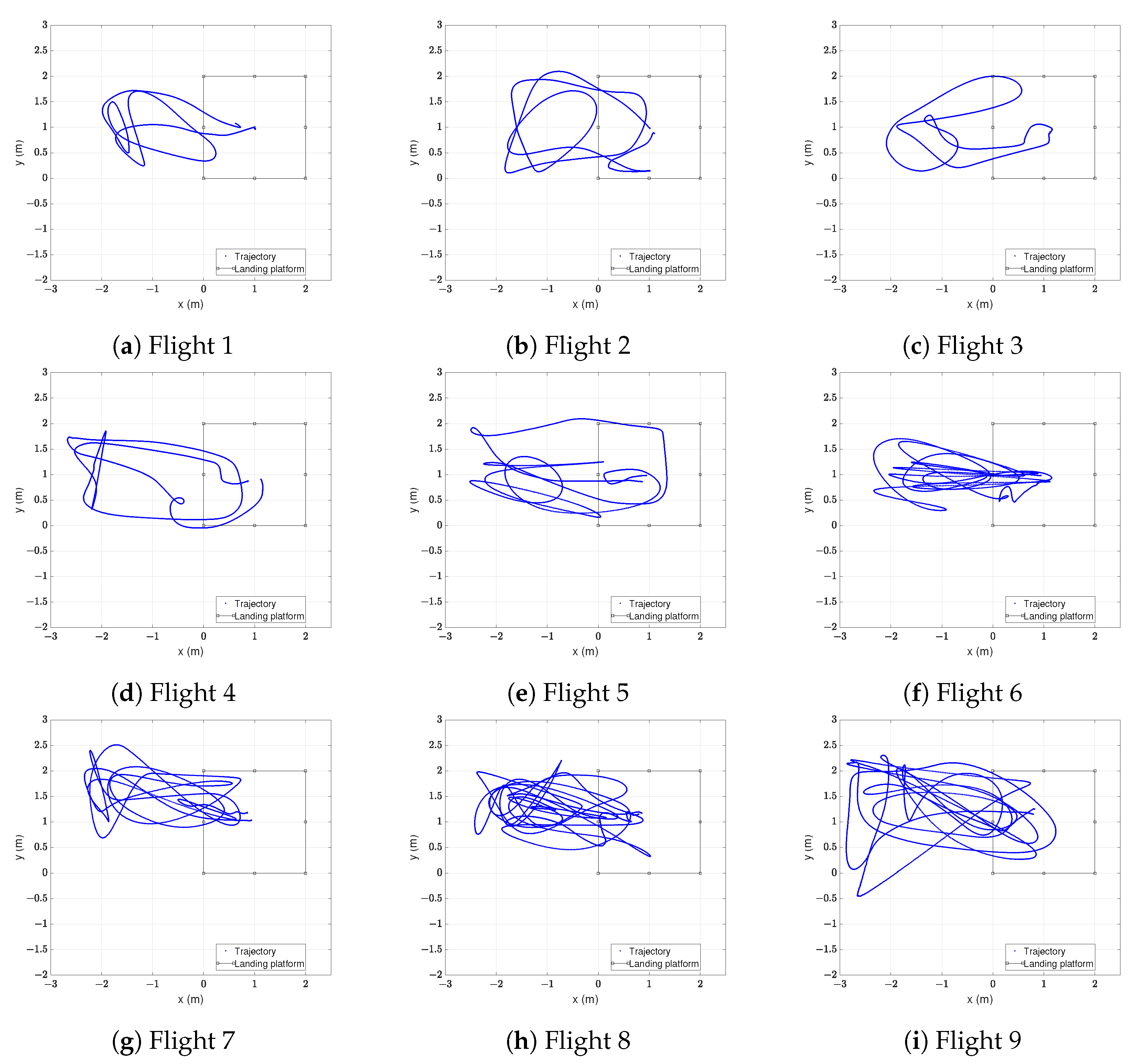

4.1. Indoor Experiments

4.2. Outdoor Experiments

4.3. Calculation of Errors

5. Results

5.1. Indoor Results

5.1.1. Accuracy with Four UWB Anchors

5.1.2. Accuracy with Eight UWB Anchors

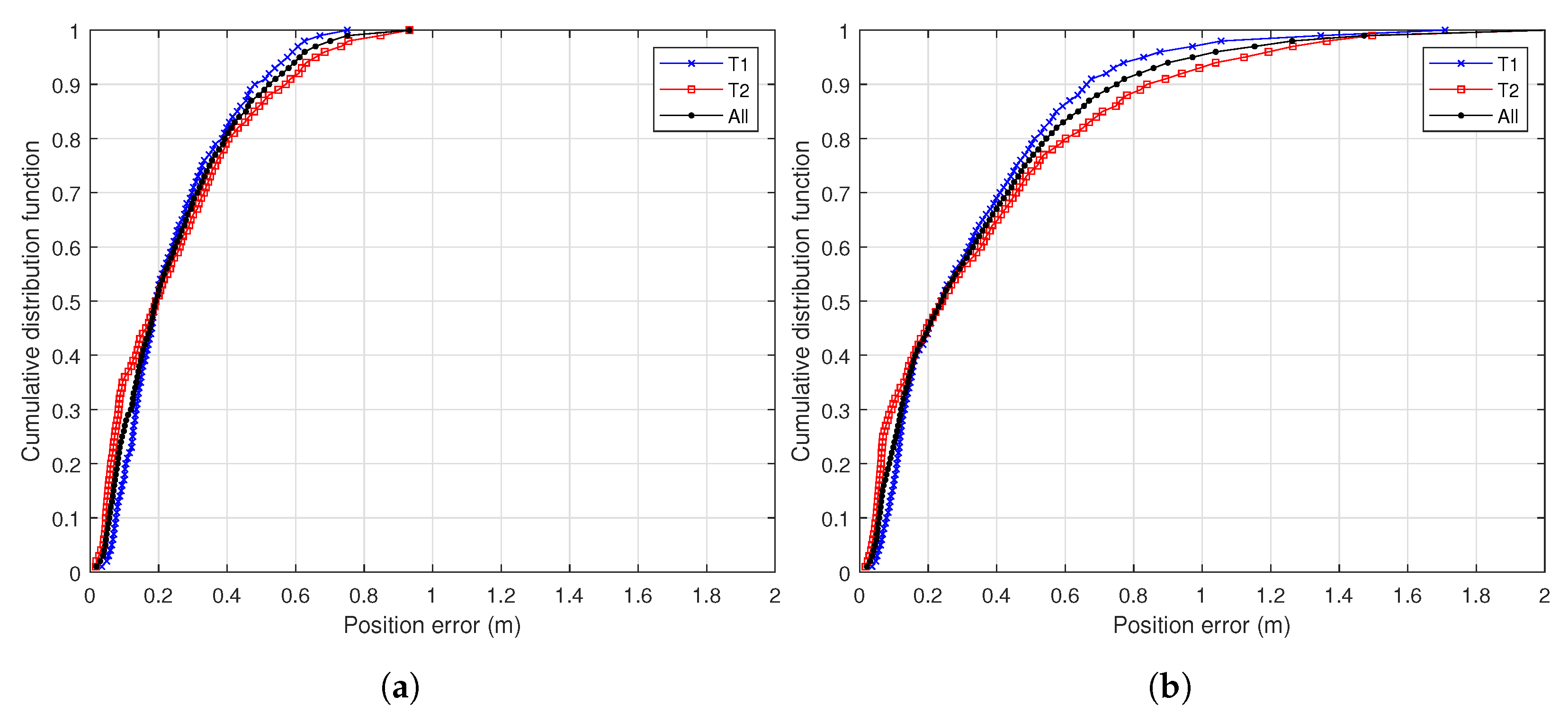

5.1.3. Accuracy with Fusion of Data

5.1.4. Accuracy with a Combination of Tags

5.1.5. Summary of Results

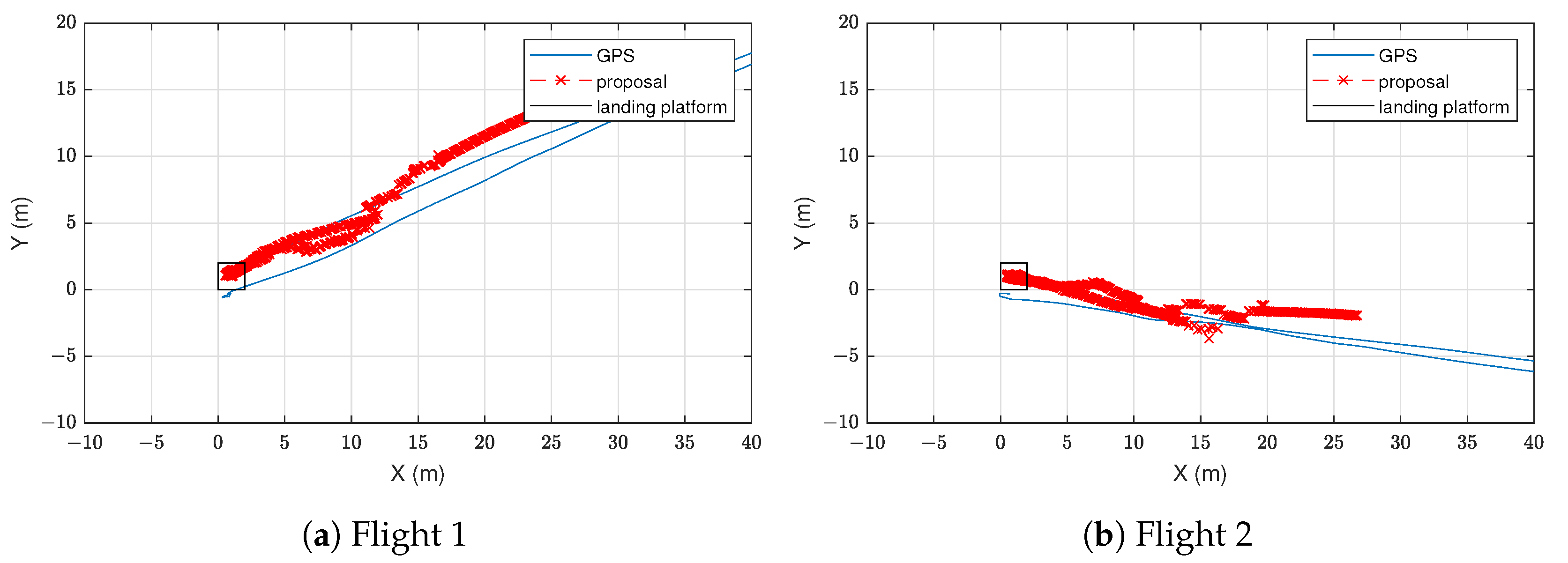

5.2. Results in a Real Environment

5.3. Comparison with State of the Art Technologies

6. Conclusions and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AGV | Automated Guided Vehicle |
| EKF | Extended Kalman Filter |
| FMCW | Frequency Modulated Continuous Wave |
| GNSS | Global Navigation Satellite System |
| IMU | Inertial Measurement Unit |
| IR-UWB | Impulse Radio Ultra-wideband |
| LAS | Landing Assistance System |
| LIDAR | Laser Imaging Detection and Ranging |
| PRF | Pulse Repetition Frequency |
| RLS | Recursive Least Squares |
| RMSE | Root Mean Square Error |
| RTK-GPS | Real-time Kinematic Global Positioning System |
| RTLS | Real Time Locating System |
| SBC | Single Board Computer |
| SFD | Start of Frame Delimiter |
| ToF | Time-of-Flight |
| TWR | Two-Way Ranging |
| UAV | Unmanned Aerial Vehicle |
| UWB | Ultra-wideband |

References

- Jalil, B.; Leone, G.R.; Martinelli, M.; Moroni, D.; Pascali, M.A.; Berton, A. Fault Detection in Power Equipment via an Unmanned Aerial System Using Multi Modal Data. Sensors 2019, 19, 3014.

- Rahman, E.U.; Zhang, Y.; Ahmad, S.; Ahmad, H.I.; Jobaer, S. Autonomous Vision-Based Primary Distribution Systems Porcelain Insulators Inspection Using UAVs. Sensors 2021, 21, 974.

- Suo, C.; Zhao, J.; Zhang, W.; Li, P.; Huang, R.; Zhu, J.; Tan, X. Research on UAV Three-Phase Transmission Line Tracking and Localization Method Based on Electric Field Sensor Array. Sensors 2021, 21, 8400.

- Zhu, Q.; Dinh, T.H.; Phung, M.D.; Ha, Q.P. Hierarchical Convolutional Neural Network With Feature Preservation and Autotuned Thresholding for Crack Detection. IEEE Access 2021, 9, 60201–60214.

- Car, M.; Markovic, L.; Ivanovic, A.; Orsag, M.; Bogdan, S. Autonomous Wind-Turbine Blade Inspection Using LiDAR-Equipped Unmanned Aerial Vehicle. IEEE Access 2020, 8, 131380–131387.

- Lee, D.; Liu, J.; Lim, R.; Chan, J.L.; Foong, S. Geometrical-Based Displacement Measurement With Pseudostereo Monocular Camera on Bidirectional Cascaded Linear Actuator. IEEE/ASME Trans. Mechatron. 2021, 26, 1923–1931.

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles (UAVs): A Survey on Civil Applications and Key Research Challenges. IEEE Access 2019, 7, 48572–48634.

- Sivaneri, V.O.; Gross, J.N. Flight-testing of a cooperative UGV-to-UAV strategy for improved positioning in challenging GNSS environments. Aerosp. Sci. Technol. 2018, 82–83, 575–582.

- Sharp, C.; Shakernia, O.; Sastry, S. A vision system for landing an unmanned aerial vehicle. In Proceedings of the 2001 ICRA. IEEE International Conference on Robotics and Automation (Cat. No.01CH37164), Seoul, Korea, 21–26 May 2001; Volume 2, pp. 1720–1727.

- Xu, G.; Zhang, Y.; Ji, S.; Cheng, Y.; Tian, Y. Research on computer vision-based for UAV autonomous landing on a ship. Pattern Recognit. Lett. 2009, 30, 600–605.

- Nguyen, P.H.; Kim, K.W.; Lee, Y.W.; Park, K.R. Remote Marker-Based Tracking for UAV Landing Using Visible-Light Camera Sensor. Sensors 2017, 17, 1987.

- Nguyen, P.H.; Arsalan, M.; Koo, J.H.; Naqvi, R.A.; Truong, N.Q.; Park, K.R. LightDenseYOLO: A Fast and Accurate Marker Tracker for Autonomous UAV Landing by Visible Light Camera Sensor on Drone. Sensors 2018, 18, 1703.

- Wubben, J.; Fabra, F.; Calafate, C.T.; Krzeszowski, T.; Marquez-Barja, J.M.; Cano, J.C.; Manzoni, P. Accurate Landing of Unmanned Aerial Vehicles Using Ground Pattern Recognition. Electronics 2019, 8, 1532.

- Antenucci, A.; Mazzaro, S.; Fiorilla, A.E.; Messina, L.; Massa, A.; Matta, W. A ROS Based Automatic Control Implementation for Precision Landing on Slow Moving Platforms Using a Cooperative Fleet of Rotary-Wing UAVs. In Proceedings of the 2020 5th International Conference on Robotics and Automation Engineering (ICRAE), Singapore, 20–22 November 2020; pp. 139–144.

- Lin, S.; Jin, L.; Chen, Z. Real-Time Monocular Vision System for UAV Autonomous Landing in Outdoor Low-Illumination Environments. Sensors 2021, 21, 6226.

- Kim, I.; Viksnin, I.; Kaisina, I.; Kuznetsov, V. Computer Vision System for Landing Platform State Assessment Onboard of Unmanned Aerial Vehicle in Case of Input Visual Information Distortion. In Proceedings of the 2021 29th Conference of Open Innovations Association (FRUCT), Tampere, Finland, 12–14 May 2021; pp. 192–198.

- Gupta, P.; Pareek, B.; Kumar, R.; Aeron, A.C. Vision-Based Safe Landing of UAV using Tiny-SURF Algorithm. In Proceedings of the 2021 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Melbourne, Australia, 17–20 October 2021; pp. 226–231.

- Lee, B.; Saj, V.; Benedict, M.; Kalathil, D. Intelligent Vision-based Autonomous Ship Landing of VTOL UAVs. arXiv 2022, arXiv:2202.13005.

- Patruno, C.; Nitti, M.; Petitti, A.; Stella, E.; D’Orazio, T. A Vision-Based Approach for Unmanned Aerial Vehicle Landing. J. Intell. Robot. Syst. 2019, 95, 645–664.

- Chen, X.; Phang, S.K.; Shan, M.; Chen, B.M. System integration of a vision-guided UAV for autonomous landing on moving platform. In Proceedings of the 2016 12th IEEE International Conference on Control and Automation (ICCA), Kathmandu, Nepal, 1–3 June 2016; pp. 761–766.

- Araar, O.; Aouf, N.; Vitanov, I. Vision Based Autonomous Landing of Multirotor UAV on Moving Platform. J. Intell. Robot. Syst. 2017, 85, 369–384.

- Yang, Q.; Sun, L. A fuzzy complementary Kalman filter based on visual and IMU data for UAV landing. Optik 2018, 173, 279–291.

- Wang, J.; McKiver, D.; Pandit, S.; Abdelzaher, A.F.; Washington, J.; Chen, W. Precision UAV Landing Control Based on Visual Detection. In Proceedings of the 2020 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), Shenzhen, China, 6–8 August 2020; pp. 205–208.

- Chang, C.W.; Lo, L.Y.; Cheung, H.C.; Feng, Y.; Yang, A.S.; Wen, C.Y.; Zhou, W. Proactive Guidance for Accurate UAV Landing on a Dynamic Platform: A Visual-Inertial Approach. Sensors 2022, 22, 404.

- Bigazzi, L.; Gherardini, S.; Innocenti, G.; Basso, M. Development of Non Expensive Technologies for Precise Maneuvering of Completely Autonomous Unmanned Aerial Vehicles. Sensors 2021, 21, 391.

- Santos, M.C.; Santana, L.V.; Brandão, A.S.; Sarcinelli-Filho, M.; Carelli, R. Indoor low-cost localization system for controlling aerial robots. Control Eng. Pract. 2017, 61, 93–111.

- Demirhan, M.; Premachandra, C. Development of an Automated Camera-Based Drone Landing System. IEEE Access 2020, 8, 202111–202121.

- Xing, B.Y.; Pan, F.; Feng, X.X.; Li, W.X.; Gao, Q. Autonomous Landing of a Micro Aerial Vehicle on a Moving Platform Using a Composite Landmark. Int. J. Aerosp. Eng. 2019, 2019, 4723869.

- Supriyono, H.; Akhara, A. Design, building and performance testing of GPS and computer vision combination for increasing landing precision of quad-copter drone. J. Phys. Conf. Ser. 2021, 1858, 012074.

- Benjumea, D.; Alcántara, A.; Ramos, A.; Torres-Gonzalez, A.; Sánchez-Cuevas, P.; Capitan, J.; Heredia, G.; Ollero, A. Localization System for Lightweight Unmanned Aerial Vehicles in Inspection Tasks. Sensors 2021, 21, 5937.

- Paredes, J.A.; Álvarez, F.J.; Aguilera, T.; Aranda, F.J. Precise drone location and tracking by adaptive matched filtering from a top-view ToF camera. Expert Syst. Appl. 2020, 141, 112989.

- Paredes, J.A.; Álvarez, F.J.; Aguilera, T.; Villadangos, J.M. 3D Indoor Positioning of UAVs with Spread Spectrum Ultrasound and Time-of-Flight Cameras. Sensors 2018, 18, 89.

- Paredes, J.A.; Álvarez, F.J.; Hansard, M.; Rajab, K.Z. A Gaussian Process model for UAV localization using millimetre wave radar. Expert Syst. Appl. 2021, 185, 115563.

- Shin, Y.H.; Lee, S.; Seo, J. Autonomous safe landing-area determination for rotorcraft UAVs using multiple IR-UWB radars. Aerosp. Sci. Technol. 2017, 69, 617–624.

- Mohamadi, F. Software-Defined Multi-Mode Ultra-Wideband Radar for Autonomous Vertical Take-Off and Landing of Small Unmanned Aerial Systems. U.S. Patent 9,110,168, 18 August 2015.

- Mohamadi, F. Vertical Takeoff and Landing (vtol) Small Unmanned Aerial System for Monitoring Oil and Gas Pipeline. U.S. Patent 8,880,241, 4 November 2014.

- Kim, E.; Choi, D. A UWB positioning network enabling unmanned aircraft systems auto land. Aerosp. Sci. Technol. 2016, 58, 418–426.

- Cisek, K.; Zolich, A.; Klausen, K.; Johansen, T.A. Ultra-wide band Real time Location Systems: Practical implementation and UAV performance evaluation. In Proceedings of the 2017 Workshop on Research, Education and Development of Unmanned Aerial Systems, RED-UAS 2017, Linköping, Sweden, 3–5 October 2017; pp. 204–209.

- Shin, Y.; Kim, E. Primitive Path Generation for a UWB Network Based Auto Landing System. In Proceedings of the 3rd IEEE International Conference on Robotic Computing, IRC 2019, Naples, Italy, 25–27 February 2019; pp. 431–432.

- Zekavat, R.; Buehrer, R.M. Handbook of Position Location: Theory, Practice, and Advances; Wiley-IEEE Press: Hoboken, NJ, USA, 2019.

- Zamora-Cadenas, L.; Velez, I.; Sierra-Garcia, J.E. UWB-Based Safety System for Autonomous Guided Vehicles Without Hardware on the Infrastructure. IEEE Access 2021, 9, 96430–96443.

- Queralta, J.P.; Martínez Almansa, C.; Schiano, F.; Floreano, D.; Westerlund, T. UWB-based System for UAV Localization in GNSS-Denied Environments: Characterization and Dataset. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25 October 2020–24 January 2021; pp. 4521–4528.

- Khalaf-Allah, M. Particle Filtering for Three-Dimensional TDoA-Based Positioning Using Four Anchor Nodes. Sensors 2020, 20, 4516.

- Mueller, M.W.; Hamer, M.; D’Andrea, R. Fusing ultra-wideband range measurements with accelerometers and rate gyroscopes for quadrocopter state estimation. In Proceedings of the IEEE International Conference on Robotics and Automation, Seattle, WA, USA, 26–30 May 2015; pp. 1730–1736.

- Fresk, E.; Ödmark, K.; Nikolakopoulos, G. Ultra WideBand enabled Inertial Odometry for Generic Localization. IFAC-PapersOnLine 2017, 50, 11465–11472.

- Song, Y.; Hsu, L.T. Tightly coupled integrated navigation system via factor graph for UAV indoor localization. Aerosp. Sci. Technol. 2021, 108, 106370.

- Wang, C.; Li, K.; Liang, G.; Chen, H.; Huang, S.; Wu, X. A Heterogeneous Sensing System-Based Method for Unmanned Aerial Vehicle Indoor Positioning. Sensors 2017, 17, 1842.

- Zahran, S.; Mostafa, M.M.; Masiero, A.; Moussa, A.M.; Vettore, A.; El-Sheimy, N. Micro-radar and UWB aided UAV navigation in GNSS denied environment. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci.-ISPRS Arch. 2018, 42, 469–476.

- Gryte, K.; Hansen, J.; Johansen, T.; Fossen, T. Robust Navigation of UAV using Inertial Sensors Aided by UWB and RTK GPS. In Proceedings of the AIAA Guidance, Navigation, and Control Conference, Grapevine, TX, USA, 9–13 January 2017.

- Tiemann, J.; Ramsey, A.; Wietfeld, C. Enhanced UAV indoor navigation through SLAM-Augmented UWB Localization. In Proceedings of the 2018 IEEE International Conference on Communications Workshops, ICC Workshops 2018, Kansas City, MO, USA, 20–24 May 2018; pp. 1–6.

- d’Apolito, F.; Sulzbachner, C. System Architecture of a Demonstrator for Indoor Aerial Navigation. IFAC-PapersOnLine 2019, 52, 316–320.

- Hoeller, D.; Ledergerber, A.; Hamer, M.; D’Andrea, R. Augmenting Ultra-Wideband Localization with Computer Vision for Accurate Flight. IFAC-PapersOnLine 2017, 50, 12734–12740.

- Nguyen, T.H.; Cao, M.; Nguyen, T.M.; Xie, L. Post-Mission Autonomous Return and Precision Landing of UAV. In Proceedings of the 2018 15th International Conference on Control, Automation, Robotics and Vision, ICARCV 2018, Singapore, 18–21 November 2018; pp. 1747–1752.

- Orjales, F.; Losada-Pita, J.; Paz-Lopez, A.; Deibe, Á. Towards Precise Positioning and Movement of UAVs for Near-Wall Tasks in GNSS-Denied Environments. Sensors 2021, 21, 2194.

- Zamora-Cadenas, L.; Arrue, N.; Jiménez-Irastorza, A.; Vélez, I. Improving the Performance of an FMCW Indoor Localization System by Optimizing the Ranging Estimator. In Proceedings of the 2010 6th International Conference on Wireless and Mobile Communications, Valencia, Spain, 20–25 September 2010; pp. 226–231.

- LSM6DSO. Available online: https://www.st.com/en/mems-and-sensors/lsm6dso.html (accessed on 26 January 2022).

- LIS2MDL. Available online: https://www.st.com/en/mems-and-sensors/lis2mdl.html (accessed on 26 January 2022).

- Getting started with MotionFX Sensor Fusion Library in X-CUBE-MEMS1 Expansion for STM32Cube. Available online: https://www.st.com/en/embedded-software/x-cube-mems1.html#documentation (accessed on 26 January 2022).

- Kay, S.M. Fundamentals of Statistical Signal Processing: Estimation Theory; Prentice-Hall, Inc.: Upper Saddle River, NJ, USA, 1993.

- Kok, M.; Hol, J.D.; Schön, T.B. Using Inertial Sensors for Position and Orientation Estimation; Now Foundations and Trends: Boston, MA, USA, 2017.

- Solà, J. Quaternion kinematics for the error-state Kalman filter. arXiv 2017, arXiv:1711.02508.

- Rubinstein, R.Y.; Kroese, D.P. Simulation and the Monte Carlo Method, 3rd ed.; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2017.

| Parameter | Value | Units |
|---|---|---|
| Carrier frequency | 3.9936 | GHz |
| Bandwidth | 499.2 | MHz |
| Channel | 2 | - |
| Bitrate | 6.8 | Mbps |
| PRF (pulse repetition frequency) | 16 | MHz |
| Preamble length | 128 | symbols |
| Preamble code | 3 | - |
| SFD (start of frame delimiter) | 8 | symbols |
| Ranging rate | 3.3 | Hz |

| Parameter | Value |
|---|---|
| Sampling rate | 25 Hz |
| output_type | 1 |
| acc_orientation | ENU |
| gyro_orientation | ENU |
| mag_orientation | ESU |
| LMode | 1 |
| ATime | 0.9 |
| MTime | 1.5 |
| FrTime | 0.667 |
| modx | 2 |

| Anchor Name | x (m) | y (m) | z (m) |
|---|---|---|---|
| A0 | 1.998 | 0.0 | 0.145 |
| A1 | 1.0 | 0.0 | 0.149 |
| A2 | 0.0 | 0.0 | 0.147 |
| A3 | 0.0 | 0.999 | 0.151 |
| A4 | 0.0 | 1.998 | 0.155 |
| A5 | 1.001 | 1.998 | 0.153 |
| A6 | 1.998 | 1.998 | 0.157 |
| A7 | 1.998 | 0.999 | 0.159 |
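To illustrate how ranges to the eight anchors listed above constrain a tag position, the following is a minimal sketch of a Gauss-Newton least-squares multilateration. It is not the EKF of Section 3.1 and does not reproduce the authors' implementation. The function names `solve3` and `locate`, the starting guess, and the test position `true_pos` are hypothetical and chosen only for illustration; the ranges are noise-free values computed from that test position.

```python
import math

# Anchor coordinates (m) copied from the anchor table above.
ANCHORS = [
    (1.998, 0.0, 0.145), (1.0, 0.0, 0.149), (0.0, 0.0, 0.147), (0.0, 0.999, 0.151),
    (0.0, 1.998, 0.155), (1.001, 1.998, 0.153), (1.998, 1.998, 0.157), (1.998, 0.999, 0.159),
]


def solve3(a, b):
    """Solve the 3x3 linear system a @ x = b by Gaussian elimination with pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= f * m[col][c]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):  # back substitution
        s = m[i][3] - sum(m[i][j] * x[j] for j in range(i + 1, 3))
        x[i] = s / m[i][i]
    return x


def locate(ranges, start=(1.0, 1.0, 1.0), iterations=50, tol=1e-10):
    """Gauss-Newton least-squares fit of a 3D position to anchor ranges."""
    p = list(start)
    for _ in range(iterations):
        jtj = [[0.0] * 3 for _ in range(3)]  # accumulates J^T J
        jtr = [0.0] * 3                      # accumulates J^T r
        for anchor, measured in zip(ANCHORS, ranges):
            diff = [p[k] - anchor[k] for k in range(3)]
            predicted = math.sqrt(sum(d * d for d in diff))
            unit = [d / predicted for d in diff]  # Jacobian row of the range w.r.t. p
            residual = measured - predicted
            for i in range(3):
                jtr[i] += unit[i] * residual
                for j in range(3):
                    jtj[i][j] += unit[i] * unit[j]
        step = solve3(jtj, jtr)
        p = [p[k] + step[k] for k in range(3)]
        if max(abs(s) for s in step) < tol:
            break
    return tuple(p)


# Hypothetical tag position, used only to generate noise-free test ranges.
true_pos = (0.7, 1.3, 2.0)
ranges = [math.dist(true_pos, a) for a in ANCHORS]
estimate = locate(ranges)
```

Because the anchors are almost coplanar (z between 0.145 m and 0.159 m), a range-only fit has a near-mirror solution below the platform; starting the iteration above the anchor plane, as in this sketch, steers it toward the physically meaningful one.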

| Flight | Mean Acceleration () | Max Acceleration () |
|---|---|---|
| Flight 1 | 0.456 | 1.632 |
| Flight 2 | 0.483 | 1.651 |
| Flight 3 | 0.445 | 1.588 |
| Flight 4 | 0.563 | 1.838 |
| Flight 5 | 1.092 | 4.145 |
| Flight 6 | 1.958 | 6.496 |
| Flight 7 | 1.404 | 4.412 |
| Flight 8 | 1.374 | 4.199 |
| Flight 9 | 1.285 | 4.807 |

| Tag | Flight | μ (m) | σ (m) | RMSE (m) | P (m) | P(%) < 1 m (%) | ϵmax (m) |
|---|---|---|---|---|---|---|---|
| T1 | Flight 1 | 0.233 | 0.144 | 0.273 | 0.346 | 100 | 0.608 |
| T1 | Flight 2 | 0.245 | 0.148 | 0.286 | 0.384 | 100 | 0.598 |
| T1 | Flight 3 | 0.214 | 0.176 | 0.277 | 0.336 | 100 | 0.751 |
| T1 | Flight 4 | 0.273 | 0.161 | 0.317 | 0.407 | 100 | 0.685 |
| T1 | Flight 5 | 0.264 | 0.279 | 0.383 | 0.356 | 97.46 | 1.609 |
| T1 | Flight 6 | 0.227 | 0.161 | 0.278 | 0.372 | 100 | 0.681 |
| T1 | Flight 7 | 0.311 | 0.249 | 0.398 | 0.490 | 97.67 | 1.075 |
| T1 | Flight 8 | 0.339 | 0.245 | 0.418 | 0.566 | 98.12 | 1.214 |
| T1 | Flight 9 | 0.411 | 0.323 | 0.522 | 0.618 | 94.95 | 1.709 |
| T1 | All | 0.293 | 0.238 | 0.377 | 0.461 | 98.39 | 1.709 |
| T2 | Flight 1 | 0.184 | 0.133 | 0.227 | 0.318 | 100 | 0.565 |
| T2 | Flight 2 | 0.221 | 0.160 | 0.273 | 0.375 | 100 | 0.649 |
| T2 | Flight 3 | 0.256 | 0.233 | 0.346 | 0.455 | 100 | 0.854 |
| T2 | Flight 4 | 0.327 | 0.246 | 0.409 | 0.580 | 100 | 0.932 |
| T2 | Flight 5 | 0.336 | 0.346 | 0.481 | 0.529 | 93.78 | 1.716 |
| T2 | Flight 6 | 0.301 | 0.302 | 0.426 | 0.480 | 96.00 | 1.499 |
| T2 | Flight 7 | 0.369 | 0.397 | 0.541 | 0.666 | 92.35 | 1.790 |
| T2 | Flight 8 | 0.333 | 0.310 | 0.455 | 0.551 | 94.14 | 1.283 |
| T2 | Flight 9 | 0.433 | 0.387 | 0.580 | 0.736 | 90.15 | 2.001 |
| T2 | All | 0.316 | 0.309 | 0.442 | 0.521 | 95.71 | 2.001 |

| Tag | Flight | μ (m) | σ (m) | RMSE (m) | P (m) | P(%) < 1 m (%) | ϵmax (m) |
|---|---|---|---|---|---|---|---|
| T1 | Flight 1 | 0.226 | 0.161 | 0.277 | 0.334 | 100 | 0.720 |
| T1 | Flight 2 | 0.237 | 0.116 | 0.263 | 0.356 | 100 | 0.528 |
| T1 | Flight 3 | 0.208 | 0.153 | 0.258 | 0.347 | 100 | 0.774 |
| T1 | Flight 4 | 0.240 | 0.135 | 0.275 | 0.365 | 100 | 0.581 |
| T1 | Flight 5 | 0.278 | 0.290 | 0.401 | 0.460 | 96.98 | 1.431 |
| T1 | Flight 6 | 0.259 | 0.205 | 0.330 | 0.498 | 100 | 0.865 |
| T1 | Flight 7 | 0.265 | 0.196 | 0.329 | 0.450 | 100 | 0.789 |
| T1 | Flight 8 | 0.343 | 0.238 | 0.417 | 0.571 | 99.38 | 1.067 |
| T1 | Flight 9 | 0.357 | 0.289 | 0.459 | 0.554 | 95.38 | 1.304 |
| T1 | All | 0.280 | 0.222 | 0.357 | 0.445 | 98.86 | 1.431 |
| T2 | Flight 1 | 0.169 | 0.113 | 0.203 | 0.279 | 100 | 0.492 |
| T2 | Flight 2 | 0.204 | 0.144 | 0.250 | 0.323 | 100 | 0.650 |
| T2 | Flight 3 | 0.246 | 0.224 | 0.333 | 0.433 | 100 | 0.857 |
| T2 | Flight 4 | 0.275 | 0.206 | 0.344 | 0.469 | 100 | 0.904 |
| T2 | Flight 5 | 0.342 | 0.370 | 0.503 | 0.566 | 90.31 | 1.623 |
| T2 | Flight 6 | 0.379 | 0.360 | 0.522 | 0.737 | 91.71 | 1.722 |
| T2 | Flight 7 | 0.327 | 0.269 | 0.423 | 0.555 | 97.00 | 1.267 |
| T2 | Flight 8 | 0.324 | 0.268 | 0.420 | 0.582 | 99.33 | 1.099 |
| T2 | Flight 9 | 0.372 | 0.325 | 0.493 | 0.611 | 93.81 | 1.487 |
| T2 | All | 0.303 | 0.282 | 0.414 | 0.505 | 96.69 | 1.722 |

| Tag | Flight | μ (m) | σ (m) | RMSE (m) | P (m) | P(%) < 1 m (%) | ϵmax (m) |
|---|---|---|---|---|---|---|---|
| T1 | Flight 1 | 0.175 | 0.105 | 0.204 | 0.265 | 100 | 0.630 |
| T1 | Flight 2 | 0.199 | 0.122 | 0.233 | 0.306 | 100 | 0.613 |
| T1 | Flight 3 | 0.176 | 0.094 | 0.200 | 0.245 | 100 | 0.578 |
| T1 | Flight 4 | 0.193 | 0.077 | 0.208 | 0.259 | 100 | 0.434 |
| T1 | Flight 5 | 0.165 | 0.103 | 0.194 | 0.241 | 100 | 0.751 |
| T1 | Flight 6 | 0.195 | 0.139 | 0.240 | 0.298 | 100 | 0.814 |
| T1 | Flight 7 | 0.193 | 0.151 | 0.245 | 0.302 | 99.82 | 1.090 |
| T1 | Flight 8 | 0.207 | 0.153 | 0.257 | 0.336 | 100 | 0.794 |
| T1 | Flight 9 | 0.243 | 0.179 | 0.301 | 0.380 | 99.70 | 1.203 |
| T1 | All | 0.198 | 0.137 | 0.241 | 0.296 | 99.93 | 1.203 |
| T2 | Flight 1 | 0.177 | 0.118 | 0.213 | 0.289 | 100 | 0.496 |
| T2 | Flight 2 | 0.167 | 0.110 | 0.200 | 0.252 | 100 | 0.523 |
| T2 | Flight 3 | 0.155 | 0.114 | 0.192 | 0.262 | 100 | 0.577 |
| T2 | Flight 4 | 0.225 | 0.187 | 0.293 | 0.349 | 100 | 0.941 |
| T2 | Flight 5 | 0.209 | 0.135 | 0.249 | 0.326 | 100 | 0.791 |
| T2 | Flight 6 | 0.237 | 0.201 | 0.310 | 0.398 | 99.45 | 1.314 |
| T2 | Flight 7 | 0.218 | 0.137 | 0.258 | 0.346 | 100 | 0.731 |
| T2 | Flight 8 | 0.236 | 0.185 | 0.300 | 0.368 | 99.49 | 1.269 |
| T2 | Flight 9 | 0.223 | 0.156 | 0.272 | 0.325 | 99.79 | 1.060 |
| T2 | All | 0.210 | 0.159 | 0.263 | 0.329 | 99.82 | 1.314 |

| Flight | μ (m) | σ (m) | RMSE (m) | P (m) | P(%) < 1 m (%) | ϵmax (m) |
|---|---|---|---|---|---|---|
| Flight 1 | 0.145 | 0.081 | 0.166 | 0.234 | 100 | 0.350 |
| Flight 2 | 0.142 | 0.088 | 0.167 | 0.230 | 100 | 0.403 |
| Flight 3 | 0.129 | 0.083 | 0.154 | 0.196 | 100 | 0.417 |
| Flight 4 | 0.156 | 0.095 | 0.182 | 0.223 | 100 | 0.473 |
| Flight 5 | 0.157 | 0.103 | 0.188 | 0.244 | 100 | 0.546 |
| Flight 6 | 0.195 | 0.156 | 0.250 | 0.321 | 100 | 0.862 |
| Flight 7 | 0.179 | 0.135 | 0.224 | 0.299 | 100 | 0.660 |
| Flight 8 | 0.182 | 0.139 | 0.229 | 0.296 | 100 | 0.845 |
| Flight 9 | 0.199 | 0.154 | 0.251 | 0.317 | 99.67 | 1.150 |
| All | 0.168 | 0.124 | 0.208 | 0.260 | 99.95 | 1.150 |

| System | μ (m) | σ (m) | RMSE (m) | P (m) | P(%) < 1 m (%) | ϵmax (m) |
|---|---|---|---|---|---|---|
| UWB as [42] | 0.304 | 0.275 | 0.410 | 0.488 | 97.10 | 2.001 |
| Proposal | 0.168 | 0.124 | 0.208 | 0.260 | 99.95 | 1.150 |
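As a consistency check on the summary rows above, the sketch below recomputes the RMSE from the tabulated mean and standard deviation using the identity RMSE² = μ² + σ². This assumes μ and σ are the mean and the population standard deviation of the same per-sample positioning error; that is an assumption of this sketch, not a statement of how the authors computed the columns. The helper name `rmse_from_moments` is hypothetical.

```python
import math

# (mean error, standard deviation, reported RMSE) in metres, copied from the table above.
ROWS = {
    "UWB as [42]": (0.304, 0.275, 0.410),
    "Proposal": (0.168, 0.124, 0.208),
}


def rmse_from_moments(mean, std):
    """RMSE implied by a mean and a population standard deviation of the same error."""
    return math.hypot(mean, std)  # sqrt(mean**2 + std**2)


# RMSE recomputed from the mu and sigma columns, for comparison with the RMSE column.
recomputed = {name: rmse_from_moments(m, s) for name, (m, s, _) in ROWS.items()}

# Relative RMSE reduction of the proposal with respect to the UWB-only baseline.
rmse_reduction = 1.0 - ROWS["Proposal"][2] / ROWS["UWB as [42]"][2]

for name, (_, _, reported) in ROWS.items():
    print(f"{name}: reported {reported:.3f} m, recomputed {recomputed[name]:.3f} m")
print(f"RMSE reduction: {100 * rmse_reduction:.1f} %")
```

Under that assumption the recomputed values agree with the reported RMSE to within the rounding of the table, and the reported RMSE of the proposal is roughly half that of the UWB-only baseline.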

| System | Indoor Accuracy RMSE X (m) | Indoor Accuracy RMSE Y (m) | Maximum Tested Indoor Range (m) | Maximum Indoor Horizontal Velocity (m/s) | Maximum Tested Outdoor Range (m) | Minimum Positioning Rate (Hz) | Sensitivity to Lighting Conditions |
|---|---|---|---|---|---|---|---|
| Vision [19] | 0.012 | 0.014 | 2 | 0.190 | - | 10 | Yes |
| UWB as [42] | 0.355 | 0.205 | 4.5 | 3.139 | - | 3.3 | No |
| This work | 0.177 | 0.115 | 4.5 | 3.139 | 20 | 25 | No |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ochoa-de-Eribe-Landaberea, A.; Zamora-Cadenas, L.; Peñagaricano-Muñoa, O.; Velez, I. UWB and IMU-Based UAV’s Assistance System for Autonomous Landing on a Platform. Sensors 2022, 22, 2347. https://doi.org/10.3390/s22062347
